A Periodic Mapping Activation Function: Mathematical Properties and Application in Convolutional Neural Networks

Abstract

1. Introduction

2. Related Work

2.1. Sigmoid Linear Unit

2.2. Exponential Linear Units

2.3. Elastic Exponential Linear Unit

2.4. Rectified Linear Unit

2.5. Log-Softplus Error Activation Function

2.6. Gaussian Error Linear Unit

2.7. Mish

3. Proposed Activation Functions

3.1. Periodic Sine Rectifier (PSR)

3.2. Leaky Periodic Sine Rectifier (LPSR)

4. Experiments

4.1. Model

4.2. Experimental Setup

5. Results

5.1. Result Analysis

5.2. Friedman Statistical Test

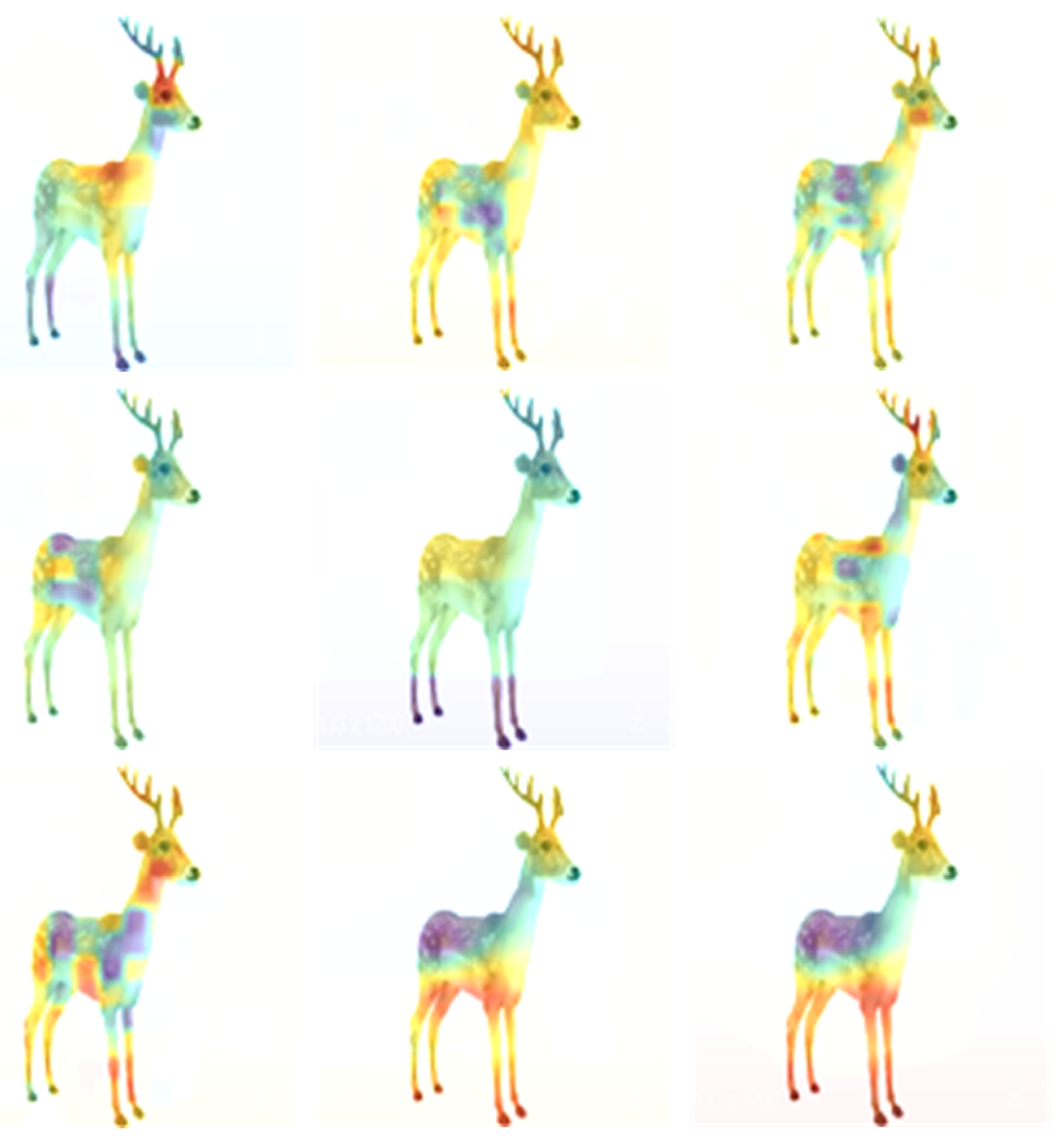

5.3. The Superior Performance of the Developed Activation Function

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

2. Wang, S.; Ahn, S. Exploring User Behavior Based on Metaverse: A Modeling Study of User Experience Factors. In Proceedings of the International Conference on Human-Computer Interaction, Washington, DC, USA, 29 June–4 July 2024; Springer Nature: Cham, Switzerland, 2024; pp. 99–118.

3. Wang, S.; Yu, J.; Yang, W.; Yan, W.; Nah, K. The impact of role-playing game experience on the sustainable development of ancient architectural cultural heritage tourism: A mediation modeling study based on S-O-R theory. Buildings 2025, 15, 2032.

4. ZahediNasab, R.; Mohseni, H. Neuroevolutionary based convolutional neural network with adaptive activation functions. Neurocomputing 2020, 381, 306–313.

5. Zhu, H.; Zeng, H.; Liu, J.; Zhang, X. Logish: A new nonlinear nonmonotonic activation function for convolutional neural network. Neurocomputing 2021, 458, 490–499.

6. Apicella, A.; Donnarumma, F.; Isgrò, F.; Prevete, R. A survey on modern trainable activation functions. Neural Netw. 2021, 138, 14–32.

7. Courbariaux, M.; Bengio, Y.; David, J.-P. BinaryConnect: Training deep neural networks with binary weights during propagations. Adv. Neural Inf. Process. Syst. 2015, 28, 3123–3131.

8. Gulcehre, C.; Moczulski, M.; Denil, M.; Bengio, Y. Noisy activation functions. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; PMLR; pp. 3059–3068.

9. Narayan, S. The generalized sigmoid activation function: Competitive supervised learning. Inf. Sci. 1997, 99, 69–82.

10. Hochreiter, S. The vanishing gradient problem during learning recurrent neural nets and problem solutions. Int. J. Uncertain. Fuzziness Knowl.-Based Syst. 1998, 6, 107–116.

11. Li, Y.; Yuan, Y. Convergence analysis of two-layer neural networks with ReLU activation. Adv. Neural Inf. Process. Syst. 2017, 30, 597–607.

12. Lu, L.; Shin, Y.; Su, Y.; Karniadakis, G.E. Dying ReLU and initialization: Theory and numerical examples. arXiv 2019, arXiv:1903.06733.

13. Nair, V.; Hinton, G.E. Rectified linear units improve restricted Boltzmann machines. In Proceedings of the 27th International Conference on Machine Learning (ICML-10), Haifa, Israel, 21–24 June 2010; pp. 807–814.

14. Glorot, X.; Bordes, A.; Bengio, Y. Deep sparse rectifier neural networks. In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 11–13 April 2011; JMLR Workshop and Conference Proceedings; pp. 315–323.

15. Xu, J.; Li, Z.; Du, B.; Zhang, M.; Liu, J. Reluplex made more practical: Leaky ReLU. In Proceedings of the 2020 IEEE Symposium on Computers and Communications (ISCC), Rennes, France, 7–10 July 2020; IEEE: New York, NY, USA, 2020; pp. 1–7.

16. Devi, T.; Deepa, N. A novel intervention method for aspect-based emotion using Exponential Linear Unit (ELU) activation function in a Deep Neural Network. In Proceedings of the 2021 5th International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 6–8 May 2021; IEEE: New York, NY, USA, 2021; pp. 1671–1675.

17. Kim, D.; Kim, J.; Kim, J. Elastic exponential linear units for convolutional neural networks. Neurocomputing 2020, 406, 253–266.

18. Hendrycks, D.; Gimpel, K. Gaussian error linear units (GELUs). arXiv 2016, arXiv:1606.08415.

19. Misra, D. Mish: A self regularized non-monotonic activation function. arXiv 2019, arXiv:1908.08681.

20. Krizhevsky, A. Convolutional Deep Belief Networks on CIFAR-10. 2010. Available online: https://www.semanticscholar.org/paper/Convolutional-Deep-Belief-Networks-on-CIFAR-10-Krizhevsky/bea5780d621e669e8069f05d0f2fc0db9df4b50f#extracted (accessed on 9 August 2025).

21. Sharma, N.; Jain, V.; Mishra, A. An analysis of convolutional neural networks for image classification. Procedia Comput. Sci. 2018, 132, 377–384.

22. Sharif, M.; Kausar, A.; Park, J.; Shin, D.R. Tiny image classification using Four-Block convolutional neural network. In Proceedings of the 2019 International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 16–18 October 2019; IEEE: New York, NY, USA, 2019; pp. 1–6.

23. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: New York, NY, USA, 2009; pp. 248–255.

24. Vargas, V.M.; Gutiérrez, P.A.; Barbero-Gómez, J.; Hervás-Martínez, C. Activation functions for convolutional neural networks: Proposals and experimental study. IEEE Trans. Neural Netw. Learn. Syst. 2021, 34, 1478–1488.

25. Chen, D.; Li, J.; Xu, K. AReLU: Attention-based rectified linear unit. arXiv 2020, arXiv:2006.13858.

26. Hoiem, D.; Divvala, S.K.; Hays, J.H. Pascal VOC 2008 challenge. World Literature Today 2009, 24, 1–4.

27. Sharma, D.K. Information measure computation and its impact in MI COCO dataset. In Proceedings of the 2021 7th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 19–20 March 2021; IEEE: New York, NY, USA, 2021; Volume 1, pp. 1964–1969.

28. Dubey, S.R.; Singh, S.K.; Chaudhuri, B.B. Activation functions in deep learning: A comprehensive survey and benchmark. Neurocomputing 2022, 503, 92–108.

29. Xia, Y.; Liu, Z.; Wang, S.; Huang, C.; Zhao, W. Unlocking the Impact of User Experience on AI-Powered Mobile Advertising Engagement. J. Knowl. Econ. 2025, 16, 4818–4854.

30. Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, Sardinia, Italy, 13–15 May 2010; JMLR Workshop and Conference Proceedings; pp. 249–256.

31. Srivastava, R.K.; Greff, K.; Schmidhuber, J. Highway networks. arXiv 2015, arXiv:1505.00387.

32. Elfwing, S.; Uchibe, E.; Doya, K. Sigmoid-weighted linear units for neural network function approximation in reinforcement learning. Neural Netw. 2018, 107, 3–11.

33. Paul, A.; Bandyopadhyay, R.; Yoon, J.H.; Geem, Z.W.; Sarkar, R. SinLU: Sinu-Sigmoidal Linear Unit. Mathematics 2022, 10, 337.

34. Zhang, Z.; Li, X.; Yang, Y.; Shi, Z. Enhancing deep learning models for image classification using hybrid activation functions. Preprint 2023.

35. Clevert, D.-A.; Unterthiner, T.; Hochreiter, S. Fast and accurate deep network learning by exponential linear units (ELUs). arXiv 2015, arXiv:1511.07289.

36. Deng, Y.; Hou, Y.; Yan, J.; Zeng, D. ELU-Net: An Efficient and Lightweight U-Net for Medical Image Segmentation. IEEE Access 2022, 10, 35932–35941.

37. Wei, C.; Kakade, S.; Ma, T. The implicit and explicit regularization effects of dropout. In Proceedings of the International Conference on Machine Learning, Online, 13–18 July 2020; PMLR; pp. 10181–10192.

38. Daubechies, I.; DeVore, R.; Foucart, S.; Hanin, B.; Petrova, G. Nonlinear approximation and (deep) ReLU networks. Constr. Approx. 2022, 55, 127–172.

39. Andrearczyk, V.; Whelan, P.F. Convolutional neural network on three orthogonal planes for dynamic texture classification. Pattern Recognit. 2018, 76, 36–49.

40. Brownlowe, N.; Cornwell, C.R.; Montes, E.; Quijano, G.; Zhang, N. Stably unactivated neurons in ReLU neural networks. arXiv 2024, arXiv:2412.06829.

41. Maas, A.L.; Hannun, A.Y.; Ng, A.Y. Rectifier nonlinearities improve neural network acoustic models. In Proceedings of the ICML, Atlanta, GA, USA, 17–19 June 2013; Volume 30, p. 3.

42. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 13–16 December 2015; pp. 1026–1034.

43. Lin, G.; Shen, W. Research on convolutional neural network based on improved ReLU piecewise activation function. Procedia Comput. Sci. 2018, 131, 977–984.

44. Nag, S.; Bhattacharyya, M.; Mukherjee, A.; Kundu, R. SERF: Towards Better Training of Deep Neural Networks Using Log-Softplus ERror Activation Function. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 2–7 January 2023; pp. 5324–5333.

45. Miglani, V.; Kokhlikyan, N.; Alsallakh, B.; Martin, M.; Reblitz-Richardson, O. Investigating saturation effects in integrated gradients. arXiv 2020, arXiv:2010.12697.

46. Verma, V.K.; Liang, K.; Mehta, N.; Carin, L. Meta-learned attribute self-gating for continual generalized zero-shot learning. arXiv 2021, arXiv:2102.11856.

47. Islam, M.S.; Zhang, L. A Review on BERT: Language Understanding for Different Types of NLP Task. Preprints 2024.

48. Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

49. Fanaskov, V.; Oseledets, I. Associative memory and dead neurons. arXiv 2024, arXiv:2410.13866.

50. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

51. Xiao, H.; Rasul, K.; Vollgraf, R. Fashion-MNIST: A novel image dataset for benchmarking machine learning algorithms. arXiv 2017, arXiv:1708.07747.

52. Dosovitskiy, A. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

53. Swaminathan, A.; Varun, C.; Kalaivani, S. Multiple plant leaf disease classification using DenseNet-121 architecture. Int. J. Electr. Eng. Technol. 2021, 12, 38–57.

54. Koonce, B. ResNet 50. In Convolutional Neural Networks with Swift for TensorFlow: Image Recognition and Dataset Categorization; Springer: Berlin/Heidelberg, Germany, 2021; pp. 63–72.

55. Theckedath, D.; Sedamkar, R.R. Detecting affect states using VGG16, ResNet50 and SE-ResNet50 networks. SN Comput. Sci. 2020, 1, 79.

56. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

57. Liu, J.; Xu, Y. T-Friedman test: A new statistical test for multiple comparison with an adjustable conservativeness measure. Int. J. Comput. Intell. Syst. 2022, 15, 29.

58. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

59. Wang, S.; Nah, K. Exploring sustainable learning intentions of employees using online learning modules of office apps based on user experience factors: Using the adapted UTAUT model. Appl. Sci. 2024, 14, 4746.

| Dataset | Classes | Training Images | Test Images | Image Shape |
|---|---|---|---|---|
| CIFAR10 | 10 | 50,000 | 10,000 | 32 × 32 × 3 |
| CIFAR100 | 100 | 50,000 | 10,000 | 32 × 32 × 3 |
| MNIST [50] | 10 | 60,000 | 10,000 | 32 × 32 × 1 |
| Fashion MNIST [51] | 10 | 60,000 | 10,000 | 32 × 32 × 1 |

VGG16 results on the four datasets (ACC = accuracy; Std = standard deviation).

| Dataset | Activation | ACC | Std | Precision | Recall |
|---|---|---|---|---|---|
| CIFAR10 | SiLU | 0.843 | 0.039 | 0.843 | 0.841 |
| CIFAR10 | ELU | 0.812 | 0.070 | 0.816 | 0.809 |
| CIFAR10 | EELU | 0.839 | 0.064 | 0.836 | 0.829 |
| CIFAR10 | ReLU | 0.818 | 0.038 | 0.818 | 0.807 |
| CIFAR10 | SERF | 0.827 | 0.049 | 0.829 | 0.819 |
| CIFAR10 | GELU | 0.818 | 0.039 | 0.819 | 0.809 |
| CIFAR10 | Mish | 0.829 | 0.038 | 0.828 | 0.819 |
| CIFAR10 | PSR | 0.873 | 0.012 | 0.844 | 0.852 |
| CIFAR10 | LPSR | 0.864 | 0.029 | 0.864 | 0.862 |
| CIFAR100 | SiLU | 0.589 | 0.036 | 0.278 | 0.279 |
| CIFAR100 | ELU | 0.576 | 0.039 | 0.375 | 0.374 |
| CIFAR100 | EELU | 0.448 | 0.049 | 0.549 | 0.538 |
| CIFAR100 | ReLU | 0.540 | 0.028 | 0.541 | 0.539 |
| CIFAR100 | SERF | 0.537 | 0.017 | 0.530 | 0.536 |
| CIFAR100 | GELU | 0.529 | 0.038 | 0.527 | 0.517 |
| CIFAR100 | Mish | 0.524 | 0.047 | 0.527 | 0.518 |
| CIFAR100 | PSR | 0.606 | 0.024 | 0.586 | 0.587 |
| CIFAR100 | LPSR | 0.596 | 0.245 | 0.576 | 0.578 |
| MNIST | SiLU | 0.991 | 0.004 | 0.989 | 0.989 |
| MNIST | ELU | 0.985 | 0.023 | 0.982 | 0.982 |
| MNIST | EELU | 0.975 | 0.003 | 0.973 | 0.972 |
| MNIST | ReLU | 0.963 | 0.003 | 0.964 | 0.967 |
| MNIST | SERF | 0.973 | 0.004 | 0.979 | 0.972 |
| MNIST | GELU | 0.959 | 0.003 | 0.954 | 0.954 |
| MNIST | Mish | 0.939 | 0.035 | 0.938 | 0.938 |
| MNIST | PSR | 0.994 | 0.003 | 0.991 | 0.991 |
| MNIST | LPSR | 0.901 | 0.270 | 0.889 | 0.899 |
| Fashion MNIST | SiLU | 0.911 | 0.018 | 0.910 | 0.909 |
| Fashion MNIST | ELU | 0.870 | 0.049 | 0.871 | 0.869 |
| Fashion MNIST | EELU | 0.924 | 0.049 | 0.928 | 0.914 |
| Fashion MNIST | ReLU | 0.914 | 0.041 | 0.915 | 0.907 |
| Fashion MNIST | SERF | 0.928 | 0.039 | 0.927 | 0.917 |
| Fashion MNIST | GELU | 0.909 | 0.049 | 0.903 | 0.897 |
| Fashion MNIST | Mish | 0.913 | 0.038 | 0.918 | 0.904 |
| Fashion MNIST | PSR | 0.931 | 0.009 | 0.929 | 0.929 |
| Fashion MNIST | LPSR | 0.929 | 0.402 | 0.917 | 0.928 |

ResNet50 results on the four datasets.

| Dataset | Activation | ACC | Std | Precision | Recall |
|---|---|---|---|---|---|
| CIFAR10 | SiLU | 0.829 | 0.015 | 0.829 | 0.827 |
| CIFAR10 | ELU | 0.824 | 0.012 | 0.823 | 0.822 |
| CIFAR10 | EELU | 0.827 | 0.017 | 0.825 | 0.819 |
| CIFAR10 | ReLU | 0.807 | 0.037 | 0.802 | 0.804 |
| CIFAR10 | SERF | 0.797 | 0.004 | 0.798 | 0.793 |
| CIFAR10 | GELU | 0.768 | 0.001 | 0.769 | 0.768 |
| CIFAR10 | Mish | 0.789 | 0.014 | 0.784 | 0.780 |
| CIFAR10 | PSR | 0.846 | 0.009 | 0.846 | 0.845 |
| CIFAR10 | LPSR | 0.814 | 0.020 | 0.815 | 0.812 |
| CIFAR100 | SiLU | 0.535 | 0.023 | 0.253 | 0.254 |
| CIFAR100 | ELU | 0.557 | 0.015 | 0.262 | 0.263 |
| CIFAR100 | EELU | 0.460 | 0.028 | 0.459 | 0.459 |
| CIFAR100 | ReLU | 0.528 | 0.004 | 0.529 | 0.527 |
| CIFAR100 | SERF | 0.528 | 0.007 | 0.527 | 0.520 |
| CIFAR100 | GELU | 0.479 | 0.008 | 0.479 | 0.472 |
| CIFAR100 | Mish | 0.490 | 0.039 | 0.490 | 0.489 |
| CIFAR100 | PSR | 0.538 | 0.023 | 0.253 | 0.254 |
| CIFAR100 | LPSR | 0.467 | 0.032 | 0.219 | 0.221 |
| MNIST | SiLU | 0.992 | 0.002 | 0.990 | 0.990 |
| MNIST | ELU | 0.992 | 0.002 | 0.990 | 0.989 |
| MNIST | EELU | 0.987 | 0.006 | 0.982 | 0.986 |
| MNIST | ReLU | 0.968 | 0.003 | 0.961 | 0.968 |
| MNIST | SERF | 0.939 | 0.003 | 0.928 | 0.929 |
| MNIST | GELU | 0.957 | 0.002 | 0.958 | 0.957 |
| MNIST | Mish | 0.978 | 0.003 | 0.977 | 0.969 |
| MNIST | PSR | 0.992 | 0.002 | 0.989 | 0.989 |
| MNIST | LPSR | 0.992 | 0.003 | 0.989 | 0.989 |
| Fashion MNIST | SiLU | 0.903 | 0.008 | 0.908 | 0.907 |
| Fashion MNIST | ELU | 0.905 | 0.009 | 0.909 | 0.907 |
| Fashion MNIST | EELU | 0.900 | 0.008 | 0.901 | 0.904 |
| Fashion MNIST | ReLU | 0.897 | 0.003 | 0.897 | 0.893 |
| Fashion MNIST | SERF | 0.849 | 0.005 | 0.848 | 0.849 |
| Fashion MNIST | GELU | 0.879 | 0.007 | 0.872 | 0.875 |
| Fashion MNIST | Mish | 0.892 | 0.007 | 0.896 | 0.890 |
| Fashion MNIST | PSR | 0.911 | 0.008 | 0.909 | 0.909 |
| Fashion MNIST | LPSR | 0.906 | 0.010 | 0.906 | 0.904 |

DenseNet121 results on the four datasets.

| Dataset | Activation | ACC | Std | Precision | Recall |
|---|---|---|---|---|---|
| CIFAR10 | SiLU | 0.841 | 0.012 | 0.839 | 0.838 |
| CIFAR10 | ELU | 0.828 | 0.019 | 0.828 | 0.826 |
| CIFAR10 | EELU | 0.827 | 0.039 | 0.829 | 0.823 |
| CIFAR10 | ReLU | 0.839 | 0.038 | 0.839 | 0.835 |
| CIFAR10 | SERF | 0.838 | 0.063 | 0.830 | 0.839 |
| CIFAR10 | GELU | 0.851 | 0.074 | 0.851 | 0.850 |
| CIFAR10 | Mish | 0.840 | 0.038 | 0.840 | 0.840 |
| CIFAR10 | PSR | 0.859 | 0.011 | 0.857 | 0.857 |
| CIFAR10 | LPSR | 0.842 | 0.014 | 0.841 | 0.839 |
| CIFAR100 | SiLU | 0.588 | 0.018 | 0.277 | 0.278 |
| CIFAR100 | ELU | 0.572 | 0.023 | 0.269 | 0.271 |
| CIFAR100 | EELU | 0.559 | 0.039 | 0.559 | 0.553 |
| CIFAR100 | ReLU | 0.573 | 0.040 | 0.572 | 0.575 |
| CIFAR100 | SERF | 0.568 | 0.063 | 0.560 | 0.562 |
| CIFAR100 | GELU | 0.578 | 0.038 | 0.570 | 0.573 |
| CIFAR100 | Mish | 0.539 | 0.048 | 0.533 | 0.540 |
| CIFAR100 | PSR | 0.608 | 0.016 | 0.286 | 0.288 |
| CIFAR100 | LPSR | 0.589 | 0.018 | 0.278 | 0.279 |
| MNIST | SiLU | 0.992 | 0.002 | 0.989 | 0.989 |
| MNIST | ELU | 0.991 | 0.003 | 0.989 | 0.989 |
| MNIST | EELU | 0.978 | 0.003 | 0.979 | 0.970 |
| MNIST | ReLU | 0.959 | 0.002 | 0.958 | 0.950 |
| MNIST | SERF | 0.983 | 0.005 | 0.983 | 0.972 |
| MNIST | GELU | 0.939 | 0.004 | 0.938 | 0.928 |
| MNIST | Mish | 0.950 | 0.007 | 0.948 | 0.942 |
| MNIST | PSR | 0.993 | 0.002 | 0.991 | 0.991 |
| MNIST | LPSR | 0.992 | 0.002 | 0.990 | 0.989 |
| Fashion MNIST | SiLU | 0.905 | 0.008 | 0.904 | 0.903 |
| Fashion MNIST | ELU | 0.904 | 0.009 | 0.903 | 0.902 |
| Fashion MNIST | EELU | 0.894 | 0.006 | 0.893 | 0.891 |
| Fashion MNIST | ReLU | 0.893 | 0.005 | 0.890 | 0.884 |
| Fashion MNIST | SERF | 0.859 | 0.006 | 0.859 | 0.859 |
| Fashion MNIST | GELU | 0.889 | 0.007 | 0.882 | 0.881 |
| Fashion MNIST | Mish | 0.824 | 0.074 | 0.829 | 0.829 |
| Fashion MNIST | PSR | 0.912 | 0.007 | 0.909 | 0.909 |
| Fashion MNIST | LPSR | 0.907 | 0.009 | 0.907 | 0.905 |

ViT results on the four datasets.

| Dataset | Activation | ACC | Std | Precision | Recall |
|---|---|---|---|---|---|
| CIFAR10 | SiLU | 0.662 | 0.035 | 0.647 | 0.646 |
| CIFAR10 | ELU | 0.663 | 0.044 | 0.647 | 0.646 |
| CIFAR10 | EELU | 0.668 | 0.037 | 0.584 | 0.582 |
| CIFAR10 | ReLU | 0.668 | 0.048 | 0.588 | 0.587 |
| CIFAR10 | SERF | 0.648 | 0.039 | 0.577 | 0.576 |
| CIFAR10 | GELU | 0.629 | 0.058 | 0.558 | 0.556 |
| CIFAR10 | Mish | 0.659 | 0.039 | 0.565 | 0.564 |
| CIFAR10 | PSR | 0.672 | 0.029 | 0.669 | 0.668 |
| CIFAR10 | LPSR | 0.669 | 0.019 | 0.657 | 0.656 |
| CIFAR100 | SiLU | 0.527 | 0.015 | 0.516 | 0.514 |
| CIFAR100 | ELU | 0.543 | 0.020 | 0.522 | 0.520 |
| CIFAR100 | EELU | 0.547 | 0.013 | 0.532 | 0.530 |
| CIFAR100 | ReLU | 0.558 | 0.028 | 0.552 | 0.551 |
| CIFAR100 | SERF | 0.537 | 0.038 | 0.538 | 0.536 |
| CIFAR100 | GELU | 0.556 | 0.064 | 0.547 | 0.546 |
| CIFAR100 | Mish | 0.550 | 0.058 | 0.543 | 0.542 |
| CIFAR100 | PSR | 0.569 | 0.013 | 0.557 | 0.556 |
| CIFAR100 | LPSR | 0.569 | 0.017 | 0.552 | 0.550 |
| MNIST | SiLU | 0.729 | 0.009 | 0.718 | 0.717 |
| MNIST | ELU | 0.728 | 0.009 | 0.714 | 0.712 |
| MNIST | EELU | 0.718 | 0.008 | 0.709 | 0.708 |
| MNIST | ReLU | 0.719 | 0.007 | 0.704 | 0.702 |
| MNIST | SERF | 0.698 | 0.005 | 0.638 | 0.637 |
| MNIST | GELU | 0.717 | 0.004 | 0.708 | 0.706 |
| MNIST | Mish | 0.689 | 0.007 | 0.627 | 0.625 |
| MNIST | PSR | 0.730 | 0.007 | 0.708 | 0.707 |
| MNIST | LPSR | 0.731 | 0.005 | 0.729 | 0.727 |
| Fashion MNIST | SiLU | 0.656 | 0.008 | 0.648 | 0.647 |
| Fashion MNIST | ELU | 0.656 | 0.008 | 0.641 | 0.640 |
| Fashion MNIST | EELU | 0.622 | 0.004 | 0.625 | 0.624 |
| Fashion MNIST | ReLU | 0.638 | 0.004 | 0.634 | 0.633 |
| Fashion MNIST | SERF | 0.640 | 0.006 | 0.637 | 0.636 |
| Fashion MNIST | GELU | 0.648 | 0.008 | 0.637 | 0.636 |
| Fashion MNIST | Mish | 0.639 | 0.007 | 0.637 | 0.635 |
| Fashion MNIST | PSR | 0.656 | 0.006 | 0.649 | 0.648 |
| Fashion MNIST | LPSR | 0.657 | 0.003 | 0.659 | 0.658 |

Mean accuracy over the four datasets for each model; the last column averages the four models.

| Activation | VGG16 | ResNet50 | DenseNet121 | ViT | Mean |
|---|---|---|---|---|---|
| SiLU | 0.834 | 0.815 | 0.831 | 0.643 | 0.781 |
| ELU | 0.811 | 0.819 | 0.824 | 0.647 | 0.775 |
| EELU | 0.797 | 0.794 | 0.815 | 0.639 | 0.761 |
| ReLU | 0.809 | 0.800 | 0.816 | 0.647 | 0.768 |
| SERF | 0.817 | 0.778 | 0.812 | 0.631 | 0.759 |
| GELU | 0.804 | 0.771 | 0.814 | 0.637 | 0.757 |
| Mish | 0.801 | 0.787 | 0.788 | 0.634 | 0.753 |
| PSR | 0.851 | 0.822 | 0.843 | 0.657 | 0.793 |
| LPSR | 0.825 | 0.795 | 0.833 | 0.657 | 0.777 |
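As a check on how the summary table appears to be built, the sketch below recomputes the PSR row from the ACC columns of the four per-model result tables. It assumes, as an inference from the numbers rather than a procedure stated in the text, that each model entry is the plain mean over the four datasets and that the final column is the mean over the four models; the dictionary name `PSR_ACC` is illustrative.

```python
# ACC values for PSR transcribed from the four per-model result tables
# (dataset order: CIFAR10, CIFAR100, MNIST, Fashion MNIST).
PSR_ACC = {
    "VGG16": [0.873, 0.606, 0.994, 0.931],
    "ResNet50": [0.846, 0.538, 0.992, 0.911],
    "DenseNet121": [0.859, 0.608, 0.993, 0.912],
    "ViT": [0.672, 0.569, 0.730, 0.656],
}


def mean(values):
    """Arithmetic mean of a non-empty sequence."""
    return sum(values) / len(values)


# Assumed per-model summary: mean ACC over the four datasets.
per_model = {model: mean(accs) for model, accs in PSR_ACC.items()}
# Assumed last column: mean of the four per-model values.
overall = mean(list(per_model.values()))

for model, value in per_model.items():
    print(f"{model:12s} {value:.4f}")
print(f"{'Mean':12s} {overall:.4f}")
```

The unrounded results (0.851, 0.82175, 0.843, 0.65675, and about 0.7931 overall) agree with the PSR row to three decimals, which supports reading the table as simple averages.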

Average rank of each activation function per model (1 = best).

| Activation | VGG16 | ResNet50 | DenseNet121 | ViT | Average |
|---|---|---|---|---|---|
| SiLU | 3.8 | 3.0 | 3.3 | 5.3 | 3.8 |
| ELU | 6.0 | 2.5 | 5.5 | 5.0 | 4.8 |
| EELU | 5.0 | 5.5 | 7.0 | 6.3 | 5.9 |
| ReLU | 5.5 | 5.8 | 6.0 | 4.8 | 5.5 |
| SERF | 4.8 | 7.8 | 6.8 | 7.5 | 6.7 |
| GELU | 7.3 | 8.0 | 5.5 | 6.3 | 6.8 |
| Mish | 6.5 | 6.8 | 7.8 | 7.0 | 7.0 |
| PSR | 1.0 | 1.3 | 1.0 | 1.8 | 1.3 |
| LPSR | 5.5 | 4.8 | 2.3 | 1.3 | 3.4 |
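The average ranks above come from ranking the nine activations within each dataset and averaging over datasets. The sketch below shows that procedure on the VGG16 ACC column only, with rank 1 for the highest accuracy and tied values sharing the mean of their ranks. This is an assumed reconstruction: the text does not state which metrics or tie rule were used, and the result agrees with the VGG16 column for SiLU (3.75, shown as 3.8) and PSR (1.0) but not for every row. The helper names `rank_descending` and `average_ranks` are hypothetical.

```python
# VGG16 ACC values transcribed from the VGG16 results table
# (dataset order: CIFAR10, CIFAR100, MNIST, Fashion MNIST).
VGG16_ACC = {
    "SiLU": [0.843, 0.589, 0.991, 0.911],
    "ELU": [0.812, 0.576, 0.985, 0.870],
    "EELU": [0.839, 0.448, 0.975, 0.924],
    "ReLU": [0.818, 0.540, 0.963, 0.914],
    "SERF": [0.827, 0.537, 0.973, 0.928],
    "GELU": [0.818, 0.529, 0.959, 0.909],
    "Mish": [0.829, 0.524, 0.939, 0.913],
    "PSR": [0.873, 0.606, 0.994, 0.931],
    "LPSR": [0.864, 0.596, 0.901, 0.929],
}


def rank_descending(scores):
    """Rank scores so the largest gets rank 1; ties share the mean of their ranks."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    ranks = [0.0] * len(scores)
    pos = 0
    while pos < len(order):
        end = pos
        # Grow the block while the next score equals the current one.
        while end + 1 < len(order) and scores[order[end + 1]] == scores[order[pos]]:
            end += 1
        # 0-based positions pos..end correspond to ranks pos+1..end+1.
        shared = (pos + 1 + end + 1) / 2
        for k in range(pos, end + 1):
            ranks[order[k]] = shared
        pos = end + 1
    return ranks


def average_ranks(acc_table):
    """Rank methods within each dataset, then average each method's ranks."""
    names = list(acc_table)
    n_datasets = len(next(iter(acc_table.values())))
    totals = dict.fromkeys(names, 0.0)
    for d in range(n_datasets):
        column = [acc_table[name][d] for name in names]
        for name, r in zip(names, rank_descending(column)):
            totals[name] += r
    return {name: total / n_datasets for name, total in totals.items()}


for name, r in sorted(average_ranks(VGG16_ACC).items(), key=lambda kv: kv[1]):
    print(f"{name:5s} {r:.3f}")
```

Converting to the higher-is-better convention of the Friedman tables (10 minus each rank) reproduces the second Friedman table except for ReLU and GELU, which tie on CIFAR10 (0.818) and are averaged here.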

| Activation Function | Dataset-Based Rank | Friedman χ² | p-Value | Hypothesis Test Result |
|---|---|---|---|---|
| SiLU | 6.25 | | | |
| ELU | 3.5 | | | |
| EELU | 5.0 | | | |
| ReLU | 4.5 | | | |
| SERF | 3.0 | 17.93 | < | Reject |
| GELU | 3.0 | | | |
| Mish | 3.75 | | | |
| PSR | 9.0 | | | |
| LPSR | 7.0 | | | |

| Activation Function | Dataset-Based Rank | Friedman χ² | p-Value | Hypothesis Test Result |
|---|---|---|---|---|
| SiLU | 6.25 | | | |
| ELU | 3.75 | | | |
| EELU | 4.75 | | | |
| ReLU | 4.25 | | | |
| SERF | 5.0 | 16.33 | < | Reject |
| GELU | 2.5 | | | |
| Mish | 3.25 | | | |
| PSR | 9.0 | | | |
| LPSR | 6.25 | | | |
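The values 17.93 and 16.33 in the two Friedman tables can be recovered from the dataset-based average ranks with the standard Friedman statistic, χ²_F = 12N/(k(k+1)) · Σ R_j² − 3N(k+1), using N = 4 datasets and k = 9 activation functions. The sketch below does this and adds a p-value from the chi-square upper tail with k − 1 = 8 degrees of freedom, using the closed form that holds for even degrees of freedom so that only the standard library is needed. The 0.05 significance level is an assumption, because the p-value cells are truncated to "<" in this copy; the names `friedman_chi2` and `chi2_sf_even_df` are illustrative.

```python
import math

# Dataset-based average ranks from the two Friedman tables (higher = better).
RANKS_A = {"SiLU": 6.25, "ELU": 3.5, "EELU": 5.0, "ReLU": 4.5, "SERF": 3.0,
           "GELU": 3.0, "Mish": 3.75, "PSR": 9.0, "LPSR": 7.0}
RANKS_B = {"SiLU": 6.25, "ELU": 3.75, "EELU": 4.75, "ReLU": 4.25, "SERF": 5.0,
           "GELU": 2.5, "Mish": 3.25, "PSR": 9.0, "LPSR": 6.25}
N_DATASETS = 4  # CIFAR10, CIFAR100, MNIST, Fashion MNIST
ALPHA = 0.05    # assumed significance level (not stated in this excerpt)


def friedman_chi2(avg_ranks, n):
    """Friedman statistic: 12n/(k(k+1)) * sum(R_j^2) - 3n(k+1)."""
    k = len(avg_ranks)
    s = sum(r * r for r in avg_ranks.values())
    return 12 * n / (k * (k + 1)) * s - 3 * n * (k + 1)


def chi2_sf_even_df(x, df):
    """P(X > x) for a chi-square variable with even df:
    exp(-x/2) * sum_{i < df/2} (x/2)^i / i!."""
    if df % 2:
        raise ValueError("this closed form requires an even df")
    half = x / 2
    term, total = 1.0, 0.0
    for i in range(df // 2):
        if i:
            term *= half / i  # builds (x/2)^i / i! incrementally
        total += term
    return math.exp(-half) * total


for label, ranks in (("first table", RANKS_A), ("second table", RANKS_B)):
    stat = friedman_chi2(ranks, N_DATASETS)
    p = chi2_sf_even_df(stat, df=len(ranks) - 1)
    print(f"{label}: chi2 = {stat:.2f}, p = {p:.4f}, reject H0: {p < ALPHA}")
```

Both statistics exceed the 8-degree-of-freedom critical value at α = 0.05 (about 15.51), giving p-values of roughly 0.02 and 0.04, consistent with the "Reject" entries.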

| Property | SiLU | ELU | EELU | ReLU | SERF | GELU | Mish | PSR | LPSR |
|---|---|---|---|---|---|---|---|---|---|
| Nonlinear | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Upper-bounded | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✓ |
| Lower-bounded | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Differentiable | ✓ | ✓ | ✓ | ✗ | ✗ | ✓ | ✓ | ✗ | ✓ |
| Non-monotonic | ✓ | ✗ | ✓ | ✗ | ✓ | ✓ | ✓ | ✓ | ✓ |
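Several rows of the property table can be probed numerically for the baseline functions. The sketch below uses the standard definitions from the cited baseline papers, assuming α = 1 for ELU and the exact erf form of GELU, and checks monotonicity and the minimum on a finite grid. PSR, LPSR, and EELU are omitted because their definitions are not given in this excerpt (EELU also uses a randomized slope). A grid can only suggest, not prove, boundedness or monotonicity; the helper names `is_monotonic` and `grid_minimum` are hypothetical.

```python
import math


def silu(x):
    return x / (1.0 + math.exp(-x))  # x * sigmoid(x)


def elu(x, alpha=1.0):
    return x if x > 0 else alpha * math.expm1(x)  # alpha * (e^x - 1) for x <= 0


def relu(x):
    return max(0.0, x)


def gelu(x):
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))  # exact x * Phi(x)


def softplus(x):
    return math.log1p(math.exp(x))  # ln(1 + e^x)


def mish(x):
    return x * math.tanh(softplus(x))


def serf(x):
    return x * math.erf(softplus(x))


BASELINES = {"SiLU": silu, "ELU": elu, "ReLU": relu,
             "GELU": gelu, "Mish": mish, "SERF": serf}

GRID = [i / 100 for i in range(-1000, 1001)]  # x in [-10, 10], step 0.01


def is_monotonic(f, grid=GRID, tol=1e-12):
    """True if f never decreases (beyond tol) between consecutive grid points."""
    values = [f(x) for x in grid]
    return all(b >= a - tol for a, b in zip(values, values[1:]))


def grid_minimum(f, grid=GRID):
    """Smallest value of f on the grid (a proxy for the lower bound)."""
    return min(f(x) for x in grid)


for name, f in BASELINES.items():
    print(f"{name:5s} monotonic: {is_monotonic(f)!s:5s}  min: {grid_minimum(f):+.4f}")
```

On this grid ReLU and ELU come out monotonic while SiLU, GELU, Mish, and SERF dip below zero before rising, matching the Non-monotonic row; all six have a finite minimum, consistent with the Lower-bounded row.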

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, X.; Cheng, Y.; Wang, S.; Sang, G.; Nah, K.; Wang, J. A Periodic Mapping Activation Function: Mathematical Properties and Application in Convolutional Neural Networks. Mathematics 2025, 13, 2843. https://doi.org/10.3390/math13172843
