1. Introduction

In recent years, there has been growing interest in opinion dynamics, which studies how the beliefs of a typically large population of individuals evolve over time through repeated interactions within a social network [1]. In continuous opinion dynamics, beliefs (we use the terms beliefs and opinions interchangeably) are represented as scalars or vectors, and each individual's belief moves towards a weighted average of other agents' beliefs, reflecting the influence of social interactions [2]. Traditional models generally predict that, on a connected social network, the agents eventually reach consensus [3]. Exceptions arise, however, in bounded confidence models, where agents disregard others whose beliefs differ too much from their own, and in models with stubborn agents, who resist changing their own opinions but can still sway those of others. Such stubborn agents may represent leaders, political parties, or media sources that aim to influence the beliefs of the broader population [4]. Studies on scaling limits indicate that, in a homogeneous population, the empirical distribution of beliefs converges, as the population size grows, to the solution of a deterministic mean-field differential equation in the space of probability measures. These findings are consistent with the propagation of chaos in interacting particle systems [3]. It is well understood that when agents interact, their opinions tend to converge towards an average [5]. Global interactions often lead to consensus, whereas local interactions typically produce clusters of similar opinions. Clustering occurs when agents engage only with neighbors who share their views and avoid those with differing opinions. To explain the differences among final opinion values, researchers have developed a Lagrangian model of dissensus based on graph theory and stochastic stability theory [6].
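As a minimal illustration of how local interactions produce clusters while global interactions produce consensus, the sketch below uses the Hegselmann–Krause bounded-confidence rule. This rule is our choice for illustration and is not taken from the cited works: each agent moves to the average of all opinions within a confidence radius `eps`. The function names, the opinion range [-1, 1], and the parameter values are hypothetical.

```python
import random


def hk_step(opinions, eps):
    """One synchronous Hegselmann-Krause update: every agent moves to the
    average of all opinions (its own included) within distance eps."""
    updated = []
    for x in opinions:
        close = [y for y in opinions if abs(y - x) <= eps]
        updated.append(sum(close) / len(close))
    return updated


def run_hk(n=50, eps=0.1, steps=100, seed=0):
    """Start from uniform opinions on [-1, 1] and iterate the update."""
    rng = random.Random(seed)
    x = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    for _ in range(steps):
        x = hk_step(x, eps)
    return x


def count_clusters(opinions, tol=1e-6):
    """Count groups of final opinions separated by gaps larger than tol."""
    values = sorted(opinions)
    return 1 + sum(1 for a, b in zip(values, values[1:]) if b - a > tol)


local = run_hk(eps=0.1)     # local interactions
everyone = run_hk(eps=2.0)  # radius spans the whole interval [-1, 1]
print(count_clusters(local), count_clusters(everyone))
```

With a small radius, agents stop listening to distant opinions and the population typically splits into several clusters; a radius as wide as the whole opinion range makes every agent average over everyone, so consensus is reached in a single step.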

Social networks exert significant influence on many aspects of behavior, such as educational attainment [7], employment opportunities [8], technology uptake [9], consumer habits [10], and even smoking [11,12]. Since social networks emerge from individual decisions, understanding consensus plays a pivotal role in explaining how they form. Despite extensive theoretical work on social networks, there has been little investigation of consensus as a Nash equilibrium in stochastic networks. Ref. [12] models the network as a simultaneous-move game in which social ties are formed based on the utility derived from indirect connections, and also introduces a computationally feasible method for partially identifying large social networks. The statistical analysis of network formation traces back to the pioneering work [13], in which a random graph is constructed with independent links, each present with a fixed probability. Beyond the Erdős–Rényi model, numerous techniques have been devised to simulate graphs with features such as varying degree distributions, small-world properties, and Markovian structure. Model-based approaches are valuable if they can be fitted effectively and yield realistic simulations. The Exponential Random Graph Model (ERGM) is widely used because it can reproduce observed network statistics [14,15,16,17]. However, the ERGM lacks the microfoundations needed for counterfactual analysis, whereas economists treat network analysis as the domain of rational agents seeking to optimize their utilities. Another popular framework views networks as evolving through stochastic processes, where the focus lies on the parameters governing the process rather than on individual network realizations [16].
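The independent-link construction of [13] is short to state in code. The sketch below is a hypothetical helper using only Python's standard library; it draws an undirected Erdős–Rényi graph $G(n, p)$ by including each of the $n(n-1)/2$ possible links independently with the same probability $p$, so the expected number of links is $p\,n(n-1)/2$.

```python
import random


def erdos_renyi(n, p, seed=None):
    """Undirected G(n, p): each unordered pair (i, j), i < j, is linked
    independently of all other pairs with the same fixed probability p."""
    rng = random.Random(seed)
    edges = set()
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < p:
                edges.add((i, j))
    return edges


n, p = 200, 0.05
edges = erdos_renyi(n, p, seed=1)
expected_links = p * n * (n - 1) / 2  # 995 for these values
print(len(edges), expected_links)
```

Because every link is drawn independently, the degree of each node is Binomial$(n-1, p)$; richer features such as heavy-tailed degrees or clustering require the alternative models mentioned above.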

A distinction should be drawn between the classical Nash equilibrium of finite-agent stochastic games and the Mean Field Game (MFG) equilibrium. In a Nash equilibrium, each of the $n$ agents optimizes their cost functional given the strategies of all other agents, and no individual agent can reduce their cost by deviating unilaterally. The resulting strategies depend explicitly on the empirical distribution of the population. In contrast, an MFG equilibrium arises in the limit $n \to \infty$, where a representative agent optimizes against the distribution of the population, typically modeled by a McKean–Vlasov process. The MFG equilibrium is then defined by a fixed-point condition: the optimal strategy of the representative agent generates exactly the distribution that the agent anticipates. This limiting framework was formalized by Lasry and Lions [18,19], and a rigorous probabilistic foundation was later developed by Carmona and Delarue [20,21]. In this paper, we derive the optimal strategy of each agent in the finite population and interpret its limiting behavior as an approximation of the MFG equilibrium when the number of agents is large.

To provide intuition before introducing the technical framework, consider a simplified opinion dynamics model with $n$ agents. Each agent's opinion is represented by a point on a one-dimensional line, where proximity reflects similarity in views. At each time step, agents adjust their opinions toward those of nearby agents, with closer opinions exerting stronger influence. Control inputs may be applied to selected agents to steer the group toward consensus or a desired configuration, while random fluctuations represent unpredictable external influences.
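The toy model just described can be simulated directly. The following sketch is one possible reading of it, not the paper's model: it assumes Gaussian proximity weights with bandwidth `h`, a proportional steering control applied to a few hypothetical agents (`controlled`) toward a hypothetical `target` opinion, and additive Brownian noise discretized with the Euler–Maruyama scheme. All names and parameter values are illustrative.

```python
import math
import random


def simulate(n=50, steps=200, dt=0.05, h=0.3, sigma=0.05,
             controlled=(0, 1, 2), target=0.5, gain=2.0, seed=0):
    """Euler-Maruyama sketch of proximity-weighted opinion dynamics.

    Illustrative assumptions (not from the paper): Gaussian interaction kernel
    with bandwidth h, proportional control gain * (target - x) applied only to
    the agents listed in `controlled`, and additive noise of intensity sigma."""
    rng = random.Random(seed)
    x = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    for _ in range(steps):
        new = []
        for i, xi in enumerate(x):
            # Closer opinions receive larger weights (the agent's own weight is 1).
            w = [math.exp(-(xj - xi) ** 2 / (2 * h * h)) for xj in x]
            social = sum(wj * (xj - xi) for wj, xj in zip(w, x)) / sum(w)
            control = gain * (target - xi) if i in controlled else 0.0
            noise = sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
            new.append(xi + (social + control) * dt + noise)
        x = new  # synchronous update: all agents use opinions from the same step
    return x


final = simulate()
print(min(final), max(final), final[0])
```

Setting `sigma=0.0` and `controlled=()` removes noise and control; each update is then a convex combination of the current opinions, so opinions never leave their initial range.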

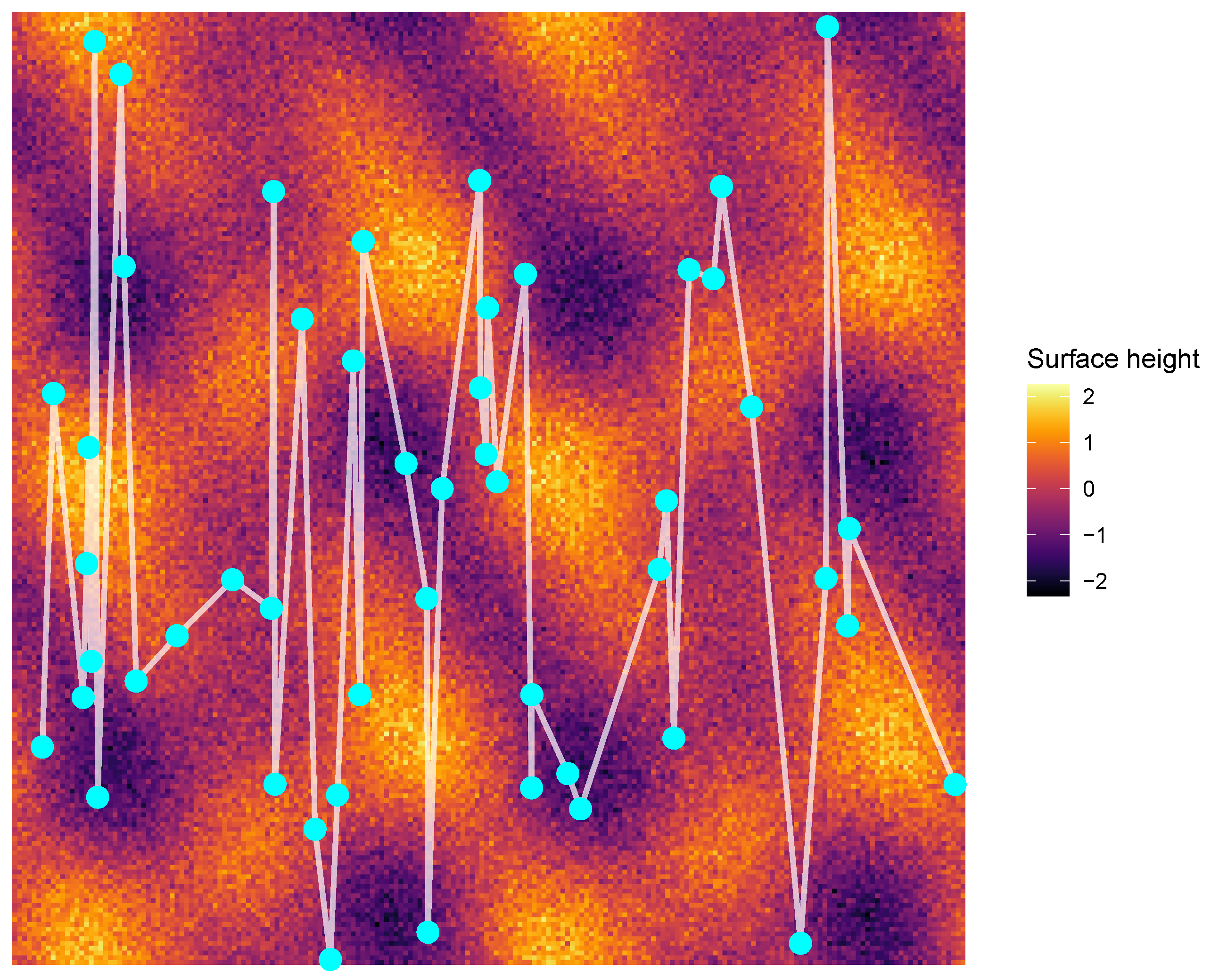

Figure 1 illustrates a group of 50 agents, shown as dots on a random surface that represents an underlying field varying across space. Lines connect agents that interact (i.e., share opinions); here every agent interacts with the other agents. The colors of the surface reflect variation in the background opinion environment, and the agents' positions can change over time in response to their interactions and to the environment.

In this paper, we propose an approach to opinion dynamics within the framework of McKean–Vlasov dynamics. We focus on a stochastic model with time-continuous beliefs in a homogeneous population of agents. Pioneering research on McKean–Vlasov equations was conducted in [22,23], where uncontrolled stochastic differential equations (SDEs) were studied to establish propagation-of-chaos results. Kac's work examined mean-field SDEs driven by classical Brownian motion, offering deeper insight into the Boltzmann and Vlasov kinetic equations. Sznitman [24] also contributed significantly to the understanding of this equation. Subsequent authors have extended this research, and interest has surged over the past decade, largely because of its intersection with Mean Field Game (MFG) theory, which was introduced independently in [19,25]. The McKean–Vlasov equation is instrumental in analyzing settings in which numerous identical agents, interacting through the empirical distribution of their state variables, seek a Nash equilibrium. By contrast, when agents seek a Pareto equilibrium, the stochastic control of McKean–Vlasov SDEs is considered. Although these two equilibria are related, they differ in important respects, as highlighted by Carmona et al. [26]. As these studies indicate, when the number of agents tends to infinity, the individual agents' opinions are expected to evolve independently, each governed by a stochastic differential equation whose coefficients depend on the statistical distribution of the agent's private state. Optimizing each agent's objective function subject to these new state dynamics amounts to the stochastic control of McKean–Vlasov dynamics, a problem that is not yet fully understood; see [27] for an initial exploration in this direction. Returning to our original question, one may ask whether optimizing feedback strategies in this new control problem yields a form of approximate equilibrium for the original $n$-agent game. Even if this problem can be resolved, the crux lies in understanding how an optimal feedback strategy in this setting relates to the predictions of MFG theory.

Furthermore, a class of non-linear HJB equations, namely those in which the noise covariance is inversely proportional to the control cost, can be transformed into linear equations through a logarithmic transformation. This technique dates back to the early days of quantum mechanics, when Schrödinger first used it to connect the HJB equation to the Schrödinger equation. Owing to this linearity, the backward integration of the HJB equation in time can be replaced by computing expectation values under a forward diffusion process, which involves stochastic integration over trajectories described by a path integral [28,29,30]. In more complex cases, such as the Merton–Garman–Hamiltonian system, finding a solution through Pontryagin's maximum principle is impractical, but the Feynman path integral method provides one. Previous applications of the Feynman path integral method include motor control theory [31,32,33,34]. The use of Feynman path integrals in finance has been discussed extensively in [35]. Ref. [36] introduced a Feynman-type path integral to determine a feedback control.
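The logarithmic transformation can be checked on a toy problem whose answer is known. Assume one-dimensional dynamics $dX = u\,ds + \sigma\,dB$, running cost $u^2/2$, terminal cost $\phi(x) = a x^2/2$, and $\lambda = \sigma^2$ (the noise-to-control-cost relation under which the transformation linearizes the HJB equation). Then $V = -\lambda \log \psi$, where $\psi(x, \tau) = \mathbb{E}[\exp(-\phi(x + \sigma B_\tau)/\lambda)]$ is an expectation over the uncontrolled forward diffusion and $\tau$ is the time to go. The sketch below (hypothetical helper names, standard library only) estimates $V$ by Monte Carlo and compares it with the closed-form linear–quadratic value function.

```python
import math
import random


def value_mc(x, tau, a=1.0, sigma=0.5, samples=200_000, seed=0):
    """Monte Carlo value V(x) = -lam * log E[exp(-phi(x + sigma * B_tau) / lam)].

    Toy problem (assumed for illustration): dX = u ds + sigma dB, running cost
    u**2 / 2, terminal cost phi(x) = a * x**2 / 2, time to go tau, and
    lam = sigma**2, which is the condition under which the log transform
    linearises the HJB equation. Only the *uncontrolled* diffusion is sampled."""
    rng = random.Random(seed)
    lam = sigma ** 2
    total = 0.0
    for _ in range(samples):
        x_end = x + sigma * math.sqrt(tau) * rng.gauss(0.0, 1.0)
        total += math.exp(-0.5 * a * x_end ** 2 / lam)
    return -lam * math.log(total / samples)


def value_exact(x, tau, a=1.0, sigma=0.5):
    """Closed-form linear-quadratic value function for the same toy problem."""
    return 0.5 * sigma ** 2 * math.log(1.0 + a * tau) + 0.5 * a * x ** 2 / (1.0 + a * tau)


print(value_mc(0.8, 1.0), value_exact(0.8, 1.0))
```

No backward-in-time equation is solved here: the value function comes entirely from forward samples, which is the point of the transformation.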

Classical mean-field game theory, initiated by Lasry and Lions [18,19,37], provides a general framework for studying Nash equilibria in large populations of agents whose cost functionals depend on the state distribution. In that setting, the analysis is typically conducted through a coupled system of HJB and Fokker–Planck (FP) equations. By contrast, the present work is based on controlled McKean–Vlasov dynamics, in which the emphasis is on the stochastic evolution of interacting particles and their empirical measure. This perspective avoids solving the full PDE system and is closer to probabilistic methods for interacting diffusions. On the numerical side, a considerable body of work has focused on approximating mean-field equilibria with grid-based PDE solvers [38,39], as well as with particle methods and deep learning approaches [40,41,42]. Our contribution is complementary to these developments: rather than approximating the Lasry–Lions equations directly, we formulate an optimal control problem over the particle system itself and carry out the numerical implementation by simulating interacting agents. This approach captures finite-population effects and yields control strategies that remain interpretable in terms of agent-level interactions.

From a numerical standpoint, the literature on the mean-field approach has developed largely along two complementary directions. On the one hand, finite-difference and related PDE-based solvers have been applied extensively to the Lasry–Lions system, yielding accurate approximations of the coupled HJB and FP equations on discretized grids [38,39]. While these approaches are precise in low-dimensional settings, their computational cost grows rapidly with the dimension of the state space, which limits their applicability in high dimensions. On the other hand, simulation-based approaches, often built on interacting particle systems, provide a more scalable alternative. By directly evolving a large but finite set of controlled dynamics, such methods approximate the mean-field limit probabilistically and naturally incorporate stochasticity and finite-population effects. More recently, machine learning and deep reinforcement learning techniques [40,41,42] have extended this particle-based philosophy with data-driven function approximation, enabling numerical treatment of high-dimensional and non-linear problems. The methodology developed in this paper belongs to this latter class: rather than discretizing PDEs, we simulate controlled particle systems and analyze their empirical distributions, thereby retaining interpretability at the agent level while avoiding the curse of dimensionality inherent in grid-based PDE solvers.
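A minimal particle-method sketch follows, assuming the toy McKean–Vlasov dynamics $dX = \kappa(\mathbb{E}[X] - X)\,ds + \sigma\,dB$ (our choice for illustration, not the paper's model). The law in the drift is replaced by the empirical measure of $n$ simulated particles, and the result is compared with the variance of the mean-field limit, which for this linear model solves $dv/ds = -2\kappa v + \sigma^2$.

```python
import math
import random


def particle_system(n=2000, t_end=1.0, dt=0.01, kappa=1.0, sigma=0.5, seed=0):
    """Euler-Maruyama for n interacting particles
        dX_i = kappa * (m_n(s) - X_i) ds + sigma dB_i,
    where m_n(s) is the mean of the empirical measure, standing in for E[X(s)]
    in the McKean-Vlasov drift. Toy model chosen for illustration."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(n)]  # initial law N(0, 1)
    for _ in range(int(round(t_end / dt))):
        m = sum(x) / n  # empirical mean at the current time
        x = [xi + kappa * (m - xi) * dt + sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
             for xi in x]
    return x


def limit_variance(t_end=1.0, kappa=1.0, sigma=0.5, v0=1.0):
    """Variance of the n -> infinity limit law: solves dv/ds = -2*kappa*v + sigma**2."""
    decay = math.exp(-2.0 * kappa * t_end)
    return v0 * decay + sigma ** 2 / (2.0 * kappa) * (1.0 - decay)


particles = particle_system()
mean = sum(particles) / len(particles)
var = sum((p - mean) ** 2 for p in particles) / len(particles)
print(var, limit_variance())
```

Increasing `n` shrinks the gap between the empirical and limiting variances, which is the propagation-of-chaos behavior the particle approach relies on.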

The rest of this paper is structured as follows. In Section 3, we formulate the opinion dynamics along with the cost functional. Section 4 outlines the assumptions and essential properties of stochastic McKean–Vlasov dynamics. In Section 5, we derive the deterministic Hamiltonian and the stochastic Lagrangian of the system. Section 6 presents the main results on Feynman-type path integral control and their application to a Friedkin–Johnsen-type model. Finally, Section 7 concludes the paper and suggests possible future extensions.

3. Construction of a Stochastic Differential Game of Opinion Dynamics

Following [43], consider a social network of $n$ agents represented by a weighted directed graph $(\mathcal{N}, \mathcal{E}, w)$, where $\mathcal{N} = \{1, \dots, n\}$ is the set of all agents. Let $\mathcal{E} \subseteq \mathcal{N} \times \mathcal{N}$ be the set of all ordered pairs of connected agents, and let $w_{ij}$ be the influence of agent $j$ on agent $i$ for all $(i, j) \in \mathcal{E}$. Connections are of two types, one-sided or two-sided: for the principal–agent problem the connection is one-sided (i.e., a Stackelberg model), and for the agent–agent problem it is two-sided (i.e., a Cournot model). Let $x_i(s)$ be the opinion of agent $i \in \mathcal{N}$ at time $s \in [0, t]$, with initial opinion $x_i(0) = x_i^0$. Opinions are normalized to a bounded interval whose lower endpoint stands for strong disagreement and whose upper endpoint represents strong agreement, with all other degrees of agreement in between. Let $\mathbf{x}(s) = [x_1(s), \dots, x_n(s)]^T$ be the opinion profile vector of the $n$ agents at time $s$, where $T$ denotes the transpose of a vector. Following [43], define the cost function of agent $i$ as

$$ L_i(\mathbf{x}, u_i) = \frac{1}{2} \int_0^t \Big\{ \sum_{j \in \eta_i} w_{ij} \big[x_i(s) - x_j(s)\big]^2 + k_i \big[x_i(s) - x_i^0\big]^2 + u_i^2(s) \Big\}\, ds, \qquad (1) $$

where $w_{ij}$ is a parameter that weighs the susceptibility of agent $j$ to influence agent $i$, $k_i$ is agent $i$'s stubbornness, $u_i(s)$ is an adaptive control process of agent $i$ taking values in a convex open set in $\mathbb{R}$, and $\eta_i = \{ j \in \mathcal{N} : (i, j) \in \mathcal{E} \}$ is the set of all agents with whom $i$ interacts. In this paper, $u_i$ represents agent $i$'s control over their own opinion as well as their influence on other agents' opinions. The cost function $L_i$ is twice differentiable with respect to time in order to satisfy the Wick rotation, continuously differentiable with respect to agent $i$'s control $u_i$, non-decreasing in opinion $x_i$, non-increasing in $x_j$, and convex and continuous in all opinions and controls [44,45]. The opinion dynamics of agent $i$ follow the McKean–Vlasov stochastic differential equation

$$ dx_i(s) = \mu_i\big[s, x_i(s), \mathcal{L}(x_i(s)), u_i(s)\big]\, ds + \sigma_i\big[s, x_i(s), \mathcal{L}(x_i(s)), u_i(s)\big]\, dB_i(s), \qquad (2) $$

with the initial condition $x_i(0) = x_i^0$, where $\mu_i$ and $\sigma_i$ are the drift and diffusion functions, $\mathcal{L}(x_i(s))$ is the probability law of the opinion of agent $i$, and $B_i(s)$ is a Brownian motion. We include a Brownian motion in agent $i$'s opinion dynamics motivated by Hebbian learning, which states that neurons increase the strength of the synaptic connection between them when they are active simultaneously; this behavior is probabilistic in the sense that the availability of resources from a particular place is random [46,47]. For example, for a given stubbornness and influence from agent $j$, agent $i$'s opinion dynamics still involve some randomness: agent $i$ may learn from other sources that the information conveyed by agent $j$'s influence is misleading. Moreover, if humans are viewed as automata, motor control and foraging for food are prominent examples of cost minimization (or expected-return maximization) [47]. Because control problems such as motor control are stochastic in nature (there is noise in the relation between muscle contraction and the actual displacement of the joints, and the information environment changes over time), we use the Feynman path integral approach to determine the stochastic control subject to the opinion dynamics in Equation (2) [48,49]. The coefficient of the control term in Equation (1) is normalized to 1 without loss of generality. The cost functional in Equation (1) is viewed as a model of agent $i$'s motive towards a prevailing social issue [43]. The aim of this paper is to characterize a feedback Nash equilibrium $\mathbf{u}^* = [u_1^*, \dots, u_n^*]^T$ such that, for every agent $i$,

$$ \mathbb{E}_0\big\{ L_i(\mathbf{x}, u_i^*) \,\big|\, \mathcal{F}_0^{x_i} \big\} = \min_{u_i} \mathbb{E}_0\big\{ L_i(\mathbf{x}, u_i) \,\big|\, \mathcal{F}_0^{x_i} \big\} $$

subject to Equation (2), where $\mathbb{E}_0$ denotes the expectation of $L_i$ at time 0 conditional on agent $i$'s opinion filtration $\mathcal{F}_0^{x_i}$, generated by the Brownian motion $B_i$ starting at the initial time 0, on a complete probability space $(\Omega, \mathcal{F}, \mathbb{P})$. A solution to this problem is a feedback Nash equilibrium because the control of agent $i$ at time $s$ is updated based on the opinion at the same time $s$.
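To make the objective concrete, the sketch below estimates each agent's expected cost by Monte Carlo. It rests on assumptions stated here explicitly: a quadratic Friedkin–Johnsen-type running cost $\frac{1}{2}\big[\sum_j w_{ij}(x_i - x_j)^2 + k_i (x_i - x_i^0)^2 + u_i^2\big]$, drift $\mu_i = u_i$ with constant diffusion $\sigma$, and a hypothetical gradient-type feedback $u_i = -\big[\sum_j w_{ij}(x_i - x_j) + k_i (x_i - x_i^0)\big]$. This feedback depends only on the opinions at the current time $s$, as a feedback strategy should, but it is not claimed to be the Nash equilibrium characterized in this paper. Setting `W[i][j] = 0` for agents outside agent $i$'s neighborhood restricts the sum accordingly.

```python
import math
import random


def expected_costs(x0, W, k, sigma=0.1, t=1.0, dt=0.01, paths=200, seed=0):
    """Monte Carlo estimate of E[L_i] for every agent i.

    Hypothetical choices for illustration: running cost
        0.5 * (sum_j W[i][j] * (x_i - x_j)**2 + k[i] * (x_i - x0[i])**2 + u_i**2),
    dynamics dx_i = u_i ds + sigma dB_i, and the gradient-type feedback
        u_i = -(sum_j W[i][j] * (x_i - x_j) + k[i] * (x_i - x0[i])),
    which is *not* claimed to be the feedback Nash equilibrium."""
    rng = random.Random(seed)
    n = len(x0)
    totals = [0.0] * n
    for _ in range(paths):
        x = list(x0)
        for _ in range(int(round(t / dt))):
            # Feedback: each control depends only on the opinions at the current time.
            u = [-(sum(W[i][j] * (x[i] - x[j]) for j in range(n)) + k[i] * (x[i] - x0[i]))
                 for i in range(n)]
            for i in range(n):
                running = (sum(W[i][j] * (x[i] - x[j]) ** 2 for j in range(n))
                           + k[i] * (x[i] - x0[i]) ** 2 + u[i] ** 2)
                totals[i] += 0.5 * running * dt  # left-point Riemann sum in time
            x = [x[i] + u[i] * dt + sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
                 for i in range(n)]
    return [total / paths for total in totals]


W = [[0.0, 1.0, 0.5],   # W[i][j] = w_ij, influence of agent j on agent i
     [1.0, 0.0, 0.0],
     [0.5, 0.5, 0.0]]
k = [0.2, 1.0, 0.0]     # stubbornness k_i
print(expected_costs([-0.8, 0.1, 0.9], W, k))
```

Comparing such estimates across candidate feedback rules is one way to sanity-check a computed equilibrium: no agent should be able to lower its own estimate by changing only its own rule.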

4. Preliminaries

Let $t > 0$ be a fixed finite horizon. Assume $B_i(s)$ is a one-dimensional Brownian motion defined on a probability space $(\Omega, \mathcal{F}, \mathbb{P})$, and $\{\mathcal{F}_s\}_{s \in [0, t]}$ is its natural filtration augmented with an independent $\sigma$-algebra $\mathcal{F}_0$. The McKean–Vlasov stochastic opinion dynamics of agent $i$ are given by Equation (2), where the drift and diffusion coefficients of the opinion $x_i$ are a pair of deterministic functions $(\mu_i, \sigma_i): [0, t] \times \mathbb{R} \times \mathcal{P}_2(\mathbb{R}) \times U \to \mathbb{R} \times \mathbb{R}$, and $u_i$ is the admissible control of agent $i$, assumed to be a progressively measurable process with values in a measurable space $(U, \mathcal{U})$. Here, $U$ is an open subset of a Euclidean space $\mathbb{R}^k$, and $\mathcal{U}$ is the $\sigma$-field induced by the Borel $\sigma$-field of the same Euclidean space [50]. For a metric space $E$ with Borel $\sigma$-field $\mathcal{B}(E)$, we write $\mathcal{P}(E)$ for the set of all probability measures on $(E, \mathcal{B}(E))$, which we endow with the topology of weak convergence [51]. If $E$ is a Polish space $G$ with metric $d$, then for all $r \ge 1$ define

$$ \mathcal{P}_r(G) = \Big\{ \mu \in \mathcal{P}(G) : \int_G d(x_0, x)^r\, \mu(dx) < \infty \Big\}, $$

where $x_0 \in G$ is arbitrary. For $r \ge 1$, the $r$-Wasserstein distance $W_r$ on $\mathcal{P}_r(G)$ is defined by

$$ W_r(\mu, \nu) = \inf_{\pi} \Big( \int_{G \times G} d(x, y)^r\, \pi(dx, dy) \Big)^{1/r} $$

for all $\mu, \nu \in \mathcal{P}_r(G)$, where the infimum is taken over all couplings $\pi \in \mathcal{P}(G \times G)$ of $\mu$ and $\nu$; in other words, $\pi$ has marginals $\mu$ and $\nu$. The space $(\mathcal{P}_r(G), W_r)$ is a Polish space [51]. The term 'non-linear', used to describe Equation (2), does not mean that the drift ($\mu_i$) and diffusion ($\sigma_i$) coefficients are non-linear functions of the state, but that they depend not only on the value of the unknown process $x_i(s)$ but also on its distribution $\mathcal{L}(x_i(s))$ [50]. In this paper, we work with $r = 2$.
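For equally weighted empirical measures on the real line, the infimum over couplings in $W_r$ has a closed form: for $r \ge 1$ the optimal coupling pairs the sorted samples (the monotone rearrangement). The helper below is a minimal sketch of this special case only, with a hypothetical name; general Polish spaces $G$ require solving an optimal transport problem.

```python
def wasserstein_1d(xs, ys, r=2.0):
    """r-Wasserstein distance between two empirical measures on R that have
    the same number of equally weighted atoms. In one dimension the sorted
    (monotone) coupling is optimal for every r >= 1, so no search is needed."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("need two non-empty samples of equal size")
    if r < 1:
        raise ValueError("r must be >= 1")
    pairs = zip(sorted(xs), sorted(ys))
    return (sum(abs(a - b) ** r for a, b in pairs) / len(xs)) ** (1.0 / r)


print(wasserstein_1d([0.0, 1.0], [0.0, 0.0]))  # sqrt(1/2), about 0.7071
```

Shifting every sample by a constant $c$ moves the empirical measure by exactly $|c|$ in every $W_r$, a quick check that the sorted coupling behaves as expected.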

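We define the set $\mathbb{A}$ of admissible controls as the set of $U$-valued progressively measurable processes $u_i \in \mathbb{H}^2$, where $\mathbb{H}^2 = \{u \in \mathbb{H}^0 : \mathbb{E}\int_0^t |u(s)|^2\, ds < \infty\}$ is a Hilbert space, with $\mathbb{H}^0$ being the collection of all $\mathbb{R}^k$-valued progressively measurable processes on $[0, t]$. Let $\mathcal{G}$ be a sub-$\sigma$-algebra of $\mathcal{F}$ such that the following assumption holds.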

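Assumption 1. (i) $\mathcal{G}$ and the filtration generated by the Brownian motion $B_i$ of agent $i$ are independent.

Moreover, for every $\gamma \in \mathcal{P}_2(\mathbb{R})$ there exists a $\mathcal{G}$-measurable random variable $Y \in L^2(\Omega, \mathcal{G}, \mathbb{P}; \mathbb{R})$ whose law (distribution) is $\gamma$.

Lemma 1 ([51]).

Let $\mathcal{H}$ be another sub-$\sigma$-algebra of $\mathcal{F}$ on the probability space $(\Omega, \mathcal{F}, \mathbb{P})$. If Assumption 1 holds, then the following statements are equivalent.

(i) There exists an $\mathcal{H}$-measurable random variable with the uniform distribution $\mathcal{U}(0, 1)$.

(ii) $\mathcal{H}$ is "rich enough" in the following sense: $\mathcal{P}_2(\mathbb{R}) = \{\mathcal{L}(\xi) : \xi \in L^2(\Omega, \mathcal{H}, \mathbb{P}; \mathbb{R})\}$.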

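Remark 1.

Consider two sub-$\sigma$-algebras $\mathcal{G}$ and $\mathcal{H}$ of $\mathcal{F}$ on the probability space $(\Omega, \mathcal{F}, \mathbb{P})$ such that $(\Omega, \mathcal{H}, \mathbb{P})$ is atomless. Then statements (i) and (ii) in Lemma 1 are equivalent.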

Assumption 2. (i) There exists a linear, unbounded operator $A$ that generates a $C_0$-semigroup of pseudo-contractions in $\mathbb{H}$.

(ii) The drift and diffusion coefficients are measurable.

(iii) There exists a constant $c > 0$ such that

(iv). is differentiable in , and the derivative is bounded Lipschitz continuous. Hence, there exists a positive constant , such that

(v). Consider and . Therefore, is differentiable in and the derivatives , , and are bounded and Lipschitz continuous. Hence, for a given , there exists a constant , so that

Assumption 3.

Under a feedback control structure of the society, there exists a measurable function $\phi_i$ such that $u_i(s) = \phi_i(s, \mathbf{x}(s))$, for which Equation (2) admits a solution.

Remark 2.

This assumption is standard in stochastic control theory, where feedback (or closed-loop) controls guarantee the measurability and admissibility of control strategies [52,53]. In the context of social networks, feedback-type control structures capture the fact that agents adjust their opinions dynamically based on their current state and the observed states of their neighbors. Empirical and theoretical studies in opinion dynamics also support this modeling choice, since adaptive responses to social influence are more realistic than open-loop strategies [1,54,55]. Such assumptions have also been used in the control of mean-field models and networked systems, where measurable feedback ensures both tractability and real-world interpretability [56].

Assumption 4. (i) Let $\mathcal{K}$ denote the information (or knowledge) space of the society, assumed to be a measurable space. For each agent $i$, we define an information set $\mathcal{K}_i(s) \subseteq \mathcal{K}$, which represents the collection of signals or knowledge available to agent $i$ at time $s \in [0, t]$. The family $\{\mathcal{K}_i(s)\}_{i = 1, \dots, n}$ is such that $\mathcal{K}_i(s) \neq \mathcal{K}_j(s)$ whenever $i \neq j$, reflecting heterogeneity in access to information across agents. Moreover, we assume that each $\mathcal{K}_i(s)$ is non-empty, measurable, and evolves monotonically in time, i.e., $\mathcal{K}_i(s_1) \subseteq \mathcal{K}_i(s_2)$ whenever $s_1 \le s_2$.

(ii) The initial cost functional of the society is concave in its arguments. For each agent $i$, the individual cost functional is denoted by $L_i$ and is also assumed to be concave. This condition is equivalent to the Slater condition (see [57]), ensuring feasibility and dual attainability in the associated optimization problem.

(iii) For each admissible control $u_i$, there exists a sufficiently small $\delta > 0$ such that a quadratic-form lower bound holds for the expected cost. This ensures a uniform coercivity condition on the expected quadratic cost.

Remark 3.

Assumption 4 reflects the realistic heterogeneity of information across agents in social networks, a feature emphasized in the literature on bounded confidence models and Bayesian learning in networks [1]. The monotonicity of information sets formalizes the idea that knowledge does not decrease over time, consistent with cumulative learning models [58]. The concavity of the cost functional and its agent-specific restrictions parallel standard convex optimization assumptions (e.g., the Slater condition), ensuring the well-posedness of the equilibrium and duality [57]. Finally, the uniform coercivity condition in (iii) is common in quadratic cost formulations of stochastic control and mean-field games [20,56], guaranteeing the stability of optimal strategies and preventing degenerate solutions.

Remark 4.

Assumption 3 guarantees that at least one fixed point can exist in the knowledge space. It is important to note that the agent makes decisions based on all available information. Lemma 2 below shows that the fixed point is indeed unique. Assumption 4 implies that each agent has some initial cost functional at the beginning of $[0, t]$, and that the conditional expected cost functional is positive throughout this time interval.

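Lemma 2.

Suppose agent $i$'s initial opinion $x_i^0$ is independent of the Brownian motion $B_i$, and $\mu_i$ and $\sigma_i$ satisfy Assumptions 1 and 2. Then there exists a unique solution to the opinion dynamics represented by Equation (2). Moreover, there exists a positive constant $C$, depending on the time horizon $t$ and on the Lipschitz constants of $\mu_i$ and $\sigma_i$, such that the unique solution satisfies $\mathbb{E}|x_i(s)|^2 \le C\,(1 + \mathbb{E}|x_i^0|^2)$ for all $s \in [0, t]$.

Remark 5.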

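Lemma 2 guarantees that the stochastic opinion dynamics in Equation (2) admit a unique solution (fixed point) and that its expectation is bounded in the Polish space $G$. Assume that the set of admissible strategies $\mathbb{A}$ is convex and $u_i \in \mathbb{A}$. Define $x_i = x_i^{u_i}$ as the opinion controlled by $u_i$, i.e., the solution of Equation (2) with initial opinion $x_i^0$. The first objective is to determine the Gâteaux derivative of the cost functional at $u_i$ in all directions. Consider another admissible strategy $v_i \in \mathbb{A}$ and set $\beta_i = v_i - u_i$, so that $v_i = u_i + \beta_i$. Then $\beta_i$ can be regarded as the direction of the Gâteaux derivative of the cost functional [59]. For every $\epsilon \in (0, 1]$ small enough, define the strategy $u_i^\epsilon = u_i + \epsilon \beta_i = (1 - \epsilon) u_i + \epsilon v_i$, which is admissible by the convexity of $\mathbb{A}$, and the corresponding controlled opinion $x_i^\epsilon = x_i^{u_i^\epsilon}$. Furthermore, define the variational process $V_i$ as the solution of

$$ \begin{aligned} dV_i(s) = {}& \Big[\partial_x \mu_i(s, \theta_i(s))\, V_i(s) + \tilde{\mathbb{E}}\big[\partial_\mu \mu_i(s, \theta_i(s))(\tilde{x}_i(s))\, \tilde{V}_i(s)\big] + \partial_u \mu_i(s, \theta_i(s))\, \beta_i(s)\Big]\, ds \\ &+ \Big[\partial_x \sigma_i(s, \theta_i(s))\, V_i(s) + \tilde{\mathbb{E}}\big[\partial_\mu \sigma_i(s, \theta_i(s))(\tilde{x}_i(s))\, \tilde{V}_i(s)\big] + \partial_u \sigma_i(s, \theta_i(s))\, \beta_i(s)\Big]\, dB_i(s), \qquad V_i(0) = 0, \end{aligned} $$

where $\theta_i(s) = (x_i(s), \mathcal{L}(x_i(s)), u_i(s))$, $(\tilde{x}_i, \tilde{V}_i)$ is an independent copy of $(x_i, V_i)$, and $\tilde{\mathbb{E}}$ is the expectation over that copy. The derivative $\partial_\mu$ with respect to the measure argument is a Fréchet-type derivative. This notion of differentiability was introduced by Pierre-Louis Lions at the Collège de France [50,59]. It is based on lifting functions $F: \mathcal{P}_2(\mathbb{R}^d) \to \mathbb{R}$ into functions $\tilde{F}$ defined on the Hilbert space $L^2(\tilde{\Omega}; \mathbb{R}^d)$ over some probability space $(\tilde{\Omega}, \tilde{\mathcal{F}}, \tilde{\mathbb{P}})$, by setting $\tilde{F}(\tilde{X}) = F(\mathcal{L}(\tilde{X}))$ for all $\tilde{X} \in L^2(\tilde{\Omega}; \mathbb{R}^d)$, with $\tilde{\Omega}$ a Polish space and $\tilde{\mathbb{P}}$ an atomless measure [50]. Since the number of agents $n$ is large, instead of tracking the opinions of every other agent, agent $i$ considers the distribution $\mu$ of all opinions in the system when forming their opinion. Accordingly, a function $F$ of the distribution of opinions is said to be differentiable at $\mu_0 \in \mathcal{P}_2(\mathbb{R}^d)$ if there exists a random opinion $\tilde{X}_0$ with probability distribution $\mu_0$ (i.e., $\mathcal{L}(\tilde{X}_0) = \mu_0$) such that the lift $\tilde{F}$ is Fréchet differentiable at $\tilde{X}_0$. The Fréchet derivative of $\tilde{F}$ at $\tilde{X}_0$ is viewed as an element of the Hilbert space $L^2(\tilde{\Omega}; \mathbb{R}^d)$ by identifying this space with its dual [50]. An important feature of this construction is that the distribution of the derivative depends only on $\mu_0$, not on the particular choice of $\tilde{X}_0$. Fréchet differentiability of $\tilde{F}$ at $\tilde{X}_0$ means that

$$ \tilde{F}(\tilde{X}) = \tilde{F}(\tilde{X}_0) + D\tilde{F}(\tilde{X}_0) \cdot (\tilde{X} - \tilde{X}_0) + o\big(\|\tilde{X} - \tilde{X}_0\|_2\big), $$

where $D\tilde{F}(\tilde{X}_0)$ is the Fréchet derivative, the dot denotes the inner product of the Hilbert space over $\tilde{\Omega}$, and $\|\cdot\|_2$ is the norm of that Hilbert space. It is well known that the Fréchet derivative can be written as $D\tilde{F}(\tilde{X}_0) = \xi(\tilde{X}_0)$ for a deterministic function $\xi: \mathbb{R}^d \to \mathbb{R}^d$ that is uniquely defined $\mu_0$-almost everywhere on $\mathbb{R}^d$ [59,60]. The equivalence class of $\xi$ is denoted by $\partial_\mu F(\mu_0)$, and $\partial_\mu F(\mu_0)(\cdot)$ is called the partial derivative of $F$ at $\mu_0$, so that

$$ D\tilde{F}(\tilde{X}_0) = \partial_\mu F(\mu_0)(\tilde{X}_0). $$

The partial derivative $\partial_\mu F(\mu_0)$ thus expresses the Fréchet derivative $D\tilde{F}(\tilde{X}_0)$ as a function of any random variable $\tilde{X}_0$ with law $\mu_0$, irrespective of how $\tilde{X}_0$ is constructed. For example, if $F(\mu) = \int_{\mathbb{R}^d} g(x)\, \mu(dx)$ for some scalar differentiable function $g$ on $\mathbb{R}^d$, then $\tilde{F}(\tilde{X}) = \tilde{\mathbb{E}}[g(\tilde{X})]$ and $D\tilde{F}(\tilde{X}) \cdot \tilde{Y} = \tilde{\mathbb{E}}[\partial g(\tilde{X}) \cdot \tilde{Y}]$, so that $\partial_\mu F(\mu)$ is the deterministic function $\partial g$ [50].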
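The example $F(\mu) = \int g\, d\mu$ can be verified numerically. The sketch below approximates the law $\mu$ by samples, lifts $F$ to a function of the random variable, and compares a finite-difference directional derivative of the lift along a direction $\tilde{Y}$ with $\tilde{\mathbb{E}}[\partial g(\tilde{X})\, \tilde{Y}]$. The choices $g = \sin$, $\mu = \mathcal{N}(0, 1)$, the uniform direction, and the helper names are arbitrary illustrations.

```python
import math
import random


def lift(g, xs):
    """Lift F(mu) = E[g(X)] to a function of an (empirical) random variable X."""
    return sum(g(x) for x in xs) / len(xs)


def gateaux(g, xs, ys, eps=1e-6):
    """Central finite-difference derivative of the lift at X in the direction Y."""
    plus = lift(g, [x + eps * y for x, y in zip(xs, ys)])
    minus = lift(g, [x - eps * y for x, y in zip(xs, ys)])
    return (plus - minus) / (2 * eps)


g, dg = math.sin, math.cos  # example g and its derivative, chosen for illustration
rng = random.Random(0)
xs = [rng.gauss(0.0, 1.0) for _ in range(10_000)]    # samples of X ~ mu
ys = [rng.uniform(-1.0, 1.0) for _ in range(10_000)]  # direction Y
fd = gateaux(g, xs, ys)
formula = sum(dg(x) * y for x, y in zip(xs, ys)) / len(xs)  # E[d_mu F(mu)(X) * Y]
print(fd, formula)
```

The two printed values agree up to finite-difference error, illustrating that $\partial_\mu F(\mu) = \partial g$ does not depend on the particular samples used to represent $\mu$.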

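Lemma 3.

For a small $\epsilon > 0$, let $u_i^\epsilon = u_i + \epsilon \beta_i$ be the admissible strategy defined above, with corresponding opinion $x_i^\epsilon$ of agent $i$. Then the following condition holds:

$$ \lim_{\epsilon \to 0} \mathbb{E} \sup_{s \in [0, t]} \Big| \frac{x_i^\epsilon(s) - x_i(s)}{\epsilon} - V_i(s) \Big|^2 = 0, $$

where $x_i(s)$ is agent $i$'s opinion at time $s$, drawn from the set of all opinions in the environment.

Remark 6.

Based on Assumptions 1–3, Lemma 3 guarantees the existence and uniqueness of $V_i$. Furthermore, for any $p \ge 1$, it satisfies $\mathbb{E} \sup_{s \in [0, t]} |V_i(s)|^p < \infty$. In Lemma 3, $V_i$, an element of the Hilbert space $\mathbb{H}^2$, is the derivative of the opinion driven by the agent's strategy in the direction $\beta_i$.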

Lemma 4.

For $\epsilon > 0$ small enough and the time interval $[0, t]$, the cost functional of agent $i$, viewed as a function of the admissible strategy, is Gâteaux differentiable at $u_i$ in the direction $\beta_i$, and its directional derivative can be expressed through the variational process $V_i$ of Lemma 3.

Remark 7.

Lemma 4 determines the directional derivative of the cost functional for $\epsilon > 0$ small enough.

5. The Lagrangian and the Adjoint Processes

For $s \in [0, t]$, let $x_i(s)$ be the opinion dynamics of agent $i$ with initial and terminal points $x_i(0)$ and $x_i(t)$, respectively, and consider the line path integral along this trajectory. In this paper, we use a functional path integral approach, in which the domain of integration is a space of functions [36]. The physicist Richard Feynman introduced the path integral in [61] and popularized it in quantum mechanics. Mathematicians subsequently studied the measurability of this functional integral, and in recent years it has become popular in probability theory [49]. In quantum mechanics, a particle moving from one point to another can follow infinitely many paths between those points, some of which reach the edge of the universe; in the classical limit, the dominant contribution comes from the path of least action. After dividing the time horizon into $n$ small intervals of equal length $\epsilon$, with $\epsilon > 0$ small enough, the Riemann–Lebesgue lemma implies that if a particle reaches the edge of the universe at time $s$, then at a later time it comes back and moves in the opposite direction, so that the path integral is a measurable function [62]. Similarly, since agent $i$ can hold infinitely many opinions, they choose the opinion associated with the least cost subject to the constraint in Equation (2). Furthermore, the Feynman approach is useful for both linear and non-linear stochastic differential equation systems in which constructing and solving an HJB equation numerically is difficult [35].

Definition 1.

For a particle, let $\mathcal{L}(s, y, \dot{y}) = \frac{1}{2} m \dot{y}^2 - \mathcal{V}(y)$ be the Lagrangian in the classical sense in the generalized coordinate $y$ with mass $m$, where $\frac{1}{2} m \dot{y}^2$ and $\mathcal{V}(y)$ are the kinetic and potential energies, respectively. The transition function of the Feynman path integral corresponding to the classical action function $Z = \int_0^t \mathcal{L}(s, y, \dot{y})\, ds$ is defined as the integral of the exponentiated action over all paths with respect to $\mathcal{D}y$, an approximated Riemann measure that represents the positions of the particle at different time points $s$ in $[0, t]$ [36].

Remark 8.

Definition 1 describes the construction of the Feynman path integral in the physical sense. This definition is important for constructing the stochastic Lagrangian of agent $i$.

From Equation (45) of [63], the stochastic Lagrangian of agent $i$ at time $s \in [0, t]$ is defined as in Equation (4), where $\lambda_i(s)$ is the Lagrangian multiplier.

Proposition 1 ([63,64]).

Suppose that, for agent $i$, $u_i^*$ is an admissible strategy and $x_i^*$ is the corresponding opinion. Furthermore, assume that there exists a progressively measurable Lagrangian multiplier $\lambda_i(s)$ such that the following two conditions, Equations (5) and (6), hold. Moreover, assume that the associated mapping is almost surely concave. Then the admissible strategy $u_i^*$ is an optimal strategy of agent $i$.

Remark 9.

Following [63], if $u_i^*$ is the solution of the system represented by Equations (1) and (2) and Condition 7, then there exists a progressively measurable Itô process $\lambda_i(s)$ such that Equations (5) and (6) hold. In Proposition 1, the Lagrangian multiplier is indeed a progressively measurable process. At the beginning of the continuous interval $[s, s + \epsilon]$, for all $\epsilon > 0$, agent $i$ does not have any future information with which to build their opinion. Furthermore, the Lagrangian in Equation (4) is considered in the limit $\epsilon \to 0$.

The adjoint process of the system is

where

and

are two new dual variables that belong to the dual spaces of the spaces in which

and

take their values, such that

like

, and that

. Notice that the deterministic Hamiltonian of the system is

The differences between the above Hamiltonian and Equation (

4) are the presence of

,

,

,

and

. If

, under the deterministic case, the Hamiltonian and Lagrangian share a similar structure. Since the Feynman path integral approach has been used,

determines the true fluctuation of

, and further inclusion of

facilitates the conditional expectation of a forward looking process for

. The Lagrangian used in Equation (

4) is stochastic, but the usual Hamiltonian of the control theory is deterministic.

Definition 2.

For an admissible strategy $u_i \in \mathbb{A}$ of agent $i$ with corresponding controlled opinion $x_i$, let $(p_i, q_i)$ be any pair of coupled, progressively measurable adjoint stochastic processes satisfying the adjoint equation, where $\tilde{x}_i$ is an independent copy of $x_i$ and $\tilde{\mathbb{E}}$ is the expectation with respect to that independent copy.

Remark 10.

If $\mu_i$ and $\sigma_i$ are independent of the marginal distributions of the process, the extra terms appearing in the adjoint equation in Definition 2 vanish, and the equation reduces to the classical adjoint equation.

In the present setup, the adjoint equation can be written as

It is important to note that, for a given admissible strategy $u_i$ and controlled opinion $x_i$, the existence and uniqueness of a solution of the adjoint equation cannot be established by standard results (for example, Theorem 2.2 in [59]), despite the boundedness of the partial derivatives of $\mu_i$ and $\sigma_i$ and despite the first part of the adjoint equation being linear in the adjoint variables. The main reason is that the joint distribution of the solution process appears in the coefficients of the equation [50,59].

Lemma 5.

Under (v) of Assumption 2, there exists a unique adapted solution of the coupled adjoint progressively measurable stochastics processes, satisfyingin , whereand Sketch of Proof. Here we provide the sketch of the proof. Details are discussed in

Appendix A. We first define the weighted norm,

For a candidate pair

, Proposition 2.2 of [

65] and Theorem 2.2 of [

59] ensure the existence of a solution

to the adjoint equation

This defines a mapping

on

. To establish uniqueness, let

and

. Denote the differences

Applying Itô’s formula to

yields the estimate

where

depends on the Lipschitz constants of the derivatives of

from Assumption (v). Choosing

sufficiently large ensures

, i.e.,

M is a contraction under

.

By the Banach fixed point theorem, there exists a unique fixed point , which is the unique adapted solution of the adjoint system. □
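The role of the weighted norm in this contraction argument can be seen on a much simpler problem. The sketch below is a deterministic, scalar stand-in, not the adjoint system of Lemma 5. It applies Picard iteration to y(t) = y0 + L ∫₀ᵗ y(s) ds. Under the norm ‖y‖_β = max_t e^{-βt}|y(t)|, the corresponding map is a contraction with constant at most L/β. Choosing the weight β large enough therefore makes the Banach fixed-point iteration converge. The names `L`, `beta`, and the grid settings are illustrative assumptions.

```python
import numpy as np


def picard_fixed_point(L=2.0, y0=1.0, T=1.0, n_grid=2001, beta=4.0, tol=1e-10, max_iter=200):
    """Banach/Picard iteration for y(t) = y0 + L * int_0^t y(s) ds on [0, T].

    Under the weighted norm ||y||_beta = max_t exp(-beta t) |y(t)| the map
    M(y) = y0 + L * int_0^t y has contraction constant at most L / beta
    (up to discretization), so beta > L gives convergence from any start.
    """
    t = np.linspace(0.0, T, n_grid)
    dt = t[1] - t[0]
    weight = np.exp(-beta * t)

    def M(y):
        # cumulative trapezoidal integral int_0^t y(s) ds on the grid
        integral = np.concatenate(([0.0], np.cumsum(0.5 * (y[1:] + y[:-1]) * dt)))
        return y0 + L * integral

    y = np.full_like(t, y0)  # any starting guess works for a contraction
    for it in range(1, max_iter + 1):
        y_new = M(y)
        gap = np.max(weight * np.abs(y_new - y))  # weighted distance between iterates
        y = y_new
        if gap < tol:
            break
    return t, y, it


t, y, iterations = picard_fixed_point()
print(iterations, np.max(np.abs(y - np.exp(2.0 * t))))  # fixed point matches y0 * exp(L t)
```

The fixed point is y0·e^{Lt} up to the trapezoidal discretization error. The weight β plays the same role as the weight in the norm defined in the proof.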

Remark 11.

Lemma 5 states that for each admissible strategy , there exists a pair of adjoint processes such that .

For a normalizing constant

, define a transition function from

s to

as

where

is the value of the transition function based on opinion

at time

s with the initial condition

. The penalization constant

is chosen so that the right-hand side of expression (10) equals unity. Therefore, the action function of agent

i in time interval

is

where

is an Itô process such that,

It is important to note that the implicit form of the McKean–Vlasov SDE is replaced by an Itô process. The action

tells us that within

the action of agent

i depends on their opinion

under a feedback structure.
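The normalizing constant is chosen so that the transition function integrates to one. Suppose the transition function is approximated on a finite set of sampled paths, each weighted by the exponential of minus its action. This weighting is an assumption made here for illustration, in the spirit of the Feynman-type construction. Under it, the normalization reduces to a log-sum-exp computation. The parameter `lam` below is a hypothetical temperature-like scale.

```python
import numpy as np


def normalized_path_weights(actions, lam=1.0):
    """Map sampled path actions S_k to probabilities proportional to exp(-S_k / lam).

    Returns the weights (summing to one) and log Z, the log of the
    normalizing constant. `lam` is a hypothetical temperature-like parameter.
    """
    s = -np.asarray(actions, dtype=float) / lam
    s_max = s.max()
    # log-sum-exp: shifting by the maximum avoids overflow/underflow for large |S_k|
    log_z = s_max + np.log(np.sum(np.exp(s - s_max)))
    return np.exp(s - log_z), log_z


weights, log_z = normalized_path_weights([0.0, np.log(2.0), 5.0])
print(weights, weights.sum())
```

Working with log Z rather than Z keeps the normalization numerically stable when actions are large. Without the shift, exp(-S/lam) could underflow to zero for every path.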

Definition 3.

For an optimal opinion , there exists an optimal admissible control such that, for all , the conditional expectation of the cost function is

such that Equation (2) holds, where is the optimal filtration satisfying .

6. Main Results

Suppose that, for an opinion space

and agent

i’s control space

, there exists an admissible control

, and for all

define the integrand of the cost function

as

Proposition 2.

For agent i

(i). The feedback control is a continuously differentiable function,

(ii). The cost functional is smooth on .

(iii). If is an opinion trajectory of agent i, then the feedback Nash equilibrium is the solution of the following equation:

where, for an Itô process

Remark 12.

The central idea of Proposition 2 is to choose appropriately. A natural candidate is a function of the integrating factor of the stochastic opinion dynamics represented in Equation (2). To illustrate the preceding proposition, we present a detailed example that identifies an optimal strategy in McKean–Vlasov SDEs and the corresponding systems of interacting particles. Specifically, we examine a stochastic opinion dynamics model involving six unknown parameters. To construct this example, we combine the equations from [66,67,68]. Following [67], consider a one-dimensional stochastic opinion dynamics model, parameterized by

of the form

where

,

is a standard Brownian motion, and the interaction kernel

, which has the form

This model is often described in terms of the corresponding system of interacting particles, which is represented by

where

is the scale parameter and

is the range parameter. Models of this type appear in a variety of fields, ranging from biology to the social sciences, where

determines how the behavior of one particle (such as an agent’s opinions) affects the behavior of other particles (such as the opinions of others). For a comprehensive discussion of these models, see [66,69,70,71]. In deterministic versions of these models, it is well established that, over time, particles converge into clusters. The number of clusters depends on both the interaction kernel (i.e., the scope and intensity of interactions between particles) and the initial conditions.
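The interacting particle system can be simulated with the Euler–Maruyama scheme. The sketch below assumes a specific form rather than the paper's exact equation. It uses linear decay with rate `theta` and an attractive interaction (1/n) Σ_j φ(|x_i − x_j|)(x_i − x_j). Here φ is a hypothetical smooth bump kernel with scale `a` and range `rho`, supported on |x_i − x_j| < rho. Noise is additive with size `sigma`. All names and default values are illustrative assumptions.

```python
import numpy as np


def bump_kernel(r, a, rho):
    """Hypothetical smooth kernel: a * exp(-0.01 / (1 - (r/rho)^2)) for r < rho, else 0."""
    z = np.asarray(r, dtype=float) / rho
    out = np.zeros_like(z)
    inside = z < 1.0
    out[inside] = a * np.exp(-0.01 / (1.0 - z[inside] ** 2))
    return out


def simulate_particles(n=100, theta=0.5, a=1.0, rho=1.0, sigma=0.1, T=10.0, dt=0.01, seed=0):
    """Euler-Maruyama for dX_i = [-theta X_i - mean_j phi(|X_i - X_j|)(X_i - X_j)] dt + sigma dW_i."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(-2.0, 2.0, size=n)  # assumed initial opinions
    steps = int(round(T / dt))
    path = np.empty((steps + 1, n))
    path[0] = x
    for k in range(steps):
        diff = x[:, None] - x[None, :]  # diff[i, j] = x_i - x_j
        interaction = (bump_kernel(np.abs(diff), a, rho) * diff).mean(axis=1)
        drift = -theta * x - interaction  # decay plus attraction toward nearby opinions
        x = x + drift * dt + sigma * np.sqrt(dt) * rng.standard_normal(n)
        path[k + 1] = x
    return path


path = simulate_particles()
print(np.sort(path[-1]).round(2))  # terminal opinions; clusters appear as groups of close values
```

The all-pairs difference matrix costs O(n²) per step. That is adequate for the population sizes discussed here but would need neighbor lists for much larger n.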

Figure 2 presents contour plots that illustrate the dependence of opinion dynamics on the interaction parameters

(the strength of interaction) and

(the effective range of interaction) for different population sizes

in the McKean–Vlasov framework. Each panel corresponds to a different value of

n and depicts how the joint influence of

and

shapes the stability and convergence of agents’ opinions over time. In the top-left panel (

), where the population is relatively small, the contour structure is irregular and less symmetric. This reflects the strong influence of stochastic fluctuations due to the limited number of interacting agents. In this regime, opinion clusters form in a less predictable way, with random effects playing a central role in determining whether consensus or polarization emerges. The system is therefore more sensitive to both initial conditions and noise.

As the population increases to (top-right), the contours become more structured and approximately symmetric. This indicates that as the number of agents grows, the law of large numbers begins to smooth out random fluctuations, and the underlying mean-field effect of the interaction kernel starts to dominate. At this stage, opinion formation exhibits more stable patterns, and clusters are determined less by chance and more by systematic interaction dynamics. For (bottom-left), the contour lines become even more regular, exhibiting near-circular symmetry centered around the interaction parameter region where the system is most stable. This suggests that the population is now large enough for the McKean–Vlasov approximation to provide an accurate description of the collective dynamics. The influence of randomness is significantly reduced, and the system’s macroscopic behavior is largely governed by the deterministic mean-field equation. Opinion clusters become sharper, and their positions in the opinion space depend primarily on and .

Finally, in the bottom-right panel (), the contour structure shows almost perfect symmetry and smoothness. At this scale, the dynamics are essentially deterministic, with negligible stochastic deviations. The clustering process is entirely driven by the balance between the strength of interactions (how strongly agents adjust their opinions toward their neighbors) and the range of interactions (how far across the opinion space such influence extends). As a result, the opinion distribution evolves in a predictable manner, converging toward stable equilibrium clusters.
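One simple way to quantify clustering across a grid of scale and range values is to sort the terminal opinions and start a new cluster wherever two consecutive values are more than a tolerance apart. The tolerance `gap` and the callback `final_opinions_fn(a, rho)` below are hypothetical. Any routine that returns an array of terminal opinions for given scale and range values can be plugged in.

```python
import numpy as np


def count_clusters(opinions, gap=0.1):
    """Number of clusters after splitting sorted opinions at gaps wider than `gap`."""
    x = np.sort(np.asarray(opinions, dtype=float))
    if x.size == 0:
        return 0
    # every gap wider than `gap` between sorted neighbours starts a new cluster
    return int(np.sum(np.diff(x) > gap)) + 1


def cluster_grid(final_opinions_fn, scales, ranges, gap=0.1):
    """Cluster counts on a (scale, range) grid; rows follow `scales`, columns follow `ranges`."""
    return np.array([[count_clusters(final_opinions_fn(a, rho), gap) for rho in ranges]
                     for a in scales])


print(count_clusters([0.0, 0.01, 0.02, 1.0, 1.05, 3.0]))
```

The resulting grid can be passed to a contour plotting routine. This is one possible summary statistic; the gap-based rule is sensitive to the chosen tolerance when clusters are diffuse.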

Following [68], we use a modified version of the Friedkin and Johnsen model [72]

where

,

defines the set of neighbors of

agent such that

, for all

r is the radius of the neighborhood, and

is the actuator dynamics [68]. After assuming

and

, Equation (16) becomes

To justify the structure of Equation (17), we note that the model synthesizes key elements from opinion dynamics, mean-field interaction kernels, and stochastic control theory. The first term,

, captures the natural tendency of an agent’s opinion to decay toward a neutral baseline over time and reflects internal anchoring behavior consistent with the Friedkin–Johnsen-type framework. The second term incorporates the aggregate influence from other agents through a non-linear interaction kernel

, whose support is bounded and shaped by parameters

and

. This kernel modulates the strength and scope of pairwise interactions based on opinion proximity and is consistent with kinetic models of opinion exchange. The third term,

, arises from an actuator-based control framework, where the actuator gain is assumed to be state-dependent, i.e., proportional to the current opinion magnitude. This formulation ensures that control input scales with opinion intensity, aligning with models where individuals exert greater effort when holding stronger views. Finally, the multiplicative noise term

introduces stochasticity into the dynamics, with volatility increasing with opinion magnitude, a structure motivated by empirical observations in behavioral and social systems. Collectively, these components yield a state-dependent stochastic differential equation with non-linear drift, capturing the coupled effects of self-dynamics, social influence, control, and uncertainty in the evolution of individual opinions.
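The neighbor set and the four terms described above can be combined into a single Euler–Maruyama step. The exact coefficients belong to Equation (17), so the sketch assumes a simplified form. It uses decay −θx_i and an average pull a·mean_{j∈N_i}(x_j − x_i) over neighbors within radius r, a bounded-confidence stand-in for the kernel. It adds a quadratic control term u_i x_i² and multiplicative noise σ x_i dW_i. The names `theta`, `a`, `r`, `u`, `sigma` and this exact functional form are assumptions.

```python
import numpy as np


def neighbor_mask(x, r):
    """mask[i, j] is True when j != i and |x_i - x_j| <= r (neighbourhood of radius r)."""
    d = np.abs(x[:, None] - x[None, :])
    mask = d <= r
    np.fill_diagonal(mask, False)
    return mask


def em_step(x, u, dt, rng, theta=0.5, a=1.0, r=0.5, sigma=0.2):
    """One Euler-Maruyama step of an ASSUMED form of Eq. (17):
    dx_i = [-theta x_i + a * mean_{j in N_i}(x_j - x_i) + u_i x_i^2] dt + sigma x_i dW_i.
    """
    mask = neighbor_mask(x, r)
    counts = mask.sum(axis=1)
    diff = x[None, :] - x[:, None]  # diff[i, j] = x_j - x_i
    # average pull toward neighbours; agents without neighbours feel no pull
    pull = np.where(counts > 0, (mask * diff).sum(axis=1) / np.maximum(counts, 1), 0.0)
    drift = -theta * x + a * pull + u * x ** 2  # decay + social influence + quadratic control
    noise = sigma * x * np.sqrt(dt) * rng.standard_normal(x.size)  # multiplicative noise
    return x + drift * dt + noise


rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=50)
for _ in range(500):
    x = em_step(x, u=0.0, dt=0.01, rng=rng)
print(x.round(3))
```

With a positive control u, the x² term can drive explicit Euler steps to blow up unless dt is small. This behavior mirrors the destabilizing role attributed to the quadratic control term.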

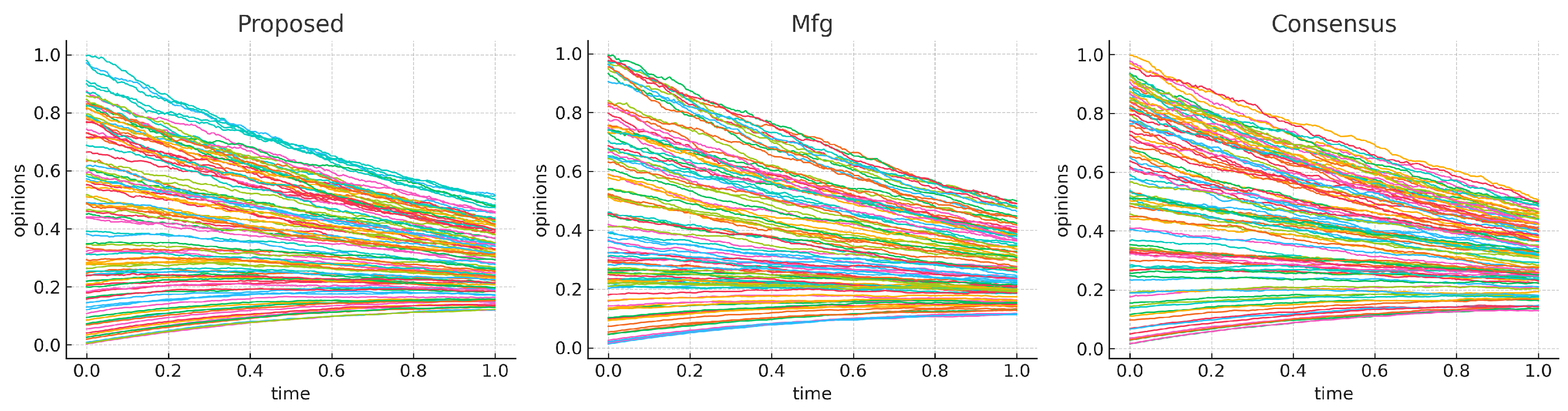

Figure 3 presents the temporal evolution of opinions in the McKean–Vlasov opinion dynamics (17) for different population sizes:

and 500. Each panel corresponds to a specific value of

n, with the standardized opinion values plotted against standardized time. The colored trajectories represent the dynamics of individual agents, while the bold black curve traces the average opinion trajectory across the population.

The opinion dynamics are governed by the stochastic differential equation

where the terms capture, respectively, opinion decay due to stubbornness (

), attraction to neighbors through the interaction kernel

(the second term), a quadratic reinforcement or destabilization effect due to control (

), and stochastic diffusion in opinions driven by multiplicative noise (

).
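This worked example shows what an integrating factor does for dynamics of this type. It keeps only the decay and multiplicative-noise parts, dx_t = −θ x_t dt + σ x_t dW_t. Here θ and σ are constant placeholders for the stubbornness and noise coefficients, and the interaction and control terms are dropped. The result is therefore an illustration rather than the integrating factor used for (17). Itô's product rule gives

$$
F_t = \exp\!\Big(\big(\theta + \tfrac{\sigma^2}{2}\big)t - \sigma W_t\Big), \qquad dF_t = F_t\big[(\theta + \sigma^2)\,dt - \sigma\,dW_t\big], \qquad d\langle F, x\rangle_t = -\sigma^2 F_t x_t\,dt,
$$

$$
d(F_t x_t) = F_t\,dx_t + x_t\,dF_t + d\langle F, x\rangle_t = F_t x_t\big[(-\theta + \theta + \sigma^2 - \sigma^2)\,dt + (\sigma - \sigma)\,dW_t\big] = 0,
$$

so that F_t x_t = x_0 and x_t = x_0 exp(−(θ + σ²/2)t + σW_t). The multiplicative noise enters both the exponent and the Itô correction σ²/2. It therefore keeps injecting variability rather than averaging out.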

The panels reveal a clear pattern: although the trajectories appear organized and the average opinion stabilizes around a central trend, the opinions do not converge to a single cluster as might be expected in classical deterministic consensus models. This lack of convergence can be explained directly by the structure of (17). In particular, the multiplicative noise term

continually injects variability into the system, preventing the formation of stable consensus clusters. Furthermore, the presence of the quadratic control term

amplifies deviations in opinion trajectories, acting as a self-reinforcing mechanism that counteracts the attractive forces of the interaction kernel. Unless

is carefully regulated, this term destabilizes the trajectories and disperses opinions rather than concentrating them.

The influence of the attraction kernel is also crucial in determining whether convergence occurs. If the scale parameter or the range parameter is too small, the effective strength of interactions between agents is insufficient to dominate the stochastic fluctuations and control-induced dispersion. As a result, even though the decay term biases opinions toward zero and the interaction kernel exerts a weak consensus force, these effects cannot fully overcome the opposing forces of noise and quadratic reinforcement. This balance explains why the trajectories remain spread out, with only partial alignment visible in the average opinion curve.

The dependence on n highlights the statistical smoothing properties of the system. For small populations (), individual trajectories are highly dispersed and the average opinion curve is relatively volatile. As n increases to , and 500, the law of large numbers exerts a stabilizing effect: the average opinion trajectory becomes smoother and more predictable, and fluctuations of the population mean diminish. Nevertheless, even in the largest population size shown (), individual opinions remain widely scattered and do not collapse into consensus clusters. This illustrates that simply increasing the number of agents does not resolve the fundamental non-convergence induced by the interplay of noise, self-reinforcement, and weak interaction forces.
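The statistical smoothing described here can be checked in isolation. This check assumes, for simplicity, independent Gaussian perturbations with standard deviation σ and ignores the interaction. Under that assumption, the standard deviation of the population mean shrinks like σ/√n, while the spread across individual agents stays near σ. This is consistent with an average curve that smooths out while individual trajectories remain scattered. The function name and replication count are illustrative.

```python
import numpy as np


def mean_and_individual_spread(n, sigma=1.0, n_rep=4000, seed=0):
    """Monte Carlo spreads for n independent N(0, sigma^2) opinion perturbations.

    Returns (std of the population mean across replications,
             average std across agents within one replication).
    """
    rng = np.random.default_rng(seed)
    samples = rng.normal(0.0, sigma, size=(n_rep, n))
    std_of_mean = samples.mean(axis=1).std()
    individual_spread = samples.std(axis=1).mean()
    return std_of_mean, individual_spread


for n in (10, 100, 500):
    s_mean, s_ind = mean_and_individual_spread(n)
    # s_mean * sqrt(n) stays near sigma, while s_ind does not shrink with n
    print(n, round(s_mean, 4), round(s_mean * np.sqrt(n), 3), round(s_ind, 3))
```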

Our main aim is to minimize Equation (1) subject to Equation (17). The integrating factor of Equation (17) is

. Therefore, the

function is

Equation (12) becomes

Now,

Using the above results, Equation (11) yields

Clearly, Equation (20) is a quadratic equation with respect to the strategy

and can be written as

. Therefore, the optimal strategy of agent

i is

where

and

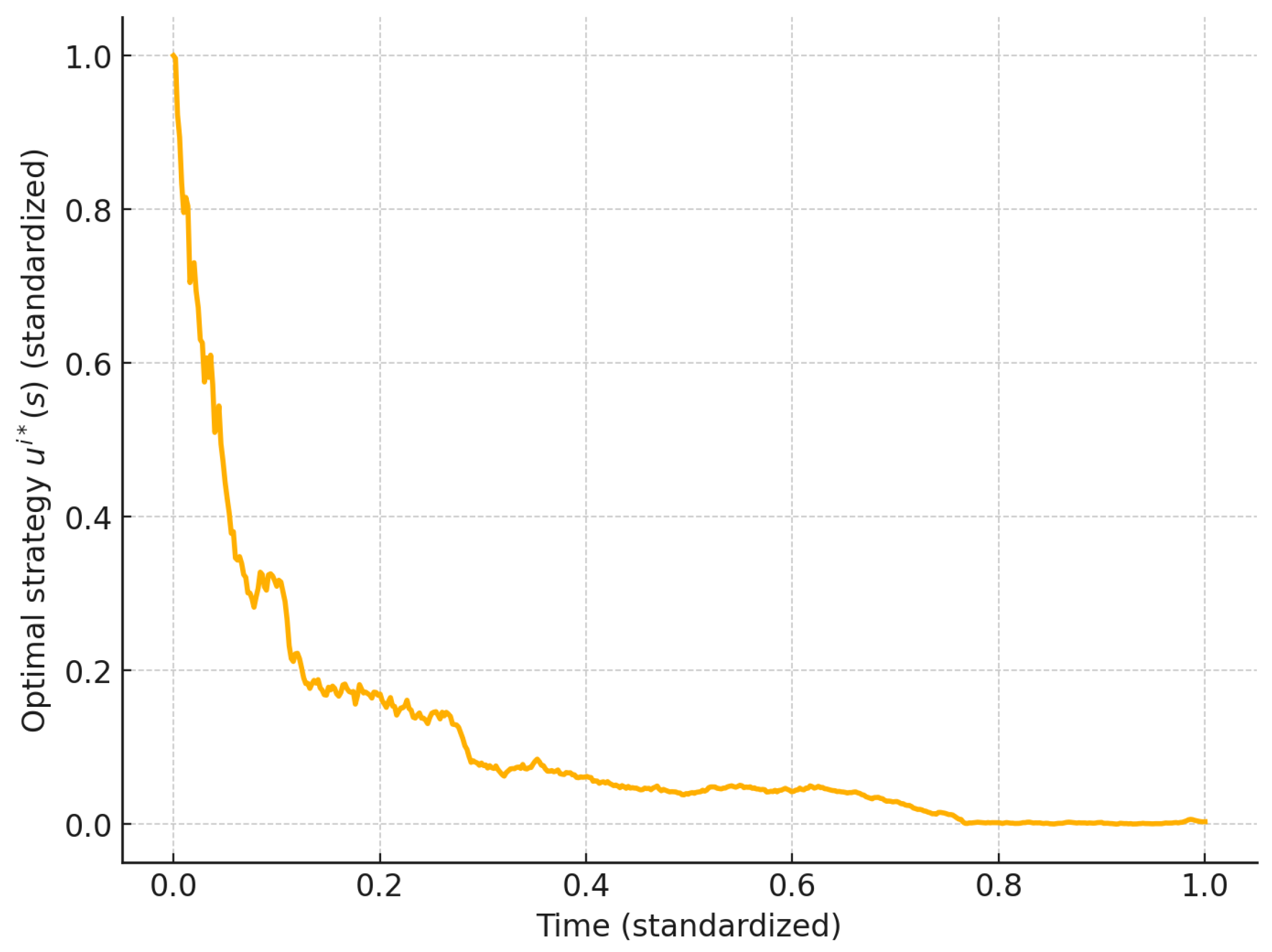

Figure 4 shows the time evolution of the optimal control strategy

for a representative agent. The strategy starts at a high level near

, reflecting strong initial adjustment efforts, and then monotonically decreases as time progresses. The decay indicates that agents exert less control over their opinions in the long run, as the system stabilizes and the marginal benefit of intervention diminishes.

Figure 5 illustrates how the optimal control strategy

varies as a function of the system parameters

and

. Parameter

represents the decay rate of opinion inertia, while

captures the intensity and effective range of interactions through the kernel

. The figure demonstrates that higher values of

lead to stronger suppression of opinion deviations, thus requiring lower control intensity by the agents. Conversely, when

is small, the system exhibits slower natural convergence, and agents must apply stronger control (larger

) to stabilize their opinions. Similarly, parameter

regulates the sensitivity of opinion interactions: when

is large, peer effects dominate, diminishing the necessity for active control. These results highlight the non-linear interplay between individual control efforts and collective opinion dynamics, showing that the optimal control strategy adapts endogenously to both internal resistance (governed by

) and external interaction strength (captured by

).

It is important to clarify the admissible choice of root in (21). Since

by construction, the quadratic function is strictly convex in

. Hence, the minimizer of the quadratic cost is uniquely determined by the smaller root of (21), i.e.,

The larger root would correspond to a maximizer of the quadratic form and is therefore not admissible for an optimal control. Moreover, the discriminant condition

guarantees real-valued strategies. In the case

, both roots coincide and yield the same admissible control. Thus, under the standing assumption of

and the discriminant condition, the unique optimal strategy is given by the negative branch of the quadratic solution above.
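The root-selection rule above can be sketched as a small function. It requires a strictly positive leading coefficient, checks that the discriminant is nonnegative (so that the strategy is real-valued), and returns the smaller root. The coefficient names `A`, `B`, `C` are placeholders for the coefficients of the quadratic in (21); their model-specific expressions are not reproduced in the code.

```python
import math


def admissible_root(A, B, C):
    """Smaller real root of A u^2 + B u + C = 0, assuming A > 0 and B^2 - 4AC >= 0."""
    if A <= 0:
        raise ValueError("requires A > 0 (strict convexity)")
    disc = B * B - 4.0 * A * C
    if disc < 0:
        raise ValueError("negative discriminant: no real-valued strategy")
    sq = math.sqrt(disc)
    # numerically stable pair of roots (avoids cancellation when B^2 >> 4AC)
    q = -0.5 * (B + math.copysign(sq, B))
    if q == 0.0:  # happens only when B == 0 and C == 0: double root at zero
        return 0.0
    return min(q / A, C / q)


print(admissible_root(1.0, -3.0, 2.0))  # roots 1 and 2, smaller root selected
```

The pair q/A and C/q gives the same two roots as (−B ± √Δ)/(2A). Unlike that formula, it avoids subtracting nearly equal numbers when B² is much larger than 4AC. When the discriminant is zero, both expressions collapse to the same double root.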

Remark 13.

From a dynamical perspective, the choice of the negative branch in (21) is also consistent with stability of the controlled state process. Indeed, substituting the larger root into the dynamics amplifies the control magnitude and may lead to diverging trajectories due to the exponential terms present in . In contrast, the smaller root keeps the control bounded and ensures that the associated quadratic cost functional is coercive. This observation is consistent with standard results in stochastic control, where admissible controls must not only minimize the cost functional but also guarantee well-posedness of the controlled dynamics [52,73].

Let us discuss how the optimal strategy of agent i derived in Equation (21) varies with the difference in opinions between

and

agents.

Case I.

Suppose there is no difference in opinions between agents

i and

j. In other words,

, which implies

, and

Therefore,

Since

, the sign of the above partial derivative depends on the terms on both sides of ±. Furthermore, since the optimal strategy cannot be negative and the term after ± in Equation (21) is a dominant negative term, we ignore the + sign. Moreover, assuming

, Equation (22) yields

The above result is true for all positive values of

and

. This implies that an agent’s opinion positively influences their optimal strategy.

Case II.

Suppose that the opinion of agent i is less influential than that of agent j, i.e.,

. By construction

. The terms

and

take the same value as in Case I. The other term is

Hence,

After assuming

, Equation (25) becomes Equation (24). Therefore,

. This implies that agent i’s opinion positively influences

, even if agent j’s opinion is more influential in society. Furthermore,

The above equation shows a negative correlation between the

agent’s optimal strategy and the

agent’s opinion. This implies that as the opinion of the more influential

agent becomes stronger, the

agent becomes more hesitant to make a decision.

10. Conclusions

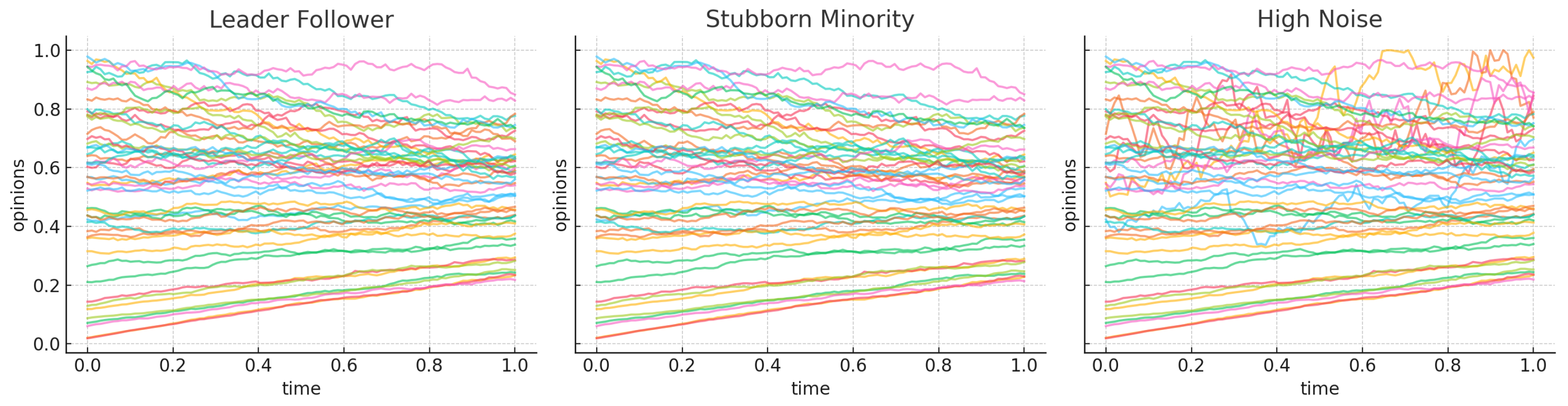

In this paper, we have investigated the problem of estimating an optimal strategy for an agent within a stochastic McKean–Vlasov opinion dynamics framework and its related system of weakly interacting particles. By employing a Feynman-type path integral approach with an integrating factor, we identify a for a social cost function governed by a stochastic McKean–Vlasov equation. Utilizing a variant of the Friedkin–Johnsen-type model, we derive a closed-form solution for agent i’s optimal strategy. We analytically examine the effects of and on agent i’s optimal strategy when and . Due to the complexity of the terms involved, we cannot conclusively determine the impact of and on . However, we find that the optimal strategy of agent i is positively correlated with their own opinion, irrespective of agent j’s influence. We also developed a novel analytical and computational framework for the control of stochastic opinion dynamics modeled by McKean–Vlasov SDEs. Building upon the modified Friedkin–Johnsen-type structure, we introduced a Feynman-type path integral formulation of the control problem, which enables us to bypass the curse of dimensionality inherent in conventional approaches such as HJB equations. Specifically, we derived the explicit form of the optimal control strategy for each agent, expressed as the solution of a quadratic equation, and rigorously clarified the conditions for selecting the admissible root under convexity and discriminant constraints. The proposed strategy minimizes a quadratic cost functional that balances peer influence, individual stubbornness, and control effort, while being consistent with the stochastic dynamics of agents’ opinions. The path integral approach further provides a tractable and scalable method for evaluating the optimal strategy, offering both computational efficiency and analytical transparency compared to traditional stochastic control methods.
We also examined the relationship between Nash equilibria in finite-agent settings and MFG equilibria, showing that the proposed control law converges to the MFG solution as the number of agents grows large. To ensure practical relevance, we relaxed the assumption of agent homogeneity by incorporating heterogeneous influence weights, stubbornness levels, and noise intensities, and demonstrated that the control framework remains functional under such heterogeneity, while producing distinctive phenomena such as leader–follower effects, stubborn minorities, and persistent fluctuations in high-noise subgroups. Through numerical experiments and sensitivity analyses, we illustrated the dynamic evolution of opinions under varying network configurations and parameters, confirming that stronger peer influence accelerates consensus up to a saturation threshold. Comparative results highlighted that, although conventional HJB equation-based methods perform adequately in small-scale systems, the path integral control is more efficient and scalable in large-scale networks. Taken together, our results establish a unified framework that not only advances the theoretical understanding of controlled opinion dynamics but also provides practical computational tools for analyzing consensus, polarization, and control in large and heterogeneous social networks. Future work may extend this framework to adaptive network topologies, incorporate learning-based strategies for real-time control, and explore empirical calibration of model parameters in applications ranging from online social media dynamics to collective decision-making systems.

The optimal control strategy

obtained in (21) should be understood as the Nash equilibrium strategy in the finite-agent stochastic differential game defined by (17). A natural question concerns its relation to the MFG equilibrium. Under standard regularity conditions, including Lipschitz continuity of the drift and diffusion coefficients, convexity of the cost functional, and exchangeability of the agents, the sequence of Nash equilibria of the n-player game converges to the unique MFG equilibrium as n → ∞; see, e.g., refs. [19,20,37]. Thus, the explicit strategy derived here may be interpreted not only as a finite-agent Nash equilibrium but also as an approximation to the MFG solution when the agent population is sufficiently large. This interpretation provides an important bridge between the finite stochastic control formulation used in this paper and the classical MFG framework.

For future research, it would be valuable to extend our results to scenarios where the diffusion coefficient is unknown and to conduct numerical analyses under various social network structures. This extension is of interest because, for a wide range of McKean–Vlasov SDEs, the uniqueness (or non-uniqueness) of the invariant measure(s) depends on the magnitude of the noise coefficient. Additionally, extending this problem to fractional McKean–Vlasov opinion dynamics could allow a more accurate assessment of the influence of and on .