Abstract

This paper presents a comprehensive analysis of artificial intelligence-powered automated grading systems (AI AGSs) in STEM education, systematically examining their algorithmic foundations, mathematical modeling approaches, and quantitative evaluation methodologies. AI AGSs enhance grading efficiency by providing instant feedback at scale and reducing educators’ workloads. Compared to traditional manual grading, these systems improve consistency and scalability, supporting a wide range of assessment types, from programming assignments to open-ended responses. This paper provides a structured taxonomy of AI techniques, including logistic regression, decision trees, support vector machines, convolutional neural networks, transformers, and generative models, and analyzes their mathematical formulations and performance characteristics. It further examines critical challenges, such as user trust issues, potential biases, and students’ over-reliance on automated feedback, alongside quantitative evaluation using precision, recall, F1-score, and Cohen’s Kappa. The analysis also covers feature engineering strategies for diverse educational data types and prompt engineering methodologies for large language models. Lastly, we highlight emerging trends, including explainable AI and multimodal assessment systems, offering educators and researchers a mathematical foundation for understanding AI AGSs and implementing them in educational practice.

Keywords:

automated grading systems; machine learning algorithms; mathematical modeling; AI-powered assessment; STEM education; educational technology

MSC: 68T02

1. Introduction

The assessment and quantification of students’ knowledge acquisition is central to evaluating educational effectiveness. Formative assessment provides ongoing feedback to students through assignments, while summative assessment methods such as written examinations serve as crucial tools for measuring learning outcomes [1]. In recent years, educational institutions have increasingly explored automated grading systems (AGSs) powered by artificial intelligence (AI) to enhance efficiency, reduce grading bias and provide timely feedback. Although automated grading is practiced across many disciplines, this paper focuses specifically on STEM (Science, Technology, Engineering and Mathematics) education.

1.1. Manual vs. Automated Grading

Manual grading has long been standard practice for teachers to assess student performance in traditional classroom learning. It allows them to give personalized feedback and capture nuances in students’ answers. However, its scalability is limited. As student enrolment numbers and administrative workloads grow [2], teachers increasingly struggle to provide timely feedback. The problem is exacerbated for more complex, time-consuming assessments such as programming assignments or essays, which leads teachers to set fewer assignments despite the critical need for regular practice to attain mastery [3,4]. Moreover, for Massive Open Online Courses (MOOCs), which attract millions of students globally, manual grading is simply infeasible [5].

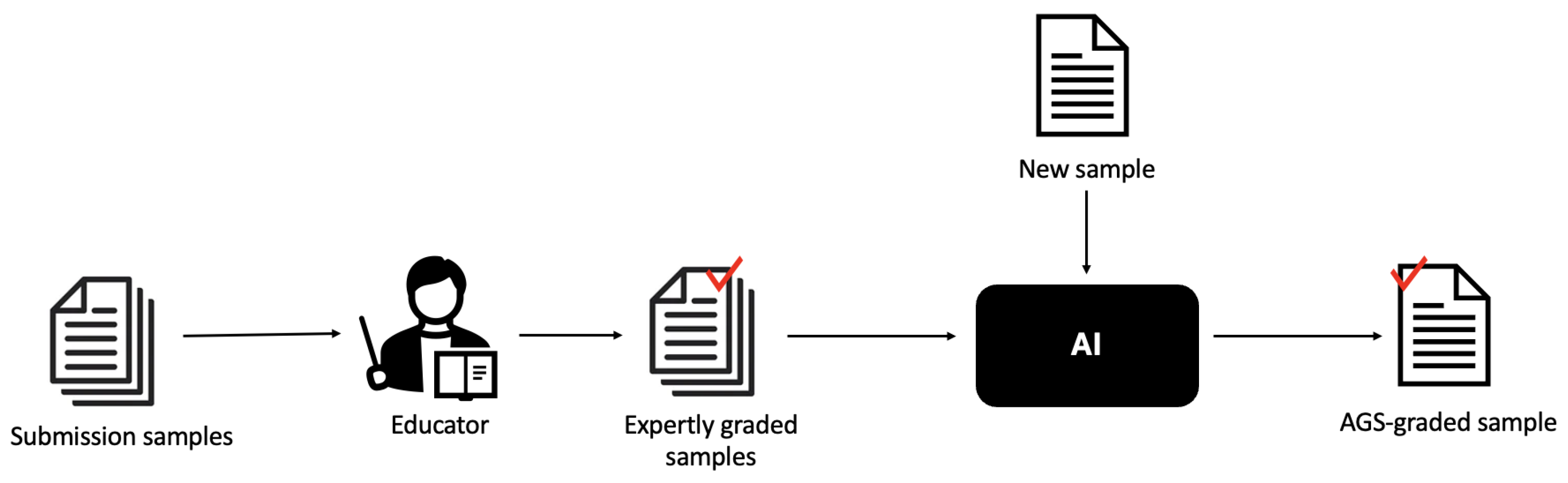

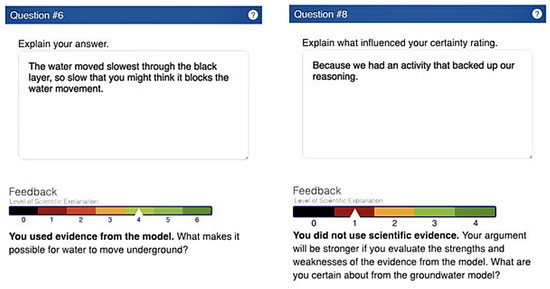

In light of such challenges, AGSs have emerged as a promising solution for shortening the grading process and providing rapid feedback to large student cohorts [6]. AGSs are online software tools or platforms that automatically output a grade (and/or feedback) given a student’s work as input. They provide students with near-instant scores and feedback upon submission, helping them pinpoint and rectify misconceptions quickly and independently [7,8]. Furthermore, by reducing teachers’ grading burden, AGSs free up time for tasks such as giving one-to-one support to students, engaging them in academic discussions, preparing for class or pursuing professional development [9]. For example, instructors using zyLabs, a dynamic analysis AGS for coding exercises, save an average of 9 h (with some reporting over 20 h) per week on grading [10]. Consequently, educators can set assignments more frequently [11], improving students’ knowledge as they practice more. That said, some educators may still prefer a human-in-the-loop approach, which allows them to review and override grades in the rare cases where the AGS produces an inaccurate grade. Even then, the AGS still substantially reduces the overall time spent on grading by automating the bulk of routine assessments. The interactions of educators and students with the AGS are depicted in Figure 1.

Figure 1.

Interaction flows between students, educators and the AGS.

Beyond scalability, AGSs differ from manual grading in several other ways. For example, students view manual grading as subjective and therefore unfair, especially for more creative assignments like essays, because different assessors, or even the same assessor on different occasions, may award different scores [12]. The AGS tends to be more objective and consistent, as it assigns the same score to the same submission even across multiple resubmissions. While educators’ grading can be inadvertently influenced by personal knowledge of some students [13], the AGS has no relationships with students and prioritizes merit, minimizing such biases.

Some AGSs also allow multiple submissions before the final deadline, providing feedback each time. Students can then revise their work after evaluating their progress and learning from the misconceptions identified in earlier submissions, which promotes active learning and improves performance [11,14]. Students especially value this benefit, as evidenced by Manzoor et al.’s [15] implementation of the Web-CAT AGS for coding exercises completed in Jupyter Notebooks. Not only did this AGS significantly reduce instructors’ workloads, it also improved the student learning experience: 89.7% of students reported that immediate feedback enhanced their performance, and 91% would recommend Web-CAT to future instructors. Students made an average of 11 submissions per homework, making incremental and continuous improvements after viewing the feedback on each submission. Because they can view their results right away, students are more motivated to upload an improved answer to achieve a higher score before the deadline [16]. Such an iterative learning process can help students increase mastery levels in different domains [17]. In fact, students who engage in self-regulated learning and persist through the “productive struggle” (e.g., submitting multiple times and working on their solutions until they obtain a better grade) ultimately achieve stronger results [18].

An added benefit of AGSs stems from the data amassed from students’ submissions and results, which can be organized into informative learning analytics dashboards. These dashboards can be given to teachers to help them identify gaps in their teaching approach or assessment design (e.g., whether questions were too easy or too difficult), or shared with students for reflection [19,20,21]. For example, Coursera, an online platform that hosts MOOCs and uses AGSs, informs teachers when many students answer a particular question incorrectly on an assignment [7]. Using insights gleaned from these data, teachers can further refine their strategies to meet students’ needs.
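To make the dashboard idea concrete, the sketch below computes a per-question error rate from submission records and flags questions that many students answered incorrectly. The record format and the 50% flagging threshold are hypothetical choices for illustration; this is not how Coursera or any specific platform implements the feature.

```python
from collections import defaultdict

# Hypothetical submission record: (student_id, question_id, is_correct)
Submission = tuple[str, str, bool]


def question_error_rates(submissions: list[Submission]) -> dict[str, float]:
    """Return the fraction of incorrect answers for each question."""
    attempts: dict[str, int] = defaultdict(int)
    errors: dict[str, int] = defaultdict(int)
    for _student, question, correct in submissions:
        attempts[question] += 1
        if not correct:
            errors[question] += 1
    return {q: errors[q] / attempts[q] for q in attempts}


def flag_difficult_questions(submissions: list[Submission],
                             threshold: float = 0.5) -> list[str]:
    """Questions whose error rate exceeds the (assumed) threshold, hardest first."""
    rates = question_error_rates(submissions)
    flagged = [q for q, r in rates.items() if r > threshold]
    return sorted(flagged, key=lambda q: rates[q], reverse=True)


log = [
    ("s1", "Q1", True), ("s2", "Q1", True), ("s3", "Q1", False),
    ("s1", "Q2", False), ("s2", "Q2", False), ("s3", "Q2", True),
    ("s1", "Q3", False), ("s2", "Q3", False), ("s3", "Q3", False),
]
print(question_error_rates(log))
print(flag_difficult_questions(log))  # ['Q3', 'Q2']
```

A real dashboard would typically also break these rates down by cohort or by submission attempt, but the core aggregation is the same.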

1.2. AI vs. Non-AI Grading

STEM assessments must evaluate multiple dimensions of student understanding: mathematical accuracy in calculations and formula derivation, logical reasoning in multi-step problem solving, spatial understanding in geometric and scientific diagrams, procedural knowledge in laboratory techniques and programming implementations, and the ability to interpret and create visual representations such as graphs, molecular structures, and engineering schematics [22]. These diverse assessment requirements, combined with the precision demanded in STEM fields where a single computational error can invalidate an entire solution, create particular challenges for automated grading systems that must balance accuracy with partial credit assignment.

AGSs can be further classified into AI-powered and non-AI-powered tools. Non-AI AGSs are typically used for assessment types with clear-cut correctness criteria or fixed solutions, such as multiple-choice and true-or-false questions, where students’ work can be matched against a predefined answer key. In programming courses, a non-AI AGS may assess student code using dynamic program analysis: it compiles a submitted program, runs unit tests on a set of predefined inputs and compares the program’s outputs with the expected outputs to check whether it passes the test cases [23,24]. While such graders can efficiently automate a teacher’s work, they lack nuance because they grade solely on the correctness of outputs. They fail to give credit for partially correct or non-running programs, whose failures may stem from minor errors or oversights even when the student’s approach is correct [17]. Engineering university students raised similar complaints about the rigidity of an AGS for physics problems that accepted an answer only if it fell within a predefined numeric interval. Noting that the system “was only able to correct answers, but not assess the method used to solve the problem”, students were concerned that small errors (e.g., a wrong sign or a miscalculation in one step) could cost them all the points despite using the right strategy. This may leave students dissatisfied and lead them to perceive the system as incapable of evaluating true conceptual understanding [25] and problem-solving skills. Such reactions are unfavorable, as students’ perception of learning tools is an important determinant of depth of learning and learning outcomes [14]. AGSs that grade based on keyword matching face similar problems, as they reward rote memorization of specific terms rather than conceptual understanding. For example, such a grader may mark an answer as wrong even when the student used a synonym of the keyword defined in the answer key [26].
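The three rule-based strategies described above (test-case execution, numeric-interval checking and keyword matching) can each be sketched in a few lines. The function names, test cases, tolerance interval and example answers are hypothetical; the point is only to show why such graders are rigid: a program with one slip, an answer with a wrong sign, and a correct answer phrased with a synonym all lose credit.

```python
from typing import Callable


def grade_by_tests(student_fn: Callable, cases: list[tuple[tuple, object]]) -> float:
    """Dynamic analysis: run the submission on predefined inputs, compare outputs.

    Returns the fraction of test cases passed; a runtime error fails that case.
    """
    passed = 0
    for args, expected in cases:
        try:
            if student_fn(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crash earns no credit, regardless of the approach used
    return passed / len(cases)


def grade_numeric(answer: float, low: float, high: float) -> int:
    """Accept a numeric answer only if it lies inside a predefined interval."""
    return 1 if low <= answer <= high else 0


def grade_keywords(answer: str, keywords: set[str]) -> float:
    """Keyword matching: fraction of required keywords that appear verbatim."""
    words = set(answer.lower().split())
    return len(keywords & words) / len(keywords)


def student_factorial(n: int) -> int:
    """A student's factorial with a single slip in the base case."""
    result = 1
    for k in range(1, n + 1):
        result *= k
    return result if n > 0 else 0  # slip: should return 1 when n == 0


cases = [((0,), 1), ((1,), 1), ((3,), 6), ((5,), 120)]
print(grade_by_tests(student_factorial, cases))                        # 0.75
print(grade_numeric(-9.81, 9.7, 9.9))                                  # 0 (wrong sign)
print(grade_keywords("energy is conserved", {"energy", "conserved"}))  # 1.0
print(grade_keywords("energy is preserved", {"energy", "conserved"}))  # 0.5 (synonym)
```

None of these functions can see *why* an answer is wrong, which is exactly the limitation that motivates AI-based graders.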

Furthermore, non-AI AGSs are limited in their ability to process unstructured data (e.g., text, images and audio). As such, they cannot evaluate open-ended assessments such as essays and diagrams, which can evoke higher-order thinking and reflect a student’s ability to apply what they have learned more effectively than simple multiple-choice questions [22].

In contrast, AI-powered AGSs address many of these limitations by leveraging technologies such as natural language processing and machine learning. They can process both structured and unstructured data, and can even assess the quality, creativity and depth of understanding shown in students’ work, offering a more nuanced and comprehensive evaluation. This is because AI “simulates the natural intelligence of human into machines” [27], allowing machines to take on human tasks such as language translation and speech and visual recognition, and to make complex decisions [28] in different domains, depending on the algorithm used and the corpus of knowledge it was trained on. Consequently, AI AGSs can competently score more complex assessments, such as class diagrams [29] and textual scientific explanations [30].

Given the transformative potential of AI AGSs to provide nuanced and versatile assessments, this review will focus on exploring their applications, benefits, and challenges in detail.

1.3. Evaluating AGSs

The efficacy of an AGS can be evaluated in various ways. Its accuracy and reliability in scoring student submissions are typically measured by machine-human score agreement, that is, by comparing machine-generated grades with human-assigned grades (the gold standard). Various statistical metrics have been used to quantify this agreement and provide a comprehensive picture of an AGS’s performance [31]. They include precision (the proportion of responses the AGS labels as correct that are actually correct), recall (the proportion of actually correct responses that the AGS successfully identifies as correct), and the F1-score (the harmonic mean of precision $P$ and recall $R$, $F_1 = 2PR/(P + R)$) [32]. Accuracy is the proportion of all samples for which the AGS predicts the correct grade. Cohen’s Kappa measures inter-rater reliability: it assesses how much two raters agree on grade assignments beyond what would be expected by chance. It is calculated as $\kappa = (p_o - p_e)/(1 - p_e)$, where $p_o$ is the observed agreement between raters and $p_e$ is the hypothetical probability of chance agreement [33]. A value of 1 indicates perfect agreement and 0 indicates agreement no better than chance; negative values indicate agreement worse than chance.
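These agreement metrics can be computed directly from paired human and machine labels. The sketch below assumes binary labels (1 = correct, 0 = incorrect) with the human grade taken as ground truth, and the two label vectors are invented for illustration. Cohen's Kappa is implemented for any label set using $\kappa = (p_o - p_e)/(1 - p_e)$, where $p_e$ sums, over labels, the product of the two raters' marginal proportions.

```python
from collections import Counter


def binary_metrics(human: list[int], machine: list[int]) -> dict[str, float]:
    """Precision, recall, F1 and accuracy, treating the human grade as ground truth."""
    pairs = list(zip(human, machine))
    tp = sum(h == 1 and m == 1 for h, m in pairs)  # correct answers the AGS accepted
    fp = sum(h == 0 and m == 1 for h, m in pairs)  # wrong answers the AGS accepted
    fn = sum(h == 1 and m == 0 for h, m in pairs)  # correct answers the AGS rejected
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = sum(h == m for h, m in pairs) / len(pairs)
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


def cohens_kappa(rater_a: list, rater_b: list) -> float:
    """Cohen's Kappa for two raters grading the same items (any set of labels)."""
    n = len(rater_a)
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n  # observed agreement
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: both raters independently pick the same label.
    p_e = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    return (p_o - p_e) / (1 - p_e)  # undefined if p_e == 1 (a single shared label)


human = [1, 1, 0, 1, 0, 0, 1, 0, 1, 1]
machine = [1, 0, 0, 1, 0, 1, 1, 0, 1, 1]
print(binary_metrics(human, machine))          # precision = recall = F1 = 5/6, accuracy 0.8
print(round(cohens_kappa(human, machine), 3))  # (0.8 - 0.52) / (1 - 0.52) -> 0.583
```

Here the raw accuracy (0.8) is noticeably higher than Kappa (about 0.58), because both raters label most items as correct and therefore agree fairly often by chance alone.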

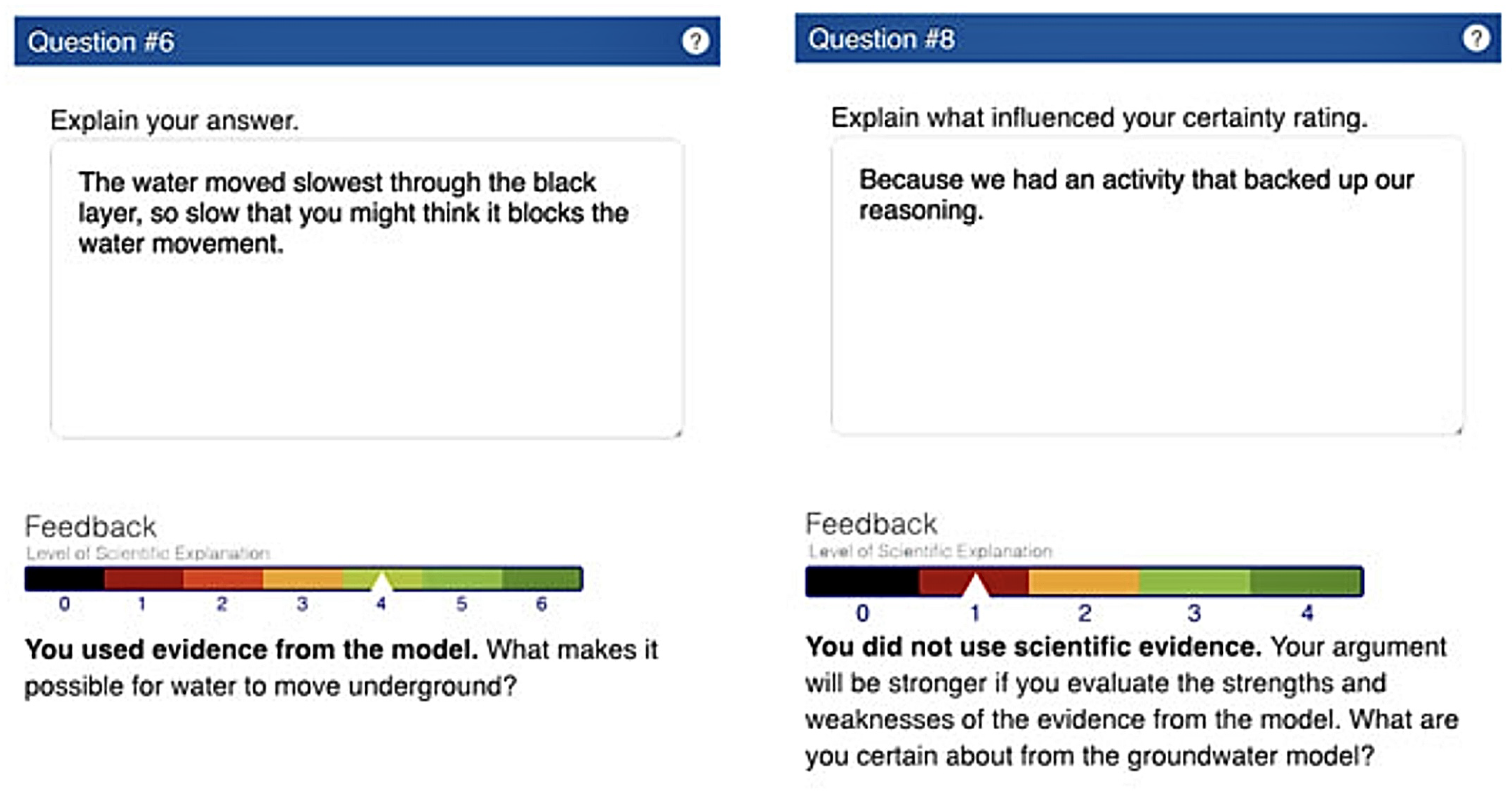

Beyond scoring accuracy, it is also meaningful to measure how an AGS affects student learning outcomes, perceptions and experiences. For example, García-Gorrostieta, López-López and González-López [34] developed an AGS that used machine learning techniques to score the argumentation level of computer engineering students’ final course project reports. To assess students’ views of the AGS, the team administered a satisfaction survey based on the Technology Acceptance Model. Users rated, on a five-point Likert scale, the system’s usefulness (e.g., did it improve their argumentation skills?), ease of use (e.g., was the system easy to learn?), adaptability (e.g., did the level assigned by the AGS, whether low, medium or high, help them improve their arguments?), and intention to use (e.g., would they be willing to use it in other courses to enhance their argumentation skills?). To measure how an AGS could affect learning gains, Lee et al. [35] developed one that assesses and gives feedback on middle and high school students’ scientific argumentation skills (Figure 2), and analyzed pre-test and post-test scores after students used it.

Figure 2.

HASbot, an AGS that uses c-rater-ML to automatically score and give feedback on students’ scientific arguments. Reproduced from [35] under CC BY-NC 4.0.

Building upon this theoretical foundation, this paper presents a comprehensive analysis of AI-powered AGS implementations in STEM education. STEM was selected as the focus due to the diversity of available assessment types—ranging from coding exercises and essays to short answers and diagrammatic representations. In addition to textual assessments, STEM assessments often require evaluating the correctness of computational logic, the structure of equations, and the interpretation of visual information. These multimodal data formats demand specialized AI models and feature engineering strategies, making STEM grading both more complex and richer in opportunities for innovation. By examining these unique aspects, this paper provides a focused exploration of how AI AGSs can be effectively designed and evaluated in STEM education.

2. Methods

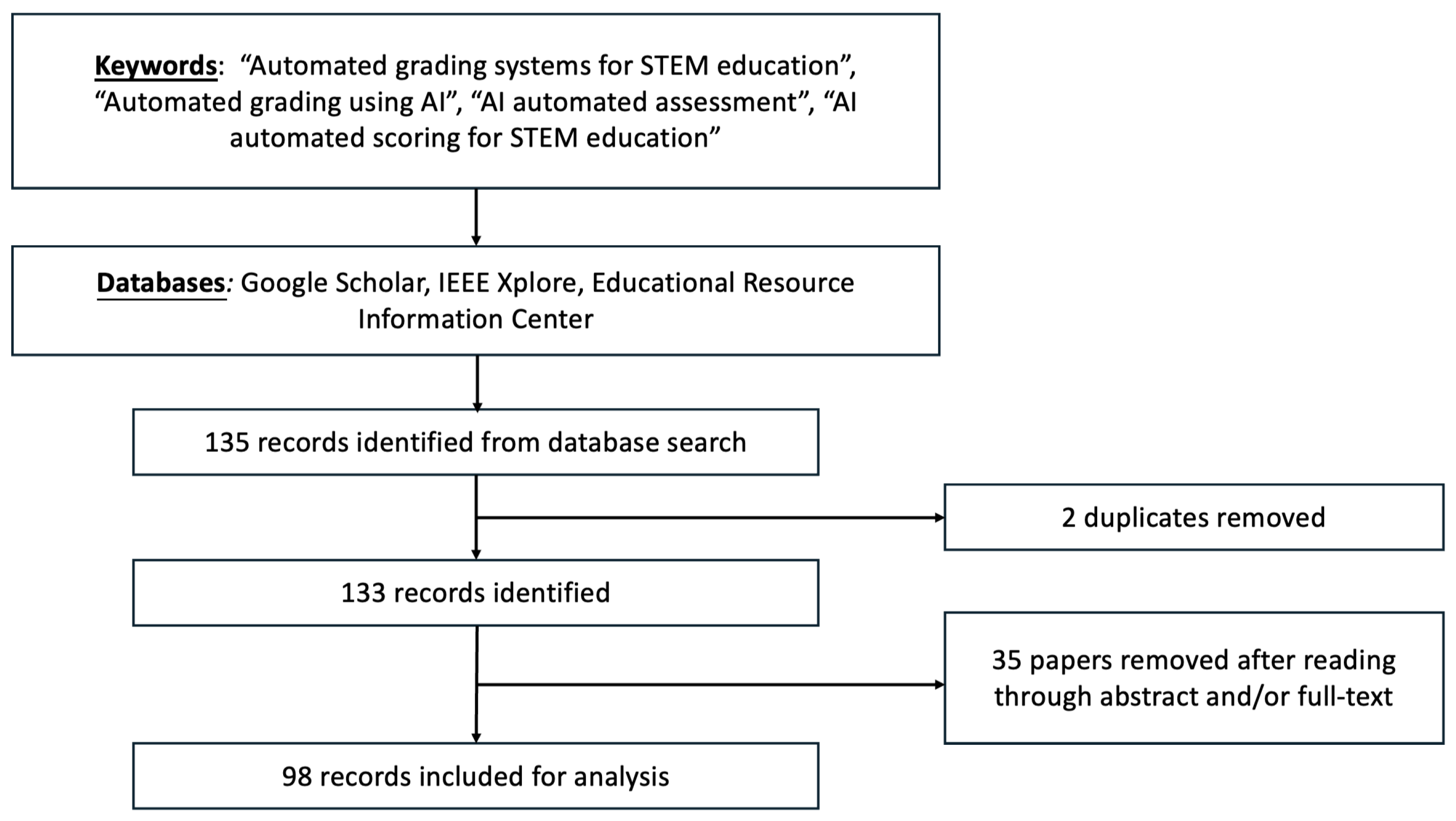

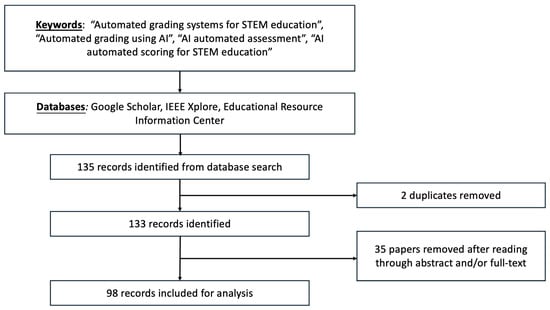

A search for papers on AGSs was conducted as shown in the PRISMA flow diagram in Figure 3. The search was largely limited to papers published between 2015 and 2024 to capture recent developments in this rapidly evolving field. That said, some influential earlier works were also included to ensure a comprehensive review of foundational methodologies.

Figure 3.

PRISMA flow diagram for selecting papers to include in this review.

A total of 135 resources were initially gathered from the following databases: Google Scholar, IEEE Xplore, and the Education Resources Information Center (ERIC). Search keywords included “automated grading systems for STEM education”, “automated grading using AI”, “AI automated assessment” and “AI automated scoring for STEM education”, among others. Only peer-reviewed papers were included to enhance the validity and credibility of this review.

Two duplicate papers were removed during the first screening, and a further 35 papers were excluded during the second screening after careful reading of their abstracts and/or full texts. Only studies that addressed the use of AGSs in some capacity were retained. Both empirical research and review papers were included to maintain a focus on evidence-based sources.

The final inclusion list consists of 98 papers, which are examined to answer the following questions:

1. What are the key AI techniques used in AGSs?
2. How are AGSs being implemented in STEM education and what are their implications?
3. What are some challenges faced during implementation and the corresponding solutions?

Addressing these questions is crucial in understanding how AGSs can be effectively utilized to lessen educators’ workload and improve student outcomes.

Our analytical framework systematically categorizes the identified AI techniques based on their mathematical foundations and algorithmic approaches. For each technique, we examine the underlying optimization principles, feature representation strategies, and performance evaluation metrics to provide a comprehensive understanding of their applicability in educational assessment contexts.

3. Key AI Techniques Used in Automated Grading

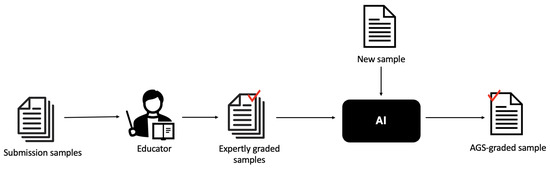

AGSs leverage AI to assess student responses across a range of assessment types; typically, these techniques are incorporated in the feature engineering and machine learning stages. First, educators manually grade a sample of submissions, producing an expertly graded dataset that serves as the ground truth. This dataset is then used to train the AI model. Once trained, the model evaluates new, unseen submissions and generates automated grades, as shown in Figure 4.

Figure 4.

How AI AGSs are typically trained.

Feature engineering involves selecting and transforming raw data into meaningful features, which machine learning algorithms can then use to predict students’ grades [36]. Note that while traditional machine learning relies heavily on such handcrafted features, modern deep learning and generative AI approaches can often bypass explicit feature engineering by learning directly from raw input data. This section outlines the AI techniques used in AGSs deployed in the contemporary educational landscape.

3.1. Feature Engineering

Natural Language Processing (NLP) is an AI field that analyzes human language and converts it into meaningful representations [26]. In automated grading for STEM education, feature engineering faces unique challenges due to the complex mathematical symbols, scientific formulas, diagrams and multimodal expressions inherent in STEM disciplines. The choice of features is critical to the success of an automated grading model, as features directly influence the model’s ability to predict grades accurately across diverse STEM assessment types.

For textual responses in STEM fields, NLP techniques are typically used to preprocess and extract features from students’ responses such as essays, short answers [37] and programming assignments. However, STEM texts require specialized handling of mathematical expressions and scientific terminology. For example, Angelone et al. [38] used the Levenshtein string distance between submitted and reference R code snippets as a key feature for programming assessment. In physics education, Çınar et al. [39] used Term Frequency-Inverse Document Frequency (TF-IDF) weighting and n-gram tokenization to transform textual responses containing scientific concepts into numerical features, achieving over 80% accuracy across four physics questions in Turkish.
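Both feature types mentioned above can be sketched with the standard library alone. The snippet below computes the Levenshtein (edit) distance between a submitted and a reference line of R code, and TF-IDF vectors over word unigrams and bigrams with cosine similarity between student answers and a reference answer. The example strings and the n-gram range are assumptions, and the IDF uses the smoothed form that scikit-learn's `TfidfVectorizer` applies by default; this is not the exact configuration of Angelone et al. [38] or Çınar et al. [39].

```python
import math
from collections import Counter


def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # delete ca
                            curr[j - 1] + 1,            # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = curr
    return prev[-1]


def ngrams(text: str, n_max: int = 2) -> list[str]:
    """Lowercased word n-grams of length 1..n_max."""
    tokens = text.lower().split()
    return [" ".join(tokens[i:i + n])
            for n in range(1, n_max + 1)
            for i in range(len(tokens) - n + 1)]


def tfidf_vectors(docs: list[str]) -> list[dict[str, float]]:
    """Raw-count TF times smoothed IDF: idf(t) = ln((1 + N) / (1 + df(t))) + 1."""
    counts = [Counter(ngrams(d)) for d in docs]
    df = Counter(term for c in counts for term in c)  # documents containing each term
    n_docs = len(docs)
    return [{t: tf * (math.log((1 + n_docs) / (1 + df[t])) + 1) for t, tf in c.items()}
            for c in counts]


def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm = math.sqrt(sum(w * w for w in u.values())) * math.sqrt(sum(w * w for w in v.values()))
    return dot / norm if norm else 0.0


print(levenshtein("mean(x, na.rm = TRUE)", "mean(x, na.rm = T)"))  # 3

reference = "energy is conserved in an isolated system"
answers = ["the total energy of an isolated system is conserved",
           "momentum changes when a force acts"]
vecs = tfidf_vectors([reference] + answers)
for answer, vec in zip(answers, vecs[1:]):
    print(f"{cosine(vecs[0], vec):.2f}  {answer}")  # paraphrase scores higher; the second is 0.00
```

The resulting distances and similarities would then be fed, alongside other features, into a classifier or regressor.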

STEM education makes extensive use of handwritten mathematical formulas, scientific diagrams and experimental documentation. Optical handwriting recognition techniques [40] are used to extract features from images of handwritten assignments, which are common in STEM education. Rowtula et al. [41] developed feature extraction methods for handwritten scientific responses, employing word spotting to match keyword images from reference answers to word images in each submission. Part-of-speech tagging and Named Entity Recognition were also used to classify words grammatically and into scientific entity types (chemical compounds, physical quantities, etc.), illustrating how much more complex it is to process handwritten scientific content than general text.

Advanced STEM assessments often require evaluating practical skills and complex problem-solving processes captured on video. Computer vision techniques extract features relating to student performance directly from image or video data. Levin et al. [42] introduced AGSs that evaluate surgical skills using computer vision techniques, which extract features relating to hand, tool and eye motion, such as distance covered, speed and number of movements. These features are then used as inputs to machine learning or deep learning algorithms to predict surgical proficiency. Similar approaches can be extended to laboratory assessments in chemistry and physics, where procedural accuracy, equipment handling and safety compliance can be quantified through automated analysis of experimental videos.
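As an illustration of the motion features described above, the sketch below derives path length, mean speed and a movement count from a tracked 2-D trajectory, for example a tool tip's position in each video frame. The trajectory format, frame rate and speed threshold used to segment movements are hypothetical; this is not the exact feature definition used in the systems discussed by Levin et al. [42].

```python
import math


def motion_features(points: list[tuple[float, float]], fps: float = 30.0,
                    move_threshold: float = 5.0) -> dict[str, float]:
    """Summarize a trajectory sampled once per video frame.

    points: (x, y) positions, one per frame (assumed format and units).
    move_threshold: speed above which the hand/tool counts as moving (assumed).
    """
    dt = 1.0 / fps
    steps = [math.dist(p, q) for p, q in zip(points, points[1:])]  # per-frame distance
    speeds = [s / dt for s in steps]
    path_length = sum(steps)
    duration = dt * len(steps)
    # A "movement" is a maximal run of consecutive frames above the speed threshold.
    movements, moving = 0, False
    for v in speeds:
        if v > move_threshold and not moving:
            movements += 1
        moving = v > move_threshold
    return {
        "path_length": path_length,
        "mean_speed": path_length / duration if duration else 0.0,
        "num_movements": movements,
    }


# Two bursts of motion separated by a pause
track = [(0, 0), (0, 0), (3, 4), (6, 8), (6, 8), (6, 8), (6, 11), (6, 14)]
print(motion_features(track, fps=1.0, move_threshold=1.0))
```

For this toy track the path length is 16 units covered in two separate movements; fewer, more economical movements are the kind of pattern such features are meant to capture.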

Unlike general educational content, STEM feature engineering must address specialized requirements including syntactic, semantic and structural similarity metrics between submitted and reference materials (as demonstrated in class diagram assessment by Bian et al. [43]), mathematical expression recognition and validation, scientific notation standardization, and multi-step reasoning process evaluation. The complexity increases when dealing with interdisciplinary STEM problems that combine mathematical calculations with scientific explanations, requiring sophisticated feature extraction methods that can capture both computational accuracy and conceptual understanding.
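One simple way to quantify structural similarity between a submitted and a reference class diagram is to represent each diagram as sets of classes, attributes and relationships and then compare the sets. The sketch below uses the Jaccard index for each element type and combines them with a weighted average. The representation, the weights and the example diagrams are assumptions for illustration and do not reproduce the syntactic, semantic and structural measures of Bian et al. [43].

```python
from dataclasses import dataclass, field


@dataclass
class ClassDiagram:
    classes: set[str] = field(default_factory=set)
    attributes: set[tuple[str, str]] = field(default_factory=set)      # (class, attribute)
    relations: set[tuple[str, str, str]] = field(default_factory=set)  # (source, kind, target)


def jaccard(a: set, b: set) -> float:
    """|A ∩ B| / |A ∪ B|, defined as 1.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def structural_similarity(student: ClassDiagram, reference: ClassDiagram,
                          weights: tuple[float, float, float] = (0.4, 0.3, 0.3)) -> float:
    """Weighted average of Jaccard indices over classes, attributes and relations."""
    scores = (
        jaccard(student.classes, reference.classes),
        jaccard(student.attributes, reference.attributes),
        jaccard(student.relations, reference.relations),
    )
    return sum(w * s for w, s in zip(weights, scores))


reference = ClassDiagram(
    classes={"Library", "Book", "Member"},
    attributes={("Book", "isbn"), ("Member", "name")},
    relations={("Library", "aggregation", "Book"), ("Member", "association", "Book")},
)
student = ClassDiagram(
    classes={"Library", "Book", "Member"},
    attributes={("Book", "isbn")},
    relations={("Library", "aggregation", "Book")},
)
score = structural_similarity(student, reference)  # 0.4*1.0 + 0.3*0.5 + 0.3*0.5
print(round(score, 3))  # 0.7
```

Exact set matching is deliberately strict here; handling renamed classes or synonymous attribute names is where the semantic similarity measures mentioned above become necessary.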

Importantly, features are not generalizable [44]: feature engineering remains context-dependent, as no universal feature set guarantees optimal grading accuracy across all assessment types and disciplines. Successful AGS implementations typically combine multiple feature types and adapt them to specific educational contexts and learning objectives.

3.2. Machine Learning

The landscape of machine learning approaches in AGSs has evolved significantly and now encompasses three main paradigms: traditional machine learning (ML) algorithms that operate on handcrafted features, deep learning (DL) models that may use handcrafted features or learn representations directly from raw data, and generative AI (GenAI) systems that process and evaluate student work through natural language understanding. Table 1 outlines works that employ the first two approaches.

Table 1.

AI algorithms used in AGSs.

From a mathematical modeling perspective, these AI approaches can be viewed as supervised learning problems where the system learns a function that maps features extracted from student submissions X (such as text, code, or diagrams) to corresponding grades or scores Y. The optimization objective typically involves minimizing prediction error while ensuring the model generalizes well to diverse student response patterns.
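In symbols, this supervised formulation is commonly written as regularized empirical risk minimization over the human-graded training pairs $(x_1, y_1), \dots, (x_n, y_n)$. The form below is a generic textbook objective rather than that of any specific AGS: $\mathcal{F}$ is the chosen model family (e.g., logistic regression or a neural network), $L$ is a loss such as cross-entropy for grade categories or squared error for numeric scores, and $\lambda\,\Omega(f)$ penalizes model complexity to help the model generalize to unseen responses.

```latex
\hat{f} = \arg\min_{f \in \mathcal{F}} \; \frac{1}{n} \sum_{i=1}^{n} L\big(f(x_i),\, y_i\big) \;+\; \lambda\, \Omega(f)
```

For binary correct/incorrect grading with a model that outputs $\hat{p}_i = f(x_i) \in (0, 1)$, the cross-entropy loss is $L(\hat{p}_i, y_i) = -\,[\,y_i \log \hat{p}_i + (1 - y_i) \log(1 - \hat{p}_i)\,]$.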

The algorithms presented in Table 1 demonstrate diverse mathematical approaches to automated grading, ranging from linear models like logistic regression to complex deep learning architectures like transformers. The choice of algorithm depends on factors such as data availability, interpretability requirements, and the specific characteristics of the assessment task. Traditional machine learning approaches often require explicit feature engineering, while deep learning models can learn representations directly from raw data, offering different trade-offs between performance and computational complexity.
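As a concrete instance of the simplest model family in Table 1, the sketch below trains a logistic regression classifier by batch gradient descent on the mean cross-entropy loss, following the train-on-human-grades, predict-on-unseen-submissions workflow of Figure 4. The two input features (a similarity score to the reference answer and a keyword-coverage ratio), the toy data and the hyperparameters are hypothetical; in practice a library such as scikit-learn would normally be used instead of a hand-written loop.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X: list[list[float]], y: list[int],
                   lr: float = 1.0, epochs: int = 5000) -> tuple[list[float], float]:
    """Fit weights w and bias b by batch gradient descent on mean cross-entropy."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # derivative of the cross-entropy w.r.t. the logit is (prediction - label)
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j] / n
            grad_b += err / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w: list[float], b: float, x: list[float]) -> int:
    """Label a submission 1 (correct) if the predicted P(correct | x) >= 0.5."""
    return int(sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= 0.5)


# Hypothetical features: [similarity to reference, keyword coverage]; labels from a human grader
X_train = [[0.9, 0.8], [0.8, 1.0], [0.7, 0.6], [0.2, 0.1], [0.3, 0.4], [0.1, 0.0]]
y_train = [1, 1, 1, 0, 0, 0]
w, b = train_logistic(X_train, y_train)
print(predict(w, b, [0.85, 0.9]), predict(w, b, [0.2, 0.2]))  # 1 0
```

The learned weights are directly inspectable, which is one reason linear models remain attractive when interpretability of grading decisions matters.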

3.3. GenAI Approaches

Large language models (LLMs) represent a paradigm shift in automated grading, moving from feature-based classification to direct natural language understanding and generation. Unlike traditional ML and DL approaches that require explicit feature engineering or learn representations from structured data, GenAI systems can process and evaluate student work through contextual language understanding [55].

From a mathematical perspective, GenAI approaches can be formulated as conditional text generation problems where the system learns a probability distribution over possible grades given the assessment context, student submission, and carefully crafted prompts. The key advantage lies in their ability to leverage pre-trained knowledge and adapt to new assessment tasks through prompt engineering rather than requiring extensive task-specific training data. LLMs form the backbone of GenAI, a type of AI algorithm capable of creating new content by learning patterns from existing data. An example is OpenAI’s ChatGPT 3.5, which was developed using the Generative Pre-trained Transformer (GPT) architecture, and is able to execute tasks like content generation, machine translation and more whilst maintaining a human-like conversation [56]. It has often been cited as an appealing and versatile tool for an automated grader, as it is akin to a human tutor by producing quick, accurate and personalized feedback for students [57].
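Written out, this conditional-generation view treats the grade and feedback as a token sequence $y = (y_1, \dots, y_T)$ generated from the prompt $p$ (instructions, rubric and any examples), the assessment context $c$ and the student submission $s$, with pre-trained parameters $\theta$ (held fixed when only prompting is used). This is the standard autoregressive formulation of language models, stated generically rather than for any particular system; in practice the arg max is only approximated, for example by greedy or sampled decoding.

```latex
P_\theta(y \mid p, c, s) = \prod_{t=1}^{T} P_\theta\big(y_t \mid y_{<t},\, p,\, c,\, s\big),
\qquad
\hat{y} \approx \arg\max_{y} \, P_\theta(y \mid p, c, s)
```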

Because the model is already pre-trained, it does not need to be trained from scratch for each task (it is task-agnostic), avoiding the substantial data and computational resources that other machine learning techniques require. Instead, its outputs can be shaped simply by giving it a prompt, a carefully designed set of natural-language instructions developed through an iterative process of writing and refining known as “prompt engineering” [58]. This makes automated grading more accessible: instead of requiring specialized machine learning expertise, educators can often achieve high-quality automated grading by crafting intuitive prompts, integrating the technology smoothly into their teaching workflows. Table 2 summarizes representative GenAI implementations in STEM automated grading.

Table 2.

GenAI Approaches used in AGSs.

The approaches presented in Table 2 demonstrate the versatility of GenAI in automated grading, ranging from zero-shot applications to fine-tuned domain-specific models. The choice of approach depends on factors such as available computational resources, assessment complexity and the need for domain specialization. Prompt engineering emerges as a critical factor, with structured approaches like Chain-of-Thought reasoning showing significant improvements over basic prompting strategies. Unlike traditional ML approaches that require extensive feature engineering, GenAI systems offer more accessible implementation paths for educators while maintaining competitive performance.
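The difference between zero-shot, few-shot and Chain-of-Thought prompting lies mainly in how the prompt is assembled. The sketch below builds such prompts from a rubric and parses a score from the model's reply. The template wording, the `Score: <n>` output convention and all function names are hypothetical, and no particular LLM API is assumed: the returned string would be sent to whichever model is in use.

```python
import re
from typing import Optional


def build_grading_prompt(question: str, rubric: str, answer: str,
                         examples: Optional[list[tuple[str, int]]] = None,
                         chain_of_thought: bool = False,
                         max_score: int = 5) -> str:
    """Assemble a zero-shot prompt, or a few-shot prompt if graded examples are given."""
    parts = [
        "You are grading a student's answer to a STEM question.",
        f"Question: {question}",
        f"Rubric (0-{max_score} points): {rubric}",
    ]
    # Few-shot: include previously graded answers as worked examples.
    for i, (ex_answer, ex_score) in enumerate(examples or [], start=1):
        parts.append(f"Example {i} answer: {ex_answer}\nExample {i} score: {ex_score}")
    parts.append(f"Student answer: {answer}")
    if chain_of_thought:
        # Chain-of-Thought: ask for explicit reasoning before the final score.
        parts.append("Before scoring, explain step by step which rubric criteria the answer meets.")
    parts.append(f"Finish with a line of the form 'Score: <integer from 0 to {max_score}>'.")
    return "\n\n".join(parts)


def parse_score(reply: str, max_score: int = 5) -> Optional[int]:
    """Return the last 'Score: n' value in the reply, or None if missing or out of range."""
    matches = re.findall(r"Score:\s*(\d+)", reply)
    if not matches:
        return None
    score = int(matches[-1])
    return score if 0 <= score <= max_score else None


prompt = build_grading_prompt(
    question="Why does ice float on liquid water?",
    rubric="Award points for comparing densities and linking density to hydrogen bonding.",
    answer="Ice is less dense than water because hydrogen bonds hold molecules further apart.",
    chain_of_thought=True,
)
print(prompt)
print(parse_score("The answer compares densities and mentions hydrogen bonding.\nScore: 5"))  # 5
```

Returning `None` for a missing or out-of-range score gives a natural hook for routing that submission to a human grader instead of accepting a malformed model output.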

Evidently, AI techniques such as machine learning, deep learning, and GenAI provide the foundation for AGSs, enabling them to assess a wide range of student outputs with increasing accuracy and sophistication. These advancements are pivotal in enhancing the efficiency, fairness, and depth of evaluations in educational settings.

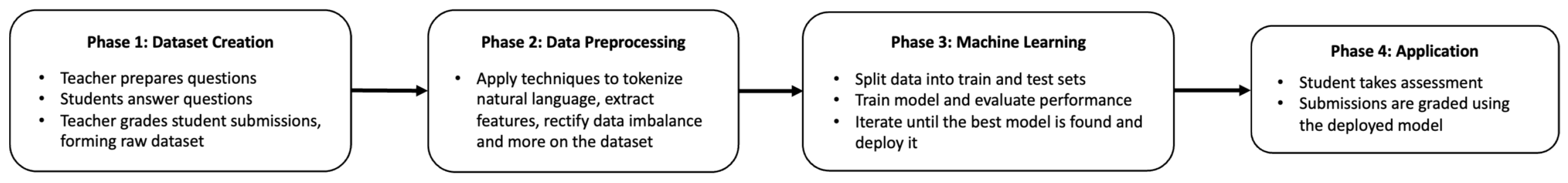

4. AGS Implementation

Current STEM implementations of AI grading systems span a wide range of educational levels and disciplines, from elementary schools [63] to universities [64] and from programming [65] to biology [66], showcasing their versatility and growing adoption in diverse learning environments. Figure 5 depicts the general workflow by which AI AGSs are trained and deployed in educational settings. This section analyzes the challenges encountered at each implementation phase and examines how current research addresses them.

Figure 5.

Diagram showing how AI AGSs are typically developed and implemented for real-world usage. Simplified from [39].

4.1. Phase 1: Dataset Creation—Expert Annotation and Ground Truth Establishment

The initial phase of AGS implementation faces critical challenges in establishing reliable ground truth data through expert annotation. A primary challenge is ensuring inter-rater reliability among human experts, as inconsistent scoring can undermine model training effectiveness.

Weegar and Idestam-Almquist [67] addressed this challenge with a systematic human-in-the-loop approach for computer science short-answer submissions. They used a random forest classifier to predict scores from features such as cosine similarity scores (computed by comparing student answers with reference solutions using multilingual Sentence-BERT embeddings) and answer lemmas. Human graders then reviewed all classifier outputs and corrected any mispredicted scores to ensure high accuracy. This quality control mechanism established reliable ground truth data while reducing grading time for the entire exam by 74%, from 36.0 h to 9.2 h, demonstrating that automated grading can substantially lighten the marking burden even within a human-in-the-loop workflow for high-stakes assessments (e.g., final examinations).

Another significant challenge is the scarcity of large, expertly scored datasets, particularly in specialized STEM domains. Zhu et al. [68] tackled this issue in their scientific argumentation assessment system by leveraging the existing linguistic knowledge base of the c-rater-ML tool. Their approach used support vector regression on linguistic, semantic and syntactic features to assess American high school students’ scientific argumentation skills. Students studying a climate change module answered constructed-response questions by giving a claim, an explanation and a statement of their uncertainty. Building on c-rater-ML’s existing resources reduced the need for extensive domain-specific annotation while maintaining assessment quality.

4.2. Phase 2: Data Preprocessing—Multimodal Feature Engineering

The preprocessing phase presents unique challenges in STEM contexts due to the multimodal nature of educational content, including text, mathematical expressions, code, and scientific diagrams. Traditional NLP preprocessing techniques often prove insufficient for specialized STEM notation and terminology.

Vittorini, Menini and Tonelli [69] addressed these challenges in their R programming assessment system with a hybrid preprocessing approach. They deployed an AGS that grades R assignments in a Health Informatics course for Master’s students. Such assignments typically require students to code some calculations and then comment (in natural language) on the outcome. The AGS uses static code analysis to check whether students’ commands and outputs match the answer key, and a support vector machine classifier to predict the correctness of the comments. The classifier’s features include the sentence embeddings of the student submission and of the correct solution, as well as distance-based features such as the cosine similarity between sentence embeddings and token overlap. This dual-pathway preprocessing effectively handled the mixed code-and-text nature typical of programming assignments.
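The distance-based features described above can be assembled into a single input vector for the comment classifier. In the sketch below the sentence embeddings are small placeholder vectors (in a real system they would come from a sentence-embedding model), and the exact feature list is an assumption modeled on the description above rather than a reproduction of Vittorini, Menini and Tonelli’s implementation [69].

```python
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two dense embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def token_overlap(a: str, b: str) -> float:
    """Jaccard overlap between the lowercase word sets of two comments."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def comment_features(student_comment: str, reference_comment: str,
                     student_emb: list[float], reference_emb: list[float]) -> list[float]:
    """Concatenate both embeddings with distance-based features for a classifier."""
    return (student_emb + reference_emb
            + [cosine_similarity(student_emb, reference_emb),
               token_overlap(student_comment, reference_comment)])


# Placeholder 3-dimensional "embeddings", for illustration only
features = comment_features(
    "the mean is higher in group A",
    "group A has a higher mean",
    [0.2, 0.7, 0.1],
    [0.25, 0.65, 0.05],
)
print(len(features))  # 3 + 3 + 2 = 8
```

Each submission’s vector would then be paired with a human correctness label to train the classifier (a support vector machine in the cited system).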

Tisha et al. [70] tackled preprocessing challenges for code quality assessment in a system that classifies high school students’ Haskell code, which produces visual output, as elegant or non-elegant in a computational thinking course. They addressed the challenge of extracting meaningful features from code by using clustered features (e.g., Halstead complexity measures and the number of local variables), demonstrating how domain-adapted preprocessing can improve assessment accuracy in programming contexts.
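Halstead complexity measures, one of the feature groups mentioned above, are computed from counts of operators and operands in the code. The sketch below applies the standard Halstead formulas to token lists that are assumed to be already classified as operators or operands. How operators and operands are identified in Haskell source, and which Halstead measures Tisha et al. [70] actually used, are not specified here, so the tokenization in the example is hypothetical.

```python
import math


def halstead(operators: list[str], operands: list[str]) -> dict[str, float]:
    """Standard Halstead measures (assumes at least one operator and one operand)."""
    n1, n2 = len(set(operators)), len(set(operands))  # distinct operators / operands
    N1, N2 = len(operators), len(operands)            # total occurrences
    vocabulary = n1 + n2
    length = N1 + N2
    volume = length * math.log2(vocabulary)
    difficulty = (n1 / 2) * (N2 / n2)
    return {
        "vocabulary": vocabulary,
        "length": length,
        "volume": volume,
        "difficulty": difficulty,
        "effort": difficulty * volume,
    }


# Hypothetical tokenization of the Haskell definition: area r = pi * r * r
ops = ["=", "*", "*"]
opnds = ["area", "r", "pi", "r", "r"]
print(halstead(ops, opnds))  # vocabulary 5, length 8, difficulty 5/3
```

Vectors of such measures, computed per submission, are the kind of input a decision tree or clustering step could then use to separate elegant from non-elegant solutions.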

4.3. Phase 3: Machine Learning—Model Training and Optimization

The machine learning phase encounters challenges related to model selection, hyperparameter optimization, and generalization across diverse student response patterns. STEM assessments particularly struggle with partial credit assignment and handling of multiple valid solution approaches.

The training process presents challenges in achieving robust performance across diverse student populations and response styles. Zhu et al. [68] demonstrated successful model optimization in their scientific argumentation assessment, where real-time scores and feedback were provided to students. Their results showed that 77% of students made revisions and re-submitted after receiving feedback, with each revision resulting in an average score increase of 0.55 points. Ultimately, the mean argumentation score increased from 5.2 to 5.84 for those who made revisions, suggesting that students could produce better scientific arguments as they continued to improve on their work over time.

García-Gorrostieta et al. [34] addressed model effectiveness challenges by developing an AGS that classified computer engineering students’ written argumentation levels as “Low”, “Medium” or “High”. In their experimental validation, the experimental group (with unrestricted access to the AGS) outperformed the control group (with access to the instructor only during class hours): 91.48% of the experimental group’s paragraphs were argumentative, versus 59.66% for the control group, with statistically significant improvements in the justification and conclusion sections.

Tisha et al. [70] tackled model optimization by implementing a decision tree model that achieved precision and recall rates of 71–94% for code quality assessment, demonstrating how appropriate algorithm selection can address subjective assessment challenges in computational thinking evaluation.
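Per-class precision and recall figures like those reported above can be computed directly from predicted and expert labels. The sketch below does this for the two classes, elegant and non-elegant, and also reports the F1-score. The label strings and sample data are hypothetical and are not taken from the study.

```python
from typing import Dict, Sequence


def per_class_precision_recall(y_true: Sequence[str],
                               y_pred: Sequence[str]) -> Dict[str, Dict[str, float]]:
    """Precision, recall and F1 for every label, treating each label in turn as positive."""
    report = {}
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[label] = {"precision": precision, "recall": recall, "f1": f1}
    return report


expert = ["elegant", "elegant", "non-elegant", "non-elegant", "non-elegant"]
model = ["elegant", "non-elegant", "non-elegant", "non-elegant", "elegant"]
print(per_class_precision_recall(expert, model))
```

Reporting a value for each class, rather than a single accuracy figure, is what produces a range such as "71–94%".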

4.4. Phase 4: Application and Deployment—System Integration and User Acceptance

The deployment phase faces challenges related to system integration with existing educational infrastructure, real-time performance requirements, and user acceptance by both educators and students.

System integration and performance optimization present significant implementation challenges. Vittorini, Menini and Tonelli [69] addressed integration challenges by developing a system that handles both formative and summative assessment modes. Surveyed students reported high satisfaction with the AGS as a formative assessment tool. They described using the tool as intended, submitting multiple times and working on their weaknesses until they achieved the highest grade, and also using it to prepare for final exams. As a summative assessment tool, it reduced the mean time required to grade an assignment by 43%, from 148 to 85 s. Students who regularly used the tool for formative assessment also achieved higher grades (26.3) than those who did not use the system at all (21.7). This implementation demonstrates the multiple benefits of AGSs: not only does it reduce instructor workload, it also serves as a valuable learning tool that positively impacts student performance when actively used.

User acceptance challenges require careful attention to stakeholder needs and perceptions. As noted by Nunes et al. [71], when students perceive a learning tool to be useful, the “adoption, use and effectiveness” of that tool are positively impacted. Wan and Chen [72] tackled student skepticism by using GPT-3.5 with prompt engineering and few-shot learning to generate feedback on physics questions. Few-shot learning involves embedding several examples of student responses, each paired with expert feedback, in the prompt to guide GPT’s output. A user survey showed that GPT feedback was rated as equally correct as, yet more useful than, human feedback. This can be attributed to the GPT feedback typically being more comprehensive and addressing student responses in greater detail. It encouraged deeper reasoning for correct explanations and provided hints to guide students when explanations were missing, making the feedback more actionable.
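The few-shot prompting described above can be sketched as plain string assembly. Each example pairs a student response with expert feedback, and the new response is appended with an open "Expert feedback:" slot for the model to complete. The instruction wording, field labels and function name are hypothetical and are not Wan and Chen's actual prompt. The call to the language model itself is omitted.

```python
from typing import List, Tuple


def build_few_shot_prompt(question: str,
                          examples: List[Tuple[str, str]],
                          new_response: str) -> str:
    """Assemble a few-shot prompt from (student response, expert feedback) pairs."""
    parts = [
        "You are a physics instructor giving feedback on student explanations.",  # hypothetical wording
        f"Question: {question}",
        "",
    ]
    for i, (response, feedback) in enumerate(examples, start=1):
        parts.append(f"Example {i}")
        parts.append(f"Student response: {response}")
        parts.append(f"Expert feedback: {feedback}")
        parts.append("")
    # The final slot is left open for the model to fill in.
    parts.append(f"Student response: {new_response}")
    parts.append("Expert feedback:")
    return "\n".join(parts)
```

Keeping the examples in a fixed, repeated format is the design choice that lets the model infer the expected style and depth of feedback.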

Educator acceptance represents another critical deployment challenge. Tisha et al. [70] demonstrated successful educator adoption through transparent system operation and clear educational benefits. A survey of 32 teachers revealed high acceptance of the system: 24 (75%) found it helpful for unbiased grading, 27 (about 84%) expressed interest in using it in the future, and 27 agreed that such an AGS is useful.

Student engagement and motivation present ongoing deployment challenges that require careful system design. García-Gorrostieta et al. [34] addressed the challenge of student engagement through effective feedback mechanisms, where students were motivated to revise their texts approximately three times, aiming for a “high” argumentation level. Students rated the system highly useful (average score 4.24), indicating it encouraged better argumentation and improved their writing, demonstrating successful deployment that encouraged authentic learning behaviors rather than system gaming.

Through these implementation phases, current STEM AGS deployments have demonstrated systematic approaches to overcoming technical, pedagogical, and social challenges. The evidence shows that successful implementations require careful attention to each phase’s unique challenges, from establishing reliable ground truth data to ensuring sustainable user adoption in educational contexts. These STEM implementations have demonstrated the value of AGSs along multiple dimensions: alleviating instructor workload, enabling rapid iterative feedback cycles that improve student performance, maintaining grading consistency across large cohorts, and garnering positive reception from both educators and students.

5. Challenges and Solutions

While AI automated graders offer significant benefits, including the ability to capture nuances in submissions, scalability and efficiency, they are not without challenges. The main challenges facing AI-powered AGSs in STEM education include:

- Student Trust and Perceived Fairness: Concerns about the accuracy and fairness of automated assessments

- Teacher Acceptance: Resistance due to algorithm aversion and fear of replacement

- Vulnerability to System Gaming: Students exploiting system weaknesses for higher grades

- Over-reliance on Immediate Feedback: Encouraging superficial learning approaches

- Limited Expert-Scored Data: Insufficient training datasets for algorithm development

- Bias in Algorithmic Assessment: Unfair treatment of minority groups and disabled students

- Institutional Readiness: Infrastructure and support requirements

This section examines these challenges in detail and explores potential solutions to overcome them.

5.1. Student Trust and Perceived Fairness

Students may not fully trust an AI AGS to grade their submissions correctly, even if it is highly accurate, especially if they perceive its outputs as occasionally unfair or unreliable. Hsu et al. [73] surveyed university students’ perceptions of an automated short-answer grader for computer science with approximately 90% accuracy. Of the interviewees, 70% were unhappy with the existence of false negatives (correct answers graded as wrong), and 59% thought it was unfair that false positives (wrong answers graded as correct) let less proficient students receive higher marks than they deserved. Such reactions may lead to algorithm aversion, a behavioral phenomenon in which humans “quickly lose trust in algorithmic predictions after seeing mistakes, even when the systems outperform humans”. Even the most accurate AGS is not infallible. To address students’ concerns, educators must prioritize transparency by explaining how the system was trained and how it makes decisions. A procedure for students to appeal wrongly graded submissions should also be set up. Should AGSs be used for high-stakes assessments (e.g., national examinations), protocols should be established to keep humans in the loop (e.g., allowing them to override automatically generated scores) so that no student’s submission is misjudged or overlooked.
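The human-in-the-loop safeguards suggested above (appeals, human review for high-stakes items, human overrides) can be expressed as a small routing rule. This is a minimal sketch under stated assumptions:

- The grader exposes a confidence value for each score.
- The 0.8 review threshold is a hypothetical choice.
- A human score, when present, always takes precedence.

None of the names below come from a specific system.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GradeRecord:
    auto_score: float
    confidence: float                 # assumed model confidence in auto_score, in [0, 1]
    appealed: bool = False            # student has filed an appeal
    human_score: Optional[float] = None


def needs_human_review(record: GradeRecord, high_stakes: bool, threshold: float = 0.8) -> bool:
    """Route appeals, low-confidence scores and every high-stakes item to a human grader."""
    return high_stakes or record.appealed or record.confidence < threshold


def final_score(record: GradeRecord) -> float:
    """A human score, when present, overrides the automatically generated one."""
    return record.human_score if record.human_score is not None else record.auto_score
```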

5.2. Teacher Acceptance

Such trust issues exist among teachers too. A study of Israeli K-12 science teachers interacting with an AI AGS for open-ended biology responses found that they lacked knowledge of AI decision-making and had strong algorithm aversion. Teachers often believed their grading abilities were superior, distrusted the AGS when its recommendations differed from their own, and viewed its errors as less acceptable than those made by humans. That said, after the teachers participated in a professional development program aimed at improving AI literacy, these biases were mitigated. The teachers were able to correctly interpret the AGS’s results, justify its potential mistakes and recognize scenarios where the AGS might outperform human graders. They expressed increased willingness to use it in their classrooms and came to perceive that AI may sometimes be more reliable than humans [74]. Another reason teachers may resist adopting automated grading is their fear of being replaced by AI [75]. To address this apprehension, it is crucial to present AI as a tool that relieves them of routine and time-consuming tasks, reduces stress and workload, and helps them focus on “higher-level pedagogic work” [76]. AGSs are meant to assist teachers, not replace them.

5.3. Vulnerability to System Gaming

Another concern is the potential for students to exploit system vulnerabilities or “trick the system” to secure better grades, often at the expense of genuine learning. For example, it was demonstrated that submitting an inappropriate essay that repeated the same paragraph multiple times to an essay AGS yielded a high score [3]. In another case, some university undergraduates discovered and exploited a loophole in their AGS whereby essays that merely included an introduction, thesis statement, supporting paragraphs, and conclusion received higher scores even if the content deviated from the assigned topic [77]. Students may also pad their essays with unnecessary details if they figure out that essay length is positively correlated with their grades on the AGS they use. Such behavior shifts the focus from learning course content to learning how to manipulate an automated system, stifling creativity and critical thinking. Instead of “improving writing”, students aim to “improve scores” [78]. Similar behavior could arise in STEM-related essay assessments. To combat this issue, AGSs should include robust capabilities to detect unorthodox answers (e.g., repetition, off-topic content). Educators can consider using more sophisticated AGSs that award points for features related to essay quality rather than shallow features like essay length and structure. It is also important to educate students on academic integrity and growth mindsets, emphasizing authentic learning over merely achieving high scores.
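The detection of unorthodox answers suggested above can start from simple heuristics. The sketch below flags an essay for human review in two cases: when a large share of its paragraphs are verbatim repeats, or when it mentions too few of the prompt-specific keywords. The thresholds (0.3 and 0.2), the blank-line paragraph split and the lower-case keyword list are hypothetical choices. Production systems would use stronger signals, such as semantic similarity to the prompt.

```python
import re
from typing import List, Set


def repeated_paragraph_ratio(essay: str) -> float:
    """Fraction of non-empty paragraphs that duplicate an earlier paragraph."""
    paragraphs = [p.strip().lower() for p in essay.split("\n\n") if p.strip()]
    if not paragraphs:
        return 0.0
    return 1.0 - len(set(paragraphs)) / len(paragraphs)


def topic_coverage(essay: str, topic_keywords: Set[str]) -> float:
    """Share of (lower-case) prompt keywords that appear anywhere in the essay."""
    words = set(re.findall(r"[a-z]+", essay.lower()))
    if not topic_keywords:
        return 1.0
    return len(topic_keywords & words) / len(topic_keywords)


def flag_for_review(essay: str, topic_keywords: Set[str],
                    max_repeat: float = 0.3, min_coverage: float = 0.2) -> List[str]:
    """Return reasons for routing the essay to a human instead of scoring it automatically."""
    reasons = []
    if repeated_paragraph_ratio(essay) > max_repeat:
        reasons.append("repetition")
    if topic_coverage(essay, topic_keywords) < min_coverage:
        reasons.append("off-topic")
    return reasons
```

Flagging rather than automatically zeroing a submission keeps a human in the loop. This matters because rigid filters can also penalize legitimate work, as discussed in Section 5.6.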

5.4. Over-Reliance on Immediate Feedback

Furthermore, the immediacy and comprehensiveness of the available feedback can be a double-edged sword, as it may lead students to over-rely on the AGS. In fact, this problem extends to traditional, non-AI AGSs as well. For example, in programming courses, students can often submit their code multiple times and receive instant feedback on whether their submissions pass the test cases designed by the instructor. While this feedback loop is intended to facilitate learning, it can inadvertently encourage a surface-level, trial-and-error approach to problem-solving. Instead of reflecting on their code and independently testing its validity, students may rely solely on the AGS feedback provided with each submission to guide their revisions. This discourages critical thinking, as students skip the essential process of analyzing the code, hypothesizing potential issues, and rigorously testing their solutions themselves before submitting, depending instead on instructor-provided tests without truly understanding the underlying issues [79]. They then miss out on developing the testing skills needed for real-world software development, where they must independently ensure their work is robust and error-free before deploying it to clients [80]. Such behavior also causes the correctness of students’ code (their AGS grade) to fluctuate from one submission to the next, as they submit partially tested solutions and repeatedly wait for feedback before making corrections. This does not reflect real-world practice, where changes pushed to production must be meticulously validated, should always improve results and must never regress. To instil better programming principles, Baniassad et al. introduced a permanent score penalty every time a student’s AGS grade decreased. With the penalty in place, the number of AGS submissions halved, with only a 1% drop in the median final grade. Students also reported checking and testing their solutions more than they would have without the penalty [81]. Instructors can also consider limiting the number of submissions for each assignment so that students slow down and reflect between attempts [82].
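The two deterrents described above, a permanent penalty for each grade regression and a cap on submissions, can be combined in a short scoring rule. This sketch rests on several assumptions, none taken from Baniassad et al.:

- The last counted submission determines the base score.
- Each drop relative to the previous submission costs a fixed `penalty` (a hypothetical value).
- Submissions beyond `max_submissions` are ignored.

```python
from typing import Optional, Sequence


def penalized_score(scores: Sequence[float], penalty: float = 1.0,
                    max_submissions: Optional[int] = None) -> float:
    """Latest AGS score minus a permanent deduction for every regression.

    scores: AGS scores of successive submissions, in order.
    penalty: points deducted each time a score drops below the previous one (hypothetical).
    max_submissions: if set, submissions beyond this cap are not counted.
    """
    if max_submissions is not None:
        scores = scores[:max_submissions]
    if not scores:
        return 0.0
    regressions = sum(1 for prev, cur in zip(scores, scores[1:]) if cur < prev)
    return max(0.0, scores[-1] - penalty * regressions)
```

For example, the submission history `[5, 7, 6, 9]` contains one regression (7 to 6), so a 1-point penalty yields a final score of 8.0 instead of 9.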

5.5. Limited Availability of Expert-Scored Data

Another challenge in developing AGSs is the scarcity of large datasets scored by experts against common evaluation criteria, which are needed to train machine learning algorithms. Without a substantial corpus, the accuracy of an AGS remains limited, as it cannot generalize across diverse answering styles, especially in small assessment settings [12,75,83]. To address this problem, researchers can consider using GenAI models such as GPT-4 for data augmentation (i.e., generating additional training samples) [84]. Morris et al. used this technique: because high-scoring constructed responses to mathematical questions were under-represented in their dataset relative to low-scoring ones, they used the Coedit LLM to rephrase existing high-scoring responses and generate more of them, resolving the data imbalance [85]. That said, establishing the ground truth labels (i.e., expert scores) may still take time, as one or more experts need to grade each sample and agree on a suitable score [86].
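The rebalancing strategy described above can be sketched independently of any particular language model. Every score level is topped up to the size of the largest one with paraphrased copies of randomly chosen existing responses. The `paraphrase` callable is a hypothetical stand-in for an LLM rephrasing call and is not a real library API. Seeding the random generator keeps the augmentation reproducible.

```python
import random
from collections import Counter
from typing import Callable, List, Tuple


def balance_with_paraphrases(samples: List[Tuple[str, int]],
                             paraphrase: Callable[[str], str],
                             seed: int = 0) -> List[Tuple[str, int]]:
    """Top up every score level to the size of the largest one with paraphrased copies."""
    if not samples:
        return []
    rng = random.Random(seed)
    counts = Counter(score for _, score in samples)
    target = max(counts.values())
    augmented = list(samples)  # keep all original (response, score) pairs first
    for score, count in counts.items():
        pool = [text for text, s in samples if s == score]
        for _ in range(target - count):
            # paraphrase() is a placeholder for an LLM rephrasing step (assumed)
            augmented.append((paraphrase(rng.choice(pool)), score))
    return augmented
```

Synthetic samples inherit the expert score of their source response. They should therefore be spot-checked, since a paraphrase can change what a response actually demonstrates.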

5.6. Bias in Algorithmic Assessment

Additionally, algorithms reflect the biases of their human creators [87]. The under-representation of certain minorities in the training datasets of the models powering AGSs can lead to unfair outcomes for them. In a study of a MOOC Pharmacy course on edX, the AGS was found to disadvantage non-native English speakers by assigning them lower scores on open-ended assessments than native speakers. The study revealed a significant correlation between higher English proficiency and better essay scores, as well as lower score agreement between the AGS and human graders for non-native speakers. This casts doubt on the accuracy and validity of AGSs, as they fail to capture the true knowledge of non-native speakers who may be proficient in the domain but unable to express themselves well in English. To prevent the marginalization of such groups, translation mechanisms can be incorporated into current AGSs, or AGSs capable of scoring essays in multiple languages can be used instead, allowing students to submit answers in their native language for a more inclusive learning environment [88]. Developers should diversify training data by obtaining samples from groups with different linguistic, cultural and ethnic backgrounds, ensuring a more representative dataset for the AI model to learn from. Elementary teachers have also reported inadvertent biases against students with disabilities. Some AGSs have algorithms that detect and block essays with high percentages of misspelled words, excessive repetition, or insufficient text, aiming to ensure that only “good faith” attempts are scored. Unfortunately, this penalizes students with learning disabilities by flagging their legitimate efforts as invalid, denying them feedback and hence limiting their participation in writing activities [78]. While such filters have merit in discouraging low-effort submissions, they may end up alienating certain groups of students. To give fairer evaluations, assistive technologies like speech-to-text functions can be integrated into the AGS, or a separate model can be trained on datasets containing samples written by students with disabilities.
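A basic audit for the kind of bias described above is to compare human-machine agreement across student groups. If agreement is markedly lower for one group, such as non-native speakers, the AGS deserves closer scrutiny for that group. The sketch below computes exact-agreement rates for each group. The group labels and scores are hypothetical, and exact agreement is one simple choice of metric; chance-corrected measures such as Cohen's Kappa can be substituted.

```python
from collections import defaultdict
from typing import Dict, Sequence


def agreement_by_group(human: Sequence[int], machine: Sequence[int],
                       group: Sequence[str]) -> Dict[str, float]:
    """Fraction of submissions in each group where the AGS score equals the human score."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for h, m, g in zip(human, machine, group):
        totals[g] += 1
        hits[g] += int(h == m)
    return {g: hits[g] / totals[g] for g in totals}


print(agreement_by_group(
    human=[3, 2, 4, 1],
    machine=[3, 2, 3, 2],
    group=["native", "native", "non-native", "non-native"],
))
```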

5.7. Institutional Readiness

Finally, the successful transition to online assessments and AI-powered grading systems requires institutions to ensure robust infrastructure and support as well, such as hiring dedicated IT teams to handle technical challenges [89]. This readiness is crucial for maintaining system reliability, addressing user concerns, and facilitating smooth integration into the educational process.

6. Future Outlook

The future of AI-powered AGSs will be shaped by the integration of virtual reality (VR) [90,91], advancements in adaptive learning, and breakthroughs in assessing soft skills, paving the way for more immersive, personalized, and holistic educational evaluations. This section explores such trends in detail.

First, advancements in interactive technologies are redefining the mediums through which AGSs assess student performances. As VR becomes more ubiquitous in STEM classrooms, AGSs are expected to revolutionize how practical skills are assessed, enabling the evaluation of complex problem-solving within simulated environments. For example, Bogar et al. [92] employed a custom-developed VR simulator for peg transfer laparoscopic training, and trained a YOLOv8-based AI model on 22,675 annotated images to detect objects and mistakes such as invalid handovers. Results showed the AGS achieved 95% agreement with manual expert evaluations and reduced evaluation time from 22 h to 2 h for 240 videos. Such applications can be extended to disciplines like chemistry, where the AGS may be used to grade students in virtual laboratories (e.g., performing experiments like titration) based on their precision, adherence to safety protocols, and practical skills in real-time.
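Agreement with expert evaluations, like the 95% figure above, is often reported as raw percentage agreement. Cohen's Kappa adds a correction for the agreement expected by chance: $\kappa = (p_o - p_e)/(1 - p_e)$, where $p_o$ is the observed agreement and $p_e$ is the chance agreement implied by each rater's label frequencies. The sketch below computes both quantities. The labels used are hypothetical, and this is not a claim about how Bogar et al. computed their figure.

```python
from collections import Counter
from typing import Hashable, Sequence


def percent_agreement(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Observed agreement p_o: share of items given the same label by both raters."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Cohen's Kappa between two raters (e.g., an AGS and an expert)."""
    n = len(a)
    p_o = percent_agreement(a, b)
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement p_e from each rater's marginal label frequencies.
    p_e = sum(count_a[label] * count_b[label] for label in set(a) | set(b)) / (n * n)
    if p_e == 1.0:
        return 1.0  # both raters used a single identical label; conventionally perfect
    return (p_o - p_e) / (1 - p_e)


ai = ["ok"] * 9 + ["mistake"]
expert = ["ok"] * 10
print(percent_agreement(ai, expert), cohens_kappa(ai, expert))
```

In this example the two raters agree on 90% of items, yet Kappa is 0. The expert never flags a mistake, so all of the agreement is attributable to chance. Reporting Kappa alongside raw agreement guards against this effect when mistakes are rare.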

Besides VR, adaptive learning platforms (ALPs) are also gaining popularity for their ability to cater to diverse learning needs. ALPs are e-learning platforms that adjust the content delivered to each student according to their unique learning needs. Research has shown that, as a result, students become more engaged and achieve better learning outcomes [93]. In ALPs, the difficulty or type of questions presented to each student may be adapted to their knowledge level [94] so that they achieve mastery at their own pace, in the way best suited to their learning profile. AGSs therefore play a pivotal role in ALPs: by providing instant feedback, they allow the ALP to update the learner model (i.e., student characteristics such as proficiency) and enable further adaptation. ALPs are also often deployed for large student cohorts while giving each student an individualized learning trajectory, so it is difficult or even infeasible for teachers to keep up with grading the sheer volume of submissions manually. As mentioned previously, AGSs can improve efficiency in this respect.
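The feedback loop above can be illustrated with one common learner-model technique, an Elo-style ability update. This technique is swapped in for illustration; the text does not say which learner model any particular ALP uses.

- Each AGS verdict (correct or incorrect) nudges the student's ability estimate toward the observed outcome.
- The next item is chosen so that the predicted success probability is close to a target.
- The logistic link, the step size `k` and the 0.7 target are hypothetical parameters.

```python
import math
from typing import Dict


def expected_correct(ability: float, difficulty: float) -> float:
    """Predicted probability of a correct answer (logistic in ability minus difficulty)."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


def update_ability(ability: float, difficulty: float, correct: bool, k: float = 0.4) -> float:
    """Elo-style update driven by the AGS verdict on the latest submission."""
    return ability + k * (float(correct) - expected_correct(ability, difficulty))


def pick_next_item(ability: float, item_difficulties: Dict[str, float],
                   target: float = 0.7) -> str:
    """Choose the item whose predicted success probability is closest to the target."""
    return min(item_difficulties,
               key=lambda item: abs(expected_correct(ability, item_difficulties[item]) - target))


ability = update_ability(0.0, 0.0, correct=True)  # AGS marks the answer correct
print(ability, pick_next_item(ability, {"easy": -3.0, "medium": -0.85, "hard": 2.0}))
```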

While technical skills have been discussed extensively in this paper, there is increasing recognition of the importance of soft skills in producing well-rounded STEM professionals. Moving forward, more AGSs that focus on assessing skills like collaboration and communication will emerge, reflecting a broader trend towards more holistic student evaluations. For example, Tomic et al. [95] developed an AGS to grade undergraduate collaboration in programming projects by analyzing individual contributions on GitHub. The system uses fuzzy logic to assess factors like the percentage of domain classes, tests and documentation written by each student, awarding badges (Bronze, Silver, Gold) based on contribution levels. For instance, the Silver badge is awarded by evaluating those factors, each categorized into fuzzy sets such as “high”, “medium”, and “low”, and the system eventually gives a binary output indicating whether the student qualified for the badge. Som et al. [96] proposed using CNN models to assess and provide feedback on group collaboration by analyzing spatio-temporal representations of student behavior during a teamwork task, which are extracted from audio and video recordings.
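The fuzzy badge decision described above can be sketched as follows. Each contribution percentage is fuzzified into "low", "medium" and "high" memberships. A rule then combines them using min for AND, max for OR and one-minus for NOT (the standard Zadeh operators), and the rule strength is thresholded into a yes/no answer. The membership breakpoints, the Silver rule and the 0.5 cutoff are all hypothetical; Tomic et al.'s actual sets and rules are not given here.

```python
from typing import Dict


def triangular(x: float, a: float, b: float, c: float) -> float:
    """Membership rising from 0 at a to 1 at b, then falling back to 0 at c."""
    if x <= a or x >= c:
        return 0.0
    return (x - a) / (b - a) if x <= b else (c - x) / (c - b)


def shoulder_left(x: float, full: float, zero: float) -> float:
    """1 up to `full`, falling linearly to 0 at `zero`."""
    if x <= full:
        return 1.0
    if x >= zero:
        return 0.0
    return (zero - x) / (zero - full)


def shoulder_right(x: float, zero: float, full: float) -> float:
    """0 up to `zero`, rising linearly to 1 at `full`."""
    if x >= full:
        return 1.0
    if x <= zero:
        return 0.0
    return (x - zero) / (full - zero)


def fuzzify(pct: float) -> Dict[str, float]:
    """Hypothetical fuzzy sets over a 0-100% contribution share."""
    return {"low": shoulder_left(pct, 10, 40),
            "medium": triangular(pct, 20, 45, 70),
            "high": shoulder_right(pct, 50, 80)}


def silver_badge(domain_pct: float, tests_pct: float, docs_pct: float,
                 cutoff: float = 0.5) -> bool:
    d, t, o = fuzzify(domain_pct), fuzzify(tests_pct), fuzzify(docs_pct)
    # Hypothetical rule: domain is (medium OR high) AND tests is (medium OR high) AND docs is NOT low
    strength = min(max(d["medium"], d["high"]), max(t["medium"], t["high"]), 1.0 - o["low"])
    return strength >= cutoff
```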

The future of AGSs will be shaped by advancements in educational technology and a shift towards nurturing students proficient in both technical and soft competencies. However, issues like trust, biased grading outcomes and faculty readiness will need to be addressed. Future research will focus on tackling these challenges while exploring aforementioned innovations to better serve the needs of educators and students alike.

7. Conclusions

This paper examines the use of AI in automated grading, delineating the types of algorithms used and assessing their efficacy in real-world applications through user perceptions and learning gains. This comprehensive review demonstrates that STEM automated grading systems require fundamentally different approaches, particularly in handling mathematical expressions, scientific diagrams, and multi-step problem-solving processes. While challenges like algorithm aversion, over-reliance and biased grading may occur, various solutions have been suggested to mitigate them. To tap into the full potential of AGSs, researchers should continue to expand on current implementations to assess more holistic skill sets, and integrate them with innovative learning technologies.

Key STEM-specific contributions of this review include the identification of mathematical reasoning evaluation as a critical challenge, analysis of multimodal assessment approaches, and recognition of precision-oriented evaluation requirements in computational fields. AI-enabled AGSs not only offload the grading burden from teachers but also free up valuable time that can be redirected toward supporting students’ learning in more impactful ways. Educators can focus on inculcating complex skills such as creativity, critical thinking, and problem-solving [97]. That said, while the grading process can be significantly streamlined, it does not have to be entirely hands-off. Teachers could still review the automatically generated grades (especially in high-stakes assessments) to ensure high accuracy, and enrich the generated feedback with their own insights to guide student improvement [98]. They can also play a crucial role in the development of AGSs, for example by engineering prompts that guide GenAI models to grade submissions effectively. In this way, AI-powered grading becomes a tool for assistance rather than replacement, empowering educators to focus on the aspects of teaching that matter most.

Author Contributions

Conceptualization, K.H.C.; methodology, L.Y.T., S.H., K.H.C.; validation, L.Y.T., S.H., D.J.Y. and K.H.C.; formal analysis, L.Y.T., S.H., D.J.Y. and K.H.C.; investigation, L.Y.T. and S.H.; resources, K.H.C.; data curation, L.Y.T. and S.H.; writing—original draft preparation, L.Y.T.; writing—review and editing, L.Y.T., S.H., D.J.Y. and K.H.C.; visualization, L.Y.T.; supervision, D.J.Y. and K.H.C.; project administration, K.H.C.; funding acquisition, K.H.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Singapore Ministry of Education (MOE) Tertiary Education Research Fund (TRF) Grant No. MOE2022-TRF-029.

Data Availability Statement

No new data were created or analyzed in this study.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Haller, S.; Aldea, A.; Seifert, C.; Strisciuglio, N. Survey on Automated Short Answer Grading with Deep Learning: From Word Embeddings to Transformers. arXiv 2022, arXiv:2204.03503. [Google Scholar] [CrossRef]

- Magalhães, P.; Ferreira, D.; Cunha, J.; Rosário, P. Online vs traditional homework: A systematic review on the benefits to students’ performance. Comput. Educ. 2020, 152, 103869. [Google Scholar] [CrossRef]

- Zupanc, K.; Bosnic, Z. Advances in the Field of Automated Essay Evaluation. Informatica 2015, 39, 383–395. [Google Scholar]

- Staubitz, T.; Klement, H.; Renz, J.; Teusner, R.; Meinel, C. Towards practical programming exercises and automated assessment in Massive Open Online Courses. In Proceedings of the 2015 IEEE International Conference on Teaching, Assessment, and Learning for Engineering (TALE), Zhuhai, China, 10–12 December 2015; pp. 23–30. [Google Scholar] [CrossRef]

- Zhu, M.; Bonk, C.J.; Sari, A.R. Instructor Experiences Designing MOOCs in Higher Education: Pedagogical, Resource, and Logistical Considerations and Challenges. Online Learn. 2018, 22, 203–241. [Google Scholar]

- Kumar, D.; Haque, A.; Mishra, K.; Islam, F.; Kumar Mishra, B.; Ahmad, S. Exploring the Transformative Role of Artificial Intelligence and Metaverse in Education: A Comprehensive Review. Metaverse Basic Appl. Res. 2023, 2, 55. [Google Scholar] [CrossRef]

- Verma, M. Artificial Intelligence and Its Scope in Different Areas with Special Reference to the Field of Education. Int. J. Adv. Educ. Res. 2018, 3, 5–10. [Google Scholar]

- Barana, A.; Marchisio, M. Ten Good Reasons to Adopt an Automated Formative Assessment Model for Learning and Teaching Mathematics and Scientific Disciplines. Procedia-Soc. Behav. Sci. 2016, 228, 608–613. [Google Scholar] [CrossRef]

- Ahmad, S.F.; Alam, M.M.; Rahmat, M.K.; Mubarik, M.S.; Hyder, S.I. Academic and Administrative Role of Artificial Intelligence in Education. Sustainability 2022, 14, 1101. [Google Scholar] [CrossRef]

- Gordon, C.L.; Lysecky, R.; Vahid, F. The Rise of Program Auto-grading in Introductory CS Courses: A Case Study of zyLabs. In Proceedings of the 2021 ASEE Virtual Annual Conference Content Access, Online, 26–29 July 2021; Available online: https://peer.asee.org/37887 (accessed on 26 July 2025).

- Combéfis, S. Automated Code Assessment for Education: Review, Classification and Perspectives on Techniques and Tools. Software 2022, 1, 3–30. [Google Scholar] [CrossRef]

- Valenti, S.; Neri, F.; Cucchiarelli, A. An Overview of Current Research on Automated Essay Grading. J. Inf. Technol. Educ. Res. 2003, 2, 319–330. [Google Scholar] [CrossRef]

- Denis, I.; Newstead, S.E.; Wright, D.E. A new approach to exploring biases in educational assessment. Br. J. Psychol. 1996, 87, 515–534. [Google Scholar] [CrossRef] [PubMed]

- Barra, E.; López-Pernas, S.; Alonso, A.; Sánchez-Rada, J.F.; Gordillo, A.; Quemada, J. Automated Assessment in Programming Courses: A Case Study during the COVID-19 Era. Sustainability 2020, 12, 7451. [Google Scholar] [CrossRef]

- Manzoor, H.; Naik, A.; Shaffer, C.A.; North, C.; Edwards, S.H. Auto-Grading Jupyter Notebooks. In Proceedings of the 51st ACM Technical Symposium on Computer Science Education, SIGCSE ’20, Portland, OR, USA, 11–14 March 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1139–1144. [Google Scholar] [CrossRef]

- Hagerer, G.; Lahesoo, L.; Anschutz, M.; Krusche, S.; Groh, G. An Analysis of Programming Course Evaluations Before and After the Introduction of an Autograder. In Proceedings of the 2021 19th International Conference on Information Technology Based Higher Education and Training (ITHET), Sydney, Australia, 4–6 November 2021; pp. 1–9. [Google Scholar] [CrossRef]

- Bey, A.; Jermann, P.; Dillenbourg, P. A Comparison between Two Automatic Assessment Approaches for Programming: An Empirical Study on MOOCs. J. Educ. Technol. Soc. 2018, 21, 259–272. [Google Scholar]

- Chen, X.; Breslow, L.; DeBoer, J. Analyzing productive learning behaviors for students using immediate corrective feedback in a blended learning environment. Comput. Educ. 2018, 117, 59–74. [Google Scholar] [CrossRef]

- Mekterović, I.; Brkić, L.; Milašinović, B.; Baranović, M. Building a Comprehensive Automated Programming Assessment System. IEEE Access 2020, 8, 81154–81172. [Google Scholar] [CrossRef]

- Macha Babitha, M.; Sushama, D.C.; Vijaya Kumar Gudivada, D.; Kutubuddin Sayyad Liyakat Kazi, D.; Srinivasa Rao Bandaru, D. Trends of Artificial Intelligence for Online Exams in Education. Int. J. Eng. Comput. Sci. 2023, 14, 2457–2463. [Google Scholar] [CrossRef]

- Cope, B.; Kalantzis, M. Sources of Evidence-of-Learning: Learning and assessment in the era of big data. Open Rev. Educ. Res. 2015, 2, 194–217. [Google Scholar] [CrossRef]

- Zhai, X. Practices and Theories: How Can Machine Learning Assist in Innovative Assessment Practices in Science Education. J. Sci. Educ. Technol. 2021, 30, 139–149. [Google Scholar] [CrossRef]

- Rao, D.M. Experiences With Auto-Grading in a Systems Course. In Proceedings of the 2019 IEEE Frontiers in Education Conference (FIE), Covington, KY, USA, 16–19 October 2019; pp. 1–8. [Google Scholar] [CrossRef]

- Kitaya, H.; Inoue, U. An Online Automated Scoring System for Java Programming Assignments. Int. J. Inf. Educ. Technol. 2016, 6, 275–279. [Google Scholar] [CrossRef]

- Riera, J.; Ardid, M.; Gómez-Tejedor, J.A.; Vidaurre, A.; Meseguer-Dueñas, J.M. Students’ perception of auto-scored online exams in blended assessment: Feedback for improvement. Educ. XX1 2018, 21, 79–103. [Google Scholar]

- Rokade, A.; Patil, B.; Rajani, S.; Revandkar, S.; Shedge, R. Automated Grading System Using Natural Language Processing. In Proceedings of the 2018 Second International Conference on Inventive Communication and Computational Technologies (ICICCT), Coimbatore, India, 20–21 April 2018; pp. 1123–1127. [Google Scholar] [CrossRef]

- Hooda, M.; Rana, C.; Dahiya, O.; Rizwan, A.; Hossain, M.S. Artificial Intelligence for Assessment and Feedback to Enhance Student Success in Higher Education. Math. Probl. Eng. 2022, 2022, 5215722. [Google Scholar] [CrossRef]

- Braiki, B.; Harous, S.; Zaki, N.; Alnajjar, F. Artificial intelligence in education and assessment methods. Bull. Electr. Eng. Inform. 2020, 9, 1998–2007. [Google Scholar] [CrossRef]

- Bian, W.; Alam, O.; Kienzle, J. Automated Grading of Class Diagrams. In Proceedings of the 2019 ACM/IEEE 22nd International Conference on Model Driven Engineering Languages and Systems Companion (MODELS-C), Munich, Germany, 15–20 September 2019; pp. 700–709. [Google Scholar] [CrossRef]

- Riordan, B.; Bichler, S.; Bradford, A.; King Chen, J.; Wiley, K.; Gerard, L.; Linn, M.C. An empirical investigation of neural methods for content scoring of science explanations. In Proceedings of the Fifteenth Workshop on Innovative Use of NLP for Building Educational Applications, Seattle, WA, USA, Online, 10 July 2020; pp. 135–144. [Google Scholar] [CrossRef]

- Zhai, X.; Shi, L.; Nehm, R. A Meta-Analysis of Machine Learning-Based Science Assessments: Factors Impacting Machine-Human Score Agreements. J. Sci. Educ. Technol. 2021, 30, 361–379. [Google Scholar] [CrossRef]

- von Davier, M.; Tyack, L.; Khorramdel, L. Scoring Graphical Responses in TIMSS 2019 Using Artificial Neural Networks. Educ. Psychol. Meas. 2022, 83, 556–585. [Google Scholar] [CrossRef] [PubMed]

- Condor, A. Exploring Automatic Short Answer Grading as a Tool to Assist in Human Rating. In Proceedings of the Artificial Intelligence in Education, Ifrane, Morocco, 6–10 July 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 74–79. [Google Scholar]

- García-Gorrostieta, J.M.; López-López, A.; González-López, S. Automatic argument assessment of final project reports of computer engineering students. Comput. Appl. Eng. Educ. 2018, 26, 1217–1226. [Google Scholar] [CrossRef]

- Lee, H.S.; Pallant, A.; Pryputniewicz, S.; Lord, T.; Mulholland, M.; Liu, O.L. Automated text scoring and real-time adjustable feedback: Supporting revision of scientific arguments involving uncertainty. Sci. Educ. 2019, 103, 590–622. [Google Scholar] [CrossRef]

- DiCerbo, K. Assessment for Learning with Diverse Learners in a Digital World. Educ. Meas. Issues Pract. 2020, 39, 90–93. [Google Scholar] [CrossRef]

- Zhang, Y.; Shah, R.; Chi, M. Deep Learning + Student Modeling + Clustering: A Recipe for Effective Automatic Short Answer Grading. In Proceedings of the 9th International Conference on Educational Data Mining (EDM), Raleigh, NC, USA, 29 June–2 July 2016.

- Angelone, A.M.; Vittorini, P. The Automated Grading of R Code Snippets: Preliminary Results in a Course of Health Informatics. In Proceedings of the Methodologies and Intelligent Systems for Technology Enhanced Learning, 9th International Conference, Ávila, Spain, 26–28 June 2019; Springer: Cham, Switzerland, 2020; pp. 19–27.

- Çınar, A.; Ince, E.; Gezer, M.; Yılmaz, Ö. Machine learning algorithm for grading open-ended physics questions in Turkish. Educ. Inf. Technol. 2020, 25, 3821–3844.

- Julca-Aguilar, F.; Mouchère, H.; Viard-Gaudin, C.; Hirata, N. A general framework for the recognition of online handwritten graphics. IJDAR 2020, 23, 143–160.

- Rowtula, V.; Oota, S.R. Towards Automated Evaluation of Handwritten Assessments. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, NSW, Australia, 20–25 September 2019; pp. 426–433.

- Levin, M.; McKechnie, T.; Khalid, S.; Grantcharov, T.P.; Goldenberg, M. Automated Methods of Technical Skill Assessment in Surgery: A Systematic Review. J. Surg. Educ. 2019, 76, 1629–1639.

- Bian, W.; Alam, O.; Kienzle, J. Is automated grading of models effective? Assessing automated grading of class diagrams. In Proceedings of the 23rd ACM/IEEE International Conference on Model Driven Engineering Languages and Systems, MODELS ’20, Virtual, 16–23 October 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 365–376.

- Vajjala, S. Automated Assessment of Non-Native Learner Essays: Investigating the Role of Linguistic Features. Int. J. Artif. Intell. Educ. 2018, 28, 79–105.

- Štajduhar, I.; Mauša, G. Using string similarity metrics for automated grading of SQL statements. In Proceedings of the 2015 38th International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 25–29 May 2015; pp. 1250–1255.

- Fowler, M.; Chen, B.; Azad, S.; West, M.; Zilles, C. Autograding “Explain in Plain English” questions using NLP. In Proceedings of the 52nd ACM Technical Symposium on Computer Science Education, SIGCSE ’21, Virtual, 13–20 March 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 1163–1169.

- Latifi, S.; Gierl, M.; Boulais, A.; De Champlain, A. Using Automated Scoring to Evaluate Written Responses in English and French on a High-Stakes Clinical Competency Examination. Eval. Health Prof. 2016, 39, 100–113.

- Oquendo, Y.; Riddle, E.; Hiller, D.; Blinman, T.A.; Kuchenbecker, K.J. Automatically rating trainee skill at a pediatric laparoscopic suturing task. Surg. Endosc. 2018, 32, 1840–1857.

- Lan, A.S.; Vats, D.; Waters, A.E.; Baraniuk, R.G. Mathematical Language Processing: Automatic Grading and Feedback for Open Response Mathematical Questions. arXiv 2015, arXiv:1501.04346.

- Hoblos, J. Experimenting with Latent Semantic Analysis and Latent Dirichlet Allocation on Automated Essay Grading. In Proceedings of the 2020 Seventh International Conference on Social Networks Analysis, Management and Security (SNAMS), Paris, France, 14–16 December 2020; pp. 1–7.

- Sung, C.; Dhamecha, T.I.; Mukhi, N. Improving Short Answer Grading Using Transformer-Based Pre-training. In Proceedings of the Artificial Intelligence in Education, Chicago, IL, USA, 25–29 June 2019; Springer International Publishing: Cham, Switzerland, 2019; pp. 469–481.

- Prabhudesai, A.; Duong, T.N.B. Automatic Short Answer Grading using Siamese Bidirectional LSTM Based Regression. In Proceedings of the 2019 IEEE International Conference on Engineering, Technology and Education (TALE), Yogyakarta, Indonesia, 10–13 December 2019; pp. 1–6.

- Riordan, B.; Horbach, A.; Cahill, A.; Zesch, T.; Lee, C.M. Investigating neural architectures for short answer scoring. In Proceedings of the 12th Workshop on Innovative Use of NLP for Building Educational Applications; Association for Computational Linguistics: Copenhagen, Denmark, 2017; pp. 159–168.

- Baral, S.; Botelho, A.F.; Erickson, J.A.; Benachamardi, P.; Heffernan, N.T. Improving Automated Scoring of Student Open Responses in Mathematics. In Proceedings of the 14th International Conference on Educational Data Mining (EDM), Online, 29 June–2 July 2021.

- Fagbohun, O.; Iduwe, N.; Abdullahi, M.; Ifaturoti, A.; Nwanna, O. Beyond Traditional Assessment: Exploring the Impact of Large Language Models on Grading Practices. J. Artif. Intell. Mach. Learn. Data Sci. 2024, 2, 1–8.

- Qadir, J. Engineering Education in the Era of ChatGPT: Promise and Pitfalls of Generative AI for Education. In Proceedings of the 2023 IEEE Global Engineering Education Conference (EDUCON), Kuwait, Kuwait, 1–4 May 2023; pp. 1–9.

- Rawas, S. ChatGPT: Empowering lifelong learning in the digital age of higher education. Educ. Inf. Technol. 2024, 29, 6895–6908.

- Lee, S.S.; Moore, R.L. Harnessing Generative AI (GenAI) for Automated Feedback in Higher Education: A Systematic Review. Online Learn. 2024, 28, 82–106.

- Lagakis, P.; Demetriadis, S.; Psathas, G. Automated Grading in Coding Exercises Using Large Language Models. In Proceedings of the Smart Mobile Communication & Artificial Intelligence; Springer Nature: Cham, Switzerland, 2024; pp. 363–373.

- Lee, G.G.; Latif, E.; Wu, X.; Liu, N.; Zhai, X. Applying large language models and chain-of-thought for automatic scoring. Comput. Educ. Artif. Intell. 2024, 6, 100213.

- Chen, Z.; Wan, T. Achieving Human Level Partial Credit Grading of Written Responses to Physics Conceptual Question using GPT-3.5 with Only Prompt Engineering. arXiv 2024, arXiv:2407.15251.

- Latif, E.; Zhai, X. Fine-tuning ChatGPT for automatic scoring. Comput. Educ. Artif. Intell. 2024, 6, 100210.

- Smith, A.; Leeman-Munk, S.; Shelton, A.; Mott, B.; Wiebe, E.; Lester, J. A Multimodal Assessment Framework for Integrating Student Writing and Drawing in Elementary Science Learning. IEEE Trans. Learn. Technol. 2019, 12, 3–15.

- Bernius, J.P.; Krusche, S.; Bruegge, B. A Machine Learning Approach for Suggesting Feedback in Textual Exercises in Large Courses. In Proceedings of the Eighth ACM Conference on Learning @ Scale, L@S ’21, Virtual, 22–25 June 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 173–182.

- Messer, M.; Brown, N.C.C.; Kölling, M.; Shi, M. Machine Learning-Based Automated Grading and Feedback Tools for Programming: A Meta-Analysis. In Proceedings of the 2023 Conference on Innovation and Technology in Computer Science Education V. 1, ITiCSE 2023, Turku, Finland, 7–12 July 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 491–497.

- Ariely, M.; Nazaretsky, T.; Alexandron, G. Machine Learning and Hebrew NLP for Automated Assessment of Open-Ended Questions in Biology. Int. J. Artif. Intell. Educ. 2023, 33, 1–34.

- Weegar, R.; Idestam-Almquist, P. Reducing Workload in Short Answer Grading Using Machine Learning. Int. J. Artif. Intell. Educ. 2024, 34, 247–273.

- Zhu, M.; Lee, H.S.; Wang, T.; Liu, O.L.; Belur, V.; Pallant, A. Investigating the impact of automated feedback on students’ scientific argumentation. Int. J. Sci. Educ. 2017, 39, 1648–1668.

- Vittorini, P.; Menini, S.; Tonelli, S. An AI-Based System for Formative and Summative Assessment in Data Science Courses. Int. J. Artif. Intell. Educ. 2021, 31, 159–185.

- Tisha, S.M.; Oregon, R.A.; Baumgartner, G.; Alegre, F.; Moreno, J. An Automatic Grading System for a High School-level Computational Thinking Course. In Proceedings of the 2022 IEEE/ACM 4th International Workshop on Software Engineering Education for the Next Generation (SEENG), Pittsburgh, PA, USA, 17 May 2022; pp. 20–27.

- Nunes, A.; Cordeiro, C.; Limpo, T.; Castro, S.L. Effectiveness of automated writing evaluation systems in school settings: A systematic review of studies from 2000 to 2020. J. Comput. Assist. Learn. 2022, 38, 599–620.

- Wan, T.; Chen, Z. Exploring generative AI assisted feedback writing for students’ written responses to a physics conceptual question with prompt engineering and few-shot learning. Phys. Rev. Phys. Educ. Res. 2024, 20, 010152.

- Hsu, S.; Li, T.W.; Zhang, Z.; Fowler, M.; Zilles, C.; Karahalios, K. Attitudes Surrounding an Imperfect AI Autograder. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, CHI ’21, Yokohama, Japan, 8–13 May 2021; Association for Computing Machinery: New York, NY, USA, 2021.

- Nazaretsky, T.; Ariely, M.; Cukurova, M.; Alexandron, G. Teachers’ trust in AI-powered educational technology and a professional development program to improve it. Br. J. Educ. Technol. 2022, 53, 914–931.

- Bond, M.; Khosravi, H.; De Laat, M.; Bergdahl, N.; Negrea, V.; Oxley, E.; Pham, P.; Chong, S.W.; Siemens, G. A meta systematic review of artificial intelligence in higher education: A call for increased ethics, collaboration, and rigour. Int. J. Educ. Technol. High. Educ. 2024, 21, 4.

- Selwyn, N. Less Work for Teacher? The Ironies of Automated Decision-Making in Schools. In Everyday Automation, 1st ed.; Routledge: London, UK, 2022.

- Wang, P.-l. Effects of an automated writing evaluation program: Student experiences and perceptions. Electron. J. Foreign Lang. Teach. 2015, 12, 79–100.

- Wilson, J.; Ahrendt, C.; Fudge, E.A.; Raiche, A.; Beard, G.; MacArthur, C. Elementary teachers’ perceptions of automated feedback and automated scoring: Transforming the teaching and learning of writing using automated writing evaluation. Comput. Educ. 2021, 168, 104208.

- Gordillo, A. Effect of an Instructor-Centered Tool for Automatic Assessment of Programming Assignments on Students’ Perceptions and Performance. Sustainability 2019, 11, 5568.

- Buffardi, K.; Edwards, S.H. Reconsidering Automated Feedback: A Test-Driven Approach. In Proceedings of the 46th ACM Technical Symposium on Computer Science Education, SIGCSE ’15, Kansas City, MO, USA, 4–7 March 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 416–420.

- Baniassad, E.; Zamprogno, L.; Hall, B.; Holmes, R. STOP THE (AUTOGRADER) INSANITY: Regression Penalties to Deter Autograder Overreliance. In Proceedings of the 52nd ACM Technical Symposium on Computer Science Education, SIGCSE ’21, Virtual, 13–20 March 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 1062–1068.

- Lawrence, R.; Foss, S.; Urazova, T. Evaluation of Submission Limits and Regression Penalties to Improve Student Behavior with Automatic Assessment Systems. ACM Trans. Comput. Educ. 2023, 23, 1–24.

- Anjum, G.; Choubey, J.; Kushwaha, S.; Patkar, V. AI in Education: Evaluating the Efficacy and Fairness of Automated Grading Systems. Int. J. Innov. Res. Sci. Eng. Technol. (IJIRSET) 2023, 12, 9043–9050.

- Ma, Y.; Hu, S.; Li, X.; Wang, Y.; Chen, Y.; Liu, S.; Cheong, K.H. When LLMs Learn to be Students: The SOEI Framework for Modeling and Evaluating Virtual Student Agents in Educational Interaction. arXiv 2024, arXiv:2410.15701.

- Morris, W.; Holmes, L.; Choi, J.S.; Crossley, S. Automated Scoring of Constructed Response Items in Math Assessment Using Large Language Models. Int. J. Artif. Intell. Educ. 2024, 35, 559–586.

- Condor, A.; Litster, M.; Pardos, Z. Automatic Short Answer Grading with SBERT on Out-of-Sample Questions. In Proceedings of the International Conference on Educational Data Mining (EDM), Online, 29 June–2 July 2021.

- Akgun, S.; Greenhow, C. Artificial intelligence in education: Addressing ethical challenges in K-12 settings. AI Ethics 2022, 2, 431–440.

- Reilly, E.D.; Williams, K.M.; Stafford, R.E.; Corliss, S.B.; Walkow, J.C.; Kidwell, D.K. Global Times Call for Global Measures: Investigating Automated Essay Scoring in Linguistically-Diverse MOOCs. Online Learn. 2016, 20, 217–229.

- Tuah, N.A.A.; Naing, L. Is Online Assessment in Higher Education Institutions during COVID-19 Pandemic Reliable? Siriraj Med. J. 2021, 73, 61.

- Teo, Y.H.; Yap, J.H.; An, H.; Yu, S.C.M.; Zhang, L.; Chang, J.; Cheong, K.H. Enhancing the MEP coordination process with BIM technology and management strategies. Sensors 2022, 22, 4936.

- Cheong, K.H.; Lai, J.W.; Yap, J.H.; Cheong, G.S.W.; Budiman, S.V.; Ortiz, O.; Mishra, A.; Yeo, D.J. Utilizing Google cardboard virtual reality for visualization in multivariable calculus. IEEE Access 2023, 11, 75398–75406.

- Bogar, P.Z.; Virag, M.; Bene, M.; Hardi, P.; Matuz, A.; Schlegl, A.T.; Toth, L.; Molnar, F.; Nagy, B.; Rendeki, S.; et al. Validation of a novel, low-fidelity virtual reality simulator and an artificial intelligence assessment approach for peg transfer laparoscopic training. Sci. Rep. 2024, 14, 16702.

- Tan, L.Y.; Hu, S.; Yeo, D.J.; Cheong, K.H. Artificial Intelligence-Enabled Adaptive Learning Platforms: A Review. Comput. Educ. Artif. Intell. 2025, 9, 100429.

- Drijvers, P.; Ball, L.; Barzel, B.; Heid, M.K.; Cao, Y.; Maschietto, M. Uses of Technology in Lower Secondary Mathematics Education, 1st ed.; Number 1 in ICME-13 Topical Surveys; Springer: Cham, Switzerland, 2016; pp. 1–34. ISBN 978-3-319-33666-4.