General Runge–Kutta–Nyström Methods for Linear Inhomogeneous Second-Order Initial Value Problems

Abstract

1. Introduction

2. General Runge–Kutta–Nyström Methods and Order Conditions

2.1. Butcher Tableau for GRKN Methods
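A GRKN tableau for y'' = f(t, y, y') supplies nodes c, one matrix for the y-stages, a second matrix for the y'-stages, and two weight vectors. The sketch below implements one explicit step in the standard form described by Hairer, Nørsett and Wanner [7]. The names `A_y`, `A_yp`, `b_y`, `b_yp` are our own. The tableau is a hypothetical stand-in obtained by applying classical RK4 to the first-order system (y, y')' = (y', f), not one of the methods constructed in this paper.

```python
import math
import numpy as np

def grkn_step(f, t, y, yp, h, c, A_y, A_yp, b_y, b_yp):
    """One explicit GRKN step for y'' = f(t, y, y').

    Stage values (explicit method, so only stages j < i contribute):
      Y_i  = y  + c_i h y' + h^2 sum_j A_y[i, j]  f_j
      Y'_i = y' +            h   sum_j A_yp[i, j] f_j
    """
    s = len(c)
    F = []
    for i in range(s):
        Yi = y + c[i] * h * yp + h**2 * sum(A_y[i, j] * F[j] for j in range(i))
        Ypi = yp + h * sum(A_yp[i, j] * F[j] for j in range(i))
        F.append(f(t + c[i] * h, Yi, Ypi))
    y_new = y + h * yp + h**2 * sum(b_y[i] * F[i] for i in range(s))
    yp_new = yp + h * sum(b_yp[i] * F[i] for i in range(s))
    return y_new, yp_new

# Stand-in tableau: RK4 on (y, y')' = (y', f). Substituting the y'-stages
# into the y-stages gives A_y = A @ A and b_y = b @ A.
A_rk = np.array([[0, 0, 0, 0],
                 [0.5, 0, 0, 0],
                 [0, 0.5, 0, 0],
                 [0, 0, 1, 0]])
b_rk = np.array([1/6, 1/3, 1/3, 1/6])
c = A_rk.sum(axis=1)
A_y, A_yp = A_rk @ A_rk, A_rk
b_y, b_yp = b_rk @ A_rk, b_rk

# Usage: y'' = -y, y(0) = 1, y'(0) = 0, exact solution y = cos t.
y1, yp1 = grkn_step(lambda t, y, yp: -y, 0.0, 1.0, 0.0, 0.1,
                    c, A_y, A_yp, b_y, b_yp)
print(abs(y1 - math.cos(0.1)), abs(yp1 + math.sin(0.1)))
```

The same function accepts any explicit tableau, so coefficients of an actual GRKN pair can be substituted without changing the step logic.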

2.2. Rooted Trees and Order Conditions

2.3. Embedded Pairs and Linear Problems

- A primary, higher-order method that advances the solution.

- A secondary, lower-order method used for error estimation.

- Many elementary differentials vanish due to linearity.

- This reduces the number of order conditions and free parameters needed.

- Evolutionary optimization (e.g., Differential Evolution) can be employed to search for coefficients satisfying the order conditions while minimizing local error [8].

- a six-stage GRKN pair of orders 6(4),

- an eight-stage GRKN pair of orders 7(5),
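The Differential Evolution search mentioned above can be sketched as a minimal DE/rand/1/bin loop in the style of Storn and Price [9]. This is an illustration under stated assumptions, not the authors' optimization setup: the population size, `F`, `CR`, seed and generation count are hypothetical, and the toy objective only asks for weights w on fixed nodes (0, 1/2, 1) satisfying the quadrature conditions Σ w_i c_i^k = 1/(k+1), k = 0, 1, 2, whose exact solution is Simpson's weights (1/6, 2/3, 1/6). The paper's objective additionally involves local error terms [8].

```python
import random

def differential_evolution(obj, bounds, pop_size=30, gens=1000,
                           F=0.5, CR=0.9, seed=1):
    """Minimal DE/rand/1/bin minimiser (illustrative sketch)."""
    rng = random.Random(seed)
    dim = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    cost = [obj(x) for x in pop]
    for _ in range(gens):
        for i in range(pop_size):
            # Mutation: three distinct members, all different from i.
            r1, r2, r3 = rng.sample([j for j in range(pop_size) if j != i], 3)
            # Binomial crossover; coordinate jrand always comes from the mutant.
            jrand = rng.randrange(dim)
            trial = [pop[r1][d] + F * (pop[r2][d] - pop[r3][d])
                     if rng.random() < CR or d == jrand else pop[i][d]
                     for d in range(dim)]
            # Greedy one-to-one selection.
            f_trial = obj(trial)
            if f_trial <= cost[i]:
                pop[i], cost[i] = trial, f_trial
    best = min(range(pop_size), key=lambda k: cost[k])
    return pop[best], cost[best]

# Toy objective: squared residuals of sum_i w_i c_i^k = 1/(k+1), k = 0, 1, 2.
nodes = [0.0, 0.5, 1.0]

def residual(w):
    return sum((sum(wi * ci**k for wi, ci in zip(w, nodes)) - 1 / (k + 1))**2
               for k in range(3))

w, r = differential_evolution(residual, [(-1.0, 1.0)] * 3)
print(w, r)  # w should approach Simpson's weights (1/6, 2/3, 1/6)
```

In a real derivation the unknowns would be the free tableau coefficients left after solving the linear-problem order conditions symbolically, and the objective would weigh principal error coefficients.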

Algorithm 1. Adaptive embedded GRKN method

Require: initial values, step size h, tolerance TOL, method coefficients.
Ensure: approximate solution values at each accepted step.
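The accept/reject decision inside an adaptive embedded loop is commonly made with the elementary step-size controller described by Hairer, Nørsett and Wanner [7]. The sketch below assumes that controller; the safety factor `fac` and the bounds `facmin`/`facmax` are hypothetical defaults, not values taken from this paper. Here `err` is the embedded estimate of the local error and `q` is the order of the lower-order (error-estimating) method, e.g. q = 4 for a 6(4) pair.

```python
def propose_step(h, err, tol, q, fac=0.9, facmin=0.2, facmax=5.0):
    """Accept/reject a step and propose the next step size.

    err is the embedded estimate ||y_high - y_low||, which behaves like
    O(h^(q+1)) when q is the order of the lower-order method.
    """
    if err == 0.0:
        # Estimate vanished: accept and grow the step as much as allowed.
        return True, h * facmax
    scale = fac * (tol / err) ** (1.0 / (q + 1))
    h_new = h * min(facmax, max(facmin, scale))
    return err <= tol, h_new

acc1, h_next = propose_step(h=0.1, err=1e-10, tol=1e-8, q=4)   # accepted, h grows
acc2, h_retry = propose_step(h=0.1, err=1e-6, tol=1e-8, q=4)   # rejected, h shrinks
print(acc1, h_next, acc2, h_retry)
```

On rejection the step is repeated from the same point with `h_retry`; on acceptance the solution advances and `h_next` is used for the following step.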

3. Method Derivation and Symbolic Conditions for Linear Problems

3.1. Summary: A Theory for General Nyström Methods

3.2. Order Conditions for GRKN Methods

- True local error of the 7th-order method:

- Estimated local error (from the embedded pair):

- Global error (accumulated over steps):
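One common way to make these three measures precise is given below. The notation is our assumption: y_1 is the higher-order result and ŷ_1 the embedded lower-order result after one step from exact data at t_0, y_n is the numerical solution at t_n, and y(t) is the exact solution; the choice of norm is also assumed.

```latex
\begin{aligned}
\text{true local error:} &\quad \lVert y_1 - y(t_0+h) \rVert,\\
\text{estimated local error:} &\quad \lVert y_1 - \hat{y}_1 \rVert,\\
\text{global error:} &\quad \max_{0 \le n \le N} \lVert y_n - y(t_n) \rVert .
\end{aligned}
```

For a pair of orders p(q), the true local error behaves like O(h^{p+1}), while the estimate behaves like O(h^{q+1}), since it is dominated by the error of the lower-order formula.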

3.3. Order Conditions for Linear Inhomogeneous Problems

- All second- and higher-order partial derivatives of f with respect to y and y′ vanish.

- Nonlinear combinations of trees reduce to simpler linear combinations.

- Many trees collapse into equivalent forms.
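One family of conditions that survives for linear inhomogeneous problems comes from the forcing term alone. Assuming the problem has the form y'' = K y + L y' + g(t) (our notation), setting K = L = 0 leaves y'' = g(t), whose exact step is y(h) = y_0 + h y'_0 + ∫_0^h (h − s) g(s) ds. Taking g(s) = s^k gives the integral h^{k+2}/((k+1)(k+2)), so the y-weights must satisfy Σ b̄_i c_i^k = 1/((k+1)(k+2)) for k ≤ p − 2, and the y'-weights must satisfy Σ b'_i c_i^k = 1/(k+1) for k ≤ p − 1. These are necessary, not sufficient, for order p. The sketch checks them exactly with rational arithmetic; the RK4-derived tableau is a hypothetical stand-in.

```python
from fractions import Fraction as Fr

def forcing_conditions_hold(c, b_y, b_yp, p):
    """Check the order conditions coming from y'' = g(t) alone.

    For g(t) = t^k, exact integration over one step gives
      y:  sum_i b_y[i]  c_i^k = 1 / ((k+1)(k+2)),  k = 0..p-2
      y': sum_i b_yp[i] c_i^k = 1 / (k+1),         k = 0..p-1
    These are necessary (not sufficient) conditions for order p.
    """
    ok_y = all(sum(w * ci**k for w, ci in zip(b_y, c)) == Fr(1, (k + 1) * (k + 2))
               for k in range(p - 1))
    ok_yp = all(sum(w * ci**k for w, ci in zip(b_yp, c)) == Fr(1, k + 1)
                for k in range(p))
    return ok_y and ok_yp

# Stand-in tableau: RK4 rewritten as a GRKN method (b_y = b A, b_yp = b).
c = [Fr(0), Fr(1, 2), Fr(1, 2), Fr(1)]
b_y = [Fr(1, 6), Fr(1, 6), Fr(1, 6), Fr(0)]
b_yp = [Fr(1, 6), Fr(1, 3), Fr(1, 3), Fr(1, 6)]
print(forcing_conditions_hold(c, b_y, b_yp, 4))  # True
print(forcing_conditions_hold(c, b_y, b_yp, 5))  # False
```

Exact `Fraction` arithmetic avoids floating-point tolerances, which is convenient when rational coefficients are checked symbolically before any numerical optimization.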

3.4. Producing the New Methods

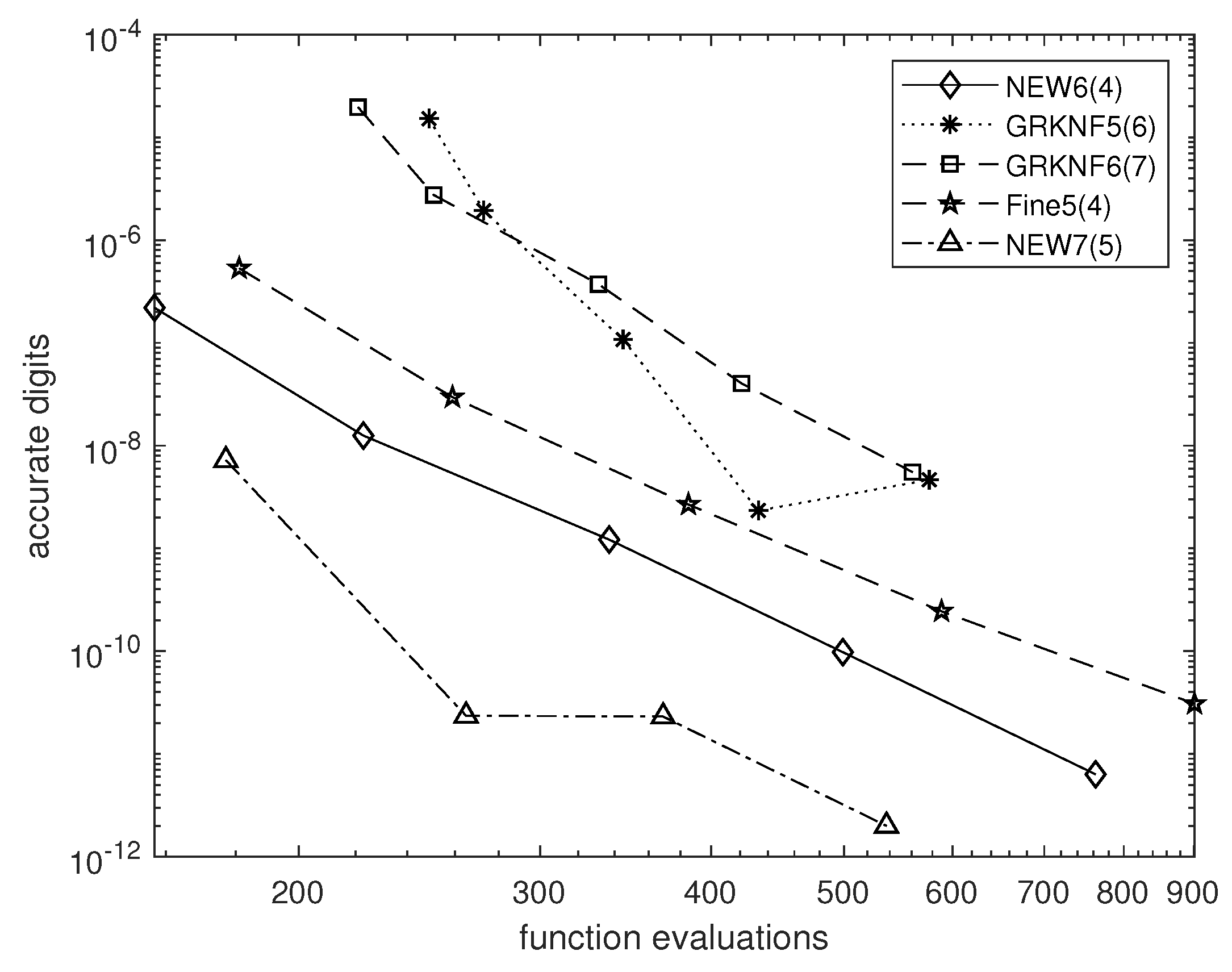

4. Numerical Tests

- GRKNF5(6), a fifth-order method, effectively using six stages per step, appeared in [1].

- GRKNF6(7), a sixth-order method, effectively using ten stages per step, appeared in [1].

- Fine5(4), an FSAL fifth-order method, effectively using six stages per step, appeared in [2].

- NEW6(4), the FSAL pair constructed here, effectively using six stages per step.

- NEW7(5), the FSAL pair constructed here, effectively using eight stages per step.

4.1. Damped Harmonic Oscillator with External Forcing
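The parameters of this test problem did not survive extraction, so the sketch uses a hypothetical manufactured problem of the same type: y'' + 2ζ y' + y = 2ζ cos t with y(0) = 0, y'(0) = 1, whose exact solution is y = sin t (substituting gives −sin t + 2ζ cos t + sin t = 2ζ cos t). The damping value `ZETA`, the interval, and the integrator are assumptions. Classical RK4 on the first-order system is used as a stand-in (it is equivalent to a four-stage GRKN method) and is not one of the compared pairs. Halving h and comparing maximum errors estimates the observed order.

```python
import math

ZETA = 0.1  # hypothetical damping ratio

def f(t, y, yp):
    # y'' = -2*zeta*y' - y + forcing, with forcing chosen so that y = sin t
    return -2 * ZETA * yp - y + 2 * ZETA * math.cos(t)

def max_error(f, exact, t0, y0, yp0, h, n):
    """Fixed-step RK4 on (y, y')' = (y', f); returns max |y_n - exact(t_n)|."""
    y, yp = y0, yp0
    err = 0.0
    for i in range(n):
        t = t0 + i * h
        k1y, k1v = yp, f(t, y, yp)
        k2y, k2v = yp + 0.5*h*k1v, f(t + 0.5*h, y + 0.5*h*k1y, yp + 0.5*h*k1v)
        k3y, k3v = yp + 0.5*h*k2v, f(t + 0.5*h, y + 0.5*h*k2y, yp + 0.5*h*k2v)
        k4y, k4v = yp + h*k3v, f(t + h, y + h*k3y, yp + h*k3v)
        y += h * (k1y + 2*k2y + 2*k3y + k4y) / 6
        yp += h * (k1v + 2*k2v + 2*k3v + k4v) / 6
        err = max(err, abs(y - exact(t0 + (i + 1) * h)))
    return err

T = 10.0
e1 = max_error(f, math.sin, 0.0, 0.0, 1.0, T / 100, 100)
e2 = max_error(f, math.sin, 0.0, 0.0, 1.0, T / 200, 200)
observed_order = math.log2(e1 / e2)
print(e1, e2, observed_order)  # observed order should be close to 4
```

Replacing the RK4 update with a GRKN pair and plotting error against the number of function evaluations gives the usual efficiency comparison.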

4.2. Coupled Oscillators in a 2D Framework

4.3. Multi-Mass Coupled Oscillators with Damping and Forcing
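A damped, forced multi-mass system M x'' + C x' + K x = F(t) becomes a linear inhomogeneous second-order problem once solved for x'': x'' = −M⁻¹(K x + C x') + M⁻¹F(t). The sketch builds this right-hand side for a hypothetical fixed-fixed chain. The masses, spring and damping constants, the tridiagonal damping pattern, and the forcing F0 cos(Ωt) on the first mass are all assumptions, not the parameters used in this test.

```python
import numpy as np

def chain_matrices(n, stiffness=1.0, damping=0.05):
    """Tridiagonal stiffness and damping matrices of a fixed-fixed chain."""
    T = 2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
    return stiffness * T, damping * T

def make_rhs(masses, K, C, F0=1.0, Omega=1.3):
    """Return f(t, y, y') = M^{-1} (F(t) - K y - C y') for diagonal M.

    Hypothetical forcing: F0*cos(Omega*t) acting on the first mass only.
    """
    inv_m = 1.0 / np.asarray(masses, dtype=float)
    def f(t, y, yp):
        force = np.zeros_like(y)
        force[0] = F0 * np.cos(Omega * t)
        return inv_m * (force - K @ y - C @ yp)
    return f

n = 4
K, C = chain_matrices(n)
f = make_rhs([1.0, 2.0, 1.0, 2.0], K, C)
print(f(0.0, np.zeros(n), np.zeros(n)))  # only the forced first mass accelerates
```

Because f is affine in (y, y'), the reduced order conditions for linear inhomogeneous problems apply directly to this right-hand side.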

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. MATLAB Code

References

- Fehlberg, E. Classical Seventh-, Sixth-, and Fifth-Order Runge–Kutta–Nyström Formulas with Stepsize Control for General Second-Order Differential Equations. NASA Technical Report R-432. 1974. Available online: https://ntrs.nasa.gov/api/citations/19740026877/downloads/19740026877.pdf (accessed on 2 July 2025).

- Fine, J.M. Low Order Practical Runge–Kutta–Nyström Methods. Computing 1987, 38, 281–297.

- Kovalnogov, V.N.; Fedorov, R.V.; Karpukhina, M.T.; Kornilova, M.I.; Simos, T.E.; Tsitouras, C. Runge–Kutta–Nyström Methods of Eighth Order for Addressing Linear Inhomogeneous Problems. J. Comput. Appl. Math. 2023, 419, 114778.

- Butcher, J.C. An Algebraic Theory of Integration Methods. Math. Comput. 1972, 26, 79–106.

- Butcher, J.C. Numerical Methods for Ordinary Differential Equations, 2nd ed.; John Wiley & Sons: Chichester, UK, 2003.

- Hairer, E.; Wanner, G. A Theory for Nyström Methods. Numer. Math. 1976, 25, 377–395.

- Hairer, E.; Nørsett, S.P.; Wanner, G. Solving Ordinary Differential Equations I: Nonstiff Problems, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 1993.

- Simos, T.E.; Tsitouras, C. Evolutionary Derivation of Runge–Kutta Pairs for Addressing Inhomogeneous Linear Problems. Numer. Algorithms 2021, 87, 511–525.

- Storn, R.; Price, K. Differential Evolution—A Simple and Efficient Heuristic for Global Optimization over Continuous Spaces. J. Glob. Optim. 1997, 11, 341–359.

- MATLAB, Version 7.10.0; The MathWorks Inc.: Natick, MA, USA, 2010.

- Storn, R.; Price, K.; Neumaier, A.; Zandt, J.V. DeMat. Available online: https://github.com/mikeagn/DeMatDEnrand (accessed on 2 July 2025).

- Mazraeh, H.D.; Parand, K. An innovative combination of deep Q-networks and context-free grammars for symbolic solutions to differential equations. Eng. Appl. Artif. Intell. 2025, 142, 109733.

- Tsitouras, C.; Famelis, I.T. Symbolic Derivation of Runge–Kutta–Nyström Order Conditions. J. Math. Chem. 2009, 46, 896–912.

- Nayfeh, A.H.; Mook, D.T. Nonlinear Oscillations; Wiley-VCH: Weinheim, Germany, 2008.

- Inman, D.J. Engineering Vibration, 4th ed.; Pearson: Boston, MA, USA, 2014.

- Bingham, C.M.; Birkett, N.M.; Sims, N.D. Control of satellite structures using passive and semi-active vibration isolation. J. Sound Vib. 2006, 294, 1–18.

- Craig, R.R., Jr.; Kurdila, A.J. Fundamentals of Structural Dynamics, 2nd ed.; Wiley: Hoboken, NJ, USA, 2006.

- Meirovitch, L. Analytical Methods in Vibrations; Macmillan: New York, NY, USA, 1967.


Table: Butcher tableau (c | A) of NEW6(4), seven stages with c_7 = 1.

Table: Butcher tableau (c | A) of NEW7(5), nine stages with c_9 = 1.


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alharthi, N.H.; Alqahtani, R.T.; Simos, T.E.; Tsitouras, C. General Runge–Kutta–Nyström Methods for Linear Inhomogeneous Second-Order Initial Value Problems. Mathematics 2025, 13, 2826. https://doi.org/10.3390/math13172826
