1. Introduction

Magnetic levitation is a technology that uses magnetic fields to suspend objects in the air without physical contact. Because electromagnetic forces counteract gravity, the suspended object moves in a frictionless environment, which allows smooth and efficient motion [1]. This technology not only promises to redefine high-speed travel but also extends to diverse applications, from precision manufacturing to energy harvesting. With continuous progress in industrial technology, magnetic levitation systems (MLS) have become increasingly prevalent, particularly in high-precision positioning tasks [2]. Choosing a control approach that is both effective and simple remains a major challenge in MLS development, which makes the control of these systems an active research topic.

In recent years, numerous studies have sought to improve MLS performance. In 2020, Wang et al. presented an adaptive terminal sliding mode control approach with improved disturbance rejection [3]. In 2021, Vo et al. developed a fixed-time trajectory tracking controller for uncertain MLS [4]. Zhang et al. designed and implemented a Takagi–Sugeno fuzzy controller for a magnetic levitation ball [5]. Despite their effectiveness, these methods often involve complex computations, and their control performance can still be improved. Meanwhile, fuzzy neural networks (FNNs) offer several advantages, including fast learning, simple implementation, and the ability to handle nonlinear systems. To achieve better performance with simpler computation, this study develops a dynamic Petri fuzzy neural network (DPFNN) for the precise control of nonlinear MLS.

Given the substantial complexity and nonlinear dynamics of MLS, traditional model-based control strategies are often unsuitable. As a result, model-free control approaches are considered more appropriate for such systems. In particular, neural networks (NNs) have attracted significant attention across various fields [6,7,8,9,10,11]. Although NNs possess universal approximation capability for continuous functions, their learning process is global: all weights are updated at every training iteration, which results in comparatively slow convergence. Furthermore, the approximation performance of fully connected NNs depends strongly on the quality and coverage of the training dataset, which limits their effectiveness in online learning applications. FNNs are a promising alternative that addresses these limitations. They combine the adaptive learning ability of neural networks with the linguistic reasoning and interpretability of fuzzy logic, offering lower computational complexity and faster learning while retaining efficient knowledge representation and inference.

Petri nets (PN) are a mathematical modeling language used to describe and analyze systems with concurrent, asynchronous, distributed, or parallel behavior, with applications in computer science, manufacturing, workflows, and biological processes [12]. Dynamic Petri (DP) nets extend the basic Petri net framework to model systems with dynamic behavior, such as those with variable structure, evolving state spaces, or changing topology. These models can represent systems in which transitions between states depend on conditions that evolve over time [13]. DP approaches have been applied in multiple domains, including computer networks, manufacturing systems, workflow management, and biological systems [14,15,16,17,18,19]. Li et al. (2019) created an improved failure mode and effects analysis (FMEA) framework by integrating interval type-2 fuzzy sets with fuzzy Petri nets, overcoming the shortcomings of the conventional risk priority number (RPN) approach and improving the reliability of risk assessment in aerospace electronics production [20]. In 2020, Hu and Liang proposed a dynamic scheduling approach for flexible manufacturing systems that combines Petri nets with deep reinforcement learning and graph convolutional networks [21]. More recently, Wang et al. (2022) developed a fuzzy Petri net model that incorporates the synergistic effects of events, improving the reasoning ability of knowledge-based systems [22].

In this study, we propose a novel approach that integrates the dynamic Petri fuzzy neural network (DPFNN) with balancing composite motion optimization (BCMO) [23,24] for the control of nonlinear systems and chaotic synchronization [25,26,27,28,29,30]. The multilayer framework strengthens both the learning capacity and the adaptability of the DP mechanism. Unlike conventional designs, the proposed DP-based TSKCMAC architecture offers several distinct benefits. Its hierarchical structure improves generalization and reduces the number of fuzzy rules by covering the input space efficiently, thereby increasing computational efficiency. Moreover, embedding DP as an intermediate layer within TSKCMAC enables dynamic token generation and adaptive fuzzy rule selection according to the activated nodes, which improves decision-making in complex environments. The DP functionality also improves system robustness while eliminating redundant fuzzy rules, yielding a compact and effective rule base and further boosting overall performance. Although the proposed method adopts a hybrid architecture consisting of a Petri net supervisor, a fuzzy CMAC controller, and a BCMO optimizer, it is designed so that most of the computational effort is shifted to the offline phase. Compared with existing methods [3,4] that require iterative optimization or reinforcement learning computations during online execution, the online implementation of the proposed system involves only Petri net-driven switching logic and a CMAC table lookup. These operations have constant-time complexity and require no real-time policy search or gradient-based adaptation. Therefore, in practice, our approach achieves fast and lightweight online execution, as detailed in Section 3.

The proposed method is evaluated on two test cases: a metal ball controlled in a magnetic levitation system [31,32,33] and a mass–spring–damper system [34]. The proposed DPCMAC controller is constructed to avoid heavy computational demand while retaining the inherent benefits of the CMAC framework, which reduces the processing load associated with parameter learning. Furthermore, it includes mechanisms that compensate for approximation errors [35,36,37,38]. Following adaptive tuning principles, the control performance can be improved by adjusting the control parameters. Additionally, the developed intelligent controller is implemented on a field-programmable gate array (FPGA) chip [39], which offers a compact design, cost efficiency, high execution speed, and flexibility [40]. Simulation and experimental results for the magnetic levitation system are provided to demonstrate the control capability of the proposed intelligent controller.

3. Intelligent Control System Design

3.1. Petri Net

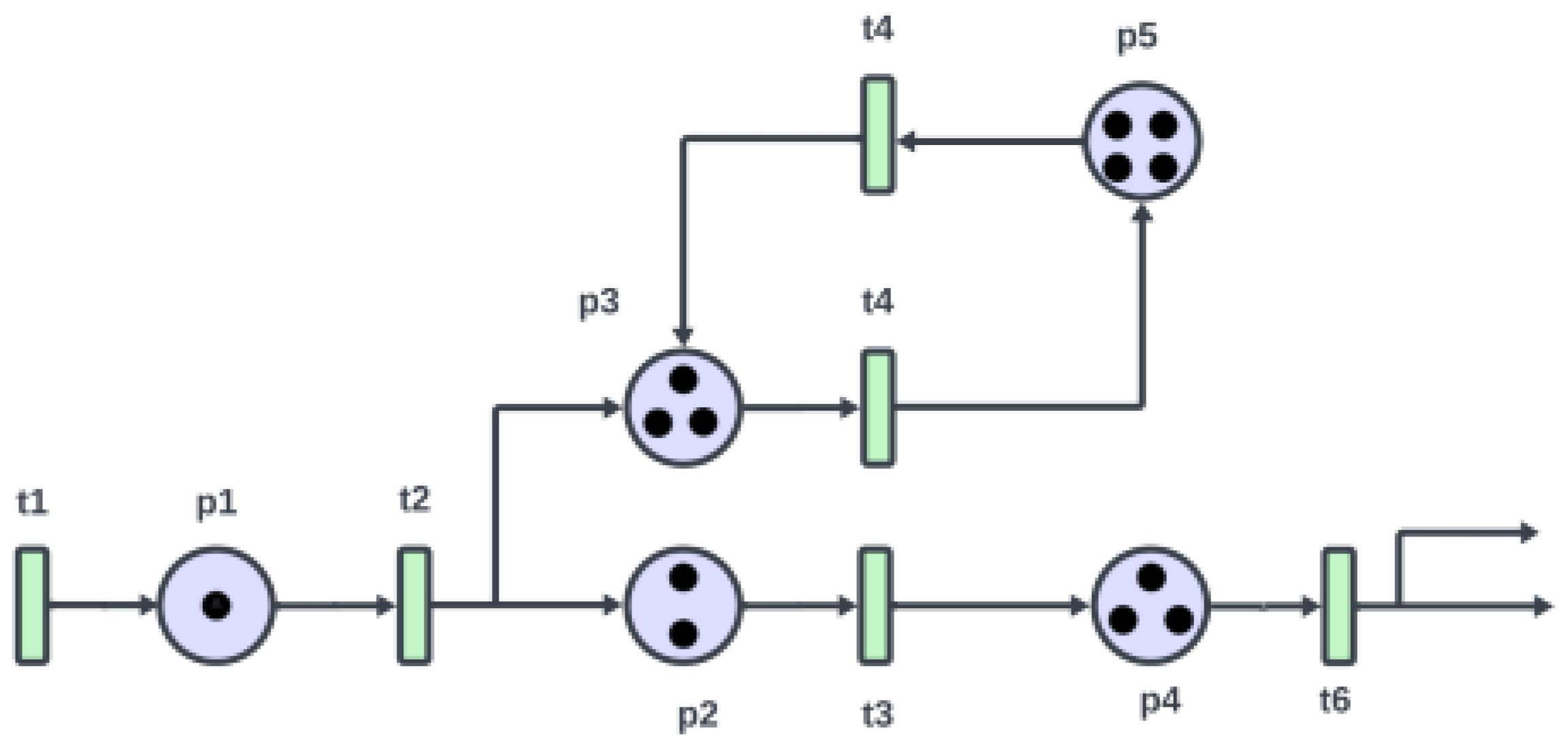

A Petri net (PN) is a directed bipartite graph with two types of nodes, called places and transitions. Directed arcs never connect two places or two transitions; they connect places to transitions or transitions to places. Places are drawn as circles and transitions as rectangles. Each place may hold one or more tokens, drawn as dots, and these tokens are used to model the system's behavior. The marking of a PN is a vector of nonnegative integers whose dimension equals the number of places; its $k$-th component equals the number of tokens in place $p_k$ [13].

The PN shown in Figure 3 illustrates the definition above. This PN contains five places and six transitions, denoted by $p_i$ and $t_j$, respectively. Formally, the structure of a PN can be described as

$PN = (P, T, I, O, \alpha)$,

where $P = \{p_1, p_2, \ldots, p_m\}$ is a finite set of places and $T = \{t_1, t_2, \ldots, t_n\}$ is a finite set of transitions. $I: P \times T \to \{0, 1\}$ is a mapping between places and transitions, also known as the input function: $I(p_i, t_j) = 1$ if there is a directed arc from $p_i$ to $t_j$, and $I(p_i, t_j) = 0$ otherwise, for $i = 1, \ldots, m$ and $j = 1, \ldots, n$. Similarly, $O: T \times P \to \{0, 1\}$ is a mapping from transitions to places, also known as the output function: $O(t_j, p_i) = 1$ if there is a directed arc from $t_j$ to $p_i$, and $O(t_j, p_i) = 0$ otherwise, with $i$ and $j$ in the same ranges. Finally, $\alpha: P \to [0, 1]$ is a mapping from places to real values between zero and one, called the association function.

According to the transition (firing) rule, the state, or marking, of a PN changes in order to reproduce the dynamic behavior of real-world systems [22]:

- (1) A transition is enabled if each of its input places holds at least one token.

- (2) An enabled transition may or may not fire, depending on whether the corresponding event occurs.

- (3) When an enabled transition fires, one token is removed from each of its input places and one token is added to each of its output places.

In a normal PN, a place accumulates tokens and fires its output transition once the number of tokens exceeds a threshold value.
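The firing rule can be made concrete with a small NumPy sketch, assuming the input and output functions are stored as 0/1 matrices: `I` has shape $m \times n$ with `I[i, j] = 1` when there is an arc from $p_i$ to $t_j$, and `O` has shape $n \times m$ with `O[j, i] = 1` when there is an arc from $t_j$ to $p_i$. The helper names `enabled` and `fire` are hypothetical, and the sketch covers only the ordinary rule with unit arc weights, not the threshold-based variant mentioned above.

```python
import numpy as np


def enabled(marking, I):
    """Return a boolean mask of enabled transitions.

    marking: (m,) nonnegative token counts, one per place
    I: (m, n) input function, I[i, j] = 1 if there is an arc p_i -> t_j
    A transition is enabled when every one of its input places holds a token.
    """
    return np.all(marking[:, None] >= I, axis=0)


def fire(marking, I, O, j):
    """Fire transition j and return the new marking.

    O: (n, m) output function, O[j, i] = 1 if there is an arc t_j -> p_i
    One token is removed from each input place and one is added to each output place.
    """
    if not enabled(marking, I)[j]:
        raise ValueError(f"transition {j} is not enabled")
    return marking - I[:, j] + O[j, :]


if __name__ == "__main__":
    # Toy net: p0 -> t0 -> p1 -> t1 -> p2
    I = np.array([[1, 0], [0, 1], [0, 0]])
    O = np.array([[0, 1, 0], [0, 0, 1]])
    M = np.array([1, 0, 0])
    M = fire(M, I, O, 0)   # token moves from p0 to p1
    M = fire(M, I, O, 1)   # token moves from p1 to p2
    print(M)               # prints [0 0 1]
```

Storing $I$ and $O$ as matrices turns each firing into a vector update of the marking, which is the usual incidence-matrix view of a PN.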

3.2. Fuzzy Neural Network

The fuzzy inference rules of the novel fuzzy neural network (FNN) depicted in Figure 4 are as follows:

Rule $\lambda$: If $x_1$ is $\mu_{1jk}$, $x_2$ is $\mu_{2jk}$, …, and $x_{n_i}$ is $\mu_{n_i jk}$, then $y_\lambda = w_{jk}$,

for $i = 1, \ldots, n_i$, $j = 1, \ldots, n_j$, $k = 1, \ldots, n_k$, and $\lambda = 1, \ldots, n_R$. Here, $n_i$ denotes the input dimension, $n_j$ represents the number of layers assigned to each input dimension, $n_k$ is the number of blocks within a layer, and $n_R$ is the total number of fuzzy rules. The set $\mu_{ijk}$ specifies the fuzzy region associated with the $i$-th input, $j$-th layer, and $k$-th block, while $w_{jk}$ is the singleton weight in the consequent part. Unlike traditional fuzzy neural networks, the fuzzy CMAC divides the input space into layers and blocks. When each input dimension has a single layer and each block contains a single element (neuron), the fuzzy CMAC reduces to a typical fuzzy neural network. The fuzzy CMAC can therefore be regarded as a generalization of a fuzzy neural network, and it can offer better generalization, learning, and recall than a conventional fuzzy neural network.

The input, association memory, receptive field, weight memory, and output are the five primary parts of the fuzzy CMAC architecture. The signal flow through these parts is described below.

- (1) Input: For a given control space and input vector $\mathbf{x} = [x_1, x_2, \ldots, x_{n_i}]^T$, each input state variable can be quantized into distinct regions, referred to as elements or neurons. The resolution is determined by the number of elements.

- (2) Association memory: The association memory, also referred to as the membership function, combines several elements into a block, and every block serves as a receptive-field basis within the given domain. The receptive-field basis function employed in this investigation is a type-2 wavelet function $\mu_{ijk}$ of the input $x_i$, for $i = 1, \ldots, n_i$, with mean parameter $m_{ijk}$ and variance parameter $v_{ijk}$.

- (3) Receptive field: Each block contains two adjustable parameters, the mean $m_{ijk}$ and the variance $v_{ijk}$. The multidimensional receptive-field function is expressed as

$b_{jk} = \prod_{i=1}^{n_i} \mu_{ijk}(x_i)$

for $j = 1, \ldots, n_j$ and $k = 1, \ldots, n_k$, where $b_{jk}$ is associated with the $j$-th layer and $k$-th block; that is, the product is used as the “and” operation in the antecedent of the fuzzy rules above. In vector form, the multidimensional receptive-field functions can be expressed as

$\mathbf{b} = [b_{11}, \ldots, b_{1 n_k}, \ldots, b_{n_j 1}, \ldots, b_{n_j n_k}]^T$.

- (4) Weight memory: Each receptive-field position maps to a specific adjustable value in the weight memory space:

$\mathbf{w} = [w_{11}, \ldots, w_{1 n_k}, \ldots, w_{n_j 1}, \ldots, w_{n_j n_k}]^T$,

where $w_{jk}$ is the connecting weight of the output associated with the $j$-th layer and $k$-th block.

- (5) Output: The output of the fuzzy CMAC is the algebraic sum of the activated, weighted receptive fields:

$y = \mathbf{w}^T \mathbf{b} = \sum_{j=1}^{n_j} \sum_{k=1}^{n_k} w_{jk} b_{jk}$.

That is, Equation (17) represents the defuzzification of the fuzzy system in (13). The fuzzy CMAC can thus be regarded as a generalized framework. When restricted to a single layer with each block containing only one element, the structure degenerates into a conventional fuzzy rule-based system [21]. Under the same condition, the wavelet fuzzy CMAC simplifies to a wavelet neural network [17,18,19]. Furthermore, it simplifies to a fuzzy neural network [11] if the wavelet mother function is removed, and to a traditional CMAC [14,15,16] if both the fuzzy rules and the wavelet mother function are removed.
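The signal flow through the five parts can be summarized in a short forward pass. This is a sketch under stated assumptions: the type-2 wavelet basis is not reproduced here, so a Gaussian membership $\exp[-(x_i - m_{ijk})^2 / v_{ijk}^2]$ stands in for it, and the array layout (input $i$, layer $j$, block $k$) and the function name `fuzzy_cmac_output` are hypothetical.

```python
import numpy as np


def fuzzy_cmac_output(x, m, v, w):
    """Forward pass of a fuzzy CMAC (Gaussian basis assumed).

    x: (n_i,) input vector
    m, v: (n_i, n_j, n_k) means and widths for input i, layer j, block k
    w: (n_j, n_k) weight memory
    Returns the scalar output y and the receptive fields b of shape (n_j, n_k).
    """
    x = np.asarray(x, dtype=float)
    # Association memory: membership of x_i in block k of layer j
    mu = np.exp(-((x[:, None, None] - m) ** 2) / v ** 2)
    # Receptive field: the product over inputs acts as the fuzzy "and"
    b = np.prod(mu, axis=0)
    # Output: algebraic sum of weighted receptive fields, y = w^T b
    y = float(np.sum(w * b))
    return y, b


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_i, n_j, n_k = 2, 4, 2            # two inputs, four layers, two blocks per layer
    m = rng.uniform(-1.0, 1.0, (n_i, n_j, n_k))
    v = np.full((n_i, n_j, n_k), 0.5)
    w = rng.normal(size=(n_j, n_k))
    y, b = fuzzy_cmac_output([0.2, -0.3], m, v, w)
    print(y, b.shape)
```

With a single layer and single-element blocks, the same computation is an ordinary product-inference fuzzy system with singleton consequents, in line with the reduction described above.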

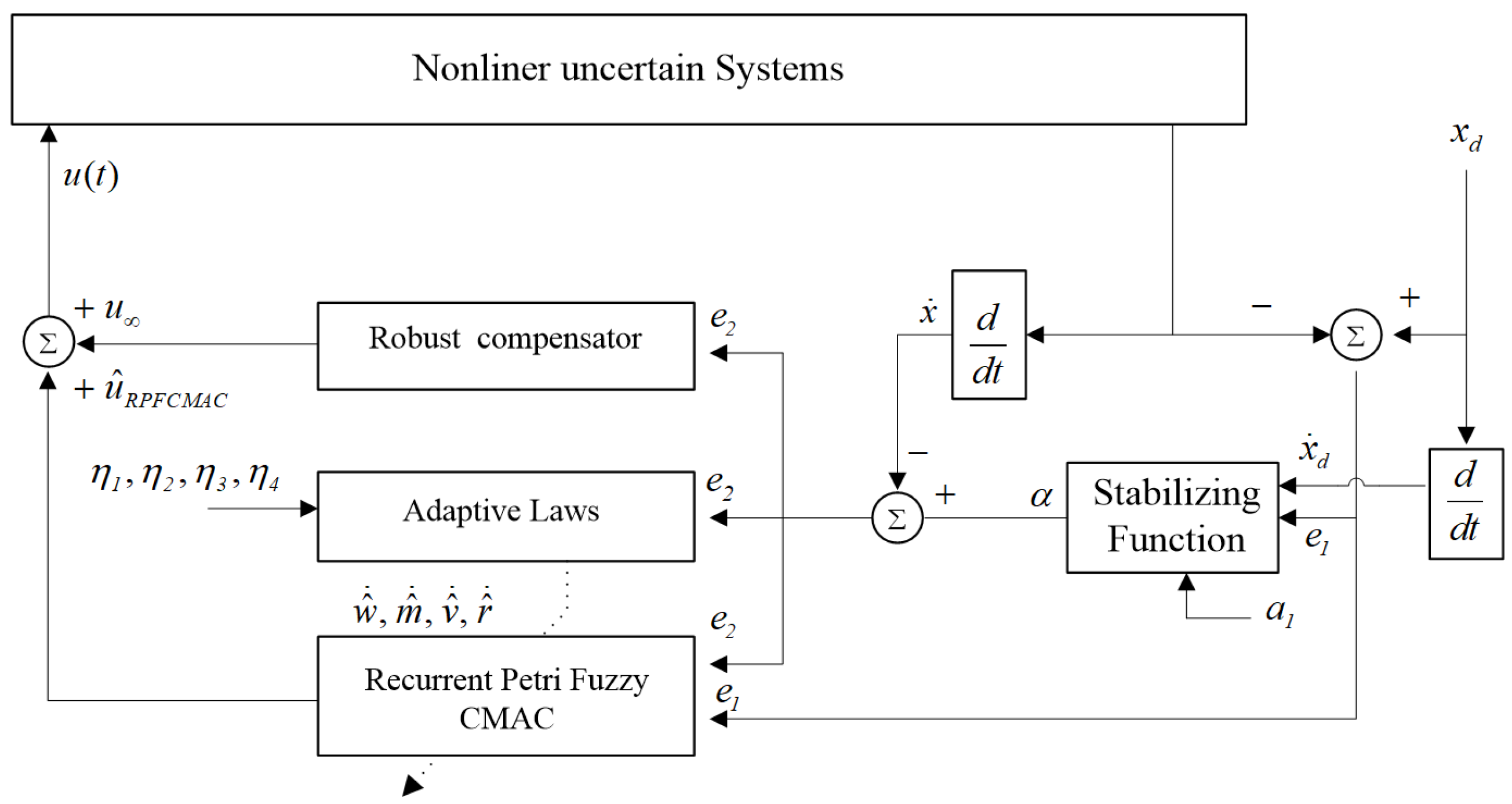

3.3. Architecture of a Recurrent Petri Fuzzy CMAC

The system can be asymptotically stabilized by the IBC in (7). However, the system functions are usually not known exactly in practice, so the IBC in (10) cannot be implemented directly. To overcome this constraint, a recurrent Petri fuzzy CMAC-based robust adaptive backstepping (RPFCRAB) control system, shown in Figure 5, is proposed. The structure of the proposed controller is

$u = u_{RPFC} + u_{RC}$,

where $u_{RPFC}$ denotes the recurrent Petri fuzzy CMAC (RPFC) and $u_{RC}$ represents the $H_\infty$ robust compensator.

A novel RPFC is introduced, formulated through the following fuzzy inference rule:

Rule $\lambda$: If $x_1$ is $\mu_{1jk}$, $x_2$ is $\mu_{2jk}$, …, and $x_{n_i}$ is $\mu_{n_i jk}$, then $y_\lambda = w_{jk}$,

for $i = 1, \ldots, n_i$, $j = 1, \ldots, n_j$, $k = 1, \ldots, n_k$, and $\lambda = 1, \ldots, n_R$, where $n_i$ is the number of input dimensions, $n_j$ is the number of layers associated with each input dimension, $n_k$ is the number of blocks in each layer, and $n_R$ is the total number of fuzzy rules. The fuzzy region associated with the $i$-th input, $j$-th layer, and $k$-th block is defined by the set $\mu_{ijk}$, and the corresponding weight is $w_{jk}$. The following figure depicts a schematic of a fuzzy CMAC with two inputs ($n_i = 2$), four layers ($n_j = 4$), and two blocks per layer ($n_k = 2$).

The input space, association memory, Petri memory, receptive field, weight memory, and output space are the six primary parts of the RPFC. The signal transmission through these spaces is summarized below.

Input Space: If $n_i$ is the number of input variables, each input is discretized into regions, referred to as elements or neurons, based on the control domain. The resolution is defined by the number of such elements.

Association Memory (Membership Function): Several elements are combined to form a block, and every block serves as a receptive field. The receptive-field basis function used in this work is a Gaussian function:

$\mu_{ijk} = \exp\left[-\dfrac{(\tilde{x}_{ijk} - m_{ijk})^2}{v_{ijk}^2}\right]$

for $k = 1, \ldots, n_k$, where $\mu_{ijk}$ represents the $k$-th block of the $i$-th input $\tilde{x}_{ijk}$, with mean $m_{ijk}$ and variance $v_{ijk}$. Furthermore, the input of this block is

$\tilde{x}_{ijk}(t) = x_i(t) + r_{ijk}\,\mu_{ijk}(t - T)$,

where $r_{ijk}$ is the recurrent gain and $\mu_{ijk}(t - T)$ denotes the value of $\mu_{ijk}$ delayed by $T$. Because each block feeds back its own delayed membership value, the network retains historical information and realizes a dynamic mapping; this is the main distinction between the traditional CMAC and the proposed ARPFCMAC. Figure 5 shows the schematic diagram of a two-dimensional RPFCMAC, in which each of the inputs $x_1$ and $x_2$ is divided into two blocks, each containing a fixed number of elements. Shifting each variable by one element produces alternative (shifted) blocks, for example two shifted blocks for $x_1$ and two for $x_2$. Three parameters can be adjusted for each block in this space: $m_{ijk}$, $v_{ijk}$, and $r_{ijk}$ [32,33].
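The shifted-layer construction can be illustrated by computing which blocks a quantized input activates in each layer. The sketch assumes that layer $j$ is shifted by $j$ elements and that every block spans `n_b` elements; `quantize`, `active_blocks`, and `n_b` are hypothetical names, since the block-size symbol is not given here. Each layer contributes exactly one active block, so the number of activated cells equals the number of layers regardless of the quantization resolution, which is what keeps a CMAC lookup inexpensive.

```python
def quantize(x, x_min, x_max, n_elements):
    """Map a continuous input to an element index in [0, n_elements - 1]."""
    frac = (x - x_min) / (x_max - x_min)
    return max(0, min(int(frac * n_elements), n_elements - 1))


def active_blocks(elements, n_layers, n_b):
    """Return one (layer, block-tuple) pair per layer for quantized inputs.

    Hypothetical indexing: layer j is shifted by j elements and every block
    spans n_b elements, so layer j maps element e_i to block (e_i + j) // n_b.
    """
    return [(j, tuple((e + j) // n_b for e in elements)) for j in range(n_layers)]


if __name__ == "__main__":
    # Two inputs quantized into 16 elements each, four shifted layers
    e = (quantize(-0.2, -1.0, 1.0, 16), quantize(0.4, -1.0, 1.0, 16))
    for layer, blocks in active_blocks(e, n_layers=4, n_b=4):
        print(layer, blocks)
```

Neighbouring inputs share most of their active blocks across layers, which is the source of the CMAC's local generalization.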

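Petri Memory Space: The Petri memory space follows a learning rule to select suitable fired nodes:

$t_{ijk} = \begin{cases} 1, & \mu_{ijk} \ge d_{th} \\ 0, & \mu_{ijk} < d_{th} \end{cases}$

where $t_{ijk}$ denotes the transition and $d_{th}$ is the predetermined firing threshold.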

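Receptive Field: In this study, each receptive field corresponds to one fuzzy rule, so the number of receptive fields equals $n_R$. Only fired nodes contribute to a receptive field:

$b_{jk} = \prod_{i=1}^{n_i} t_{ijk}\,\mu_{ijk}$

for $j = 1, \ldots, n_j$ and $k = 1, \ldots, n_k$. The multidimensional receptive-field functions can be written in vector form as

$\mathbf{b} = [b_{11}, \ldots, b_{1 n_k}, \ldots, b_{n_j 1}, \ldots, b_{n_j n_k}]^T$.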

Weight Memory Space: Each location of $\mathbf{b}$ maps to a specific adjustable value in the weight memory space:

$\mathbf{w} = [w_{11}, \ldots, w_{1 n_k}, \ldots, w_{n_j 1}, \ldots, w_{n_j n_k}]^T$,

where $w_{jk}$ is the output's connecting weight for the $jk$-th receptive field.

Output Space: The output of the RPFC is the algebraic sum of the activated, weighted receptive fields:

$u_{RPFC} = \mathbf{w}^T\mathbf{b} = \sum_{j=1}^{n_j}\sum_{k=1}^{n_k} w_{jk} b_{jk}$.
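Putting the spaces together, one time step of the RPFC can be sketched as follows. The functional forms are assumptions consistent with the descriptions above: a recurrent block input $x_i(t) + r_{ijk}\mu_{ijk}(t-T)$ with a one-sample delay, a Gaussian membership, a Petri transition that fires when $\mu_{ijk} \ge d_{th}$, a product over the fired memberships, and a weighted-sum output. The class name `RecurrentPetriFuzzyCMAC` is hypothetical.

```python
import numpy as np


class RecurrentPetriFuzzyCMAC:
    """Forward pass of a recurrent Petri fuzzy CMAC (illustrative sketch).

    m, v, r: (n_i, n_j, n_k) means, widths, and recurrent gains
    w: (n_j, n_k) weights; d_th: Petri firing threshold
    """

    def __init__(self, m, v, r, w, d_th):
        self.m, self.v, self.r, self.w = m, v, r, w
        self.d_th = d_th
        self.mu_prev = np.zeros_like(m)   # mu(t - T), one-sample delay

    def step(self, x):
        x = np.asarray(x, dtype=float)
        # Recurrent block input: x_i(t) + r_ijk * mu_ijk(t - T)
        x_r = x[:, None, None] + self.r * self.mu_prev
        # Association memory: Gaussian membership of each block
        mu = np.exp(-((x_r - self.m) ** 2) / self.v ** 2)
        # Petri memory: a transition fires (t = 1) only if mu >= d_th
        t = (mu >= self.d_th).astype(float)
        # Receptive field: product over inputs of the fired memberships
        b = np.prod(t * mu, axis=0)
        self.mu_prev = mu
        # Output space: weighted sum of receptive fields
        return float(np.sum(self.w * b)), t, b


if __name__ == "__main__":
    shape = (2, 4, 2)
    rng = np.random.default_rng(1)
    net = RecurrentPetriFuzzyCMAC(rng.uniform(-1, 1, shape), np.full(shape, 0.6),
                                  np.full(shape, 0.1), rng.normal(size=(4, 2)), d_th=0.2)
    for x in ([0.1, -0.2], [0.15, -0.1], [0.2, 0.0]):
        y, t, b = net.step(x)
        print(round(y, 4), int(t.sum()), "fired nodes")
```

A receptive field containing any unfired node evaluates to zero, which is how the Petri layer prunes inactive rules in this sketch.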

3.4. Online Learning Algorithm

The RPFC $u_{RPFC}$ in Equation (25) is the main tracking controller and is intended to imitate the IBC. To ensure robust tracking performance, the robust compensator $u_{RC}$ is introduced to reduce the discrepancy between the IBC and the RPFC. The parameter adaptation rules for the RPFC are derived below. For convenience, the adjustable parameters of the RPFC are collected into the vectors $\mathbf{m}$, $\mathbf{v}$, and $\mathbf{r}$, together with the weight vector $\mathbf{w}$.

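Assume that there exists an optimal RPFC $u^*_{RPFC}$ that approximates the IBC $u_{IBC}$ such that

$u_{IBC} = u^*_{RPFC} + \varepsilon = \mathbf{w}^{*T}\mathbf{b}^* + \varepsilon$,

where $\varepsilon$ denotes the minimal reconstruction error, and $\mathbf{w}^*$, $\mathbf{m}^*$, $\mathbf{v}^*$, $\mathbf{b}^*$, and $\mathbf{r}^*$ are the optimal parameter matrix and vectors associated with $\mathbf{w}$, $\mathbf{m}$, $\mathbf{v}$, $\mathbf{b}$, and $\mathbf{r}$, respectively. Because the optimal RPFC $u^*_{RPFC}$ is generally unattainable in practice, an online adaptive estimate $\hat{u}_{RPFC} = \hat{\mathbf{w}}^T\hat{\mathbf{b}}$ is introduced to approximate it. Accordingly, from Equation (25), the control law can be reformulated as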

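Subtracting (33) from (32) yields the estimation error $\tilde{u}$, where $\tilde{\mathbf{w}} = \mathbf{w}^* - \hat{\mathbf{w}}$ and $\tilde{\mathbf{b}} = \mathbf{b}^* - \hat{\mathbf{b}}$. Because linearization can transform the multidimensional receptive-field basis functions into a partially linear form, $\tilde{\mathbf{b}}$ can be expanded in a Taylor series:

$\tilde{\mathbf{b}} = \mathbf{b}_m \tilde{\mathbf{m}} + \mathbf{b}_v \tilde{\mathbf{v}} + \mathbf{b}_r \tilde{\mathbf{r}} + \mathbf{h}$,

where $\tilde{\mathbf{m}} = \mathbf{m}^* - \hat{\mathbf{m}}$, $\tilde{\mathbf{v}} = \mathbf{v}^* - \hat{\mathbf{v}}$, $\tilde{\mathbf{r}} = \mathbf{r}^* - \hat{\mathbf{r}}$, and $\mathbf{h}$ is a vector of higher-order terms. Here, $\mathbf{b}_m$, $\mathbf{b}_v$, and $\mathbf{b}_r$ denote the partial derivatives of $\mathbf{b}$ with respect to $\mathbf{m}$, $\mathbf{v}$, and $\mathbf{r}$, respectively, evaluated at the current estimates. Rewriting (35) yields (39), and substituting (35) and (39) into (34) expresses the estimation error in terms of the parameter estimation errors and a lumped approximation error. Then, using (9), (10), and (38), Equation (7) can be expressed as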

Although the ARPFC is used to approximate the IBC, the approximation error prevents a direct guarantee of system stability. To address this issue, the robust compensator $u_{RC}$ is introduced to counteract the approximation uncertainty and ensure robust stability of the closed-loop system. In the presence of the approximation error, a prescribed $H_\infty$ tracking performance, as in [28], is considered, where $\eta_w$, $\eta_m$, $\eta_v$, and $\eta_r$ are positive learning rates and $\delta$ denotes the prescribed attenuation level associated with the approximation error. Assuming zero initial conditions, the $H_\infty$ tracking criterion in (42) can be expressed as an inequality between the output error and the approximation error. This inequality indicates that $\delta$ characterizes the attenuation ratio between the uncertainty and the output error. In the limiting case $\delta \to \infty$, the condition corresponds to pure tracking control without any attenuation of the approximation errors [28]. Consequently, the following theorem can be established.

Theorem 1. Consider the magnetic levitation system described by (2). Suppose that the ARPFC control scheme is specified by (33), its parameters are updated through the adaptation laws (44)–(47), and the robust compensator is constructed as in (48). Then, the closed-loop dynamics satisfy the robust tracking performance criterion (42) for the chosen attenuation level δ. Proof. The Lyapunov function candidate is given by

Taking the time derivative of the Lyapunov function and using (41) yields

where several transposed terms are interchanged because they are scalars. Then, (50) can be rewritten as

From (44) and (47), and using (48), Equation (49) can be rewritten as

Assuming that the approximation error belongs to $L_2[0, T]$ for all $T \in [0, \infty)$ and integrating the above inequality from $t = 0$ to $t = T$ yields

Because $V(T) \ge 0$, the above inequality implies

Using (49), this inequality is shown to be equivalent to (42); thus, the proof is completed. □

Accordingly, the detailed design procedure can be summarized by the block diagram in Figure 5. First, the error signals are obtained from (4)–(6). Next, the adaptive parameter update laws derived in (44)–(47) are applied, and the recurrent Petri fuzzy CMAC is constructed according to (35). To ensure convergence of the tracking error, the approximation error term must belong to $L_2[0, \infty)$. Moreover, the learning rates $\eta_w$, $\eta_m$, $\eta_v$, and $\eta_r$ in (44)–(47) should be selected empirically through trial and adjustment.

The purpose of the online learning mechanism in the proposed CMAC structure is not to perform full-scale reinforcement learning or dynamic programming, but to carry out localized incremental adaptation at runtime. The update rule in (34) requires only the current tracking error and receptive-field activation information, resulting in constant-time updates with negligible computational burden. This design allows the controller to refine its performance under varying disturbances or modeling uncertainties without the game-theoretic policy iteration required by recent safe reinforcement learning approaches [41,42]. Consequently, the proposed online learning scheme improves robustness while maintaining real-time applicability.
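The locality argument above can be illustrated with a deliberately simple update that touches only the activated receptive fields. The rule $w_{jk} \leftarrow w_{jk} + \eta\, e\, b_{jk}\, \Delta t$ used here is a hypothetical LMS-type stand-in, not the adaptation laws (44)–(47); the function name `adapt_weights` and the list of fired cells are assumptions. Its cost grows with the number of fired cells, not with the size of the weight table.

```python
import numpy as np


def adapt_weights(w, b, cells, e, eta, dt):
    """In-place localized update w_jk += eta * e * b_jk * dt on fired cells only.

    Hypothetical LMS-type rule for illustration; not the laws (44)-(47).
    w, b: (n_j, n_k) float arrays; cells: iterable of fired (j, k) indices
    """
    for j, k in cells:                   # cells that did not fire keep their weights
        w[j, k] += eta * e * b[j, k] * dt


if __name__ == "__main__":
    w = np.zeros((4, 2))
    b = np.full((4, 2), 0.5)
    cells = [(j, k) for j in range(4) for k in range(2)]
    for _ in range(50):
        e = 1.0 - float(np.sum(w * b))   # error between a target of 1.0 and w^T b
        adapt_weights(w, b, cells, e, eta=0.2, dt=1.0)
    print(float(np.sum(w * b)))          # approaches 1.0
```

In the usage example, each step changes the output by $\eta\, e \sum b_{jk}^2 \Delta t = 0.4e$, so the error shrinks by a factor of 0.6 per step.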

3.5. BCMO Algorithm

The balancing composite motion optimization (BCMO) algorithm balances global and local search to find optimal solutions efficiently. Let $\mathbf{x}$ denote the vector of the four controller parameters to be optimized.

During the first generation, the population is initialized uniformly over the solution space:

$\mathbf{x}_i = \mathbf{x}_L + \mathrm{rand}(1, d) \circ (\mathbf{x}_U - \mathbf{x}_L)$,

where $\mathrm{rand}(1, d)$ is a $d$-dimensional vector drawn from the uniform distribution on $[0, 1]$, $\circ$ denotes element-wise multiplication, and $\mathbf{x}_L$ and $\mathbf{x}_U$ are the lower and upper bounds of the $i$-th individual, respectively.

In each generation, the motion vector of the $i$-th individual with respect to the overall optimal point $O$, denoted $\mathbf{v}_{i/O}$, is determined as

$\mathbf{v}_{i/O} = \mathbf{v}_{i/j} + \mathbf{v}_{j/O}$,

where $\mathbf{v}_{i/O}$ and $\mathbf{v}_{j/O}$ are the movement vectors of the $i$-th and $j$-th individuals with respect to $O$, respectively, and $\mathbf{v}_{i/j}$ is the relative movement vector of the $i$-th individual with respect to the $j$-th individual. The alternative overall optimal point can be determined using the following formula:

The best individual in the current generation is determined using the population data from the previous generation. In this formula, $\mathbf{x}_L$ and $\mathbf{x}_U$ denote the lower and upper bounds of the search space, respectively, and the formula uses the relative pseudo-movements of the $i$-th individual with respect to the $j$-th individual and of the $j$-th individual with respect to the previous best individual. Here, $j$ is chosen at random from $[2, NP]$, where $NP$ is the number of individuals in the population. Finally, the position of the $i$-th individual in the next generation is determined by the following formula:

The objective function is defined in terms of the tracking error $e$ so as to minimize the trajectory tracking error. The lower and upper bounds are set to 0 and 500, respectively. The maximum number of generations is 100000, and the number of individuals is 100000. The CPU time is 3.32 s, and the optimization dimension is 4.
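The composite-motion idea can be sketched as a small population search. This is explicitly a simplified illustration, not the reference BCMO algorithm: it reuses the uniform initialization above, builds each candidate from $\mathbf{v}_{i/O} = \mathbf{v}_{i/j} + \mathbf{v}_{j/O}$ with random scaling, clips to the bounds, and keeps a candidate only if it lowers the cost. The function name, the greedy acceptance, and the quadratic test objective (standing in for the tracking-error objective) are all assumptions; the bounds 0 and 500 and the dimension 4 follow the settings above.

```python
import numpy as np


def composite_motion_search(f, lower, upper, n_pop=30, n_gen=200, seed=0):
    """Minimize f over a box with a simplified composite-motion search.

    Illustrative only: inspired by the BCMO description, not the reference BCMO.
    Returns (best_x, best_cost).
    """
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    d = lower.size

    # Uniform initialization: x_i = x_L + rand(1, d) * (x_U - x_L)
    pop = lower + rng.random((n_pop, d)) * (upper - lower)
    cost = np.array([f(x) for x in pop])

    for _ in range(n_gen):
        best = pop[np.argmin(cost)].copy()    # current best point, playing the role of O
        for i in range(n_pop):
            j = int(rng.integers(n_pop - 1))
            j = j + 1 if j >= i else j        # random partner j != i
            # Motion of i relative to j: toward j if j is better, away otherwise
            sign = 1.0 if cost[j] < cost[i] else -1.0
            v_ij = sign * rng.random(d) * (pop[j] - pop[i])
            # Motion of j relative to the best point O
            v_jO = rng.random(d) * (best - pop[j])
            # Composite motion v_iO = v_ij + v_jO, clipped to the bounds
            cand = np.clip(pop[i] + v_ij + v_jO, lower, upper)
            c = f(cand)
            if c < cost[i]:                   # greedy acceptance
                pop[i], cost[i] = cand, c

    k = int(np.argmin(cost))
    return pop[k].copy(), float(cost[k])


if __name__ == "__main__":
    target = np.array([120.0, 80.0, 300.0, 45.0])        # hypothetical optimum
    sphere = lambda x: float(np.sum((x - target) ** 2))  # stand-in objective
    x_best, c_best = composite_motion_search(sphere, [0.0] * 4, [500.0] * 4)
    print(x_best, c_best)
```

Greedy acceptance makes the best cost non-increasing across generations; the population size and generation count here are deliberately small for illustration.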

4. Numerical Simulation and Experimental Results

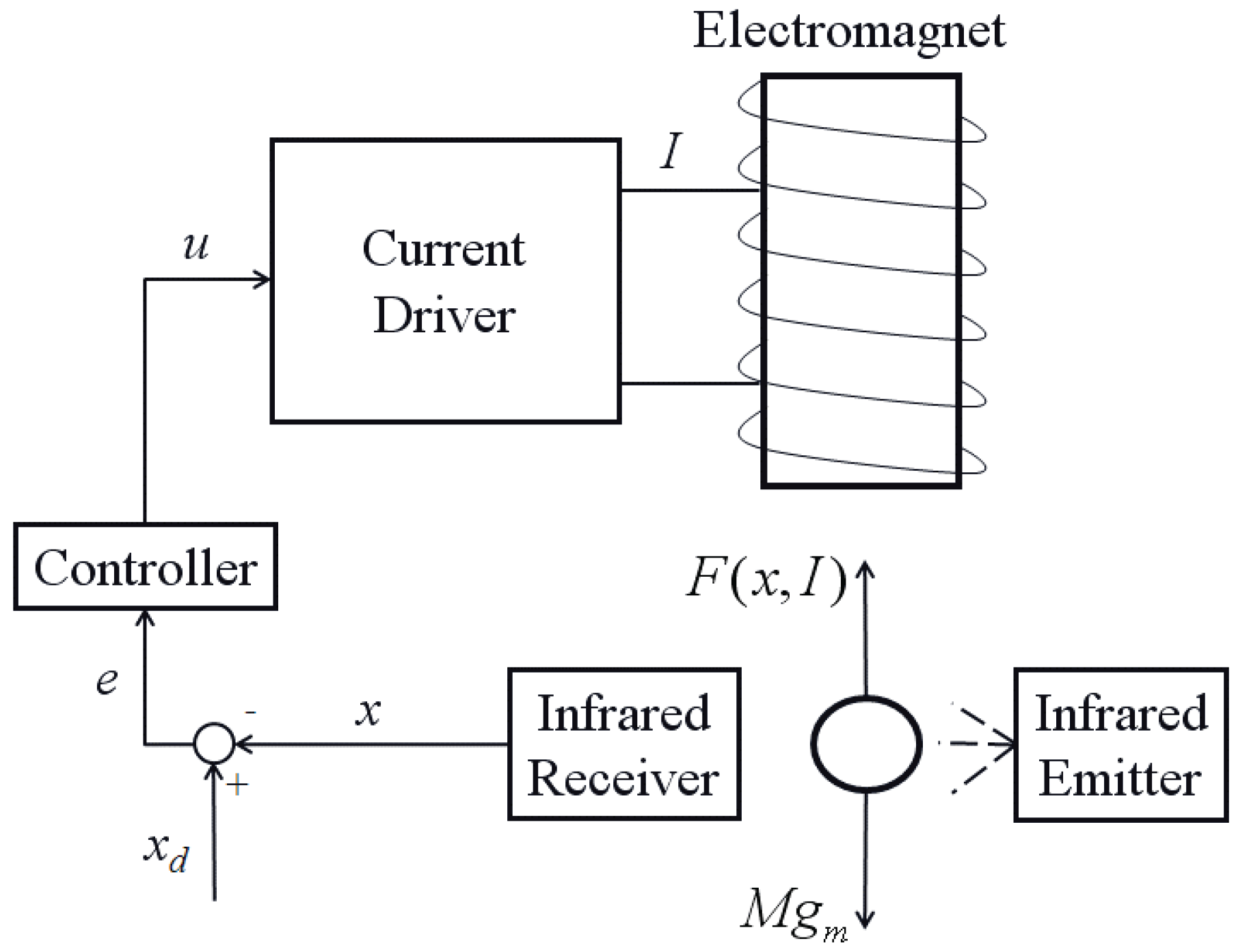

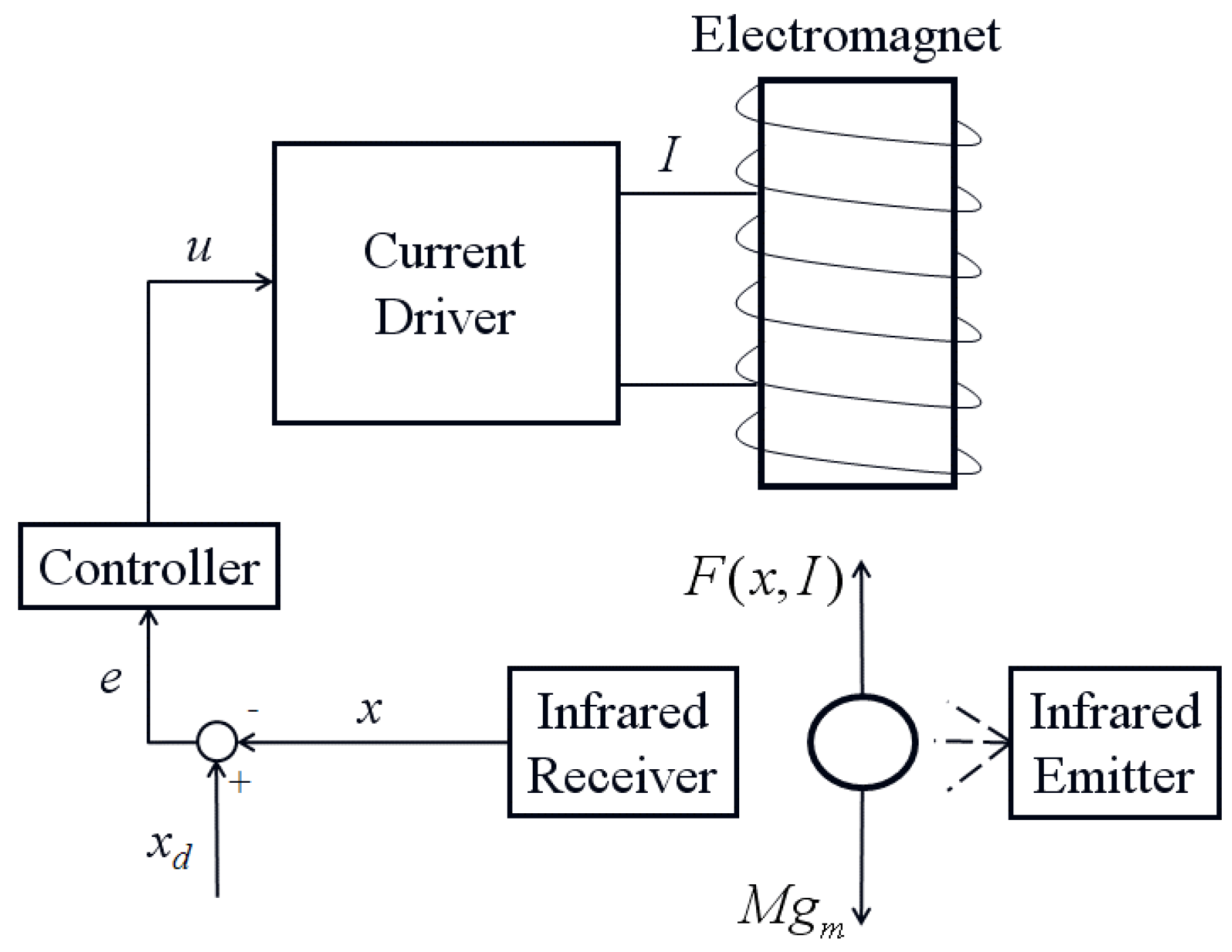

Example 1. Figure 6 shows the single-axis magnetic levitation mechanism. The current driver converts the voltage signal that serves as the control input into current. This current excites the electromagnet, which creates the required magnetic field around it. An infrared sensor measures the position of the metallic sphere as it moves along the vertical axis of the electromagnet. The specifications of the MLS are as follows.

The CMAC controller uses constant learning rates, and the DPFNN controller uses three threshold values. To achieve sufficient control performance and meet stability requirements across a variety of operating conditions, the initial values were chosen by running a genetic algorithm on the offline model. These parameters are then typically refined through trial and error. Smaller learning rates usually make parameter convergence easier but reduce learning speed. Conversely, larger learning rates speed up learning, but the proposed control scheme can become unstable if the parameters change too rapidly. The metallic sphere is initially positioned at −1 mm.

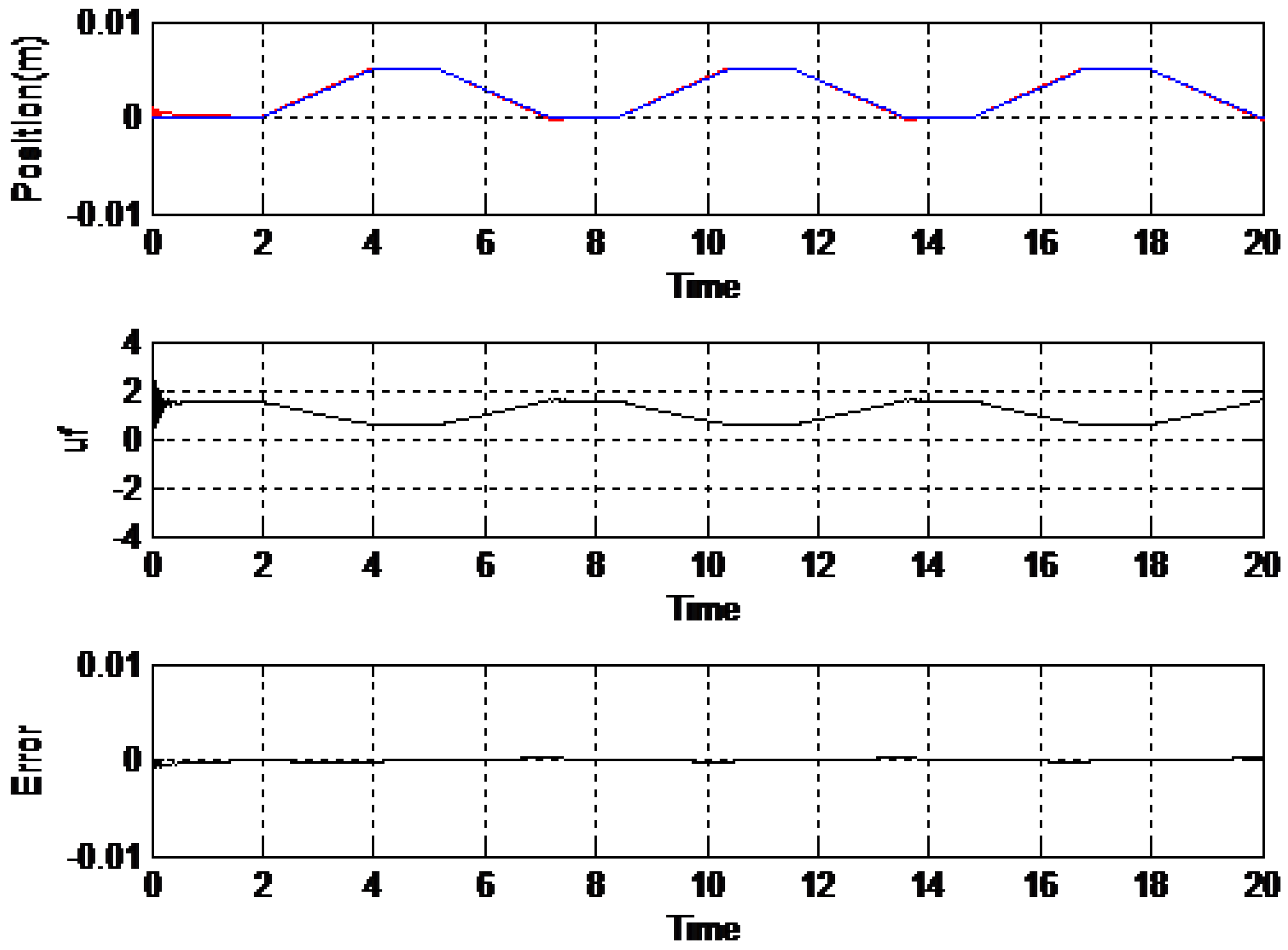

Figure 7 displays the results of the CMAC controller, and Figure 8 displays the results of the DPFNN controller.

As shown in Figure 7, the CMAC controller struggles with position tracking, particularly at the peaks and valleys of the reference trajectory, which leads to noticeable tracking errors. These errors are evident where the red dashed line deviates from the blue reference line, indicating that the CMAC controller follows the desired trajectory less precisely. Additionally, the control signal of the CMAC controller exhibits significant oscillations and fluctuations, reflecting a higher control effort and potential instability. This suggests that the control process is inefficient: the controller works harder to maintain performance yet still produces substantial errors.

In contrast, the DPFNN controller shown in Figure 8 demonstrates a marked improvement in both tracking accuracy and error reduction. The red dashed line closely follows the blue reference line, and the smaller deviations indicate lower tracking errors and a more precise response to the desired trajectory. The control signal of the DPFNN controller is also noticeably more stable and exhibits fewer oscillations than that of the CMAC controller. This reduced fluctuation suggests that the DPFNN controller requires less control effort, which improves overall efficiency and stability.

In terms of error, the DPFNN controller significantly outperforms the CMAC controller by maintaining lower error margins throughout the trajectory. Its improved accuracy and reduced control effort make the DPFNN controller a more robust and effective solution, particularly in scenarios requiring precise and stable control. Overall, the DPFNN controller’s superior performance in minimizing errors and enhancing control efficiency underscores its advantage over the traditional CMAC controller.

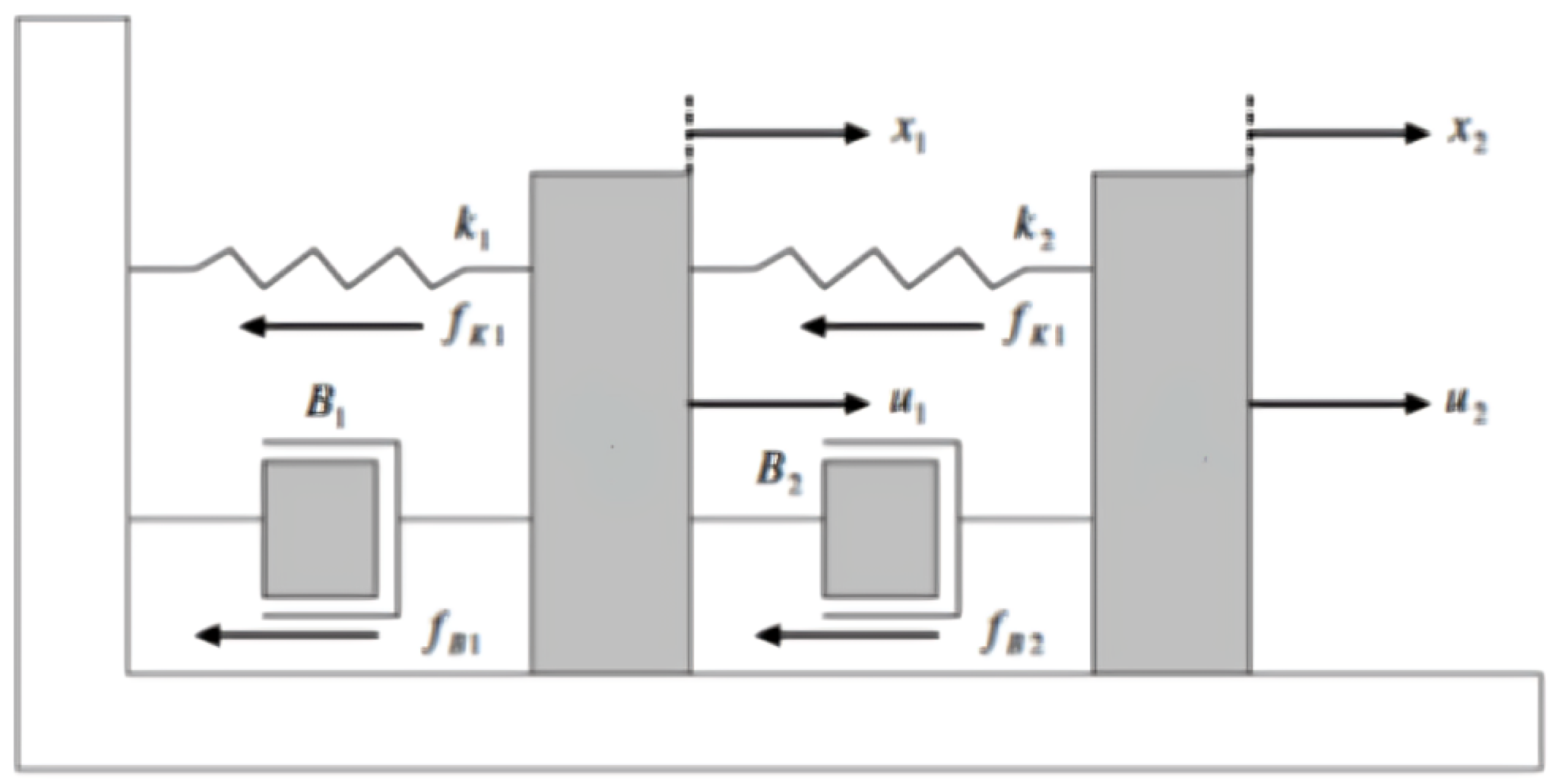

Example 2. A mass–spring–damper mechanical system.

Figure 9 depicts a mass–spring–damper mechanical system. The dynamic equations of this mechanical system are written with $m_1$ and $m_2$ as the masses of the system and $x_1$, $x_2$ and $\dot{x}_1$, $\dot{x}_2$ as the positions and velocities of the masses. The model includes two spring forces and two frictional forces, and numerical values are assigned to all system parameters. Thus, the dynamic equation of the mass–spring–damper mechanical system can be reformulated as follows:

where the control input and the external disturbance enter the dynamics explicitly. The outputs of the chosen reference model provide the desired trajectories. The initial conditions of the mechanical system and the reference model, as well as the control parameters, are specified numerically, whereas the remaining parameters are initialized randomly. Two reference inputs are used.

The FNN control method is applied to the mass–spring–damper system shown in Figure 9, and its results are depicted in Figure 10. The system consists of two masses connected by springs and dampers, where $x_1$ and $x_2$ represent the positions of the first and second masses, respectively. The FNN controller regulates $x_1$ and $x_2$ so that they follow their desired trajectories. Under FNN control, the response shows some oscillation and a phase lag between the actual positions and the desired trajectories. As illustrated in Figure 10A,B, the tracking errors of $x_1$ and $x_2$ exhibit initial offsets and gradually converge but settle around a slightly negative value (approximately −0.5). This indicates that although the FNN method reduces the tracking error, it cannot achieve perfect tracking, particularly in the steady state. The persistent steady-state error suggests that this method has difficulty handling the system dynamics and external disturbances.

The proposed control method, illustrated in Figure 10, significantly improves the control of the mass–spring–damper system. Positions $x_1$ and $x_2$ closely follow their desired trajectories, with minimal oscillation and rapid convergence. The tracking errors of $x_1$ and $x_2$, shown in Figure 10E,F, are the smallest among the three methods, converging close to zero and settling around −0.1. This performance highlights the accuracy and stability of the proposed controller, which effectively reduces the effects of external disturbances and inherent system dynamics. The rapid error convergence and sustained low error levels show that the proposed method delivers precise and reliable control, outperforming both the FNN and CMAC methods. These results suggest that the proposed method is well suited to applications requiring high control accuracy and robustness in dynamic environments.

Table 1 compares the RMSE of the NN, CMAC, and proposed methods for $x_1$ and $x_2$. The proposed method has the lowest RMSE for both $x_1$ and $x_2$, indicating that it provides the best error-minimization performance among the three methods.
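For reference, the root-mean-square error of a sampled error sequence $e_1, \ldots, e_N$ is usually computed as $\mathrm{RMSE} = \sqrt{\tfrac{1}{N}\sum_{k=1}^{N} e_k^2}$. The short sketch below assumes this standard definition; the exact sampling used for Table 1 is not specified here, and the function name `rmse` is hypothetical.

```python
import numpy as np


def rmse(error):
    """Root-mean-square value of a sampled tracking-error sequence."""
    e = np.asarray(error, dtype=float)
    return float(np.sqrt(np.mean(e ** 2)))


if __name__ == "__main__":
    print(rmse([1.0, -1.0, 1.0, -1.0]))   # prints 1.0
```

Because the errors are squared before averaging, RMSE penalizes large transient deviations more heavily than the mean absolute error does.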