Abstract

The optical flow problem and the image registration problem are treated as optimal control problems associated with Fokker–Planck equations with controller u in the drift term. The payoff is of the form , where is the observed final state and is the solution to the state control system. Here, we prove the existence of a solution and also obtain the Euler–Lagrange optimality conditions, which generate a gradient-type algorithm for the above optimal control problem. A conceptual algorithm for computing the approximating optimal control and the numerical implementation of this algorithm are discussed.

Keywords:

Fokker–Planck equation; gradient flow; semigroup; optical flow; optimal control; optimality conditions; difference method; stochastic equations; tensor metric

MSC:

60H15; 47H05; 47J05; 49Jxx; 49K20; 65M06

1. Introduction

The optical flow problem (see [1,2,3,4,5]) consists in finding the velocity field of an object motion on an interval if one knows the final image of the object. Thus, the problem that is treated here leads to an effective motion estimation that can be further applied to the video object detection and tracking tasks. This research is part of a larger computer vision project in that field [6]. In a similar way, the image registration problem in medical analysis is formulated ([7,8,9,10]). Namely, given two images , , where , , the registration problem is to find a mapping such that and , . This mapping generates a continuous flow of images , , which steers the image in in time T. In the literature on optical flow, as well as of image registration, the mapping m is taken as the brightness of the pattern at point and at time t. The pattern dynamics is given by the ordinary differential equation

where is a smooth vector-field and is a given diffeomorphism. Assuming that the brightness is constant on the trajectory , one obtains for m the hyperbolic first-order equation

Then, the image registration problem reduces to the following exact controllability problem:

Given the functions , determine the velocity field such that

Optical flow estimation and image registration are treated here as optimal control problems associated with some Fokker–Planck Equations ([11,12]). We shall use a different model by replacing the first-order hyperbolic Equation (2) by the Fokker–Planck equation:

where and is a probability density [11]. Then, the problem we address here is formulated as follows:

- (P)

- Given , find the velocity field such that .

In order to write the above problem (3) into this form, one should extend the diffeomorphism to a diffeomorphism . Such an extension is always possible for smooth domains . An alternative is to replace (4) by a similar problem on with a flux boundary condition (see Remark 1).

It is well known that Equation (4) is equivalent to the stochastic differential equation

is a probability space with a d-Brownian motion W [11]. More precisely, if X is a solution to (5) and the probability density , then , where is the probability density of the process . Conversely, if X is a solution to (5), then is a distributional solution to (4) (see [11], Section 5 for details). Then, in this formulation, the stochastic differential Equation (5) is used, while Equation (2) is replaced by the Fokker–Planck Equation (4). Here, we shall not solve the exact controllability problem (P) but we shall approach it through an optimal control problem ([2,4,7,13,14,15,16]). Such an approach has already been used in optical flow and image registration problems ([10,17]); the main difference is that here the controller u is taken in a larger class of inputs u (see problem (6)).
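The correspondence between the stochastic differential equation and the Fokker–Planck equation can be illustrated numerically. The following is a minimal sketch, not the authors' code: it assumes the stochastic equation has the form dX = u(t, X) dt + √2 dW, which is the normalization matching a Fokker–Planck equation of the type y_t − Δy + div(u y) = 0. This normalization, the one-dimensional setting, the constant drift, and the function name `euler_maruyama` are illustrative assumptions.

```python
import math
import random

def euler_maruyama(x0, drift, rng, T=1.0, n_steps=100):
    """Simulate one path of dX = drift(t, X) dt + sqrt(2) dW (assumed form)
    on [0, T] in one space dimension and return X_T."""
    dt = T / n_steps
    x = x0
    for k in range(n_steps):
        dw = rng.gauss(0.0, math.sqrt(dt))  # Brownian increment ~ N(0, dt)
        x += drift(k * dt, x) * dt + math.sqrt(2.0) * dw
    return x

rng = random.Random(0)
# Constant drift u = 1 started from x0 = 0: X_T is Gaussian with mean T and
# variance 2T, i.e. the density solving y_t - y_xx + (u y)_x = 0 from a point mass.
samples = [euler_maruyama(0.0, lambda t, x: 1.0, rng) for _ in range(4000)]
mean = sum(samples) / len(samples)
var = sum((s - mean) ** 2 for s in samples) / len(samples)
```

For T = 1, the empirical mean and variance of the samples should be close to 1 and 2, respectively, up to Monte Carlo error.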

Notation.

If is an open set of , for , we denote by the space of all the Lebesgue p-integrable functions on , with the standard norm denoted . By , we denote the Sobolev space with the standard norm . Denote by the dual space of and by the duality pairing on , which coincide with the scalar product of on . For , we denote by the space of all -valued continuous functions By , we denote the space of functions with and similarly for the space .

2. The Optimal Control Problem

We shall approximate the controllability problem (P) by the optimal control problem [2]:

subject to and

Here, is a given positive constant, and . By definition, a solution y to (7) is a function such that

In [18], a similar optimal control problem with quadratic pay-off and divergence-free controllers u was used to solve the optical flow problem. The problem (6) is considered here for a larger class of controllers, namely , which is an advantage for numerical simulation. This choice of U was dictated by the need to derive some sharp estimates for the state y of control system (12), which are necessary to obtain existence in problem (6) and (7).

We have

Lemma 1.

Proof.

Assume first that . Then, the existence and uniqueness of a solution is a standard result in the literature. In fact, it follows from existence theory for the Cauchy problem [11]

where is the operator defined by

and, as easily seen,

Moreover, if y is the solution to (13) (equivalently, (7)), we have

Conversely, we have by the Schwarz inequality

Next, by the interpolation inequality combined with the Sobolev embedding theorem in , we have

This yields via the Hölder inequality

Substituting in (14), we get

and so, by Gronwall’s lemma,

Now, if , we approximate it by . Then, for the corresponding solution to (6), we have estimate (15) and so is bounded in , is bounded in and so, by the Aubin–Lions compactness theorem [18], is compact in and weakly compact in [19]. Hence, on a subsequence, we have

Moreover, we also have

and since is bounded in , we also have

Then, letting in Equation (6), where , we see that is a solution to (6) as desired. Now, if we multiply (6), where , by and integrate we get

and, if , then, as easily seen, and

Then, for , we get (12) and that if . □

Theorem 1.

Let and . Then, there is at least one solution to the optimal control problem (6). Moreover, if , then , .

Proof.

Remark 1.

If in problem (6) one replaces by a domain , the control system (7) should be replaced by

where is the outward normal to . Theorem 1 remains true by the same argument in this situation.

3. The Euler–Lagrange Optimality System

Proof.

4. The Gradient Algorithm

If we denote by , the payoff function

its Gâteaux differential

is given by (see (22))

and, therefore,

where p is the solution to the equation

In terms of , the optimality system (3.5) can be rewritten as

Taking into account (24) for a fixed , the explicit gradient algorithm for computing the optimal controller is

that is,

5. Numerical Approximation

In this section, we present some conceptual algorithms of the gradient type in order to compute the approximating optimal control of the following problem

subject to

On the basis of Theorem 2, we know that the optimal control in problem (29) is given by

where p satisfies the adjoint state equation

We have developed two numerical algorithms to approximate the problem (29)–(32): the finite difference method ([1,16,20]) and the 2D explicit difference method. We consider and assume that is a polygonal domain.

5.1. The Finite Difference Method

Given a positive value T and considering M as the number of equidistant nodes into which the time interval $[0,T]$ is divided, we set

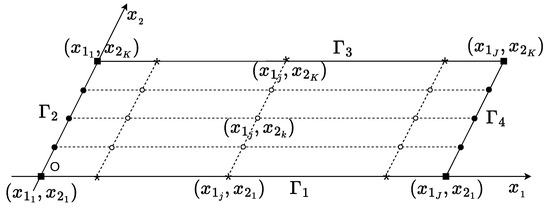

The problems (30) and (32) will be discretized on the 2D rectangular domain . Let us denote a generic point in ; we will build a grid on by considering equidistant discretization nodes for both axes and (see Figure 1). Thus, we discretize by

and by

The set (cartesian product)

provides the computational grid on .
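The grid construction described above can be sketched in a few lines. This is an illustrative sketch under assumptions: the rectangle is taken as [0, a] × [0, b], the same number N of nodes is used on both axes, and the names `build_grid`, `a`, `b`, and `N` are hypothetical, since the displayed formulas are not reproduced here.

```python
import numpy as np

def build_grid(T, M, a, b, N):
    """Equidistant time nodes on [0, T] and a tensor-product grid on the
    assumed rectangle [0, a] x [0, b] with N nodes per axis."""
    t = np.linspace(0.0, T, M)    # M equidistant time nodes
    x1 = np.linspace(0.0, a, N)   # nodes on the first axis
    x2 = np.linspace(0.0, b, N)   # nodes on the second axis
    dt = T / (M - 1)
    dx1 = a / (N - 1)
    dx2 = b / (N - 1)
    # indexing="ij" makes array index (i, j) correspond to the point (x1[i], x2[j])
    X1, X2 = np.meshgrid(x1, x2, indexing="ij")
    return t, X1, X2, dt, dx1, dx2

t, X1, X2, dt, dx1, dx2 = build_grid(T=1.0, M=11, a=2.0, b=1.0, N=5)
```

With `indexing="ij"`, a grid function stored as a 2D array `y[i, j]` matches the usual double-index notation for nodal values.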

Figure 1.

The boundary of the rectangle .

Let us denote by the approximate values in the points of the unknown function in (30), i.e.,

or, for later use

Corresponding to the initial condition (30)2, we have

To approximate the partial derivatives with respect to time, and , we employed a first-order scheme, that is:

, , .

5.2. 2D Explicit Difference Method

The partial differential equation in (30)1 can be written equivalently in the form

The Laplacian is approximated by a central finite difference formula

while, to approximate the partial derivatives of and with respect to and , we shall use a backward difference formula:

Finally, one obtains the following explicit numerical approximation scheme for (30)

, , .
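One explicit time step of a scheme of this type can be sketched as follows. The sketch assumes the state equation has the form y_t = Δy − ∂(u₁y)/∂x₁ − ∂(u₂y)/∂x₂, uses the central formula for the Laplacian and backward differences for the flux terms as described above, and imposes homogeneous Dirichlet values on the boundary. The function name `fp_explicit_step` and the array layout are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def fp_explicit_step(y, u1, u2, dt, dx1, dx2):
    """One explicit Euler step of y_t = Lap(y) - d(u1*y)/dx1 - d(u2*y)/dx2
    (assumed form), with zero Dirichlet boundary values."""
    f1 = u1 * y  # flux component along x1
    f2 = u2 * y  # flux component along x2
    c = y[1:-1, 1:-1]
    # central second differences for the Laplacian at interior nodes
    lap = ((y[2:, 1:-1] - 2.0 * c + y[:-2, 1:-1]) / dx1**2
           + (y[1:-1, 2:] - 2.0 * c + y[1:-1, :-2]) / dx2**2)
    # backward first differences for the flux derivatives
    d1 = (f1[1:-1, 1:-1] - f1[:-2, 1:-1]) / dx1
    d2 = (f2[1:-1, 1:-1] - f2[1:-1, :-2]) / dx2
    y_new = np.zeros_like(y)  # boundary nodes stay at zero
    y_new[1:-1, 1:-1] = c + dt * (lap - d1 - d2)
    return y_new

# Check on the unit square with zero drift: sin(pi x1) sin(pi x2) is an
# eigenfunction of the discrete Laplacian, so one step only rescales it.
N = 21
x = np.linspace(0.0, 1.0, N)
h = x[1] - x[0]
X1, X2 = np.meshgrid(x, x, indexing="ij")
y = np.sin(np.pi * X1) * np.sin(np.pi * X2)
zero = np.zeros_like(y)
dt = 0.2 * h**2
y_diff = fp_explicit_step(y, zero, zero, dt, h, h)
lam = 2.0 * (2.0 - 2.0 * np.cos(np.pi * h)) / h**2
y_drift = fp_explicit_step(y, np.ones_like(y), zero, dt, h, h)
```

The backward difference is the upwind choice only where the drift component is non-negative; for drifts of varying sign, the time step has to be small enough for the diffusion term to keep the step stable.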

We continue by treating the adjoint state in (32). Let us denote by the approximate values in the points of the unknown function , i.e.,

or, for later use

The partial differential equation in (32)1 can be written equivalently in the form

The Laplacian is approximated by a central finite difference formula, that is

while, to approximate the partial derivatives of with respect to and , we shall use a backward difference formula:

.

Thus, we get a first explicit numerical approximation scheme for (32)

Now we are in position to present a conceptual algorithm (Algorithm 1) of gradient type (see [13,14,15,16] for more details) to compute the approximating optimal control , stated by Theorem 2.

Algorithm 1: Algorithm OCP_FP_EDM (Optimal Control Problem: FokkerPlanck_EDM 2D)

- P0. Set ; Choose and compute (see (33)); Choose and compute ; Choose and compute ; Choose , , ; Choose (see (29)); Choose and compute , , (see (34));

- P1. Determine , , , (see (38));

- P2. Determine , , , (see (42));

- P3. Compute (see (4.4));

- P4. Compute solution of the minimization process: Set

- P5. If /* the stopping criterion */ then STOP else iter := iter + 1; Go to P1.

In the above, the variable iter represents the number of iterations after which the algorithm OCP_FP_EDM found the optimal value of the cost functional in (29).
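The control flow of steps P1 to P5 can be sketched generically. In the sketch below, the state solver, adjoint solver, gradient, and cost are passed in as callables, and the stopping test (a small change in the cost between two iterates) is an assumed criterion. All names (`gradient_loop`, `rho`, `eta`, `max_iter`) are hypothetical, and the toy quadratic problem only exercises the loop; it is not the Fokker–Planck problem itself.

```python
import numpy as np

def gradient_loop(u0, solve_state, solve_adjoint, gradient, cost,
                  rho=0.5, eta=1e-8, max_iter=500):
    """Fixed-step gradient iteration following the P1-P5 structure."""
    u = np.asarray(u0, dtype=float)
    y = solve_state(u)                 # P1: state for the initial control
    j_old = cost(u, y)
    for it in range(1, max_iter + 1):
        p = solve_adjoint(u, y)        # P2: adjoint state
        g = gradient(u, y, p)          # P3: gradient of the cost
        u = u - rho * g                # P4: fixed-step update of the control
        y = solve_state(u)             # P1 for the new iterate
        j_new = cost(u, y)
        if abs(j_old - j_new) <= eta:  # P5: assumed stopping criterion
            return u, j_new, it
        j_old = j_new
    return u, j_old, max_iter

# Toy problem: minimize 0.5 * |u - c|^2, where the "state" is u itself.
c = np.array([1.0, -2.0])
u_opt, j_opt, n_iter = gradient_loop(
    u0=np.zeros(2),
    solve_state=lambda u: u,
    solve_adjoint=lambda u, y: y - c,
    gradient=lambda u, y, p: p,
    cost=lambda u, y: 0.5 * float(np.sum((y - c) ** 2)),
)
```

Passing the solvers as callables keeps the loop independent of the discretization, so the same skeleton could wrap an explicit state and adjoint solver.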

Remark 2.

The stopping criterion in P5 could be

where η is a prescribed precision.

6. Numerical Experiments

In this section, we validate the explicit finite-difference implementation of the optimal-control algorithm OCP_FP_EDM described in the previous section. We detail the discretization and comment on the numerical results obtained for a simple benchmark based on two smooth Gaussian densities.

The dual purpose of this section is to (a) verify the correctness of our forward/adjoint implementation on a benchmark that admits a smooth solution, and (b) document the practical limitations that arise when the control is driven by an explicit time-stepping scheme.

6.1. Discrete Setting and Algorithmic Details

The computational domain is the unit square , discretized on a uniform grid with nodes per spatial direction. The spacings are, therefore,

The final time is and we start with time steps . At every outer optimal-control iteration, we recompute a CFL-adapted time step

where and similarly for . The number of sub-steps is , which is the classical CFL bound for a linear advection–diffusion equation. This value replaces the initial choice whenever it is smaller.
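A CFL-adapted time step of this kind can be sketched as follows. The bound used here, Δt ≤ 1 / (2/Δx² + 2/Δy² + max|u₁|/Δx + max|u₂|/Δy), is a common sufficient condition for explicit schemes with central diffusion and one-sided advection at unit diffusion coefficient. It is an assumption standing in for the displayed formula, and the safety factor and the name `cfl_time_step` are hypothetical.

```python
import math
import numpy as np

def cfl_time_step(u1, u2, dx, dy, T, dt0, safety=0.9):
    """Return (dt, n_sub): a time step not exceeding dt0 or the assumed
    CFL bound, chosen so that n_sub * dt equals the final time T."""
    u1_max = float(np.max(np.abs(u1)))
    u2_max = float(np.max(np.abs(u2)))
    # assumed bound for unit diffusion plus advection with speeds u1_max, u2_max
    bound = 1.0 / (2.0 / dx**2 + 2.0 / dy**2 + u1_max / dx + u2_max / dy)
    dt = min(dt0, safety * bound)      # keep the initial step if it is smaller
    n_sub = math.ceil(T / dt)          # number of sub-steps needed to reach T
    return T / n_sub, n_sub

zero = np.zeros((3, 3))
dt_a, n_a = cfl_time_step(zero, zero, 0.1, 0.1, T=1.0, dt0=1.0)
dt_b, n_b = cfl_time_step(10.0 * np.ones((3, 3)), zero + 10.0, 0.1, 0.1, T=1.0, dt0=1.0)
dt_c, n_c = cfl_time_step(zero, zero, 0.1, 0.1, T=1.0, dt0=0.001)
```

Recomputing the step at every outer iteration matters because the bound depends on the current control: a larger drift forces more sub-steps.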

For the Fokker–Planck state Equation (30) and the adjoint Equation (32), we use standard second-order central differences for and first-order (upwind) backward differences for the flux terms. Homogeneous Dirichlet boundary conditions () are enforced on .

Given the current iterate , we compute the point-wise gradient

and update with a fixed gradient step .

The value was selected so that and are of the same order of magnitude in the initial iterate; this prevents the search direction from being dominated by either term.

All parameters used in the run reported below are summarized in Table 1.

Table 1.

Numerical parameters for the 2D Gaussian test.

6.2. Benchmark Configuration

The initial and target densities are smooth, normalized Gaussians

with centres , and standard deviation . After discretization both images are normalized so that .
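The benchmark densities can be generated as in the following sketch. The centres (0.3, 0.3) and (0.7, 0.7), the standard deviation 0.1, and the grid size N = 51 are hypothetical placeholder values, and the normalization is assumed to mean unit discrete mass (sum of nodal values times the cell area equal to 1). None of these values is taken from the reported run.

```python
import numpy as np

def gaussian_density(X1, X2, centre, sigma, dx, dy):
    """Isotropic Gaussian sampled on the grid and rescaled so that its
    discrete mass sum(y) * dx * dy equals 1 (assumed normalization)."""
    g = np.exp(-((X1 - centre[0])**2 + (X2 - centre[1])**2) / (2.0 * sigma**2))
    return g / (g.sum() * dx * dy)

N = 51                                   # hypothetical number of nodes per axis
x = np.linspace(0.0, 1.0, N)
h = x[1] - x[0]
X1, X2 = np.meshgrid(x, x, indexing="ij")
y_init = gaussian_density(X1, X2, (0.3, 0.3), 0.1, h, h)    # initial density
y_target = gaussian_density(X1, X2, (0.7, 0.7), 0.1, h, h)  # target density
```

Normalizing after discretization, rather than relying on the analytic prefactor, keeps the two discrete masses exactly equal even when part of a Gaussian tail is cut off by the boundary.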

The optimization is initialized with a small random perturbation of the control field: , where represents independent Gaussian random variables with zero mean and unit variance. This random initialization breaks the symmetry of the zero initial guess and accelerates convergence by providing non-trivial gradient information from the first iteration.

6.3. Results

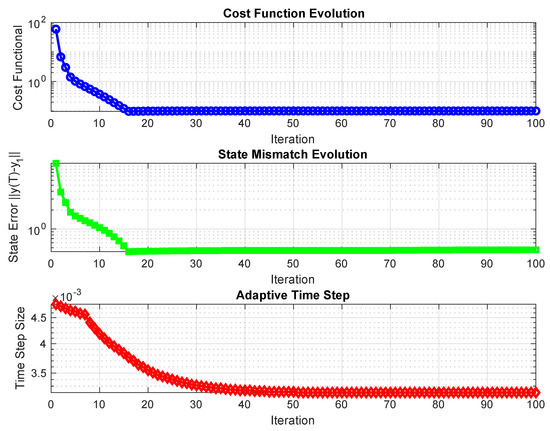

The explicit implementation converges on this benchmark and transports the initial Gaussian density close to the target density. Table 2 summarizes the quantitative performance metrics over 100 optimization iterations.

Table 2.

Cost functional and state mismatch evolution over 100 optimization iterations. The algorithm demonstrates consistent convergence with cost reduction (from 59.22 to 0.10) and error reduction (from 11.16 to 0.46).

The algorithm exhibits robust monotonic convergence in both cost functional and state tracking error. As shown in Figure 2, the cost functional decreases exponentially from an initial value of 59.22 to a final value of 0.10.

Figure 2.

Convergence analysis of the OCP-FP-EDM explicit algorithm over 100 iterations. Top: cost functional showing exponential decay from 59.22 to 0.10. Middle: state mismatch demonstrating consistent error reduction from 11.16 to 0.46. Bottom: adaptive time step evolution maintaining CFL stability throughout optimization. All metrics confirm good convergence behavior of the implementation.

The state mismatch demonstrates consistent improvement throughout the optimization process, reducing from 11.16 to 0.46. This error reduction confirms that the algorithm successfully drives the final state toward the target distribution . The smooth convergence curves indicate stable numerical behavior without oscillations or stagnation.

The adaptive time stepping mechanism maintains computational efficiency while ensuring CFL stability.

Figure 3 illustrates the complete optimal control solution after convergence. The top row demonstrates successful transport of the Gaussian probability mass from the initial position to the target location . The final state closely approximates the target distribution , with the residual mismatch showing only small deviations concentrated near the distribution boundaries.

Figure 3.

Optimal control solution after 100 iterations showing successful Gaussian transport. Top row: initial density (left), final state (center), and target density (right). Middle and bottom rows: adjoint field (left), mismatch error (center), and optimized control field u with magnitude visualization (right) at initial (middle) and final (bottom) iteration. The algorithm successfully transports the probability mass from to with minimal residual error.

The adjoint field exhibits the expected dual structure, with positive values driving mass away from the source region and negative values attracting mass toward the target. This dual behavior reflects the optimality conditions and provides the gradient information necessary for effective control updates.

7. Discussion

The present experiment confirms that the finite difference discretization and the adjoint implementation are consistent, and it highlights the practical difficulties of gradient-type control when used with explicit solvers.

As already mentioned, the research described in this work is part of a project in the traffic monitoring domain, which is acknowledged below. The proposed video optical flow estimation can thus be applied to the detection and tracking of various moving objects, such as pedestrians and vehicles (see [1,6]). Video detection and tracking frameworks based on this technique will therefore be a focus of our future research in this project.

Author Contributions

Methodology, T.B. and S.-D.P.; Software, C.M. and S.-D.P.; Validation, C.M.; Investigation, S.-D.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research work was supported by a grant of the Romanian Academy, GAR-2023, Project Code 19.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Barbu, T. Digital Image Processing, Analysis and Computer Vision Using Nonlinear Partial Differential Equations; Springer Nature: Berlin/Heidelberg, Germany, 2025; Volume 1211.

- Barbu, V.; Marinoschi, G. An optimal control approach to the optical flow problem. Syst. Control Lett. 2016, 87, 1–6.

- Barron, J.L.; Fleet, D.J.; Beauchemin, S.S. Performance of optical flow techniques. Int. J. Comput. Vis. 1994, 12, 43–77.

- Borzi, A.; Itô, K.; Kunisch, K. Optimal control formulation for determining optical flow. SIAM J. Comput. 2002, 34, 818–847.

- Horn, B.P.K.; Schunck, B.G. Determining optical flow. Artif. Intell. 1981, 17, 185–203.

- Barbu, T. Deep learning-based multiple moving vehicle detection and tracking using a nonlinear fourth-order reaction-diffusion based multi-scale video object analysis. Discret. Contin. Dyn.-Syst.-Ser. S AIMS J. 2023, 16, 16–32.

- Lee, E.; Gunzburger, M. An optimal control formulation of an image registration problem. J. Math. Imaging Vis. 2010, 36, 69–80.

- Mang, A.; Gholami, A.; Davatzikos, C.; Biros, G. CLAIRE: A Distributed-Memory Solver for Constrained Large Deformation Diffeomorphic Image Registration. SIAM J. Sci. Comput. 2019, 41, C548–C584.

- Mang, A.; Gholami, A.; Davatzikos, C.; Biros, G. PDE-constrained optimization in medical image analysis. Optim. Eng. 2018, 19, 765–812.

- Zhang, J.; Li, Y. Diffeomorphic image registration with an optimal control relaxation and its implementation. SIAM J. Imaging Sci. 2021, 14, 1890–1931.

- Barbu, V.; Röckner, M. Nonlinear Fokker–Planck Flows and their Probabilistic Counterparts; Lecture Notes in Mathematics; Springer: Berlin/Heidelberg, Germany, 2024; Volume 2353.

- Jordan, R.; Kinderlehrer, D.; Otto, F. The variational formulation of the Fokker–Planck equation. SIAM J. Math. Anal. 1998, 29, 1–17.

- Benincasa, T.; Favini, A.; Moroşanu, C. A Product Formula Approach to a Non-homogeneous Boundary Optimal Control Problem Governed by Nonlinear Phase-field Transition System. PART II: Lie-Trotter Product Formula. J. Optim. Theory Appl. 2011, 148, 31–45.

- Hömberg, D.; Krumbiegel, K.; Rehberg, J. Optimal control of a parabolic equation with dynamic boundary conditions. Appl. Math. Optim. 2013, 67, 3–31.

- Moroşanu, C. Boundary optimal control problem for the phase-field transition system using fractional steps method. Control Cybern. 2003, 32, 5–32.

- Moroşanu, C. Analysis and Optimal Control of Phase-Field Transition System: Fractional Steps Methods; Bentham Science Publishers: Potomac, MD, USA, 2012.

- Lee, J.; Bertrand, N.P.; Rozell, C.J. Unbalanced Optimal Transport Regularization for Imaging Problems. IEEE Trans. Comput. Imaging 2020, 6, 1219–1232.

- Lions, J.L. Quelques Méthodes de Résolution des Problèmes aux Limites Non Linéaires; Dunod, Gauthier-Villars: Paris, France, 1969.

- Simon, J. Compact sets in the space Lp(0,T;B). Ann. Mat. Pura Appl. 1986, 146, 65–96.

- Boole, G. Calculus of Finite Differences; BoD–Books on Demand; Salzwasser-Verlag: Bremen, Germany, 2022.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).