1. Introduction

In real-world industrial machine monitoring scenarios, normal operational noise is readily available, whereas anomalous sounds are inherently rare and difficult to collect without risking machine damage. Moreover, manually labeling anomalous noise is highly resource-intensive. Therefore, the goal of anomalous sound detection (ASD) is to determine whether a machine is in an abnormal state during inference [1], using only normal audio samples for training. This constraint makes unsupervised and semi-supervised learning particularly well-suited for this task.

Previous research on anomalous sound detection falls into two main approaches: the first uses autoencoders to model normal audio samples and detects anomalies by analyzing the reconstruction error [2,3,4,5,6], while the second trains supervised classification models to identify the machine ID of the sound.

For autoencoder models such as VIDNN [2], the audio data covers multiple machine types, so a separate encoder must be constructed for each machine ID; in practical applications, this requires considerably more training time and computational resources [6]. The interpolation deep neural network (IDNN) builds its autoencoder around intermediate frame prediction: it reconstructs a removed center frame from the neighboring frames, which avoids the trivial solution common in conventional autoencoders and better captures the time–frequency structure of audio signals.

However, because autoencoders generalize excessively [7], when the difference between normal and abnormal machine noise is subtle, an autoencoder trained only on normal data may also reconstruct anomalous audio well. This reduces the reconstruction error of anomalous samples and consequently degrades detection performance.

Methods based on machine ID classification have shown clear advantages in anomalous sound detection (ASD) tasks [8,9,10,11,12]: by jointly learning the feature distributions of multiple machines, they outperform traditional autoencoder (AE) architectures in detection performance. Current research mainly modifies the MobileFaceNetV2 [13] backbone along two technical directions. The first is the diversification of input features: for example, ST-gram [8] enhances the temporal representation of log-Mel spectrograms with a temporal feature network (TgramNet), and OS-SCL [11] employs dilated convolutions to construct TFnet, which captures deep frequency-domain features. The second is the optimization of loss functions, such as using ArcFace [14] to impose an angular margin penalty between classes, or Noise-ArcMix [10], which employs the mixup [15] strategy to improve model generalization. Although studies such as CLP-SCF [9] optimize the distribution of the feature space through contrastive learning, these approaches leave a fundamental limitation unaddressed: the existing architecture is adapted directly from MobileFaceNetV2, which was originally designed for face recognition, and its underlying assumptions (e.g., reliance on shape- and contour-based spatial features) differ inherently from the time–frequency characteristics of audio spectrograms.

Specifically, facial images and audio spectrograms differ substantially in data structure: the former rely on well-defined hierarchical shape structures and spatial continuity, whereas the latter, as time–frequency representations, exhibit weak local correlations and globally dispersed patterns. This domain gap leads to three inherent drawbacks in how current models fuse spectrogram features: (1) the lack of an adaptive attention mechanism for critical frequency bands; (2) the inefficiency of spatial convolution kernels in capturing long-range dependencies across frequency bands; and (3) the loss of time–frequency information during temporal feature aggregation.

Recent studies in computer vision have shown that attention mechanisms can effectively enhance model performance, which suggests a way to overcome the limitations of MobileFaceNetV2 for audio. However, the traditional Squeeze-and-Excitation (SE) [16] module employs pooling to generate channel-wise weights, and such one-dimensional attention struggles to capture the time–frequency characteristics of audio signals. In ASD tasks, model performance relies heavily on precise modeling of time–frequency features. Although attention mechanisms have proven effective for sequential data, architectures such as the Vision Transformer (ViT) [17,18,19] partition the input tensor into patches, which discards information carried by the inherent two-dimensional time–frequency structure. To address this issue, the proposed ATA-MSTF-Net uses a separable convolution structure to generate two-dimensional time–frequency feature weights directly, enabling the model to adaptively enhance the representation of critical frequency bands and temporal frames and thus to model audio textures at a finer granularity.

From an architectural perspective, MobileNet makes extensive use of 1 × 1 convolutions to reduce computational complexity, but these operations introduce fixed channel dependencies that limit the representational capacity of the model. To alleviate this problem, we introduce a lightweight Channel Shuffle mechanism [20], which permutes the feature channels between layers. This mechanism breaks fixed inter-channel dependencies and promotes cross-channel information exchange without significantly increasing computational overhead, thereby enhancing the model's representational power. Considering the temporal characteristics of audio, we further integrate an LSTM [21] module into TgramNet, improving its ability to model long-term dependencies and to capture the dynamic variation patterns of machine noise.

This fine-grained modeling of audio time–frequency textures is closely related to the idea of feature co-optimization in incomplete multi-view learning and multi-label learning. In incomplete multi-view clustering, existing work has enhanced representation capability through a self-attention two-stage autoencoder combined with missing-data recovery (RecFormer) [22], or obtained a structured consensus representation via sparse regularization and local graph embedding (LSIMVC) [23]. In incomplete multi-view multi-label classification, some studies achieve cross-view consistency alignment through label-driven contrastive learning and quality-aware weighting (RANK) [22], while others handle double-incomplete scenarios by integrating missing-view and missing-label information into the fusion and classification modules to improve performance [23]. These methods share technical similarities with our approach in feature fusion, key-feature enhancement, and the use of incomplete information, and together they demonstrate the importance of efficient multi-source information modeling for complex tasks.

We further observe that the temporal weighting mechanism of the original TAgram module lacks flexibility. Inspired by the CBAM module [24], we propose an improvement: max-pooled and average-pooled features are computed in parallel, concatenated, and used to generate attention weights that highlight the more discriminative time frames in the Mel spectrogram. Experimental results show that this enhanced temporal modeling adapts better and yields a stronger feature representation along the time dimension.

Our key contributions can be summarized as follows:

We propose a novel Audio Texture Attention Mechanism that employs separable convolution to directly model two-dimensional time-frequency feature correlations, overcoming the limitations of traditional SE modules in time-frequency feature modeling.

We enhance the temporal weight modeling of the TAgram module by incorporating a CBAM-like structure, significantly improving the model's ability to represent temporal dynamic features in audio signals.

We innovatively integrate an LSTM module into the lightweight network architecture, effectively strengthening the model’s ability to capture long-term dependencies.

Building on ATA attention, we propose ATA-MSTF-Net (Audio Texture-Aware Multi-Spectro-Temporal Attention Fusion Network), which achieves state-of-the-art performance on the DCASE 2020 Task 2 dataset.

2. Methods

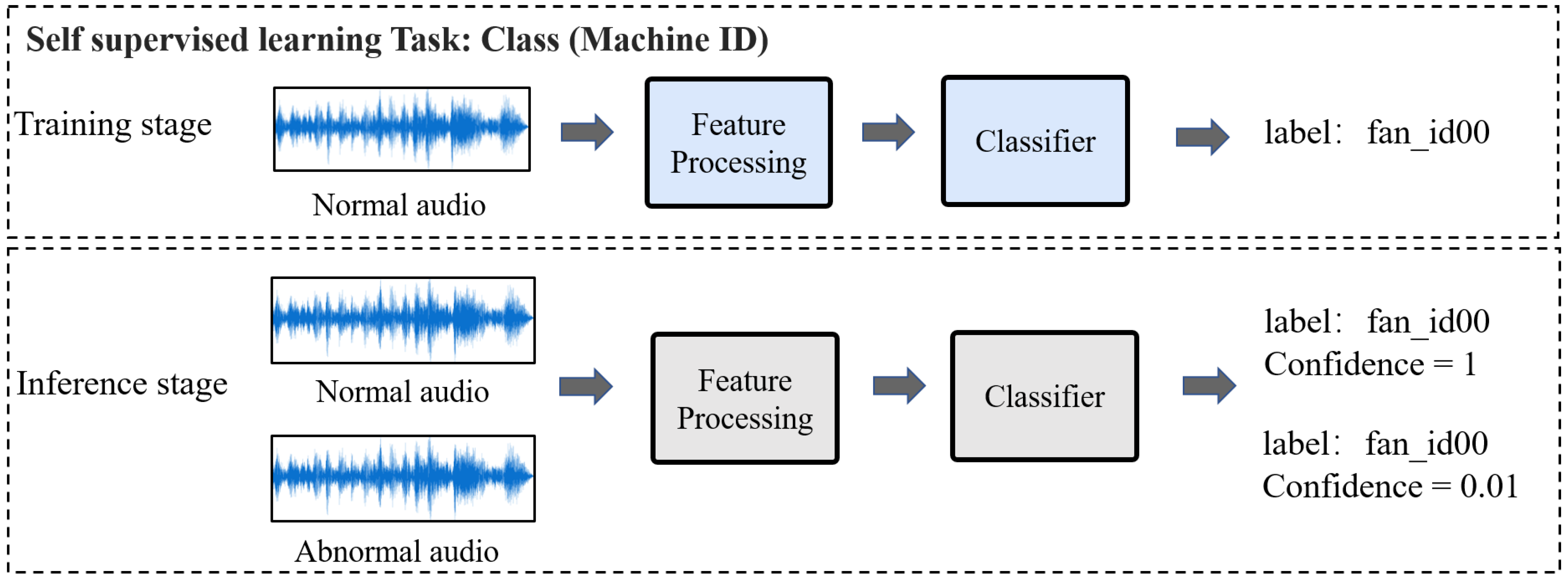

This study adopts a classification-based approach: a classification model is trained on normal audio to learn its data distribution. The overall framework is illustrated in Figure 1. During training, we use the machine type and machine ID of each audio clip as classification labels and optimize the feature space with a classification loss, which encourages small feature distances between samples of the same class and large distances between samples of different classes.

In the inference phase, because no anomalous samples are seen during training, the model's classification confidence for anomalous inputs is expected to approach zero, whereas for normal audio it remains close to one. To further enhance the discriminability between normal and anomalous audio, this study applies a negative logarithmic transformation, as shown in Equation (1):

$$S = -\log p \tag{1}$$

where $p$ is the model's output classification confidence (a probability between 0 and 1) and $S$ is the resulting anomaly score.

When $p$ approaches 0 (indicating anomalous audio), $-\log p$ tends toward $+\infty$.

When $p$ approaches 1 (indicating normal audio), $-\log p$ tends toward 0.

This transformation amplifies the confidence difference between the two types of samples. Experimentally, audio whose negative log confidence exceeds 0.1 can be judged anomalous.
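A minimal sketch of the scoring rule in Equation (1), assuming that $p$ is the softmax probability the classifier assigns to the clip's known machine-type/ID label (a common convention in ID-classification ASD that is not stated explicitly above). The function names `anomaly_score` and `is_anomalous` are hypothetical; the 0.1 threshold is the value reported above. Note that a score above 0.1 corresponds to $p < e^{-0.1} \approx 0.905$.

```python
import math

THRESHOLD = 0.1  # decision threshold on -log p, as reported in the text


def anomaly_score(p: float, eps: float = 1e-12) -> float:
    """Negative log of the classification confidence, Equation (1).

    p is assumed to be the probability of the clip's own machine-ID class;
    eps guards against log(0) when the model assigns zero probability.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    return -math.log(max(p, eps))


def is_anomalous(p: float, threshold: float = THRESHOLD) -> bool:
    """Flag a clip as anomalous when its score exceeds the threshold."""
    return anomaly_score(p) > threshold


for p in (0.99, 0.9, 0.5, 0.01):
    print(f"p={p:.2f}  score={anomaly_score(p):.3f}  anomalous={is_anomalous(p)}")
```

Because $-\log$ is monotonically decreasing, the score preserves the ranking of confidences, so AUC-based evaluation is unchanged; the transformation mainly stretches the region near $p = 0$, which makes a fixed threshold easier to set.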

This section proposes an ATA attention mechanism for mechanical noise audio classification and constructs the ATA-MSTF-Net model on top of it. Through its structural design, the ATA attention mechanism strengthens the classifier's feature extraction, improves time–frequency feature representation, and captures key texture characteristics of audio signals.

Building upon this mechanism, the ATA-MSTF-Net model adopts a multi-feature fusion strategy. By processing several feature representations of the raw audio in parallel and incorporating complementary information from Mel spectrograms, the model improves the discriminative power of its input features and thereby its overall performance.

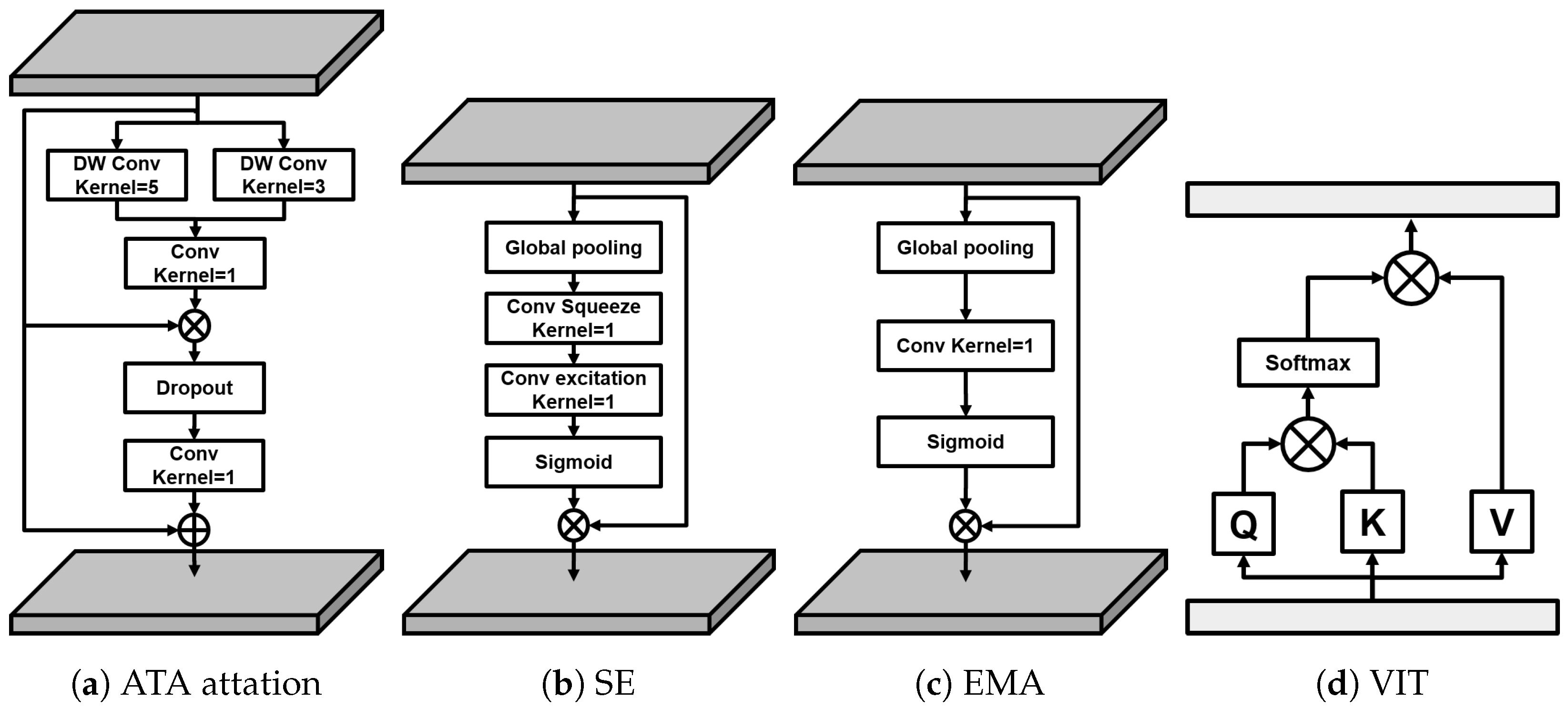

Figure 2a illustrates the structural design of the ATA attention mechanism tailored to the time-frequency characteristics of audio signals, while

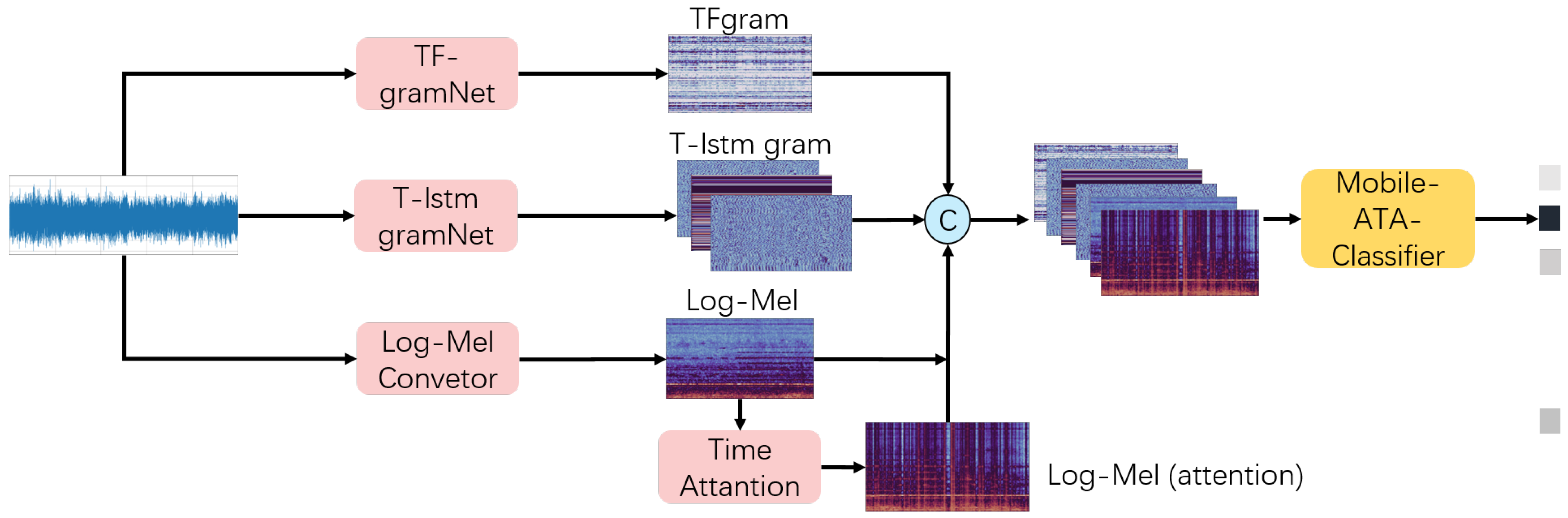

Figure 3 details the overall architecture of the ATA-MSTF-Net model and its core modules.

2.1. ATA Attention

The core of the attention mechanism lies in dynamically generating weights to adjust feature representations, thereby enhancing model performance by suppressing irrelevant information and emphasizing key features. Its effectiveness heavily depends on the design of the weight generation method, which must be closely aligned with the structural characteristics of the specific data. Current attention weight generation methods can be broadly categorized into two types, each with notable limitations.

The first type is based on self-attention mechanisms. As illustrated in Figure 2d, the model establishes long-range dependencies by computing the similarity between the query (Q) and key (K) matrices. However, such methods require flattening the input tensor into a one-dimensional sequence. For audio spectrogram data, this severely disrupts the time–frequency structure, losing local time–frequency correlations and consequently degrading recognition performance.

The second type relies on convolutional structures, as shown in Figure 2b,c, with the squeeze-and-excitation (SE) module as the most representative example. The SE mechanism uses global average pooling combined with fully connected layers to generate channel-wise attention weights, filtering out irrelevant information through dimensionality reduction followed by expansion. However, it has two fundamental drawbacks: (1) the pooling operation loses spatial detail; and (2) it produces only a single weight per channel, failing to capture the joint variation of two-dimensional time–frequency features. Although the later EMA [25] improves channel modeling without dimensionality reduction, its weight generation still cannot provide fine-grained attention control for each pixel on the time–frequency plane.

To overcome these shortcomings, we propose the ATA mechanism, which directly uses a convolutional structure to generate attention weights, eliminating pooling and dimensionality reduction operations. It adopts Depthwise Separable Convolution as its core architecture, comprising three key components: Depthwise Convolution, Pointwise Convolution, and a Feed-Forward Enhancement Layer.

This design offers clear advantages in computational efficiency and feature extraction. First, depthwise convolution performs local feature extraction in the spatial dimensions (the time–frequency plane), modeling local time–frequency correlations at a very low computational cost. Second, pointwise (1 × 1) convolution integrates features across channels, adaptively fusing the time–frequency features of different channels. Through this cascade, the model can estimate the importance of each spatiotemporal location (time frame × frequency bin) in the input tensor, producing an attention map with fine-grained modulation capability. In the feed-forward enhancement layer, the attention map is multiplied element-wise with the original features; the result then passes through a Dropout layer and a 1 × 1 convolution layer to enhance feature fusion, and is finally combined with the input through a residual connection so that the original and enhanced information complement each other.
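To make the cost argument concrete, the following sketch counts the weights (biases ignored) of a standard $k \times k$ convolution versus a depthwise $k \times k$ convolution followed by a 1 × 1 pointwise convolution. The channel count of 128 and kernel size of 3 are illustrative assumptions, not the configuration used in ATA.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    # every output channel owns a k x k filter over every input channel
    return c_in * c_out * k * k


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    depthwise = c_in * k * k   # one k x k filter per input channel (spatial mixing only)
    pointwise = c_in * c_out   # 1 x 1 convolution (channel mixing only)
    return depthwise + pointwise


c, k = 128, 3  # hypothetical sizes for illustration
std = standard_conv_params(c, c, k)
sep = depthwise_separable_params(c, c, k)
print(std, sep, round(std / sep, 2))
```

In general the separable version costs a fraction $1/c_{\text{out}} + 1/k^2$ of the standard convolution, so for small kernels the saving is dominated by the $1/k^2$ term.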

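As shown in Figure 2a, the ATA attention can be expressed as follows:

$$\mathrm{Attention} = \mathrm{PWConv}\big(\mathrm{DWConv}(F)\big)$$

$$F_{\mathrm{out}} = F + \mathrm{Conv}_{1\times 1}\big(\mathrm{Dropout}(\mathrm{Attention} \otimes F)\big)$$

Here, $F$ represents the input audio features, and $\mathrm{Attention}$, which has the same shape as $F$, is the generated attention weight map; each value of Attention indicates the importance of the corresponding element in $F$. The symbol ⊗ denotes element-wise multiplication.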

Unlike traditional attention mechanisms (such as SE or EMA), the proposed ATA (Audio Texture Attention) mechanism abandons the constraints of normalization functions like sigmoid or softmax, and instead employs a fully convolutional structure to achieve adaptive feature weighting. This convolution operation can dynamically generate adjustment weights based on the local contextual information of the input features, rather than forcing the output into a uniform normalized distribution. This content-based adaptive modulation avoids the limitations of the attention weight range imposed by sigmoid/softmax.

We argue that the effectiveness of an attention mechanism lies in its ability to flexibly adjust according to the spatiotemporal distribution characteristics of the input features. In contrast, traditional normalization operations unnecessarily constrain the dynamic range of attention weights, thereby reducing the model’s representational capacity.
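The following NumPy sketch illustrates the ATA computation as written in the equations above, for a single $(C, H, W)$ feature map: a depthwise $k \times k$ convolution, a 1 × 1 pointwise convolution that yields an unnormalized attention map (no sigmoid or softmax), element-wise modulation, a 1 × 1 output convolution, and a residual connection. It is a sketch under stated assumptions, not the authors' code: biases, normalization layers, and batching are omitted, the weights are random placeholders, and Dropout is treated as the identity, as it is at inference time. All function names are hypothetical.

```python
import numpy as np


def depthwise_conv2d(x: np.ndarray, kernels: np.ndarray) -> np.ndarray:
    """Per-channel k x k convolution (cross-correlation) with zero 'same' padding.

    x: (C, H, W) feature map; kernels: (C, k, k) with odd k.
    """
    c, h, w = x.shape
    k = kernels.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))  # zero padding on the H and W axes
    out = np.zeros((c, h, w))
    for i in range(k):
        for j in range(k):
            out += kernels[:, i, j][:, None, None] * xp[:, i:i + h, j:j + w]
    return out


def pointwise_conv(x: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """1 x 1 convolution: mixes channels at every (h, w) position. weight: (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)


def ata(x, dw_kernels, pw_weight, out_weight):
    """ATA forward pass at inference: F + Conv1x1(Attention * F)."""
    attention = pointwise_conv(depthwise_conv2d(x, dw_kernels), pw_weight)  # unnormalized
    modulated = attention * x  # element-wise weighting of every time-frequency bin
    # Dropout would act on `modulated` during training; it is the identity at inference.
    return x + pointwise_conv(modulated, out_weight)  # residual connection


rng = np.random.default_rng(0)
C, H, W, K = 8, 16, 20, 3  # hypothetical sizes
x = rng.standard_normal((C, H, W))
y = ata(x, 0.1 * rng.standard_normal((C, K, K)),
        0.1 * rng.standard_normal((C, C)), 0.1 * rng.standard_normal((C, C)))
print(y.shape)
```

Because the attention map is not squashed into $(0, 1)$, individual weights can be negative or larger than one, which is the unconstrained dynamic range argued for above; the residual path keeps the original features available when the learned modulation is small.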

2.2. ATA-MSTF-Net

As shown in Figure 3, the network architecture of ATA-MSTF-Net features two key improvements.

First, the model adopts a multi-feature extraction architecture that integrates several signal processing methods to extract highly discriminative time–frequency features from raw audio data. The rationale is that audio signals have complex time–frequency characteristics, so a single feature extraction method cannot fully characterize them. The proposed ATA-MSTF-Net therefore employs a multi-branch feature extraction structure built around two core modules: TF-gramNet and T-LSTM-gramNet. TF-gramNet extracts global spectral features, while T-LSTM-gramNet models temporal dynamics through a long short-term memory (LSTM) network. This division of labor enables the model to capture both the static texture features and the dynamic evolution patterns of audio signals. In addition, the model incorporates a time attention mechanism that weights critical time frames in the Mel spectrogram, refining the input representation and capturing key classification cues more effectively. In the feature fusion stage, the feature vectors extracted by all branches are concatenated and fed into the classifier, which forms the final detection decision. Experimental validation shows that this multi-feature fusion strategy improves the model's robustness and generalization in complex acoustic environments.

The second improvement is that ATA-MSTF-Net innovatively integrates the Audio Texture Attention (ATA) mechanism with a lightweight MobileNet architecture to construct the Mobile-ATA-Classifier module, significantly improving the network’s ability to model audio texture features. The motivation for introducing ATA attention stems from recognizing the importance of audio textures in classification tasks—many critical classification cues do not reside in a single frequency band or time point but are instead distributed as texture patterns across the entire time–frequency map. The ATA attention mechanism can dynamically weight these regions, highlighting discriminative texture patterns while suppressing background noise. Combining this with the MobileNet architecture addresses efficiency and deployment considerations, ensuring strong expressive power while maintaining low computational resource requirements. This makes the entire classification module both lightweight and efficient, suitable for real-world production environments. Below, we provide a detailed explanation of each of these improved modules.

2.2.1. T-LSTM-gramNet

In anomalous sound detection (ASD) tasks, effective extraction of audio features is critical to model performance. The STgram architecture employs a 1D large-kernel convolution (kernel size = 1024) to simulate the Mel-spectrogram extraction process, supplementing high-frequency information in audio signals, and enhances the feature representation through a three-layer convolutional structure. However, conventional convolutions have inherent limitations in modeling temporal dependencies in audio data, which makes it difficult to capture long-term temporal evolution such as the patterns of machine noise.

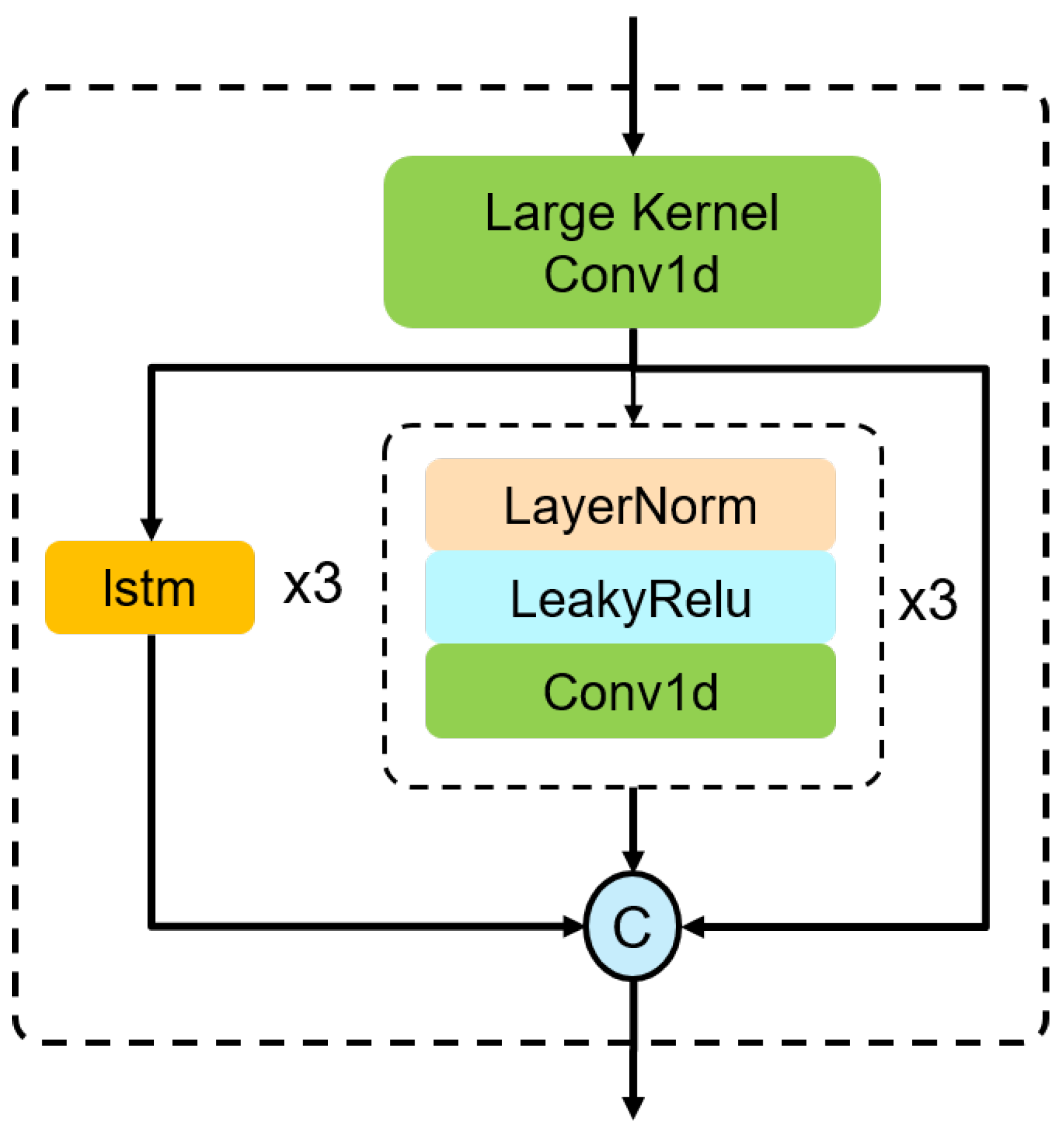

To address this limitation, we introduce an LSTM (Long Short-Term Memory) structure into the T-gram module, as illustrated in Figure 4. The T-LSTM-gram module uses a three-layer LSTM network to capture trend variations in the raw audio and concatenates its output with the outputs of the large-kernel convolution and the convolutional module, as described below:

As illustrated in Figure 4, the architecture employs a Large Kernel 1D Convolution (LKConv) module to simulate the computation of Mel spectrograms. LKConv uses a kernel size of 1024 and a stride of 512, which correspond to the frame length and frame shift in audio processing, respectively. By mapping the raw 1D audio signal to a 128-dimensional feature space, this module performs a function analogous to the combination of the Fourier transform and Mel filter banks. For an input signal of 160,000 samples (10 s at 16 kHz), the initial feature map produced by this module has shape (Batch, 128, 313). A convolutional module (Convs), consisting of three cascaded convolutional layers, then progressively extracts deeper features. Temporal features are extracted by a three-layer LSTM network (LSTMs), which captures long-term dependencies in the audio signal. Finally, the three types of features are concatenated (Concat) along the channel dimension, producing the final output feature with shape (Batch, 3, 128, 313).
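The frame count of 313 can be checked with the standard 1D convolution output-length formula. The sketch below assumes a padding of 512 samples (half the kernel), which is our assumption and not stated above: without padding, the same kernel and stride would yield only 311 frames. It also records how three (128, 313) feature maps stack into the (3, 128, 313) output; the shape bookkeeping is illustrative.

```python
def conv1d_out_len(length: int, kernel: int, stride: int, padding: int = 0) -> int:
    """Output length of a 1D convolution with dilation 1."""
    return (length + 2 * padding - kernel) // stride + 1


SAMPLES = 160_000            # 10 s of audio at 16 kHz
KERNEL, STRIDE = 1024, 512   # frame length and frame shift of the LKConv layer
MEL_BINS = 128               # LKConv output channels, matching the number of Mel bins

frames_padded = conv1d_out_len(SAMPLES, KERNEL, STRIDE, padding=KERNEL // 2)  # assumed padding
frames_unpadded = conv1d_out_len(SAMPLES, KERNEL, STRIDE)

# Each branch (LKConv, Convs, LSTMs) is assumed to emit a (MEL_BINS, frames) map; an LSTM
# with hidden size 128 run over the frames gives (frames, 128), which is then transposed.
stacked_shape = (3, MEL_BINS, frames_padded)
print(frames_padded, frames_unpadded, stacked_shape)
```

The padded count also matches a centered log-Mel frontend with a hop of 512, which yields $1 + \lfloor 160000 / 512 \rfloor = 313$ frames, so the learned and hand-crafted features align frame by frame.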

The T-LSTM-gram module combines the advantages of CNNs in local time–frequency feature extraction with the strengths of LSTMs in modeling long-term temporal dependencies, thereby constructing a more discriminative audio feature representation. This innovative design effectively compensates for the limitations of traditional convolutional networks in temporal modeling.

2.2.2. Time Attention

To address the limitations of the temporal attention mechanism in the TAgram module, this study proposes an improved Time Attention mechanism. In the original TASTgramNet, the TAgram module generates the temporal attention map by directly summing the results of max pooling and average pooling applied to the Mel spectrogram. This approach has notable shortcomings: first, the simple linear addition operation lacks learnable parameters, resulting in an overly rigid attention weight generation process; second, this static fusion strategy fails to adaptively capture the dynamic variations in key time frames across different audio scenarios.

To overcome these limitations, this study adopts an attention weight generation mechanism inspired by CBAM (Convolutional Block Attention Module) [24]. The improved design offers the following advantages: (1) learnable attention parameters enable adaptive weighting of temporal features; (2) context modeling strengthens the model's ability to identify important time frames; and (3) a nonlinear fusion strategy reflects the distribution of temporal importance in the Mel spectrogram more precisely (Figure 5).

The module adopts a dual pooling strategy along the frequency dimension: max pooling and average pooling are performed in parallel along the frequency axis to capture, respectively, the prominent spectral responses and the overall distribution characteristics. These two complementary statistics are concatenated along the channel dimension to form a joint representation of dimension $2 \times T$, where $T$ is the number of time frames. To enhance the feature representation, this joint representation is passed through a convolutional layer for feature fusion, so that the convolution kernels learn the optimal combination of the two statistics. Finally, the Sigmoid activation normalizes the result into attention weights in the range $(0, 1)$:

$$W = \sigma\Big(\mathrm{Conv}\big([\mathrm{MaxPool}_f(X);\ \mathrm{AvgPool}_f(X)]\big)\Big)$$

Here, $X$ denotes the Mel-spectrogram features, $W$ represents the generated Mel-spectrogram attention weights, $\sigma$ is the Sigmoid function, and $[\cdot\,;\cdot]$ denotes concatenation along the channel dimension. This design exploits the strengths of both pooling operations: max pooling captures salient feature responses, while average pooling preserves the overall distribution characteristics, and combining the two reflects the importance distribution along the time dimension more comprehensively. The design achieves the following three advantages: (1) it retains both the salient features and the global statistical properties of the spectrogram; (2) it realizes adaptive feature fusion through learnable convolutional parameters; and (3) it generates attention weights with a clear probabilistic interpretation.
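A NumPy sketch of the Time Attention computation for a single Mel spectrogram of shape $(M, T)$. The text does not specify the convolution, so the kernel size of 7 (the size CBAM uses for its spatial attention), the scalar bias, and the use of a 1D convolution over time with two input channels are assumptions; multiplying the spectrogram frame-wise by the weights is one plausible way to apply them. The function name is hypothetical.

```python
import numpy as np


def time_attention(mel: np.ndarray, kernel: np.ndarray, bias: float = 0.0):
    """Return per-frame weights in (0, 1) and the frame-weighted spectrogram.

    mel: (M, T) Mel spectrogram; kernel: (2, k) weights of a 1D convolution over
    time whose 2 input channels are the max- and average-pooled statistics; odd k.
    """
    pooled = np.stack([mel.max(axis=0), mel.mean(axis=0)])  # (2, T): pool over frequency
    k = kernel.shape[1]
    padded = np.pad(pooled, ((0, 0), (k // 2, k // 2)))      # zero 'same' padding in time
    t = mel.shape[1]
    logits = np.array([np.sum(kernel * padded[:, i:i + k]) for i in range(t)]) + bias
    weights = 1.0 / (1.0 + np.exp(-logits))                  # sigmoid -> (0, 1)
    return weights, mel * weights[None, :]                   # reweight every frame


rng = np.random.default_rng(0)
mel = rng.standard_normal((128, 313))  # hypothetical log-Mel input
w, weighted = time_attention(mel, 0.1 * rng.standard_normal((2, 7)))
print(w.shape, weighted.shape, float(w.min()), float(w.max()))
```

Unlike the parameter-free sum of the two pooled vectors, the learned kernel can weight the max and mean statistics differently and look at neighboring frames, which is the flexibility the improved module is meant to add.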

2.2.3. Mobile ATA Classifier

In the field of audio recognition, traditional classifier modules commonly use depthwise separable convolutions from the MobileNet architecture as the basic building units. Although this structure excels at reducing computational complexity by decomposing standard convolutions into depthwise convolutions and pointwise convolutions, it still exhibits significant limitations when processing complex audio time–frequency features: Firstly, the channel-wise independence in the depthwise convolution stage results in insufficient cross-channel information interaction, making it difficult to capture critical time–frequency correlation features in audio signals; secondly, the fixed receptive field of the convolution kernels restricts the model’s ability to adapt to multi-scale time–frequency textures; thirdly, the simple combination of pointwise convolutions lacks a mechanism for channel fusion.

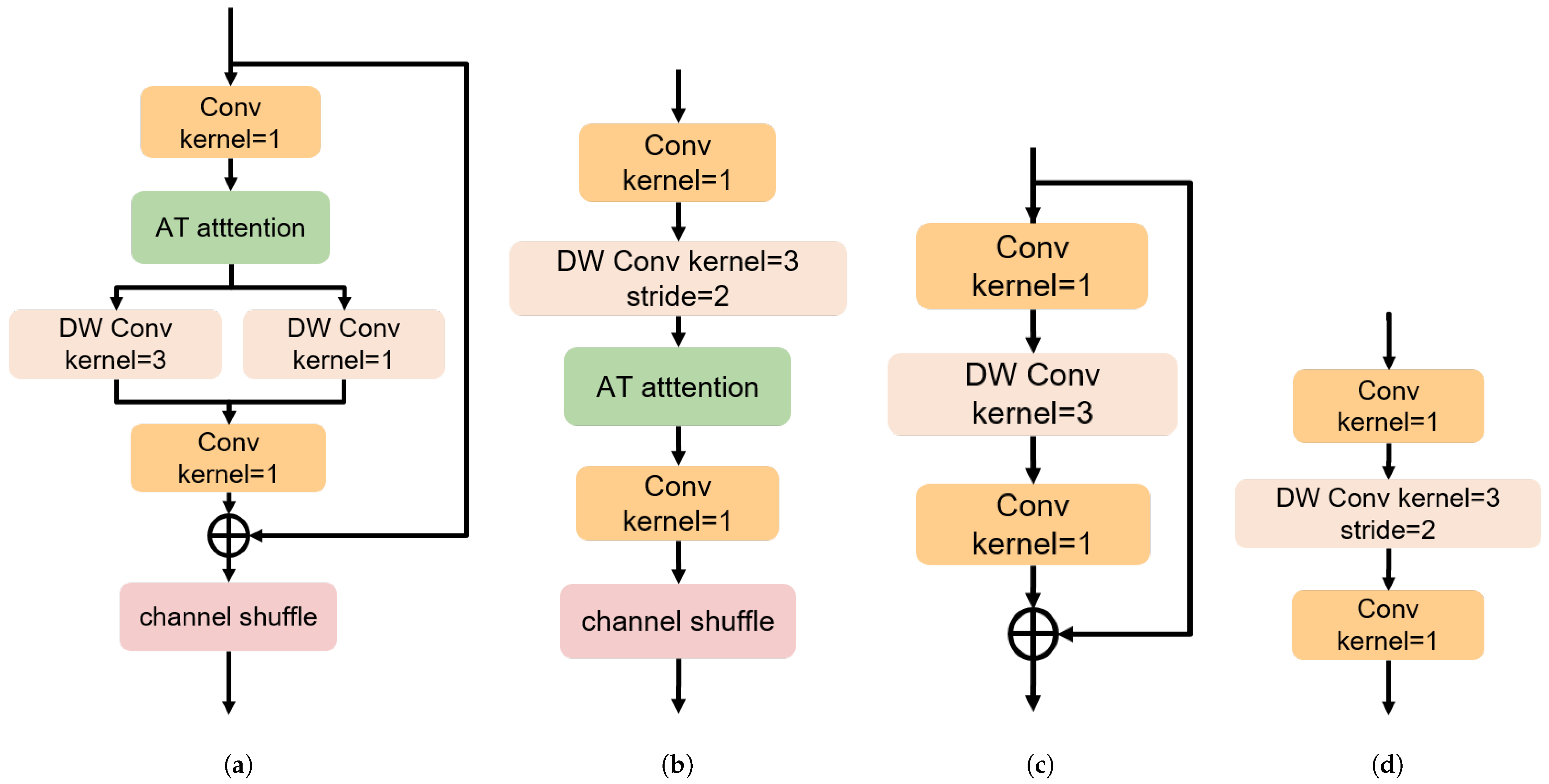

To overcome these limitations, this study combines the ATA attention mechanism with conventional convolution operations and proposes the Mobile-ATA-classifier module. This module adopts a three-layer cascaded refinement design, in which each layer consists of a standard feature learning module and a downsampling module (as shown in Figure 6a,b). The ATA attention mechanism compensates for the shortcomings of depthwise separable convolutions: by generating attention weights over the full channel and time–frequency dimensions, it enhances the model's perception of time–frequency features and lets it focus adaptively on key frequency regions. Meanwhile, the hierarchical design realizes progressive feature extraction from local to global. This architecture improves the model's ability to represent complex audio features while retaining the computational efficiency of MobileNet.

In the standard convolutional architecture, each block typically consists of three key components: a 1 × 1 expansion convolution for channel interaction, a depthwise convolution (DWConv) responsible for spatial information fusion, and a 1 × 1 convolution for channel fusion that recombines the features. To better capture the texture features of audio spectrograms, we modify this basic structure as follows: first, a parallel mapping branch is added alongside the original spatial convolution layer to enhance multi-scale feature extraction; second, the ATA attention module is inserted before the spatial convolution to strengthen the representation of key texture components in the feature space. Because downsampling alters the spatial structure of the feature maps, the ATA attention module in the downsampling layer is instead placed after the spatial convolution, so that it learns the spatial texture features of the downsampled audio more accurately.

This improved design allows the model to better focus on critical texture information in audio signals, thereby enhancing the effectiveness of feature representation.

In traditional convolutional architectures, as shown in Figure 6c,d, convolutional layers and downsampling convolutional layers are typically connected by two consecutive 1 × 1 convolutions. This fixed connection pattern makes channel information flow along predefined paths, limiting the diversity of feature interactions. To enhance the dynamic fusion of channel information, this work introduces a channel reorganization method.

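As shown in Figure 7, we evenly divide the input feature channels into $g$ groups, each containing $n$ channels, for a total of $g \times n$ channels. As a concrete example, assume the features are divided into four groups ($g = 4$). The original features can then be represented as

$$X = [X_1, X_2, X_3, X_4], \qquad X_i = [x_{i,1}, x_{i,2}, \dots, x_{i,n}],$$

where $X_1$ to $X_4$ denote subsets of features, each comprising $n$ channels.

Next, the feature recombination operation is performed, which consists of the following three steps:

Reshape: The $g \times n$ channels, initially arranged sequentially, are reorganized into a matrix with $g$ rows and $n$ columns, one row per group:

$$\begin{bmatrix} x_{1,1} & x_{1,2} & \cdots & x_{1,n} \\ x_{2,1} & x_{2,2} & \cdots & x_{2,n} \\ x_{3,1} & x_{3,2} & \cdots & x_{3,n} \\ x_{4,1} & x_{4,2} & \cdots & x_{4,n} \end{bmatrix}$$

Transpose: This matrix is transposed, resulting in a new matrix with $n$ rows and $g$ columns:

$$\begin{bmatrix} x_{1,1} & x_{2,1} & x_{3,1} & x_{4,1} \\ x_{1,2} & x_{2,2} & x_{3,2} & x_{4,2} \\ \vdots & \vdots & \vdots & \vdots \\ x_{1,n} & x_{2,n} & x_{3,n} & x_{4,n} \end{bmatrix}$$

Flatten: The transposed matrix is then flattened row by row back into a one-dimensional sequence of $g \times n$ channels:

$$X' = [x_{1,1}, x_{2,1}, x_{3,1}, x_{4,1}, x_{1,2}, x_{2,2}, \dots, x_{4,n}]$$

After this transformation, the recombined features $X'$ are obtained. The key insight of this transformation is that it brings together channels that share the same position index across the original groups: the first $g$ channels of $X'$ consist of the first channel from each original group, the next $g$ consist of the second channel from each group, and so on.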
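The three steps can be sketched on a plain list of channel labels. This follows the reshape, transpose, and flatten formulation of channel shuffle from ShuffleNet [20]; in a tensor framework the same permutation is usually written as a reshape of the channel axis to $(g, n)$, a transpose, and a flatten. The function name is hypothetical.

```python
def channel_shuffle(channels: list, groups: int) -> list:
    """Reorder channels: reshape to (g, n), transpose to (n, g), flatten row by row."""
    if groups <= 0 or len(channels) % groups != 0:
        raise ValueError("channel count must be divisible by the number of groups")
    n = len(channels) // groups
    matrix = [channels[i * n:(i + 1) * n] for i in range(groups)]          # g rows x n columns
    transposed = [[matrix[i][j] for i in range(groups)] for j in range(n)]  # n rows x g columns
    return [c for row in transposed for c in row]                           # flatten row by row


# g = 4 groups X1..X4, each with n = 2 channels, labelled x{group},{index}
labels = [f"x{i},{j}" for i in range(1, 5) for j in range(1, 3)]
print(channel_shuffle(labels, groups=4))
```

The operation is a fixed permutation with no parameters and no arithmetic, which is why it adds essentially no computational cost; the following grouped or pointwise layer then sees channels drawn from every original group.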

This recombination mechanism effectively establishes dynamic connections across feature groups, enabling deep interaction and information fusion between different feature subsets. The process enhances cross-group feature integration while maintaining computational efficiency.

This channel permutation strategy has several advantages: first, it strengthens feature representation by establishing cross-group feature correlations while maintaining computational efficiency; second, it breaks the fixed connection pattern of traditional convolutions, so that information is exchanged across channels in a more flexible manner as it propagates through each layer; and third, it increases feature diversity, providing a richer representation space for subsequent feature extraction. Experiments show that this structured feature reorganization promotes information fusion across channels, allowing the model to learn more discriminative and robust channel interaction patterns and improving the overall representational power of the network.

3. Experiments and Evaluation

In the experiments, we used the development and additional datasets from the DCASE 2020 Challenge Task 2 [26] to evaluate our model. This dataset consists of parts of the MIMII dataset [27] and the ToyADMOS dataset [28]. The MIMII dataset contains four machine types (i.e., fan, pump, slider, and valve), each with seven different machines. The ToyADMOS dataset includes two machine types (i.e., ToyCar and ToyConveyor), with seven and six different machines, respectively. We used the training data (normal sounds) from the Task 2 development and additional datasets as the training set, and the test data (normal and anomalous sounds) from the development dataset for evaluation.

In this study, we employed the AUC (area under the ROC curve), partial AUC (pAUC), and minimum AUC (mAUC) as evaluation metrics to assess the classifier from different perspectives. AUC, a conventional metric, reflects the model's overall ability to separate positive and negative samples across all possible classification thresholds; values closer to 1 indicate better global ranking performance. Because practical industrial applications require low false alarm rates, we computed the pAUC over the false positive rate (FPR) range [0, 0.1], which better reflects model performance in the critical operating region. Furthermore, to evaluate detection stability across individual machines, we introduced the mAUC metric, defined as the minimum AUC among the different machines of the same machine type. This metric provides more practical guidance for model evaluation.
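For reference, a minimal pure-Python sketch of AUC, computed as the probability that an anomalous clip receives a higher anomaly score than a normal clip (ties count one half), and of mAUC as defined above. pAUC is omitted because its exact normalization differs between implementations. The machine IDs and scores below are made up for illustration.

```python
from itertools import product


def auc(normal_scores, anomalous_scores):
    """AUC as the Mann-Whitney statistic: P(anomalous score > normal score)."""
    pairs = list(product(anomalous_scores, normal_scores))
    wins = sum(1.0 if a > n else 0.5 if a == n else 0.0 for a, n in pairs)
    return wins / len(pairs)


def min_auc(per_machine):
    """mAUC: the minimum AUC over the machines (IDs) of one machine type."""
    return min(auc(normal, anomalous) for normal, anomalous in per_machine.values())


# hypothetical (normal, anomalous) anomaly scores for two machine IDs of one type
scores = {
    "id_00": ([0.01, 0.05, 0.02], [0.90, 0.40, 0.03]),
    "id_02": ([0.02, 0.20, 0.04], [0.10, 0.60, 0.03]),
}
print({mid: round(auc(*s), 3) for mid, s in scores.items()}, round(min_auc(scores), 3))
```

The pairwise form is quadratic in the number of clips, which is fine for DCASE-sized test sets; a sort-based rank computation gives the same value in $O(N \log N)$.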

The classification task covers 41 machine IDs across the six machine types, with detailed parameter configurations provided in Table 1. The experiments adopt the Mobile-ATA classifier architecture, where the input feature dimension of the model is set to , and the channel shuffle operation is configured with eight groups. The time-frequency (TF-gram) feature extraction follows the procedure described in [11]. The T-LSTM network uses three layers, with both the input and hidden dimensions set to 128.
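Because the classifier is trained to recognize individual machines, its label space is the set of (machine type, machine) pairs. The sketch below shows how the per-type counts given in the dataset description add up to 41 classes; it assumes one class per machine, and the 0-based machine positions and the helper `build_label_map` are hypothetical stand-ins for the official machine ID strings and the authors' data pipeline.

```python
from typing import Dict, Tuple

# Machines per type in the DCASE 2020 Task 2 development + additional data
# (MIMII: four types with seven machines each; ToyADMOS: seven and six).
MACHINES_PER_TYPE: Dict[str, int] = {
    "fan": 7,
    "pump": 7,
    "slider": 7,
    "valve": 7,
    "ToyCar": 7,
    "ToyConveyor": 6,
}


def build_label_map(counts: Dict[str, int]) -> Dict[Tuple[str, int], int]:
    """Give every (machine type, machine index) pair its own class index.
    The machine index is a hypothetical 0-based position, not the official ID string."""
    label_map: Dict[Tuple[str, int], int] = {}
    for machine_type, n_machines in counts.items():
        for machine_index in range(n_machines):
            label_map[(machine_type, machine_index)] = len(label_map)
    return label_map


labels = build_label_map(MACHINES_PER_TYPE)
print(len(labels))  # 41
```

Since Python dictionaries preserve insertion order, the class indices are contiguous per machine type (fan machines first, ToyConveyor machines last).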

During audio preprocessing, all recordings were processed at a sampling rate of 16 kHz and framed with a Hamming window. The Mel spectrogram was computed with 128 Mel filter banks, a 1024-point FFT, and a fixed hop length of 512 samples; the 1024-point FFT was specified for the valve data and applied by default to all other machine types.
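These framing parameters fix the time and frequency resolution of the Mel spectrogram. The short calculation below, written as a hypothetical `MelConfig` helper, makes that arithmetic explicit. It assumes a 10-second clip and librosa-style centered framing (the signal padded by `n_fft // 2` samples on each side); neither detail is stated in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MelConfig:
    sample_rate: int = 16_000  # Hz
    n_fft: int = 1024          # FFT / window length in samples
    hop_length: int = 512      # frame shift in samples
    n_mels: int = 128          # number of Mel filter banks

    def n_frames(self, n_samples: int, center: bool = True) -> int:
        """Number of STFT frames for a signal of n_samples samples."""
        if center:
            # Padding n_fft // 2 on both sides (assumed) leaves 1 + N // hop frames.
            return 1 + n_samples // self.hop_length
        return 1 + (n_samples - self.n_fft) // self.hop_length

    @property
    def window_ms(self) -> float:
        return 1000 * self.n_fft / self.sample_rate

    @property
    def hop_ms(self) -> float:
        return 1000 * self.hop_length / self.sample_rate

    @property
    def bin_spacing_hz(self) -> float:
        return self.sample_rate / self.n_fft

    @property
    def n_linear_bins(self) -> int:
        return self.n_fft // 2 + 1


cfg = MelConfig()
clip_samples = 10 * cfg.sample_rate  # assumed 10-second clip
print(cfg.n_frames(clip_samples), cfg.window_ms, cfg.hop_ms, cfg.bin_spacing_hz)
```

Under these assumptions each frame spans 64 ms with a 32 ms hop (50% overlap), a clip yields 313 frames (311 without centering), and the 513 linear-frequency bins, spaced 15.625 Hz apart, are pooled into 128 Mel bands.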

The model was trained with the AdamW optimizer, using an initial learning rate of 0.0001 and a cosine annealing learning rate schedule. The loss function is Noise-ArcMix [10], configured with parameters , , and . Training uses a batch size of 64 and runs for 300 epochs. All machine types were processed with the same feature extraction and training configuration, so that the results are comparable across machine types and reproducible.
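The cosine annealing schedule has a simple closed form. The function below is a sketch assuming the learning rate is updated once per epoch over all 300 epochs with a floor of `eta_min = 0`; the text does not state these details. Under those assumptions it corresponds to the closed-form expression documented for PyTorch's `torch.optim.lr_scheduler.CosineAnnealingLR` with `T_max=300`. The name `cosine_annealing_lr` is hypothetical.

```python
import math


def cosine_annealing_lr(epoch: int, base_lr: float = 1e-4,
                        total_epochs: int = 300, eta_min: float = 0.0) -> float:
    """Learning rate at a given epoch under cosine annealing (no warm restarts):
    eta_min + 0.5 * (base_lr - eta_min) * (1 + cos(pi * epoch / total_epochs))."""
    if not 0 <= epoch <= total_epochs:
        raise ValueError("epoch must lie in [0, total_epochs]")
    return eta_min + 0.5 * (base_lr - eta_min) * (1 + math.cos(math.pi * epoch / total_epochs))


for epoch in (0, 75, 150, 225, 300):
    print(epoch, f"{cosine_annealing_lr(epoch):.3e}")
```

The learning rate therefore starts at 1e-4, falls to half that value at epoch 150, and decays smoothly toward zero by epoch 300, which avoids the abrupt drops of a step schedule.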

3.1. Performance Comparison

Table 2 compares ATA-MSTF-Net with current mainstream methods, including IDNN, MobileNetV2, Glow_Aff, ST-gram-MFN, CLP-SCF, TASTgram (NAMix), and TFSTgram (OS-SCL). Compared with the previous state of the art, TFSTgram (OS-SCL), ATA-MSTF-Net improves the average AUC and pAUC by 0.44% and 0.35%, respectively, achieving the best overall results. Its performance on the Slider and Valve categories is on par with the best existing methods, while it outperforms them on most other machine types. The clearest gain appears on ToyConveyor, where existing models generally perform poorly: there, ATA-MSTF-Net achieves a 2.2% improvement. These results support the effectiveness of the proposed method and suggest that it generalizes well to challenging industrial recordings, indicating its practical value for industrial anomaly detection.

Table 3 compares detection performance per machine ID using the mAUC metric. The results show that performance varies considerably among machines of the same type, so the worst-performing machine is often the most challenging part of the task. ATA-MSTF-Net performs particularly well on the ToyConveyor dataset, improving mAUC by 9.89% over the best existing model. Although it trails the best methods slightly on the Pump, Valve, and ToyCar datasets, it remains competitive across all test datasets. These results indicate that ATA-MSTF-Net is robust across individual machines and generalizes well across machine types.

Table 4 compares the number of parameters and the detection performance of our approach with those of state-of-the-art (SOTA) methods. Our system offers a good trade-off between model complexity and performance.

In the design of the Mobile-ATA classifier, we introduced a channel shuffle mechanism to enhance the model’s ability to discriminate anomaly features from multiple machine sources. Systematic experiments showed that this module plays a key role in improving model performance. To further investigate its impact, we conducted an ablation study on the number of groups (g). The results reveal a nonmonotonic relationship between model performance and the number of groups: as g increases from 2 to 8, classification accuracy steadily improves, whereas once g exceeds 16, performance begins to decline. This behavior can be interpreted from a feature-learning perspective:

When the number of groups is small, inter-channel interactions are limited, making it difficult to fully exploit the nonlinear correlations among deep features;

At a moderate number of groups, the model can establish richer cross-channel connections between the two convolution layers, and the moderate feature perturbation enhances feature expression;

When the number of groups is too large, excessive feature perturbation disperses critical feature information and weakens the model’s ability to focus on discriminative features.

As shown in Table 5, setting the number of groups to g = 8 yields the best classification performance, which provides practical guidance for configuring the channel shuffle mechanism.

3.2. Ablation Study

To validate the effectiveness of the proposed modules, we evaluated their impact by varying the classifier inputs and the classifier type. The results are summarized in Table 6.

This study adopts the TF+mel+T-gram+mobile classifier as the baseline, where the TF module is taken from [9] and both the T-gram and the mobile classifier components are inherited from the ST-gram approach. Relative to this baseline, which combines the two methods, the experimental results indicate that the main performance improvement comes from introducing the mobile AT classifier. This module yields clear gains on several major datasets, particularly ToyConveyor and Pump (Table 6).

Although the Time Attention mechanism slightly lowers the metrics on the Slider dataset, its improvements on the other datasets more than compensate for this drop. A possible explanation is that slider sounds lack pronounced periodic characteristics: anomalies are spread across many time frames rather than concentrated in specific periods, so the attention mechanism may over-focus on a few time segments, which harms detection. Overall, the ablation results support the effectiveness and general applicability of the proposed modules for improving anomaly detection performance.