Research on Endogenous Security Defense for Cloud-Edge Collaborative Industrial Control Systems Based on Luenberger Observer

Abstract

1. Introduction

1.1. Research Background

- Real-time performance [4]: ICSs require millisecond-level (and, for some motion-control loops, sub-millisecond) response times to maintain process stability. Complex security software that adds latency through deep packet inspection or signature matching can disrupt control loops, leading to process oscillations or failures.

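- Protocol specificity: OT relies on legacy protocols (e.g., Modbus, DNP3, PROFINET) that lack built-in security features such as authentication and encryption. Firewalls designed for IT traffic typically filter on TCP/IP headers and ports and cannot interpret the application-layer semantics of these protocols, so they often fail to block malicious traffic carried over them.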

- Resource constraints [10]: OT devices (e.g., programmable logic controllers, remote terminal units) typically have limited processing power and memory, making them unable to run heavyweight security tools.

- Adaptive threats [11]: Signature-based IDSs, which rely on known attack patterns, are ineffective against zero-day attacks and against targeted campaigns, such as advanced persistent threats (APTs), tailored to specific ICS environments.

1.2. Research Objectives

- False Alarm Rate (FAR): Minimizing false alarms to avoid unnecessary process shutdowns (a single false alarm in a nuclear power plant could cost millions in downtime).

- Missed Detection Rate (MDR): Ensuring that even low-intensity replay attacks (e.g., short windows of replayed data) are detected, to prevent cumulative system drift.

- Detection Delay: Quantifying the time between attack initiation and alarm triggering, with a target of fewer than 5 control cycles for critical processes.
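The three objectives can be scored from a per-cycle log of alarms and ground-truth attack labels. The sketch below is a minimal illustration under stated assumptions: FAR and MDR are computed per control cycle (some works define them per attack episode instead), the delay counts an alarm on the onset cycle as 0 (some works count it as 1), and all function names are hypothetical.

```python
from typing import List, Optional


def false_alarm_rate(alarms: List[bool], attack_active: List[bool]) -> float:
    """Fraction of attack-free cycles on which an alarm was raised."""
    clean = [a for a, atk in zip(alarms, attack_active) if not atk]
    return sum(clean) / len(clean) if clean else 0.0


def missed_detection_rate(alarms: List[bool], attack_active: List[bool]) -> float:
    """Fraction of attacked cycles on which no alarm was raised."""
    attacked = [a for a, atk in zip(alarms, attack_active) if atk]
    return sum(not a for a in attacked) / len(attacked) if attacked else 0.0


def detection_delay(alarms: List[bool], attack_start: int) -> Optional[int]:
    """Cycles from attack onset to the first alarm at or after it; None if never detected."""
    for k in range(attack_start, len(alarms)):
        if alarms[k]:
            return k - attack_start
    return None


# Hypothetical 8-cycle log: attack active from cycle 4 onward.
alarms = [False, True, False, False, False, True, True, False]
attack = [False, False, False, False, True, True, True, True]
print(false_alarm_rate(alarms, attack))       # 0.25 (1 alarm in 4 clean cycles)
print(missed_detection_rate(alarms, attack))  # 0.5  (2 of 4 attacked cycles missed)
print(detection_delay(alarms, 4))             # 1
```

Against the stated target, a critical process would require `detection_delay(...)` to return a value below 5.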

2. Related Work

2.1. Research on Industrial Control System Security Protection

2.2. Detection of Data Integrity Attacks

2.3. Application of Observers and Residual Analysis in Security Defense

3. Model and Algorithm Design

3.1. Introduction to ICS Security Modeling

3.2. Mathematical Modeling of Cyber-Physical ICS Systems

- $x_k \in \mathbb{R}^n$ is the internal state vector at time step $k$, where $\mathbb{R}^n$ denotes the n-dimensional space of real numbers; it represents system variables such as temperature, pressure, and motor speed.

- $u_k$ denotes the control input vector applied by the controller to the actuators.

- $y_k$ is the measurement output vector collected by sensors and used for control decisions.

- $d_k$ captures exogenous disturbances such as ambient noise, load fluctuations, or supply variability.

- $a_k$ models potential adversarial actions, including sensor spoofing and replay.

- $w_k$ and $v_k$ are zero-mean, mutually uncorrelated Gaussian process and measurement noise, respectively.
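These definitions correspond to a discrete-time linear state-space model. One standard form consistent with them is shown below as an assumption: the disturbance $d_k$ and the attack $a_k$ are taken to enter additively with unit gain (the authors' model may weight them with distribution matrices), the attack is assumed to act on the sensor channel, and the covariance names $Q$ and $R$ are hypothetical.

```latex
\begin{aligned}
x_{k+1} &= A x_k + B u_k + d_k + w_k, & w_k &\sim \mathcal{N}(0, Q),\\
y_k     &= C x_k + v_k,               & v_k &\sim \mathcal{N}(0, R),\\
y_k^{a} &= y_k + a_k,
\end{aligned}
```

Here $y_k^{a}$ is the measurement actually delivered to the controller, equal to $y_k$ whenever $a_k = 0$.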

3.3. State Observability and Sensor Configuration

3.4. Design of Secure State Observers

Robust Observer Design Considerations

3.5. Modeling Replay and Other Attack Types

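- Replay Attack: $y^a_k = y_{k-T}$, where $y^a_k$ is the measurement received by the controller and $T$ is the replay delay; historical data are re-sent to hide deviations.

- Bias Injection: $y^a_k = y_k + b$ with a constant offset $b$; the attacker adds a persistent offset to mislead the controller.

- DoS Attack: $y^a_k$ is missing; the communication channel is jammed or blocked.

- False Data Injection: $y^a_k = y_k + a_k$ with a time-varying $a_k$; dynamic corruption is injected via a compromised sensor.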
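The four attack types can be reproduced on a recorded measurement sequence for testing a detector. This is a minimal sketch assuming scalar measurements and attacks that act only on the sensor channel. The attack window, replay delay, bias value and corruption signal are hypothetical parameters, and using `None` to mark a sample lost to DoS is an assumption of this sketch.

```python
from typing import Callable, List, Optional, Sequence


def replay_attack(y: Sequence[float], start: int, delay: int) -> List[float]:
    """From `start` on, the controller receives y[k - delay] (recorded data) instead of y[k]."""
    if delay > start:
        raise ValueError("replayed samples must already have been recorded")
    return [y[k - delay] if k >= start else y[k] for k in range(len(y))]


def bias_injection(y: Sequence[float], start: int, bias: float) -> List[float]:
    """Persistent constant offset added from `start` on."""
    return [v + bias if k >= start else v for k, v in enumerate(y)]


def dos_attack(y: Sequence[float], start: int, stop: int) -> List[Optional[float]]:
    """Samples in [start, stop) never arrive; None marks a dropped measurement."""
    return [None if start <= k < stop else v for k, v in enumerate(y)]


def false_data_injection(y: Sequence[float], start: int,
                         a: Callable[[int], float]) -> List[float]:
    """Time-varying corruption a(k) added from `start` on."""
    return [v + a(k) if k >= start else v for k, v in enumerate(y)]


y = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
print(replay_attack(y, start=3, delay=3))       # [0.0, 1.0, 2.0, 0.0, 1.0, 2.0]
print(bias_injection(y, start=4, bias=0.5))     # [0.0, 1.0, 2.0, 3.0, 4.5, 5.5]
print(dos_attack(y, start=2, stop=4))           # [0.0, 1.0, None, None, 4.0, 5.0]
print(false_data_injection(y, 3, lambda k: 2.0 * (k - 3)))  # [0.0, 1.0, 2.0, 3.0, 6.0, 9.0]
```

In the replay example the controller sees steps 0 to 2 again, so any deviation the plant develops from step 3 onward is hidden, which is the behavior the replay model describes.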

3.6. Residual Generation and Detection Algorithm

Algorithm 1: Observer with Real-Time Residual Monitoring
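The following is a minimal Python sketch of an observer with real-time residual monitoring, given for illustration only and not as the authors' Algorithm 1. It assumes a single-output plant, the standard Luenberger update $\hat{x}_{k+1} = A\hat{x}_k + Bu_k + L(y_k - C\hat{x}_k)$, the residual $r_k = y_k - C\hat{x}_k$ computed on the measurement the observer actually receives, and a fixed threshold $\tau$. The matrices, gain $L$, threshold, and all function names are hypothetical; pure Python is used so no third-party packages are needed.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def mat_vec(M: Matrix, v: Sequence[float]) -> Vector:
    return [dot(row, v) for row in M]


def vec_add(*vs: Sequence[float]) -> Vector:
    return [sum(parts) for parts in zip(*vs)]


def scale(v: Sequence[float], s: float) -> Vector:
    return [s * x for x in v]


def error_matrix(A: Matrix, L: Sequence[float], C: Sequence[float]) -> Matrix:
    """A - L C for a single-output system (L is a column, C is a row)."""
    return [[A[i][j] - L[i] * C[j] for j in range(len(C))] for i in range(len(A))]


def spectral_radius_2x2(M: Matrix) -> float:
    """Largest |eigenvalue| of a 2x2 matrix; the estimation error decays iff it is < 1."""
    tr = M[0][0] + M[1][1]
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    disc = tr * tr - 4.0 * det
    if disc >= 0.0:
        s = math.sqrt(disc)
        return max(abs(tr + s), abs(tr - s)) / 2.0
    return math.sqrt(det)  # complex-conjugate pair: |lambda|^2 = det


def simulate_plant(A, B, C, x0, inputs, proc_std=0.0, meas_std=0.0, seed=0) -> Vector:
    """y_k = C x_k + v_k for x_{k+1} = A x_k + B u_k + w_k (scalar input and output)."""
    rng = random.Random(seed)
    x, ys = list(x0), []
    for u in inputs:
        ys.append(dot(C, x) + rng.gauss(0.0, meas_std))
        w = [rng.gauss(0.0, proc_std) for _ in x]
        x = vec_add(mat_vec(A, x), scale(B, u), w)
    return ys


def run_observer(A, B, C, L, xhat0, inputs, measurements, tau) -> Tuple[Vector, List[bool]]:
    """Luenberger observer that flags an alarm whenever |r_k| > tau."""
    xhat, residuals, alarms = list(xhat0), [], []
    for u, y in zip(inputs, measurements):
        r = y - dot(C, xhat)  # residual r_k = y_k - C xhat_k
        residuals.append(r)
        alarms.append(abs(r) > tau)
        # xhat_{k+1} = A xhat_k + B u_k + L r_k
        xhat = vec_add(mat_vec(A, xhat), scale(B, u), scale(L, r))
    return residuals, alarms


A = [[0.9, 0.1], [0.0, 0.8]]  # hypothetical plant
B = [0.0, 1.0]
C = [1.0, 0.0]
L = [0.5, 0.2]                # hypothetical observer gain
print(spectral_radius_2x2(error_matrix(A, L, C)))  # about 0.741, so the error decays
u = [1.0] * 100               # constant input over 100 steps
y = simulate_plant(A, B, C, [0.0, 0.0], u)
y_att = [v + 1.0 if k >= 50 else v for k, v in enumerate(y)]  # bias injected from step 50
res, alarms = run_observer(A, B, C, L, [0.0, 0.0], u, y_att, tau=0.1)
print(alarms.index(True))     # 50
```

In the noise-free, attack-free case the estimation error obeys $e_{k+1} = (A - LC)e_k$, which is why the sketch checks that the spectral radius of $A - LC$ is below 1 before trusting the residual.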

4. Theoretical Analysis and Performance Evaluation

4.1. Overview and Motivation

4.2. Estimation Error Dynamics and Stability Analysis

4.3. Residual Analysis Under Normal Conditions

4.4. Residual Behavior Under Replay Attacks

4.5. Detection Algorithms and Performance Metrics

4.6. Detection Delay and Sensitivity Analysis

4.7. Robustness Considerations

- Model mismatch: Inaccurate system matrices A, B, or C distort the observer's predictions, producing nonzero residuals that can trigger false alarms even in the absence of an attack.

- Sensor placement: Redundant and strategically located sensors improve observability and residual quality.

- Parameter tuning: The detection thresholds (e.g., H) and the drift term must be calibrated on historical data.

- Correlated noise: The assumption of i.i.d. Gaussian noise may not hold in practice.
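The threshold H and drift term named above appear to be the two parameters of a cumulative sum (CUSUM) test. Assuming a one-sided CUSUM on the residual magnitude (an assumption, as is the reset-after-alarm rule), a minimal sketch is shown below. Unlike a per-step threshold, it accumulates small persistent deviations, which is what low-intensity attacks produce.

```python
from typing import List, Sequence, Tuple


def cusum(residuals: Sequence[float], drift: float, H: float,
          reset_on_alarm: bool = True) -> Tuple[List[float], List[bool]]:
    """One-sided CUSUM on |r_k|: S_k = max(0, S_{k-1} + |r_k| - drift); alarm if S_k > H."""
    s = 0.0
    stats: List[float] = []
    alarms: List[bool] = []
    for r in residuals:
        s = max(0.0, s + abs(r) - drift)  # drift absorbs the normal residual level
        alarm = s > H
        stats.append(s)
        alarms.append(alarm)
        if alarm and reset_on_alarm:
            s = 0.0  # restart accumulation after each alarm (a design choice)
    return stats, alarms


stats, alarms = cusum([0.5, 0.25, 2.0, 2.0, 2.0, 0.0], drift=1.0, H=1.5)
print(stats)   # [0.0, 0.0, 1.0, 2.0, 1.0, 0.0]
print(alarms)  # [False, False, False, True, False, False]
```

As a rule of thumb, the drift is placed slightly above the typical attack-free $|r_k|$ so the statistic stays near zero in normal operation, while H trades detection delay against false alarms: a larger H needs more accumulated evidence before alarming.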

5. Simulation Verification

5.1. Simulation Setup

- System and Observer Initialization: The simulation was run for 100 discrete time steps. The observer's initial estimate was set equal to the system's initial state, simulating a scenario in which the observer starts with perfect knowledge; this removes any initial estimation-error transient and isolates the effects of subsequent attacks. A constant control input was applied throughout the simulation to keep the system excited.

- Noise and Process Parameters: The system dynamics were subject to zero-mean Gaussian process noise and measurement noise, each with a fixed covariance matrix. These covariances were chosen to represent typical levels of process disturbances and sensor inaccuracies in industrial settings.

- Detection Threshold: Anomaly detection relies on a residual-based test: an attack is flagged when the residual magnitude $|r_k|$ exceeds a predefined threshold $\tau$. To balance detection sensitivity against the false alarm rate, the threshold was derived from the statistics of the residual during normal (attack-free) operation. It was set to $\tau = 3\sigma_r$, where $\sigma_r$ is the standard deviation of the residual under noise alone, i.e., a 3-sigma rule. If the attack-free residual is zero-mean Gaussian, the per-step false positive probability is about 0.27%, below 0.3%.
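The 3-sigma calibration and its false-alarm figure can be checked numerically. This is a minimal sketch assuming a scalar, zero-mean Gaussian attack-free residual; the residual standard deviation of 0.05, the sample sizes, the seed, and the function names are hypothetical.

```python
import math
import random
import statistics
from typing import Sequence


def three_sigma_threshold(clean_residuals: Sequence[float]) -> float:
    """tau = 3 * sigma_r, with sigma_r estimated from attack-free residuals."""
    return 3.0 * statistics.stdev(clean_residuals)


def gaussian_false_alarm_prob(k_sigma: float = 3.0) -> float:
    """Per-step P(|r_k| > k_sigma * sigma_r) for a zero-mean Gaussian residual."""
    return math.erfc(k_sigma / math.sqrt(2.0))


def empirical_false_alarm_rate(residuals: Sequence[float], tau: float) -> float:
    return sum(abs(r) > tau for r in residuals) / len(residuals)


def prob_any_false_alarm(p_step: float, n_steps: int) -> float:
    """P(at least one false alarm in n_steps), assuming independent steps."""
    return 1.0 - (1.0 - p_step) ** n_steps


rng = random.Random(42)
calibration = [rng.gauss(0.0, 0.05) for _ in range(20000)]  # hypothetical sigma_r = 0.05
tau = three_sigma_threshold(calibration)
fresh = [rng.gauss(0.0, 0.05) for _ in range(200000)]        # new attack-free data
print(f"tau = {tau:.4f}")
print(f"theoretical per-step FAR = {gaussian_false_alarm_prob():.5f}")
print(f"empirical per-step FAR   = {empirical_false_alarm_rate(fresh, tau):.5f}")
print(f"P(>=1 false alarm in 100 steps) = "
      f"{prob_any_false_alarm(gaussian_false_alarm_prob(), 100):.3f}")
```

The theoretical per-step value is about 0.0027. Over a 100-step run, the chance of at least one false alarm is about 0.24 under an independence assumption; observer residuals are usually autocorrelated, so this figure is only an approximation.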

5.2. System Simulation and Defense Model

5.3. Attack Scenarios and Detection Analysis

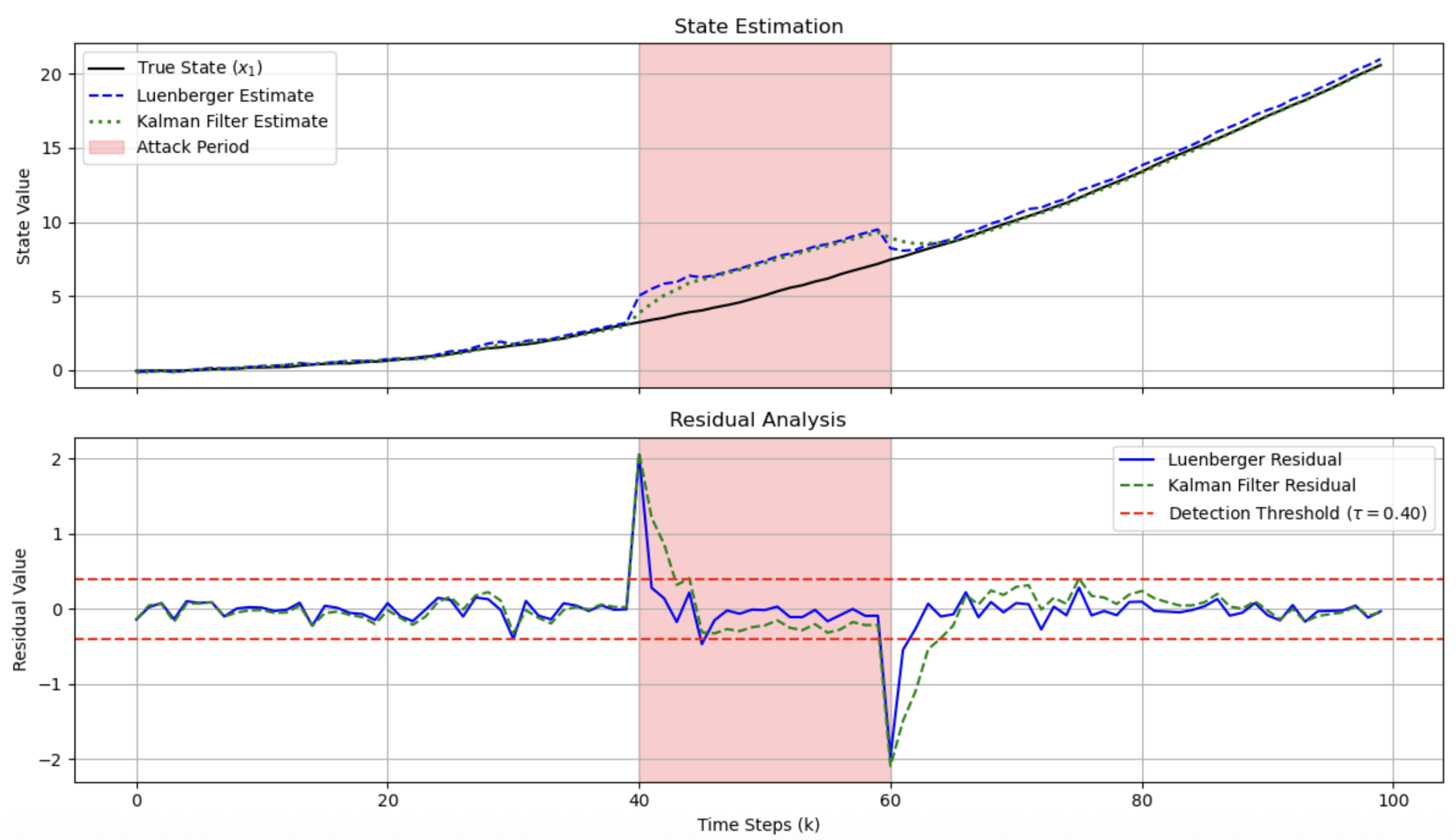

5.3.1. False Data Injection (FDI) Attack

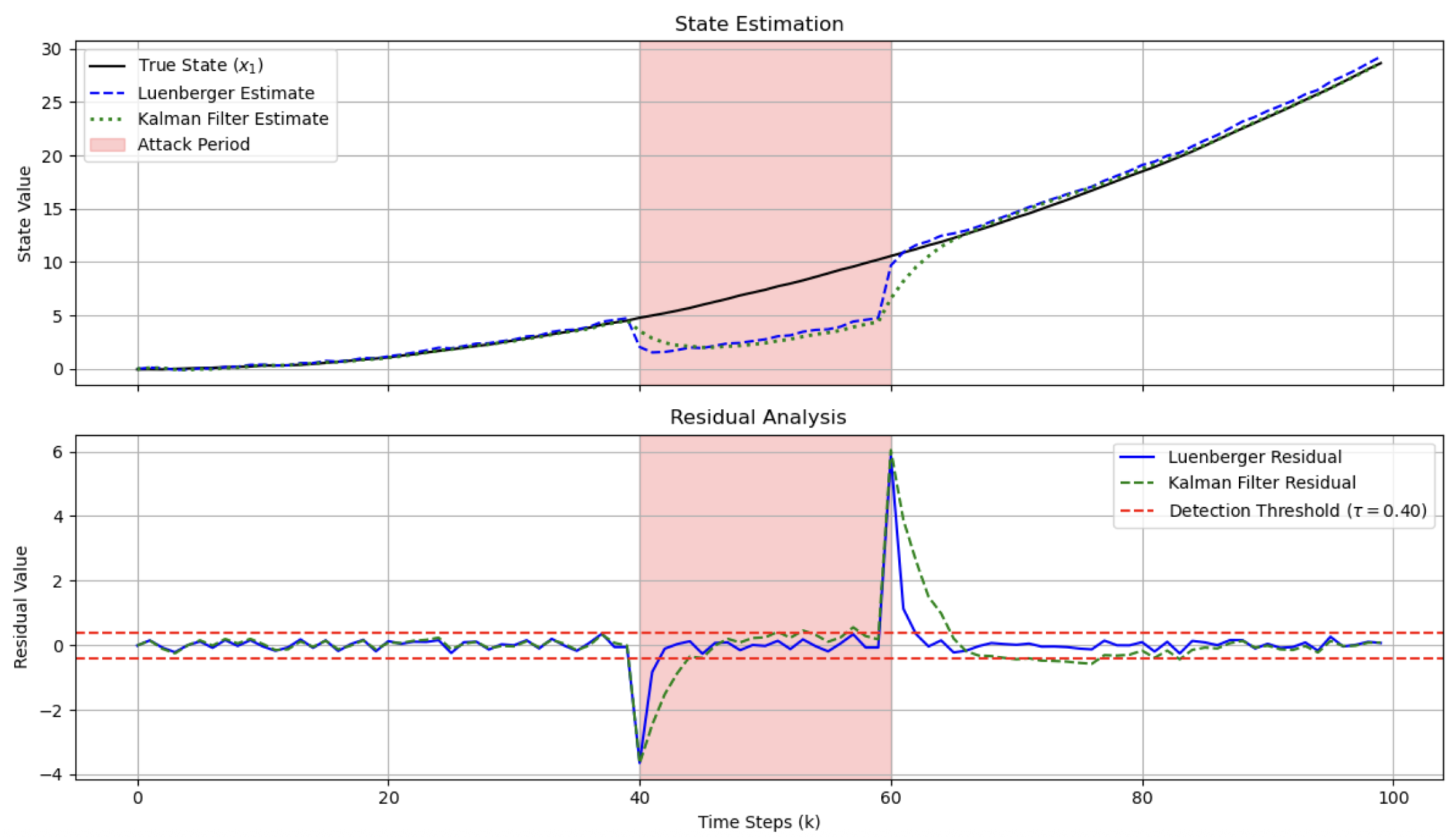

5.3.2. Replay Attack

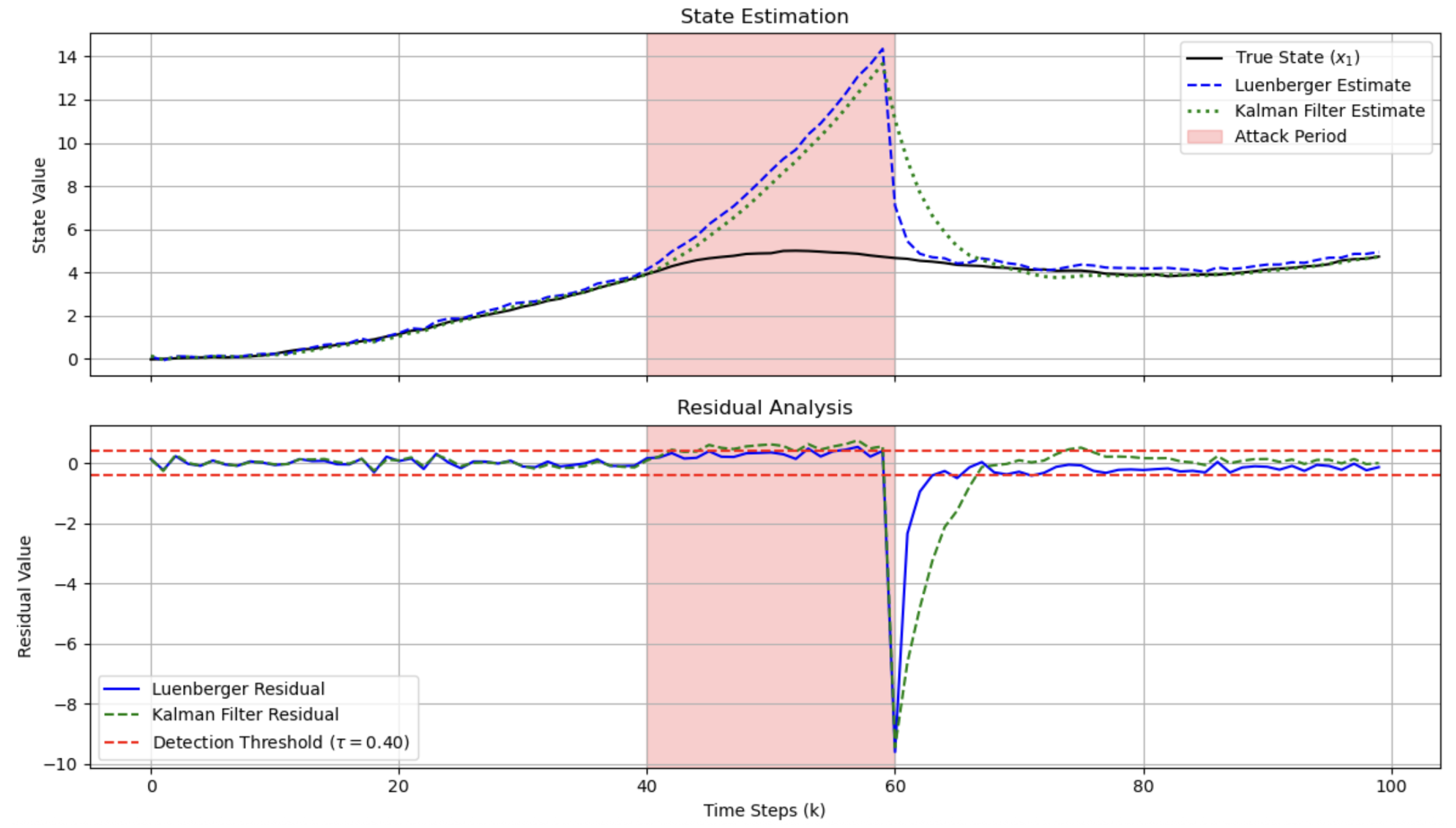

5.3.3. Covert Attack

5.4. Overall Performance and Discussion

6. Conclusions

Limitations and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Drias, Z.; Serhrouchni, A.; Vogel, O. Analysis of cyber security for industrial control systems. In Proceedings of the 2015 International Conference on Cyber Security of Smart Cities, Industrial Control System and Communications (SSIC), Shanghai, China, 5–7 August 2015; pp. 1–8.

2. Stouffer, K.; Falco, J.; Scarfone, K. Guide to industrial control systems (ICS) security. NIST Spec. Publ. 2011, 800, 16.

3. Cárdenas, A.A.; Amin, S.; Sastry, S. Research challenges for the security of control systems. HotSec 2008, 5, 1158.

4. Koay, A.M.; Ko, R.K.L.; Hettema, H.; Radke, K. Machine learning in industrial control system (ICS) security: Current landscape, opportunities and challenges. J. Intell. Inf. Syst. 2023, 60, 377–405.

5. Moreno Escobar, J.J.; Morales Matamoros, O.; Tejeida Padilla, R.; Lina Reyes, I.; Quintana Espinosa, H. A comprehensive review on smart grids: Challenges and opportunities. Sensors 2021, 21, 6978.

6. Langner, R. Stuxnet: Dissecting a cyberwarfare weapon. IEEE Secur. Priv. Mag. 2011, 9, 49–51.

7. Yu, Y.; Yang, W.; Ding, W.; Zhou, J. Reinforcement learning solution for cyber-physical systems security against replay attacks. IEEE Trans. Inf. Forensics Secur. 2023, 18, 2583–2595.

8. Tian, Y.; Nogales, A.F.R. A Survey on Data Integrity Attacks and DDoS Attacks in Cloud Computing. In Proceedings of the 2023 IEEE 13th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 8–11 March 2023; pp. 788–794.

9. Asiri, M.; Saxena, N.; Gjomemo, R.; Burnap, P. Understanding indicators of compromise against cyber-attacks in industrial control systems: A security perspective. ACM Trans. Cyber-Phys. Syst. 2023, 7, 1–33.

10. Alsabbagh, W.; Langendörfer, P. Security of programmable logic controllers and related systems: Today and Tomorrow. IEEE Open J. Ind. Electron. Soc. 2023, 4, 659–693.

11. Iyer, K.I. From Signatures to Behavior: Evolving Strategies for Next-Generation Intrusion Detection. Eur. J. Adv. Eng. Technol. 2021, 8, 165–171.

12. Wu, J. Cyberspace endogenous safety and security. Engineering 2022, 15, 179–185.

13. Pasqualetti, F.; Dörfler, F.; Bullo, F. Attack detection and identification in cyber-physical systems. IEEE Trans. Autom. Control 2013, 58, 2715–2729.

14. Luenberger, D.G. Observing the state of a linear system. IEEE Trans. Mil. Electron. 1964, 8, 74–80.

15. Niazi, M.U.B.; Cao, J.; Sun, X.; Das, A.; Johansson, K.H. Learning-based design of Luenberger observers for autonomous nonlinear systems. arXiv 2022, arXiv:2210.01476.

16. Specht, F.; Otto, J. Efficient Machine Learning-Based Security Monitoring and Cyberattack Classification of Encrypted Network Traffic in Industrial Control Systems. In Proceedings of the 2024 IEEE 29th International Conference on Emerging Technologies and Factory Automation (ETFA), Padova, Italy, 10–13 September 2024; pp. 1–7.

17. Uysal, E.; Kaya, O.; Ephremides, A.; Gross, J.; Codreanu, M.; Popovski, P.; Assaad, M.; Liva, G.; Munari, A.; Soret, B.; et al. Semantic communications in networked systems: A data significance perspective. IEEE Netw. 2022, 36, 233–240.

18. Al-Muntaser, B.; Mohamed, M.A.; Tuama, A.Y. Real-Time Intrusion Detection of Insider Threats in Industrial Control System Workstations Through File Integrity Monitoring. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 326–333.

19. Çelebi, M.; Özbilen, A.; Yavanoğlu, U. A comprehensive survey on deep packet inspection for advanced network traffic analysis: Issues and challenges. Niğde Ömer Halisdemir Üniv. Mühendis. Bilim. Derg. 2023, 12, 1–29.

20. Guan, P.; Iqbal, N.; Davenport, M.A.; Masood, M. Solving inverse problems with model mismatch using untrained neural networks within model-based architectures. arXiv 2024, arXiv:2403.04847.

21. Yan, J.; Tang, Y.; Tang, B.; He, H.; Sun, Y. Power grid resilience against false data injection attacks. In Proceedings of the 2016 IEEE Power and Energy Society General Meeting (PESGM), Boston, MA, USA, 17–21 July 2016; pp. 1–5.

22. Kim, M.; Jeon, S.; Cho, J.; Gong, S. Data-Driven ICS Network Simulation for Synthetic Data Generation. Electronics 2024, 13, 1920.

23. Ali, B.S.; Ullah, I.; Al Shloul, T.; Khan, I.A.; Khan, I.; Ghadi, Y.Y.; Abdusalomov, A.; Nasimov, R.; Ouahada, K.; Hamam, H. ICS-IDS: Application of big data analysis in AI-based intrusion detection systems to identify cyberattacks in ICS networks. J. Supercomput. 2024, 80, 7876–7905.

24. Wang, J.; Zhang, W.; Shi, Y.; Duan, S.; Liu, J. Industrial big data analytics: Challenges, methodologies, and applications. arXiv 2018, arXiv:1807.01016.

25. Ahmad, S.; Ahmed, H. Robust intrusion detection for resilience enhancement of industrial control systems: An extended state observer approach. IEEE Trans. Ind. Appl. 2023, 59, 7735–7743.

26. Kordestani, M.; Saif, M. Observer-based attack detection and mitigation for cyberphysical systems: A review. IEEE Syst. Man Cybern. Mag. 2021, 7, 35–60.

27. Kim, T.; Shim, H.; Cho, D.D. Distributed Luenberger observer design. In Proceedings of the 2016 IEEE 55th Conference on Decision and Control (CDC), Las Vegas, NV, USA, 12–14 December 2016; pp. 6928–6933.

28. Cong, X.; Zhu, H.; Cui, W.; Zhao, G.; Yu, Z. Critical Observability of Stochastic Discrete Event Systems Under Intermittent Loss of Observations. Mathematics 2025, 13, 1426.

| Attack Scenario | Detection Outcome | Detection Delay (Time Steps) |
|---|---|---|
| False Data Injection (FDI) | Success | 1 |
| Replay Attack | Success | 1 |
| Covert Attack | Fail | N/A |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guan, L.; Tao, C.; Chen, P. Research on Endogenous Security Defense for Cloud-Edge Collaborative Industrial Control Systems Based on Luenberger Observer. Mathematics 2025, 13, 2703. https://doi.org/10.3390/math13172703

Guan L, Tao C, Chen P. Research on Endogenous Security Defense for Cloud-Edge Collaborative Industrial Control Systems Based on Luenberger Observer. Mathematics. 2025; 13(17):2703. https://doi.org/10.3390/math13172703

Chicago/Turabian Style: Guan, Lin, Ci Tao, and Ping Chen. 2025. "Research on Endogenous Security Defense for Cloud-Edge Collaborative Industrial Control Systems Based on Luenberger Observer" Mathematics 13, no. 17: 2703. https://doi.org/10.3390/math13172703

APA Style: Guan, L., Tao, C., & Chen, P. (2025). Research on Endogenous Security Defense for Cloud-Edge Collaborative Industrial Control Systems Based on Luenberger Observer. Mathematics, 13(17), 2703. https://doi.org/10.3390/math13172703