1. Introduction

User-generated content has emerged as a critical asset for understanding the customer experience and making informed decisions across various domains [1,2]. Among the many types of digital content, text-based reviews provide a valuable perspective on individuals’ perceptions, expectations, and evaluations. Despite their richness, these reviews are often underutilized as a structured source of knowledge that could support the development of more responsive management strategies [3].

In the context of cultural heritage, analyzing visitor reviews is guided by the principle highlighted in [4], which states that effective interpretation is vital for both managing and conserving heritage sites and ensuring sustainable tourism. These publicly shared comments function not only as feedback but also as cultural messages in themselves, expressing interpretation, criticism, emotional response, and suggestions for change. Building on Moscardo’s notion of “mindful visitors” [4], this study approaches these digital traces as deliberate and reflective acts. Content analysis of these reviews can yield valuable insights into tourist behavior and inform adaptive strategies for both tourists and local authorities.

To fully leverage text-based reviews, we focus our research on advanced techniques in natural language processing (NLP). NLP has evolved significantly, progressing from traditional machine learning methods to sophisticated transformer-based architectures, such as those used in large language models (LLMs) [5,6]. Unlike conventional deep learning approaches, transformer-based models excel in capturing richer contextual relationships, supporting parallel sequence processing and modeling long-term dependencies [7]. The availability of pre-trained models, such as OpenAI’s GPT or Google’s Gemini, has expanded the applicability of LLMs across domains. These models enable the efficient execution of various NLP tasks, including summarization, topic modeling, and sentiment analysis. A common category of transformer models includes BERT and its variants, which are often designed to address specific limitations of the original BERT [8]. For instance, DistilBERT [9], a lightweight version of BERT, is frequently used for sentiment analysis. BERTopic [10] is widely adopted for topic modeling, while recent models like FASTopic [11] are designed to make the task more efficient. The related work section reviews these NLP tasks and the transformer-based models that address them. Many existing studies integrate sentiment analysis into topic modeling [12] or apply summarization at the topic level [13], while others use these techniques separately [14]. However, they do not provide a single integrated platform with a richer setting for review analysis that serves different users and scenarios.

This paper proposes a general approach to analyzing text reviews that integrates topic modeling, sentiment analysis, and summarization from various perspectives. Unlike existing approaches, which provide these features only partially, we provide a richer setting for review analysis at the level of both a particular site and a theme: positive and negative summaries at the site level, common topics, visitor sentiments towards key topics, and, especially, user-defined topics for advanced site exploration. The proposed framework is validated through a use case focusing on Kotor (Montenegro), a UNESCO World Heritage Site. The city’s layered history and evolving urban landscape provide a rich setting for exploring how digital traces intersect with official heritage values. Using Google Maps reviews, this study explores how visitors experience and evaluate the city, and whether their views align with the site’s UNESCO-designated criteria of outstanding universal value (OUV) [15]. To implement the general framework, we have developed VisitorLens AI, a tailored system that performs text analysis at the site and topic level. It combines DistilBERT for sentiment analysis, FASTopic for topic modeling, and the Gemini Flash 2.0 model for abstractive summarization [16], creating a comprehensive tool for both tourists and local authorities to derive contextually meaningful insights from visitor reviews.

The contribution of this research is two-fold, comprising both conceptual novelties through the development of a general AI review analysis framework, and practical implications demonstrated via its specific realization tailored for cultural heritage management, VisitorLens AI:

The general AI framework for text review analysis introduces an integrated approach that uniquely combines NLP components for text summarization, topic modeling, and sentiment analysis. By combining multiple NLP techniques within a unified structure, it enables the comprehensive and insightful understanding of user reviews.

In addition to its integrative analytical capacity, the framework is designed to be domain-independent. It allows straightforward customization, enabling applications across various contexts that involve text-based feedback, ranging from product and service evaluation to tourism and cultural heritage.

The practical relevance of the proposed framework is demonstrated through its domain-specific implementation, VisitorLens AI, designed for the context of cultural heritage management. As part of an open-source initiative, its full implementation and data are available on GitHub at https://github.com/MateaLukiccc/Location-Based-Review-Insights (accessed on 24 July 2025), ensuring transparency, reproducibility, and ease of adoption in both academic research and practical applications.

VisitorLens AI responds to the informational needs of both tourists and cultural heritage authorities. For tourists, we have ensured that the extracted insights are both meaningful and context-specific via the evaluation of our framework (Q1). As the system facilitates meaningful synthesis of individual impressions, it encourages more participatory forms of heritage experience. For institutions, we have also conducted expert evaluations to ensure that these insights are actionable (Q2) and relevant for evaluation criteria defined by UNESCO’s outstanding universal value framework (Q3). VisitorLens AI empowers visitors as co-creators of cultural meaning while enhancing institutional responsiveness. Finally, the research is of interest to the NLP and LLM communities as a step forward in smart tourism.

This work is motivated not only by academic inquiry but also by a personal connection to the site. It reflects the belief that effective heritage management in the digital age must integrate public sentiment, institutional objectives, and technological innovation in a balanced and inclusive manner.

The remainder of this paper is organized as follows: Section 2 reviews related work, and Section 3 presents the theoretical background of the NLP techniques applied. In Section 4, we describe the methodology, including both the general framework and its application in the heritage context. In Section 5, we present evaluation results, both quantitative (using standard metrics) and qualitative (expert-based). Section 6 discusses limitations, and Section 7 concludes the paper.

2. Related Work

A recent survey on emerging trends in NLP [14] shows the dominance of deep learning (DL) architectures over traditional machine learning (ML) techniques. The latest advances in NLP [17] have significantly enhanced the effectiveness of text review analysis, especially through DL architectures and pre-trained language models [18,19]. Techniques built on transformer models, such as BERT, provide a context-aware understanding of texts [8], handling complexities such as sarcasm, negation, and aspect-specific sentiment. Fine-tuned on datasets from platforms like Amazon or Yelp [20,21], these models offer nuanced interpretations of feedback.

Building on these advances, topic modeling has emerged as a powerful technique for uncovering latent themes within large collections of reviews [22]. As an unsupervised machine learning method, it identifies clusters of related words and recurring patterns, transforming unstructured text into interpretable topics. By revealing the hidden thematic structure in customer feedback, topic modeling serves as a decision-support tool, aiding in recommendations and highlighting user concerns, emerging trends, and shifts in consumer priorities, without relying on pre-labeled data [23]. In practice, conventional topic modeling techniques like latent Dirichlet allocation (LDA) and non-negative matrix factorization (NMF) are commonly applied to review datasets to uncover underlying themes in customer feedback. While these methods can extract interpretable topics from noisy and diverse data, they suffer from scalability and efficiency limitations [22], largely due to their reliance on model-specific derivations [11]. To overcome these challenges, neural topic models (NTMs) were introduced. Unlike traditional approaches, NTMs leverage modern GPU acceleration, enabling more efficient processing of large datasets. These models are highly diverse, with variational autoencoder (VAE)-based and clustering-based techniques being the most prominent today. However, each has its trade-offs: VAE-based models, such as CombinedTM [24], which extends the earlier ProdLDA model [25], are effective but require higher computational power, which can significantly limit their practicality for real-time or resource-constrained applications. In contrast, clustering-based methods like BERTopic offer greater efficiency but often struggle with topic collapsing, leading to redundant or repetitive topics [26]. To address these limitations, we integrate FASTopic [11] into our framework. FASTopic leverages semantic relations among document, topic, and word embeddings to efficiently uncover latent topics, offering a scalable solution that balances effectiveness, coherence, and adaptability across diverse scenarios.

Topic modeling becomes even more powerful when combined with complementary techniques like sentiment analysis, which has been widely used to assess user opinions about products [12]. Similarly, recent research [27] has combined BERTopic for topic modeling with RoBERTa for sentiment analysis to provide a comprehensive analysis of user-generated content. Integrating these approaches provides deeper insights by revealing not just what topics users discuss, but also how they feel about specific aspects of a product or service. This combined analysis moves beyond general sentiment classification to uncover fine-grained attitudes toward particular themes, enabling more targeted and actionable business insights. In addition to traditional machine learning and deep learning methods, recent work has emphasized the role of large language models (LLMs) and pre-trained transformers in sentiment analysis [28]. Among these, BERT-based models, including RoBERTa and DistilBERT, have become the most commonly used for sentiment analysis tasks.

Another valuable technique is text summarization, which can be effectively combined with topic modeling to enhance the interpretability of results. By integrating LLMs with topic models, researchers can automatically generate descriptive titles and concise summaries for discovered topics [29]. This approach not only improves topic interpretability but also facilitates quicker insights from large volumes of text. Furthermore, summarization can be applied at the topic level to distill key user opinions from reviews associated with specific themes [13]. This enables a more granular understanding of sentiment patterns within each topic, complementing traditional sentiment analysis by providing contextualized overviews of customer feedback. In addition, ref. [30] explores an alternative direction in which LLMs first generate summaries that are then input into topic models like BERTopic, aiming to enhance topic modeling performance.

A wide variety of NLP techniques have been successfully integrated into end-to-end systems (e.g., the Amazon AI Product Review Summarizer) to deliver actionable insights and user value. These systems often combine topic modeling, sentiment analysis, and summarization, as previously discussed, to enable more sophisticated text analysis and decision-making. To our knowledge, there is a lack of academic research on AI-driven review analysis that explores joint topic–sentiment–summarization approaches.

Large Language Models in Tourism and Cultural Heritage

The increasing sophistication of NLP techniques has opened new possibilities for domain-specific applications. One area where these methods have shown a particularly strong impact is the tourism and hospitality sector, where vast volumes of reviews, ratings, and feedback are produced across platforms like TripAdvisor and Airbnb.

Recent research in tourism increasingly applies large language models (LLMs) to automate sentiment classification, aspect-based summarization, and topic modeling on user-generated content. For instance, studies have explored combining BERT with traditional classifiers for hotel review sentiment analysis [31] and evaluated the performance and cost-effectiveness of advanced LLMs like GPT-4o-mini before and after fine-tuning [32]. Others have used pre-trained models such as ChatGPT to summarize feedback across hotel attributes [33] and proposed training-free summarization approaches using retrieval-augmented generation (RAG) and long-context LLMs to handle large volumes of review data [34]. These efforts collectively demonstrate how LLMs support deeper insight into guest experiences and improve decision-making in the hospitality sector.

In the field of cultural heritage, LLMs have enabled personalized museum guides and improved website usability by offering tailored recommendations and contextualized content [4,35]. However, ethical concerns remain. Studies show LLMs may distort cultural narratives or reflect biases, underlining the need for careful evaluation and human oversight [36]. As cultural narratives become increasingly shaped by automated processes, it is important to combine automated NLP techniques with human expertise to ensure that AI-assisted cultural heritage systems remain accurate, inclusive, and contextually appropriate.

3. Theoretical Background

In this section, we present the theoretical foundations, as well as the strengths and weaknesses, of the three core models underpinning our AI framework. For each model, we survey its design principles, detail its key equations, and discuss the trade-offs that motivate its use in a unified pipeline.

3.1. Topic Modeling with FASTopic

The FASTopic model [11] is a recent topic model designed with efficiency in mind. It integrates two key components to achieve effective topic discovery: dual semantic-relation reconstruction (DSR) and the embedding transport plan (ETP). DSR reconstructs documents from latent topic and word distributions within a unified semantic space where document, topic, and word embeddings reside. Specifically, each of the $N$ documents, $\mathbf{x}_i$, is reconstructed as a weighted combination of topics and words, expressed as follows:

$$\hat{\mathbf{x}}_i = \boldsymbol{\beta}^{\top}\boldsymbol{\theta}_i$$

Here, $\boldsymbol{\theta}_i$ represents the document-specific topic distribution for document $i$, and $\boldsymbol{\beta}$ is the topic-specific word distribution matrix. The DSR objective is to minimize the error between the original document representation and its reconstruction across all documents, using a negative log-likelihood:

$$\mathcal{L}_{\mathrm{DSR}} = -\frac{1}{N}\sum_{i=1}^{N} \mathbf{x}_i^{\top} \log\left(\boldsymbol{\beta}^{\top}\boldsymbol{\theta}_i\right)$$

Minimizing $\mathcal{L}_{\mathrm{DSR}}$ encourages the model to learn topic and word embeddings such that their combination can effectively reconstruct the input documents, thereby ensuring the semantic meaningfulness and distinctiveness of the learned topics. The formulas used in this section were adapted from Wu et al. [11].

This DSR process is combined with the embedding transport plan (ETP). While DSR learns the document–topic ($\boldsymbol{\theta}_i$) and topic–word ($\boldsymbol{\beta}$) distributions through reconstruction, ETP provides a mechanism to explicitly model and regularize the underlying semantic relations that give rise to these distributions. ETP frames the document-to-topic and topic-to-word relationships as entropic regularized optimal transport problems, solved to find transport plans $\boldsymbol{\pi}^{*}$ and $\boldsymbol{\phi}^{*}$ [11], where $K$ is the number of topics and $V$ is the number of word embeddings:

$$\boldsymbol{\pi}^{*} \in \mathbb{R}_{+}^{N \times K}, \qquad \boldsymbol{\phi}^{*} \in \mathbb{R}_{+}^{K \times V}$$

The document–topic distributions $\boldsymbol{\theta}_i$ and topic–word matrix $\boldsymbol{\beta}$ used in the DSR objective are directly derived from these optimal transport plans ($\boldsymbol{\pi}^{*}$ and $\boldsymbol{\phi}^{*}$). The ETP framework introduces its own objective, $\mathcal{L}_{\mathrm{ETP}}$, which minimizes the total transport cost based on these learned plans, with $C^{(d,t)}_{ik}$ and $C^{(t,w)}_{kj}$ as the transport cost matrix elements between document $i$ and topic $k$ and between topic $k$ and word $j$, respectively:

$$\mathcal{L}_{\mathrm{ETP}} = \sum_{i=1}^{N}\sum_{k=1}^{K} C^{(d,t)}_{ik}\,\pi^{*}_{ik} + \sum_{k=1}^{K}\sum_{j=1}^{V} C^{(t,w)}_{kj}\,\phi^{*}_{kj}$$

The overall objective function for FASTopic is the minimization of the sum of the ETP objective and DSR objective:

$$\min \; \mathcal{L}_{\mathrm{DSR}} + \mathcal{L}_{\mathrm{ETP}}$$

This joint minimization ensures that the model learns embeddings that allow for accurate document reconstruction ($\mathcal{L}_{\mathrm{DSR}}$) while simultaneously regularizing semantic relationships via optimal transport ($\mathcal{L}_{\mathrm{ETP}}$), thereby effectively mitigating relation bias and leading to high-quality topic discovery. One of the main advantages is that this objective is extremely simple compared to complex encoder–decoder architectures, and it optimizes only four parameters: topic and word embeddings and their weights. However, a key limitation of the model is its strong reliance on document embeddings generated by a transformer model. If these embeddings fail to capture the semantic nuances of the documents accurately, the quality of the learned topics and their distributions will be affected.
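To make the transport-plan idea more concrete, the following is a minimal sketch of entropic regularized optimal transport solved with Sinkhorn iterations, applied to document-to-topic relations. It is not the FASTopic implementation: the toy embeddings, the uniform marginals, the squared Euclidean cost, and the values of `eps` and `n_iters` are all assumptions chosen for illustration.

```python
import math


def sinkhorn_plan(cost, row_mass, col_mass, eps=0.1, n_iters=500):
    """Entropic regularized optimal transport via Sinkhorn iterations.

    cost:     n x m list of lists with transport costs
    row_mass: length-n source marginal; col_mass: length-m target marginal
    Returns an n x m transport plan whose rows sum to row_mass.
    """
    n, m = len(cost), len(cost[0])
    kernel = [[math.exp(-cost[i][j] / eps) for j in range(m)] for i in range(n)]
    u = [1.0] * n
    v = [1.0] * m
    for _ in range(n_iters):
        # Alternately rescale columns and rows to match the marginals.
        v = [col_mass[j] / sum(kernel[i][j] * u[i] for i in range(n)) for j in range(m)]
        u = [row_mass[i] / sum(kernel[i][j] * v[j] for j in range(m)) for i in range(n)]
    return [[u[i] * kernel[i][j] * v[j] for j in range(m)] for i in range(n)]


def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


# Hypothetical 2-D embeddings: four documents and two topics.
doc_emb = [[0.0, 0.1], [0.1, 0.0], [1.0, 0.9], [0.9, 1.0]]
topic_emb = [[0.0, 0.0], [1.0, 1.0]]

# Transport cost: squared Euclidean distance between embeddings (assumed).
cost = [[squared_distance(d, t) for t in topic_emb] for d in doc_emb]
plan = sinkhorn_plan(cost, [0.25] * 4, [0.5] * 2)

# Normalizing each row of the plan yields a document-topic distribution.
theta = [[p / sum(row) for p in row] for row in plan]
```

In this toy setting, the first two documents sit near the first topic embedding and the last two near the second, so each row of `theta` concentrates on the closest topic.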

3.2. Sentiment Analysis with DistilBERT

DistilBERT is a compact transformer-based language model designed to preserve much of BERT’s language understanding capabilities while significantly reducing computational requirements [9]. It is created through a process called knowledge distillation, where a smaller “student” model is trained to mimic the outputs of a larger “teacher” model. The primary goal of DistilBERT is to maintain high performance on natural language-understanding tasks with fewer parameters, faster inference, and lower memory consumption.

Compared to BERT-base, which has 12 transformer layers and 110 million parameters, DistilBERT has only 6 transformer layers and approximately 66 million parameters. Despite this reduction, DistilBERT retains around 97% of BERT’s performance on benchmark tasks while being 40% smaller and 60% faster [9].

The training of DistilBERT involves a combined loss function designed to align the student model with the teacher model. This includes a masked language modeling (MLM) loss ($\mathcal{L}_{\mathrm{MLM}}$), which encourages the student to accurately predict masked tokens within a sentence. It also includes a distillation loss, which uses Kullback–Leibler (KL) divergence to minimize the difference between the output probability distributions of the student and teacher models ($\mathcal{L}_{\mathrm{KD}}$). Additionally, cosine embedding loss ($\mathcal{L}_{\cos}$) is employed to ensure that the internal hidden representations of the student closely match those of the teacher.

Mathematically, the total training loss can be expressed as follows:

$$\mathcal{L} = \alpha\,\mathcal{L}_{\mathrm{MLM}} + \beta\,\mathcal{L}_{\mathrm{KD}} + \gamma\,\mathcal{L}_{\cos}$$

where $\alpha$, $\beta$, and $\gamma$ are weighting coefficients for each component of the total loss function.

This tailored training strategy enables DistilBERT to serve as an efficient alternative to BERT in tasks such as sentiment analysis, without the need for extensive computational resources.
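As an illustration of how the three loss components combine, the sketch below computes a weighted sum of an MLM loss value, a KL-based distillation term on temperature-softened outputs, and a cosine term on hidden states. The function names, the default weights, the temperature, and the direction KL(teacher ‖ student) are assumptions made for this example, not values reported in the paper or in [9].

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same support."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def cosine_loss(h_student, h_teacher):
    """One minus the cosine similarity of two hidden-state vectors."""
    dot = sum(a * b for a, b in zip(h_student, h_teacher))
    norm_s = math.sqrt(sum(a * a for a in h_student))
    norm_t = math.sqrt(sum(b * b for b in h_teacher))
    return 1.0 - dot / (norm_s * norm_t)


def total_loss(mlm_loss, student_logits, teacher_logits, h_student, h_teacher,
               alpha=1.0, beta=1.0, gamma=1.0, temperature=2.0):
    """Weighted sum of the MLM, distillation, and cosine terms (weights are hypothetical)."""
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    distill = kl_divergence(teacher_probs, student_probs)
    return alpha * mlm_loss + beta * distill + gamma * cosine_loss(h_student, h_teacher)


# A student that matches the teacher exactly only pays the MLM term.
matched = total_loss(2.0, [1.0, 2.0], [1.0, 2.0], [1.0, 0.0], [2.0, 0.0], alpha=0.5)
# A student that disagrees with the teacher pays additional penalties.
mismatched = total_loss(2.0, [2.0, 1.0], [1.0, 2.0], [0.0, 1.0], [2.0, 0.0], alpha=0.5)
```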

We used the distilbert-base-uncased-finetuned-sst-2-english model from Hugging Face’s transformers library for our sentiment analysis tasks [37]. This pretrained model builds on DistilBERT’s distilled architecture and adds fine-tuning on the Stanford Sentiment Treebank (SST-2) corpus. Since the model is already fine-tuned for this specific task, we were able to implement high-quality sentiment analysis without additional fine-tuning and with minimal computational resources.

3.3. Abstractive Summarization with Gemini

The emergence of LLMs has significantly transformed the approach to NLP tasks, particularly abstractive text summarization. Rather than relying on multiple fine-tuned transformer-based models for specific tasks, recent trends favor using general-purpose LLMs with prompt engineering, eliminating the need for task-specific training data [38]. In this study, we leverage Google’s Gemini Flash 2.0 model for abstractive summarization. Given the straightforward nature of our task, summarizing user text reviews, a single LLM suffices without the need for complex, multi-model systems [39]. This shift aligns with a broader industry movement toward simplification, as highlighted in works like [40], and underscores how modern LLMs can effectively handle diverse summarization tasks out of the box.

Gemini Flash 2.0 is especially notable for its rapid processing capabilities and an exceptionally large context window of up to 1 million tokens [16,41]. This allows the model to process and summarize large documents or multiple related texts simultaneously, making it particularly well-suited for summarization use cases. However, its closed-source nature and reliance on a cloud-based API pose limitations in terms of transparency and control. Despite this, we later demonstrate that Gemini Flash 2.0 consistently produces fluent, concise, and informative summaries, going beyond mere extraction by generating novel sentence structures and synthesizing key insights from the source material [42]. As the only freely accessible model of its capabilities, Gemini Flash 2.0 provided an accessible benchmark for our evaluations, enabling greater reproducibility of our experiments and facilitating broader verification of our results by the research community.

4. Methods

This section details the general framework and its specific implementation (VisitorLens AI) for the UNESCO World Heritage Site use case. Building on the concept of “mindful visitors”, i.e., individuals who actively reflect upon their heritage experiences, we designed our analytical framework to prioritize interpretive depth and narrative coherence. It describes how VisitorLens AI was developed as a concrete example of the general approach, illustrating the processes used to generate meaningful insights for tourists and local authorities.

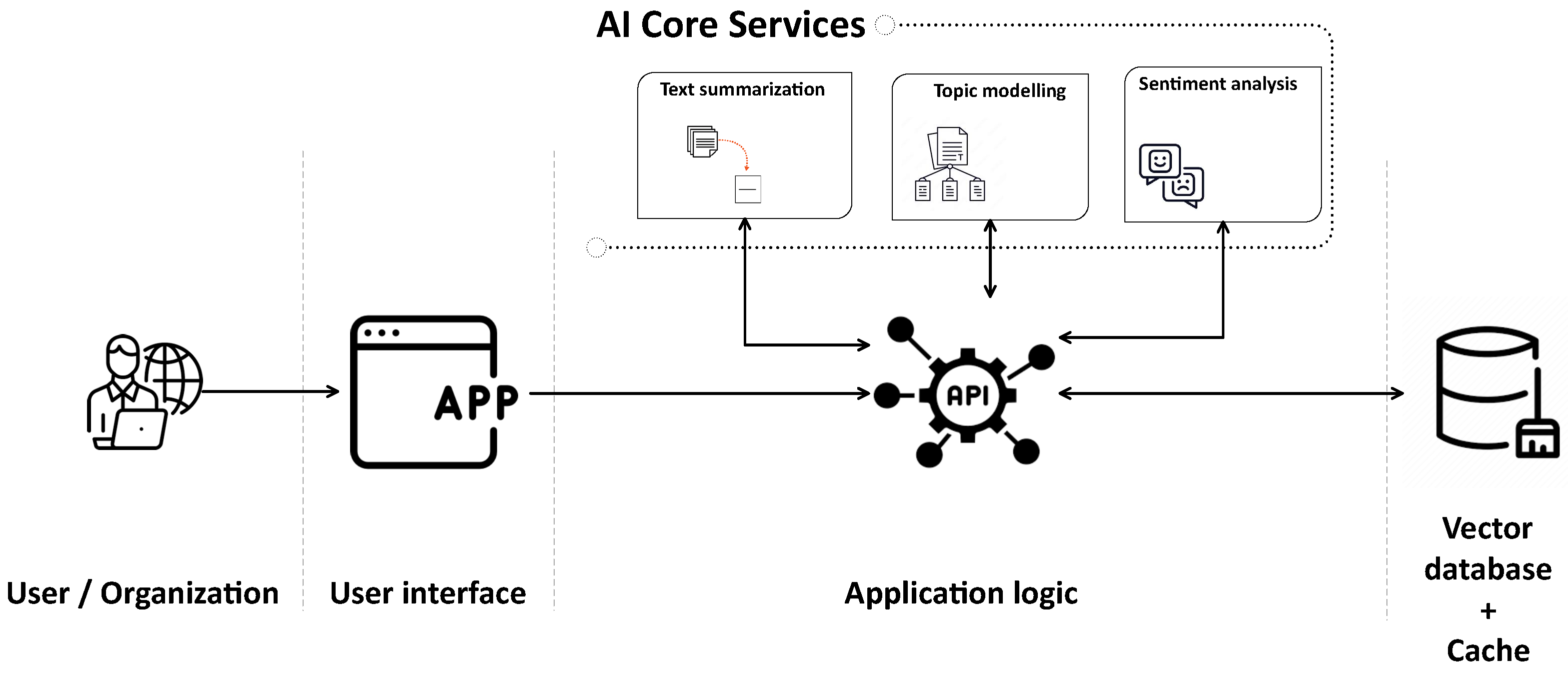

4.1. General AI Text Review Framework

This section introduces the proposed general AI text review framework and presents its architecture visually. The framework is based on the well-established model–view–controller (MVC) software architecture [43], which separates the system into three layers: the data and business logic (model), user interface (view), and API (controller). This separation supports improved maintainability and other well-known advantages of the MVC pattern [44]. As shown in Figure 1, the framework includes key components such as a user interface, API, AI-powered core services, and both vector and cache databases. To avoid redundancy, Figure 1 gives only a high-level view; Section 4.3 presents the step-by-step workflow and definitions of each component in a concrete, contextualized setting. The complete source code of our concrete implementation, as well as the code for generating a sequence diagram, is available in our project repository on GitHub at https://github.com/MateaLukiccc/Location-Based-Review-Insights (accessed on 24 July 2025).

The user interface handles interaction between the user and the system. The API acts as an intermediary between AI-based core services, the vector database, and the user interface. The specific responsibilities and interactions among components are further illustrated through the implementation example of the framework in Section 4.3, which includes a sequence diagram (Figure 2) and a corresponding usage scenario description.

The foundation of the proposed framework is AI core services, designed to deliver actionable insights by providing three key services: summarization, topic extraction, and sentiment analysis. While existing approaches focus their review analysis on one of these tasks, or on two of them combined for a specific business case, the proposed framework addresses all three NLP tasks and combines them at different levels. For instance, summarization is provided both for the requested subject of the review (e.g., a product) and at the level of each topic identified for that subject.

A vector database is a specialized type of database designed for storing high-dimensional vectors. Textual data are transformed into embeddings using a transformer model, as transformer-based embeddings are now the standard for advanced NLP applications. To improve application performance and user experience in real time, cache storage is utilized, which is critical for tasks such as topic modeling that tend to be time-consuming. Retrieving user content from the vector database is based on a hybrid approach combining content retrieved using embeddings and keyword search.
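The hybrid retrieval idea described above can be sketched as a weighted blend of embedding similarity and keyword overlap. This is an illustrative toy, not the retrieval logic of the vector database used in the implementation: the record layout, the two-dimensional embeddings, the equal weighting, and the function names are assumptions.

```python
import math


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def keyword_score(query, text):
    """Fraction of query terms that occur in the text (case-insensitive)."""
    terms = set(query.lower().split())
    words = set(text.lower().split())
    return len(terms & words) / len(terms) if terms else 0.0


def hybrid_search(query, query_emb, records, weight=0.5, top_k=2):
    """Rank records by a blend of embedding similarity and keyword overlap."""
    scored = []
    for rec in records:
        semantic = cosine_similarity(query_emb, rec["embedding"])
        lexical = keyword_score(query, rec["text"])
        scored.append((weight * semantic + (1 - weight) * lexical, rec["text"]))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:top_k]]


# Hypothetical reviews with toy 2-D embeddings standing in for transformer embeddings.
records = [
    {"text": "Steep stairs but amazing view", "embedding": [0.9, 0.1]},
    {"text": "Entrance ticket is overpriced", "embedding": [0.1, 0.9]},
    {"text": "The view from the top is stunning", "embedding": [0.8, 0.3]},
]
results = hybrid_search("amazing view", [1.0, 0.0], records)
```

Blending the two scores lets an exact keyword match lift a review that is also semantically close, while a review that shares only one query term still ranks above unrelated content.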

Existing approaches to text-based review analysis in the literature can be viewed as special cases of our generalized framework. For example, in [12], the authors integrate sentiment analysis with topic modeling, addressing only a subset of the tasks that our framework unifies. The generality of our approach also lies in its domain-independent design, making it applicable to various review contexts such as products, films, and applications. As described in Section 4.2, the proposed framework is validated using a real-world tourism use case, implemented without the need for fine-tuning. However, the practical deployment of the framework requires selecting specific AI models for sentiment analysis, topic modeling, and text summarization, which may vary depending on the application context.

4.2. UNESCO Cultural Heritage Site Case Study

This case study outlines a strategic initiative for local government and tourism management to leverage AI for informed decision-making. By analyzing visitor reviews from Google Maps, the aim is to derive actionable insights that enhance the visitor experience, guide tourism planning, and assess compliance with UNESCO criteria. The initial focus of this analysis is the iconic Kotor Fortress landmark.

Kotor is a historic city located on the Adriatic coast of Montenegro, within the Bay of Kotor. Since 1979, it has been listed as a UNESCO World Heritage Site, primarily due to its exceptional architectural, urban, and cultural qualities. Archaeological evidence confirms that the city was inhabited as early as the 5th century AD. The medieval structure of Kotor includes a fortified Old Town surrounded by city walls. UNESCO has designated Kotor’s OUV [15] based on four key criteria: (i) the harmony between urban structures and the natural landscape; (ii) the role of aristocratic maritime towns in the creative development of the region; (iii) the authenticity and diversity of preserved cultural properties; (iv) the integration of high-quality architecture into a cohesive urban plan. However, Kotor faces ongoing threats to its integrity due to urbanization, infrastructure development, and unregulated tourism pressures.

Local government offices and tourism management entities often struggle with obtaining timely and actionable insights from the vast amount of unstructured visitor feedback available online. Traditional methods of analyzing such reviews are often time-consuming and prone to subjective interpretation. Thus, they fail to identify emerging trends in visitor sentiment and preferences, proactively address visitor pain points, and objectively evaluate the alignment of current tourism offerings with specific criteria, such as those set by UNESCO.

To address these challenges, we propose an AI-driven analytics solution designed to process and interpret Google Maps reviews. This solution utilizes NLP techniques to extract key themes, identify areas of satisfaction and dissatisfaction, and assess performance against predefined indicators. The primary objectives are as follows:

Generate Actionable Insights for Local Government Officials and Tourism Managers: This will provide local tourism management with clear, data-driven recommendations to inform strategic planning, promotional activities, and facility investments.

Enhance Visitor Experience: This will equip visitors with summarized feedback and consolidated experiences from a multitude of past tourists, enabling them to better plan their activities and set appropriate expectations.

Evaluate UNESCO Criteria Compliance: This allows us to objectively assess how well the visitor experience and the management of the landmark align with established UNESCO World Heritage criteria, based on textual feedback.

To evaluate the contribution and effectiveness of the AI framework, this case study seeks to answer the following critical questions:

Q1: Are AI-generated insights meaningful? Do the outputs provide clear, understandable, and relevant information on visitor perceptions?

Q2: Are the insights actionable? Can the insights directly translate into specific, implementable plans and decisions for tourism managers, visitors, and local authorities (e.g., “Invest in better signage here” and “Promote this lesser-known viewpoint”)?

Q3: Can UNESCO criteria be effectively evaluated using visitor text reviews? Is it feasible to map aspects of visitor feedback to specific UNESCO criteria for the site?

4.3. AI-Driven Tourism Management: VisitorLens AI Implementation

We collected 3418 Google Maps reviews for Kotor Fortress, each with extensive metadata. We removed columns containing only null values and discarded metadata unrelated to the content of the reviews. Duplicate entries were identified and removed based on unique review IDs. We filtered reviews to include only English texts by using available translations provided by Google Maps and excluded entries without review text, resulting in 1768 relevant reviews.
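The cleaning steps described above can be sketched in plain Python as follows. The column names (`review_id`, `text`, `text_translated`, `language`, `owner_reply`) and the rule for choosing the English text are hypothetical stand-ins; the actual dataset schema is documented in the project repository.

```python
def clean_reviews(rows):
    """Drop all-null columns, duplicate IDs, and rows without English text."""
    # Columns that hold a non-null value in at least one row are kept.
    kept_columns = sorted({k for row in rows for k, v in row.items() if v is not None})

    seen_ids = set()
    cleaned = []
    for row in rows:
        review_id = row.get("review_id")
        if review_id in seen_ids:
            continue  # duplicate review ID
        seen_ids.add(review_id)

        # Prefer the platform's English translation; otherwise keep text already in English.
        text_en = row.get("text_translated")
        if not text_en and row.get("language") == "en":
            text_en = row.get("text")
        if not text_en or not text_en.strip():
            continue  # no usable English review text

        record = {k: row.get(k) for k in kept_columns}
        record["text_en"] = text_en.strip()
        cleaned.append(record)
    return cleaned


# Hypothetical raw rows illustrating each filtering rule.
sample_rows = [
    {"review_id": "a1", "text": "Great view", "text_translated": None,
     "language": "en", "owner_reply": None},
    {"review_id": "a1", "text": "Great view", "text_translated": None,
     "language": "en", "owner_reply": None},
    {"review_id": "b2", "text": "Prelijepo", "text_translated": "Beautiful",
     "language": "sr", "owner_reply": None},
    {"review_id": "c3", "text": None, "text_translated": None,
     "language": None, "owner_reply": None},
]
cleaned = clean_reviews(sample_rows)
```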

Table 1 presents sample data after the preprocessing steps. The complete dataset, both before and after cleaning, is available in our project repository on GitHub at https://github.com/MateaLukiccc/Location-Based-Review-Insights (accessed on 24 July 2025).

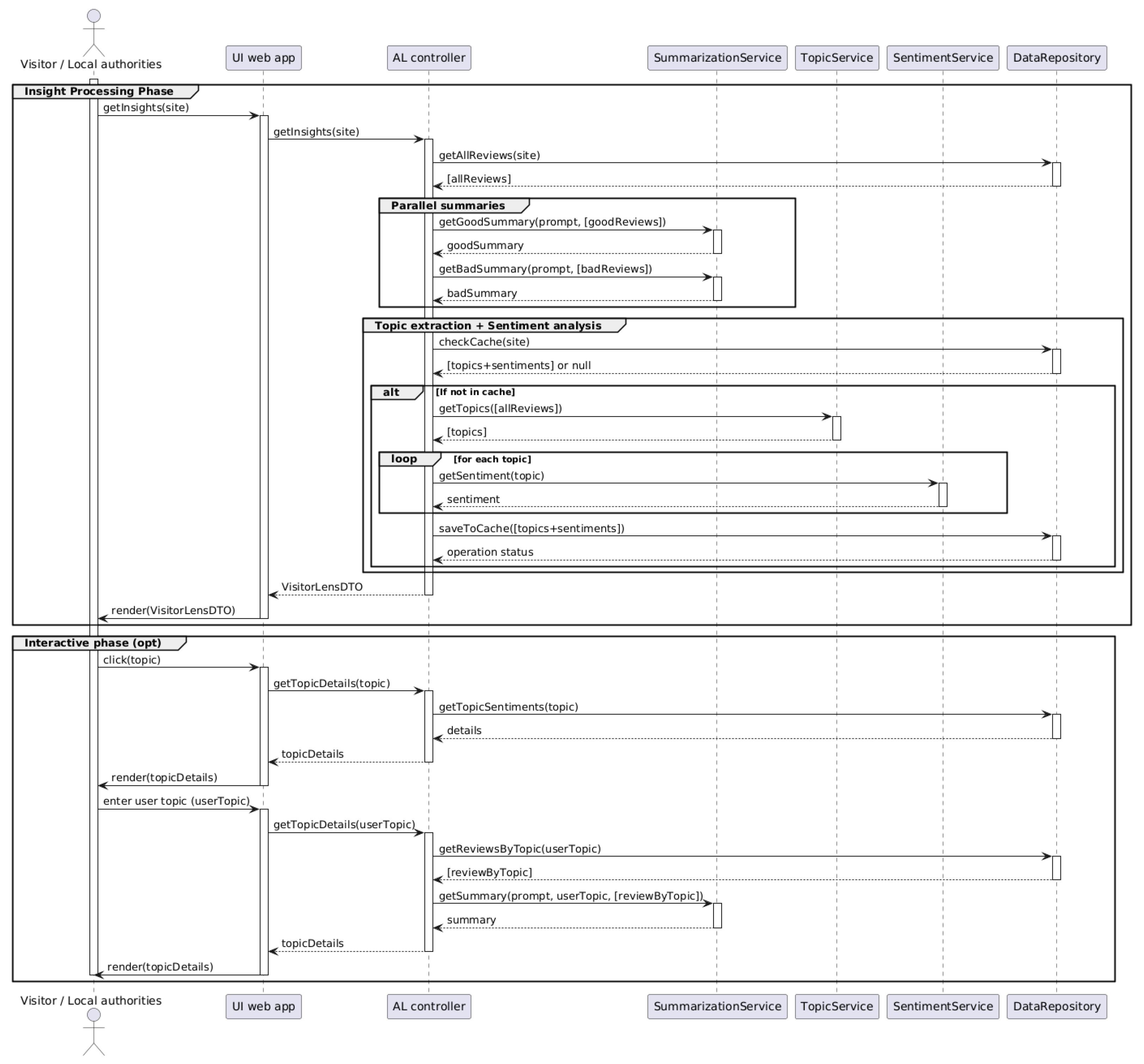

The implementation of VisitorLens AI follows the structure of the proposed general AI review framework, tailored for the domain of cultural heritage. It leverages modular NLP components orchestrated through a centralized controller layer. The system is designed as a web-based application that enables both tourists and institutional users (e.g., local authorities, cultural heritage management bodies, and tourism organizations) to retrieve structured insights from user-generated reviews related to heritage sites. The workflow and component interactions are presented in Figure 2 through a sequence diagram.

The key components are as follows:

User Interface (UI web app): The entry point for end-users, implemented using ReactJS, allows them to request insights about a selected heritage site and to explore detailed topics and sentiment.

AL Controller: The application logic (AL) layer coordinates the entire insight retrieval pipeline and is based on the FastAPI framework. Upon receiving a request, it handles review fetching, invokes text analysis services, manages caching, and returns structured results.

Data Repository: A persistent access layer that mediates between the review database and cache storage. It orchestrates access to stored user reviews in the form of vector embeddings (ChromaDB) and precomputed analytical outputs (e.g., topics and sentiment scores stored using Redis), acting as an abstraction layer that ensures consistency, optimizes performance, and minimizes redundant computations. This component is queried at multiple points in the workflow to retrieve or store relevant data based on availability and caching status.

Summarization Service: This module is invoked twice in parallel to generate abstractive summaries, one for positive and one for negative reviews. Using Gemini Flash 2.0 via API access, it produces high-level, fluent narratives that reflect both favorable feedback and criticism.

Topic Modeling Service: If no cached results are available, this component applies the FASTopic model to identify latent topics from all reviews associated with the selected site. The model extracts semantically meaningful clusters that represent recurring themes.

Sentiment Analysis Service: For each identified topic, the sentiment service determines the dominant tone (positive or negative), based on DistilBERT, a transformer-based classifier. The results provide evaluative context to the discovered topics.

From the user’s perspective, the interaction with the system begins with a simple request for insights related to a selected heritage site. Upon submission through the user interface, this request is forwarded to the central controller, which initiates a coordinated processing pipeline. First, it retrieves all user reviews available for the site from the data repository. In parallel, two processes are triggered: one generates a concise summary of positive impressions, while the other focuses on negative experiences.
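The parallel invocation of the two summarization requests could look roughly as follows. This is only a sketch: `summarize` is a hypothetical stub standing in for the external LLM call, and `build_summaries` is an illustrative name, not a function from the project.

```python
import asyncio


async def summarize(reviews: list[str], polarity: str) -> str:
    """Hypothetical stand-in for the external summarization API call."""
    await asyncio.sleep(0)  # yields control, as a real network request would
    return f"{polarity} summary of {len(reviews)} reviews"


async def build_summaries(positive: list[str], negative: list[str]) -> dict[str, str]:
    # Both requests are awaited concurrently, so the total latency is close to
    # that of the slower call rather than the sum of the two.
    pos, neg = await asyncio.gather(
        summarize(positive, "positive"),
        summarize(negative, "negative"),
    )
    return {"positive": pos, "negative": neg}


summaries = asyncio.run(build_summaries(["Great view", "Lovely hike"], ["Dirty"]))
```

An asynchronous controller fits this pattern naturally, since both calls are I/O-bound and spend most of their time waiting on the remote service.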

Simultaneously, the system checks whether the topic and sentiment analysis results already exist in the cache. If they do, it reuses them to accelerate the response. If not, it launches a new round of topic modeling and performs sentiment analysis for each identified theme. Once the topics and sentiments are computed, they are cached for future access, thus reducing computational overhead and improving the system’s responsiveness, ensuring that subsequent requests are handled more efficiently.

In this setup, we use a Redis SET to store data for a single location: the Kotor Fortress. This example runs without a time-to-live (TTL), which is enough for static or demo data. In a real-world case, especially with streaming or daily downloaded data, leaving out a TTL would be impractical. Data would accumulate indefinitely, leading to performance and storage issues. If we expanded this to track multiple locations, we would need a strategy to manage storage. Applying an eviction policy, such as least recently used (LRU), would help cap storage and remove old or unused data automatically. This setup is intentionally simple, but realistic systems require TTLs and eviction policies to remain efficient and scalable.
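To make the caching behaviour and the role of TTLs and LRU eviction concrete, the following sketch uses an in-memory stand-in for the cache. It is not the Redis client API: in Redis itself, expiry is set per key (for example, with the `EX` option of `SET`) and eviction is configured server-side through the `maxmemory-policy` setting. The class name `TTLCache`, the helper `get_or_compute`, and the key `kotor:topics` are assumptions made for illustration.

```python
import time
from collections import OrderedDict
from typing import Callable


class TTLCache:
    """In-memory stand-in for a cache with per-key TTL and LRU eviction."""

    def __init__(self, max_items: int, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.max_items = max_items
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._items = OrderedDict()  # key -> (expires_at, value)

    def get(self, key):
        entry = self._items.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:  # entry outlived its TTL
            del self._items[key]
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return value

    def set(self, key, value):
        self._items[key] = (self.clock() + self.ttl_seconds, value)
        self._items.move_to_end(key)
        while len(self._items) > self.max_items:
            self._items.popitem(last=False)  # evict least recently used


def get_or_compute(cache: TTLCache, key: str, compute: Callable[[], object]):
    """Cache-aside lookup: reuse a cached result or compute and store it."""
    cached = cache.get(key)
    if cached is not None:
        return cached
    value = compute()
    cache.set(key, value)
    return value


# Usage with a controllable clock so that expiry can be shown deterministically.
now = [0.0]
cache = TTLCache(max_items=2, ttl_seconds=60, clock=lambda: now[0])
calls = []


def run_topic_modeling():
    calls.append(1)  # count how often the expensive step actually runs
    return ["views", "price"]


first = get_or_compute(cache, "kotor:topics", run_topic_modeling)   # computed
second = get_or_compute(cache, "kotor:topics", run_topic_modeling)  # cache hit
now[0] = 61.0
third = get_or_compute(cache, "kotor:topics", run_topic_modeling)   # expired, recomputed
```

Here the expensive step runs twice across three requests: once on the initial miss and once after the entry expires.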

The user ultimately receives a structured insight package (VisitorLensDTO), which is automatically rendered on the screen. It contains summarized feedback, key topics of discussion, and overall sentiment distribution, providing a quick and intuitive overview of visitor experiences.
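One possible shape for such an insight package is sketched below. Only the name `VisitorLensDTO` and its three kinds of content (summaries, topics, sentiment distribution) come from the description above; every field name, the helper class `TopicInsight`, and the sample values are assumptions made for illustration.

```python
from dataclasses import dataclass, field, asdict


@dataclass
class TopicInsight:
    label: str
    top_words: list[str]
    dominant_sentiment: str  # "positive" or "negative"


@dataclass
class VisitorLensDTO:
    site: str
    positive_summary: str
    negative_summary: str
    topics: list[TopicInsight] = field(default_factory=list)
    sentiment_distribution: dict[str, int] = field(default_factory=dict)


# Hypothetical values, not taken from the study's results.
dto = VisitorLensDTO(
    site="Kotor Fortress",
    positive_summary="Visitors praise the panoramic views.",
    negative_summary="Visitors criticise litter along the path.",
    topics=[TopicInsight("views", ["view", "bay", "sunset"], "positive")],
    sentiment_distribution={"positive": 8, "negative": 2},
)
payload = asdict(dto)  # plain dict, ready to be serialised as JSON
```

Because `asdict` converts nested dataclasses recursively, the resulting dictionary can be returned directly as a JSON response body.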

During the optional interactive phase, the user can choose to explore specific topics further by clicking on them. As a result, the system obtains detailed sentiment data related to the selected topic and presents it in a clear and interpretable format. This layered interaction enables both casual visitors and institutional stakeholders (e.g., local authorities and cultural heritage managers) to explore narratives and identify critical issues or strengths in a highly accessible and efficient manner.

In addition to exploring topics automatically identified by the system, users (e.g., representatives of local authorities, cultural organizations, and institutions involved in heritage site management) can manually input keywords they consider particularly relevant but not covered by the system’s default output. This feature also benefits tourists who wish to explore specific themes of personal interest, such as safety, cleanliness, or spiritual ambiance. Once the keyword is entered, the system retrieves all related visitor reviews, generates a real-time summary, and presents a structured overview. This functionality is especially valuable for users seeking to validate specific assumptions, uncover overlooked issues, or conduct focused analysis based on UNESCO evaluation criteria. By enabling user-defined keyword exploration, the framework strengthens the interpretability of insights and enhances their potential to support meaningful and actionable decisions.

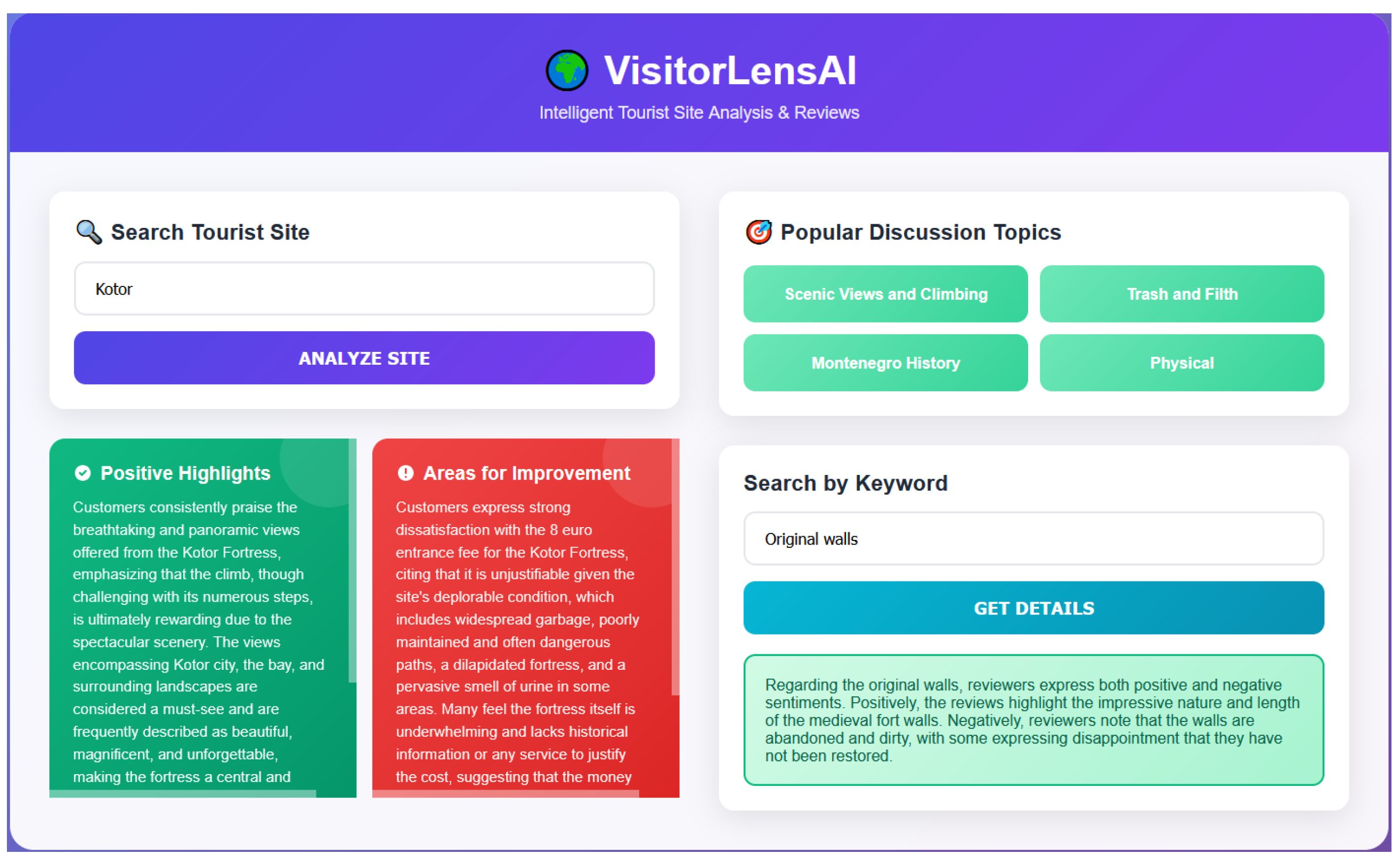

As shown in Figure 3, the frontend of our web application is designed to reflect the workflow described in the sequence diagram in Figure 2. For the example site “Kotor”, the interface displays a summary of positive reviews (based on 4- and 5-star ratings) in green and a summary of negative reviews in red.

On the right side of the screen, the system presents automatically identified topics, along with an interactive section that allows users to explore specific areas of interest related to the site. This feature enables local authorities to investigate whether visitor reviews reference aspects relevant to UNESCO evaluation criteria.

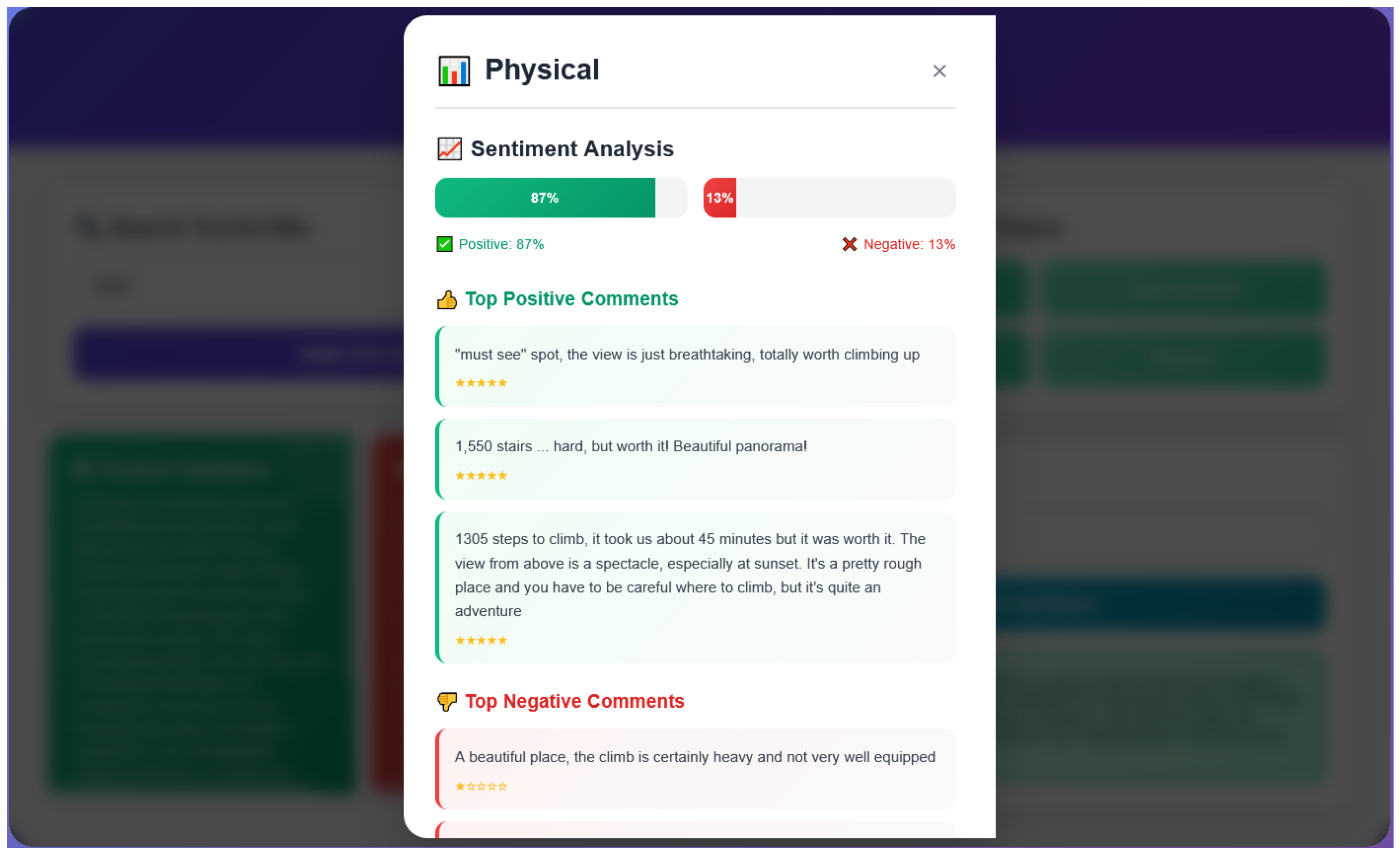

Figure 4 displays a modal screen that appears when users choose to further explore a specific topic identified through automatic topic modeling. Alongside the topic name, the interface presents the results of sentiment analysis for all comments associated with that topic, including examples of both positive and negative comments.

This implementation validates the effectiveness of the general framework through a realistic and valuable cultural heritage use case, demonstrating how multiple NLP services can be orchestrated to extract actionable knowledge from large-scale and unstructured visitor feedback.

5. Evaluation and Results

Both automatic and human evaluations were performed for the VisitorLens AI system. Even though automatic evaluation tends to save time, minimize subjective bias, and standardize the evaluation process, it may not be sufficient for open-ended generation tasks, where human evaluation is more reliable [36]. We follow a mixed-method design that combines standard quantitative metrics with qualitative expert insights gathered through a questionnaire.

5.1. Automatic Evaluation

Automatic evaluation is a widespread method that is typically based on standard metrics. To evaluate the VisitorLens AI system’s performance, we validated each NLP component independently, using metrics appropriate to its task. In doing so, we addressed research question Q1: whether AI-generated summaries are meaningful.

5.1.1. Sentiment Analysis: Evaluation Metrics

As part of our framework evaluation, we analyzed 1768 filtered user-generated reviews for the Kotor Fortress, selected from an initial set of 3418 collected via Google Maps. Although each review includes a one- to five-star rating, we found these ratings often misaligned with the sentiment expressed in the accompanying text. For instance, several four- or five-star reviews contained overtly negative commentary:

“Dirty !!!!”

“Contrary to what other people write, I don’t think it’s worth paying 8 euros for admission. Pure rip-off, especially in high season with tourists on cruises. The money to go to conservation is probably not intended for that. Around full of trash and neglect…”

“Definitely worth a visit, BUT only on a free road. Initially, we did not know about it, but after reading other reviews, we realized that we were very wrong. Paying 8 euros per person for nothing is overkill…”

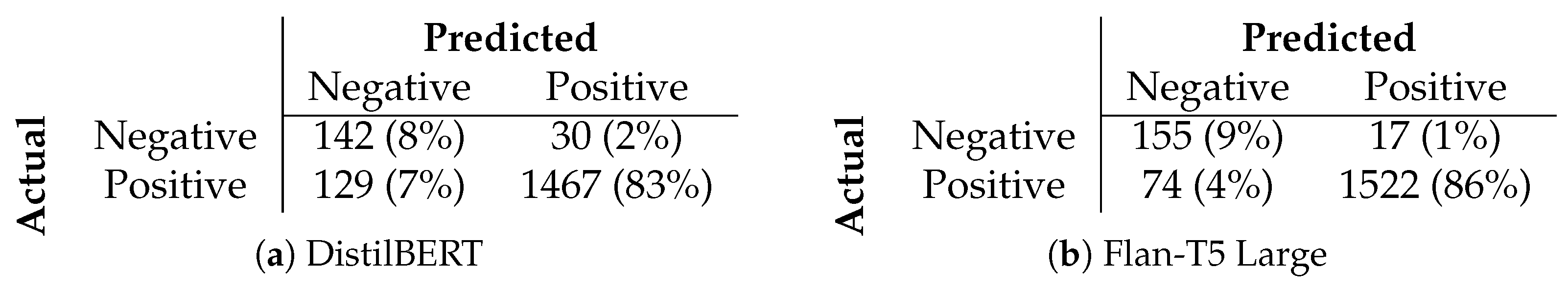

Due to these discrepancies, two domain experts independently labeled all instances, resulting in a Cohen’s kappa of 0.89, indicating almost perfect agreement. Disagreements were reviewed and resolved by the authors, producing the final dataset. This produced a more reliable ground truth: 172 reviews were labeled as negative and 1596 as positive. Although still imbalanced, this distribution better reflects the actual sentiment present in the data.
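For readers unfamiliar with the agreement statistic, Cohen’s kappa compares the observed agreement p_o with the agreement p_e expected by chance: kappa = (p_o - p_e) / (1 - p_e). The sketch below computes it for two annotators; the ten toy labels are hypothetical and are not the study’s annotations.

```python
from collections import Counter


def cohen_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Cohen's kappa for two annotators: (p_o - p_e) / (1 - p_e)."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    n = len(labels_a)
    # Observed agreement: share of items on which both annotators agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of the annotators' marginal label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    classes = freq_a.keys() | freq_b.keys()
    expected = sum(freq_a[c] * freq_b[c] for c in classes) / (n * n)
    if expected == 1.0:  # both annotators used a single identical label
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical toy labels: the annotators disagree on one of ten reviews.
annotator_a = ["pos"] * 8 + ["neg"] * 2
annotator_b = ["pos"] * 7 + ["neg"] * 3
kappa = cohen_kappa(annotator_a, annotator_b)
```

In this toy case the raw agreement is 0.90, but the chance-corrected kappa is lower (about 0.74), because with imbalanced labels a large share of agreement is expected by chance alone.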

To assess the generalization capabilities of pre-trained models within our framework, without task-specific fine-tuning, we evaluated two popular transformer-based language models:

DistilBERT (≈66 million parameters), chosen for its efficiency and widespread use as a default sentiment model in the Hugging Face transformer pipeline [37].

Flan-T5 Large (≈780 million parameters), which is currently among the highest-performing models in the <1B parameter category on the Hugging Face Open LLM leaderboard [45].

Given the class imbalance, model performance on the minority class (negative sentiment) was a key concern. Notably, DistilBERT processed the dataset approximately 15 times faster than Flan-T5 Large, making it a strong candidate for latency-sensitive applications. Evaluation metrics are summarized in Table 2.

Both models performed well on the majority class (positive sentiment). However, precision on the minority class (negative sentiment) was notably lower, particularly for DistilBERT. This is primarily due to class imbalance: because relatively few negative examples exist, even a modest number of false positives can significantly degrade precision. Nonetheless, both models achieved relatively high recall for the negative class, suggesting a strong ability to identify most of the truly negative cases. We placed less emphasis on overall accuracy (91% for DistilBERT, as derived from the confusion matrices in Figure 5) and on micro-averaged scores, as they are sensitive to class imbalance. These metrics tend to be dominated by the performance of the majority class and can give a misleading impression of model effectiveness, particularly when the minority class is of primary interest [46].
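The effect of imbalance on these metrics can be reproduced with a small worked example. The confusion-matrix counts below are hypothetical toy numbers chosen for easy arithmetic (10 negative and 90 positive reviews); they are not the values reported in Table 2 or Figure 5.

```python
def class_metrics(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Precision, recall and F1 for one class treated as the target class."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical toy confusion matrix (true class -> predicted class).
neg_as_neg, neg_as_pos = 9, 1    # 10 truly negative reviews
pos_as_neg, pos_as_pos = 6, 84   # 90 truly positive reviews

total = neg_as_neg + neg_as_pos + pos_as_neg + pos_as_pos
accuracy = (neg_as_neg + pos_as_pos) / total

# Negative class: positives predicted as negative count as its false positives.
neg_precision, neg_recall, neg_f1 = class_metrics(neg_as_neg, pos_as_neg, neg_as_pos)
pos_precision, pos_recall, pos_f1 = class_metrics(pos_as_pos, neg_as_pos, pos_as_neg)

# Macro-F1 weights both classes equally, regardless of their size.
macro_f1 = (neg_f1 + pos_f1) / 2
```

With these toy counts the accuracy is 0.93, yet only six misclassified positive reviews are enough to pull negative-class precision down to 0.60 while recall stays at 0.90. Macro-F1 (0.84) exposes this gap, whereas accuracy largely hides it.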

To further investigate misclassifications, we conducted a qualitative analysis. A recurring theme was the presence of mixed sentiment, where a review expressed both praise and criticism. These nuanced reviews were often misclassified as negative even when they were positive overall (i.e., false negatives with respect to the positive class). Examples include the following:

“Cool, but very tiring.”

“It’s worth going up for the view, but don’t expect much from the castle. Actually, it’s just one castle ruin.”

“Views are amazing but the ‘fortress’ isn’t much to speak of… worth doing for sure.”

“Beautiful view, but the trail needs improvement.”

In these cases, although the reviews were labeled as positive overall, they contained critical feedback that triggered a negative classification by the models. Rather than being considered failure cases, such classifications can be reframed as strengths within a practical application context. Specifically, identifying critical feedback, regardless of the overall sentiment, can provide valuable insights for stakeholders.

From an applied perspective, this aligns well with the goals of our framework. By highlighting both negative reviews and constructive criticism embedded within positive ones, the system can offer actionable intelligence for both visitors and local authorities. Misclassifying some positive reviews as negative is, therefore, not inherently problematic; instead, it can reveal opportunities for service enhancement and user experience optimization.

In this context, the framework’s ability to detect nuanced sentiment should be viewed as an asset. General-purpose sentiment models, when deployed through domain-aware pipelines such as ours, can yield rich insights that go beyond simplistic binary classification.

5.1.2. Topic Modeling: Evaluation Metrics

To extract latent themes from the review corpus, we applied two state-of-the-art topic modeling approaches: BERTopic and FASTopic. Both leverage transformer-based language models but differ in their strategies for topic generation and optimization. We evaluated each model across multiple topic configurations (4, 6, 8, and 10 topics) using two commonly adopted metrics: coherence (Cv) and diversity.

Coherence measures the semantic consistency of the top words within a topic and is often used to assess interpretability. However, in our application, where surfacing a broad variety of distinct themes is desirable, topic diversity plays a more critical role. A high diversity score indicates lower redundancy across topics, which is crucial for generating actionable insights from large user-generated text corpora.
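A common way to compute topic diversity, assumed here because the exact formula is not spelled out above, is the proportion of unique words among the top-k words of all topics. The sketch below implements that definition; the topic word lists are hypothetical.

```python
def topic_diversity(topics: list[list[str]], top_k: int = 10) -> float:
    """Share of unique words among the top-k words of all topics (1.0 = no overlap)."""
    words = [word for topic in topics for word in topic[:top_k]]
    if not words:
        raise ValueError("at least one topic with at least one word is required")
    return len(set(words)) / len(words)


# Hypothetical topics: "view" appears in two of them, so the first set is redundant.
overlapping = [["view", "bay", "sunset"], ["price", "ticket", "euro"], ["steps", "climb", "view"]]
distinct = [["view", "bay", "sunset"], ["price", "ticket", "euro"], ["steps", "climb", "path"]]

diversity_overlapping = topic_diversity(overlapping, top_k=3)  # 8 unique of 9 words
diversity_distinct = topic_diversity(distinct, top_k=3)        # 9 unique of 9 words
```

Under this definition, a score of 1 means that no top word is shared between any two topics.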

Table 3 summarizes the results, with the best-performing values indicated in bold.

While BERTopic consistently achieves marginally higher coherence scores, FASTopic substantially outperforms in terms of topic diversity across all configurations. For example, with eight topics, FASTopic achieves a perfect diversity score of 1, compared to 0.7 for BERTopic. Notably, this increased diversity does not come at a significant cost to coherence. FASTopic’s coherence remains within 0.03–0.05 points of BERTopic’s in all cases.

Given our use case, which prioritizes the surfacing of distinct and non-redundant themes from a large volume of user reviews, we consider topic diversity to be the more critical metric. In this context, FASTopic’s ability to generate more differentiated topic clusters while maintaining reasonable semantic coherence makes it the more appropriate choice for downstream interpretation and visualization.

This aligns with the goal of our framework: to maximize interpretability and thematic coverage rather than to optimize for linguistic tightness within topics alone. Because the trade-off between coherence and diversity must be understood and validated for each use case, we conclude that FASTopic offers the better balance for our practical requirements.

5.1.3. Abstractive Summarization: Evaluation Metrics

Given that Gemini Flash 2.0 is currently the only publicly available model of its scale and capability, offering a 32,000-token context window with free API access, our evaluation primarily focused on its performance. To ensure robust benchmarking, we also incorporated baseline summaries generated by a human expert, particularly for evaluation metrics requiring reference summaries.

For evaluation, we utilized ROUGE-1 and ROUGE-L metrics to assess content overlap with reference summaries. However, acknowledging well-known limitations of ROUGE, such as its reliance on surface-level lexical matching and inability to capture semantic similarity [47], we also incorporated the more sophisticated BERTScore [48]. Unlike ROUGE, BERTScore leverages contextual embeddings from transformer models, enabling a more nuanced evaluation of semantic similarity. This combination of metrics provides a more comprehensive assessment of both content preservation and linguistic quality in generated summaries.

We evaluated our model on three types of generated summaries: one emphasizing the positive aspects of the site, another focusing on the negative aspects, and a third centered on a specific topic, namely “cleanliness”. For ROUGE-1, the average F1-score across the three generated summaries was 0.42, while ROUGE-L yielded a lower F1-score of 0.29. In contrast, BERTScore, which captures semantic similarity rather than exact lexical overlap, reported a substantially higher score of 0.89.
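The gap between ROUGE-1 and ROUGE-L is easier to interpret with a simplified implementation at hand. The sketch below uses lowercase whitespace tokenization without stemming, so it is an approximation of the standard tooling rather than a replacement for it, and the two sentences are hypothetical examples.

```python
from collections import Counter


def _f1(matches: int, cand_len: int, ref_len: int) -> float:
    if matches == 0:
        return 0.0
    precision, recall = matches / cand_len, matches / ref_len
    return 2 * precision * recall / (precision + recall)


def rouge_1_f1(candidate: str, reference: str) -> float:
    """Unigram overlap F1 (clipped counts), ignoring word order."""
    cand, ref = candidate.lower().split(), reference.lower().split()
    overlap = sum((Counter(cand) & Counter(ref)).values())
    return _f1(overlap, len(cand), len(ref))


def lcs_length(a: list[str], b: list[str]) -> int:
    """Length of the longest common subsequence via dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """F1 based on the longest common subsequence, which respects word order."""
    cand, ref = candidate.lower().split(), reference.lower().split()
    return _f1(lcs_length(cand, ref), len(cand), len(ref))


# Hypothetical pair: same words, different clause order.
reference = "the views are stunning but the climb is hard"
candidate = "the climb is hard but the views are stunning"
r1 = rouge_1_f1(candidate, reference)
rl = rouge_l_f1(candidate, reference)
```

In this example every unigram matches, so ROUGE-1 is 1.0, while the reordered clauses limit the longest common subsequence to four of nine tokens and ROUGE-L drops to about 0.44. An abstractive summary that rephrases or reorders content is penalized in the same way, which helps explain why an embedding-based metric can score the same output much higher.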

5.2. Expert Evaluation

To assess the diagnostic capabilities of VisitorLens AI, we conducted an independent human evaluation. Cultural heritage experts were tasked with determining (Q2) whether the extracted insights were actionable for local authorities and (Q3) whether VisitorLens AI can accurately identify review fragments that reflect the UNESCO OUV criteria. These use cases were demonstrated through the VisitorLens AI user interface (see Figure 3).

5.2.1. Questionnaire Design

The questionnaire was designed to gather expert feedback on the quality and relevance of AI-generated summaries produced by the VisitorLens AI tool, specifically in the context of cultural heritage site analysis and management. The survey is structured around selected elements from the Operational Guidelines for the Implementation of the World Heritage Convention. The experts from all relevant cultural and heritage institutions (Tourist Organization, Kotor Museum, the Historical Archive of Kotor, the Faculty of Tourism and Hospitality, and Conservator) were consulted to identify relevant UNESCO categories for the Kotor Fortress. They singled out the following four categories: authenticity and integrity, historical and artistic value, functionality and interpretation, and visitor experience and infrastructure. In total, these four categories encompass 11 specific aspects.

For testing purposes, we designed a pilot study based on established methodologies for the human evaluation of LLMs, as outlined in [36, 49]. Thus, we considered the following:

Number of evaluators: Seven domain experts with extensive, hands-on experience in Kotor’s cultural landscape were invited to assess the AI-generated outputs. As this was a pilot study, an emphasis was placed on recruiting highly relevant experts rather than achieving statistical significance through a larger sample size.

Evaluator’s expertise level: Among others, the expert panel included a representative of the Kotor Tourist Board, two conservation specialists, a senior museum curator, and a professor of tourism and hospitality at the University of Montenegro. This ensured that visitor–economy management, technical conservation, curatorial interpretation, and academic tourism perspectives were all represented in the evaluation.

Evaluation criteria: Each evaluator assessed the AI-generated summaries based on three dimensions widely adopted in the LLM evaluation literature [36, 50]:

Factual Correctness (FC): To what extent does the summarized review accurately reflect the real characteristics of the site associated with the given keyword and UNESCO criterion?

Helpfulness (H): Based on your professional expertise in the field, to what extent do you find the summarized comment representation helpful for understanding visitor perspectives and informing decisions related to this aspect of the site?

Coherence (C): Based on your professional judgment, does the summarized comment representation appear coherent and logically consistent, without contradictions or thematic discontinuities?

The evaluation was conducted through guided interviews, incorporating an informative questionnaire. To mitigate bias, experts were not given direct access to the AI system. Instead, they evaluated model outputs that had been prepared in advance. For each aspect within a UNESCO category, predefined keywords (validated by an independent expert) were used to generate summaries. Experts then selected additional keywords of their choice and evaluated the resulting summaries using the same criteria (FC, H, and C).

As recommended in [49], we employed a Likert scale to capture subjective ratings for each keyword–summary pair. When summaries lacked sufficient review content, evaluators could assign a score of 0 (“Cannot be evaluated”) to ensure that all ratings were based on meaningful content.

This pilot study lays the groundwork for scaling VisitorLens AI to other UNESCO-designated sites in Kotor and, potentially, World Heritage sites globally. More details on the questionnaire design, keyword–summary samples, and a downloadable version of the evaluation form are available in the GitHub repository (https://github.com/MateaLukiccc/Location-Based-Review-Insights, accessed on 24 July 2025).

5.2.2. Questionnaire Results

The results of the questionnaire were examined from several angles: general statistical and quality assessment, the identification of distinctive UNESCO aspects (e.g., particularly high or low), the detection of gaps between evaluation criteria for a single UNESCO aspect, and from the perspective of actionable points and managerial implications.

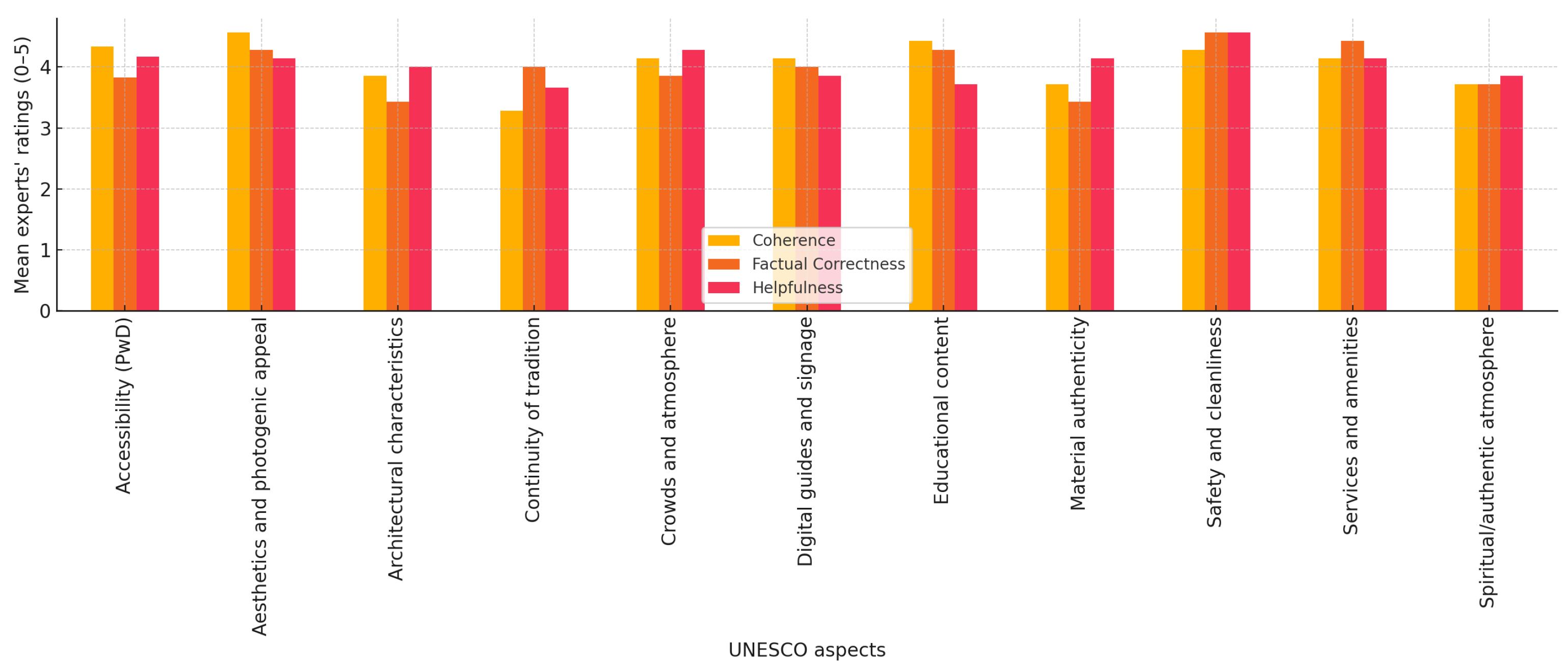

As shown in Table 4, the majority of expert ratings reflect strong overall favorability, with 77% of evaluations falling in the high range (ratings of 4–5) and only 7% in the low range (ratings of 0–2). Within the evaluation criteria profiles, helpfulness stands out with 81% high and 7% low ratings, while coherence (75% high and 8% low) and factual correctness (76% high and 7% low) also exhibit positive distributions, supporting the impression that the system produces linguistically sound and generally accurate content. The consensus strengths section further highlights levels of agreement across UNESCO aspects: aesthetics and photogenic appeal show high ratings (all metrics above 4) and strong expert consensus (range ≈ 1). Architectural characteristics receive lower scores (all below 4), but still with tight agreement (range ≈ 1). Safety and cleanliness are positively rated (scores above 4), though with slightly more variance (range ≈ 2), whereas continuity of tradition demonstrates both lower scores and the widest spread (range ≈ 3), indicating limited expert consensus on that dimension.
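The band shares and consensus ranges reported above can be derived from raw ratings as sketched below. The seven ratings per aspect are hypothetical values invented for the example, not the experts’ actual scores, and the function names are illustrative.

```python
from statistics import mean


def rating_bands(ratings: list[int]) -> dict[str, float]:
    """Share of ratings in the high (4-5) and low (0-2) bands."""
    n = len(ratings)
    return {
        "high": sum(r >= 4 for r in ratings) / n,
        "low": sum(r <= 2 for r in ratings) / n,  # includes 0, "cannot be evaluated"
    }


def aspect_profile(ratings: list[int]) -> dict[str, float]:
    """Mean score and range (max - min) as a simple consensus indicator."""
    return {"mean": mean(ratings), "range": max(ratings) - min(ratings)}


# Hypothetical ratings from seven experts for two aspects.
ratings_by_aspect = {
    "aesthetics and photogenic appeal": [5, 5, 4, 5, 4, 5, 4],
    "continuity of tradition": [2, 5, 3, 4, 2, 5, 3],
}
profiles = {aspect: aspect_profile(r) for aspect, r in ratings_by_aspect.items()}
all_ratings = [r for ratings in ratings_by_aspect.values() for r in ratings]
bands = rating_bands(all_ratings)
```

A small range together with a high mean indicates a confirmed strength, whereas a wide range flags an aspect on which the experts disagree.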

Figure 6 presents the mean expert scores for each UNESCO aspect across three quality axes, revealing a spectrum of signals when the bars are read from left to right. Confirmed good practices such as aesthetics and photogenic appeal, safety and cleanliness, and services and amenities score above 4 on all axes, indicating that these topics are clearly expressed (high clarity), factually sound (high factual correctness), and readily actionable (high helpfulness). In these cases, managers can proceed directly with maintenance or promotional efforts. Identified gaps, including digital guides and signage and architectural characteristics, exhibit strong language quality (clarity ≥ 4) but show a decline on one of the other axes. This suggests that while VisitorLens AI articulates these aspects effectively, the supporting review data are inconsistent, pointing to areas where updated visitor feedback or additional metadata may be needed. Action points such as material authenticity, continuity of tradition, and spiritual/authentic atmosphere display a typical pattern of low factual correctness but high helpfulness. Visitors often introduce inaccuracies or myths, such as references to “Illyrian walls” or “ancient” revived festivals, leading experts to assess the summaries as factually weak. Paradoxically, this mismatch results in high helpfulness scores, as it highlights exactly which misconceptions require correction. Rather than indicating a model failure, the gap between factual correctness and helpfulness serves as a diagnostic signal for targeted interpretive improvements (see Table 5).

To strengthen the data-to-recommendation chain, the following anonymized review selections explicitly evidence the expert-flagged gaps and thereby support the proposed actions:

“Ancient Kotor Fortress. An exciting and interesting place to visit. The walls and individual elements of the fortress are fairly well preserved, which is nice and you can touch the history. Awesome view of the Bay of Kotor. From the old town there are two entrances to the fortress, take 8 euros per person. Robbery. From the northern part, behind the old mini power plant there is a free pass and a great road. Follow the red marks, then through the window of the fortress. Savings + great walk. And the rise is easier.”

“At first, it is rather hard to climb the narrow steps and slippery stones in the heat, but then the ancient ruins and stunning views fascinate, and the second breath opens. Rose 40 min, and it was worth it!”

“The fortress was built in Kotor by the ancient Illyrians, but most of it was built in the 13th century by the Venetians, when Kotor was the mainstay of the Venetian Republic. The wall of the fortress stretches for 4.5 km and rises up to 280 m above the level of the bay. A stunningly beautiful place, if you climb under the flag itself, to the top, the reward will be a stunning landscape on Kotor and the Gulf of Kotor, a tour of the fortress is best done from the very morning, until the sun is not so hot.”

For local authorities, the key management implications are to strengthen heritage protection messaging so visitors view ongoing works positively, enrich the interpretation of UNESCO criterion (ii) by highlighting Kotor’s intercultural trade history, and prioritize accessibility improvements, particularly the provision of safer pathways and facilities for disabled visitors, to promote both safety and inclusion.

5.3. Reflection on Research Questions

Q1: Are AI-generated insights meaningful? Yes. Automatic evaluation shows that the framework delivers reliable, fine-grained information about visitor perceptions. DistilBERT already achieves an F1-macro of 0.79 and Flan-T5-Large 0.87 on a manually labeled review set, with recall on the minority (negative) class above 0.90. Human assessment by heritage experts confirmed that the polarity, topic-labeling, and abstractive summaries are clear, self-explanatory, and contextually appropriate.

Q2: Are the insights actionable? The experts’ evaluation questionnaire clearly shows that insights are actionable. In Table 5, the diagnostic gaps are paired with concrete remedial steps for heritage management. Helpfulness dominated the survey results, with 81% of expert ratings landing in the top band, far outstripping low scores (7%), which signals that practitioners find the AI-generated summaries immediately useful for decision-making.

Q3: Can UNESCO criteria be effectively evaluated using visitor text reviews? Is it feasible to map aspects of visitor feedback to specific UNESCO criteria for the site? The case study shows that aligning FASTopic clusters with criterion-specific keyword sets allows VisitorLens AI to trace how visitor narratives reflect Kotor’s Outstanding Universal Value (OUV). Reviews frequently emphasize aesthetic harmony, authenticity, and atmosphere, corresponding to Criteria (i), (iii), and (iv). The absence of references to maritime-trade history (Criterion ii) suggests an interpretation gap. Negative feedback highlights poor maintenance, trash, and unclear pricing at key sites, echoing UNESCO’s concerns that the “ability of the overall landscape to reflect its value is being compromised by the gradual erosion of traditional practices and ways of life” [51]. Expert validation confirms that AI-extracted insights capture both appreciation and critique, supporting the use of visitor reviews as a feasible, low-cost UNESCO compliance monitoring tool.
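The alignment of topic clusters with criterion-specific keyword sets can be illustrated with a simple overlap check. This is a sketch under stated assumptions: the topic names, top words, and keyword sets below are hypothetical, and the matching rule (any shared word) is a simplification that may differ from the procedure used in the study.

```python
def map_topics_to_criteria(
    topics: dict[str, list[str]],
    criteria_keywords: dict[str, set[str]],
) -> dict[str, list[str]]:
    """For each criterion, list the topics whose top words share a keyword with it."""
    return {
        criterion: [name for name, words in topics.items() if keywords & set(words)]
        for criterion, keywords in criteria_keywords.items()
    }


# Hypothetical topics (name -> top words) and keyword sets per criterion.
topics = {
    "views": ["view", "bay", "beautiful"],
    "tickets": ["price", "ticket", "euro"],
    "ruins": ["ruins", "authentic", "walls"],
}
criteria_keywords = {
    "i": {"beautiful", "harmony"},
    "ii": {"trade", "maritime", "merchant"},
    "iii": {"authentic", "tradition"},
}

mapping = map_topics_to_criteria(topics, criteria_keywords)
# Criteria without any matching topic point to a possible interpretation gap.
gaps = [criterion for criterion, matched in mapping.items() if not matched]
```

In this toy setup, criterion (ii) has no matching topic and is reported as a gap, mirroring the kind of finding described above for maritime-trade history.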

6. Limitations

This study identifies limitations from three perspectives: the general framework, the VisitorLens AI implementation, and the cultural heritage and tourism context. Although the general AI text review framework is modular and scalable, it faces performance challenges, particularly during real-time processing of tasks like topic modeling. The lack of adaptive tuning based on user feedback reduces its ability to improve over time. Additionally, keyword-based queries may introduce subjectivity, resulting in narrow or less coherent summaries.

The VisitorLens AI implementation presents further practical constraints. It offers a uniform user experience without specialized features for institutional users. Its reliance on external APIs, such as Gemini Flash 2.0, introduces potential instability due to evolving third-party services. We consider this research a pilot study, as the dataset used for the Kotor Fortress is moderate. The system also lacks native multilingual capabilities and depends on platform-based translations, which may lead to inconsistencies. Its current focus on Google Maps reviews limits integration with other platforms and restricts broader data analysis.

Since Kotor is a small historical town, the number of available tourism and cultural heritage experts is limited. For our research, we included seven experts from various backgrounds, which was the best possible outcome in this case. Still, we consider the questionnaire to be a pilot study. In the domain of cultural heritage and tourism, limitations arose from the nature of user-generated content. Reviews often represented the opinions of a small, self-selected group of visitors, based on particularly positive or negative experiences, and may under-represent the average experience. Content tended to focus on immediate, visible aspects rather than deeper cultural or historical themes. Furthermore, the absence of institutional engagement on public platforms reduced opportunities for dialogue, clarification, and collaborative interpretation.

7. Conclusions

This paper introduced a novel, general AI framework for analyzing text-based user reviews, integrating topic modeling, sentiment analysis, and summarization within a unified, domain-independent architecture. Rooted in the MVC paradigm, this framework offers a robust and scalable solution for transforming unstructured digital content into actionable insights. Its modular design allows for customization and application across diverse domains, from product evaluation to tourism and cultural heritage management.

The practical relevance of this framework was effectively demonstrated through VisitorLens AI, an open-source implementation specifically tailored for cultural heritage management. By analyzing Google Maps reviews of Kotor, a UNESCO World Heritage Site, VisitorLens AI provided a tangible example of how advanced NLP techniques can address real-world challenges. The system, combining DistilBERT for sentiment analysis, FASTopic for topic modeling, and Gemini Flash 2.0 for abstractive summarization, successfully extracted meaningful insights into visitor perceptions and experiences.

The case study addressed critical questions regarding the utility of AI-generated insights, their actionability, and their capacity to evaluate UNESCO criteria compliance. Our findings indicate that VisitorLens AI can indeed provide clear, understandable, and relevant information (Q1), directly translating into specific, implementable plans for tourism managers and visitors (Q2). Furthermore, the ability to map visitor feedback to UNESCO’s OUV criteria highlights the potential for objective assessment of heritage management alignment (Q3).

We achieved very good performance metrics, such as a sentiment F1-score of 0.87 on the minority class and an excellent topic diversity score of 1.0 for the FASTopic model. In addition to standard evaluation metrics, we conducted expert-based human evaluation. Addressing the concerns of non-reproducible and non-repeatable studies raised by [52], we took deliberate steps to ensure that our human evaluation was replicable. To facilitate transparency and reproducibility, we have provided a detailed set-up throughout the paper and made all related materials available through our GitHub repository (https://github.com/MateaLukiccc/Location-Based-Review-Insights, accessed on 24 July 2025).

By empowering tourists as co-creators of cultural meaning, VisitorLens AI encourages more participatory and informed heritage experiences. This work underscores the potential of integrating public sentiment, institutional objectives, and technological innovation for effective heritage management.

We acknowledged the limitations of this pilot study in the previous section. In future work, we will address the research limitations, with the intention to scale VisitorLens AI to additional UNESCO-designated sites in Kotor and to World Heritage sites around the world.

8. Future Directions

Future work should address the identified limitations and extend the applicability of VisitorLens AI. To mitigate the risk of biased or incomplete outputs from large language models, explainable AI methods and human-in-the-loop review will be applied, allowing cultural heritage professionals to verify and refine system outputs. Adaptive model tuning based on user feedback will improve responsiveness to changing visitor needs.

Analytical scope can be expanded by integrating multimodal data such as images and videos alongside text, and by aggregating content from multiple review platforms to reduce single-source bias. Real-time alerts for emerging issues and predictive models for visitor trends could support proactive management.

Finally, user interface developments, including interactive dashboards and customizable visual analytics, will increase accessibility for both institutional users and the public, fostering greater participation in heritage interpretation and management.