A Continuous-Time Distributed Optimization Algorithm for Multi-Agent Systems with Parametric Uncertainties over Unbalanced Digraphs

Abstract

1. Introduction

- A novel distributed continuous-time optimization algorithm is developed for multi-agent systems over unbalanced digraphs. Unlike existing methods such as [17,19,20], which are restricted to undirected or weight-balanced graphs, the proposed adaptive algorithm is applicable to more general directed graphs.

- The asymptotic convergence of the proposed algorithm is rigorously established by combining Lyapunov stability theory with input-to-state stability (ISS) analysis. Additionally, the method improves agent-level privacy because agents do not need access to the cost function (sub)gradients of their neighbors, in contrast to existing approaches such as [24,27].

2. Preliminaries and Problem Formulation

2.1. Notations

2.2. Graph Theory

- 1.

- $L$ has a simple zero eigenvalue with the associated right eigenvector $\mathbf{1}_N$, and all other eigenvalues have positive real parts.

- 2.

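- Let $r = [r_1, \ldots, r_N]^{T}$ with $r_i > 0$ for $i = 1, \ldots, N$ denote the left eigenvector of $L$ corresponding to the zero eigenvalue. Define $R = \mathrm{diag}(r_1, \ldots, r_N)$. Then the matrix $\hat{L} = RL + L^{T}R$ satisfies $\min_{\vartheta^{T}x = 0,\, x \neq 0} x^{T}\hat{L}x > \frac{\lambda_2(\hat{L})}{N}\, x^{T}x$, where ϑ is any vector with positive entries and $\lambda_2(\hat{L})$ represents the second smallest eigenvalue of $\hat{L}$. Moreover, $r = \mathbf{1}_N$ (up to positive scaling) if and only if $\mathcal{G}$ is weight-balanced.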
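The two properties above can be checked numerically on a small example. The sketch below is a minimal illustration in Python with NumPy, not part of the paper. It assumes the in-degree Laplacian convention $L = D - A$ and takes the symmetrized matrix as $RL + L^{T}R$, with $R$ the diagonal matrix of left-eigenvector entries, as in the usual statement of this result for strongly connected digraphs. The 4-agent weight matrix `A`, the positive vector `theta`, and all function names are hypothetical choices made for illustration.

```python
import numpy as np


def laplacian(A):
    """In-degree Laplacian L = D - A, with D the diagonal matrix of row sums (assumed convention)."""
    return np.diag(A.sum(axis=1)) - A


def left_null_vector(L):
    """Left eigenvector of L for the zero eigenvalue, scaled so its entries sum to 1."""
    w, V = np.linalg.eig(L.T)
    r = np.real(V[:, np.argmin(np.abs(w))])
    return r / r.sum()


def is_weight_balanced(A):
    """Weight-balanced: weighted in-degree equals weighted out-degree at every node."""
    return np.allclose(A.sum(axis=1), A.sum(axis=0))


# Hypothetical strongly connected, unbalanced digraph.
# A[i, j] > 0 means agent i receives information from agent j.
A = np.array([
    [0.0, 0.0, 0.0, 2.0],
    [1.0, 0.0, 0.0, 0.0],
    [1.0, 3.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
])
N = A.shape[0]
L = laplacian(A)

# Item 1: simple zero eigenvalue, right eigenvector of all ones,
# remaining eigenvalues in the open right half-plane.
eigs = np.linalg.eigvals(L)
num_zero = int(np.sum(np.abs(eigs) < 1e-9))
others_positive = bool(np.all(eigs.real[np.abs(eigs) >= 1e-9] > 0))

# Item 2: positive left eigenvector r and symmetrized matrix L_hat.
r = left_null_vector(L)
R = np.diag(r)
L_hat = R @ L + L.T @ R
lam2 = np.linalg.eigvalsh(L_hat)[1]  # eigvalsh returns ascending eigenvalues

# Minimum of x^T L_hat x over unit vectors x orthogonal to a positive vector theta:
# restrict L_hat to an orthonormal basis Q of the orthogonal complement of theta.
theta = np.array([1.0, 2.0, 3.0, 4.0])  # arbitrary positive vector
_, _, Vt = np.linalg.svd(theta.reshape(1, -1))
Q = Vt[1:].T
constrained_min = np.linalg.eigvalsh(Q.T @ L_hat @ Q).min()

# Weight-balanced comparison: a unit-weight directed ring.
A_bal = np.roll(np.eye(N), 1, axis=1)
r_bal = left_null_vector(laplacian(A_bal))

print("zero eigenvalues of L:", num_zero, "| others in right half-plane:", others_positive)
print("left eigenvector r:", r)
print("constrained minimum:", constrained_min, "| bound lambda_2 / N:", lam2 / N)
print("balanced ring:", is_weight_balanced(A_bal), "| its r:", r_bal)
```

For this particular `A`, solving $r^{T}L = 0$ by hand gives $r = (0.2, 0.3, 0.1, 0.4)$, which is not uniform, consistent with the graph being unbalanced. The unit-weight ring is weight-balanced and yields a uniform left eigenvector.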

2.3. Problem Formulation

3. Main Results

3.1. Algorithm Design

3.2. Convergence Analysis

- Prove the global uniform asymptotic stability of the unperturbed system ;

- Establish the ISS property of the system (10);

- Demonstrate the convergence of variables and in (8) to and , respectively, as .
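For reference, the ISS notion invoked in the second step can be written out as follows. This is the standard textbook definition (see Khalil, Nonlinear Systems), stated with generic symbols $x$, $u$, $f$, $\beta$, and $\gamma$ that are assumed here for illustration and are not the paper's notation for system (10).

```latex
% Generic perturbed system (illustrative notation only):
\dot{x} = f(t, x, u)

% The system is ISS if there exist a class-\mathcal{KL} function \beta and a
% class-\mathcal{K} function \gamma such that, for every initial state x(t_0)
% and every bounded input u,
\|x(t)\| \le \beta\big(\|x(t_0)\|,\, t - t_0\big)
          + \gamma\Big(\sup_{t_0 \le \tau \le t} \|u(\tau)\|\Big),
\qquad \forall\, t \ge t_0 .

% Consequence used in cascade-type arguments:
% if u(t) \to 0 as t \to \infty, then x(t) \to 0 as t \to \infty.
```

Under this definition, setting $u \equiv 0$ recovers global uniform asymptotic stability of the unperturbed system, which is why that property is typically established first.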

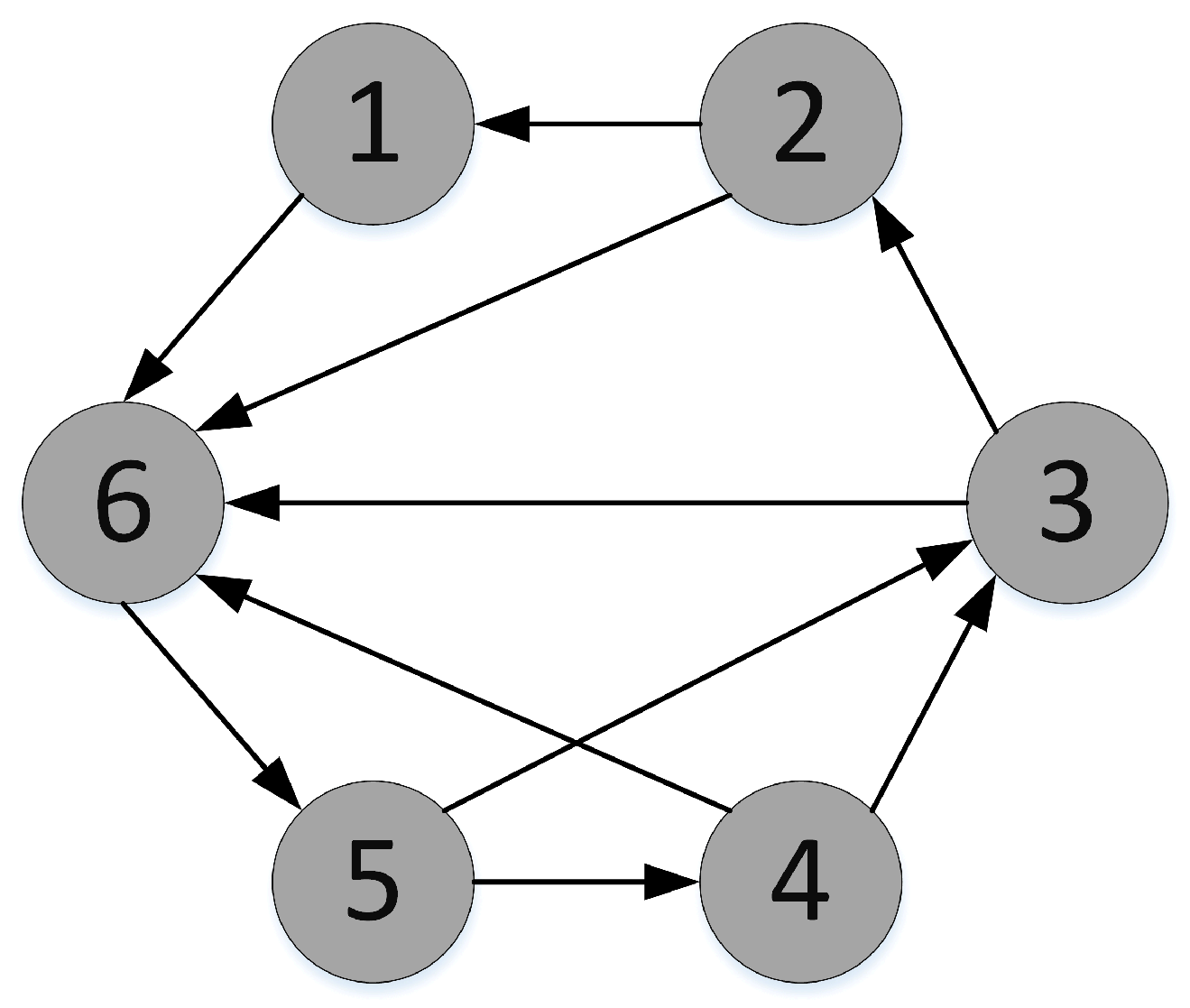

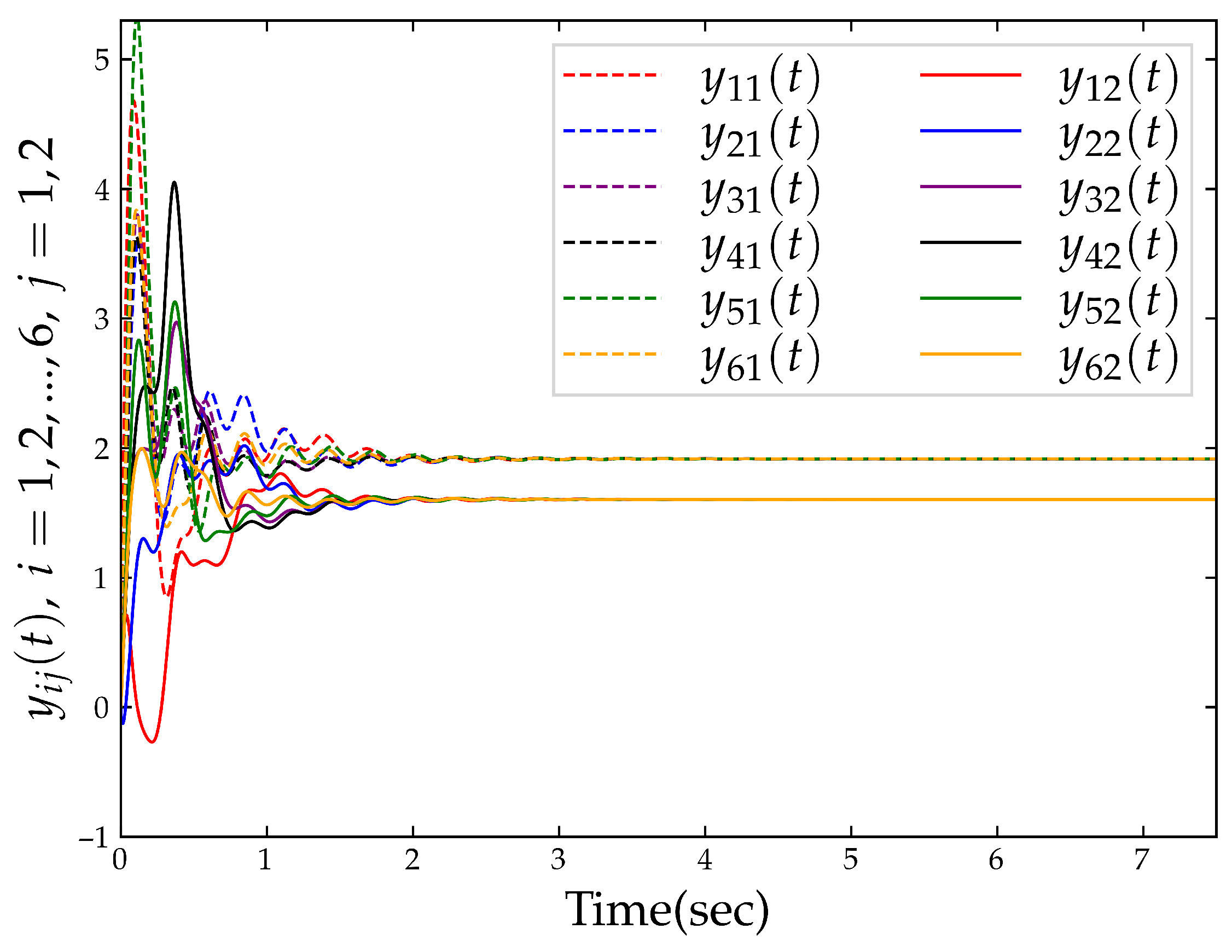

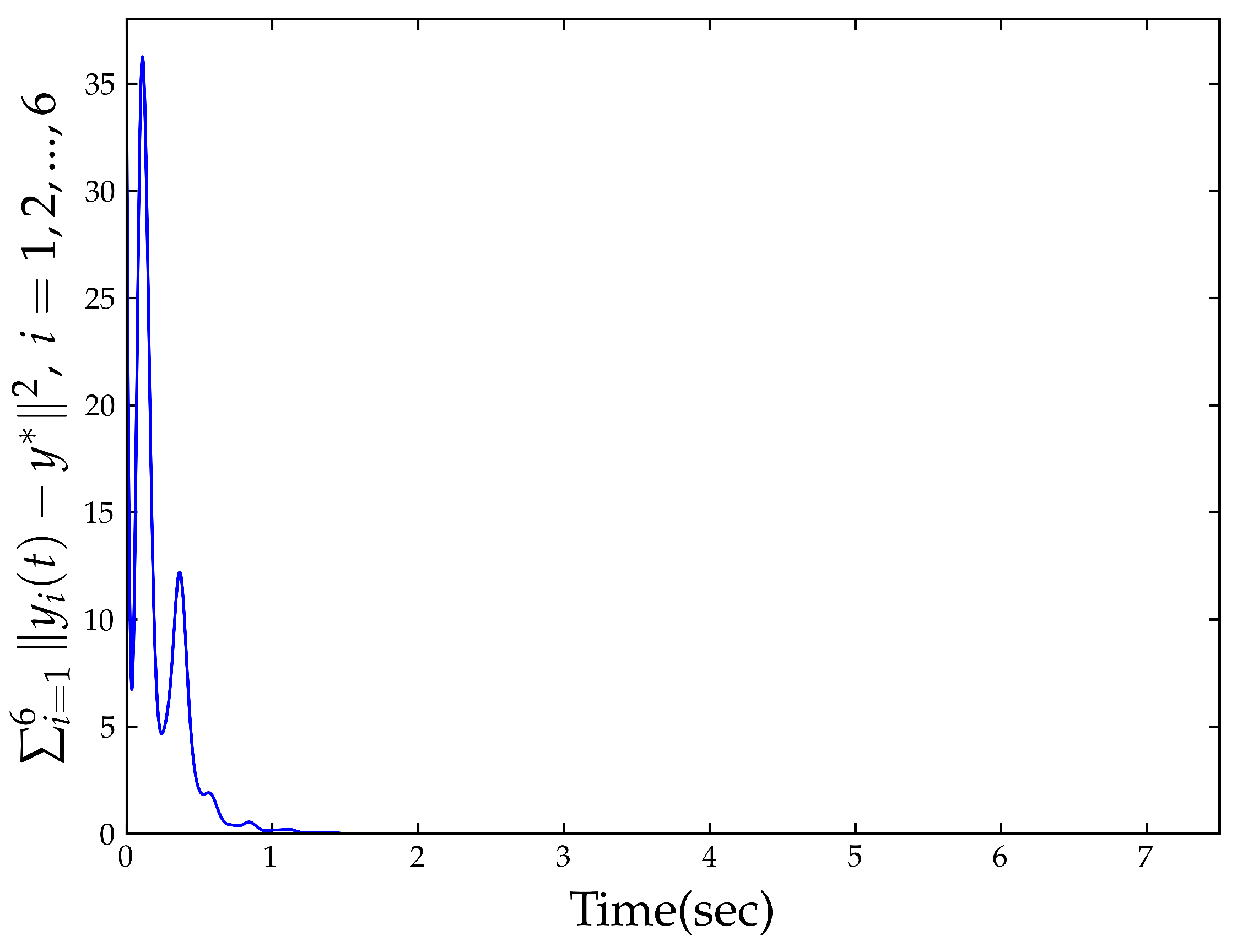

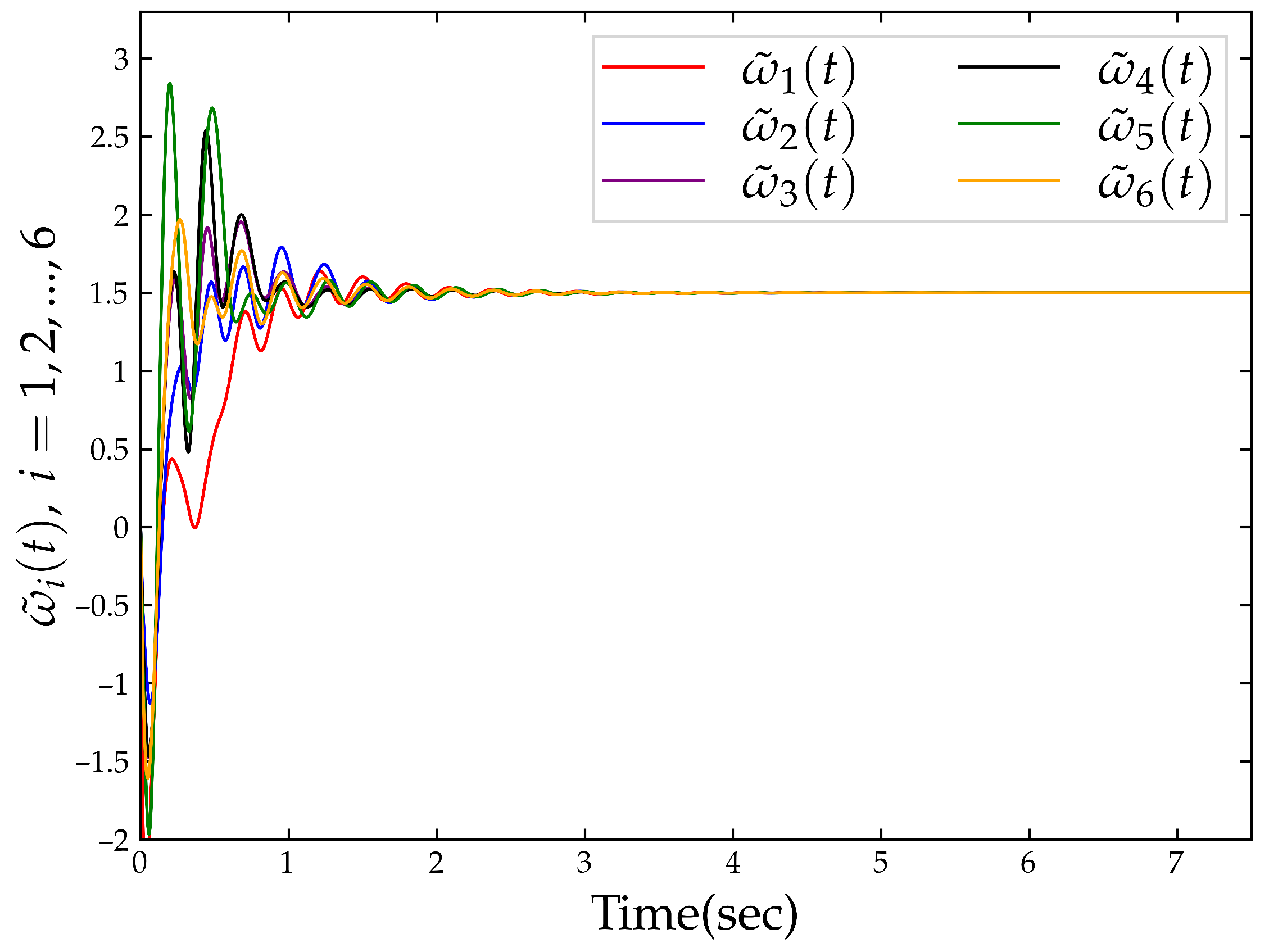

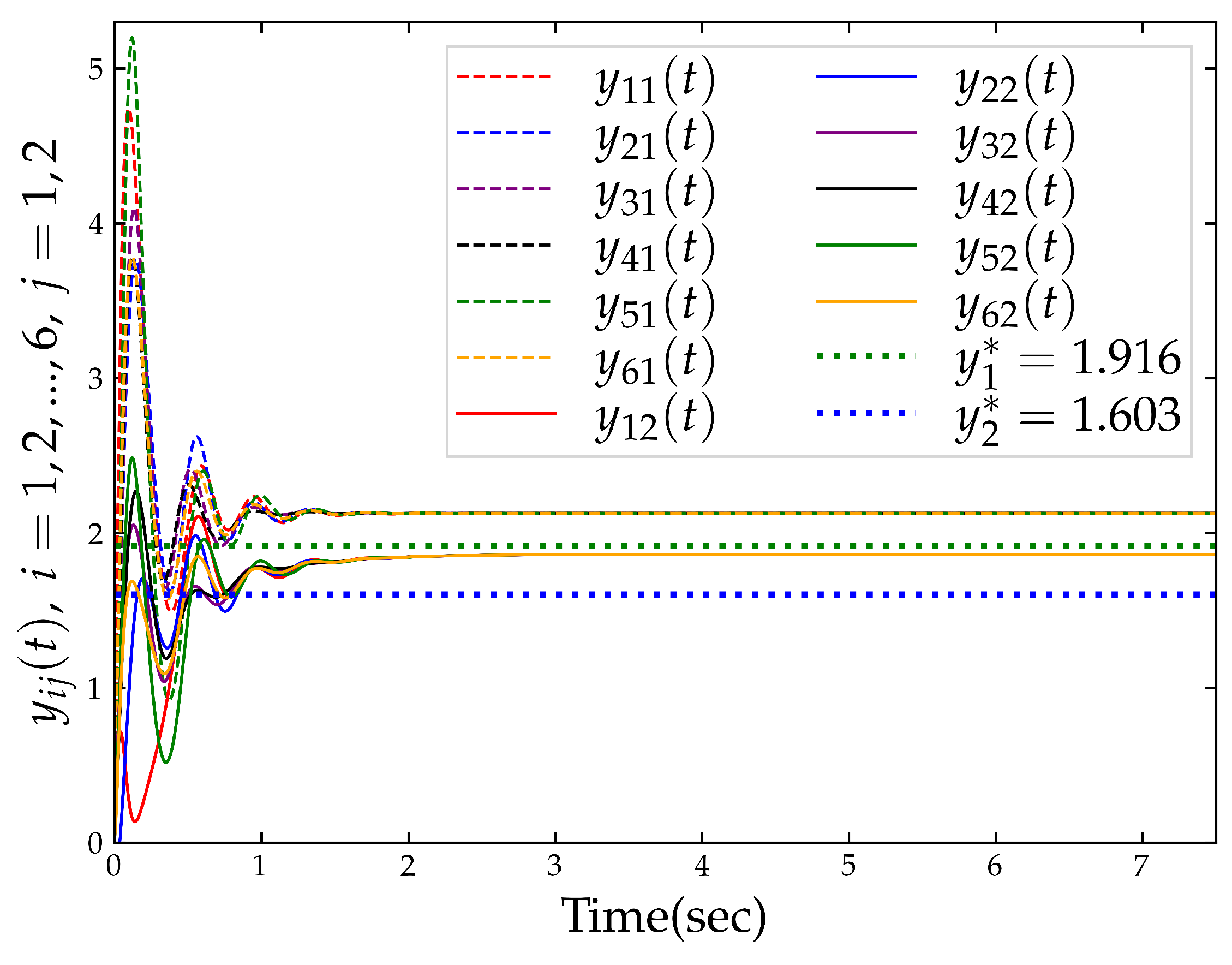

4. Numerical Simulation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| DOPs | Distributed optimization problems |
| ISS | Input-to-state stability |

References

- Cherukuri, A.; Cortes, J. Initialization-free distributed coordination for economic dispatch under varying loads and generator commitment. Automatica 2016, 74, 183–193.

- Li, M.; Andersen, D.G.; Smola, A.; Yu, K. Communication efficient distributed machine learning with the parameter server. Adv. Neural Inf. Process. Syst. 2014, 27, 19–27.

- Yi, X.; Zhang, S.; Yang, T.; Chai, T.; Johansson, K.H. A primal-dual SGD algorithm for distributed nonconvex optimization. IEEE/CAA J. Autom. Sin. 2022, 9, 812–833.

- Beck, A.; Nedic, A.; Ozdaglar, A.; Teboulle, M. Optimal distributed gradient methods for network resource allocation problems. IEEE Trans. Control Netw. Syst. 2014, 1, 64–74.

- Doan, T.T.; Beck, C.L. Distributed resource allocation over dynamic networks with uncertainty. IEEE Trans. Autom. Control 2020, 66, 4378–4384.

- Carnevale, G.; Camisa, A.; Notarstefano, G. Distributed online aggregative optimization for dynamic multirobot coordination. IEEE Trans. Autom. Control 2022, 68, 3736–3743.

- Ning, B.; Han, Q.L.; Zuo, Z. Distributed optimization for multiagent systems: An edge-based fixed-time consensus approach. IEEE Trans. Cybern. 2017, 49, 122–132.

- Olfati-Saber, R.; Fax, J.A.; Murray, R.M. Consensus and cooperation in networked multi-agent systems. Proc. IEEE 2007, 95, 215–233.

- Nedic, A.; Ozdaglar, A. Distributed subgradient methods for multi-agent optimization. IEEE Trans. Autom. Control 2009, 54, 48–61.

- Ho, Y.; Servi, L.; Suri, R. A class of center-free resource allocation algorithms. IFAC Proc. Vol. 1980, 13, 475–482.

- Lü, Q.; Liao, X.; Li, H.; Huang, T. Achieving acceleration for distributed economic dispatch in smart grids over directed networks. IEEE Trans. Netw. Sci. Eng. 2020, 7, 1988–1999.

- Wang, Y.; Zhang, H.; Li, Z. Distributed Optimization Control for the System with Second-Order Dynamic. Mathematics 2024, 12, 3347.

- Shi, Y.; Ran, L.; Tang, J.; Wu, X. Distributed optimization algorithm for composite optimization problems with non-smooth function. Mathematics 2022, 10, 3135.

- Zhou, H.; Zeng, X.; Hong, Y. Adaptive exact penalty design for constrained distributed optimization. IEEE Trans. Autom. Control 2019, 64, 4661–4667.

- Li, W.; Zeng, X.; Liang, S.; Hong, Y. Exponentially convergent algorithm design for constrained distributed optimization via nonsmooth approach. IEEE Trans. Autom. Control 2021, 67, 934–940.

- Guo, G.; Zhang, R.; Zhou, Z.D. A local-minimization-free zero-gradient-sum algorithm for distributed optimization. Automatica 2023, 157, 111247.

- Guo, G.; Zhou, Z.D.; Zhang, R. Distributed fixed-time optimization with time-varying cost: Zero-gradient-sum scheme. IEEE Trans. Circuits Syst. II Express Briefs 2024, 71, 3086–3090.

- Ji, L.; Yu, L.; Zhang, C.; Guo, X.; Li, H. Initialization-free distributed prescribed-time consensus based algorithm for economic dispatch problem over directed network. Neurocomputing 2023, 533, 1–9.

- Lian, M.; Guo, Z.; Wen, S.; Huang, T. Distributed predefined-time algorithm for system of linear equations over directed networks. IEEE Trans. Circuits Syst. II Express Briefs 2023, 71, 2139–2143.

- Su, P.; Wang, T.; Yu, J.; Dong, X.; Li, Q.; Ren, Z.; Tan, Q.; Lv, R.; Liang, Z. Continuous-Time Algorithms for Distributed Optimization Problem on Directed Digraphs. In Proceedings of the 2024 43rd Chinese Control Conference (CCC), Kunming, China, 28–31 July 2024; IEEE: New York, NY, USA, 2024; pp. 5772–5776.

- Wang, J.; Liu, D.; Feng, J.; Zhao, Y. Distributed Optimization Control for Heterogeneous Multiagent Systems under Directed Topologies. Mathematics 2023, 11, 1479.

- Zhu, Y.; Yu, W.; Wen, G.; Ren, W. Continuous-time coordination algorithm for distributed convex optimization over weight-unbalanced directed networks. IEEE Trans. Circuits Syst. II Express Briefs 2018, 66, 1202–1206.

- Lian, M.; Guo, Z.; Wen, S.; Huang, T. Distributed adaptive algorithm for resource allocation problem over weight-unbalanced graphs. IEEE Trans. Netw. Sci. Eng. 2023, 11, 416–426.

- Dhullipalla, M.H.; Chen, T. A Continuous-Time Gradient-Tracking Algorithm for Directed Networks. IEEE Control Syst. Lett. 2024, 8, 2199–2204.

- Zhang, J.; Liu, L.; Wang, X.; Ji, H. Fully distributed algorithm for resource allocation over unbalanced directed networks without global Lipschitz condition. IEEE Trans. Autom. Control 2022, 68, 5119–5126.

- Zhang, J.; Hao, Y.; Liu, L.; Wang, X.; Ji, H. Fully Distributed Continuous-Time Algorithm for Nonconvex Optimization over Unbalanced Digraphs. In Proceedings of the 2023 9th International Conference on Control, Decision and Information Technologies (CoDIT), Rome, Italy, 3–6 July 2023; IEEE: New York, NY, USA, 2023; pp. 1–6.

- Dai, H.; Jia, J.; Yan, L.; Fang, X.; Chen, W. Distributed fixed-time optimization in economic dispatch over directed networks. IEEE Trans. Ind. Inform. 2020, 17, 3011–3019.

- Li, Z.; Duan, Z. Cooperative Control of Multi-Agent Systems: A Consensus Region Approach; CRC Press: Boca Raton, FL, USA, 2017.

- Zhou, S.; Wei, Y.; Cao, J.; Liu, Y. Multi/Single-Stage Sliding Manifold Approaches for Prescribed-Time Distributed Optimization. IEEE Trans. Autom. Control 2025, 70, 2794–2801.

- Li, H.; Zhang, M.; Yin, Z.; Zhao, Q.; Xi, J.; Zheng, Y. Prescribed-time distributed optimization problem with constraints. ISA Trans. 2024, 148, 255–263.

- Li, Z.; Ding, Z.; Sun, J.; Li, Z. Distributed adaptive convex optimization on directed graphs via continuous-time algorithms. IEEE Trans. Autom. Control 2017, 63, 1434–1441.

- Khalil, H.K. Nonlinear Systems, 3rd ed.; Prentice Hall Inc.: Upper Saddle River, NJ, USA, 2002.


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, Q.; Jiang, C. A Continuous-Time Distributed Optimization Algorithm for Multi-Agent Systems with Parametric Uncertainties over Unbalanced Digraphs. Mathematics 2025, 13, 2692. https://doi.org/10.3390/math13162692