Abstract

We propose a novel Bayesian wavelet regression approach using a three-component spike-and-slab prior for wavelet coefficients, combining a point mass at zero, a moment (MOM) prior, and an inverse moment (IMOM) prior. This flexible prior supports small and large coefficients differently, offering advantages for highly dispersed data where wavelet coefficients span multiple scales. The IMOM prior’s heavy tails capture large coefficients, while the MOM prior is better suited for smaller non-zero coefficients. Further, our method introduces innovative hyperparameter specifications for mixture probabilities and scale parameters, including generalized logit, hyperbolic secant, and generalized normal decay for probabilities, and double exponential decay for scaling. Hyperparameters are estimated via an empirical Bayes approach, enabling posterior inference tailored to the data. Extensive simulations demonstrate significant performance gains over two-component wavelet methods. Applications to electroencephalography and noisy audio data illustrate the method’s utility in capturing complex signal characteristics. We implement our method in an R package, NLPwavelet (≥1.1).

Keywords:

three-component spike-and-slab prior; wavelet analysis; nonlocal prior; generalized logit decay; hyperbolic secant decay; generalized normal decay

MSC:

62G08

1. Introduction

We focus on spike-and-slab mixture models for wavelet-based Bayesian nonparametric regression. Several existing approaches place spike-and-slab mixture priors on the wavelet coefficients, such as mixtures of two Gaussian distributions with different standard deviations [1], mixtures of a Gaussian and a point mass at zero [2,3,4], mixtures of a heavy-tailed distribution and a point mass at zero [5,6], mixtures of a logistic distribution and a point mass at zero [7], and mixtures of a nonlocal prior and a point mass at zero [8]. Nonlocal priors [9] are a class of priors whose density vanishes at the null value (often zero) of the parameter, unlike local priors, whose density is positive at the null value. In contrast to the other works mentioned above, which used traditional local priors for the wavelet coefficients, ref. [8] pioneered the use of nonlocal priors for wavelet regression. Specifically, ref. [8] used two different nonlocal priors, namely the moment (MOM) prior and the inverse moment (IMOM) prior, in their mixture model. In this work, we extend the previous approaches by proposing a three-component spike-and-slab mixture model for the wavelet coefficients where, along with a point mass for the spike part, a mixture of nonlocal priors is used to model the slab component. In addition, we introduce novel hyperparameter specifications that are shown to provide improved estimates in extensive simulation experiments with highly dispersed data.

In the Bayesian paradigm, nonlocal priors have been shown to encourage model selection parsimony and selective shrinkage (unlike local priors) for spurious coefficients [10,11]. They create a gap around zero, yielding harder exclusion and lower bias for sizable effects. In contrast, local shrinkage priors provide continuous shrinkage that is particularly suitable for estimation but less effective for variable selection unless combined with explicit selection rules. Previous work [12] has observed, in the context of high-dimensional genomics data, that different nonlocal priors provide better support for large and small regression coefficients. In wavelet regression, the wavelet coefficients at multiple resolution levels capture both location and scale characteristics of the underlying function [13]. If the underlying function is highly dispersed, its energy is spread across a wide range of location or scale components. For such a function, while most of the wavelet coefficients will likely be small or near zero, non-zero wavelet coefficients will span across multiple scales. In other words, no single scale will dominate the wavelet coefficients, as different scales will capture different portions of the signal’s energy. This will lead to significant coefficients at both coarse and fine scales. We conjecture that, if the underlying function is highly dispersed, a mixture of nonlocal priors will provide better support to the distribution of the wavelet coefficients compared to individual nonlocal priors. Specifically, in this work, we consider for the wavelet coefficients a prior that is a mixture of a point mass at zero, a MOM prior, and an IMOM prior.

Our motivation for combining MOM and IMOM priors stems from the fact that they offer complementary strengths in sparse high-dimensional regression. The MOM prior places more mass around moderate non-zero values, thereby offering greater sensitivity to small but meaningful signals. On the other hand, the IMOM prior has heavier tails, allowing it to support large coefficients better. In the context of wavelet regression of highly dispersed data, a single prior, MOM or IMOM, may over-shrink large coefficients or over-allow small noise fluctuations. A mixture of MOM and IMOM provides adaptive flexibility, simultaneously supporting both ends of the coefficient spectrum. This idea echoes adaptive shrinkage principles in sparse Bayesian learning. From a signal processing perspective, the heterogeneous scaling behavior of wavelet coefficients for highly dispersed signals motivates a prior that can adaptively vary shrinkage across scales. This hybrid slab also enables the prior predictive distribution to cover a broader range of plausible signals, reducing prior-data conflict. While our empirical results support this mixture’s performance, the theoretical justification lies in its capacity to model heterogeneity in signal strengths, a feature not adequately captured by any one nonlocal prior alone.

In Bayesian wavelet regression, the probability weights associated with different component distributions of a spike-and-slab mixture prior, henceforth called mixture probabilities, and the scaling parameters of the component distributions are often governed by multiple hyperparameters [2,3,8]. For the mixture probabilities at different resolution levels, previous work considered exponential decay specification [3], Bernoulli distribution [2], and logit specification [8]. Further, for the scaling parameters, exponential decay [3] and polynomial decay specifications [8] have been considered. In this work, we propose several novel specifications that flexibly model the variations in the mixture probabilities and scaling components. For the mixture probabilities, we consider a generalized logit decay, a hyperbolic secant decay, and a generalized normal decay, whereas for the scaling parameter, we consider a double exponential decay. Each of these specifications is controlled by a few hyperparameters. Following an empirical Bayes approach, we estimate the hyperparameters from the data and develop a posterior inference conditional on the hyperparameter estimates.

Through extensive simulation studies, we assess the performance gains of our approach under the different hyperparameter specifications, comparing it to two-component spike-and-slab prior-based wavelet methods. Finally, applications to real-world data, including electroencephalography (EEG) data from a meditation study and audio data from a noisy musical recording, illustrate the practical utility of the proposed method.

Although this work focuses on Bayesian spike-and-slab priors for wavelet analysis, it is still relevant to acknowledge other types of priors explored in the wavelet literature. These include scale mixtures of Gaussians [14,15,16,17], hidden Markov model-based priors [18], the generalized Gaussian distribution prior [19], Jeffreys’ prior [20], the Bessel K Form prior [21], the double Weibull prior [22], Bayes factor thresholding based on mixtures of conjugate priors [23], the beta prior [24], and the logistic prior [25]. For comparative reviews and summaries of wavelet-based nonparametric regression methods, see [26,27].

In what follows, Section 2 describes the proposed Bayesian hierarchical model along with the hyperparameter specifications, and Section 3 describes the empirical Bayes inference procedure. Subsequently, Section 4 describes the simulation experiment and results. The real data applications appear in Section 5 and Section 6. Finally, Section 7 concludes with an overall discussion of our work and relevant remarks. Theoretical proofs are included in Appendix A.

2. Bayesian Hierarchical Model

2.1. Observation Model

Suppose $y_1, \ldots, y_n$ represent n noisy observations from an unknown function $f$, which we aim to estimate. We consider the observation model $y_i = f(t_i) + \varepsilon_i$, $i = 1, \ldots, n$, where $t_i$ represents the equispaced sampling points, and the errors are assumed to be independent and identically distributed (i.i.d.) normal random variables, $\varepsilon_i \sim N(0, \sigma^2)$, with unknown variance $\sigma^2$. Let $\mathbf{y} = (y_1, \ldots, y_n)^\top$ denote the vector of observations, $\mathbf{f} = (f(t_1), \ldots, f(t_n))^\top$ the vector of true functional values, and $\boldsymbol{\varepsilon} = (\varepsilon_1, \ldots, \varepsilon_n)^\top$ the vector of errors. The observation model can be expressed in matrix form as

$$\mathbf{y} = \mathbf{f} + \boldsymbol{\varepsilon}, \qquad \boldsymbol{\varepsilon} \sim N(\mathbf{0}, \sigma^2 I_n), \tag{1}$$

where $I_n$ is the n-dimensional identity matrix. In wavelet regression, the goal is to estimate the function $f$ from the observations $\mathbf{y}$ by decomposing $f$ into wavelet basis functions. This decomposition allows us to exploit the multiscale nature of wavelets to capture both local and global features of the function. A chief feature of wavelet regression is its ability to adapt to different levels of smoothness and to handle noisy data efficiently, making it especially useful in nonparametric function estimation problems.

2.2. Wavelet Coefficient Model

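We represent $\mathbf{f}$ using an orthogonal wavelet basis matrix as $\mathbf{f} = W^\top \boldsymbol{\theta}$ [28], where $W$ is the orthogonal basis matrix and $\boldsymbol{\theta} = W\mathbf{f}$ is a vector whose elements include the scaling coefficient at the coarsest resolution level along with the wavelet coefficients at all resolution levels. Let $\mathbf{d} = W\mathbf{y}$ denote the vector of empirical wavelet coefficients. Since $W$ is orthogonal, we can express $\mathbf{d}$ as $\mathbf{d} = \boldsymbol{\theta} + \mathbf{e}$, where $\mathbf{e} = W\boldsymbol{\varepsilon}$ represents the transformed error vector with $\mathbf{e} \sim N(\mathbf{0}, \sigma^2 I_n)$.

Suppose $\theta_{lj}$ denotes the wavelet coefficient at position j and resolution level l, with $d_{lj}$ defined similarly for the empirical wavelet coefficients. Then, the model in terms of individual coefficients is given by

$$d_{lj} = \theta_{lj} + e_{lj}, \qquad e_{lj} \sim N(0, \sigma^2). \tag{2}$$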
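The role of orthogonality in this subsection can be checked numerically. The following is a minimal sketch, not the implementation used in this work: it substitutes a hand-written orthonormal Haar transform for the Daubechies wavelets used in the analyses, and the names `haar_dwt`, `haar_idwt`, and `energy` are illustrative assumptions. It shows that an orthogonal transform preserves the sum of squares and is exactly invertible, which is why i.i.d. Gaussian errors keep the same variance in the coefficient domain.

```python
import math
import random


def haar_dwt(signal):
    """Orthonormal Haar transform of a list whose length is a power of two.

    Returns [scaling coefficient, coarsest detail, ..., finest details].
    """
    approx = list(signal)
    details = []
    while len(approx) > 1:
        pairs = [(approx[i], approx[i + 1]) for i in range(0, len(approx), 2)]
        level = [(a - b) / math.sqrt(2.0) for a, b in pairs]
        approx = [(a + b) / math.sqrt(2.0) for a, b in pairs]
        details = level + details  # coarser levels are stored first
    return approx + details


def haar_idwt(coeffs):
    """Inverse of haar_dwt (the transpose of the orthogonal Haar matrix)."""
    approx = list(coeffs[:1])
    pos = 1
    while pos < len(coeffs):
        level = coeffs[pos:pos + len(approx)]
        pos += len(approx)
        finer = []
        for a, d in zip(approx, level):
            finer.extend([(a + d) / math.sqrt(2.0), (a - d) / math.sqrt(2.0)])
        approx = finer
    return approx


def energy(values):
    """Sum of squares of a sequence."""
    return sum(v * v for v in values)


if __name__ == "__main__":
    rng = random.Random(0)
    n = 16
    f = [math.sin(2.0 * math.pi * i / n) for i in range(n)]  # toy true function
    y = [v + rng.gauss(0.0, 0.5) for v in f]                 # noisy observations
    d = haar_dwt(y)                                          # empirical coefficients
    theta = haar_dwt(f)                                      # true coefficients
    errors = [a - b for a, b in zip(y, f)]
    transformed_errors = [a - b for a, b in zip(d, theta)]
    # The error energy is unchanged by the orthogonal transform.
    print(abs(energy(errors) - energy(transformed_errors)) < 1e-9)
```

Because the transform is linear, the difference between empirical and true coefficients is exactly the transform of the error vector, so the coefficient-level model inherits the noise variance of the observation model.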

2.3. Mixture Prior for the Wavelet Coefficients

For the wavelet coefficient , we consider a spike-and-slab mixture prior that is a mixture of three components—a MOM prior, an IMOM prior, and a point mass at zero—given by

where and are mixture probabilities. The prior with order r and variance component , involving the scale parameter , has the following density function:

where and . The prior with shape parameter and variance component , involving the scale parameter , has the following density function:

The conditional density of the wavelet coefficient given that can be expressed as

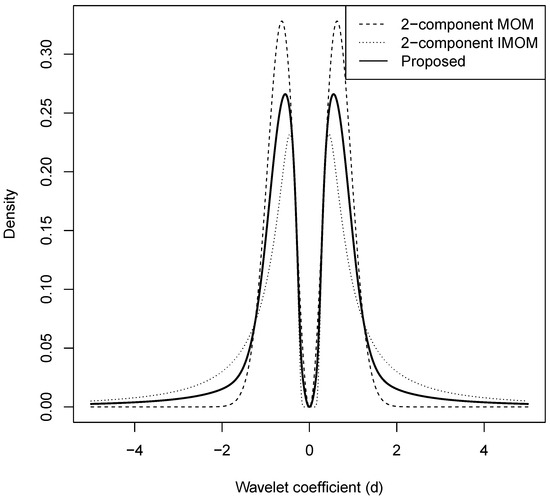

Figure 1 shows plots of our proposed three-component spike-and-slab mixture model (solid line) for with , , , and along with two-component spike-and-slab mixture models based on MOM (dashed line) and IMOM (dotted line) priors with , , and .

Figure 1.

Plots of our proposed three-component spike-and-slab mixture model (solid line) with , , , and along with two-component spike-and-slab mixture models based on MOM (dashed line) and IMOM (dotted line) priors with , , and .
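To make the shapes of the two slab components concrete, the sketch below evaluates the normal-based MOM density and the IMOM density in the textbook parameterization of the nonlocal prior literature, with the scale entering as the product of the scale parameter and the variance component. This parameterization and the function names `mom_density`, `imom_density`, and `trapezoid` are assumptions for illustration and may differ from the exact forms used in this paper. Both densities are zero at the origin, and the IMOM density has polynomial tails while the MOM density has Gaussian tails.

```python
import math


def mom_density(theta, tau, sigma2, r=1):
    """Normal-based MOM density of order r with scale s = tau * sigma2."""
    s = tau * sigma2
    double_factorial = 1.0
    for i in range(1, r + 1):
        double_factorial *= 2 * i - 1  # builds (2r - 1)!!
    base = math.exp(-theta * theta / (2.0 * s)) / math.sqrt(2.0 * math.pi * s)
    return theta ** (2 * r) / (double_factorial * s ** r) * base


def imom_density(theta, tau, sigma2, nu=1.0):
    """IMOM density with shape nu and scale s = tau * sigma2."""
    if abs(theta) < 1e-100:
        return 0.0  # the density is numerically zero this close to the origin
    s = tau * sigma2
    log_density = (0.5 * nu * math.log(s) - math.lgamma(0.5 * nu)
                   - (nu + 1.0) * math.log(abs(theta)) - s / (theta * theta))
    return math.exp(log_density)


def trapezoid(fn, lower, upper, steps):
    """Composite trapezoidal rule, used here only to check normalization."""
    h = (upper - lower) / steps
    total = 0.5 * (fn(lower) + fn(upper))
    for i in range(1, steps):
        total += fn(lower + i * h)
    return total * h


if __name__ == "__main__":
    for theta in (0.0, 0.5, 1.0, 3.0, 10.0):
        print(theta, mom_density(theta, 1.0, 1.0), imom_density(theta, 1.0, 1.0))
```

At a large value such as 10 with unit scales, the IMOM density is many orders of magnitude larger than the MOM density, which is the heavy-tail behavior the mixture exploits for large coefficients.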

2.4. Hyperparameter Specifications

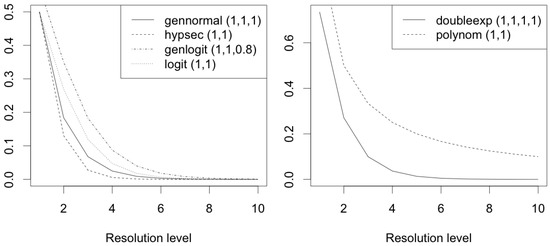

The mixture prior given in (3) depends on the mixture probabilities and , scale parameters and , order r, and shape parameter . This section considers different specifications for , , , and . Previous work in wavelet regression considered two-component spike-and-slab mixture priors, where the mixture probabilities were specified using exponential decay specification [3], Bernoulli distribution [2], and logit specification [8]. In this work, we examine three specifications for the mixture probabilities that model their variation across resolution levels flexibly and, to the best of our knowledge, have not previously been used in wavelet regression. These novel specifications were motivated by an exploratory data analysis in which, for multiple highly dispersed datasets, we observed how the wavelet coefficients changed with resolution level. We compare these novel specifications with logit specification for and , given by where [8]. Figure 2 shows, in the left panel, the plots of the different specifications for the mixture probabilities against resolution level, with specific values of the hyperparameters. The novel specifications are described as follows:

Figure 2.

Plots of the different specifications considered for the mixture probabilities and (logit, generalized logit, hyperbolic secant, and generalized normal) and scale parameters and (polynomial decay and double exponential decay) against resolution level, with specified values of the hyperparameters.

- (a) Generalized logit or Richards decay specifications, given by

  This form corresponds to a flexible S-shaped decay curve that reduces to standard logistic decay when (or ), which controls the steepness of the curve, is equal to one.

- (b) Hyperbolic secant decay specifications, given by

  This form, although less intuitive, is also sigmoid-like but with heavier tails and slower decay than the logistic form, indicating that the mixture probabilities approach one more slowly with a smoother transition. (or ) controls steepness and (or ) controls shift.

- (c) Generalized normal decay specifications, given by

  This form is the most flexible and can mimic the normal CDF, the Laplace CDF, and many other distributions, but it is also the most complex. Whereas (or ) controls tail behavior, with larger values indicating lighter tails, the others control scale and shift.

For scale parameters of spike-and-slab mixture priors, previously considered specifications include exponential decay [3] and polynomial decay [8]. Here, for and , we consider the polynomial decay specification given by with . In addition, to model the variations in the scale parameters more flexibly, we propose the following:

- (d) Double exponential decay specifications, given by

Figure 2 shows, in the right panel, the plots of the different specifications for the scale parameters against resolution level, with specific values of the hyperparameters.

Each of the proposed specifications admits interpretable controls over rate and shape of decay across scales, crucial for adaptivity in multi-resolution modeling, and is governed by a small number of hyperparameters. Note that the hyperparameters for the mixture probabilities are superscripted with and those for the scale parameters are superscripted with . In our simulation studies described in Section 4, we analyze each simulated dataset using configurations arising out of the combinations of the above specifications for the mixture probabilities and the scale parameters. While no formal optimality results are available for these specific forms, their empirical performance, as shown in Section 4, supports their practical relevance. Let generically denote the set of all hyperparameters for any given configuration.
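As a small illustration of how one extra shape hyperparameter generalizes the logit form, the sketch below uses the textbook generalized logistic (Richards) curve. This is not necessarily the parameterization of specification (a): the linear predictor `a0 - a1 * level`, the exponent convention, and the names `logistic_curve` and `richards_curve` are all hypothetical choices made for illustration. The only property it is meant to show is that the Richards curve coincides with the logistic curve when the shape parameter equals one.

```python
import math


def logistic_curve(level, a0, a1):
    """Standard logistic curve evaluated at the linear predictor a0 - a1 * level."""
    return 1.0 / (1.0 + math.exp(-(a0 - a1 * level)))


def richards_curve(level, a0, a1, shape):
    """Textbook generalized logistic (Richards) curve.

    With shape == 1 this is exactly the logistic curve; other positive values
    make the curve asymmetric around its inflection point.
    """
    return (1.0 + math.exp(-(a0 - a1 * level))) ** (-1.0 / shape)


if __name__ == "__main__":
    # Hypothetical hyperparameter values, chosen only to display the curves.
    for level in range(1, 9):
        print(level,
              round(logistic_curve(level, 4.0, 1.0), 4),
              round(richards_curve(level, 4.0, 1.0, 0.5), 4),
              round(richards_curve(level, 4.0, 1.0, 2.0), 4))
```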

3. Inference

For inference in our proposed Bayesian hierarchical wavelet regression model based on three-component spike-and-slab mixture priors, we adopt the empirical Bayes approach [5,8]. This methodology estimates the hyperparameters from the data and performs posterior inference conditioned on these estimated hyperparameters. For simplicity, in our simulation analysis, we set and . Further, we estimate the error variance using the median absolute deviation estimator [29], which is a well-established practice in wavelet regression [2,3,5,6].
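The median absolute deviation estimator mentioned above can be sketched in a few lines. The version below follows the common convention in the wavelet literature of taking the median of the absolute finest-level empirical coefficients and dividing by 0.6745; whether this matches the exact variant used here is an assumption, and the function name `mad_sigma` is illustrative.

```python
import random
import statistics


def mad_sigma(finest_detail_coeffs):
    """Robust estimate of the noise standard deviation.

    For pure N(0, sigma^2) noise, the median of |d| equals about 0.6745 * sigma,
    so dividing by 0.6745 recovers sigma. A small fraction of large signal
    coefficients barely moves the median, which is why this estimator is robust.
    """
    return statistics.median(abs(d) for d in finest_detail_coeffs) / 0.6745


if __name__ == "__main__":
    rng = random.Random(0)
    coeffs = [rng.gauss(0.0, 2.0) for _ in range(4096)]  # noise with sigma = 2
    for i in range(0, 4096, 50):
        coeffs[i] += 40.0                                # a few large signal coefficients
    sigma_hat = mad_sigma(coeffs)
    print(sigma_hat, sigma_hat ** 2)  # estimates of sigma and of the error variance
```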

3.1. Hyperparameter Estimation

Let denote the value of the point mass function at x. The following result is used to obtain the hyperparameter estimates.

Result 1.

Integrating out from the wavelet coefficient model (2) using the mixture prior of the wavelet coefficients in (3) and using the Laplace approximation for the IMOM prior component, we get the marginal distribution of the empirical wavelet coefficients, , as

where, in the offshoot of the MOM component,

and in the offshoot of the IMOM component,

is the global maximum of , and , with .

The proof of Result 1 is given in Appendix A. Using (4), the marginal likelihood function is approximately given by

This is a function only of the data and the hyperparameters. Hence, we maximize this function with respect to the hyperparameters to obtain their estimates, . With , the prior distribution in (3) is fully known.
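The idea of maximizing a marginal likelihood over hyperparameters can be illustrated on a deliberately simplified case. The sketch below is not the three-component procedure of this section: it assumes a two-component prior (point mass plus a MOM slab of order one) at a single resolution level, a known error variance, and a plain grid search, and all function names are hypothetical. For this simplified case the MOM marginal has a closed form, obtained by completing the square in the Gaussian kernel and taking the second moment of the resulting Gaussian, so no Laplace approximation is needed.

```python
import math


def normal_pdf(x, var):
    """Density of N(0, var) evaluated at x."""
    return math.exp(-x * x / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def mom_marginal(d, tau, sigma2):
    """Closed-form marginal of d = theta + e, with theta ~ MOM(order 1, scale
    tau * sigma2) and e ~ N(0, sigma2)."""
    factor = 1.0 / (1.0 + tau) + tau * d * d / (sigma2 * (1.0 + tau) ** 2)
    return normal_pdf(d, sigma2 * (1.0 + tau)) * factor


def mom_marginal_numeric(d, tau, sigma2, half_width=40.0, steps=80000):
    """Same marginal by trapezoidal integration over theta (sanity check only)."""
    s = tau * sigma2
    h = 2.0 * half_width / steps
    total = 0.0
    for i in range(steps + 1):
        theta = -half_width + i * h
        weight = 0.5 if i in (0, steps) else 1.0
        prior = theta * theta / s * normal_pdf(theta, s)
        total += weight * normal_pdf(d - theta, sigma2) * prior
    return total * h


def log_marginal_likelihood(coeffs, gamma, tau, sigma2):
    """Log marginal likelihood under the simplified two-component prior.

    Assumes coefficients of moderate size, so the densities do not underflow.
    """
    return sum(
        math.log(gamma * mom_marginal(d, tau, sigma2)
                 + (1.0 - gamma) * normal_pdf(d, sigma2))
        for d in coeffs
    )


def empirical_bayes_grid(coeffs, sigma2, gammas, taus):
    """Return the (gamma, tau) grid point that maximizes the marginal likelihood."""
    _, gamma_hat, tau_hat = max(
        (log_marginal_likelihood(coeffs, g, t, sigma2), g, t)
        for g in gammas for t in taus
    )
    return gamma_hat, tau_hat
```

In practice a numerical optimizer would replace the grid, and the hyperparameters would be those of the chosen decay specifications rather than a single mixture probability and scale.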

3.2. Posterior Distribution

The following two results provide the posterior estimates of the wavelet coefficients.

Result 2.

The conditional posterior density of the wavelet coefficient , given that and the hyperparameter estimates , by using the Laplace approximation for the IMOM prior component, can be expressed as

where

and

The proof is given in Appendix A.

Result 3.

The posterior expectation of the wavelet coefficients is

where

The proof of Result 3 is immediate from the proof of Result 2 and hence is omitted. Let denote the vector of posterior means of the wavelet coefficients. We use in the inverse discrete wavelet transform to obtain the posterior mean of the unknown function f, given by

4. Simulation Study

In this section, we conduct an extensive simulation analysis to comparatively evaluate the performance of the proposed method with different hyperparameter configurations. We consider three well-known test functions proposed by [30]—blocks, bumps, and doppler—that are used as standard test functions in the wavelet literature. However, we modify the coefficients used by [30] to obtain more highly dispersed signals. We define our modified test functions as

- ,

- ;

- ,

- ,

- ;

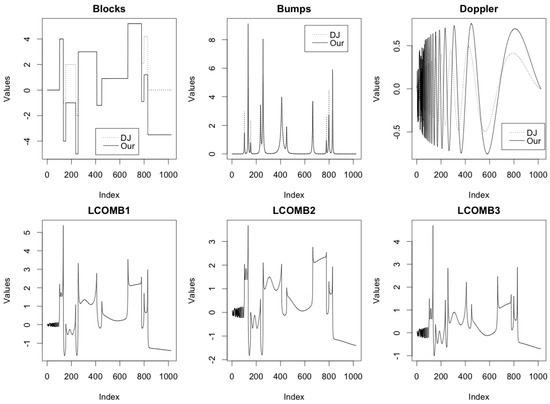

Figure 3 shows the plots of the test functions blocks, bumps, and doppler using Donoho–Johnstone specifications (dashed line) and our specifications (solid line), and lcomb1, lcomb2, and lcomb3 based on our specifications, all evaluated at 1024 equally spaced points in (0,1). We evaluate each considered test function at n = 512, 1024, 2048, and 4096 equidistant points in the interval (0,1) and add random Gaussian noise with mean 0 to generate data with signal-to-noise ratio, SNR . For each combination of n and SNR, we consider 100 replications. The simulated datasets are analyzed using the proposed methodology with eight different hyperparameter configurations described in Section 2. For comparison, we also analyzed the datasets using individual MOM and IMOM prior-based two-component mixture models [8]. Thus, a total of 24 analysis methods were applied to each dataset. Note that the prior literature [8] has already shown that nonlocal prior-based wavelet analysis generally performs better than other existing wavelet-based methods such as sure [28], BayesThresh [3], cv [31], fdr [32], and Ebayesthresh [6]. So, for brevity, we do not compare with these methods in this work. For wavelet transformation, we consider the Daubechies least asymmetric wavelet with six vanishing moments and periodic boundary conditions. Wavelet computations are implemented using the R package wavethresh [33].

Figure 3.

Plots of the test functions blocks, bumps, and doppler using the Donoho–Johnstone (DJ) specifications (dashed line) and our specifications (solid line), and three linear combinations of them (lcomb1, lcomb2, and lcomb3) based on our specifications, all evaluated at 1024 equally spaced points in (0,1).
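The data-generating step of such a simulation can be sketched as follows. The sketch uses the standard Donoho–Johnstone doppler function rather than the modified, more dispersed version defined in this section, defines SNR as the ratio of the standard deviation of the signal to the noise standard deviation (a common convention that is assumed here), and uses an SNR of 5 purely as a placeholder. The names `doppler`, `simulate`, and `mse` are illustrative.

```python
import math
import random
import statistics


def doppler(t, eps=0.05):
    """Standard Donoho-Johnstone doppler test function on (0, 1)."""
    return math.sqrt(t * (1.0 - t)) * math.sin(2.0 * math.pi * (1.0 + eps) / (t + eps))


def simulate(n, snr, seed=0):
    """Evaluate the test function at n equispaced points and add Gaussian noise.

    The noise standard deviation is chosen so that sd(signal) / sigma == snr.
    """
    rng = random.Random(seed)
    grid = [(i + 1) / (n + 1) for i in range(n)]  # equispaced points inside (0, 1)
    f = [doppler(t) for t in grid]
    sigma = statistics.pstdev(f) / snr
    y = [v + rng.gauss(0.0, sigma) for v in f]
    return grid, f, y, sigma


def mse(estimate, truth):
    """Mean squared error between an estimate and the true function values."""
    return sum((a - b) ** 2 for a, b in zip(estimate, truth)) / len(truth)


if __name__ == "__main__":
    for n in (512, 1024, 2048, 4096):
        _, f, y, sigma = simulate(n, snr=5.0)
        # MSE of the raw noisy data; a denoiser should bring this well below sigma^2.
        print(n, round(mse(y, f), 5), round(sigma ** 2, 5))
```

Averaging `mse` over replications (different seeds) for each method and each combination of n and SNR gives the kind of summary on which the method comparison is based.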

Table 1 presents, for each test function and analysis method, the number of (n, SNR) combinations where the method achieved the lowest mean squared error (MSE). For each test function, the method with the highest frequency of best performance (i.e., the lowest MSE) is highlighted in bold. We observe the following:

Table 1.

Method comparison: Data were simulated for each test function across 12 combinations of sample size (n) and SNR, with 100 replications per combination. A total of 24 analysis methods were applied to each dataset—8 methods with MOM-based two-component mixture prior, 8 methods with IMOM-based two-component mixture prior, and 8 methods with the proposed three-component mixture prior. The average MSE was computed for each method across the replications. The table presents, for each test function and analysis method, the number of combinations where the method achieved the lowest MSE. For each test function, the method with the highest frequency of best performance is highlighted in bold.

- (a) For every function, the highest frequency of best performance was shown by a three-component mixture method (with one tie with a two-component mixture method for the blocks function), which is proposed in the current work.

- (b) Out of all eight three-component mixture methods, the one with generalized normal specification for the mixture probabilities and double exponential decay specification for the scale parameters (mixture-gennormal-doubleexp) showed the maximum number of best performances in total (12 times) for all the test functions. This was followed by the method using logit specification for the mixture probabilities and polynomial decay specification for the scale parameters (mixture-logit-polynom) (11 times) and the method using generalized normal specification for the mixture probabilities and polynomial decay specification for the scale parameters (mixture-gennormal-polynom) (9 times).

- (c) Considering only the test functions lcomb1, lcomb2, and lcomb3 that represent signals with mixed characteristics in various proportions, the mixture-gennormal-doubleexp method showed the maximum number of best performances (10 times).

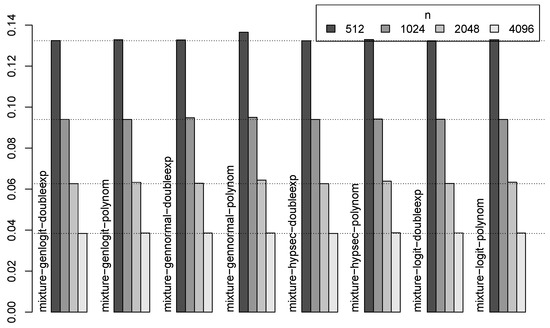

For real data, the SNR and the original function are not known. So, next, in Figure 4, we show, for each of the eight three-component mixture methods, the MSE for the four different sample sizes (n), averaged over the three SNRs and six test functions considered in our study. Overall, methods with a double exponential decay specification for the scale parameters performed better. Specifically, for the two largest sample sizes (2048 and 4096), the method with hyperbolic secant and double exponential decay specifications (mixture-hypsec-doubleexp) and the method with generalized logit and double exponential decay specifications (mixture-genlogit-doubleexp) provided the top performances. Notably, the method with generalized normal and polynomial decay specifications performed worse in Figure 4, even though the methods with generalized normal specifications showed the best performance in Table 1. This suggests that the methods with generalized normal specifications, although they most often provide the best denoising, may sometimes produce large bias that negatively affects their overall average performance. So, the results obtained with them should be validated by field knowledge or external means (such as auditory assessment for sound signals).

Figure 4.

For the proposed three-component mixture-based methods, MSE for different sample sizes (n), averaged over 3 SNRs and 6 test functions.

5. EEG Meditation Study

We demonstrate the utility and flexibility of the proposed methodology through the analysis of EEG data from a meditation study, investigating the relation between mind wandering and meditation practice. Detailed information about the study is provided in [34], and the dataset is available on the OpenNeuro platform (see the Supplementary Materials Section S1 for the link). The meditation experiment involved 24 subjects—12 experienced meditators (10 males, 2 females) and 12 novices (2 males, 10 females). Participants meditated while being interrupted approximately every two minutes to report their level of concentration and mind wandering via three probing questions. Each participant completed two to three sessions lasting 45 to 90 min, with a minimum of 30 probes per participant. EEG data were collected using a 64-channel Biosemi system (channels A1–A32 and B1–B32) with a Biosemi 10–20 head cap montage at a sampling rate of 2048 Hz, providing spatial information about brain activity across different scalp regions. The dataset available on OpenNeuro has already been downsampled to 256 Hz.

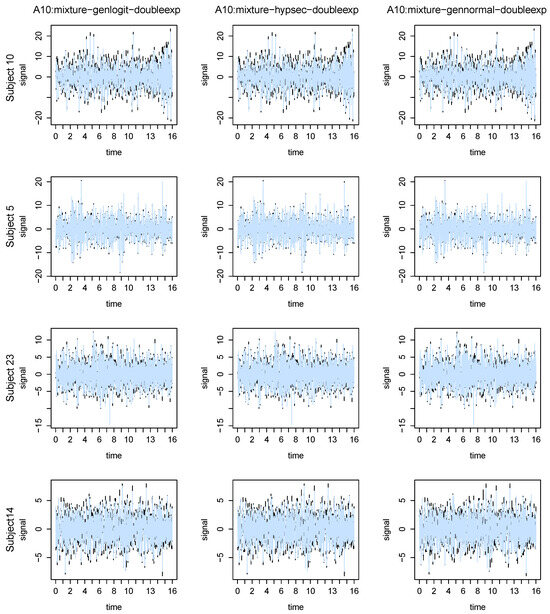

For our analysis, we focused on the data from session 1 of four participants: two expert meditators (one male and one female, identified as subjects 10 and 5 in the original dataset, respectively) and two novices (one male and one female, identified as subjects 23 and 14, respectively). The data were average-referenced to mitigate common noise and artifacts by calculating the mean signal across all electrodes at each time point and subtracting it from the signal at each electrode. Following this, a 2 Hz high-pass filter was applied using an infinite impulse response (IIR) filter with a transition bandwidth of 0.7 Hz and an order of six. Denoting the time of probe 1 by t, we analyzed the EEG signal within the 16 s interval . With a sampling rate of 256 Hz, this interval comprised 256 × 16 = 4096 data points, representing noisy observations of the underlying signal. We analyzed the EEG data using the mixture-hypsec-doubleexp, mixture-genlogit-doubleexp, and mixture-gennormal-polynom methods (see Section 4), employing the Daubechies least asymmetric wavelet transform with six vanishing moments and periodic boundary conditions.

Figure 5 presents the plots of the posterior means of the EEG signal (in slate gray) based on the mixture-genlogit-doubleexp (left column), mixture-hypsec-doubleexp (middle column), and mixture-gennormal-polynom (right column) methods, superimposed on the observed data (in black), obtained during the 16 s interval from the A10 channel of the four considered participants. Similar plots for some other channels are presented in the Supplementary Materials Section S3. The plots indicate that our method, with all the considered hyperparameter configurations, yielded visibly denoised estimates.

Figure 5.

Plots of the posterior means of the EEG signal (in slate gray) based on the imom-logit-polynom (left), mixture-genlogit-doubleexp (middle), and mixture-gennorm-polynom (right) methods, superimposed on the observed data (in black), obtained during the 16 s interval , t being probe 1 onset time, from the A10 channel of the 4 considered participants.

6. Musical Sound Study

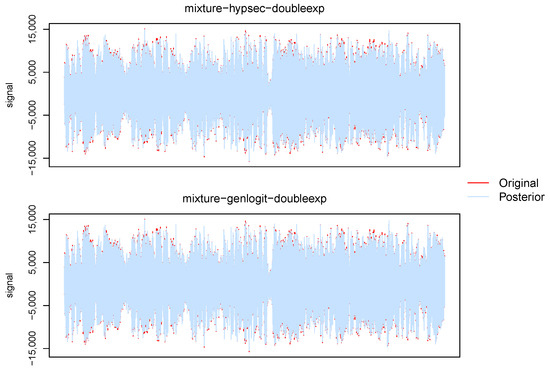

To further demonstrate the utility of the proposed method, we analyze musical sounds, which often exhibit sudden frequency changes over short periods, resulting in highly dispersed signals. For this study, we focus on a vocal music recording from 1934, originally published on a 78 RPM record of Hindustani classical music (one of India’s two classical music traditions) and performed by the eminent Ustad Amir Khan. A noisy copy of this recording was sourced from YouTube (link provided in the Supplementary Materials Section S1). From the recording, we extracted a 15 s segment representing a wide frequency range and saved it as a 16-bit WAV audio file, which represents noisy data.

For our analysis, we divided each of the two audio file channels (left and right) into sections of 4096 data points to enhance computational efficiency. Each section was independently analyzed using the mixture-genlogit-doubleexp and mixture-hypsec-doubleexp methods (see Section 4) employing the Daubechies coiflets wavelet transform with five vanishing moments and periodic boundary conditions. The posterior estimates of the individual sections were then combined to reconstruct the posterior estimate of the entire audio segment.

In Figure 6, we present the posterior mean of the right-channel signal of the selected audio segment (in slate gray), superimposed on the noisy data (in black). The audio files corresponding to these posterior estimates, along with the original data and posterior estimates using the hard thresholding rule, are provided in the Supplementary Materials for auditory comparison and assessment. The posterior estimates show substantial denoising while retaining the main features of the signal. A slight systematic noise is present in the posterior audio files, which is due to analyzing the whole segment in disjoint sections for computational convenience. It can be mitigated by analyzing larger sections or by analyzing partially overlapping sections with weighted averaging (e.g., cross-fading or Hann windowing) to smoothly combine overlapping regions and reduce boundary artifacts.

Figure 6.

Plots of the posterior means (in slate gray) of the right-channel signal of the chosen audio segment of the vocal music recording, superimposed on the noisy data (in black).

7. Discussion

In this work, we proposed the use of nonlocal prior mixtures for wavelet-based nonparametric function estimation. The main innovations of our methodology are as follows:

- (a) We introduce a three-component spike-and-slab prior for the wavelet coefficients. This structure is particularly suited for modeling highly dispersed signals. The slab component is a mixture of two nonlocal priors, the MOM and IMOM priors, which offers enhanced adaptability to signal characteristics.

- (b) We propose flexible and previously unexplored hyperparameter specifications. These include generalized logit (or Richards), hyperbolic secant, and generalized normal decay specifications for the mixture probabilities, as well as a double exponential decay structure for the scale parameter. These enhancements provide improved flexibility and accuracy in modeling complex signal patterns, as demonstrated in our simulation study.

- (c) We implement our methodology within the R programming language [35] as a package named NLPwavelet [36], which performs nonlocal prior (NLP)-based wavelet analysis.

In the simulation study, using more dispersed versions of the Donoho–Johnstone test functions and various linear combinations of them, we compared the performance of the proposed approach with the existing two-component spike-and-slab mixture prior and demonstrated the superior flexibility of the proposed approach. Further, analysis using several novel hyperparameter configurations provided valuable insights into the relative advantages. Although no formal optimality results are available for these specific forms, their flexibility is supported by established theoretical principles for scale-dependent shrinkage, and their empirical performance, demonstrated in the simulation study of Section 4, provides strong evidence of their practical relevance.

Note that, in our empirical Bayes implementation, we did not encounter convergence failures. However, in smaller samples or high-noise scenarios, certain decay specifications—particularly those involving generalized normal parameters—showed greater variability in estimated hyperparameters. To reduce potential overfitting and identifiability issues, we recommend sensitivity checks across multiple decay specifications and inspection of hyperparameter estimates for plausibility. Such practices can help ensure the robustness of conclusions in applied settings.

A natural limitation of the proposed approach is its higher computational cost relative to two-component mixture priors. Supplementary Materials Section S2 reports the average runtime (in seconds) for 24 analysis methods across the sample sizes n considered in our simulation study. Within each method, the specification of the mixture probability has only a modest effect on runtime, while for the scaling parameter, doubleexp options are generally slightly slower than polynom options. The runtime growth of the mixture methods suggests approximately linear scaling with sample size. For instance, for mixture-logit-polynom, the runtime for n = 1024 is about 1.96 times that for n = 512; for n = 2048, it is about 1.96 times that for n = 1024; and for n = 4096, about 1.97 times that for n = 2048. This near doubling of runtime with each doubling of n is consistent across the other mixture methods, indicating a computational complexity of roughly O(n), which is generally considered efficient for large-scale problems. Nonetheless, the larger constant factor of the proposed three-component methods, relative to two-component methods, results in longer absolute runtimes, which is an anticipated trade-off for their enhanced modeling flexibility. While our simulations focus on one-dimensional signals, the linear scaling behavior suggests the approach can extend to higher-dimensional settings (e.g., 2D/3D images), subject to the corresponding increase in the number of coefficients.
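The step from runtime ratios to an approximate complexity order can be made explicit. If the runtime grows like a power of n, then doubling n multiplies the runtime by two raised to that power, so the base-2 logarithm of each reported ratio estimates the exponent. The short sketch below applies this to the three ratios quoted above for mixture-logit-polynom; the variable names are illustrative.

```python
import math

# Runtime ratios reported in the text for successive doublings of n
# (mixture-logit-polynom).
ratios = [1.96, 1.96, 1.97]

# If runtime ~ n**p, doubling n multiplies runtime by 2**p, so p = log2(ratio).
exponents = [math.log2(r) for r in ratios]

# Cumulative runtime factor over all three doublings; exactly linear scaling
# would give 2**3 = 8.
overall_factor = math.prod(ratios)

if __name__ == "__main__":
    print([round(p, 3) for p in exponents])
    print(round(overall_factor, 3))
```

Each estimated exponent is slightly below one (about 0.97), which is consistent with runtime growing approximately linearly in n over the range of sample sizes considered.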

Further, while the EEG and audio denoising examples illustrate the practical use of the proposed method, their evaluation is primarily qualitative due to the lack of ground truth reference signals. For EEG data, objective measures such as SNR improvement or reconstruction error cannot be computed reliably without a known clean signal. Similarly, in the audio example, perceptual quality metrics such as PESQ [37] or STOI [38] require access to a clean reference, which was not available for the real-world recording used here. Future work, incorporating controlled experiments in which synthetic noise is added to high-quality EEG or audio recordings, would allow the computation of such quantitative metrics and hence direct, reproducible comparisons with existing denoising techniques, complementing the qualitative assessments presented in this study.
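A controlled experiment of this kind could be organized along the following lines. The sketch is a minimal illustration under stated assumptions: the clean signal is a synthetic sine wave rather than a real recording, SNR is defined in decibels as a power ratio (which may differ from the SNR definition used in our simulations), and `moving_average` is a placeholder denoiser standing in for any method under comparison. It is not the proposed wavelet estimator.

```python
import math
import random


def power(x):
    """Mean squared value of a signal."""
    return sum(v * v for v in x) / len(x)


def add_noise(clean, target_snr_db, rng):
    """Add white Gaussian noise so that the expected SNR equals target_snr_db."""
    sigma = math.sqrt(power(clean) / 10 ** (target_snr_db / 10))
    return [v + rng.gauss(0.0, sigma) for v in clean]


def snr_db(clean, estimate):
    """SNR in dB of an estimate, measured against the known clean signal."""
    err = [c - e for c, e in zip(clean, estimate)]
    return 10 * math.log10(power(clean) / power(err))


def mse(clean, estimate):
    """Mean squared reconstruction error against the clean signal."""
    return sum((c - e) ** 2 for c, e in zip(clean, estimate)) / len(clean)


def moving_average(x, half_width=4):
    """Placeholder denoiser (assumption): centered moving average."""
    n = len(x)
    out = []
    for i in range(n):
        lo, hi = max(0, i - half_width), min(n, i + half_width + 1)
        out.append(sum(x[lo:hi]) / (hi - lo))
    return out


rng = random.Random(0)  # fixed seed for reproducibility
n = 1024
clean = [math.sin(2 * math.pi * 4 * i / n) for i in range(n)]  # synthetic reference
noisy = add_noise(clean, target_snr_db=5.0, rng=rng)
denoised = moving_average(noisy)

snr_in = snr_db(clean, noisy)
snr_out = snr_db(clean, denoised)
print(f"input SNR:       {snr_in:.2f} dB")
print(f"output SNR:      {snr_out:.2f} dB")
print(f"SNR improvement: {snr_out - snr_in:.2f} dB")
print(f"MSE noisy / denoised: {mse(clean, noisy):.4f} / {mse(clean, denoised):.4f}")
```

Replacing the placeholder denoiser with each competing method, and the sine wave with a high-quality recording, would give SNR improvement and reconstruction error on a common footing.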

An obvious extension of the proposed approach is to adapt it to multidimensional wavelet regression, for example in 2D or 3D image processing tasks. Another possible avenue is to develop fully Bayesian hierarchical models by specifying prior distributions for the hyperparameters and employing Markov chain Monte Carlo tools or variational methods for posterior inference. In addition, one can explore combining wavelet decompositions with local polynomial regression to mitigate boundary bias. Further, adapting our methodology to non-Gaussian or skewed data is another direction worth pursuing. We leave all these to future research.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/math13162642/s1, Figure S1: Plots of the posterior means of the EEG signal (in slate gray) based on the imom-logit-polynom (left column), mixture-genlogit-doubleexp (middle column), and mixture-gennorm-polynom (right column) methods, superimposed on the observed data (in black), obtained during the 16-second interval , t being probe 1 onset time, from the A20 channel of the 4 considered participants; Figure S2: Plots of the posterior means of the EEG signal (in slate gray) based on the imom-logit-polynom (left column), mixture-genlogit-doubleexp (middle column), and mixture-gennorm-polynom (right column) methods, superimposed on the observed data (in black), obtained during the 16-second interval , t being probe 1 onset time, from the B10 channel of the 4 considered participants; Figure S3: Plots of the posterior means of the EEG signal (in slate gray) based on the imom-logit-polynom (left column), mixture-genlogit-doubleexp (middle column), and mixture-gennorm-polynom (right column) methods, superimposed on the observed data (in black), obtained during the 16-second interval , t being probe 1 onset time, from the B20 channel of the 4 considered participants; Table S1: Average runtime (seconds) for 24 analysis methods applied to simulated datasets from 12 combinations of sample size (n) and SNR, with 100 replications each. Methods include eight MOM-based, eight IMOM-based, and eight proposed three-component mixture priors.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are openly available in OpenNeuro at https://openneuro.org/datasets/ds001787/versions/1.1.0.

Acknowledgments

The authors thank the JAKAR High-Performance Cluster at the University of Texas at El Paso for providing computational resources free of charge.

Conflicts of Interest

The authors declare no conflicts of interest.

Correction Statement

This article has been republished with a minor correction to the Data Availability Statement. This change does not affect the scientific content of the article.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| MOM prior | Moment prior |
| IMOM prior | Inverse moment prior |
| NLP | Nonlocal prior |
| SNR | Signal-to-noise ratio |
| MSE | Mean squared error |
| EEG | Electroencephalogram |

Appendix A

Appendix A.1. Proof of Result 1

From the wavelet coefficient model (2) and the mixture prior of the wavelet coefficients in (3), using the Laplace approximation for the IMOM prior component, we get the marginal distribution of the empirical wavelet coefficients, , as

where

is the global maximum of , and , with .

Appendix A.2. Proof of Result 2

The proportionality constant is

Thus,

from which Result 2 follows.

References

1. Chipman, H.A.; Kolaczyk, E.D.; McCulloch, R.E. Adaptive Bayesian Wavelet Shrinkage. J. Am. Stat. Assoc. 1997, 92, 1413–1421.

2. Clyde, M.; Parmigiani, G.; Vidakovic, B. Multiple shrinkage and subset selection in wavelets. Biometrika 1998, 85, 391–401.

3. Abramovich, F.; Sapatinas, T.; Silverman, B. Wavelet thresholding via a Bayesian approach. J. R. Stat. Soc. Ser. B 1998, 60, 725–749.

4. Sanyal, N.; Ferreira, M.A. Bayesian hierarchical multi-subject multiscale analysis of functional MRI data. NeuroImage 2012, 63, 1519–1531.

5. Clyde, M.; George, E.I. Flexible empirical Bayes estimation for wavelets. J. R. Stat. Soc. Ser. B 2000, 62, 681–698.

6. Johnstone, I.M.; Silverman, B.W. Empirical Bayes Selection of Wavelet Thresholds. Ann. Stat. 2005, 33, 1700–1752.

7. dos Santos Sousa, A.R. A Bayesian wavelet shrinkage rule under LINEX loss function. Res. Stat. 2024, 2, 2362926.

8. Sanyal, N.; Ferreira, M.A. Bayesian wavelet analysis using nonlocal priors with an application to FMRI analysis. Sankhya B 2017, 79, 361–388.

9. Johnson, V.; Rossell, D. On the use of non-local prior densities in Bayesian hypothesis tests. J. R. Stat. Soc. Ser. B 2010, 72, 143–170.

10. Johnson, V.; Rossell, D. Bayesian model selection in high-dimensional settings. J. Am. Stat. Assoc. 2012, 107, 649–660.

11. Rossell, D.; Telesca, D. Nonlocal priors for high-dimensional estimation. J. Am. Stat. Assoc. 2017, 112, 254–265.

12. Sanyal, N.; Lo, M.T.; Kauppi, K.; Djurovic, S.; Andreassen, O.A.; Johnson, V.E.; Chen, C.H. GWASinlps: Non-local prior based iterative SNP selection tool for genome-wide association studies. Bioinformatics 2019, 35, 1–11.

13. Mallat, S. A Wavelet Tour of Signal Processing, Third Edition: The Sparse Way, 3rd ed.; Academic Press, Inc.: Cambridge, MA, USA, 2008.

14. Vidakovic, B. Nonlinear Wavelet Shrinkage with Bayes Rules and Bayes Factors. J. Am. Stat. Assoc. 1998, 93, 173–179.

15. Vidakovic, B.; Ruggeri, F. BAMS Method: Theory and Simulations. Sankhyā Indian J. Stat. Ser. B 2001, 63, 234–249.

16. Portilla, J.; Strela, V.; Wainwright, M.; Simoncelli, E. Image denoising using scale mixtures of Gaussians in the wavelet domain. IEEE Trans. Image Process. 2003, 12, 1338–1351.

17. Cutillo, L.; Jung, Y.Y.; Ruggeri, F.; Vidakovic, B. Larger posterior mode wavelet thresholding and applications. J. Stat. Plan. Inference 2008, 138, 3758–3773.

18. Crouse, M.; Nowak, R.; Baraniuk, R. Wavelet-based statistical signal processing using hidden Markov models. IEEE Trans. Signal Process. 1998, 46, 886–902.

19. Chang, S.; Yu, B.; Vetterli, M. Adaptive wavelet thresholding for image denoising and compression. IEEE Trans. Image Process. 2000, 9, 1532–1546.

20. Figueiredo, M.; Nowak, R. Wavelet-based image estimation: An empirical Bayes approach using Jeffrey’s noninformative prior. IEEE Trans. Image Process. 2001, 10, 1322–1331.

21. Boubchir, L.; Boashash, B. Wavelet denoising based on the MAP estimation using the BKF Prior with application to images and EEG signals. IEEE Trans. Signal Process. 2013, 61, 1880–1894.

22. Reményi, N.; Vidakovic, B. Wavelet shrinkage with double Weibull prior. Commun. Stat. Simul. Comput. 2015, 44, 88–104.

23. Afshari, M.; Lak, F.; Gholizadeh, B. A new Bayesian wavelet thresholding estimator of nonparametric regression. J. Appl. Stat. 2017, 44, 649–666.

24. Sousa, A.R.d.S.; Garcia, N.L.; Vidakovic, B. Bayesian wavelet shrinkage with beta priors. Comput. Stat. 2021, 36, 1341–1363.

25. dos Santos Sousa, A.R. Bayesian wavelet shrinkage with logistic prior. Commun. Stat. Simul. Comput. 2022, 51, 4700–4714.

26. Vidakovic, B. Statistical Modeling by Wavelets; Wiley Series in Probability and Statistics; Wiley: Hoboken, NJ, USA, 1999.

27. Antoniadis, A.; Bigot, J.; Sapatinas, T. Wavelet Estimators in Nonparametric Regression: A Comparative Simulation Study. J. Stat. Softw. 2001, 6, 1–83.

28. Donoho, D.L.; Johnstone, I.M. Adapting to Unknown Smoothness via Wavelet Shrinkage. J. Am. Stat. Assoc. 1995, 90, 1200–1224.

29. Donoho, D.L.; Johnstone, I.M.; Kerkyacharian, G.; Picard, D. Wavelet Shrinkage: Asymptopia? J. R. Stat. Soc. Ser. B 1995, 57, 301–369.

30. Donoho, D.; Johnstone, J. Ideal spatial adaptation by wavelet shrinkage. Biometrika 1994, 81, 425–455.

31. Nason, G.P. Wavelet Shrinkage Using Cross-Validation. J. R. Stat. Soc. Ser. B 1996, 58, 463–479.

32. Abramovich, F.; Benjamini, Y. Adaptive thresholding of wavelet coefficients. Comput. Stat. Data Anal. 1996, 22, 351–361.

33. Nason, G. Wavethresh: Wavelets Statistics and Transforms, version 4.7.3; R Foundation for Statistical Computing: Vienna, Austria, 2024.

34. Brandmeyer, T.; Delorme, A. Reduced mind wandering in experienced meditators and associated EEG correlates. Exp. Brain Res. 2018, 236, 2519–2528.

35. R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2024.

36. Sanyal, N. NLPwavelet: Bayesian Wavelet Analysis Using Non-Local Priors, version 1.1; R Foundation for Statistical Computing: Vienna, Austria, 2025.

37. International Telecommunication Union. Perceptual Evaluation of Speech Quality (PESQ): An Objective Method for End-to-End Speech Quality Assessment of Narrow-Band Telephone Networks and Speech Codecs; Rec. P.862; International Telecommunication Union: Geneva, Switzerland, 2001.

38. Taal, C.H.; Hendriks, R.C.; Heusdens, R.; Jensen, J. A short-time objective intelligibility measure for time-frequency weighted noisy speech. In Proceedings of the 2010 IEEE International Conference on Acoustics, Speech and Signal Processing, Dallas, TX, USA, 14–19 March 2010; pp. 4214–4217.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).