1. Introduction

Trajectory optimization is a key technology for solving optimal control problems and has significant theoretical and engineering value [1,2]. A trajectory optimization problem is a multi-stage, nonlinear optimal control problem subject to terminal-state, control, and path constraints. Numerical methods for these problems fall mainly into indirect and direct approaches [3]. Indirect methods, such as Pontryagin's maximum principle [4] and the variational method [5], derive control laws by constructing the costate (adjoint) equations and applying the optimality conditions. Their strengths are theoretical rigor and the ability to satisfy the optimality conditions precisely. For instance, Ref. [6] used the variational method to derive dynamic optimal control conditions for rocket flight parameters. However, the costate equations of indirect methods require intricate mathematical derivations, which limits their practical use in engineering. Direct methods, such as shooting [7] and pseudospectral methods [8], instead discretize the continuous optimal control problem into a nonlinear programming problem, which is then solved with optimization algorithms such as sequential quadratic programming (SQP) [9,10]. Direct methods are favored for their ease of implementation and adaptability to complex constraints, which makes them more prevalent in engineering practice. For example, Refs. [11,12] applied the Gauss pseudospectral method to local orbit optimization for deep-space exploration with low-thrust rockets and to trajectory optimization during the climb phase of a hybrid-power aircraft, and Ref. [13] employed a shooting method to optimize the trajectory of a second-stage rocket and the recovery of the first stage. More recently, machine learning has introduced new ideas for solving optimal control problems. Reinforcement learning (RL) [14], for example, can handle optimal control in both model-free and model-based settings, making it suitable for complex, high-dimensional, nonlinear control tasks. Model predictive control (MPC) [15] is a model-based optimal control strategy built on receding-horizon (rolling) optimization; Ref. [16] demonstrated its use for trajectory optimization during a rocket's vertical landing phase. When an accurate system model is available, MPC is computationally efficient.

The differential dynamic programming (DDP) method [17,18] has significantly advanced robot trajectory optimization in recent years. DDP decomposes the global optimization problem into a sequence of local optimization problems over time. Because it updates the control policy through a backward pass based on second-order local approximations, it avoids the high-dimensional computational burden that SQP incurs when handling the Hessian matrix of all decision variables. It also avoids the numerical stability issues that variational methods face when solving two-point boundary value problems, and it offers high computational efficiency for nonlinear optimal control problems [19]. However, the standard DDP iteration does not account for constraints on the control variables, which makes trajectory optimization tasks with control constraints difficult. Researchers have therefore introduced various improvements, including penalty function methods [20], augmented Lagrangian methods [21], truncated DDP methods [22], and projected DDP methods [23]. Among these, the penalty function method is a soft-constraint approach that adds penalty terms to the cost function to handle constraints, and it is straightforward to implement. For example, Howell et al. [21] transformed the constrained problem into an unconstrained one by adding penalty terms and Lagrange multipliers to the objective function, thereby integrating constraints into the DDP framework. However, because it is a soft-constraint technique, the penalty function method may still allow the control variables to exceed their admissible range. The truncated DDP method [22] handles constraints directly: control variables that exceed the specified limits are clipped to the boundary values. Nonetheless, clipping introduces gradient discontinuities at the boundaries, which can make the optimization unstable or hamper convergence. The projected DDP method [23] mitigates this by mapping the control variables into the admissible range through a projection function, which is smoother at the boundaries than truncation. However, the gradient still vanishes as the control variables approach the boundaries.
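To make the contrast between truncation and projection concrete, the short Python sketch below compares the derivative of a hard clip (the truncation approach) with that of a smooth squashing projection. The tanh squashing function and all function names are assumptions chosen for illustration; Ref. [23] may use a different projection. The clip derivative jumps from 1 to 0 at the bound, whereas on the unit box the squashing derivative equals $1 - u^2$ in terms of the projected control $u$, which is smooth but shrinks to zero as $u$ approaches $\pm 1$.

```python
import math


def clip(u, lo, hi):
    """Truncation: hard clip of u to [lo, hi]."""
    return min(max(u, lo), hi)


def clip_grad(u, lo, hi):
    """Derivative of the hard clip: 1 inside the box, 0 outside (undefined exactly at lo/hi)."""
    return 1.0 if lo < u < hi else 0.0


def squash(v, lo, hi):
    """Projection by a tanh squashing function: maps any real v into (lo, hi)."""
    return lo + 0.5 * (hi - lo) * (math.tanh(v) + 1.0)


def squash_grad(v, lo, hi):
    """Derivative of squash with respect to v: smooth, but tends to 0 as |v| grows."""
    return 0.5 * (hi - lo) * (1.0 - math.tanh(v) ** 2)
```

For instance, `squash_grad(5.0, -1.0, 1.0)` is only about 1.8e-4: the projected control is then very close to the upper bound, and the update signal passed back through the projection has almost disappeared.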

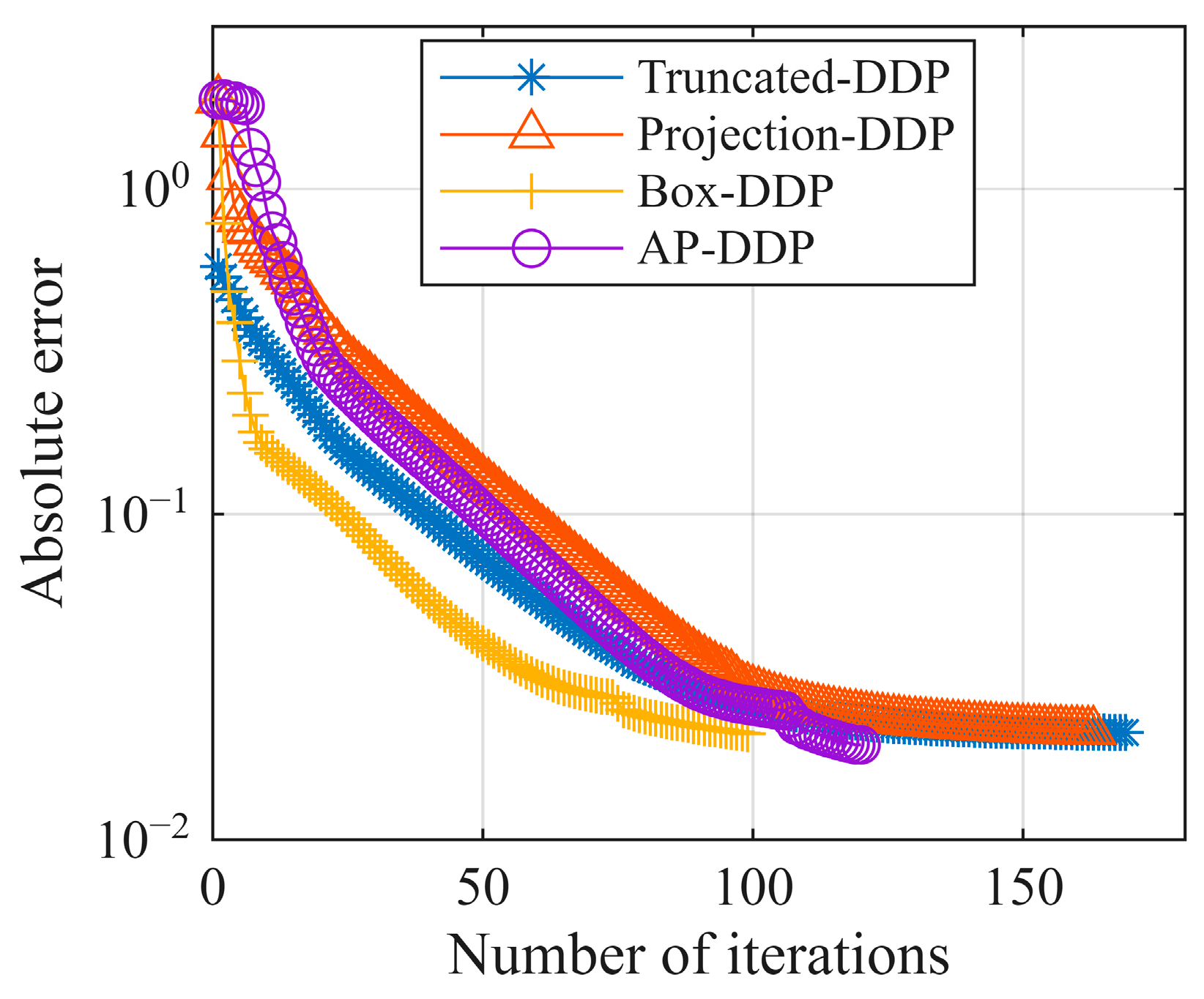

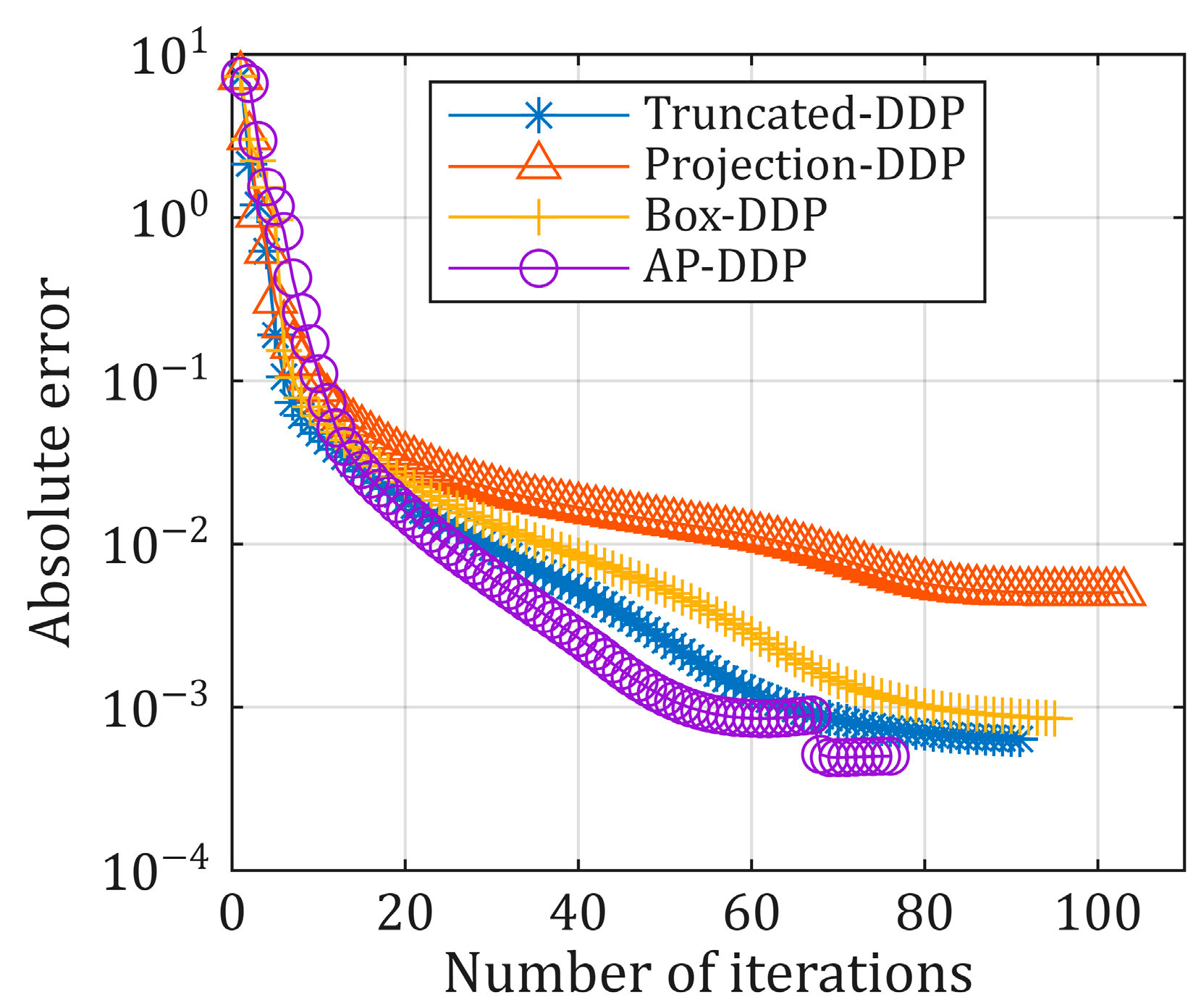

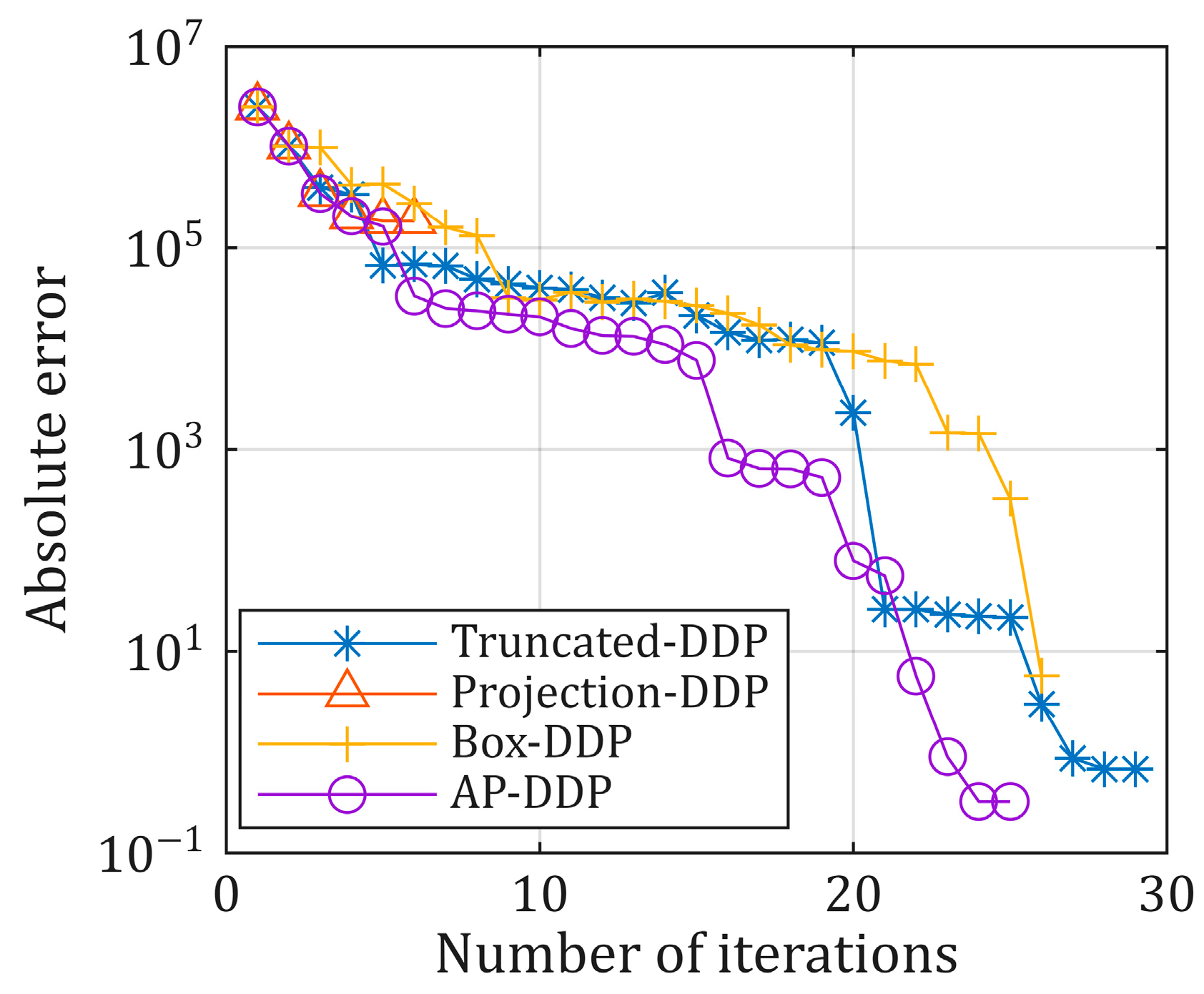

Compared with truncation, the projected method changes the control gradients smoothly and avoids gradient discontinuities during the iterations. However, the projected DDP method may struggle to reach the boundaries of the control variables, and the cost function can fluctuate slightly between iterations, which may lead to premature convergence to a local optimum. Box-DDP is a method designed specifically for box constraints on the control variables. Unlike the two previous methods, which adjust the control range during the backward pass, it accounts for the control limits directly at each time step by incorporating the box constraints into the quadratic programming sub-problem: the quadratic approximation of the action value function is minimized subject to the box constraints on the controls. Nonetheless, Box-DDP must solve a quadratic programming sub-problem at every time step, which increases complexity and reduces computational efficiency.
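The per-step sub-problem in Box-DDP has the form $\min_{\delta u} \tfrac{1}{2}\delta u^\top H \delta u + g^\top \delta u$ subject to elementwise bounds. A minimal sketch, assuming a symmetric positive-definite $H$ given as nested lists, solves it with projected gradient descent. This solver is a simple stand-in chosen for clarity and is not necessarily the one used in Box-DDP implementations; the name `box_qp` is hypothetical.

```python
def box_qp(H, g, lo, hi, iters=500):
    """Minimize 0.5*u^T H u + g^T u subject to lo <= u <= hi by projected gradient descent.

    H: symmetric positive-definite matrix as a list of rows; g, lo, hi: lists of equal length.
    """
    n = len(g)
    # Step size 1/L, where L bounds the largest eigenvalue of H (Gershgorin: max absolute row sum).
    L = max(sum(abs(h) for h in row) for row in H)
    step = 1.0 / L
    u = [min(max(0.0, lo[i]), hi[i]) for i in range(n)]  # feasible starting point
    for _ in range(iters):
        grad = [sum(H[i][j] * u[j] for j in range(n)) + g[i] for i in range(n)]
        # Gradient step followed by projection onto the box (componentwise clip).
        u = [min(max(u[i] - step * grad[i], lo[i]), hi[i]) for i in range(n)]
    return u
```

With `H = [[2, 0], [0, 2]]`, `g = [-4, 1]` and bounds $[-1, 1]$, the unconstrained minimizer $(2, -0.5)$ is infeasible and the solver returns $(1, -0.5)$. Running an inner loop like this at every time step of every iteration is the source of the extra cost noted above.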

Researchers have proposed several ways to further improve the performance of DDP on constrained problems. For example, Cao et al. [18] converted polynomial trajectory generation into an optimal control problem in state-space form, guaranteed the safety and feasibility of the trajectory by adding control points and dynamic feasibility constraints, and then solved the constrained optimal control problem with dynamic programming. Xie et al. [19] introduced constrained differential dynamic programming, derived a recursive quadratic approximation with nonlinear constraints, and identified the set of active constraints at each time step, enabling control constraints to be added in practice. Aoyama et al. [24] further extended DDP to nonlinear constraints by combining slack variables with augmented Lagrangian techniques. In summary, the projected DDP method confines the control variables to a fixed range through the projection function, but its gradient can vanish when the control variables are near the constraint boundary, which can trap the algorithm in a local optimum.

To address these issues, this paper improves the existing projection function and introduces an adaptive projection differential dynamic programming (AP-DDP) method. Its main innovation is an adaptive relaxation coefficient that dynamically adjusts the smoothness of the projection function, preventing the gradient from vanishing when the control variables are near the constraint boundary and thus reducing the risk of the algorithm becoming stuck in a local optimum. Updating the relaxation coefficient across iterations also accelerates the search for feasible solutions in the early stage, improving the algorithm's efficiency. The remainder of this paper is organized as follows:

Section 2 explains the principle of the DDP method;

Section 3 details the AP-DDP method proposed here;

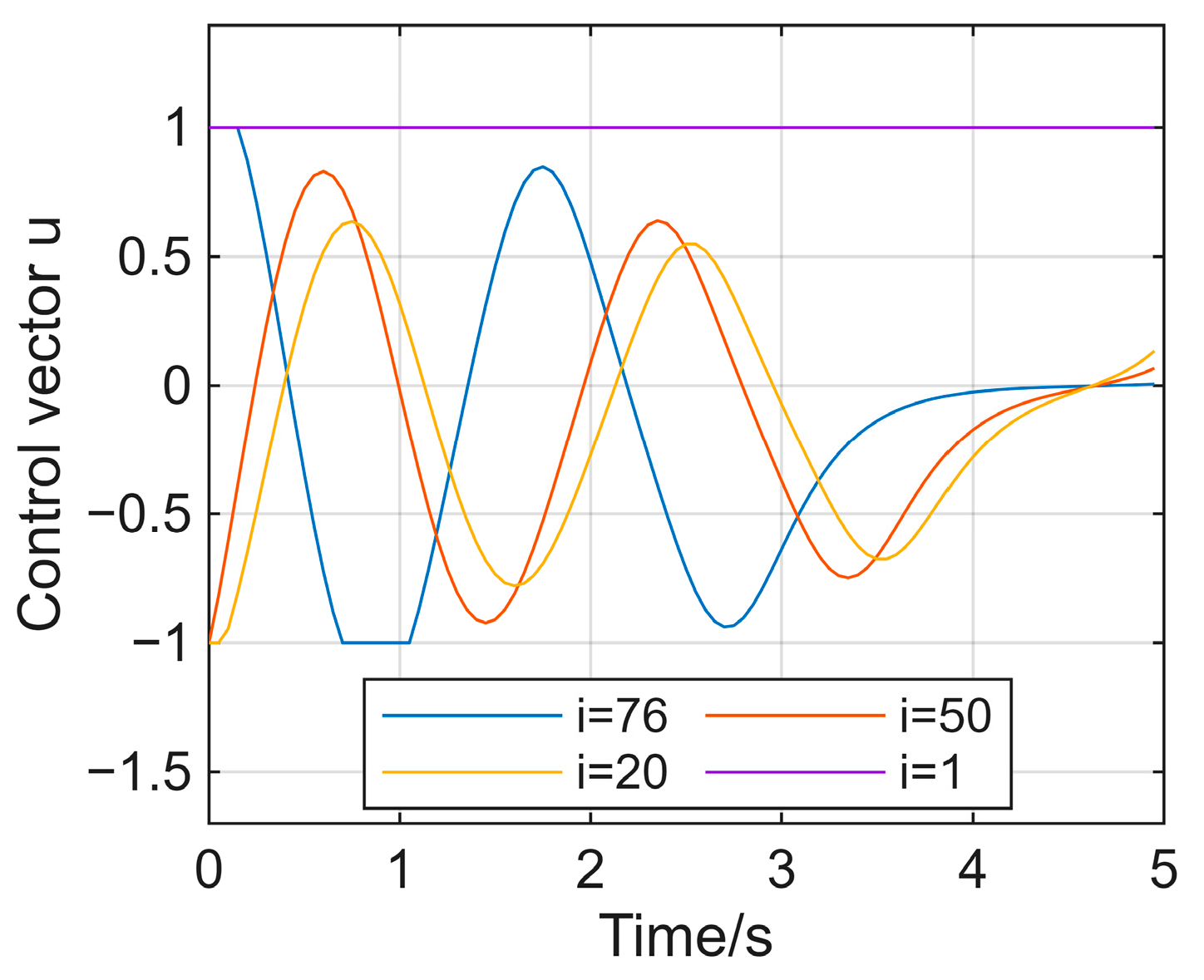

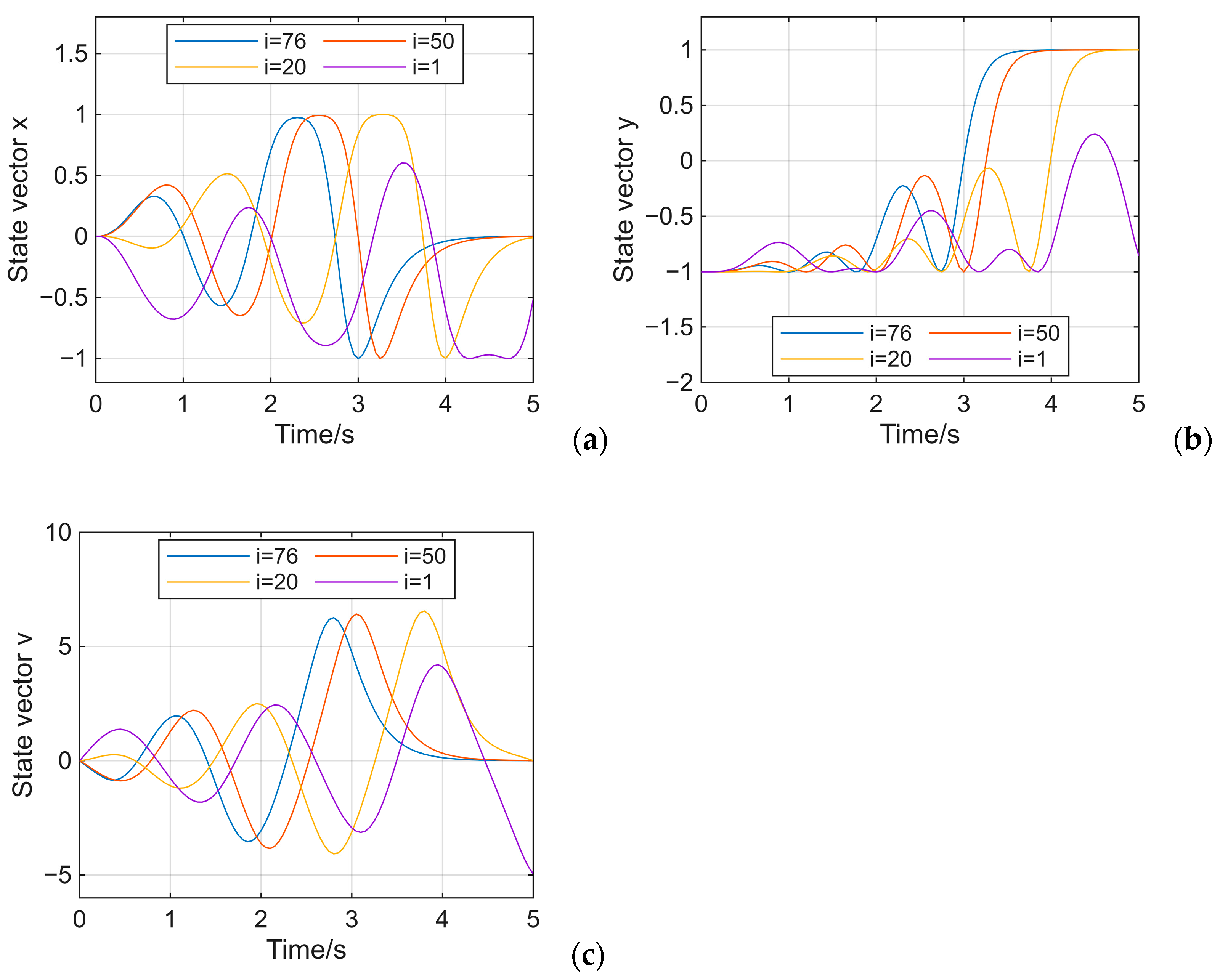

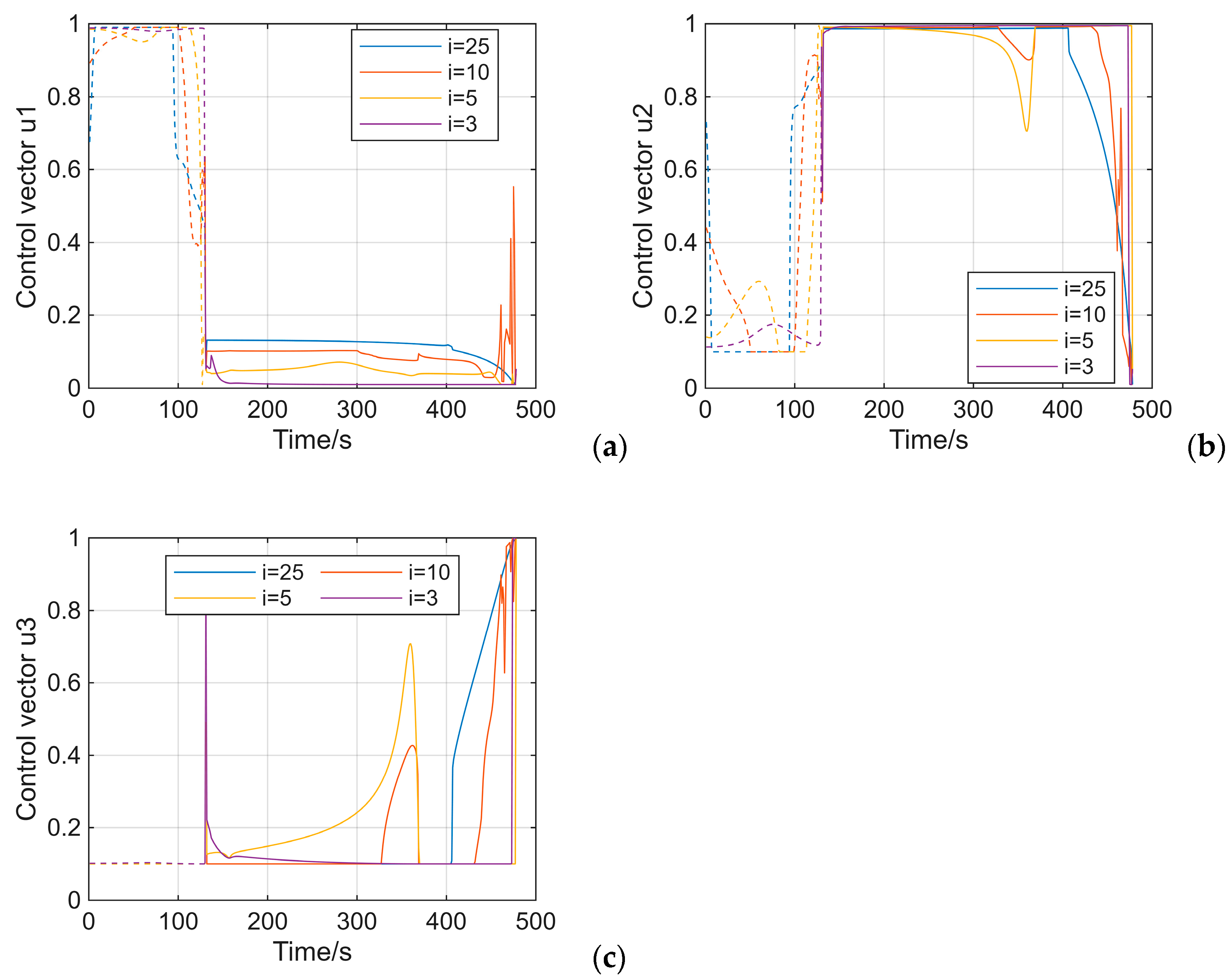

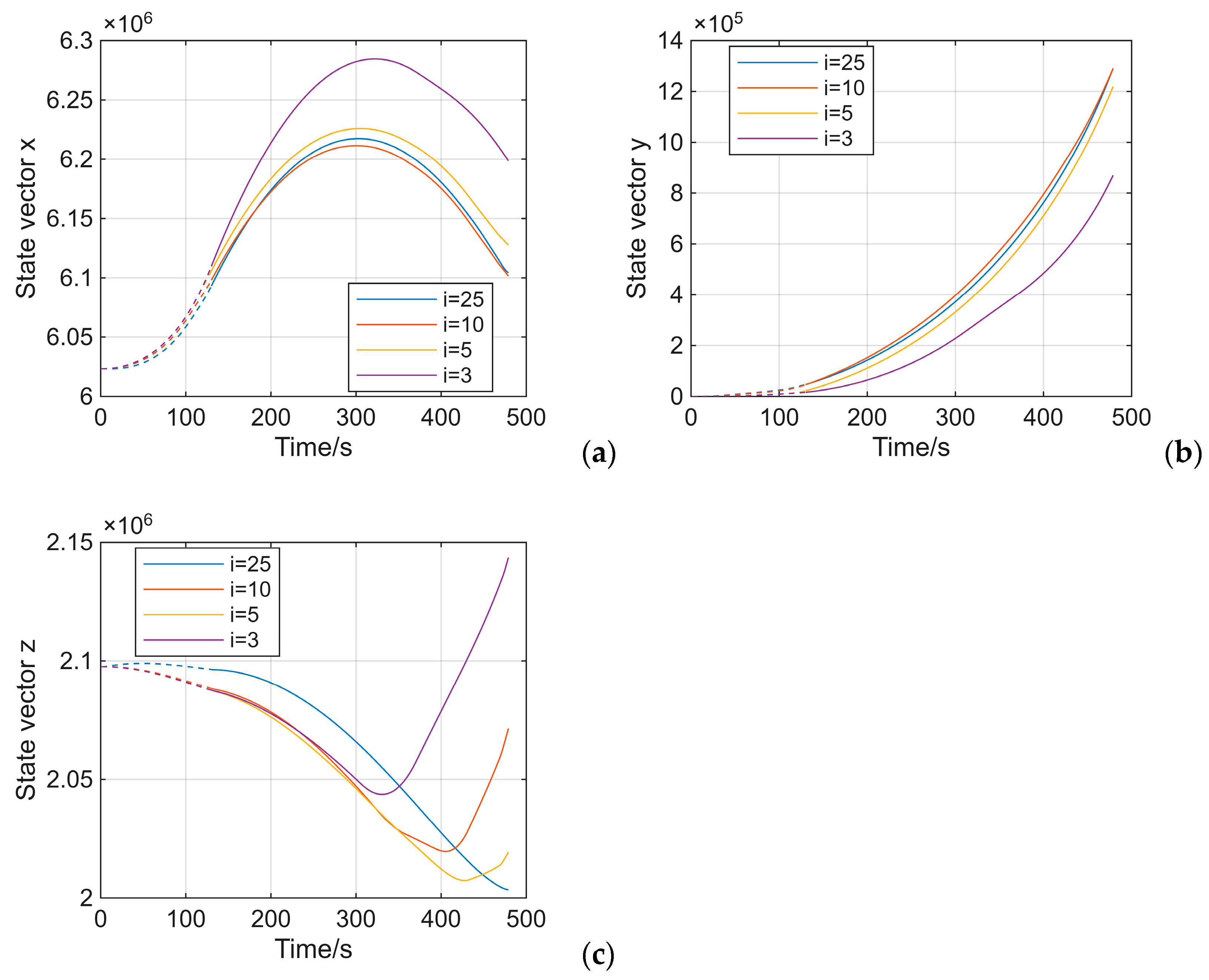

Section 4 applies this method to three trajectory optimization examples, generating optimal trajectories and comparing them with other similar methods;

Section 5 summarizes the research results and discusses future prospects.

2. Related Technology

DDP is an optimal control algorithm for trajectory optimization that uses local quadratic approximations of the dynamics and the cost function. Compared with SQP and the variational method, it offers notable advantages in memory efficiency and solution speed. Consider a system with discrete-time dynamics:

$$x_{i+1} = f(x_i, u_i) \tag{1}$$

where $x_i$ is the state vector and $u_i$ represents the control vector at time step $i$. The state sequence $X = \{x_0, x_1, \ldots, x_N\}$ is generated by the initial state $x_0$ and the control sequence $U = \{u_0, u_1, \ldots, u_{N-1}\}$. The total cost is the sum of the running cost and the final cost:

$$J(x_0, U) = \ell_f(x_N) + \sum_{i=0}^{N-1} \ell(x_i, u_i) \tag{2}$$

where $\ell_f$ represents the final cost and $\ell$ represents the running cost. The optimization objective is to find the control sequence that minimizes the total cost $J$:

$$U^* = \arg\min_{U} J(x_0, U) \tag{3}$$

where $U^*$ represents the optimal control sequence. The DDP algorithm optimizes the control sequence iteratively through a backward pass and a forward pass, reducing the total cost until convergence. The backward pass starts at the final time and proceeds back to the initial time, progressively updating the value function:

$$V(x, i) = \min_{u} \left[ \ell(x, u) + V\big(f(x, u), i+1\big) \right], \qquad V(x, N) = \ell_f(x) \tag{4}$$

where $V(x, i)$ represents the minimum cost-to-go from state $x$ at time step $i$. According to Bellman's principle of optimality, every tail portion of a globally optimal policy is itself optimal for the corresponding subproblem. To analyze how the control input affects the value function, the action value function $Q$ is introduced as the local variation of the minimized expression in Equation (4) around the current pair $(x, u)$:

$$Q(\delta x, \delta u) = \ell(x + \delta x, u + \delta u) - \ell(x, u) + V'\big(f(x + \delta x, u + \delta u)\big) - V'\big(f(x, u)\big) \tag{5}$$

where $V'$ denotes the value function at the next time step, $V(\cdot, i+1)$.
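Every quantity in the value and action value functions is evaluated along a rollout of the dynamics, with the total cost defined above. A minimal Python sketch, assuming the dynamics and cost terms are supplied as plain callables (the names `rollout` and `total_cost` are illustrative, not taken from the paper):

```python
def rollout(f, x0, U):
    """Propagate x_{i+1} = f(x_i, u_i) from x0 under the control sequence U."""
    X = [x0]
    for u in U:
        X.append(f(X[-1], u))
    return X


def total_cost(X, U, running, final):
    """Final cost on x_N plus the running costs summed over i = 0..N-1."""
    return final(X[-1]) + sum(running(x, u) for x, u in zip(X[:-1], U))
```

For a single integrator `f = lambda x, u: x + u` with $x_0 = 1$ and $U = (-0.5, -0.5)$, the rollout is $(1, 0.5, 0)$, and with $\ell = \tfrac{1}{2}(x^2 + u^2)$ and $\ell_f = \tfrac{1}{2}x^2$ the total cost is 0.875.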

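Expanding $Q$ to second order, its derivatives are

$$\begin{aligned}
Q_x &= \ell_x + f_x^{\top} V'_x \\
Q_u &= \ell_u + f_u^{\top} V'_x \\
Q_{xx} &= \ell_{xx} + f_x^{\top} V'_{xx} f_x + V'_x \cdot f_{xx} \\
Q_{uu} &= \ell_{uu} + f_u^{\top} V'_{xx} f_u + V'_x \cdot f_{uu} \\
Q_{ux} &= \ell_{ux} + f_u^{\top} V'_{xx} f_x + V'_x \cdot f_{ux}
\end{aligned} \tag{6}$$

where $V'_x$ represents the gradient of the value function at the next time step, $V'_{xx}$ represents its Hessian matrix, and the last term of each second-derivative line denotes contraction with the second derivatives of the dynamics. Minimizing the quadratic model of the action value function with respect to $\delta u$ gives the control variation:

$$\delta u^* = \arg\min_{\delta u} Q(\delta x, \delta u) = k + K \delta x, \qquad k = -Q_{uu}^{-1} Q_u, \quad K = -Q_{uu}^{-1} Q_{ux} \tag{7}$$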
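For a scalar linear system $x_{i+1} = a x_i + b u_i$ with running cost $\ell = \tfrac{1}{2}(q x^2 + r u^2)$, the second derivatives of the dynamics vanish, and the derivative expansion and control variation above reduce to a few multiplications. The sketch below assumes this toy model; `q_terms` and `gains` are hypothetical helper names.

```python
def q_terms(x, u, a, b, q, r, Vx_next, Vxx_next):
    """Q derivatives for x' = a*x + b*u and running cost 0.5*q*x^2 + 0.5*r*u^2.

    Linear dynamics have zero second derivatives, so the V'_x * f_xx-type terms drop out.
    """
    Qx = q * x + a * Vx_next
    Qu = r * u + b * Vx_next
    Qxx = q + a * Vxx_next * a
    Quu = r + b * Vxx_next * b
    Qux = b * Vxx_next * a
    return Qx, Qu, Qxx, Quu, Qux


def gains(Qu, Quu, Qux):
    """Feedforward k and feedback K from minimizing the quadratic model: du = k + K*dx."""
    k = -Qu / Quu
    K = -Qux / Quu
    return k, K
```

In the scalar case the matrix inverse $Q_{uu}^{-1}$ becomes a division, which is why the code needs $Q_{uu} > 0$; in the vector case a Cholesky solve would play the same role.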

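The gradient and Hessian of the value function at the current time step are then updated by backing up through the action value function:

$$\begin{aligned}
V_x &= Q_x + K^{\top} Q_{uu} k + K^{\top} Q_u + Q_{ux}^{\top} k \\
V_{xx} &= Q_{xx} + K^{\top} Q_{uu} K + K^{\top} Q_{ux} + Q_{ux}^{\top} K
\end{aligned} \tag{8}$$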
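In the scalar setting, the value-function update above is a direct transcription. The sketch below (hypothetical helper `value_backup`) also makes it easy to check that, for the optimal $k$ and $K$, the Hessian update collapses to $Q_{xx} - Q_{ux}^2 / Q_{uu}$.

```python
def value_backup(Qx, Qu, Qxx, Quu, Qux, k, K):
    """Scalar value-function backup: returns (V_x, V_xx) at the current time step."""
    Vx = Qx + K * Quu * k + K * Qu + Qux * k
    Vxx = Qxx + K * Quu * K + K * Qux + Qux * K
    return Vx, Vxx
```

With $Q_x = 3$, $Q_u = 2$, $Q_{xx} = 2$, $Q_{uu} = 2$, $Q_{ux} = 1$ and the corresponding gains $k = -1$, $K = -0.5$, the backup gives $V_x = 2$ and $V_{xx} = 1.5 = 2 - 1^2/2$.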

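After the backward pass is completed, the forward pass begins. Starting from the initial state, the controls and states are updated step by step to generate a new trajectory:

$$\hat{x}_0 = x_0, \qquad \hat{u}_i = u_i + k_i + K_i (\hat{x}_i - x_i), \qquad \hat{x}_{i+1} = f(\hat{x}_i, \hat{u}_i) \tag{9}$$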
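Putting the backward and forward passes described above together, the following Python sketch runs unconstrained DDP on a scalar linear-quadratic toy problem (an assumption for illustration; `ddp_scalar` is a hypothetical name). Because the dynamics are linear and the cost is quadratic, the quadratic model is exact, and a single iteration already reaches the optimum, which makes the sketch easy to check by hand.

```python
def ddp_scalar(x0, U, a, b, q, r, qf, iters=10):
    """Unconstrained DDP for x' = a*x + b*u with cost sum 0.5*(q*x^2 + r*u^2) + 0.5*qf*x_N^2."""
    N = len(U)
    U = list(U)

    def rollout(U):
        X = [x0]
        for u in U:
            X.append(a * X[-1] + b * u)
        return X

    def cost(X, U):
        J = 0.5 * qf * X[-1] ** 2
        for x, u in zip(X[:-1], U):
            J += 0.5 * (q * x * x + r * u * u)
        return J

    X = rollout(U)
    for _ in range(iters):
        # Backward pass: start from the final-cost derivatives and recurse to i = 0.
        Vx, Vxx = qf * X[-1], qf
        ks, Ks = [0.0] * N, [0.0] * N
        for i in reversed(range(N)):
            Qx = q * X[i] + a * Vx
            Qu = r * U[i] + b * Vx
            Qxx = q + a * Vxx * a
            Quu = r + b * Vxx * b
            Qux = b * Vxx * a
            ks[i], Ks[i] = -Qu / Quu, -Qux / Quu
            Vx = Qx + Ks[i] * Quu * ks[i] + Ks[i] * Qu + Qux * ks[i]
            Vxx = Qxx + Ks[i] * Quu * Ks[i] + Ks[i] * Qux + Qux * Ks[i]
        # Forward pass: u_hat = u + k + K*(x_hat - x) along the newly generated states.
        x_hat, U_new = x0, []
        for i in range(N):
            u_hat = U[i] + ks[i] + Ks[i] * (x_hat - X[i])
            U_new.append(u_hat)
            x_hat = a * x_hat + b * u_hat
        U = U_new
        X = rollout(U)
    return U, X, cost(X, U)
```

For $x_0 = 1$, $a = b = q = r = q_f = 1$ and two time steps starting from zero controls, `ddp_scalar(1.0, [0.0, 0.0], 1.0, 1.0, 1.0, 1.0, 1.0, iters=1)` returns controls $(-0.6, -0.2)$ and total cost 0.8, which matches the dynamic programming solution of this problem.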

The DDP iteration described above does not take constraints on the control variables into account, which limits the algorithm when solving constrained optimal control problems.

Control constraints are usually added to DDP through truncation or projection, which keep the control trajectory within a specified range. Box-DDP, by contrast, solves a series of quadratic programming problems in each iteration, which results in low computational efficiency. The truncated DDP method suffers from discontinuous gradient changes at the constraint boundaries, which makes it difficult to converge to the optimal solution. In the projected DDP method, the gradient information becomes extremely small at the constraint boundaries; this prevents the formation of an effective update direction and can trap the algorithm in a local optimum.

3. Adaptive Projection Differential Dynamic Programming

To address the shortcomings of projected DDP, this section presents the proposed adaptive projection differential dynamic programming (AP-DDP) method and its line search strategy.

3.1. The Framework of AP-DDP

AP-DDP is designed to address the premature convergence and low computational efficiency that arise when control constraints are added to existing DDP methods. Its main innovation is a relaxation coefficient that dynamically adjusts the smoothness of the projection function, so that the control constraints can be enforced during the optimization.

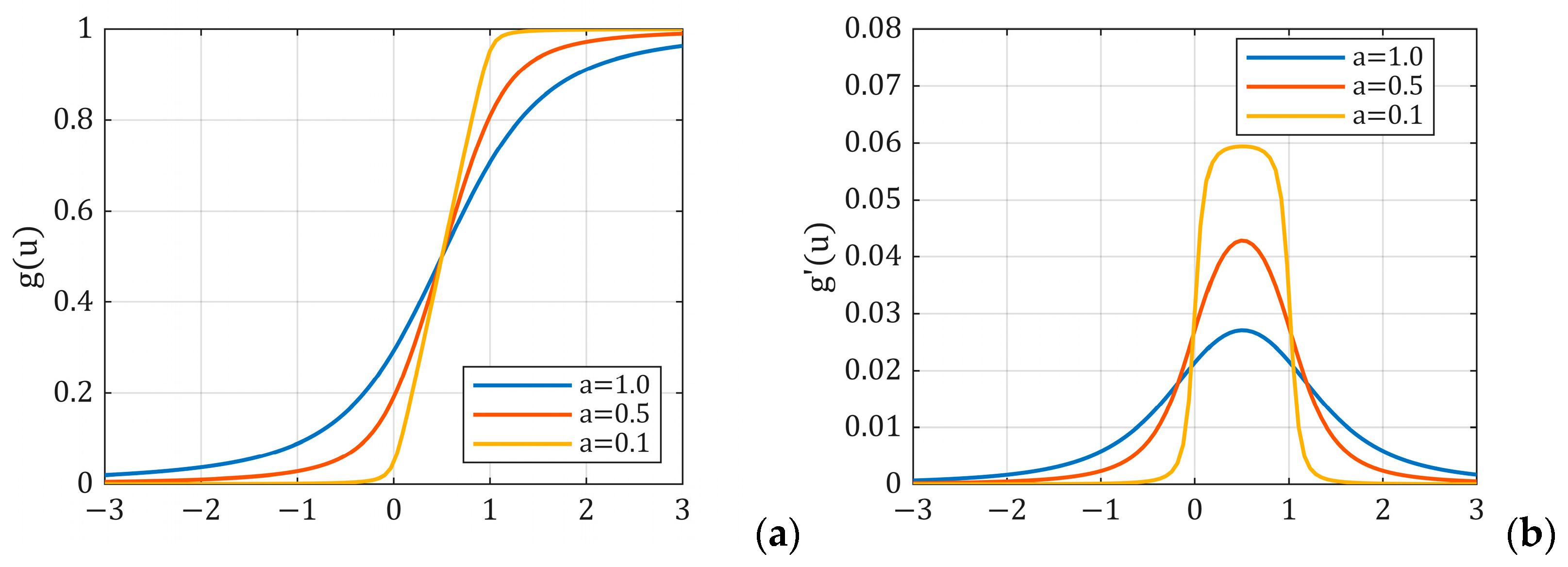

Figure 1 illustrates how different relaxation coefficients influence the projection function and the derivatives of the projection functions corresponding to different relaxation coefficients.
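The paper's own projection function is the reference definition. As a stand-in for experimenting with the effect shown in Figure 1, the following Python sketch uses a hypothetical "soft clamp" built from the softplus function, in which `eps` plays the role of the relaxation coefficient: a larger `eps` gives a smoother map whose derivative stays nonzero over a wider band around each bound, while `eps` approaching 0 recovers the hard clip. The soft-clamp form and all function names are assumptions for illustration only.

```python
import math


def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def sigmoid(z):
    """Numerically stable logistic function (the derivative of softplus)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def relaxed_projection(v, lo, hi, eps):
    """Hypothetical smooth projection of v into (lo, hi); eps > 0 is the relaxation coefficient.

    As eps -> 0 it approaches the hard clip min(max(v, lo), hi).
    """
    return lo + eps * (softplus((v - lo) / eps) - softplus((v - hi) / eps))


def relaxed_projection_grad(v, lo, hi, eps):
    """Derivative of relaxed_projection with respect to v."""
    return sigmoid((v - lo) / eps) - sigmoid((v - hi) / eps)
```

With bounds $[-1, 1]$, the derivative at $v = 1.2$ is about 0.39 for `eps = 0.5` but essentially zero for `eps = 0.01`; conversely, at $v = 1$ the output is about 0.66 for `eps = 0.5` and about 0.993 for `eps = 0.01`, so a small coefficient lets the control reach the bound.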

In the initial stage of AP-DDP, a relatively large relaxation coefficient is assigned to the projection function. This keeps the gradient from tending to zero as the control vector approaches the constraint boundary, so the algorithm continues to update and is less likely to become stuck in a local optimum. As the algorithm progresses, the relaxation coefficient gradually decreases, bringing the boundary of the projection function into alignment with the control constraints and thereby ensuring the accuracy of the results. Specifically, the relaxation coefficient enters the projection function as a parameter that sets its smoothness (Equation (10)).

To make the relaxation coefficient decrease gradually during the iterations, this paper links it to the cost function through a monotonic decay function, so that the coefficient is updated adaptively. The decay function (Equation (11)) depends on the initial cost $J_0$ and the current cost $J$.

The decay function is composed of a linear term and a power term, and its derivative is as follows:

where

is always smaller than

during the iteration process; therefore, the derivative of the decay function is always positive, and the relaxation coefficient decreases as

gradually decreases. As seen from Equation (11), the relaxation coefficient in this article gradually decreases from 0.5 to nearly 0. The framework of AP-DDP is shown in

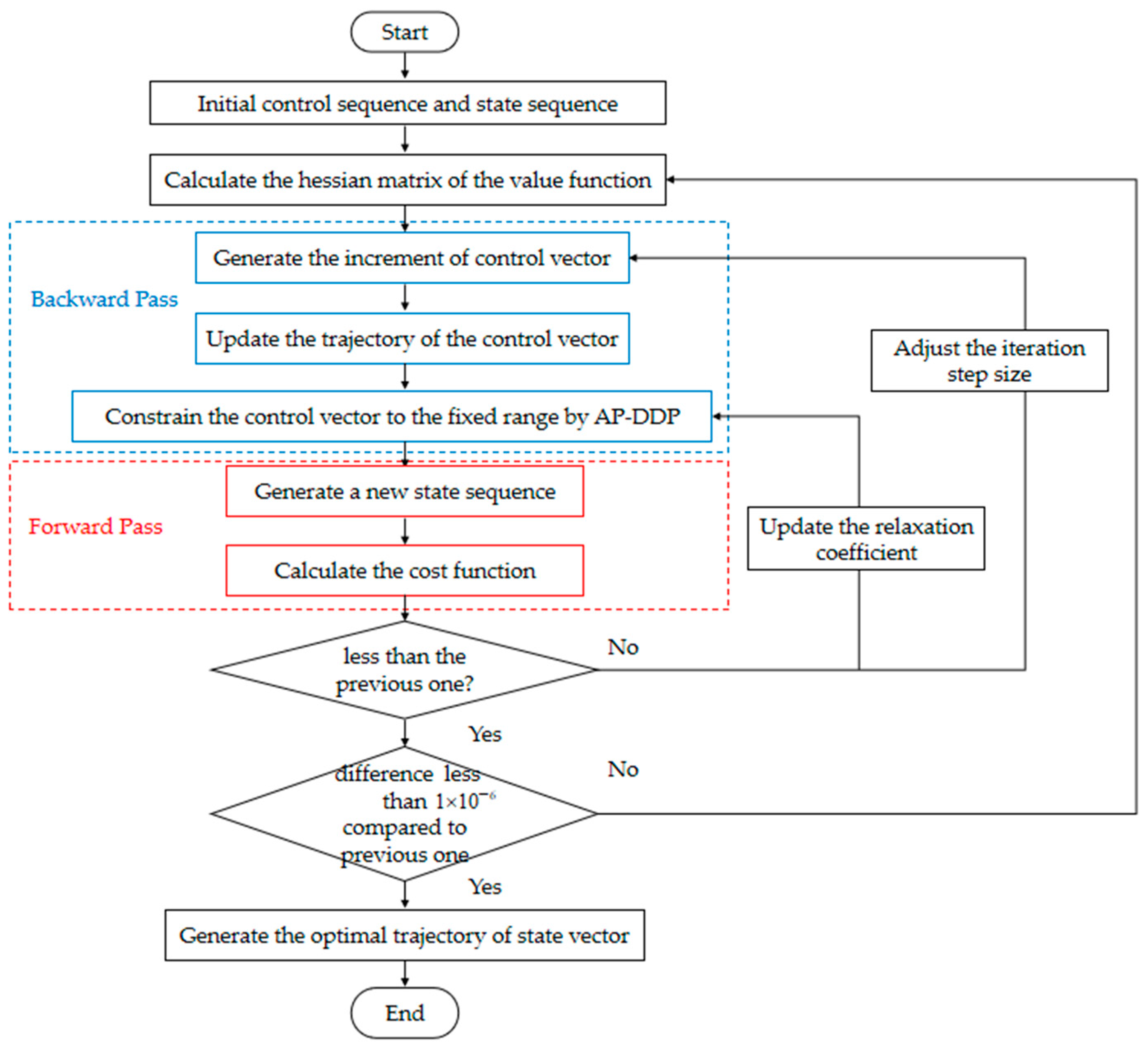

Figure 2.
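Equation (11) is the reference definition of the decay function. The sketch below only illustrates one hypothetical form consistent with the description: a linear term plus a power term in the cost ratio $\rho = J/J_0$, equal to 0.5 at $J = J_0$, tending to 0 as $J \to 0$, with a positive derivative. The weight `w`, the exponent `p`, and the function names are assumptions.

```python
def relaxation_coefficient(J, J0, eps0=0.5, w=0.5, p=2.0):
    """Hypothetical decay law: eps = eps0 * ((1 - w) * rho + w * rho**p) with rho = J / J0.

    Gives eps0 at J = J0 and 0 at J = 0; rho is clipped to [0, 1] for safety.
    """
    rho = min(max(J / J0, 0.0), 1.0)
    return eps0 * ((1.0 - w) * rho + w * rho ** p)


def relaxation_coefficient_deriv(J, J0, eps0=0.5, w=0.5, p=2.0):
    """d eps / dJ for 0 < J < J0: positive, so eps shrinks as the cost J decreases."""
    rho = J / J0
    return eps0 * ((1.0 - w) + w * p * rho ** (p - 1.0)) / J0
```

With $J_0 = 10$, this gives 0.5 at $J = 10$, 0.1875 at $J = 5$, and 0 at $J = 0$. The power term makes the coefficient fall faster than linearly once the cost has dropped substantially, which matches the intended behavior of a large coefficient early and a small one late.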

Specifically, the AP-DDP iteration comprises seven steps arranged in two loops:

Step 1: Initialize the state and control vectors at every time step, then compute the initial cost.

Step 2: Compute the gradient and Hessian matrix of the value function at each time step.

Step 3: Compute the control updates in the backward pass and generate the new control trajectory.

Step 4: Apply the adaptive projection function to restrict the control trajectory to the admissible interval.

Step 5: Generate the state trajectory by a forward pass using the constrained control trajectory, then compute the new cost.

Step 6: If the new cost is lower than that of the previous iteration, accept the current trajectory; otherwise, use the line search strategy to adjust the step size, generate a new relaxation coefficient from the decay function, and regenerate the control trajectory.

Step 7: Repeat Steps 2 to 6 until the cost difference between two successive iterations falls below a prescribed tolerance (or another convergence criterion is met). The resulting trajectory is taken as the optimal trajectory.
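The following Python sketch strings Steps 1 to 7 together on a scalar linear-quadratic problem with a box-constrained control. It illustrates the control flow under several assumptions and is not the paper's implementation: the projection is a hypothetical softplus-based soft clamp with relaxation coefficient `eps`, the decay function is a hypothetical linear-plus-quadratic law in $J/J_0$ starting from 0.5, the projection is applied to the updated controls in the forward pass, and the line search halves the step size until the cost decreases. Because this decay law depends only on the current cost, regenerating the relaxation coefficient after a rejected step (Step 6) leaves it unchanged here. All names are hypothetical.

```python
import math


def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def project(v, lo, hi, eps):
    """Hypothetical relaxed projection (soft clamp) into (lo, hi); eps is the relaxation coefficient."""
    return lo + eps * (softplus((v - lo) / eps) - softplus((v - hi) / eps))


def decay(J, J0, eps0=0.5):
    """Hypothetical decay law: linear plus quadratic term in J / J0; eps0 at J = J0, 0 at J = 0."""
    rho = min(max(J / J0, 0.0), 1.0)
    return eps0 * (0.5 * rho + 0.5 * rho ** 2)


def ap_ddp_scalar(x0, N, a, b, q, r, qf, lo, hi, max_iter=50, tol=1e-8):
    """Sketch of Steps 1-7 for x' = a*x + b*u with cost sum 0.5*(q*x^2 + r*u^2) + 0.5*qf*x_N^2."""

    def cost(X, U):
        running = sum(0.5 * (q * x * x + r * u * u) for x, u in zip(X[:-1], U))
        return running + 0.5 * qf * X[-1] ** 2

    # Step 1: zero initial controls, initial rollout and initial cost J0.
    U = [0.0] * N
    X = [x0]
    for u in U:
        X.append(a * X[-1] + b * u)
    J0 = J = cost(X, U)

    for _ in range(max_iter):
        eps = max(decay(J, J0), 1e-6)  # relaxation coefficient from the current cost
        # Steps 2-3: backward pass for V_x, V_xx and the gains k, K.
        Vx, Vxx = qf * X[-1], qf
        k, K = [0.0] * N, [0.0] * N
        for i in reversed(range(N)):
            Qx, Qu = q * X[i] + a * Vx, r * U[i] + b * Vx
            Qxx, Quu, Qux = q + a * a * Vxx, r + b * b * Vxx, a * b * Vxx
            k[i], K[i] = -Qu / Quu, -Qux / Quu
            Vx = Qx + K[i] * Quu * k[i] + K[i] * Qu + Qux * k[i]
            Vxx = Qxx + K[i] * Quu * K[i] + K[i] * Qux + Qux * K[i]
        # Steps 4-6: project the updated controls, roll out, and halve alpha until the cost drops.
        alpha, accepted = 1.0, False
        while alpha > 1e-4:
            X_new, U_new = [x0], []
            for i in range(N):
                u = project(U[i] + alpha * k[i] + K[i] * (X_new[-1] - X[i]), lo, hi, eps)
                U_new.append(u)
                X_new.append(a * X_new[-1] + b * u)
            J_new = cost(X_new, U_new)
            if J_new < J:
                accepted = True
                break
            alpha *= 0.5
        # Step 7: stop when no step decreases the cost or the decrease falls below tol.
        if not accepted:
            break
        improvement = J - J_new
        U, X, J = U_new, X_new, J_new
        if improvement < tol:
            break
    return U, X, J
```

With $x_0 = 5$, five steps, $a = b = q = 1$, $r = 0.1$, $q_f = 10$ and $u \in [-1, 1]$, the returned controls stay inside the bounds and settle close to $-1$ for most of the horizon, because the unconstrained solution would push harder than the limits allow.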

The adaptive projection function improves the behavior of DDP in two phases. Early in the iterations, a larger relaxation coefficient makes the projection function smoother, so the control update is less likely to shrink toward zero; this helps DDP approach the optimum quickly and avoid local optima. As the iterations progress, the relaxation coefficient decreases, allowing the control trajectory to reach the constraint boundary and improving the accuracy of the solution.

3.2. Line Search Strategy

The AP-DDP method uses a line search strategy in the forward pass to ensure that the algorithm converges to the optimal solution. With the line search, the forward-pass control update becomes

$$\hat{u}_i = u_i + \alpha k_i + K_i (\hat{x}_i - x_i) \tag{13}$$

where $\alpha$ represents the line search step size, which is restricted to the interval (0, 1] in this paper and scales the control update in each iteration. In each iteration, AP-DDP computes the update direction of the control policy from the local quadratic model, and the step size is chosen by a line search method (such as the Armijo criterion) so that the actual decrease in the cost function is consistent with the reduction predicted by the model. This prevents divergence or trajectory deviation caused by an excessively large step and avoids the slow convergence caused by a very small step, balancing the stability and efficiency of the algorithm.
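A minimal sketch of such an acceptance test follows. It assumes the form commonly used in DDP, in which the model-predicted cost change for step size $\alpha$ is $\alpha \sum_i k_i^\top Q_{u,i} + \tfrac{\alpha^2}{2} \sum_i k_i^\top Q_{uu,i} k_i$. A trial step is accepted when the actual reduction is at least a fraction `c` of the predicted one (an Armijo-type sufficient-decrease test); otherwise $\alpha$ is multiplied by `beta`. The function name, the `forward` callback, and the constants are assumptions for illustration.

```python
def backtracking_line_search(J, forward, dV1, dV2, c=1e-4, beta=0.5, alpha_min=1e-4):
    """Return (alpha, J_new) for the first accepted step, or (None, J) if none is accepted.

    J: current total cost; forward(alpha): runs the forward pass and returns the new cost.
    dV1 = sum_i k_i^T Q_u,i and dV2 = sum_i k_i^T Q_uu,i k_i, collected in the backward pass.
    """
    alpha = 1.0
    while alpha >= alpha_min:
        J_new = forward(alpha)
        expected = -(alpha * dV1 + 0.5 * alpha ** 2 * dV2)  # predicted cost reduction
        if expected > 0 and (J - J_new) >= c * expected:
            return alpha, J_new
        alpha *= beta
    return None, J
```

For a one-dimensional cost $(u - 3)^2$ at $u = 0$ (so $Q_u = -6$, $Q_{uu} = 2$, $k = 3$), the full step $\alpha = 1$ lands exactly on the minimizer and is accepted immediately; if the forward pass returned higher costs for large steps, the search would back off to a smaller $\alpha$ instead.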