1. Introduction

As shown in the International Roadmap for Devices and Systems (IRDS) edited by the IEEE Computer Society, or in works such as [1,2,3], the memory part of a System on a Chip (SoC) or Network on a Chip (NoC) has a growing share, currently covering 75–85% of the integrated area. Therefore, efficient testing of the memory part is a very important requirement for producing reliable integrated circuits at competitive costs.

In this paper, the authors address the issue of testing RAMs with regard to the complex model of static unlinked neighborhood pattern-sensitive faults (NPSFs), a subclass of the coupling fault (CF) model [4,5]. According to the classification of memory faults presented, for example, in [2,6,7,8], the “static fault” class refers to faults sensitized by performing at most one memory operation, while the “dynamic fault” class refers to faults sensitized by two or more operations performed sequentially. According to the same taxonomy [6,7], CFs are said to be unlinked when they do not influence each other. In this paper, the class of static unlinked CFs is addressed.

The CF model reflects imperfections in the memory cell area that cause two or more cells to influence each other in their operation [2,3]. Depending on the number of cells involved in a memory fault, there are several classes of coupling faults, such as two-cell, three-cell, four-cell, or five-cell coupling. In the two-cell CF model, the cells involved in a CF can be anywhere in the memory [9]. In this way, this model also addresses memory faults [6]. For more complex CF models involving three or more cells, it is assumed that the cells involved in a memory fault are physically adjacent [5,10,11]. It is therefore necessary to know the memory structure, or more precisely the correspondence between logical addresses and physical addresses, in the form of row and column addresses. This realistic limitation makes the complexity of these fault models easier to manage [12,13,14]. For these complex CF models, testing is usually performed with additional integrated architectures called built-in self-test (BIST) logic [15,16,17,18,19,20].

Regarding these CF models, depending on the technology used, the accuracy of the testing, and the costs involved, two classes of fault models have been defined to cover the most common memory errors: (a) the classical class, in which a memory fault can be sensitized only by a transition write operation, and (b) an extended class, in which memory faults can be sensitized by transition write operations as well as by non-transition write or read operations, known as extended CF (ECF) models [9,12,13,14]. With the increase in density and the decrease in supply voltage, the range of memory defects has diversified and, as a result, ECF models are increasingly used when high testing accuracy is required. The authors consider, however, that a memory test covering the classical CF model can be adapted relatively easily to cover the corresponding ECF model [12]. This is also highlighted in this work.

For three-cell or four-cell classical CF models, reference tests are reported in [10,11,21,22,23,24]. For three-cell or four-cell ECF models, near-optimal tests are presented in [14].

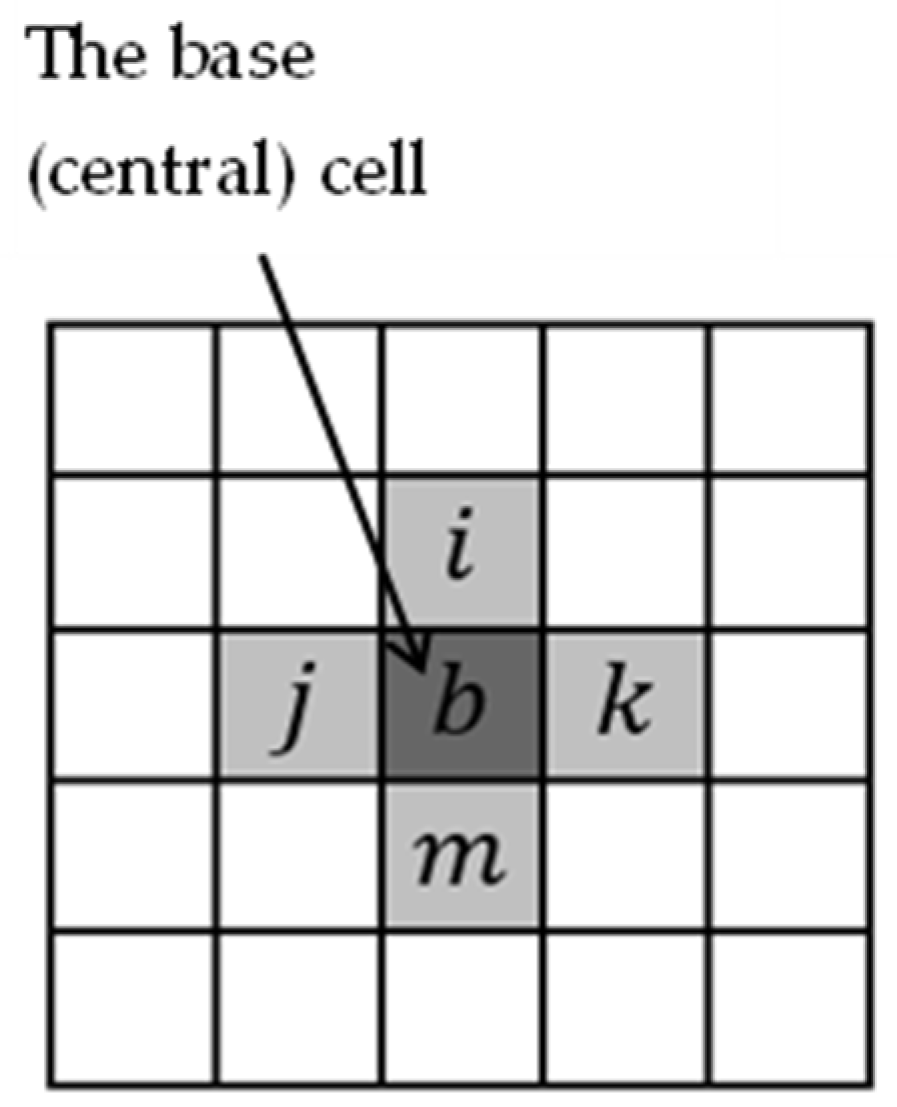

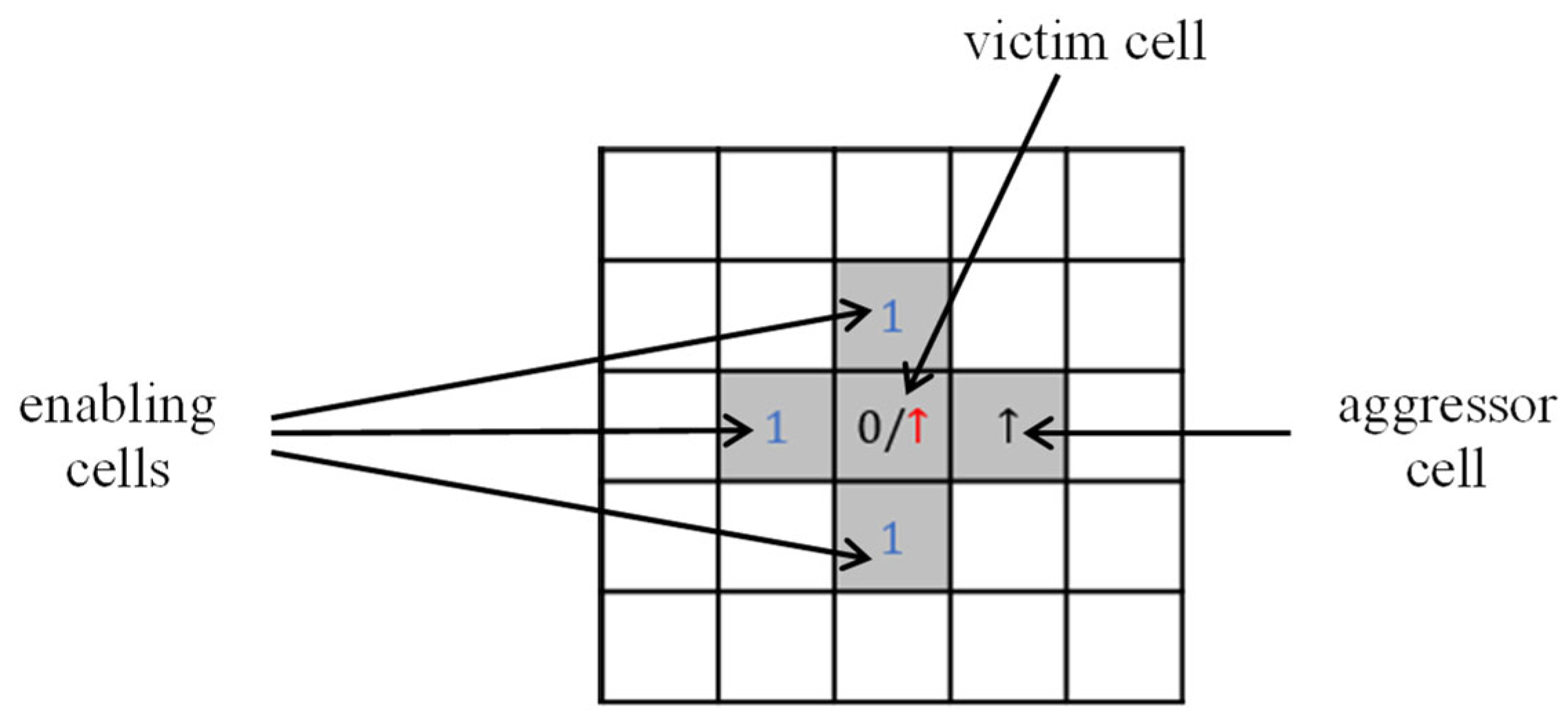

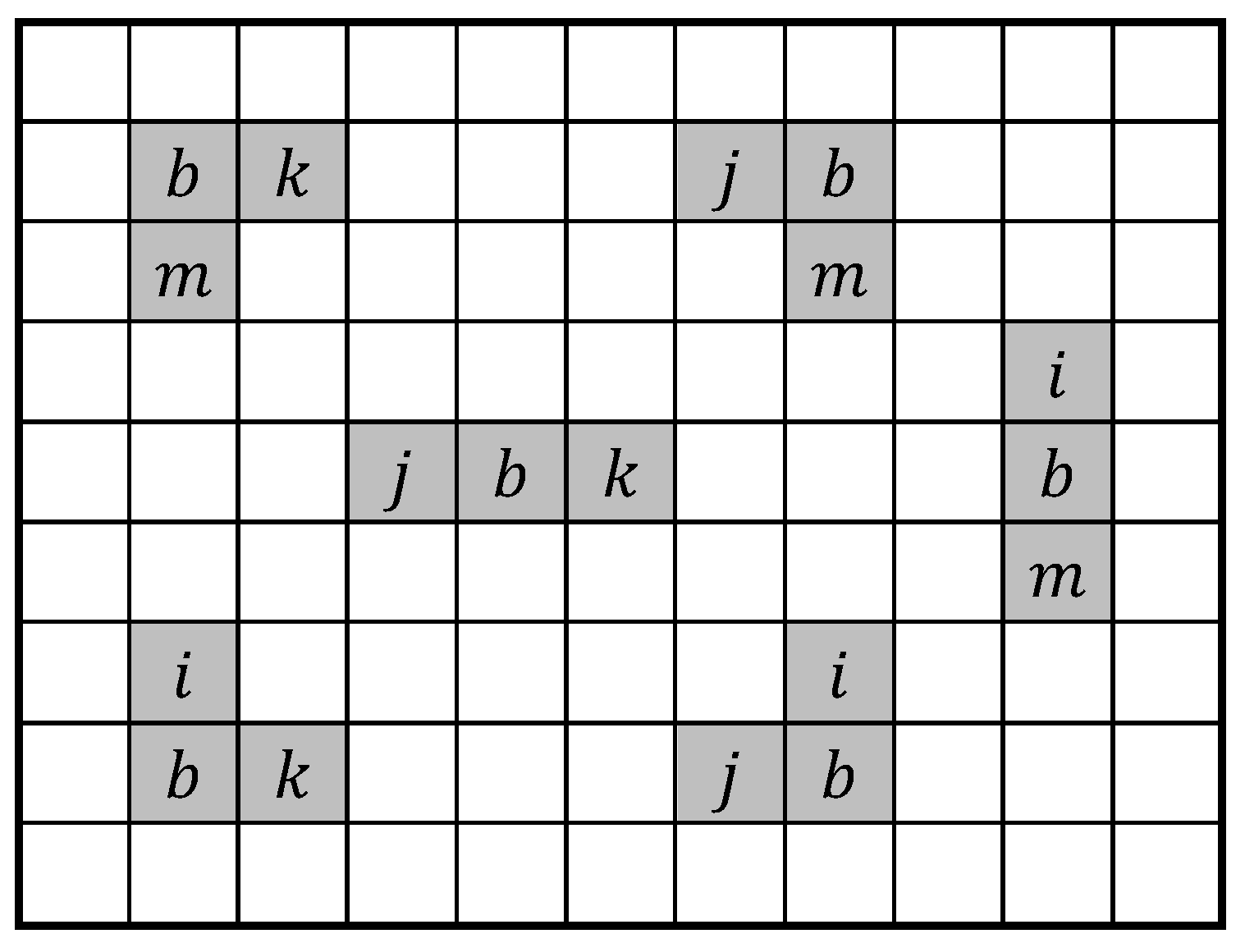

In this paper, we focus exclusively on the NPSF model, which is a subclass of five-cell CFs. The NPSF model involves five physically adjacent memory cells arranged in a configuration like the one shown in Figure 1. Note that this five-cell configuration also covers the six configurations of the three-cell CF model involving neighboring memory cells arranged in a corner or on a row or column, as considered in [5,13,14], for example, but does not completely cover the configuration of the four-cell CF model in which neighboring memory cells are arranged in a square [14].

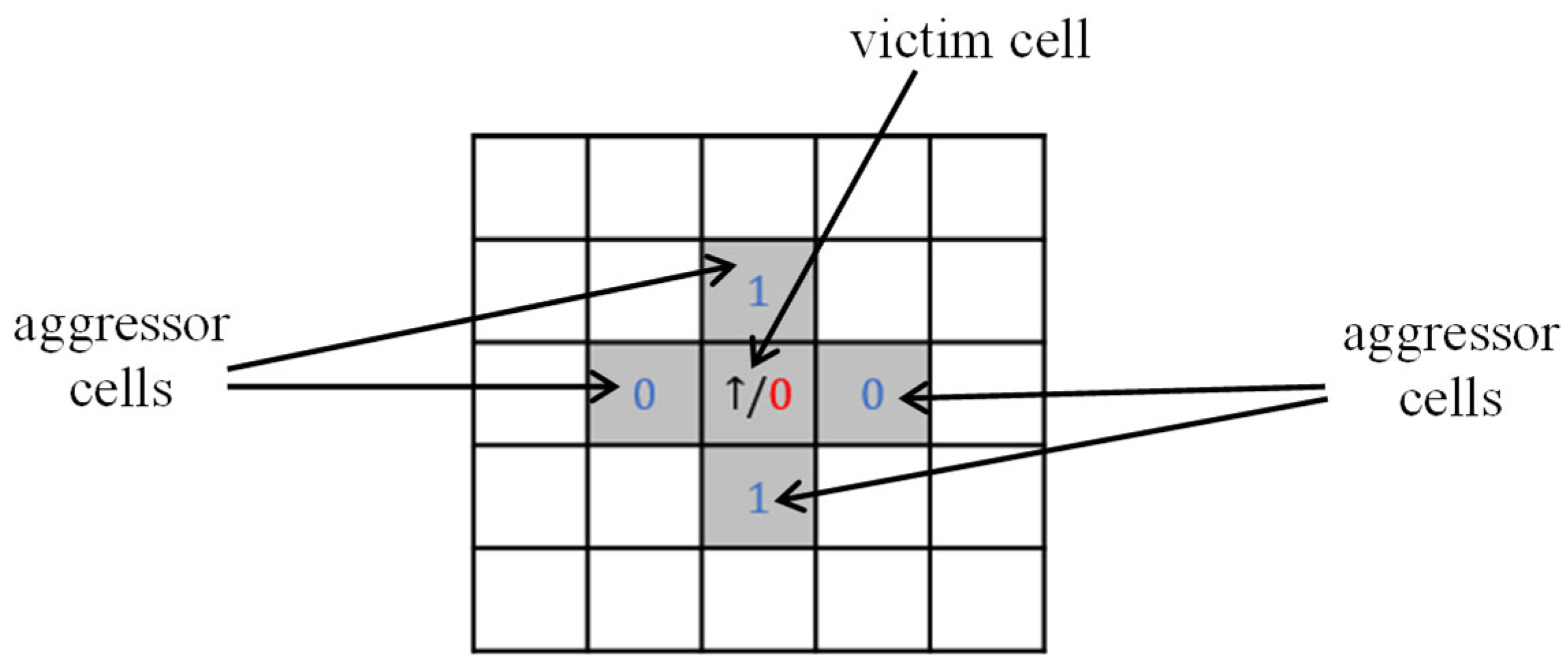

This fault model, which reflects the influence of neighboring cells on the central memory cell, was defined in the 1970s [25]. Specifically, we refer here to the classical NPSF model, in which fault sensitization involves a transition write operation in one cell of the group while the other four cells are in a certain state that favors the occurrence of the memory error affecting the central cell. The scientific community has continuously studied the NPSF model because of its importance and complexity. Memory testing for NPSF models is covered extensively in books, such as [2,3,4,26], and in review papers, such as [16,17,19].

For the NPSF model, some memory tests that were quite effective for the memory sizes of that period have been proposed since the 1980s [27,28]. For example, the memory test given by Suk and Reddy [28], which completely covers this model, is based on an algorithm for dividing the set of memory cells into two halves, based on row and column addresses, and is

long. A reduction in testing time was possible by applying march-type tests on different memory initialization patterns (data backgrounds). This idea was first proposed by Cockburn [23]. This technique, called “multirun march memory testing”, was then used to identify efficient memory tests for this complex NPSF model [29,30,31,32]. For example, in [31], to reduce the length of the test, Buslowska and Yarmolik propose a multirun technique with a random background variation. However, in that case, the NPSF model is only partially covered. A first near-optimal deterministic memory test for the NPSF model was proposed by Cheng, Tsai, and Wu in 2002 [32]. This memory test, called CM-79N, is 79N long and uses 16 memory initialization patterns of size 3 × 3. Ten years later, Huzum and Cașcaval [33] improved this result with another near-optimal memory test (called March-76N) that was slightly shorter, of length 76N. Compared to CM-79N, the March-76N memory test applies a different testing technique, but also uses 3 × 3 data background patterns. CM-79N and March-76N are the shortest deterministic memory tests that completely cover this complex fault model. Their authors consider these memory tests to be near-optimal rather than optimal, as additional write operations are required for the multiple memory initializations. Although near-optimal, these tests are not well suited for BIST implementation due to the complexity of the address-based data generation logic. More on this issue can be found in [34,35,36,37,38,39], and an in-depth analysis is also presented in this paper. For this reason, Cheng, Tsai, and Wu also proposed in the same article [32] another memory test (March-100N), which, although longer (and obviously non-optimal), is easier to implement in BIST-RAM architectures due to its 4 × 4 memory initialization patterns. A modified version of the March-100N memory test for the diagnosis of SRAM is given by Julie, Sidek, and Wan Zuha [36].

In this work, a new near-optimal test (MT_NPSF_81N) able to completely cover the classical NPSF model is proposed. This new test is of length 81N and uses 16 data backgrounds with patterns of size 4 × 4, making it suitable for implementation in BIST-RAM architectures. Compared to the March-100N test, this new memory test is significantly shorter and has a simpler structure. The synthesis of the address-based data generation logic for this memory test, considering a possible BIST implementation, is also discussed. This work also highlights the simplicity of address-based data generation logic in the case of 4 × 4 data backgrounds compared to 3 × 3 data backgrounds. For the extended NPSF model (ENPSF), a near-optimal test is also proposed.

The remainder of this paper is organized as follows: Section 2 includes assumptions, notations, a short description of the NPSF model, and some considerations related to multirun memory tests and to the necessary and sufficient conditions for detecting coupling faults. Section 3 describes new near-optimal memory tests for the classical NPSF model and the extended NPSF model that use 4 × 4 data background patterns. Section 4 presents a synthesis of address-based data generation logic for memory operations for a possible BIST implementation of the proposed memory tests. To emphasize the advantage of the proposed tests compared to other known memory tests that use 3 × 3 background patterns, where modulo 3 residues are required for row and column addresses, Section 5 presents a synthesis of address-based data generation logic for the March-76N memory test. A discussion related to the proposed new memory tests, covering several different aspects, is included in Section 6. Some experimental results that complete the comparative analysis between the proposed tests and other known tests are presented in Section 7. Some conclusions regarding this work, presented in Section 8, complete this paper.

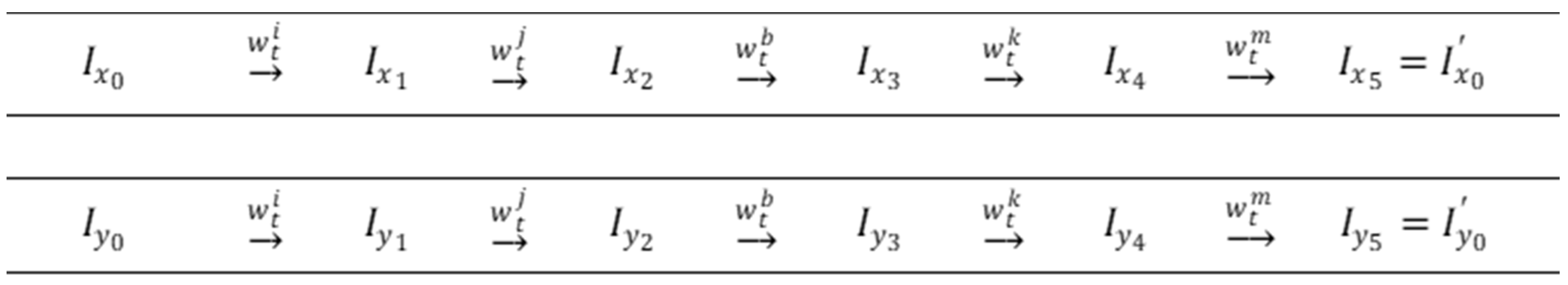

3. Near-Optimal March Tests for the NPSF Model

To cover the classical NPSF model, where a fault sensitization involves a transition write operation in a cell of the coupled cell group with the configuration shown in

Figure 1, a new memory test is proposed, called MT_NPSF_81N. In this test, two march elements are applied sixteen times on different data backgrounds (i.e., a multirun technique). The description of this new march multirun memory test is given in

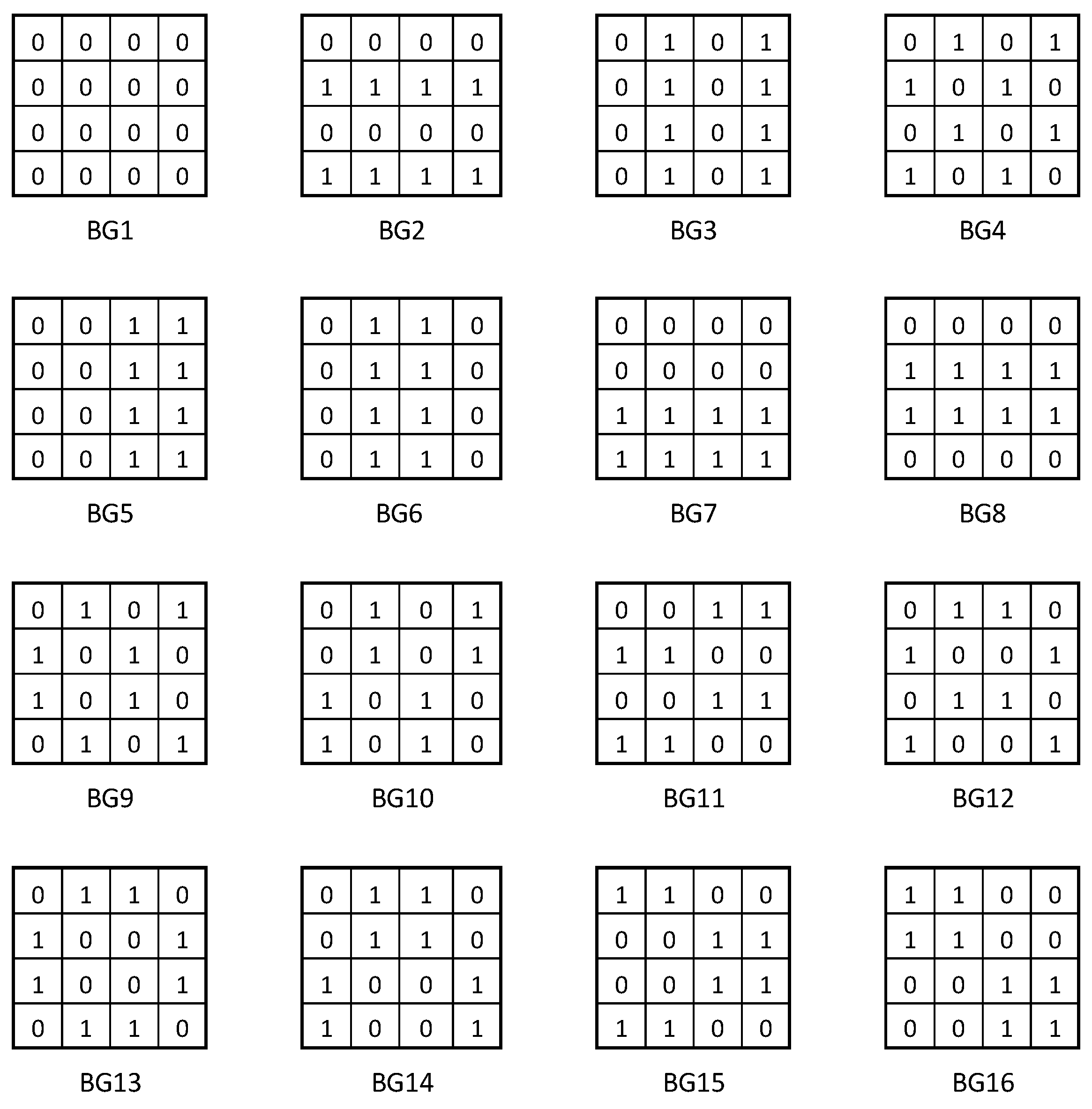

Figure 4, and the sixteen patterns used for memory initialization are shown in

Figure 5.

In this description, the first sequence performs the primary memory initialization, while the remaining sequences are test sequences with background changes, according to the patterns shown in Figure 5. Note that any change in the data background involves a status update for only half of the memory cells. Moreover, in order to satisfy the observability condition, any write operation that changes the state of a memory cell is preceded by a read operation. Thus, for a memory of N cells, the initialization sequence involves N write operations, while a test sequence with a background change requires N/2 read operations and N/2 write operations (so, the same number N of memory operations). As the name of the test indicates, the length of the memory test is 81N.

The ability of the MT_NPSF_81N memory test to fully cover the classical NPSF model is discussed in the following.

Theorem 1. The test algorithm is able to detect all unlinked static faults of this classical NPSF model.

Proof. Let g be an arbitrary group of five neighboring memory cells corresponding to the well-known NPSF configuration shown in Figure 1. The ability of the MT_NPSF_81N test algorithm to sensitize and observe any fault that may affect a cell in the g-group of cells is demonstrated in the following.

To prove this statement, it is shown that during memory testing, MT_NPSF_81N performs all possible operations in this g-group of cells. In other words, MT_NPSF_81N completely covers the graph of states describing the normal operation of cells in this group.

However, the g-group of cells can be anywhere in memory. The operations performed during memory testing differ from one group of cells to another because the initializations of the cells in a group depend on their position in memory (a position fully specified by the base cell address). How, then, can the operations performed within a group of cells be monitored when the number of distinct cases is so large? The following analysis aims to answer this question or, at least, to provide a way to manage the complexity of this problem.

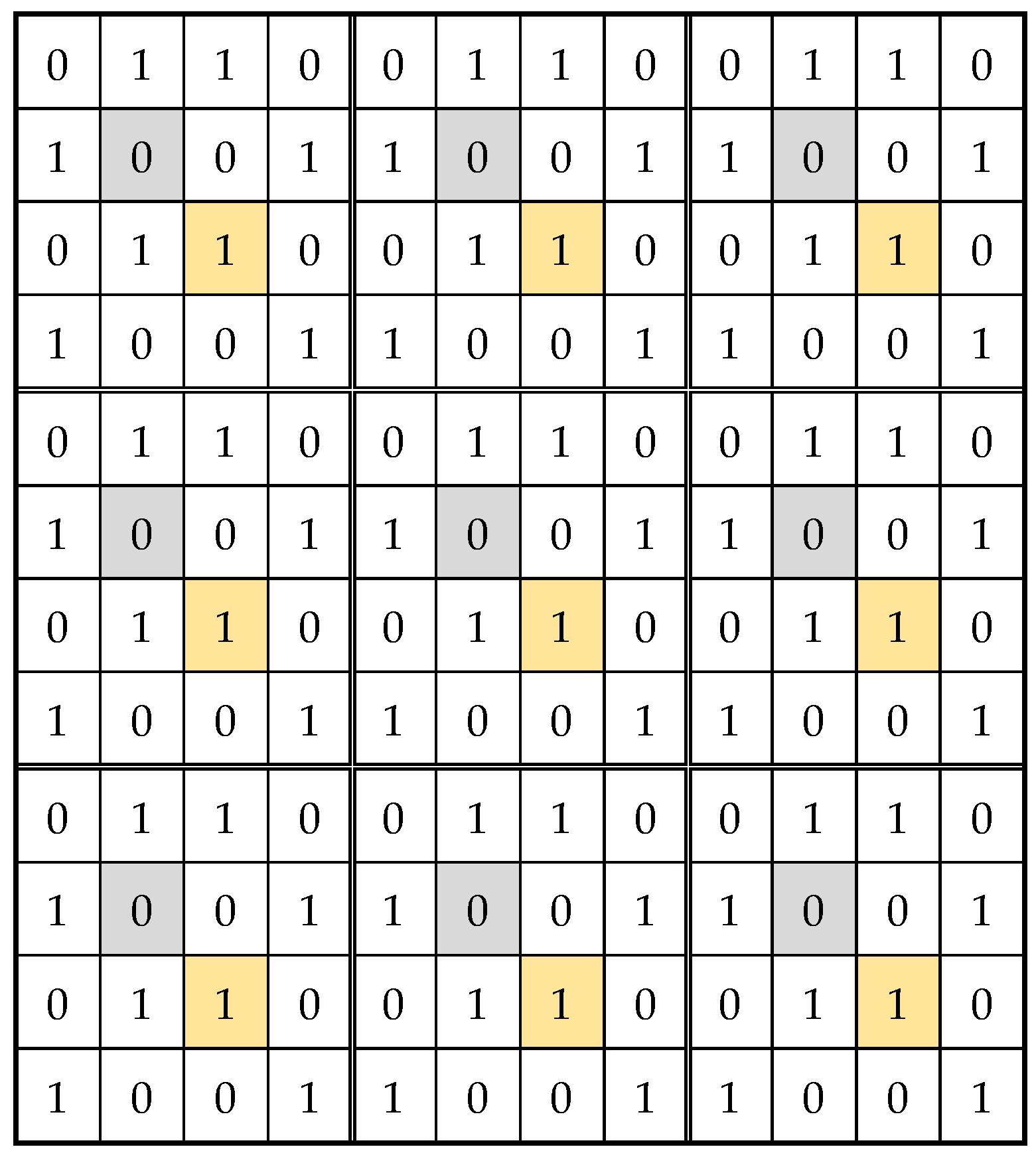

As is known, a data background is obtained by replicating a data pattern in memory both horizontally and vertically. Given that this test algorithm uses 4 × 4 memory initialization patterns, it follows that cells taken at every fourth row and every fourth column are brought to the same initial state. This aspect is illustrated in Figure 6, where the BG12 pattern is applied. For easier understanding, two groups of identically initialized cells are highlighted in this figure: a group of cells marked in gray, initialized with zero, and another group of cells marked in yellow, initialized with one.

Note that for cells initialized with the same value, the same memory operations will be performed when a march element is applied. Starting from this observation, the memory cells are divided into 16 classes (subsets), denoted by

depending on the residues of the row address modulo 4 (

) and the column address modulo 4 (

), as shown in

Table 3. For example, the cells highlighted in gray in

Figure 6 belong to class

because for any of these cells,

and

, while the cells highlighted in yellow belong to class

, for which

and

. This division of the memory cell set into 16 classes according to row and column addresses is illustrated in

Figure 7.
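The tiling of a 4 × 4 pattern and the resulting division of the cells into 16 classes can be illustrated with a minimal Python sketch. The pattern used here is a hypothetical placeholder, not one of the sixteen backgrounds of Figure 5, and the names `PATTERN`, `background`, and `cell_class` are introduced only for illustration.

```python
# Hypothetical 4 x 4 initialization pattern (NOT taken from Figure 5).
PATTERN = [
    [0, 0, 1, 1],
    [0, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 1],
]


def background(rows, cols, pattern=PATTERN):
    """Tile the 4 x 4 pattern horizontally and vertically over the array."""
    return [[pattern[r % 4][c % 4] for c in range(cols)] for r in range(rows)]


def cell_class(r, c):
    """Class of a cell: residues of its row and column addresses modulo 4."""
    return (r % 4, c % 4)


memory = background(16, 16)

# Collect the initial values seen in each class; every class should
# contain exactly one value, whatever pattern is tiled.
values_per_class = {}
for r in range(16):
    for c in range(16):
        values_per_class.setdefault(cell_class(r, c), set()).add(memory[r][c])
```

Because the class depends only on the residues modulo 4, the same grouping holds for any 4 × 4 pattern and any memory size, which is why one representative group per class is enough in the analysis.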

Let

now be the class (subset) of all

-groups of five cells in the known configuration for which the base cell

. This class division of the

-groups of five cells is also illustrated in

Figure 7 in different colors. This figure shows that the cells in the neighborhood of the base cells also belong to the same classes. For example, for the groups of cells in the subset

, the cells identified with

belong to

, those identified with

belong to

, and so on. As a result, for all groups within the same class, exactly the same operations will be performed during the testing process, and therefore the same state transitions will result. Given this, the study of monitoring state transitions in a group of cells

is limited to 16 distinct groups, one for each class

. This idea is the basis for understanding this proof.

Given this simplification, let us now analyze the initial state of a g-group of five memory cells in the known configuration, depending on the data background and on the class of groups to which it belongs.

The status code for a

-group of cells is a five-bit word corresponding to the component cells in the form

. It is worth noting that a group

of cells in the state

will reach the complementary state

when the march element

is applied. We say that

and

are complementary states taking into account the following property:

Figure 8 illustrates the initial and complementary states,

and

, for two groups of cells

and

, depending on the data background, BG

. For the other 14 cell groups, the values of

and

are obtained in the same way.

Table 4 lists the initial and complementary states for any group of cells in the known configuration, depending on the initialization pattern

and the class to which the group belongs

.

The hexadecimal values in

Figure 8 are listed in

Table 4 in columns

and

. In this table, it can be verified that for any group

of five neighboring cells in the known configuration, regardless of the class to which the group belongs (i.e., any column), the 16 background changes result in 16 distinct initial states, such that

Furthermore, for any column in

Table 3, the following equation is valid:

If

represents the set of all

states (as in the truth table), based on Equations (3) and (4) we can write the following equation:

Note that the states in any of the 16 columns of

Table 4 satisfy Equation (5), which means that all initial complementary states are different from each other and all together form the set

.

As a remark, identifying initialization patterns for which this property is fulfilled involved a considerable research effort.

It is also important to note that, starting from an initial state, the execution of the first march element brings a g-group of cells to the complementary state, and the next march element (identical to the first) returns that group of cells to the initial state.

Based on all these aspects, we can conclude that any g-group of cells in the known configuration is brought into each of the 32 possible states, and a march element is then applied from each of them. In a full memory scan with the march element, five different transitions are performed in any group of five cells. On the whole, 32 × 5 = 160 transitions are performed in a group of five cells.

Figure 9 shows the transitions performed in a

-group of cells of class

in the first six of the sixteen stages of the memory test. The initial states after the six background changes are highlighted in bold. For easier identification, the transitions performed at each iteration are shown in different colors, and for the first transition within an iteration, an identification number is written on the edge.

For example, the 10 blue arcs that appear on the outer edge of the graph reflect the transitions made in a g-group of cells in the first stage of the test, when the memory is initialized with zero everywhere (BG1-0 solid data background) and the full memory is then scanned twice in ascending order by applying the two march elements. The cells in group g are accessed in ascending address order. During the first scan of the memory, as these cells are accessed, the state of group g evolves as follows: 00000, 10000, 11000, 11100, 11110, and 11111. Then, during the second scan, the group of cells successively reaches the states 01111, 00111, 00011, 00001, and finally 00000. The group of cells is thus returned to the initial state from which it started. In the same way, the transitions in the graph can be tracked for the other iterations of the memory test. Isolated nodes in the graph, without incident edges, reflect states that have not yet been reached in the first six iterations of the memory test. It should be noted that a complete graph with 160 edges would be difficult to draw and track.
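The state evolution listed above can be reproduced with a short Python sketch. It assumes that each march element reads a cell and then performs a transition write (inverting it), and that the five bits of the state word are listed in the order in which the cells are accessed; the function name `march_scan` is introduced here only for illustration.

```python
def march_scan(state):
    """One ascending scan over a five-cell group.

    `state` is a string of five bits, listed in access order. Each cell is
    inverted in turn (transition write); the list of states reached after
    each write is returned.
    """
    bits = [int(b) for b in state]
    trace = []
    for k in range(len(bits)):
        bits[k] ^= 1  # transition write on the k-th accessed cell
        trace.append("".join(str(b) for b in bits))
    return trace


first_scan = march_scan("00000")
second_scan = march_scan(first_scan[-1])
```

The first scan ends in the complementary state and the second scan returns the group to its initial state, giving ten transitions per data background.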

Next, it is shown that all 160 transitions performed in a group g of cells are different transitions and therefore the state transition graph is completely covered.

Figure 10 shows the state evolution for a

-group of cells starting from two different initial states,

, when the march element

is applied.

The intermediate states that appear in the figure,

and

,

are detailed in

Table 5.

From

Table 5 it follows that, since

and

Note that although often

,

in the two cases, the write operations are performed on different memory cells and therefore the respective transitions are also different. Several such cases are found in Figure 9, such as the transitions made in the states 10000, 10100, 10010, or 01001.

In conclusion, by applying the MT_NPSF_81N memory test, 160 different transitions are performed in the g-group of cells, completely covering the state transition graph. Moreover, each of these transitions is performed only once. As a result, the sensitization condition for all coupling faults specific to this classical NPSF model is met.
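The counting argument can be checked numerically with a minimal Python sketch. It assumes that each march element inverts the five cells of a group in access order, and it uses a hypothetical set of 16 initial states (first bit fixed to 0) that, together with their complements, form all 32 states; these are not the states listed in Table 4, and the function names are illustrative.

```python
from itertools import product


def transitions_from(state):
    """Transitions (state_before, cell_index) of one ascending march element
    that inverts the five cells of a group in access order."""
    s = list(state)
    performed = []
    for k in range(5):
        performed.append((tuple(s), k))
        s[k] ^= 1
    return performed, tuple(s)


def all_transitions(initial_states):
    """Collect the transitions of two consecutive march elements per background."""
    collected = []
    for s0 in initial_states:
        t1, s1 = transitions_from(s0)  # initial -> complementary state
        t2, s2 = transitions_from(s1)  # complementary -> initial state
        assert s2 == tuple(s0)
        collected.extend(t1 + t2)
    return collected


# Hypothetical initial states: NOT the ones in Table 4.
initial_states = [(0,) + rest for rest in product((0, 1), repeat=4)]
collected = all_transitions(initial_states)
```

In this sketch, the 16 runs perform 160 transitions with no repetition, which matches the number of edges of the state transition graph of a five-cell group (32 states, five possible cell inversions each).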

- II. MT_NPSF_81N detects any sensitized fault in a group g of coupled cells

Proving this property involves verifying the fulfillment of the two observability conditions,

and

, presented in

Section 2. For an easier and more direct verification of these conditions, Figure 11 shows all the operations performed in a group g of cells during the execution of the MT_NPSF_81N memory test. In the sequence of operations performed on cells in group g, write operations for initialization or background change are written in normal font, and those with the role of memory fault sensitization are highlighted in blue. Read operations that have the role of detecting possible errors resulting from fault sensitization are written in red.

An analysis of the sequence of operations shown in Figure 11 shows that when a cell is accessed, it is first read, thus checking whether a state change has occurred in the cell as a result of an influence from another aggressor cell. This check is required by condition

. Also, by analyzing the sequence of operations illustrated in this figure, it can be seen that after performing a write operation on a cell, it is then read (immediately or later) to verify that the operation was performed correctly. Thus, condition

is also met.

With the verification of both conditions regarding the sensitization of all considered memory faults and the ability to detect any resulting errors, the proof of the theorem is complete. □

Remark 1. For any group of cells that corresponds to the considered NPSF model, the state transition graph is completely covered, and furthermore, each transition is performed only once. That means that the graph of states is Eulerian. The only redundant operations are used for background changes. With this argument, it can be said that this memory test is near-optimal.

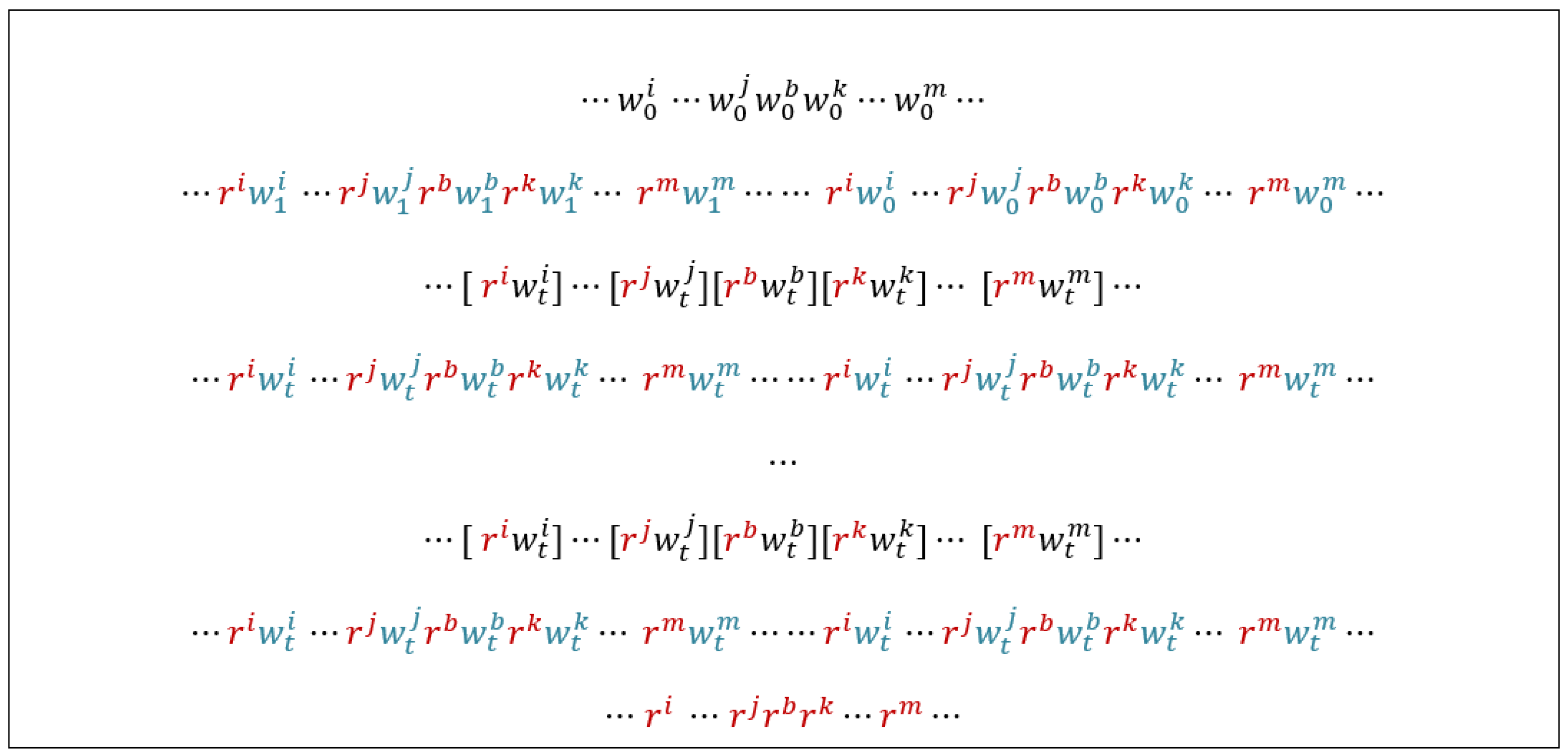

The proposed memory test can be adapted to cover the extended NPSF model (ENPSF), in which memory faults can be sensitized not only by transition write operations but also by read or non-transition write operations [12,14]. To this end, the two march elements of form

are extended to the form

. This extension leads to a near-optimal memory test for the ENPSF model, called MT_ENPSF, of length

, as shown in

Figure 12.

Note that in this case, when a certain state is reached, the read and non-transition write operations are first executed and checked, and then the state is changed by a transition write operation.

Remark 2. The ability of each of the two memory tests to cover the considered NPSF model was also verified by simulation.

Verifying by simulation the ability of a memory test to detect faults within the considered fault model is not a difficult problem. The following clarifications are made regarding this experiment:

- (1) The memory is simulated by a binary matrix of appropriate size (in our case, a matrix of 16 × 16 was sufficient). The operations described in the test algorithm are performed on this matrix.

- (2) Within the matrix, a group of five cells is chosen for each of the sixteen classes; these groups are then studied and monitored in turn.

- (3) The faults considered (see Table 1) are simulated/injected within the current g-group of cells and verified one by one. For each simulated fault, the test program is run to verify whether the fault is detected or not.

- (4) For the classical NPSF model, simulating a fault requires keeping a permanent record of the state of the five cells in group g. Monitoring all write operations in group g allows any change in the state of a cell in the group to be captured. Based on the state of the other cells, it is checked whether the sensitizing condition of the current fault is met. When this condition is met, the memory error is simulated by changing the value in the binary matrix at the location corresponding to the victim cell (the central cell in this model).

- (5) To simulate a memory error, it is more advantageous to use the facility provided by the TRAP interrupt. In some microcontroller-based systems (such as the STM32 Nucleo-64 development board with the STM32F103RB MCU), this facility is available to users. Thus, the operations for simulating a fault are implemented in the TRAP interrupt handling routine and, in this way, the test program remains unchanged.

- (6) For the extended NPSF model, the simulation experiment is more complex but does not pose any particular problems for an experienced programmer. The facility provided by the TRAP interrupt is no longer useful in this case.
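The fault injection procedure outlined in steps (1) to (4) can be sketched in Python as follows. This is only an illustration under stated assumptions: the fault description format (`base`, `aggressor`, `transition`, `pattern`), the class `SimulatedMemory`, and the single march element on a solid-0 background are hypothetical and do not reproduce the faults of Table 1 or the full MT_NPSF_81N test.

```python
class SimulatedMemory:
    """Binary matrix with an optional injected classical NPSF (assumed format)."""

    def __init__(self, rows, cols, fault=None):
        self.cells = [[0] * cols for _ in range(rows)]
        self.fault = fault

    def read(self, r, c):
        return self.cells[r][c]

    def write(self, r, c, value):
        old = self.cells[r][c]
        self.cells[r][c] = value
        f = self.fault
        if f is None or old == value:
            return  # only transition writes sensitize a classical NPSF
        br, bc = f["base"]
        ar, ac = br + f["aggressor"][0], bc + f["aggressor"][1]
        if (r, c) != (ar, ac) or (old, value) != f["transition"]:
            return
        # The other four cells must hold the sensitizing pattern.
        for (dr, dc), v in f["pattern"].items():
            if self.cells[br + dr][bc + dc] != v:
                return
        self.cells[br][bc] ^= 1  # memory error on the victim (central) cell


def march_element(mem, rows, cols, expected):
    """Ascending scan: read `expected`, then write its complement.
    Returns True as soon as a read returns an unexpected value."""
    for r in range(rows):
        for c in range(cols):
            if mem.read(r, c) != expected:
                return True
            mem.write(r, c, 1 - expected)
    return False


# Hypothetical fault: a rising write on the north neighbor flips the
# central cell when the other four cells of the group hold 0.
fault = {
    "base": (5, 5),
    "aggressor": (-1, 0),
    "transition": (0, 1),
    "pattern": {(0, -1): 0, (0, 0): 0, (0, 1): 0, (1, 0): 0},
}
detected = march_element(SimulatedMemory(16, 16, fault), 16, 16, expected=0)
false_alarm = march_element(SimulatedMemory(16, 16), 16, 16, expected=0)
```

Placing the sensitization check inside `write` plays a role similar to the TRAP handler mentioned in step (5): the test routine itself is unaware of the injected fault.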

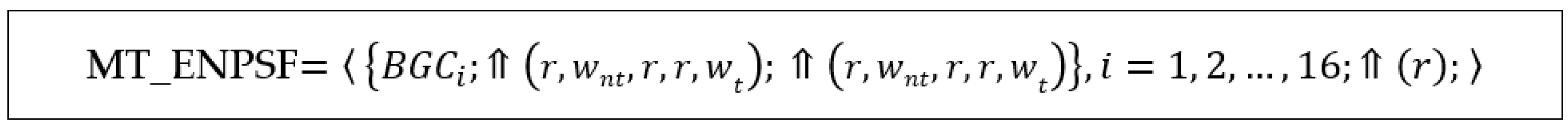

4. Considerations for BIST Implementation of the Proposed Memory Tests

In this section, the issue of a possible BIST implementation of the proposed memory tests is addressed. Considering the number of memory areas tested in parallel and the number of bits that form the memory words (the number of bits accessed simultaneously), there are four memory BIST architectures [8,17,18,19,20]: SASB (Single-Array Single-Bit), SAMB (Single-Array Multiple-Bit), MASB (Multiple-Array Single-Bit), and MAMB (Multiple-Array Multiple-Bit).

Since SASB is the basic architecture, in the following we limit ourselves to this architecture only. As mentioned in [19], adaptation to other architectures does not pose particular problems.

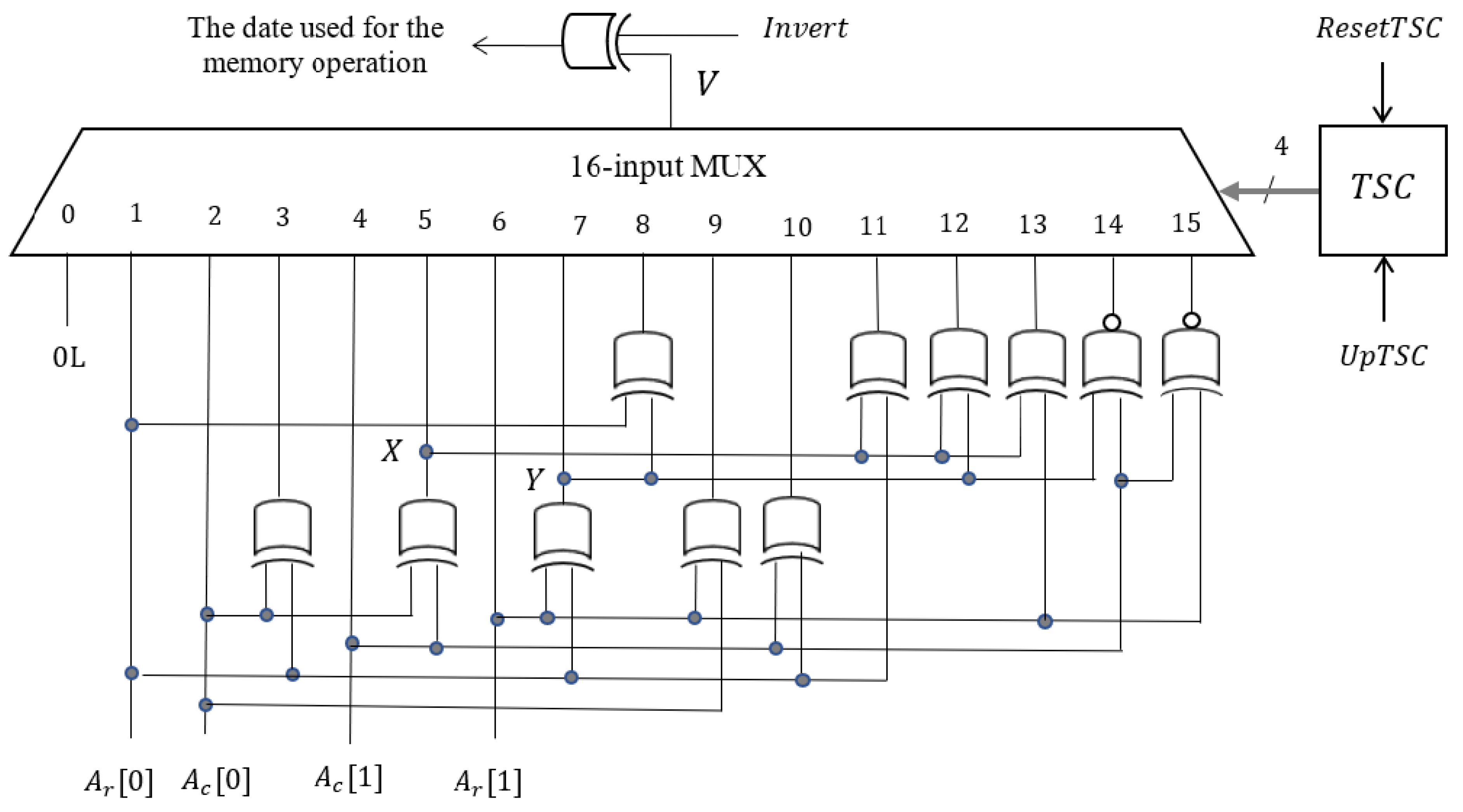

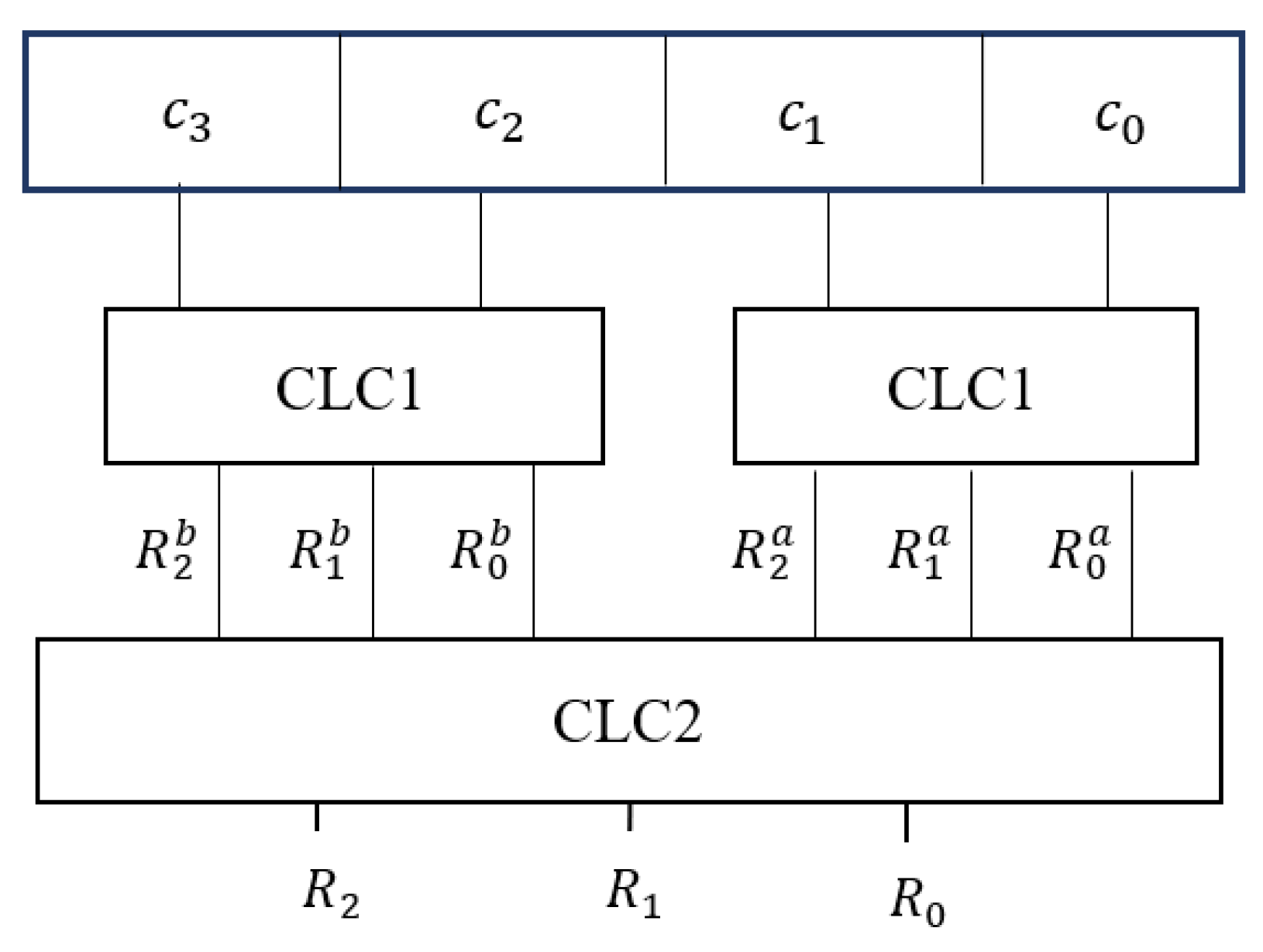

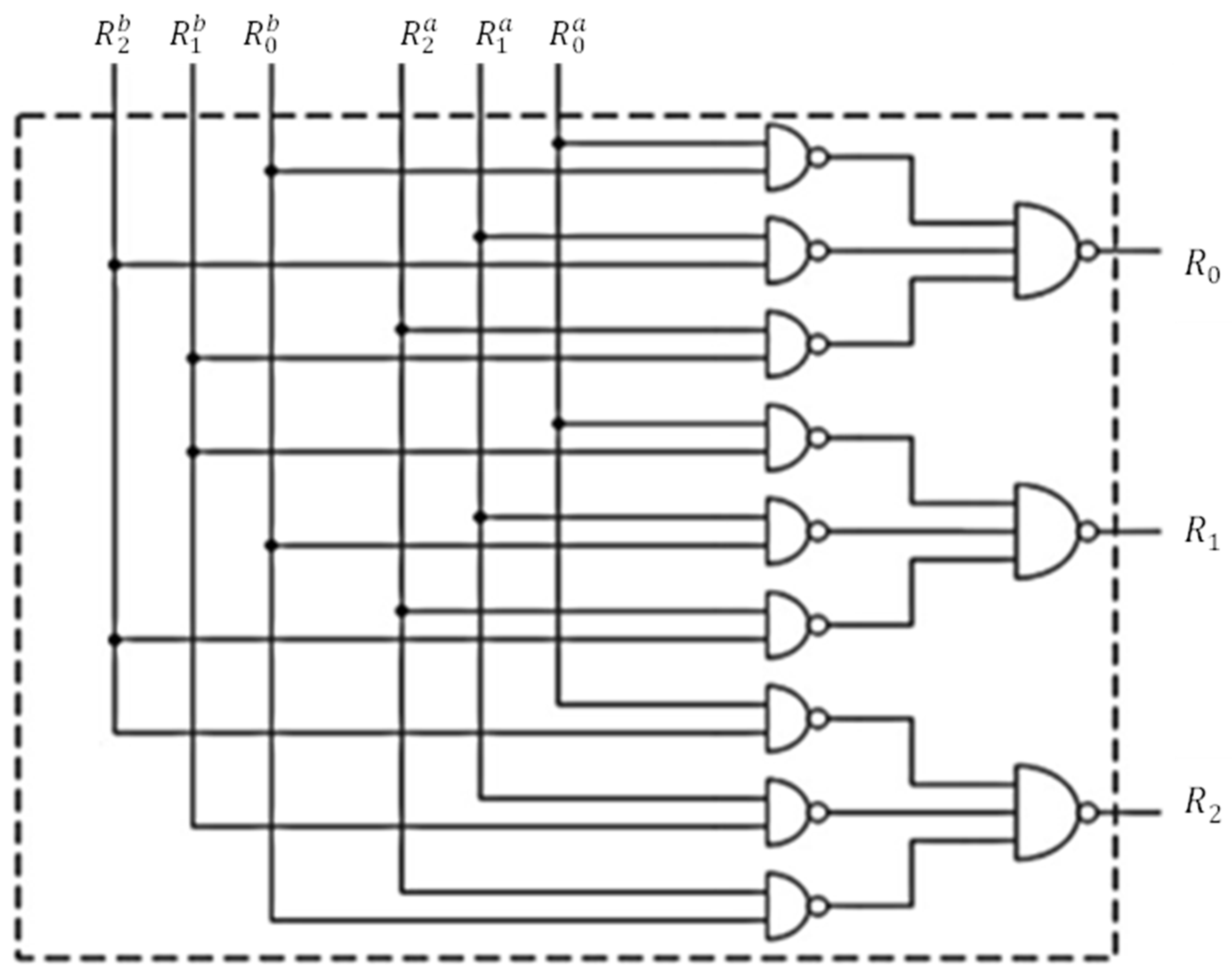

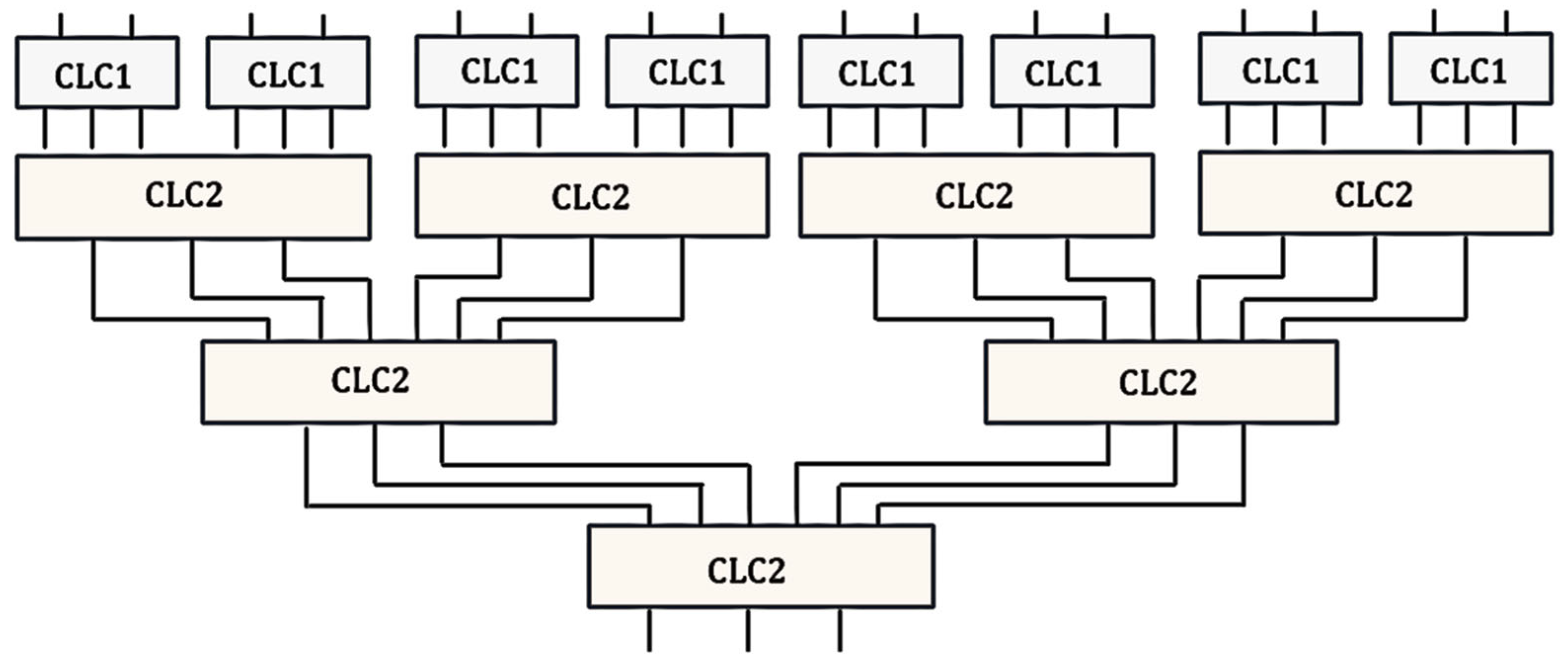

A block diagram of the self-test logic considered for implementing the proposed memory tests is shown in Figure 13. This self-test logic has three parts: microcode control logic, address generation logic, and data generation and response verification logic. In addition, two flip-flops reflect the status of the test process and the test result.

The address generation logic is essentially composed of two counters, one for the row address and the other for the column address. If the memory is scanned only in ascending order during the testing process, simple counters suffice, whereas if the memory is scanned in both directions, the counters must be reversible. In our case, the counters are simple. The commands in the address generation logic reset the address or increment it. When the upper limit of the tested memory area is reached, a status signal is generated.

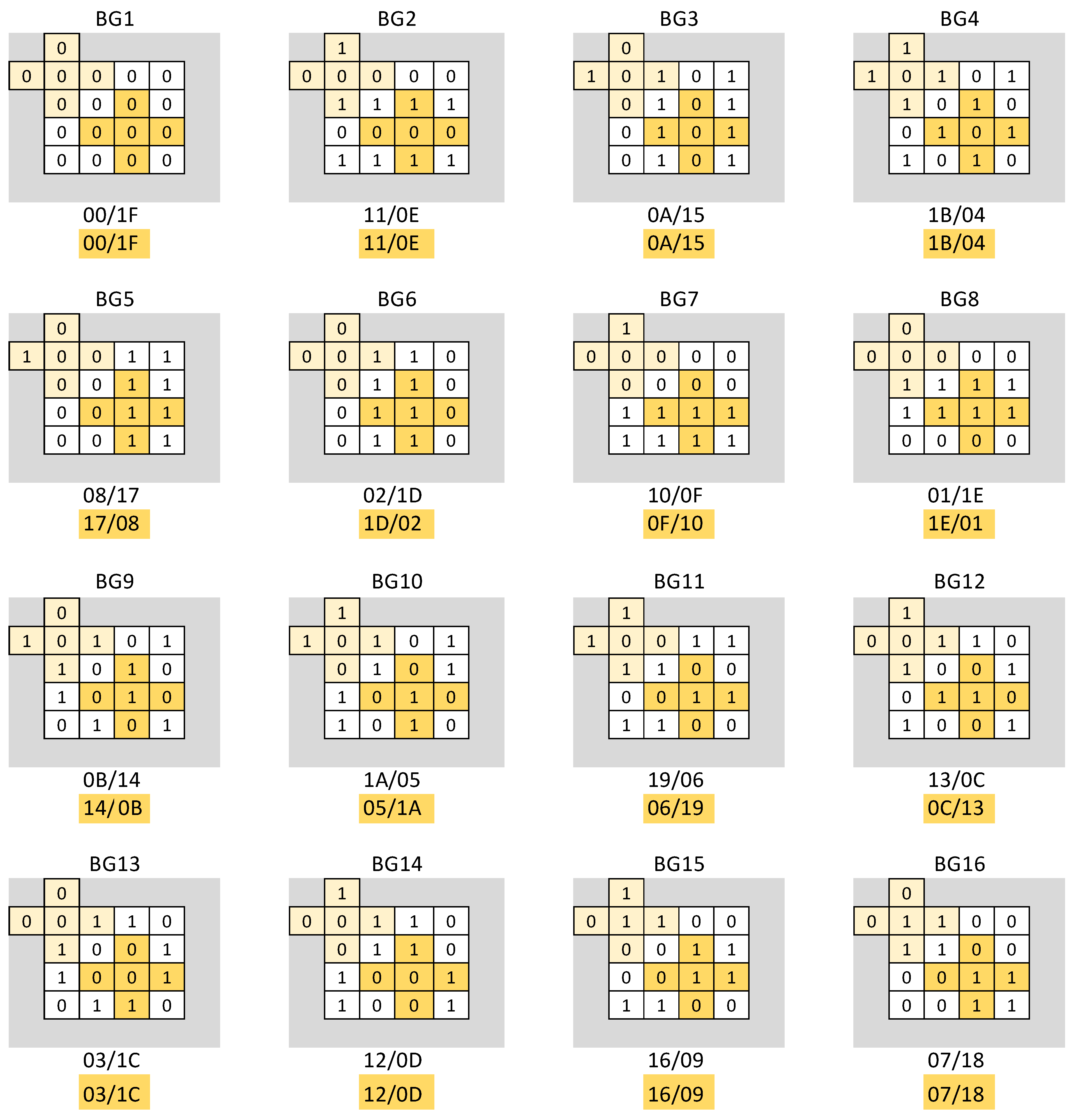

Recall that the tests proposed in this paper are multirun march memory tests. A specific feature of such a multirun test is the dependency between the value of a memory cell and its address. The proposed tests are distinguished from other known memory tests dedicated to the NPSF model especially by the data patterns used for memory initializations. For this reason, this analysis focuses mainly on the synthesis of the address-based data generation logic (data generator, in brief).

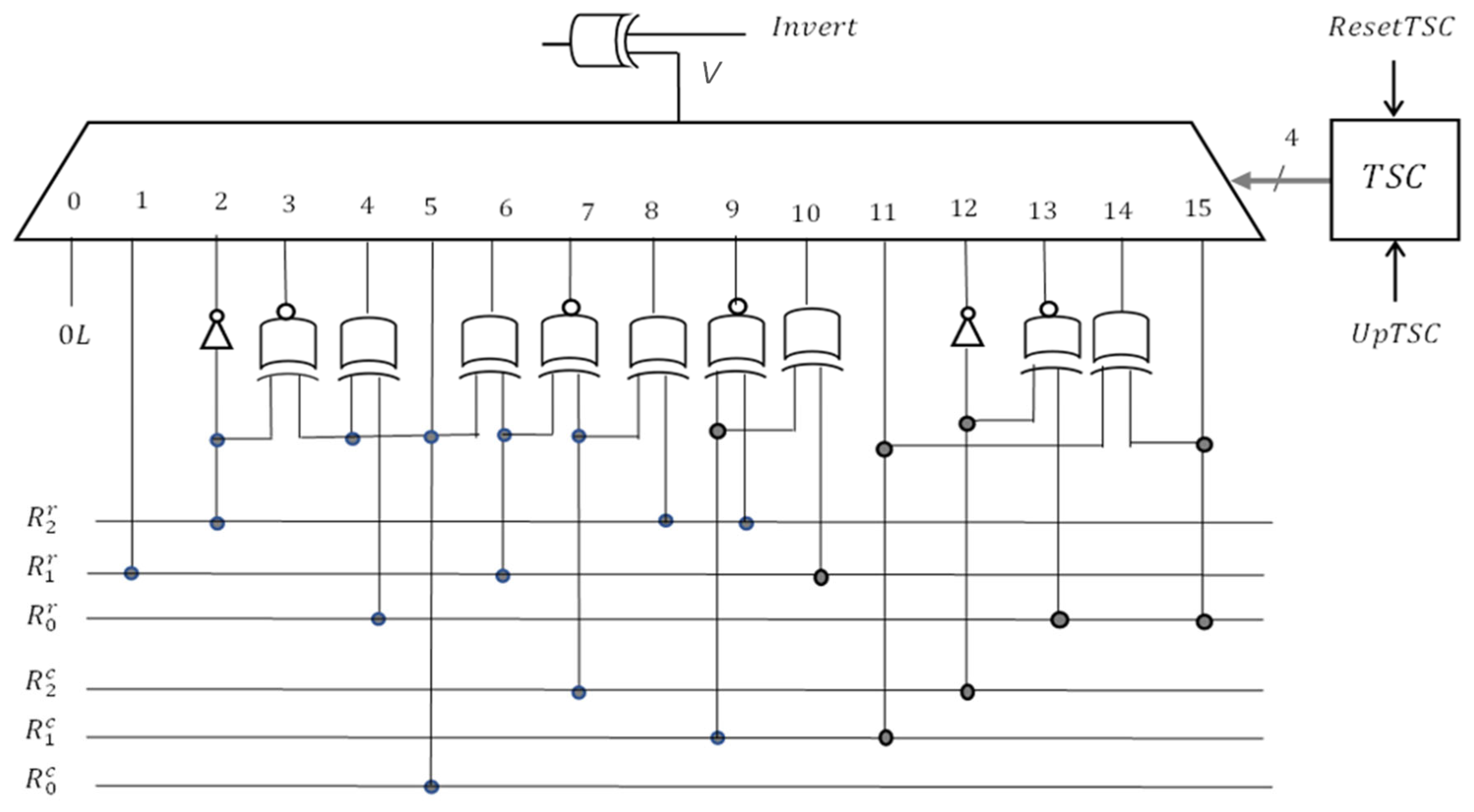

The dependency between data and address differs from one test step to another (from one iteration to another) because a different memory initialization pattern is used each time. To adapt the data generation logic to the current data background, a four-bit test sequence counter is used. The commands for this counter are reset and increment. Next, we will show that the 4 × 4 background patterns used by the proposed memory tests lead to a simple data generation logic, considerably simpler than that implied by 3 × 3 background patterns. This is because the logical value for the current cell is generated based only on the two least significant bits of the row address and/or the column address.

Let us denote by

and

the two least significant bits of the row address and by

and

the two least significant bits of the column address. Depending on the background pattern, the calculation relationship of the initialization value of a memory cell according to the address, denoted by

, is shown in

Table 6. The background patterns are shown in the table for easier verification.

The MT_NPSF_81N memory test repeats two march elements 16 times, on different data backgrounds. Consequently, in order to adapt the logical value of the accessed cell to the data background, a 16-input multiplexer is required. The 4-bit code provided by the test sequence counter is applied to the selection inputs. Considering the relationships between data and address for each data background, as presented in Table 6, a possible solution for the data generation logic is shown in Figure 14.
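The multiplexer-based data generation described above can be modeled behaviorally with a short Python sketch. The per-background functions listed here are hypothetical examples (solid, stripes, checkerboard) and are not the relations of Table 6; only four of the sixteen inputs are shown, and the `invert` input is an assumed control bit that complements the generated value.

```python
# Hypothetical functions of the two least significant bits of the row
# address (r1, r0) and of the column address (c1, c0). They do NOT
# reproduce Table 6; a full generator would list sixteen of them.
GENERATORS = [
    lambda r1, r0, c1, c0: 0,        # solid background
    lambda r1, r0, c1, c0: c0,       # column stripes
    lambda r1, r0, c1, c0: r0,       # row stripes
    lambda r1, r0, c1, c0: r0 ^ c0,  # checkerboard
]


def data_bit(ts, row, col, invert=0):
    """Value for the addressed cell.

    `ts` models the test sequence counter that drives the multiplexer
    selection inputs; only the two LSBs of each address are used.
    """
    r1, r0 = (row >> 1) & 1, row & 1
    c1, c0 = (col >> 1) & 1, col & 1
    return GENERATORS[ts](r1, r0, c1, c0) ^ invert
```

Since only the two least significant bits of each address enter the computation, the same logic applies unchanged to any memory size, for example `data_bit(3, 5, 2)` and `data_bit(3, 9, 10)` select the same pattern position.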

The command that appears in the data generation logic and provided by the control logic is required to perform transition write operations in the first memory scan and read operations in the second scan, as highlighted in green in this test sequence: .

This synthesis shows that the MT_NPSF_81N memory test, despite using a large number of initialization patterns with some irregular structures, can be implemented quite easily in BIST-RAM architectures. For comparison, the following section presents aspects regarding the synthesis of address-based data generation logic for a test that uses 3 × 3 memory initialization patterns, such as March-76N, the shortest near-optimal test currently known [34].

6. Discussion

In relation to the new tests proposed in this paper, additional considerations covering several different aspects are made in this section.

In this research paper, only the optimal memory tests in terms of the ability to detect faults of the NPSF model were considered. Specifically, all three memory tests dedicated to the classical NPSF model, CM-79N, March-76N, and MT_NPSF_81N, fully cover this model with a minimum number of test operations. Additional write operations are used only for background changes. For this reason, the paper does not refer to “fault coverage” as the main performance indicator of memory tests. The other performance indicator regarding the number of memory operations or the length of the test is found in the name of the memory test itself. This paper targeted another aspect regarding memory tests, namely, the ease of implementation in self-testing structures of the BIST-RAM type, and the proposed memory tests, MT_NPSF_81N and MT_ENPSF, were designed specifically for this purpose.

The difference in length among the three near-optimal tests comes from the different share of memory cells that are updated at a background change, which is 33.3% for March-76N, 44.4% for CM-79N, and 50% for MT_NPSF_81N. This difference is reflected in the duration of the memory testing. However, in [32], it is pointed out that selectively updating memory cells for background changes raises some implementation difficulties without a significant time gain. Therefore, designers often prefer to scan the entire memory on a background change. In these situations, the small disadvantage of the proposed test in terms of length disappears, and the advantage of simple implementation in BIST-RAM structures becomes even more important.
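The relation between the update share and the test length can be illustrated with a rough Python consistency check. The model below rests on several assumptions that are not stated in this section: an initial write of every cell, 15 background changes in which each updated cell is read and then written, 16 runs of two march elements with two operations per cell, and one closing read pass over the memory. The shares 1/3 and 4/9 are taken as the exact fractions behind 33.3% and 44.4%, and the function name `length_in_n` is illustrative.

```python
from fractions import Fraction


def length_in_n(update_share, backgrounds=16, march_elements=2, ops_per_cell=2):
    """Test length in units of N (number of memory cells), under the
    assumptions listed in the accompanying text."""
    init = 1                                          # initial write of every cell
    changes = (backgrounds - 1) * 2 * update_share    # read + write per updated cell
    marches = backgrounds * march_elements * ops_per_cell
    final_read = 1                                    # ASSUMED closing read pass
    return init + changes + marches + final_read


lengths = {
    "March-76N": length_in_n(Fraction(1, 3)),
    "CM-79N": length_in_n(Fraction(4, 9)),
    "MT_NPSF_81N": length_in_n(Fraction(1, 2)),
}
```

Under these assumptions the model gives 76N and 81N for March-76N and MT_NPSF_81N, and slightly more than 79N for CM-79N. If the entire memory is rescanned at each background change, the same model gives identical lengths for all three tests.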

The main disadvantage of memory tests using 3 × 3 data background patterns comes from the fact that additional logic is required to generate modulo 3 residues for row and column addresses. Related to this, it should also be noted that if the word length of the row or column address is not a power of two (and most often this condition is not met), an asymmetry appears in the expression for calculating the modulo 3 residues, which further complicates the synthesis of additional logic.
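The contrast between modulo 4 and modulo 3 residues can be made concrete with a small Python sketch. It relies only on the arithmetic fact that 2^i mod 3 equals 1 for even i and 2 for odd i; the function names and the `width` parameter are introduced here for illustration and do not describe the SPSr and SPSc logic of the compared tests.

```python
def residue_mod4(address):
    """Modulo 4 residue: simply the two least significant address bits."""
    return address & 0b11


def residue_mod3(address, width):
    """Modulo 3 residue computed from the individual address bits.

    Since 2**i mod 3 is 1 for even i and 2 for odd i, every one of the
    `width` address bits contributes to the result.
    """
    total = 0
    for i in range(width):
        bit = (address >> i) & 1
        total += bit * (1 if i % 2 == 0 else 2)
    return total % 3
```

The modulo 4 residue needs no logic beyond wiring, whereas the modulo 3 residue depends on every address bit, so the corresponding hardware must be resized whenever the address width changes.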

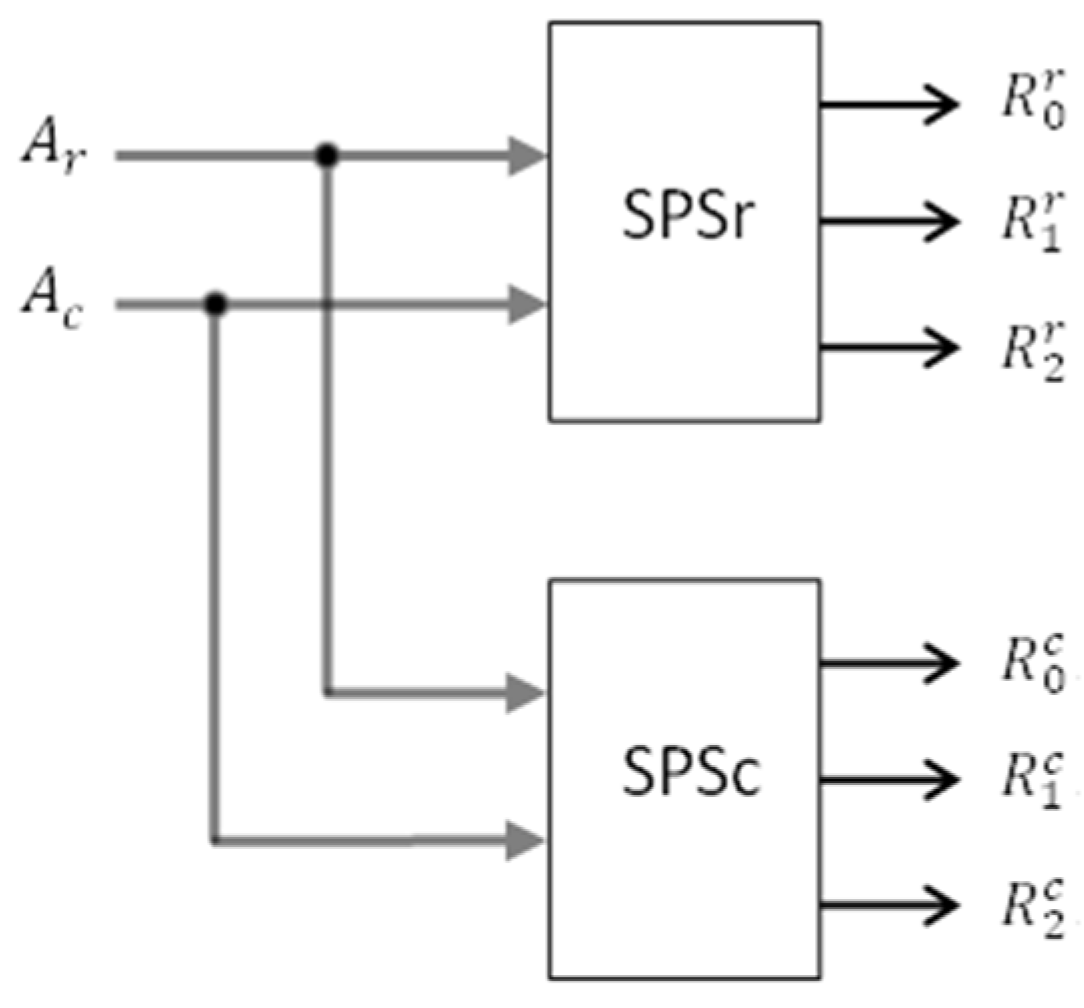

In the case of tests using 2 × 2 or 4 × 4 background patterns, the address-based data generation logic does not depend on the size of the memory being tested. The same can be said about the control logic. Only the address counters must be sized according to the geometry of the memory area. However, in the case of tests using 3 × 3 background patterns, the two additional logic blocks for calculating the modulo 3 residues of the row and column addresses (SPSr and SPSc) depend on the length of the address words and therefore differ from one memory to another. The propagation time through these additional logic blocks is also variable. This is a major disadvantage of the March-76N and CM-79N tests compared to the proposed test, MT_NPSF_81N.

BIST structures are implemented especially in SRAM memories, which have a short response time (on the order of nanoseconds) and, as a result, can operate at high frequencies. To detect faults that manifest at high frequencies, the memory must be tested in its normal operating mode. For memories with self-test facilities, an important limitation on the speed of operation in memory test mode is related to how the control logic is implemented. If the frequency at which the memory needs to be tested is critical, then the control logic can be implemented hardwired, rather than microprogrammed, as is usually performed. In such a situation, the propagation time through the additional logic for generating the modulo 3 residues of the row and column addresses has a greater influence on the speed at which the memory test is performed. However, a quantitative assessment of this influence requires detailed knowledge of the structure of the control unit.

In BIST-RAMs, the share of additional self-test logic in the entire structure, also reflected in terms of area overhead or additional power consumption, depends largely on the size of the memory being tested. This is because, as previously mentioned, the complexity of the additional logic that provides self-test facilities is almost the same, regardless of the size of the memory being tested.

As mentioned in the previous section, more complex architectures are commonly found in memory circuits, such as the MAMB architecture in 3D memories. From a testing perspective, these architectures do not present any different problems, since their structure is designed so that multiple areas can be tested in parallel. Therefore, the basis of any testing experiment is the fault models and tests designed for SASB architectures.

The proposed tests also cover the three-cell coupling model involving neighboring memory cells arranged in a corner or on a row or column [5,10,11,12,13,14] in the classical and extended version, respectively, since the three-cell configurations are included in the five-cell configuration (see Figure 1).

The background patterns of size 3 × 3 or 4 × 4 used to address the NPSF model also influence, to some extent, the ability of memory tests to cover other, simpler coupling fault models, such as two-cell coupling, three-cell coupling, or four-cell coupling. To highlight this point, the following section presents the experimental results obtained by simulating the ability of the March_76N and MT_NPSF_81N memory tests to cover these simpler fault models. Note that the two tests apply the same testing technique but use background patterns of different sizes, 3 × 3 and 4 × 4, respectively.

7. Simulation Results

To evaluate the ability of a memory test to cover a specific fault model, the simulation technique briefly described in Section 3 was used. The following fault models were considered in this study: (1) two-cell coupling, where the cells in a group can be any memory cells; (2) three-cell coupling; (3) four-cell coupling; and (4) the classical NPSF model. As presented in the Introduction, for the three-cell and four-cell coupling models, the evaluation is limited to cases where the cells in a group are physically adjacent.

Depending on the fault model considered and the memory test evaluated, a large number of cell groups were selected for monitoring to cover all write operations performed during memory testing that may sensitize faults within the studied model.

For this purpose, when evaluating the MT_NPSF_81N test, which uses 4 × 4 background patterns, the set of memory cells is divided into sixteen classes, as known from Section 3, while when evaluating the March_76N test, which uses 3 × 3 background patterns, the set of memory cells is divided into nine classes, depending on the modulo 3 residues of the row and column addresses, as shown in Table 11.
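The division into classes can be sketched as follows. This is only an illustration under stated assumptions: the class of a cell is taken to be determined by the residues of its row and column addresses modulo the pattern size k (k = 3 or k = 4), and the row-major class numbering used here is hypothetical and need not match the numbering in Table 11 or Section 3.

```python
from collections import defaultdict


def cell_class(row: int, col: int, k: int) -> int:
    """Class index of a cell for a k x k background pattern.

    Assumption: the class depends only on (row mod k, col mod k);
    the row-major numbering is chosen for illustration.
    """
    return (row % k) * k + (col % k)


def partition(rows: int, cols: int, k: int) -> dict:
    """Group all cells of a rows x cols binary matrix by class index."""
    classes = defaultdict(list)
    for r in range(rows):
        for c in range(cols):
            classes[cell_class(r, c, k)].append((r, c))
    return dict(classes)
```

With a 12 × 12 matrix, `partition(12, 12, 3)` yields nine classes and `partition(12, 12, 4)` yields sixteen, each class containing cells on which the corresponding test performs identical operations under the stated assumption.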

Next, the fault models considered and how the cell groups for monitoring and fault injection were selected are briefly described.

- (1) Two-cell coupling

When the studied tests are applied, identical operations are performed on any two cells of the same class. Therefore, to evaluate the ability of the tests to detect faults that may affect pairs of memory cells in the binary matrix that simulates the tested memory, pairs of cells were chosen in the simulation experiment so that all combinations of classes for the two cells are represented. Specifically, for the March_76N memory test, 9 × 9 pairs of cells were checked, monitoring 9 × 9 × 8 = 648 different transitions, while in the case of the MT_NPSF_81N memory test, 16 × 16 pairs of cells were checked, monitoring 16 × 16 × 8 = 2048 different transitions. For verification, 10 different experiments were performed, each time obtaining the same result.

- (2) Three-cell coupling

For this coupling fault model, the following six configurations of three physically neighboring cells are usually considered, as shown in Figure 21. All six neighboring cell configurations are part of the more complex NPSF model (see Figure 1). However, in this model, any of the three cells in the group can be a victim cell, not just the central cell, as is the case in the five-cell NPSF model.

To select groups of cells for simulation, the address of the central cell is indicated, around which all six configurations are formed in turn. In a group of three cells, 3 × 8 = 24 transitions are possible. In the case of the memory test March_76N, a cell address is selected in the binary matrix for each of the nine classes, around which the six configurations are formed in turn. Thus, in the simulation experiment, 9 × 6 × 24 = 1296 distinct transitions are monitored.

In the case of the memory test MT_NPSF_81N, a cell address is selected for each of the sixteen classes, so that in a simulation experiment, 16 × 6 × 24 = 2304 distinct transitions are monitored. For verification, 10 different experiments were performed, each time obtaining the same result.

- (3)

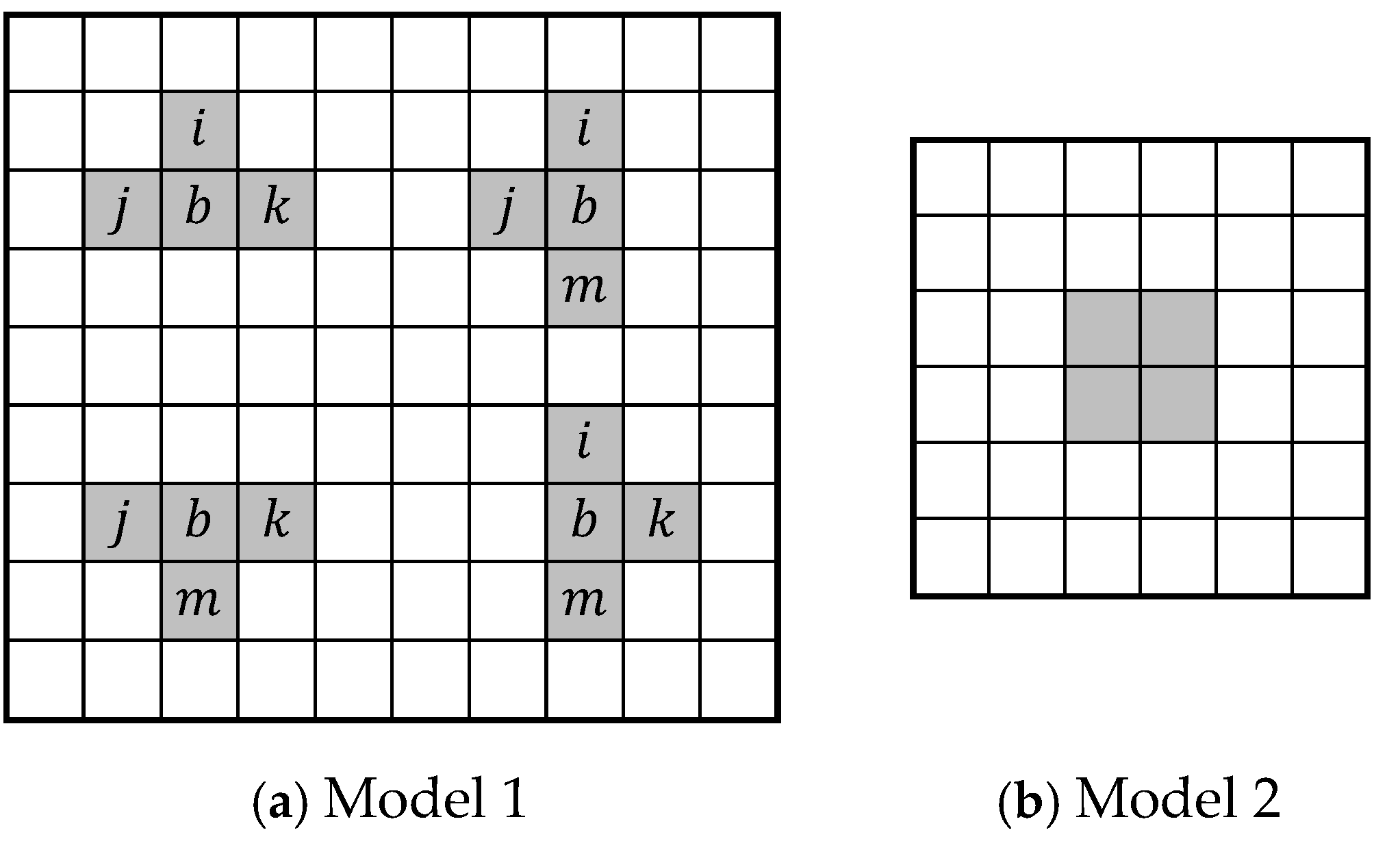

Four-cell coupling

Two distinct cases were studied for this complex fault model, as shown in Figure 22. The first model (Model 1) includes four configurations derived from the five-cell configuration of the NPSF model. The other model (Model 2) considers the configuration of four cells arranged in a square. In both cases, any cell in the group can play the role of victim, aggressor, or enabling cell.

In a group of four cells, 4 × 16 = 64 transitions are possible. In this case too, when forming the groups of cells to be monitored, only the address of the central cell is indicated in Model 1 and of the cell in the upper left corner in Model 2. The group of cells is formed around this base cell in the desired configuration.

As for the previous model, one base cell from each class is chosen in the binary matrix. Thus, for the first model, in the simulation experiment, 9 × 4 × 64 = 2304 transitions are monitored when evaluating the March_76N memory test, and 16 × 4 × 64 = 4096 transitions are monitored when evaluating the MT_NPSF_81N memory test. For the second model, the number of monitored transitions is four times smaller.
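The selection of cell groups and the resulting transition counts can be sketched in Python. The sketch assumes that the three-cell configurations and the Model 1 four-cell configurations consist of the base cell plus two or three of its four NPSF neighbors, that Model 2 is the 2 × 2 square with the base cell in the upper left corner, and that a group of k cells has k × 2^k possible transitions (each cell switching up or down for every state of the other cells), which agrees with the 8 transitions per pair used in the two-cell case. All function names are illustrative.

```python
from itertools import combinations

# Offsets (d_row, d_col) of the four neighbors in the five-cell neighborhood.
NEIGHBORS = {"N": (-1, 0), "S": (1, 0), "W": (0, -1), "E": (0, 1)}


def npsf_subconfigs(base, group_size):
    """Groups made of the base cell plus (group_size - 1) of its neighbors."""
    r, c = base
    groups = []
    for names in combinations(NEIGHBORS, group_size - 1):
        cells = [(r, c)]
        cells += [(r + NEIGHBORS[n][0], c + NEIGHBORS[n][1]) for n in names]
        groups.append(cells)
    return groups


def square_config(base):
    """Model 2: four cells in a square, base cell in the upper left corner."""
    r, c = base
    return [(r, c), (r, c + 1), (r + 1, c), (r + 1, c + 1)]


def transitions_per_group(k):
    """k cells, 2 transition directions, 2**(k - 1) states of the other cells."""
    return k * 2 ** k


def monitored_transitions(num_base_cells, num_configs, k):
    """Total transitions monitored for one base cell per class."""
    return num_base_cells * num_configs * transitions_per_group(k)


if __name__ == "__main__":
    for test_name, classes in (("March_76N", 9), ("MT_NPSF_81N", 16)):
        three = monitored_transitions(classes, len(npsf_subconfigs((5, 5), 3)), 3)
        four_m1 = monitored_transitions(classes, len(npsf_subconfigs((5, 5), 4)), 4)
        four_m2 = monitored_transitions(classes, 1, 4)
        print(test_name, three, four_m1, four_m2)
```

Under these assumptions, the base cell yields six three-cell configurations and four Model 1 four-cell configurations, so the Model 2 count is four times smaller than the Model 1 count, as stated in the text.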

For all these fault models, the simulation results regarding fault coverage by the two memory tests are presented in Table 12. The fault coverage is expressed as the ratio between the number of detected faults and the total number of simulated faults. For verification, the simulation program also calculates the ratio between the number of transitions performed in all considered cell groups and the total number of monitored transitions. Both calculation relationships led to the same result.

The simulation results confirm that the two dedicated memory tests fully cover the NPSF model. As a further check, both memory tests also fully cover the simpler three-cell and four-cell fault models derived from the five-cell configuration of the NPSF model.

However, the two memory tests only partially cover the four-cell coupling fault model with the cells arranged in a square (Model 2), as these tests were not designed for this purpose. Most surprising, however, is the fact that neither of the two tests completely covers the two-cell coupling fault model, the model most often used in practice. This is nevertheless a characteristic of all tests dedicated to the NPSF model. For example, the test proposed by Suk and Reddy [28] covers the two-cell coupling model by 76.04%, and the four-cell coupling, Model 2, by only 54.3%.

To detect all coupling faults between any two memory cells, these dedicated tests should be supplemented with other test sequences. For example, for both the March_76N and MT_NPSF_81N memory tests, a new initialization with one everywhere (one-solid data background) followed by a simple memory scan with a march element before the final check is sufficient to cover this class of faults as well.

A comparison of the two memory tests, March_76N and MT_NPSF_81N, shows that the new test proposed in this paper offers slightly better performance in terms of covering other fault models. Since the two memory tests apply the same testing technique, this difference in fault coverage is explained exclusively by the contribution of the 4 × 4 background patterns.