To fully evaluate the classification performance of the MOO-PSO-RF and MOO-PSO-XGBoost models, a set of quantitative assessment measures was adopted: accuracy, precision, recall, F1 score, and Cohen’s Kappa coefficient. Kappa measures the agreement between predicted and true labels beyond what would be expected by chance. The models were tested on two benchmark datasets using cross-validation to assess robustness, dependability, and generalization. This section presents and interprets the findings, focusing on how feature selection and classifier tuning affect detection performance.
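Accuracy and Cohen’s Kappa are related, and the following minimal Python sketch shows how. It computes both from a multiclass confusion matrix. The function name `accuracy_and_kappa` is hypothetical and is not part of the authors’ code. In practice, `sklearn.metrics.cohen_kappa_score` computes the same Kappa directly from label vectors.

```python
from typing import Dict, List

def accuracy_and_kappa(cm: List[List[int]]) -> Dict[str, float]:
    """cm[i][j] counts samples whose true class is i and predicted class is j."""
    k = len(cm)
    n = sum(sum(row) for row in cm)
    # Observed agreement p_o is simply the accuracy (mass on the diagonal).
    p_o = sum(cm[i][i] for i in range(k)) / n
    # Chance agreement p_e: product of true-class and predicted-class marginals.
    true_tot = [sum(cm[i]) for i in range(k)]
    pred_tot = [sum(cm[i][j] for i in range(k)) for j in range(k)]
    p_e = sum(true_tot[c] * pred_tot[c] for c in range(k)) / (n * n)
    # Kappa rescales accuracy so that 0 = chance level and 1 = perfect agreement
    # (undefined when p_e == 1, i.e. a single class on both sides).
    kappa = (p_o - p_e) / (1 - p_e)
    return {"accuracy": p_o, "kappa": kappa}

# Usage with a hypothetical 2-class matrix: 80% accuracy, balanced classes.
print(accuracy_and_kappa([[40, 10], [10, 40]]))
```

Because Kappa discounts chance agreement, it comes out lower than accuracy here (about 0.6 versus 0.8). This is why near-identical Kappa and accuracy values indicate agreement far above chance.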

4.1. Performance Comparison Across Models and Datasets

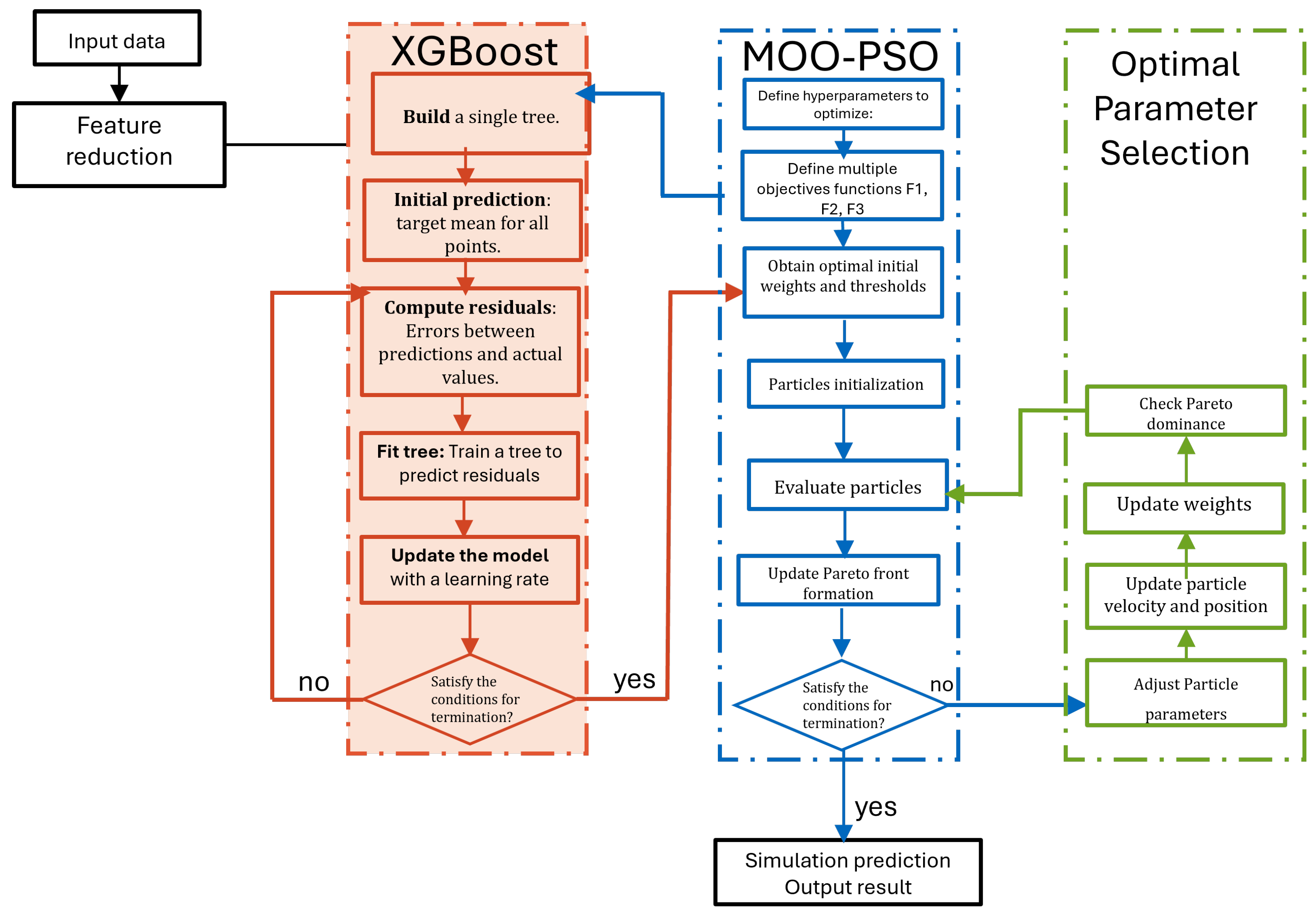

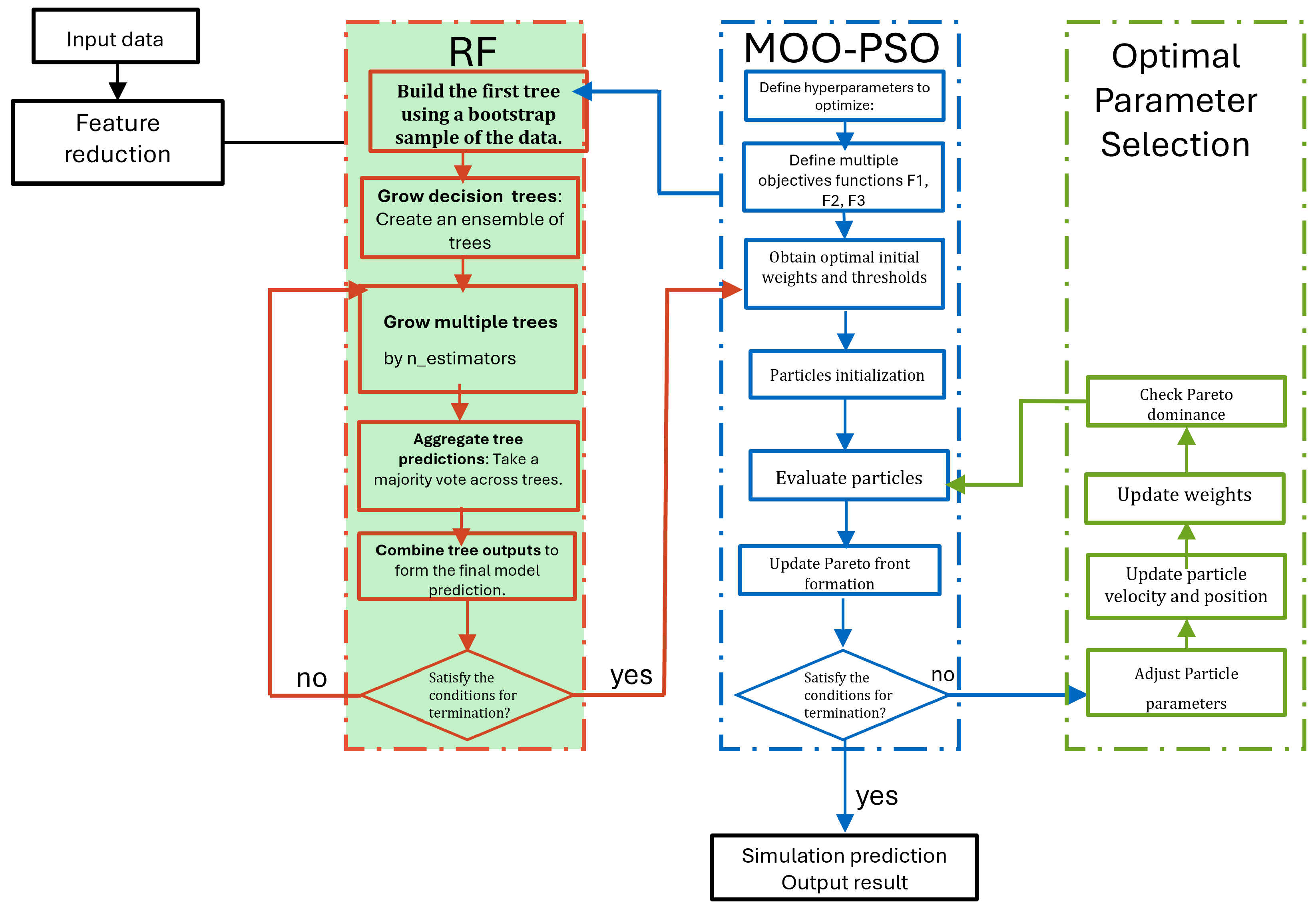

To assess the models’ adaptability to different network conditions and attack patterns, the study employs a dynamic scoring mechanism that adapts to the input feature distribution. A genetic algorithm (GA) generated feature subsets tailored to the distribution and characteristics of each dataset. MOO-PSO-XGBoost used 16 features for CICIoT2023 and 20 features for NSL-KDD, whereas MOO-PSO-RF was trained on 12 and 15 features for CICIoT2023 and NSL-KDD, respectively.
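The GA search can be outlined in a minimal, hypothetical sketch. Each chromosome is a binary mask over the features. Parents are chosen by tournament selection, and children are produced by one-point crossover and bit-flip mutation. The best mask is carried over unchanged each generation (elitism). The fitness function here is a placeholder assumption. In the setting described in this section it would be something like the cross-validated accuracy of the classifier trained on the selected features, possibly combined with a penalty on subset size.

```python
import random
from typing import Callable, List

Mask = List[int]  # 1 = feature kept, 0 = feature dropped

def ga_select(n_features: int, fitness: Callable[[Mask], float],
              pop_size: int = 20, generations: int = 30,
              p_mut: float = 0.05, seed: int = 0) -> Mask:
    """Hypothetical GA feature selector over binary masks (needs n_features >= 2, pop_size >= 3)."""
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_features)] for _ in range(pop_size)]
    for _ in range(generations):
        elite = max(pop, key=fitness)
        next_pop = [elite[:]]  # elitism: the best mask survives unchanged
        while len(next_pop) < pop_size:
            # Tournament selection: best of 3 random individuals, twice.
            p1 = max(rng.sample(pop, 3), key=fitness)
            p2 = max(rng.sample(pop, 3), key=fitness)
            cut = rng.randrange(1, n_features)  # one-point crossover
            child = p1[:cut] + p2[cut:]
            # Bit-flip mutation with probability p_mut per gene.
            child = [1 - g if rng.random() < p_mut else g for g in child]
            next_pop.append(child)
        pop = next_pop
    return max(pop, key=fitness)

# Toy fitness (assumption): features 0, 2 and 5 are informative; every kept feature costs 0.1.
def toy_fitness(mask: Mask) -> float:
    return sum(mask[i] for i in (0, 2, 5)) - 0.1 * sum(mask)

best = ga_select(10, toy_fitness)
print(best, toy_fitness(best))
```

Elitism guarantees that the best fitness never decreases across generations. However, the stochastic operators can still leave the search on a locally optimal mask, which is the limitation discussed in the next paragraph.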

As shown in Table 8, on the smaller dataset, the optimized XGBoost model achieved a Cohen’s Kappa of 99.85% and an accuracy of 99.91%. The optimized RF model achieved an accuracy of 97.94% on the same low-dimensional data. Incorporating the feature reduction mechanism (Table 9) improved RF performance on the NSL-KDD dataset by 3% in accuracy, 3.09% in precision, 3.08% in recall, and 3.89% in F1 score, together with a significant increase in Kappa. The GA-selected feature subsets also yielded substantially shorter training times, but at the cost of slightly lower accuracy for MOO-PSO-XGBoost. Its accuracy decreased from 99.28% to 98.38% on the high-dimensional data and from 99.91% to 98.46% on the low-dimensional data. This behavior follows from the nature of GA-based feature selection, which uses stochastic search to identify feature subsets that optimize a fitness function, typically accuracy or a composite score. Because the search is stochastic, a GA may settle on locally optimal feature sets that omit relevant features needed to capture complex feature interactions. Nevertheless, the reduced input dimensionality lowers tree-building costs, leading to faster training (as explored in Section 4.3) and reduced model complexity. In Internet of Things applications, real-time deployability and computational efficiency are frequently more critical than marginal accuracy gains.
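A simple cost argument shows why fewer features shorten tree construction. For illustration, assume split finding scans every candidate feature over the n training samples at each tree level, so its cost grows linearly with the number of candidate features d. Here d_full and d_sel denote the feature counts before and after GA selection; these symbols are introduced for this sketch and are not taken from the tables.

```latex
C_{\text{level}}(d) = \mathcal{O}(d\,n)
\quad\Longrightarrow\quad
\frac{C_{\text{level}}(d_{\text{sel}})}{C_{\text{level}}(d_{\text{full}})} \approx \frac{d_{\text{sel}}}{d_{\text{full}}}
```

Random Forest behaves differently if scikit-learn’s classifier default (`max_features="sqrt"`) is used. In that case only about sqrt(d) features are examined per split, so the per-split saving is closer to sqrt(d_sel / d_full) than to the linear ratio.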

To provide a thorough comparative analysis of the classifiers, we analyzed precision, recall, and F1 score using a heatmap representation. In Figure 5a–d, the columns correspond to the assessment metrics of both models, while the rows represent the classes (for NSL-KDD: DoS, Normal, Probe, R2L, and U2R). Both models achieved perfect scores (1.00) for most classes, particularly U2R and R2L, which shows that they generalize successfully under multi-objective optimization. On the Probe class, MOO-PSO-RF shows a notable improvement over MOO-PSO-XGBoost.
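Each heatmap row corresponds to a one-vs-rest reading of the confusion matrix. The hypothetical helper below is not the authors’ code. It derives per-class precision, recall, and F1 from a multiclass confusion matrix. A class with no predicted or no true samples receives 0.0, mirroring a common convention such as scikit-learn’s `zero_division=0`.

```python
from typing import Dict, List

def per_class_report(cm: List[List[int]], labels: List[str]) -> Dict[str, Dict[str, float]]:
    """One-vs-rest precision, recall and F1 for each class of a confusion matrix cm[true][pred]."""
    k = len(cm)
    report: Dict[str, Dict[str, float]] = {}
    for c in range(k):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(k)) - tp  # predicted as c but belonging elsewhere
        fn = sum(cm[c]) - tp                        # belonging to c but predicted elsewhere
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[labels[c]] = {"precision": precision, "recall": recall, "f1": f1}
    return report

# Hypothetical 3-class matrix (rows = true class, columns = predicted class).
cm = [[8, 2, 0],
      [1, 9, 0],
      [0, 0, 5]]
for label, row in per_class_report(cm, ["DoS", "Normal", "Probe"]).items():
    print(label, {m: round(v, 3) for m, v in row.items()})
```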

On the CICIoT2023 dataset, MOO-PSO-XGBoost consistently outperformed MOO-PSO-RF in precision, recall, and F1 score for every attack class, as shown in Figure 5c,d. The advantage is more noticeable for complex and minority classes, where MOO-PSO-XGBoost maintained higher F1 scores. This suggests that it handles label imbalance and interclass relationships effectively. On the smaller dataset (Figure 5a,b), MOO-PSO-RF performed marginally better than MOO-PSO-XGBoost across most metrics after feature reduction. This advantage can be attributed to ensemble bagging, which is more robust to small-sample variance in low-dimensional, low-data conditions. Nevertheless, MOO-PSO-XGBoost remained competitive both before and after feature selection, even with a smaller feature set, reflecting its built-in regularization and resistance to overfitting. The performance gap between the two classifiers narrowed considerably on the larger dataset.

Following GA-based reduction, the two classifiers performed about equally on the larger dataset (CICIoT2023). This convergence can be explained by the fact that both models are capable of learning discriminative patterns from the optimal feature subset when sufficient data is available. Additionally, the GA was successful at eliminating noisy and redundant features, improving model convergence, and shortening training times, particularly in situations involving high-dimensional data.

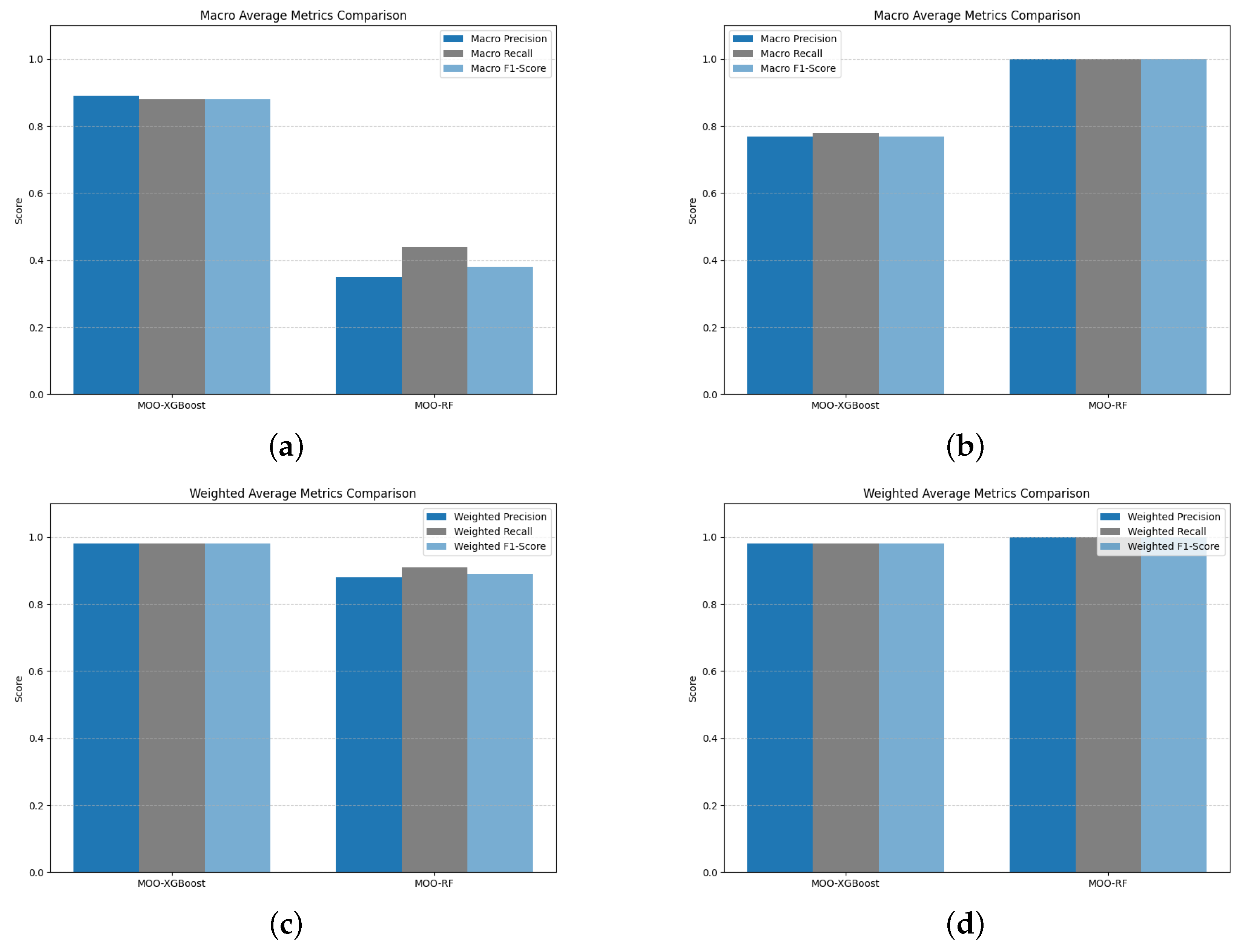

Figure 6a,b compare the macro-averaged precision, recall, and F1 score of the MOO-PSO-XGBoost and MOO-PSO-RF models. Because macro averages weight every class equally regardless of its frequency, they show how evenly performance is distributed across classes. Figure 6c,d compare the weighted precision, recall, and F1 scores of MOO-PSO-RF and MOO-PSO-XGBoost. Weighted averages account for the relative size of each class and therefore reflect overall performance on imbalanced datasets. The macro-average plot shows that, on the larger dataset, MOO-PSO-XGBoost performs noticeably better than MOO-PSO-RF in macro precision, recall, and F1 score, with all three values around 0.98. The macro performance of MOO-PSO-RF is much worse, especially in F1 score (0.38) and accuracy (0.35), indicating less balanced performance across classes. MOO-PSO-RF performs well on the majority classes but struggles with minority classes. This is evident from its weighted scores (0.88 precision, 0.91 recall, and 0.89 F1 score), which are substantially higher than its macro scores.
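The gap between macro and weighted scores follows directly from how the two averages are formed. The sketch below uses made-up per-class F1 scores and class supports, not values from Figure 6. It shows how a few poorly detected minority classes pull the macro average down while barely moving the weighted average.

```python
from typing import List, Tuple

def macro_and_weighted(scores: List[float], support: List[int]) -> Tuple[float, float]:
    """Average per-class scores two ways; support[c] is the number of true samples of class c."""
    macro = sum(scores) / len(scores)  # every class counts equally
    weighted = sum(s * n for s, n in zip(scores, support)) / sum(support)  # large classes dominate
    return macro, weighted

# Hypothetical per-class F1 scores: two well-detected majority classes,
# two poorly detected minority classes.
f1 = [0.99, 0.95, 0.10, 0.05]
support = [9000, 900, 50, 50]
macro, weighted = macro_and_weighted(f1, support)
print(f"macro={macro:.4f} weighted={weighted:.4f}")
```

Here the macro F1 (about 0.52) falls far below the weighted F1 (about 0.98). This is the same qualitative pattern that separates MOO-PSO-RF’s macro and weighted scores.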

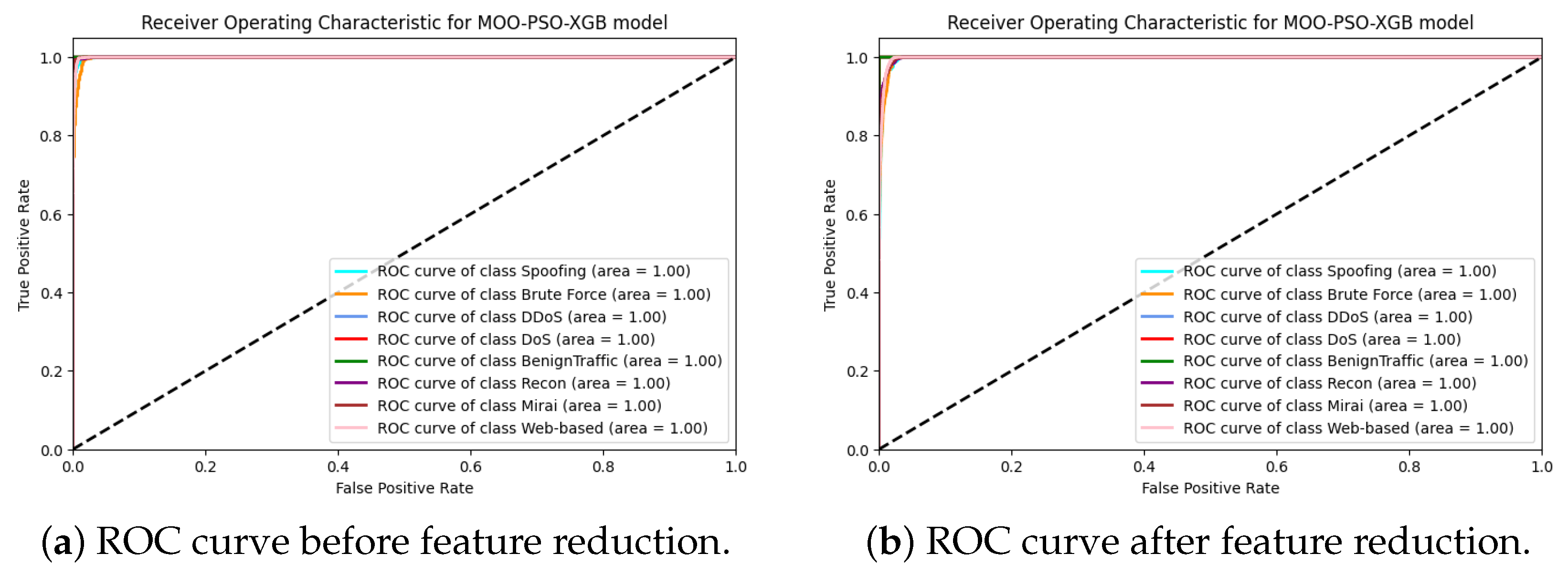

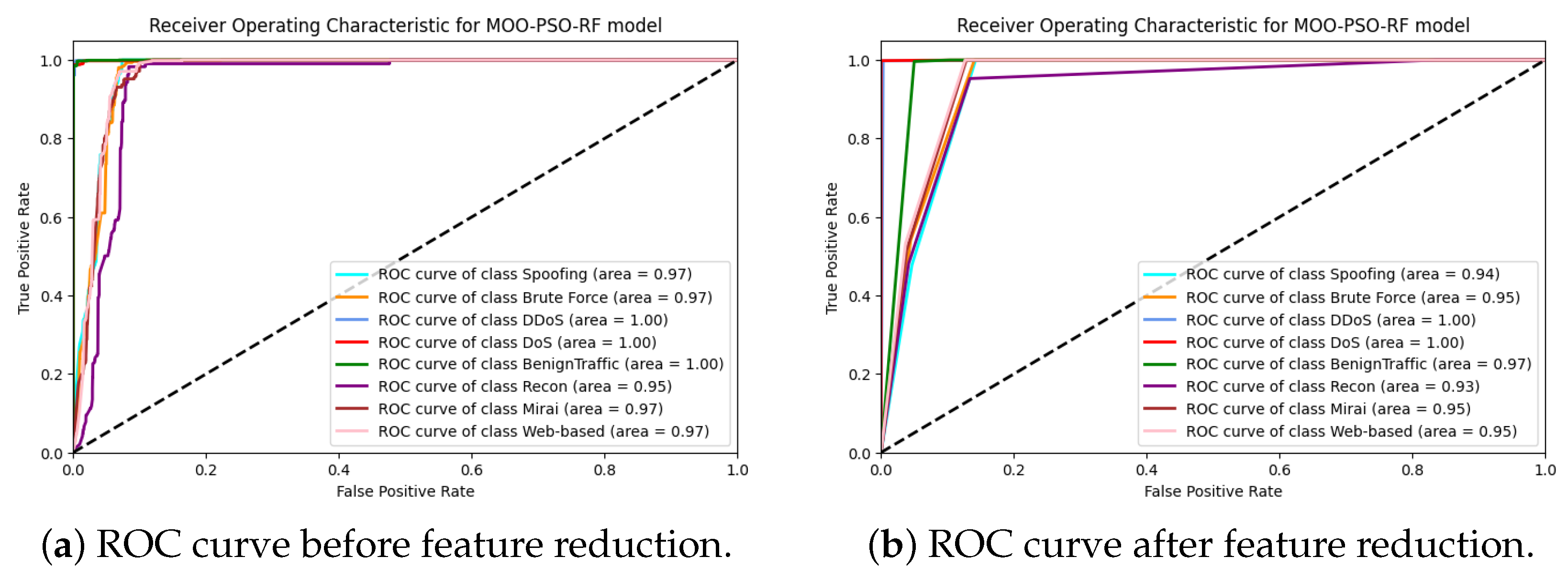

During feature reduction, several duplicate and irrelevant features were identified and removed. This reduced the differences between the ROC curves of MOO-PSO-XGBoost, as illustrated in Figure 7 and Figure 8, particularly for the smaller NSL-KDD dataset. After feature reduction, the ROC curves indicate improved classification performance on both the large and small datasets. On the large CICIoT2023 dataset, the curves rise sharply toward the top-left corner, indicating excellent class separation with high sensitivity and specificity.

Before feature reduction, MOO-PSO-RF produced slightly more cluttered ROC curves, with lower AUCs and greater overlap between class predictions, as illustrated in Figure 9 and Figure 10. The effect was particularly pronounced on the small dataset, where the larger number of features increased variance. Applying feature reduction also improved the AUC values. On the large dataset, the ROC curves became sharper and steeper, indicating improved discriminatory power and fewer classification errors.
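Per-class ROC curves like these are usually summarized one-vs-rest, treating each class in turn as positive and all others as negative. The hypothetical sketch below computes each one-vs-rest AUC through its rank interpretation: the probability that a randomly chosen positive is scored above a randomly chosen negative, with ties counting one half. This equals the area under the empirical ROC curve. In practice, `sklearn.metrics.roc_auc_score` with `multi_class="ovr"` serves a similar purpose.

```python
from typing import List

def binary_auc(scores: List[float], positives: List[bool]) -> float:
    """AUC as P(score of a random positive > score of a random negative); ties count 1/2."""
    pos = [s for s, p in zip(scores, positives) if p]
    neg = [s for s, p in zip(scores, positives) if not p]
    wins = 0.0
    for sp in pos:          # O(|pos| * |neg|) pairwise comparison, fine for a sketch
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))

def one_vs_rest_auc(probs: List[List[float]], y_true: List[int], n_classes: int) -> List[float]:
    """probs[i][c] is the predicted probability of class c for sample i."""
    return [binary_auc([row[c] for row in probs], [y == c for y in y_true])
            for c in range(n_classes)]

# Hypothetical two-class example in which every positive outranks every negative.
probs = [[0.8, 0.2], [0.3, 0.7], [0.6, 0.4], [0.1, 0.9]]
print(one_vs_rest_auc(probs, [0, 1, 0, 1], 2))
```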

To validate the statistical superiority of the proposed MOOIDS-IoT framework, comprising the MOO-PSO-XGBoost and MOO-PSO-RF models, over baseline models, we conducted paired statistical tests on the performance metrics obtained from the CICIoT2023 and NSL-KDD datasets. The baselines are standard XGBoost and Random Forest (RF) with default hyperparameters, as commonly employed in prior IoT intrusion detection studies. To ensure robustness and consistency, the analysis focuses on accuracy scores from 10-fold cross-validation runs on both datasets. We employed both paired t-tests and Wilcoxon signed-rank tests to assess the statistical significance of the performance differences between the MOOIDS-IoT models and their respective baselines. The Shapiro–Wilk test indicated that the performance data were not normally distributed (p < 0.05). Consequently, the non-parametric Wilcoxon signed-rank test was included to complement the paired t-test, so that the conclusions do not depend on the data distribution. The results are summarized in Table 10 below. For MOO-PSO-XGBoost compared with baseline XGBoost, the paired t-test yielded a t-statistic of 13.7472 and a p-value of 0.0000 (i.e., p < 0.0001), indicating a statistically significant improvement at the 0.05 significance level. The Wilcoxon signed-rank test yielded a statistic of 0.0000 and a p-value of 0.0020, confirming the superiority of MOO-PSO-XGBoost even under non-parametric assumptions.

For MOO-PSO-RF compared with the baseline Random Forest model, the paired t-test yielded a t-statistic of 14.5000 and a p-value of 0.0000 (i.e., p < 0.0001), demonstrating a statistically significant improvement at the 0.05 significance level. The Wilcoxon signed-rank test (statistic 0.0000, p = 0.0015) further validates the superior performance of MOO-PSO-RF. This is particularly true after feature reduction, where MOO-PSO-RF outperformed MOO-PSO-XGBoost on the NSL-KDD dataset owing to its stability in low-dimensional settings.
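Both tests can be reproduced from the per-fold accuracy pairs. The sketch below is a hypothetical, standard-library-only version. It computes the paired t statistic and an exact two-sided Wilcoxon signed-rank p-value by enumerating all 2^n sign patterns, assuming no zero or tied differences. The fold scores in the usage example are made-up placeholders, not the values behind Table 10. The usual library routines are `scipy.stats.ttest_rel`, `scipy.stats.wilcoxon`, and `scipy.stats.shapiro`.

```python
import math
from itertools import product
from typing import List, Tuple

def paired_t_statistic(a: List[float], b: List[float]) -> float:
    """t = mean(d) / (sd(d) / sqrt(n)) for paired differences d = a - b."""
    d = [x - y for x, y in zip(a, b)]
    n = len(d)
    mean = sum(d) / n
    var = sum((x - mean) ** 2 for x in d) / (n - 1)  # unbiased sample variance
    return mean / math.sqrt(var / n)

def wilcoxon_exact(a: List[float], b: List[float]) -> Tuple[int, float]:
    """Two-sided exact Wilcoxon signed-rank test; assumes no zero or tied |differences|."""
    d = [x - y for x, y in zip(a, b)]
    n = len(d)
    ranks = [0] * n
    for r, i in enumerate(sorted(range(n), key=lambda j: abs(d[j])), start=1):
        ranks[i] = r  # rank 1 = smallest absolute difference
    total = n * (n + 1) // 2
    w_plus = sum(r for r, x in zip(ranks, d) if x > 0)
    w = min(w_plus, total - w_plus)  # statistic = smaller signed-rank sum
    # Under H0 each rank carries either sign with probability 1/2: enumerate all 2**n patterns.
    extreme = 0
    for signs in product((0, 1), repeat=n):
        wp = sum(r for r, s in zip(range(1, n + 1), signs) if s)
        if min(wp, total - wp) <= w:
            extreme += 1
    return w, extreme / 2 ** n

# Made-up 10-fold accuracies (placeholders): the proposed model wins on every fold.
baseline = [0.950, 0.952, 0.948, 0.951, 0.949, 0.953, 0.947, 0.950, 0.952, 0.949]
gains = [0.010, 0.012, 0.011, 0.013, 0.009, 0.014, 0.008, 0.015, 0.016, 0.007]
proposed = [b + g for b, g in zip(baseline, gains)]
print(paired_t_statistic(proposed, baseline))
print(wilcoxon_exact(proposed, baseline))
```

With n = 10 folds and every fold favoring the first model, the Wilcoxon statistic is 0. The exact two-sided p-value is then 2/2^10 ≈ 0.00195, which rounds to the 0.0020 reported for MOO-PSO-XGBoost.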

The statistical tests confirm that both MOO-PSO-XGBoost and MOO-PSO-RF significantly outperform their respective baselines (XGBoost and Random Forest) across both datasets, with p-values well below the 0.05 threshold. Notably, MOO-PSO-RF performs best on the NSL-KDD dataset after feature reduction. There, MOO-PSO optimization and GA-based feature selection together yield higher accuracy and stability in low-dimensional settings. These results underscore the robustness of the MOOIDS-IoT framework for lightweight, real-time IoT intrusion detection and its suitability for resource-constrained environments.

4.2. Optimization Strategy and Tuned Hyperparameters

In this section, the performance of MOO-PSO is evaluated in terms of the convergence metric and the loss achieved over the optimization iterations.

On the large dataset, the optimization of MOO-PSO-XGBoost progressed steadily, as illustrated in Figure 11. The convergence metric started at 85% with a loss of 0.0245 and reached a maximum of 98% with a loss of 0.023, indicating steady progress toward the optimal Pareto front. Despite the large volume of data, model complexity was kept under control by the regularized learning objective of MOO-PSO-XGBoost, which penalizes unnecessary tree growth.

As shown in Figure 12, despite the smaller feature space, the model retained rich representational capacity and trained more effectively, reaching a loss below 0.003 after four iterations. The convergence plot, on the other hand, shows a more dynamic trend. The convergence metric rose from 45% at iteration 1 to 98% at iteration 3 and then declined gradually. This decline suggests that the optimizer introduced or explored new candidate solutions that temporarily reduced convergence, even though they were advantageous with respect to other objectives. Such behavior is typical, and often deliberate, in multi-objective particle swarm optimization, where balancing convergence against diversity is essential.
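The Pareto front is the set of non-dominated candidates. As a hypothetical illustration, the helper below filters candidates scored on three minimized objectives: loss, error rate (1 - accuracy), and a complexity measure such as the number of trees. The objective values are made up and are not taken from Figures 11 or 12.

```python
from typing import List, Sequence

def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """a dominates b if it is no worse in every (minimized) objective and strictly better in one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def pareto_front(points: List[Sequence[float]]) -> List[Sequence[float]]:
    # A point stays on the front unless some other point dominates it.
    return [p for p in points if not any(dominates(q, p) for q in points)]

# Hypothetical candidates: (loss, 1 - accuracy, number of trees)
candidates = [
    (0.02, 0.02, 100),  # balanced
    (0.03, 0.03, 50),   # cheaper but less accurate
    (0.03, 0.03, 120),  # dominated by the first candidate
    (0.01, 0.05, 200),  # lowest loss, highest cost
]
print(pareto_front(candidates))  # keeps the 1st, 2nd and 4th candidates
```

A diversity mechanism protects the spread of this set. A convergence metric typically tracks how closely the set approaches the true front, which is why the two goals can pull in different directions during the search.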

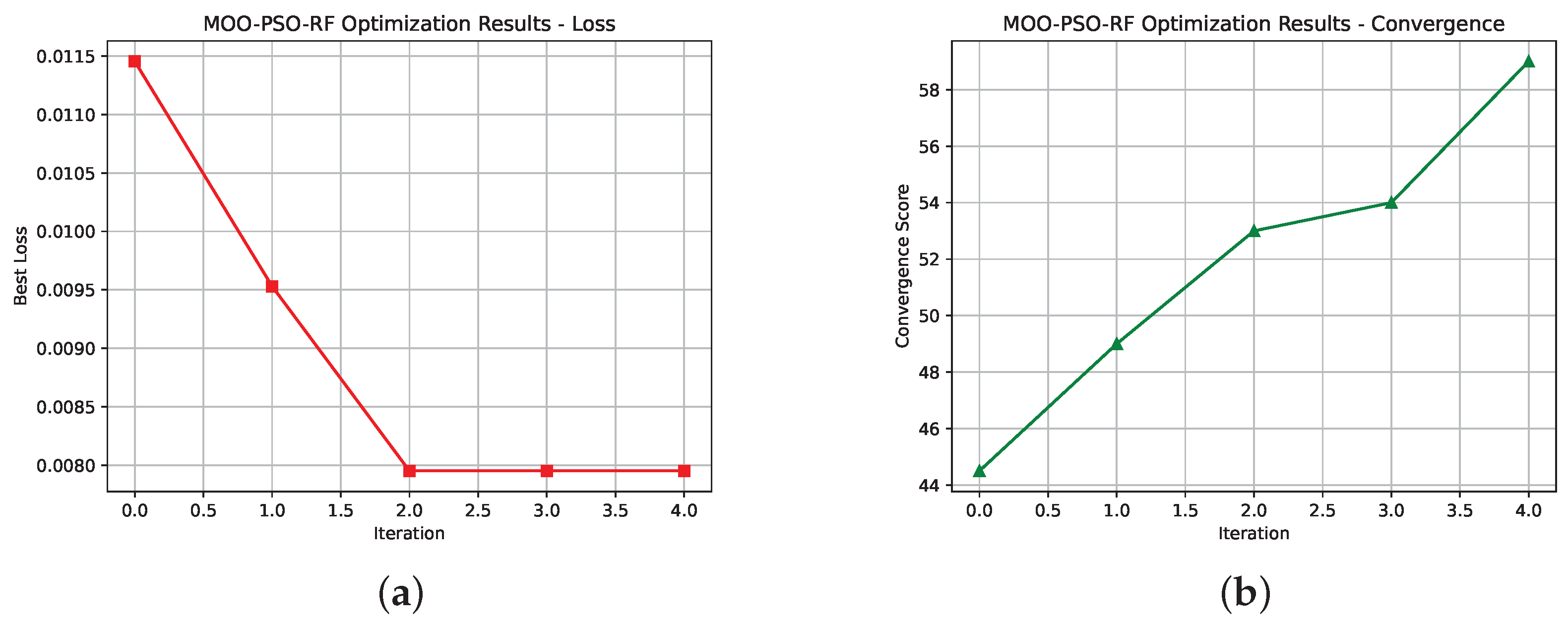

The MOO-PSO-RF models (Figure 13 and Figure 14) exhibit distinct optimization characteristics on the small and large datasets. By consistently improving the convergence metric, the optimizer refined the forest structure toward well-balanced solutions, reaching a stable loss of 0.018 and a convergence of over 72% across four iterations. Slight variations in the final iterations reflect deliberate exploration to maintain diversity among candidate solutions. The benefits of feature reduction were considerably greater on the small dataset than on the large one. With a smaller feature space, the risk of overfitting was lower, allowing the optimizer to produce simpler forests while maintaining excellent accuracy.

Overall, these findings support incorporating GA-based feature reduction into multiclass classification pipelines. The approach delivers competitive and scalable performance across classifiers and dataset sizes, as well as improved computational efficiency.

Particle swarm optimization (PSO) techniques exhibit fundamental differences when applied to single-objective versus multi-objective situations.

Figure 15 illustrates the difference between the search dynamics of single-objective PSO (SOPSO) and multi-objective PSO (MOO-PSO). As illustrated in Figure 15a, all particles in SOPSO collectively converge toward a single optimum of one objective (such as loss). The result is a tightly clustered set of positions in the decision space and a single point in the objective space. As the swarm converges, its behavior is driven primarily by efficient exploitation of the best solution, with little attention paid to preserving diversity. In the early stages, particles are dispersed and explore the search space. They then begin to cluster under the influence of the global best. In the final stage, when the particles have nearly converged, the optimal solution is exploited.
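These SOPSO stages follow from the standard global-best velocity update. The sketch below minimizes a single objective. The inertia weight w, the acceleration coefficients c1 and c2, the bounds, and the sphere test function are illustrative assumptions, not the settings used in this study.

```python
import random
from typing import Callable, List, Tuple

def pso_minimize(f: Callable[[List[float]], float], dim: int,
                 n_particles: int = 20, iters: int = 100,
                 w: float = 0.7, c1: float = 1.5, c2: float = 1.5,
                 bounds: Tuple[float, float] = (-5.0, 5.0),
                 seed: int = 0) -> Tuple[List[float], float]:
    """Global-best PSO for a single objective (illustrative settings, not the study's)."""
    rng = random.Random(seed)
    lo, hi = bounds
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vs = [[0.0] * dim for _ in range(n_particles)]
    pbest = [x[:] for x in xs]
    pbest_val = [f(x) for x in xs]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vs[i][d] = (w * vs[i][d]                          # inertia
                            + c1 * r1 * (pbest[i][d] - xs[i][d])  # pull toward own best
                            + c2 * r2 * (gbest[d] - xs[i][d]))    # pull toward the one global best
                xs[i][d] = min(max(xs[i][d] + vs[i][d], lo), hi)  # keep inside bounds
            val = f(xs[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = xs[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = xs[i][:], val
    return gbest, gbest_val

best, value = pso_minimize(lambda x: sum(v * v for v in x), dim=2)  # sphere function
print(best, value)
```

Every particle is pulled toward the same gbest, so the swarm collapses onto a single point. This is the clustering shown in Figure 15a.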

Figure 15b illustrates the convergence progress of the multi-objective PSO model. It produces a Pareto front in objective space, with a color gradient showing the trade-offs between loss (f1), accuracy (f2), and complexity (f3). Because MOO-PSO maintains multiple options, it supports decision-making for various design goals and preserves diversity to accommodate a range of trade-offs. Unlike SOPSO, in which all particles converge on a single optimum, MOO-PSO offers a variety of solutions, each balancing the objectives differently.
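A multi-objective variant replaces the single global best with an external archive of non-dominated solutions, and each particle draws its leader from this archive. The minimal sketch below follows that idea with three stated simplifications. The two-objective Schaffer test problem stands in for the loss/accuracy/complexity objectives, leaders are drawn uniformly from the archive, and the archive is truncated by discarding its most crowded member. It is a hypothetical illustration, not the MOO-PSO implementation used in this work.

```python
import random
from typing import List, Tuple

Objs = Tuple[float, float]

def dominates(a: Objs, b: Objs) -> bool:
    """True if a is no worse than b in every objective and better in one (minimization)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def objectives(x: float) -> Objs:
    # Schaffer N.1 toy problem: the Pareto-optimal set is x in [0, 2].
    return (x * x, (x - 2.0) ** 2)

def most_crowded(archive: List[Tuple[float, Objs]]) -> int:
    """Index of the member whose nearest neighbour in objective space is closest."""
    best_i, best_d = 0, float("inf")
    for i, (_, fi) in enumerate(archive):
        d = min(sum((p - q) ** 2 for p, q in zip(fi, fj))
                for j, (_, fj) in enumerate(archive) if j != i)
        if d < best_d:
            best_i, best_d = i, d
    return best_i

def add_to_archive(archive: List[Tuple[float, Objs]], x: float, fx: Objs, max_size: int):
    # Reject x if an archived point dominates or duplicates it.
    if any(dominates(fa, fx) or fa == fx for _, fa in archive):
        return archive
    # Drop archived points that x dominates, then insert x.
    archive = [(xa, fa) for xa, fa in archive if not dominates(fx, fa)]
    archive.append((x, fx))
    if len(archive) > max_size:
        archive.pop(most_crowded(archive))  # preserve spread along the front
    return archive

def mopso(n_particles: int = 30, iters: int = 60, max_size: int = 20,
          w: float = 0.5, c1: float = 1.5, c2: float = 1.5, seed: int = 1):
    rng = random.Random(seed)
    xs = [rng.uniform(-5.0, 5.0) for _ in range(n_particles)]
    vs = [0.0] * n_particles
    pbest = xs[:]
    archive: List[Tuple[float, Objs]] = []
    for x in xs:
        archive = add_to_archive(archive, x, objectives(x), max_size)
    for _ in range(iters):
        for i in range(n_particles):
            leader, _ = rng.choice(archive)  # leader sampled from the front, not one gbest
            vs[i] = (w * vs[i] + c1 * rng.random() * (pbest[i] - xs[i])
                     + c2 * rng.random() * (leader - xs[i]))
            xs[i] = min(max(xs[i] + vs[i], -5.0), 5.0)
            fx, fp = objectives(xs[i]), objectives(pbest[i])
            # Personal best: take x if it dominates; if neither dominates, pick at random.
            if dominates(fx, fp) or (not dominates(fp, fx) and rng.random() < 0.5):
                pbest[i] = xs[i]
            archive = add_to_archive(archive, xs[i], fx, max_size)
    return archive

front = mopso()
print(sorted(round(x, 3) for x, _ in front))
```

The returned archive is a set of mutually non-dominated trade-offs rather than one point. This corresponds to the spread-out front in Figure 15b.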

4.3. Model Scalability Analysis

This section examines the computational complexity and performance characteristics of the MOO-PSO-RF and MOO-PSO-XGBoost models after applying the proposed multi-objective optimization and feature reduction approach. It demonstrates the advantages of the tuned models by focusing on training time, model simplicity, and the effects of smaller hyperparameter values.

To assess the effectiveness of the proposed feature reduction technique, Table 11 reports the hyperparameter values of each classifier (MOO-PSO-RF and MOO-PSO-XGBoost) before and after reduction. A key finding is that essential hyperparameters consistently decrease after feature selection. Both MOO-PSO-RF and MOO-PSO-XGBoost show a downward trend in the tree-size, ensemble-size, and regularization parameters listed in Table 11. This reduction suggests a higher signal-to-noise ratio in the selected features, so that shallower trees, fewer estimators, and less regularization were sufficient to achieve similar or better performance. For example, MOO-PSO-XGBoost has lower pruning and regularization values, indicating that the reduced input space, with its higher discriminative power, requires less extensive pruning and regularization. Likewise, the lower values of MOO-PSO-RF’s tree-structure parameters indicate better data separation, so that fewer splits and trees are needed to model the class boundaries accurately.
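The split-gain criterion from the XGBoost documentation illustrates how pruning and regularization act on tree structure. In this criterion, λ (the `lambda`/`reg_lambda` parameter) shrinks leaf scores, and γ (the `gamma`/`min_split_loss` parameter) is the minimum loss reduction a split must achieve. This text does not name the XGBoost parameters reported in Table 11, so treating them as λ and γ is an assumption. The function below is a hypothetical illustration, not the library’s internal code.

```python
def split_gain(g_left: float, h_left: float, g_right: float, h_right: float,
               lam: float, gamma: float) -> float:
    """XGBoost-style gain of splitting a node into left/right children.

    g_* and h_* are the sums of first- and second-order gradients of the loss
    over the samples routed to each child.
    """
    def score(g: float, h: float) -> float:
        return g * g / (h + lam)  # a larger lam shrinks every structure score

    parent = score(g_left + g_right, h_left + h_right)
    return 0.5 * (score(g_left, h_left) + score(g_right, h_right) - parent) - gamma

# A split is kept only if its gain is positive, so a larger gamma prunes more splits.
print(split_gain(-4.0, 4.0, 4.0, 4.0, lam=1.0, gamma=0.0))  # positive: split kept
print(split_gain(-4.0, 4.0, 4.0, 4.0, lam=1.0, gamma=5.0))  # negative: split pruned
```

When the selected features separate the classes cleanly, most useful splits already have large gains. Smaller values of these penalties can then suffice without admitting many noisy splits.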

Furthermore, both models showed reduced training and optimization times, as shown in Table 12, resulting in a much smaller computational footprint. This is particularly advantageous where resources are constrained, as in edge computing and the Internet of Things. On the CICIoT2023 dataset, MOO-PSO-XGBoost takes 28.2 s to train after feature reduction and 1 h 47 min to optimize. MOO-PSO-RF requires 55 s of training and 2 h 33 min of optimization on the same dataset. For intrusion detection systems, longer training times and larger models increase computational overhead and can slow system response, especially in real-time monitoring environments. The overall training pipeline, including optimization, is complex and time-consuming. Even so, the short training time of MOO-PSO-XGBoost suggests that it can respond rapidly to real-world situations and suits applications requiring prompt decision-making.

Overall, the tuned parameter configurations obtained after feature reduction confirm the method’s robustness and generalizability and demonstrate its applicability in high-dimensional and security-sensitive environments.

On the NSL-KDD dataset, MOO-PSO-RF required a comparatively long optimization time of 3 h 59 min 37 s and a training time of 25 s. Therefore, although the model trains efficiently, it may require longer analysis times for real-time detection, which could limit its use in high-speed network environments. The MOO-PSO-RF and MOO-PSO-XGBoost models are of relatively moderate size (116 estimators and a maximum depth of 4.8), suggesting that they may be appropriate in resource-limited contexts. Using genetic algorithms (GAs) for feature selection and multi-objective optimization for tuning Random Forest (RF) hyperparameters introduces additional processing overhead. This overhead appears mainly in two places. Training times are longer because RF models are trained repeatedly under different configurations, and optimization times are longer because the search is repeated over successive generations of particles.
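A simple cost model makes this overhead explicit. It assumes, hypothetically, that each GA individual and each PSO particle is evaluated by k-fold cross-validation. The symbols (generation counts G, population size N_pop, swarm size P, number of folds k, and mean fit time) are illustrative and are not reported values.

```latex
T_{\text{total}} \approx
\underbrace{G_{\text{GA}}\, N_{\text{pop}}\, k\, \bar{T}_{\text{fit}}}_{\text{feature selection}}
+
\underbrace{G_{\text{PSO}}\, P\, k\, \bar{T}_{\text{fit}}}_{\text{hyperparameter search}}
```

Both terms scale with the mean fit time, so a smaller feature set shortens optimization as well as the final training run. The multiplicative factors G · N_pop and G_PSO · P explain why optimization can take hours even when a single fit takes only seconds.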

4.4. Comparison

To evaluate the performance of our proposed method, we compare it with state-of-the-art (SOTA) studies that evaluated different machine learning methods on the CICIoT2023 dataset, as shown in Table 13, and on the NSL-KDD dataset, as shown in Table 14. The CICIoT2023 dataset covers a diverse range of IoT devices and a comprehensive network topology, making it highly relevant to current IoT security research. Although the dataset is extensive and rich, only a limited number of studies have used it, particularly in the context of swarm intelligence. Some studies have examined full-feature models without dimensionality reduction, while others have employed feature reduction strategies to improve model efficiency and performance [6,10,33]. By comparing our strategy with these approaches, we aim to demonstrate its accuracy and computational efficiency.

Compared with these neural models, the proposed MOO-PSO-XGBoost, an optimized conventional model, is significantly more accurate and simpler. It is usually more effective on tabular data and can be trained to high accuracy in less time.

We further evaluated the efficacy of the proposed framework on the low-dimensional NSL-KDD benchmark and compared our model with three state-of-the-art approaches. MOO-PSO-XGBoost ranks second only to the considerably more complex attention-based GNN in key metrics, including F1 score, accuracy, and precision. Compared with CNN, VLSTM, and MIX-CNN-LSTM, this model achieves the lowest false-alarm rate, at the cost of a slight compromise in recognizing all positive cases. On the NSL-KDD dataset, these results suggest that MOO-PSO-RF is highly effective at reducing false positives and provides balanced, trustworthy classification results within 25 s.
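For reference, the false-alarm rate (FAR) in such comparisons is conventionally the false-positive rate, shown below next to recall (the true-positive rate). This text does not confirm the exact definition used in the cited studies, so the standard form is an assumption. Reducing false positives typically trades off against recall, which is the compromise noted in the comparison.

```latex
\mathrm{FAR} = \frac{FP}{FP + TN}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}
```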