Robust JND-Guided Video Watermarking via Adaptive Block Selection and Temporal Redundancy

Abstract

1. Introduction

Main Contributions

- Perception-driven block selection: Unlike methods that select embedding regions uniformly or at random, we combine multiscale Laplacian-based texture analysis, spectral saliency contrast, and semantic masking (face/object detection) into a composite perceptual map. This map drives an adaptive, content-aware selection of robust insertion blocks.

- Spatial-domain DCT estimation: Instead of computing full DCTs, we estimate selected coefficients directly in the spatial domain using analytical approximations. This improves computational efficiency while retaining the robustness associated with frequency-domain embedding.

- Adaptive QIM modulation using JND maps: The quantization step is locally modulated using a JND model that incorporates luminance, spatial masking, and contrast features. This improves imperceptibility while preserving watermark recoverability.

- Redundancy via multiframe embedding: We implement a lightweight redundancy mechanism that spreads the watermark across multiple scene-representative frames, selected through histogram-based keyframe detection. Majority voting is used for recovery, improving resilience to temporal desynchronization, noise, and compression.

- End-to-end blind architecture with low complexity: The entire method operates without access to the original video at extraction time and is designed for low runtime overhead, enabling practical deployment in resource-constrained environments.
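The histogram-based keyframe detection mentioned above can be illustrated with a minimal sketch. It is not the authors' implementation: the 64-bin luminance histogram, the L1 distance between consecutive normalized histograms, the cut threshold of 0.3, and the rule of taking the first frame of each scene as its keyframe are all hypothetical choices made for illustration.

```python
import numpy as np


def gray_histogram(frame: np.ndarray, bins: int = 64) -> np.ndarray:
    """Normalized luminance histogram of an 8-bit grayscale frame."""
    hist, _ = np.histogram(frame, bins=bins, range=(0, 256))
    return hist / max(hist.sum(), 1)


def detect_keyframes(frames, threshold: float = 0.3, bins: int = 64) -> list:
    """Return indices of frames that start a new scene (hypothetical rule).

    A cut is declared when the L1 distance between consecutive normalized
    histograms (range 0..2) exceeds `threshold`; the first frame of each
    scene is kept as that scene's representative keyframe.
    """
    keyframes = [0]
    prev = gray_histogram(frames[0], bins)
    for i in range(1, len(frames)):
        cur = gray_histogram(frames[i], bins)
        if np.abs(cur - prev).sum() > threshold:
            keyframes.append(i)
        prev = cur
    return keyframes
```

For example, ten frames made of two flat "scenes" (luminance 50, then 200) yield keyframes at indices 0 and 5. Real footage would need a tuned threshold, and a stronger detector may be preferable under gradual transitions such as fades.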

2. Related Works

2.1. Overview of the Original Method

- Spatial-domain DCT-based embedding: Rather than computing the full DCT/IDCT on every frame, we developed a method for estimating and modifying selected DCT coefficients directly through spatial operations, substantially reducing the computational burden.

- Visual attention-modulated JND profile: Watermark energy was determined by combining JND thresholds with saliency analysis in a perceptual model that was computed on keyframes. This allowed stronger embedding in visually insensitive regions while preserving perceptual transparency [16].

- Scene-based watermark modulation: The video was segmented into scenes, each represented by a keyframe [26]. Watermark parameters were calculated only on these keyframes and propagated through the rest of the scene using motion tracking and saliency consistency, reducing redundant computation.
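The spatial-domain DCT idea rests on a standard property of the orthonormal 2-D DCT-II: each coefficient is the inner product of the block with a fixed basis image, and adding a multiple of that basis image changes only that coefficient. The sketch below illustrates this property under those assumptions; it does not reproduce the paper's Equation (4), and the function names are hypothetical.

```python
import numpy as np


def dct_basis(u: int, v: int, n: int = 8) -> np.ndarray:
    """Orthonormal 2-D DCT-II basis image B_uv for an n x n block."""
    def alpha(k: int) -> float:
        return np.sqrt((1.0 if k == 0 else 2.0) / n)

    idx = np.arange(n)
    cu = alpha(u) * np.cos((2 * idx + 1) * u * np.pi / (2 * n))
    cv = alpha(v) * np.cos((2 * idx + 1) * v * np.pi / (2 * n))
    return np.outer(cu, cv)  # rows follow u, columns follow v


def estimate_coefficient(block: np.ndarray, u: int, v: int) -> float:
    """C(u, v) as a spatial inner product; no full DCT is computed."""
    return float(np.sum(block * dct_basis(u, v, block.shape[0])))


def set_coefficient(block: np.ndarray, u: int, v: int, target: float) -> np.ndarray:
    """Return a copy of `block` whose (u, v) coefficient equals `target`.

    Because the basis is orthonormal, adding delta * B_uv shifts C(u, v)
    by exactly delta and leaves every other coefficient unchanged.
    """
    delta = target - estimate_coefficient(block, u, v)
    return block.astype(np.float64) + delta * dct_basis(u, v, block.shape[0])
```

Once the basis image is precomputed, reading or writing one coefficient costs on the order of 64 multiply-adds per 8 × 8 block instead of a full forward and inverse transform. Rounding and clipping the modified block back to 8-bit pixels perturbs the coefficient slightly, so the quantization step used for embedding needs some margin.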

2.2. Redundant Encoding Strategies in Watermarking

2.3. Block Selection for Robust Embedding in Video Watermarking

3. Proposed Method

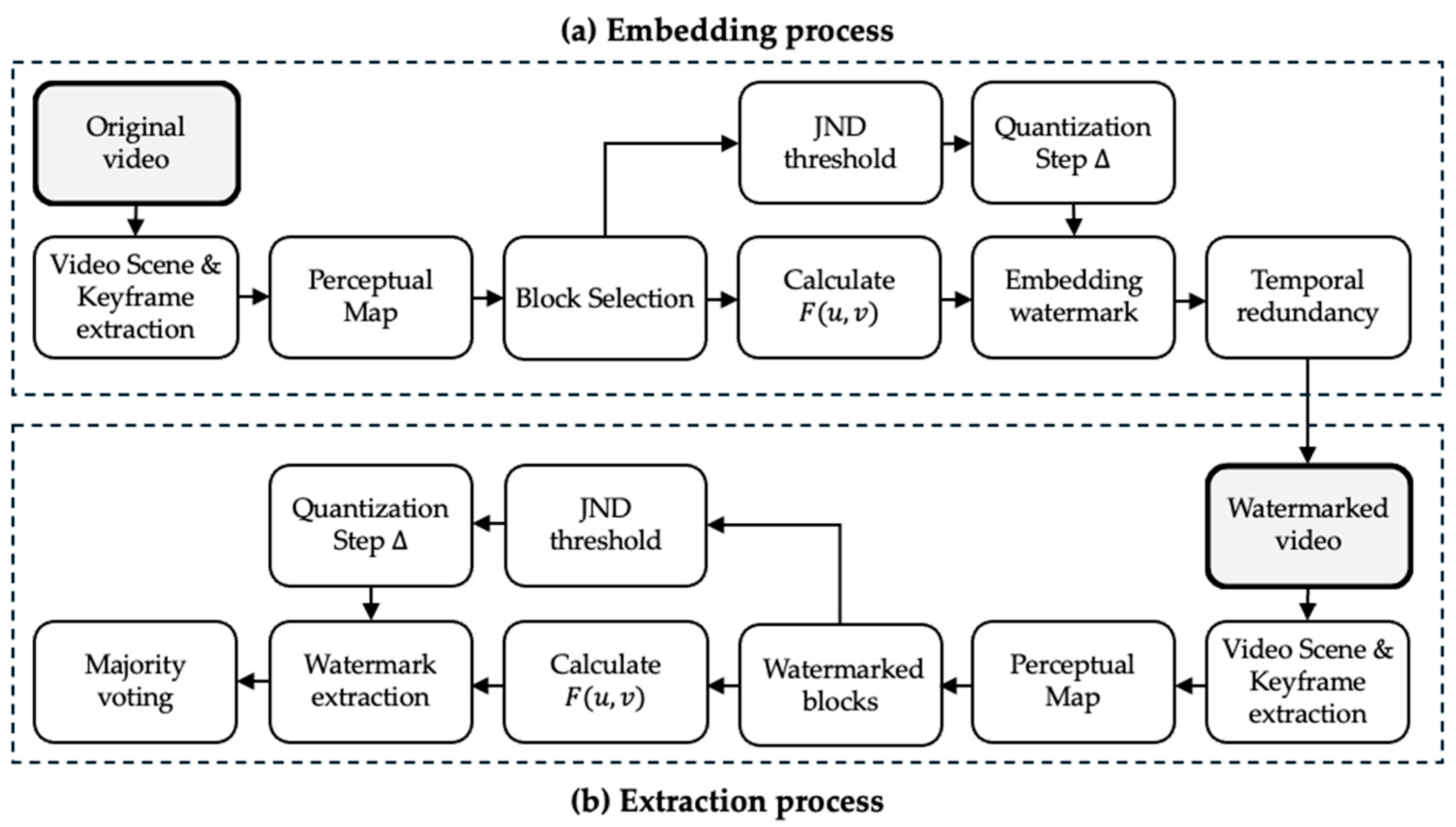

3.1. General Architecture

3.1.1. Step 1: Perceptual Map Computation

- A multiscale detail map is computed using Laplacian of Gaussian (LoG) filters applied at $M$ different scales $\sigma_1, \ldots, \sigma_M$ [36]. This map captures texture richness and edge strength across multiple spatial scales. The LoG filter enhances local variations, such as corners and edges, where distortions are less perceptible because of visual masking. The map is defined as $D(x,y) = \frac{1}{M}\sum_{i=1}^{M}\left|\left(\nabla^{2}G_{\sigma_i} * I\right)(x,y)\right|$, the average LoG response magnitude across the $M$ Gaussian scales, where $I$ is the keyframe luminance, $*$ denotes convolution, and the scales $\sigma_i$ are typically chosen between 1 and 3. High values of $D$ indicate structurally complex areas where watermark energy can be safely embedded.

- The saliency map is computed using the spectral residual approach [37], which detects salient regions from irregularities in the log-amplitude spectrum of the frame; regions with low saliency are treated as low-attention areas suitable for embedding. This approach is widely used because of its simplicity and its effectiveness in modeling human visual attention. Since the method is used without modification, its full mathematical details are omitted here for brevity.

- In this work, a semantic mask is constructed using the Haar cascade frontal face detector proposed by Viola and Jones [38] and is applied to exclude semantically sensitive regions, such as faces, from the embedding process. This ensures that no watermark is inserted in semantically important areas, preserving visual fidelity. The detector is used unmodified, with its default parameters. Although more accurate deep-learning detectors (e.g., YOLO, RetinaFace) are available, Haar cascades offer a good balance between detection accuracy and computational cost, making them suitable for real-time and embedded watermarking applications.
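A compact sketch of how the three cues could be fused into per-block scores follows, written against NumPy only. The detail map averages LoG response magnitudes over scales 1 to 3; the saliency step follows the general spectral residual recipe of Hou and Zhang [37] without their downsampling step. The linear fusion rule, the weights `w_detail` and `w_lowsal`, the smoothing width `blur_sigma`, and the top-k block selection (instead of the paper's block threshold) are hypothetical, since the exact combination is not reproduced here. The face mask is taken as a precomputed boolean array, for example filled from the rectangles returned by a Viola-Jones detector.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _filter2d(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Same-size 2-D filtering with reflect padding (odd, symmetric kernels)."""
    ph, pw = kernel.shape[0] // 2, kernel.shape[1] // 2
    padded = np.pad(img, ((ph, ph), (pw, pw)), mode="reflect")
    windows = sliding_window_view(padded, kernel.shape)
    return np.einsum("ijkl,kl->ij", windows, kernel)


def _grid(sigma: float):
    r = int(np.ceil(3 * sigma))
    return np.mgrid[-r:r + 1, -r:r + 1]


def _log_kernel(sigma: float) -> np.ndarray:
    y, x = _grid(sigma)
    rr = (x**2 + y**2) / (2 * sigma**2)
    k = -(1 - rr) * np.exp(-rr) / (np.pi * sigma**4)
    return k - k.mean()  # zero-sum: flat areas give no response


def _gauss_kernel(sigma: float) -> np.ndarray:
    y, x = _grid(sigma)
    k = np.exp(-(x**2 + y**2) / (2 * sigma**2))
    return k / k.sum()


def _normalize(m: np.ndarray) -> np.ndarray:
    span = m.max() - m.min()
    return (m - m.min()) / span if span > 0 else np.zeros_like(m)


def detail_map(img, sigmas=(1.0, 2.0, 3.0)) -> np.ndarray:
    """Average LoG response magnitude over several scales."""
    return np.mean([np.abs(_filter2d(img, _log_kernel(s))) for s in sigmas], axis=0)


def spectral_residual_saliency(img, blur_sigma: float = 2.5) -> np.ndarray:
    """Spectral residual saliency (general recipe of Hou and Zhang)."""
    spectrum = np.fft.fft2(img)
    log_amp = np.log(np.abs(spectrum) + 1e-8)
    residual = log_amp - _filter2d(log_amp, np.full((3, 3), 1.0 / 9.0))
    sal = np.abs(np.fft.ifft2(np.exp(residual + 1j * np.angle(spectrum)))) ** 2
    return _filter2d(sal, _gauss_kernel(blur_sigma))


def perceptual_block_scores(img, face_mask=None, w_detail=0.5, w_lowsal=0.5, block=8):
    """Mean composite score per block: high = textured, low-attention, non-face."""
    img = np.asarray(img, dtype=np.float64)
    pmap = (w_detail * _normalize(detail_map(img))
            + w_lowsal * (1.0 - _normalize(spectral_residual_saliency(img))))
    if face_mask is not None:
        pmap = np.where(face_mask, 0.0, pmap)  # no embedding energy on faces
    h = img.shape[0] - img.shape[0] % block
    w = img.shape[1] - img.shape[1] % block
    return pmap[:h, :w].reshape(h // block, block, w // block, block).mean(axis=(1, 3))


def select_blocks(scores: np.ndarray, k: int):
    """(row, col) indices of the k highest-scoring blocks."""
    order = np.argsort(scores, axis=None)[::-1][:k]
    return [tuple(int(i) for i in np.unravel_index(f, scores.shape)) for f in order]
```

Blocks that only partly overlap a face keep a reduced, nonzero score in this sketch; a stricter variant would discard any block that touches the mask.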

3.1.2. Step 2: Robust Block Selection

3.1.3. Step 3: Calculate DCT Coefficients Directly from Spatial Domain

3.1.4. Step 4: JND Modulation and Embedding with QIM

3.1.5. Step 5: Redundancy Encoding and Temporal Distribution

3.1.6. Step 6: Watermark Extraction and Voting

3.2. Block-Based Watermark Embedding Scheme

- Each keyframe is divided into non-overlapping 8 × 8 blocks.

- Compute the perceptual score map and select the robust blocks.

- For each selected block, compute the embedding DCT coefficient directly from the spatial domain using Equation (4).

- Estimate the JND threshold of the block.

- Compute the quantization step by multiplying the block's JND threshold by a fixed scaling factor.

- Modify the coefficient using QIM, i.e., by applying Equation (6) with the computed quantization step.

- Replace the original coefficient with its modified version.
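The embedding steps above can be sketched for a single 8 × 8 block using binary dithered QIM in the style of Chen and Wornell [20]. The following are hypothetical assumptions, not the paper's exact equations: the quantization step is the JND threshold times the scaling factor `q` (0.75 here), the JND value is supplied by the caller instead of being computed by the paper's luminance, masking, and contrast model, and the coefficient position `uv=(2, 1)` is an arbitrary mid-frequency choice because the embedding coefficient is not specified in this text.

```python
import numpy as np


def dct_basis(u: int, v: int, n: int = 8) -> np.ndarray:
    """Orthonormal 2-D DCT-II basis image for coefficient (u, v)."""
    def alpha(k: int) -> float:
        return np.sqrt((1.0 if k == 0 else 2.0) / n)

    idx = np.arange(n)
    cu = alpha(u) * np.cos((2 * idx + 1) * u * np.pi / (2 * n))
    cv = alpha(v) * np.cos((2 * idx + 1) * v * np.pi / (2 * n))
    return np.outer(cu, cv)


def qim_quantize(c: float, bit: int, step: float) -> float:
    """Snap c to the nearest point of the lattice step*Z + bit*step/2."""
    offset = bit * step / 2.0
    return float(step * np.round((c - offset) / step) + offset)


def embed_bit(block: np.ndarray, bit: int, jnd: float,
              q: float = 0.75, uv: tuple = (2, 1)) -> np.ndarray:
    """Embed one bit into coefficient `uv` of a block (hypothetical setup)."""
    step = q * jnd                      # assumed rule: step = Q * JND
    basis = dct_basis(uv[0], uv[1], block.shape[0])
    c = float(np.sum(block * basis))   # coefficient read in the spatial domain
    c_marked = qim_quantize(c, bit, step)
    # Adding (c_marked - c) * basis changes only coefficient uv (orthonormality).
    return block.astype(np.float64) + (c_marked - c) * basis
```

The coefficient moves by at most half a step. The marked block should then be rounded and clipped to the 8-bit range; the resulting small coefficient error is tolerated as long as it stays well below a quarter of the step, which is the QIM decision margin.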

3.3. Watermark Extraction Procedure

- Divide each keyframe into non-overlapping 8 × 8 blocks.

- Compute the perceptual score map for each block using the same method as in Section 3.1.1, and select the top-ranked blocks as candidates for decoding.

- For each selected block, compute the embedding DCT coefficient directly from the spatial domain using Equation (4).

- Recompute the quantization step from the block's JND threshold, using the same scaling factor as in embedding.

- Decode the embedded bit from the quantized coefficient using the inverse QIM rule in Equation (13).

- Repeat the decoding for the same bit across the redundant keyframes.

- Apply majority voting, as in Equation (15), to recover the final bit.
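The extraction side can be sketched with minimum-distance QIM decoding followed by a simple majority vote. This does not reproduce Equations (13) and (15); it is a standard formulation under hypothetical assumptions: the step is `q` times the block's JND (with `q = 0.75`), the coefficient is at `uv=(2, 1)`, bit 0 and bit 1 lie on the lattices step·Z and step·Z + step/2, and the decoder can recompute a JND value close to the one used at embedding time. Ties resolve to 0, which cannot occur with an odd number of copies such as 5 keyframes per bit.

```python
import numpy as np


def dct_basis(u: int, v: int, n: int = 8) -> np.ndarray:
    """Orthonormal 2-D DCT-II basis image for coefficient (u, v)."""
    def alpha(k: int) -> float:
        return np.sqrt((1.0 if k == 0 else 2.0) / n)

    idx = np.arange(n)
    cu = alpha(u) * np.cos((2 * idx + 1) * u * np.pi / (2 * n))
    cv = alpha(v) * np.cos((2 * idx + 1) * v * np.pi / (2 * n))
    return np.outer(cu, cv)


def qim_decode(c: float, step: float) -> int:
    """Pick the bit whose lattice (step*Z or step*Z + step/2) is closest to c."""
    d0 = abs(c - step * np.round(c / step))
    d1 = abs(c - (step * np.round((c - step / 2) / step) + step / 2))
    return 0 if d0 <= d1 else 1


def majority_vote(bits) -> int:
    """1 if strictly more than half of the votes are 1; ties give 0 (assumption)."""
    return 1 if 2 * sum(bits) > len(bits) else 0


def extract_bit(copies, q: float = 0.75, uv: tuple = (2, 1)) -> int:
    """Decode one payload bit from its redundant copies.

    `copies` is a list of (block, jnd) pairs, one per keyframe carrying the bit.
    """
    votes = []
    for block, jnd in copies:
        basis = dct_basis(uv[0], uv[1], block.shape[0])
        c = float(np.sum(block * basis))  # spatial-domain coefficient estimate
        votes.append(qim_decode(c, q * jnd))
    return majority_vote(votes)
```

With three copies decoded as 1, 1, and 0, for instance, the vote returns 1: a single corrupted keyframe is outvoted, which is the mechanism behind the redundancy gains reported in Section 4.2.2.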

4. Experimental Results

4.1. Configuration

4.2. Baseline Performance Evaluation

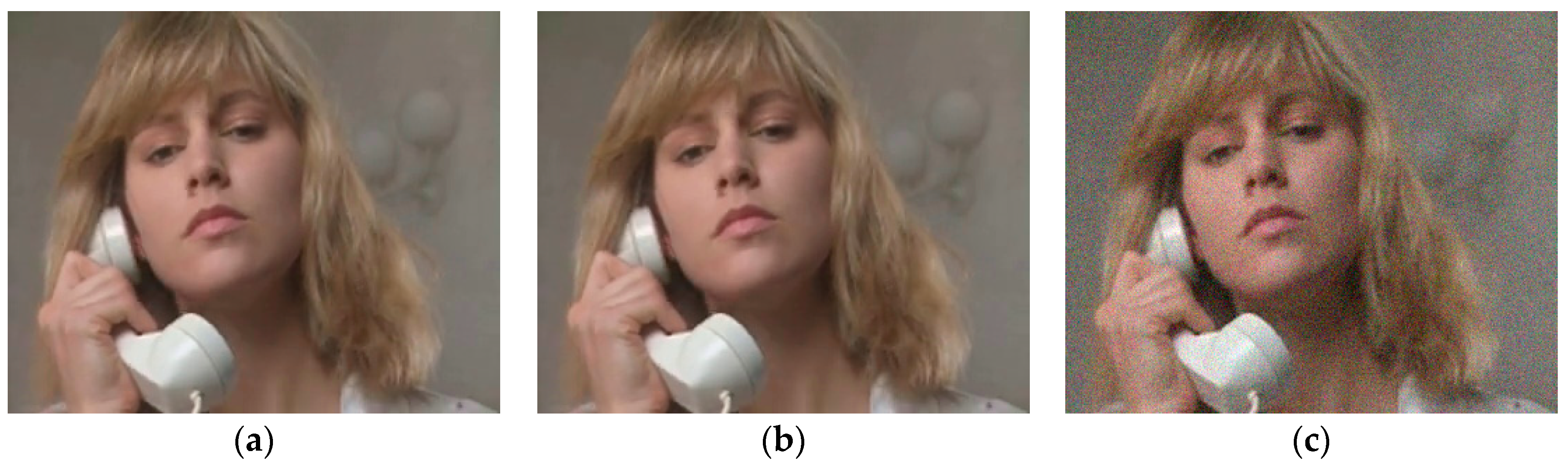

4.2.1. Impact of Adaptive Modulation

4.2.2. Impact of Redundant Coding

4.2.3. Impact of Multiframe Insertion Strategy

4.3. Evaluation Under Attacks

4.4. Sensitivity Analysis

5. Analysis and Discussion

5.1. Error and Recovery Analysis

5.2. Interaction Between Redundancy and Modulation

5.3. Computational Cost Evaluation

5.4. Comparison with State-of-the-Art Methods

5.5. Statistical Robustness

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Liu, G.; Xiang, R.; Liu, J.; Pan, R.; Zhang, Z. An invisible and robust watermarking scheme using convolutional neural networks. Expert Syst. Appl. 2022, 210, 118529.

2. Agarwal, N.; Singh, A.K.; Singh, P.K. Survey of robust and imperceptible watermarking. Multimed. Tools Appl. 2019, 78, 8603–8633.

3. Wan, W.; Wang, J.; Zhang, Y.; Li, J.; Yu, H.; Sun, J. A comprehensive survey on robust image watermarking. Neurocomputing 2022, 488, 226–247.

4. Zhong, X.; Das, A.; Alrasheedi, F.; Tanvir, A. A Brief, In-Depth Survey of Deep Learning-Based Image Watermarking. Appl. Sci. 2023, 13, 11852.

5. Charfeddine, M.; Mezghani, E.; Masmoudi, S.; Amar, C.B.; Alhumyani, H. Audio watermarking for security and non-security applications. IEEE Access 2022, 10, 12654–12677.

6. Aberna, P.; Agilandeeswari, L. Digital image and video watermarking: Methodologies, attacks, applications, and future directions. Multimed. Tools Appl. 2023, 82, 5531–5591.

7. Yu, X.; Wang, C.; Zhou, X. A survey on robust video watermarking algorithms for copyright protection. Appl. Sci. 2018, 8, 1891.

8. Asikuzzaman, M.; Pickering, M.R. An overview of digital video watermarking. IEEE Trans. Circuits Syst. Video Technol. 2017, 28, 2131–2153.

9. Abdelwahab, K.M.; Abd El-Atty, S.M.; El-Shafai, W.; El-Rabaie, S.; Abd El-Samie, F.E. Efficient SVD-based audio watermarking technique in FRT domain. Multimed. Tools Appl. 2020, 79, 5617–5648.

10. Zainol, Z.; Teh, J.S.; Alawida, M.; Alabdulatif, A. Hybrid SVD-based image watermarking schemes: A review. IEEE Access 2021, 9, 32931–32968.

11. Masmoudi, S.; Charfeddine, M.; Ben Amar, C. A semi-fragile digital audio watermarking scheme for MP3-encoded signals. Circ. Syst. Signal Process. 2020, 39, 3019–3034.

12. Chen, L.; Wang, C.; Zhou, X.; Qin, Z. Robust and Compatible Video Watermarking via Spatio-Temporal Enhancement and Multiscale Pyramid Attention. IEEE Trans. Circuits Syst. Video Technol. 2024, 35, 1548–1561.

13. Farri, E.; Ayubi, P. A robust digital video watermarking based on CT-SVD domain and chaotic DNA sequences for copyright protection. J. Ambient Intell. Humaniz. Comput. 2023, 14, 13113–13137.

14. Wan, W.; Zhou, K.; Zhang, K.; Zhan, Y.; Li, J. JND-guided perceptually color image watermarking in spatial domain. IEEE Access 2020, 8, 164504–164520.

15. Lin, W.; Ghinea, G. Progress and opportunities in modelling just-noticeable difference (JND) for multimedia. IEEE Trans. Multimed. 2021, 24, 3706–3721.

16. Cedillo-Hernandez, A.; Cedillo-Hernandez, M.; Miyatake, M.N.; Meana, H.P. A spatiotemporal saliency-modulated JND profile applied to video watermarking. J. Vis. Commun. Image Represent. 2018, 52, 106–117.

17. Yang, L.; Wang, H.; Zhang, Y.; He, M.; Li, J. An adaptive video watermarking robust to social platform transcoding and hybrid attacks. Signal Process. 2024, 224, 109588.

18. Cedillo-Hernandez, A.; Cedillo-Hernandez, M.; García-Vázquez, M.; Nakano-Miyatake, M.; Perez-Meana, H.; Ramirez-Acosta, A. Transcoding resilient video watermarking scheme based on spatio-temporal HVS and DCT. Signal Process. 2014, 97, 40–54.

19. Huan, W.; Li, S.; Qian, Z.; Zhang, X. Exploring stable coefficients on joint sub-bands for robust video watermarking in DT CWT domain. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 1955–1965.

20. Chen, B.; Wornell, G.W. Quantization index modulation: A class of provably good methods for digital watermarking and information embedding. IEEE Trans. Inform. Theory 2001, 47, 1423–1443.

21. Lin, E.T.; Delp, E.J. Temporal synchronization in video watermarking. IEEE Trans. Signal Process. 1999, 51, 1053–1069.

22. Koz, A.; Alatan, A.A. Oblivious spatio-temporal watermarking of digital video by exploiting the human visual system. IEEE Trans. Circuits Syst. Video Technol. 2008, 18, 326–337.

23. Luo, X.; Li, Y.; Chang, H.; Liu, C.; Milanfar, P.; Yang, F. DVMark: A deep multiscale framework for video watermarking. IEEE Trans. Image Process. 2023, 32, 4769–4782.

24. Zhang, Z.; Wang, H.; Wang, G.; Wu, X. Hide and track: Towards blind video watermarking network in frequency domain. Neurocomputing 2024, 579, 127435.

25. Cedillo-Hernandez, A.; Velazquez-Garcia, L.; Cedillo-Hernandez, M.; Conchouso-Gonzalez, D. Fast and robust JND-guided video watermarking scheme in spatial domain. J. King Saud Univ.–Comput. Inf. Sci. 2024, 36, 102199.

26. Hernandez, A.C.; Hernandez, M.C.; Ugalde, F.G.; Miyatake, M.N.; Meana, H.P. A fast and effective method for static video summarization on compressed domain. IEEE Lat. Am. Trans. 2016, 14, 4554–4559.

27. Yamamoto, T.; Kawamura, M. Method of spread spectrum watermarking using quantization index modulation for cropped images. IEICE Trans. Inf. Syst. 2015, 98, 1306–1315.

28. He, M.; Wang, H.; Zhang, F.; Abdullahi, S.M.; Yang, L. Robust blind video watermarking against geometric deformations and online video sharing platform processing. IEEE Trans. Dependable Secur. Comput. 2022, 20, 4702–4718.

29. Nayak, A.A.; Venugopala, P.S.; Sarojadevi, H.; Ashwini, B.; Chiplunkar, N.N. A novel watermarking technique for video on android mobile devices based on JPG quantization value and DCT. Multimed. Tools Appl. 2024, 83, 47889–47917.

30. Prasetyo, H.; Hsia, C.H.; Liu, C.H. Vulnerability attacks of SVD-based video watermarking scheme in an IoT environment. IEEE Access 2020, 8, 69919–69936.

31. Pinson, M.H.; Wolf, S. A new standardized method for objectively measuring video quality. IEEE Trans. Broadcast. 2004, 50, 312–322.

32. Ma, Y.F.; Zhang, H.J. Contrast-based image attention analysis by using fuzzy growing. In Proceedings of the Eleventh ACM International Conference on Multimedia, Berkeley, CA, USA, 2–8 November 2003; pp. 374–381.

33. Wang, C.; Wang, Y.; Lian, J. A Super Pixel-Wise Just Noticeable Distortion Model. IEEE Access 2020, 8, 204816–204824.

34. Li, D.; Deng, L.; Gupta, B.B.; Wang, H.; Choi, C. A novel CNN based security guaranteed image watermarking generation scenario for smart city applications. Inform. Sci. 2019, 479, 432–447.

35. Lin, W.; Kuo, C.C.J. Perceptual visual quality metrics: A survey. J. Vis. Commun. Image Represent. 2011, 22, 297–312.

36. Wang, H.; Yu, L.; Yin, H.; Li, T.; Wang, S. An improved DCT-based JND estimation model considering multiple masking effects. J. Vis. Commun. Image Represent. 2020, 71, 102850.

37. Hou, X.; Zhang, L. Saliency detection: A spectral residual approach. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

38. Viola, P.; Jones, M.J. Robust real-time face detection. Int. J. Comput. Vis. 2004, 57, 137–154.

39. Byun, S.W.; Son, H.S.; Lee, S.P. Fast and robust watermarking method based on DCT specific location. IEEE Access 2019, 7, 100706–100718.

40. Wu, J.; Li, L.; Dong, W.; Shi, G.; Lin, W.; Kuo, C.C.J. Enhanced just noticeable difference model for images with pattern complexity. IEEE Trans. Image Process. 2017, 26, 2682–2693.

41. Hernandez, J.R.; Perez-Gonzalez, F. Statistical analysis of watermarking schemes for copyright protection of images. Proc. IEEE 2000, 87, 1142–1166.

42. Video Sequences for Testing. Available online: https://sites.google.com/site/researchvideosequences (accessed on 9 July 2025).

43. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

44. Li, X.; Guo, Q.; Lu, X. Spatiotemporal statistics for video quality assessment. IEEE Trans. Image Process. 2016, 25, 3329–3342.

| Parameter | Value |
|---|---|
| Block size | 8 × 8 |
| Embedding DCT coefficient | |
| Quantization scaling factor (Q) | 0.75 |
| Redundancy | 5 keyframes per bit |
| Watermark payload | 128 bits |
| Block threshold (τ) | 30 |
| Perceptual map weights | = 0.3 |

| Metric | | | Improvement |
|---|---|---|---|
| PSNR (dB) | 39.42 | 50.12 | +10.70 |
| SSIM | 0.918 | 0.996 | +0.076 |
| VMAF | 83.1 | 97.3 | +14.2 |
| BER (%) | 2.3 | 1.4 | −0.9 |

| Redundancy | Avg. BER (%) | Frames Used | Recovery Accuracy |
|---|---|---|---|
| 1 | 5.2 | 128 | 94.8% |
| 2 | 3.1 | 256 | 96.9% |
| 3 | 1.8 | 384 | 98.2% |
| 4 | 1.1 | 512 | 98.9% |
| 5 | 0.2 | 640 | 99.8% |

| Strategy | BER (%) | VMAF | Avg. Sync Error |
|---|---|---|---|
| Random Frame Insertion | 2.7 | 89.7 | 1.8 |
| Scene-Based Insertion | 0.3 | 95.2 | 0.5 |

| Attack Type | BER (%) CIF | BER (%) 4CIF | BER (%) HD | BER (%) Avg. | SSIM Drop | PSNR Drop (dB) | VMAF Drop |
|---|---|---|---|---|---|---|---|
| Gaussian noise | 0.6 | 1.1 | 1.4 | 1.03 | 0.085 | 6.8 | 10.5 |
| H.264 Compression (CRF 28) | 0.9 | 1.5 | 1.8 | 1.40 | 0.120 | 10.3 | 16.2 |
| VP8 Compression (Q = 30) | 0.7 | 1.4 | 1.7 | 1.26 | 0.098 | 9.2 | 14.9 |
| Temporal Desync (±3 frames) | 0.7 | 1.2 | 1.5 | 1.13 | 0.094 | 7.9 | 11.5 |

| Attack | CIF (BER %/SSIM) | 4CIF (BER %/SSIM) | HD (BER %/SSIM) | Overall (BER %/SSIM) |
|---|---|---|---|---|
| Rotation ±5° | 3.9/0.87 | 4.5/0.85 | 4.8/0.83 | 4.4/0.85 |
| Rescaling to 90% | 2.5/0.90 | 3.2/0.88 | 3.5/0.86 | 3.1/0.88 |
| Cropping 10% | 1.9/0.92 | 2.1/0.91 | 2.4/0.89 | 2.1/0.91 |

| Parameter | Values Tested | BER (%) | PSNR (dB) |
|---|---|---|---|
| | 0.2/0.3/0.4 | 0.21/0.09/0.07 | 52.7/51.2/49.4 |
| Quantization scaling factor (Q) | 0.5/0.75/1.0 | 0.27/0.10/0.09 | 53.1/52.1/49.3 |
| Block threshold (τ) | 25/30/35 | 0.10/0.08/0.08 | 52.3/50.9/48.2 |

| Stage | Avg. Time (CIF) | Avg. Time (HD) | % Total Time | Parallelizable |
|---|---|---|---|---|
| Perceptual Map | 1.6 s | 4.3 s | 45% | Yes |
| Keyframe Detection + Redundancy | 0.5 s | 1.2 s | 15% | Partial |
| QIM Embedding (Spatial-DCT) | 0.4 s | 0.9 s | 10% | Yes |
| Extraction (sync + voting) | 0.7 s | 1.6 s | 30% | Yes |

| Method | Domain | BER (%) | PSNR (dB) | SSIM | VMAF | Runtime (HD) | Comments |
|---|---|---|---|---|---|---|---|
| Proposed method | Spatial + JND | 1.03 | 50.1 | 0.996 | 97.3 | ~7.8 s/frame | Low complexity, perceptual model, blind |
| DvMark (2023) [23] | Frequency + DL | 1.6 | 46.4 | 0.982 | 93.5 | ~2.5 s/frame (GPU) | DL-based, high GPU demand |
| Hide-and-Track (2024) [24] | Frequency + DL | 1.4 | 44.9 | 0.974 | 91.1 | ~2.3 s/frame (GPU) | DL-based, blind, less perceptually tuned |
| Yang et al. (2024) [17] | Hybrid (DCT) | 2.1 | 43.5 | 0.967 | 89.7 | ~12 s/frame | Resilient to transcoding, slow |
| Huan et al. (2022) [19] | DT-CWT | 1.7 | 45.1 | 0.972 | 90.6 | ~9.6 s/frame | Strong under cropping, complex |
| Cedillo-H. et al. (2024) [25] | Spatial + JND | 2.5 | 48.0 | 0.990 | 95.5 | ~5.6 s/frame | Fast but no temporal redundancy |

| Video | Metric | Average | Standard Deviation (σ) |
|---|---|---|---|
| Akiyo | BER (%) | 0.11 | 0.04 |
| | PSNR (dB) | 51.2 | 0.18 |
| | SSIM | 0.991 | 0.006 |
| | VMAF | 96.7 | 0.90 |
| Foreman | BER (%) | 0.15 | 0.05 |
| | PSNR (dB) | 50.8 | 0.22 |
| | SSIM | 0.992 | 0.009 |
| | VMAF | 97.8 | 1.20 |
| Suzie | BER (%) | 0.09 | 0.03 |
| | PSNR (dB) | 49.6 | 0.16 |
| | SSIM | 0.989 | 0.004 |
| | VMAF | 97.1 | 0.8 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cedillo-Hernandez, A.; Velazquez-Garcia, L.; Cedillo-Hernandez, M.; Dominguez-Jimenez, I.; Conchouso-Gonzalez, D. Robust JND-Guided Video Watermarking via Adaptive Block Selection and Temporal Redundancy. Mathematics 2025, 13, 2493. https://doi.org/10.3390/math13152493
