Federated Learning Based on an Internet of Medical Things Framework for a Secure Brain Tumor Diagnostic System: A Capsule Networks Application

Abstract

1. Introduction

2. Literature Review

3. Federated Learning-Based IoMT for Brain Tumor Analysis by Capsule Networks

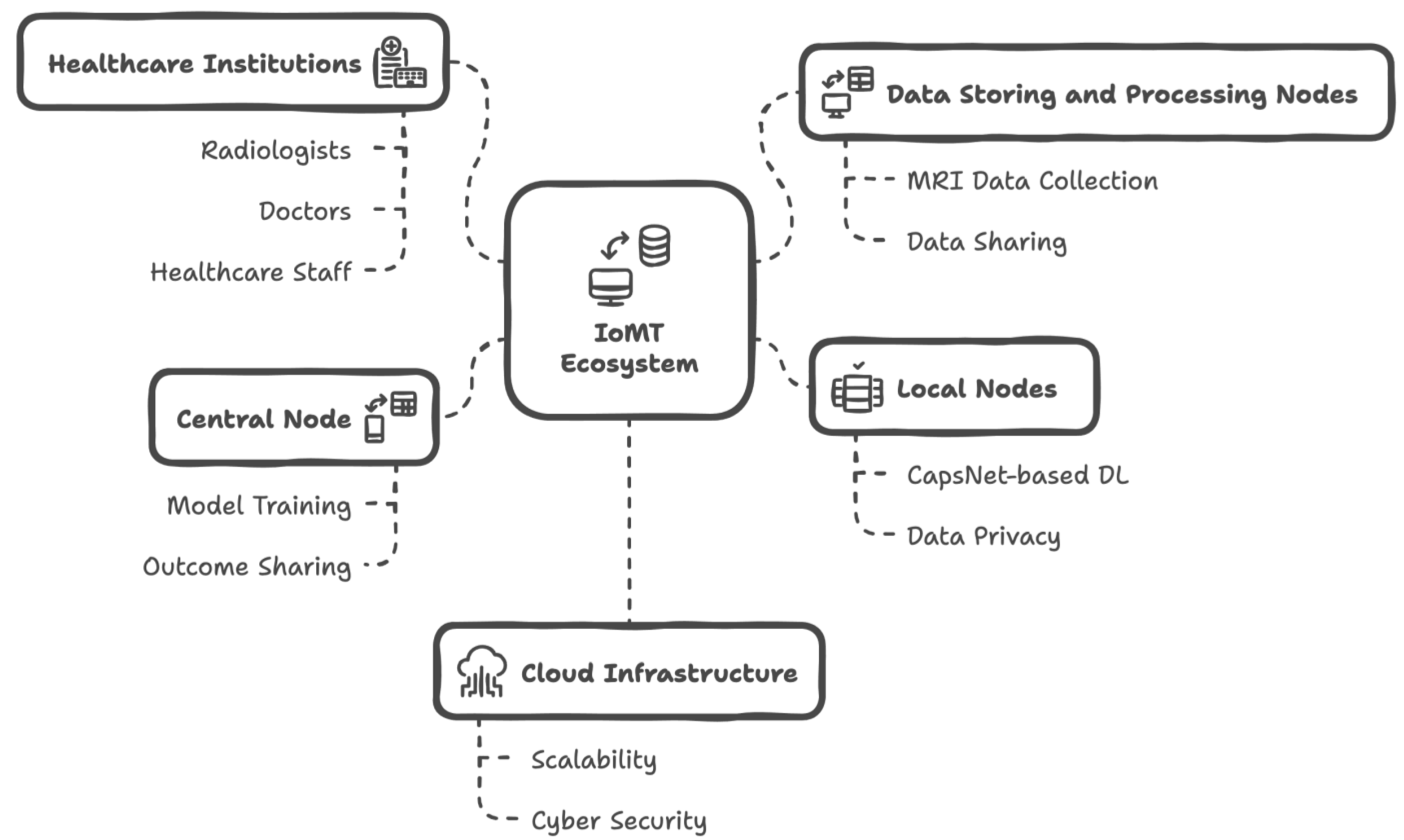

3.1. IoMT Ecosystem

- In this IoMT framework, radiologists, doctors, patients, and healthcare staff actively participate in data usage and communication.

- MRI data are collected from patients with conventional screening devices. In this framework, however, the data are then shared automatically with data storage and processing nodes, which operate independently at the different healthcare institutions.

- The data storage and processing nodes pre-process the MRI data to build a dataset suitable for the deep learning (DL) model used here, a capsule network (CapsNet). The original MRI data can also be shared with radiologists so that they can write reports when needed.

- In this ecosystem, multiple healthcare institutions can connect to the IoMT infrastructure, which allows MRI data, together with any supplementary metadata or reports, to be shared among the relevant actors. This supports communication among all parties involved, with the exception of patients, and contributes to the system's data storage and management capability. A software platform may be used for this purpose, and simple interaction methods (such as Bluetooth beacons, QR codes, or NFC components) can help individuals access data within healthcare institutions. Importantly, the entire data-sharing mechanism is constrained by a federated learning (FL) approach.

- Each healthcare institution hosts local nodes that receive the processed MRI data from the data storage and processing nodes. These local nodes are integrated with the FL framework to protect data privacy: by default, no patient data are exchanged through the FL framework, while the overall performance of deep learning within the ecosystem is still improved. Original MRI data can nonetheless be shared among institutions if necessary, but this falls outside the FL-based IoMT approach preferred in this study.

- Local nodes run their CapsNet-based training and share the resulting local model updates, rather than the MRI data themselves, with the global server node of both the IoMT ecosystem and the FL framework, referred to as the central node. The central node combines these updates to finalize the trained CapsNet model, which can diagnose brain tumors from newly acquired MRI data, and shares the results with authorized radiologists, doctors, and healthcare staff.

- The mechanisms described above are deployed on a cloud infrastructure, which brings the advantages of cloud computing, such as scalability, flexibility, load balancing, and performance optimization, to the IoMT ecosystem. Both the FL and the cloud solutions also contribute to the cybersecurity of the developed system.
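The local-training and central-aggregation flow described above can be sketched in a few lines of Python. This is a minimal illustration under assumptions, not the study's implementation: the aggregation rule is assumed to be FedAvg-style averaging of model weights, weighted by each institution's sample count, a softmax-regression model stands in for CapsNet, and the functions `local_update`, `fed_avg`, and `run_rounds`, as well as the synthetic data, are hypothetical. The property it illustrates is that only weight arrays, never the raw `X`/`y` arrays, reach the central node.

```python
import numpy as np

def local_update(weights, X, y, lr=0.1, epochs=5):
    """Local node (hypothetical): a few epochs of gradient descent on a
    softmax-regression stand-in for CapsNet, using only this site's data."""
    w = weights.copy()
    Y = np.eye(w.shape[1])[y]                        # one-hot labels
    for _ in range(epochs):
        logits = X @ w
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        p = np.exp(logits)
        p /= p.sum(axis=1, keepdims=True)            # softmax probabilities
        w -= lr * X.T @ (p - Y) / len(X)             # cross-entropy gradient step
    return w                                         # only weights leave the site

def fed_avg(updates, sizes):
    """Central node (assumed FedAvg): sample-weighted mean of local weights."""
    frac = np.asarray(sizes, dtype=float) / np.sum(sizes)
    return np.tensordot(frac, np.stack(updates), axes=1)

def run_rounds(clients, n_features, n_classes, rounds=10):
    """Alternate local training and central aggregation for several rounds."""
    global_w = np.zeros((n_features, n_classes))
    for _ in range(rounds):
        updates = [local_update(global_w, X, y) for X, y in clients]
        global_w = fed_avg(updates, [len(y) for _, y in clients])
    return global_w

# Synthetic stand-in: 5 institutions, 4 features, 3 tumor classes.
rng = np.random.default_rng(0)
centers = 3 * rng.normal(size=(3, 4))
clients = []
for _ in range(5):
    y = rng.integers(0, 3, size=40)
    clients.append((centers[y] + rng.normal(size=(40, 4)), y))
global_w = run_rounds(clients, n_features=4, n_classes=3)
```

Weighting by sample count means an institution with more scans pulls the global model proportionally harder; other aggregation rules exist and could be substituted in `fed_avg` without changing the rest of the loop.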

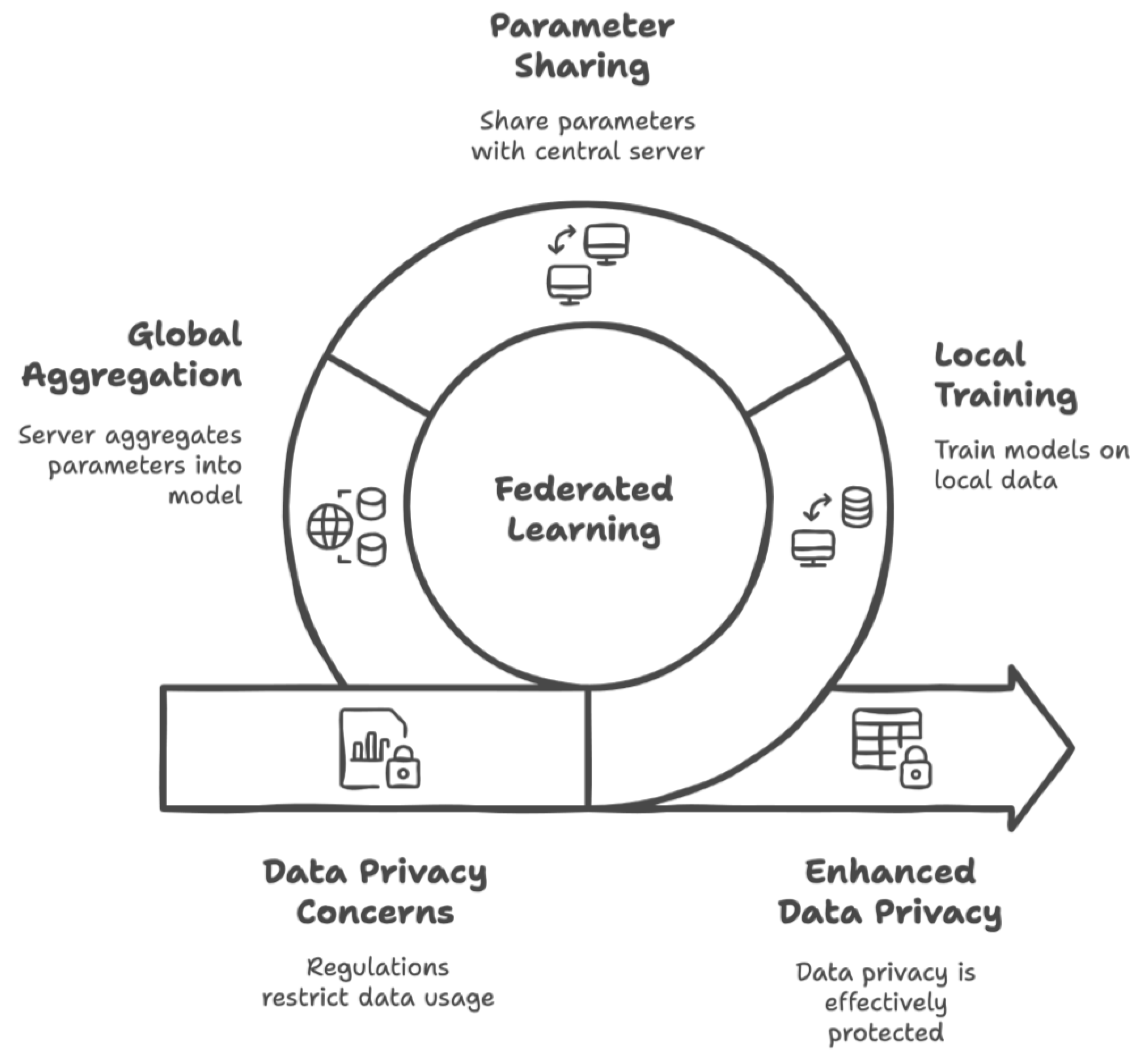

3.2. Federated Learning

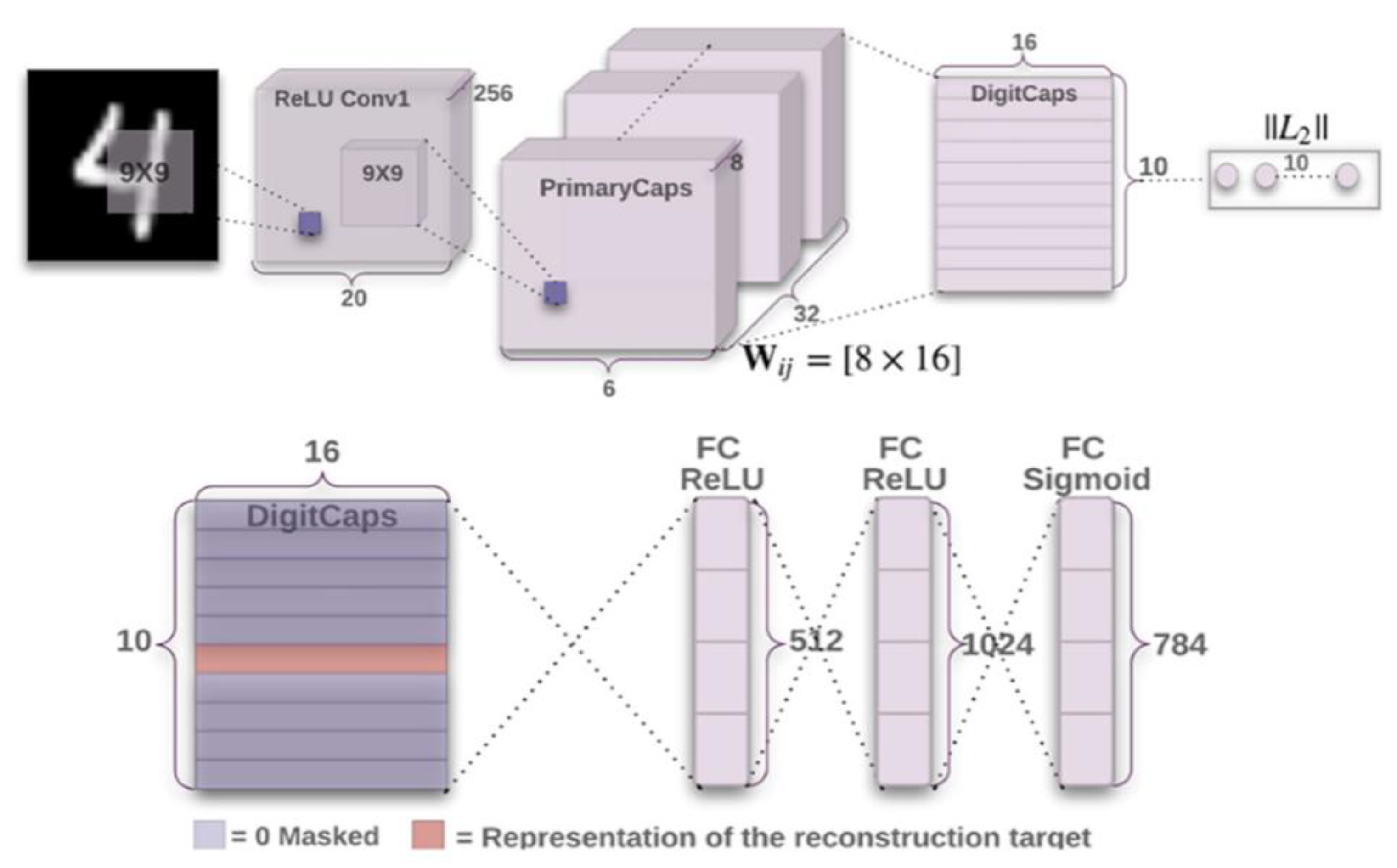

3.3. CapsNet-Based Deep Learning

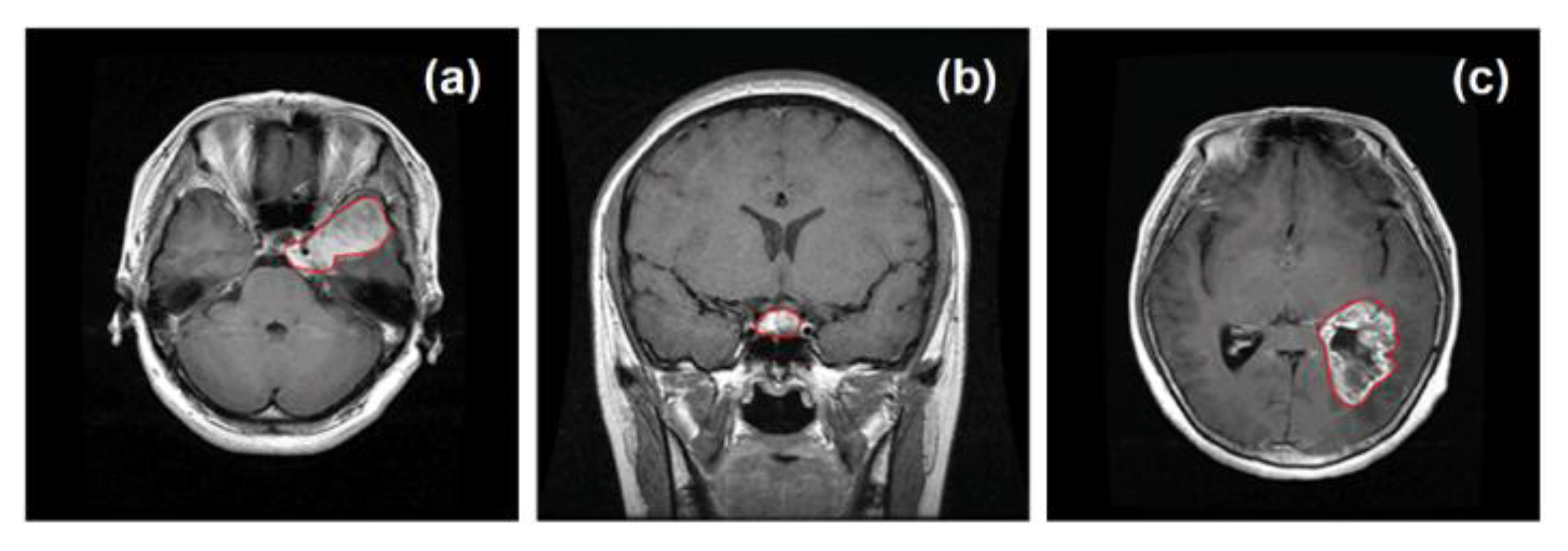
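As background for the CapsNet model, the two operations that set capsule networks apart in the dynamic-routing formulation of Sabour et al. (listed in the references) are the squash non-linearity and routing-by-agreement between capsule layers. The NumPy sketch below assumes that the prediction vectors û (`u_hat`) of the lower capsule layer have already been computed; array sizes are illustrative and the sketch does not reproduce this study's specific architecture. The `iterations` argument presumably corresponds to the routing values explored in the hyperparameter search. The length of each output capsule can be read as the probability that the corresponding class is present.

```python
import numpy as np

def squash(s, axis=-1, eps=1e-8):
    """Shrink vector length into [0, 1) while keeping its direction."""
    sq_norm = np.sum(s ** 2, axis=axis, keepdims=True)
    return (sq_norm / (1.0 + sq_norm)) * s / np.sqrt(sq_norm + eps)

def dynamic_routing(u_hat, iterations=3):
    """Routing-by-agreement.

    u_hat: predictions of lower capsules for upper capsules,
           shape (n_in, n_out, dim_out).
    Returns the upper (output) capsules v, shape (n_out, dim_out).
    """
    n_in, n_out, _ = u_hat.shape
    b = np.zeros((n_in, n_out))                        # routing logits
    for r in range(iterations):
        c = np.exp(b - b.max(axis=1, keepdims=True))
        c /= c.sum(axis=1, keepdims=True)              # coupling coefficients
        s = np.einsum("ij,ijd->jd", c, u_hat)          # weighted sum of predictions
        v = squash(s)
        if r < iterations - 1:
            b = b + np.einsum("ijd,jd->ij", u_hat, v)  # reward agreement
    return v

rng = np.random.default_rng(0)
u_hat = rng.normal(size=(32, 3, 16))   # 32 input capsules, 3 class capsules
v = dynamic_routing(u_hat, iterations=3)
class_lengths = np.linalg.norm(v, axis=-1)
predicted_class = int(np.argmax(class_lengths))
```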

3.4. Brain Tumor Diagnosis Using MRI Data

4. Results

4.1. Federated Learning-Based IoMT Setup

4.2. Applications and Findings for Brain Tumor Diagnosis

4.3. Findings for the Comparative Evaluation

4.4. Findings for User Evaluation

4.5. Threat Analysis

5. Discussion and Limitations

- The modern world requires distributed technologies for wide-area communication, especially in healthcare. The findings emphasize the role of the Internet of Medical Things (IoMT) in supporting diagnostic needs.

- AI components, particularly deep learning (DL) models, have proven effective in cancer applications. In this study, CapsNet was applied successfully to a three-class brain tumor diagnosis problem (meningiomas, pituitary tumors, and gliomas) and achieved higher accuracy than the compared DL models in the 10- and 15-institution scenarios. This study contributes to the literature by addressing multi-class problems in AI-based healthcare and by highlighting the importance of effective data feeding and model balance.

- Medical imaging is a prominent application area of AI; this study used MRI data on brain tumors and encourages further research in this area.

- Despite advances in healthcare technology, the growing demand for data raises privacy concerns. This study demonstrated that federated learning (FL) within an IoMT system can effectively protect data privacy: local healthcare institutions do not need to share their MRI data, yet a global CapsNet model is still trained.

- User evaluations indicated that the developed FL-IoMT system was both usable and effective in cancer research. Healthcare professionals, including radiologists and doctors, provided positive feedback on its diagnostic potential and user-friendliness.

- Overall, the study highlights the collaborative role of IoT and AI in enhancing healthcare applications and decision support, paving the way for a better future in healthcare.

Limitations

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Murphy, K.P. Machine Learning: A Probabilistic Perspective; MIT Press: Cambridge, MA, USA, 2012.

- Bonaccorso, G. Machine Learning Algorithms; Packt Publishing Ltd.: Birmingham, UK, 2017.

- Sarker, I.H. Machine learning: Algorithms, real-world applications and research directions. SN Comput. Sci. 2021, 2, 160.

- Kononenko, I. Machine learning for medical diagnosis: History, state of the art and perspective. Artif. Intell. Med. 2001, 23, 89–109.

- Garg, A.; Mago, V. Role of machine learning in medical research: A survey. Comput. Sci. Rev. 2021, 40, 100370.

- Shailaja, K.; Seetharamulu, B.; Jabbar, M.A. Machine learning in healthcare: A review. In Proceedings of the 2018 Second International Conference on Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 29–31 March 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 910–914.

- Mohanty, S.N.; Nalinipriya, G.; Jena, O.P.; Sarkar, A. (Eds.) Machine Learning for Healthcare Applications; John Wiley & Sons: Hoboken, NJ, USA, 2021.

- Lundervold, A.S.; Lundervold, A. An overview of deep learning in medical imaging focusing on MRI. Z. Med. Phys. 2019, 29, 102–127.

- Domingues, I.; Pereira, G.; Martins, P.; Duarte, H.; Santos, J.; Abreu, P.H. Using deep learning techniques in medical imaging: A systematic review of applications on CT and PET. Artif. Intell. Rev. 2020, 53, 4093–4160.

- Meedeniya, D.; Kumarasinghe, H.; Kolonne, S.; Fernando, C.; De la Torre Díez, I.; Marques, G. Chest X-ray analysis empowered with deep learning: A systematic review. Appl. Soft Comput. 2022, 126, 109319.

- Westbrook, C.; Talbot, J. MRI in Practice; John Wiley & Sons: Hoboken, NJ, USA, 2018.

- Debelee, T.G.; Kebede, S.R.; Schwenker, F.; Shewarega, Z.M. Deep learning in selected cancers’ image analysis–A survey. J. Imaging 2020, 6, 121.

- Munir, K.; Elahi, H.; Ayub, A.; Frezza, F.; Rizzi, A. Cancer diagnosis using deep learning: A bibliographic review. Cancers 2019, 11, 1235.

- Boldrini, L.; Bibault, J.E.; Masciocchi, C.; Shen, Y.; Bittner, M.I. Deep learning: A review for the radiation oncologist. Front. Oncol. 2019, 9, 977.

- Zheng, J.; Lin, D.; Gao, Z.; Wang, S.; He, M.; Fan, J. Deep learning assisted efficient AdaBoost algorithm for breast cancer detection and early diagnosis. IEEE Access 2020, 8, 96946–96954.

- Shen, L.; Margolies, L.R.; Rothstein, J.H.; Fluder, E.; McBride, R.; Sieh, W. Deep learning to improve breast cancer detection on screening mammography. Sci. Rep. 2019, 9, 12495.

- Levine, A.B.; Schlosser, C.; Grewal, J.; Coope, R.; Jones, S.J.; Yip, S. Rise of the machines: Advances in deep learning for cancer diagnosis. Trends Cancer 2019, 5, 157–169.

- Kourou, K.; Exarchos, T.P.; Exarchos, K.P.; Karamouzis, M.V.; Fotiadis, D.I. Machine learning applications in cancer prognosis and prediction. Comput. Struct. Biotechnol. J. 2015, 13, 8–17.

- Vellido, A.; Lisboa, P.J. Neural networks and other machine learning methods in cancer research. In Proceedings of the International Work-Conference on Artificial Neural Networks, San Sebastián, Spain, 20–22 June 2007; Springer: Berlin/Heidelberg, Germany, 2007; pp. 964–971.

- McCarthy, J.F.; Marx, K.A.; Hoffman, P.E.; Gee, A.G.; O’Neil, P.; Ujwal, M.L.; Hotchkiss, J. Applications of machine learning and high-dimensional visualization in cancer detection, diagnosis, and management. Ann. N. Y. Acad. Sci. 2004, 1020, 239–262.

- Sattlecker, M.; Stone, N.; Bessant, C. Current trends in machine-learning methods applied to spectroscopic cancer diagnosis. TrAC Trends Anal. Chem. 2014, 59, 17–25.

- Echle, A.; Rindtorff, N.T.; Brinker, T.J.; Luedde, T.; Pearson, A.T.; Kather, J.N. Deep learning in cancer pathology: A new generation of clinical biomarkers. Br. J. Cancer 2021, 124, 686–696.

- Tran, K.A.; Kondrashova, O.; Bradley, A.; Williams, E.D.; Pearson, J.V.; Waddell, N. Deep learning in cancer diagnosis, prognosis and treatment selection. Genome Med. 2021, 13, 152.

- Zeng, Z.; Mao, C.; Vo, A.; Li, X.; Nugent, J.O.; Khan, S.A.; Clare, S.E.; Luo, Y. Deep learning for cancer type classification and driver gene identification. BMC Bioinform. 2021, 22, 491.

- Sun, W.; Zheng, B.; Qian, W. Computer aided lung cancer diagnosis with deep learning algorithms. In Proceedings of the Medical Imaging 2016: Computer-Aided Diagnosis, San Diego, CA, USA, 27 February–3 March 2016; SPIE: Bellingham, WA, USA, 2016; Volume 9785, pp. 241–248.

- Ardila, D.; Kiraly, A.P.; Bharadwaj, S.; Choi, B.; Reicher, J.J.; Peng, L.; Tse, D.; Etemadi, M.; Ye, W.; Corrado, G.; et al. End-to-end lung cancer screening with three-dimensional deep learning on low-dose chest computed tomography. Nat. Med. 2019, 25, 954–961.

- Lakshmanaprabu, S.K.; Mohanty, S.N.; Shankar, K.; Arunkumar, N.; Ramirez, G. Optimal deep learning model for classification of lung cancer on CT images. Future Gener. Comput. Syst. 2019, 92, 374–382.

- Riquelme, D.; Akhloufi, M.A. Deep learning for lung cancer nodules detection and classification in CT scans. AI 2020, 1, 28–67.

- Rossetto, A.M.; Zhou, W. Deep learning for categorization of lung cancer CT images. In Proceedings of the 2017 IEEE/ACM International Conference on Connected Health: Applications, Systems and Engineering Technologies (CHASE), Philadelphia, PA, USA, 17–19 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 272–273.

- Chaunzwa, T.L.; Hosny, A.; Xu, Y.; Shafer, A.; Diao, N.; Lanuti, M.; Christiani, D.C.; Mak, R.H.; Aerts, H.J. Deep learning classification of lung cancer histology using CT images. Sci. Rep. 2021, 11, 5471.

- Wang, L. Deep learning techniques to diagnose lung cancer. Cancers 2022, 14, 5569.

- Jiang, J.; Hu, Y.C.; Tyagi, N.; Zhang, P.; Rimner, A.; Deasy, J.O.; Veeraraghavan, H. Cross-modality (CT-MRI) prior augmented deep learning for robust lung tumor segmentation from small MR datasets. Med. Phys. 2019, 46, 4392–4404.

- Bębas, E.; Borowska, M.; Derlatka, M.; Oczeretko, E.; Hładuński, M.; Szumowski, P.; Mojsak, M. Machine-learning-based classification of the histological subtype of non-small-cell lung cancer using MRI texture analysis. Biomed. Signal Process. Control 2021, 66, 102446.

- Wang, C.; Rimner, A.; Hu, Y.C.; Tyagi, N.; Jiang, J.; Yorke, E.; Riyahi, S.; Mageras, G.; Deasy, J.O.; Zhang, P. Toward predicting the evolution of lung tumors during radiotherapy observed on a longitudinal MR imaging study via a deep learning algorithm. Med. Phys. 2019, 46, 4699–4707.

- Hu, Q.; Whitney, H.M.; Giger, M.L. A deep learning methodology for improved breast cancer diagnosis using multiparametric MRI. Sci. Rep. 2020, 10, 10536.

- Witowski, J.; Heacock, L.; Reig, B.; Kang, S.K.; Lewin, A.; Pysarenko, K.; Patel, S.; Samreen, N.; Rudnicki, W.; Łuczyńska, E.; et al. Improving breast cancer diagnostics with deep learning for MRI. Sci. Transl. Med. 2022, 14, eabo4802.

- Eskreis-Winkler, S.; Onishi, N.; Pinker, K.; Reiner, J.S.; Kaplan, J.; Morris, E.A.; Sutton, E.J. Using deep learning to improve nonsystematic viewing of breast cancer on MRI. J. Breast Imaging 2021, 3, 201–207.

- Schelb, P.; Kohl, S.; Radtke, J.P.; Wiesenfarth, M.; Kickingereder, P.; Bickelhaupt, S.; Kuder, T.A.; Stenzinger, A.; Hohenfellner, M.; Schlemmer, H.P.; et al. Classification of cancer at prostate MRI: Deep learning versus clinical PI-RADS assessment. Radiology 2019, 293, 607–617.

- Liu, S.; Zheng, H.; Feng, Y.; Li, W. Prostate cancer diagnosis using deep learning with 3D multiparametric MRI. In Proceedings of the Medical Imaging 2017: Computer-Aided Diagnosis, Orlando, FL, USA, 11–16 February 2017; SPIE: Bellingham, WA, USA, 2017; Volume 10134, pp. 581–584.

- Wang, X.; Yang, W.; Weinreb, J.; Han, J.; Li, Q.; Kong, X.; Yan, Y.; Ke, Z.; Luo, B.; Liu, T.; et al. Searching for prostate cancer by fully automated magnetic resonance imaging classification: Deep learning versus non-deep learning. Sci. Rep. 2017, 7, 15415.

- De Vente, C.; Vos, P.; Hosseinzadeh, M.; Pluim, J.; Veta, M. Deep learning regression for prostate cancer detection and grading in bi-parametric MRI. IEEE Trans. Biomed. Eng. 2020, 68, 374–383.

- Zhang, W.; Yin, H.; Huang, Z.; Zhao, J.; Zheng, H.; He, D.; Li, M.; Tan, W.; Tian, S.; Song, B. Development and validation of MRI-based deep learning models for prediction of microsatellite instability in rectal cancer. Cancer Med. 2021, 10, 4164–4173.

- Soomro, M.H.; De Cola, G.; Conforto, S.; Schmid, M.; Giunta, G.; Guidi, E.; Neri, E.; Caruso, D.; Ciolina, M.; Laghi, A. Automatic segmentation of colorectal cancer in 3D MRI by combining deep learning and 3D level-set algorithm-a preliminary study. In Proceedings of the 2018 IEEE 4th Middle East Conference on Biomedical Engineering (MECBME), Tunis, Tunisia, 28–30 March 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 198–203.

- Yang, T.; Liang, N.; Li, J.; Yang, Y.; Li, Y.; Huang, Q.; Li, R.; He, X.; Zhang, H. Intelligent imaging technology in diagnosis of colorectal cancer using deep learning. IEEE Access 2019, 7, 178839–178847.

- Zhen, S.H.; Cheng, M.; Tao, Y.B.; Wang, Y.F.; Juengpanich, S.; Jiang, Z.Y.; Jiang, Y.K.; Yan, Y.Y.; Lu, W.; Lue, J.M.; et al. Deep learning for accurate diagnosis of liver tumor based on magnetic resonance imaging and clinical data. Front. Oncol. 2020, 10, 680.

- Velichko, Y.S.; Gennaro, N.; Karri, M.; Antalek, M.; Bagci, U. A Comprehensive Review of Deep Learning Approaches for Magnetic Resonance Imaging Liver Tumor Analysis. Adv. Clin. Radiol. 2023, 5, 1–15.

- Trivizakis, E.; Manikis, G.C.; Nikiforaki, K.; Drevelegas, K.; Constantinides, M.; Drevelegas, A.; Marias, K. Extending 2-D convolutional neural networks to 3-D for advancing deep learning cancer classification with application to MRI liver tumor differentiation. IEEE J. Biomed. Health Inform. 2018, 23, 923–930.

- Hamm, C.A.; Wang, C.J.; Savic, L.J.; Ferrante, M.; Schobert, I.; Schlachter, T.; Lin, M.; Duncan, J.S.; Weinreb, J.C.; Chapiro, J.; et al. Deep learning for liver tumor diagnosis part I: Development of a convolutional neural network classifier for multi-phasic MRI. Eur. Radiol. 2019, 29, 3338–3347.

- Işın, A.; Direkoğlu, C.; Şah, M. Review of MRI-based brain tumor image segmentation using deep learning methods. Procedia Comput. Sci. 2016, 102, 317–324.

- Sajid, S.; Hussain, S.; Sarwar, A. Brain tumor detection and segmentation in MR images using deep learning. Arab. J. Sci. Eng. 2019, 44, 9249–9261.

- Haq, E.U.; Jianjun, H.; Li, K.; Haq, H.U.; Zhang, T. An MRI-based deep learning approach for efficient classification of brain tumors. J. Ambient Intell. Humaniz. Comput. 2021, 14, 6697–6718.

- Paul, J.S.; Plassard, A.J.; Landman, B.A.; Fabbri, D. Deep learning for brain tumor classification. In Proceedings of the Medical Imaging 2017: Biomedical Applications in Molecular, Structural, and Functional Imaging, Orlando, FL, USA, 12–14 February 2017; SPIE: Bellingham, WA, USA, 2017; Volume 10137, pp. 253–268.

- Ranjbarzadeh, R.; Bagherian Kasgari, A.; Jafarzadeh Ghoushchi, S.; Anari, S.; Naseri, M.; Bendechache, M. Brain tumor segmentation based on deep learning and an attention mechanism using MRI multi-modalities brain images. Sci. Rep. 2021, 11, 10930.

- Hashemzehi, R.; Mahdavi, S.J.S.; Kheirabadi, M.; Kamel, S.R. Detection of brain tumors from MRI images base on deep learning using hybrid model CNN and NADE. Biocybern. Biomed. Eng. 2020, 40, 1225–1232.

- Aamir, M.; Rahman, Z.; Dayo, Z.A.; Abro, W.A.; Uddin, M.I.; Khan, I.; Imran, A.S.; Ali, Z.; Ishfaq, M.; Guan, Y.; et al. A deep learning approach for brain tumor classification using MRI images. Comput. Electr. Eng. 2022, 101, 108105.

- Ottom, M.A.; Rahman, H.A.; Dinov, I.D. Znet: Deep learning approach for 2D MRI brain tumor segmentation. IEEE J. Transl. Eng. Health Med. 2022, 10, 1800508.

- Chattopadhyay, A.; Maitra, M. MRI-based brain tumour image detection using CNN based deep learning method. Neurosci. Inform. 2022, 2, 100060.

- Nazir, M.; Shakil, S.; Khurshid, K. Role of deep learning in brain tumor detection and classification (2015 to 2020): A review. Comput. Med. Imaging Graph. 2021, 91, 101940.

- Jyothi, P.; Singh, A.R. Deep learning models and traditional automated techniques for brain tumor segmentation in MRI: A review. Artif. Intell. Rev. 2023, 56, 2923–2969.

- Akinyelu, A.A.; Zaccagna, F.; Grist, J.T.; Castelli, M.; Rundo, L. Brain tumor diagnosis using machine learning, convolutional neural networks, capsule neural networks and vision transformers, applied to MRI: A survey. J. Imaging 2022, 8, 205.

- Arabahmadi, M.; Farahbakhsh, R.; Rezazadeh, J. Deep learning for smart Healthcare–A survey on brain tumor detection from medical imaging. Sensors 2022, 22, 1960.

- Taha, A.M.; Ariffin, D.S.B.B.; Abu-Naser, S.S. A Systematic Literature Review of Deep and Machine Learning Algorithms in Brain Tumor and Meta-Analysis. J. Theor. Appl. Inf. Technol. 2023, 101, 21–36.

- Dubey, A.; Agrawal, S.; Agrawal, V.; Dubey, T.; Jaiswal, A. Breast Cancer and the Brain: A Comprehensive Review of Neurological Complications. Cureus 2023, 15, e48941.

- Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated machine learning: Concept and applications. ACM Trans. Intell. Syst. Technol. (TIST) 2019, 10, 12.

- Chowdhury, A.; Kassem, H.; Padoy, N.; Umeton, R.; Karargyris, A. A review of medical federated learning: Applications in oncology and cancer research. In Proceedings of the International MICCAI Brainlesion Workshop, Virtual Event, 27 September 2021; Springer International Publishing: Cham, Switzerland, 2021; pp. 3–24.

- Yi, L.; Zhang, J.; Zhang, R.; Shi, J.; Wang, G.; Liu, X. SU-Net: An efficient encoder-decoder model of federated learning for brain tumor segmentation. In Proceedings of the International Conference on Artificial Neural Networks, Bratislava, Slovakia, 15–18 September 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 761–773.

- Islam, M.; Reza, M.T.; Kaosar, M.; Parvez, M.Z. Effectiveness of federated learning and CNN ensemble architectures for identifying brain tumors using MRI images. Neural Process. Lett. 2023, 55, 3779–3809.

- Sheller, M.J.; Reina, G.A.; Edwards, B.; Martin, J.; Bakas, S. Multi-institutional deep learning modeling without sharing patient data: A feasibility study on brain tumor segmentation. In Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries: 4th International Workshop, BrainLes 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, 16 September 2018; Revised Selected Papers, Part I 4; Springer International Publishing: Cham, Switzerland, 2019; pp. 92–104.

- Naeem, A.; Anees, T.; Naqvi, R.A.; Loh, W.K. A comprehensive analysis of recent deep and federated-learning-based methodologies for brain tumor diagnosis. J. Pers. Med. 2022, 12, 275.

- Rose, K.; Eldridge, S.; Chapin, L. The Internet of Things: An Overview; The Internet Soc. (ISOC): Reston, VA, USA, 2015; Volume 80, pp. 1–53.

- Vishnu, S.; Ramson, S.J.; Jegan, R. Internet of medical things (IoMT)-An overview. In Proceedings of the 2020 5th International Conference on Devices, Circuits and Systems (ICDCS), Coimbatore, India, 5–6 March 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 101–104.

- Wei, K.; Zhang, L.; Guo, Y.; Jiang, X. Health monitoring based on internet of medical things: Architecture, enabling technologies, and applications. IEEE Access 2020, 8, 27468–27478.

- Kose, G.; Colakoglu, O.E. Health Tourism with Data Mining: Present State and Future Potentials. Int. J. Inf. Commun. Technol. Digit. Converg. 2023, 8, 23–33.

- Li, L.; Fan, Y.; Tse, M.; Lin, K.Y. A review of applications in federated learning. Comput. Ind. Eng. 2020, 149, 106854.

- Bonawitz, K.; Eichner, H.; Grieskamp, W.; Huba, D.; Ingerman, A.; Ivanov, V.; Kiddon, C.; Konečný, J.; Mazzocchi, S.; McMahan, B.; et al. Towards federated learning at scale: System design. Proc. Mach. Learn. Syst. 2019, 1, 374–388.

- Qi, P.; Chiaro, D.; Guzzo, A.; Ianni, M.; Fortino, G.; Piccialli, F. Model aggregation techniques in federated learning: A comprehensive survey. Future Gener. Comput. Syst. 2023, 150, 272–293.

- McMahan, H.B.; Ramage, D.; Talwar, K.; Zhang, L. Learning differentially private recurrent language models. arXiv 2017, arXiv:1710.06963.

- Wink, T.; Nochta, Z. An approach for peer-to-peer federated learning. In Proceedings of the 2021 51st Annual IEEE/IFIP International Conference on Dependable Systems and Networks Workshops (DSN-W), Taipei, Taiwan, 21–24 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 150–157.

- Li, H.; Li, C.; Wang, J.; Yang, A.; Ma, Z.; Zhang, Z.; Hua, D. Review on security of federated learning and its application in healthcare. Future Gener. Comput. Syst. 2023, 144, 271–290.

- Rieke, N.; Hancox, J.; Li, W.; Milletari, F.; Roth, H.R.; Albarqouni, S.; Bakas, S.; Galtier, M.N.; Landman, B.A.; Maier-Hein, K.; et al. The future of digital health with federated learning. npj Digit. Med. 2020, 3, 119.

- Albawi, S.; Mohammed, T.A.; Al-Zawi, S. Understanding of a convolutional neural network. In Proceedings of the 2017 International Conference on Engineering and Technology (ICET), Antalya, Turkey, 21–23 August 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6.

- Sewak, M.; Karim, M.R.; Pujari, P. Practical Convolutional Neural Networks: Implement Advanced Deep Learning Models Using Python; Packt Publishing Ltd.: Birmingham, UK, 2018.

- Patrick, M.K.; Adekoya, A.F.; Mighty, A.A.; Edward, B.Y. Capsule networks–a survey. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 1295–1310.

- Sabour, S.; Frosst, N.; Hinton, G.E. Dynamic routing between capsules. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; p. 30.

- Hinton, G.E.; Krizhevsky, A.; Wang, S.D. Transforming auto-encoders. In Artificial Neural Networks and Machine Learning–ICANN 2011, Proceedings of the 21st International Conference on Artificial Neural Networks, Espoo, Finland, 14–17 June 2011; Proceedings, Part I 21; Springer: Berlin/Heidelberg, Germany, 2011; pp. 44–51.

- Cheng, J.; Huang, W.; Cao, S.; Yang, R.; Yang, W.; Yun, Z.; Wang, Z.; Feng, Q. Enhanced performance of brain tumor classification via tumor region augmentation and partition. PLoS ONE 2015, 10, e0140381.

- Beutel, D.J.; Topal, T.; Mathur, A.; Qiu, X.; Fernandez-Marques, J.; Gao, Y.; Sani, L.; Li, K.H.; Parcollet, T.; De Gusmão, P.P.; et al. Flower: A friendly federated learning research framework. arXiv 2020, arXiv:2007.14390.

- Shekar, B.H.; Dagnew, G. Grid search-based hyperparameter tuning and classification of microarray cancer data. In Proceedings of the 2019 Second International Conference on Advanced Computational and Communication Paradigms (ICACCP), Gangtok, India, 25–28 February 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–8.

- Chaki, J.; Woźniak, M. A deep learning based four-fold approach to classify brain MRI: BTSCNet. Biomed. Signal Process. Control 2023, 85, 104902.

- Ruba, T.; Tamilselvi, R.; Beham, M.P. Brain tumor segmentation using JGate-AttResUNet–A novel deep learning approach. Biomed. Signal Process. Control 2023, 84, 104926.

- Bai, L.; Hu, H.; Ye, Q.; Li, H.; Wang, L.; Xu, J. Membership Inference Attacks and Defenses in Federated Learning: A Survey. ACM Comput. Surv. 2024, 57, 89.

- Xia, G.; Chen, J.; Yu, C.; Ma, J. Poisoning attacks in federated learning: A survey. IEEE Access 2023, 11, 10708–10722.

- Huang, Y.; Gupta, S.; Song, Z.; Li, K.; Arora, S. Evaluating gradient inversion attacks and defenses in federated learning. Adv. Neural Inf. Process. Syst. 2021, 34, 7232–7241.

- Chen, W.N.; Choquette-Choo, C.A.; Kairouz, P. Communication efficient federated learning with secure aggregation and differential privacy. In Proceedings of the NeurIPS 2021 Workshop Privacy in Machine Learning, Turku, Finland, 6–14 December 2021.

- Boenisch, F.; Dziedzic, A.; Schuster, R.; Shamsabadi, A.S.; Shumailov, I.; Papernot, N. Reconstructing individual data points in federated learning hardened with differential privacy and secure aggregation. In Proceedings of the 2023 IEEE 8th European Symposium on Security and Privacy (EuroS&P), Delft, The Netherlands, 3–7 July 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 241–257.

- Adnan, M.; Kalra, S.; Cresswell, J.C.; Taylor, G.W.; Tizhoosh, H.R. Federated learning and differential privacy for medical image analysis. Sci. Rep. 2022, 12, 1953.

| Parameter | Values |
|---|---|
| Number of Convolutional Layers | {3, 4, 5, 7} |
| Number of Capsule Layers | {3, 4, 5, 6, 7} |
| Activation | {ReLU, tanh, softmax} |
| Kernel Size | {3 × 3, 4 × 4, 5 × 5, 7 × 7, 8 × 8, 9 × 9} |
| Kernel Initializer | {Uniform, Normal} |
| Stride | {1, 2, 3} |
| Routing | {1, 3, 5} |
| Batch Size | {1, 10, 50, 75} |
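The table lists the candidate values for each hyperparameter. Assuming these values were combined exhaustively in a grid search (a grid-search tuning study appears among the references), the search space can be enumerated as in the sketch below. The dictionary keys are illustrative names, and `evaluate` is a hypothetical callback that would train and validate one CapsNet configuration and return a score. The count shows the size of a full grid: 25,920 configurations, each of which would require a complete training run.

```python
from itertools import product

# Candidate values copied from the hyperparameter table.
search_space = {
    "conv_layers": [3, 4, 5, 7],
    "capsule_layers": [3, 4, 5, 6, 7],
    "activation": ["relu", "tanh", "softmax"],
    "kernel_size": [3, 4, 5, 7, 8, 9],     # square kernels, k x k
    "kernel_initializer": ["uniform", "normal"],
    "stride": [1, 2, 3],
    "routing": [1, 3, 5],
    "batch_size": [1, 10, 50, 75],
}

def grid(space):
    """Yield every combination of the candidate values as a dict."""
    keys = list(space)
    for values in product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))

def grid_search(space, evaluate):
    """Return the configuration with the highest score from `evaluate`."""
    best_cfg, best_score = None, float("-inf")
    for cfg in grid(space):
        score = evaluate(cfg)
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score

n_configs = sum(1 for _ in grid(search_space))
print(n_configs)  # 25920
```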

| Scenario | Delay Rate * | Training Time ** |
|---|---|---|
| 5 healthcare institutions | 1.00 | 124 |
| 5 healthcare institutions | 1.50 | 141 |
| 5 healthcare institutions | 2.00 | 168 |
| 5 healthcare institutions | 2.50 | 196 |
| 10 healthcare institutions | 2.00 | 137 |
| 10 healthcare institutions | 2.50 | 157 |
| 10 healthcare institutions | 3.00 | 179 |
| 10 healthcare institutions | 3.50 | 212 |
| 15 healthcare institutions | 2.50 | 133 |
| 15 healthcare institutions | 3.00 | 156 |
| 15 healthcare institutions | 3.50 | 183 |
| 15 healthcare institutions | 4.00 | 207 |

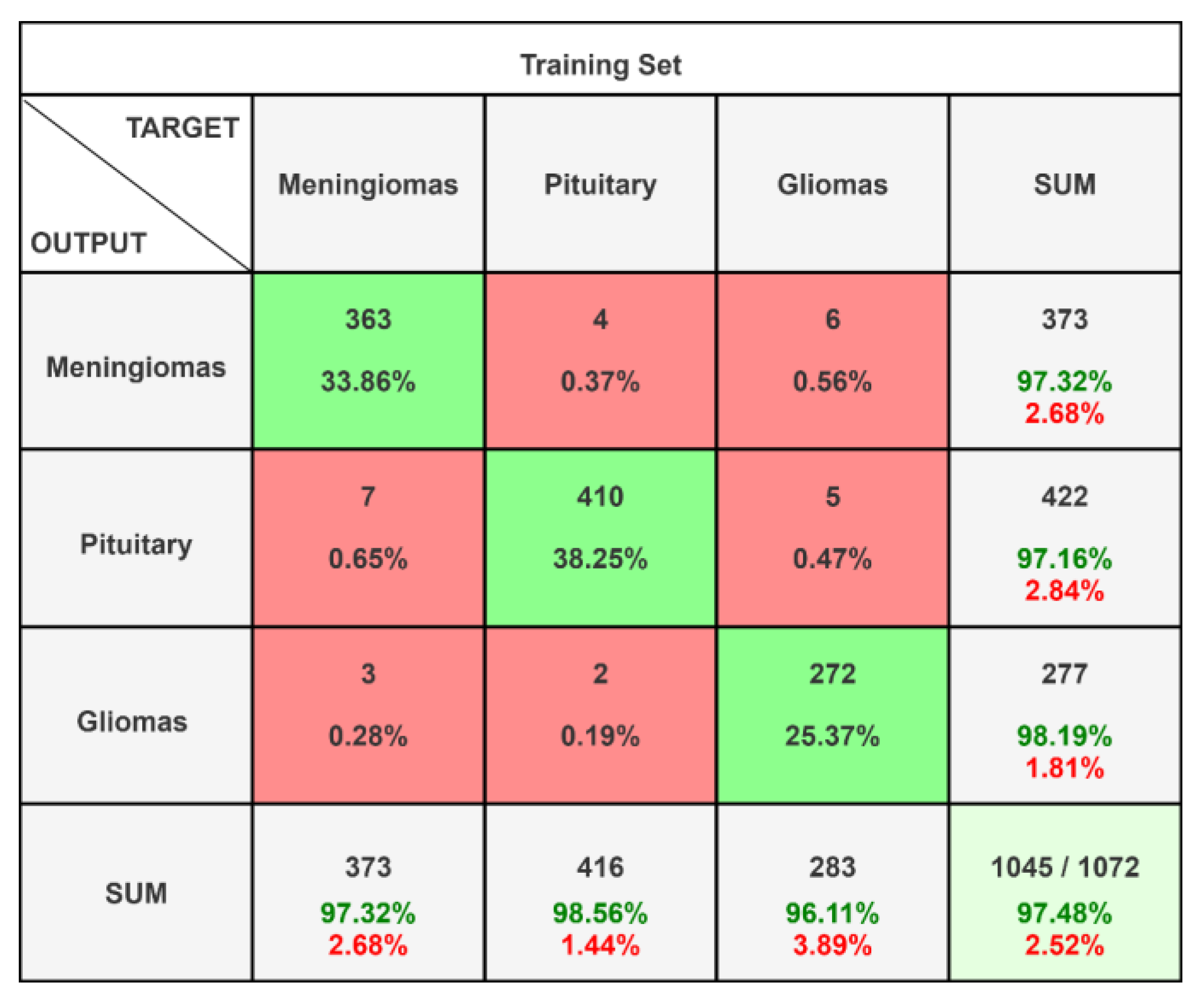

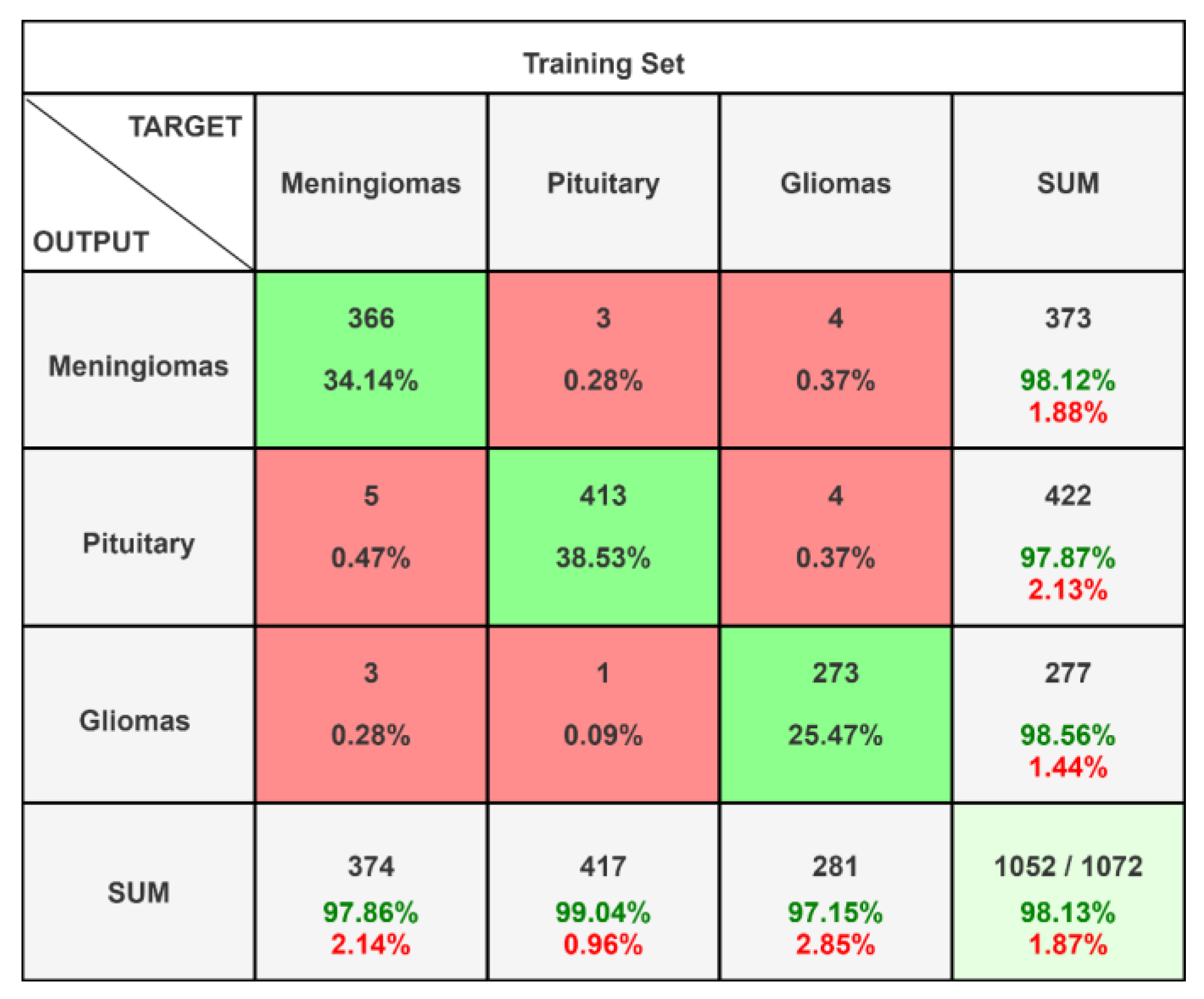

| Scenario | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| 5 healthcare institutions | 0.9748 | 0.9612 | 0.9688 | 0.9642 |
| 10 healthcare institutions | 0.9813 | 0.9705 | 0.9648 | 0.9671 |
| 15 healthcare institutions | 0.9888 | 0.9791 | 0.9819 | 0.9762 |
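The scenario table reports accuracy, precision, recall, and F1-score without stating the averaging scheme. The sketch below assumes macro-averaging over the three tumor classes, computed from a confusion matrix; the matrix in the usage lines is invented purely for illustration and is not data from this study. Under macro-averaging, the reported F1-score is the mean of the per-class F1 values, which generally differs from the harmonic mean of macro precision and macro recall.

```python
import numpy as np

def macro_metrics(cm):
    """Accuracy and macro-averaged precision, recall, and F1.

    cm[i, j] counts samples of true class i predicted as class j.
    Assumes every class is both present and predicted at least once
    (otherwise a per-class division by zero occurs).
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    precision = tp / cm.sum(axis=0)      # column sums: predicted counts
    recall = tp / cm.sum(axis=1)         # row sums: true counts
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = tp.sum() / cm.sum()
    return accuracy, precision.mean(), recall.mean(), f1.mean()

# Hypothetical confusion matrix (rows/cols: meningioma, pituitary, glioma).
cm = [[90, 5, 5],
      [0, 100, 0],
      [10, 0, 90]]
acc, prec, rec, f1 = macro_metrics(cm)
```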

| Class | Precision | Recall | F1-Score | AUC-ROC | AUC-PRC |
|---|---|---|---|---|---|
| Meningiomas | 0.9732 | 0.9732 | 0.9732 | 0.9812 | 0.9709 |
| Pituitary | 0.9716 | 0.9856 | 0.9785 | 0.9910 | 0.9817 |
| Gliomas | 0.9819 | 0.9611 | 0.9714 | 0.9808 | 0.9713 |

| Class | Precision | Recall | F1-Score | AUC-ROC | AUC-PRC |
|---|---|---|---|---|---|
| Meningiomas | 0.9812 | 0.9786 | 0.9799 | 0.9731 | 0.9784 |
| Pituitary | 0.9787 | 0.9904 | 0.9845 | 0.9750 | 0.9837 |
| Gliomas | 0.9856 | 0.9715 | 0.9785 | 0.9733 | 0.9761 |

| Class | Precision | Recall | F1-Score | AUC-ROC | AUC-PRC |
|---|---|---|---|---|---|
| Meningiomas | 0.9793 | 0.9919 | 0.9906 | 0.9771 | 0.9893 |
| Pituitary | 0.9882 | 0.9882 | 0.9882 | 0.9760 | 0.9861 |
| Gliomas | 0.9892 | 0.9856 | 0.9874 | 0.9763 | 0.9855 |
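The per-class tables report AUC-ROC alongside AUC-PRC, presumably in a one-vs-rest setting for each tumor class. The NumPy sketch below computes one-vs-rest AUC-ROC through its equivalence to the Mann-Whitney statistic: the probability that a randomly chosen positive is scored above a randomly chosen negative, with ties counted as one half. The one-vs-rest scheme and the probability matrix in the usage lines are assumptions for illustration; AUC-PRC would instead be derived from the precision-recall curve. The pairwise comparison needs O(n_pos × n_neg) memory, which is acceptable for a sketch but not for very large test sets.

```python
import numpy as np

def auc_roc(scores, labels):
    """Binary AUC-ROC as P(score_pos > score_neg), ties counted as 1/2."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    greater = (pos[:, None] > neg[None, :]).sum()   # all positive/negative pairs
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))

def one_vs_rest_auc(prob, y, n_classes):
    """Per-class AUC-ROC: class k (positive) vs. all other classes."""
    prob, y = np.asarray(prob), np.asarray(y)
    return [float(auc_roc(prob[:, k], y == k)) for k in range(n_classes)]

# Hypothetical predicted probabilities for 4 scans over 3 classes.
prob = np.array([[0.8, 0.1, 0.1],
                 [0.2, 0.7, 0.1],
                 [0.1, 0.2, 0.7],
                 [0.6, 0.3, 0.1]])
y = np.array([0, 1, 2, 0])
per_class_auc = one_vs_rest_auc(prob, y, n_classes=3)
```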

| Scenario | EfficientNet-B0, ResNet50 [55] | Znet (DNN) [56] | CNN [57] | BTSCNet [90] | JGate-AttResUNet [91] | CapsNet (This Study) |
|---|---|---|---|---|---|---|
| 5 healthcare institutions | 0.9621 | 0.9478 | 0.9312 | 0.9643 | 0.9811 | 0.9748 |
| 10 healthcare institutions | 0.9432 | 0.9317 | 0.9356 | 0.9614 | 0.9674 | 0.9813 |
| 15 healthcare institutions | 0.9682 | 0.9625 | 0.9187 | 0.9726 | 0.9772 | 0.9888 |

| No | Statement | Likert 1 | Likert 2 | Likert 3 | Likert 4 | Likert 5 | Average |
|---|---|---|---|---|---|---|---|
| 1 | “I found this system useful.” | 0 | 0 | 1 | 2 | 7 | 4.6 |
| 2 | “The system is successful enough in diagnosing brain tumors.” | 0 | 0 | 2 | 1 | 7 | 4.5 |
| 3 | “The IoMT infrastructure of the system allows a collaborative application among different institutions.” | 0 | 1 | 1 | 2 | 6 | 4.3 |
| 4 | “I found the usage period boring.” | 7 | 2 | 1 | 0 | 0 | 1.4 |
| 5 | “I think the system can be expanded to alternative healthcare applications apart from diagnosis.” | 0 | 0 | 0 | 2 | 8 | 4.8 |
| 6 | “The DL in the system is effective in MRI analysis.” | 0 | 0 | 2 | 2 | 6 | 4.4 |
| 7 | “The AI solution can be used for decision support on cancer research.” | 0 | 0 | 0 | 2 | 8 | 4.8 |
| 8 | “The system is successful enough in ensuring data privacy.” | 0 | 0 | 1 | 1 | 8 | 4.7 |
| 9 | “The whole system has a good performance in communication and diagnosis flow.” | 0 | 0 | 1 | 3 | 6 | 4.5 |
| 10 | “This system can be used in real applications within healthcare institutions.” | 0 | 0 | 0 | 3 | 7 | 4.7 |
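The Average column equals the count-weighted mean score, with ten respondents per statement in this table; for statement 1, (3·1 + 4·2 + 5·7)/10 = 4.6. A small sketch of that calculation follows, with illustrative function names. Negatively worded statements such as statement 4 are reported on the raw scale, so a low average there is the favorable outcome; if such items were combined with positively worded ones into a single score, they would first need reverse-coding (score k mapped to 6 − k), which the `reverse_code` helper shows.

```python
def likert_mean(counts):
    """Mean score given response counts for scores 1, 2, 3, 4, 5."""
    n = sum(counts)
    return sum(score * c for score, c in enumerate(counts, start=1)) / n

def reverse_code(counts):
    """Counts for a negatively worded item after mapping score k to 6 - k."""
    return list(reversed(counts))

# Two rows taken from the first user-evaluation table.
useful = [0, 0, 1, 2, 7]   # "I found this system useful."
boring = [7, 2, 1, 0, 0]   # "I found the usage period boring."
print(round(likert_mean(useful), 1))                 # 4.6
print(round(likert_mean(boring), 1))                 # 1.4
print(round(likert_mean(reverse_code(boring)), 1))   # 4.6
```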

| No | Statement | Likert 1 | Likert 2 | Likert 3 | Likert 4 | Likert 5 | Average |
|---|---|---|---|---|---|---|---|
| 1 | “The IoMT infrastructure of the system allows a collaborative application among different institutions.” | 0 | 0 | 2 | 1 | 7 | 4.5 |
| 2 | “The system is successful enough in diagnosing brain tumors.” | 0 | 1 | 1 | 1 | 7 | 4.4 |
| 3 | “I found this system useful.” | 0 | 0 | 0 | 1 | 9 | 4.9 |
| 4 | “I think the system can be expanded to alternative healthcare applications apart from diagnosis.” | 0 | 0 | 0 | 3 | 7 | 4.7 |
| 5 | “The DL in the system is effective in MRI analysis.” | 0 | 0 | 1 | 2 | 7 | 4.6 |
| 6 | “I think this system cannot support cancer research.” | 7 | 1 | 2 | 0 | 0 | 1.5 |
| 7 | “This system can help me with efficiency and better report writing.” | 0 | 0 | 1 | 1 | 8 | 4.7 |
| 8 | “The system is successful enough in ensuring data privacy.” | 0 | 0 | 0 | 2 | 8 | 4.8 |
| 9 | “This system can be used in real applications within healthcare institutions.” | 0 | 1 | 1 | 2 | 6 | 4.3 |
| 10 | “This system can help me in improving efficiency for screening.” | 0 | 1 | 1 | 1 | 7 | 4.4 |
| 11 | “This system can help me in better report writing.” | 0 | 1 | 0 | 1 | 8 | 4.6 |

| No | Statement | Likert 1 | Likert 2 | Likert 3 | Likert 4 | Likert 5 | Average |
|---|---|---|---|---|---|---|---|
| 1 | “The IoMT infrastructure of the system allows a collaborative application among different institutions.” | 0 | 0 | 1 | 1 | 8 | 4.7 |
| 2 | “I found the usage period boring.” | 9 | 1 | 0 | 0 | 0 | 1.1 |
| 3 | “I found this system useful.” | 1 | 1 | 2 | 0 | 8 | 4.9 |
| 4 | “This system can be used in real applications within healthcare institutions.” | 0 | 0 | 1 | 1 | 8 | 4.7 |
| 5 | “I think this system cannot be used effectively by healthcare staff.” | 8 | 1 | 1 | 0 | 0 | 1.3 |
| 6 | “The system is successful enough in ensuring data privacy.” | 0 | 0 | 1 | 0 | 9 | 4.8 |
| 7 | “The whole system has a good performance in communication and diagnosis flow.” | 0 | 1 | 2 | 1 | 6 | 4.2 |
| 8 | “The system can optimize my tasks regarding patient records.” | 0 | 0 | 1 | 2 | 7 | 4.6 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rodriguez-Aguilar, R.; Marmolejo-Saucedo, J.-A.; Köse, U. Federated Learning Based on an Internet of Medical Things Framework for a Secure Brain Tumor Diagnostic System: A Capsule Networks Application. Mathematics 2025, 13, 2393. https://doi.org/10.3390/math13152393

Rodriguez-Aguilar R, Marmolejo-Saucedo J-A, Köse U. Federated Learning Based on an Internet of Medical Things Framework for a Secure Brain Tumor Diagnostic System: A Capsule Networks Application. Mathematics. 2025; 13(15):2393. https://doi.org/10.3390/math13152393

Chicago/Turabian Style: Rodriguez-Aguilar, Roman, Jose-Antonio Marmolejo-Saucedo, and Utku Köse. 2025. "Federated Learning Based on an Internet of Medical Things Framework for a Secure Brain Tumor Diagnostic System: A Capsule Networks Application" Mathematics 13, no. 15: 2393. https://doi.org/10.3390/math13152393

APA Style: Rodriguez-Aguilar, R., Marmolejo-Saucedo, J.-A., & Köse, U. (2025). Federated Learning Based on an Internet of Medical Things Framework for a Secure Brain Tumor Diagnostic System: A Capsule Networks Application. Mathematics, 13(15), 2393. https://doi.org/10.3390/math13152393