Abstract

This paper addresses the stability of stochastic delayed recurrent neural networks (SDRNNs), identifying challenges in existing scalar methods, which suffer from strong assumptions and limited applicability. Three key innovations are introduced: (1) weakening noise perturbation conditions by extending diagonal matrix assumptions to non-negative definite matrices; (2) establishing criteria for both mean-square exponential stability and almost sure exponential stability in the absence of input; (3) directly handling complex structures like time-varying delays through matrix analysis. Compared with prior studies, this approach yields broader stability conclusions under weaker conditions, with numerical simulations validating the theoretical effectiveness.

Keywords:

mean-square exponential input-to-state stability; mean-square exponential stability; almost sure exponential stability; Lyapunov functional; martingale convergence theorem

MSC:

35Q93; 47A08; 47G10; 47J05; 60G52; 65Q20

1. Introduction

The concept of stochastic delayed recurrent neural networks (SDRNNs) emerged as a natural extension of traditional recurrent neural networks (RNNs), incorporating stochastic processes and time delays to model more complex and realistic dynamical systems [1,2,3,4,5]. The utility of SDRNNs extends across a broad spectrum of disciplines. For instance, SDRNNs have achieved outstanding results in pattern recognition domains, including speech recognition, handwriting analysis and image classification. Moreover, their inherent stochastic characteristics enable them to adapt and generalize effectively under noisy and uncertain conditions. In addition, SDRNNs are well suited to handling time series data with complex temporal relationships. As a result, they have become indispensable for critical applications like stock market trend prediction, weather forecasting and advanced financial market analysis [6,7,8].

The stability of a dynamical system refers to the network’s ability to reach a steady state or to converge to a bounded trajectory over time, despite the presence of disturbances or initial conditions. In the context of SDRNNs, which include both stochastic processes and time delays, stability is particularly important. For instance, stable SDRNNs exhibit robustness to noise, enabling them to handle the inherent randomness of real-world data and maintain consistent performance.

Conventional stability involves the network’s states progressively nearing an equilibrium point as time approaches infinity, encompassing types such as asymptotic stability [9,10,11,12], exponential stability [13,14,15,16,17,18,19,20,21,22,23] and the probabilistic variant of almost sure exponential stability [24,25]. On the other hand, there also exist alternative stability definitions, such as input-to-state stability. Input-to-state stability focuses on the network’s ability to respond to inputs while preserving boundedness in the face of external disturbances, as detailed in [26,27,28,29,30,31,32,33,34,35].

However, a critical analysis of the literature reveals significant limitations in conventional stability studies. Most existing works rely on scalar approaches, which impose restrictive assumptions (e.g., positive coefficients and diagonal noise structures) that fail to capture the complexity of coupled dynamics in SDRNNs. For instance, the scalar-based mean-square exponential input-to-state stability analysis in [36] assumes Lipschitz noise perturbations with positive diagonal matrices, severely limiting its applicability to systems with non-diagonal or time-varying noise. Additionally, few studies address the coexistence of multiple stabilities (e.g., mean-square and almost sure exponential stability) in input-free systems, leaving a crucial gap in understanding robust dynamics in complex stochastic environments.

To bridge the gaps in the existing literature (e.g., scalar approaches in [36] requiring diagonal noise matrices and positive coefficients), this study introduces a matrix-theory framework. The main contributions, distinguished from prior works, are as follows:

- Assumption relaxation: The authors of [36] assume Lipschitz noise with positive diagonal matrices, while we generalize to non-negative definite matrices (Assumptions 1 and 2), expanding stability analysis to broader noise structures;

- Multiple stabilities coexistence: Unlike single-stability analyses, we concurrently prove mean-square and almost sure exponential stabilities for input-free systems (Theorems 6–8). As far as we are aware, this has not been reported in other studies;

- Complex dynamics handling: Scalar methods struggle with time-varying delays, but our matrix approach directly addresses such structures without equivalent scalar representation.

The structure of this paper is outlined as follows: Section 2 begins with the introduction of essential definitions and preliminaries concerning stochastic delayed recurrent neural networks, placing particular emphasis on the significance of stability analysis. It also addresses the constraints of scalar methods and underscores the requirement for a matrix-based strategy. In Section 3, the primary theorems of the paper are presented. Section 4 offers two numerical examples, thereby illustrating the effectiveness of the obtained results. Section 5 provides a detailed exposition of the proof procedures for the aforementioned theorems. Ultimately, Section 6 serves as the conclusion of the paper, where we summarize our contributions.

2. Model Description and Problem Formulation

Zhu et al. considered the mean-square exponential input-to-state stability of SDRNNs in [36]:

for any with i ranging from 1 to n.

Firstly, an explanation of the functions appearing in Itô-type Equation (1) is provided. denotes the state variable of the neuron at a given time t. Moreover, , and represent the activation functions of the neuron at the same time instance. Additionally, the function signifies the regulatory input for the neuron at that moment, and belongs to , where denotes the space of essentially bounded measurable functions satisfying .

Furthermore, the stochastic term , which is a function from to , is Borel-measurable. Let be a complete probability space where the filtration is non-decreasing, contains all -null sets and is naturally generated by the underlying Wiener processes , see [37]. Note that both constant and time-varying delays are considered simultaneously. The constant delay typically corresponds to the fixed physical transmission delay in the system, while the time-varying delay is used to describe the delay factors that fluctuate over time in the system. We assume that is differentiable and fulfills and , with and being positive constants.

Next, we provide an explanation for the parameters within Equation (1). The coefficient signifies the intensity of the self-feedback link for the unit. The coefficients , and denote the influence strengths of the unit on the unit at the moment t.

Finally, we introduce some notations that will be used throughout this paper. is defined as the collection of essentially bounded functions from the interval into , satisfying . We use to denote the set of continuous functions over into , equipped with . The notation refers to the collection of all -measurable random variables that take values in and satisfy , with denoting the expectation operator with respect to the probability measure .

Remark 1.

It is important to observe that recasting Equation (1) into the vector format (2) constitutes more than an equivalent formulation. The vector notation facilitates a more relaxed discussion of the general forms of functions and For instance, we can broaden the condition of to

and the integrity of all subsequent discussions remains unaffected. In such cases, discussing the problem using the component form becomes inconvenient. This relaxation suggests that the scope of our work extends to applications beyond the realm of neural networks.

We will also consider system (2) with In this case, it simplifies to the following system

It is crucial to note that Equations (2) and (3) are Itô-type stochastic differential equations, consistent with Equation (1). Below, we present several definitions of stability for the trivial solution of Equations (2) and (3).

Definition 1.

The trivial solution of system (2) is mean-square exponentially input-to-state-stable if, for any and , there exist positive scalars such that

Definition 2.

The trivial solution of system (3) is mean-square exponentially stable if, for all , there exist positive scalars such that

Definition 3.

The trivial solution of system (3) is almost surely exponentially stable if, for each , there exists a positive scalar γ such that

Throughout the paper, we adhere to foundational assumptions as follows.

Assumption 1.

Assume that and there exist positive scalars such that for any ,

Furthermore, we assume that there exist symmetric nonnegative-definite matrices such that

Assumption 2.

Assume that there exist symmetric matrices and a symmetric positive definite matrix P such that are non-positive definite with

Remark 2.

It follows from Assumption 1 that for any ,

Remark 3.

In this study, we assume that the matrices in Equation (5) are non-negative definite. In comparison, the assumption made in [36] only considers the case where these matrices are diagonal. The reader is referred to [36] for the requirement

for any , and are defined to be nonnegative constants.

Remark 4.

Remark 5.

Pertaining to Assumption 2, the following question naturally emerges: given an matrix A, what criteria should be applied to select symmetric matrices and such that the block matrix

is non-positive definite? By Lemma A1 in Appendix A, in order for the matrices in Assumption 2 to be non-positive definite, the corresponding matrices must be non-positive definite where .

Remark 6.

Under Assumption 2, let denote the smallest eigenvalues of , respectively. Then we derive from Remark 5

3. Main Results

We begin by presenting the theorem on mean-square exponential input-to-state stability for Equation (1) as detailed in [36].

Assumption 3

([36]). Assume that

for any , and are defined to be positive constants.

Assumption 4

([36]). Assume that

for any , and are defined to be nonnegative constants.

Assumption 5

([36]). .

Theorem 4

([36]). If Assumptions 3–5 are satisfied, the trivial solution of system (1) is mean-square exponentially input-to-state-stable, provided that there exist positive scalars λ, , and for such that

Subsequently, we introduce the stability criteria of this paper and compare them with the results of Theorem 4. To establish the next theorems, we define the following matrices

where are presented in Remark 6 and I represents the identity matrix.

Theorem 5.

If Assumptions 1 and 2 are fulfilled, the trivial solution of system (2) is mean-square exponentially input-to-state-stable if there exist positive scalars λ and positive definite matrices such that are all non-positive definite.

Remark 7.

If the matrices in Assumption 1 and in Theorem 5 are diagonal, it is readily seen that Theorem 5 reduces to Theorem 4. This implies that our theorem encompasses the results of [36].

On the other hand, if , Theorem 5 reduces to the mean-square exponential stability of system (3). This reduction is stated as follows.

Theorem 6.

If Assumptions 1 and 2 are satisfied, the trivial solution of system (3) is mean-square exponentially stable if there exist positive scalars λ and positive definite matrices such that are all non-positive definite.

Theorem 7.

If Assumptions 1 and 2 are fulfilled, the trivial solution of system (3) is almost surely exponentially stable if there exist positive scalars λ and positive definite matrices such that is non-positive definite. To be exact, we have

Remark 8.

Theorems 6 and 7 provide conditions for the mean-square exponential stability and the almost sure exponential stability, respectively, of the trivial solution of Equation (3). It is well known that mean-square exponential stability implies almost sure exponential stability under certain conditions. A comparison of the two theorems further corroborates this fact.
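The difference between the two stability notions can be seen on a scalar linear test equation, which is much simpler than system (3) and is used here purely as an illustration. The following minimal Python sketch assumes the equation dx = a x dt + b x dW with hypothetical coefficients `a` and `b`. Its exact solution x(t) = x0 exp((a − b²/2)t + bW(t)) gives the sample Lyapunov exponent a − b²/2, while the second moment satisfies E|x(t)|² = x0² exp((2a + b²)t).

```python
import numpy as np

def sample_lyapunov_exponent(a, b, x0=1.0, T=1000.0, n_steps=100_000, seed=0):
    """Return (1/T) * log|x(T)| for dx = a x dt + b x dW, using the exact solution."""
    rng = np.random.default_rng(seed)
    dt = T / n_steps
    # W(T) built as a sum of independent N(0, dt) increments
    w_T = rng.normal(0.0, np.sqrt(dt), size=n_steps).sum()
    # log|x(T)| = log|x0| + (a - b^2/2) T + b W(T)
    log_abs_x = np.log(abs(x0)) + (a - 0.5 * b**2) * T + b * w_T
    return log_abs_x / T

# Hypothetical coefficients, chosen only for illustration
a, b = 0.3, 1.0
estimate = sample_lyapunov_exponent(a, b)   # one sample path
almost_sure_rate = a - 0.5 * b**2           # limit of (1/t) log|x(t)| a.s.
mean_square_rate = 2 * a + b**2             # growth rate of E|x(t)|^2

print(f"estimated exponent : {estimate:.3f}")
print(f"a - b^2/2          : {almost_sure_rate:.3f}")
print(f"2a + b^2           : {mean_square_rate:.3f}")
```

With these coefficients the almost sure exponent is negative while the second moment grows, so almost sure exponential stability alone does not give mean-square exponential stability; only the direction stated in Remark 8 can be expected to hold.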

Below, we present the conditions under which the trivial solution of Equation (3) satisfies both types of stability.

Theorem 8.

If Assumptions 1 and 2 are fulfilled, the trivial solution of system (3) is both mean-square exponentially stable and almost surely exponentially stable if there exist positive scalars λ and positive definite matrices such that are non-positive definite.

Remark 9.

In Theorems 6–8, we obtain several stability criteria for system (3). By choosing an appropriate Lyapunov–Krasovskii functional and carefully applying stochastic analysis theory, we establish both mean-square exponential stability and almost sure exponential stability for SDRNNs with no input. As far as we are aware, this has not been reported in other studies.

4. Illustrative Examples

In this section, we will present simulation experiments in two-dimensional and three-dimensional spaces. Numerical solutions were obtained via the Euler–Maruyama scheme with fixed step-size . The strong convergence order of 0.5 under global Lipschitz conditions was first proven by Gikhman and Skorochod [38]. We note that more advanced methods exist, such as the stochastic balanced drift-implicit Theta methods [39], which may offer improved stability for stiff systems. Reference [40] provides additional implementation insights for stochastic simulation.
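To make the simulation procedure concrete, here is a minimal Python sketch of the Euler–Maruyama scheme for a delayed stochastic network. It is written under several assumptions: a single constant delay, tanh activations, and a diagonal state-dependent noise term. The function name `euler_maruyama_delay` and all coefficient values are hypothetical and are not the parameters of Examples 1 and 2.

```python
import numpy as np

def euler_maruyama_delay(C, A, B, sigma, tau, x_init, T, dt, seed=0):
    """Simulate dx = [-C x + A tanh(x) + B tanh(x(t - tau))] dt + sigma(x, x(t - tau)) dW."""
    rng = np.random.default_rng(seed)
    n = len(x_init)
    lag = int(round(tau / dt))       # delay expressed in time steps
    n_steps = int(round(T / dt))
    # Rows 0..lag store the (constant) initial history on [-tau, 0]
    x = np.empty((lag + n_steps + 1, n))
    x[: lag + 1] = x_init
    for k in range(lag, lag + n_steps):
        x_now, x_del = x[k], x[k - lag]
        drift = -C @ x_now + A @ np.tanh(x_now) + B @ np.tanh(x_del)
        dW = rng.normal(0.0, np.sqrt(dt), size=n)   # Wiener increments
        x[k + 1] = x_now + drift * dt + sigma(x_now, x_del) @ dW
    t = np.arange(-lag, n_steps + 1) * dt
    return t, x

# Hypothetical 2-D parameters (assumptions, not taken from the examples)
C = np.diag([4.0, 4.0])
A = np.array([[0.2, -0.1], [0.1, 0.2]])
B = np.array([[0.1, 0.1], [-0.1, 0.1]])
sigma = lambda x, xd: 0.2 * np.diag(x) + 0.1 * np.diag(xd)

t, x = euler_maruyama_delay(C, A, B, sigma, tau=0.5,
                            x_init=np.array([1.0, -1.0]), T=10.0, dt=0.01)
print(np.linalg.norm(x[0]), np.linalg.norm(x[-1]))
```

A time-varying delay could be handled in the same loop by recomputing the lag index at every step, at the cost of interpolating the stored history when the delay is not a multiple of the step size.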

Example 1.

(2-D case) Consider the 2-D SDRNNs (2) with

- (1) Select the following functions and parameters: With these choices, Assumption 1 is satisfied.

- (2) Define matrices as follows with it can be obtained by Definition (6) that It is straightforward to verify that are non-positive definite, which means that Assumption 2 is satisfied.

- (3) It follows from Definition (8) that It is straightforward to demonstrate that are non-positive definite.

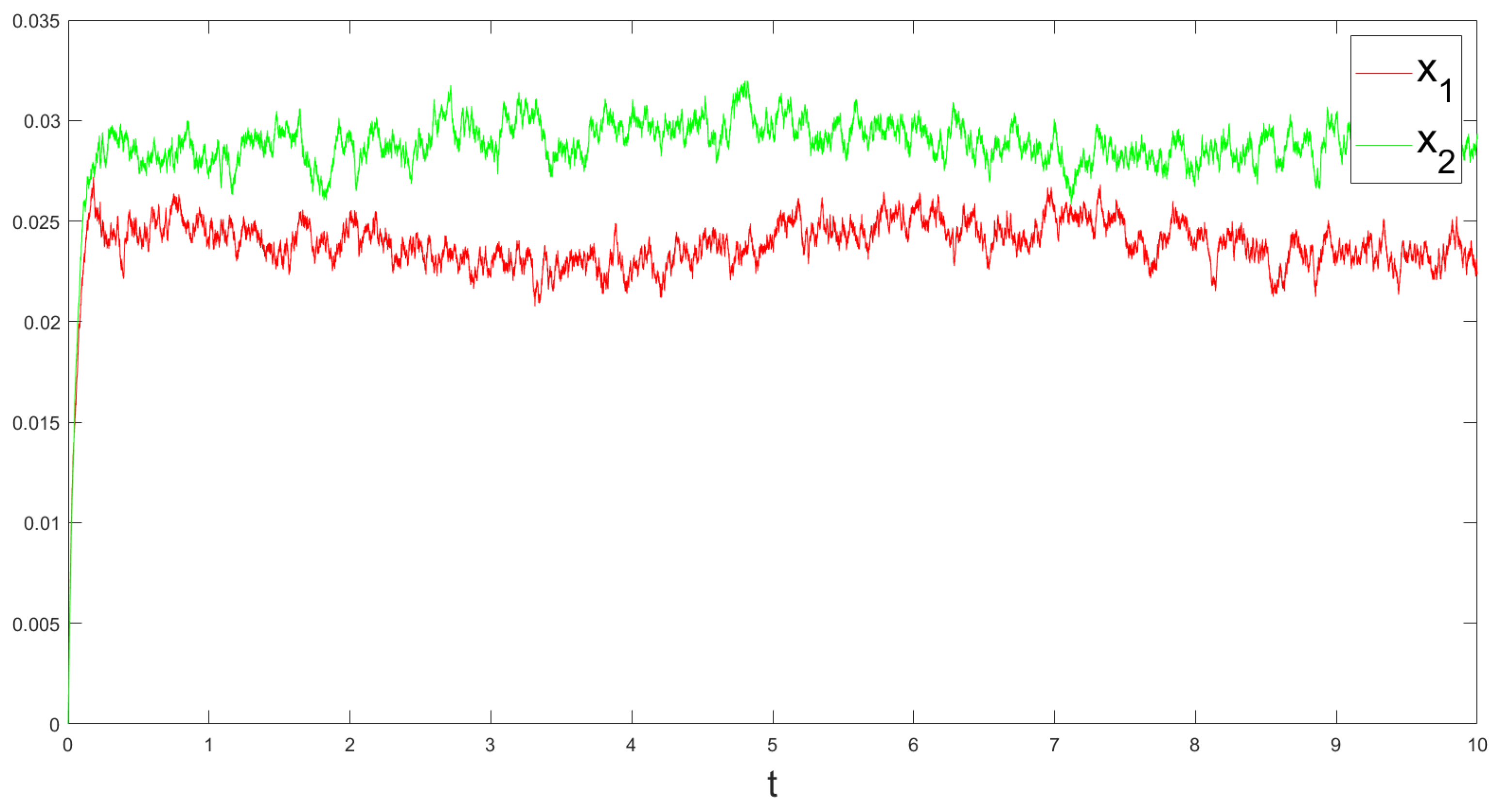

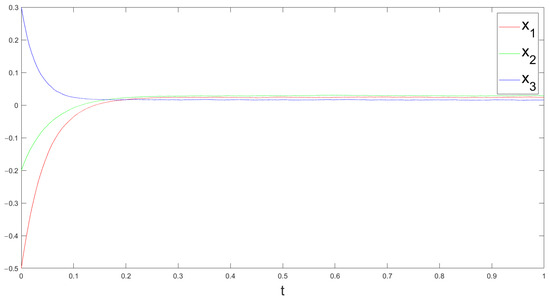

In summary, for the parameters considered here, the requirements of Theorem 5 are met, thereby ensuring the mean-square exponential input-to-state stability of the solution to Equation (2). The numerical solutions are presented in Figure 1, offering a graphical demonstration that supports the stability of the solution. As observed, the trajectories remain within certain bounds, which is consistent with input-to-state stability. Nevertheless, it is not guaranteed that they will converge to the equilibrium state.

Figure 1. The state response of system (2) in Example 1.

Example 2.

(3-D case) Consider the 3-D SDRNNs (3) with

- (1) Assume that With these selections, Assumption 1 is fulfilled.

- (2) Define matrices as the following It is readily apparent that are non-positive definite, thereby confirming that Assumption 2 holds.

- (3) It can be deduced from (8) that and It can be easily established that are non-positive definite.

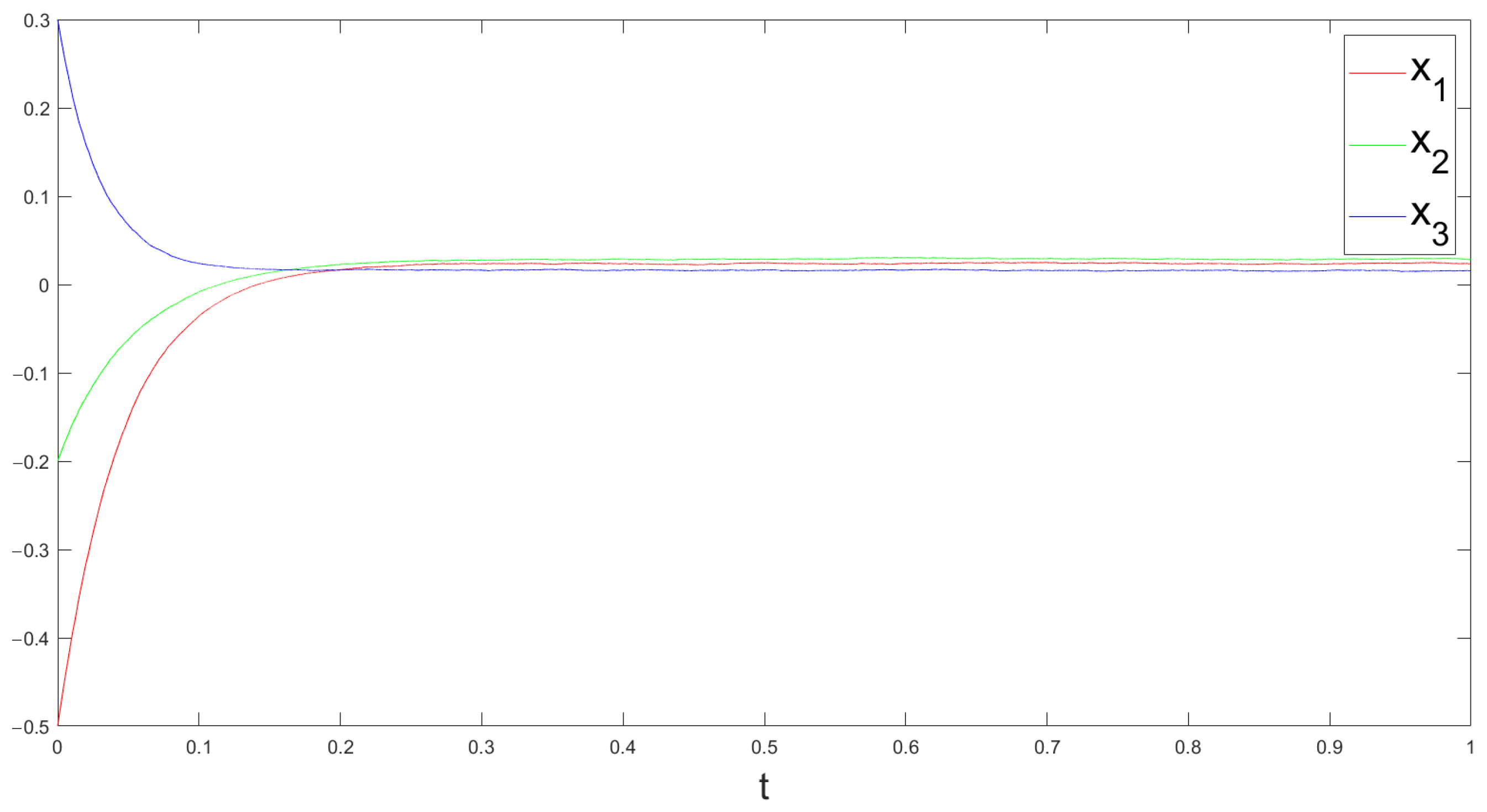

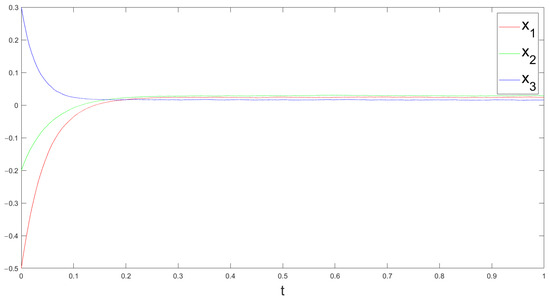

In conclusion, given the parameters under consideration, the conditions of Theorem 8 are satisfied, which guarantees both mean-square exponential stability and almost sure exponential stability for the solution to Equation (3). The numerical solutions illustrated in Figure 2 provide a visual confirmation of the solution’s stability. They show that the solution rapidly converges to the equilibrium state.

Figure 2. The state response of network (3) in Example 2.

5. Proof of Main Theorems

5.1. Preliminary Lemmas

Let represent all nonnegative functions defined over that are twice continuously differentiable with respect to x and once continuously differentiable with respect to t. For every , we introduce an operator , which is related to the stochastic delay function (2) as follows:

with

Subsequently, we will proceed to estimate for specific Lyapunov functions associated with the stochastic delay function (2).

Lemma 1.

Proof.

Combining these results with the fact that are symmetric non-positive definite, we get

To establish almost sure exponential stability for system (3), we need the following results. The subsequent discussion is inspired by the work presented in [41].

Lemma 2

(Semimartingale convergence theorem [41]). Consider and as two continuous, adapted and increasing processes on with initial conditions almost surely (a.s.). Let be a real-valued continuous local martingale, also starting at a.s. Let ζ be a nonnegative random variable that is -measurable with a finite expected value, . Define the process

If remains nonnegative, then the following implication holds:

Here, a.s. indicates that the probability of the intersection of and the complement of is zero, . Specifically, if a.s., then for almost all outcomes ,

This suggests that both the process Y and tend to approach finite random values as .

Lemma 3.

Let , and let be any continuous function, then

Proof.

It is sufficient to demonstrate the validity of the first relation, as the second relation is merely a specific instance of the first one. Through direct computation, we arrive at the following result:

The final equality is valid because is less than or equal to by definition. □

5.2. Theorem Demonstration: A Systematic Exposition of the Proofs

Proof.

Theorem 5’s proof: Let be defined in accordance with (12), and consider to be the solution of Equation (2). When we take into account that are all non-positive definite matrices and couple this observation with the conclusions of Lemma 1, we derive the following conclusion:

Next, we introduce a stopping time

It can be inferred from Dynkin’s formula that

By taking the limit on both sides of Equation (15) and applying the monotone convergence theorem along with Equation (14), we easily derive

Combining this with the definitions provided in (12), we obtain the following result

That is,

This in conjunction with Definition 1 confirms that the trivial solution of system (2) is mean-square exponentially input-to-state-stable. □

Proof.

Theorem 7’s proof: Let be defined in (12), and consider as the solution to Equation (3). It follows from Lemma 1 with the assumption that

Thus

This together with Lemma 3 implies that

Making use of (19) and the non-positiveness of yields that

where

which constitutes a nonnegative martingale, and Lemma 2 demonstrates that

Consequently, Equation (20) leads to

6. Conclusions

The investigation conducted in this research, employing a matrix-based methodology, has successfully demonstrated the stability of solutions for SDRNNs. Our findings rest on less restrictive assumptions compared to scalar methods and yield a broader range of stability conclusions. Beyond enhancing the mean-square exponential input-to-state stability results previously introduced in [36], we have also provided insights into mean-square exponential stability and almost sure exponential stability for cases with no input. The effectiveness of our theoretical results has been confirmed through numerical simulations, highlighting their practical applicability.

While our work focuses on theoretical stability analysis, future research could explore several promising directions. First, extending our analysis to more general system configurations, such as those with different types of time-varying delays or noise structures, would further broaden the applicability of the results. Second, developing efficient numerical methods to compute the controller parameters based on the derived stability conditions could bridge the gap between theory and practical implementation. Third, investigating the resilience of SDRNNs to parameter uncertainties and external disturbances would enhance the robustness of the stability results. These future directions align with the growing demand for more sophisticated and reliable neural network models in real-world applications.

Author Contributions

All four authors have contributed equally to this paper. H.X. conducted theoretical analysis and wrote the paper. S.W. proposed the research problem and verified the correctness of the theoretical analysis. M.X. provided the data for the study. Y.Z. performed the numerical simulations. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the specialized research fund of Yibin University (Grant No. 412-2021QH027).

Data Availability Statement

No new data were created.

Acknowledgments

All procedures and data handling in this study were in accordance with relevant ethical standards and were approved by the Ethics Committee of Yibin University prior to the commencement of the research. The data collection and processing in this study were conducted in accordance with the principle of informed consent, ensuring the privacy and rights of the participants. We sincerely thank the anonymous reviewers for their expert guidance and constructive engagement, which profoundly improved the scholarly rigor of this work. Their insightful critique enhanced foundational mathematical frameworks, refined probabilistic conventions, and enriched the specialist literature. We are particularly grateful for their valuable recommendations on key references, which strengthened the theoretical foundations and contextual depth of this study.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A

In this appendix, we address the question raised in connection with Assumption 2: given an matrix A, how can we choose symmetric matrices such that is non-positive definite?

Lemma A1.

Let A be an matrix, if there exist symmetrical matrices such that is non-positive definite, then must be non-positive definite.

Proof.

Let 0 be the n-dimensional column vector with all elements being zero. , it can be deduced by the non-positiveness of matrix that

or equivalently . This ensures that both are non-positive definite. □
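The argument of Lemma A1 can be checked numerically. The sketch below assumes that the block matrix has the form [[Q1, A], [Aᵀ, Q2]] with symmetric diagonal blocks; the names `Q1`, `Q2`, the helper functions and all numerical values are hypothetical and serve only as an illustration. It tests non-positive definiteness through the largest eigenvalue and shows that a diagonal block with a positive eigenvalue rules out non-positive definiteness of the whole matrix.

```python
import numpy as np

def is_nonpositive_definite(M, tol=1e-10):
    """True if the symmetric part of M has no eigenvalue larger than tol."""
    M = np.asarray(M, dtype=float)
    return bool(np.all(np.linalg.eigvalsh((M + M.T) / 2) <= tol))

def block(Q1, A, Q2):
    """Assemble the assumed block matrix [[Q1, A], [A^T, Q2]]."""
    return np.block([[Q1, A], [A.T, Q2]])

# Hypothetical data
A = np.array([[1.0, 0.5], [0.0, 1.0]])
Q1 = -2.0 * np.eye(2)
Q2 = -2.0 * np.eye(2)
M = block(Q1, A, Q2)

# Testing with z = (x, 0) isolates the quadratic form of Q1
x_vec = np.array([1.0, -3.0])
z = np.concatenate([x_vec, np.zeros(2)])
same_form = np.isclose(z @ M @ z, x_vec @ Q1 @ x_vec)

# A diagonal block with a positive eigenvalue breaks the property
Q1_bad = np.diag([0.1, -2.0])
M_bad = block(Q1_bad, A, Q2)

print(is_nonpositive_definite(M), is_nonpositive_definite(M_bad), same_form)
```

The converse does not hold in general: non-positive definite diagonal blocks are necessary but not sufficient, because the off-diagonal block A also enters the quadratic form.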

Lemma A2.

Let A be an matrix. If there exist symmetric matrices and a scalar such that and are non-positive definite, then is non-positive definite.

Proof.

For all , we can compute as follows:

It should be noted that the last inequality holds due to the assumption that and are non-positive definite. □

References

- Duan, L.; Ren, Y.; Duan, F. Adaptive stochastic resonance based convolutional neural network for image classification. Chaos Solitons Fractals 2022, 162, 112429.

- Morán, A.; Parrilla, L.; Roca, M.; Font-Rossello, J.; Isern, E.; Canals, V. Digital implementation of radial basis function neural networks based on stochastic computing. IEEE J. Emerg. Sel. Top. Circuits Syst. 2022, 13, 257–269.

- Hofmann, D.; Fabiani, G.; Mentink, J.H.; Carleo, G.; Sentef, M.A. Role of stochastic noise and generalization error in the time propagation of neural-network quantum states. Scipost Phys. 2022, 12, 165.

- Yamakou, M.E.; Zhu, J.; Martens, E.A. Inverse stochastic resonance in adaptive small-world neural networks. Chaos Interdiscip. J. Nonlinear Sci. 2024, 34, 113119.

- Sun, Y.; Liang, F. A kernel-expanded stochastic neural network. J. R. Stat. Soc. Ser. B Stat. Methodol. 2022, 84, 547–578.

- Joya, G.; Atencia, M.A.; Sandoval, F. Hopfield neural networks for optimization: Study of the different dynamics. Neurocomputing 2022, 43, 219–237.

- Yang, J.; Ma, L.; Chen, L. L2–L∞ state estimation for continuous stochastic delayed neural networks via memory event-triggering strategy. Int. J. Syst. Sci. 2022, 53, 2742–2757.

- Yang, L.; Gao, T.; Lu, Y.; Duan, J.; Liu, T. Neural network stochastic differential equation models with applications to financial data forecasting. Appl. Math. Model. 2023, 115, 279–299.

- Yang, X.; Deng, W.; Yao, J. Neural network based output feedback control for DC motors with asymptotic stability. Mech. Syst. Signal Process. 2022, 164, 108288.

- Yuan, H.; Zhu, Q. The well-posedness and stabilities of mean-field stochastic differential equations driven by G-Brownian motion. SIAM J. Control Optim. 2025, 63, 596–624.

- McAllister, D.R.; Rawlings, J.B. Nonlinear stochastic model predictive control: Existence, measurability, and stochastic asymptotic stability. IEEE Trans. Autom. Control 2022, 68, 1524–1536.

- Xu, W.; Chen, R.; Li, X. Infinitely deep bayesian neural networks with stochastic differential equations. Int. Conf. Artif. Intell. Stat. 2022, 721–738.

- Zhao, Y.; Wang, L. Practical exponential stability of impulsive stochastic food chain system with time-varying delays. Mathematics 2022, 11, 147.

- Zhu, Q. Event-triggered sampling problem for exponential stability of stochastic nonlinear delay systems driven by Levy processes. IEEE Trans. Autom. Control 2025, 70, 1176–1183.

- Zhu, Q.; Cao, J. Robust exponential stability of Markovian jump impulsive stochastic Cohen-Grossberg neural networks with mixed time delays. IEEE Trans. Neural Netw. Learn. Syst. 2010, 21, 1314–1325.

- Tran, K.Q.; Nguyen, D.H. Exponential stability of impulsive stochastic differential equations with Markovian switching. Syst. Control Lett. 2022, 162, 105178.

- Xu, H.; Zhu, Q. New criteria on pth moment exponential stability of stochastic delayed differential systems subject to average-delay impulses. Syst. Control Lett. 2022, 164, 105234.

- Li, Z.; Xu, L.; Ma, W. Global attracting sets and exponential stability of stochastic functional differential equations driven by the time-changed Brownian motion. Syst. Control Lett. 2022, 160, 105103.

- Zhu, Q.; Cao, J. Exponential stability analysis of stochastic reaction-diffusion Cohen-Grossberg neural networks with mixed delays. Neurocomputing 2011, 74, 3084–3091.

- Zhu, Q.; Li, X.; Yang, X. Exponential stability for stochastic reaction-diffusion BAM neural networks with time-varying and distributed delays. Appl. Math. Comput. 2011, 217, 6078–6091.

- Liu, Y.; Xu, J.; Lu, J.; Gui, W. Stability of stochastic time-delay systems involving delayed impulses. Automatica 2023, 152, 110955.

- Kechiche, D.; Khemmoudj, A.; Medjden, M. Exponential stability result for the wave equation with Kelvin-Voigt damping and past history subject to Wentzell boundary condition and delay term. Math. Methods Appl. Sci. 2023, 47, 1546–1576.

- Zhang, J.; Nie, X. Coexistence and locally exponential stability of multiple equilibrium points for fractional-order impulsive control Cohen-Grossberg neural networks. Neurocomputing 2024, 589, 127705.

- Zhu, D.; Zhu, Q. Almost sure exponential stability of stochastic nonlinear semi-Markov jump T-S fuzzy systems under intermittent EDF scheduling controller. J. Frankl. Inst. 2024, 361, 107188.

- Liu, X.; Cheng, P. Almost sure exponential stability and stabilization of hybrid stochastic functional differential equations with Lévy noise. J. Appl. Math. Comput. 2023, 69, 3433–3458.

- Pan, L.; Cao, J. Input-to-state Stability of Impulsive Stochastic Nonlinear Systems Driven by G-Brownian Motion. Int. J. Control Autom. Syst. 2021, 19, 1–10.

- Li, X.; Zhang, T.; Wu, J. Input-to-State Stability of Impulsive Systems via Event-Triggered Impulsive Control. IEEE Trans. Cybern. 2022, 52, 7187–7195.

- Liu, S.; Russo, A. Further characterizations of integral input-to-state stability for hybrid systems. Automatica 2024, 163, 111484.

- Yang, X.; Zhao, T.; Zhu, Q. Aperiodic event-triggered controls for stochastic functional differential systems with sampled-data delayed output. IEEE Trans. Autom. Control 2025, 70, 2090–2097.

- Huang, F.; Gao, S. Stochastic integral input-to-state stability for stochastic delayed networked control systems and its applications. Commun. Nonlinear Sci. Numer. Simul. 2024, 138, 108177.

- Xu, G.; Bao, H. Further results on mean-square exponential input-to-state stability of time-varying delayed BAM neural networks with Markovian switching. Neurocomputing 2020, 376, 191–201.

- Song, Q.; Zhao, Z.; Liu, Y.; Alsaadi, F. Mean-square input-to-state stability for stochastic complex-valued neural networks with neutral delay. Neurocomputing 2021, 470, 269–277.

- Wu, K.; Ren, M.; Liu, X. Exponential input-to-state stability of stochastic delay reaction-diffusion neural networks. Neurocomputing 2020, 412, 399–405.

- Wang, W. Mean-square exponential input-to-state stability of stochastic delayed recurrent neural networks with local Lipschitz condition. Math. Methods Appl. Sci. 2023, 46, 17788–17797.

- Radhika, T.; Chandrasekar, A.; Vijayakumar, V.; Zhu, Q. Analysis of Markovian Jump Stochastic Cohen–Grossberg BAM Neural Networks with Time Delays for Exponential Input-to-State Stability. Neural Process. Lett. 2023, 55, 11055–11072.

- Zhu, Q.; Cao, J. Mean-square exponential input-to-state stability of stochastic delayed neural networks. Neurocomputing 2014, 131, 157–163.

- Mao, X. Stochastic Differential Equations and Applications, 2nd ed.; Woodhead Publishing: Cambridge, UK, 2007.

- Gikhman, I.; Skorochod, A.V. Stochastic Differential Equations (SDEs); Naukova Dumka, Kiev, 1968; English and German Translation; Springer: Berlin/Heidelberg, Germany, 1971. (in Russian)

- Schurz, H. Basic concepts of numerical analysis of stochastic differential equations explained by balanced implicit theta methods. In Stochastic Differential Equations and Processes; Mounir, Z., Filatova, D.V., Eds.; Springer Proceedings in Mathematics 7; Springer: New York, NY, USA, 2012; pp. 1–139.

- Graham, C.; Talay, D. Discretization of Stochastic Differential Equations. Stochastic Simulation and Monte Carlo Methods. In Mathematical Foundations of Stochastic Simulation; Springer: New York, NY, USA, 2013; pp. 155–195.

- Liptser, R.S.; Shiryaev, A.N. Statistics of Random Processes I: General Theory, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 1989.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).