1. Introduction

Critical Path Method (CPM) is commonly used in network analysis and project management. A fundamental problem in CPM is optimizing the total project cost subject to a prescribed completion date [1]. The general setting is that the project manager can expedite a few jobs by investing extra resources, thereby reducing the duration of the entire project and meeting the desired completion date. Meanwhile, the extra resources spent should be as few as possible. A formal description of the problem is given in Section 1.1.

In this paper, we revisit a simple incremental algorithm for solving this problem and prove that it has an approximation ratio upper bound

, where

k denotes the amount of days the duration of the project has to be shortened (which is easily determined by the prescribed completion date and the original duration of the project). The same result is also obtained for a similar but different

k-extending problem, and we will go through that by the end of this paper. See more about this algorithm in [

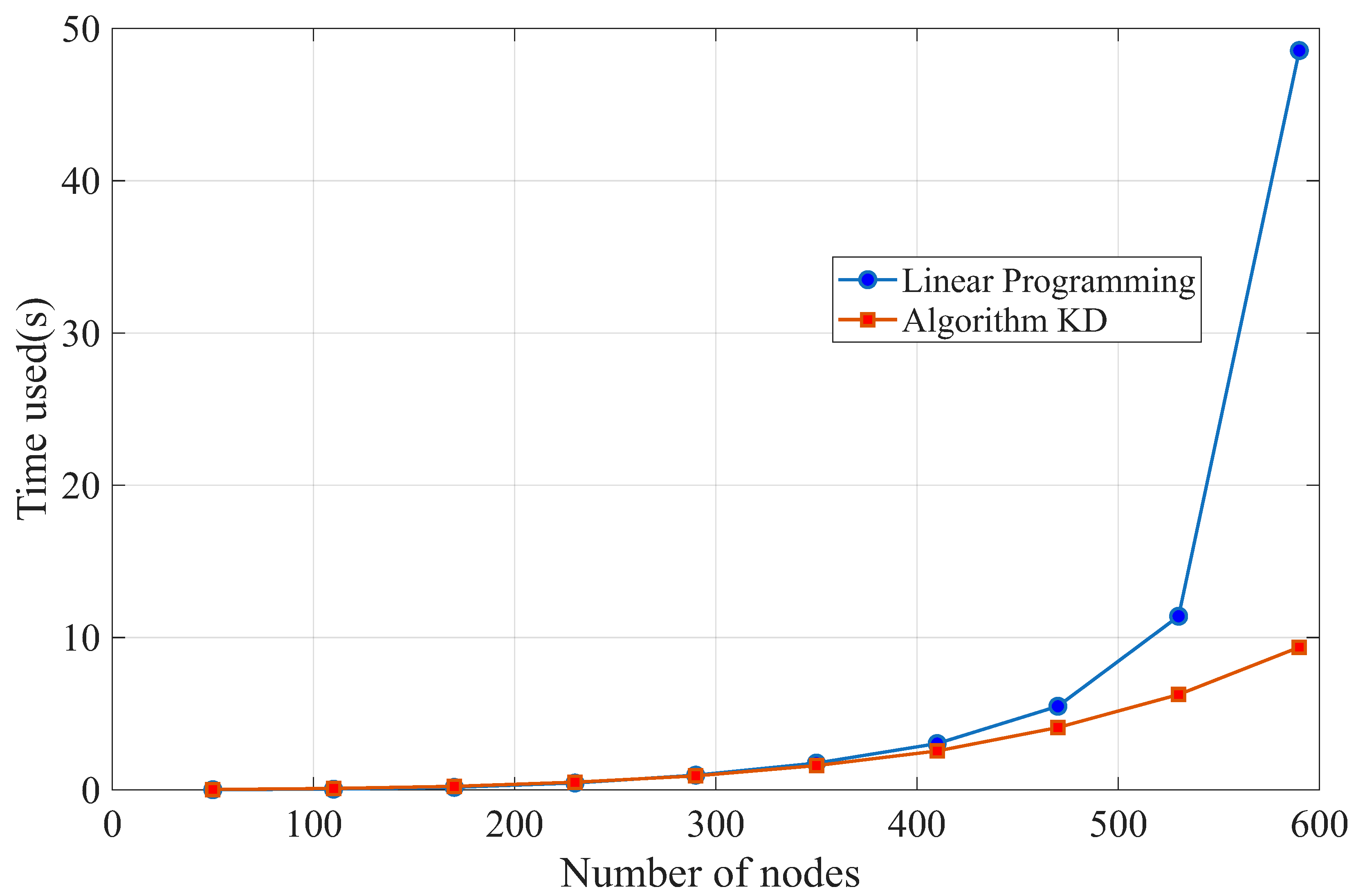

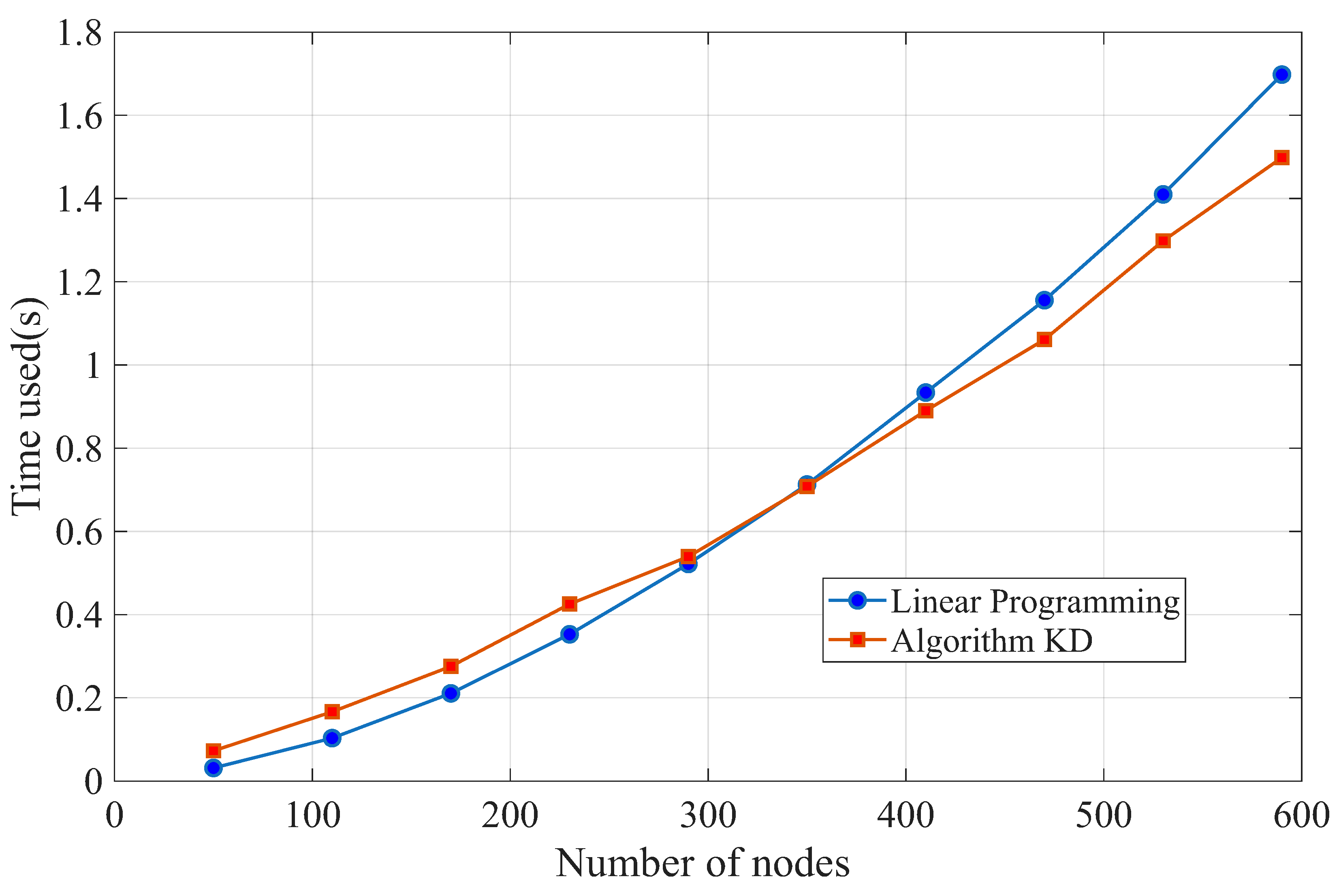

2], where it is called the “Kaufmann and Desbazeille Algorithm” (denoted by Algorithm KD) and is given a constructive example indicating its cost can be arbitrarily far from the optimum cost in terms of the absolute value of their difference (meanwhile, in this paper, we study the ratio between the two costs).

The mentioned algorithm is based on a greedy approach. It speeds up the project by only one day at a time and repeats this k times. Each time, it adopts the minimum-cost strategy to shorten the project by one day. This does not guarantee the minimum total cost when $k > 1$ (see an example in Section 5.1). Nevertheless, it is a simple, efficient, and practical algorithm, which has been implemented in certain applications [3]. Therefore, a theoretical analysis of its approximation performance is of interest and may benefit researchers and engineers. Such an analysis is not easy and is absent from the literature; hence, we provide it in this paper.

1.1. Description of the k-Crashing Problem

A project is considered as an activity-on-edge network (AOE network, which is a directed acyclic graph) N, where each activity/job of the project is an edge. Some jobs must be finished before others can be started, as described by the topology structure of N.

It is known that job $j_i$ at normal speed takes $b_i$ days to finish after it is started, and hence the (normal) duration of the project N, denoted by $d(N)$, is determined: it equals the length of the critical path (namely, the longest path) of N.

To speed up the project, the manager can crash a few jobs (namely, reduce the length of the corresponding edges) by investing extra resources into those jobs, such as deploying more staff, upgrading current procedures, and purchasing more equipment. However, the time for completing $j_i$ has a lower bound due to technological limits: it requires at least $a_i$ days to be completed. Following the convention, assume that the duration of a job has a linear relation with the extra resources put into this job; equivalently, there is a parameter $c_i$ (slope), so that shortening $j_i$ by $\delta$ days costs $c_i \cdot \delta$ resources.

Given project N and an integer $k \ge 1$, the k-crashing problem asks for the minimum cost to speed up the project by k days.
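As a small illustration of the definition above, the duration of a project can be computed as the longest source-to-sink path of the network. The sketch below is only illustrative: the function name `project_duration` and the edge-list representation `(tail, head, length)` are assumptions made here and are not part of the paper.

```python
from collections import defaultdict


def project_duration(edges, source, sink):
    """Length of the longest source-to-sink path in a DAG.

    edges: list of (tail, head, length) triples (hypothetical representation).
    """
    out = defaultdict(list)
    indeg = defaultdict(int)
    nodes = {source, sink}
    for u, v, length in edges:
        out[u].append((v, length))
        indeg[v] += 1
        nodes.update((u, v))

    # Topological order (Kahn's algorithm); the list grows while we scan it.
    order = [n for n in nodes if indeg[n] == 0]
    for u in order:
        for v, _ in out[u]:
            indeg[v] -= 1
            if indeg[v] == 0:
                order.append(v)

    # Longest-path dynamic program along the topological order.
    longest = {n: float("-inf") for n in nodes}
    longest[source] = 0
    for u in order:
        for v, length in out[u]:
            longest[v] = max(longest[v], longest[u] + length)
    return longest[sink]


edges = [("s", "a", 3), ("s", "b", 2), ("a", "t", 4), ("b", "t", 6), ("a", "b", 1)]
print(project_duration(edges, "s", "t"))  # 10, via the path s -> a -> b -> t
```

In this toy network, speeding up the project by k days means bringing the value 10 down to 10 − k at minimum cost.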

In fact, the case of a non-linear relation is also of practical interest, especially the convex case, where shortening an edge becomes more costly after each previous shortening. Fortunately, the greedy algorithm performs equally well in this convex case: without any change, it still finds a solution with the approximation ratio upper bound $\frac{1}{1} + \frac{1}{2} + \cdots + \frac{1}{k}$.

1.2. Our Contribution and Paper Structure

The main contributions of this paper are as follows:

- We revisit a simple and efficient greedy algorithm for solving the k-crashing problem in project management, which aims to minimize the resources required to shorten a project’s duration by k days. We prove that this algorithm achieves an approximation ratio upper bound of $\frac{1}{1} + \frac{1}{2} + \cdots + \frac{1}{k}$, providing a theoretical guarantee for its performance.

- We explore the generalization of the algorithm to the convex case, where the cost of shortening an edge increases as more resources are invested, and show that the approximation ratio upper bound remains valid in this more complex scenario.

- We extend the analysis to a similar problem, the k-extending problem, where the goal is to extend the shortest paths of a network by k days, and we prove that the same approximation ratio upper bound holds for this problem, demonstrating the generalizability of our analysis.

The conference version of this paper only covers the approximation ratio bound of the first contribution; all other contributions appear only in this paper. We believe these contributions provide insights for project managers and researchers working on time-cost trade-offs in project scheduling.

The organization of this paper is as follows. In Section 2, we discuss the work related to our research. In Section 3, we prove the approximation ratio upper bound of $\frac{1}{1} + \frac{1}{2} + \cdots + \frac{1}{k}$ for Algorithm KD in the original k-crashing problem. Then, in Section 4, we generalize the approximation ratio upper bound to the convex case, where shortening an edge gets more and more costly as we shorten it. Next, in Section 5, we discuss the tightness of our bound with a counterexample where Algorithm KD does not produce an optimum solution, and present experimental results reflecting the efficiency of Algorithm KD. In Section 6, we apply the same procedure and prove the same approximation ratio upper bound of $\frac{1}{1} + \frac{1}{2} + \cdots + \frac{1}{k}$ for the greedy algorithm in the k-extending problem. Finally, in Section 7, we summarize our work and point out some possible directions for future research.

2. Related Work

The first solutions to the k-crashing problem were given by Fulkerson [4] and by Kelley [5] in 1961. The results in these two papers are independent, yet the approaches are essentially the same, as pointed out in [6]. In both, the problem is first formulated as a linear program whose dual is a minimum-cost flow problem, which can then be solved efficiently.
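For orientation, the time-cost trade-off with linear costs is commonly written as a linear program of the following shape. This is a generic formulation and not necessarily the exact form used in [4,5]; the symbols are assumptions made here: $a_i$, $b_i$, and $c_i$ are the minimum duration, normal duration, and slope of job $e_i = (u, v)$, $x_i$ is the amount by which $e_i$ is crashed, $\pi_v$ is the time of event $v$, and $D$ is the normal project duration.

```latex
\begin{aligned}
\min\quad & \sum_i c_i x_i \\
\text{s.t.}\quad & \pi_v - \pi_u \ge b_i - x_i && \text{for every job } e_i = (u, v), \\
& 0 \le x_i \le b_i - a_i && \text{for every job } e_i, \\
& \pi_t - \pi_s \le D - k .
\end{aligned}
```

The first constraint says that a job cannot finish before its (crashed) duration has elapsed, and the last one enforces that the project is sped up by k days.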

Later, in 1977, Phillips and Dessouky [6] reported another clever approach (denoted by Algorithm PD). Similar to the greedy algorithm mentioned above, Algorithm PD also consists of k steps, and at each step it locates a minimal cut in a flow network derived from the original project network. This minimal cut is then used to identify the jobs that should be expedited or de-expedited in order to reduce the project duration. It is, however, not clear whether this algorithm always finds an optimum solution (it is believed so in many sources [7,8,9,10]). We tend to believe it is correct, yet we cannot find a proof in [6].

It is worth noting that Algorithm PD shares much of its logic with the greedy algorithm we consider. Both locate a minimal cut in some flow network and then use it to identify the set of jobs to expedite/de-expedite in the next round. However, the constructed flow networks are different: the one in the greedy algorithm has only capacity upper bounds, whereas the one in Algorithm PD has both capacity upper bounds and lower bounds and is thus more complex.

Algorithm KD is simpler and easier to implement than all the approaches above. Since it is arguably the simplest algorithm for the problem, it has been brought up in the literature multiple times [2,11,12,13,14]. Compared with Algorithm PD, there is no de-expedite option in Algorithm KD. As a result, for online cases where the project duration must be reduced optimally unit by unit (where each step must be the cheapest at the time) and the crashing is non-revocable, Algorithm KD can still be implemented but Algorithm PD cannot. So the approximation ratio of Algorithm KD can also be regarded as the price of the “online” property, which is also known as the competitive ratio and has been studied for multiple problems, such as online allocation [15], linear search [16], chasing convex bodies [17], bin packing [18], online matching [19], and perimeter defense [20].

Other approaches for the problem are proposed by Siemens [1] and Goyal [21], but these are heuristic algorithms without any guarantee: approximation ratios are not proved in these papers.

Many variants of the k-crashing problem have been studied in the past decades; see [9,10,22,23] and the references therein.

3. k-Crashing Problem

We first introduce the basic notation and definitions used in this paper.

- Project N.

Assume $N = (V, E)$ is a directed acyclic graph with a single source node s and a single sink node t (a source node refers to a node without incoming edges, and a sink node refers to a node without outgoing edges). Each edge $e_i \in E$ has three attributes $(a_i, b_i, c_i)$, as introduced in Section 1.1.

- Critical paths and critical edges.

A path of N refers to a path of N from source s to sink t. Its length is the total length of the edges included, and the length of edge $e_i$ equals $b_i$. A path of N with the longest length is called a critical path. The duration of N equals the length of the critical paths. There may be more than one critical path. An edge that belongs to some critical path is called a critical edge.

- Accelerate plan X.

Denote by $\mathcal{E}$ the multiset of edges of N that contains $e_i$ with a multiplicity $b_i - a_i$. Each subset X of $\mathcal{E}$ (which is also a multiset) is called an accelerate plan, or plan for short. The multiplicity of $e_i$ in X, denoted by $x_i$, describes by how much the length of $e_i$ is shortened; i.e., $e_i$ takes $b_i - x_i$ days when plan X is applied. The cost of plan X, denoted by $\mathrm{cost}(X)$, is $\sum_i c_i x_i$.

- Accelerated project $N(X)$.

Define $N(X)$ as the project that is the same as N, but with $b_i$ decreased by $x_i$; in other words, $N(X)$ stands for the project optimized with plan X.

- k-crashing.

We say a plan X is k-crashing if the duration of the project N is shortened by k when we apply plan X.

- Cut of N.

Suppose that V is partitioned into two subsets $S, T$, which, respectively, contain s and t. Then, the set of edges from S to T is referred to as a cut of N. Notice that we cannot reach t from s if any cut of N is removed.

Let $k_{\max}$ be the duration of the original project N minus the duration of the accelerated project $N(\mathcal{E})$. Clearly, the duration of $N(X)$ is at least the duration of $N(\mathcal{E})$, since X is a subset of $\mathcal{E}$. It follows that a k-crashing plan only exists for $k \le k_{\max}$.

Throughout the paper, we assume that $k \le k_{\max}$.
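The definitions of a plan, its cost, and the accelerated project can be made concrete with a multiset. The following is a minimal sketch, assuming a plan is stored as a `collections.Counter` mapping an edge name to the number of days it is shortened; the function names and the dictionaries `normal`, `minimum`, and `slope` are hypothetical and introduced only for illustration.

```python
from collections import Counter


def is_valid_plan(plan, normal, minimum):
    """A plan may shorten an edge by at most (normal duration - minimum duration)."""
    return all(0 <= days <= normal[e] - minimum[e] for e, days in plan.items())


def plan_cost(plan, slope):
    """Linear cost: each day of shortening on edge e costs slope[e]."""
    return sum(days * slope[e] for e, days in plan.items())


def apply_plan(plan, normal):
    """Edge durations of the accelerated project."""
    return {e: normal[e] - plan.get(e, 0) for e in normal}


normal = {"e1": 5, "e2": 3}    # normal durations
minimum = {"e1": 3, "e2": 3}   # technological lower bounds
slope = {"e1": 2, "e2": 7}     # cost per day of shortening

plan = Counter({"e1": 2})      # shorten e1 by two days
print(is_valid_plan(plan, normal, minimum))  # True
print(plan_cost(plan, slope))                # 4
print(apply_plan(plan, normal))              # {'e1': 3, 'e2': 3}
```

Here `e2` cannot be shortened at all, so any plan containing it is rejected by `is_valid_plan`.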

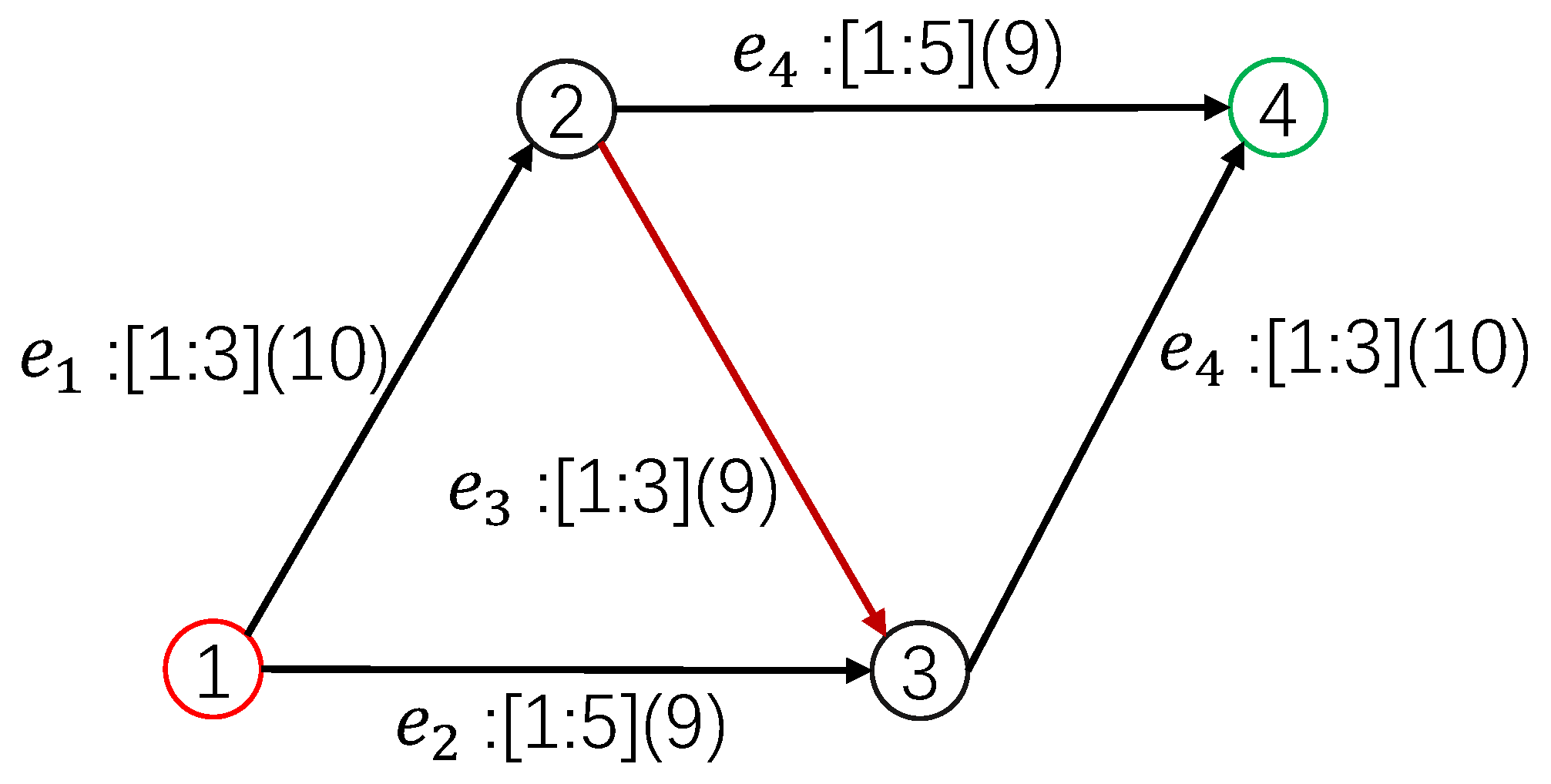

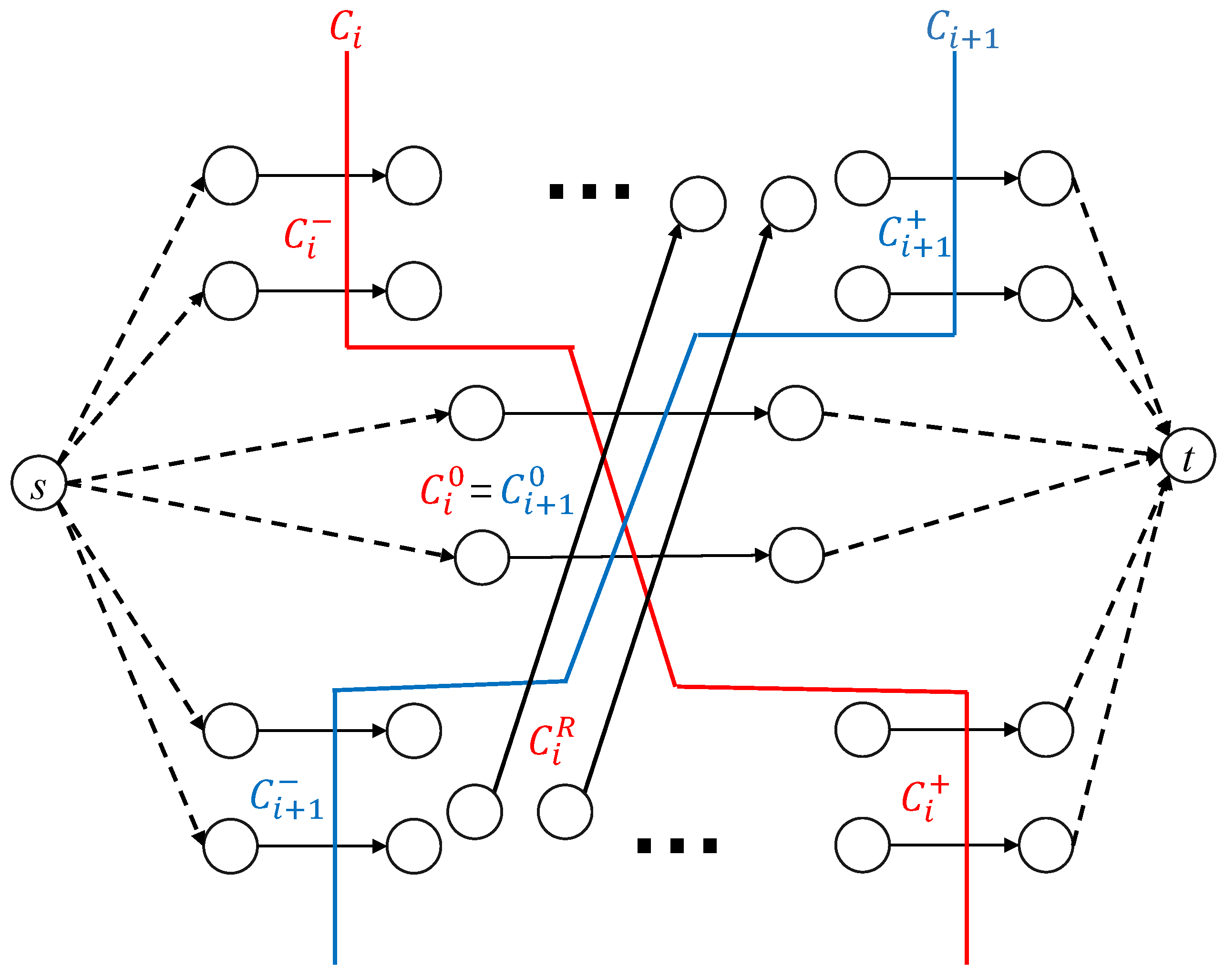

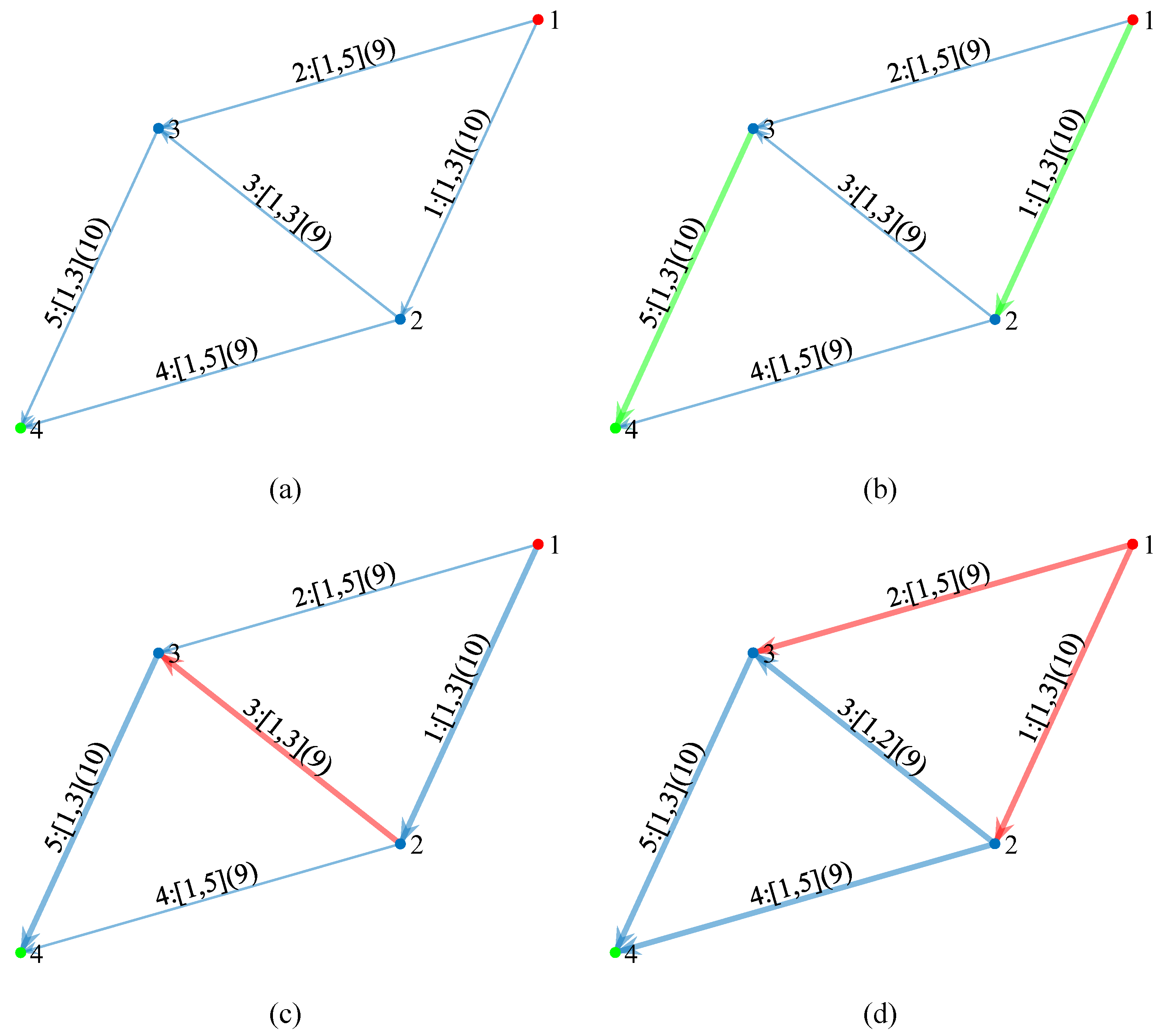

The greedy algorithm (Algorithm KD) in the following (see Algorithm 1) finds a

k-crashing plan efficiently. It finds the plan incrementally—each time it reduces the duration of the project by 1. See

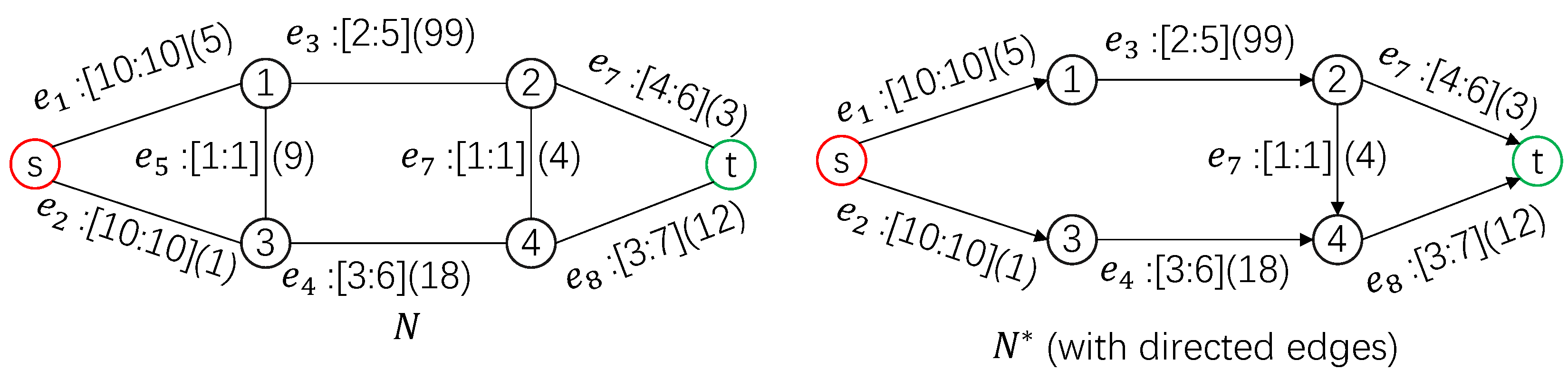

Figure 1 for an example of a 1-crashing problem of a project network.

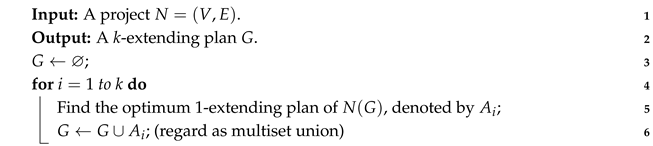

| Algorithm 1: Greedy algorithm for finding a k-crashing plan (Algorithm KD). |

![Mathematics 13 02234 i001]() |

Observe that G is an i-crashing plan of N after the i-th iteration ($1 \le i \le k$), as the duration of $N(G)$ is reduced by 1 at each iteration. Therefore, G is a k-crashing plan at the end.

If a 1-crashing plan of $N(G)$ does not exist in the i-th iteration ($i \le k$), we conclude that there is no k-crashing plan; i.e., $k > k_{\max}$, where $k_{\max}$ is the largest reduction achievable by any plan.

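Theorem 1. Let $G = A_1 \cup \cdots \cup A_k$ be the k-crashing plan found by Algorithm 1, where $A_i$ is the 1-crashing plan chosen in the i-th iteration. Let OPT denote the optimum k-crashing plan. Then,

$$\mathrm{cost}(G) \le \left(\frac{1}{1} + \frac{1}{2} + \cdots + \frac{1}{k}\right) \mathrm{cost}(\mathrm{OPT}).$$

The proof of the theorem is given in the following; it applies Lemma 1, which is nontrivial and is proven below.

Lemma 1. For any project N, its k-crashing plan (where $k \le k_{\max}$) costs at least k times the cost of the optimum 1-crashing plan.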

Proof of Theorem 1. For convenience, let , for . Note that is the original project.

Fix i in in the following. By the algorithm, is the optimum 1-crashing plan of . Using Lemma 1, we know (1) any -crashing plan of costs at least .

Let and ; hence .

Observe that saves k days compared to N, because is k-crashing, whereas saves days compared to N. So, saves days compared to , which means (2) X is a -crashing plan of . Combining (1) and (2), .

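Furthermore, since $\mathrm{cost}(\mathrm{OPT}) \ge \mathrm{cost}(X)$ (as $X \subseteq \mathrm{OPT}$), we obtain the relation $\mathrm{cost}(A_i) \le \frac{\mathrm{cost}(\mathrm{OPT})}{k-i+1}$, where $A_i$ is the 1-crashing plan chosen in the i-th iteration. Therefore,

$$\mathrm{cost}(G) = \sum_{i=1}^{k} \mathrm{cost}(A_i) \le \sum_{i=1}^{k} \frac{\mathrm{cost}(\mathrm{OPT})}{k-i+1} = \left(\frac{1}{1} + \frac{1}{2} + \cdots + \frac{1}{k}\right) \mathrm{cost}(\mathrm{OPT}).$$

□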
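To get a feeling for the size of this guarantee, the sketch below evaluates the harmonic sum $\frac{1}{1} + \cdots + \frac{1}{k}$ exactly and compares it with the standard estimate $1 + \ln k$. The function name `harmonic` is an assumption made here, and the comparison with $1 + \ln k$ is a well-known fact about harmonic numbers rather than a statement from the paper.

```python
import math
from fractions import Fraction


def harmonic(k):
    """Exact value of 1/1 + 1/2 + ... + 1/k."""
    return sum(Fraction(1, i) for i in range(1, k + 1))


for k in (1, 2, 4, 10, 100):
    ratio = harmonic(k)
    # The harmonic number never exceeds 1 + ln(k).
    print(k, float(ratio), 1 + math.log(k))
```

For instance, shortening a project by 4 days with the greedy algorithm costs at most 25/12 (about 2.08) times the optimum, and the bound grows only logarithmically in k.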

The critical graph of network H, denoted by $H^*$, is formed by all the critical edges of H; all the edges that are not critical are removed in $H^*$.

Before presenting the proof of the key lemma (Lemma 1), we briefly explain how to find the optimum 1-crashing plan of some project H (e.g., the accelerated project $N(G)$ in Algorithm 1). First, compute the critical graph $H^*$ and define the capacity of $e_i$ in $H^*$ to be $c_i$ if $e_i$ in H can still be shortened (i.e., its length is more than $a_i$); otherwise, define the capacity of $e_i$ in $H^*$ to be ∞. Then we compute the minimum s-t cut of $H^*$ (using the max-flow algorithm [24]), and this cut gives the optimum 1-crashing plan of H.
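The procedure described above, repeated k times, can be sketched as follows. This is a minimal illustration under stated assumptions and not the authors' implementation: edges are given as hypothetical tuples `(tail, head, min_len, normal_len, cost_per_day)`, the minimum cut is found with a plain Edmonds-Karp max-flow, and all function names are chosen here.

```python
import math
from collections import defaultdict, deque


def topo_order(edges):
    out, indeg, nodes = defaultdict(list), defaultdict(int), set()
    for u, v, *_ in edges:
        out[u].append(v)
        indeg[v] += 1
        nodes |= {u, v}
    order = [n for n in nodes if indeg[n] == 0]
    for u in order:                      # the list grows while we scan it
        for v in out[u]:
            indeg[v] -= 1
            if indeg[v] == 0:
                order.append(v)
    return order


def critical(edges, length, s, t):
    """Return (duration, indices of critical edges) for the current lengths."""
    pos = {n: p for p, n in enumerate(topo_order(edges))}
    fwd = dict.fromkeys(pos, -math.inf); fwd[s] = 0   # longest distance from s
    bwd = dict.fromkeys(pos, -math.inf); bwd[t] = 0   # longest distance to t
    idx = range(len(edges))
    for i in sorted(idx, key=lambda i: pos[edges[i][0]]):
        u, v = edges[i][:2]
        fwd[v] = max(fwd[v], fwd[u] + length[i])
    for i in sorted(idx, key=lambda i: -pos[edges[i][1]]):
        u, v = edges[i][:2]
        bwd[u] = max(bwd[u], length[i] + bwd[v])
    crit = [i for i in idx
            if fwd[edges[i][0]] + length[i] + bwd[edges[i][1]] == fwd[t]]
    return fwd[t], crit


def min_cut(arcs, s, t):
    """arcs: {index: (u, v, capacity)}. Returns the arc indices of a minimum
    s-t cut, or None if every cut has infinite capacity."""
    res = defaultdict(lambda: defaultdict(float))     # residual capacities
    for u, v, cap in arcs.values():
        res[u][v] += cap
        res[v][u] += 0
    while True:
        parent, queue = {s: None}, deque([s])         # BFS in the residual graph
        while queue:
            u = queue.popleft()
            for v, cap in res[u].items():
                if cap > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if t not in parent:
            break
        path, v = [], t
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        push = min(res[u][v] for u, v in path)
        if push == math.inf:
            return None
        for u, v in path:
            res[u][v] -= push
            res[v][u] += push
    return [i for i, (u, v, _) in arcs.items() if u in parent and v not in parent]


def greedy_kd(edges, s, t, k):
    """Shorten the project by one day, k times, each time along a cheapest cut."""
    length = [e[3] for e in edges]
    plan, total = defaultdict(int), 0
    for _ in range(k):
        _, crit = critical(edges, length, s, t)
        arcs = {i: (edges[i][0], edges[i][1],
                    edges[i][4] if length[i] > edges[i][2] else math.inf)
                for i in crit}
        cut = min_cut(arcs, s, t)
        if cut is None:
            raise ValueError("no k-crashing plan exists")
        for i in cut:
            length[i] -= 1
            plan[i] += 1
            total += edges[i][4]
    return dict(plan), total


edges = [("s", "a", 1, 3, 2), ("a", "t", 2, 4, 3), ("s", "t", 4, 6, 4)]
print(greedy_kd(edges, "s", "t", 2))  # ({0: 2, 2: 1}, 8)
```

In this toy project the first day is saved by shortening edge 0 alone (cost 2); for the second day both paths are critical, so the cheapest cut contains edges 0 and 2 (cost 6).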

3.1. Proof (Part I)

Proposition 1. A k-crashing plan X of N contains a cut of the critical graph $N^*$.

Proof. Because X is k-crashing, each critical path of N will be shortened in $N(X)$, and that means it contains an edge of X. Furthermore, since the paths of $N^*$ are critical paths of N (which simply follows from the definition of $N^*$), each path of $N^*$ contains an edge in X.

As a consequence, after removing the edges in X that belong to $N^*$, we disconnect source s and sink t in $N^*$. Now, let S denote the vertices of $N^*$ that can still be reached from s after removal, and let T denote the remaining part. Observe that all edges from S to T in $N^*$ form a cut of $N^*$ that belongs to X. □
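The construction in this proof is effectively a reachability computation. The sketch below mirrors it: remove the plan's edges from the critical graph, collect the set S of vertices still reachable from the source, and return the edges leaving S. The function name and the representation of edges as `(tail, head)` pairs are assumptions made here for illustration.

```python
from collections import deque


def cut_inside_plan(critical_edges, plan_edges, source):
    """critical_edges: list of (tail, head) pairs of the critical graph.
    plan_edges: set of (tail, head) pairs shortened by the plan.
    Returns the edges from S to T, where S is the set of vertices reachable
    from the source once the plan's edges are removed."""
    adjacency = {}
    for u, v in critical_edges:
        if (u, v) not in plan_edges:
            adjacency.setdefault(u, []).append(v)

    reached = {source}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adjacency.get(u, []):
            if v not in reached:
                reached.add(v)
                queue.append(v)

    return [(u, v) for u, v in critical_edges if u in reached and v not in reached]


critical_edges = [("s", "a"), ("s", "b"), ("a", "t"), ("b", "t"), ("a", "b")]
plan_edges = {("s", "b"), ("a", "b"), ("a", "t")}
cut = cut_inside_plan(critical_edges, plan_edges, "s")
print(cut)  # [('s', 'b'), ('a', 't'), ('a', 'b')]
```

Every edge leaving S must belong to the plan, since otherwise its head would also have been reached; this is why the returned cut is contained in X.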

When X contains at least one cut of network H, let be the minimum cut of H among all cuts of H that belong to X.

Recall that $d(H)$ is the duration of network H.

In the following, suppose X is a k-crashing plan of N. We introduce a decomposition of X which is crucial to our proof.

(Because is k-crashing, applying Proposition 1, contains at least one cut of . It follows that is well-defined.)

Next, for

, define

Proposition 2. For , it holds that

1. (namely, ).

2. contains a cut of (and thus is well-defined).

Proof. 1. Because is a cut of , set is a 1-crashing plan of , which means .

Moreover, cannot hold. Otherwise, cancel one edge of and still holds, which means is at least 1-crashing for , and thus contains a cut of (by Proposition 1). This contradicts the assumption that is the minimum cut.

Therefore, .

2. According to Proposition 1, it is sufficient to prove that is a 1-crashing plan to .

Suppose the opposite, where is not 1-crashing to . There exists a path P in that is disjoint with . Observe that

(1) The length of P in is the original length of P (in N) minus the number of edges in X that fall in P.

(2) The length of P in is the original length of P (in N) minus the number of edges in that fall in P.

(3) The number of edges in that fall in P equals the number of edges in X that fall in P, because is disjoint with P.

Together, the length of P in equals the length of P in , which equals . This means X is not a k-crashing plan of N, contradicting our assumption. □

The following lemma easily implies Lemma 1.

Lemma 2. for any .

We show how to prove Lemma 1 in the following. The proof of Lemma 2 will be shown in the next subsection.

Proof of Lemma 1. Suppose X is k-crashing to N.

By Lemma 2, we know .

Furthermore, since

,

Because is the minimum cut of that is contained in X, whereas is the minimum cut of among all, . To sum up, we have □

It is noteworthy to mention that is not always equal to X and may not be k-crashing.

3.2. Proof (Part II)

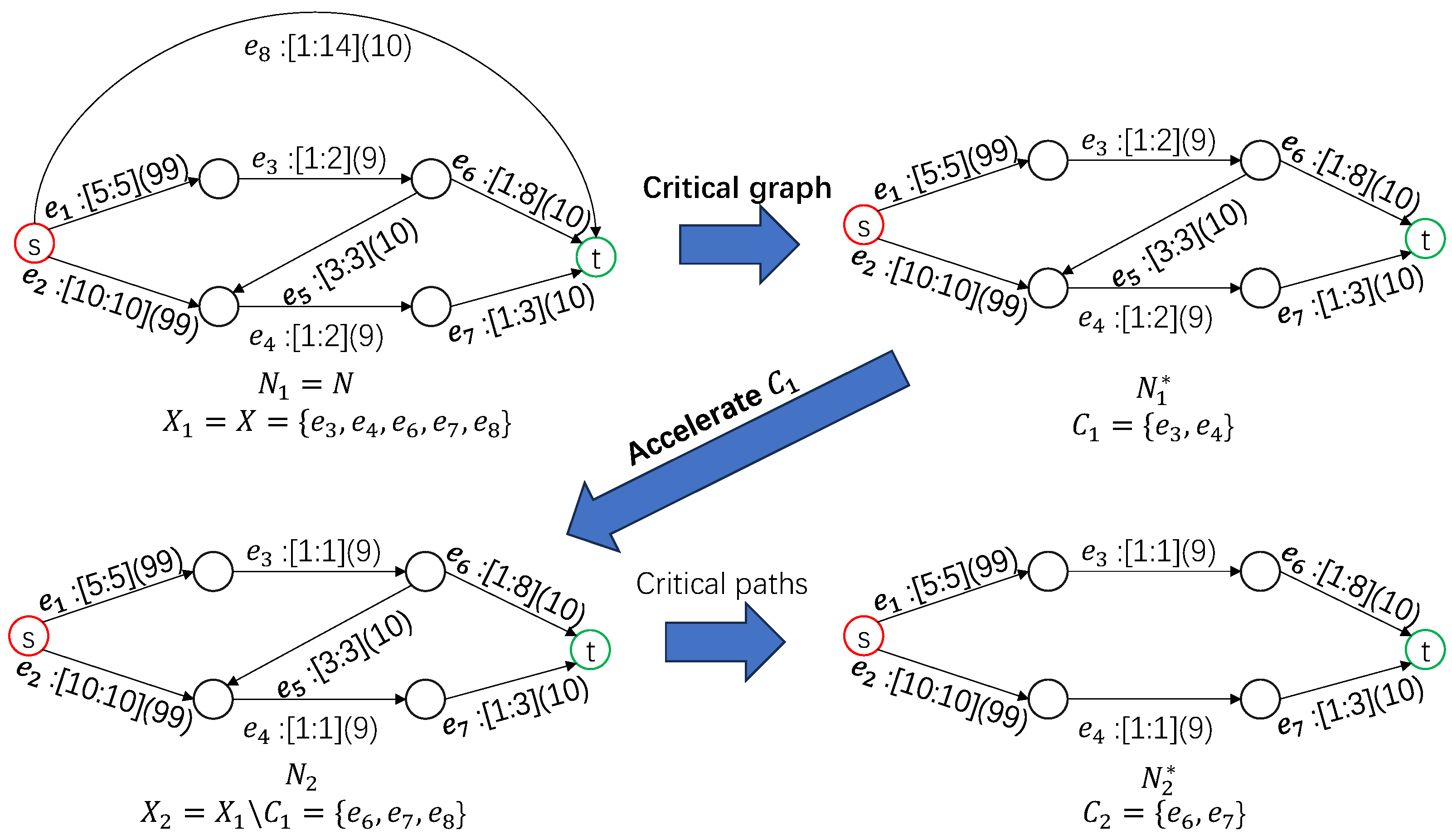

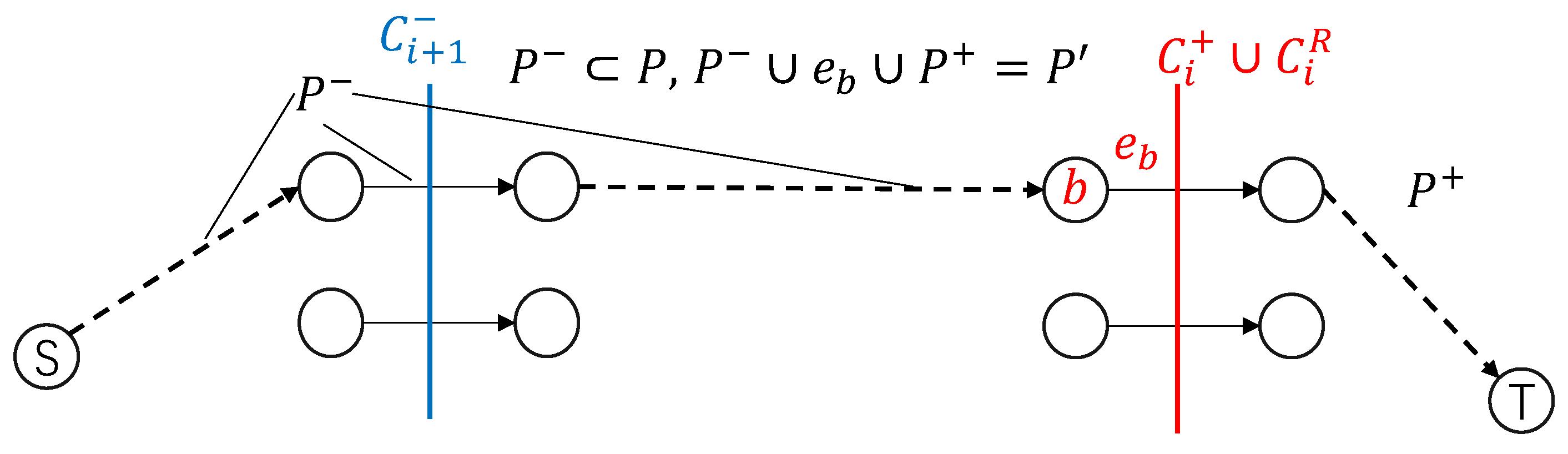

Assume

is fixed. In the following we prove that

, as stated in Lemma 2. Some additional notation shall be introduced here. See

Figure 3 and

Figure 4.

Assume the cut of divides the vertices of into two parts, , where and . The edges of are divided into four parts as follows: 1. —the edges within ; 2. —the edges within ; 3. —the edges from to ; and 4. —the edges from to .

Proposition 3. (1) and (2) .

Proof. (1) Consider . Any path involving goes through at least twice. Such paths are shortened by by at least 2 and are thus excluded from . However, are the edges in and so are included in . Together, .

(2) Suppose there is an edge and . All paths in passing will be shortened by at least 2 after expediting to avoid becoming a critical path (which makes critical). If the shortening of is canceled, the paths can still be shortened by 1. So still contains a cut to , which violates the assumption that is the minimum cut and is contradictory. So . □

Because

is a subset of the edges of

, and the edges of

are also in

, we see

. Furthermore, since

(Proposition 3), set

consists of the following three disjoint parts:

Due to

(Proposition 3), set

consists of four disjoint parts as follows:

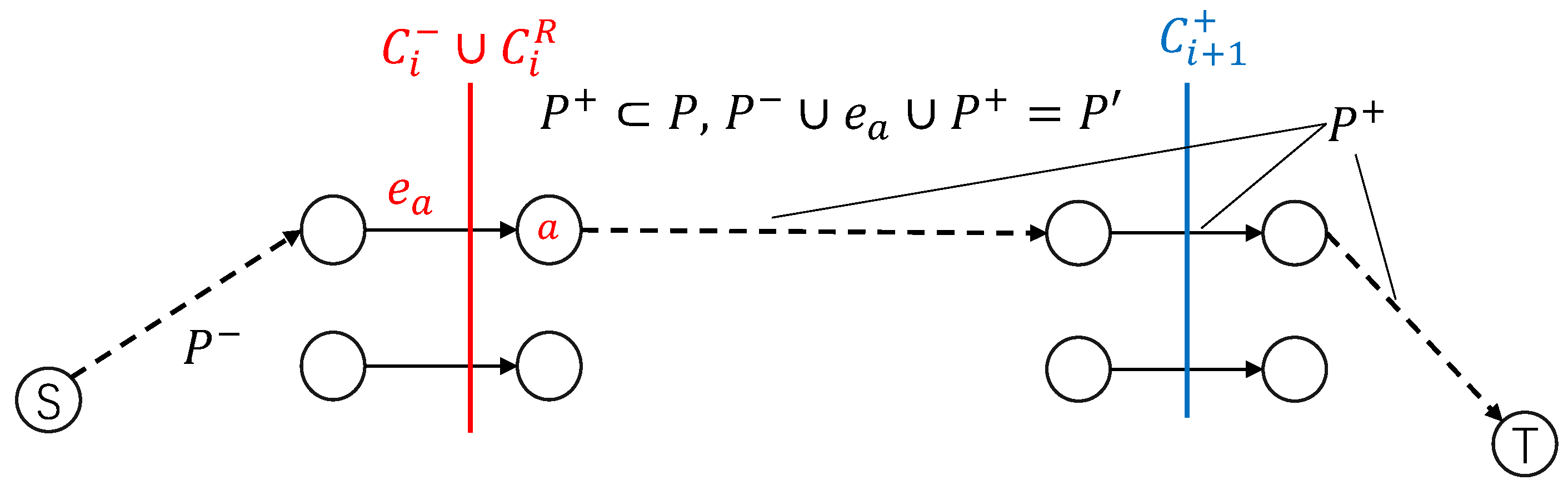

See

Figure 4 for an illustration. Note that

.

Proposition 4.

1. contains a cut of .

2. contains a cut of .

Proof. To show that contains a cut of , it is sufficient to prove that any path P in goes through .

Assume that P is disjoint with ; otherwise, it is trivial. We shall prove that P goes through .

Clearly,

P goes through

, a cut of

. Therefore,

P goes through

. See

Figure 5.

Take the last edge in that P goes through; denoted by with endpoint a. Denote the part of P after by ().

We now claim that

(1) .

(2) In , there must be a path from the source to that does not pass through ().

(3) .

Since P is disjoint with and has gone through , we obtain (1).

If (2) does not hold, then all paths in that pass through have passed through already. In this case, also contains a cut of , which contradicts our definition of minimum cut . Thus, we have (2).

By definition (

4), any edge of

ends at a vertex of

. Since

a is the endpoint of

, we have (3).

By (2), we can obtain a from s to . Concatenating , we obtain a path in . and , only goes through once. So is only shortened by 1 and is still critical after expediting . Therefore, exists in .

According to (3), we know that starts with and ends at the sink in . Thus, it must go through cut .

According to (1) and definition (

3), path

(which is a subset of

due to (1)) can only go through

.

Since , we know that P goes through . So any path P in goes through .

Therefore, contains a cut of .

Symmetrically, we can show that contains a cut of , □

We are ready to prove Lemma 2.

Proof of Lemma 2. According to Proposition 4,

and

each contain a cut of

. Notice that

, so the mentioned two cuts are in

. Furthermore, since

is the minimum cut of

in

. We obtain

By adding the inequalities above (and noting that

), we have

By removing one piece of

from both sides,

Therefore, . □

Moreover, with Lemma 2, we can now prove that the costs of the greedy steps are non-decreasing during Algorithm 1.

Corollary 1. For neighboring greedy steps and , we have .

Proof. Note that any neighboring greedy steps and can be seen as the two greedy steps of a 2-crashing problem. Therefore, we can reduce it to proving that for any 2-crashing problem.

In this case, recall the definitions related to Lemma 2. We now let and X be the greedy solution for the 2-crashing problem. Naturally, we have and . By definition, we know that and . Then, we have .

By Lemma 2, we know that . Since and , we have . □

With the upper bound of cost for Algorithm 1 (i.e., Theorem 1) and Corollary 1, we can also obtain the lower bound of k for the greedy algorithm when the budget is fixed and the target k is not.

For this symmetric problem, we describe the algorithm as follows.

Note that Algorithms 1 and 2 are essentially the same procedure. The difference is merely the termination condition of the loop. Therefore, for the same k-crashing result, they deliver exactly the same plan. Then, for the k in Algorithm 2, we have the following corollary. (For simplicity, we use the harmonic number $H_k = \frac{1}{1} + \frac{1}{2} + \cdots + \frac{1}{k}$ below.)

| Algorithm 2: Greedy algorithm for finding a crashing plan with a limited budget a. |

![Mathematics 13 02234 i002]() |
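Since the two algorithms differ only in when the loop stops, the budgeted variant can be illustrated on top of the sequence of greedy step costs. The sketch below assumes that the loop ends as soon as the next greedy step no longer fits into the remaining budget; this stopping rule, the function name, and the input format are assumptions made here, because the algorithm listing itself is not reproduced in the text.

```python
def days_within_budget(step_costs, budget):
    """step_costs: costs of consecutive greedy 1-crashing steps, in order.
    Returns (number of days saved, amount spent) under the given budget."""
    spent, days = 0, 0
    for cost in step_costs:
        if spent + cost > budget:
            break                 # the next step is not affordable any more
        spent += cost
        days += 1
    return days, spent


# Step costs of a hypothetical run of the greedy algorithm.
print(days_within_budget([2, 6, 7], 10))  # (2, 8)
```

Because the greedy step costs never decrease from one step to the next (Corollary 1), stopping at the first unaffordable step loses nothing: no later step could be cheaper.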

Corollary 2. For budget a, project . Suppose we can shorten the network by with Algorithm 2 and by in the optimum solution. We have Proof. Suppose Algorithm 2 can deliver a

-crashing plan with a budget of at least

x. Since Algorithm 1 delivers the same plan for the

-crashing problem, by Theorem 1, we have

Then, by Corollary 1, the costs of the greedy steps are non-decreasing, and hence so is the average cost per step of the greedy procedure. Therefore, we have

□

6. k-Extending Problem

In Section 3, we have proven the upper bound for Algorithm KD in the k-crashing problem. The result naturally leads us to a similar problem. With most of the notation unchanged, we now consider the network to be undirected in this k-extending problem. In this problem, we try to extend the length of the project (i.e., to lengthen all the shortest paths from s to t in the network). This problem shares many properties with the k-crashing problem. Using a similar technique, we can obtain the same $\frac{1}{1} + \frac{1}{2} + \cdots + \frac{1}{k}$ upper bound for the greedy algorithm of the k-extending problem below.

The following notation differs in this section from the k-crashing context.

- Project N.

Assume $N = (V, E)$ is an undirected graph with a source node s and a sink node t. Each edge $e_i \in E$ has three attributes $(a_i, b_i, c_i)$, where $a_i$ denotes its current duration, $b_i$ denotes its maximum duration, and $c_i$ denotes its cost per unit extension.

- Shortest paths and critical edges.

A path from s to t with the shortest length is called a shortest path. The duration of N equals the length of the shortest paths. There may be more than one shortest path. An edge that belongs to some shortest path is called a critical edge.

- Extend plan X.

Denote by $\mathcal{E}$ the multiset of the edges of N that contains $e_i$ with a multiplicity $b_i - a_i$. Each subset X of $\mathcal{E}$ (which is also a multiset) is called an extend plan, or plan for short. The multiplicity of $e_i$ in X, denoted by $x_i$, describes by how much the length of $e_i$ is extended; i.e., $e_i$ takes $a_i + x_i$ days when plan X is applied. The cost of plan X, denoted by $\mathrm{cost}(X)$, is $\sum_i c_i x_i$.

- Extended project $N(X)$.

Define $N(X)$ as the project that is the same as N, but with $a_i$ increased by $x_i$; in other words, $N(X)$ stands for the project extended with plan X.

- k-extending.

We say a plan X is k-extending, if the length of the shortest paths of the project N is extended by k when we apply plan X.

Formally, for a k-extending problem, we have

Theorem 3. Let $G$ be the k-extending plan found by Algorithm 3. Let OPT denote the optimum k-extending plan. Then,

$$\mathrm{cost}(G) \le \left(\frac{1}{1} + \frac{1}{2} + \cdots + \frac{1}{k}\right) \mathrm{cost}(\mathrm{OPT}).$$

Let $N^*$ denote the network consisting of all the shortest paths (from s to t) in N. Although the network N is undirected in this case, we can still assign a direction to every critical edge of the network and thus treat $N^*$ as a directed network.

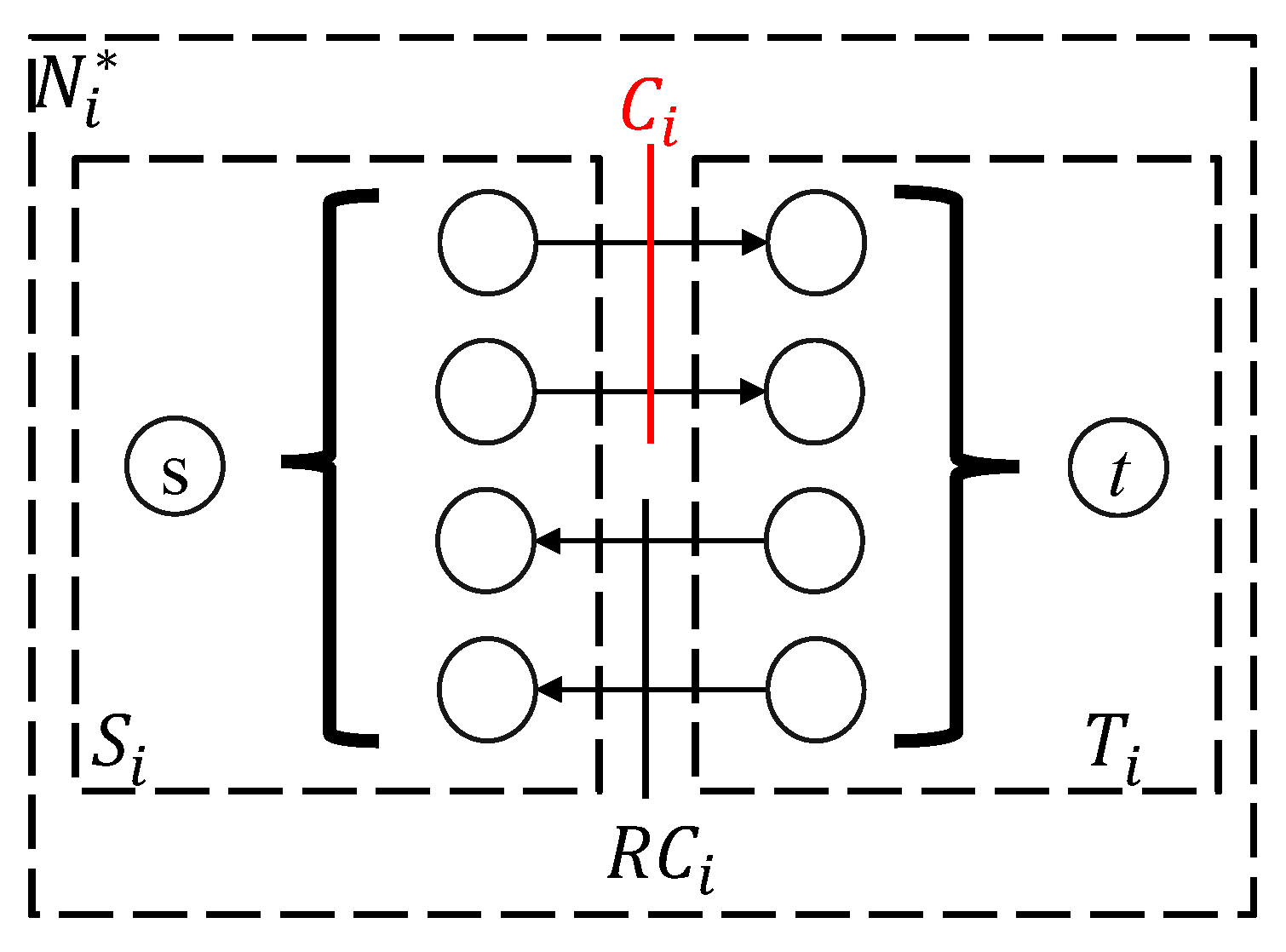

| Algorithm 3: Greedy algorithm for finding a k-extending plan. |

![Mathematics 13 02234 i003]() |

For a given network and two nodes a and b, let $d_a$ and $d_b$ denote the lengths of their shortest paths to s. If $d_a < d_b$, the edge between a and b (if it exists) is assigned a direction from a to b, since a comes before b in all shortest paths. As a result, we can always assume that each edge in the shortest paths has a direction.

Consequently, although the original network is not directed, the shortest path network $N^*$ can be treated as a directed network. See Figure 9 for an example.
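The orientation rule above can be sketched with two runs of Dijkstra's algorithm: an undirected edge is kept, and directed from its endpoint closer to s, exactly when it lies on some shortest s-t path. The function name and the input format `(endpoint, endpoint, length)` are assumptions made here, and edge lengths are assumed to be positive.

```python
import heapq


def shortest_path_network(edges, s, t):
    """edges: undirected (u, v, length) triples with positive lengths.
    Returns (shortest s-t distance, oriented critical edges (a, b, length))."""
    adj = {}
    for u, v, w in edges:
        adj.setdefault(u, []).append((v, w))
        adj.setdefault(v, []).append((u, w))

    def dijkstra(root):
        dist = {root: 0}
        heap = [(0, root)]
        while heap:
            d, u = heapq.heappop(heap)
            if d > dist[u]:
                continue                      # outdated heap entry
            for v, w in adj.get(u, []):
                if d + w < dist.get(v, float("inf")):
                    dist[v] = d + w
                    heapq.heappush(heap, (d + w, v))
        return dist

    from_s, to_t = dijkstra(s), dijkstra(t)
    total = from_s[t]
    inf = float("inf")
    oriented = []
    for u, v, w in edges:
        for a, b in ((u, v), (v, u)):
            # The edge is used in direction a -> b by some shortest path.
            if from_s.get(a, inf) + w + to_t.get(b, inf) == total:
                oriented.append((a, b, w))
    return total, oriented


edges = [("a", "s", 1), ("a", "t", 2), ("s", "b", 2),
         ("t", "b", 1), ("a", "b", 5), ("s", "t", 4)]
total, oriented = shortest_path_network(edges, "s", "t")
print(total)     # 3
print(oriented)  # [('s', 'a', 1), ('a', 't', 2), ('s', 'b', 2), ('b', 't', 1)]
```

The edges `("a", "b", 5)` and `("s", "t", 4)` lie on no shortest path and are therefore dropped, while the remaining edges receive a direction regardless of the order in which their endpoints were listed.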

Similar to Proposition 1, we know that an optimum 1-extending plan contains a cut of the shortest path network $N^*$ (treated as directed, as described above).

Proposition 5. A k-extending plan X of N contains a cut of $N^*$.

Proof. Because X is k-extending, each shortest path of N will be extended in $N(X)$, and that means it contains an edge of X. Furthermore, since the paths of $N^*$ are the shortest paths of N (with directions now), each path of $N^*$ contains an edge in X.

As a consequence, after removing the edges in X that belong to $N^*$, we disconnect source s and sink t in $N^*$. Now, let S denote the vertices of $N^*$ that can still be reached from s after removal, and let T denote the remaining part. Observe that all edges from S to T in $N^*$ form a cut of $N^*$ that belongs to X. □

Let be the minimum cut of among all cuts of (a directed network) that belong to X.

- Decomposition of plan X

Recall the decomposition procedure in Section 3. We can execute a similar procedure for any k-extending plan X. Namely,

Next, let

denote the network

N without directions on its edges. For

, define (like in Equation (

2))

- Decomposition of plan and

Assume the cut of divides the vertices of into two parts, , where and . The edges of are divided into four parts as follows: 1. —the edges within ; 2. —the edges within ; 3. —the edges from to ; and 4. —the edges from to ;

Therefore, we can still divide

into three parts like in Equation (

3).

Then, just like in Equation (

4),

also consists of

Similar to Proposition 4, we can prove that

Proposition 6.

1. contains a cut of .

2. contains a cut of .

Proof. Symmetric to Proposition 4, here we prove that contains a cut of . To show that, it is sufficient to prove that any path P in goes through .

Assume that P is disjoint with ; otherwise, it is trivial. We shall prove that P goes through .

Clearly,

P goes through

, a cut of

. Therefore,

P goes through

. See

Figure 10.

Take the first edge in that P goes through; denoted by with start point b. Denote the part of P before by ().

We now claim that

(1) .

(2) In , there must be a path from to the sink that does not pass ().

(3) .

Since P is disjoint with and has not gone through yet, we obtain (1).

If (2) does not hold, then all paths in that pass through will pass through again. In this case, also contains a cut of , which contradicts our definition of minimum cut . Thus, we have (2).

By definition (

6), any edge of

starts at a vertex of

. Since

b is the start point of

, we have (3).

By (2), we can obtain a from to the sink. Concatenating , we obtain a path in . and , only goes through once. So is only extended by 1 and it is still the shortest after extending . Therefore, exists in .

According to (3), we know that ends with and starts at the source in . Thus, it must go through the cut .

According to (1) and definition (

5), path

(which is a subset of

due to (1)) can only go through

.

Since , we obtain that P goes through . So any path P in goes through .

Therefore, contains a cut of .

Symmetrically, we can show that contains a cut of . □

- Critical inequality

Notice that

, so the mentioned two cuts are in

. Furthermore, since

is the minimum cut of

in

, we obtain

By adding the inequalities above (and noting that

), we have

By removing one piece of

from both sides,

Therefore, .

- Obtaining Theorem 3

Since

is the minimum cut of

, we have

. Thus, by

and

, we have

can be seen as the

for a

-extending problem and

contains a plan for the

-extending problem. Similar to Theorem 1, we can derive

The above inequality is exactly the statement of Theorem 3.