Abstract

The current design of reinforcement learning methods requires extensive computational resources. Algorithms such as Deep Q-Network (DQN) have obtained outstanding results in advancing the field. However, the need to tune thousands of parameters and run millions of training episodes remains a significant challenge. This document presents a comparative analysis between the Q-Learning algorithm, which laid the foundations for Deep Q-Learning, and our proposed method, termed M-Learning. The comparison is conducted using Markov Decision Processes with delayed rewards as a general test bench framework. Firstly, this document describes the main challenges related to implementing Q-Learning, particularly concerning its multiple parameters. Then, the foundations of our proposed heuristic are presented, including its formulation, and the algorithm is described in detail. To compare both algorithms, agents were trained with each of them in the Frozen Lake environment. The experimental results, along with an analysis of the best solutions, demonstrate that our proposal requires fewer episodes and exhibits reduced variability in the outcomes. Specifically, M-Learning trains agents 30.7% faster in the deterministic environment and 61.66% faster in the stochastic environment. Additionally, it achieves greater consistency, reducing the standard deviation of scores by 58.37% and 49.75% in the deterministic and stochastic settings, respectively. The code will be made available in a GitHub repository upon this paper’s publication.

Keywords:

reinforcement learning; exploration–exploitation dilemma; Q-learning; frozen lake; heuristic approach

MSC:

68Q32; 68T05

1. Introduction

The current development of artificial intelligence (AI) is strongly associated with high costs and extensive computational resources [1], and the field of reinforcement learning (RL) is no exception to this challenge. Algorithms such as the Deep Q-Network (DQN) [2] have achieved significant results in advancing RL by integrating neural networks. However, the requirement for thousands or millions of training episodes persists [3]. Training agents in parallel has proven to be an effective strategy for reducing the number of episodes required [4,5], which is feasible in simulated environments but poses significant implementation challenges in real-world physical training environments.

In the context of reinforcement learning, Markov Decision Processes (MDPs) are typically used as the mathematical model to describe the working environment [6,7]. MDPs have enabled the development of classical algorithms such as Value Iteration [8], Monte Carlo, State-Action-Reward-State-Action (SARSA), and Q-Learning, among others.

One of the most widely used algorithms is Q-Learning, introduced by Christopher Watkins in 1989 [9], upon which various proposals have been based [10]. The main advantage of this algorithm is that it converges to an optimal policy, one that maximizes the reward obtained by the agent, provided that every state-action pair is visited an infinite number of times [9]. The Q-Learning algorithm implemented in this work was taken from [9] and is shown in Algorithm 1.

| Algorithm 1: Q-Learning algorithm |

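Algorithm parameters: step size $\alpha \in (0, 1]$, small $\varepsilon > 0$

Initialize $Q(s,a)$, for all $s \in S^{+}$, $a \in A(s)$, arbitrarily except that $Q(\text{terminal}, \cdot) = 0$

Loop for each episode:

- Initialize $s$
- Loop for each step of the episode:
- - Choose $a$ from $s$ using policy derived from $Q$ (e.g., $\varepsilon$-greedy)
- - Take action $a$, observe $r$, $s'$
- - Update $Q(s,a) \leftarrow Q(s,a) + \alpha \left[ r + \gamma \max_{a'} Q(s',a') - Q(s,a) \right]$
- - $s \leftarrow s'$
- until $s$ is terminal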
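As an illustration of the update step in Algorithm 1, the following minimal Python sketch applies one tabular Q-Learning update. The function name `q_learning_update`, the dictionary-of-lists table layout, and the example state indices and parameter values are assumptions made for this sketch; they are not taken from the implementation used in this work.

```python
from collections import defaultdict


def q_learning_update(Q, s, a, r, s_next, done, alpha=0.1, gamma=0.9):
    """Apply one tabular Q-Learning update in place and return the new value."""
    # The bootstrap term is zero when the next state is terminal.
    best_next = 0.0 if done else max(Q[s_next])
    td_target = r + gamma * best_next
    Q[s][a] += alpha * (td_target - Q[s][a])
    return Q[s][a]


# Hypothetical example: four actions per state, table initialized to zero.
n_actions = 4
Q = defaultdict(lambda: [0.0] * n_actions)

# The agent moves from state 14 to the terminal state 15 and receives a reward of 1.
new_value = q_learning_update(Q, s=14, a=2, r=1.0, s_next=15, done=True, alpha=0.5)
```

With these illustrative values, only the entry for the action taken in state 14 changes; all other entries keep their initial value.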

Some disadvantages of Q-Learning include a rapid table size growth as the number of states and actions increases, leading to the problem of dimensional explosion [11]. Furthermore, this algorithm tends to require a significant number of iterations in order to solve a problem, and adjusting its multiple parameters can prolong this process.

Regarding its parameters, Q-Learning requires directly assigning the learning rate $\alpha$ and the discount factor $\gamma$, which are generally tuned empirically through trial and error by observing the rewards obtained in each training session [12].

The learning rate directly influences the magnitude of the updates made by the agent to its memory table. In [13], eight different methods for tuning this parameter are explored, including iteration-dependent variations and changes based on positive or negative table fluctuation. The experimental results of [13] show that, for the Frozen Lake environment, the rewards converge only after more than 10,000 iterations across all conducted experiments.

In Q-Learning, the exploration–exploitation dilemma is commonly addressed using the $\varepsilon$-greedy policy, where, with a certain probability, the agent chooses a random action instead of the action with the highest Q-value. Current research around the algorithm focuses extensively on the exploration factor $\varepsilon$, as described in [14], highlighting its potential to cause exploration imbalance and slow convergence [15,16,17].
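The decision rule described above can be sketched as follows. This is a minimal illustration, not the implementation used in this work; the function name `epsilon_greedy` and the example values are hypothetical.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Return a random action with probability epsilon, else the greedy action."""
    if rng.random() < epsilon:
        # Explore: every action is equally likely.
        return rng.randrange(len(q_values))
    # Exploit: index of the highest Q-value (first one on ties).
    return max(range(len(q_values)), key=q_values.__getitem__)


greedy_choice = epsilon_greedy([0.1, 0.9, 0.3], epsilon=0.0)
random_choice = epsilon_greedy([0.1, 0.9, 0.3], epsilon=1.0)
```

With `epsilon=0.0` the rule is purely greedy, and with `epsilon=1.0` it is purely random, which is why the way $\varepsilon$ changes over training has such a strong effect on convergence.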

The reward function significantly impacts the agent’s performance, as reported in various applications. For instance, in path planning tasks for mobile robots [18], routing protocols in unmanned robotic networks [19], and the scheduling of gantry workstation cells [20], the reward function plays a crucial role by influencing the observed agent performance variations.

Regarding the initial values of the Q-table, the algorithm does not provide specific recommendations for their assignment. Some works, such as [21], which utilize the whale optimization algorithm proposed in [22], aim to optimize the initial values of the Q-table, addressing the slow convergence caused by Q-Learning’s initialization issues.

The reward function directly impacts the agent’s training time. The reward can guide the agent through each state or be sparse across the state space, requiring the agent to take many actions and visit many states before receiving any reward, generally at the end of the episode, thereby learning from delayed rewards. As concluded in [23] (p. 4), when analyzing the effect of dense and sparse rewards in Q-Learning, “an important lesson here is that sparse rewards are indeed difficult to learn from, but not all dense rewards are equally good”.

The proposed heuristic is inspired by key conceptual elements of Q-Learning, such as the use of a table for storage, and suggests an alternative approach to addressing the exploration–exploitation dilemma based on the probability of actions. This heuristic also aims to reduce the complexity of adjustments and the time required to apply Q-Learning. This algorithm, termed M-Learning, will be explained in detail and referenced throughout this document.

The primary contributions of this study revolve around the development of the M-Learning algorithm, designed to tackle reinforcement learning challenges involving delayed rewards. Firstly, the algorithm introduces a self-regulating mechanism for the exploration–exploitation trade-off, eliminating the additional parameter tuning typically required by $\varepsilon$-greedy policies. This advancement simplifies the configuration process and enables the system to dynamically balance exploration and exploitation. Secondly, M-Learning employs a probabilistic framework to assess the value of actions, allowing for a comprehensive update of all action probabilities within a state through a single environmental interaction. This design enhances the algorithm’s responsiveness to changes, improving the utilization of computational resources and increasing the overall effectiveness of the learning process.

2. Defining the M-Learning Algorithm

The main idea of M-Learning is to determine what constitutes a solution in an MDP task for the agent and how this solution can be understood in terms of the elements that make up Markov processes. The purpose is to find solutions that are not necessarily optimal. Q-Learning, on the other hand, searches for the optimal solution through the reward function and the $\varepsilon$-greedy policy, both of which must be tuned when solving general tasks.

The first consideration involves establishing a foundation for the reward function. In the MDP framework, the solutions to a task are represented as trajectories composed of sequences of states. In Q-Learning, the objective is to find the trajectory that maximizes the reward function. Our approach, by contrast, focuses on trajectories that connect to a specific state. The objective of M-Learning is to find a particular state $s$ within the set $S$, referred to as the objective state. Accordingly, this work proposes assigning the maximum reward value to the objective state and the minimum reward value to the terminal states. The rationale behind this approach is to encourage the agent to remain active throughout the episode.

During the RL cycle, the reward signal aims to guide the agent in searching for a solution. Through it, the agent obtains feedback from the environment as to whether its actions are favorable. Q-Learning uses the reward to modify and estimate the action-state value. However, in environments where the reward is sparsely distributed and the agent receives no feedback from the environment most of the time, the Q-Learning algorithm must spend exploration time searching for the reward, which leads to the exploration–exploitation dilemma, usually tackled with an $\varepsilon$-greedy policy.

Using the $\varepsilon$-greedy policy as a decision-making mechanism requires setting the decay rate parameter, which determines how long the agent will explore while seeking feedback from the environment. This parameter is decisive in reducing the number of iterations needed to find a solution. Additionally, it is not related to the learning process; rather, it is external to the knowledge being acquired. A favorable value can only be assigned by interacting with the problem computationally, varying the decay rate and the number of iterations. It should be kept in mind that this value is also conditioned by the other parameters that need to be defined.

If the reward is considered to be the only source of stimulus for the agent in an environment with dispersed rewards, the agent must explore the state space in order to interact with states where it obtains a reward and, based on them, modify its behavior. As these states are increasingly visited, the agent should be able to reduce the level of exploration and exploit more of its knowledge about the rewarded states. The agent explores in search of stimuli, and, to the extent that it acquires knowledge, it also reduces arbitrary decision making. The exploration–exploitation process can be discretely understood with regard to each state in the state space. Thus, the agent can propose regions that it knows in the state space and, depending on how favorable or unfavorable they are, reinforce the behaviors that lead it to achieve its objective or seek to explore other regions.

As previously mentioned, exploration and decision-making are closely linked to how the reward function is interpreted. The specific proposal of M-Learning consists of the following:

- First, the reward function is limited to values between −1 and 1, assigning the maximum value for reaching the goal state and the minimum for terminal states.

- Second, each action in a state has a percentage value that represents the level of certainty regarding the favorability of said action. Consequently, the values of all actions in a state sum to 1. Initially, all actions are equally probable.

Q-Learning also rewards and penalizes the agent in a similar way, but the effect of this process can be obscured by the exploration probability $\varepsilon$. For example, if the agent reaches a terminal state in the initial phase of an episode, the environment punishes it through the reward function, and this learning is stored in its table. In subsequent episodes, this learned information has a low probability of being used by the agent since the exploration probability is high. In M-Learning, this learned knowledge is available for the next episode because it modifies the value of the actions that lead to the terminal state.

Note that the values of the actions are expressed as probabilities, which are used to select the action in each state. Thus, when interacting with the environment, favorable actions are more likely to be selected than unfavorable ones. The principle is that, when the agent has no prior knowledge of a state, all actions have an equal probability. As the agent learns, this knowledge is stored in the variations of these probabilities. The M-Learning algorithm is presented in Algorithm 2, and the notation with its definitions is shown in Table 1.

| Algorithm 2: M-Learning |

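Initialize $Q(s,a)$ for all $s \in S$, $a \in A(s)$, with equal values within each state (Equation (1)), and set the saturation parameter (Equation (12))

Loop for each episode:

- Initialize $s$
- Loop for each step of the episode:
- - Choose $a$ from $s$ using the probabilities $Q(s,\cdot)$ (Equation (2))
- - Take action $a$, observe $r$, $s'$
- - Update $Q(s,a)$ according to Equation (3)
- - Resize the probabilities of $Q(s,\cdot)$ so that they sum to 1 (Equation (13)), $s \leftarrow s'$
- until $s$ is terminal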

Table 1.

Notation and Definitions.

The first step in the M-Learning algorithm is to initialize the table, unlike in Q-Learning, where the initial value can be set arbitrarily. The value of each state-action is initialized as a percentage of the state according to the number of actions available. Thus, the initial value for each action in a state will be the same as shown in (1).

Once the table has been initialized, the cycle of episodes begins. The initial state of the agent is established by observing the environment. The cycle of steps begins within the episode, and, based on its initial state, the agent chooses an action to take. To this effect, it evaluates the current state according to its table, obtains the values for each action, and randomly selects one using its value as the probability of choice. Thus, the value of each action represents its probability of choice for a given state, as presented in Equation (2).
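The initialization and the probability-based action selection described above can be sketched in a few lines of Python. The names `init_table` and `choose_action`, as well as the dictionary-of-lists table layout, are assumptions made for this illustration.

```python
import random


def init_table(actions_per_state):
    """Give every action in a state the same initial value, 1 / number of actions."""
    return {s: [1.0 / n] * n for s, n in actions_per_state.items()}


def choose_action(table, state, rng=random):
    """Sample an action, using the stored action values as selection probabilities."""
    probs = table[state]
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


# Hypothetical example: 16 states with four actions each.
table = init_table({s: 4 for s in range(16)})
action = choose_action(table, state=0)
```

Because the stored values are used directly as sampling weights, no separate exploration parameter is needed: a state with uniform values is explored uniformly, and a state with a dominant action is mostly exploited.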

After selecting an action, the agent acts on the environment and observes its response in the form of a reward and a new state. After transitioning to the new state, it evaluates the action taken and modifies the value of the action-state according to (3).

The percent modification value $m$ of the previous state-action is computed while considering the existence of the reward (4). If a reward exists, $m$ is calculated based on the current reward or on both the current and previous rewards. If there is no reward, it is calculated as the differential of the information contained in the current state and the previous one.

When the environment rewards the agent for its action, $m$ is a measure of the approach to the objective state. Specifically, in the best case, the new state is the target state, with the maximum positive reward. In the opposite case, the new state is radically opposed to the target state, and its reward is maximal in magnitude but negative. When the reward is not maximal, the value of $m$ (5) may be calculated through a function that maps the reward differential onto a bounded range. For this case, a first-order relationship is proposed.

When the reward is not maximal, it is necessary to calculate $m$ dynamically in proportion to the magnitude of the reward. This presents a challenge for tuning and increases the difficulty of adjusting the algorithm. Consequently, using terminal rewards of 1 and −1 provides an advantage, as it simplifies the reward structure by eliminating the need for fine-grained adjustments. Since this setup corresponds to a more complex environment that extends beyond the primary objective of this work’s target environment, further exploration of this aspect is not pursued.

If the reward function of the environment is described in such a way that its possible values are (1, 0, −1), the calculation of $m$ can be simplified. This describes environments where it is difficult to establish a continuous signal that correctly guides the agent through the state space. In these situations, it may be convenient to generate a stimulus for the agent only upon achieving the objective or causing the end of the episode. Accordingly, Equation (4) can be rewritten as (6):

When the environment does not return a reward, the agent evaluates the correctness of its action based on the difference in the information stored regarding its current and previous state, as depicted in Equation (7). This is the way in which the agent spreads the reward stimulus.

To calculate the information for a state, Equation (8) evaluates the difference between the value of the maximum action and the mean of the possible actions in said state. The mean value of an action is also its initial value, which indicates that no knowledge is available in that state for the agent to use in the selection process. In other words, the variation with respect to the mean is a percentage indicator of the agent’s level of certainty when selecting a particular action.

Since the number of actions available in the previous and current states may differ, it is necessary to put them on the same scale to allow for comparison. When calculating the mean value of the actions, the maximum variation in information is also determined, which represents the change required in the value of an action for it to have a probability of 1. The maximum information is, therefore, the difference between the maximum and the mean action value, as shown in Equation (9).

In order to modify the previous state-action, the information value of the current state is adjusted to align with the information scale of the previous state, as shown in Equation (10). For example, consider two states with different numbers of available actions whose best actions have the same value. The certainty of the best action is the same in both states, but the information differs due to the number of actions in each state. In the state with more actions, the best action competes with two additional options; therefore, the information of the states must be adjusted for a correct comparison.
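The information measure and its rescaling can be illustrated with a short Python sketch. It follows the verbal description only: information is the best action value minus the mean, and the maximum information is 1 minus the mean. The proportional rescaling in `rescaled_information` is an assumption about how the scales could be aligned, not a reproduction of Equation (10). All function names and example values are hypothetical, and degenerate states (a single action, or all values at zero) are not handled.

```python
def information(action_values):
    """Best action value minus the mean action value of the state."""
    mean = sum(action_values) / len(action_values)
    return max(action_values) - mean


def max_information(n_actions):
    """Change needed for an action to go from the uniform value to a probability of 1."""
    return 1.0 - 1.0 / n_actions


def rescaled_information(current_values, n_prev_actions):
    """Express the current state's information on the previous state's scale (assumed form)."""
    fraction = information(current_values) / max_information(len(current_values))
    return fraction * max_information(n_prev_actions)


# Two hypothetical states whose best action has the same value, 0.8.
two_actions = [0.2, 0.8]
four_actions = [0.1, 0.8, 0.05, 0.05]

info_two = information(two_actions)    # measured against a mean of 0.5
info_four = information(four_actions)  # measured against a mean of 0.25
aligned = rescaled_information(four_actions, n_prev_actions=2)
```

In this example the same best-action value of 0.8 yields different raw information in the two states, which is why a common scale is needed before the values are compared.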

It is possible that, for a given state, all actions are restricted to the extent that the value of all possible actions becomes zero, making it impossible to calculate the maximum information for that state. Therefore, a negative constant is assigned to indicate that the action taken leads to an undesirable state. Consequently, the information differential is defined according to Equation (11).

The saturation magnitude is a parameter that controls the maximum saturation of an action during propagation. It controls how closely the value of an action can approach the possible extremes of 0 and 1.

This parameter sets the maximum certainty for an action and, with it, the lowest probability value that an action can take. It is derived from the number of available actions, as given by Equation (12):

After calculating $m$ and modifying the action-state value, the probabilities within the state must be redistributed so that they sum to 1. This is performed by applying Equation (13) to the actions of the previous state.

Thus, variations in the value of the selected action affect the rest of the actions. The principle is that, as the agent becomes increasingly certain about one action, the likelihood of selecting other actions decreases. Conversely, reducing the likelihood of selecting a particular action increases the certainty of choosing the others.
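The redistribution effect can be illustrated with a minimal Python sketch. Dividing every value by the sum of the state's values is one straightforward way to restore a total of 1 and is used here as an assumption; the function name `normalize` and the increment of 0.25 are hypothetical and are not taken from Equations (3) or (13).

```python
def normalize(action_values):
    """Rescale the action values of one state so that they sum to 1."""
    total = sum(action_values)
    if total <= 0:
        raise ValueError("at least one action value must be positive")
    return [v / total for v in action_values]


# Reinforcing one action lowers the probability of all the others.
values = [0.25, 0.25, 0.25, 0.25]
values[1] += 0.25  # hypothetical increment applied to the selected action
reinforced = normalize(values)

# Penalizing one action raises the probability of all the others.
values = [0.25, 0.25, 0.25, 0.25]
values[1] -= 0.15  # hypothetical decrement applied to the selected action
penalized = normalize(values)
```

In both cases a single interaction changes the selection probability of every action in the state, not only that of the action taken.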

Similarly to the $\varepsilon$-greedy approach, if a minimum level of exploration is to be maintained, it is necessary to restrict the maximum value that an action can reach. This is controlled by the saturation parameter, which affects the redistribution of probabilities when the value of the maximum action exceeds the saturation limit. In such cases, the value of that action is reduced so that it matches the saturation limit when applying Equation (14).

Finally, the process returns to the beginning of the cycle for the specified number of steps and continues executing the algorithm until either the step limit is reached or a terminal state is encountered.

Example of an M-Learning Application

Suppose that the agent is, for the first time, in the state preceding the objective state and that four actions are available to it. The initial values of these actions in the table for this state are shown in Equation (15):

The agent selects an action using an equal probability of 0.25 for each option. Suppose that the agent selects the action leading to the objective state, for instance, action two. The agent executes the action, the environment transitions to the objective state, and the agent receives a reward of 1. The agent updates its state, as expressed in Equation (16).

Now, the agent updates its table according to Equation (3). First, the value of $m$ is computed using Equation (4); because a reward was received, $m$ follows from Equation (6) for environments with (1, 0, −1) rewards. The updated value for action two is calculated in Equation (17).

The new value of action two in the table for this state is shown in Equation (18). Clearly, the sum of the action values now exceeds 1, requiring normalization in the next step of the M-Learning algorithm.

Applying Equation (13) for this state, the normalized values of the actions are computed as follows in Equation (19):

The next time the agent reaches this state, it will be more likely to choose action two to obtain the reward, progressively reinforcing this action for this state as it continues to receive rewards.

3. Experimental Setup

To compare the performance of M-Learning and Q-Learning, agents were trained in two distinct environments: one deterministic and one stochastic.

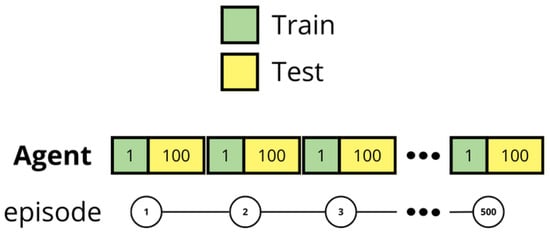

The methodology involved evaluating the agents after each training episode. During the training process, the agent was allowed to update its memory table according to the specific algorithm being employed. Following each training episode, the agent attempted to achieve the objective in the environment using the resulting memory table. This evaluation was repeated 100 times, during which the agent’s memory table remained fixed and could no longer be modified. As illustrated in Figure 1, during the training phase, the agent updates its memory table, whereas, during the testing phase, it uses its current memory table without making further modifications. During the test, exploration mechanisms are disabled, including the $\varepsilon$-greedy policy in Q-Learning and the action probability in M-Learning.

Figure 1.

Overview of the methodology used to train and test the methods.

The test score used to compare both algorithms is based on the number of times the agents successfully locate the objective during testing. Evaluation is conducted over 100 attempts, measuring how often the agent achieves success following a training episode. In a deterministic environment, the outcomes are binary, resulting in either a 100% loss rate or a 100% success rate. This evaluation method is particularly valuable in stochastic environments, as it assesses the agent’s success rate under uncertain conditions.

The purpose of this test score is to evaluate how effectively the agents learn in each episode when applying the respective algorithm (Q-Learning or M-Learning). The policy implemented by both algorithms in the test involves always selecting the action with the highest value in the memory table for the current state. This approach enables us to measure the agents’ learning progress and their ability to retain the knowledge acquired during training.

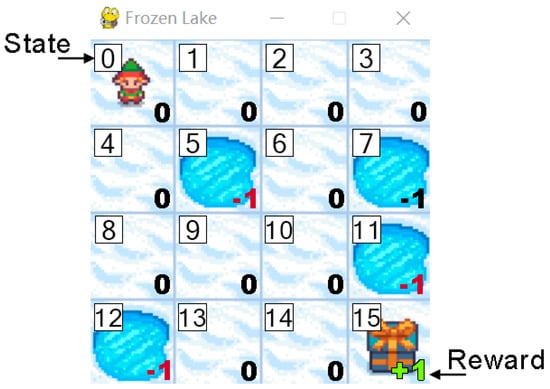

The game used for this study is Frozen Lake (Figure 2), where the objective is to cross a frozen lake without falling through a hole. The game is tested in its two modes: slippery and non-slippery, representing stochastic and deterministic environments, respectively. The tiles and, thus, the possible states of the environment are represented as integers from 0 to 15. From any position, the agent can move in four possible directions: left, down, right, or up. If the agent moves to a hole, it receives a final reward of −1, and the learning process terminates. Conversely, reaching the goal results in a reward of 1. Any other position where the agent moves results in a reward of 0.

Figure 2.

The Frozen Lake environment with some states and rewards.

This environment presents two variations, each representing a distinct problem for the agent. In the first variation, the environment always moves the agent in the intended direction, and the state transition function is deterministic. In the second variation, when the agent attempts to move in a certain direction, the environment may or may not move it as intended, depending on a predefined probability. Specifically, the agent slips with a fixed probability and then moves in a direction orthogonal to the intended one. In this case, the state transition function is stochastic.

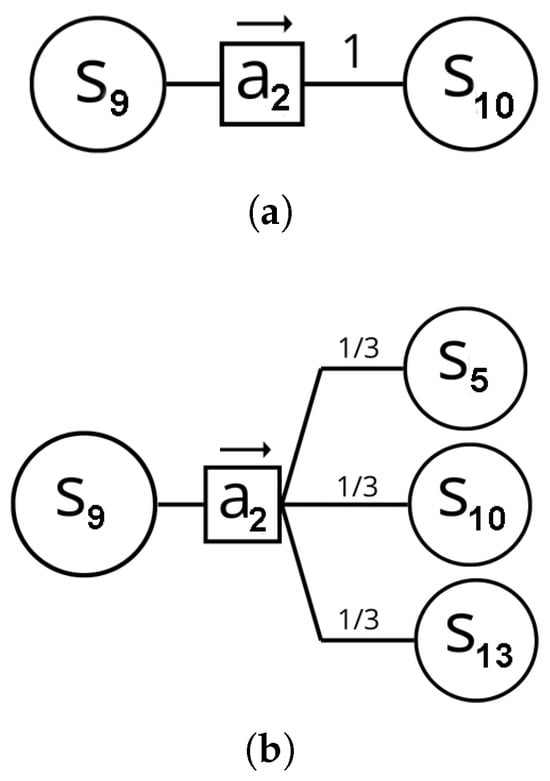

Refer to Figure 3 for a representation of the transitions. Suppose that the agent takes the action of moving to the right from a given state. In the deterministic environment, the probability of transitioning to the state on the right is 1. In the stochastic environment, this probability is lower, and there are two other possible outcomes: moving in either of the two orthogonal directions. In conclusion, in the stochastic environment, the total probability is split between moving in the intended direction and moving in an orthogonal direction.
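The two transition regimes can be sketched as a direction-sampling step. The equal split of one third for the intended direction and one third for each orthogonal direction is an assumption based on the default slippery behavior documented for Gymnasium's Frozen Lake; the function names are hypothetical, and the action encoding (left = 0, down = 1, right = 2, up = 3) follows the order listed for the environment.

```python
import random

LEFT, DOWN, RIGHT, UP = 0, 1, 2, 3


def slippery_outcomes(action):
    """Possible actual directions and their probabilities (assumed equal split)."""
    return [((action - 1) % 4, 1 / 3), (action, 1 / 3), ((action + 1) % 4, 1 / 3)]


def sample_direction(action, slippery, rng=random):
    """Return the direction in which the agent actually moves."""
    if not slippery:
        # Deterministic environment: the intended direction is always taken.
        return action
    # Stochastic environment: intended direction or one of the two orthogonal ones.
    return rng.choice([(action - 1) % 4, action, (action + 1) % 4])


outcomes_right = slippery_outcomes(RIGHT)
deterministic_move = sample_direction(RIGHT, slippery=False)
stochastic_move = sample_direction(RIGHT, slippery=True)
```

Under this encoding, the neighbors of an action index modulo 4 are exactly its two orthogonal directions, so the opposite direction is never sampled.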

Figure 3.

Representation of the state transition functions of the environments: (a) Deterministic. (b) Stochastic.

Frozen Lake is available in the Gymnasium library, a maintained fork of OpenAI’s Gym [24], which is described as “an API standard for reinforcement learning”. This environment is fully documented in the Gymnasium resources. Table 2 provides a detailed description of these environments.

Table 2.

Description of environments.

For the grid parameter search in Q-Learning, 200 agents were trained for each combination of algorithm parameters. After selecting the optimal parameter set for Q-Learning, an additional 1000 agents were trained to compare their results with those obtained using M-Learning. Table 3 summarizes the experimental configuration. The grid parameter search included five values for $\alpha$, five values for $\gamma$, and four values for the $\varepsilon$ decay rate, resulting in a total of $5 \times 5 \times 4 = 100$ parameter combinations.
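The size of the grid search can be checked with a short enumeration. The specific parameter values below are placeholders chosen only to give the stated number of values per parameter; they are not the values of the grid used in this work.

```python
from itertools import product

# Placeholder values (hypothetical): five learning rates, five discount
# factors, and four epsilon decay rates.
alphas = [0.1, 0.3, 0.5, 0.7, 0.9]
gammas = [0.1, 0.3, 0.5, 0.7, 0.9]
decays = [0.80, 0.90, 0.95, 0.99]

grid = list(product(alphas, gammas, decays))
agents_per_combination = 200

n_combinations = len(grid)
n_agents = n_combinations * agents_per_combination
```

With five, five, and four values, the grid contains 100 combinations, and training 200 agents for each one amounts to 20,000 agents per environment.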

Table 3.

Configuration of experimental grid parameter search and final comparison.

Tuning the Algorithms’ Parameters

To train agents using Q-Learning, it is crucial to appropriately configure the algorithm’s parameters. The Q-table was initialized with pseudo-random values within the range of 0 to 1. The minimum value of $\varepsilon$ for the $\varepsilon$-greedy policy was set to 0.005. A grid search (Table 4) was performed for $\alpha$, $\gamma$, and the $\varepsilon$ decay rate, as these parameters have a significant influence on the algorithm’s performance.

Table 4.

Grid parameter search for Q-Learning algorithm.

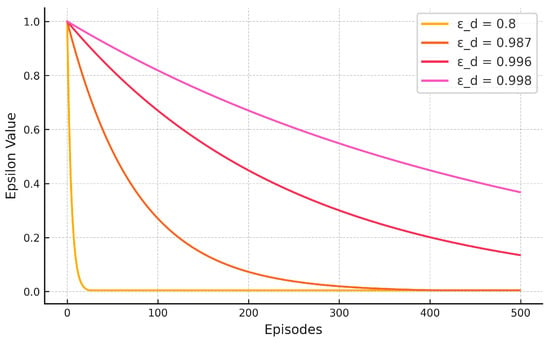

The parameter values of the Q-Learning algorithm in Table 4 were chosen to cover the parameter space of the grid search, with the purpose of observing the algorithm’s behavior across that space. The values of $\alpha$ and $\gamma$ are spaced uniformly, since both are independent of the number of episodes. For the $\varepsilon$ decay rate, four values are proposed that maintain the exploration–exploitation balance within the range of episodes. Figure 4 shows the behavior of $\varepsilon$ with these decay values.
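To make the role of the decay rate concrete, the following sketch generates an $\varepsilon$ schedule over episodes. The multiplicative (exponential) decay form, the starting value of 1.0, and the function name `epsilon_schedule` are assumptions made for this illustration, since the exact schedule is not restated here; only the floor of 0.005 comes from the configuration described above.

```python
def epsilon_schedule(decay, episodes, eps_start=1.0, eps_min=0.005):
    """Return the exploration probability used in each episode (assumed form)."""
    eps = eps_start
    schedule = []
    for _ in range(episodes):
        schedule.append(eps)
        # Multiplicative decay, never dropping below the minimum value.
        eps = max(eps_min, eps * decay)
    return schedule


fast = epsilon_schedule(decay=0.5, episodes=50)
slow = epsilon_schedule(decay=0.9, episodes=50)
```

Under this assumed form, a smaller decay factor reaches the exploration floor within a few episodes, whereas a factor closer to 1 keeps the agent exploring for much longer.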

Figure 4.

Epsilon decay behavior.

The training of agents using our proposed M-Learning method involved setting the saturation parameter to 0.96, as proposed by Equation (12).

4. Results

For each experiment, a dataset containing the test scores of the agents across the episodes was obtained. The score refers to the number of test episodes that the agent successfully completes. For each episode, the test scores were averaged, and a 95% confidence interval was calculated, assuming a normal distribution. In this section, the results are displayed as line graphs, with the average value plotted as a solid line in a strong color. The confidence interval is represented as a shaded area around the solid line in a fainter version of the same color.

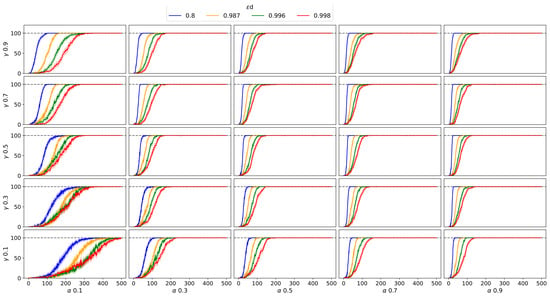

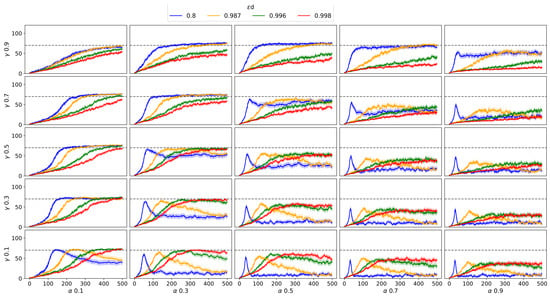

Figure 5 and Figure 6 show the results of the grid search for Q-Learning over the one hundred sets of parameters. The vertical axis represents the number of successful tests, while the horizontal axis represents the number of episodes. Each line shows the results for a specific set of parameters, with the line color identifying one parameter and the position in the external grid (vertical and horizontal) identifying the other two.

Figure 5.

Q-agent results in the deterministic environment. For the subplots, the vertical axis is the number of successful tests and the horizontal axis is the number of episodes.

Figure 6.

Q-agent results in the stochastic environment. For the subplots, the vertical axis is the number of successful tests and the horizontal axis is the number of episodes.

To provide a measure of the dispersion of the results for the algorithms used to train the agents, the standard deviation ($\sigma$) is included in the result graphs. The value of $\sigma$ is calculated for each episode to show how it changes throughout the training process. It is defined as follows in Equation (20):

$$\sigma = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \left( x_i - \mu \right)^2} \tag{20}$$

where

- $\sigma$ is the standard deviation of the results for a given algorithm,

- $N$ is the total number of trained agents,

- $x_i$ represents the individual result score obtained by an agent in the episode,

- $\mu$ is the mean result score of the agents for the episode, given by

$$\mu = \frac{1}{N} \sum_{i=1}^{N} x_i$$
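The per-episode statistics can be sketched in Python as follows. The population form of the standard deviation (dividing by the number of agents) and the use of the normal quantile 1.96 for the 95% confidence interval of the mean are assumptions of this sketch; the function name `episode_statistics` and the example scores are hypothetical.

```python
import math


def episode_statistics(scores, z=1.96):
    """Mean, standard deviation, and 95% confidence interval for one episode."""
    n = len(scores)
    mean = sum(scores) / n
    # Population standard deviation over the agents' scores (assumed form).
    std = math.sqrt(sum((x - mean) ** 2 for x in scores) / n)
    # Normal-approximation confidence interval for the mean.
    half_width = z * std / math.sqrt(n)
    return mean, std, (mean - half_width, mean + half_width)


# Hypothetical scores of four agents in one episode of a deterministic test,
# where each agent scores either 0 or 100.
mean, std, interval = episode_statistics([100, 100, 0, 0])
```

Applying this function to every episode yields the mean line, the shaded interval, and the dispersion curve of a results plot.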

For simplicity in the text labels used in the graphics, the results of agents trained with the Q-Learning algorithm are referred to as Q-Agents. Similarly, the results of agents trained with the M-Learning algorithm are referred to as M-Agents.

4.1. Deterministic Environment

This subsection presents the simulation results for the two algorithms in the deterministic environment, following the proposed experimental setup.

4.1.1. Q-Learning

The results of the grid search for the parameters of Q-Learning within the deterministic Frozen Lake environment are shown in Figure 5. In total, 20,000 agents were trained for this experiment. The results indicate that an increase in causes the agents to require more episodes to find a policy. Smaller values of increase the dispersion in the agent scores, and an increase in reduces the time it takes for the agents to achieve the goal.

The proposed criterion for selecting the best set of parameters was the combination that required the fewest episodes for the mean value to achieve 100% of the test scores. The results of the deterministic environment (Figure 5) show that all the agents trained with are consistently faster than with other values of . Based on this criterion, the results for , shown in Table 5, were ordered from largest to smallest.

Table 5.

Set of parameters requiring the lowest number of episodes to reach a score of 100 in the test.

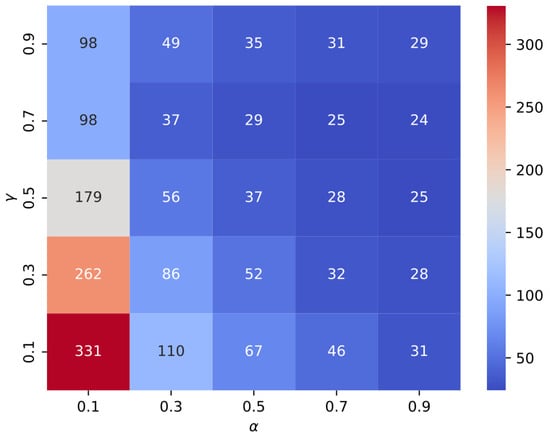

Figure 7 shows the behavior of the exploration mesh for according to the proposed criterion. Note that the best results are located in the high-value region for parameters and .

Figure 7.

Set of parameters requiring the lowest number of episodes to score 100 in the test.

The best set of parameters for , , and was (0.9, 0.7, 0.8). All agents trained with Q-Learning obtained a score of 100 in episode 24.

Once the set of parameters had been selected, a second experiment was conducted with 1000 agents. Figure 8 shows the results obtained using the best set of parameters along with the standard deviation per episode. In this experiment, Q-Learning achieved a score of 100 in episode 26 for all agents.

Figure 8.

Set of parameters requiring the lowest number of episodes to obtain a score of 100 in the test.

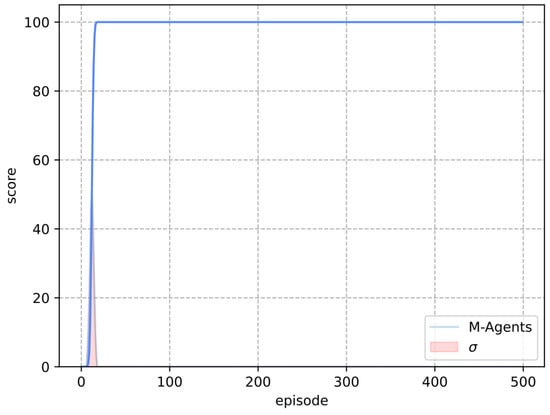

4.1.2. M-Learning

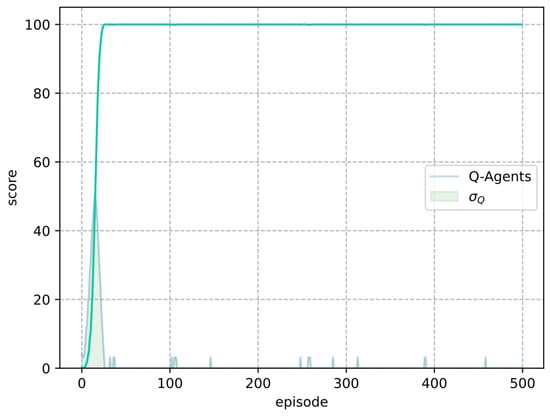

Figure 9 shows the results of the M-Learning algorithm in the deterministic Frozen Lake environment. A total of 1000 agents were trained for the comparison with Q-Learning. All trained agents achieved a score of 100 in episode 18.

Figure 9.

M-agent results in the deterministic environment.

4.2. Stochastic Environment

This subsection presents the simulation results for the two algorithms in the stochastic environment, following the proposed experimental setup.

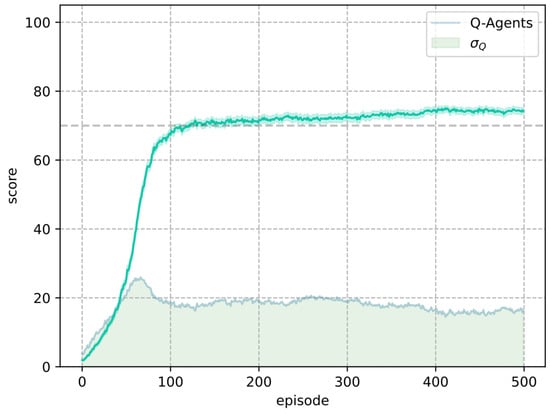

4.2.1. Q-Learning

The results of the grid parameter search for Q-Learning within the stochastic Frozen Lake environment are shown in Figure 6. A total of 20,000 agents were trained for this experiment. The results indicate that an increase in causes the agents to require more episodes to find a policy, similarly to what occurs in the deterministic environment. The results also suggest that a larger value of may lead to missing the maximum score, especially when in this problem. Unlike the deterministic Frozen Lake environment, it is not possible to achieve a 100% success rate in the tests, given the stochastic nature of the environment.

As a criterion for selecting a set of parameters, two conditions were proposed: (i) selecting the combination that required the fewest episodes for its mean value to exceed 70% of the test scores; (ii) ensuring that, during the remaining episodes, the mean did not fall below 65%. The set of parameters that met the criterion is presented in Table 6, ordered from highest to lowest.
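The two-part criterion for the stochastic environment can be expressed as a single check on the per-episode mean score. The sketch below is illustrative only: the function name `meets_stochastic_criterion` is hypothetical, the thresholds 70 and 65 follow the caption of Table 6, and it assumes that a parameter set is disqualified if the mean drops below the floor at any episode after the first crossing.

```python
import numpy as np

def meets_stochastic_criterion(mean_scores, exceed=70.0, floor=65.0):
    """Return the 1-based episode at which the criterion is first met, or None.

    Condition (i): the mean score exceeds `exceed`.
    Condition (ii): from that episode onward, the mean never falls below `floor`.
    Assumption: a later drop below `floor` disqualifies the parameter set.
    """
    scores = np.asarray(mean_scores, dtype=float)
    above = np.flatnonzero(scores > exceed)
    if above.size == 0:
        return None  # condition (i) never satisfied
    first = int(above[0])
    if np.all(scores[first:] >= floor):
        return first + 1
    return None  # condition (ii) violated

# Toy curves, not taken from the paper
stable_curve = [10, 60, 71, 68, 66]
unstable_curve = [10, 72, 60, 75]
```

Here `stable_curve` satisfies both conditions from its third episode, while `unstable_curve` exceeds 70 early but later drops below 65 and is rejected.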

Table 6.

Set of parameters requiring the lowest number of episodes to exceed a score of 70 while remaining above 65 in the rest of the test episodes.

The best set of parameters for , , and was (0.3, 0.7, 0.8). The agents trained with Q-Learning obtained a mean score higher than 70 in episode 101.

Once the set of parameters had been selected, the second experiment was conducted with 1000 agents. Figure 10 shows the training results. In this experiment, Q-Learning met the score criteria in episode 120.

Figure 10.

Set of Q-Learning parameters requiring the lowest number of episodes to achieve a score above 70 in the test.

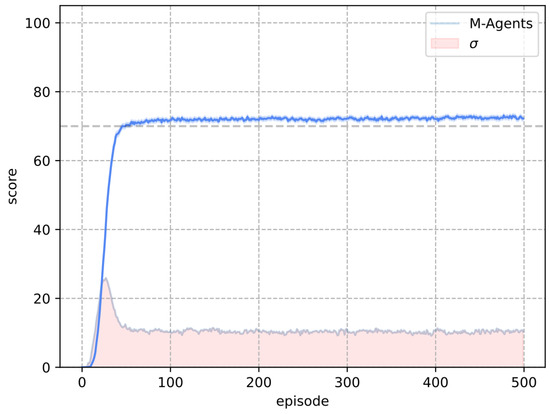

4.2.2. M-Learning

Figure 11 shows the results of the M-Learning algorithm in the stochastic Frozen Lake environment. A total of 1000 agents were trained for the comparison with Q-Learning. In this experiment, M-Learning achieved the score criteria by episode 46.

Figure 11.

Results obtained with the M-agents in the stochastic environment.

5. Discussion

5.1. Deterministic Environment

The results in the deterministic environment indicate that both algorithms easily obtain a policy that solves the task. For Q-Learning, Table 5 and Figure 7 show that different sets of parameters yield the same results, which reflects the low difficulty of the task and the limited variability of the environment. The results also highlight that longer exploration times can delay learning in environments with low difficulty.

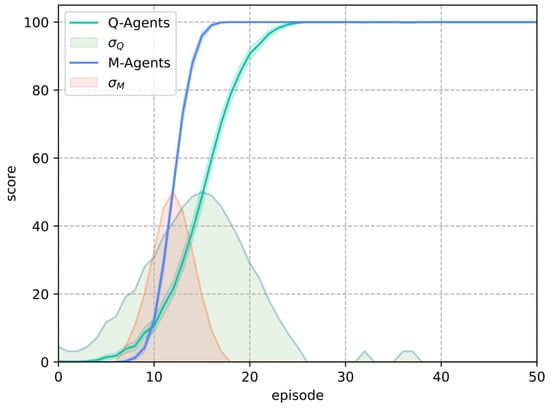

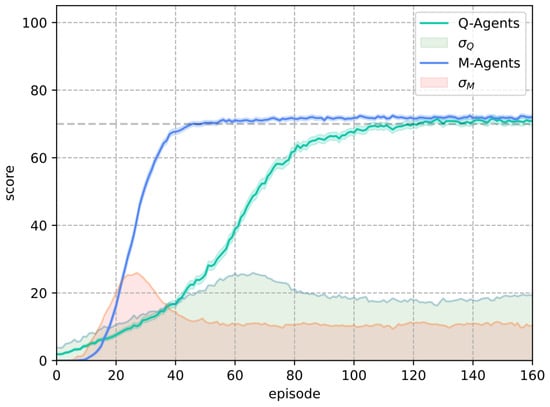

Figure 12 presents a comparison of the solutions obtained by the agents trained with Q-Learning and M-Learning in the deterministic Frozen Lake environment. This figure shows that the M-agents achieved a score of 100 in fewer episodes and that the standard deviation band of their score was narrower than that of the Q-agents.

Figure 12.

Results obtained for the Q-Learning and M-Learning algorithms in the deterministic environment.

The standard deviation indicates that the agents trained with Q-Learning may experience losses in the acquired knowledge at some points, even if the environment remains unchanged. This behavior can be observed throughout training (see Figure 8).

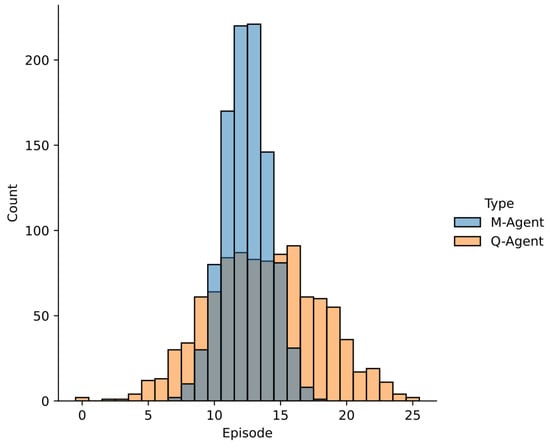

Additionally, the comparison was analyzed in terms of the frequency with which the agents successfully reached the objective in each episode. For the 1000 agents, we analyze the distribution of their scores. In each episode, we determine how many agents meet the score criteria. This approach aims to reveal the overall shape and behavior of the algorithms across the general population of agents. Figure 13 presents the histogram of this comparison.
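One way to obtain such a distribution is to record, for each agent, the first episode in which it meets the score criterion. The following sketch assumes a score matrix of shape (agents, episodes) and interprets the histogram as the count of agents whose first success falls in each episode; both the layout and the function names are assumptions, not the authors' implementation.

```python
import numpy as np

def first_success_episodes(scores, threshold=100.0):
    """Return the 1-based first episode at which each agent reaches `threshold`.

    `scores` has shape (n_agents, n_episodes). Agents that never reach the
    threshold are excluded from the result.
    """
    reached = np.asarray(scores, dtype=float) >= threshold
    ever = reached.any(axis=1)
    first = reached.argmax(axis=1) + 1  # argmax gives the first True per row
    return first[ever]

def summarize(first_episodes):
    """Return (mean, population standard deviation, per-episode counts)."""
    first_episodes = np.asarray(first_episodes, dtype=int)
    counts = np.bincount(first_episodes)[1:]  # counts[k-1]: agents succeeding first at episode k
    return float(first_episodes.mean()), float(first_episodes.std()), counts

# Toy scores for four agents over three episodes (not from the paper)
toy_scores = [
    [0, 100, 100],
    [0, 0, 100],
    [100, 100, 100],
    [0, 0, 0],
]
episodes = first_success_episodes(toy_scores)
mean_ep, std_ep, counts = summarize(episodes)
```

The mean and standard deviation returned by `summarize` play the role of the per-algorithm statistics reported for the histogram.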

Figure 13.

Distribution of the results obtained by Q-Learning and M-Learning in the deterministic environment.

The agents trained using Q-Learning achieved the objective, on average, in episode 13.827, with a standard deviation of 4.18 episodes, whereas the agents trained using M-Learning took a mean of 12.456 episodes to meet the criteria, with a standard deviation of 1.74 episodes. This corresponds to a reduction in the mean number of episodes required to achieve the goal, as well as a reduction in the standard deviation.

5.2. Stochastic Environment

Figure 14 compares the solutions obtained by the agents trained with Q-Learning and M-Learning in the stochastic Frozen Lake environment. The comparison shows that the M-agents met the score criteria in a shorter period of time and that their standard deviation area was lower than that of the agents trained with the Q-Learning algorithm.

Figure 14.

Results obtained with the Q-Learning and M-Learning algorithms in the stochastic environment.

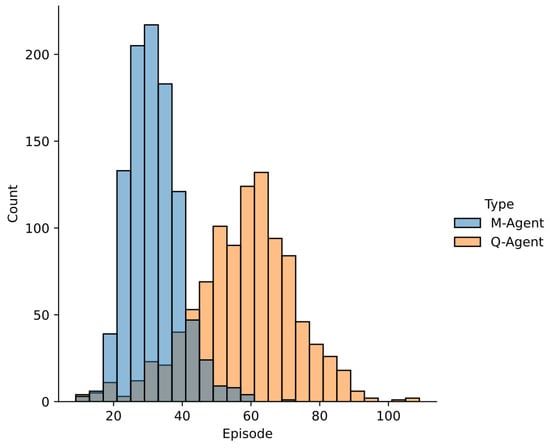

Figure 15 is a histogram comparing the results of Q-Learning and M-Learning in the stochastic environment. For the 1000 agents, we analyze the distribution of their scores. In each episode, we determine how many agents meet the score criteria. This approach aims to reveal the overall shape and behavior of the algorithms across the general population of agents.

Figure 15.

Distribution of the results obtained by Q-Learning and M-Learning in the stochastic environment.

The agents trained using Q-Learning met the score criteria, on average, in episode 57.591, with a standard deviation of 14.77 episodes, whereas the agents trained using M-Learning took a mean of 31.186 episodes to reach the goal, with a standard deviation of 7.42 episodes. This corresponds to a reduction in the mean number of episodes required to meet the score criteria and a reduction in the standard deviation.

6. Conclusions

This paper presents a grid search for parameters for the deterministic and stochastic Frozen Lake environments (Figure 5 and Figure 6, respectively) when using Q-Learning. As Frozen Lake is one of the earliest and most recognized environments in RL, these experiments may be helpful for those who wish to conduct further experiments with this environment and Q-Learning.

In Frozen Lake, the M-Learning algorithm trains agents faster than Q-Learning. In the deterministic environment, all M-Learning agents achieved a score of 100 by episode 18, eight episodes earlier than with Q-Learning (episode 26). In the stochastic environment, the mean score of the M-Learning agents met the score criteria in episode 46, 74 episodes earlier than with Q-Learning (episode 120).

Additionally, in terms of the standard deviation of the score, M-Learning demonstrates greater consistency in the training results. Compared to the standard deviation of Q-Learning, M-Learning shows a reduction of 58.37% in the deterministic environment, as well as a reduction of 49.75% in the stochastic environment.
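The percentage improvements can be recomputed from the values reported in Sections 4 and 5: the episodes at which each algorithm met the criterion (26 vs. 18 and 120 vs. 46) and the standard deviations of the result distributions (4.18 vs. 1.74 and 14.77 vs. 7.42). The helper `relative_reduction` is a hypothetical name used only for this check, and the results may differ from the published percentages in the last digit because of rounding.

```python
def relative_reduction(baseline, improved):
    """Percentage by which `improved` is lower than `baseline`."""
    return 100.0 * (baseline - improved) / baseline

# (Q-Learning value, M-Learning value) pairs as reported in the text
reported = {
    "episodes, deterministic": (26, 18),
    "episodes, stochastic": (120, 46),
    "std, deterministic": (4.18, 1.74),
    "std, stochastic": (14.77, 7.42),
}

reductions = {
    name: relative_reduction(q_value, m_value)
    for name, (q_value, m_value) in reported.items()
}

for name, value in reductions.items():
    print(f"{name}: {value:.2f}% lower for M-Learning")
```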

Furthermore, in both deterministic and stochastic environments, the difference in M-Learning’s result distributions (Figure 13 and Figure 15) compared to Q-Learning becomes more pronounced as complexity increases. This highlights M-Learning’s consistency despite growing environmental complexity, without requiring parameter adjustments. Notably, M-Learning maintained the same parameter , while Q-Learning required a grid search to optimize its parameters, which varied across environments.

Finally, the main contribution of this paper consists of two interconnected aspects, both of which stem from the formulation of the proposed M-Learning algorithm.

- The most relevant aspect is that the exploration–exploitation dilemma can be self-regulated based on the value of actions in the states, which eliminates the need to use the additional parameters demanded by the ε-greedy policy.

- The second one is that considering the value of actions with a probabilistic approach allows modifying the value of all actions in a state through a single interaction with the environment.

While the above can be understood from different perspectives, it is ultimately the result of a unified approach.
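For context, the ε-greedy policy mentioned in the first point is commonly implemented as shown below. This is the standard textbook form used with Q-Learning, not the M-Learning rule; the decay schedule and the names `decay` and `epsilon_min` are illustrative assumptions that show the kind of additional parameters such a policy introduces.

```python
import random

def epsilon_greedy(q_values, epsilon, rng=random):
    """Choose a random action with probability `epsilon`, otherwise a greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore
    best = max(q_values)
    return q_values.index(best)  # exploit (first maximal action on ties)

def decay_epsilon(epsilon, decay=0.99, epsilon_min=0.01):
    """Multiplicative decay with a lower bound (hypothetical schedule)."""
    return max(epsilon_min, epsilon * decay)

# Example: fully greedy selection over three action values
greedy_action = epsilon_greedy([0.1, 0.9, 0.3], epsilon=0.0)
```

Each of `epsilon`, `decay`, and `epsilon_min` must be tuned per environment, which is the overhead that a self-regulated exploration scheme aims to avoid.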

Future Work

With the results obtained for M-Learning, we suggest that future research should focus on evaluating its performance in other environments with the purpose of identifying the specific problems where it may prove most useful.

The factor , which modifies the value of an action, offers a promising avenue for further exploration. Future studies could investigate alternative methods for calculating this factor, either to simplify the process or to incorporate additional sources of information beyond the reward and the knowledge stored in the states.

Author Contributions

Methodology, M.S.M.C., C.A.P.C. and O.J.P.; Writing—original draft, M.S.M.C.; Writing—review & editing, O.J.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Universidad Distrital Francisco José de Caldas through Call P2-2025/Payment of Article Processing Costs (APC).

Data Availability Statement

The data presented in this study will be available in a GitHub repository: https://github.com/MarlonSMC/ (accessed on 20 January 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).