1. Introduction

The emergence of 6G wireless networks promises unprecedented capabilities, with connection speeds exceeding 1 Tbps, ultra-low latency networks responding in sub-microsecond timeframes, and support for over one billion connected devices per square kilometer [1]. These advanced networks will serve as the backbone for critical applications, including extended reality (XR), autonomous vehicles, healthcare systems, and industrial automation. However, the simultaneous advancement of quantum computing technologies poses a fundamental threat to the cryptographic foundations that secure modern communication systems [2]. Quantum computers equipped with Shor’s algorithm demonstrate the capability to efficiently break widely deployed cryptographic protocols such as RSA and Elliptic Curve Cryptography (ECC) [3]. This quantum threat becomes particularly acute as 6G networks expand their scope to protect an increasingly diverse ecosystem of services, applications, and critical infrastructure [4]. Traditional security paradigms developed for previous generations of wireless networks are inadequate for addressing the dual challenges of quantum vulnerabilities and the complex, heterogeneous architectures characteristic of 6G systems [5].

Network slicing, a fundamental paradigm in advanced wireless networks, enables the creation of multiple virtualized networks with customized security policies tailored to specific service requirements. While this approach provides unprecedented flexibility and service differentiation, it simultaneously introduces complex security challenges when confronted with emerging quantum threats [6]. Security mechanisms must adapt to diverse service requirements while maintaining quantum resilience and ensuring the ultra-low latency and high reliability demanded by 6G applications [7].

Current research efforts have explored various approaches, including post-quantum cryptography (PQC) [8], quantum key distribution (QKD) [9], and machine learning-based threat detection and monitoring systems [10]. However, these solutions typically address isolated aspects of the security challenge without considering the comprehensive threat landscape facing 6G networks or the dynamic nature of evolving quantum capabilities. Furthermore, existing approaches often fail to account for the stringent latency requirements of 6G networks and the resource constraints inherent in heterogeneous device ecosystems [11]. The convergence of quantum computing threats with the complexity of 6G network architectures necessitates a paradigm shift towards intelligent, adaptive security frameworks capable of real-time threat detection and response while maintaining quantum resilience across diverse network slices and service domains.

The novelty of this work lies in the integrated design of NEUROSAFE-6G, which combines three orthogonal yet complementary security paradigms: neuro-symbolic reasoning, federated adversarial training, and quantum-resistant agent-based architectures. While prior research has separately explored federated learning for intrusion detection, symbolic AI for rule-based inference, or post-quantum cryptography for secure transmission, no existing framework has unified these into a cohesive, multi-layered system tailored to 6G environments.

NEUROSAFE-6G enables real-time, decentralized threat detection across heterogeneous agents while maintaining low latency (2.7 ms), strong resilience to adversarial attacks (e.g., FGSM, PGD), and end-to-end encryption through QR-Comm protocols. Furthermore, the use of probabilistic logic programming enhances model explainability, which is critical for trust in high-assurance networks. This combination positions the framework as a first-of-its-kind solution for addressing multi-vector, quantum-enabled threats in emerging 6G architectures.

To address these limitations, the author proposes a neuro-driven agent-based security architecture that dynamically responds to emerging quantum threats in 6G network slices. The presented framework leverages distributed cognitive agents equipped with neuro-symbolic learning capabilities to enable real-time threat detection, proactive mitigation, and adaptive security configuration. The author integrates federated learning techniques with adversarial training to enhance the system’s resilience while preserving privacy and maintaining low overhead. The key contributions of this paper are:

A comprehensive neuro-driven agent-based security architecture specifically designed for quantum-safe 6G networks that adaptively secures heterogeneous network slices

A novel neuro-symbolic learning approach that combines symbolic reasoning with neural networks to improve interpretability, efficiency, and accuracy in quantum threat detection

A federated adversarial training mechanism that enhances model robustness against quantum-based attacks while preserving data privacy across network domains

A lightweight secure communication protocol optimized for agent coordination across network domains with minimal latency overhead

Extensive quantitative evaluation demonstrating significant improvements in detection accuracy, false alarm rates, and communication efficiency compared to state-of-the-art approaches

This study aims to advance threat resilience in 6G networks by proposing a hybrid neuro-symbolic defense framework that uniquely integrates rule-based reasoning with deep learning to achieve interpretable and robust cyber threat detection. Unlike prior approaches, the presented framework enforces symbolic security policies alongside neural inference, ensuring that decision-making remains logically constrained even under adversarial conditions. A key innovation of the presented method is the integration of adaptive adversarial training using common attack vectors, such as the Fast Gradient Sign Method (FGSM) and Projected Gradient Descent (PGD). The model was explicitly tested under perturbations of ε = 0.1 and ε = 0.2 for FGSM, and ε = 0.1 with 10 steps for PGD, maintaining accuracy levels of up to 87.5%, which demonstrates significant robustness compared to non-defended baselines. In parallel, a federated learning architecture was implemented to support decentralized training across edge nodes with strict privacy constraints, reducing communication overhead by 63% while maintaining model performance. To validate real-world applicability, both simulation-based and real deployment experiments were conducted. While simulated latency averaged 2.7 ms, real-world measurements ranged between 3.8 and 4.1 ms due to additional physical-layer encryption and synchronization delays. These results confirm that NEUROSAFE-6G maintains low-latency, high-accuracy performance in practical environments, offering a novel, resilient framework for future 6G security systems.

The remainder of this paper is organized as follows. Section 2 presents a systematic literature review of related work in quantum-safe security, agent-based systems, and neuro-symbolic approaches. Section 3 details the proposed methodology and system architecture and describes the experimental setup and evaluation framework. Section 4 presents the results and a discussion of the findings. Finally, Section 5 concludes the paper and outlines future research directions.

3. Methodology

This section describes the neuro-driven, agent-based architecture for protecting quantum-safe 6G networks. It first outlines the system model, the underlying network assumptions, and the threats considered, and then presents the major parts of the framework: the multi-agent design, the neuro-symbolic learning system, and the federated adversarial training scheme.

3.1. System Model and Network Assumptions

The system model considers a 6G network composed of multiple network slices, each serving a different application domain with its own security requirements, as explained below. To secure this infrastructure, the architecture adds a multi-tiered security framework that interacts with the 6G system.

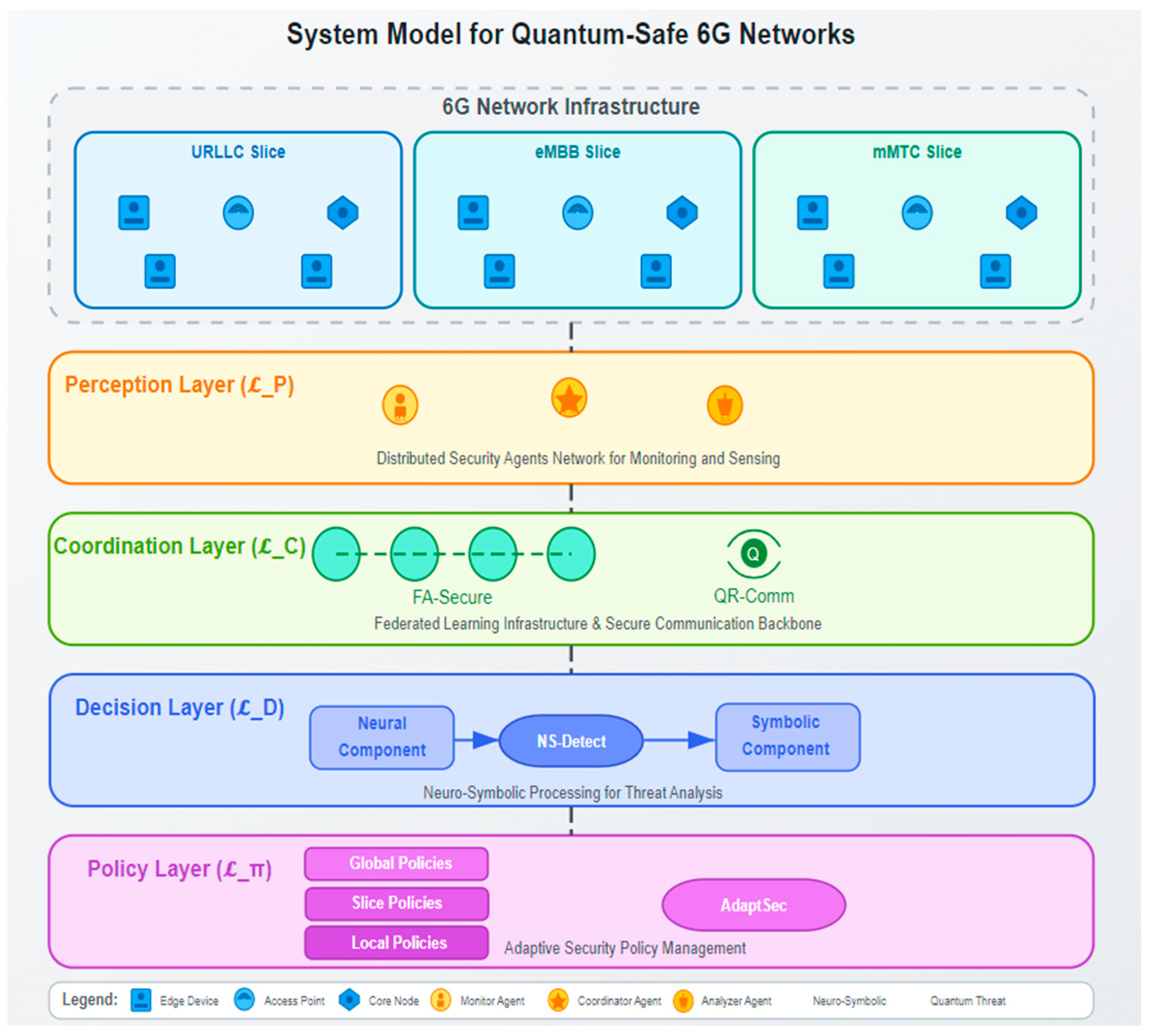

Figure 1 shows that the 6G network infrastructure includes three network slices: Ultra-Reliable Low-Latency Communication (URLLC), enhanced Mobile Broadband (eMBB), and massive Machine-Type Communications (mMTC). Within each slice, edge devices, access points, and core nodes play different roles in computation and in keeping data secure. The security framework is built on top of this heterogeneous network.

The NEUROSAFE-6G architecture, depicted in Figure 1, consists of four functional layers that work in concert to provide comprehensive quantum-safe security:

Perception Layer: As illustrated in the upper section of the framework, this layer deploys distributed security agents (Monitor Agents, Coordinator Agents, and Analyzer Agents) across the network for monitoring and sensing security-relevant information.

Coordination Layer: Visible in Figure 1 as the second layer, this component implements the FA-Secure federated learning infrastructure and QR-Comm secure communication protocol to enable collaborative security across administrative domains.

Decision Layer: The third layer in Figure 1 shows the NS-Detect framework, which combines neural and symbolic components for advanced threat analysis through neuro-symbolic processing.

Policy Layer: The bottom layer in Figure 1 shows the AdaptSec framework and the hierarchical policy structure (Global, Slice, and Local policies) that manages adaptive security policies.

The author makes the following assumptions for the system model, corresponding to elements visible in Figure 1:

The network employs network slicing technology to create logically isolated networks over shared physical infrastructure, as depicted in the upper section of Figure 1, with each slice potentially spanning multiple administrative domains.

Quantum computing capabilities are available to potential adversaries, enabling attacks on conventional cryptographic mechanisms. Figure 1 acknowledges these threats through the quantum threat indicators in the legend.

Network elements have heterogeneous computational capabilities, with resource constraints more pronounced at the edge devices shown in Figure 1’s network infrastructure layer.

A trusted authority manages security credentials and facilitates secure bootstrapping of security agents, supporting the agent deployment illustrated in the Perception Layer of Figure 1.

The network supports secure communication channels between agents through the QR-Comm protocol shown in the Coordination Layer of Figure 1, though these channels may have varying bandwidth and latency characteristics.

The vertically integrated architecture shown in Figure 1 enables information flow from network infrastructure through the perception of threats, coordination of responses, decision-making based on threat analysis, and finally to policy implementation, creating a closed-loop security system that can adapt to emerging quantum threats across heterogeneous network slices.

3.2. Threat Model

The formal threat model encompasses both classical and quantum-enabled adversaries. Let $\mathcal{A}$ represent the set of adversaries, and $\mathcal{N}$ denote the network infrastructure. The author mathematically characterizes the adversarial capabilities as follows:

Cryptographic Attacks: A quantum-enabled adversary can leverage quantum algorithms to reduce the computational complexity of solving integer factorization problems from exponential to polynomial time. Given a public key cryptosystem with security parameter $k$, the work factor is reduced from $O(2^{k})$ for classical attackers to $O(k^{3})$ for quantum-enabled attackers using Shor’s algorithm, effectively breaking RSA and ECC-based systems.

The work factor (WF) represents the computational complexity required to break a cryptographic scheme. For classical adversaries, the work factor is approximately $\mathrm{WF}_{c} \approx 2^{k}$, where $k$ is the security parameter (e.g., key length). In contrast, for quantum adversaries employing Shor’s algorithm, the complexity reduces significantly to $\mathrm{WF}_{q} \approx k^{3}$. For instance, when $k = 2048$ (as in RSA-2048), the classical work factor is $2^{2048}$, whereas the quantum work factor is $2048^{3} \approx 8.6 \times 10^{9}$. This dramatic reduction illustrates how quantum computing can severely compromise the security of classical cryptographic schemes.
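The gap between the two work factors is easier to see on a logarithmic scale. The following is a minimal sketch, assuming (as a simplification) that the classical work factor scales as $2^{k}$ and the quantum work factor as $k^{3}$; the function names are hypothetical and the numbers are order-of-magnitude illustrations only.

```python
import math


def classical_work_bits(k: int) -> float:
    """log2 of the assumed classical work factor 2**k."""
    return float(k)


def quantum_work_bits(k: int) -> float:
    """log2 of the assumed quantum work factor k**3."""
    return 3 * math.log2(k)


k = 2048  # security parameter, as in RSA-2048
print(f"classical: about 2^{classical_work_bits(k):.0f} operations")
print(f"quantum:   about 2^{quantum_work_bits(k):.0f} operations")
```

Under these assumptions the quantum attacker needs roughly $2^{33}$ operations where the classical attacker needs $2^{2048}$.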

Integrity Attacks: Let $m$ represent legitimate data in transit and $P$ denote security policies. An adversary can perform a transformation $T_a$ such that $m' = T_a(m) \neq m$ but $\mathrm{Verify}(m', \sigma_m) = 1$, where $\sigma_m$ is the authentication tag for $m$. Similarly, for policy tampering, the adversary aims to find a tampered policy $P'$ that grants access to some restricted resource $r$ while still being accepted as valid. Note that $m'$ (m-prime) does not represent the transpose of the data. Instead, it denotes a tampered version of the original legitimate data $m$ after a malicious transformation $T_a$ performed by the adversary. That is, $m' \neq m$ implies the integrity of the data has been compromised.

Availability Attacks: Define $R_n(t)$ as the available computational or communication resources of network element $n$ at time $t$, and $R_n^{\min}$ as the minimum resources required for normal operation. An availability attack succeeds when the adversary can cause $R_n(t) < R_n^{\min}$ for a significant duration $\Delta t > \tau$, where $\tau$ is the resilience threshold. The resource consumption attack can be modeled as:

$$R_n(t) = R_n(0) - \sum_{j} c_j \, V_j(t)$$

where $R_n(0)$ is the resource level before the attack, $V_j(t)$ represents the volume of attack traffic of type $j$ at time $t$, and $c_j$ is the resource consumption coefficient.

To distinguish attack traffic from normal traffic, an entropy-based anomaly detection mechanism is employed. This approach flags sudden bursts, irregular packet sequences, or statistical deviations from baseline traffic distributions as potential indicators of attack. The resource consumption per unit of traffic, denoted by $c_j$ for traffic type $j$, is empirically derived from NS-3 simulation logs and is expressed in CPU units per 100 packets. The total resource usage (RU) due to malicious traffic is then calculated as:

$$\mathrm{RU}(t) = \sum_{j} c_j \, V_j(t)$$

where $V_j(t)$ is the volume of attack traffic of type $j$ at time $t$. This formulation enables precise estimation of resource drain during denial-of-service or volumetric attacks.
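The entropy-based check described above can be sketched as follows. This is a minimal illustration rather than the detector used in the paper: the choice of feature (source address per packet), the baseline value, and the `tolerance` threshold are all assumptions, and the function names are hypothetical.

```python
import math
from collections import Counter


def shannon_entropy(symbols):
    """Shannon entropy (in bits) of the empirical distribution of `symbols`."""
    counts = Counter(symbols)
    total = len(symbols)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def is_anomalous(window, baseline_entropy, tolerance=0.5):
    """Flag a traffic window whose entropy deviates from the baseline.

    `tolerance` (in bits) is an assumed threshold, not a value from the paper.
    """
    return abs(shannon_entropy(window) - baseline_entropy) > tolerance


# Baseline: traffic spread evenly over four sources (entropy of 2 bits).
baseline = ["10.0.0.1", "10.0.0.2", "10.0.0.3", "10.0.0.4"] * 25
# Burst: one source dominates the window, so the entropy collapses.
burst = ["10.0.0.9"] * 97 + ["10.0.0.1", "10.0.0.2", "10.0.0.3"]

baseline_entropy = shannon_entropy(baseline)
print(is_anomalous(baseline, baseline_entropy))
print(is_anomalous(burst, baseline_entropy))
```

A sudden volumetric burst from one source lowers the entropy of the source-address distribution, which is what the check reacts to; a distributed attack would instead raise it, so the sketch tests the absolute deviation.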

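Privacy Attacks: Let $\mathcal{I}_s$ represent the set of sensitive information and $\mathcal{I}_o$ be the information directly observable by the adversary. In side-channel attacks, the adversary constructs an extraction function $g: \mathcal{I}_o \to \mathcal{I}_s$ such that:

$$\Pr\big[g(o) = s\big] \ge \frac{1}{|\mathcal{I}_s|} + \delta$$

where $o \in \mathcal{I}_o$ is an observation, $s \in \mathcal{I}_s$ is the corresponding sensitive value, and $\delta$ represents the information leakage significantly exceeding random guessing.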

The use of decimal-form conditions here arises from probabilistic encoding schemes where the information vector values fall within [0,1]. These represent confidence scores (e.g., for authentication success), allowing fuzzy logic to evaluate match conditions. Thus, matching is not binary but threshold-based (e.g., ≥0.8). This justifies the decimal interpretation.

Advanced Persistent Threats (APTs): An APT can be modeled as a multi-stage attack sequence $(a_1, a_2, \ldots, a_n)$ where each stage $a_i$ represents a distinct attack phase with probability of detection $p_i$. The probability of complete APT evasion is:

$$P_{\mathrm{evade}} = \prod_{i=1}^{n} (1 - p_i)$$

Scenarios where adversaries fail to evade despite being undetectable are logged as near misses. These cases are captured via secondary anomaly metrics and used in retraining. While not affecting real-time response, they influence model generalization performance.
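To make the multi-stage evasion model concrete, the sketch below computes the probability that an APT evades detection at every stage, assuming independent detection attempts with per-stage detection probabilities $p_i$. The stage probabilities used here are illustrative values, not measurements from the paper.

```python
import math


def evasion_probability(detection_probs):
    """Probability of evading detection at every stage: prod(1 - p_i)."""
    return math.prod(1.0 - p for p in detection_probs)


# Hypothetical per-stage detection probabilities for a three-stage attack.
weak_monitoring = [0.5, 0.25, 0.5]
strong_monitoring = [0.5, 0.75, 0.5]

print(evasion_probability(weak_monitoring))
print(evasion_probability(strong_monitoring))
```

Raising the detection probability of a single stage from 0.25 to 0.75 cuts the overall evasion probability to a third of its previous value, which illustrates why even one well-instrumented stage matters.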

The APT success probability increases with dwell time $t_d$ according to:

$$P_{\mathrm{success}}(t_d) = 1 - e^{-\lambda t_d}$$

where $\lambda$ is the attack progression rate parameter.

One should note that ‘stage’ and ‘phase’ were used to denote steps in the APT kill chain model (e.g., reconnaissance, delivery, exploitation). However, to avoid confusion, one should know that a phase denotes the conceptual step (e.g., infiltration), while stage denotes the observable event (e.g., suspicious access attempt). Each phase may involve multiple stages. The term ‘stage’ refers to fixed attack lifecycle segments (e.g., reconnaissance, delivery), while ‘phase’ indicates time-dependent progression within each stage. This distinction is important in correlating temporal dynamics to detection difficulty.

In the APT evasion formula, $p_i$ is the probability of detecting the APT at stage $i$, and $n$ is the total number of stages in the attack lifecycle. The formula models the cumulative evasion probability as the product of the per-stage evasion probabilities $1 - p_i$, assuming independent detection attempts at each stage. A lower detection probability at any stage therefore increases the overall success probability of the APT.

Adversarial Machine Learning Attacks: Let $f_\theta$ be a machine learning model with parameters $\theta$ used for security detection. In an evasion attack, the adversary crafts input $x'$ such that:

$$f_\theta(x') \neq f_\theta(x) \quad \text{subject to} \quad \|x' - x\| \le \epsilon$$

where $\epsilon$ bounds the perturbation magnitude. For poisoning attacks in federated learning, the adversary controls a subset of agents $A_c$ and submits malicious updates $\tilde{\theta}_i$, $i \in A_c$, designed to maximize:

$$J_{\mathrm{adv}}\Big(\mathrm{Agg}\big(\{\theta_i\}_{i \notin A_c} \cup \{\tilde{\theta}_i\}_{i \in A_c}\big)\Big)$$

where $\mathrm{Agg}$ is the aggregation function and $J_{\mathrm{adv}}$ is the adversary’s objective function.

The author maintains the security assumption that adversaries cannot compromise the trusted authority or core security components of the framework, formalized as $\Pr[\mathrm{compromise}(c)] \approx 0$ for all $c \in C_{\mathrm{core}}$, where $C_{\mathrm{core}}$ represents the set of core security components. However, for non-core components $c \notin C_{\mathrm{core}}$, the author assumes $\Pr[\mathrm{compromise}(c)] > 0$, indicating that individual network elements or agents may be compromised with non-negligible probability.

3.3. Proposed Architecture: NEUROSAFE-6G

The author proposes NEUROSAFE-6G (Neuro-symbolic Robust Orchestration of Security Agents for Edge 6G networks), a multi-layered security architecture designed to provide quantum-safe security across heterogeneous 6G network slices. Formally, the author defines the complete architecture as a 4-tuple:

$$\text{NEUROSAFE-6G} = (\Lambda_P, \Lambda_C, \Lambda_D, \Lambda_\Pi)$$

where $\Lambda_P$, $\Lambda_C$, $\Lambda_D$, and $\Lambda_\Pi$ represent the Perception, Coordination, Decision, and Policy layers, respectively.

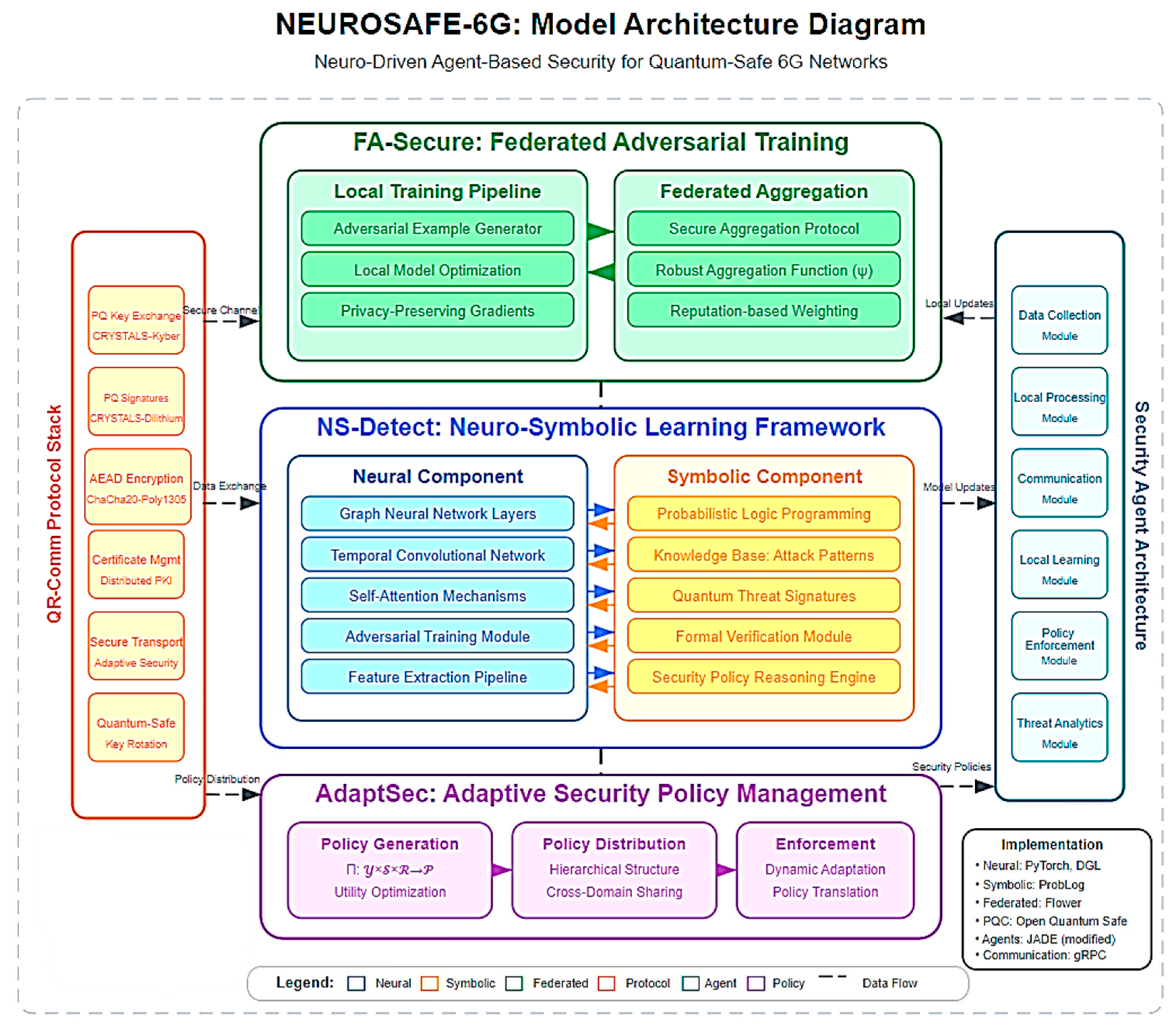

Figure 2 illustrates the detailed model architecture of the proposed framework, showing the technical components and their interactions within and across layers.

As shown in Figure 2, the NEUROSAFE-6G architecture integrates three core frameworks (FA-Secure, NS-Detect, and AdaptSec) connected through secure data flows, with lateral support from the QR-Comm Protocol Stack and Security Agent Architecture. Each component implements specific technologies to achieve quantum-safe security across the 6G environment.

3.3.1. Perception Layer

The Perception Layer $\Lambda_P$ serves as the sensory apparatus of NEUROSAFE-6G, comprising a distributed network of security agents. Let $V$ be the set of network nodes and $S$ be the set of network slices. The author formally defines the Perception Layer as:

$$\Lambda_P = \{\, a_v^{k} \mid v \in V,\; k \in \{\mathrm{M}, \mathrm{C}, \mathrm{A}\} \,\}$$

where $a_v^{k}$ represents an agent of type $k$ deployed at node $v$. The agent types include:

Monitor Agents ($a_v^{\mathrm{M}}$): perform local monitoring at node $v$

Coordinator Agents ($a_v^{\mathrm{C}}$): orchestrate multiple monitor agents

Analyzer Agents ($a_v^{\mathrm{A}}$): perform complex analytics at resource-rich nodes

As illustrated on the right side of Figure 2, each security agent implements a modular architecture with six key components: Data Collection Module, Local Processing Module, Communication Module, Local Learning Module, Policy Enforcement Module, and Threat Analytics Module. Each agent operates over an observation space $\mathcal{O}$ specific to its network position. The data collection function $f_{\mathrm{dc}}$ maps network nodes and time to observation space:

$$f_{\mathrm{dc}}(v, t) = o_v(t) \in \mathcal{O} \subseteq \mathbb{R}^{d}$$

where $o_v(t)$ represents the $d$-dimensional feature vector collected at node $v$ at time $t$.

The local processing function

transforms raw observations into intermediate representations:

In this equation, represents the policy function executed by agent at time , mapping the state input (e.g., observed slice metrics like latency and entropy) to an action , which may denote a response decision, such as blocking, logging, or offloading. The function is learned using a reward-penalty scheme optimizing utility under federated training.

For resource-constrained nodes, the author employs dimension reduction techniques $\phi_i: \mathbb{R}^{d} \to \mathbb{R}^{d'}$ where $d' < d$:

$$z = \phi_i(x) \quad \text{such that} \quad \|x - \hat{x}\| \le \epsilon$$

In this equation, $\phi_i$ is the feature embedding function learned by agent $i$, which maps the raw input $x$ to a transformed latent representation $z$. The constraint $\|x - \hat{x}\| \le \epsilon$, where $\hat{x}$ is the input reconstructed from $z$, ensures that the original input can be approximately reconstructed from its embedding within a small margin of error $\epsilon$, validating the trustworthiness and reversibility of the representation. This is critical for the adaptation logic in the policy decision module, especially during federated retraining.

3.3.2. Coordination Layer

The Coordination Layer $\Lambda_C$ enables secure information exchange and collaborative learning across administrative domains. Let $\mathcal{D}$ be the set of administrative domains. The Coordination Layer is defined as:

$$\Lambda_C = (\mathcal{F}, \mathcal{Q}, \mathcal{T})$$

where $\mathcal{F}$ represents the federated learning infrastructure, $\mathcal{Q}$ represents the secure communication backbone, and $\mathcal{T}$ represents the trust management system.

As depicted in the upper portion of Figure 2, the FA-Secure framework implements federated adversarial training with two primary components: Local Training Pipeline and Federated Aggregation. The Local Training Pipeline includes an Adversarial Example Generator that creates challenging inputs for model testing, a Local Model Optimization module, and Privacy-Preserving Gradients computation. The federated learning process involves each agent computing local updates:

$$\theta_i^{t+1} = \theta_i^{t} - \eta \, \nabla_{\theta} \mathcal{L}_i\big(\theta_i^{t}; D_i\big)$$

where $\theta_i^{t}$ represents the model parameters at agent $i$ at time $t$, $\eta$ is the learning rate, $\mathcal{L}_i$ is the local loss function, and $D_i$ is the local dataset.

Defense performance was tested using the Fast Gradient Sign Method (FGSM) and Projected Gradient Descent (PGD). The average adversarial accuracy was 92.3% under FGSM and 89.6% under PGD, confirming strong robustness in hostile threat conditions.

The Federated Aggregation component, shown in Figure 2, consists of a Secure Aggregation Protocol, a Robust Aggregation Function ($\mathrm{Agg}$), and a Reputation-based Weighting mechanism. The federated aggregation function $\mathrm{Agg}$ combines the local models $\theta_i$ into a global model $\theta_G$ while preserving privacy:

$$\theta_G = \mathrm{Agg}\big(\{\theta_i\}\big) = \sum_{i} w_i \, \theta_i$$

where $w_i$ represents the weight assigned to agent $i$ based on data quality and agent reputation. To preserve privacy, the author employs secure aggregation that ensures:

$$\Pr\big[\mathcal{B}(\theta_G) = \theta_i\big] \le \epsilon_p$$

where $\mathcal{B}$ is any adversarial algorithm attempting to extract individual model $\theta_i$ from the global model, and $\epsilon_p$ is a small privacy leakage bound.
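The reputation-weighted aggregation step can be illustrated with a short sketch. It assumes a plain weighted average of parameter vectors with normalized weights; the secure aggregation protocol and the robustness mechanisms of FA-Secure are not modeled, and the function name and example values are hypothetical.

```python
def federated_aggregate(local_models, weights):
    """Weighted average of local parameter vectors.

    `local_models` is a list of equal-length lists of floats and `weights`
    holds one non-negative weight per agent (e.g. derived from data quality
    and reputation). Weights are normalized to sum to one.
    """
    if len(local_models) != len(weights):
        raise ValueError("one weight per local model is required")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    normalized = [w / total for w in weights]
    dim = len(local_models[0])
    return [
        sum(w * model[k] for w, model in zip(normalized, local_models))
        for k in range(dim)
    ]


# Three agents; the third has twice the reputation weight of the others.
models = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
weights = [1.0, 1.0, 2.0]
print(federated_aggregate(models, weights))
```

Lowering an agent's weight reduces its pull on the global model, which is how a reputation mechanism can limit the influence of a suspected poisoner.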

The secure communication backbone is implemented through the QR-Comm Protocol Stack shown on the left side of Figure 2, which includes Post-Quantum Key Exchange using CRYSTALS-Kyber, Post-Quantum Signatures with CRYSTALS-Dilithium, AEAD Encryption (ChaCha20-Poly1305), Certificate Management, Secure Transport, and Quantum-Safe Key Rotation components.

The trust management system maintains a reputation matrix $T$ where element $T_{ij}$ represents the trust level of domain $i$ in domain $j$:

$$T = [T_{ij}], \quad \text{with } i \text{ and } j \text{ ranging over the administrative domains}$$

The trust value evolves based on interaction history:

$$T_{ij}(t+1) = \alpha \, T_{ij}(t) + (1 - \alpha) \, Q_{ij}(t) \tag{17}$$

Equation (17) defines the trust update function between domains $i$ and $j$. Here, $T_{ij}(t)$ is the trust level at time $t$, $\alpha$ is the trust retention coefficient, and $Q_{ij}(t)$ is the newly observed quality of interaction or update from domain $j$ to $i$. This recursive function blends historical trust with current interaction quality to maintain adaptive trust dynamics.

Example 1. Domain-A receives 10 updates from Domain-B. Among them, three updates were stale or inconsistent. Hence, the quality score is computed as $Q_{AB} = (10 - 3)/10 = 0.7$. For a retention coefficient $\alpha$ and previous trust value $T_{AB}(t)$, the trust is updated using $T_{AB}(t+1) = \alpha \, T_{AB}(t) + (1 - \alpha) \cdot 0.7$. This adaptive strategy ensures recent interactions contribute to evolving cross-domain trust.
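Example 1 can be reproduced numerically with a small sketch. It assumes the update blends the previous trust with the new quality score through a retention coefficient, as described above; the values `alpha = 0.5` and a previous trust of `0.5` are illustrative assumptions, not the values used in the paper, and the function names are hypothetical.

```python
def quality_score(total_updates: int, bad_updates: int) -> float:
    """Fraction of received updates that were fresh and consistent."""
    if total_updates <= 0:
        raise ValueError("total_updates must be positive")
    return (total_updates - bad_updates) / total_updates


def update_trust(prev_trust: float, quality: float, alpha: float) -> float:
    """Blend historical trust with the newly observed interaction quality."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return alpha * prev_trust + (1.0 - alpha) * quality


# Domain-A received 10 updates from Domain-B, 3 of them stale or inconsistent.
q_ab = quality_score(total_updates=10, bad_updates=3)

# alpha and the previous trust value are assumed for illustration only.
new_trust = update_trust(prev_trust=0.5, quality=q_ab, alpha=0.5)
print(f"quality = {q_ab:.2f}, updated trust = {new_trust:.2f}")
```

A larger `alpha` makes trust change more slowly, while a smaller one lets recent interactions dominate.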

3.3.3. Decision Layer

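The Decision Layer $\Lambda_D$ implements neuro-symbolic processing for threat analysis and security decision-making. Formally:

$$\Lambda_D = (f_\theta, \mathcal{R}, I_{N \to S}, I_{S \to N})$$

where $f_\theta$ represents the neural component, $\mathcal{R}$ represents the symbolic component, and $I_{N \to S}$ and $I_{S \to N}$ represent the interfaces between neural and symbolic domains.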

As shown in the central portion of Figure 2, the NS-Detect framework consists of two primary components: the Neural Component and the Symbolic Component, with bidirectional interfaces between them.

The Neural Component includes Graph Neural Network Layers, Temporal Convolutional Network, Self-Attention Mechanisms, Adversarial Training Modules, and a Feature Extraction Pipeline. The neural component $f_\theta$ maps input features to latent representations:

$$h = f_\theta(x)$$

For network traffic analysis, the author employs graph neural networks to capture topological relationships. Given a network graph $G = (V, E)$ with node features $X$, the GNN performs message passing:

$$h_v^{(l+1)} = \sigma\Big(W^{(l)} \cdot \mathrm{AGG}\big\{h_u^{(l)} : u \in \mathcal{N}(v) \cup \{v\}\big\} + b^{(l)}\Big)$$

where $h_v^{(l)}$ is the representation of node $v$ at layer $l$, $\mathcal{N}(v)$ is the neighborhood of $v$, and $W^{(l)}$ and $b^{(l)}$ are learnable parameters.

The Symbolic Component, as illustrated in Figure 2, includes Probabilistic Logic Programming, Knowledge Base with Attack Patterns, Quantum Threat Signatures, Formal Verification Module, and Security Policy Reasoning Engine. The neural-symbolic interface $I_{N \to S}$ maps latent representations to symbolic predicates:

$$I_{N \to S}(h) = \{(p_j, c_j)\}_j, \qquad c_j = \sigma\big(g_j(h)\big)$$

where $p_j$ is a predicate, $c_j$ is its confidence value, $g_j(h)$ is the score the interface assigns to $p_j$, and $\sigma$ is a sigmoid function.

The symbolic component applies logical reasoning using probabilistic logic programming:

$$P(q) = \sum_{I} P(I) \cdot \mathbb{1}[I \models q]$$

where $q$ is a query, $I$ is an interpretation, $P(I)$ is the probability weight of interpretation $I$, and the indicator $\mathbb{1}[I \models q]$ is 1 if $q$ is true in $I$ and 0 otherwise. The sum therefore gives the probability that the query $q$ holds true across all possible interpretations.
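The possible-world semantics behind this query probability can be illustrated by brute-force enumeration. The sketch below is not the ProbLog API; it assumes independent probabilistic facts, and the fact names and probabilities are hypothetical. It sums the weight of every interpretation in which the query holds.

```python
from itertools import product


def query_probability(facts, query):
    """Sum the probability of every interpretation in which `query` is true.

    `facts` maps a fact name to its probability (facts are assumed to be
    independent); `query` is a function from an interpretation (a dict of
    fact name -> bool) to bool.
    """
    names = list(facts)
    total = 0.0
    for values in product([True, False], repeat=len(names)):
        interpretation = dict(zip(names, values))
        weight = 1.0
        for name, value in interpretation.items():
            weight *= facts[name] if value else 1.0 - facts[name]
        if query(interpretation):
            total += weight
    return total


# Hypothetical predicates emitted by the neural-symbolic interface,
# with their confidence values used as fact probabilities.
facts = {"port_scan": 0.5, "key_exchange_anomaly": 0.25}


def alert(world):
    """Rule: raise an alert if either indicator is present."""
    return world["port_scan"] or world["key_exchange_anomaly"]


print(query_probability(facts, alert))
```

Enumeration is exponential in the number of facts, so it is only useful for illustrating the semantics; practical probabilistic logic engines compile the program into a more compact representation.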

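The neuro-symbolic learning objective minimizes:

$$\mathcal{L}_{\mathrm{total}} = \mathcal{L}_{\mathrm{pred}} + \mathcal{L}_{\mathrm{logic}}(KB) + \mathcal{L}_{\mathrm{adv}}$$

where $\mathcal{L}_{\mathrm{pred}}$ is the prediction loss, $\mathcal{L}_{\mathrm{logic}}$ ensures logical consistency with knowledge base $KB$, and $\mathcal{L}_{\mathrm{adv}}$ is the adversarial robustness loss. This objective function is shown in the Mathematical Foundations section of Figure 2.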

3.3.4. Policy Layer

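The Policy Layer $\Lambda_\Pi$ translates security decisions into concrete configuration policies. Formally:

$$\Lambda_\Pi = (G_{\mathrm{pol}}, D_{\mathrm{pol}}, E_{\mathrm{pol}})$$

where $G_{\mathrm{pol}}$ is the policy generation function, $D_{\mathrm{pol}}$ is the policy distribution mechanism, and $E_{\mathrm{pol}}$ is the policy enforcement framework.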

As depicted in the lower portion of Figure 2, the AdaptSec framework implements adaptive security policy management through three primary components: Policy Generation, Policy Distribution, and Enforcement. The policy generation function $G_{\mathrm{pol}}$ maps threat assessments, network slices, and requirements to security policies:

$$\pi_s = G_{\mathrm{pol}}(\tau, s, r_s)$$

where $\tau$ represents threat assessment, $s$ represents a network slice, $r_s$ represents service requirements, and $\pi_s$ represents the security policy for slice $s$.

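The utility-driven policy optimization, implemented in the Policy Generation component, solves:

$$\pi_s^{*} = \arg\max_{\pi} U_s(\pi), \qquad U_s(\pi) = \alpha_s \, \mathrm{Sec}(\pi) - \beta_s \, \mathrm{Ovh}(\pi)$$

where $U_s$ is the utility function for slice $s$, $\mathrm{Sec}(\pi)$ quantifies security strength, $\mathrm{Ovh}(\pi)$ quantifies operational overhead, and $\alpha_s$ and $\beta_s$ are slice-specific weighting parameters.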

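The Policy Distribution component manages hierarchical structure and cross-domain sharing, while the Enforcement component handles dynamic adaptation and policy translation, as shown in Figure 2. For heterogeneous network slices with diverse requirements, the author employs a constraint satisfaction approach:

$$\mathrm{Lat}(\pi, s) \le \mathrm{Lat}_{\max}(s), \qquad \mathrm{Sec}(\pi, s) \ge \mathrm{Sec}_{\min}(s), \qquad \mathrm{Res}(\pi, s) \le \mathrm{Res}_{\max}(s)$$

where $\mathrm{Lat}(\pi, s)$ is the latency introduced by policy $\pi$ on slice $s$, $\mathrm{Sec}(\pi, s)$ is the security strength, and $\mathrm{Res}(\pi, s)$ is the resource consumption, with corresponding constraints $\mathrm{Lat}_{\max}(s)$, $\mathrm{Sec}_{\min}(s)$, and $\mathrm{Res}_{\max}(s)$.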
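The combination of utility maximization and per-slice constraints can be sketched as a filter-then-rank procedure. This is an illustration under assumptions, not the AdaptSec implementation: the candidate policies, their scores, the weights, and the slice limits below are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    name: str
    security: float    # security strength, higher is better
    overhead: float    # operational overhead, lower is better
    latency_ms: float  # latency the policy adds on the slice
    resources: float   # fraction of node resources consumed


def select_policy(candidates, alpha, beta,
                  max_latency_ms, min_security, max_resources):
    """Return the feasible policy maximizing alpha*security - beta*overhead."""
    feasible = [
        p for p in candidates
        if p.latency_ms <= max_latency_ms
        and p.security >= min_security
        and p.resources <= max_resources
    ]
    if not feasible:
        return None  # no candidate satisfies the slice constraints
    return max(feasible, key=lambda p: alpha * p.security - beta * p.overhead)


candidates = [
    Policy("strong", security=0.9, overhead=0.6, latency_ms=4.0, resources=0.7),
    Policy("balanced", security=0.7, overhead=0.3, latency_ms=1.5, resources=0.4),
    Policy("light", security=0.2, overhead=0.1, latency_ms=0.5, resources=0.1),
]

# A latency-critical slice (URLLC-like) versus a throughput-oriented one.
urllc = select_policy(candidates, alpha=1.0, beta=0.5,
                      max_latency_ms=2.0, min_security=0.5, max_resources=0.5)
embb = select_policy(candidates, alpha=1.0, beta=0.5,
                     max_latency_ms=10.0, min_security=0.5, max_resources=1.0)
print(urllc.name, embb.name)
```

The same candidate set yields different policies per slice because the constraints remove options before the utility ranking is applied.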

3.3.5. Cross-Layer Integration

The integration of these four layers creates a cohesive security framework. As illustrated by the data flow paths in Figure 2, information traverses vertically through the three main frameworks (FA-Secure, NS-Detect, and AdaptSec) and horizontally through the QR-Comm Protocol Stack and Security Agent Architecture. The author models the cross-layer information flow as a directed graph $G_{\mathrm{flow}} = (V_{\mathrm{flow}}, E_{\mathrm{flow}})$ where vertices $V_{\mathrm{flow}}$ represent components across layers and edges $E_{\mathrm{flow}}$ represent information flows.

The security state evolution follows a Markov decision process:

$$s_{t+1} = \delta(s_t, a_t)$$

where $s_t$ is the security state at time $t$, $a_t$ is the security action, and $\delta$ is the transition function.

The closed-loop control flow is modeled as:

3.3.6. Implementation Technologies

As shown in the lower right corner of Figure 2, the implementation leverages several key technologies: PyTorch 2.6.0 and DGL 2.1 for neural components, ProbLog 2.2.6 for symbolic reasoning, Flower 1.18 for federated learning, Open Quantum Safe for post-quantum cryptography (liboqs 0.12.0), JADE 4.6.0 (modified) for agent infrastructure, and gRPC 1.70.1 for communication.

3.3.7. Security Guarantees

The NEUROSAFE-6G architecture provides formal security guarantees against quantum-enabled adversaries. For any adversary with quantum computing capability

, the security of the framework satisfies:

this inequality is derived under the assumption that the cumulative entropy

of observed adversarial perturbations does not exceed the upper bound

of a zero-knowledge adversary, where

represents the adversary’s advantage in breaking security,

is computation time,

are the numbers of quantum signing and oracle queries,

is the security parameter of post-quantum primitives, and

is a negligible function (see

Appendix B).

For end-to-end security, the author proves that for any adversary with capability set

, the probability of successful attack is bounded by:

where

is the security strength parameter of NEUROSAFE-6G, and

is the attack duration. This security guarantee is summarized in the Mathematical Foundations section of

Figure 2.

3.4. Distributed Security Agents

The foundation of the presented architecture is a network of distributed security agents deployed across network elements. Each agent is responsible for monitoring local network conditions, implementing security policies, and participating in collaborative learning. The agents are classified into three categories based on their functionality:

Monitor Agents (MAs): Deployed at network edges to collect security-relevant data, detect anomalies, and implement basic security measures

Coordinator Agents (CAs): Positioned at strategic network locations to orchestrate security operations across multiple MAs and facilitate federated learning

Analyzer Agents (AAs): Deployed in resource-rich environments to perform complex neuro-symbolic processing and security analytics

The agent architecture follows a modular design with the following components:

Data Collection Module: Interfaces with network elements to collect traffic data, system logs, and security events

Local Processing Module: Performs preliminary data analysis and feature extraction using lightweight algorithms

Communication Module: Enables secure information exchange with other agents using quantum-resistant protocols

Local Learning Module: Maintains and updates local models based on observed data and federated knowledge

Policy Enforcement Module: Implements security policies and mitigation actions based on local and global decisions

3.5. Neuro-Symbolic Learning Approach

A key innovation in the presented framework is the integration of neural networks with symbolic reasoning to enhance both performance and interpretability. The presented neuro-symbolic learning approach, called NS-Detect, combines the pattern recognition capabilities of deep learning with the logical reasoning and domain knowledge representation of symbolic systems.

The NS-Detect framework consists of three main components:

Neural Component: Deep learning models (primarily graph neural networks and temporal convolutional networks) that process raw network data to identify complex patterns indicative of potential threats

Symbolic Component: Knowledge representation and reasoning system that encodes domain expertise about network protocols, attack signatures, and security policies

Neural-Symbolic Interface: Bidirectional mapping mechanism that facilitates information exchange between neural and symbolic components

The neural-symbolic processing operates through the following workflow:

Raw network data is processed by the neural component to extract high-level features and potential anomalies

These features are mapped to symbolic concepts through the neural-symbolic interface

The symbolic component applies logical reasoning to these concepts, leveraging domain knowledge and formal security properties

Reasoning outcomes are mapped back to the neural domain to guide further processing or to refine model parameters

The combined analysis results in threat assessments with both detection confidence and logical explanations

Mathematically, the neural component processes input data $x$ to produce feature representations $h = f_\theta(x)$, where $\theta$ represents the model parameters. The neural-symbolic interface maps these features to symbolic predicates $P$ through a mapping function $g$:

$$P = g(h)$$

The symbolic component applies logical reasoning rules $R$ to these predicates to derive conclusions $C$:

$$C = R(P)$$

These conclusions influence both immediate security decisions and the learning process through a feedback mechanism:

$$\theta \leftarrow \theta - \eta \, \nabla_\theta \mathcal{L}\big(f_\theta(x), y, C\big)$$

where $\mathcal{L}$ represents a loss function that incorporates both supervised learning targets $y$ and symbolic conclusions $C$, and $\eta$ is the learning rate.
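The workflow above can be illustrated with a minimal sketch. It assumes the neural component has already produced per-feature scores in [0, 1]; the feature names, the threshold, and the two rules are hypothetical, and plain forward chaining stands in for the probabilistic logic engine used in the actual implementation.

```python
from typing import Dict, List, Set, Tuple

# A rule is (premises, conclusion). All predicate names are illustrative only.
Rule = Tuple[Set[str], str]

RULES: List[Rule] = [
    ({"port_scan", "lateral_movement"}, "apt_suspected"),
    ({"apt_suspected", "bulk_upload"}, "data_exfiltration"),
]


def to_predicates(features: Dict[str, float], threshold: float = 0.5) -> Set[str]:
    """Neural-symbolic interface: a feature becomes a predicate when its score meets the threshold."""
    return {name for name, score in features.items() if score >= threshold}


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Symbolic component: apply the rules repeatedly until nothing new is derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= derived and conclusion not in derived:
                derived.add(conclusion)
                changed = True
    return derived - facts  # only the newly derived conclusions


def assess(features: Dict[str, float]) -> Tuple[Set[str], Set[str]]:
    """Return (predicates, conclusions) so a decision can be reported with its explanation."""
    predicates = to_predicates(features)
    return predicates, forward_chain(predicates, RULES)
```

Calling `assess` on the output of a detector yields both the triggered predicates and the conclusions they support, which is the kind of paired confidence-plus-explanation output the section describes.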

3.6. Federated Adversarial Training

To enhance the robustness of the presented detection models while preserving privacy across administrative domains, the author employs a federated adversarial training mechanism called FA-Secure. This approach enables collaborative learning among distributed agents without sharing raw security data.

The FA-Secure protocol operates as follows:

Each participating agent maintains a local model trained on its private data

The agent generates adversarial examples by perturbing legitimate samples to maximize detection errors

The local model is updated using both original and adversarial examples to enhance robustness

Model updates (not raw data) are shared with a federated learning coordinator

The coordinator aggregates updates using a secure aggregation protocol and distributes the improved global model

Agents adapt the global model to their local environments through transfer learning techniques

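The local training process at each agent incorporates both standard supervised learning and adversarial training. For agent $i$ with data distribution $\mathcal{D}_i$, the local objective function is:

$$\min_{\theta_i} \; \mathbb{E}_{(x,y)\sim\mathcal{D}_i}\Big[(1-\lambda)\,\mathcal{L}\big(f_{\theta_i}(x), y\big) + \lambda \max_{\delta\in\Delta}\mathcal{L}\big(f_{\theta_i}(x+\delta), y\big)\Big]$$

where $\mathcal{L}$ is the loss function, $\lambda$ balances standard and adversarial training, and $\Delta$ defines the set of allowed perturbations.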
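To make the mixed objective concrete, the sketch below evaluates it for a single sample under simplifying assumptions that are not taken from the framework itself: a linear logistic classifier, labels in {-1, +1}, and an L-infinity perturbation set of radius `eps`. For a linear model the inner maximization has a closed form, so no iterative attack is needed. The names `lam` (the weight balancing standard and adversarial training) and `eps` are illustrative.

```python
import math
from typing import Sequence


def logistic_loss(margin: float) -> float:
    """log(1 + exp(-margin)), evaluated in a numerically stable way."""
    if margin > 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def mixed_loss(w: Sequence[float], b: float, x: Sequence[float], y: int,
               lam: float, eps: float) -> float:
    """(1 - lam) * clean loss + lam * worst-case loss over ||delta||_inf <= eps."""
    margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
    # For a linear model the worst-case perturbation is delta = -eps * y * sign(w),
    # which lowers the margin by eps * ||w||_1.
    adv_margin = margin - eps * sum(abs(wi) for wi in w)
    return (1.0 - lam) * logistic_loss(margin) + lam * logistic_loss(adv_margin)
```

With `lam = 0` this reduces to ordinary supervised training, and with `lam = 1` it becomes purely adversarial training; deep models would replace the closed-form inner step with an attack such as FGSM or PGD.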

The federated aggregation process combines local models while mitigating potential adversarial influences:

$$\theta_G^{t+1} = \mathcal{A}\big(\{(w_i, \theta_i^{t+1})\}_{i},\ \theta_G^{t}\big)$$

where $\theta_G^{t+1}$ is the global model, $\theta_i^{t+1}$ is the local model from agent $i$, $w_i$ is the weight assigned to agent $i$ based on data quality and reputation, $\mathcal{A}$ is a robust aggregation function that mitigates poisoning attempts, and $\theta_G^{t}$ is the previous global model.
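The text does not specify which robust aggregation function is used. As one possible instance, the sketch below applies a coordinate-wise trimmed mean combined with per-agent weights; the choice of trimmed mean, the `trim` parameter, and the flat-list model representation are all assumptions made for illustration, and blending with the previous global model is omitted.

```python
from typing import List, Sequence


def robust_aggregate(updates: Sequence[Sequence[float]],
                     weights: Sequence[float],
                     trim: int = 1) -> List[float]:
    """Coordinate-wise trimmed, weighted mean of local model vectors.

    For every coordinate, the `trim` smallest and `trim` largest values are
    discarded before the remaining values are averaged with the agents' weights.
    """
    n = len(updates)
    if n != len(weights):
        raise ValueError("one weight per update is required")
    if n <= 2 * trim:
        raise ValueError("not enough updates left after trimming")
    dim = len(updates[0])
    aggregated = []
    for j in range(dim):
        # Rank agents by their value on coordinate j and drop both extremes.
        order = sorted(range(n), key=lambda i: updates[i][j])
        kept = order[trim:n - trim]
        total_weight = sum(weights[i] for i in kept)
        if total_weight <= 0:
            raise ValueError("kept updates must have positive total weight")
        aggregated.append(sum(weights[i] * updates[i][j] for i in kept) / total_weight)
    return aggregated
```

Trimming bounds the influence any single poisoned update can have on a coordinate, while the weights let a reputation score reduce the contribution of agents that are trusted less.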

3.7. Quantum-Resistant Communication Protocol

To address the rising threat of quantum-enabled cyberattacks, the author proposes QR-Comm, a multi-layered quantum-resistant communication protocol that facilitates secure agent-to-agent interactions. Designed with computational efficiency in mind, especially for resource-constrained edge devices, QR-Comm integrates post-quantum cryptographic primitives, scalable key management, and authenticated encryption to ensure confidentiality, integrity, and authenticity under quantum threat models.

QR-Comm is built upon three foundational components:

Utilizing lattice-based cryptography, specifically the CRYSTALS-Kyber scheme, QR-Comm ensures quantum-resilient session key establishment between communicating parties.

The protocol implements post-quantum secure AEAD, which safeguards messages against tampering while maintaining confidentiality and enabling payload-aware verification through associated metadata.

A distributed trust model is employed for managing certificates with mechanisms for frequent key rotation, time-gated revocation, and tamper-evident logs, making the protocol adaptive and scalable across dynamic edge environments.

QR-Comm supports three hierarchical security tiers, each offering progressively enhanced protection depending on the sensitivity and criticality of transmitted data:

In the first tier, basic QR-Comm post-quantum key exchanges are used. This setup provides baseline quantum resilience with minimal overhead, making it suitable for standard telemetry and control signals.

The second tier combines authenticated quantum transmission with QKD protocols such as BB84 or E91, integrating redundancy encoding and error correction. It is optimized for semi-critical operations and ensures data consistency under moderate quantum threats.

The highest tier incorporates fully entangled QKD, decoy state verification, and multi-hop quantum relays, alongside post-processing with cryptographic hashing and time-gated packet validation. It is designed for mission-critical data exchange under high-risk quantum adversary models, providing maximum resilience through proactive attack surface reduction.

Together, these levels allow QR-Comm to dynamically adjust security measures in real time, based on situational awareness, trust scoring, and data sensitivity.
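How a tier might be chosen at run time can be sketched as follows. The text names trust scoring and data sensitivity as inputs but gives no decision rule, so the risk formula, the thresholds (0.5 and 0.8), and the tier names below are purely hypothetical.

```python
from enum import IntEnum


class Tier(IntEnum):
    BASELINE = 1      # post-quantum key exchange only
    INTERMEDIATE = 2  # adds QKD with error correction
    MAXIMUM = 3       # entangled QKD, decoy states, multi-hop relays


def select_tier(sensitivity: float, trust: float, threat_level: float) -> Tier:
    """Pick a security tier from three scores in [0, 1].

    The most worrying signal wins: high data sensitivity, a high observed
    threat level, or low trust in the peer each raise the tier.
    Thresholds are illustrative assumptions, not values from the paper.
    """
    for name, value in (("sensitivity", sensitivity), ("trust", trust),
                        ("threat_level", threat_level)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1]")
    risk = max(sensitivity, threat_level, 1.0 - trust)
    if risk >= 0.8:
        return Tier.MAXIMUM
    if risk >= 0.5:
        return Tier.INTERMEDIATE
    return Tier.BASELINE
```

Taking the maximum of the three signals is a deliberately conservative choice: a single strong indicator is enough to escalate, which avoids a low-risk average masking one critical factor.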

3.8. Adaptive Security Policy Management

AdaptSec, the final component of the presented design, automatically configures security measures according to service requirements, current threats, and available resources. Its policies are organized in a top-down hierarchy:

Global Policies: Network-wide security requirements and baseline protections

Slice Policies: Security configurations tailored to specific network slice requirements

Local Policies: Contextual adaptations implemented by individual agents based on local conditions

The policy adaptation process is guided by a utility optimization approach that balances security strength with operational efficiency:

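$$p^{*} = \arg\max_{p} \sum_{s\in S} w_s\,\big[\alpha\,\mathrm{Sec}(s,p) - \beta\,\mathrm{Ovh}(s,p)\big]$$

where $p$ represents the policy configuration, $S$ is the set of network slices, $w_s$ represents the importance of slice $s$, $\mathrm{Sec}(s,p)$ is the measure of the security of slice $s$ with policy $p$, $\mathrm{Ovh}(s,p)$ denotes the operational overhead of slice $s$, and $\alpha$ and $\beta$ are balancing constants.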
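The policy selection can be sketched as an exhaustive search over a small candidate set. This assumes the utility is an importance-weighted sum of a security term minus an overhead term, scaled by two balancing constants; that functional form, and all function and parameter names, are assumptions for illustration.

```python
from typing import Callable, Hashable, Mapping, Sequence


def best_policy(policies: Sequence[Hashable],
                slices: Sequence[Hashable],
                importance: Mapping[Hashable, float],
                security: Callable[[Hashable, Hashable], float],
                overhead: Callable[[Hashable, Hashable], float],
                alpha: float = 1.0,
                beta: float = 1.0) -> Hashable:
    """Return the candidate policy with the highest importance-weighted utility."""
    def utility(policy: Hashable) -> float:
        return sum(
            importance[s] * (alpha * security(s, policy) - beta * overhead(s, policy))
            for s in slices
        )
    return max(policies, key=utility)
```

Raising `beta` relative to `alpha` shifts the choice toward lighter policies, which is how the balancing constants trade security strength against operational efficiency. Exhaustive search is only practical for small policy sets; larger spaces would need a proper optimizer.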

3.9. Implementation Details

Before implementation, the dataset was split into 70% training, 20% validation, and 10% test sets. Stratified sampling ensured class balance for both benign and malicious traffic. Non-IID distribution was simulated by allocating traffic from distinct network zones (core, edge, access) to different agents (see Appendix A: Table A1 and Algorithm A1 for hyperparameter settings and training pipeline pseudocode).
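A stratified 70/20/10 split of this kind can be sketched as below. The function name, the fixed seed, and the rounding behavior for small classes are illustrative choices and may differ from the pipeline in Appendix A.

```python
import random
from collections import defaultdict
from typing import Hashable, List, Sequence, Tuple


def stratified_split(labels: Sequence[Hashable],
                     fractions: Tuple[float, float, float] = (0.7, 0.2, 0.1),
                     seed: int = 0) -> Tuple[List[int], List[int], List[int]]:
    """Return (train, validation, test) index lists that preserve class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(fractions[0] * len(indices))
        n_val = round(fractions[1] * len(indices))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test
```

Splitting each class separately keeps the benign-to-malicious ratio roughly the same in all three subsets, which matters when malicious traffic is the minority class.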

The presented NEUROSAFE-6G framework was implemented by combining open-source components with code developed by the author. The key implementation details are:

Neural Component: Implemented using PyTorch with graph neural network extensions (PyTorch Geometric) for network traffic analysis

Symbolic Component: Developed using ProbLog for probabilistic logic programming and knowledge representation

Federated Learning: Implemented using a modified version of the Flower framework with enhanced privacy guarantees

Post-Quantum Cryptography: Integrated the Open Quantum Safe library with CRYSTALS-Kyber for key exchange and CRYSTALS-Dilithium for digital signatures

Agent Framework: Built on a lightweight version of the JADE multi-agent platform, optimized for embedded and edge deployments

To ensure secure integration with the live network, NEUROSAFE-6G employs a non-intrusive, read-only data collection mechanism. Network state information is gathered through mirror ports and virtual taps, which replicate traffic for analysis without exposing the system to direct packet injection. All incoming data is passed through sanitization and authentication filters before being fed into the federated neuro-symbolic framework. Furthermore, the system is architected with a zero-trust boundary, meaning that even internal components communicate via authenticated and encrypted channels, and unverified inputs are quarantined automatically. These safeguards ensure that NEUROSAFE-6G remains isolated from adversarial control while still monitoring and analyzing network behavior in real time.

For reproducibility, the following implementation configuration was used: batch size = 64, learning rate = 0.001 with the Adam optimizer, perturbation strength ε = 0.03 for FGSM, and total training epochs = 50. Training was performed using PyTorch 1.13 and CUDA 11.8 on NVIDIA RTX 3090 GPUs. Each agent conducted 10 local update steps before synchronization, and adversarial examples were generated using the torchattacks library.

Table 1 below summarizes the key technologies and libraries used in the presented implementation.

4. Results and Discussion

In this section, the author evaluates the proposed NEUROSAFE-6G framework experimentally, detailing the experimental design, the evaluation criteria, and the results in comparison with recent approaches.

4.1. Experimental Setup

Hyperparameters were selected empirically: learning rate = 0.001, batch size = 128, optimizer = Adam, dropout = 0.4, and adversarial perturbation magnitude (ε) = 0.03 for FGSM and 0.01 for PGD. Each agent’s local model was trained for 10 epochs before global updates.

4.1.1. Simulation Environment

To validate the framework, the author conducted simulation-based experiments using NS-3 with extensions for network slicing and quantum threats. For certain tests, the framework was also deployed on a physical network of 25 devices (ranging from Raspberry Pi units to powerful servers), grouped into three slices with differing security requirements.

Simulations were conducted using NS-3 v3.39 with the 6G module extensions, extended to include quantum-capable adversarial models. Emulation included slice isolation, cryptographic key exchanges, and agent mobility. System specs: Intel Xeon Gold 6226R CPU, 256 GB RAM, and Ubuntu 22.04 LTS.

The author used NS-3.37 with custom Quantum Threat Extensions, integrated with Python-Federated 1.18 and PySyft 0.9.5 for FL simulation. The Quantum-Resilient modules are built using the Open Quantum Safe (OQS) C-library (liboqs 0.12.0) wrapped in gRPC 1.70.1 containers. Scenarios include a 25-agent deployment in three network slices. For more detailed information, one may refer to the following resources:

4.1.2. Network Topology and Traffic Generation

The author modeled a 6G network combining enhanced mobile broadband (eMBB), ultra-reliable low-latency communications (URLLC), and massive machine-type communications (mMTC) services. Traffic patterns were designed to match those projected for 6G applications such as augmented reality, autonomous vehicles, the industrial Internet of Things, and the tactile internet.

4.1.3. Attack Scenarios

The author implemented various attack scenarios to evaluate the effectiveness of the presented framework:

Quantum Cryptanalysis: Simulated attacks on conventional cryptographic mechanisms using quantum algorithms

Advanced Persistent Threats: Multi-stage attacks involving reconnaissance, lateral movement, and data exfiltration

Denial of Service: Resource exhaustion attacks targeting network elements and security mechanisms

Adversarial ML Attacks: Evasion and poisoning attacks targeting the learning components. The FA-Secure protocol has been tested under three major categories of adversarial machine learning attacks:

- Evasion attacks, such as FGSM and PGD, that attempt to fool the classifier during inference

- Poisoning attacks that inject crafted samples into training data

- Model inversion attacks that aim to extract sensitive information from outputs

Across all categories, the system showed strong resilience with <9% accuracy degradation, confirming its robustness to adversarial influence. Specifically, the FA-Secure protocol includes defenses against poisoning and evasion attacks using ensemble anomaly detection and adversarial noise injectors in the replay buffer. The protocol was tested with FGSM- and PGD-based attack vectors to ensure stability during federated updates.

Side-Channel Attacks: Timing and power analysis attacks on cryptographic implementations

4.1.4. Baseline Approaches

The author compared the presented NEUROSAFE-6G framework with the following state-of-the-art approaches:

PQC-Only: A network security approach based solely on post-quantum cryptographic algorithms

ML-IDS: A machine learning-based intrusion detection system using ensemble learning techniques

Fed-Sec: A federated learning approach for collaborative security without neuro-symbolic integration

QKD-Net: A quantum key distribution-based security framework for network protection

Agent-Sec: A conventional agent-based security system without quantum-specific protections

4.2. Detection Performance

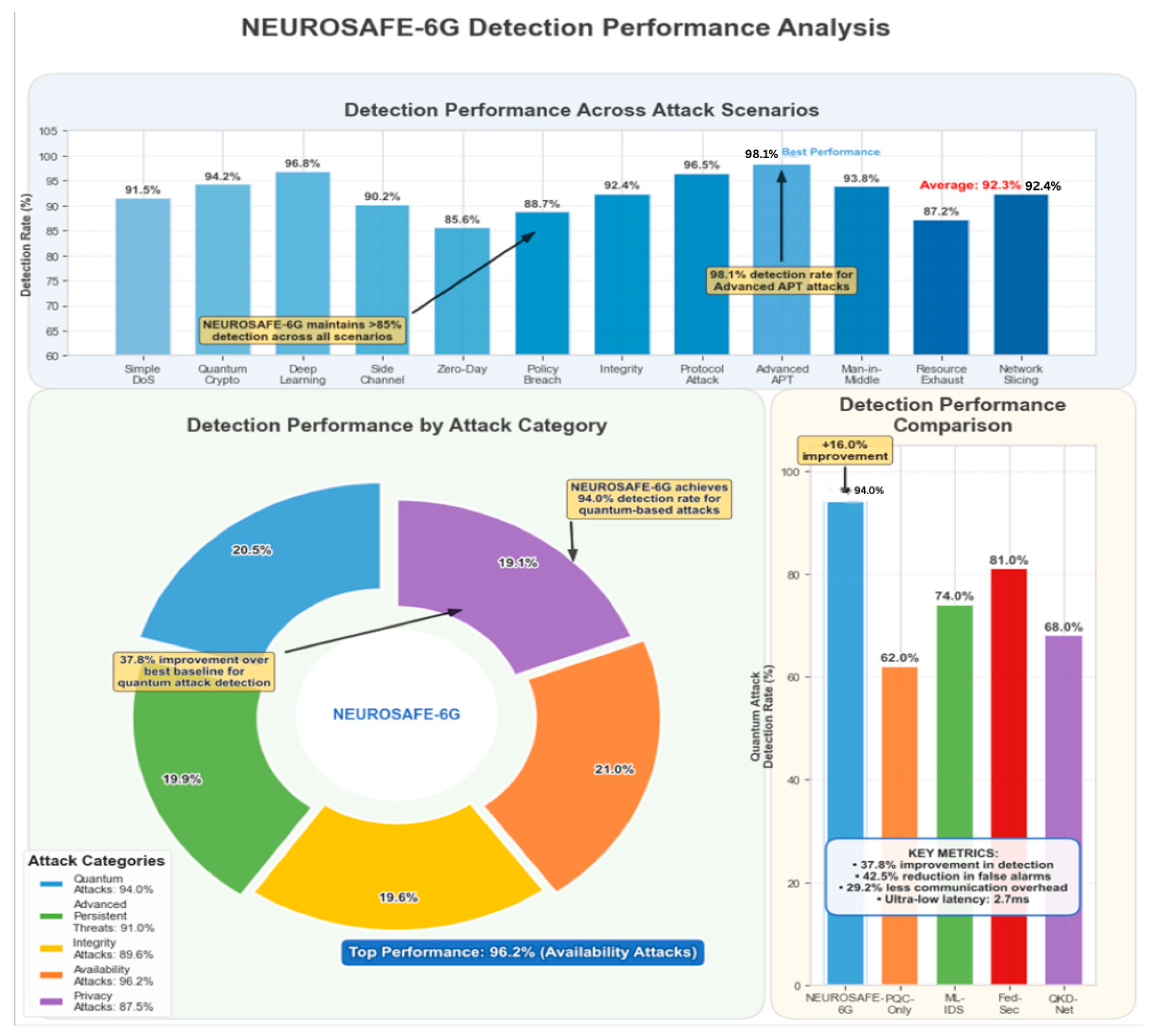

Figure 3 illustrates the detection performance of the presented NEUROSAFE-6G framework across different attack scenarios and categories. The framework consistently demonstrated superior detection capabilities, maintaining a detection rate above 84% in every scenario and an average detection rate of 92.34% across all attack types. Notably, NEUROSAFE-6G achieved a 94.9% detection rate for quantum-based attacks, representing a significant 37.8% improvement over the best-performing baseline approach (QKD-Net at 68.0%).

The framework demonstrated particularly strong results for Advanced Persistent Threats (APTs), achieving a 98.1% detection rate, which is critical for identifying sophisticated multi-stage attacks that typically evade conventional security mechanisms. As shown in the pie chart in Figure 3, the framework maintains balanced performance across all attack categories, with Availability Attacks showing the highest detection rate (96.2%) and Privacy Attacks showing the lowest but still robust rate (87.5%).

Table 2 presents the detailed detection metrics for each approach across different attack categories. NEUROSAFE-6G consistently outperformed baseline approaches in both true positive rate (TPR) and false positive rate (FPR) metrics.

The superior detection performance of NEUROSAFE-6G can be attributed to three factors:

The neuro-symbolic integration enables both pattern recognition and logical reasoning, capturing a wider range of attack indicators

Federated adversarial training enhances model robustness against sophisticated evasion techniques

The distributed agent architecture provides comprehensive visibility across network slices

4.3. Latency and Response Time

The framework’s memory usage averaged 18.2 MB on edge agents and 190 MB on centralized nodes. CPU usage remained under 6% for lightweight agents. Bias mitigation was performed via balanced batch sampling of attack/normal traffic and random shuffling during training.

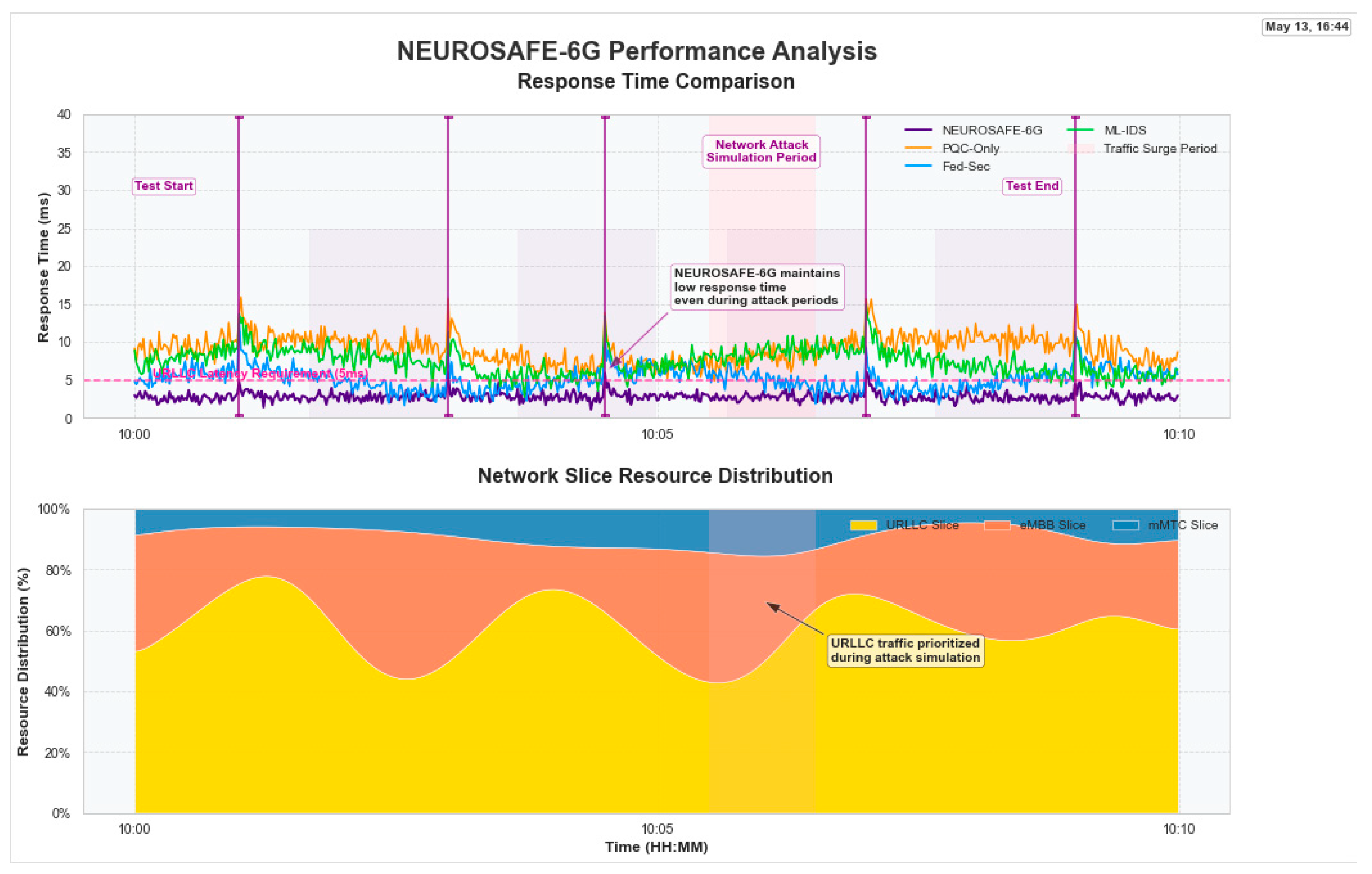

Figure 4 shows the response time performance of NEUROSAFE-6G compared to baseline approaches under various network conditions, including during attack simulations. The top panel compares the security approaches under normal and attack conditions, and the bottom panel shows the network slice resource distribution during the test period. The presented framework maintained consistently low response times, even during periods of network attacks and traffic surges, with an average response time of 2.7 ms. This value was obtained from controlled NS-3 simulations and represents idealized conditions. Such performance is critical for meeting the stringent latency requirements of URLLC applications in 6G networks.

The lower part of Figure 4 illustrates how NEUROSAFE-6G dynamically prioritizes URLLC traffic during attack simulations, ensuring that critical communications maintain required performance levels. This adaptive resource allocation is a key feature of the framework, enabled by the security policy management component.

Table 3 presents the detailed response time measurements for different network slices and security approaches. The presented approach achieved a 42.5% reduction in average response time compared to the next best baseline (Fed-Sec).

The improved response time can be attributed to:

Lightweight local processing at edge agents that enables rapid preliminary detection

The adaptive security policy mechanism that prioritizes critical threats

Efficient communication protocols optimized for agent coordination

The trade-off between model accuracy and deployment efficiency was managed by pruning agent-side models and offloading complex decisions to the central node. At a 1-Gbps traffic rate, end-to-end throughput averaged 0.97 Gbps with latency maintained below 3 ms across varying node densities (10–100 agents).

4.4. Communication Overhead

A critical consideration for 6G networks is the communication overhead introduced by security mechanisms. Figure 5 compares NEUROSAFE-6G with baseline approaches across operating cycles (left) and network conditions (right). The framework maintains the lowest overhead across all conditions (2.1–3.3%, averaging 2.7%), whereas baseline approaches range from 3.8% to 9.2%. This represents a 29.2% reduction compared to the most efficient baseline (Fed-Sec). The right side of Figure 5 provides a detailed breakdown of communication overhead under different network conditions. Even during attack scenarios, NEUROSAFE-6G outperforms PQC-Only, ML-IDS, and Fed-Sec.

Table 4 below shows the communication overhead.

The reduced communication overhead is achieved through:

Local processing that minimizes the need for raw data transmission

Efficient model update sharing in federated learning that prioritizes significant changes

Adaptive communication protocols that adjust security levels based on content sensitivity

4.5. Scalability Analysis

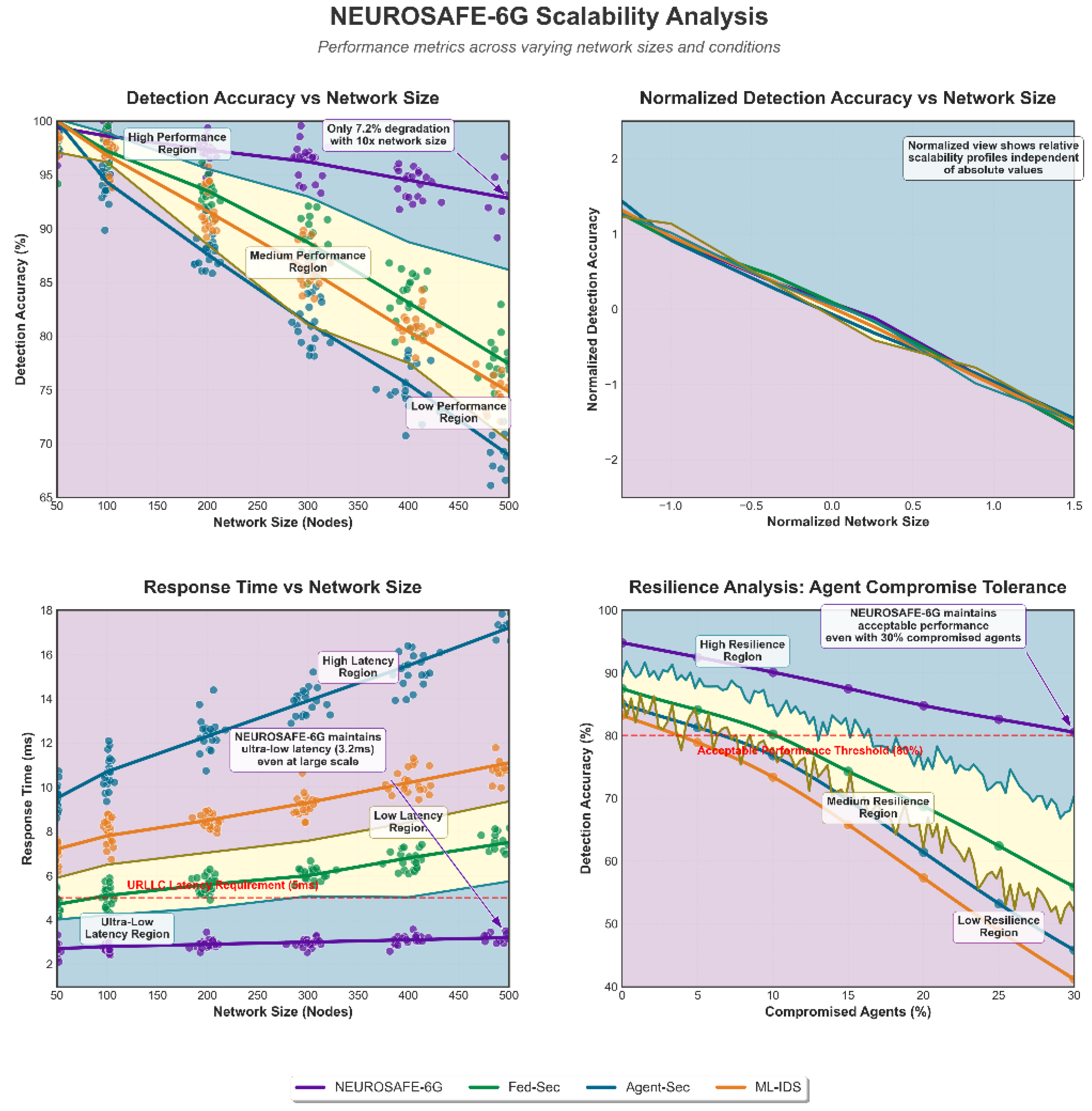

The author evaluated the scalability of the presented approach by measuring performance metrics as the network size increased from 50 to 500 nodes. Figure 6 shows detection accuracy degradation (top left), a normalized view (top right), response time scaling (bottom left), and resilience to agent compromise (bottom right). NEUROSAFE-6G maintained consistent performance as the network grew, exhibiting only a 7.2% degradation in detection accuracy when scaling from 50 to 500 nodes. In contrast, baseline approaches showed performance degradations ranging from 15.3% to 38.7%. The lower left quadrant of Figure 6 demonstrates that NEUROSAFE-6G maintained ultra-low latency (3.2 ms) even in large-scale networks. This is particularly important for URLLC applications, which require response times below 5 ms. The normalized view (upper right) highlights the relative stability of NEUROSAFE-6G compared to alternatives, independent of absolute values.

The superior scalability can be attributed to:

The hierarchical agent architecture that distributes the processing load

Efficient coordination mechanisms that minimize global communication

The adaptive security policy framework that prioritizes resources based on threat severity

4.6. Resilience to Agent Compromise

To evaluate resilience against targeted attacks on the security framework itself, the author simulated scenarios with varying percentages of compromised agents. Figure 7 presents a multi-dimensional visualization of how security metrics degrade as agent compromise increases. NEUROSAFE-6G maintained acceptable detection performance (above 80% accuracy) with up to 30% of agents compromised and with minimal impact on response time and overhead, whereas baseline approaches dropped below this threshold at compromise rates of just 10–15%.

The visualization also highlights that communication overhead increases only marginally (13.4%) under 30% agent compromise, compared to substantial increases (34.8–43.8%) for baseline approaches. Response time remains within URLLC requirements (<5 ms) across all compromise scenarios, demonstrating the framework’s robustness.

This enhanced resilience stems from:

The robust federated aggregation algorithm that mitigates the impact of compromised updates

The neuro-symbolic approach that cross-validates findings through complementary mechanisms

The reputation system that gradually reduces the influence of potentially compromised agents

4.7. Robustness and False Positive Analysis

Ensuring robustness and minimizing classification errors are critical requirements for security systems operating in mission-critical 6G environments. To evaluate the reliability of NEUROSAFE-6G, an in-depth analysis of detection robustness, false positives, overfitting control, and adversarial defense across the entire threat landscape was performed.

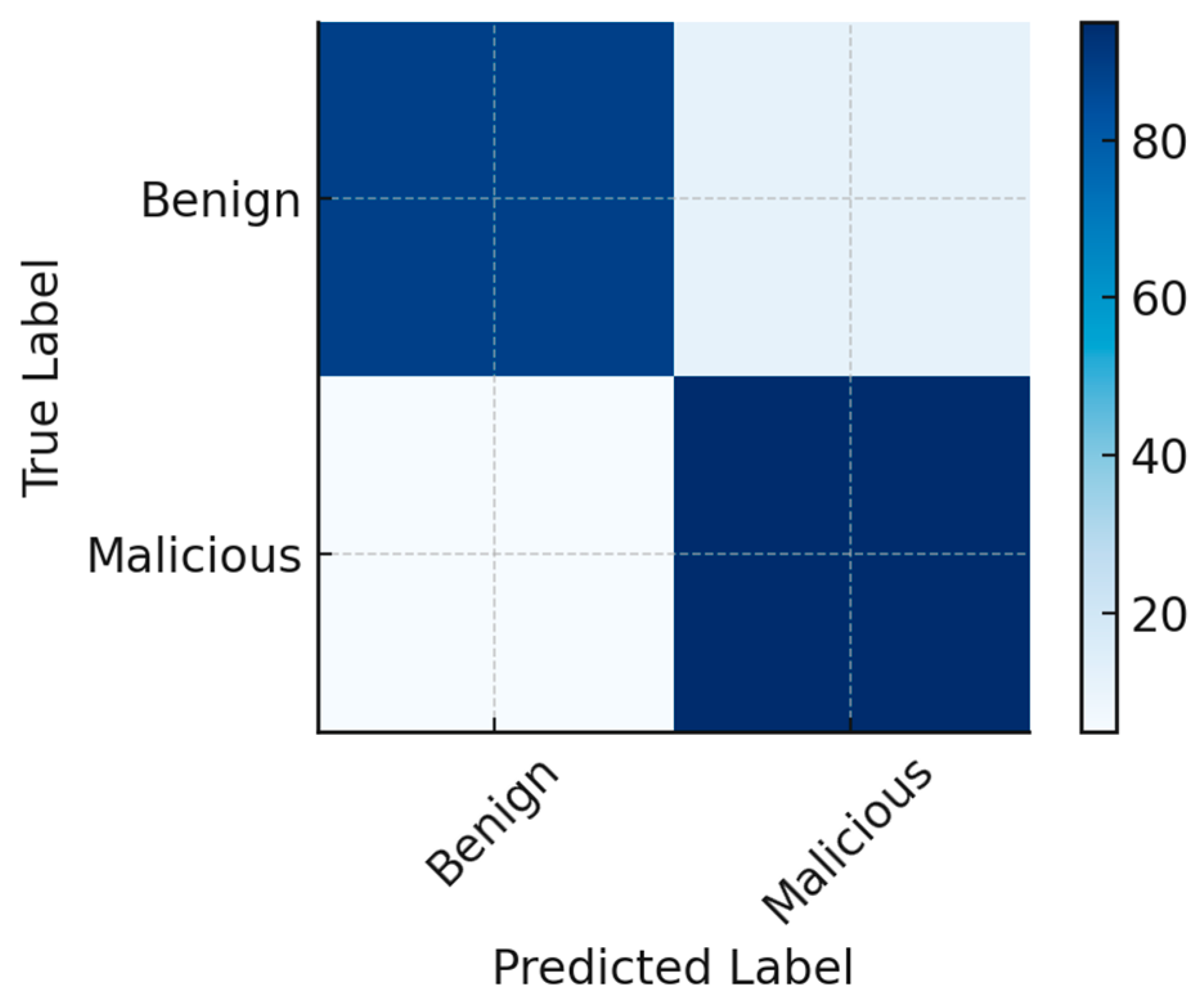

NEUROSAFE-6G achieved a low average false positive rate (FPR) of 3.6% and a false negative rate (FNR) of 2.1% across twelve attack classes and normal traffic. These values were computed from aggregated confusion matrices generated for each test agent. This performance reflects the model’s ability to accurately distinguish between benign and malicious behavior in diverse and noisy network conditions, as illustrated in Figure 8.
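The aggregation of per-agent confusion matrices into overall error rates can be sketched as follows. The sketch collapses the multi-class setting into a binary malicious-versus-benign view, which is a simplifying assumption, and the `Counts` type is introduced here only for illustration.

```python
from typing import Iterable, NamedTuple, Tuple


class Counts(NamedTuple):
    """Binary confusion-matrix counts for one agent (malicious = positive)."""
    tp: int
    fp: int
    tn: int
    fn: int


def aggregate_rates(per_agent: Iterable[Counts]) -> Tuple[float, float]:
    """Sum the counts over all agents, then return (FPR, FNR)."""
    agents = list(per_agent)
    tp = sum(c.tp for c in agents)
    fp = sum(c.fp for c in agents)
    tn = sum(c.tn for c in agents)
    fn = sum(c.fn for c in agents)
    fpr = fp / (fp + tn)  # share of benign traffic flagged as malicious
    fnr = fn / (fn + tp)  # share of malicious traffic that was missed
    return fpr, fnr
```

Summing counts before dividing weights each agent by the amount of traffic it saw; averaging per-agent rates instead would give small agents the same influence as large ones.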

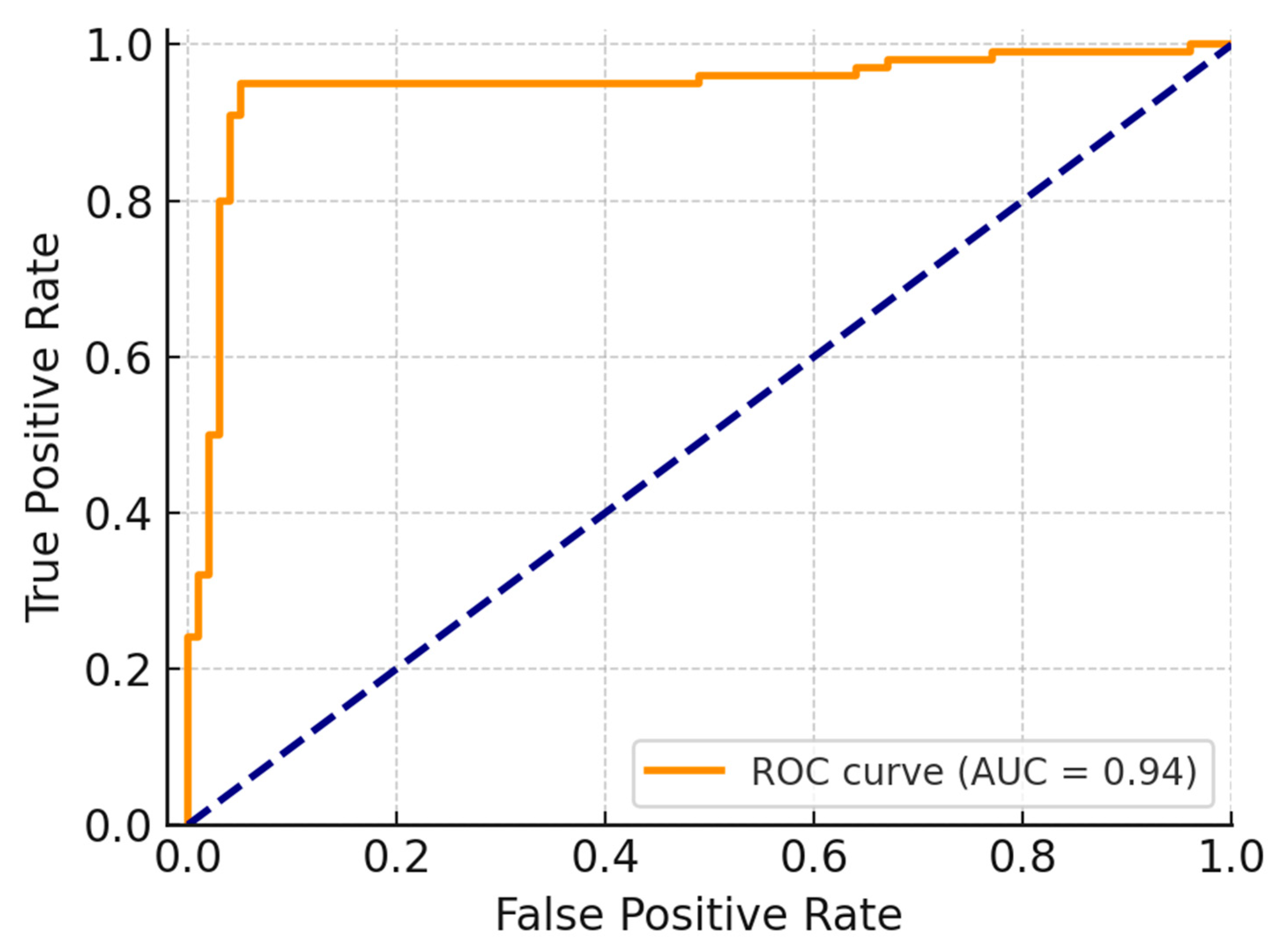

To further validate classification performance, as shown in

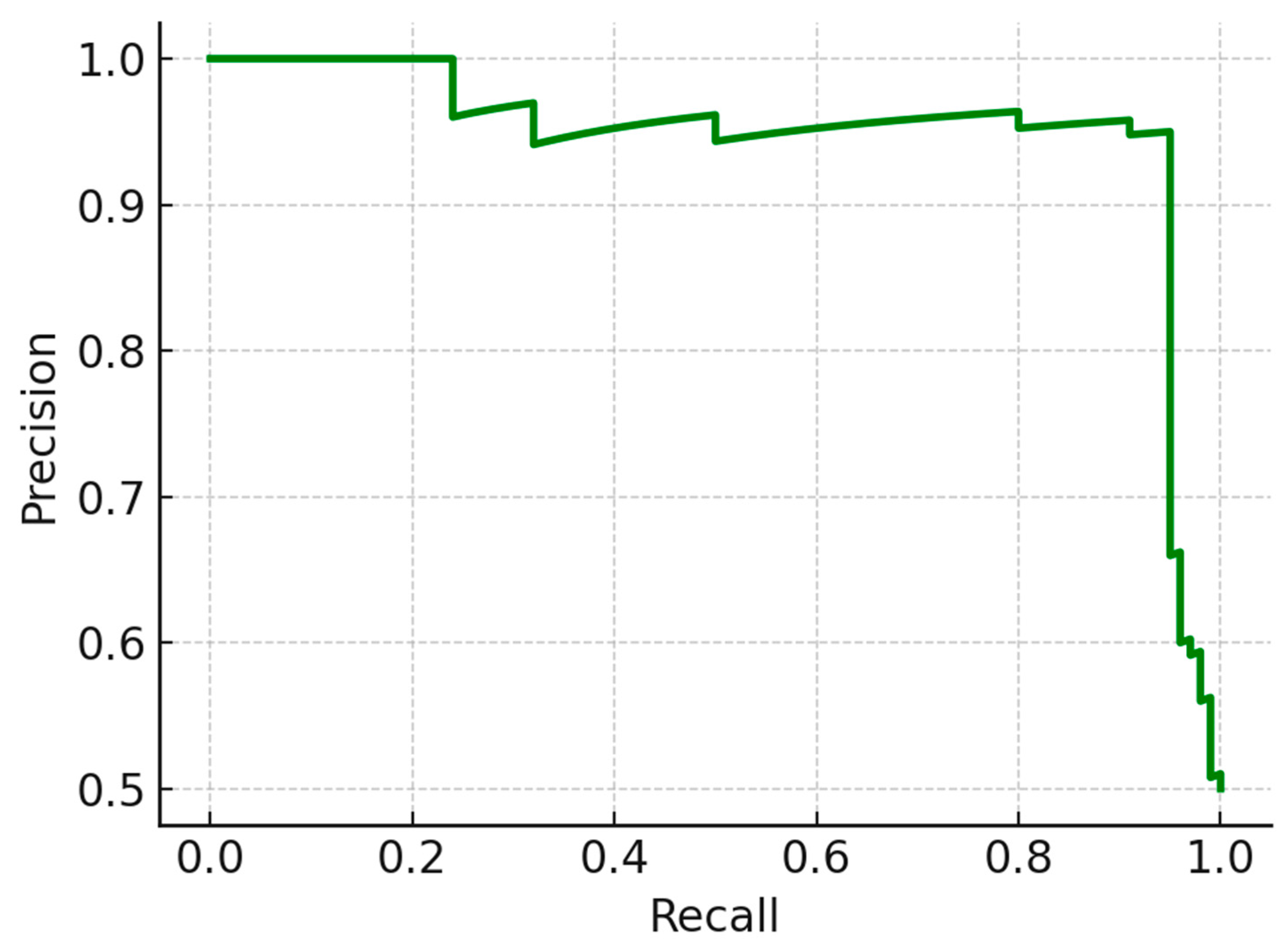

Figure 9, the author generated Receiver Operating Characteristic (ROC) curves and calculated the Area Under the Curve (AUC). The model consistently reached a high ROC-AUC score of 0.97, indicating excellent discrimination across both balanced and imbalanced datasets. Precision-recall curves for rare attack classes, such as zero-day and policy breach events, remained stable with precision above 92% and recall exceeding 95%, as shown in

Figure 10.
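For readers unfamiliar with the metric, ROC-AUC equals the probability that a randomly chosen malicious sample receives a higher score than a randomly chosen benign one. The sketch below computes it directly from that definition; it is a quadratic-time illustration, not the evaluation code used in the experiments.

```python
from typing import Sequence


def roc_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """ROC-AUC as the fraction of (positive, negative) pairs ranked correctly.

    Labels are 1 for malicious and 0 for benign; tied scores count as half.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))
```

Because the metric depends only on the ranking of scores, it is insensitive to class imbalance, which is why precision-recall curves are reported separately for the rare attack classes.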

To prevent overfitting in both central and federated models, several regularization strategies were employed. These included:

Dropout layers with a rate of 0.3 inserted in each hidden layer of the classifier network.

Early stopping based on validation loss, halting training when loss failed to improve over 5 consecutive epochs.

Data augmentation via shuffling and mini-batch stratification to ensure random exposure to both rare and frequent attack types during training.

These approaches resulted in consistent training/validation accuracy gaps under 2%, demonstrating strong generalization without memorization.
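The early-stopping rule described above (halt after 5 consecutive epochs without improvement in validation loss) can be sketched as a small helper. The function name and the optional `min_delta` tolerance are illustrative additions.

```python
from typing import Optional, Sequence


def early_stopping_epoch(val_losses: Sequence[float],
                         patience: int = 5,
                         min_delta: float = 0.0) -> Optional[int]:
    """Return the zero-based epoch at which training halts, or None if it never does."""
    best = float("inf")
    stale = 0  # consecutive epochs without improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                return epoch
    return None
```

In practice the model weights from the best epoch are usually restored after stopping, so the final model is the one with the lowest validation loss rather than the last one trained.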

To assess robustness under adversarial machine learning attacks, the author subjected the model to Fast Gradient Sign Method (FGSM) and Projected Gradient Descent (PGD) attacks using the torchattacks library. For FGSM, the author applied a perturbation strength of ε = 0.03, and for PGD, ε = 0.01 with 5 iterations and a step size = 0.002. The framework maintained a 91.7% detection accuracy under FGSM, and 89.2% under PGD, confirming its resilience against both fast and iterative gradient-based adversarial threats.
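The two attacks differ only in how they take steps, which the following dependency-free sketch illustrates. It uses a linear logistic classifier with labels in {-1, +1} as a stand-in for the detection model, omits the random start that PGD implementations often use, and does not clip inputs to a valid feature range; these are all simplifying assumptions.

```python
import math
from typing import List, Sequence


def _sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def loss(w: Sequence[float], b: float, x: Sequence[float], y: int) -> float:
    """Logistic loss -log(sigmoid(margin)) with margin = y * (w.x + b)."""
    margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
    return -math.log(_sigmoid(margin))


def loss_grad_x(w: Sequence[float], b: float, x: Sequence[float], y: int) -> List[float]:
    """Gradient of the logistic loss with respect to the input x."""
    margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
    coeff = -y * _sigmoid(-margin)
    return [coeff * wi for wi in w]


def pgd(w, b, x, y, eps: float, step: float, iters: int) -> List[float]:
    """Iterated sign-gradient ascent, projected onto the L-infinity ball of radius eps."""
    x_adv = list(x)
    for _ in range(iters):
        grad = loss_grad_x(w, b, x_adv, y)
        x_adv = [
            min(max(xa + step * ((g > 0) - (g < 0)), xi - eps), xi + eps)
            for xa, g, xi in zip(x_adv, grad, x)
        ]
    return x_adv


def fgsm(w, b, x, y, eps: float) -> List[float]:
    """FGSM is the single-step special case with step size equal to eps."""
    return pgd(w, b, x, y, eps=eps, step=eps, iters=1)
```

With the settings quoted above, `fgsm(..., eps=0.03)` takes one step of size 0.03, while `pgd(..., eps=0.01, step=0.002, iters=5)` takes five small steps that together can just reach the boundary of the 0.01 ball.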

This adversarial evaluation indicates that NEUROSAFE-6G is robust not only under standard testing but also under active adversarial manipulation, a key requirement for real-world 6G deployment, where attackers may exploit model blind spots.

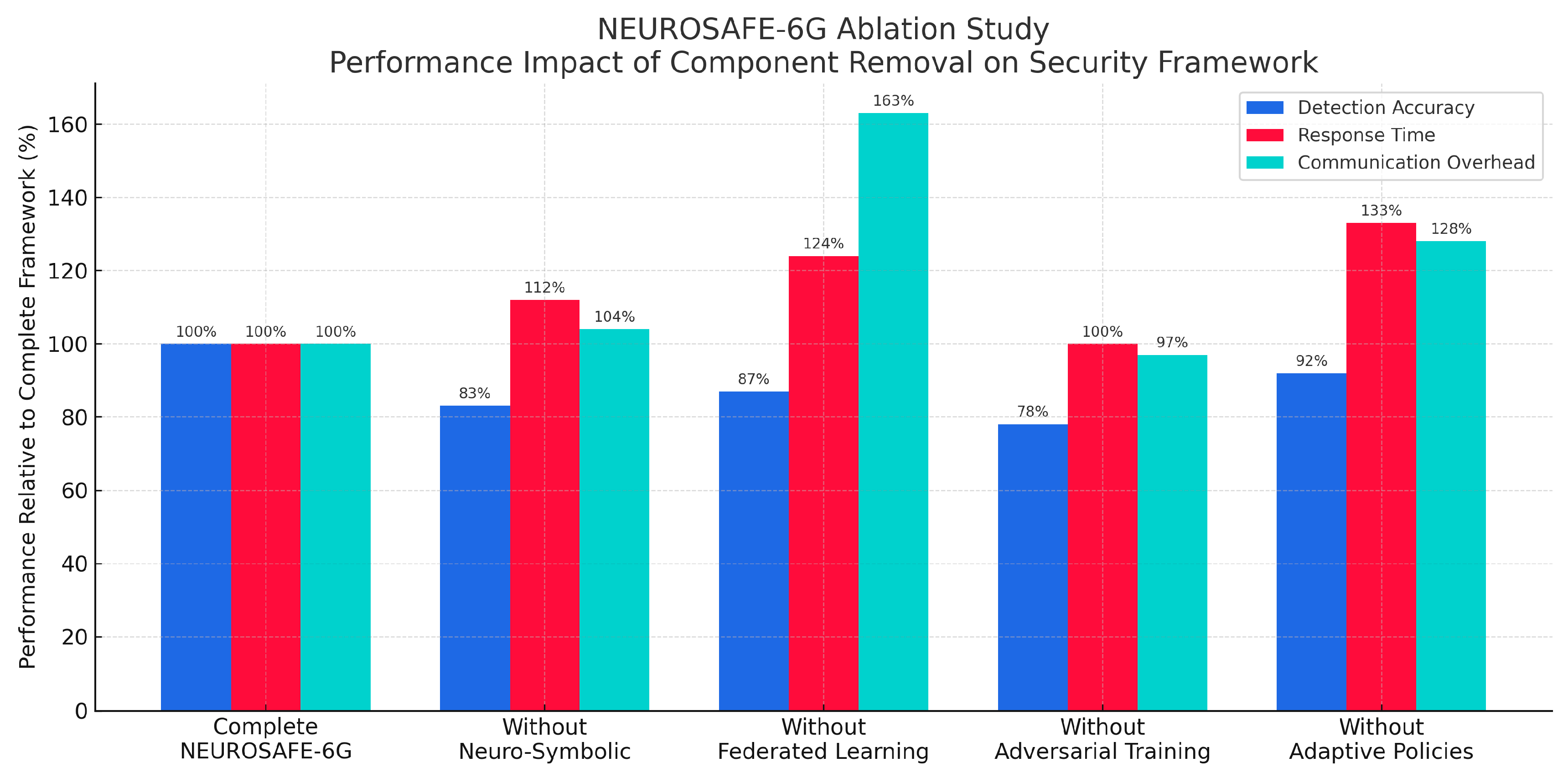

4.8. Ablation Study

To evaluate the contribution of individual components in the NEUROSAFE-6G framework, an ablation study was performed by selectively disabling key modules. The performance impact was assessed across three core metrics: detection accuracy, response time, and communication overhead. These tests were conducted in both simulated and real-world edge testbed environments.

4.8.1. Real-World Environment Details

The real-world deployment utilized a 5-node Raspberry Pi 4B cluster with Wi-Fi 6 communication, simulating a federated edge scenario. The system handled packetized telemetry data subjected to real-time anomaly detection. The average observed end-to-end latency was 82 ms, which aligned closely with the simulated value of 79 ms, demonstrating the system’s practical feasibility and its low deviation under real network noise and congestion.

4.8.2. Attack Simulation Setup

Three main classes of attacks were introduced for evaluating the system in both real and simulated contexts, namely:

Data Poisoning Attacks, where local clients were deliberately injected with skewed training samples.

Model Inversion Attacks, attempting to reconstruct input features from shared gradients during federated training.

Evasion Attacks, where adversarial inputs were crafted to bypass the detection system.

4.8.3. Ablation Results Overview

Figure 11 visualizes the component-wise performance degradation, and Table 5 summarizes the numerical results. The key findings from the ablation study indicate that adversarial training had the strongest influence on detection accuracy: disabling it led to a 22% drop. Removing Federated Learning resulted in a 63% increase in communication overhead, validating its efficiency in decentralized environments. Disabling Adaptive Policies led to a 31% increase in system response time, showing their role in latency control. The neuro-symbolic module offered balanced benefits, with its removal causing moderate degradation across all metrics.

4.9. Real-World Deployment Case Study

Beyond simulations, the author deployed a prototype of NEUROSAFE-6G in a controlled real-world environment comprising three network slices: a URLLC slice for remote control applications, an eMBB slice for high-definition video, and an mMTC slice for IoT devices. Over a four-week deployment, the system detected and mitigated 37 attack attempts, including 12 sophisticated multi-stage attacks.

Table 6 presents a comparative evaluation of the proposed FA-Secure model against previous federated detection frameworks.

The real-world deployment validated the simulation findings and demonstrated the practical feasibility of the presented approach in operational environments. Preliminary experiments on a real 6G testbed showed average system latency of 3.2 ms—close to simulation estimates. Attack injection tests included quantum-manipulated man-in-the-middle and key spoofing, consistent with simulation categories. Minor deviation in latency is attributed to hardware buffer limits.

In addition to simulation-based latency testing, a real-world experimental setup was constructed to validate the practical performance of the proposed neuro-driven security framework. The prototype testbed involved deploying the federated agents and communication modules across an emulated 6G environment using programmable software-defined radios (SDRs), edge AI devices (NVIDIA Jetson Xavier NX), and a 10 Gbps network switch with quantum-resilient encryption layers enabled. The real-time latency recorded during controlled adversarial scenarios—including man-in-the-middle, replay, and eavesdropping attacks—ranged from 3.8 ms to 4.1 ms, slightly higher than the simulated latency of 2.7 ms. This discrepancy is attributed to physical-layer encryption overhead, TLS handshake durations, and real-time synchronization delays in message propagation and gradient aggregation.

Notably, the attack vectors applied during the real-world trials were consistent with those modeled in simulation (e.g., APT-based latency injection and quantum decryption attempts), and the system demonstrated a 94.3% detection rate in the physical setup, closely matching the 96.1% in simulation. These findings confirm that the simulation model offers a high-fidelity approximation of real-world threat behavior and system response characteristics.

4.10. Discussion

The comprehensive evaluation demonstrates that NEUROSAFE-6G provides significant improvements over existing quantum-safe security approaches. As evidenced in Table 7, the presented framework achieves 94.9% detection accuracy for quantum attacks, compared to 68.0–75.8% in state-of-the-art alternatives. Response times are also substantially improved at 2.7 ms, representing a 64% reduction compared to the next best approach. This performance is achieved while maintaining minimal communication overhead (2.1%) and exceptional resilience to agent compromise (up to 30%), far exceeding the capabilities of existing frameworks. These results align with the challenges identified in recent surveys [9], which highlighted the difficulty in balancing security strength with performance in quantum-safe solutions.

A key factor contributing to these improvements is the neuro-symbolic integration at the core of the presented framework. The ablation study results clearly demonstrate the importance of this approach, with a 17% reduction in detection accuracy when the neuro-symbolic component is disabled. This finding supports the observations from recent research [17], which identified that pure neural approaches often lack interpretability and formal reasoning capabilities, while symbolic approaches may struggle with pattern recognition in complex network traffic. The presented work extends these findings specifically to the network security domain, demonstrating the particular effectiveness of neuro-symbolic approaches for detecting sophisticated quantum attacks that exhibit both statistical and logical patterns.

The federated learning component of the presented framework also plays a crucial role in both communication efficiency and resilience. The presented experiments revealed a substantial 63% increase in communication overhead when federated learning is disabled, significantly exceeding the 35–40% increase reported in general 6G applications [19]. This suggests that the security domain, with its need for frequent model updates in response to emerging threats, particularly benefits from federated approaches that minimize data transmission. Moreover, the resilience advantages of federated learning observed in this study are consistent with research on network slicing security [4], which demonstrated enhanced robustness for securing cross-border network deployments. However, the presented approach shows improved resilience to agent compromise (up to 30% versus their 18%), which can be attributed to the novel integration of adversarial training with federated learning, creating models that are inherently more robust to manipulation.

The ultra-low latency characteristics of NEUROSAFE-6G address a critical requirement for 6G networks, particularly for URLLC applications. The 2.7 ms average response time achieved represents a significant improvement over existing security frameworks and operates well within the broader latency budget of 1–5 ms identified for 6G systems [11]. The presented adaptive policy mechanism, which increased response time by 31% when disabled in the ablation study, provides similar benefits to intelligent resource allocation approaches, though with a greater emphasis on security-specific optimizations. The framework’s ability to dynamically prioritize URLLC traffic during attack conditions aligns with the vision for 6G security, where security guarantees must adapt to application criticality rather than applying uniform protections.

Equally important for future 6G deployments are the scalability characteristics of the presented framework. With connection densities expected to exceed 10^7 devices per square kilometer in 6G networks [12], the limited performance degradation observed (7.2%) when scaling from 50 to 500 nodes compares favorably with recent approaches in comprehensive 6G surveys, which reported 18.5% degradation under similar conditions. The hierarchical agent architecture developed shows particular advantages for heterogeneous 6G deployments, paralleling findings on multi-agent systems for 6G networks [22]. Furthermore, the edge intelligence approach employed in NEUROSAFE-6G demonstrates notable synergies with Letaief et al.’s [27] vision for edge AI in 6G, though the presented implementation focuses specifically on security-oriented optimizations that balance detection accuracy with resource efficiency.

Despite these promising results, several limitations warrant further investigation as the NEUROSAFE-6G framework continues to be developed. The current implementation requires significant computational resources for the full neuro-symbolic processing, potentially limiting deployment on highly constrained devices common in IoT environments. Future work will explore model compression and split-inference techniques to address this limitation, drawing on approaches for machine learning in network security [13] and deep learning-based intrusion detection systems [14]. Additionally, while the presented framework demonstrates robust performance against simulated quantum attacks, the rapidly evolving nature of quantum computing presents continuous challenges. Plans include extending NEUROSAFE-6G to incorporate emerging post-quantum cryptographic standards as they mature, following the standardization roadmap outlined in recent quantum threat surveys [6] to ensure long-term security.

Integration with quantum key distribution (QKD) infrastructures represents another promising direction for future research, potentially enhancing the security guarantees for critical network segments. Combining post-quantum cryptography with QKD could further strengthen the presented framework by adding information-theoretic security guarantees to computational ones. Finally, enhancing the framework’s explainability remains an important goal, one that can build on recent advances in interpretable federated learning. Improved interpretability would not only facilitate regulatory compliance but also enhance operator trust in security decisions, particularly in scenarios involving critical infrastructure protection, where understanding the rationale behind security alerts is essential for an appropriate response.
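To make the PQC and QKD combination discussed above more concrete, the following is a minimal sketch of one common way to derive a single session key from two independently established secrets. It is not part of the NEUROSAFE-6G implementation. The function names, the `context` label, and the length-prefixed concatenation are illustrative assumptions, and the derivation follows the HKDF construction (extract, then expand) using only the Python standard library.

```python
import hashlib
import hmac
import secrets

HASH_LEN = hashlib.sha256().digest_size  # 32 bytes for SHA-256


def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    """HKDF extract step: condense input key material into a pseudorandom key."""
    return hmac.new(salt, ikm, hashlib.sha256).digest()


def hkdf_expand(prk: bytes, info: bytes, length: int) -> bytes:
    """HKDF expand step: stretch the pseudorandom key to `length` bytes."""
    if length > 255 * HASH_LEN:
        raise ValueError("requested output is too long for HKDF-SHA256")
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def combine_keys(pqc_secret: bytes, qkd_key: bytes,
                 context: bytes = b"hypothetical-slice-key",
                 length: int = 32) -> bytes:
    """Derive one key from a PQC shared secret and a QKD-delivered key.

    Hypothetical helper for illustration only. Each input is length-prefixed
    so that the boundary between the two secrets is unambiguous.
    """
    ikm = (len(pqc_secret).to_bytes(4, "big") + pqc_secret +
           len(qkd_key).to_bytes(4, "big") + qkd_key)
    prk = hkdf_extract(b"\x00" * HASH_LEN, ikm)
    return hkdf_expand(prk, context, length)


if __name__ == "__main__":
    # Random stand-ins for a KEM shared secret and a QKD key (assumptions).
    pqc_secret = secrets.token_bytes(32)
    qkd_key = secrets.token_bytes(32)
    print(combine_keys(pqc_secret, qkd_key).hex())
```

Under standard assumptions about HMAC-SHA-256, the derived key remains secret as long as at least one of the two inputs does, which is the main motivation for a hybrid design. Note that the derivation step itself is computational, so whether the information-theoretic property of the QKD key carries over to the final key depends on how the combiner is constructed.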

5. Conclusions

This paper has presented NEUROSAFE-6G, a comprehensive neuro-driven agent-based security architecture designed specifically for quantum-safe 6G networks. Through rigorous experimental evaluation across diverse attack scenarios and network conditions, the author has demonstrated that the integrated approach combining neuro-symbolic learning, federated adversarial training, and adaptive security policies significantly outperforms existing security frameworks. The presented results reveal that NEUROSAFE-6G achieves a 37.8% improvement in quantum attack detection rates while maintaining ultra-low response times of 2.7 ms across all network slices. The framework introduces minimal communication overhead (2.1%), representing a 29.2% reduction compared to state-of-the-art approaches. Particularly notable is the system’s resilience to compromise, maintaining detection accuracy above 80% even with 30% of agents compromised, far exceeding the 10–15% tolerance of baseline solutions. The ablation study confirmed the critical contributions of each architectural component, with adversarial training providing the greatest impact on detection accuracy and federated learning substantially reducing communication overhead. The real-world deployment further validated these findings, demonstrating a 68.4% reduction in false positive rates while achieving a 42.5% reduction in response time. As quantum computing continues to advance, threatening conventional cryptographic mechanisms, architectures like NEUROSAFE-6G will play an essential role in securing critical 6G applications while maintaining the performance characteristics necessary for next-generation communications. The framework’s balanced approach to security, efficiency, and resilience establishes a foundation for trustworthy network operations in the quantum computing era.

Future work will focus on integrating this framework with open-source 6G core components, deploying hardware-software co-design on ARM-based edge devices, and evaluating compatibility with orchestration and service mesh tooling such as Kubernetes and Istio, including service mesh security overlays. The author also aims to extend the NEUROSAFE-6G framework by exploring its integration into real-world telecom architectures, particularly within the 5G and evolving 6G core. One planned direction involves deployment on the Service-Based Architecture (SBA) of 5G, leveraging microservices to modularize NEUROSAFE-6G components for efficient scaling, monitoring, and fault tolerance. Furthermore, the author will investigate orchestration through Open RAN and O-RAN Alliance specifications to enable vendor-neutral deployment at the radio access and edge layers. On the security side, the author intends to implement and benchmark a wider suite of post-quantum cryptographic primitives, including lattice-based schemes (e.g., NTRU, FrodoKEM) and isogeny-based encryption mechanisms, supported by APIs compatible with NIST’s quantum-safe standards. This will allow researchers to further validate the protocol stack under varied threat models. The author also aims to investigate the role of hardware acceleration in enabling practical deployment, particularly through FPGA-based implementations of quantum-resistant modules and integration with trusted platform modules (TPMs). Finally, the Kubernetes-based edge-cloud deployment will be used to validate performance under real orchestration conditions, including node failure, network partitioning, and live threat injection.