Combining Lexicon Definitions and the Retrieval-Augmented Generation of a Large Language Model for the Automatic Annotation of Ancient Chinese Poetry

Abstract

1. Introduction

2. Related Work

2.1. Traditional Annotation Studies

2.2. Automatic Annotation Research

2.3. Research on Classical Chinese Poetry and Ci

2.4. Large Language Models and Retrieval-Augmented Generation

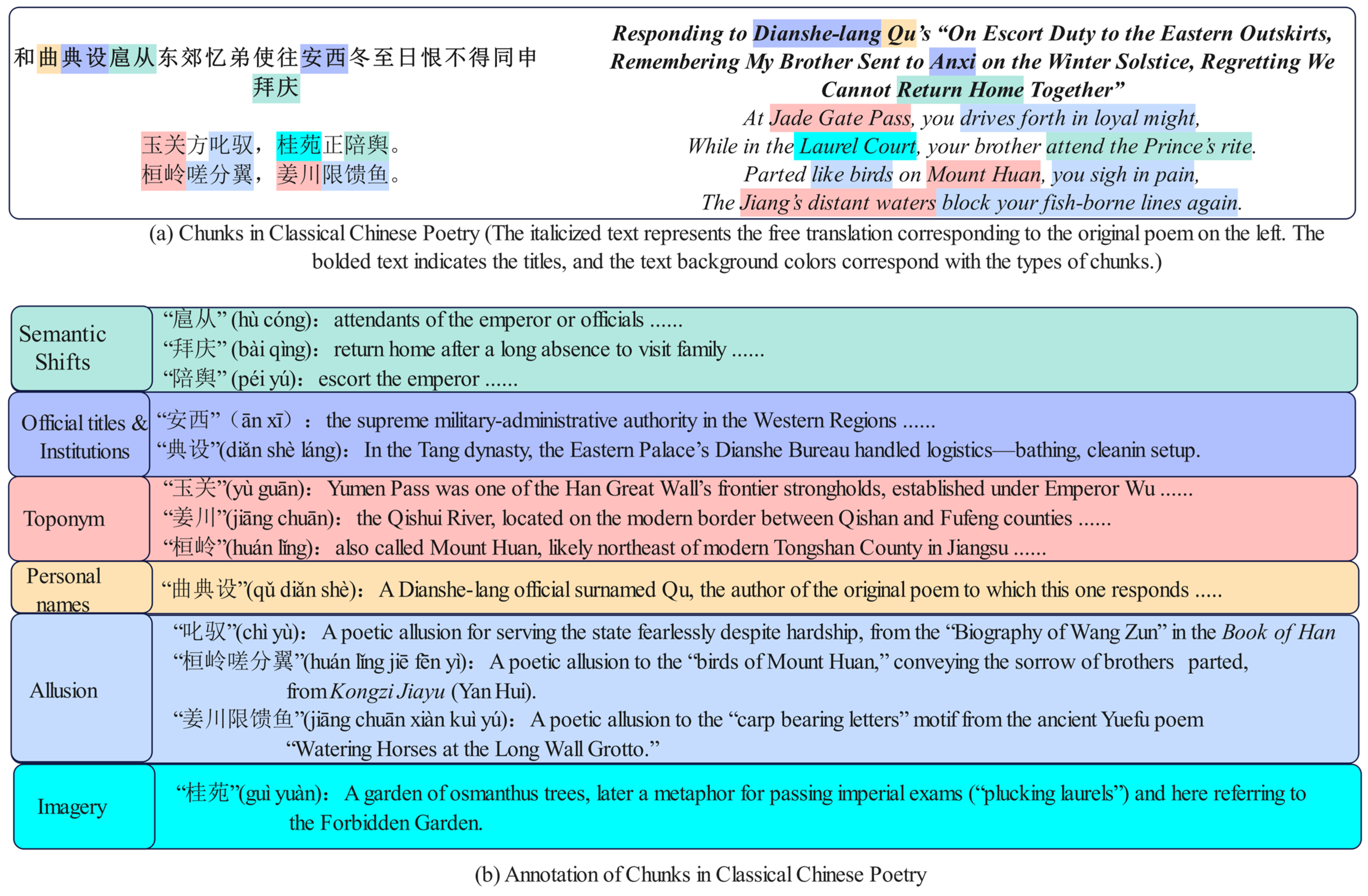

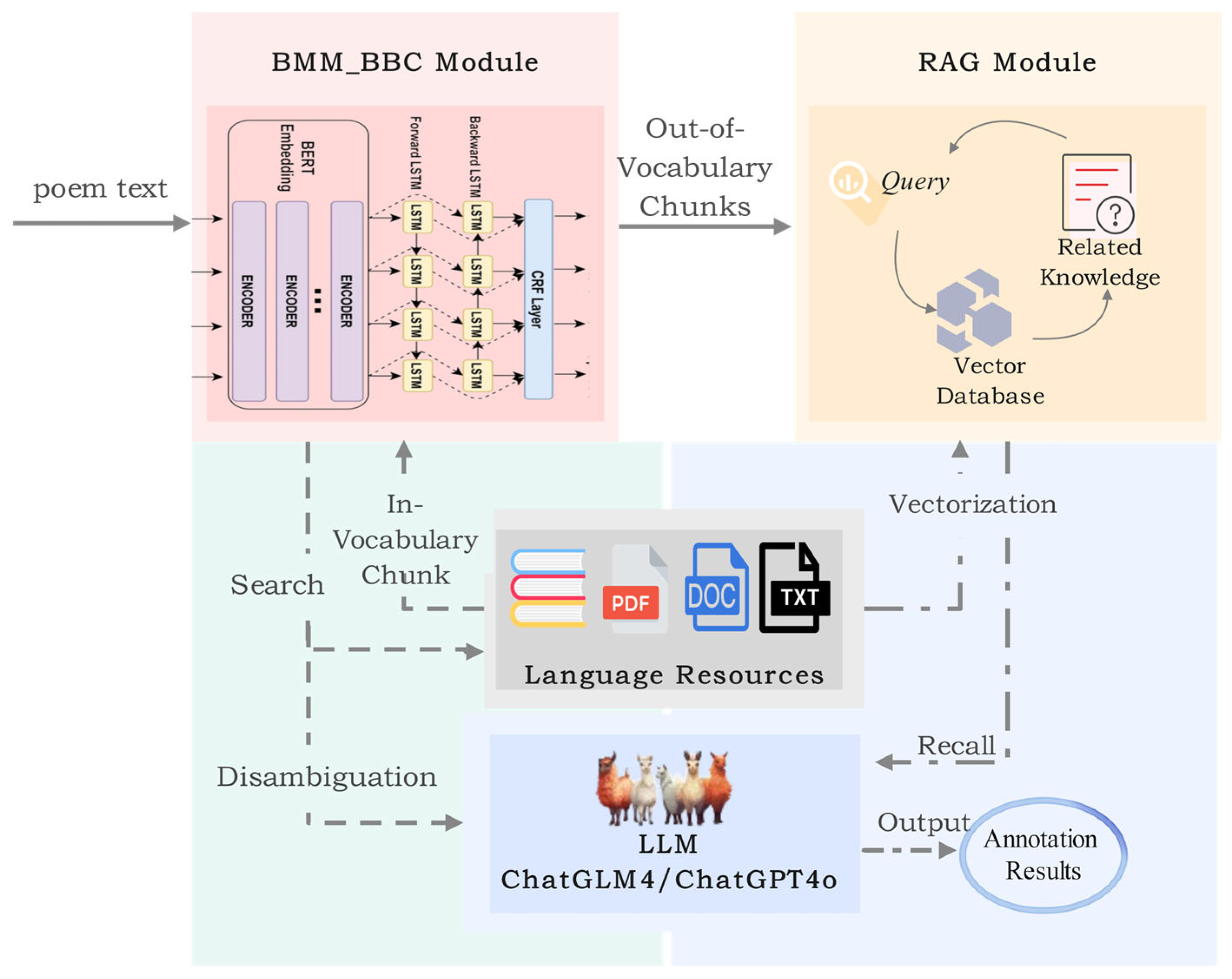

3. Automatic Annotation Method

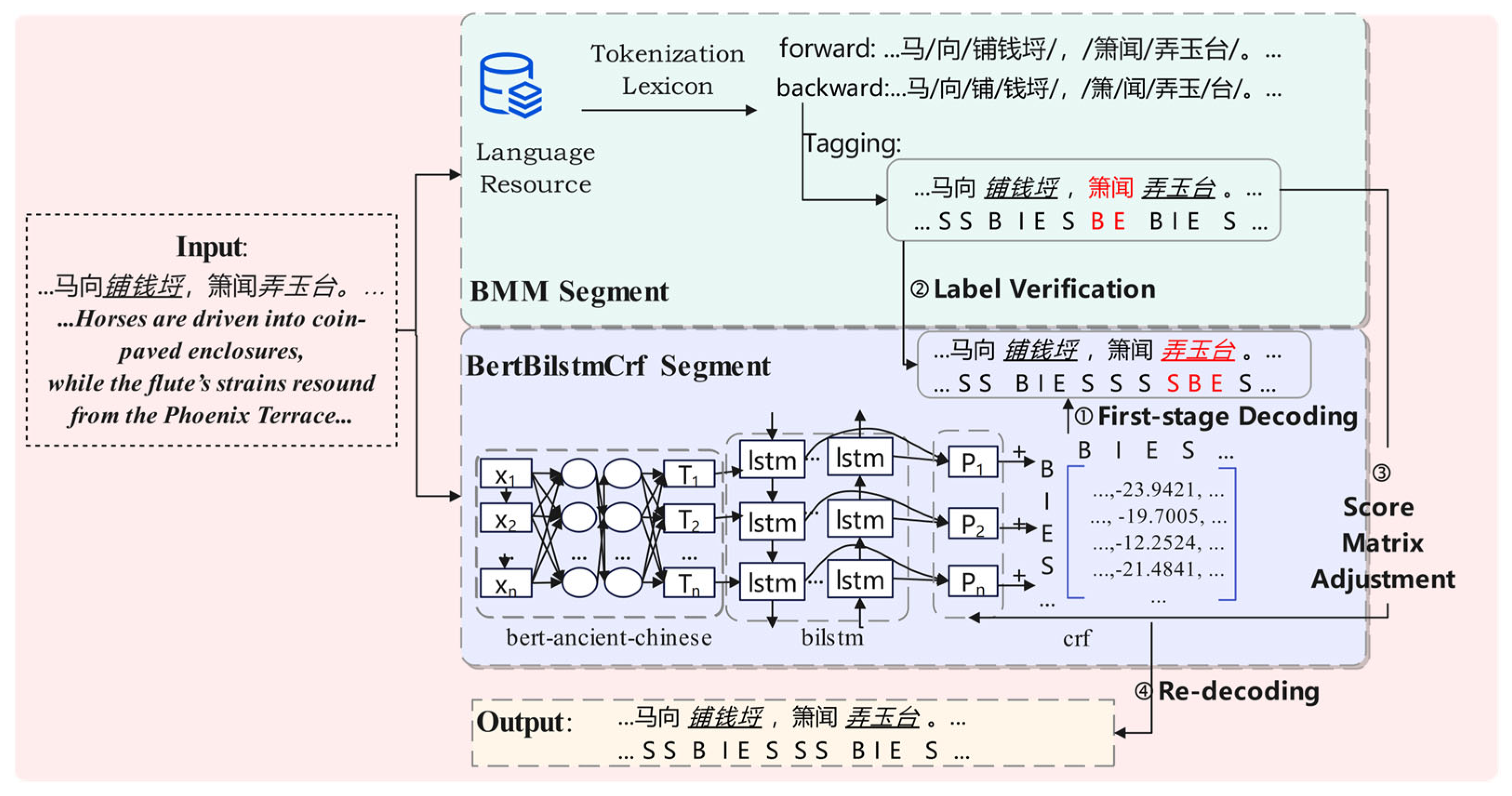

3.1. BMM_BBC Chunking Module

3.1.1. Training the BBC Model

3.1.2. Semantic Chunking with Integrated Dictionary Information

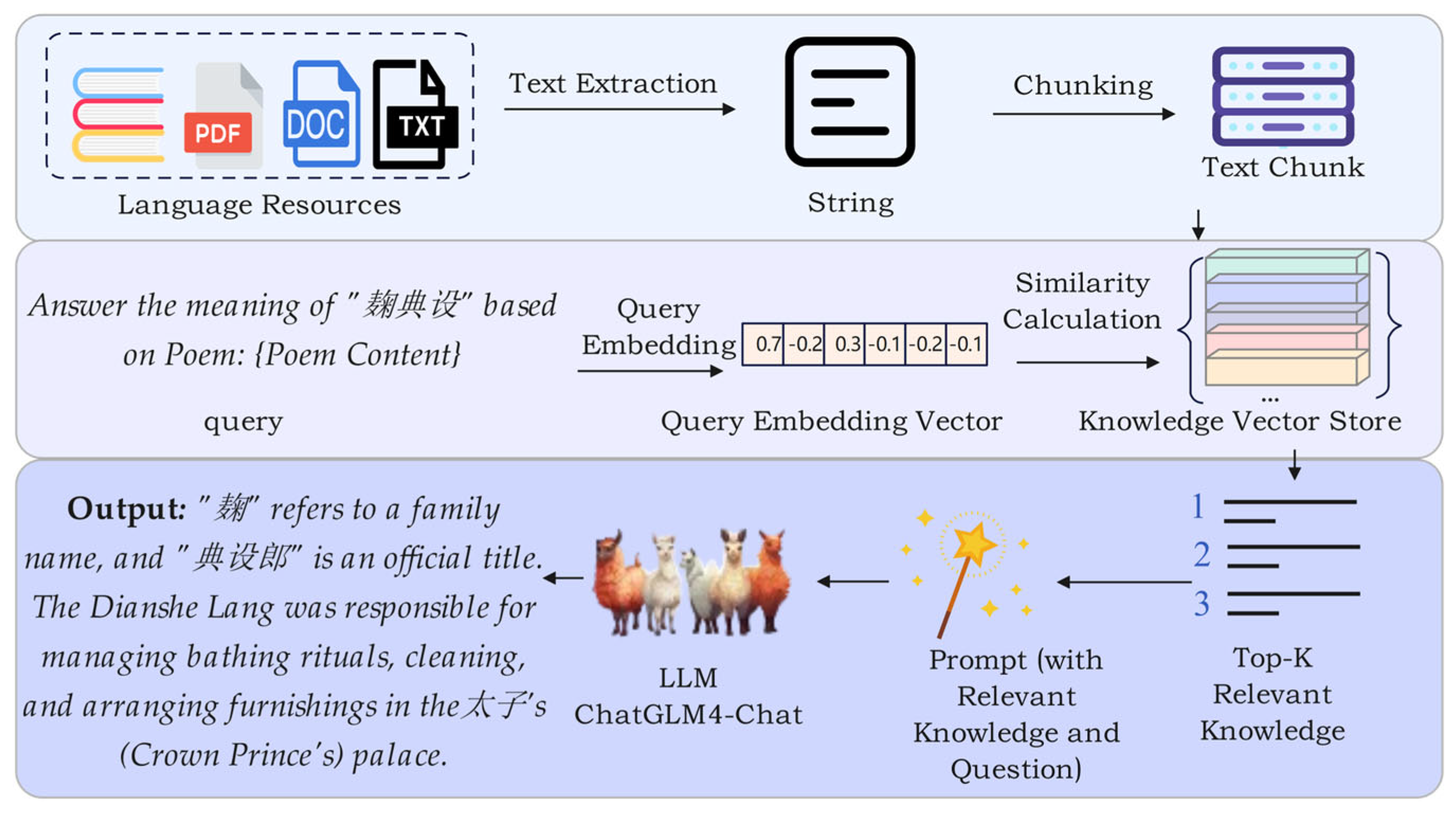

3.2. Retrieval-Augmented Generation (RAG) Module

4. Results

4.1. Chunk Segmentation Experiment and Analysis

4.1.1. Experimental Setup for Chunk Segmentation

4.1.2. Chunk Segmentation Experiments and Analysis

4.2. Chunk Annotation Experiments and Analysis

4.2.1. Experimental Setup for Chunk Annotation

4.2.2. Comparative Analysis of Chunk Annotation

4.2.3. Comparative Analysis of Source Citations

4.2.4. Inference of Chunk Referents and Annotation Example Analysis

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Property | Value |
|---|---|
| EMBEDDING_MODEL | bge-large-zh-v1.5 |
| RERANKER_MODEL | bge-reranker-base |
| LLM_MODEL | glm-4-9b-chat |
| CHUNK_SIZE | 250 |
| TOP_K | 3 |
| SCORE_THRESHOLD | 1 |
| TEMPERATURE | 0.7 |
| MAX_TOKENS | 2048 |
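
The settings in the table above can be collected into a single configuration object. The following is a minimal sketch, not the authors' implementation: the class name `RagConfig` and the helper `select_passages` are hypothetical. The table does not state how SCORE_THRESHOLD is interpreted, so the sketch assumes a distance-style score in which smaller values mean closer matches.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RagConfig:
    """Values copied from the parameter table; field names are illustrative."""
    embedding_model: str = "bge-large-zh-v1.5"
    reranker_model: str = "bge-reranker-base"
    llm_model: str = "glm-4-9b-chat"
    chunk_size: int = 250
    top_k: int = 3
    score_threshold: float = 1.0
    temperature: float = 0.7
    max_tokens: int = 2048


def select_passages(scored: list[tuple[str, float]], config: RagConfig) -> list[str]:
    """Return up to top_k passage texts whose score passes the threshold.

    Assumption: scores are distances (lower is better). If the retriever
    reports similarities instead, the comparison and sort order flip.
    """
    kept = [(text, score) for text, score in scored if score <= config.score_threshold]
    kept.sort(key=lambda pair: pair[1])  # closest matches first
    return [text for text, _ in kept[: config.top_k]]


if __name__ == "__main__":
    cfg = RagConfig()
    candidates = [("a", 0.9), ("b", 0.2), ("c", 1.4), ("d", 0.5), ("e", 0.7)]
    print(select_passages(candidates, cfg))
```

Under this assumed convention, passage "c" is dropped by the threshold and the three closest remaining passages are returned.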

| Model | Length = 2 (Acc) | Length = 3 (Acc) | Length ≥ 4 (Acc) | Weighted_Acc |
|---|---|---|---|---|
| BMM | 78.47% | 33.74% | 43.48% | 72.92% |
| BBC | 78.27% | 55.19% | 53.25% | 75.07% |
| BMM_BBC | 92.88% | 71.72% | 76.46% | 90.25% |

| Chunk Type | Strict Correctness | Lenient Correctness |
|---|---|---|
| Allusion | Definition and Extended Meaning | Source of the Allusion |
| Metonymy | Contextual meaning | Attributes of the Referent |
| Imagery | Emotional or object interpretation | Object only |
| Semantic Shift | Contextual meaning | Partial contextual meaning |
| Proper Name | No Erroneous Attributes | Some Erroneous Attributes |

| Chunk | Metric | ChatGPT-4o | Taiyan 2.0 | Human | Ours—RAG | Ours |
|---|---|---|---|---|---|---|
| Allusion | Strict | 32.14 | 32.14 | 30.73 | 89.41 | 90.59 |
| | Lenient | 65.48 | 73.81 | 63.30 | 91.76 | 94.12 |
| Metonymy | Strict | 80.12 | 85.03 | 55.73 | 78.47 | 91.47 |
| | Lenient | 89.03 | 95.21 | 80.73 | 82.19 | 95.43 |
| Imagery | Strict | 67.74 | 69.61 | 73.66 | 74.74 | 83.16 |
| | Lenient | 80.65 | 80.39 | 91.03 | 80.00 | 90.53 |
| Proper Name | Strict | 51.15 | 54.26 | 56.76 | 66.20 | 86.57 |
| | Lenient | 76.04 | 75.34 | 84.57 | 68.52 | 95.83 |
| Semantic Shift | Strict | 75.15 | 75.15 | 71.14 | 82.25 | 90.27 |
| | Lenient | 85.63 | 88.96 | 87.51 | 84.62 | 92.63 |
| Micro-Average | Strict | 69.28 | 72.01 | 57.60 | 77.81 | 89.72 |
| | Lenient | 84.06 | 86.73 | 81.43 | 80.93 | 94.33 |

| Model | Cited Sources | Valid Citations | Total Annotated Chunks |
|---|---|---|---|
| ChatGPT-4o (baseline) | 18 | 12 | 1217 |
| Taiyan 2.0 | 23 | 21 | 1217 |
| Ours | 652 | 613 | 1217 |
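
The counts in the table above can be turned into two ratios that make the comparison easier to read: the share of annotated chunks that received a source citation, and the share of those citations judged valid. The sketch below only performs this arithmetic on the reported counts. The names `coverage` and `validity_rate` are labels chosen here for illustration and are not metrics defined in the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CitationCounts:
    """One row of the source-citation table (counts as reported)."""
    model: str
    cited: int         # chunks for which a source was cited
    valid: int         # cited sources judged valid
    total_chunks: int  # all annotated chunks

    @property
    def coverage(self) -> float:
        """Fraction of annotated chunks that carry a citation (illustrative label)."""
        return self.cited / self.total_chunks

    @property
    def validity_rate(self) -> float:
        """Fraction of citations that are valid (illustrative label)."""
        return self.valid / self.cited


ROWS = [
    CitationCounts("ChatGPT-4o (baseline)", 18, 12, 1217),
    CitationCounts("Taiyan 2.0", 23, 21, 1217),
    CitationCounts("Ours", 652, 613, 1217),
]


def summarize(rows: list[CitationCounts]) -> dict[str, tuple[float, float]]:
    """Map each model to (coverage %, validity %), rounded to two decimals."""
    return {
        row.model: (round(100 * row.coverage, 2), round(100 * row.validity_rate, 2))
        for row in rows
    }


if __name__ == "__main__":
    for model, (cov, val) in summarize(ROWS).items():
        print(f"{model}: coverage {cov}%, validity {val}%")
```

Read this way, the proposed method cites a source for roughly half of the chunks, while the two baselines do so for fewer than 2% of them.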

| Model | Chunk | Definition | Source |
|---|---|---|---|
| ChatGPT-4o | 大树思冯异 The towering tree recalls General Feng Yi. | In this line, the image of the “big tree” evokes the outstanding talent and notable deeds of Feng Yi. | Cited from the “Biography of Feng Yi”. Feng Yi was a renowned general of the early Eastern Han dynasty, celebrated for governing his troops with civil virtue and repeatedly distinguishing himself in battle. |
| Taiyan 2.0 | 大树思冯异 The towering tree recalls General Feng Yi. | None | Hou Han Shu, “Biography of Feng Yi”: “…每所止舍,诸将并坐论功,异常独屏树下,军中号曰‘大树将军’。” |
| Ours (full) | 大树思冯异 The towering tree recalls General Feng Yi. | Refers to the Eastern Han general Feng Yi (courtesy name Gongsun), who was appointed “General Who Assists the Army.” Whenever other commanders convened to discuss merits, he alone would withdraw to stand beneath a tree, earning him the moniker “General of the Big Tree.” In later usage, it became an idiom praising exceptional military leaders. | Hou Han Shu, Vol. 17, “Biography of Feng Yi”: “异为人谦退不伐,行与诸将相逢,辄引车避道。进止皆有表识,军中号为整齐。每所止舍,诸将并坐论功,异常独屏树下,军中号曰‘大树将军’。” |
| Ours (full) | 慈恩寺浮图应制 Cien Pagoda: A Poem Composed by Imperial Command | “浮图” (also written “浮屠”) is a Buddhist term, a phonetic transliteration of the Sanskrit “Buddha”, referring to a stupa. | Record of the Five Hundred Arhats of Jiancheng Chan Yuan: “…且造铁浮屠十有三级,高百二十尺。” |

| No. | Classical Poem Excerpt | Annotation Result |
|---|---|---|
| 1 | “怀薛尚书兼呈 东阳王使君” In Memory of Secretary Xue and Also Addressed to Prefect Wang of Dongyang | ① “Refers to the Tang poet, courtesy name Congyi, a native of Dongyang in Yue Prefecture. He served as governor of Hangzhou, Yuanzhou, and Taizhou.—Taiyan 2.0” |
| | | ② “‘Prefect Wang of Dongyang’ refers to a local official serving in Dongyang, who is a friend with whom the poet communicates or shares thoughts in the poem.—ChatGPT-4o” |
| | | ③ “‘Prefect Wang of Dongyang’: Refers to an official who once served as prefect of Dongyang, possibly referring to Wang An.—Ours” |
| 2 | “酬张祜处士见寄长句四韵” In Reply to Recluse Zhang Hu’s Four-Rhyme Poem Sent to Me | ① “Zhang Hu, styled as ‘recluse.’ See—Taiyan 2.0” |
| | | ② “‘Recluse Zhang Hu’ refers to the virtuous Zhang Hu, used here to address the person interacting with the poet, suggesting his talent and integrity made him worthy of respect.—ChatGPT-4o” |
| | | ③ “Refers to the Tang poet Zhang Hu, known for living in seclusion without holding an official post, hence referred to as ‘recluse.’ Zhang Hu authored Two Palace Poems, one of which… According to《唐诗纪事》 (Records of Tang Poetry), Zhang arrived in the capital and became affiliated with Yuan Zhen in the inner court…—Ours” |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, J.; Wei, T.; Qu, W.; Li, B.; Feng, M.; Wang, D. Combining Lexicon Definitions and the Retrieval-Augmented Generation of a Large Language Model for the Automatic Annotation of Ancient Chinese Poetry. Mathematics 2025, 13, 2023. https://doi.org/10.3390/math13122023
