Abstract

Existing deepfake detection methods rely heavily on specific training data distributions and struggle to generalize to unknown forgery techniques. To address this challenge, this paper focuses on two critical research gaps: (1) the lack of systematic mining of common features across multiple forgery methods; (2) the unresolved distribution shift problem in the fully supervised learning paradigm. To tackle these issues, we propose a self-supervised learning framework based on feature disentanglement, which enhances the generalization ability of detection models by uncovering the intrinsic features of forged content. The method comprises three key components: self-supervised sample construction, in which training samples for feature disentanglement are generated via an image self-mixing mechanism; a feature disentanglement network, in which the input image is decomposed into content features irrelevant to forgery and discriminative forgery-related features; and conditional decoder verification, in which both types of features are used to reconstruct the image, with forgery-related features serving as conditional vectors that guide the reconstruction process. Orthogonal constraints on the features are enforced to mitigate the overfitting problem of traditional methods. Experimental results demonstrate that, compared with state-of-the-art methods, the proposed framework exhibits superior generalization when detecting unseen forgery techniques, reducing the dependency of traditional supervised learning on training data distributions. By mining the common essence of forgery features, this study offers a deepfake detection approach that does not rely on specific forgery techniques and improves the model’s robustness in complex real-world scenarios.

MSC:

68T07

1. Introduction

The rapid advancement of generative artificial intelligence (AI) technology in recent years has significantly reduced the production costs of deepfake content, while the realism of synthetic content has reached a level nearly undetectable by the human eye. From forged political statements to financial fraud, deepfake technology poses substantial risks to social trust systems, national security, and personal privacy. Although the academic community has proposed multiple deep learning-based detection methods, current approaches still exhibit notable limitations in generalization capability when confronted with unknown manipulation algorithms or cross-domain data. This limitation primarily stems from the strong dependency of conventional fully supervised learning paradigms on training data distributions. The dependency hinders models from capturing common features of synthetic media shared across techniques, which makes them vulnerable to distributional shifts in practical applications.

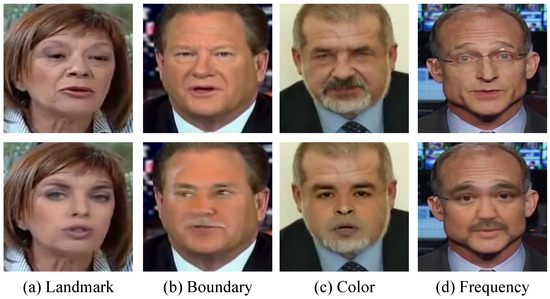

This study systematically analyzes mainstream deepfake generation methods and reveals inherent artifact defects prevalent in synthetic samples. Building upon existing research, we categorize four typical artifact patterns as illustrated in Figure 1. Notably, current supervised learning-based detection methods focus on learning features strongly associated with specific forgery techniques. While demonstrating excellent detection performance within training domains, their generalization performance exhibits significant degradation when confronting data generated by novel or heterogeneous forgery methods, with accuracy rates in some scenarios falling below random guessing thresholds.

Figure 1.

Four typical artifacts in deepfake samples: (a) landmark mismatch; (b) blending boundary; (c) color mismatch; (d) frequency inconsistency.

Current mainstream deepfake detection methods predominantly adopt fully supervised learning frameworks, relying on extensive labeled datasets to train classification models. For example, models based on Convolutional Neural Networks (CNNs) or Vision Transformers (ViTs) [1] perform authenticity verification by extracting local texture patterns, frequency-domain features, or illumination consistency cues. However, these approaches suffer from two fundamental limitations:

Insufficient mining of cross-manipulation common features: Existing studies predominantly focus on designing feature extraction modules tailored to specific manipulation techniques, which lack systematic modeling of common artifacts across diverse synthesis algorithms. As noted in [2], synthetic samples from different manipulation techniques may exhibit shared anomalies in high-frequency information or content features. However, current models fail to decouple these cross-technique discriminative patterns effectively.

Unaddressed distribution shift vulnerability: Fully supervised models tend to overfit training data distributions and suffer significant performance degradation when testing data differ from training data (e.g., through novel synthesis algorithms or post-processing operations). Study [3] experimentally demonstrates that supervised models may experience error rate increases in cross-dataset evaluations, whereas self-supervised learning can mitigate this issue through feature alignment mechanisms.

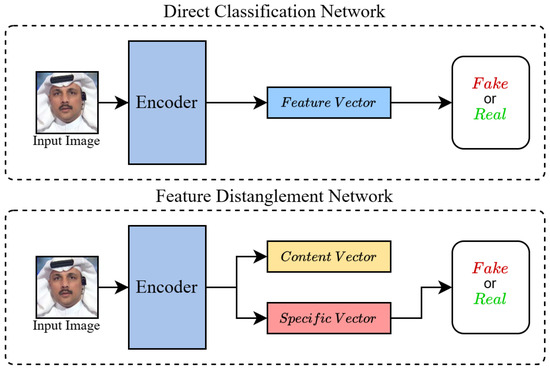

To address these challenges, this paper proposes a self-supervised learning framework based on feature disentanglement, which improves the generalization of the detection model by exploiting intrinsic data characteristics and differs from prior works through explicit feature decomposition and adaptive constraint mechanisms. As illustrated in Figure 2, the feature-decoupling-based approach decomposes the features of deepfake samples into distinct components, such as common forgery features and specific forgery features. Unlike traditional methods that treat forged features as an undifferentiated whole, our decoupling process enables separate extraction and analysis of these features, facilitating a more precise understanding of the forgery essence. By explicitly modeling the shared semantics across different forgery techniques, this method surpasses conventional cross-dataset detection approaches, which often struggle with technique-specific biases. The core innovation lies in employing self-supervised tasks to guide models in learning manipulation-irrelevant content features and discriminative forgery patterns, thereby reducing dependency on specific data distributions. This represents an advance over previous studies that relied heavily on task-specific annotations or limited semantic modeling. The key contributions are as follows:

Figure 2.

Comparison between the Direct Classification Method and the Feature Disentanglement Method. The direct classification network transforms the input data into feature vectors and directly conducts classification. The feature decoupling network strips away the content features that are irrelevant to forgery detection and focuses on the concealed forgery features in the image so as to prevent overfitting and enhance the generalization ability of the model.

1. Self-supervised sample construction with controlled disentanglement guidance: While existing works like the adjustable forgery synthesizer (AFS) [4] focus on generating pseudo-samples through image blending, our approach introduces explicit guidance for feature disentanglement via controlled blending ratios and spatial constraints. Unlike AFS, which lacks systematic mechanisms to enforce feature separability, our method strategically simulates diverse manipulation patterns by mixing authentic image patches with forged regions under strict spatial-temporal constraints. This ensures that the generated pseudo-samples effectively promote learning disentangled feature representations, overcoming the vague guidance issue in prior self-supervised sample construction.

2. Adversarially constrained feature disentanglement network architecture: Inspired by mutual information maximization strategies in [5], our dual-branch network design goes beyond previous feature extraction frameworks by introducing adversarial training constraints to enforce strict feature independence between content features (e.g., identity, scene context) and forgery-related artifacts (e.g., high-frequency noise, edge discrepancies). Unlike study [5], which mainly relies on mutual information estimation without explicit adversarial regularization, our architecture enhances cross-domain adaptability through adversarial minimization of feature interdependencies. This adversarial constraint mechanism helps ensure that the disentangled features are both discriminative and domain-invariant.

3. Extended generative conditional decoding validation mechanism: Building on the unlearning memory mechanism (UMM) in [3], which was initially applied to discriminative tasks, our work extends UMM to generative scenarios through a conditional decoding validation mechanism. Using content features as primary input and forgery patterns as conditional vectors, with reconstruction quality as the criterion for disentanglement validity, our approach introduces a feedback loop in which failed reconstructions trigger network optimization via backward feature redundancy analysis. This generative validation framework addresses the limitation of previous UMM-based methods, which lacked effective validation mechanisms for feature disentanglement in generative tasks, and thus provides a more comprehensive way to ensure the quality of disentangled features.

Experimental results on multiple public datasets (e.g., FaceForensics++, Celeb-DF) demonstrate the superior performance of our method in detecting unseen manipulation algorithms compared to existing supervised models and self-supervised baselines. In cross-domain testing, our framework achieves an approximately 2.1% increase in Area Under the ROC Curve (AUC) over the Xception fine-tuning approach [6], while exhibiting enhanced robustness against post-processing operations such as image compression and noise injection attacks. Furthermore, visual analysis confirms the model’s capability to precisely localize forged regions, providing interpretable support for detection outcomes.

The remainder of this paper is structured as follows: Section 2 analyzes generalization bottlenecks in deepfake detection, Section 3 details the design of the self-supervised feature disentanglement framework, Section 4 presents experimental configurations and comparative analyses, and Section 5 concludes with a summary and future directions.

2. Related Work

This section reviews recent studies in deepfake detection, focusing on three key aspects: (1) primitive detection methods; (2) detection methods toward generalization; (3) detection methods based on self-supervised learning.

2.1. Primitive Detection Methods

Initial explorations in facial forgery detection primarily relied on traditional digital image processing methods and shallow learning architectures [7,8,9,10,11]. Early approaches typically leveraged specific statistical features, such as keypoint matching [7], illumination inconsistencies [8], local noise distributions [9], and device fingerprints [10], to identify inherent forgery patterns, and employed traditional machine learning classifiers such as Support Vector Machines (SVMs) for binary classification. Because deepfake technology is inherently rooted in manipulating image pixels, these methods were effective during the early stages. However, handcrafted feature extraction often fails to capture the most informative forensic features for reliable forgery detection. With advancements in both forgery techniques and deep learning, the automatic extraction of forensic features via neural networks has emerged as a critical research focus [12]. Following the success of deep convolutional neural networks (CNNs) in image recognition, optimizing CNN frameworks has proven highly effective for deepfake detection [13,14,15]. However, such approaches fail to sufficiently exploit frequency-domain characteristics of images (e.g., compression artifacts) and neglect temporal dependencies inherent in video sequences. To this end, numerous studies have explored frequency information [16,17] and temporal information [18,19,20,21] to enhance detector performance, achieving remarkable results. Nevertheless, existing detection frameworks exhibit limited generalizability when confronted with emerging forgery methodologies beyond their training distribution. Representative works are summarized in Table 1.

Table 1.

Overview of selected works and their limitations in deepfake detection. The table summarizes recent representative studies in the field of deepfake detection. These works not only highlight current research trends but also reflect the evolving landscape of this domain.

2.2. Generalization-Oriented Deepfake Detection Approaches

While current detectors demonstrate satisfactory performance in within-domain evaluations, their generalization capabilities remain constrained when tested across diverse datasets, which has made generalization a key research focus in deepfake detection. Face X-ray [28] detects forged faces by identifying blending boundaries, improving generalization to unseen manipulation methods without requiring algorithm-specific prior knowledge. Luo et al. [22] propose detecting face forgeries by analyzing high-frequency features, which enhances generalization to unseen deepfake techniques by capturing common artifacts across manipulation methods. Cao et al. [23] develop an end-to-end reconstruction-classification framework that jointly learns to reconstruct images and detect forgeries, enhancing generalization to unseen manipulations by capturing domain-invariant features. SBIs [24] and SLADD [25] further improve the generalization ability of the model by combining data augmentation with blending and using synthetic data generation to capture domain-independent forgery patterns. Yan et al. [2] propose a multi-task learning framework to disentangle shared forgery artifacts, demonstrating superior generalization in cross-dataset evaluations; building on this, they further augment forged images in latent space and distill a lightweight classifier via knowledge distillation, achieving enhanced generalization capabilities [29]. Yu et al. [26] propose a short-term and long-term spatiotemporal view fusion framework, which analyzes the distribution differences among forgery techniques through graph convolutional networks and significantly improves generalization in cross-dataset testing. Yu et al. [27] fine-tune large-scale visual-language models (LVLMs), achieving forged region localization and natural language interpretation through prompt design. Cui et al. [30] design a lightweight adapter that combines the visual features of CLIP with task-specific knowledge to enhance the capture of forgery traces through hybrid boundary learning.

While current methods detect deepfakes well in known scenarios, their heavy reliance on labeled data and their difficulty with new forgery techniques have pushed researchers to explore alternative detection paradigms.

2.3. Self-Supervised Learning-Based Detection Methods

Recent advancements in self-supervised learning-based deepfake detection methods aim to learn universal forgery patterns from unlabeled data, reducing dependency on manual annotations while improving generalization against emerging manipulation techniques. Chen et al. [25] develop a self-supervised adversarial learning framework that dynamically generates diverse deepfake samples and jointly optimizes forgery detection with artifact parameter prediction, significantly improving cross-dataset generalization. Haliassos et al. [31] propose a self-supervised method that leverages temporal consistency in real talking-face videos to learn robust forgery representations, achieving high accuracy on unseen manipulation techniques. Nguyen et al. [6] employ a DINO-pre-trained ViT as a feature extractor and visualize forged regions through an attention mechanism. Their method attains state-of-the-art (SOTA) performance with limited training data, validating the advantages of self-supervised models in cross-domain detection. Zhang et al. [32] propose the NACO framework, which learns spatio-temporal consistency representations of real face videos via self-supervised learning. It uses a CNN to extract spatial features and a Transformer to model long-range spatio-temporal relationships, enhancing representation robustness by combining a Spatial Prediction Module with a Temporal Contrast Module. Sun et al. [33] propose generating adversarial samples by freezing a pre-trained Stable Diffusion network and constructing a hybrid dataset. This approach forces detectors to learn the inherent source-target feature differences in forgeries, using diffusion models as feature perturbation tools to enhance detectors’ understanding of the generation process. Xu et al. [34] propose a self-blending training method that generates mixed region labels without requiring forged samples. This method achieves high-precision detection and localization by integrating Swin-Unet for tamper region localization.

Self-supervised learning has emerged as a core paradigm in deepfake detection through data-driven strategies and task diversity. Its evolution reflects a shift from single-feature learning to complex multimodal collaboration, and it is poised to overcome the dual bottlenecks of annotation dependency and generalization constraints in the future.

3. Methods

3.1. Motivation

The generalization problem in deepfake detection stems from several technical hurdles. First, face-forgery techniques evolve constantly, and the features produced by different algorithms vary widely. Traditional methods rest on subjective assumptions about a common forgery feature model and therefore cannot capture the essence of diverse real-world forgeries, which limits model generalization. Second, binary classification detectors are easily influenced by sample content information, causing “implicit identity leakage.” The loss of identity information during forgery creates an identity-based split in the training samples, so when the model fails to extract forgery features, content information may mislead it and weaken its ability to detect unknown forgeries. Third, the generative adversarial and convolutional neural networks used for detection have inherent limitations: a convolutional neural network trained on one dataset does not readily adapt to other data distributions or to diverse deepfake content, which worsens the generalization challenge.

To address the challenge of generalization in deepfake detection, we draw inspiration from UCF [2] and construct a disentanglement learning framework based on self-supervised learning. The core objective of this framework is to decompose self-supervised image data into two components: content features and forgery features. By performing conditional self-reconstruction on these features, we can explore the common features shared by forged samples. With the help of a conditional decoder, the framework recovers the content information of forged samples while performing detection using only the extracted forgery features. This design enhances the generalization ability of the deepfake detector across scenarios and prevents the model from overfitting both to features unrelated to forgery and to features specific to particular forgery methods, which in turn improves the accuracy and reliability of detection.

3.2. Architecture Summary

This framework employs a feature disentanglement learning architecture that integrates self-supervised data synthesis and feature-conditioned reconstruction networks to isolate forgery-related features, thereby enhancing the generalization capability of deepfake detection methods through its unique architectural design. Specifically, the architecture consists of three components: (1) A self-supervised forged data synthesis module aimed at reproducing the inherent synthetic traces of deepfake samples to provide representative training data for feature disentanglement; (2) A content-forgery feature disentanglement mechanism, which realizes unbiased extraction of generic forgery features through a specifically designed disentanglement network structure; (3) A conditional reconstruction decoding module, which reconstructs samples via feature recombination of disentangled content and forgery features, establishing a closed-loop validation mechanism to reinforce the effectiveness of feature disentanglement.

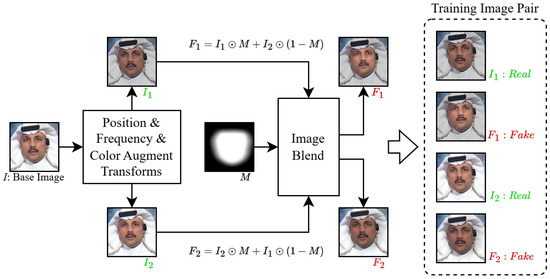

3.3. Self-Supervised Forged Image Synthesis

Given a real input image I, we generate an image pair with statistical inconsistencies by applying diverse data augmentation techniques to I. These augmentations include randomly altering the RGB channel values of the input image, along with color transformations such as changes in hue, saturation, value, brightness, and contrast. Subsequently, frequency transformations are applied using sampling or image sharpening. The image pair is then subjected to resizing and translation operations to reproduce blending boundaries and landmark mismatches. In this way, an image pair exhibiting color differences and landmark disparities is generated from a single real image.

To simulate the prevalent phenomena of boundary fusion and landmark mismatch in forged samples, image pairs are blended by generating grayscale masks. This is accomplished as follows: First, a face detector is utilized to predict the facial region. Based on the obtained facial landmarks, the convex hulls of the inner and outer faces are computed to initialize the mask. Next, the mask is deformed using the landmark transformation adopted in BI [28]. To enhance the diversity of the blended masks, the mask’s shape and the blending ratio are randomly varied. Subsequently, the mask is smoothed using Gaussian filters with different parameters. Finally, the blending ratio of the image pairs is adjusted by multiplying the mask image by a constant.

By performing a mixing operation on the image pair using the grayscale mask M, a forged and blended image pair is generated, and the synthesis formula is as follows.
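As a minimal sketch of this mixing step, the snippet below assumes the standard convex mask blend used in self-blending approaches, in which each output pixel is a mask-weighted average of the two augmented images and the constant blending ratio scales the mask. The function names `blend` and `blend_pair`, the toy single-channel list-of-lists image format, and the symmetric pairing in `blend_pair` are illustrative assumptions, not the exact formulation of the framework.

```python
from typing import List, Tuple

Image = List[List[float]]  # toy single-channel image with values in [0, 1]


def blend(foreground: Image, background: Image, mask: Image, ratio: float = 1.0) -> Image:
    """Convex per-pixel blend: out = (ratio * M) * fg + (1 - ratio * M) * bg."""
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must lie in [0, 1]")
    blended = []
    for fg_row, bg_row, mask_row in zip(foreground, background, mask):
        row = []
        for fg, bg, m in zip(fg_row, bg_row, mask_row):
            weight = ratio * m  # the constant ratio scales the grayscale mask
            row.append(weight * fg + (1.0 - weight) * bg)
        blended.append(row)
    return blended


def blend_pair(image_a: Image, image_b: Image, mask: Image,
               ratio: float = 1.0) -> Tuple[Image, Image]:
    """Blend in both directions (an assumed pairing) to obtain two synthetic images."""
    return blend(image_a, image_b, mask, ratio), blend(image_b, image_a, mask, ratio)
```

With a soft mask, pixels near the mask boundary receive intermediate weights, which is what produces the blending-boundary trace that the detector is expected to pick up.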

Figure 3 shows examples of partially synthesized forged samples, where the facial regions of the synthesized samples exhibit inherent traces of deepfake technology, such as differences in color and frequency characteristics between the inner and outer face regions and mismatches in facial landmarks. Based on these samples, the feature decoupling network can effectively learn the general features of forgery methods, thereby enhancing the generalization performance of the detection model.

Figure 3.

Self-supervised Forged Image Synthesis. Given an input image I, inconsistencies are introduced through data augmentation. A mask based on the bounding box obtained from face detection is then generated and used to blend the two augmented images. Finally, two synthetic images containing implicit forgery traces are obtained and combined into a training sample pair.

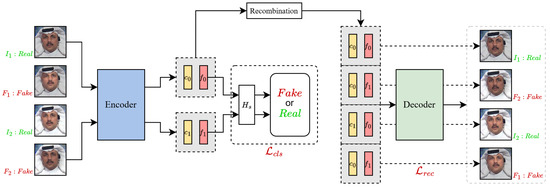

3.4. Feature Disentanglement Network

The proposed feature disentanglement network comprises an encoder, a decoder, a feature recombination module, and a classification head. Specifically, the encoder consists of a content feature encoder and a forgery feature encoder, which are designed to extract content features irrelevant to forgery and general forgery features, respectively. These two encoders adopt the same architecture, yet their parameters are independent. The decoder is built from multiple convolutional layers and upsampling layers. It reconstructs images from the content features and forgery features that have been recombined by the feature recombination module. Based on the extracted general forgery features, the classification head performs a binary classification task to distinguish between real and forged samples.

Encoder. As depicted in Figure 4, the encoder architecture processes synthetic image data pairs previously introduced in this study. The real image component I undergoes standardized data augmentation procedures to enhance generalization, while F represents synthetically generated forgeries produced through the generation mechanism defined in Equation (1). The encoder E employs a dual-branch neural network configuration: The content encoder specializes in extracting semantically meaningful content features c that characterize image content and structural properties. The forged feature encoder focuses on identifying artificial artifacts f indicative of digital manipulation, including but not limited to inconsistent lighting patterns and compression anomalies. Through a coordinated feature disentanglement process, the dual-branch architecture simultaneously decomposes input images into distinct semantic and forensic components. This separation is mathematically formalized as follows:

where i represents the index of the image to be detected, and c_i and f_i denote the content features irrelevant to forgery and the forgery-related features of the sample, respectively.

Figure 4.

Architecture of feature disentanglement network. Use the self-supervised synthetic training data from the previous section. Input it into the encoder to extract content and forgery features. The forgery features are for binary authenticity classification. Combine the two sets of content features with the forgery features in four ways. Then, use the conditional decoder to reconstruct images. Separate content and forgery features via reconstruction loss.

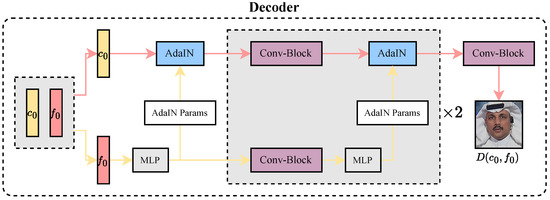

Decoder. The decoder architecture, illustrated in Figure 5, comprises a series of upsampling and convolutional layers. It reconstructs a specific image from the content features and the forgery-related features. The decoder in this study adopts the same configuration as UCF [2] and introduces Adaptive Instance Normalization (AdaIN) [35] to improve image reconstruction and decoding, as shown in Figure 4. Specifically, AdaIN [35] makes the mean and variance of the content feature encoding match those of the forgery feature encoding. The relevant formulas are as follows:

where c and f are the content feature vector and the forged feature vector of the input image, respectively, and the two statistic functions compute the mean and variance of a feature vector.
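The statistic-matching step can be illustrated with a short sketch of the standard AdaIN operation: the content feature is normalized by its own mean and standard deviation, then rescaled and shifted with the statistics of the conditioning (forgery) feature. The version below works on a single 1-D vector for readability; in a real network the statistics are typically computed per channel over the spatial dimensions. The function name `adain` and the stabilizing constant `eps` are assumptions made for this example.

```python
from statistics import fmean, pstdev
from typing import List, Sequence


def adain(content: Sequence[float], forgery: Sequence[float], eps: float = 1e-5) -> List[float]:
    """Align the mean and standard deviation of `content` with those of `forgery`."""
    mu_c, sigma_c = fmean(content), pstdev(content)
    mu_f, sigma_f = fmean(forgery), pstdev(forgery)
    # Normalize the content feature, then apply the forgery feature's statistics.
    return [sigma_f * (x - mu_c) / (sigma_c + eps) + mu_f for x in content]
```

For example, `adain([0.0, 2.0], [10.0, 14.0])` returns a vector whose mean is 12 and whose standard deviation is approximately 2, that is, the statistics of the conditioning vector.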

Figure 5.

Decoder architecture. The content feature and the forgery-related feature are jointly input into the AdaIN [35] (Adaptive Instance Normalization) module. Then, the process of the Conv-Block, the upsampling layer combined with the MLP auxiliary structure, is repeated twice. Relying on the cooperative work of multiple modules, finally, the deepfake reconstructed image is decoded and output through the Conv-Block.

3.5. Objective Function

In order to effectively separate the forgery-irrelevant content features and forgery-related features from the input image, we have designed two types of loss functions within the framework of the feature decoupling network: the binary cross-entropy loss applied to the forgery-related features and the reconstruction loss that ensures the pixel-level consistency between the original image and the reconstructed image. The weighted sum of these loss functions is the overall loss function for training the framework.

Classification Loss. For the deepfake detection task, we feed the forgery-related features extracted by the forgery feature encoder into a classification head H, which is implemented as a multi-layer perceptron (MLP). The self-supervised training objective is formulated as a binary cross-entropy loss, which is defined as follows:

where H denotes the classification head for the forgery features, composed of multiple MLP layers; the label vector holds the N binary labels; and f_i is the forgery-related feature of the i-th sample.
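For reference, the standard binary cross-entropy over N samples can be sketched as follows. Here `probs` stands for the outputs of the classification head after a sigmoid, and the convention that label 1 marks a forged sample is an assumption of this example; the clipping constant `eps` is added only to keep the logarithm finite.

```python
import math
from typing import Sequence


def binary_cross_entropy(probs: Sequence[float], labels: Sequence[int], eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between predicted probabilities and 0/1 labels."""
    if len(probs) != len(labels) or not probs:
        raise ValueError("probs and labels must be non-empty and of equal length")
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(probs)
```

An uninformative prediction of 0.5 for every sample yields a loss of ln 2, and the loss decreases as the predicted probabilities move toward the correct labels.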

Reconstruction Loss. Our framework employs two conditional reconstruction methods for training: self-reconstruction and cross-reconstruction. For conditional self-reconstruction, we perform reconstruction using both the content features and forgery-related features extracted by the image encoder. This encourages the decoder to leverage these two types of features to restore the input image data accurately. The formulation is as follows:

For conditional cross-reconstruction, we perform a cross-combination of the content features and forgery-related features extracted by the encoder. Enforcing the decoder to reconstruct specified target images facilitates the disentanglement of intrinsic content information and forgery-related artifacts in the images. The formulation of conditional cross-reconstruction is presented below:

The reconstruction loss of our framework consists of two components: conditional self-reconstruction and conditional cross-reconstruction. The complete image reconstruction loss is formulated as follows:
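To make the two reconstruction modes concrete, the sketch below enumerates the four ways in which the content features and forgery features of a two-image training pair can be combined, and separates them into self-reconstruction inputs (both features from the same image) and cross-reconstruction inputs (features from different images). The helper name `recombine` and the tuple layout are hypothetical; which target image each cross combination should reconstruct is left open here, since it depends on the loss definition.

```python
from itertools import product
from typing import List, Sequence, Tuple

Combo = Tuple[int, int, object, object]  # (content index, forgery index, content, forgery)


def recombine(contents: Sequence[object], forgeries: Sequence[object]) -> Tuple[List[Combo], List[Combo]]:
    """Split all (content, forgery) pairings into self and cross combinations."""
    self_combos: List[Combo] = []
    cross_combos: List[Combo] = []
    for (i, c), (j, f) in product(enumerate(contents), enumerate(forgeries)):
        target = self_combos if i == j else cross_combos
        target.append((i, j, c, f))
    return self_combos, cross_combos
```

For a pair of images this yields two self combinations and two cross combinations, each of which would be passed to the conditional decoder.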

Overall Loss Function. The overall loss function consists of a weighted combination of binary cross-entropy loss and reconstruction loss and is formulated as follows:

where the weighting coefficient is a hyperparameter that balances the contribution of the two loss terms.
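A hedged sketch of how such a weighted objective can be assembled is given below. It assumes that the reconstruction terms are mean absolute (L1) pixel differences and that the weighting coefficient multiplies the reconstruction term; both choices, along with the function names `l1_distance` and `total_loss`, are assumptions made for illustration. The default weight of 0.3 is the value reported in the implementation details.

```python
from typing import Sequence


def l1_distance(image: Sequence[float], reconstruction: Sequence[float]) -> float:
    """Mean absolute difference between two flattened images (assumed pixel-level loss)."""
    if len(image) != len(reconstruction) or not image:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(a - b) for a, b in zip(image, reconstruction)) / len(image)


def total_loss(cls_loss: float, self_rec: float, cross_rec: float, weight: float = 0.3) -> float:
    """Classification loss plus weighted sum of self- and cross-reconstruction losses."""
    return cls_loss + weight * (self_rec + cross_rec)
```

A smaller weight keeps the classifier objective dominant, while a larger one pushes the encoders harder toward features that support faithful reconstruction.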

4. Experiments

4.1. Experimental Settings

Datasets. To evaluate the generalization performance of the proposed framework, we use the FaceForensics++ [15] (FF++) benchmark dataset for training. This dataset includes 1000 original videos and 4000 forged videos generated by four techniques: DeepFakes [36], Face2Face [37], FaceSwap [38], and NeuralTextures [39]. To test the model’s generalization ability, we select four widely used deepfake datasets: Celeb-DF [40] (CDF), which focuses on celebrity facial forgery scenarios and covers multiple forgery techniques and complex scenes; DeepFake Detection [41] (DFD), which contains diverse real-scene videos and corresponding forged samples that simulate real-world interfering factors; and DeepFake Detection Challenge [42] (DFDC) together with DeepFake Detection Challenge Preview [43] (DFDCP), large-scale challenge datasets co-initiated by Facebook that include tens of thousands of professionally annotated videos and cover various forgery types and real-world interferences such as compression distortion. Details of the datasets are shown in Table 2. In the experiments, we strictly adhere to each dataset’s official training-test split. When a test set is not explicitly provided, the validation set is used instead to keep the evaluation standardized and the results reliable.

Table 2.

Overview of datasets for deepfake detection. Summarizes five key datasets (FF++, DFD, CDF, DFDCP, DFDC) in deepfake detection. Presents core info like forgery method counts, fake/real video sizes, and repository links.

Implementation. The experimental environment is an Ubuntu operating system with two NVIDIA GeForce RTX 3090 Ti GPUs, using the PyTorch 1.13.1 deep learning framework. We employ the convolutional network EfficientNet-b4 [44] (EFNB4), pre-trained on the ImageNet [45] dataset, as the feature encoder. The model has 39.4 M parameters and achieves an inference speed of 184 images per second on a single RTX 3090 Ti GPU. The model is trained for 20 epochs using the SAM [46] optimizer, with a batch size of 32 and a learning rate of 0.001. We extract 10 frames at equal intervals from each video file to prepare the training and test data. After performing face extraction and alignment on these frames, we resize them to a fixed input resolution. When multiple faces are present in a frame, we select the face with the largest area. Each batch consists of an equal number of real images and self-supervised synthetic forged images. In the overall loss function defined in Equation (9), we set the weighting hyperparameter to 0.3. Additionally, we use widely adopted data augmentation methods, such as image compression, horizontal flipping, and random color contrast adjustment, to enhance the model’s generalization ability.
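The frame sampling and face selection rules described above can be sketched as follows. The helper names, the `(x1, y1, x2, y2)` bounding-box format, and the choice to include the first and last frame when spacing the samples are assumptions of this example; the actual preprocessing pipeline may space frames differently.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed format


def sample_frame_indices(num_frames: int, num_samples: int = 10) -> List[int]:
    """Pick `num_samples` frame indices spread evenly over a video."""
    if num_frames <= 0 or num_samples <= 0:
        raise ValueError("num_frames and num_samples must be positive")
    if num_frames <= num_samples:
        return list(range(num_frames))  # short video: keep every frame
    if num_samples == 1:
        return [0]
    return [round(i * (num_frames - 1) / (num_samples - 1)) for i in range(num_samples)]


def largest_face(boxes: Sequence[Box]) -> Box:
    """Return the detected face box with the largest area."""
    if not boxes:
        raise ValueError("no faces detected")
    return max(boxes, key=lambda b: (b[2] - b[0]) * (b[3] - b[1]))
```

For a 100-frame video, `sample_frame_indices(100)` selects frames 0, 11, 22, and so on up to 99.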

Evaluation Metrics. We adopt the AUC (Area Under the ROC Curve) as the core evaluation metric and use it to compare our model with existing methods. AUC summarizes model performance across all decision thresholds, which is particularly useful when real and forged samples are imbalanced. Additionally, we report Average Precision (AP) as a supplementary evaluation metric. Mathematically, AUC can be calculated as follows:

$$\mathrm{AUC} = \frac{\sum_{i \in \text{positive}} \mathrm{rank}_i - \frac{M(M+1)}{2}}{M \times N}$$

In this formula, $M$ and $N$ denote the numbers of positive and negative samples, respectively, and $\mathrm{rank}_i$ is the rank of the $i$-th positive sample when all samples are sorted in ascending order of the predicted probability of belonging to the positive class, so that the highest-scoring sample has rank $M + N$.
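As an illustration of the rank-based AUC computation, the following sketch sums the ranks of the positive samples, subtracts M(M+1)/2, and divides by M × N. Ranks are assigned from the lowest to the highest score, as this formula requires, and tied scores share their average rank. The function name and the tie handling are assumptions for illustration, not details taken from the paper.

```python
from typing import Sequence


def rank_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUC from the rank-sum formula; label 1 is the positive class."""
    m = sum(1 for y in labels if y == 1)  # number of positives (M)
    n = len(labels) - m                   # number of negatives (N)
    if m == 0 or n == 0:
        raise ValueError("need at least one positive and one negative sample")

    # Sort sample indices by score, lowest first, so rank 1 is the lowest score.
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    start = 0
    while start < len(order):
        end = start
        # Extend the group while the next score ties with the current one.
        while end + 1 < len(order) and scores[order[end + 1]] == scores[order[start]]:
            end += 1
        avg_rank = (start + end) / 2 + 1  # 1-based average rank of the tie group
        for k in range(start, end + 1):
            ranks[order[k]] = avg_rank
        start = end + 1

    pos_rank_sum = sum(r for r, y in zip(ranks, labels) if y == 1)
    return (pos_rank_sum - m * (m + 1) / 2) / (m * n)
```

With scores `[0.1, 0.4, 0.35, 0.8]` and labels `[0, 0, 1, 1]`, the positive samples have ranks 2 and 4, giving (6 − 3) / 4 = 0.75.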

4.2. Generalization Evaluation

All experiments follow the generalization evaluation protocol widely adopted in deepfake detection research. Specifically, the models are trained on the FaceForensics++ [15] (FF++) dataset and then evaluated on multiple unseen datasets, including Celeb-DF [40] (CDF) and DeepFake Detection Challenge [42] (DFDC), to assess cross-domain performance.

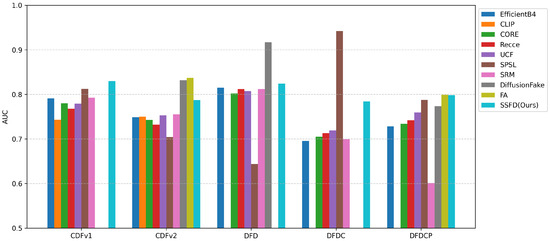

Comparison with Competing Methods. Previous studies have adopted diverse data preprocessing and experimental settings, which can significantly affect model performance and hinder fair comparison. To address this, we use DeepfakeBench [47], a unified benchmark for deepfake detection, to conduct comparative experiments, as shown in Table 3 and Figure 6. Specifically, we implement our algorithm within this standardized framework, while the results of the other detection algorithms are taken directly from DeepfakeBench [47]. Adhering to a unified experimental protocol ensures a fair comparison. The results show that our algorithm generalizes better than the baseline methods.

Table 3.

Cross-domain evaluation results on the CDF, DFD, DFDC, and DFDCP datasets. To ensure fairness and comparability, most results of the comparative methods in the table are sourced from DeepfakeBench [47]. The best results are marked in bold, and the second-best results are underlined.

Figure 6.

Cross-domain detection performance comparison: AUC results on the CDF, DFD, DFDC, and DFDCP datasets.

Comparison with state-of-the-art methods. After comparing our method with the algorithms implemented under the DeepfakeBench [47] framework, we further evaluate it against other state-of-the-art (SOTA) models. Some results of the methods listed in Table 4 are cited directly from the DeepfakeBench [47] paper. The data show that our approach outperforms the other methods in terms of AUC on both the CelebDF [40] and DFDC [42] datasets, indicating superior generalization ability. Although both our work and SLADD [25] aim to enhance generalization by using synthetic fake samples, they differ in how the samples are leveraged. Our method synthesizes samples to achieve feature disentanglement, whereas SLADD trains models on forged features directly. The experimental results show that our approach achieves a higher AUC than SLADD.

Table 4.

Comparison with state-of-the-art methods on CDFv2 and DFDC. To ensure the fairness and comparability of the experiment, the experimental results of other works in the table are cited from [47,52,53]. The optimal results in the table are marked in bold, and the sub-optimal results are underlined.

Comparison with disentanglement-based methods. Our study is inspired by the previous work UCF [2], whose framework performs feature disentanglement and reconstruction learning directly on data from the original training set and additionally conducts multi-task learning over the categories of forgery techniques in the FF++ dataset. Using the official open-source code from the original paper, we trained the baseline model Xception [58], the UCF [2] method, and our approach on the FF++ dataset, followed by cross-dataset validation on the CelebDF [40], DFD, and DFDC [42] datasets. As shown in Table 5, UCF effectively enhances the generalization capability of the baseline model. Notably, our method outperforms UCF on all test sets, demonstrating that our self-supervised synthetic data strategy more effectively promotes the separation of content features and forgery-related features, thereby significantly improving cross-dataset detection ability.

Table 5.

Comparison with feature disentanglement methods on CDFv2, DFD, and DFDC.

4.3. Ablation Study

4.3.1. Effects of Self-Supervised Forged Image

Inspired by previous research [2,59] on decoupling content features and forgery features from forged samples, our study proposes a self-supervised feature decoupling framework. The framework employs an image self-mixing forged training data generation mechanism to control the forged data synthesis process explicitly. This enables precise recombination of content features and forgery features separated by the decoupling network when the conditional decoder reconstructs the original input image, thereby fully leveraging the representational capability of the decoupled features. Experimental results (see Table 6) demonstrate that training with the proposed self-supervised forged data strategy significantly enhances the generalization performance of deepfake detection methods.
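To make the idea of self-mixing concrete, the sketch below blends a transformed copy of an image back into the same image through a soft mask, in the spirit of self-blended images [24]. It is only an illustration under stated assumptions: the brightness gain used as the transform, the grayscale list-of-rows image format, and the function name are all hypothetical, and the mixing mechanism actually used by the framework is the one defined in its method section.

```python
from typing import List

# Hypothetical image format: grayscale rows with values in [0, 1].
Image = List[List[float]]


def self_blend(image: Image, mask: Image, gain: float = 1.1) -> Image:
    """Blend a brightness-shifted copy of `image` back into itself.

    Per pixel: out = mask * transformed + (1 - mask) * original,
    so mask = 0 keeps the original pixel and mask = 1 takes the transformed one.
    """
    blended = []
    for img_row, mask_row in zip(image, mask):
        row = []
        for pixel, m in zip(img_row, mask_row):
            transformed = min(1.0, pixel * gain)  # stand-in for a real transform
            row.append(m * transformed + (1.0 - m) * pixel)
        blended.append(row)
    return blended
```

Because source and target come from the same image, the blended region and the mask are known exactly, which is what makes the synthesis process explicitly controllable.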

Table 6.

The effect of self-supervised forged images. By default, content features and forgery features are combined by linear addition. CD denotes the conditional decoder used in the image reconstruction process.

4.3.2. Effects of Conditional Decoder

Unlike other disentanglement-based detection frameworks, which merge fingerprint and content features by linear addition, the decoder in our approach uses AdaIN [35]. Specifically, it takes the fingerprint as a condition alongside the content to guide reconstruction and decoding. To assess the influence of the conditional decoder on generalization, we conduct an ablation study comparing versions of the framework with and without it. The results in Table 7 show that the conditional decoder leads to better performance both within and across datasets, which highlights the importance of AdaIN [35] layers for reconstruction and decoding.
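The AdaIN operation [35] normalizes each content channel to zero mean and unit variance and then applies a per-channel scale and shift supplied by the condition. A minimal sketch follows, assuming that the scale and shift values have already been predicted from the forgery-related (fingerprint) feature; that mapping, the flat per-channel list layout, and the function name are illustrative assumptions rather than details of the paper's decoder.

```python
import math
from typing import List


def adain(content: List[List[float]], scale: List[float], shift: List[float],
          eps: float = 1e-5) -> List[List[float]]:
    """Adaptive instance normalization, applied channel by channel.

    content: one flat list of activations per channel.
    scale, shift: one value per channel (assumed to come from the condition).
    """
    out = []
    for channel, gamma, beta in zip(content, scale, shift):
        mean = sum(channel) / len(channel)
        var = sum((v - mean) ** 2 for v in channel) / len(channel)
        std = math.sqrt(var + eps)  # eps avoids division by zero
        out.append([gamma * (v - mean) / std + beta for v in channel])
    return out
```

After this operation each output channel has mean close to its `shift` value and standard deviation close to its `scale` value, so the condition controls the channel statistics while the content determines the spatial pattern. Linear addition, by contrast, leaves the content statistics untouched.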

Table 7.

The effect of the conditional decoder. By default, content features and forgery features are combined by linear addition. CD denotes the conditional decoder used in the image reconstruction process.

4.3.3. Choice of Encoder Architectures

Although we employ EfficientNet-b4 as the standard encoder, the proposed method applies equally to other network architectures. To compare different architectures, we conduct experiments using ResNet-50, Xception, EfficientNet-b1, and EfficientNet-b4 as backbone networks. As shown in Table 8, all architectures achieve excellent results on the FF++, CDFv2, and DFDCP datasets. Additionally, among the tested backbones, larger networks generalize better. This suggests that greater model capacity can capture more complex feature representations in the self-supervised forged samples, enhancing the model’s adaptability to different data distributions.

Table 8.

Comparison of results for different backbone models within our proposed framework. The best results are highlighted in bold font. “Avg” represents the average AUC across datasets.

4.3.4. Effects of Hyper-Parameter

The goal of this ablation experiment is to examine how the weight of the reconstruction loss within the overall loss function affects model performance. We vary this weight across several comparative experiments and monitor both the convergence of the model during training and its generalization performance on the test sets. The test AUC changes systematically with the weight (see Table 9 for details). When the weight is set to 0.3, the model achieves the best balance between the cross-entropy loss and the reconstruction loss.

Table 9.

Impact of the reconstruction-loss weight on test AUC across datasets. Models are trained on the FF++ training set with different weight values (0.1, 0.3, 0.5, 0.7, 1.0). The best results are highlighted in bold font. “Avg” represents the average AUC across datasets.

5. Conclusions

This paper addresses the long-standing challenge of insufficient generalization capability in deepfake detection by systematically investigating the features shared across forgery methods and the distribution shift limitations inherent in strongly supervised learning. We propose a novel self-supervised learning framework based on feature decoupling, which uses image self-mixing to construct self-supervised samples. The framework integrates a feature decoupling network that separates forgery-related features from content features and a conditional decoder that verifies the separation through feature recombination. Orthogonality constraints between the two feature types are imposed to mitigate overfitting. Experimental results demonstrate that our method significantly outperforms existing state-of-the-art approaches in generalization capability, confirming the effectiveness of the feature decoupling strategy.

The main findings of this study carry practical implications and application potential. The modular design of the proposed feature decoupling framework provides an extensible technical pathway toward cross-modal and real-time detection scenarios. For instance, in cyberspace security, the framework can be applied to fake content screening on social platforms, financial identity verification, and other scenarios, addressing the detection challenges posed by deepfake content disseminated across platforms. In the judicial forensics domain, its generalization capability enhances robustness against unknown forgery methods, providing more reliable technical support for verifying the authenticity of digital evidence.

Author Contributions

B.Y. conceptualized the algorithm, designed and implemented the experimental validation framework, and prepared the original draft. P.L. conducted the experimental procedures and performed data curation. Y.Y. contributed to manuscript revision and algorithm optimization discussions. Y.G. participated in algorithm refinement and performance improvement analysis. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Natural Science Foundation of Hunan Province (Grants No. 2024JJ5173, 2023JJ50047) and the Research Foundation of Education Bureau of Hunan Province (Grant No. 23A0494).

Data Availability Statement

All facial images utilized in this work are sourced from publicly available datasets and are appropriately attributed through proper citations.

Conflicts of Interest

The authors declare no competing financial interests.

References

1. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

2. Yan, Z.; Zhang, Y.; Fan, Y.; Wu, B. UCF: Uncovering Common Features for Generalizable Deepfake Detection. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–3 October 2023; pp. 22355–22366.

3. Qiang, W.; Song, Z.; Gu, Z.; Li, J.; Zheng, C.; Sun, F.; Xiong, H. On the Generalization and Causal Explanation in Self-Supervised Learning. arXiv 2024, arXiv:2410.00772.

4. Chen, H.; Lin, Y.; Li, B.; Tan, S. Learning Features of Intra-Consistency and Inter-Diversity: Keys Toward Generalizable Deepfake Detection. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 1468–1480.

5. Ba, Z.; Liu, Q.; Liu, Z.; Wu, S.; Lin, F.; Lu, L.; Ren, K. Exposing the Deception: Uncovering More Forgery Clues for Deepfake Detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024.

6. Nguyen, H.H.; Yamagishi, J.; Echizen, I. Exploring Self-Supervised Vision Transformers for Deepfake Detection: A Comparative Analysis. In Proceedings of the 2024 IEEE International Joint Conference on Biometrics (IJCB), Buffalo, NY, USA, 15–18 September 2024; pp. 1–10.

7. Amerini, I.; Ballan, L.; Caldelli, R.; Del Bimbo, A.; Serra, G. A sift-based forensic method for copy–move attack detection and transformation recovery. IEEE Trans. Inf. Forensics Secur. 2011, 6, 1099–1110.

8. De Carvalho, T.J.; Riess, C.; Angelopoulou, E.; Pedrini, H.; de Rezende Rocha, A. Exposing digital image forgeries by illumination color classification. IEEE Trans. Inf. Forensics Secur. 2013, 8, 1182–1194.

9. Lukas, J.; Fridrich, J.; Goljan, M. Detecting digital image forgeries using sensor pattern noise. In Proceedings of the SPIE, the International Society for Optical Engineering, San Diego, CA, USA, 13–17 August 2006; Society of Photo-Optical Instrumentation Engineers: Bellingham, WA, USA, 2006; p. 60720Y–1.

10. Chierchia, G.; Parrilli, S.; Poggi, G.; Verdoliva, L.; Sansone, C. PRNU-based detection of small-size image forgeries. In Proceedings of the 2011 17th International Conference on Digital Signal Processing (DSP), Corfu, Greece, 6–8 July 2011; pp. 1–6.

11. Wen, D.; Han, H.; Jain, A.K. Face spoof detection with image distortion analysis. IEEE Trans. Inf. Forensics Secur. 2015, 10, 746–761.

12. Pei, G.; Zhang, J.; Hu, M.; Zhang, Z.; Wang, C.; Wu, Y.; Zhai, G.; Yang, J.; Shen, C.; Tao, D. Deepfake generation and detection: A benchmark and survey. arXiv 2024, arXiv:2403.17881.

13. Afchar, D.; Nozick, V.; Yamagishi, J.; Echizen, I. Mesonet: A compact facial video forgery detection network. In Proceedings of the 2018 IEEE International Workshop on Information Forensics and Security (WIFS), Hong Kong, 11–13 December 2018; pp. 1–7.

14. Nguyen, H.H.; Yamagishi, J.; Echizen, I. Capsule-forensics: Using capsule networks to detect forged images and videos. In Proceedings of the ICASSP 2019–2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 2307–2311.

15. Rossler, A.; Cozzolino, D.; Verdoliva, L.; Riess, C.; Thies, J.; Nießner, M. Faceforensics++: Learning to detect manipulated facial images. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1–11.

16. Qian, Y.; Yin, G.; Sheng, L.; Chen, Z.; Shao, J. Thinking in frequency: Face forgery detection by mining frequency-aware clues. In Proceedings of the European Conference on Computer Vision; Springer: London, UK, 2020; pp. 86–103.

17. Frank, J.; Eisenhofer, T.; Schönherr, L.; Fischer, A.; Kolossa, D.; Holz, T. Leveraging frequency analysis for deep fake image recognition. In Proceedings of the International Conference on Machine Learning, Vienna, Austria, 12–18 July 2020; PMLR: New York, NY, USA, 2020; pp. 3247–3258.

18. Agarwal, S.; Farid, H.; Gu, Y.; He, M.; Nagano, K.; Li, H. Protecting world leaders against deep fakes. In Proceedings of the CVPR Workshops, Long Beach, CA, USA, 16–20 June 2019; Volume 1.

19. Amerini, I.; Galteri, L.; Caldelli, R.; Del Bimbo, A. Deepfake video detection through optical flow based cnn. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27 October–2 November 2019.

20. Güera, D.; Delp, E.J. Deepfake video detection using recurrent neural networks. In Proceedings of the 2018 15th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Auckland, New Zealand, 27–30 November 2018; pp. 1–6.

21. Sabir, E.; Cheng, J.; Jaiswal, A.; AbdAlmageed, W.; Masi, I.; Natarajan, P. Recurrent convolutional strategies for face manipulation detection in videos. Interfaces 2019, 3, 80–87.

22. Luo, Y.; Zhang, Y.; Yan, J.; Liu, W. Generalizing face forgery detection with high-frequency features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 16317–16326.

23. Cao, J.; Ma, C.; Yao, T.; Chen, S.; Ding, S.; Yang, X. End-to-end reconstruction-classification learning for face forgery detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4113–4122.

24. Shiohara, K.; Yamasaki, T. Detecting deepfakes with self-blended images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 18720–18729.

25. Chen, L.; Zhang, Y.; Song, Y.; Liu, L.; Wang, J. Self-supervised learning of adversarial example: Towards good generalizations for deepfake detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 18710–18719.

26. Yu, Y.; Ni, R.; Yang, S.; Ni, Y.; Zhao, Y.; Kot, A.C. Mining Generalized Multi-timescale Inconsistency for Detecting Deepfake Videos. Int. J. Comput. Vis. 2024, 133, 1532–1548.

27. Yu, P.; Fei, J.; Gao, H.; Feng, X.; Xia, Z.; Chang, C.H. Unlocking the Capabilities of Vision-Language Models for Generalizable and Explainable Deepfake Detection. arXiv 2025, arXiv:2503.14853.

28. Li, L.; Bao, J.; Zhang, T.; Yang, H.; Chen, D.; Wen, F.; Guo, B. Face X-ray for more general face forgery detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 5001–5010.

29. Yan, Z.; Luo, Y.; Lyu, S.; Liu, Q.; Wu, B. Transcending forgery specificity with latent space augmentation for generalizable deepfake detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 8984–8994.

30. Cui, X.; Li, Y.; Luo, A.; Zhou, J.; Dong, J. Forensics Adapter: Adapting CLIP for Generalizable Face Forgery Detection. arXiv 2024, arXiv:2411.19715.

31. Haliassos, A.; Mira, R.; Petridis, S.; Pantic, M. Leveraging real talking faces via self-supervision for robust forgery detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 14950–14962.

32. Zhang, D.; Xiao, Z.; Li, S.; Lin, F.; Li, J.; Ge, S. Learning Natural Consistency Representation for Face Forgery Video Detection. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024.

33. Sun, K.; Chen, S.; Yao, T.; Liu, H.; Sun, X.; Ding, S.; Ji, R. DiffusionFake: Enhancing Generalization in Deepfake Detection via Guided Stable Diffusion. arXiv 2024, arXiv:2410.04372.

34. Xu, J.; Liu, X.; Lin, W.; Shang, W.; Wang, Y. Localization and detection of deepfake videos based on self-blending method. Sci. Rep. 2025, 15, 3927.

35. Huang, X.; Belongie, S. Arbitrary style transfer in real-time with adaptive instance normalization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1501–1510.

36. Deepfakes. 2021. Available online: https://github.com/deepfakes/faceswap (accessed on 30 May 2025).

37. Thies, J.; Zollhöfer, M.; Stamminger, M.; Theobalt, C.; Nießner, M. Face2Face: Real-Time Face Capture and Reenactment of RGB Videos. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2387–2395.

38. Faceswap. 2021. Available online: https://github.com/MarekKowalski/FaceSwap (accessed on 25 May 2025).

39. Thies, J.; Zollhöfer, M.; Nießner, M. Deferred neural rendering: Image synthesis using neural textures. ACM Trans. Graph. 2019, 38, 1–12.

40. Li, Y.; Yang, X.; Sun, P.; Qi, H.; Lyu, S. Celeb-df: A large-scale challenging dataset for deepfake forensics. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3207–3216.

41. Contributing Data to Deepfake Detection Research. 2021. Available online: https://ai.googleblog.com/2019/09/contributing-data-to-deepfake-detection.html (accessed on 23 May 2025).

42. Dolhansky, B.; Bitton, J.; Pflaum, B.; Lu, J.; Howes, R.; Wang, M.; Ferrer, C.C. The deepfake detection challenge (dfdc) dataset. arXiv 2020, arXiv:2006.07397.

43. Dolhansky, B.; Howes, R.; Pflaum, B.; Baram, N.; Ferrer, C.C. The deepfake detection challenge (dfdc) preview dataset. arXiv 2019, arXiv:1910.08854.

44. Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; PMLR: New York, NY, USA, 2019; pp. 6105–6114.

45. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

46. Foret, P.; Kleiner, A.; Mobahi, H.; Neyshabur, B. Sharpness-aware minimization for efficiently improving generalization. arXiv 2020, arXiv:2010.01412.

47. Yan, Z.; Zhang, Y.; Yuan, X.; Lyu, S.; Wu, B. DeepfakeBench: A Comprehensive Benchmark of Deepfake Detection. arXiv 2023, arXiv:2307.01426.

48. Wang, S.Y.; Wang, O.; Zhang, R.; Owens, A.; Efros, A.A. CNN-generated images are surprisingly easy to spot... for now. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8695–8704.

49. Ojha, U.; Li, Y.; Lee, Y.J. Towards Universal Fake Image Detectors that Generalize Across Generative Models. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 24480–24489.

50. Ni, Y.; Meng, D.; Yu, C.; Quan, C.; Ren, D.; Zhao, Y. Core: Consistent representation learning for face forgery detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12–21.

51. Liu, H.; Li, X.; Zhou, W.; Chen, Y.; He, Y.; Xue, H.; Zhang, W.; Yu, N. Spatial-phase shallow learning: Rethinking face forgery detection in frequency domain. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 772–781.

52. Wang, Y.; Yu, K.; Chen, C.; Hu, X.; Peng, S. Dynamic graph learning with content-guided spatial-frequency relation reasoning for deepfake detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7278–7287.

53. Yu, P.; Fei, J.; Xia, Z.; Zhou, Z.; Weng, J. Improving generalization by commonality learning in face forgery detection. IEEE Trans. Inf. Forensics Secur. 2022, 17, 547–558.

54. Masi, I.; Killekar, A.; Mascarenhas, R.M.; Gurudatt, S.P.; AbdAlmageed, W. Two-branch recurrent network for isolating deepfakes in videos. In Proceedings of the Computer vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; proceedings, Part VII 16. Springer: London, UK, 2020; pp. 667–684.

55. Gu, Q.; Chen, S.; Yao, T.; Chen, Y.; Ding, S.; Yi, R. Exploiting fine-grained face forgery clues via progressive enhancement learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; Volume 36, pp. 735–743.

56. Zhao, H.; Zhou, W.; Chen, D.; Wei, T.; Zhang, W.; Yu, N. Multi-attentional deepfake detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2185–2194.

57. Chen, S.; Yao, T.; Chen, Y.; Ding, S.; Li, J.; Ji, R. Local relation learning for face forgery detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; Volume 35, pp. 1081–1088.

58. Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807.

59. Liang, J.; Shi, H.; Deng, W. Exploring disentangled content information for face forgery detection. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: London, UK, 2022; pp. 128–145.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).