Rolling Bearing Fault Diagnosis Based on SCNN and Optimized HKELM

Abstract

1. Introduction

- (1) In the feature extraction layer, an SCNN architecture with multi-scale perception is designed. Parallel convolution paths and a probabilistic-sampling pooling mechanism make the extracted feature representation more complete.

- (2) In the classifier design, an adaptive strategy for combining kernel function spaces is proposed, and an improved northern goshawk optimization (NGO) algorithm is introduced to select the hyperparameters automatically, yielding an optimized HKELM model.

- (3) By combining the feature extraction strength of the SCNN with the classification capability of the HKELM, an end-to-end diagnostic system is established that maps raw data directly to fault categories.

2. Related Technologies

2.1. Stochastic Convolutional Neural Networks

2.2. Northern Goshawk Optimization

2.2.1. Prey Identification and Attack

2.2.2. Pursuit and Escape

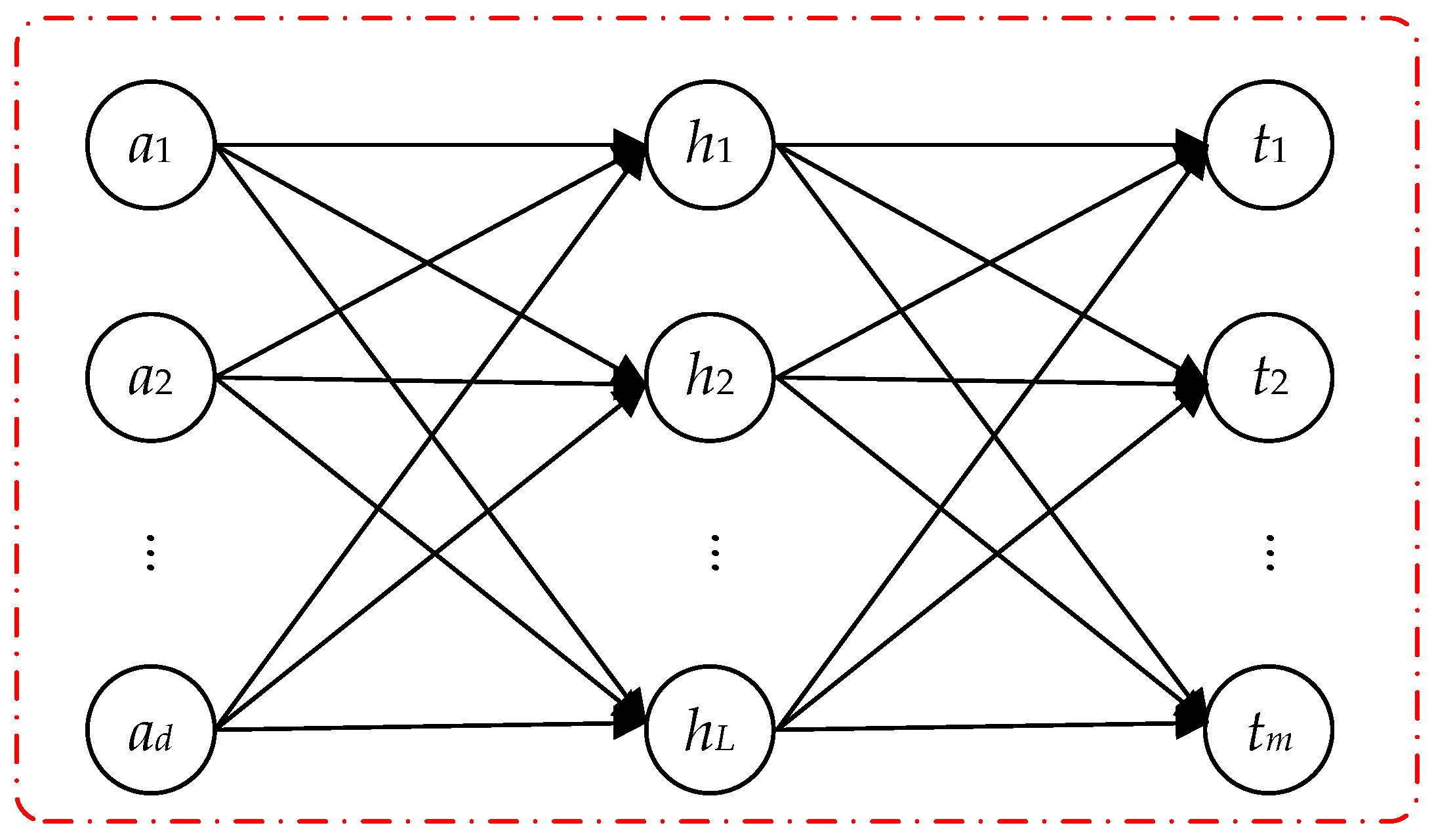

2.3. Hybrid Kernel Extreme Learning Machine

3. Model Design and Implementation

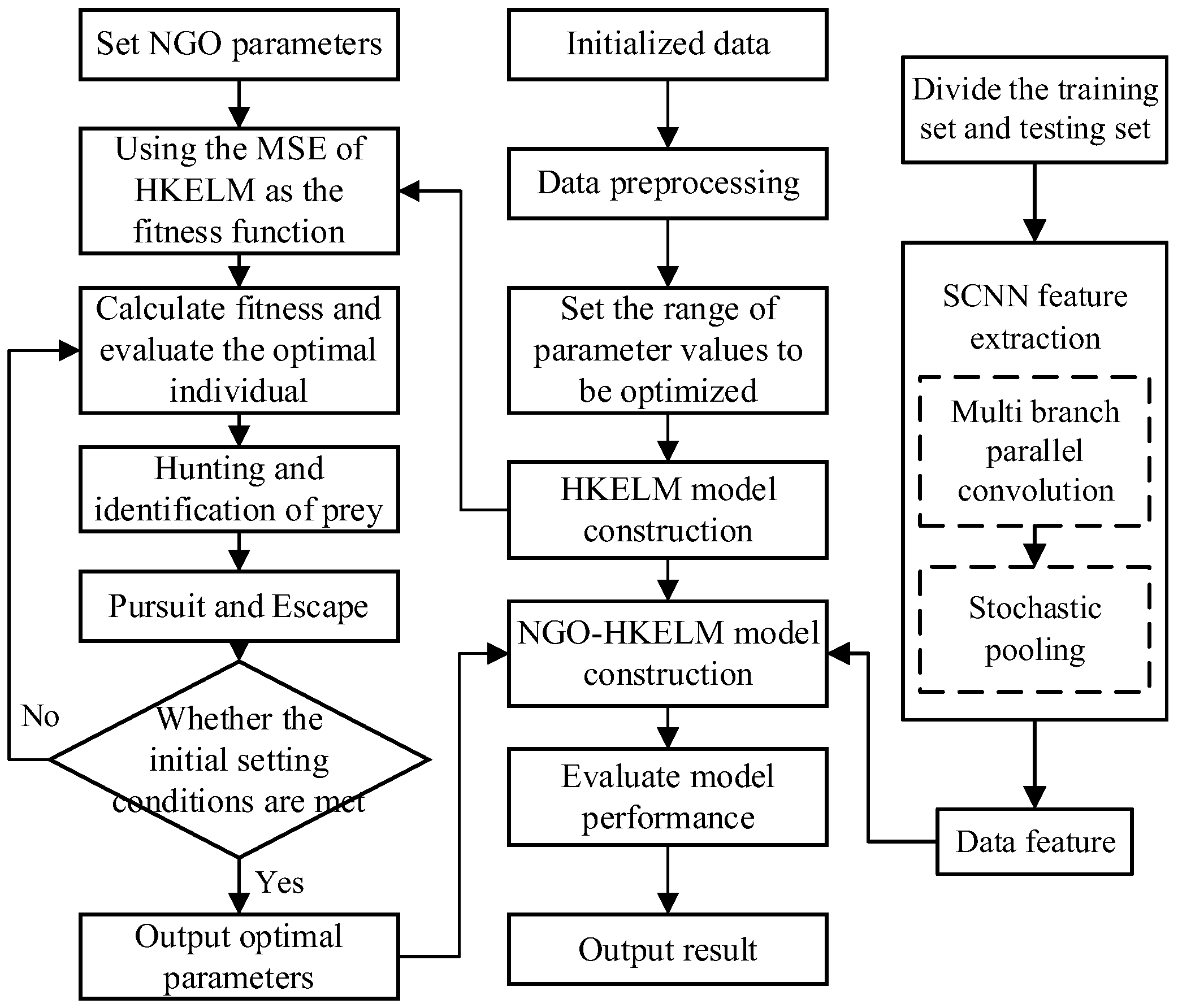

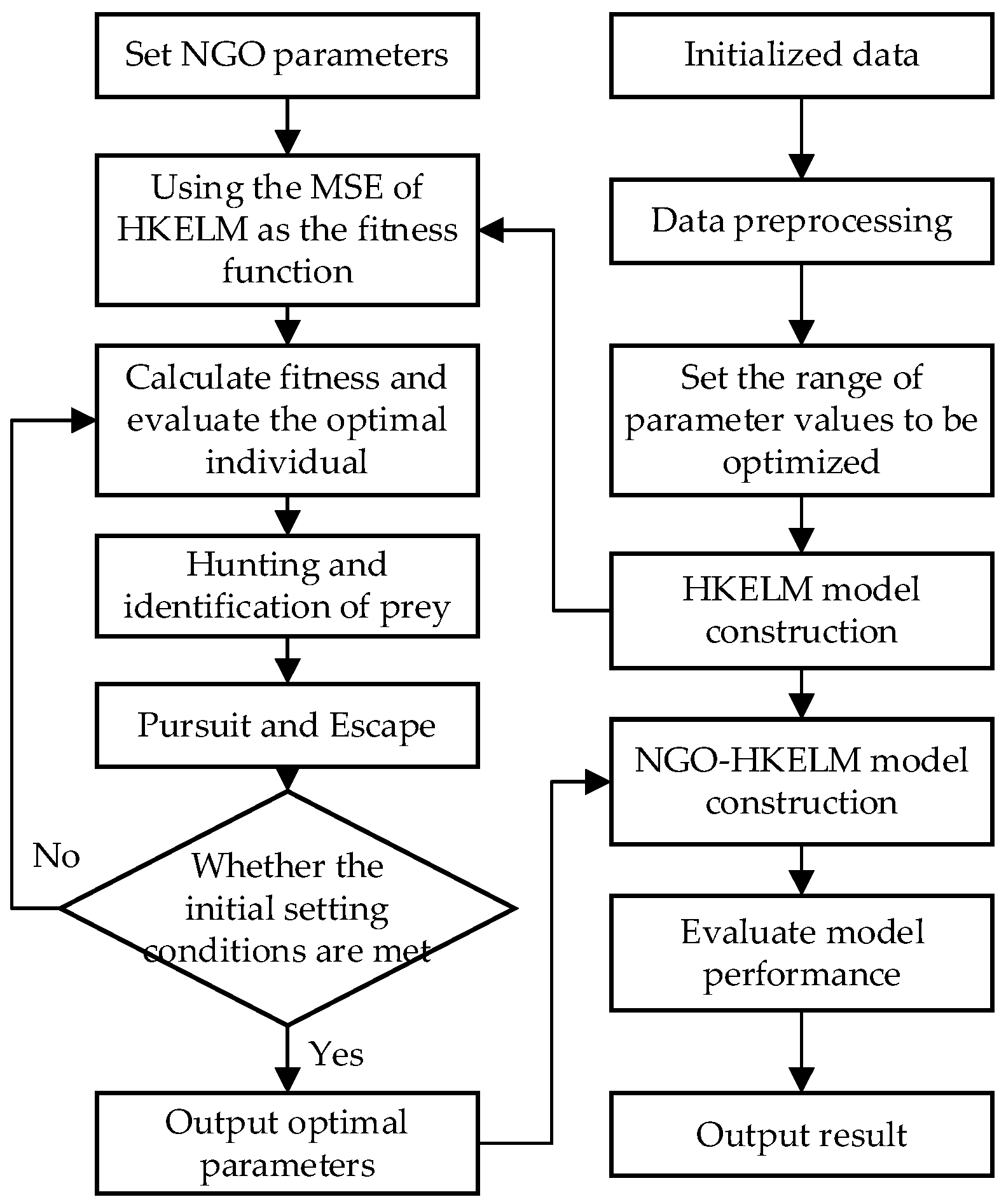

3.1. Construction of NGO–HKELM Classification Model

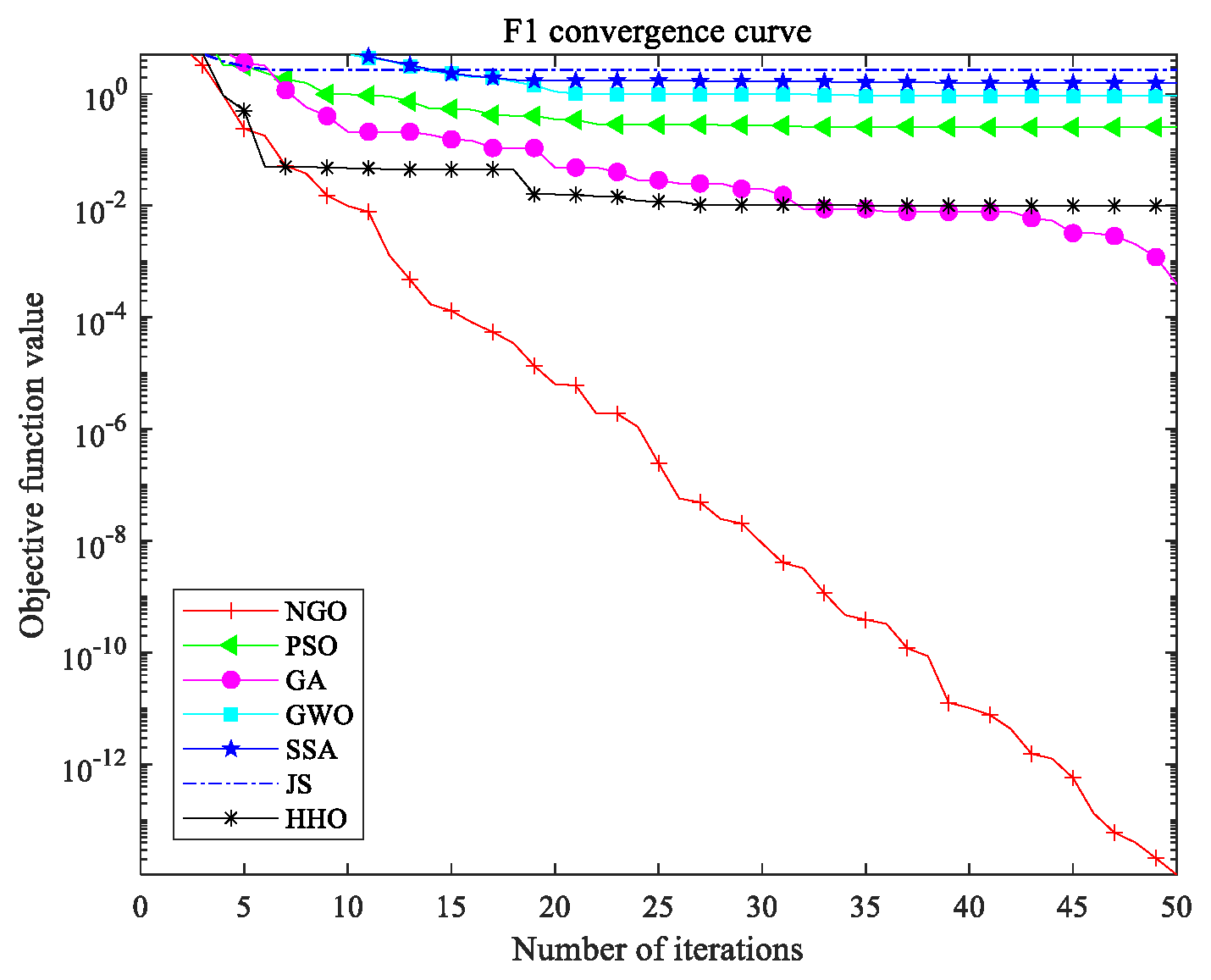

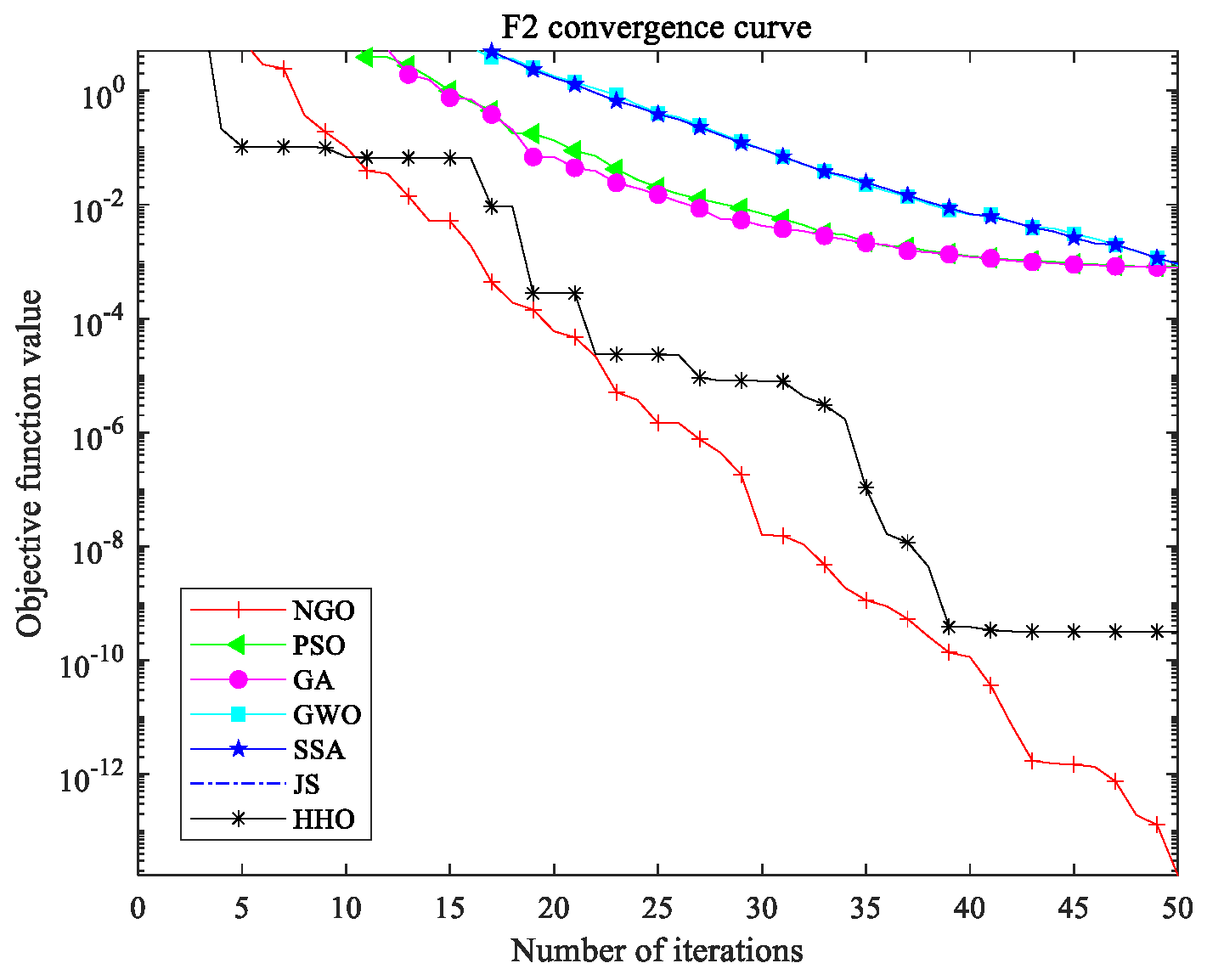

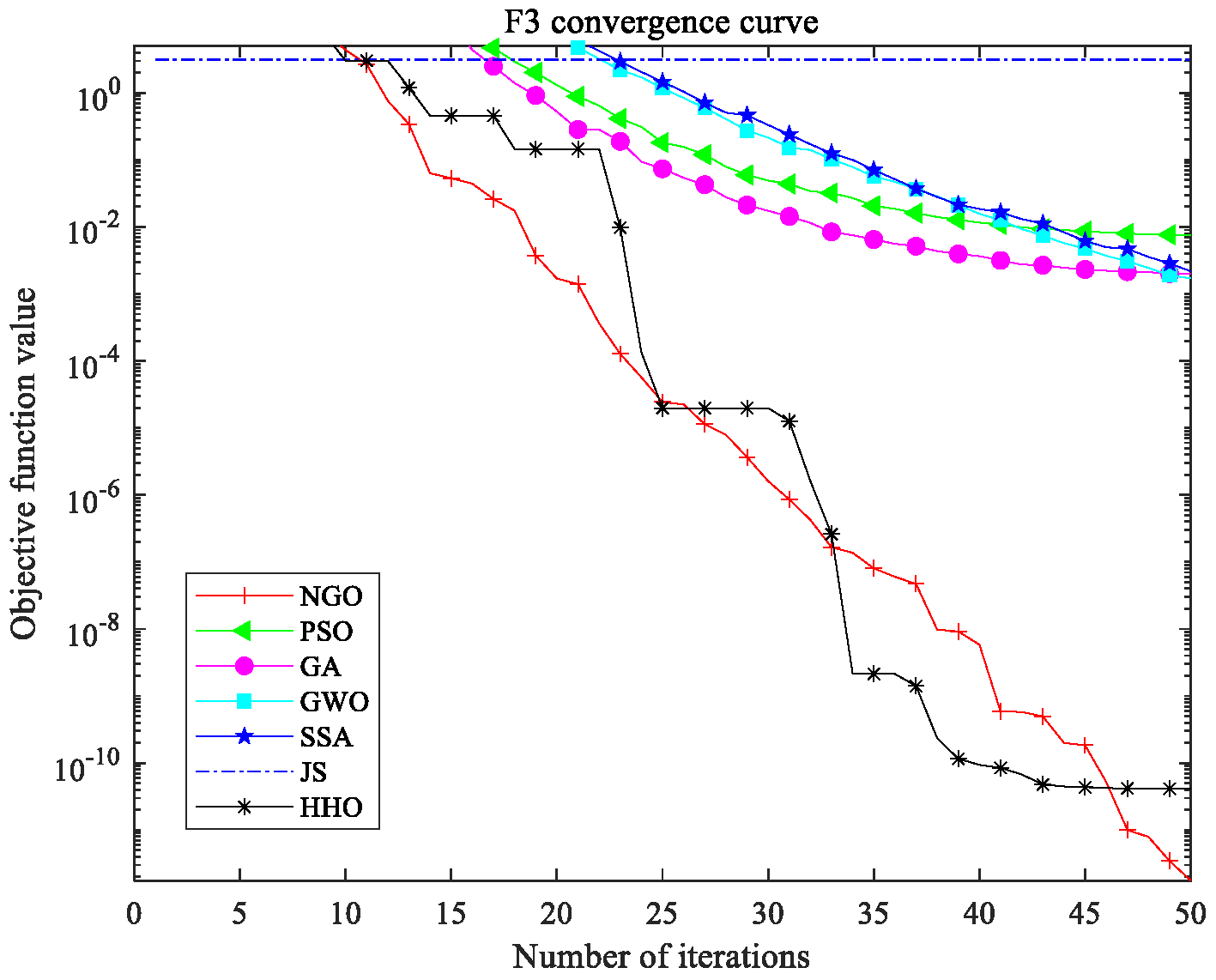

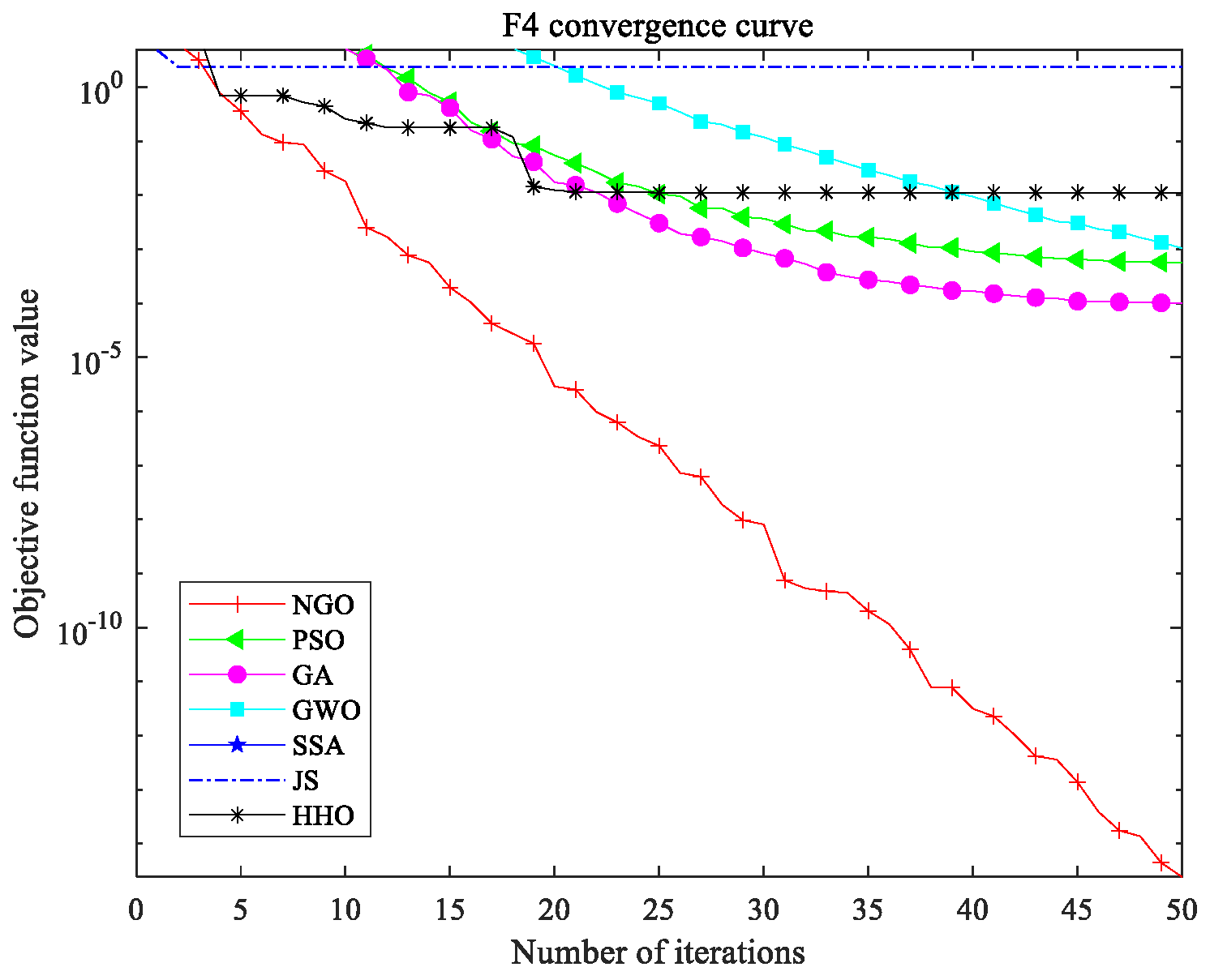

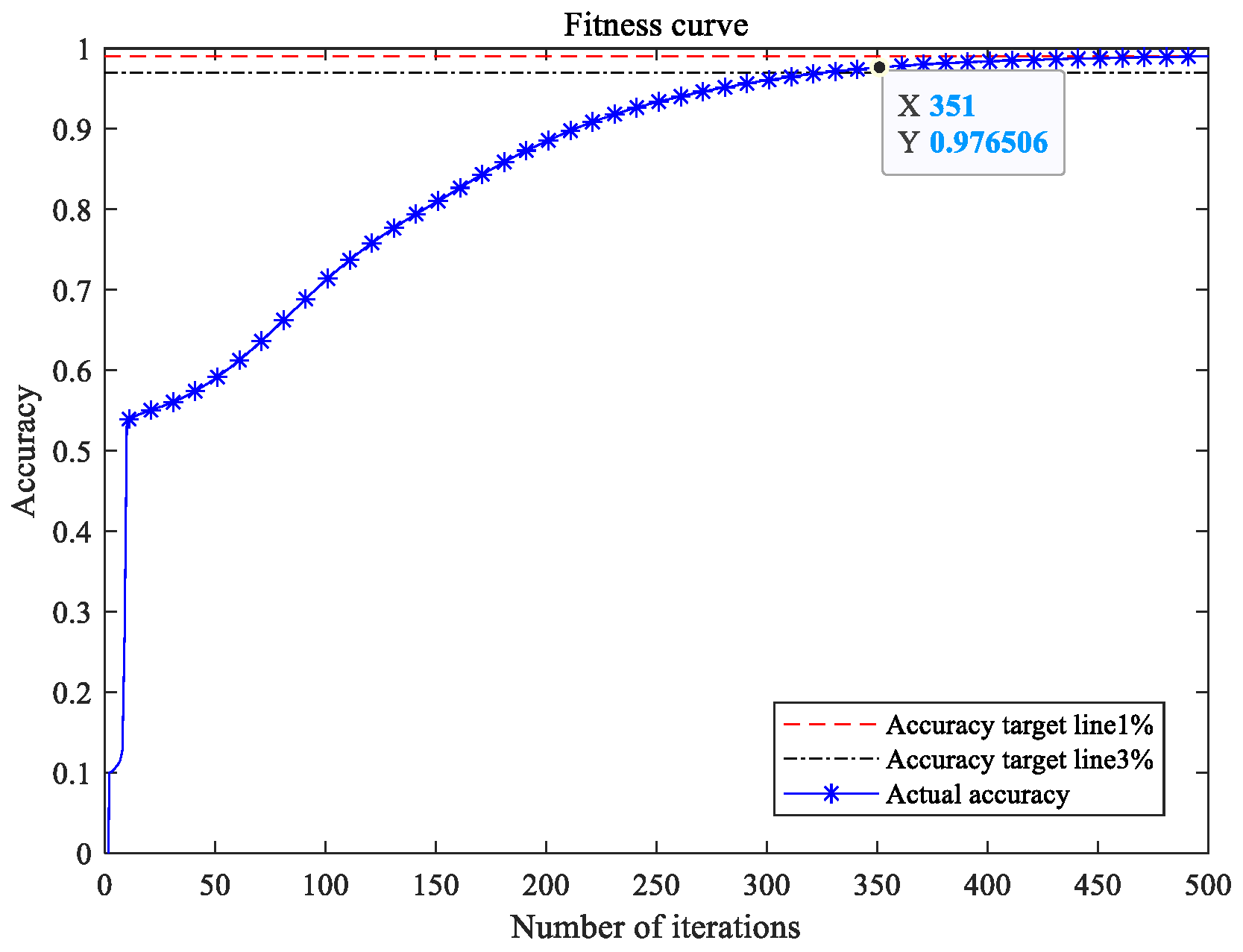

3.1.1. Discussion on the Performance of NGO Algorithm

3.1.2. Optimizing HKELM Using NGO

- (1) The population size of the northern goshawks, the fitness function, the number of iterations, and other parameters are set, and the initial positions of the goshawk individuals are generated.

- (2) The value ranges of the HKELM parameters to be optimized are set, and the HKELM model is constructed. The mean squared error (MSE) of the HKELM is used as the fitness function for NGO.

- (3) The fitness values are calculated, and the current best northern goshawk individual is identified.

- (4) The goshawk positions are updated according to Equations (1)–(6), carrying out prey identification and attack followed by pursuit and escape.

- (5) The preset termination condition is checked. If it is met, the three-dimensional position of the best northern goshawk individual, i.e., the optimal parameter values, is output; otherwise, step (3) is repeated to continue the optimization.

- (6) The optimal parameters are used to construct the NGO–HKELM model. The model's performance is evaluated, and the prediction results are output.
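
The loop in steps (1)–(6) can be sketched as follows. This is a minimal illustration, assuming the standard two-phase NGO update rules from the general literature (Equations (1)–(6) are not reproduced in this section, and the modified NGO variant used here may differ). The function name `ngo_minimize` and its arguments are hypothetical; in the setting above, `fitness` would be the HKELM MSE evaluated on a three-dimensional parameter vector.

```python
import random


def ngo_minimize(fitness, lower, upper, pop_size=20, iters=50, seed=0):
    """Minimize `fitness` inside a box using a basic NGO loop (illustrative)."""
    rng = random.Random(seed)
    dim = len(lower)

    def clip(x):
        # Keep every coordinate inside its allowed range.
        return [min(max(v, lo), hi) for v, lo, hi in zip(x, lower, upper)]

    # Steps (1)-(2): random initial positions within the parameter ranges.
    pop = [[rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
           for _ in range(pop_size)]
    # Step (3): evaluate the fitness of every individual.
    fit = [fitness(x) for x in pop]
    history = [min(fit)]

    for t in range(1, iters + 1):
        for i in range(pop_size):
            # Phase 1 (assumed standard form): prey identification and attack.
            k = rng.randrange(pop_size)
            prey, prey_fit = pop[k], fit[k]
            cand = []
            for j in range(dim):
                r = rng.random()
                step = rng.choice((1, 2))
                if prey_fit < fit[i]:
                    cand.append(pop[i][j] + r * (prey[j] - step * pop[i][j]))
                else:
                    cand.append(pop[i][j] + r * (pop[i][j] - prey[j]))
            cand = clip(cand)
            cand_fit = fitness(cand)
            if cand_fit < fit[i]:  # greedy acceptance
                pop[i], fit[i] = cand, cand_fit

            # Phase 2 (assumed standard form): pursuit and escape,
            # a local search whose radius shrinks with the iteration count.
            radius = 0.02 * (1 - t / iters)
            cand = clip([v + radius * (2 * rng.random() - 1) * v
                         for v in pop[i]])
            cand_fit = fitness(cand)
            if cand_fit < fit[i]:
                pop[i], fit[i] = cand, cand_fit

        # Step (5): here the stopping rule is simply a fixed iteration budget.
        history.append(min(fit))

    # Step (6): return the best position found and its fitness.
    best = min(range(pop_size), key=fit.__getitem__)
    return pop[best], fit[best], history
```

Because a candidate position is accepted only when it improves the individual's fitness, the best fitness recorded in `history` never increases from one iteration to the next. A toy call such as `ngo_minimize(lambda x: sum(v * v for v in x), [-5.0] * 3, [5.0] * 3)` exercises the loop on a three-dimensional search space.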

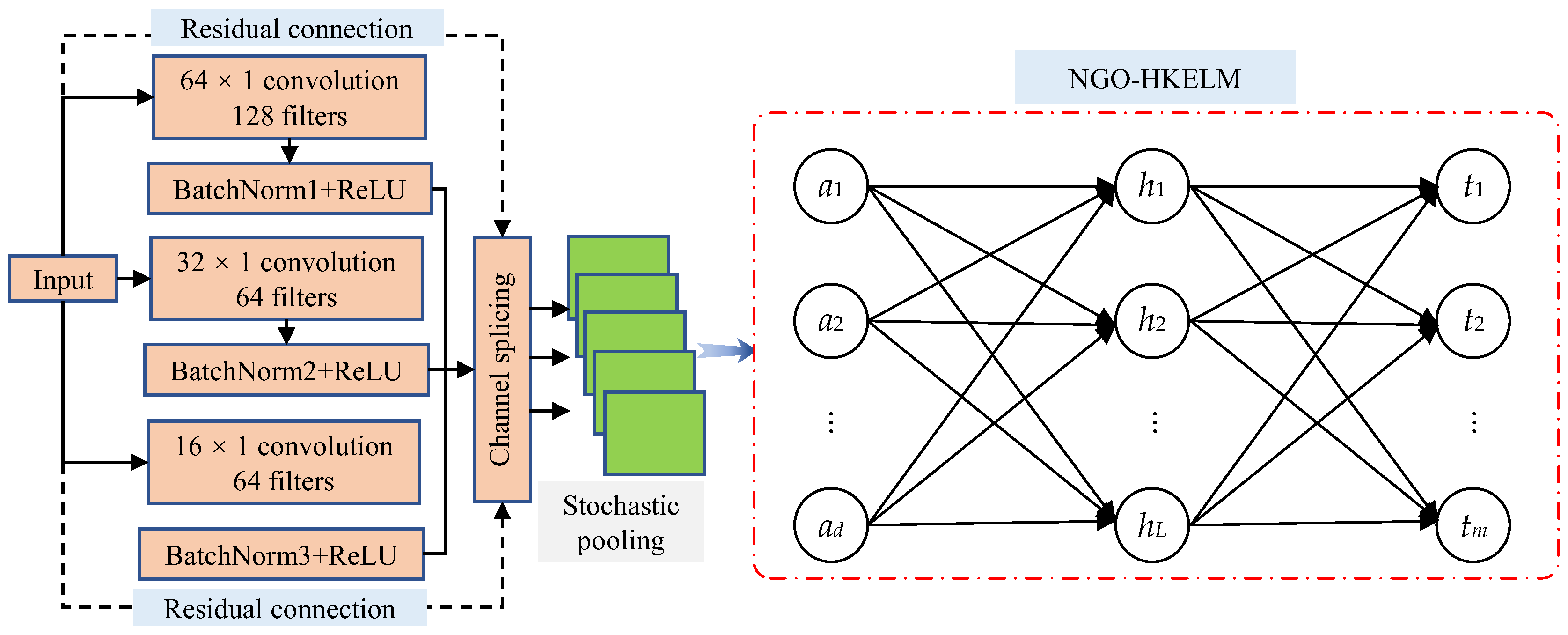

3.2. SCNN and NGO–HKELM Model Network Structure

- (1) A multi-scale feature extraction architecture is designed to overcome a limitation of traditional CNNs, which typically rely on a single convolution kernel size. The architecture has three parallel feature extraction pathways: the first uses a large 64 × 1 convolution kernel to extract low-frequency features that reflect the long-term operating state of the equipment; the second uses a medium 32 × 1 kernel to capture transitional features at intermediate time scales; the third uses a compact 16 × 1 kernel to detect transient impact signals. The pathway outputs are concatenated along the channel dimension and then passed through a 1 × 1 convolution for feature compression and dimensionality reduction. Processing the signal at several scales in parallel improves the model's ability to perceive fault features in different frequency bands.

- (2) A residual learning mechanism is incorporated into the network. Its cross-layer connections mitigate the vanishing gradient problem in deep networks: they keep gradients flowing during backpropagation and also improve the model's feature representation capability.

- (3) A Bernoulli-distribution-based random pooling operation is proposed, which better captures underlying changes in the data and preserves as much of the spatial structure of the feature maps as possible.
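
The three design elements above can be illustrated with a small pure-Python sketch. It is not the authors' implementation: the kernel weights are placeholders (a trained network would learn them), and the pooling rule is only one assumed reading of "Bernoulli-distribution-based random pooling", in which each activation in a window is kept with probability `keep_prob` and the largest kept value is output. All function names are hypothetical.

```python
import random


def conv1d_same(signal, kernel):
    """1-D cross-correlation, zero-padded so the output length equals the input length."""
    k = len(kernel)
    left = (k - 1) // 2
    padded = [0.0] * left + list(signal) + [0.0] * (k - 1 - left)
    return [sum(w * padded[i + j] for j, w in enumerate(kernel))
            for i in range(len(signal))]


def multi_scale_features(signal, kernels, fuse_weights):
    """Run parallel branches, stack them as channels, fuse with a 1 x 1 convolution."""
    channels = [conv1d_same(signal, k) for k in kernels]  # one channel per branch
    # A 1 x 1 convolution is a per-position weighted sum across channels.
    return [sum(w * ch[i] for w, ch in zip(fuse_weights, channels))
            for i in range(len(signal))]


def residual_add(inputs, features):
    """Cross-layer (skip) connection: add the block input to the block output."""
    return [x + f for x, f in zip(inputs, features)]


def bernoulli_pool(values, window, keep_prob, rng):
    """Assumed pooling rule: Bernoulli-mask each window, output the largest kept value.

    If every activation in a window is dropped, fall back to the plain maximum.
    """
    pooled = []
    for start in range(0, len(values) - window + 1, window):
        block = values[start:start + window]
        kept = [v for v in block if rng.random() < keep_prob]
        pooled.append(max(kept) if kept else max(block))
    return pooled


# Placeholder averaging kernels with the three sizes named in the text.
kernels = [[1.0 / 64] * 64, [1.0 / 32] * 32, [1.0 / 16] * 16]
signal = [float(i % 8) for i in range(256)]
fused = multi_scale_features(signal, kernels, fuse_weights=[1.0 / 3] * 3)
pooled = bernoulli_pool(residual_add(signal, fused), window=2,
                        keep_prob=0.5, rng=random.Random(0))
```

With `keep_prob=1.0` the pooling reduces to ordinary max pooling, which makes the stochastic variant easy to compare against a deterministic baseline.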

3.3. Model Flowchart and Algorithm Steps

- (1) The SCNN model is constructed, and its multi-branch parallel convolution and Bernoulli-distribution-based random pooling layers are used for feature extraction.

- (2) The initial weights, thresholds, and other parameters of the HKELM network are determined, along with the value ranges of the parameters to be optimized.

- (3) The population is initialized, and the training error of the HKELM is used as the fitness value.

- (4) The fitness of the goshawk population is evaluated, and the goshawk positions are updated according to the prey search, pursuit, and escape behavior of the northern goshawk.

- (5) A check is performed to determine whether the parameter optimization condition is satisfied. If the condition is met, the optimal hyperparameters are assigned to the HKELM, and the NGO–HKELM model is constructed. If the condition is not met, step (3) is repeated.

- (6) The fault state is identified using the constructed NGO–HKELM model, and the diagnostic results are output.
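
Steps (2)–(3) depend on an HKELM whose training error serves as the fitness value. The sketch below shows one way such a fitness function could look. It rests on assumptions not stated in this section: the hybrid kernel is taken to be a convex combination of an RBF and a degree-2 polynomial kernel, and the three optimized parameters are taken to be the regularization coefficient `C`, the RBF width `sigma`, and the mixing weight `lam`. All names are hypothetical.

```python
import math


def hybrid_kernel(a, b, sigma, lam, degree=2):
    """Assumed hybrid kernel: lam * RBF + (1 - lam) * polynomial."""
    sq_dist = sum((u - v) ** 2 for u, v in zip(a, b))
    rbf = math.exp(-sq_dist / (2 * sigma ** 2))
    poly = (sum(u * v for u, v in zip(a, b)) + 1.0) ** degree
    return lam * rbf + (1 - lam) * poly


def solve(A, B):
    """Solve A X = B by Gaussian elimination with partial pivoting."""
    n, m = len(A), len(B[0])
    M = [list(A[i]) + list(B[i]) for i in range(n)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + m):
                M[r][k] -= f * M[c][k]
    X = [[0.0] * m for _ in range(n)]
    for r in range(n - 1, -1, -1):
        for k in range(m):
            s = M[r][n + k] - sum(M[r][c] * X[c][k] for c in range(r + 1, n))
            X[r][k] = s / M[r][r]
    return X


def hkelm_fit(X, T, C, sigma, lam):
    """Kernel ELM output weights: beta = (Omega + I / C)^(-1) T."""
    n = len(X)
    omega = [[hybrid_kernel(X[i], X[j], sigma, lam) + (1.0 / C if i == j else 0.0)
              for j in range(n)] for i in range(n)]
    return solve(omega, T)


def hkelm_predict(X_train, beta, x, sigma, lam):
    """Output scores for one sample; the predicted class is the argmax."""
    k = [hybrid_kernel(x, xi, sigma, lam) for xi in X_train]
    return [sum(k[i] * beta[i][c] for i in range(len(k)))
            for c in range(len(beta[0]))]


def training_mse(params, X, T):
    """Fitness for the optimizer: mean squared training error of the HKELM."""
    C, sigma, lam = params
    beta = hkelm_fit(X, T, C, sigma, lam)
    err = 0.0
    for x, t in zip(X, T):
        y = hkelm_predict(X, beta, x, sigma, lam)
        err += sum((yi - ti) ** 2 for yi, ti in zip(y, t))
    return err / (len(X) * len(T[0]))
```

Here `X` would hold the SCNN feature vectors and `T` the one-hot fault labels; an optimizer then searches over `(C, sigma, lam)` for the vector that minimizes `training_mse`.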

4. Case Introduction

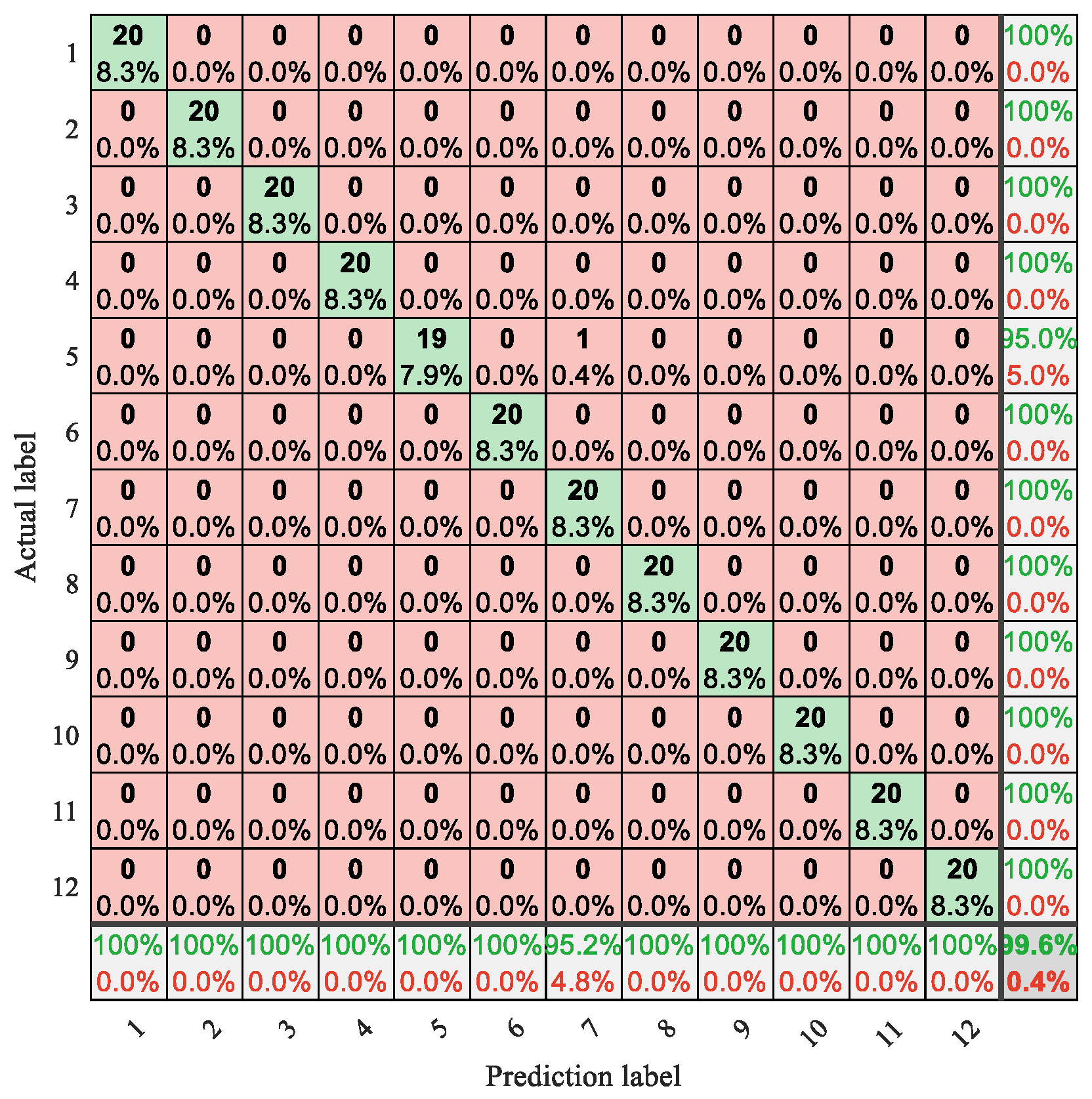

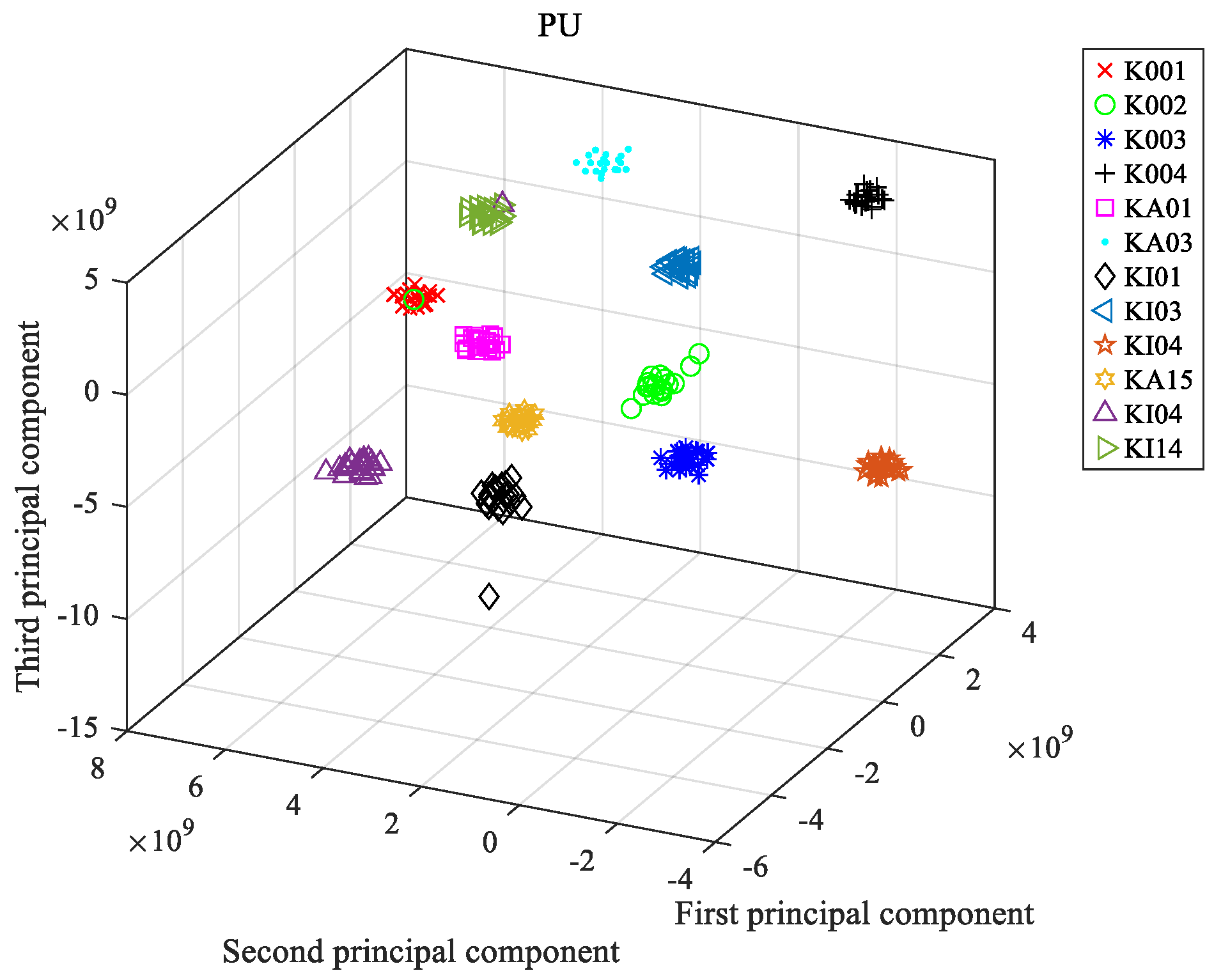

4.1. Data Description

4.2. Experimental Plan and Analysis

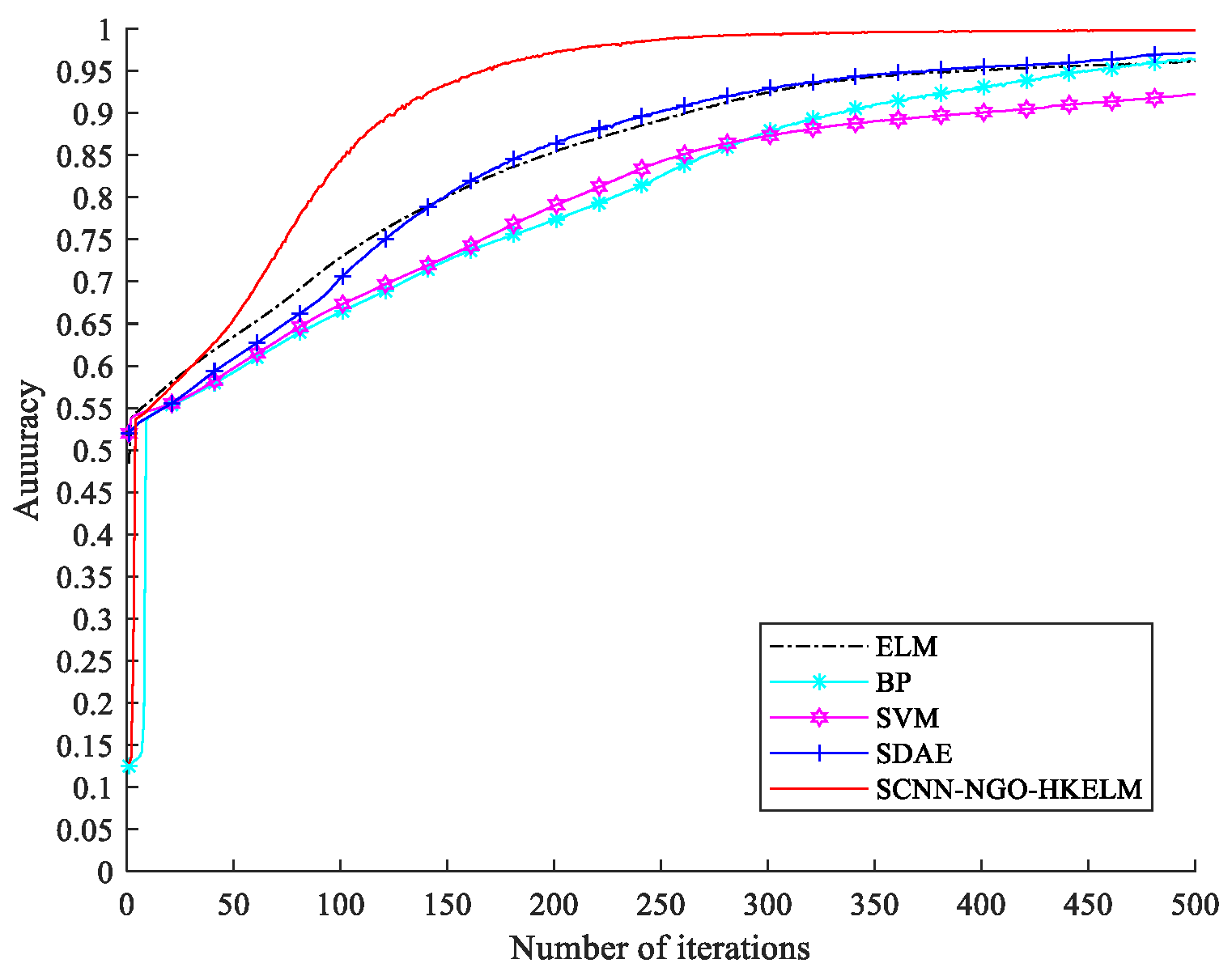

4.3. Comparative Experiment

- (1) SVM: The regularization parameter was set to 1, and the Gaussian kernel width was 0.1.

- (2) BP: The network topology consisted of a 41–26–32 three-hidden-layer structure, with an initial learning rate of 0.02.

- (3) ELM: The regularization coefficient was set to 1, the kernel function parameter was 0.15, and the number of hidden layer nodes was determined through cross-validation.

- (4) Stacked denoising autoencoder (SDAE): A layer-wise greedy training strategy was employed, with a learning rate of 1, an input noise ratio of 0.1, and a batch size of 200 training samples.

5. Conclusions

- (1) An improved SCNN architecture with multi-scale perception capability is developed. This architecture captures cross-frequency features through parallel multi-branch convolution paths, combined with a probabilistic sampling-based random pooling layer, significantly enhancing the discriminative power of fault features.

- (2) The modified NGO algorithm is introduced to adaptively adjust the parameters of the HKELM.

- (3) Comparative experiments based on the Paderborn University standard bearing dataset (12 fault types, 4 load conditions) demonstrated that the proposed method significantly outperformed traditional intelligent algorithms in diagnostic accuracy.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Algorithm | Parameter Setting | Population Size | Number of Iterations |
|---|---|---|---|
| PSO | Learning factors c1 = 1.2, c2 = 1.2; inertia weight w = 0.68 | 50 | 50 |
| GA | Selection probability PI = 0.6; crossover probability PC = 0.5 | 50 | 50 |
| GWO | The coefficient vector component a linearly decreases from 1 to 0; the coefficient vector c is randomly taken as 0 or 1 | 50 | 50 |
| SSA | Cross-validation fold v = 5; discoverer ratio d = 0.6 | 50 | 50 |
| JS | | 50 | 50 |
| HHO | Random numbers with proportional coefficients between 0 and 2 | 50 | 50 |

| Algorithm | Performance Indicator | F1 | F2 | F3 | F4 |
|---|---|---|---|---|---|
| NGO | Minimum | 3.218 × 10^-15 | 9.872 × 10^-15 | 4.926 × 10^-12 | 2.981 × 10^-30 |
| | Mean | 0.6824 | 0.4976 | 0.9538 | 0.0382 |
| | Variance | 3.0157 | 2.3641 | 5.8923 | 0.1947 |
| PSO | Minimum | 0.014892 | 1.327 × 10^-4 | 1.086 × 10^-3 | 5.873 × 10^-12 |
| | Mean | 4.9263 | 13.458 | 2.6749 | 0.1528 |
| | Variance | 7.8923 | 30.147 | 8.7624 | 0.3275 |
| GA | Minimum | 3.892 × 10^-4 | 4.673 × 10^-5 | 5.327 × 10^-3 | 2.108 × 10^-13 |
| | Mean | 2.3276 | 20.458 | 11.327 | 0.1843 |
| | Variance | 7.2159 | 41.327 | 21.458 | 0.7924 |
| GWO | Minimum | 1.0843 | 6.327 × 10^-4 | 1.892 × 10^-3 | 1.542 × 10^-6 |
| | Mean | 2.0157 | 32.458 | 7.2159 | 0.0973 |
| | Variance | 7.8923 | 49.327 | 22.458 | 1.3276 |
| SSA | Minimum | 1.2157 | 8.762 × 10^-4 | 1.327 × 10^-3 | 9.327 × 10^-7 |
| | Mean | 4.3276 | 44.892 | 20.327 | 0.2427 |
| | Variance | 8.4582 | 59.327 | 25.015 | 0.5893 |
| JS | Minimum | 1.0427 | 1.8923 | 1.2157 | 0.0628 |
| | Mean | 4.8923 | 2.3276 | 1.4582 | 0.8927 |
| | Variance | 8.3276 | 2.0427 | 0.0582 | 1.0427 |
| HHO | Minimum | 1.2157 | 8.458 × 10^-4 | 1.892 × 10^-4 | 9.458 × 10^-7 |
| | Mean | 2.0427 | 26.458 | 8.8923 | 2.3276 |
| | Variance | 5.8923 | 30.015 | 23.458 | 2.8923 |

| Algorithm | Step (F1) Training (s) | Step (F1) Test (s) | Sphere (F2) Training (s) | Sphere (F2) Test (s) | Rastrigin (F3) Training (s) | Rastrigin (F3) Test (s) | Quartic (F4) Training (s) | Quartic (F4) Test (s) |
|---|---|---|---|---|---|---|---|---|
| NGO | 0.85 | 0.0011 | 0.87 | 0.001 | 0.82 | 0.0012 | 0.79 | 0.0013 |
| PSO | 1.23 | 0.0015 | 1.25 | 0.0019 | 1.22 | 0.0014 | 1.31 | 0.0017 |
| GA | 3.51 | 0.0023 | 3.61 | 0.0033 | 3.55 | 0.0039 | 3.58 | 0.0034 |
| GWO | 1.05 | 0.0018 | 1.12 | 0.0017 | 1.07 | 0.0018 | 1.04 | 0.0013 |
| SSA | 1.12 | 0.0019 | 1.15 | 0.0018 | 1.18 | 0.0015 | 1.21 | 0.0015 |
| JS | 2.86 | 0.0024 | 2.93 | 0.0022 | 2.87 | 0.0027 | 2.83 | 0.0026 |
| HHO | 1.15 | 0.0018 | 1.24 | 0.0013 | 1.23 | 0.0013 | 1.19 | 0.0012 |
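
The four benchmark functions named in the table header are given only by name here. The sketch below uses their commonly cited textbook definitions, which is an assumption: the exact forms, dimensions, and search ranges used in the experiments are not stated in this section. The optional noise argument of `quartic` is likewise an illustrative choice.

```python
import math


def step(x):
    """Step function (F1): sum of squared rounded coordinates; minimum 0."""
    return sum(math.floor(v + 0.5) ** 2 for v in x)


def sphere(x):
    """Sphere function (F2): sum of squares; minimum 0 at the origin."""
    return sum(v * v for v in x)


def rastrigin(x):
    """Rastrigin function (F3): highly multimodal; minimum 0 at the origin."""
    return 10 * len(x) + sum(v * v - 10 * math.cos(2 * math.pi * v) for v in x)


def quartic(x, rng=None):
    """Quartic function (F4): weighted fourth powers plus optional uniform noise.

    Pass a random.Random instance as `rng` to add noise drawn from [0, 1);
    with rng=None the noise term is omitted so the value is deterministic.
    """
    noise = rng.random() if rng is not None else 0.0
    return sum((i + 1) * v ** 4 for i, v in enumerate(x)) + noise
```

Step and Sphere are unimodal, so they mainly test convergence speed, whereas Rastrigin has many local minima and tests the ability to escape them.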

| Label | Bearing Code | Fault Location | Cause of Fault | Sample Size |
|---|---|---|---|---|
| 1 | K001 | Fault-free | Healthy | 100 |
| 2 | K002 | Fault-free | Healthy | 100 |
| 3 | K003 | Fault-free | Healthy | 100 |
| 4 | K004 | Fault-free | Healthy | 100 |
| 5 | KA01 | Outer race | Electrical discharge machining | 100 |
| 6 | KA03 | Outer race | Electrical discharge machining | 100 |
| 7 | KI01 | Inner race | Manual electric engraving damage | 100 |
| 8 | KI03 | Inner race | Manual electric engraving damage | 100 |
| 9 | KA04 | Outer race | Accelerated life test | 100 |
| 10 | KA15 | Outer race | Accelerated life test | 100 |
| 11 | KI04 | Inner race | Accelerated life test | 100 |
| 12 | KI14 | Inner race | Accelerated life test | 100 |

| Network | ELM | BP | SVM | SDAE | SCNN | SCNN–NGO–HKELM |
|---|---|---|---|---|---|---|
| Training time (s) | 5.86 | 7.52 | 12.37 | 25.77 | 13.62 | 15.63 |
| Test time (s) | 247 | 2.53 | 3.98 | 6.96 | 3.35 | 4.56 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Y.; Du, X. Rolling Bearing Fault Diagnosis Based on SCNN and Optimized HKELM. Mathematics 2025, 13, 2004. https://doi.org/10.3390/math13122004
