Abstract

The ionospheric total electron content (TEC) exhibits complex spatiotemporal variations, which makes its spatiotemporal prediction challenging. Capturing long-range spatial dependencies is important for improving the accuracy of TEC spatiotemporal prediction. Existing work based on Convolutional Long Short-Term Memory (ConvLSTM) relies primarily on convolution for spatial feature extraction, which captures local spatial correlations effectively but struggles to model long-range dependencies, limiting predictive performance. Self-Attention Convolutional Long Short-Term Memory (SA-ConvLSTM) can selectively store and focus on long-range spatial dependencies, but its “n vs. n” structure requires the input and output lengths to be equal, which limits its application. To solve this problem, this paper proposes an encoder–decoder SA-ConvLSTM, abbreviated as ED-SA-ConvLSTM. It captures long-range spatial dependencies using SA-ConvLSTM and supports unequal input and output lengths through an encoder–decoder structure. To verify its performance, the proposed ED-SA-ConvLSTM was compared with C1PG, ConvLSTM, and PredRNN over the region 12.5° S–87.5° N, 25° E–180° E from multiple perspectives, including an overall quantitative comparison, comparisons across different months and latitude regions, visual comparisons, and a comparison under extreme conditions. The results show that, in the vast majority of cases, the proposed ED-SA-ConvLSTM outperforms the comparative models.

Keywords: ionospheric total electron content (TEC); long-range spatial dependencies; self-attention; spatiotemporal prediction

MSC: 86-10

1. Introduction

The ionosphere is the upper layer of the atmosphere that is ionized by high-energy solar radiation and cosmic rays, and it contains a large number of charged particles. When Global Positioning System (GPS) signals pass through the ionosphere, the signal path bends and the propagation speed changes, which biases the measured distance and causes an ionospheric delay [1]. Ionospheric delay is the main source of error in Global Navigation Satellite System (GNSS)-based applications [2]. The ionospheric total electron content (TEC), a parameter describing the state of the ionosphere, is used to calculate the ionospheric delay. Thus, TEC prediction is of great significance for correcting ionospheric delay and improving the accuracy of GNSS-based applications.

TEC is influenced by various factors, such as solar activity, local time, and geomagnetic storms, and exhibits highly complex and nonlinear variations. As a result, TEC prediction is very challenging [3]. Recently, deep learning models have achieved significant success in a variety of fields because of their excellent nonlinear representation ability [4,5,6]. Deep learning has also come to dominate the field of TEC prediction, and developing high-precision deep learning models has become a hot topic. Compared to traditional ionospheric empirical models, such as the International Reference Ionosphere (IRI) model [7], the Bent model [8], and the NeQuick model [9,10], and to statistical models such as the autoregressive moving average (ARMA) [11,12] and the autoregressive integrated moving average (ARIMA) [13], deep learning models have demonstrated superiority in TEC prediction [14,15,16].

Deep learning models for TEC prediction can be roughly classified into two categories: (1) Time series models. Models in this category treat TEC prediction as a time series problem. They usually model historical TEC sequences from a single location to generate the output TEC sequences at that same location. The most commonly used models of this type are unidirectional time series models, such as LSTM [17,18], GRU [19], and ED-LSTME [20]. Bidirectional time series models, such as BiLSTM [21,22,23], were then introduced for TEC prediction and have been shown to have stronger nonlinear representation capabilities than unidirectional ones. Later, researchers combined convolutional neural networks (CNN) with time series models to model both the temporal and local positional patterns of TEC sequences. These combined models include CNN-LSTM [24], CNN-GRU [25], and CNN-BiLSTM [26]. Recently, attention mechanisms have been embedded into TEC time series models because of their capability to focus on important features and suppress unimportant ones, and this has become a research hotspot in TEC prediction, with examples such as Attentional BiGRU [27], Attention BiLSTM [28], and Att-CNN-BiLSTM [29]. Although time series models have made some progress in TEC prediction, their performance still needs to be improved because they ignore spatial features, such as the correlations between TECs in adjacent regions. (2) Spatiotemporal models. Models in this category receive TEC map sequences as input, extract temporal and spatial features from them, and generate the predicted future TEC maps. The most famous spatiotemporal model is ConvLSTM, which was originally used for precipitation prediction [30] and was later introduced for TEC prediction [31,32]. Afterwards, various variants of ConvLSTM emerged in TEC prediction, such as Attention ConvLSTM [33], ED-ConvLSTM [34,35,36], ED-AttConvLSTM [37], ConvGRU [38], BiConvLSTM [1,39], and BiConvGRU [40].

Spatiotemporal models, such as ConvLSTM and its variants, are dominant in TEC prediction. However, they also have some flaws. In TEC prediction, the key issue is to model the complex, dynamic, long-range spatial dependencies in TEC map sequences. When generating TEC prediction maps, long-range spatial dependence refers to the periodic variation pattern between TEC maps at time steps that are farther away from the predicted time. TEC maps exhibit multi-scale temporal variations: in the short term, they are highly correlated with their adjacent time steps because of the continuity of the data; in the medium to long term, they exhibit annual periodic changes caused by the Earth’s revolution; and, influenced by the solar activity cycle, they show periodic changes of about 11 years. For TEC map prediction, the longer the spatiotemporal dependency span held in memory, the more knowledge can be learned from historical data, resulting in better prediction performance. In ConvLSTM-based models, spatial features are extracted using convolution, which can effectively capture local dependencies. To capture long-range dependencies, multiple convolutional layers must be stacked. However, the resulting effective receptive field can be much smaller than the theoretical receptive field, which reduces the efficiency of capturing long-range dependencies [41]. As a solution, Wang et al. proposed a new spatiotemporal model called PredRNN in 2017, which adds a spatial memory to ConvLSTM to store long-range spatial memory [42]. However, PredRNN does not selectively focus on or suppress the long-range spatial memory, so there is still room for improvement. Inspired by self-attention, Lin et al. proposed the Self-Attention Memory (SAM) module to focus on and suppress long-range spatial memory, embedded it into ConvLSTM, and proposed SA-ConvLSTM [43]. SA-ConvLSTM uses self-attention to strengthen and suppress long-range spatial memory and aggregates it with short-distance memory. In this way, it solves two problems: ConvLSTM cannot effectively extract long-range spatial dependencies, and PredRNN cannot selectively focus on and suppress long-range spatial memory.

SA-ConvLSTM can selectively store and focus on long-range and short-distance spatial dependencies, and it is superior to ConvLSTM in some applications of deep learning. This paper introduces it for TEC spatiotemporal prediction. The original SA-ConvLSTM adopts an “n vs. n” structure, which requires the input length and output length of the model to be the same and thus limits the application of SA-ConvLSTM. To solve this problem, this paper redesigns SA-ConvLSTM using an encoder–decoder structure and proposes the encoder–decoder SA-ConvLSTM, abbreviated as ED-SA-ConvLSTM. This paper is the first to redesign SA-ConvLSTM using an encoder–decoder structure, making it suitable for more spatiotemporal prediction tasks. The proposed ED-SA-ConvLSTM is divided into two parts: the encoder receives the TEC map sequence and converts it into a final spatiotemporal feature map; the decoder decodes the final spatiotemporal feature map and converts it into the predicted TEC map sequence. This design allows the model to have different input and output lengths, making it more flexible. In addition, existing research has shown that the encoder–decoder structure is superior to the “n vs. n” structure [44,45,46].

To validate the performance of the proposed ED-SA-ConvLSTM, we selected the 12.5° S–87.5° N, 25° E–180° E regions as the study area. Within this area, a 6-year TEC map sequence was selected as the dataset, including 3 years of high solar activity and 3 years of low solar activity. On this dataset, the proposed ED-SA-ConvLSTM was compared against ConvLSTM, PredRNN, and C1PG from multiple perspectives. Among these three comparative models, ConvLSTM and PredRNN are the state-of-the-art spatiotemporal prediction models. C1PG is a one-day TEC prediction product provided by the Center for Orbit Determination in Europe (CODE), which can be considered a benchmark for TEC prediction. Experimental results show that in the vast majority of cases, the predictive performance of the proposed ED-SA-ConvLSTM is better than those of the comparative models.

2. Data and Data Processing

In this paper, the TEC maps used are Global Ionospheric Map (GIM) data provided by the International GNSS Service (IGS). These TEC maps have a longitude resolution of 5°, a latitude resolution of 2.5°, and a time resolution of 2 h. To reduce computational complexity, this paper focuses on the Asia area (25° E–180° E, 12.5° S–87.5° N).

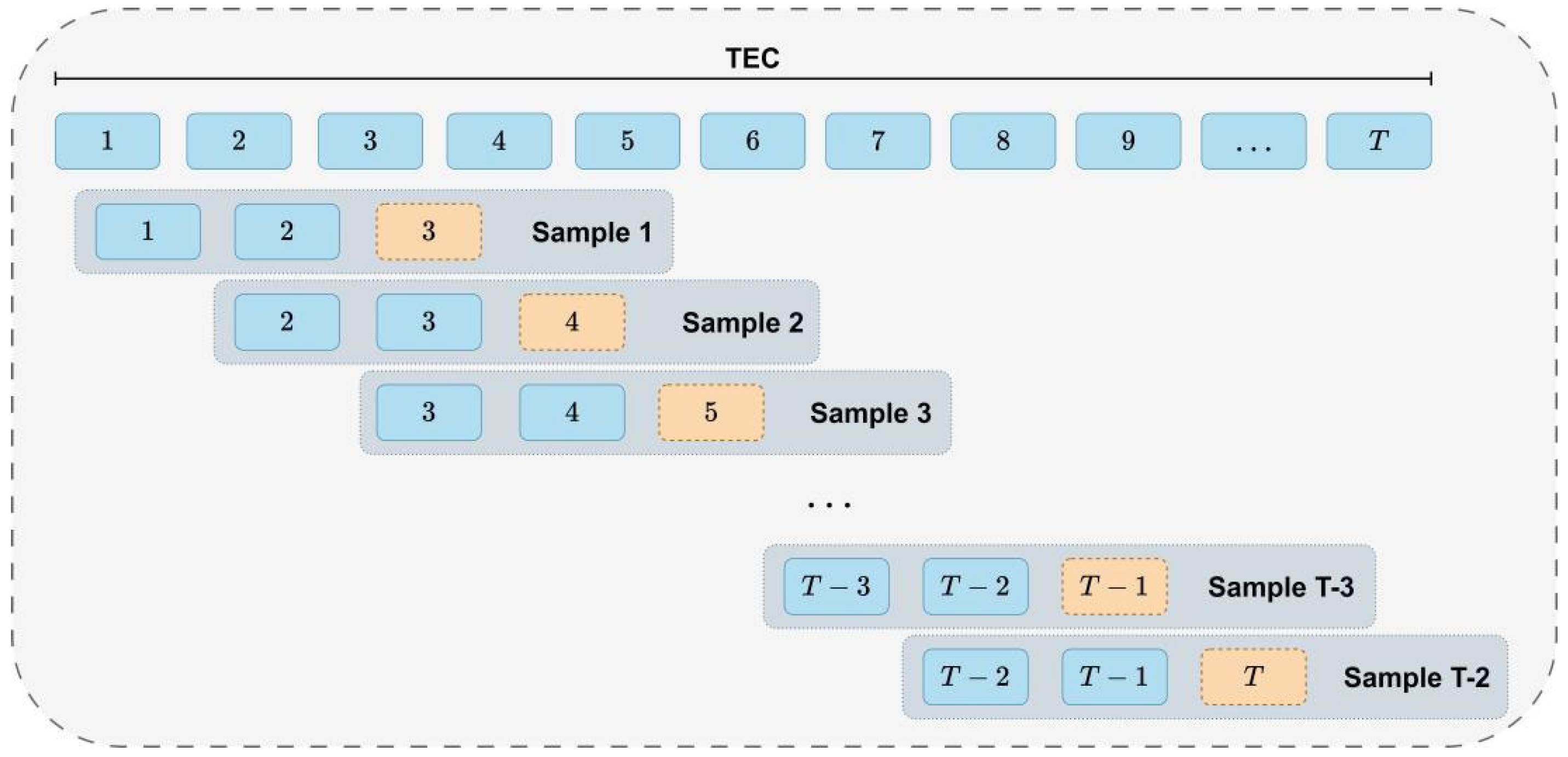

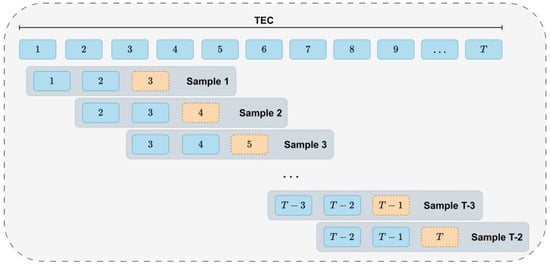

For the convenience of comparison under different solar activity levels, the TEC dataset includes TEC maps from 3 years of high solar activity (2013–2015) and 3 years of low solar activity (2017–2019). Among them, 4 years are used as the training set (2013, 2014, 2017, and 2018), while the remaining 2 years are used as the test set (2015 and 2019). A sliding window method is used to divide samples, with the window sliding one day at a time. Subsequent experiments show that the model performs best when the input length is 2 days and the output length is 1 day. The specific partitioning process is shown in Figure 1, in which each box represents the 12 TEC maps of one day.

Figure 1.

Generation of the TEC dataset using a sliding window.
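The sliding-window partitioning can be sketched as follows. This is a minimal illustration rather than the authors' code: the function name `sliding_window_samples` and its parameters are hypothetical, and it only assumes what the text states (12 maps per day, a 2-day input, a 1-day output, and a window that advances one day at a time).

```python
def sliding_window_samples(num_days, maps_per_day=12, input_days=2, output_days=1):
    """Return (input_indices, target_indices) pairs over a flat TEC map sequence.

    Indices refer to positions in a chronologically ordered list of TEC maps.
    """
    samples = []
    window_days = input_days + output_days
    # The window advances by one day (maps_per_day maps) per sample.
    for start_day in range(num_days - window_days + 1):
        start = start_day * maps_per_day
        split = start + input_days * maps_per_day
        end = split + output_days * maps_per_day
        samples.append((list(range(start, split)), list(range(split, end))))
    return samples


# Five days of data yield three samples: days (1,2)->3, (2,3)->4, (3,4)->5.
samples = sliding_window_samples(num_days=5)
```

Each sample pairs 24 input map indices with the 12 indices of the following day, so consecutive samples overlap by two days.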

To prevent the model from being biased by large values, we normalize the dataset using the Min–Max normalization method, which is calculated as follows:

$$X' = \frac{X - X_{\min}}{X_{\max} - X_{\min}} \tag{1}$$

where $X$ denotes the original TEC map, $X'$ is the normalized TEC map, and $X_{\min}$ and $X_{\max}$ represent the minimum and maximum of $X$ in the training set, respectively.
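A minimal sketch of Min–Max normalization with statistics taken from the training set only is given below. The helper names (`fit_min_max`, `normalize`, `denormalize`) and the toy values are hypothetical; real TEC maps would be arrays rather than flat lists.

```python
def fit_min_max(train_values):
    """Compute the minimum and maximum on the training set only."""
    return min(train_values), max(train_values)


def normalize(values, x_min, x_max):
    """Map values to [0, 1] relative to the training range."""
    span = x_max - x_min
    return [(v - x_min) / span for v in values]


def denormalize(values, x_min, x_max):
    """Invert the normalization to recover values in original units."""
    span = x_max - x_min
    return [v * span + x_min for v in values]


x_min, x_max = fit_min_max([2.0, 10.0, 6.0])          # toy training values
scaled = normalize([2.0, 6.0, 10.0, 12.0], x_min, x_max)
restored = denormalize(scaled, x_min, x_max)
```

Because the minimum and maximum come from the training set, a test value outside the training range (12.0 in the toy data) maps outside [0, 1].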

3. Methodology

The proposed ED-SA-ConvLSTM adopts an encoder–decoder structure to organize SA-ConvLSTM units. Therefore, this section first introduces the SA-ConvLSTM unit, then the proposed ED-SA-ConvLSTM.

3.1. SA-ConvLSTM

Self-Attention Convolutional Long Short-Term Memory (SA-ConvLSTM) can be seen as a variant of ConvLSTM that extends the standard ConvLSTM architecture by integrating a Self-Attention Memory (SAM) module. This subsection presents SAM and SA-ConvLSTM.

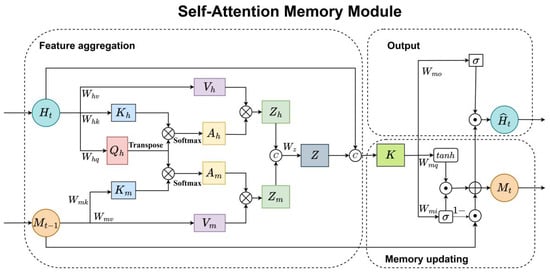

3.1.1. Self-Attention Memory (SAM) Module

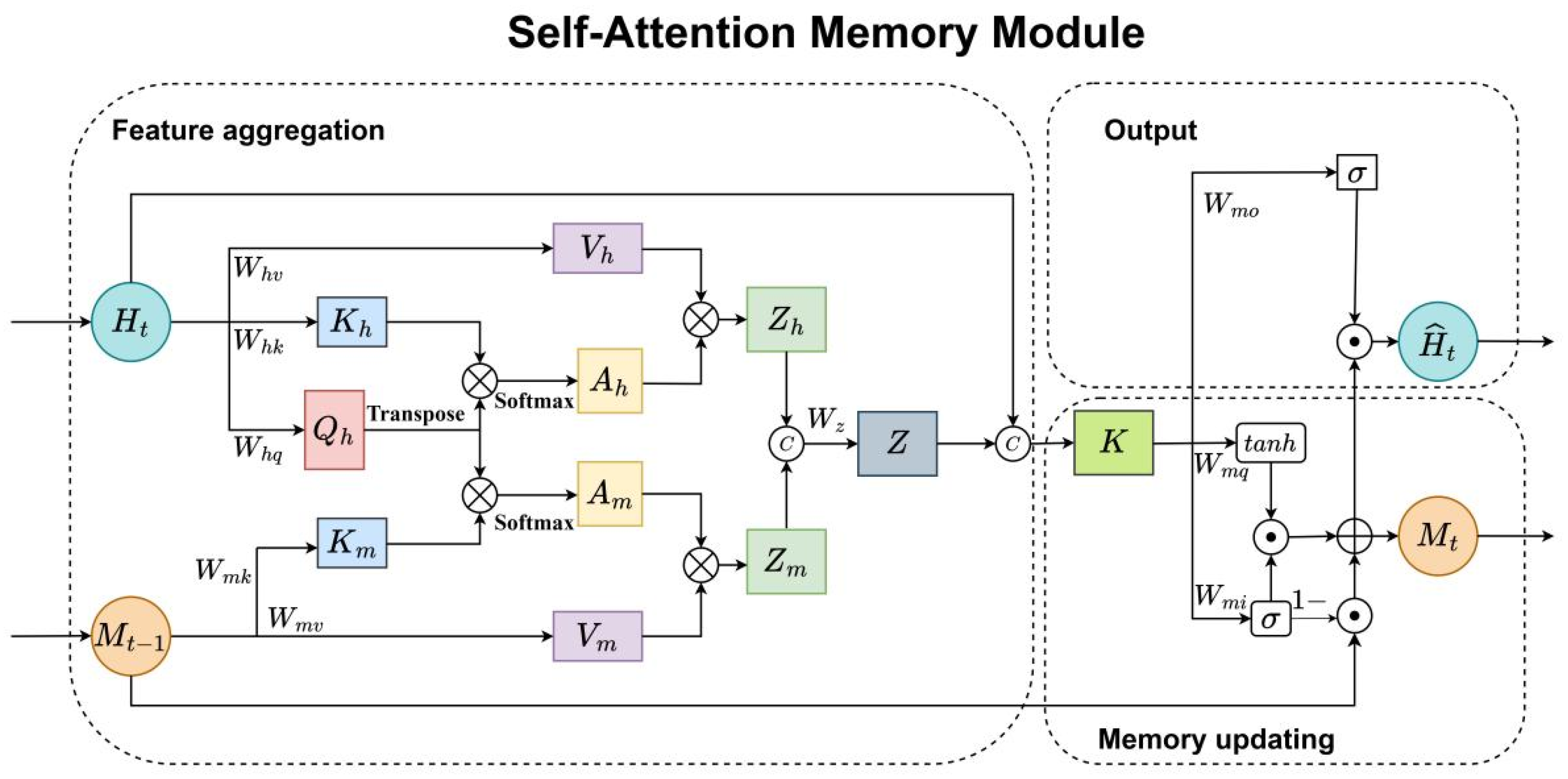

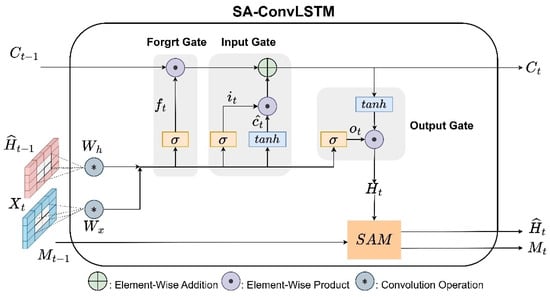

The structure of the SAM is shown in Figure 2, where $M_t$ is the current memory state, which saves long-range spatial dependencies.

Figure 2.

The structure of a SAM module.

SAM takes two inputs, namely the hidden feature $H_t$ at the current time step and the memory $M_{t-1}$ from the previous time step. It consists of three parts: feature aggregation, memory updating, and output generation.

- Feature aggregation: This part first computes the weighted feature $Z_h$ of the current time step, which is obtained by applying self-attention to $H_t$. The long-range spatial memory feature $Z_m$ is then calculated using $M_{t-1}$ and the similarity score $e_m$ between $H_t$ and $M_{t-1}$. Finally, $Z_h$ and $Z_m$ are aggregated into $Z$. The calculations of the weighted features $Z_h$ and $Z_m$ are given in Equations (2) and (3), respectively. The weighted current feature $Z_h$ and the weighted long-range memory state $Z_m$ are concatenated to obtain the aggregated feature $Z$, as given in Equation (4). In Equations (2)–(4), $H_t$ is the hidden layer feature at the current time step, $M_{t-1}$ is the long-range spatial memory from the previous step, $W_q$, $W_{hk}$, $W_{hv}$, $W_{mk}$, and $W_{mv}$ are weight matrices, $Q_h$, $K_h$, $V_h$, $K_m$, and $V_m$ are the intermediate vectors that help to calculate the attention scores of $H_t$ and $M_{t-1}$, and $[\cdot\,;\cdot]$ denotes vector concatenation.

- Memory updating: The function of memory updating is to update the long-range spatial memory adaptively. The aggregated feature $Z$ and the hidden layer feature $H_t$ of the current time step are combined to form the updated memory $M_t$. The calculations are given in Equation (5), where * denotes convolution and ⨀ represents the Hadamard product.

- Output: The output part generates the new hidden feature $\hat{H}_t$ as the element-wise product between the output gate $o'_t$ and the updated memory $M_t$, as given in Equation (6).
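For reference, the three SAM stages described above can be written out as follows. This is a sketch that follows the SAM formulation of Lin et al. [43], not a reproduction of this paper's Equations (2)–(6). Here $H_t$ is the current hidden feature, $M_{t-1}$ is the previous memory, and $\hat{H}_t$ is the SAM output. The index notation, the aggregation weight $W_z$, and the gate weights $W_{m;\cdot}$ and biases $b_{m;\cdot}$ are assumptions.

```latex
\begin{aligned}
Q_h &= W_q H_t,\qquad K_h = W_{hk} H_t,\qquad V_h = W_{hv} H_t,\\
K_m &= W_{mk} M_{t-1},\qquad V_m = W_{mv} M_{t-1},\\
e_h &= Q_h^{\top} K_h,\qquad
\alpha_{h;i,j} = \frac{\exp(e_{h;i,j})}{\sum_{k}\exp(e_{h;i,k})},\qquad
Z_{h;i} = \sum_{j} \alpha_{h;i,j}\, V_{h;j},\\
e_m &= Q_h^{\top} K_m,\qquad
\alpha_{m;i,j} = \frac{\exp(e_{m;i,j})}{\sum_{k}\exp(e_{m;i,k})},\qquad
Z_{m;i} = \sum_{j} \alpha_{m;i,j}\, V_{m;j},\\
Z &= W_z\,[Z_h; Z_m],\\
i'_t &= \sigma\!\left(W_{m;zi} * Z + W_{m;hi} * H_t + b_{m;i}\right),\\
g'_t &= \tanh\!\left(W_{m;zg} * Z + W_{m;hg} * H_t + b_{m;g}\right),\\
M_t &= (1 - i'_t) \odot M_{t-1} + i'_t \odot g'_t,\\
o'_t &= \sigma\!\left(W_{m;zo} * Z + W_{m;ho} * H_t + b_{m;o}\right),\\
\hat{H}_t &= o'_t \odot M_t.
\end{aligned}
```

The same query $Q_h$ attends both to the current feature (keys $K_h$) and to the memory (keys $K_m$), which is how the module relates the current step to long-range spatial memory.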

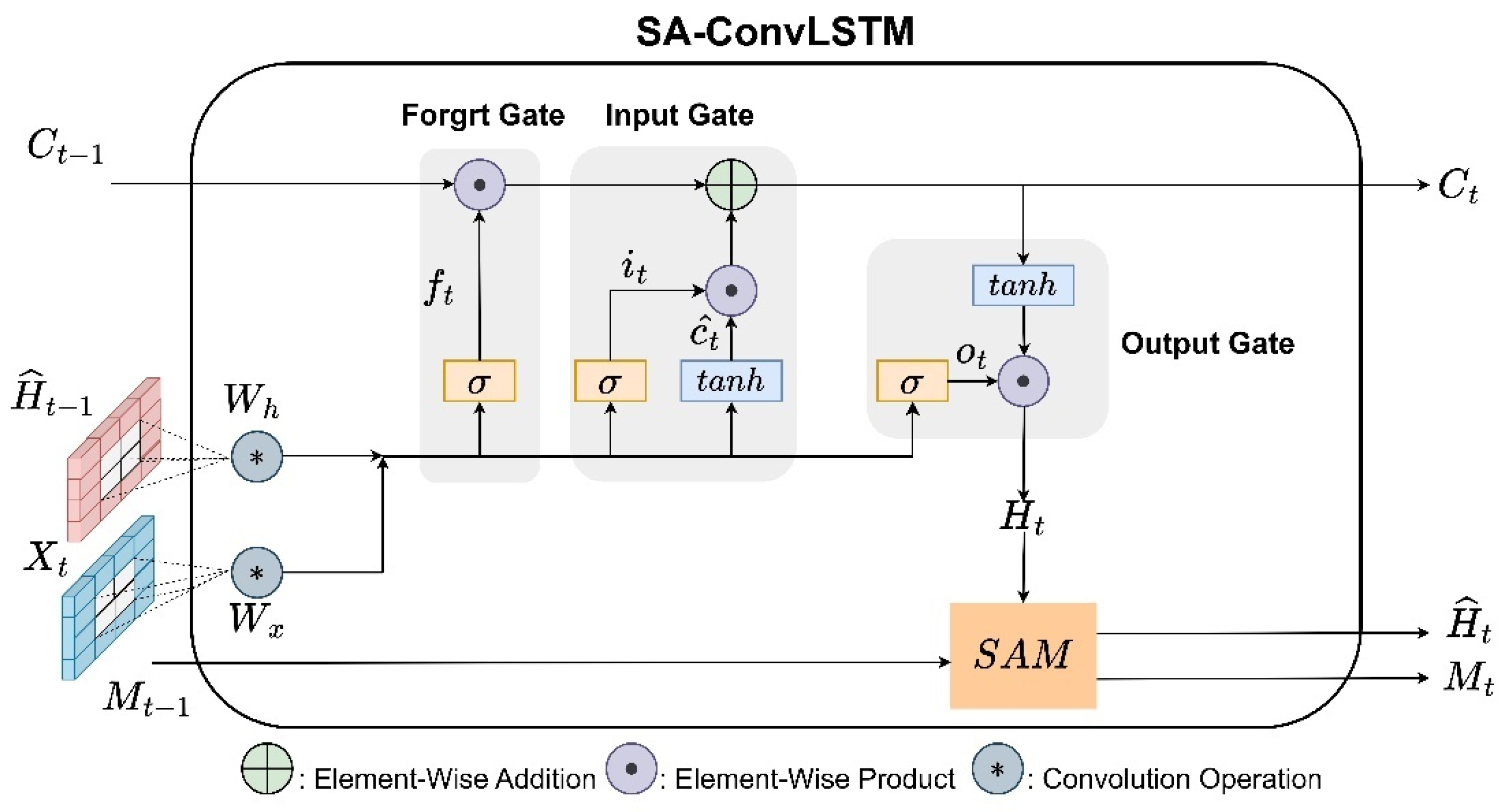

3.1.2. SA-ConvLSTM Unit

The SAM module is embedded in ConvLSTM to form SA-ConvLSTM. The structure of an SA-ConvLSTM unit is shown in Figure 3. It includes three gates (the forget gate $f_t$, the input gate $i_t$, and the output gate $o_t$) and the SAM module. The calculations are shown in Equation (7):

Figure 3.

Structure of an SA-ConvLSTM unit.

Among them, $X_t$ is the current input, $H_t$ is the current feature extracted using ConvLSTM, and $\hat{H}_{t-1}$ is the output from the previous time step.
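One consistent way to write the unit's computation is sketched below. It combines the standard ConvLSTM gate equations with a SAM step as in Lin et al. [43] and is not a reproduction of this paper's Equation (7). Here $X_t$ is the current input, $C_t$ is the cell state, $H_t$ is the ConvLSTM feature, $M_t$ is the SAM memory, and $\hat{H}_t$ is the unit output. The weight and bias names are assumptions.

```latex
\begin{aligned}
i_t &= \sigma\!\left(W_{xi} * X_t + W_{hi} * \hat{H}_{t-1} + b_i\right),\\
f_t &= \sigma\!\left(W_{xf} * X_t + W_{hf} * \hat{H}_{t-1} + b_f\right),\\
g_t &= \tanh\!\left(W_{xc} * X_t + W_{hc} * \hat{H}_{t-1} + b_c\right),\\
C_t &= f_t \odot C_{t-1} + i_t \odot g_t,\\
o_t &= \sigma\!\left(W_{xo} * X_t + W_{ho} * \hat{H}_{t-1} + b_o\right),\\
H_t &= o_t \odot \tanh(C_t),\\
(\hat{H}_t,\, M_t) &= \mathrm{SAM}\!\left(H_t,\, M_{t-1}\right).
\end{aligned}
```

Here $*$ denotes convolution and $\odot$ the Hadamard product; the only change relative to a plain ConvLSTM cell is the final line, where SAM refines $H_t$ using the long-range memory.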

3.2. The Proposed ED-SA-ConvLSTM

The previous subsection introduced the basic structure of the SA-ConvLSTM unit. In this subsection, a novel TEC spatiotemporal prediction model is built using SA-ConvLSTM units as the basic building blocks.

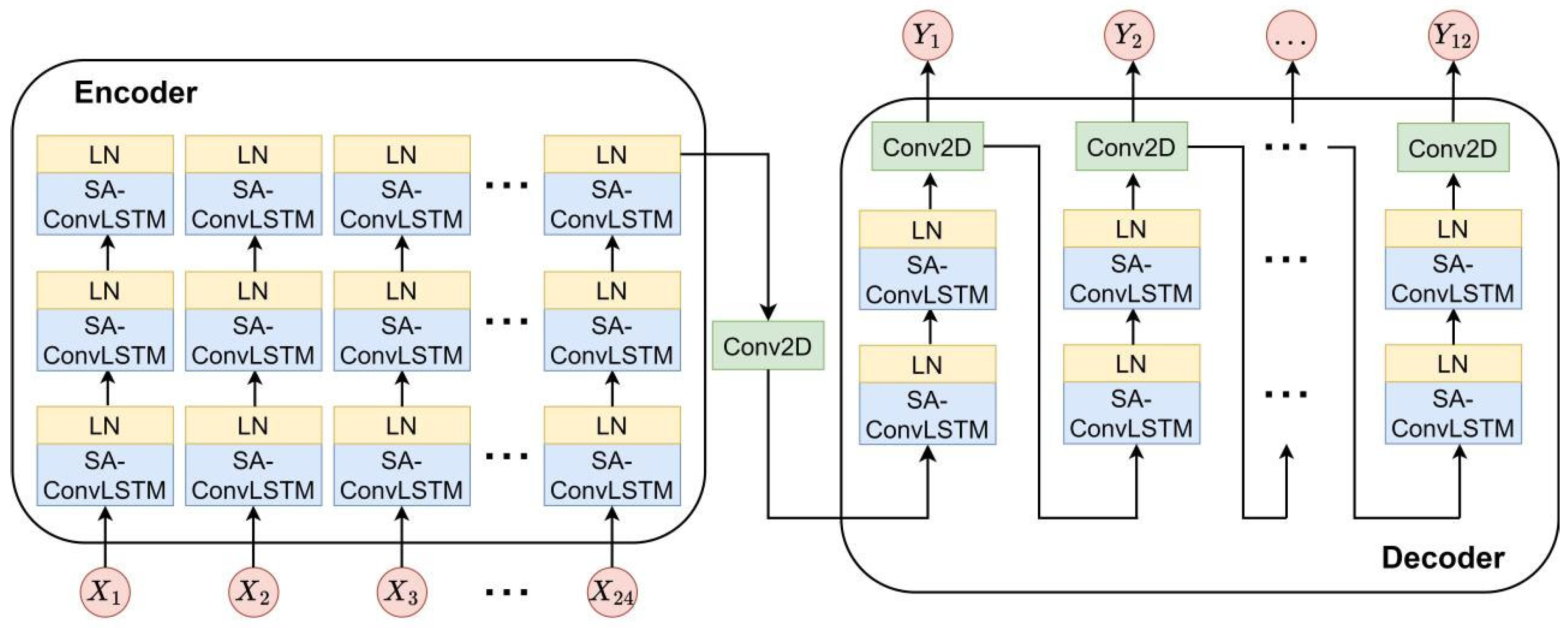

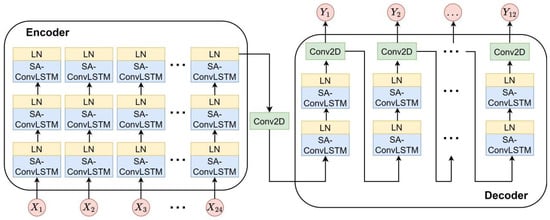

In the original SA-ConvLSTM model, which uses the “n vs. n” structure, data flows through its layers in a “sequence-sequence” manner. This means that any layer in the network receives a sequence of length n and outputs a sequence of the same length. The model with “n vs. n” structure cannot be applied to variable-length prediction. This paper proposes a model, ED-SA-ConvLSTM, by redesigning SA-ConvLSTM using an encoder–decoder structure. The structure of ED-SA-ConvLSTM is shown in Figure 4, which consists of two parts: the encoder part and the decoder part.

Figure 4.

Structure of ED-SA-ConvLSTM.

The encoder part receives TEC map sequences as input, extracts spatiotemporal features from them, and ultimately transforms these features into a final spatiotemporal feature map. Subsequent experiments in this paper indicate that, to predict the TEC maps for the next day, an input length of two days is optimal. The tensor accepted by the encoder therefore has size (24, 40, 32, 1), where “24” is the input length (12 TEC maps per day over 2 days), “40” and “32” are the grid dimensions of each TEC map, and “1” is the single feature channel. In the encoder, three SA-ConvLSTM layers are used to extract features from the input. To prevent the data distribution from shifting at each layer, which can lead to vanishing or exploding gradients, we added a Layer Normalization (LN) layer after each SA-ConvLSTM layer to standardize the data. Finally, a Conv2D layer is used to aggregate the sequence of spatiotemporal feature maps and transform them into a single final spatiotemporal feature map.

The decoder part receives the final spatiotemporal feature map generated by the encoder and decodes it through two pairs of cascaded SA-ConvLSTM and LN layers. The decoder adopts a stepwise recursive prediction method: it first generates the TEC prediction map at the current time step and then uses this prediction map as the input to generate the prediction for the next time step. By repeating this process, the decoder ultimately generates 12 TEC maps. Stepwise recursive prediction is a conventional approach to multi-step prediction with recurrent neural networks [47].

As the above description shows, the flow of data in the proposed ED-SA-ConvLSTM is “sequence-feature-sequence”. Namely, the model receives a TEC map sequence of a given length, converts it into fixed TEC spatiotemporal features using the encoder, decodes these features through the decoder, and finally generates a TEC sequence of variable length. This “sequence-feature-sequence” data flow of the encoder–decoder structure can adapt to variable-length prediction problems, which overcomes the shortcoming of the original SA-ConvLSTM.
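The stepwise recursive prediction used by the decoder can be sketched as a simple loop. This is an illustration of the control flow only: `recursive_decode` and `toy_step` are hypothetical names, and `toy_step` is a trivial stand-in for the decoder's SA-ConvLSTM and LN layers, which are not modelled here.

```python
def recursive_decode(step_fn, feature_map, state, num_steps=12):
    """Generate num_steps outputs, feeding each output back as the next input.

    step_fn(current_input, state) must return (prediction, new_state).
    """
    predictions = []
    current = feature_map  # the first input is the encoder's final feature map
    for _ in range(num_steps):
        current, state = step_fn(current, state)
        predictions.append(current)
    return predictions


def toy_step(x, state):
    """Stand-in for one decoder step: halves the input and counts steps."""
    return x * 0.5, state + 1


preds = recursive_decode(toy_step, feature_map=64.0, state=0, num_steps=3)
```

Because the number of loop iterations is a free parameter, the output length is decoupled from the input length, which is the property the “sequence-feature-sequence” flow relies on.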

3.3. Evaluation Metrics

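To evaluate the performance of the proposed model and the comparative models, this paper uses two evaluation metrics: Root Mean Square Error (RMSE) and Mean Absolute Percentage Error (MAPE), which are defined as follows:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

$$\mathrm{MAPE} = \frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{y_i - \hat{y}_i}{y_i}\right|$$

Among them, $n$ is the number of samples in the test set; $y_i$ and $\hat{y}_i$ denote the true and predicted values, respectively.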
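The two metrics can be computed as in the following sketch, which uses the standard definitions of RMSE and MAPE on flat lists of values. The function names and toy numbers are illustrative, and MAPE assumes that no true value is zero.

```python
import math


def rmse(y_true, y_pred):
    """Root mean square error over paired true and predicted values."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent; true values must be nonzero."""
    n = len(y_true)
    return 100.0 / n * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred))


y_true = [10.0, 20.0]
y_pred = [12.0, 16.0]
rmse_value = rmse(y_true, y_pred)   # sqrt((4 + 16) / 2) = sqrt(10)
mape_value = mape(y_true, y_pred)   # (0.2 + 0.2) / 2 * 100 = 20 percent
```

RMSE is expressed in the units of the data (TECU for TEC maps), whereas MAPE is relative, so the same absolute error weighs more heavily in MAPE when the true TEC is low.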

4. Experimental Results

All deep learning models in this paper are built using PyTorch 1.11.0. For all the deep learning models, the optimizer is Adaptive Moment Estimation (Adam); the loss function is Mean Square Error (MSE); the learning rate is dynamically adjusted using the CosineAnnealingWarmRestarts strategy, with an initial value of 0.001 and adjusted every 50 iterations; the maximum number of iterations is 300.
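To make the learning-rate schedule concrete, the sketch below evaluates the cosine-annealing-with-warm-restarts formula in plain Python. It assumes that “adjusted every 50 iterations” means a fixed restart period of 50 and that the minimum learning rate is 0; neither setting is stated in the text. The function name `cosine_warm_restart_lr` is hypothetical.

```python
import math


def cosine_warm_restart_lr(iteration, base_lr=1e-3, period=50, min_lr=0.0):
    """Learning rate at a given iteration with a fixed restart period.

    Within each period the rate decays from base_lr toward min_lr along a
    half cosine, then jumps back to base_lr at the start of the next period.
    """
    t_cur = iteration % period
    cosine = 1.0 + math.cos(math.pi * t_cur / period)
    return min_lr + 0.5 * (base_lr - min_lr) * cosine


# One value per iteration over the 300 iterations mentioned in the text.
schedule = [cosine_warm_restart_lr(i) for i in range(300)]
```

Under these assumptions the schedule restarts six times within 300 iterations, returning to 0.001 at iterations 0, 50, 100, and so on.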

4.1. Model Optimization

C1PG is a prediction product provided by CODE and needs no optimization, whereas ED-SA-ConvLSTM, ConvLSTM, and PredRNN are deep learning models with many hyperparameters that affect their performance, so these three models need to be optimized. In this paper, we use Bayesian optimization to tune the two most important hyperparameters of these three models: the number and size of the convolution kernels (denoted Filter and Kernel size, respectively). The results of the Bayesian optimization are shown in Table 1. In the subsequent experiments, the hyperparameters of ED-SA-ConvLSTM, ConvLSTM, and PredRNN were set to the values in Table 1.

Table 1.

Best hyperparameters found via Bayesian optimization.

4.2. The Input Length

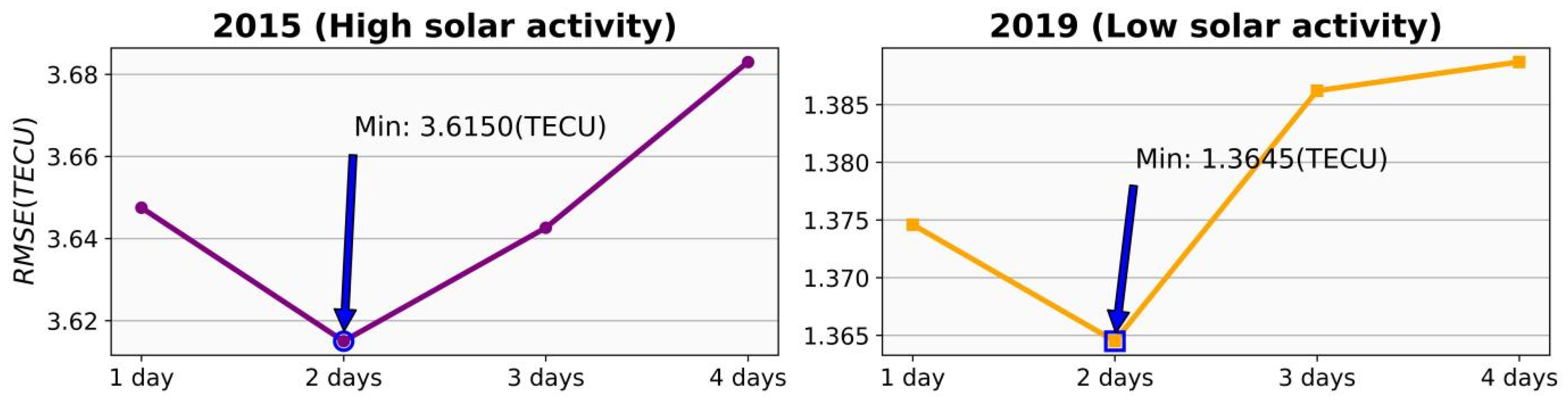

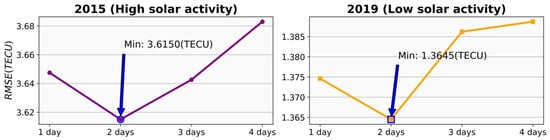

This subsection discusses the input length required to predict the TEC maps for the next day. Figure 5 shows the predictive performance of the model when the input length is 1 to 4 days, where the left panel shows the RMSE for 2015 (a period of high solar activity) and the right panel shows the RMSE for 2019 (a period of low solar activity).

Figure 5.

Influence of input length on the model’s prediction performance.

In both high and low solar activity years, the model performs best when the input length is 2 days. Therefore, in subsequent experiments, the input length of each deep learning model is 2 days (24 TEC maps) and the output length is 1 day (12 TEC maps).

4.3. Ablation Experiment

In this subsection, we first verify the effectiveness of the encoder–decoder structure through an ablation experiment. The original SA-ConvLSTM cannot directly perform variable-length prediction. Therefore, we added a Conv3D layer at the end of the original SA-ConvLSTM to adjust the output length to one day.

The results of the ablation experiment are shown in Table 2, in which the RMSE of ED-SA-ConvLSTM decreased by 2.24% and 2.56% compared to those of SA-ConvLSTM under high solar activity and low solar activity, respectively. In terms of MAPE, ED-SA-ConvLSTM also outperforms SA-ConvLSTM under both solar activity levels. These results support the superiority of the encoder–decoder structure over the “n vs. n” structure.

Table 2.

The results of the ablation experiment.

4.4. Comparison with Other Models

In this part, ED-SA-ConvLSTM was compared with C1PG, ConvLSTM, and PredRNN from multiple perspectives. Each model receives TEC map sequences from two days, processes them through an encoder–decoder structure, and finally generates TEC map sequences for the next day.

4.4.1. Overall Quantitative Comparison

This subsection provides an overall quantitative comparison on the test set. Table 3 shows the overall quantitative comparison results of the ED-SA-ConvLSTM model and the three comparative models during high and low solar activity. Compared to C1PG, ConvLSTM, and PredRNN, the RMSE of the proposed ED-SA-ConvLSTM decreased by 10.27%, 7.16%, and 4.53% in 2015 (high solar activity), and by 11.46%, 4.06%, and 2.75% in 2019 (low solar activity), respectively. In addition, the MAPE of ED-SA-ConvLSTM is significantly lower than those of the comparative models under both solar activity levels, demonstrating the effectiveness of the proposed ED-SA-ConvLSTM.

Table 3.

Overall comparison on test set (The best is bolded).

Furthermore, for a more detailed quantitative comparison, we first calculated the absolute error of each model at every grid point on each map in the test set. Then, for each solar activity level, the range of absolute errors was subdivided into several small intervals. Finally, the percentage of each model’s errors falling within each interval was counted, and the results are shown in Table 4 and Table 5. The percentages of ED-SA-ConvLSTM are higher than those of the comparative models in all absolute error intervals. This further confirms the superiority of the proposed ED-SA-ConvLSTM.

Table 4.

Absolute error distribution statistics for 2015 (The best is bolded).

Table 5.

Absolute error distribution statistics for 2019 (The best is bolded).
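The absolute error statistics described above can be sketched as a simple binning procedure. The interval edges, the toy values, and the function name `error_distribution` are hypothetical; the actual intervals are those of Tables 4 and 5, and the sketch assumes disjoint intervals with an open-ended last bin.

```python
def error_distribution(abs_errors, edges):
    """Percentage of errors in [edges[k], edges[k+1]); the last bin is open-ended."""
    counts = [0] * len(edges)
    for err in abs_errors:
        # Scan from the highest edge down to find the bin containing err.
        for k in range(len(edges) - 1, -1, -1):
            if err >= edges[k]:
                counts[k] += 1
                break
    total = len(abs_errors)
    return [100.0 * c / total for c in counts]


truth = [10.0, 12.0, 8.0, 20.0]   # toy true TEC values
pred = [10.5, 14.5, 8.2, 14.0]    # toy predictions
abs_errors = [abs(t - p) for t, p in zip(truth, pred)]
percentages = error_distribution(abs_errors, edges=[0.0, 1.0, 2.0, 5.0])
```

In practice the errors would be collected over every grid point of every test map for one year before binning.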

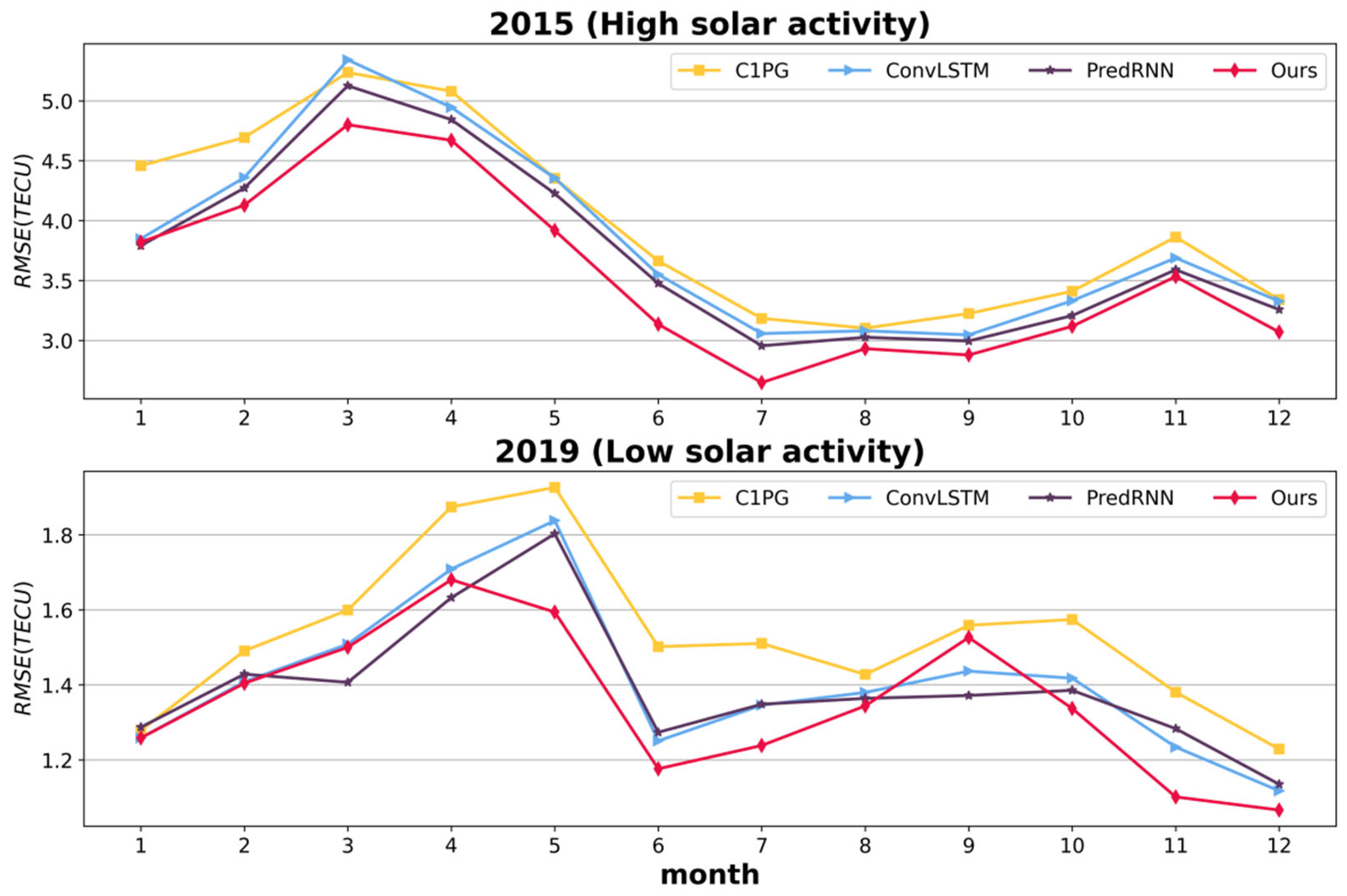

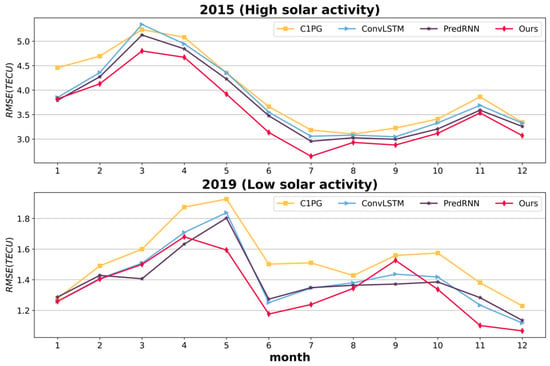

4.4.2. Comparison in Different Months

The previous subsection provided a quantitative comparison from an overall perspective. In contrast, this subsection divides the evaluation period by month. Detailed results are presented in Figure 6, where the top and bottom panels show the monthly comparisons for 2015 and 2019, respectively.

Figure 6.

Monthly comparison among all models.

In 2015, the RMSE of ED-SA-ConvLSTM is the lowest in all months except January. Its advantage is particularly clear in 7 months (February through July, and December). In 2019, ED-SA-ConvLSTM had the smallest RMSE in 9 months, with particularly notable advantages in May, June, July, November, and December. In March and April, it is inferior to PredRNN, and in September, it is inferior to ConvLSTM and PredRNN. In addition, PredRNN is the second best in the vast majority of cases because of its dual temporal and spatial memory, which can retain more of the historical spatiotemporal changes. ED-SA-ConvLSTM is also a dual memory model. In addition to dual memory, it uses self-attention to adaptively weight historical memory and focus on spatial dependencies over long and short distances, which allows it to surpass PredRNN in the vast majority of cases.

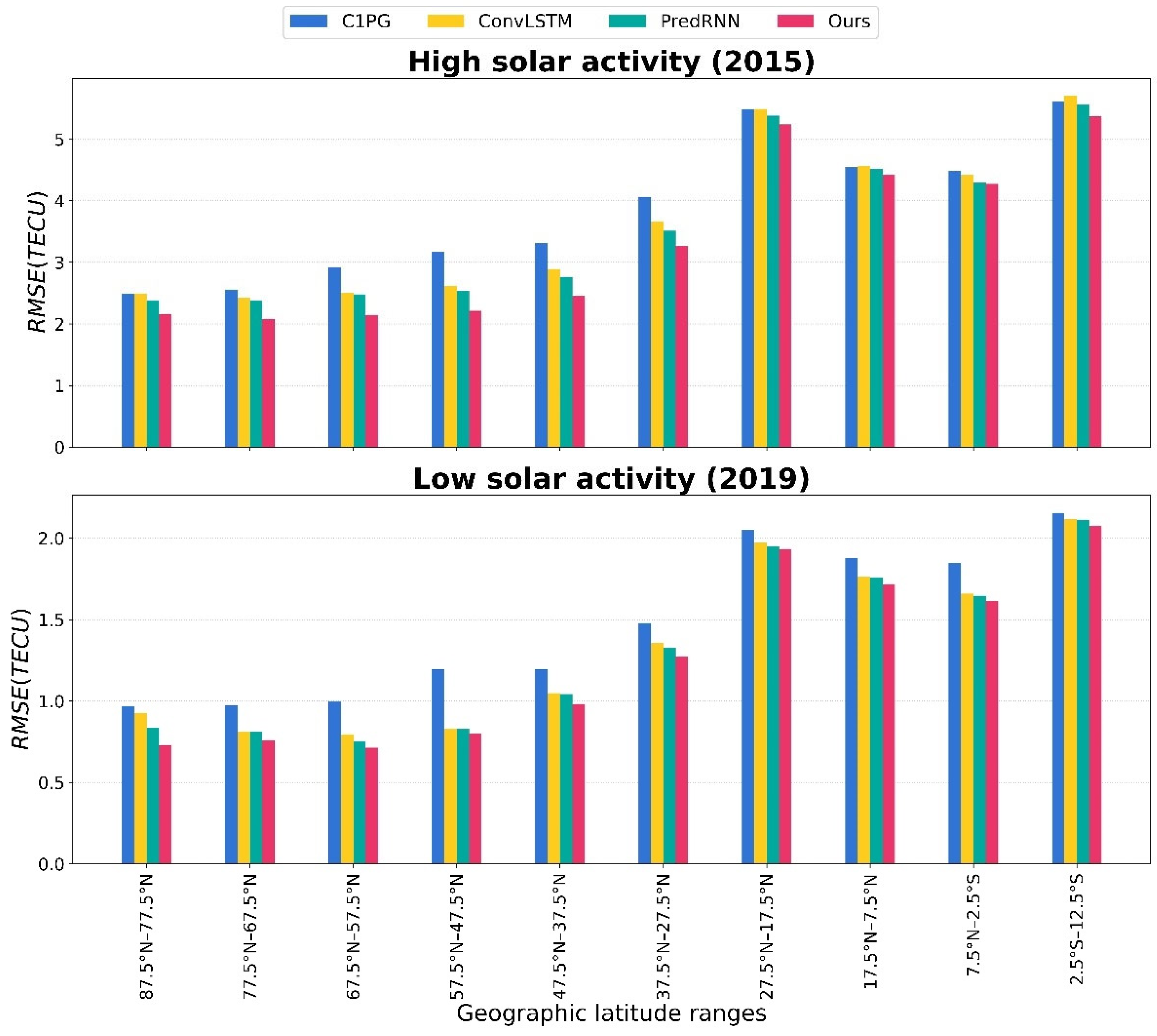

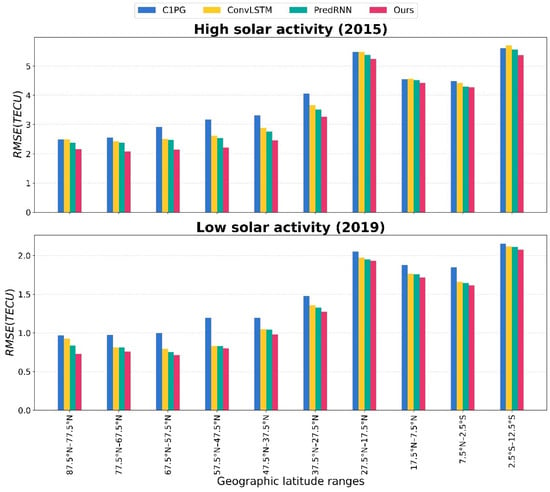

4.4.3. Comparison at Different Latitude Regions

The previous subsection focused on a temporal comparison, while this subsection will shift to a spatial comparison. To facilitate the spatial analysis, the research area is divided into 10 subregions, each covering a span of 10 degrees of latitude. Figure 7 illustrates the comparisons within each subregion, with the upper depicting the comparison for 2015 and the lower for 2019.

Figure 7.

Comparison of each model in each subregion.

Figure 7 shows that, under both solar activity levels, the RMSE of each model is highest near the equator and lower away from it. This is consistent with the Equatorial Ionization Anomaly (EIA) phenomenon. Additionally, in all cases, the RMSE of the proposed ED-SA-ConvLSTM is lower than that of the comparative models, indicating its superiority over the comparative models.
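The per-subregion evaluation can be sketched as an RMSE computed over consecutive blocks of latitude rows. This is a minimal illustration with a hypothetical function name, `band_rmse`, and toy data. With the 2.5° latitude resolution stated earlier, a 10° band corresponds to 4 rows; how the 40 grid rows are aligned to the ten bands is an assumption.

```python
import math


def band_rmse(truth, pred, rows_per_band):
    """RMSE per latitude band; truth and pred are 2D lists indexed [row][col]."""
    results = []
    for top in range(0, len(truth), rows_per_band):
        sq_sum, count = 0.0, 0
        for r in range(top, min(top + rows_per_band, len(truth))):
            for t, p in zip(truth[r], pred[r]):
                sq_sum += (t - p) ** 2
                count += 1
        results.append(math.sqrt(sq_sum / count))
    return results


# Toy 4x2 grid split into two bands of two rows each.
truth = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
pred = [[1.0, 2.0], [3.0, 4.0], [6.0, 7.0], [8.0, 9.0]]
per_band = band_rmse(truth, pred, rows_per_band=2)
```

For a full evaluation the squared errors would also be accumulated over all test maps before taking the square root, rather than over a single map as here.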

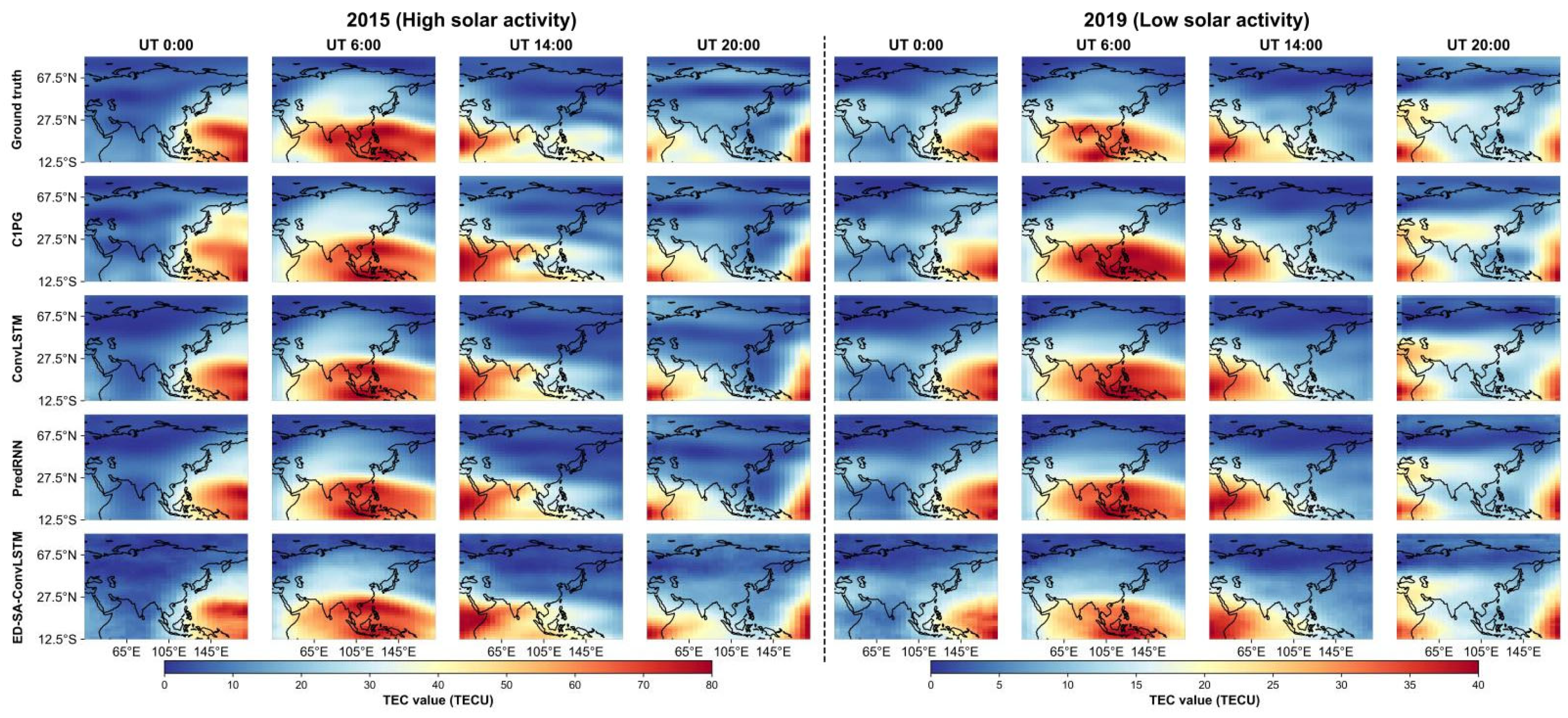

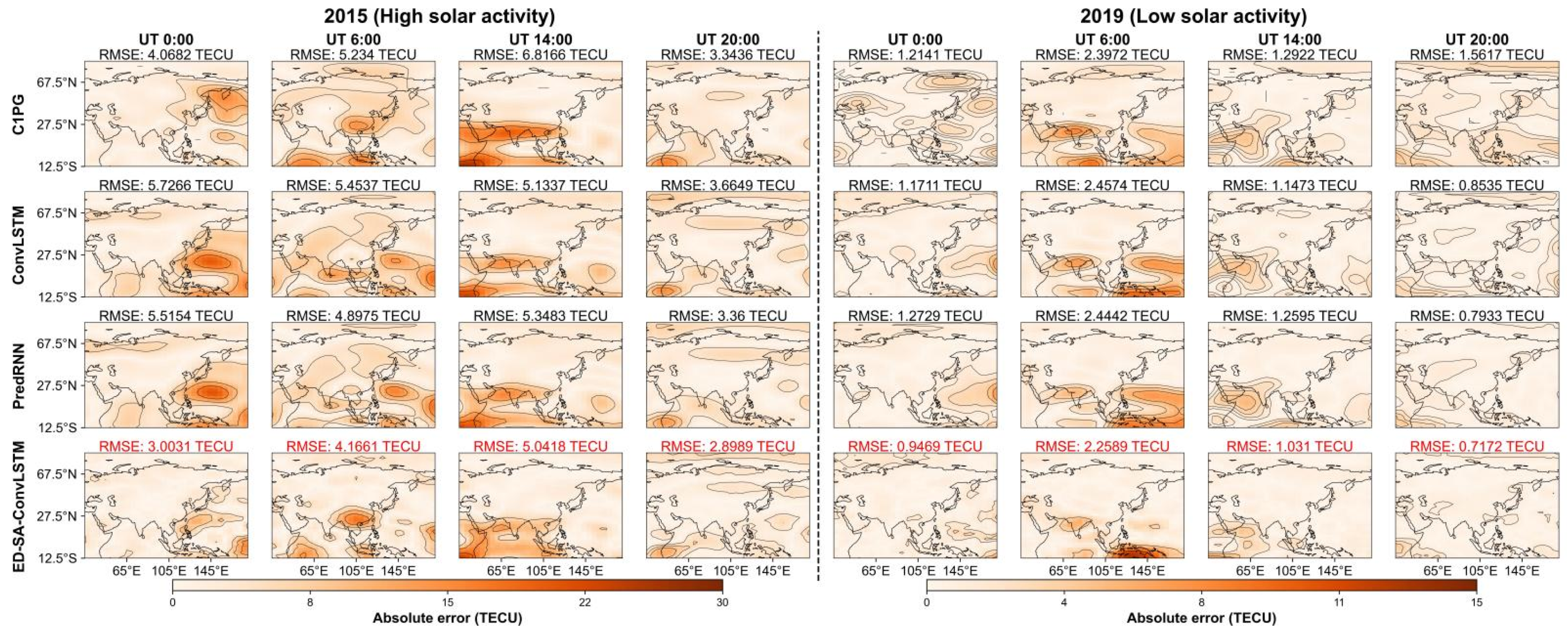

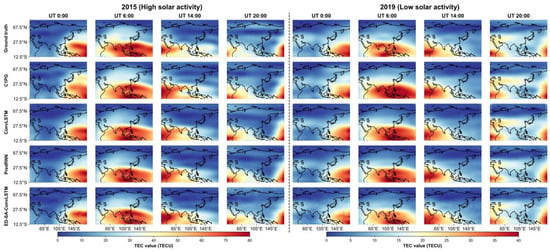

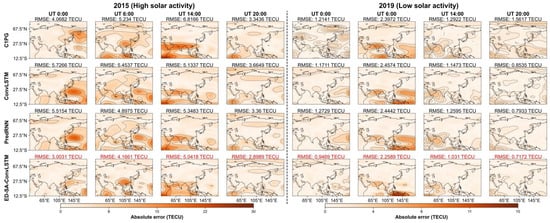

4.4.4. Visual Effects of Various Models

This subsection provides a visual comparison of the predictions of ED-SA-ConvLSTM and the three comparative models. We chose one day representing high solar activity (DOY 5, 2015) and another representing low solar activity (DOY 47, 2019) to showcase the performance of each model. The predicted maps of each model on the selected days are presented in Figure 8. The corresponding absolute error maps are shown in Figure 9, with the error metric of each model annotated at the top of each map and the best value highlighted in green. These figures show that ED-SA-ConvLSTM exhibits the smallest area of absolute error anomalies, highlighting its superior predictive performance compared to the other models.

Figure 8.

TEC prediction maps in 2015 and in 2019 for each model.

Figure 9.

Absolute error maps for 2015 and for 2019 for each model.

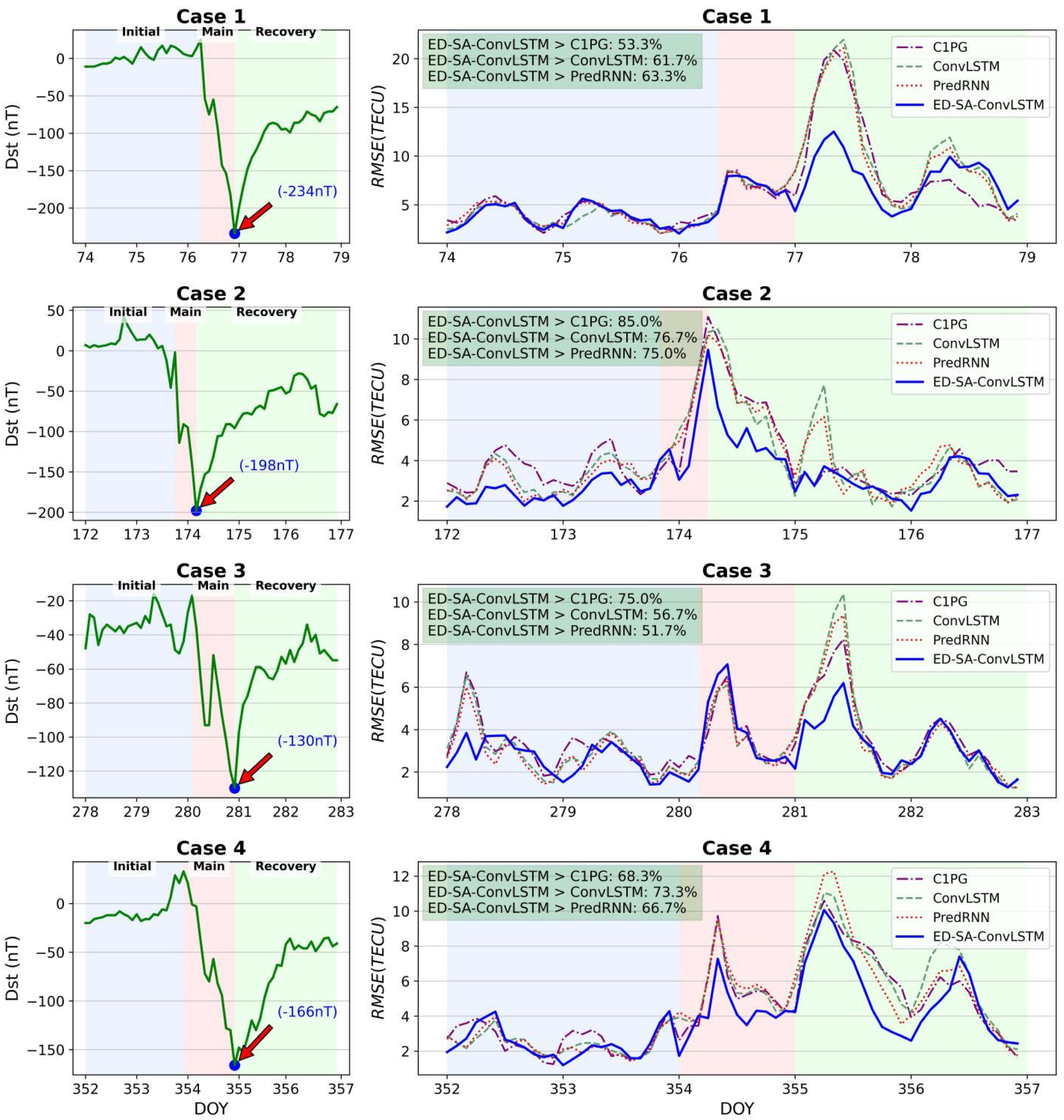

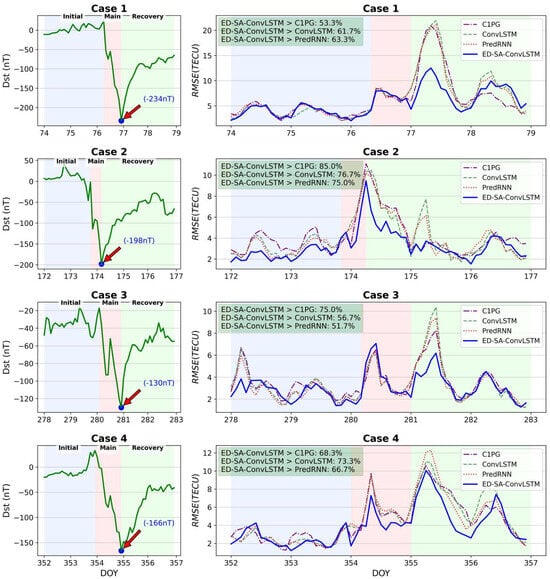

4.5. Comparison Under Extreme Situations

This subsection assesses the effectiveness of the proposed ED-SA-ConvLSTM under extreme conditions. To achieve this, four geomagnetic storm events were selected for analysis. The details of the four selected geomagnetic storm events are presented in Table 6.

Table 6.

Four geomagnetic storm events and their corresponding minimum Dst.

Figure 10 shows a comparison of the various models across the four geomagnetic storm events. In most cases, the RMSE of the proposed ED-SA-ConvLSTM is lower than those of the comparative models. However, in some situations it does not perform as well as the comparative models, such as on DOY 280 in Case 3.

Figure 10.

Comparison on four geomagnetic storm events.

In summary, the experiments in this subsection show that ED-SA-ConvLSTM retains good predictive ability under extreme conditions, outperforming the comparative models in most cases.

4.6. Comparison Under Other Test Sets

In this subsection, the performance of the proposed ED-SA-ConvLSTM was validated for the years 2011 (high solar activity) and 2016 (low solar activity). The entire region is divided into three parts, namely the low-latitude region (30° N–12.5° S), the mid-latitude region (60° N–30° N), and the high-latitude region (87.5° N–60° N). RMSE and MAPE for each model were calculated separately in these three regions. The results are shown in Table 7. In 2011, the proposed ED-SA-ConvLSTM outperformed the comparative methods in both RMSE and MAPE in the low-, mid-, and high-latitude regions. In 2016, although PredRNN performed better in the low-latitude region, the proposed ED-SA-ConvLSTM still maintained the lowest errors in the mid- and high-latitude regions. Overall, the proposed ED-SA-ConvLSTM performed better than the comparative models in these additional years.

Table 7.

Each model error in different latitude regions (The best results are bolded).

4.7. Comparison of Computational Time and Memory Usage

In this subsection, we quantitatively evaluated the computational time and memory usage of each model, as presented in Table 8. C1PG is an official prediction product provided by CODE, so its cost does not need to be calculated. The experimental results show that the proposed ED-SA-ConvLSTM, which incorporates the self-attention memory module, requires more computational time and memory than ConvLSTM and PredRNN. However, the prediction accuracy results in Sections 4.3, 4.4, and 4.5 show that ED-SA-ConvLSTM achieves better predictive performance than ConvLSTM and PredRNN. This indicates that the additional computational cost is repaid by an improvement in prediction accuracy, giving a reasonable balance between accuracy and efficiency.

Table 8.

Computational time and memory usage for each model.

5. Discussion

The above experiments show that the proposed ED-SA-ConvLSTM is generally superior to the three comparison models (C1PG, ConvLSTM, and PredRNN). In 2015, the proposed ED-SA-ConvLSTM reduced the RMSE by 10.27%, 7.16%, and 4.53% relative to these three models, respectively, while in 2019 the reductions were 11.46%, 4.06%, and 2.75%. In addition, the proposed ED-SA-ConvLSTM exhibits good spatiotemporal stability: it achieves the best performance in 11 months of 2015 and 9 months of 2019, and it outperforms the comparison models across all 10 latitude subregions. We also verified the proposed ED-SA-ConvLSTM under extreme conditions. In four geomagnetic storm events, it outperformed the comparison models in most cases. Moreover, we tested two other years, namely 2011 (high solar activity) and 2016 (low solar activity). The results indicate that in 2011 the model outperformed the comparison models in all latitude regions, whereas in 2016 it was slightly inferior to PredRNN in the low-latitude region. This may be related to the relative amplification of prediction error when TEC values are generally low during periods of low solar activity. Although the model has higher computational time and memory usage than ConvLSTM and PredRNN, the error analysis shows that this additional investment of computational resources yields an improvement in prediction accuracy, giving a reasonable balance between accuracy and efficiency.

6. Conclusions

This paper proposes ED-SA-ConvLSTM, a novel encoder–decoder structure with SA-ConvLSTM units for TEC spatiotemporal prediction. It integrates dual temporal and spatial memory states with a self-attention structure to capture short-range and long-range memory simultaneously. This distinguishes it from ConvLSTM and PredRNN and enables it to model complex, dynamic, long-range spatial dependencies in TEC. Additionally, the encoder–decoder structure used in the proposed model enables it to be applied to spatiotemporal prediction problems with unequal input and output lengths.

In the future, we will focus on optimizing the computational efficiency of the model to reduce processing time and memory usage while maintaining current prediction accuracy. Furthermore, we will explore transferring this model framework to other spatiotemporal prediction domains, including but not limited to solar flare prediction, ionospheric effect simulation during solar eclipses, earthquake precursor monitoring, ionospheric disturbance prediction, and traffic flow prediction across interdisciplinary applications.

Author Contributions

Y.L. and H.D. contributed to conceptualization and manuscript revision. J.X. and H.L. wrote the original draft and developed the software. B.L. and J.H. contributed to methodology and validation. T.H. was responsible for data curation. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Project of the Natural Science Foundation of Hunan Province, China (No. 2023JJ50066, 2024JJ5364, 2023JJ50390) and the Hunan Provincial Education Office (No. 23A0593).

Data Availability Statement

The final product from the International GNSS Service (IGS) and the CODE 1-day prediction product (C1PG) were obtained from NASA’s CDDIS website (https://cddis.nasa.gov/archive/gnss/products/ionex/) (accessed on 16 June 2015). The code for this paper can be downloaded from https://github.com/Amos0311/ED-SA-ConvLSTM (accessed on 11 June 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).