Abstract

Variational inequality problems (VIPs) provide a versatile framework for modeling a wide range of real-world applications, including those in economics, engineering, transportation, and image processing. In this paper, we propose a novel iterative algorithm for solving VIPs in real Hilbert spaces. The method integrates a double-inertial mechanism with the two-subgradient extragradient scheme to improve convergence speed and computational efficiency. A distinguishing feature of the algorithm is its self-adaptive step size strategy, which generates a non-monotonic sequence of step sizes without requiring prior knowledge of the Lipschitz constant. Under the assumption that the underlying operator is monotone, we establish strong convergence results. Numerical experiments under various initial conditions demonstrate the method’s effectiveness and robustness, confirming its practical advantages as a natural extension of existing techniques for solving VIPs.

Keywords:

variational inequalities; two-subgradient extragradient method; monotone operators; inertial techniques; strong convergence; self-adaptive step sizes

MSC:

65J15; 47H05; 47J25; 47J20; 91B50

1. Introduction

This paper investigates the variational inequality problem (VIP) in real Hilbert spaces, a fundamental mathematical framework with wide-ranging applications in operations research, split feasibility problems, equilibrium analysis, optimization theory, and fixed-point problems.

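Let $C$ be a nonempty, closed, and convex subset of a real Hilbert space $\mathcal{H}$, endowed with the inner product $\langle \cdot, \cdot \rangle$ and the induced norm $\|\cdot\|$. Given an operator $A : \mathcal{H} \to \mathcal{H}$, the VIP seeks to find a point $u^* \in C$ such that

$$\langle A u^*, v - u^* \rangle \geq 0 \quad \text{for all } v \in C. \tag{1}$$

We denote the solution set of (1) by $VI(C, A)$.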

Variational inequality problems have gained increasing attention in recent mathematical research due to their capacity to model a broad spectrum of phenomena across multiple disciplines. Applications span structural mechanics, economic theory, and optimization [1,2,3], as well as operations research, engineering systems, and the physical sciences [4,5,6,7]. The VIP framework provides a powerful tool for analyzing equilibrium in complex systems, including transportation networks, pricing mechanisms, financial markets, supply chains, ecological networks, and communication infrastructures [8,9,10].

The foundations of finite-dimensional VIP theory were established independently by Smith [11] and Dafermos [12], who applied the framework to traffic flow models. Subsequent developments by Lawphongpanich and Hearn [13] and Panicucci et al. [14] further extended these models to traffic assignment problems under Wardrop’s equilibrium principles. More recent studies have extended the applications of VIPs to economic models involving Nash equilibria, pricing mechanisms, internet-based resource allocation, financial networks, and environmental policy design [15,16,17,18,19,20,21].

Recent advances in iterative algorithms for VIPs have focused on identifying algorithmic properties that ensure convergence under weaker assumptions [22]. Among the various methods developed, two primary classes are widely studied: regularization techniques and projection-based methods. This paper emphasizes projection methods, with a particular focus on their convergence behavior and computational efficiency.

The projected gradient method is one of the most fundamental projection-based approaches for solving VIPs. Its iterative scheme is given by

$$u_{n+1} = P_C(u_n - \lambda A u_n), \tag{2}$$

where $P_C$ denotes the metric projection onto $C$. While this method benefits from simplicity, requiring only one projection per iteration, its convergence relies on strong assumptions: the operator $A$ must be both $L$-Lipschitz continuous and $\gamma$-strongly monotone, with the step size constrained by $\lambda \in (0, 2\gamma/L^2)$. These restrictive conditions often limit the method’s applicability in practice.
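As a minimal sketch of iteration (2), the Python code below runs the projected gradient method on the box $C = [-1, 1]^2$, where $P_C$ reduces to componentwise clipping. The affine operator $A(u) = Mu + q$ and all numerical values are hypothetical test data, chosen so that $A$ is $2$-strongly monotone and $\sqrt{5}$-Lipschitz continuous; any $\lambda \in (0, 0.8)$ is therefore admissible.

```python
def project_box(u, lo, hi):
    """Metric projection onto the box [lo, hi]^m: clip each coordinate."""
    return [min(max(x, lo), hi) for x in u]


def projected_gradient(A, u0, lam, lo, hi, tol=1e-10, max_iter=10_000):
    """Iterate u_{n+1} = P_C(u_n - lam * A(u_n)) until successive iterates are tol-close."""
    u = list(u0)
    for n in range(1, max_iter + 1):
        Au = A(u)
        u_next = project_box([x - lam * a for x, a in zip(u, Au)], lo, hi)
        if max(abs(x - y) for x, y in zip(u_next, u)) < tol:
            return u_next, n
        u = u_next
    return u, max_iter


# Hypothetical test operator A(u) = M u + q. The symmetric part of M is 2I (gamma = 2)
# and M^T M = 5I (L = sqrt(5)), so lam must lie in (0, 2*gamma/L^2) = (0, 0.8).
# For these data the unique solution on [-1, 1]^2 is u* = (1, -0.5).
M = [[2.0, 1.0], [-1.0, 2.0]]
q = [-4.0, 2.0]


def A(u):
    return [sum(M[i][j] * u[j] for j in range(2)) + q[i] for i in range(2)]


sol, iters = projected_gradient(A, [5.0, 5.0], lam=0.3, lo=-1.0, hi=1.0)
print(sol, iters)
```

With $\lambda = 0.3$ the map $u \mapsto u - \lambda A u$ is a contraction, which is exactly what strong monotonicity buys; without it, the same iteration may fail to converge.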

The strong monotonicity condition is particularly restrictive for many real-world problems. Korpelevich’s extragradient method (EM) [23] addresses this limitation by relaxing the assumptions while still ensuring convergence. The EM iteration scheme is given by

$$\begin{cases} v_n = P_C(u_n - \lambda A u_n), \\ u_{n+1} = P_C(u_n - \lambda A v_n), \end{cases} \tag{3}$$

which requires only that $A$ is monotone and $L$-Lipschitz continuous, with step size $\lambda \in (0, 1/L)$. Korpelevich established the weak convergence of the sequence $\{u_n\}$ to a solution of the VIP under these milder conditions.
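To illustrate why the extragradient correction matters, the following sketch uses a toy problem that is our own assumption, not one from the paper: the skew operator $A(u) = (u_2, -u_1)$ on $C = [-1, 1]^2$. This operator is monotone and $1$-Lipschitz but not strongly monotone, and the unique solution is $u^* = 0$. The projected gradient iteration (2) never approaches $u^*$, whereas EM (3) with $\lambda = 0.5 < 1/L$ converges.

```python
import math


def project_box(u, lo=-1.0, hi=1.0):
    """Metric projection onto [lo, hi]^2 by componentwise clipping."""
    return [min(max(x, lo), hi) for x in u]


def A(u):
    """Skew (rotation) operator: monotone, 1-Lipschitz, not strongly monotone."""
    return [u[1], -u[0]]


def step(u, d, lam):
    """Return P_C(u - lam * d)."""
    return project_box([x - lam * a for x, a in zip(u, d)])


def extragradient(u0, lam, tol=1e-10, max_iter=10_000):
    u = list(u0)
    for n in range(1, max_iter + 1):
        v = step(u, A(u), lam)       # predictor: v_n = P_C(u_n - lam A u_n)
        u_next = step(u, A(v), lam)  # corrector: u_{n+1} = P_C(u_n - lam A v_n)
        if max(abs(x - y) for x, y in zip(u_next, u)) < tol:
            return u_next, n
        u = u_next
    return u, max_iter


u_em, iters_em = extragradient([1.0, 1.0], lam=0.5)

# Projected gradient (2) on the same problem: every unclipped step multiplies the norm
# by sqrt(1 + lam^2) > 1, and a clipped step lands on the box boundary, so ||w|| >= 1 always.
w = [1.0, 1.0]
for _ in range(1000):
    w = step(w, A(w), 0.5)
pg_norm = math.sqrt(sum(x * x for x in w))
```

The only difference between the two loops is that EM re-evaluates $A$ at the predicted point $v_n$; for this operator that extra evaluation turns an expanding rotation into a contraction.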

In the past two decades, significant research has been devoted to improving and generalizing the extragradient method, resulting in numerous extensions and refinements [24,25,26,27,28,29,30,31,32].

The standard EM (3) requires two evaluations of the operator $A$ and two projections onto the feasible set $C$ per iteration. However, when $C$ is a general closed and convex set, these projections can become computationally expensive. To mitigate this issue, Censor et al. [33] proposed the subgradient extragradient method (SEM), which replaces the second projection onto $C$ with a computationally simpler projection onto a half-space. The SEM iteration scheme is defined as follows:

$$\begin{cases} v_n = P_C(u_n - \lambda A u_n), \\ T_n = \{ w \in \mathcal{H} : \langle u_n - \lambda A u_n - v_n, w - v_n \rangle \leq 0 \}, \\ u_{n+1} = P_{T_n}(u_n - \lambda A v_n). \end{cases}$$
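One SEM step can be sketched as follows: only the first projection is onto $C$, while the second is onto the half-space $T_n$, which has a closed-form projection. This is a minimal sketch on hypothetical data: $C$ is the closed unit disk in $\mathbb{R}^2$ and $A$ is the skew operator $A(u) = (u_2, -u_1)$, whose unique solution on $C$ is $u^* = 0$.

```python
import math


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def project_ball(u, radius=1.0):
    """Metric projection onto the closed ball of the given radius centered at 0."""
    nrm = math.sqrt(dot(u, u))
    return list(u) if nrm <= radius else [radius * x / nrm for x in u]


def project_halfspace(x, a, v):
    """Project x onto T = {w : <a, w - v> <= 0}; T is the whole space when a = 0."""
    aa = dot(a, a)
    viol = dot(a, [xi - vi for xi, vi in zip(x, v)])
    if aa == 0.0 or viol <= 0.0:
        return list(x)
    return [xi - (viol / aa) * ai for xi, ai in zip(x, a)]


def A(u):
    """Skew operator (u2, -u1): monotone and 1-Lipschitz."""
    return [u[1], -u[0]]


def sem(u0, lam, tol=1e-10, max_iter=10_000):
    u = list(u0)
    for n in range(1, max_iter + 1):
        y = [x - lam * a for x, a in zip(u, A(u))]   # u_n - lam A u_n
        v = project_ball(y)                          # v_n = P_C(y)
        normal = [yi - vi for yi, vi in zip(y, v)]   # normal vector of T_n
        z = [x - lam * a for x, a in zip(u, A(v))]   # u_n - lam A v_n
        u_next = project_halfspace(z, normal, v)     # u_{n+1} = P_{T_n}(z)
        if max(abs(p - q) for p, q in zip(u_next, u)) < tol:
            return u_next, n
        u = u_next
    return u, max_iter


u_sem, iters_sem = sem([0.6, 0.8], lam=0.5)
```

When $u_n - \lambda A u_n$ already lies in $C$, the normal vector vanishes and $T_n$ is the whole space, so the step costs no projection at all.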

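Building on this advancement, Censor et al. [34] introduced the two-subgradient extragradient method (TSEM), which further enhances computational efficiency by utilizing two half-space projections instead of projections onto $C$. For a feasible set of the form $C = \{u \in \mathcal{H} : g(u) \leq 0\}$ with $g$ convex, the TSEM algorithm is formulated as

$$\begin{cases} C_n = \{ w \in \mathcal{H} : g(u_n) + \langle \xi_n, w - u_n \rangle \leq 0 \}, \quad \xi_n \in \partial g(u_n), \\ v_n = P_{C_n}(u_n - \lambda A u_n), \\ u_{n+1} = P_{C_n}(u_n - \lambda A v_n). \end{cases}$$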
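A minimal TSEM sketch follows, assuming the hypothetical constraint $g(u) = \|u\|^2 - 1$ (so $C$ is the closed unit disk and $\xi_n = \nabla g(u_n) = 2u_n$), the same skew test operator as above, and the form in which both projections use the half-space $C_n$ linearized at $u_n$. No projection onto $C$ itself is ever computed.

```python
import math


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def g(u):
    """Hypothetical constraint function: C = {u : g(u) <= 0} is the closed unit disk."""
    return dot(u, u) - 1.0


def grad_g(u):
    return [2.0 * x for x in u]


def project_linearized(x, u):
    """Project x onto C_n = {w : g(u) + <grad g(u), w - u> <= 0}."""
    xi = grad_g(u)
    aa = dot(xi, xi)
    viol = g(u) + dot(xi, [a - b for a, b in zip(x, u)])
    if viol <= 0.0:
        return list(x)
    # aa > 0 here: grad g(u) = 0 only at u = 0, where g(u) = -1 and viol = -1 <= 0.
    return [a - (viol / aa) * b for a, b in zip(x, xi)]


def A(u):
    """Skew test operator: monotone and 1-Lipschitz; the VI solution on C is 0."""
    return [u[1], -u[0]]


def tsem(u0, lam, tol=1e-10, max_iter=10_000):
    u = list(u0)
    for n in range(1, max_iter + 1):
        v = project_linearized([x - lam * a for x, a in zip(u, A(u))], u)       # v_n
        u_next = project_linearized([x - lam * a for x, a in zip(u, A(v))], u)  # u_{n+1}
        if max(abs(p - q) for p, q in zip(u_next, u)) < tol:
            return u_next, n
        u = u_next
    return u, max_iter


u_tsem, iters_tsem = tsem([0.6, 0.8], lam=0.5)
```

Both projections are closed-form, which is the computational appeal of TSEM when $C$ is described by a convex inequality.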

We now briefly introduce the inertial technique, which has become increasingly influential in the development of iterative schemes for solving VIPs. This approach originates from Polyak’s work [35] on the discretization of second-order dissipative dynamical systems. Its main characteristic is the use of information from the two most recent iterations to compute the next approximation. Although conceptually simple, this modification has proven remarkably effective in accelerating convergence in practice. The inertial idea has inspired numerous advanced algorithms, as reflected in recent studies [1,36,37].
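Polyak’s heavy-ball iteration for minimizing a smooth convex function $f$ is the prototype of this idea: $x_{k+1} = x_k - \alpha \nabla f(x_k) + \beta (x_k - x_{k-1})$, where the inertial term $\beta(x_k - x_{k-1})$ is built from the two most recent iterates. The sketch below compares it with plain gradient descent on a hypothetical ill-conditioned quadratic ($\mu = 1$, $L = 100$) using the classical parameter choices for quadratics. It only illustrates the acceleration effect and is not a VIP solver.

```python
import math

MU, LC = 1.0, 100.0  # hypothetical curvatures: f(x) = 0.5 * (MU * x1^2 + LC * x2^2)


def grad(x):
    return [MU * x[0], LC * x[1]]


def iterations_to_tol(alpha, beta, tol=1e-6, max_iter=100_000):
    """Run x_{k+1} = x_k - alpha*grad(x_k) + beta*(x_k - x_{k-1}); count steps until ||x_k|| < tol."""
    x_prev = [1.0, 1.0]
    x = [1.0, 1.0]
    for k in range(1, max_iter + 1):
        g = grad(x)
        x_next = [xi - alpha * gi + beta * (xi - pi) for xi, gi, pi in zip(x, g, x_prev)]
        x_prev, x = x, x_next
        if math.sqrt(sum(xi * xi for xi in x)) < tol:
            return k
    return max_iter


# beta = 0 gives plain gradient descent with the usual step 1/L.
gd_iters = iterations_to_tol(alpha=1.0 / LC, beta=0.0)

# Classical heavy-ball parameters for strongly convex quadratics.
s_L, s_mu = math.sqrt(LC), math.sqrt(MU)
hb_iters = iterations_to_tol(alpha=4.0 / (s_L + s_mu) ** 2,
                             beta=((s_L - s_mu) / (s_L + s_mu)) ** 2)
```

Gradient descent contracts the slow coordinate by $1 - \mu/L = 0.99$ per step, while the heavy-ball method contracts by roughly $(\sqrt{L} - \sqrt{\mu})/(\sqrt{L} + \sqrt{\mu}) \approx 0.82$, which is why it needs an order of magnitude fewer iterations here.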

A significant development occurred in 2024 when Alakoya et al. [38] introduced the inertial two-subgradient extragradient method (ITSEM). This method preserves computational efficiency while ensuring strong convergence to the minimum-norm solution of the VIP, a particularly desirable property, as minimum-norm solutions often possess meaningful physical interpretations in applications. The ITSEM algorithm is defined by the following iteration:

Recent advances in projection-based methods have extended from single inertial schemes to more sophisticated double-inertial step techniques for solving VIPs. These enhanced approaches utilize multiple inertial extrapolations to accelerate convergence while operating under relaxed assumptions. Notable contributions include the double-inertial subgradient extragradient method for pseudomonotone VIPs developed by Yao et al. [39], the single projection approach with double-inertial steps proposed by Thong et al. [40], and the method introduced by Li and Wang [41] for quasi-monotone VIPs. These methodological innovations have significantly expanded the theoretical scope and practical utility of projection methods. A comprehensive overview of these developments can be found in [42,43] and the related literature.

Motivated by these recent trends, we propose a novel double-inertial two-subgradient extragradient algorithm that incorporates several key innovations. First, the proposed framework integrates double-inertial steps with a self-adaptive step size strategy, which eliminates the need for prior knowledge of the Lipschitz constant and avoids computationally expensive linesearch procedures. Second, our method guarantees strong convergence under milder assumptions than those required by existing algorithms, making it particularly suitable for problems in which the Lipschitz constants of the operator and of the constraint gradient are unknown. Third, extensive numerical experiments show that the algorithm requires fewer iterations and less CPU time than the benchmark methods across a range of problem instances, initial points, and parameter settings, indicating both efficiency and stability.

The remainder of this paper is organized as follows. Section 2 presents the mathematical preliminaries and key definitions required for the development of our method. In Section 3, we establish the convergence properties of the proposed algorithm through a detailed theoretical analysis. Section 4 reports a series of numerical experiments that benchmark the algorithm against existing techniques. Finally, Section 5 concludes the paper by summarizing the main findings and outlining directions for future research.

2. Preliminaries

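Let $C$ be a nonempty, closed, and convex subset of a real Hilbert space $\mathcal{H}$. We denote weak convergence by $\rightharpoonup$ and strong convergence by $\to$. The weak limit set of a sequence $\{u_n\} \subset \mathcal{H}$, denoted by $\omega_w(u_n)$, consists of all points $u \in \mathcal{H}$ for which there exists a subsequence $\{u_{n_k}\}$ of $\{u_n\}$ such that $u_{n_k} \rightharpoonup u$, i.e.,

$$\omega_w(u_n) = \{ u \in \mathcal{H} : u_{n_k} \rightharpoonup u \text{ for some subsequence } \{u_{n_k}\} \text{ of } \{u_n\} \}.$$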

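The following basic identities and inequality hold for all $u, v \in \mathcal{H}$ and $t \in \mathbb{R}$:

$$\|u + v\|^2 = \|u\|^2 + 2\langle u, v \rangle + \|v\|^2,$$

$$\|t u + (1 - t) v\|^2 = t\|u\|^2 + (1 - t)\|v\|^2 - t(1 - t)\|u - v\|^2,$$

$$\|u + v\|^2 \leq \|u\|^2 + 2\langle v, u + v \rangle.$$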

The metric projection operator $P_C : \mathcal{H} \to C$ maps each $u \in \mathcal{H}$ to its unique nearest point in $C$, defined by

$$P_C(u) = \arg\min_{v \in C} \|u - v\|.$$

The operator $P_C$ is nonexpansive and satisfies the following properties [44]:

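- (1)

- For all $u, v \in \mathcal{H}$, $\langle P_C u - P_C v, u - v \rangle \geq \|P_C u - P_C v\|^2$;

- (2)

- For all $u \in \mathcal{H}$ and $v \in C$, $\langle u - P_C u, v - P_C u \rangle \leq 0$;

- (3)

- For all $u \in \mathcal{H}$ and $v \in C$, $\|v - P_C u\|^2 + \|u - P_C u\|^2 \leq \|u - v\|^2$;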

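- (4)

- Let $Q$ be a half-space defined by $Q = \{ u \in \mathcal{H} : \langle a, u \rangle \leq b \}$ with $a \neq 0$. Then the projection of $u \in \mathcal{H}$ onto $Q$ is explicitly given by $P_Q(u) = u - \max\left\{0, \dfrac{\langle a, u \rangle - b}{\|a\|^2}\right\} a$.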
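Property (4) translates directly into code. The sketch below implements the closed-form projection for a hypothetical half-space in $\mathbb{R}^3$ and checks on random points that $P_Q(u) \in Q$ and that $\langle u - P_Q u, w - P_Q u \rangle \leq 0$ for points $w \in Q$, which is the variational characterization of the metric projection.

```python
import random


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def project_halfspace(u, a, b):
    """Closed-form projection onto Q = {w : <a, w> <= b}, assuming a != 0."""
    excess = dot(a, u) - b
    if excess <= 0.0:
        return list(u)  # u already lies in Q
    s = excess / dot(a, a)
    return [ui - s * ai for ui, ai in zip(u, a)]


rng = random.Random(0)
a, b = [1.0, -2.0, 0.5], 1.0    # hypothetical half-space data
max_infeasibility = 0.0         # largest <a, P_Q u> - b observed
max_inner = float("-inf")       # largest <u - P_Q u, w - P_Q u> observed
for _ in range(1000):
    u = [rng.uniform(-5.0, 5.0) for _ in range(3)]
    p = project_halfspace(u, a, b)
    max_infeasibility = max(max_infeasibility, dot(a, p) - b)
    w = project_halfspace([rng.uniform(-5.0, 5.0) for _ in range(3)], a, b)  # a point of Q
    r = [ui - pi for ui, pi in zip(u, p)]
    d = [wi - pi for wi, pi in zip(w, p)]
    max_inner = max(max_inner, dot(r, d))
```

Both quantities stay at the level of floating-point rounding, as the theory predicts.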

Definition 1

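([45]). Let $A : \mathcal{H} \to \mathcal{H}$ be an operator. Then

- (1)

- $A$ is $\gamma$-strongly monotone if there exists $\gamma > 0$ such that $\langle Au - Av, u - v \rangle \geq \gamma \|u - v\|^2$ for all $u, v \in \mathcal{H}$;

- (2)

- $A$ is $\gamma$-inverse strongly monotone (or $\gamma$-cocoercive) if for some $\gamma > 0$, $\langle Au - Av, u - v \rangle \geq \gamma \|Au - Av\|^2$ for all $u, v \in \mathcal{H}$;

- (3)

- $A$ is monotone if $\langle Au - Av, u - v \rangle \geq 0$ for all $u, v \in \mathcal{H}$;

- (4)

- $A$ is $L$-Lipschitz continuous for some $L > 0$ if $\|Au - Av\| \leq L \|u - v\|$ for all $u, v \in \mathcal{H}$.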
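For an affine operator $A(u) = Mu$, the constants in Definition 1 can be read off from sampled ratios. In this minimal sketch the matrix is hypothetical: $M = \begin{pmatrix} 2 & 1 \\ -1 & 2 \end{pmatrix}$ has symmetric part $2I$ and $M^\top M = 5I$, so $A$ is $2$-strongly monotone, $0.4$-inverse strongly monotone, and $\sqrt{5}$-Lipschitz continuous; the sampled ratios recover exactly these values.

```python
import math
import random

M = [[2.0, 1.0], [-1.0, 2.0]]  # hypothetical matrix: symmetric part 2I, M^T M = 5I


def A(u):
    return [M[0][0] * u[0] + M[0][1] * u[1], M[1][0] * u[0] + M[1][1] * u[1]]


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


rng = random.Random(1)
mono, coco, lip = [], [], []
for _ in range(500):
    u = [rng.uniform(-1.0, 1.0) for _ in range(2)]
    v = [rng.uniform(-1.0, 1.0) for _ in range(2)]
    d = [ui - vi for ui, vi in zip(u, v)]
    dA = [x - y for x, y in zip(A(u), A(v))]
    if dot(d, d) < 1e-12:
        continue  # skip (nearly) coincident pairs
    mono.append(dot(dA, d) / dot(d, d))             # compare with gamma in item (1)
    coco.append(dot(dA, d) / dot(dA, dA))           # compare with gamma in item (2)
    lip.append(math.sqrt(dot(dA, dA) / dot(d, d)))  # compare with L in item (4)

gamma_strong = min(mono)  # largest strong-monotonicity constant consistent with the samples
gamma_coco = min(coco)    # largest cocoercivity constant consistent with the samples
L_est = max(lip)          # smallest Lipschitz constant consistent with the samples
```

For a purely skew matrix, the first two ratios would be identically zero: such an operator is monotone (item (3)) but neither strongly monotone nor cocoercive.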

Definition 2

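([46]). A function $g : \mathcal{H} \to \mathbb{R}$ is said to be Gâteaux-differentiable at $u \in \mathcal{H}$ if there exists an element $\nabla g(u) \in \mathcal{H}$ such that

$$\lim_{t \to 0} \frac{g(u + t v) - g(u)}{t} = \langle \nabla g(u), v \rangle \quad \text{for all } v \in \mathcal{H}.$$

The vector $\nabla g(u)$ is called the Gâteaux derivative of $g$ at $u$. If this holds for all $u \in \mathcal{H}$, then $g$ is said to be Gâteaux-differentiable on $\mathcal{H}$.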
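As a concrete check of Definition 2, take the hypothetical function $g(u) = \|u\|^2 - 1$ on $\mathbb{R}^3$, whose Gâteaux derivative is $\nabla g(u) = 2u$. Expanding the square shows that the difference quotient equals $\langle \nabla g(u), v \rangle + t\|v\|^2$ exactly, so its error decays linearly in $t$. The sketch below confirms this numerically.

```python
def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def g(u):
    """Hypothetical g(u) = ||u||^2 - 1."""
    return dot(u, u) - 1.0


def grad_g(u):
    return [2.0 * x for x in u]


def difference_quotient(f, u, v, t):
    """(f(u + t v) - f(u)) / t, the quotient in the definition of the Gateaux derivative."""
    return (f([ui + t * vi for ui, vi in zip(u, v)]) - f(u)) / t


u = [0.3, -1.2, 0.5]
v = [1.0, 0.5, -2.0]              # ||v||^2 = 5.25
exact = dot(grad_g(u), v)         # <grad g(u), v>
ts = (1e-1, 1e-2, 1e-3, 1e-4)
errors = [abs(difference_quotient(g, u, v, t) - exact) for t in ts]
```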

Definition 3

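([44]). Let $g : \mathcal{H} \to \mathbb{R}$ be convex. The function $g$ is subdifferentiable at $u \in \mathcal{H}$ if the subdifferential

$$\partial g(u) = \{ \xi \in \mathcal{H} : g(v) \geq g(u) + \langle \xi, v - u \rangle \text{ for all } v \in \mathcal{H} \}$$

is nonempty. Each $\xi \in \partial g(u)$ is called a subgradient of $g$ at $u$. If $g$ is Gâteaux-differentiable at $u$, then $\partial g(u) = \{\nabla g(u)\}$.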

Definition 4

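([44]). Let $\mathcal{H}$ be a real Hilbert space. A function $g : \mathcal{H} \to \mathbb{R}$ is said to be weakly lower semicontinuous at $u \in \mathcal{H}$ if, for every sequence $\{u_n\}$ such that $u_n \rightharpoonup u$, we have

$$g(u) \leq \liminf_{n \to \infty} g(u_n).$$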

Lemma 1

([44]). Let $g : \mathcal{H} \to \mathbb{R}$ be a convex function. Then $g$ is weakly sequentially lower semicontinuous if and only if it is lower semicontinuous.

Lemma 2

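([47]). Let $C = \{ u \in \mathcal{H} : g(u) \leq 0 \}$, where $g : \mathcal{H} \to \mathbb{R}$ is a continuously differentiable convex function. Suppose that the solution set $VI(C, A)$ of the variational inequality problem (1) is nonempty. Then, for any $u \in C$, the point $u$ is a solution to (1) if and only if one of the following conditions holds:

- 1.

- $u \in \operatorname{int} C$ and $Au = 0$;

- 2.

- $u \in \partial C$ and there exists a constant $\eta > 0$ such that $Au = -\eta \nabla g(u)$,

where $\partial C$ denotes the boundary of the set $C$.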

Lemma 3

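([31]). Let $C$ be a nonempty, closed, and convex subset of $\mathcal{H}$, and let $A : C \to \mathcal{H}$ be a continuous monotone operator. Then, for any $u \in C$, the following equivalence holds:

$$u \text{ solves } (1) \iff \langle Av, v - u \rangle \geq 0 \quad \text{for all } v \in C.$$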

Lemma 4

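([48]). Let $\{\alpha_n\} \subset (0, 1)$ be a sequence such that $\sum_{n=1}^{\infty} \alpha_n = \infty$, and let $\{a_n\}$ be a nonnegative sequence. Suppose that $\{b_n\}$ is a real sequence satisfying

$$a_{n+1} \leq (1 - \alpha_n) a_n + \alpha_n b_n \quad \text{for all } n \geq 1.$$

If $\limsup_{k \to \infty} b_{n_k} \leq 0$ for every subsequence $\{a_{n_k}\}$ of $\{a_n\}$ satisfying

$$\liminf_{k \to \infty} (a_{n_k + 1} - a_{n_k}) \geq 0,$$

then $\lim_{n \to \infty} a_n = 0$.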
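In the simplest situation covered by Lemma 4, $b_n \to 0$, so the $\limsup$ condition holds along every subsequence. The hypothetical choice $\alpha_n = 1/(n+2)$, $b_n = 1/(n+1)$, $a_0 = 1$ with the recursion holding as an equality gives $(n+2)a_{n+1} = (n+1)a_n + b_n$, hence $a_N = (1 + H_N)/(N+1)$ with $H_N$ the $N$-th harmonic number. The sketch below checks this closed form and the decay $a_N \to 0$.

```python
def run_recursion(N):
    a = 1.0                                  # a_0
    for n in range(N):
        alpha = 1.0 / (n + 2)                # alpha_n in (0, 1) with divergent sum
        b = 1.0 / (n + 1)                    # b_n -> 0
        a = (1.0 - alpha) * a + alpha * b    # recursion of Lemma 4, with equality
    return a


N = 10_000
a_N = run_recursion(N)
closed_form = (1.0 + sum(1.0 / k for k in range(1, N + 1))) / (N + 1)
```

The decay is slow (roughly $\log N / N$) because $\sum \alpha_n$ diverges only logarithmically; Lemma 4 guarantees convergence, not a rate.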

Lemma 5

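([49]). Let $\{a_n\}$ and $\{b_n\}$ be two nonnegative sequences such that

$$a_{n+1} \leq a_n + b_n \quad \text{for all } n \geq 1.$$

If $\sum_{n=1}^{\infty} b_n < \infty$, then the limit $\lim_{n \to \infty} a_n$ exists and is finite.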

3. Main Results

We propose a new double-inertial two-subgradient extragradient method for solving variational inequality problems, whose self-adaptive step size rule accommodates both monotone and non-monotone step size sequences. The iterative sequence is generated according to the steps described in Algorithm 1. The convergence of the method is established under the following key assumptions.

Assumption 1.

The following conditions are assumed:

- (C1)

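- The feasible set is defined as $C = \{ u \in \mathcal{H} : g(u) \leq 0 \}$, where $g : \mathcal{H} \to \mathbb{R}$ is a continuously differentiable convex function with $L_g$-Lipschitz continuous gradient $\nabla g$. The constant $L_g$ is not assumed to be known in advance.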

- (C2)

- The operator $A : \mathcal{H} \to \mathcal{H}$ satisfies the following conditions:

- (a)

- $A$ is monotone and $L$-Lipschitz continuous (where $L$ is also unknown);

- (b)

- For all $u \in \partial C$, the growth condition $\|Au\| \leq K \|\nabla g(u)\|$ holds for some constant $K > 0$;

- (c)

- The solution set of (1) is nonempty.

- (C3)

- The parameters satisfy the following conditions:

- (a)

- ;

- (b)

- The sequence $\{p_n\}$ is nonnegative and summable, i.e., $\sum_{n=1}^{\infty} p_n < \infty$.

Remark 1.

The proposed algorithm possesses the following key properties:

- (i)

- The half-space construction guarantees that $C \subseteq C_n$ for all $n \geq 1$, which follows directly from the convexity of $g$ and the subgradient inequality.

- (ii)

- Under the assumptions on () and , the inertial terms satisfyConsequently, combining this with and the uniform lower bound , we obtain the uniform boundedness result: For any , there exists such that
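Remark 1(i) can be sanity-checked numerically. The sketch below assumes the hypothetical constraint $g(u) = \|u\|^2 - 1$ in $\mathbb{R}^2$ and a half-space of the form $\{w : g(z) + \langle \nabla g(z), w - z \rangle \leq 0\}$ centered at an arbitrary point $z$; the specific center used in Algorithm 1 is not reproduced here. Sampled points of $C$ never violate the linearized constraint, as the subgradient inequality $g(w) \geq g(z) + \langle \nabla g(z), w - z \rangle$ predicts.

```python
import math
import random


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def g(u):
    """Hypothetical constraint: C = {u : g(u) <= 0} is the closed unit disk."""
    return dot(u, u) - 1.0


def grad_g(u):
    return [2.0 * x for x in u]


def linearization(w, z):
    """g(z) + <grad g(z), w - z>; the half-space is where this is <= 0."""
    return g(z) + dot(grad_g(z), [wi - zi for wi, zi in zip(w, z)])


rng = random.Random(42)
worst = float("-inf")
for _ in range(2000):
    z = [rng.uniform(-3.0, 3.0) for _ in range(2)]  # arbitrary half-space center
    w = [rng.uniform(-1.0, 1.0) for _ in range(2)]
    nrm = math.sqrt(dot(w, w))
    if nrm > 1.0:
        w = [x / nrm for x in w]                    # pull w back into C
    worst = max(worst, linearization(w, z))
```

For this $g$ the linearization equals $\|w\|^2 - 1 - \|w - z\|^2$, so it is nonpositive whenever $w \in C$.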

Algorithm 1: Double-Inertial Two-Subgradient Extragradient Method

The following lemma is instrumental in establishing the boundedness of the iterative sequence and serves as a foundation for the convergence analysis of the proposed algorithm.

Lemma 6.

Let $\{\lambda_n\}$ be the sequence of step sizes generated by the update rule in Algorithm 1. Then,

where .

Proof.

Assume that for all ,

Since $A$ is $L$-Lipschitz continuous and $\nabla g$ is $L_g$-Lipschitz continuous, we have

Thus,

From the update rule, it follows that

so the sequence is bounded above by and bounded below by . Applying Lemma 5, we conclude that the limit exists and is finite. Denoting this limit by , we obtain

□
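The two-sided bound in the proof of Lemma 6 can be illustrated with a rule of the type commonly used in self-adaptive extragradient methods: $\lambda_{n+1} = \min\{\mu\|w_n - v_n\|/\|Aw_n - Av_n\|, \lambda_n + p_n\}$, and $\lambda_{n+1} = \lambda_n + p_n$ when $Aw_n = Av_n$, where $\{p_n\}$ is nonnegative and summable. This rule, the parameters $\mu$ and $\lambda_1$, the test operator, and the random points standing in for the iterates are all assumptions made for illustration; the exact update of Algorithm 1 (which may also involve $\nabla g$) is not reproduced in this excerpt. The rule yields $\min\{\mu/L, \lambda_1\} \leq \lambda_n \leq \lambda_1 + \sum p_n$, which together with $\lambda_{n+1} \leq \lambda_n + p_n$ and Lemma 5 gives convergence of $\{\lambda_n\}$.

```python
import math
import random


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


M = [[3.0, 1.0], [0.0, 1.0]]  # hypothetical operator A(u) = M u


def A(u):
    return [M[0][0] * u[0] + M[0][1] * u[1], M[1][0] * u[0] + M[1][1] * u[1]]


# L = ||M||_2 = sqrt of the largest eigenvalue of M^T M = [[9, 3], [3, 2]]
L = math.sqrt((11.0 + math.sqrt(85.0)) / 2.0)


def next_step(lam, w, v, mu, p):
    """Hypothetical adaptive rule: min{mu ||w - v|| / ||A w - A v||, lam + p}."""
    d = [wi - vi for wi, vi in zip(w, v)]
    dA = [x - y for x, y in zip(A(w), A(v))]
    nA = math.sqrt(dot(dA, dA))
    if nA == 0.0:
        return lam + p
    return min(mu * math.sqrt(dot(d, d)) / nA, lam + p)


mu, lam1 = 0.5, 1.0
ps = [1.0 / (n + 1) ** 2 for n in range(1, 5001)]  # nonnegative and summable
rng = random.Random(3)
lams = [lam1]
for p in ps:
    w = [rng.uniform(-1.0, 1.0) for _ in range(2)]  # stand-ins for the iterates
    v = [rng.uniform(-1.0, 1.0) for _ in range(2)]
    lams.append(next_step(lams[-1], w, v, mu, p))

lower = min(mu / L, lam1)
upper = lam1 + sum(ps)
```

The term $\lambda_n + p_n$ is what allows the step size to increase again, producing the non-monotone behavior; summability of $\{p_n\}$ keeps these increases from accumulating.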

Lemma 7.

Let and be sequences generated by Algorithm 1. Then, for any solution , the following estimate holds:

Proof.

From the step size update rule, we observe that

This inequality immediately yields the key estimate

Let be a solution. For simplicity, define , which gives . Since , the projection property (6) yields

We now expand these terms systematically:

Since and , the projection property yields

Combining (10) with Young’s inequality, we obtain

Furthermore, the monotonicity of implies

Substituting (12)–(15) into (11) produces the key estimate

We now analyze two distinct cases:

- Case 1:

- . In this scenario, the desired result follows immediately from (16).

- Case 2:

- . By Lemma 2, we have

- ;

- There exists such that .

Since , we know . Applying the subdifferential inequality yields

This inequality implies

Furthermore, since , the half-space construction gives

Applying the subdifferential inequality, we obtain

Combining inequalities (18) and (19) yields

From (17) and (20), we derive the key estimate

We note that by Assumption 1 C2(b), the constant satisfies

Consequently, we derive the following estimates:

Substituting (10), (21) and (22) into (16) yields

which establishes the desired inequality (9). □

The following fundamental lemma establishes the boundedness of the iterative sequences generated by our algorithm.

Lemma 8.

Under Assumption 1, the sequences and generated by Algorithm 1 are bounded.

Proof.

Since the limit of exists by Lemma 6, we have . From the parameter conditions in Assumption 1, we derive

Consequently, there exists an integer such that for all ,

From inequality (9), we deduce that for all ,

Using the definition of and the bound (8), we systematically derive

Combining the inequalities (24) and (25), we obtain the recursive estimate:

This recursive bound establishes the boundedness of both and , thus completing the proof of Lemma 8. □

Lemma 9.

Let be generated by Algorithm 1 under Assumption 1. Then for any solution , the following estimate holds:

where and

Proof.

Lemma 10.

Let and be the sequences generated by Algorithm 1 under Assumption 1. If there exists a subsequence that converges weakly to and satisfies , then .

Proof.

Let and be subsequences of the iterates generated by the algorithm, such that and

Since , the half-space construction implies that

Applying the Cauchy–Schwarz inequality gives

Since $\nabla g$ is Lipschitz continuous by Assumption 1 (C1), it maps bounded sets to bounded sets; because the sequence is bounded, it follows that is also bounded. Hence, there exists a constant such that

Substituting into (29), we obtain

Since $g$ is convex and continuous, Lemma 1 ensures that $g$ is weakly lower semicontinuous. Therefore, taking the limit inferior on both sides of (30) gives

which implies that . Furthermore, we have

Using the monotonicity of $A$, we derive the following inequality:

Taking the limit as and using the fact that

we obtain the variational inequality

By Lemma 3, this implies that . □

We now establish the strong convergence of the proposed algorithm.

Theorem 1.

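Under Assumption 1, the sequence $\{u_n\}$ generated by Algorithm 1 converges strongly to $u^\dagger$, where $u^\dagger$ is the minimum-norm solution of (1):

$$u^\dagger = P_{VI(C, A)}(0), \quad \text{i.e.,} \quad \|u^\dagger\| = \min\{ \|u\| : u \in VI(C, A) \},$$

with $VI(C, A)$ the solution set of (1).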

Proof.

Since , it follows from Lemma 9 that

Since , there exists such that for all ,

The conditions on the parameters yield

To prove that , we apply Lemma 4. It suffices to show that for any subsequence satisfying

we also have

Consider a subsequence satisfying condition (33). From Lemma 9, we derive the key inequality

where the normalized term expands as

Applying the limit conditions (7), (32) and (33) to (34) yields

From (23), we obtain the key convergence:

Using the boundedness of and conditions (7) and , we analyze

The boundedness of ensures that is nonempty. Let be arbitrary, with being a subsequence such that . From (37), we deduce , and Lemma 10 combined with (36) establishes that . This proves . Consider a weakly convergent subsequence of with . Since , we have

Taking in (35) and applying (7), (37) and (38), we obtain

Finally, Lemma 4 applied to (31) yields

completing the proof of strong convergence. □

4. Numerical Illustrations

In the following analysis, we present a detailed comparison of the algorithms applied to selected numerical examples. Each example is chosen to highlight distinct aspects of performance, including convergence rate, computational efficiency, and solution accuracy. All experiments are carried out under uniform conditions to ensure a fair and consistent comparison. The test cases are designed to represent a range of problem types, including ill-conditioned systems and problems with varying levels of computational complexity.

To assess the efficiency and robustness of the proposed method (Algorithm 1, hereafter referred to as Alg1), we compare its performance with several benchmark algorithms from the existing literature. In particular, we include Algorithm 3.2 from [38] (denoted as Alg3.2), Algorithm 2 from [50] (referred to as Alg2), and Algorithm 3.1 from [51] (referred to as Alg3.1).

The comparison is based on three representative examples. For each case, the problem settings and algorithmic parameters are selected and adjusted to maintain consistency across methods. Where applicable, parameter choices follow those recommended in the respective source references, with slight modifications to accommodate the test conditions. For the proposed Alg1, we employ parameters specifically tuned to balance rapid convergence with low computational cost.

Example 1 (HpHard Problem).

We examine the classical HpHard problem originally proposed by Harker and Pang [52], which has been widely used as a benchmark in variational inequality research [32,53]. The problem is defined by several key components:

First, we have the linear operator $A(u) = Mu + q$, where the matrix $M$ has the decomposition $M = NN^\top + B + D$. Here, $B$ is an $m \times m$ skew-symmetric matrix, $N$ is an arbitrary $m \times m$ matrix, and $D$ is a positive-definite diagonal matrix of the same dimension. The feasible set $C$ is a hypercube in $\mathbb{R}^m$. The mapping $A$ is both monotone and Lipschitz continuous, with Lipschitz constant $L = \|M\|$. In the special case where $q = 0$, the solution set reduces to $\{0\}$.
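The following minimal sketch shows how such a test matrix can be generated. The entry distributions (uniform on $(-5, 5)$ for $N$ and for the matrix whose skew part gives $B$, uniform on $(0.01, 0.3)$ for the diagonal of $D$) are assumptions, not the settings used in the experiments. Because $\langle Bd, d \rangle = 0$ for skew-symmetric $B$, the quadratic form $\langle Md, d \rangle = \|N^\top d\|^2 + \langle Dd, d \rangle$ is positive for $d \neq 0$, which is why $A$ is monotone.

```python
import random


def hphard_matrix(m, seed=0):
    """Build M = N N^T + B + D with B skew-symmetric and D positive diagonal (hypothetical distributions)."""
    rng = random.Random(seed)
    N = [[rng.uniform(-5.0, 5.0) for _ in range(m)] for _ in range(m)]
    S = [[rng.uniform(-5.0, 5.0) for _ in range(m)] for _ in range(m)]
    B = [[S[i][j] - S[j][i] for j in range(m)] for i in range(m)]  # B^T = -B
    D = [rng.uniform(0.01, 0.3) for _ in range(m)]                # diagonal of D, all positive
    NNt = [[sum(N[i][k] * N[j][k] for k in range(m)) for j in range(m)] for i in range(m)]
    M = [[NNt[i][j] + B[i][j] + (D[i] if i == j else 0.0) for j in range(m)] for i in range(m)]
    return M, B


def apply(M, u, q=None):
    """A(u) = M u + q, with q = 0 by default."""
    m = len(u)
    out = [sum(M[i][j] * u[j] for j in range(m)) for i in range(m)]
    return out if q is None else [o + qi for o, qi in zip(out, q)]


m = 10
M, B = hphard_matrix(m)
rng = random.Random(1)
quad_forms = []
for _ in range(200):
    d = [rng.uniform(-1.0, 1.0) for _ in range(m)]
    quad_forms.append(sum(di * ai for di, ai in zip(d, apply(M, d))))  # <M d, d>
```

With $q = 0$ the origin satisfies $A(0) = 0$, so it solves the VIP over any feasible set containing it, consistent with the solution set $\{0\}$ stated above.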

For our numerical experiments, we initialize all algorithms with and employ the stopping criterion . The parameter configurations for each compared algorithm are as follows:

- 1.

- For Alg3.2 from [38]: , , , with the sequences , , , and .

- 2.

- For Alg2 from [50]: , , , with and .

- 3.

- For Alg3.1 from [51]: , , , using , , , , and .

- 4.

- For our proposed Algorithm 1 (Alg1), , , , , with , , and .

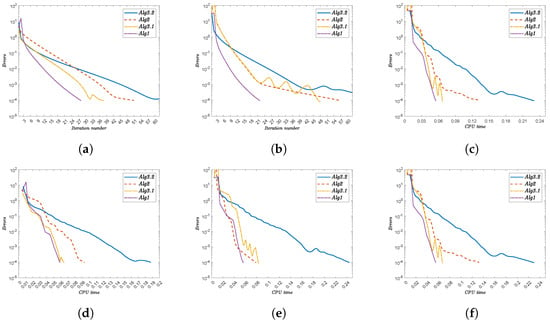

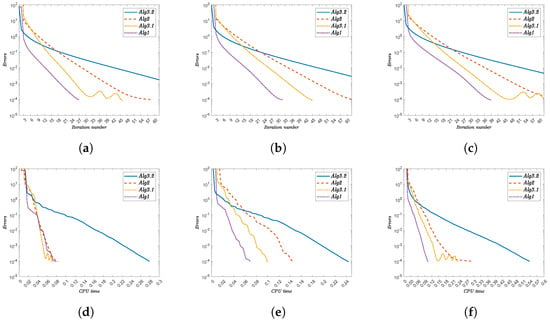

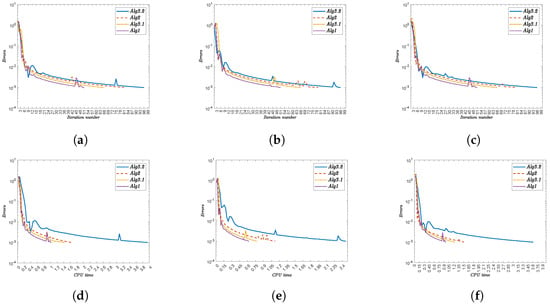

Comparative Numerical Analysis: This study evaluates the performance of four optimization algorithms (Alg1, Alg3.2, Alg2, and Alg3.1) across increasing problem dimensions . Our proposed Alg1 demonstrates superior efficiency in both iteration counts and computational time compared to existing methods, as evidenced by the numerical results in Table 1 and visualized in Figure 1 and Figure 2. The key findings reveal several important patterns.

Table 1.

Performance comparison across dimensions (iterations n and time t in seconds).

Figure 1.

Convergence behavior and computational efficiency of the algorithms for dimensions . The top row shows error versus iterations; the bottom row shows error versus time. (a) Convergence (). (b) Convergence (). (c) Convergence (). (d) Time efficiency (). (e) Time efficiency (). (f) Time efficiency ().

Figure 2.

Convergence behavior and computational efficiency of the algorithms for dimensions . The top row shows error versus iterations; the bottom row shows error versus time. (a) Convergence (). (b) Convergence (). (c) Convergence (). (d) Time efficiency (). (e) Time efficiency (). (f) Time efficiency ().

First, Alg1 consistently achieves the lowest iteration counts and fastest computation times across all dimensions. For example, at , Alg1 converges in just 39 iterations (0.105 s), while Alg3.2 requires 104 iterations (0.538 s), which corresponds to a 62.5% reduction in iterations and an 80.5% reduction in CPU time.

Second, all algorithms exhibit dimension-dependent performance degradation, though Alg1 maintains the most favorable scaling. While Alg1’s iteration count grows from 28 () to 39 (), Alg3.2 shows a more dramatic increase from 72 to 104 iterations for the same dimensional range.

Third, the computational time follows similar trends, with Alg1 demonstrating remarkable efficiency. At , Alg1 completes in 0.0587 s compared to Alg3.2’s 0.1876 s, and this advantage persists at (0.1052 vs. 0.538 s).
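The relative figures quoted above follow directly from the raw values. The short check below uses only numbers stated in the text; it also shows that an 80.5% reduction in CPU time corresponds to roughly a 5.1-fold speedup.

```python
def percent_reduction(new, old):
    """Relative reduction of `new` with respect to `old`, in percent."""
    return 100.0 * (old - new) / old


# Alg1 vs. Alg3.2, values quoted in the first observation
iter_red = percent_reduction(39, 104)        # iterations
time_red = percent_reduction(0.105, 0.538)   # CPU time (s)
speedup = 0.538 / 0.105                      # ratio of CPU times

# Alg1 vs. Alg3.2, the other pair of times quoted in the third observation
time_red_other = percent_reduction(0.0587, 0.1876)
```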

These results indicate that Alg1 scales well and is computationally efficient on this problem, particularly in higher dimensions. We attribute this advantage to the combination of double-inertial extrapolation and self-adaptive step sizes, which keeps the growth in iteration count and CPU time moderate as the dimension increases.

Example 2.

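Consider the Hilbert space $\mathcal{H} = L^2([0, 1])$ equipped with the inner product

$$\langle u, v \rangle = \int_0^1 u(t) v(t) \, dt$$

and induced norm

$$\|u\| = \left( \int_0^1 |u(t)|^2 \, dt \right)^{1/2}.$$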

Let $C = \{ u \in \mathcal{H} : \|u\| \leq 1 \}$ be the closed unit ball. We examine the nonlinear operator $A : \mathcal{H} \to \mathcal{H}$ defined by

with kernel and nonlinearity given by

This operator satisfies

- Monotonicity: $\langle Au - Av, u - v \rangle \geq 0$ for all $u, v \in \mathcal{H}$;

- Lipschitz continuity with : .
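In computations, a function space such as $L^2([0, 1])$ is handled by discretizing $[0, 1]$. The sketch below assumes that setting, with a hypothetical grid size and trapezoidal quadrature for the inner product (the operator $A$ itself is not reproduced). It shows the closed-form projection onto the closed unit ball, $P_C(u) = u / \max\{1, \|u\|\}$, which is what makes this feasible set convenient.

```python
import math

K = 1001                                   # hypothetical number of grid points on [0, 1]
h = 1.0 / (K - 1)
ts = [i * h for i in range(K)]
weights = [h / 2 if i in (0, K - 1) else h for i in range(K)]  # trapezoidal rule


def inner(u, v):
    """Discrete approximation of <u, v> = int_0^1 u(t) v(t) dt."""
    return sum(w * a * b for w, a, b in zip(weights, u, v))


def norm(u):
    return math.sqrt(inner(u, u))


def project_unit_ball(u):
    """P_C(u) = u / max(1, ||u||) for the closed unit ball C."""
    scale = max(1.0, norm(u))
    return [x / scale for x in u]


u = [2.0 * t for t in ts]   # ||u|| = sqrt(4/3) > 1, so u lies outside C
v = [0.5 for _ in ts]       # ||v|| = 0.5, so v already lies in C
pu, pv = project_unit_ball(u), project_unit_ball(v)
```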

The compared algorithms use the following configurations.

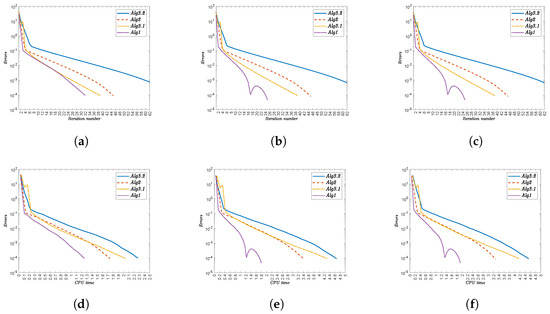

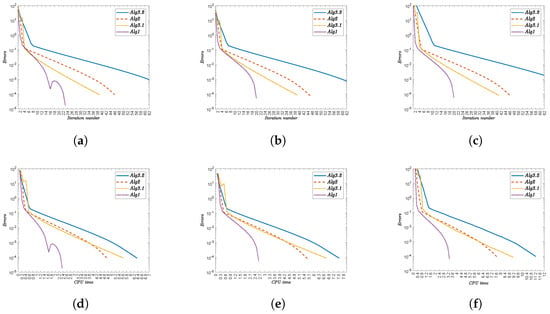

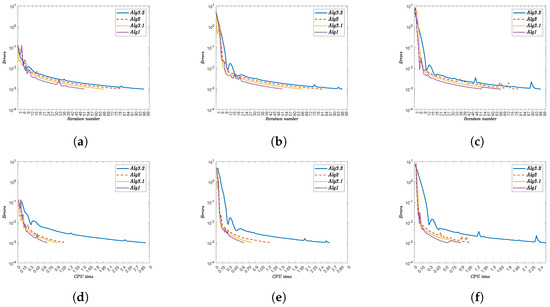

Comparative Numerical Analysis: In this study, we conduct a comprehensive numerical comparison of four optimization algorithms (Table 2): Alg1, Alg3.2, Alg2, and Alg3.1. Our analysis focuses on their performance across different initial points and function complexities. The test functions range from elementary cases (x, , ) to more challenging examples (, ). The evaluation metrics include iteration counts and CPU time, with results presented in Table 3 and Figure 3 and Figure 4. Key findings are as follows.

Table 2.

Parameter settings for the compared algorithms.

Table 3.

Performance comparison: iteration counts (n) and CPU times (t, seconds).

Figure 3.

Convergence behavior of Alg3.2, Alg2, Alg3.1, and Alg1 for different initial points . The top row shows error versus iteration count (n); the bottom row shows error versus CPU time (t). (a) Error vs. iteration for . (b) Error vs. iteration for . (c) Error vs. iteration for . (d) Error vs. time for . (e) Error vs. time for . (f) Error vs. time for .

Figure 4.

Convergence behavior for complex initial points . Top row: error versus iteration count (n); bottom row: error versus CPU time (t). Algorithms compared: Alg3.2, Alg2, Alg3.1, and Alg1. (a) Error vs. iteration for . (b) Error vs. iteration for . (c) Error vs. iteration for . (d) Error vs. time for . (e) Error vs. time for . (f) Error vs. time for .

- (i)

- Alg1 demonstrates superior efficiency, consistently achieving the lowest iteration counts and CPU times. For instance,

- For x, Alg1 requires 32 iterations (1.225 s) versus Alg3.2’s 75 iterations (2.274 s).

- For , Alg1 converges in 20 iterations (2.634 s), while Alg3.2 needs 76 iterations (7.542 s).

- (ii)

- The initial point significantly impacts convergence. Complex functions (e.g., ) amplify this effect, with Alg3.2 requiring 84 iterations (11.222 s) compared to Alg1’s 20 iterations (3.336 s).

- (iii)

- Nonlinearities in functions like increase computational demand, yet Alg1 maintains robust performance.

- (iv)

- The performance gap widens with function complexity, reinforcing Alg1’s scalability.

Alg1 outperforms competing algorithms across all test scenarios, demonstrating faster convergence and lower computational costs, particularly for complex functions. The results underscore its reliability for diverse optimization problems.

Example 3.

The third example is adapted from [54], where the mapping is defined by

with the feasible set given by .

This mapping satisfies several important properties. First, it is Lipschitz continuous with Lipschitz constant . Second, it is pseudomonotone on the set , though it is not monotone. Finally, the problem admits a unique solution, given by .

Parameter settings:

- Alg3.2 [38]: , , , , , ,

- Alg2 [50]: , , , ,

- Alg3.1 [51]: , , , , , , ,

- Alg1 (Algorithm 1): , , , , , , , ,

Comparative Numerical Analysis: This study conducts a systematic numerical comparison of four optimization algorithms—Alg1, Alg3.2, Alg2, and Alg3.1—evaluating their computational efficiency through iteration counts and CPU time. The analysis examines algorithm performance across multiple initial points , including , , and others, to assess convergence behavior under varying starting conditions.

The numerical results, presented in Table 4 and Figure 5 and Figure 6, demonstrate that Alg1 consistently achieves superior performance. Notably, while iteration counts remain stable across different values, Alg1 maintains significantly lower computational times compared to competing methods. Key observations are as follows.

Table 4.

Performance comparison across initial points : iteration counts (n) and CPU times (t, seconds).

Figure 5.

Convergence behavior of Alg3.2, Alg2, Alg3.1, and Alg1 for different initial points. The top row shows error versus iteration count; the bottom row shows error versus CPU time. (a) Error vs. iteration for . (b) Error vs. iteration for . (c) Error vs. iteration for . (d) Error vs. time for . (e) Error vs. time for . (f) Error vs. time for .

Figure 6.

Convergence behavior for additional initial points. Top row: error versus iteration count; bottom row: error versus CPU time. Algorithms compared: Alg3.2, Alg2, Alg3.1, and Alg1. (a) Error vs. iteration for . (b) Error vs. iteration for . (c) Error vs. iteration for . (d) Error vs. time for . (e) Error vs. time for . (f) Error vs. time for .

- (i)

- Alg1 outperforms all other algorithms in both metrics. For , it completes in 51 iterations (0.69481 s) versus Alg3.2’s 96 iterations (2.51503 s).

- (ii)

- The performance advantage persists across all test cases. At , Alg1 requires 51 iterations (0.62848 s) compared to Alg3.2’s 96 iterations (2.46246 s).

- (iii)

- Iteration counts remain constant for each algorithm regardless of :

- Alg3.2: 96 iterations.

- Alg2: 79–81 iterations.

- Alg3.1: 66 iterations.

- Alg1: 51 iterations.

- (iv)

- CPU times show minor variations with . For Alg1, they range from 0.62848 s () to 0.96045 s ().

- (v)

- The computational advantage of Alg1 is most pronounced against Alg3.2. At , Alg1 uses 0.67053 s versus Alg3.2’s 2.90826 s.

- (vi)

- Alg1 demonstrates robust efficiency across all test cases, maintaining low CPU times without compromising convergence speed.

Alg1 emerges as the most efficient algorithm, demonstrating consistent superiority in both iteration counts and computational time across all tested initial points . The results highlight its robustness and practical value for optimization tasks.

5. Conclusions and Future Directions

This study introduces a novel algorithm for solving variational inequality problems in real Hilbert spaces, which is particularly effective for equilibrium problems involving monotone operators. The algorithm’s key innovation is its dynamic variable step size mechanism, which provides two significant advantages: first, it eliminates the need for prior knowledge of Lipschitz-type constants, and second, it avoids the computational overhead associated with line search procedures. Extensive numerical experiments demonstrate the algorithm’s superior performance compared to existing methods in the literature.

Future Research Directions

The promising results suggest several valuable extensions for future work.

1. Application to problems with non-monotone or quasi-monotone operators, which would expand the algorithm’s practical utility across a wider range of problems.

2. Extensions to handle equilibrium problems with additional constraints, such as composite or split feasibility constraints, enabling solutions to more complex optimization scenarios.

3. The development of parallel and distributed implementations to enhance computational efficiency, particularly for large-scale problems in high-dimensional spaces.

4. Further theoretical analysis to establish convergence under weaker or more general conditions, thereby broadening the algorithm’s applicability.

5. Practical applications in important domains, including image reconstruction, machine learning, and network optimization, to validate and demonstrate the method’s effectiveness in real-world settings.

6. Exploration of alternative dynamic step size strategies, potentially incorporating adaptive learning or machine learning techniques, to further improve convergence speed and robustness.

Author Contributions

Software, F.A. and H.u.R.; Formal analysis, C.A.; Investigation, H.u.R. and C.A.; Writing—original draft, I.K.A., F.A. and H.u.R.; Writing—review & editing, I.K.A., F.A. and H.u.R.; Supervision, I.K.A. All authors have read and agreed to the published version of the manuscript.

Funding

Habib ur Rehman acknowledges financial support from Zhejiang Normal University, Jinhua, China (Grant YS304223974).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

- Alakoya, T.O.; Mewomo, O.T. Viscosity s-iteration method with inertial technique and self-adaptive step size for split variational inclusion, equilibrium and fixed point problems. Comput. Appl. Math. 2022, 41, 31–39. [Google Scholar] [CrossRef]

- Aubin, J.-P.; Ekeland, I. Applied Nonlinear Analysis; Wiley: Hoboken, NJ, USA, 1984. [Google Scholar]

- Ogwo, G.N.; Izuchukwu, C.; Shehu, Y.; Mewomo, O.T. Convergence of relaxed inertial subgradient extragradient methods for quasimonotone variational inequality problems. J. Sci. Comput. 2022, 90, 35. [Google Scholar] [CrossRef]

- Baiocchi, C.; Capelo, A. Variational and Quasivariational Inequalities: Applications to Free Boundary Problems; Wiley: Hoboken, NJ, USA, 1984. [Google Scholar]

- Censor, Y.; Gibali, A.; Reich, S. Algorithms for the split variational inequality problem. Numer. Algorithms 2012, 59, 301–323. [Google Scholar] [CrossRef]

- Godwin, E.C.; Mewomo, O.T.; Alakoya, T.O. A strongly convergent algorithm for solving multiple set split equality equilibrium and fixed point problems in Banach spaces. Proc. Edinb. Math. Soc. 2023, 66, 475–515. [Google Scholar] [CrossRef]

- Kinderlehrer, D.; Stampacchia, G. An Introduction to Variational Inequalities and Their Applications; SIAM: Philadelphia, PA, USA, 2000. [Google Scholar]

- Geunes, J.; Pardalos, P.M. Network optimization in supply chain management and financial engineering: An annotated bibliography. Networks 2003, 42, 66–84. [Google Scholar] [CrossRef]

- Nagurney, A. Network Economics: A Variational Inequality Approach, 2nd ed.; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1999. [Google Scholar]

- Nagurney, A.; Dong, J. Supernetworks: Decision-Making for the Information Age; Edward Elgar Publishing: Northampton, MA, USA, 2002.

- Smith, M.J. The existence, uniqueness and stability of traffic equilibria. Transp. Res. B 1979, 13, 295–304.

- Dafermos, S. Traffic equilibrium and variational inequalities. Transp. Sci. 1980, 14, 42–54.

- Lawphongpanich, S.; Hearn, D.W. Simplicial decomposition of the asymmetric traffic assignment problem. Transp. Res. B 1984, 18, 123–133.

- Panicucci, B.; Pappalardo, M.; Passacantando, M. A path-based double projection method for solving the asymmetric traffic network equilibrium problem. Optim. Lett. 2007, 1, 171–185.

- Aussel, D.; Gupta, R.; Mehra, A. Evolutionary variational inequality formulation of the generalized Nash equilibrium problem. J. Optim. Theory Appl. 2016, 169, 74–90.

- Ciarciá, C.; Daniele, P. New existence theorems for quasi-variational inequalities and applications to financial models. Eur. J. Oper. Res. 2016, 251, 288–299.

- Nagurney, A.; Parkes, D.; Daniele, P. The internet, evolutionary variational inequalities, and the time-dependent Braess paradox. Comput. Manag. Sci. 2007, 4, 355–375.

- Scrimali, L.; Mirabella, C. Cooperation in pollution control problems via evolutionary variational inequalities. J. Glob. Optim. 2018, 70, 455–476.

- Xu, S.; Li, S. A strongly convergent alternated inertial algorithm for solving equilibrium problems. J. Optim. Theory Appl. 2025, 206, 35.

- Yao, Y.; Adamu, A.; Shehu, Y. Strongly convergent golden ratio algorithms for variational inequalities. Math. Methods Oper. Res. 2025.

- Tan, B.; Qin, X. Two relaxed inertial forward-backward-forward algorithms for solving monotone inclusions and an application to compressed sensing. Can. J. Math. 2025, 1–22.

- Argyros, I.K. The Theory and Applications of Iteration Methods, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2022.

- Korpelevich, G.M. The extragradient method for finding saddle points and other problems. Ekon. Mat. Metody 1976, 12, 747–756.

- Tseng, P. A modified forward-backward splitting method for maximal monotone mappings. SIAM J. Control Optim. 2000, 38, 431–446.

- Thong, D.V.; Hieu, D.V. Modified subgradient extragradient method for variational inequality problems. Numer. Algorithms 2018, 79, 597–610.

- Reich, S.; Thong, D.V.; Cholamjiak, P.; Long, L.V. Inertial projection-type methods for solving pseudomonotone variational inequality problems in Hilbert space. Numer. Algorithms 2021, 88, 813–835.

- Suleiman, Y.I.; Kumam, P.; Rehman, H.U.; Kumam, W. A new extragradient algorithm with adaptive step-size for solving split equilibrium problems. J. Inequal. Appl. 2021, 2021, 136.

- Rehman, H.U.; Tan, B.; Yao, J.C. Relaxed inertial subgradient extragradient methods for equilibrium problems in Hilbert spaces and their applications to image restoration. Commun. Nonlinear Sci. Numer. Simul. 2025, 146, 108795.

- Ceng, L.C.; Ghosh, D.; Rehman, H.U.; Zhao, X. Composite Tseng-type extragradient algorithms with adaptive inertial correction strategy for solving bilevel split pseudomonotone VIP under split common fixed-point constraint. J. Comput. Appl. Math. 2025, 470, 116683.

- Nwawuru, F.O.; Ezeora, J.N.; Rehman, H.U.; Yao, J.C. Self-adaptive subgradient extragradient algorithm for solving equilibrium and fixed point problems in Hilbert spaces. Numer. Algorithms 2025.

- Dong, Q.; Cho, Y.; Zhong, L.; Rassias, T.M. Inertial projection and contraction algorithms for variational inequalities. J. Glob. Optim. 2018, 70, 687–704.

- Solodov, M.V.; Svaiter, B.F. A new projection method for variational inequality problems. SIAM J. Control Optim. 1999, 37, 765–776.

- Censor, Y.; Gibali, A.; Reich, S. The subgradient extragradient method for solving variational inequalities in Hilbert space. J. Optim. Theory Appl. 2011, 148, 318–335.

- Censor, Y.; Gibali, A.; Reich, S. Extensions of Korpelevich’s extragradient method for the variational inequality problem in Euclidean space. Optimization 2012, 61, 1119–1132.

- Polyak, B.T. Some methods of speeding up the convergence of iterative methods. USSR Comput. Math. Math. Phys. 1964, 4, 1–17.

- Gibali, A.; Jolaoso, L.O.; Mewomo, O.T.; Taiwo, A. Fast and simple Bregman projection methods for solving variational inequalities and related problems in Banach spaces. Results Math. 2020, 75, 179.

- Godwin, E.C.; Alakoya, T.O.; Mewomo, O.T.; Yao, J.-C. Relaxed inertial Tseng extragradient method for variational inequality and fixed point problems. Appl. Anal. 2023, 102, 4253–4278.

- Alakoya, T.O.; Mewomo, O.T. Strong convergent inertial two-subgradient extragradient method for finding minimum-norm solutions of variational inequality problems. Netw. Spat. Econ. 2024, 24, 425–459.

- Yao, Y.H.; Iyiola, O.S.; Shehu, Y. Subgradient extragradient method with double inertial steps for variational inequalities. J. Sci. Comput. 2022, 90, 71.

- Thong, D.V.; Dung, V.T.; Anh, P.K.; Van Thang, H. A single projection algorithm with double inertial extrapolation steps for solving pseudomonotone variational inequalities in Hilbert space. J. Comput. Appl. Math. 2023, 426, 115099.

- Li, H.; Wang, X. Subgradient extragradient method with double inertial steps for quasi-monotone variational inequalities. Filomat 2023, 37, 9823–9844.

- Pakkaranang, N. Double inertial extragradient algorithms for solving variational inequality problems with convergence analysis. Math. Methods Appl. Sci. 2024, 47, 11642–11669.

- Wang, K.; Wang, Y.; Iyiola, O.S.; Shehu, Y. Double inertial projection method for variational inequalities with quasi-monotonicity. Optimization 2024, 73, 707–739.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2017.

- Bianchi, M.; Schaible, S. Generalized monotone bifunctions and equilibrium problems. J. Optim. Theory Appl. 1996, 90, 31–43.

- Bauschke, H.H.; Combettes, P.L. A weak-to-strong convergence principle for Fejér-monotone methods in Hilbert spaces. Math. Oper. Res. 2001, 26, 248–264.

- He, S.; Xu, H.-K. Uniqueness of supporting hyperplanes and an alternative to solutions of variational inequalities. J. Glob. Optim. 2013, 57, 1375–1384.

- Saejung, S.; Yotkaew, P. Approximation of zeros of inverse strongly monotone operators in Banach spaces. Nonlinear Anal. Theory Methods Appl. 2012, 75, 742–750.

- Tan, K.K.; Xu, H.-K. Approximating fixed points of nonexpansive mappings by the Ishikawa iteration process. J. Math. Anal. Appl. 1993, 178, 301–308.

- Muangchoo, K.; Alreshidi, N.A.; Argyros, I.K. Approximation results for variational inequalities involving pseudomonotone bifunction in real Hilbert spaces. Symmetry 2021, 13, 182.

- Tan, B.; Sunthrayuth, P.; Cholamjiak, P.; Cho, Y.J. Modified inertial extragradient methods for finding minimum-norm solution of the variational inequality problem with applications to optimal control problem. Int. J. Comput. Math. 2023, 100, 525–545.

- Harker, P.T.; Pang, J.S. A damped-Newton method for the linear complementarity problem. Lect. Appl. Math. 1990, 26, 265–284.

- Van Hieu, D.; Anh, P.K.; Muu, L.D. Modified hybrid projection methods for finding common solutions to variational inequality problems. Comput. Optim. Appl. 2017, 66, 75–96.

- Shehu, Y.; Dong, Q.L.; Jiang, D. Single projection method for pseudo-monotone variational inequality in Hilbert spaces. Optimization 2018, 68, 385–409.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).