1. Introduction

In early-stage drug development research, lead compounds are often selected in an online fashion for the next-stage experiment (i.e., from discovery to an in vitro study or from in vitro to in vivo studies) [1]. Because the data arrive in a stream or in batches, and real-time decision-making is desirable, online sequential hypothesis testing procedures need to be adopted. Furthermore, hypotheses in drug discovery are commonly partitioned into groups by their nature, and several ways of partitioning them are often of interest at the same time. For example, in developing innovative RNA nanocapsules to treat cancer metastasis, both therapeutic nucleic acids (siRNAs) and delivery nanocapsules are designed [2,3]. At each step of the screening, a decision needs to be made whether to follow up on a particular combination of an siRNA and a nanocapsule. In this case, the combinations are naturally partitioned according to their siRNA and nanocapsule formulations. Ideally, when selecting candidate lead compounds, we want to control false positives with respect to both the siRNA and the nanocapsule formulations. Other partitions may also be of interest, for example, partitions based on experiments in different cancer types [4].

Beyond drug development, the need for multi-layer false discovery rate (FDR) control also arises in many modern applications. In real-time A/B testing on online platforms, experiments are often run concurrently across user segments, device types, or product categories, and the results arrive continuously [5,6]. Similar challenges exist in genomics, where hypotheses (e.g., about gene expression or mutations) may be partitioned by biological pathways or genomic regions and tested sequentially in large-scale screening studies [7]. These situations require statistical methods that make decisions in an online fashion while simultaneously controlling the FDR across multiple layers of structure. Motivated by these examples, we aimed to develop a hypothesis testing procedure that controls the FDR simultaneously for multiple partitions of hypotheses in an online fashion.

Mathematically, at time $t = 1, 2, \dots$, we observe a stream of null hypotheses, $H_{i_t j_t}$, where $i_t$ denotes the index of the siRNA being tested at time $t$, and $j_t$ denotes the index of the nanocapsule being tested at time $t$; $H_{ij}$ is the hypothesis concerning siRNA $i$ and nanocapsule $j$.

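We define two partition-level null hypotheses, $H^{(1)}_{i_t}$ and $H^{(2)}_{j_t}$, such that

$$H^{(1)}_{i_t} = \bigcap_{s \le t:\, i_s = i_t} H_{i_s j_s}, \qquad H^{(2)}_{j_t} = \bigcap_{s \le t:\, j_s = j_t} H_{i_s j_s},$$

where $H^{(1)}_{i_t}$ is the partition-level hypothesis with respect to the siRNAs, and $H^{(2)}_{j_t}$ is the partition-level hypothesis with respect to the nanocapsules. That is, $H^{(1)}_{i_t}$ fixes the siRNA index $i_t$ at time $t$ and ranges over the nanocapsule indices $j$, while $H^{(2)}_{j_t}$ fixes the nanocapsule index $j_t$ at time $t$ and ranges over the siRNA indices $i$. At time $t$, we decide whether to reject $H_{i_t j_t}$ based on its test statistic and p-value, as well as the history information on the previous hypotheses (statistics, p-values, and decisions); we let $\delta_t = 1$ if we reject $H_{i_t j_t}$, and $\delta_t = 0$ if we accept $H_{i_t j_t}$. Our goal is to control the group-level FDR for both the partition according to the siRNAs and the partition according to the nanocapsules, which we denote as $\mathrm{FDR}^{(1)}(t)$ and $\mathrm{FDR}^{(2)}(t)$. Let

$$\mathrm{FDR}^{(m)}(t) = \mathbb{E}\left[\frac{|\mathcal{S}^{(m)}(t) \cap \mathcal{H}^{(m)}_0(t)|}{|\mathcal{S}^{(m)}(t)| \vee 1}\right], \qquad m = 1, 2,$$

where $\mathcal{S}^{(1)}(t)$ and $\mathcal{S}^{(2)}(t)$ denote the sets of rejected hypotheses at time $t$ grouped by the siRNA and nanocapsule partitions, respectively; $\mathcal{H}^{(1)}_0(t)$ and $\mathcal{H}^{(2)}_0(t)$ denote the corresponding sets of true null hypotheses for the siRNA and nanocapsule partitions, respectively; and $\vee$ is the maximum operator: $a \vee b = \max(a, b)$.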

To control the FDR for individual online hypotheses, Foster and Stine (2008) [8] introduced the α-investing framework, a foundational method for online FDR control. This approach treats significance thresholds as a budget, namely α-wealth, which is spent to test hypotheses and replenished upon discoveries (i.e., rejected null hypotheses). This method controls the modified FDR (mFDR), an alternative measure defined as the ratio of the expected number of false discoveries to the expected number of discoveries, and it is sensitive to the order in which the tests are performed. To address these limitations, Javanmard and Montanari (2018) [9] proposed two alternative procedures, LOND (Levels based On Number of Discoveries) and LORD (Levels based On Recent Discoveries), to provide finite-sample FDR control under dependency structures. In LOND, the significance level at each step is scaled according to the number of previous rejections. The LORD procedure, on the other hand, updates the significance thresholds after each discovery, allowing for greater adaptability. Both methods rely on independent p-values. More recently, these methods have been further extended for improved power and relaxed dependence assumptions on the p-values [10,11,12].
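To make the three level rules concrete, the sketch below applies each of them to a single stream of individual p-values. It is a minimal illustration under stated assumptions, not the implementation used in this paper: the decaying weight sequence `gamma_seq`, the wealth-spending fraction `spend`, and the initial wealth `w0` are hypothetical choices. The α-investing function uses the Foster and Stine wealth update (a non-discovery costs α_t/(1 − α_t), a discovery earns a payout) with initial wealth α(1 − α) and payout α as a common parameterization. The LORD function implements the LORD++ variant, in which every past discovery time contributes to the current level, rather than the original LORD rule, because its level formula is compact to state.

```python
import numpy as np


def gamma_seq(T, power=1.6):
    """Positive, nonincreasing weights gamma_1..gamma_T that sum to 1.

    The polynomial decay is an illustrative assumption, not the paper's sequence.
    """
    j = np.arange(1, T + 1)
    g = 1.0 / j ** power
    return g / g.sum()


def alpha_investing(pvals, alpha=0.1, spend=0.5):
    """Foster-Stine alpha-investing with W(0) = alpha * (1 - alpha) and payout alpha."""
    wealth = alpha * (1 - alpha)
    decisions = np.zeros(len(pvals), dtype=int)
    for t, p in enumerate(pvals):
        cost = spend * wealth              # choose alpha_t so that alpha_t / (1 - alpha_t) = cost
        level = cost / (1 + cost)
        if p <= level:
            decisions[t] = 1
            wealth += alpha                # a discovery earns the payout
        else:
            wealth -= cost                 # a non-discovery costs alpha_t / (1 - alpha_t)
    return decisions


def lond(pvals, alpha=0.1):
    """LOND: alpha_t = beta_t * (D(t-1) + 1), with beta_t = alpha * gamma_t."""
    beta = alpha * gamma_seq(len(pvals))
    decisions = np.zeros(len(pvals), dtype=int)
    n_disc = 0                             # D(t-1): discoveries before time t
    for t, p in enumerate(pvals):
        if p <= beta[t] * (n_disc + 1):
            decisions[t] = 1
            n_disc += 1
    return decisions


def lord_pp(pvals, alpha=0.1, w0=None):
    """LORD++: alpha_t = gamma_t*w0 + (alpha - w0)*gamma_{t-tau_1} + alpha*sum_{j>=2} gamma_{t-tau_j}."""
    gamma = gamma_seq(len(pvals))
    w0 = alpha / 2 if w0 is None else w0   # initial wealth, requires 0 <= w0 <= alpha
    decisions = np.zeros(len(pvals), dtype=int)
    taus = []                              # 0-based times of past discoveries
    for t, p in enumerate(pvals):
        level = gamma[t] * w0
        for k, tau in enumerate(taus):
            # gamma_{t - tau} in 1-based indexing is gamma[t - tau - 1] with 0-based times
            level += (alpha - w0 if k == 0 else alpha) * gamma[t - tau - 1]
        if p <= level:
            decisions[t] = 1
            taus.append(t)
    return decisions
```

None of these rules looks at group membership, so each bounds an error rate for individual hypotheses only.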

For FDR control with grouped or structured hypotheses, such as genes grouped by biological pathways or drug compounds tested across cancer types, Ramdas and Barber (2019) [13] developed the p-filter, a method for settings in which the hypotheses are partitioned in several ways at once. The p-filter searches for a set of thresholds, one per partition, such that the resulting rejections are consistent across partitions, and thereby controls the group-level FDR in every partition simultaneously; however, it requires all p-values to be available at once. Ramdas et al. (2019) [14] developed the DAGGER method, which controls the FDR when the hypotheses are structured as a directed acyclic graph whose nodes represent hypotheses with parent–child relationships. DAGGER processes hypotheses top-down, rejecting a node only if its parents are rejected, and guarantees FDR control when the p-values are independent, positively dependent, or arbitrarily dependent.

In many practical applications related to drug discovery, such as those described above, the FDR needs to be controlled at multiple partition levels simultaneously in an online fashion; to our knowledge, no existing method addresses this setting. We aimed to fill this gap by developing a method that controls the group-level FDR, defined on multiple structures or partitions (multiple layers), in an online fashion. In our setting, not only do the hypotheses enter sequentially, but the group membership of each incoming hypothesis in each layer is also not predefined. We developed a novel framework that integrates online FDR control with multi-layer structured testing to provide theoretically guaranteed FDR control in multi-layer online testing environments. The main contributions of this paper are summarized below:

We present multi-layer α-investing, LOND, and LORD algorithms for controlling the FDR in online multi-layer problems.

We provide theoretical guarantees for the multi-layer α-investing, LOND, and LORD methods.

We demonstrate the FDR control and power of our methods numerically over an extensive range of simulation settings.

The rest of this paper is structured as follows. In Section 2, we provide a mathematical formulation of the online multi-layer hypothesis testing problem. In Section 3, we describe the multi-layer α-investing, LOND, and LORD algorithms. In Section 4, we present our main theoretical results: the multi-layer α-investing method simultaneously controls the mFDR in different layers, and the multi-layer LOND and LORD methods simultaneously control the FDR and mFDR in different layers. In Section 5, we demonstrate the performance of our methods with the results of extensive simulation studies.

2. Model Framework

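Now we formulate the problem for more general scenarios, using the following notation from now on. Suppose we have a series of (unlimited) null hypotheses, $H_t$'s, $t = 1, 2, \dots$, and $M$ partitions of the space $\mathcal{H} = \{H_1, H_2, \dots\}$, denoted as $\mathcal{P}^{(m)} = \{\mathcal{P}^{(m)}_1, \mathcal{P}^{(m)}_2, \dots\}$ for $m = 1, \dots, M$. We call these $M$ different partitions “layers” of hypotheses. We can define the group-level null hypotheses in layer $m$ at time $t$ as

$$H^{(m)}_{g,t} = \bigcap_{s \in [t]:\, H_s \in \mathcal{P}^{(m)}_g} H_s,$$

where $[t] = \{1, \dots, t\}$. We also define a group index for the $i$th hypothesis in the $m$th layer as $g^{(m)}_i$, i.e., $H_i \in \mathcal{P}^{(m)}_{g^{(m)}_i}$.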

In the online setting, the $H_t$'s come in sequentially, and the corresponding test statistics $T_t$'s and associated p-values $p_t$'s are also observed sequentially. For each $H_t$, we need to decide whether to reject it based on $T_t$, $p_t$, and the available history information; i.e., $\delta_t = 1$ if we reject $H_t$, and $\delta_t = 0$ if we accept $H_t$. At time $t$ ($t$ is discrete), we have three different types of history information:

All information, $\{(T_s, p_s, \delta_s): s \in [t]\}$.

All decisions, $\{\delta_s: s \in [t]\}$.

A summary of decisions, for example, the number of rejections $\sum_{s \in [t]} \delta_s$.

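Now, we define decisions at the group level using decisions at the individual level. At the individual level, we define the rejection set at time $t$ as $\mathcal{R}(t) = \{s \in [t]: \delta_s = 1\}$. Since the decision whether to reject a group can change over time, we use $\delta^{(m)}_{g,t}$ to denote the decision for group $g$ (a group that has appeared by time $t$) in layer $m$ at time $t$, where

$$\delta^{(m)}_{g,t} = \max_{s \in [t]:\, g^{(m)}_s = g} \delta_s,$$

i.e., a group is rejected once any of its hypotheses is rejected. So for the $m$th partition, the group-level selected set at time $t$ is

$$\mathcal{S}^{(m)}(t) = \{g: \delta^{(m)}_{g,t} = 1\}.$$

Similarly, we denote the truth about $H_t$ at the individual level by $\theta_t$: $\theta_t = 0$ when $H_t$ should be accepted, while $\theta_t = 1$ when $H_t$ should be rejected. We can obtain the true null set $\mathcal{H}_0(t) = \{s \in [t]: \theta_s = 0\}$ and the non-null set $\mathcal{H}_1(t) = \{s \in [t]: \theta_s = 1\}$. For the $m$th partition, the group-level true null set at time $t$ is $\mathcal{H}^{(m)}_0(t) = \{g: \theta_s = 0 \text{ for all } s \in [t] \text{ with } g^{(m)}_s = g\}$.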

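Now we can define the time-dependent false discovery rate (FDR) and modified false discovery rate (mFDR) for each layer separately. For the $m$th layer, we have

$$\mathrm{FDR}^{(m)}(t) = \mathbb{E}\left[\frac{V^{(m)}(t)}{R^{(m)}(t) \vee 1}\right], \qquad \mathrm{mFDR}^{(m)}(t) = \frac{\mathbb{E}[V^{(m)}(t)]}{\mathbb{E}[R^{(m)}(t) \vee 1]},$$

where $V^{(m)}(t) = |\mathcal{S}^{(m)}(t) \cap \mathcal{H}^{(m)}_0(t)|$ and $R^{(m)}(t) = |\mathcal{S}^{(m)}(t)|$.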
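The layer-wise quantities can be checked on a toy stream. The hypothetical helper `group_fdp` below computes the number of false group discoveries, the number of group discoveries, and the realized false discovery proportion for one layer from the individual decisions $\delta_s$, truths $\theta_s$, and group labels observed up to time $t$. It assumes, following the p-filter convention, that a group is rejected once any of its members is rejected and is a true null only if all of its members are null; averaging the returned proportion over replications estimates the layer's FDR.

```python
import numpy as np


def group_fdp(decisions, truths, labels):
    """Group-level (V, R, FDP) for one layer at the current time (hypothetical helper).

    decisions[s] = delta_s (1 = reject), truths[s] = theta_s (1 = non-null),
    labels[s] = group index of H_s in this layer.
    """
    decisions, truths, labels = map(np.asarray, (decisions, truths, labels))
    n_rejected, n_false = 0, 0
    for g in np.unique(labels):
        members = labels == g
        group_rejected = decisions[members].max() == 1   # any member rejected
        group_null = truths[members].max() == 0          # all members null
        n_rejected += int(group_rejected)
        n_false += int(group_rejected and group_null)
    return n_false, n_rejected, n_false / max(n_rejected, 1)
```

For example, with decisions (1, 0, 1, 0), truths (1, 0, 0, 0), siRNA labels (0, 0, 1, 1), and nanocapsule labels (0, 1, 0, 1), the siRNA layer has one false group discovery out of two (FDP 0.5), while the nanocapsule layer has none out of one.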

4. Theoretical Results

We will show that the multi-layer α-investing method simultaneously controls the mFDR in different layers and that the multi-layer LOND and LORD methods simultaneously control both the FDR and mFDR in different layers. First, we introduce one preliminary assumption, which is weaker than assuming independence across the tests.

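Assumption 1. For all $j$ and for all the layers that need to be tested at time $j$,

$$\mathbb{P}(\delta_j = 1 \mid \theta_j = 0, \mathcal{F}_{j-1}) \le \alpha_j \quad \text{and} \quad \mathbb{P}(\delta_j = 1 \mid \theta_j = 1, \mathcal{F}_{j-1}) \le \rho_j,$$

where $\alpha_j$ is the significance level used at time $j$ and $\mathcal{F}_{j-1}$ is the history up to time $j - 1$.

Remark: This assumption is a simple extension of Assumption 2.2 of Aharoni and Rosset (2014) [15] for the generalized α-investing method. It requires that the level and power traits of a test hold even given the history of rejections, which is somewhat weaker than requiring independence. In particular, $\rho_j$ is the maximal probability of rejection for any parameter value in the alternative hypothesis, which quantifies the “best power”.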

Next, we state the theoretical control guarantee for the multi-layer generalized α-investing procedure.

Theorem 1. Under Assumption 1, if , then a multi-layer generalized α-investing procedure simultaneously controls the mFDR for all the layers, $m = 1, \dots, M$.

The proof of Theorem 1 is deferred to Appendix A. One key step is to establish a submartingale, as stated in the following lemma.

Lemma 1. Under Assumption 1 and the multi-layer α-investing procedure, the stochastic processes , are submartingales with respect to .

Next, we state the FDR and mFDR control guarantees for the multi-layer LOND and LORD methods.

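Theorem 2. Suppose that, for every layer $m$ that needs to be tested at time $i$, conditional on the history $\mathcal{F}^{(m)}_{i-1}$ of layer $m$, a true null hypothesis tested at time $i$ is rejected with probability at most its significance level, where the significance levels are given by the LOND algorithm. Then this rule simultaneously controls the multi-layer FDRs and mFDRs at levels less than or equal to α; i.e.,

$$\mathrm{FDR}^{(m)}(t) \le \alpha \quad \text{and} \quad \mathrm{mFDR}^{(m)}(t) \le \alpha \quad \text{for all } m = 1, \dots, M \text{ and all } t \ge 1.$$

Theorem 3. Suppose that, conditional on the history of decisions in layer $m$, $\mathcal{F}^{(m)}_{i-1}$, a true null hypothesis tested at time $i$ is rejected with probability at most its LORD significance level, for all the layers $m$ that need to be tested at time $i$. Then the LORD rule simultaneously controls the multi-layer mFDR at a level less than or equal to α; i.e., $\mathrm{mFDR}^{(m)}(t) \le \alpha$ for all $m = 1, \dots, M$ and all $t \ge 1$. Further, it simultaneously controls the multi-layer FDR at every discovery. More specifically, letting $\tau^{(m)}_k$ be the time of the $k$th discovery in layer $m$, we have the following for all $m = 1, \dots, M$:

$$\sup_{k \ge 1} \mathbb{E}\left[\frac{V^{(m)}(\tau^{(m)}_k)}{R^{(m)}(\tau^{(m)}_k) \vee 1} \,\middle|\, \tau^{(m)}_k < \infty\right] \le \alpha.$$

All the proofs of Lemma 1 and Theorems 1–3 are in Web Appendix A.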
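The LORD guarantee is stated at the discovery times of each layer, that is, at the time $\tau^{(m)}_k$ of the $k$th discovery in layer $m$. The sketch below is a hypothetical helper, not taken from the paper, that records the realized group-level false discovery proportion in one layer each time a new group is first rejected. It assumes that a group is discovered when its first member is rejected and that it counts as a false discovery if all of its members observed so far are null. Averaging the $k$th entry over replications gives a Monte Carlo estimate of the FDR at the $k$th discovery.

```python
import numpy as np


def fdp_at_discovery_times(decisions, truths, labels):
    """Group-level FDP in one layer at each discovery time tau_k (hypothetical helper).

    decisions[s] = delta_s, truths[s] = theta_s (1 = non-null), labels[s] = group of H_s.
    """
    decisions, truths, labels = map(np.asarray, (decisions, truths, labels))
    rejected = []                                  # groups discovered so far, in order
    fdps = []
    for t in range(len(labels)):
        g = labels[t]
        if decisions[t] == 1 and g not in rejected:
            rejected.append(g)                     # t is the time of the k-th discovery
            seen_labels, seen_truths = labels[: t + 1], truths[: t + 1]
            # a discovered group is false if every member seen so far is null
            n_false = sum(seen_truths[seen_labels == h].max() == 0 for h in rejected)
            fdps.append(n_false / len(rejected))
    return fdps
```

On the stream with decisions (1, 0, 1, 0), truths (1, 0, 0, 0), and labels (0, 0, 1, 1), the first discovery is a true group and the second a null group, so the recorded proportions are 0.0 and 0.5.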

5. Numerical Studies

We conducted extensive simulations to evaluate the performance of the proposed multi-layer methods in various scenarios, simulating data from a two-layer model under different settings. For each setting, we compared FDR control, mFDR control, and power at both the group and individual levels. For all simulations, we set the target FDR level at $\alpha = 0.1$ and ran 100 replications.
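With 100 replications per setting, the reported error rates and power are Monte Carlo averages. The hypothetical helper below shows one way to summarize a single layer's replications: the FDR estimate averages the per-replication false discovery proportion, and the mFDR estimate is a ratio of averages with each replication's discovery count floored at 1. Power is taken to be the average fraction of non-null groups that were rejected; this definition is an assumption, since the text does not spell it out here.

```python
import numpy as np


def summarize_replications(V, R, true_rejections, n_nonnull):
    """Monte Carlo FDR, mFDR, and power for one layer (hypothetical helper).

    V[r], R[r]: false and total group discoveries in replication r;
    true_rejections[r], n_nonnull[r]: rejected non-null groups and all non-null groups.
    """
    V, R = np.asarray(V, dtype=float), np.asarray(R, dtype=float)
    tr = np.asarray(true_rejections, dtype=float)
    nn = np.asarray(n_nonnull, dtype=float)
    R1 = np.maximum(R, 1.0)                            # R v 1
    fdr = float(np.mean(V / R1))                       # average false discovery proportion
    mfdr = float(V.mean() / R1.mean())                 # ratio of averages
    power = float(np.mean(tr / np.maximum(nn, 1.0)))   # assumed power definition
    return fdr, mfdr, power
```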

5.1. Simulation of Group Structures

We considered three types of group structures: the first two were balanced, while the third was unbalanced. For the balanced structures, we denoted the number of groups as $G$ and the number of hypotheses per group as $n$. Therefore, the total number of features was $N = G \times n$. The three types of group structures are described below:

Balanced Block Structure: The features came in group by group; i.e., all the features from the first group came in, then all the features from the second group followed, and so on.

Balanced Interleaved Structure: The first feature was from group 1, the second feature was from group 2, …, the $G$th feature was from group $G$, the $(G+1)$th feature was from group 1, and so on.

Unbalanced Structure: The features were randomly assigned to be in either the current group or a new group by some random mechanism. To be more specific, we fixed the total number of features as

N and denoted the membership of the

tth feature as

, letting

where

.
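The three group structures can be generated as label sequences, with group indices starting at 0. The block and interleaved generators follow the descriptions above for $G$ groups of $n$ features each. The unbalanced generator is hypothetical, because the exact random mechanism is not reproduced in this text: it assumes that each feature after the first opens a new group with probability `p_new` and otherwise joins the current group.

```python
import numpy as np


def block_labels(G, n):
    """Balanced Block Structure: all n features of group 0, then group 1, and so on."""
    return np.repeat(np.arange(G), n)


def interleaved_labels(G, n):
    """Balanced Interleaved Structure: feature t (0-based) belongs to group t mod G."""
    return np.tile(np.arange(G), n)


def unbalanced_labels(N, p_new, rng):
    """Unbalanced Structure under a hypothetical mechanism: each feature after the
    first opens a new group with probability p_new, otherwise joins the current group."""
    labels = np.zeros(N, dtype=int)
    for t in range(1, N):
        labels[t] = labels[t - 1] + int(rng.random() < p_new)
    return labels


# Baseline-sized example: 20 groups of 10 features each.
print(block_labels(20, 10)[:12])
print(interleaved_labels(20, 10)[:22])
```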

5.2. Simulation of True Signals

We considered three signal structures:

Fixed Pattern: The first fraction $\pi_1$ of the groups were true groups. Within the true groups, the first fraction $\pi_2$ of the features were the true features.

Random Pattern: The true groups and true signals were randomly sampled. We first randomly sampled a fraction $\pi_1$ of the groups to be true groups. Then, within each true group, we randomly sampled a fraction $\pi_2$ of the features as the true features.

Markov Pattern: The signals were generated from a hidden Markov model with two hidden states: a stationary state and an eruption state. In the stationary state, ; in the eruption state, . At each step, the signal remained in the same state as in the previous step with probability 0.9 and switched to the other state with probability 0.1.
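The three signal patterns can be sketched as functions that map group labels to truth indicators $\theta_t$. Here `pi1` and `pi2` are hypothetical names for the proportion of true groups and the proportion of true features within a true group (20% and 50% in the baseline configuration). For the Markov Pattern, the chain switches state with probability 0.1 as described; starting in the stationary state and the per-state probabilities of a true signal, `p_signal`, are placeholder assumptions, not the paper's values.

```python
import numpy as np


def fixed_pattern(labels, pi1, pi2):
    """First pi1 share of groups are true; within each, the first pi2 share of features."""
    labels = np.asarray(labels)
    groups = np.unique(labels)                          # sorted group indices
    theta = np.zeros(len(labels), dtype=int)
    for g in groups[: int(round(pi1 * len(groups)))]:
        idx = np.flatnonzero(labels == g)               # arrival order within the group
        theta[idx[: int(round(pi2 * len(idx)))]] = 1
    return theta


def random_pattern(labels, pi1, pi2, rng):
    """Randomly sampled true groups, then randomly sampled true features within them."""
    labels = np.asarray(labels)
    groups = np.unique(labels)
    theta = np.zeros(len(labels), dtype=int)
    chosen = rng.choice(groups, size=int(round(pi1 * len(groups))), replace=False)
    for g in chosen:
        idx = np.flatnonzero(labels == g)
        theta[rng.choice(idx, size=int(round(pi2 * len(idx))), replace=False)] = 1
    return theta


def markov_pattern(N, rng, p_stay=0.9, p_signal=(0.05, 0.8)):
    """Two-state chain (0 = stationary, 1 = eruption); p_signal values are placeholders."""
    state = 0
    theta = np.zeros(N, dtype=int)
    for t in range(N):
        if t > 0 and rng.random() > p_stay:             # switch with probability 1 - p_stay
            state = 1 - state
        theta[t] = int(rng.random() < p_signal[state])
    return theta
```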

5.3. Simulation of Signal Strength

We considered three types of signal strength settings:

Constant Strength: The signal strengths were equal; the p-values for the true signals were from a two-sided test with z-statistics drawn from $N(\mu, 1)$.

Increasing Strength: The signal strengths were increasing; the p-values were from a two-sided test with z-statistics from , where . Here t is the number of true signals until that moment, and is the total number of true signals in the simulation.

Decreasing Strength: The signal strengths were decreasing; the p-values were from a two-sided test with z-statistics from , where , with t and defined the same as in (2).
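For the Constant Strength setting, the p-value generation can be sketched as follows: null p-values are drawn from Uniform(0, 1), and each true signal receives the two-sided p-value $2\{1 - \Phi(|z|)\} = \operatorname{erfc}(|z|/\sqrt{2})$ with $z \sim N(\mu, 1)$. Passing an array for `mu` (one mean per true signal, in arrival order) accommodates the increasing and decreasing settings, but their exact mean schedules are not reproduced here, so any particular schedule is an assumption of the user.

```python
import math

import numpy as np


def two_sided_p(z):
    """Two-sided p-value 2 * (1 - Phi(|z|)), computed as erfc(|z| / sqrt(2))."""
    return math.erfc(abs(z) / math.sqrt(2.0))


def simulate_pvalues(theta, mu, rng):
    """Uniform(0, 1) p-values for nulls; two-sided z-test p-values for true signals.

    mu is a scalar (Constant Strength) or an array with one mean per true signal.
    """
    theta = np.asarray(theta)
    p = rng.uniform(size=len(theta))                    # nulls keep these Uniform draws
    signals = np.flatnonzero(theta == 1)
    means = np.broadcast_to(np.asarray(mu, dtype=float), signals.shape)
    z = rng.normal(loc=means, scale=1.0)                # z ~ N(mu, 1) for each true signal
    p[signals] = [two_sided_p(v) for v in z]
    return p
```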

All the simulation designs satisfied Assumption 1 by construction. Specifically, under the null hypothesis ($\theta_j = 0$), p-values were generated independently from the Uniform(0, 1) distribution. This guaranteed that the probability of rejection at each step was at most the significance level $\alpha_j$ used at that step (indeed, $\mathbb{P}(p_j \le \alpha_j) = \alpha_j$), satisfying the first condition of Assumption 1. Under the alternative hypothesis ($\theta_j = 1$), p-values were generated from two-sided tests using normally distributed test statistics with nonzero means. These distributions were stochastically smaller than the null distribution, which led to a higher probability of rejection; since any probability is bounded above by one, the second condition of Assumption 1 was satisfied with the constant $\rho_j = 1$. Moreover, our simulation generated data sequentially and independently, so the conditional probability bounds in Assumption 1 held under the filtration $\mathcal{F}_{j-1}$. Therefore, both conditions of Assumption 1 were met in all the simulation settings, and the theoretical guarantees in Theorems 1–3 applied.
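The first condition can also be checked numerically: for Uniform(0, 1) null p-values, the rejection frequency at a fixed level should match that level up to Monte Carlo error. A minimal check, in which the function name, seed, and number of draws are illustrative choices:

```python
import numpy as np


def null_rejection_rate(alpha_j, n_draws=200_000, seed=1):
    """Monte Carlo estimate of P(p_j <= alpha_j) for p_j ~ Uniform(0, 1)."""
    rng = np.random.default_rng(seed)
    p_null = rng.uniform(size=n_draws)
    return float(np.mean(p_null <= alpha_j))


for level in (0.001, 0.01, 0.1):
    print(level, null_rejection_rate(level))
```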

We compared seven methods in each setting: the original generalized α-investing (GAI), original LORD (LORD), original LOND (LOND), multi-layer generalized α-investing (ml-GAI), multi-layer LORD (ml-LORD), multi-layer LOND (ml-LOND), and modified multi-layer LOND (ml-LOND_m) methods.

5.4. Simulation Results

Our baseline configuration used the following parameters: 20% true groups ($\pi_1 = 0.2$), 50% true signals within true groups ($\pi_2 = 0.5$), 20 groups in total ($G = 20$), 10 features per group ($n = 10$), the Balanced Block Structure, the Fixed Pattern, and Constant Strength. We varied the signal strength parameter $\mu$ to examine how the methods performed across different effect sizes.

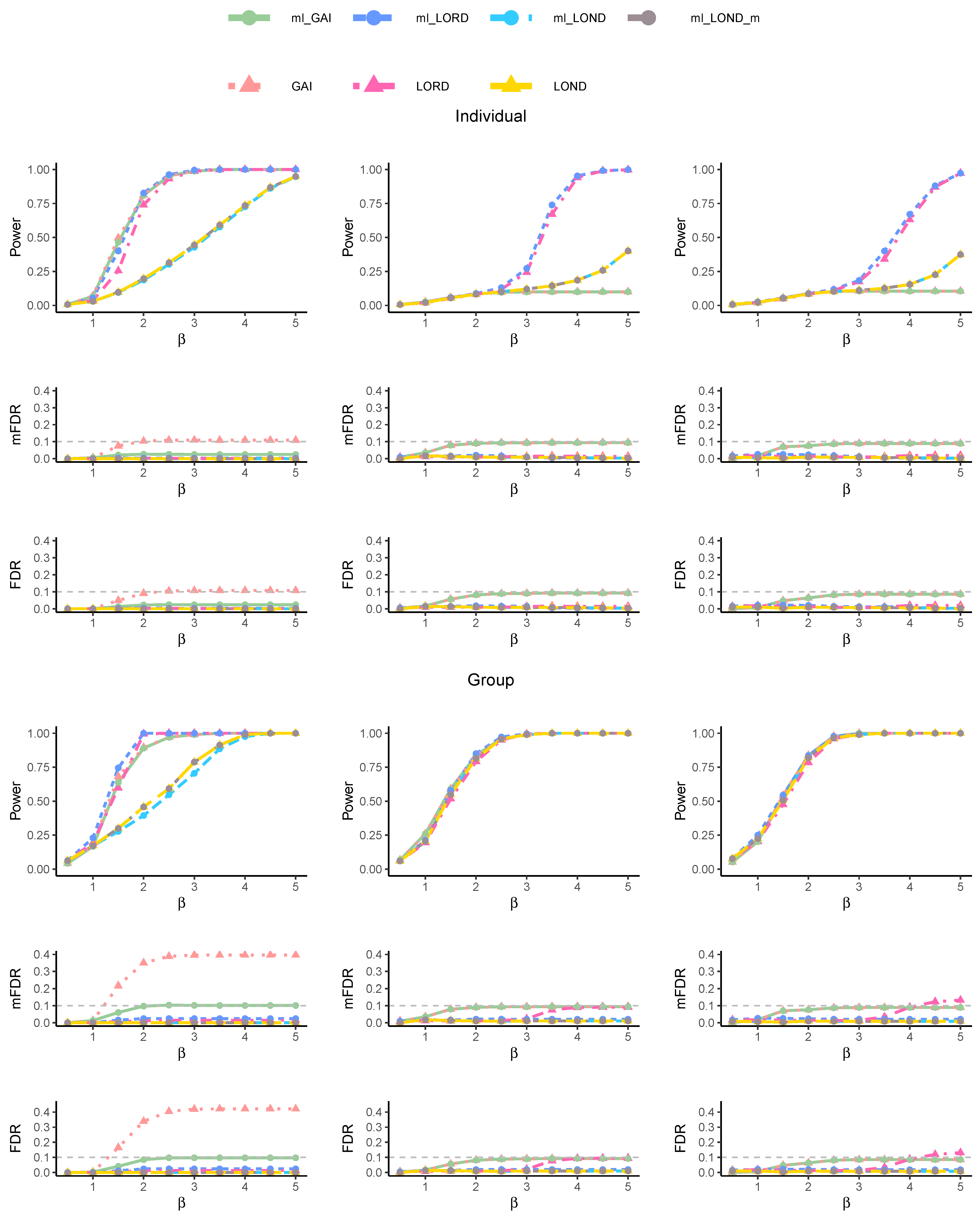

Figure 1 shows the power, FDR, and mFDR for both the individual and group layers under different group structures. For the Balanced Block Structure, all the methods except GAI maintained FDR and mFDR control at both the individual and group levels. For GAI, both the group-level FDR and mFDR exceeded 0.4 when $\mu$ exceeded 2.0. The ml-LORD and LORD methods exhibited the highest power at both levels. As $\mu$ increased beyond 4.5, the power of all the methods approached 1.0. For the Balanced Interleaved Structure, the LORD methods (both LORD and ml-LORD) substantially outperformed all other methods in terms of power at both the individual and group levels. Importantly, all the multi-layer methods maintained FDR and mFDR control at both levels. The results for the Unbalanced Structure were largely consistent with those for the Balanced Interleaved Structure. However, the LORD procedure slightly exceeded the 0.1 threshold when $\mu$ reached 0.5.

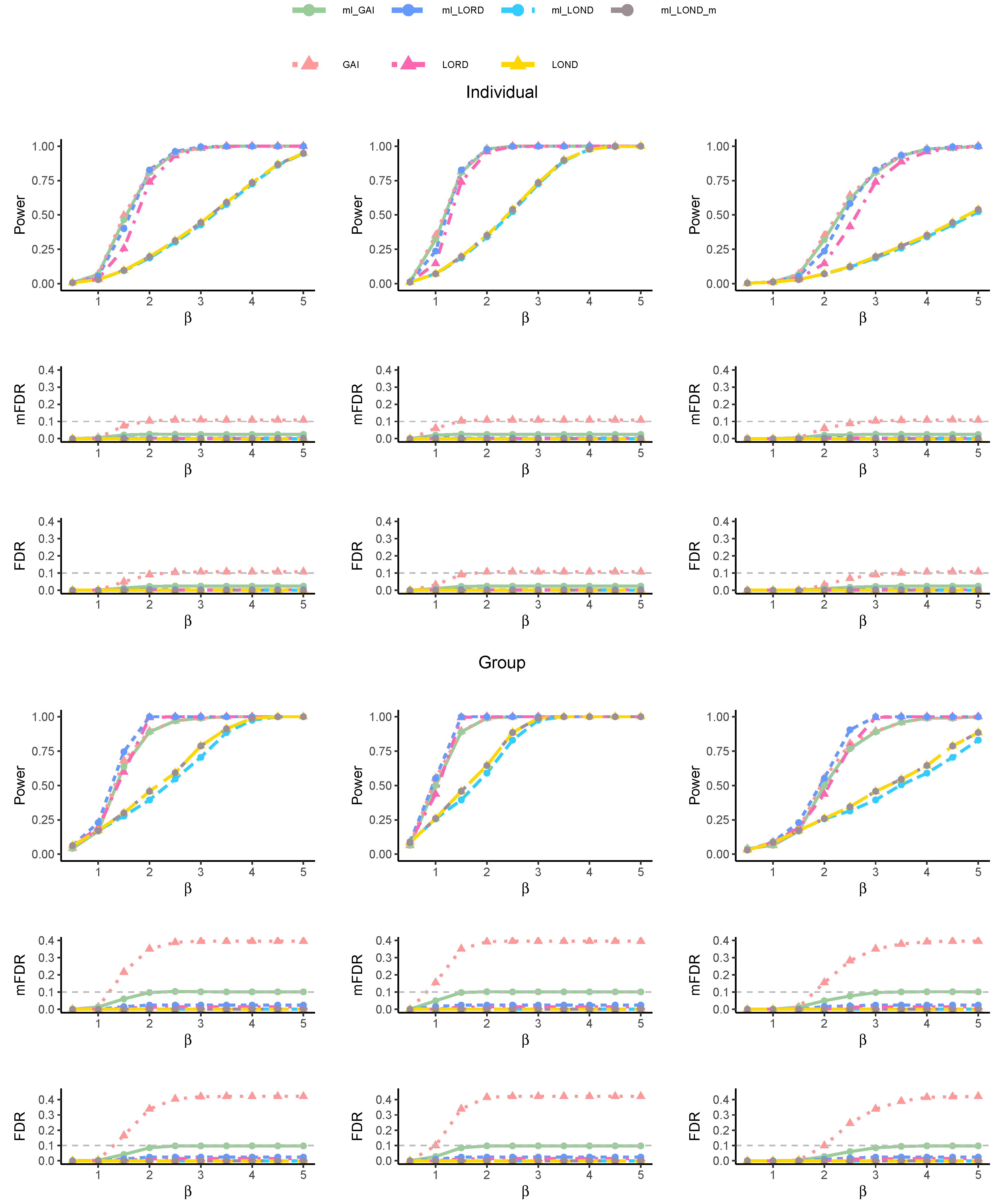

Figure 2 illustrates the performance metrics across different true signal patterns. All the methods controlled both the individual and group FDR and mFDR under the Fixed Pattern and the Markov Pattern. However, LORD failed to control both the group-level mFDR and FDR under the Random Pattern when $\mu$ exceeded 3. In terms of power, LORD and ml-LORD clearly outperformed the other methods in all three patterns.

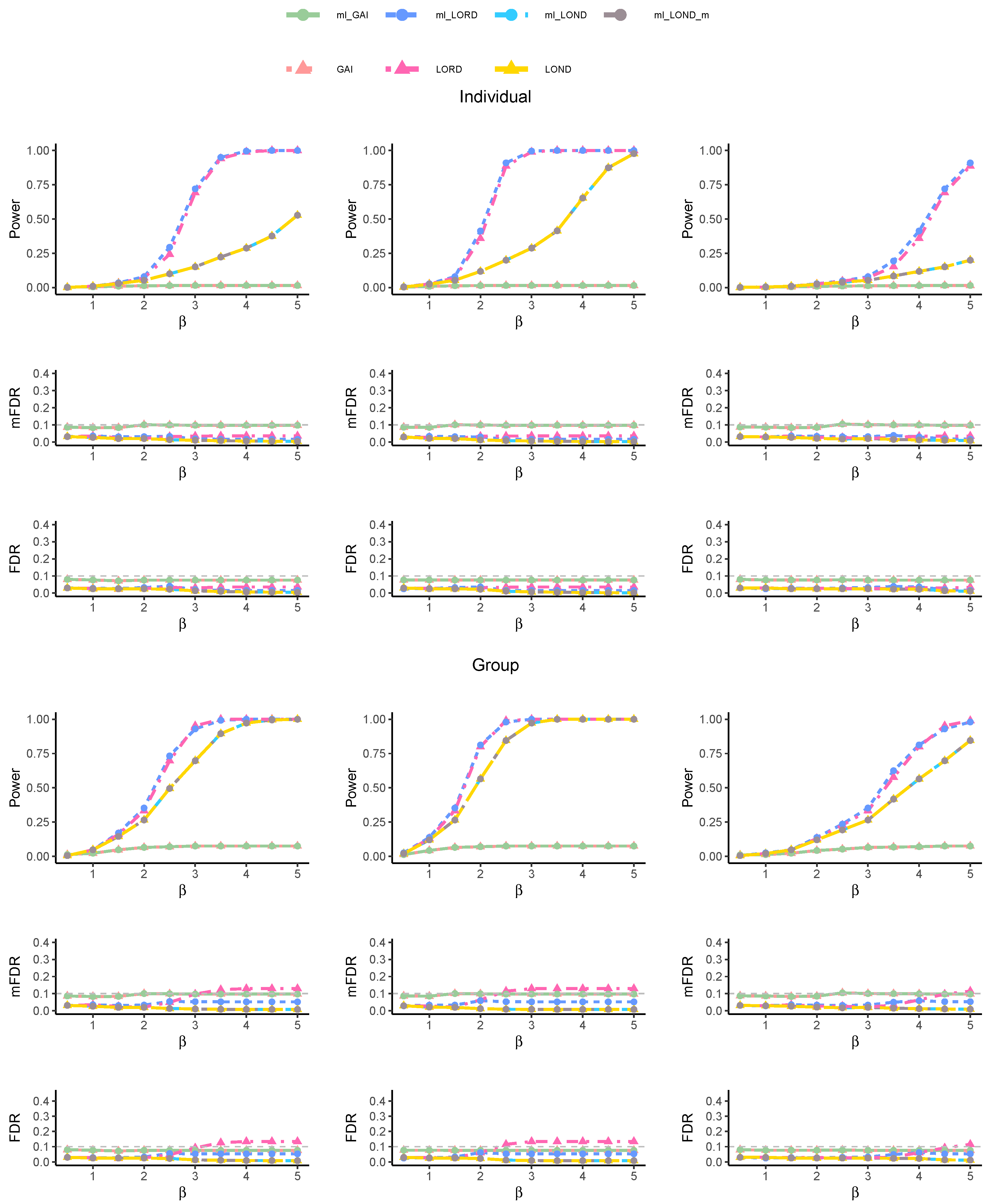

Figure 3 shows the impact of different signal strength patterns on power and FDR control. The GAI method failed to control both the group-level mFDR and FDR across the three patterns. In contrast, all the multi-layer methods successfully controlled both the mFDR and FDR. Regarding power, all the versions of the LOND procedure (LOND, ml-LOND, and ml-LOND_m) exhibited lower power than the α-investing (GAI, ml-GAI) and LORD (LORD, ml-LORD) methods. Across the three patterns, the ml-LORD method demonstrated the best overall performance, consistently achieving the highest power while maintaining FDR control. Furthermore, Figure 4 shows the results with a reduced number of true signals. Most methods continued to control both the FDR and mFDR. However, the LORD method slightly exceeded the 0.1 threshold when the effect size $\mu$ exceeded 3.0.

Our extensive simulation studies highlighted the advantages of multi-layer FDR control methods over the original ones. Under the Balanced Block Structure, the GAI procedure failed to maintain group-level FDR control. In more complex scenarios, such as the Random Pattern setting, the LORD method also failed to adequately control the group-level FDR. In contrast, all the multi-layer methods consistently maintained valid control of both the FDR and mFDR across all the settings. In terms of power, the multi-layer LORD method slightly outperformed the original LORD approach while maintaining FDR control.

All the simulation results are consistent with the theoretical results showing that the multi-layer α-investing method simultaneously controls the mFDR in multiple layers (Theorem 1) and that the multi-layer LOND and LORD procedures achieve simultaneous control of both the FDR and mFDR across layers (Theorems 2 and 3). These findings demonstrate that the multi-layer methods not only ensure more reliable FDR/mFDR control, particularly at the group level, but also deliver competitive statistical power relative to the original methods.

6. Discussion

In summary, we propose three methods that simultaneously control the mFDR or FDR for multiple layers of groups (partitions) of hypotheses in an online manner. The mFDR or FDR control is theoretically guaranteed and was demonstrated in simulations. These methods fill an important gap in drug discovery when the FDR must be controlled in drug screening for multiple layers of features (e.g., siRNAs, nanocapsules, and target cell lines). For example, when screening siRNA–nanocapsule combinations, our methods allow researchers to make statements about both the efficacy of specific combinations and the general performance of specific siRNAs or nanocapsules across all the combinations tested, which can accelerate the identification of promising candidates and reduce false leads. In addition, the proposed algorithms are computationally efficient: the computational overhead of our multi-layer methods is minimal compared with that of the original methods. This makes our methods highly scalable, even for large-scale screening applications involving thousands of hypotheses across multiple layers.

A challenge we face is that the power of these methods decreases as the number of layers increases. We can potentially increase the power by adding more assumptions and by estimating the pattern of the true signals and the correlation between the p-values more accurately. The modified multi-layer LOND method (ml-LOND_m) is an example of this approach: it had better power than the multi-layer LOND method in the simulations. Developing such methods with theoretical guarantees will be the subject of our future work.

Another limitation lies in the assumption of independence or weak dependence among p-values across different layers. In real-world settings, particularly in high-throughput biological studies or multi-modal drug screens, the characteristics that define different layers are often correlated. Our multi-layer online frameworks do not assume independence across these characteristics, because the key assumptions are stated in terms of the conditional distribution given the history information. The practical challenge is constructing test statistics that satisfy these conditional assumptions in such scenarios. Extensions of the conditional permutation test [16] could be explored as a future direction.