Abstract

This work addresses the challenge of low-cost road quality monitoring in the context of developing countries. Specifically, we focus on utilizing accelerometer data collected from smartphones as drivers traverse roads in their vehicles. Given the high frequency of data collection by accelerometers, the resulting large datasets pose a computational challenge for anomaly detection using supervised classification algorithms. To mitigate scalability issues, it is beneficial to first group the data into homogeneous continuous sections. This approach aligns with the broader problem of change detection in a finite sequence of data indexed by a totally ordered set, which could represent either a time series or a spatial trajectory. Curvature features are extracted and segmented through adapted Ascending Hierarchical Clustering (AHC) and K-means algorithms suited to sequential road data. Our goal is to segment roads into homogeneous sub-sections that can subsequently be labeled based on the level or type of irregularity. Using an analysis of variance (ANOVA) statistical test, we demonstrate that curvature features are effective for classification, with a Fisher value of and a p-value of . We use two change detection algorithms: (1) Ascending Hierarchical Clustering (AHC) and (2) K-means. Based on the dataset and the number of classes, AHC and K-means achieve the following performance metrics, respectively: specificity of and , true negative rate of and , accuracy of and , -coefficient of and , and Rand index of and . The average computational time for K-means is s, compared to s for AHC, resulting in a ratio of 1070. Overall, AHC is significantly faster and achieves a better balance of performance compared to K-means.

MSC:

68Q25; 68Txx; 68T09; 68T10

1. Introduction

The French standard “NF EN 13306: 01 2018” (the European standard for maintenance terminology) defines maintenance as all technical, administrative and management actions during the life cycle of a system intended to maintain it in, or restore it to, a state in which it can perform its required function. An important aspect of maintenance is the diagnosis of failures. The failure of a system can have more or less serious consequences. This is particularly true for roads, which are of vital importance for development. In economic terms, poor road quality entails direct costs such as repairs to damaged vehicles, as well as indirect costs such as reduced productivity due to travel difficulties [1]. Beyond the purely economic aspect, road failures can cause social trauma. Over the past decade, the rate of road traffic deaths has risen dramatically in Africa, with nearly 250,000 lives lost in 2021 (https://www.afro.who.int/fr/news/les-deces-dus-aux-accidents-de-la-circulation-augmentent-dans-la-region-africaine-mais) (13 December 2024). According to the World Health Organization (WHO, Geneva, Switzerland), there will be around million traffic-related deaths worldwide in 2023 (https://www.who.int/fr/news/item/13-12-2023-despite-notable-progress-road-safety-remains-urgent-global-issue) (13 December 2024). It is therefore very important to develop mechanisms for monitoring road quality, which would make it possible to identify failures and assess the corrective actions required.

Many works in the literature deal with the detection of road anomalies. This detection is generally carried out by analyzing data collected by various sensors. In general, it involves the analysis of images taken by various means [2,3,4], vibration analysis, or kinematic analysis of the road course [5,6,7,8]. In the first case, the images obtained are processed by algorithms to detect irregularities. Image analysis benefits from technological advances in image processing and computer vision. However, the quality of detection can be degraded by environmental factors such as lighting or weather conditions. For vibration and kinematic analysis, sensors such as Global Positioning System (GPS) receivers, accelerometers and gyroscopes built into smartphones or vehicles are used. This last group of sensors seems more accessible in the context of developing countries, where a large proportion of vehicles in circulation are second-hand and in a relatively advanced state of amortization [9]. For example, 92% of Cameroon’s vehicle fleet is made up of vehicles acquired second-hand and are more than 15 years old (https://www.investiraucameroun.com/transport/1706-6454-92-du-parc-automobile-camerounais-est-constitue-de-vehicules-acquis-en-seconde-main) (13 December 2024).

Regardless of the type of road status data collected as time series, classifying this data is essential. This process involves two phases. The first phase consists of segmenting the time series into homogeneous sequences. Groups of homogeneous points are then labeled with predefined road types. In the second phase, automatic classification models are often trained in a supervised manner [5,6,7]. Classification is based on the characteristics extracted and the segmentation performed in the first phase [10,11]. The final performance of the models is highly sensitive to this step. The segmentation problem is equivalent to change detection, which is frequently addressed using unsupervised learning algorithms [12,13,14,15].

Unsupervised learning algorithms seek to identify underlying structures in data. In particular, clustering algorithms aim to group data into subsets, or “clusters”, based on similarity or distance between data points [16]. Some works exploit clustering to evaluate the change between several time series [17,18]. In this work, however, we aim to detect change within a single time series. Tran follows this approach in [15] by proposing a real-time segmentation algorithm that uses a dynamic K-means algorithm based on Euclidean distance. In the same vein, the authors of [19] propose the SWAB algorithm, which creates classes by minimizing a spline-based interpolation error. These important general works do not dwell on the extraction of classification features. However, it is common practice in data science to take into account the particularities of the application domain. For example, to avoid obstacles or adapt to road irregularities, drivers are often forced to adjust their trajectory, which then becomes less regular. Since the quality of the road is linked to its geometry, it seems natural to extract geometric characteristics from driving trajectories. Moreover, since the segmentation of a time series can be reduced to a binary labeling, existing classification algorithms could be adapted or even improved.

This work tackles the problem of detecting changes in road conditions using accelerometer data. The aim is to segment a given road into homogeneous sub-sections that can then be labeled according to the level or type of irregularity. To this end, we develop and evaluate change detection algorithms and compare their performance on real datasets. The research hypotheses are that (1) curvatures are relevant characteristics for classifying road irregularities, and (2) the bottom-up hierarchical classification algorithm is faster and more efficient than the K-means algorithm for this task. Our contributions are as follows:

- We pre-process the data before labeling by reducing its dimensionality through segmentation, which is a specific form of clustering.

- We propose features that better characterize changes in road structure.

- We adapt abstract algorithmic approaches (specifically, AHC and K-means) to road segmentation.

- We demonstrate that the segmentation problem in time series is analogous to binary classification or -labeling, enabling the use of a confusion matrix for evaluating the performance of the proposed algorithms.

- We introduce a novel change detection metric called the rate of agreement with the change.

2. Related Work

2.1. Road Condition Detection and Classification

The detection of anomalies on the road surface using mobile sensing has been extensively explored through supervised approaches. Basavaraju et al. (2019) proposed a supervised model to classify road surface anomalies such as potholes and cracks using vibration data and images. Their system employed support vector machines and neural networks for multi-class classification, but required fully labeled training data [20]. Nguyen et al. (2019) developed a crowdsourcing-based solution that combines vibration and GPS data from smartphones. Their system uses adaptive thresholding and spatial clustering to detect and map surface defects. Despite its practical implementation, the approach lacks a segmentation mechanism and depends heavily on parameter tuning [21]. Wu et al. (2020) introduced a machine learning pipeline for pothole detection using smartphone accelerometers. It features a preprocessing stage followed by supervised classification. While effective, the model is focused on binary detection and is reliant on well-annotated datasets [22]. Rajput et al. (2022) designed an unsupervised framework for monitoring road conditions using vibration data from public transport vehicles. By exploiting the regular motion of buses, they detect irregular road segments without the need for training labels, offering a scalable alternative for smart city deployment [23]. Bustamante et al. (2022) proposed a shallow neural network to classify road types based on smartphone accelerometer readings. Although their method achieved promising classification accuracy, it does not incorporate any temporal segmentation process [24]. Kim et al. (2024) presented a deep learning architecture combining CNN and LSTM to detect road anomalies from spatiotemporal sequences. Their work emphasizes learning temporal dependencies in road condition monitoring, but is computationally intensive and less suitable for low-resource environments [25]. 
Recent advances in land cover and land use mapping from satellite time series demonstrate the potential of remote sensing for detecting large-scale changes in infrastructure, including roads [26]. However, such products typically offer limited spatial and temporal resolution, which constrains their ability to capture fine-grained features like local curvature variations, small-scale surface anomalies, or gradual structural degradation. In contrast, our approach based on vehicle-mounted inertial and GPS sensors provides a more detailed, ground-level perspective. These two approaches are therefore complementary: satellite data is useful for macro-scale monitoring, while sensor-based methods are better suited for localized, high-resolution road condition assessment.

2.2. Trajectory Segmentation

Several studies have investigated trajectory segmentation. Van Kuppevelt et al. (2019) proposed an unsupervised method to segment daily-life accelerometer signals using a Hidden Semi-Markov Model. Their approach segments sensor data into behavioral states without requiring labels, showcasing the feasibility of structure discovery in time series [27]. Etemad et al. (2019) introduced a trajectory segmentation algorithm based on interpolation and clustering. Their method automatically detects homogeneous movement phases by minimizing interpolation errors in an unsupervised way, without assuming prior knowledge of trajectory labels [28]. Tapia et al. (2024) designed an unsupervised gesture recognition framework that uses curvature and its derivative to segment continuous movement trajectories. Although applied in robotics and gesture domains, their curvature-based approach shares conceptual similarities with our method for road segmentation [29]. In order to better understand our contribution, it seems appropriate to focus on fundamental segmentation methods based on data in the form of time series, sometimes acquired in real time.

2.2.1. Sliding Window and Bottom up Method

The SWAB method proposed in [19] is a combination of bottom-up clustering and the sliding window clustering that facilitates real-time segmentation of a time series flow. The authors in [19] seek the best interpolation of a time series using linear splines. The pieces of linearity also define a partition of the series into classes. Given a class C, the corresponding spline is obtained by linear regression () and the maximum absolute estimation error must not exceed a given threshold (). Given an integer (excluding 0), the size of a class is bounded between and . It is also limited by the maximum absolute estimation error.

Algorithms 1 and 2 are adapted versions of the bottom-up algorithm and the SWAB algorithm discussed in [19]. Their descriptions remain fundamentally identical to the original versions, but they are now closer to a practical implementation. Letting n be the length of time series T, the respective complexities of Algorithms 1 and 2 are and . Indeed, the complexity of the regression function named “line” is , there are nested loops, and Algorithm 2 calls Algorithm 1.

| Algorithm 1 BUA (T: Time Series, : error threshold) |

| {} while do end while for to do if then end if end for while and do for to do if then end if end for end while Return |

| Algorithm 2 SWAB (T: Time Series, N: Window’s length, : error threshold) |

| {} /* Temporary cluster*/ {} /* Final cluster*/ {} /* The window*/ while () do () and () and () while do () and () and () end while end while Return |
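To make the bottom-up principle concrete, the following is a minimal Python sketch of a generic bottom-up piecewise-linear segmentation in the spirit of [19]. It is not a transcription of Algorithm 1: the function names `fit_error` and `bottom_up`, the initial partition into pairs of points, and the use of `numpy.polyfit` for the regression are assumptions made for illustration.

```python
import numpy as np

def fit_error(t, y):
    """Maximum absolute residual of a least-squares line fitted to (t, y)."""
    if len(t) < 3:
        return 0.0  # a line fits one or two points exactly
    slope, intercept = np.polyfit(t, y, 1)
    return float(np.max(np.abs(y - (slope * t + intercept))))

def bottom_up(t, y, max_error):
    """Merge adjacent segments while the cheapest merge stays under max_error.

    Returns a list of (start, end) index pairs, end exclusive.
    """
    n = len(y)
    # Start from the finest partition: consecutive pairs of points.
    bounds = list(range(0, n, 2)) + [n]
    segments = [(bounds[i], bounds[i + 1]) for i in range(len(bounds) - 1)]
    while len(segments) > 1:
        # Cost of merging each segment with its right neighbour.
        costs = [fit_error(t[a:d], y[a:d])
                 for (a, _), (_, d) in zip(segments[:-1], segments[1:])]
        i = int(np.argmin(costs))
        if costs[i] > max_error:
            break  # every remaining merge would violate the threshold
        segments[i:i + 2] = [(segments[i][0], segments[i + 1][1])]
    return segments

# Two linear pieces with a jump between index 9 and index 10.
t = np.arange(20, dtype=float)
y = np.where(t < 10, 0.5 * t, 20.0 - t)
segments = bottom_up(t, y, max_error=1e-6)
```

Each pass recomputes every merge cost, which keeps the sketch short; a practical implementation would only update the costs of the two neighbours of the merged segment.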

2.2.2. Overlapping Windows Method

The overlapping windows method consists in selecting two blocks of data of the same size N that have points in common. The time series is considered as a set in a dimensional space-time. The first block is partitioned into K disjoint groups using the K-means algorithm. For each class, the size, the mean (centroid) vector and the standard deviation (radius) vector are computed and saved as an abstract class. The mean and standard deviation vectors are calculated dimension by dimension. A point in the second block belongs to an abstract class if, in each dimension, the distance (the usual distance on the real line) between it and the centroid is less than or equal to the corresponding standard deviation component. Thus, membership of an abstract class requires the success of tests. A point that does not belong to any abstract class is considered a change point. Algorithm 3 is an adaptation of Algorithm 1 presented in [15]. Although K-means is a stochastic method, we can estimate the complexity of Algorithm 3 as . This comes from the fact that the number of possible partitions into K subsets of a set with N elements is .

| Algorithm 3 OWA (T: Time Series, N: Length of the window, K: Number of Clusters) |

| {} /* The final cluster*/ /* The current position of a first cursor in T */ /* C is a cluster from in K classes */ /* The current position of a second cursor in T */ while () do if () or () then else end if end while Return |
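The membership test at the heart of the overlapping windows method can be sketched as follows. This is only an illustration of the per-dimension test described above, not the full Algorithm 3: the helper names `abstract_classes` and `is_change_point` are hypothetical, and the cluster labels of the first block are assumed to come from any K-means implementation (they are written by hand in the example).

```python
import numpy as np

def abstract_classes(block, labels):
    """Summarise each cluster of `block` by its size, centroid and radius."""
    classes = []
    for k in np.unique(labels):
        members = block[labels == k]
        # Mean and standard deviation are taken dimension by dimension.
        classes.append((len(members), members.mean(axis=0), members.std(axis=0)))
    return classes

def is_change_point(point, classes):
    """A point is a change point if it falls in no abstract class."""
    for _, centroid, radius in classes:
        # Membership requires passing the test in every dimension.
        if np.all(np.abs(point - centroid) <= radius):
            return False
    return True

# First block: two clusters, labelled by hand for the example.
block = np.array([[0.0, 0.0], [0.0, 2.0], [10.0, 10.0], [10.0, 12.0]])
labels = np.array([0, 0, 1, 1])
classes = abstract_classes(block, labels)

inside = is_change_point(np.array([0.0, 1.5]), classes)   # within class 0
outside = is_change_point(np.array([5.0, 5.0]), classes)  # in no class
```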

Table 1 summarizes the most relevant studies related to road condition detection and trajectory segmentation. Supervised methods (e.g., [22,25]) offer high classification accuracy but rely on labeled data and do not perform explicit segmentation. Unsupervised works (e.g., [27,29]) focus on structural analysis but rarely target road data or leverage geometric descriptors. Our method bridges these gaps by combining curvature-based features, a custom dissimilarity metric, and adaptations of AHC and K-means for time-series segmentation. It stands out for being fully unsupervised, label-free, and tailored to road structure analysis, which makes it more suitable for deployment in resource-constrained environments.

Table 1.

Extended comparison of related works on unsupervised and supervised road and trajectory analysis.

3. Methodology of the Work

The detection of road irregularities can be divided into two main stages: (1) the detection of changes and (2) the characterization or labeling of these changes. In this work, we focus on the first stage, which involves segmenting the road into a series of relatively homogeneous sections or classes. Since the objective is not directly labeling, we employ unsupervised approaches to classification.

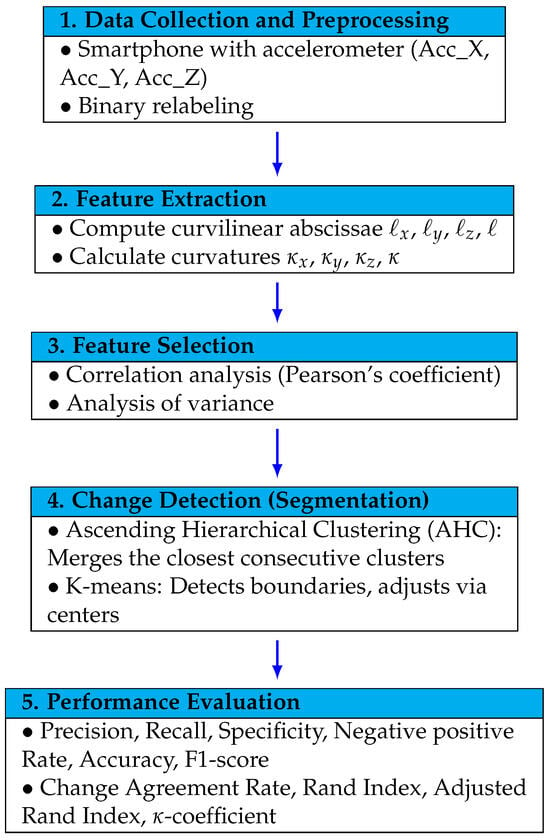

The proposed methodology consists of five steps: (1) Collection and preprocessing of accelerometer data, where smartphone sensors record vibrations along road segments. (2) Feature extraction is performed using numerical integration and differentiation techniques to calculate curvilinear abscissas and curvatures. (3) Feature selection, where correlation and variance analyses are used to retain the most discriminative attributes. (4) Change detection is carried out using adapted versions of Ascending Hierarchical Clustering (AHC) and K-means, tailored to the sequential nature of the data. (5) Performance evaluation of the resulting segmentation using both classical metrics (e.g., Precision, Recall, F1-score) and additional measures such as the Adjusted Rand Index and the Change Agreement Rate. The overall methodological framework adopted in this study is summarized in the workflow presented in Figure 1. The proposed methodology is generalizable and remains applicable regardless of sensor frequency, driving behavior, or environmental conditions.

Figure 1.

Overall methodological framework.

3.1. Data Collection and Pre-Processing

Detecting points of change on a road involves monitoring information that is characteristic of the route. These characteristics are varied in nature (sounds, images, etc.) and may require specialized and costly hardware. As our objective is to propose effective solutions for contexts with limited resources, we have opted for data that is relatively easy to obtain from an accelerometer built into everyday equipment such as a smartphone. We use the trajectories of the vehicles to assess any changes in the nature of the road.

3.1.1. Description of the Dataset

We use a dataset collected on various road sections using a smartphone equipped with a three-axis accelerometer for acceleration measurements. The sensor provided samples at a frequency of 10 Hz. The data, previously used in [11] for supervised classification, are available in CSV format via the following URL: https://github.com/simonwu53/RoadSurfaceRecognition/blob/master/data/sensor_data_v2.zip (13 November 2024). The accelerometer data used in this study were already preprocessed by the dataset authors [11]. Each record contains the following fields:

- Timestamps: sampling instants in milliseconds.

- Raw data: raw measurements of the components of the acceleration vector for the X, Y and Z axes.

- Adjusted data: acceleration data transformed from the phone’s coordinate system to a universal coordinate system.

- Route labels: character string that can take the values ’smooth’, ’bumpy’ or ’rough’.

The main characteristics of the dataset used in this study, including data types, sources, resolution, acquisition devices, and labeling information, are summarized in Table 2.

Table 2.

Summary of dataset characteristics used in the study.

The data is enriched by secondary information calculated on the basis of the first data collected: curvilinear abscissa (ℓs) and curvatures (s). Since only the changes are of interest, the data are relabeled with binary values. In this approach, changes are represented by transitions from 0 to 1 or from 1 to 0, as illustrated in Table 3. The sequence of labels corresponds to the following three classes: , , and . This labeling aligns with the logical framework for change detection described in [15]. The curvilinear abscissas in each direction of the orthonormal basis of Cartesian space, and the associated curvatures, are also added to the data, as shown in Table 4.

Table 3.

Labeling of data.

Table 4.

Dataset structure.

3.1.2. Extraction of Features

The features used in this work are the curvilinear abscissa (ℓ) and curvatures (), computed using acceleration data from accelerometers. The primary advantage of using curvatures is that they generally capture the intrinsic characteristics of a trajectory, independent of velocity. As a result, the information about the road condition conveyed by curvatures is likely to be more robust. This approach helps mitigate the issue of sampling frequency highlighted in [6]. Large curvature values indicate significant or frequent irregularities, while a constant curvature provides insights into the shape of the road, which can be useful for the labeling process. Curvature can be sensitive to noise and sensor drift. However, the data set used in this study was pre-processed and denoised. For further theoretical details of curvatures, we refer to [30].

Considering the trajectories , , and the curvilinear abscissas are respectively given through the following formulas:

In the Frenet’s basis, we have the curvatures

with

In practice, the above formulas are approximated using the trapezoidal integration method and the finite difference derivatives available in [31]. Letting , and a locally integrable function.

For any time , and a function v, we have the well known approximations
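As an illustration of this numerical step, the sketch below integrates a sampled acceleration signal with the trapezoidal rule to obtain velocity and arc length (curvilinear abscissa), and then evaluates the classical curvature of a space curve, $\kappa = \lVert v \times a \rVert / \lVert v \rVert^{3}$. Several choices here are assumptions rather than the paper's own definitions: the initial velocity `v0` must be supplied, the per-axis curvatures used in this work are not reproduced, and because acceleration is measured directly this variant needs no finite-difference derivative.

```python
import numpy as np

def cumulative_trapezoid(f, t):
    """Running trapezoidal integral of samples f (n, d) over times t; starts at 0."""
    steps = 0.5 * (f[1:] + f[:-1]) * np.diff(t)[:, None]
    return np.vstack([np.zeros((1, f.shape[1])), np.cumsum(steps, axis=0)])

def arc_length_and_curvature(t, acc, v0=None):
    """Arc length and curvature of the trajectory with acceleration samples acc (n, 3)."""
    v0 = np.zeros(acc.shape[1]) if v0 is None else np.asarray(v0, dtype=float)
    vel = v0 + cumulative_trapezoid(acc, t)           # velocity by integration
    speed = np.linalg.norm(vel, axis=1)
    arc = cumulative_trapezoid(speed[:, None], t)[:, 0]
    cross = np.linalg.norm(np.cross(vel, acc), axis=1)
    with np.errstate(divide="ignore", invalid="ignore"):
        # Curvature is undefined at rest; it is set to 0 there by convention.
        kappa = np.where(speed > 1e-12, cross / speed**3, 0.0)
    return arc, kappa

# Uniform circular motion of radius 2 and unit angular speed.
R = 2.0
t = np.linspace(0.0, 1.0, 101)
acc = np.column_stack([-R * np.cos(t), -R * np.sin(t), np.zeros_like(t)])
arc, kappa = arc_length_and_curvature(t, acc, v0=[0.0, R, 0.0])
```

On this test trajectory the curvature should stay close to 1/R and the arc length at t = 1 close to R, up to the discretization error of the trapezoidal rule.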

3.2. Change Detection Algorithms

In this section, we present two adaptations of well-known classification algorithms for detecting change in data with a time-series structure. These algorithms are ascending hierarchical clustering (AHC) and K-means. The choice of AHC and K-means is guided by their simplicity and ease of adaptation to the sequential nature of our curvature-based data. As unsupervised methods, they are well-suited to our context and require minimal assumptions. Their low computational cost and straightforward implementation make them practical alternatives to more complex segmentation methods, especially when aiming for scalable and interpretable solutions. While both AHC and K-means are well-established in the literature for general-purpose clustering, our contribution lies in their novel adaptation to a specific segmentation task: identifying changes in road surface conditions from sequential accelerometer data collected by smartphones.

In this research, we adapt these classical methods to a segmentation framework in two ways:

- We reformulate K-means and AHC as segmentation algorithms that respect the sequential structure of the data.

- We introduce a new dissimilarity measure that respects the characteristics of road surfaces.

3.2.1. Ascending Hierarchical Classification

Ascending Hierarchical Clustering (AHC) is a widely used method for grouping data into nested clusters based on pairwise dissimilarities [19]. The classification method we adopt is based on an elliptic distance between tuples of curvatures . Given weights , the distance is given by

The weights are motivated by the fact that each feature has its own scale, which should be normalized to avoid order-of-magnitude effects. For example, the variations of x and y are in general larger than the variation of z. The principle of ascending hierarchical classification is to progressively group the sets of points (or tuples) that are nearest according to the defined distance. Algorithm 4 describes the various steps of the AHC algorithm. Given that the complexity of the distance function d is , it can easily be deduced, from the nested loops, that the complexity of Algorithm 4 is .

| Algorithm 4 AHC (T: Time Series) |

| /*the final cluster*/ N /*the number of classification attempts*/ for do if then /*the list of distances between elements of taken by twos*/ for do end for while do if then else end if end while end if end for return |
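The merging principle can be sketched in Python as follows. This is a simplified illustration, not Algorithm 4 itself: it assumes that only adjacent segments may be merged (to preserve the sequential structure), that segments are compared through their mean feature vectors, and that the elliptic distance is a weighted Euclidean distance. The names `weighted_distance` and `sequential_ahc` are hypothetical.

```python
import numpy as np

def weighted_distance(u, v, w):
    """Weighted Euclidean ("elliptic") distance between two feature tuples."""
    return float(np.sqrt(np.sum(w * (u - v) ** 2)))

def sequential_ahc(features, weights, n_classes):
    """Merge the closest pair of adjacent segments until n_classes remain.

    Returns segment boundaries as (start, end) pairs, end exclusive.
    """
    segments = [(i, i + 1) for i in range(len(features))]
    means = [features[i].astype(float) for i in range(len(features))]
    while len(segments) > n_classes:
        # Distance between each segment and its right neighbour.
        gaps = [weighted_distance(means[i], means[i + 1], weights)
                for i in range(len(segments) - 1)]
        i = int(np.argmin(gaps))
        a, b = segments[i][0], segments[i + 1][1]
        segments[i:i + 2] = [(a, b)]
        means[i:i + 2] = [features[a:b].mean(axis=0)]
    return segments

# One feature, three plateaus.
features = np.array([[0.0], [0.1], [0.0], [5.0], [5.1], [5.0], [9.0], [9.2]])
segments = sequential_ahc(features, weights=np.array([1.0]), n_classes=3)
```

Restricting merges to neighbours is what keeps every class a contiguous stretch of road; an unconstrained AHC could group distant points with similar curvature.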

3.2.2. K-Means Algorithm

K-means is a general algorithmic principle that has been adapted to different contexts and needs [16]. The K-means algorithm we present takes into account the fact that the data are sequential. Classes are delimited by points of change, or frontiers. The centers are generally the midpoints between the frontiers, except for the first class, where the center is the first element, and the last class, where it is the last element. This technical choice facilitates the class adjustment procedure, which assigns the elements between two centers to the class of the nearest center. The convexity constraint requires that the distance be an increasing function of the distance between indexes. We therefore take the distance from an index to a center to be the maximum of the distances between that center and all intermediate indexes up to the current index. Readjustments are repeated until the changes are minor. Algorithm 5 describes the various steps of the K-means algorithm. As discussed in Section 2.2.2, K-means has a stochastic nature, but we can establish a lower bound for its complexity. Considering the nested loops and the complexity of the distance function d, the complexity of Algorithm 5 is .

| Algorithm 5 K-means (T: Time Series, K: Number of Clusters) |

| /* Number of points in T */ /* Randomly select distinct values in */ /* are the point before change */ {} /* Initialize Centers */ for to do if {} then else end if end for while do {} {} for to do if then else end if while and do if then else end if end while if then else end if end for if then end if end while /*the final cluster*/ for ( to length()) do end for return |
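The idea of adjusting frontiers iteratively can be illustrated with the simplified sketch below. It deliberately departs from Algorithm 5 in several ways, all of which are assumptions made to keep the example short and deterministic: frontiers start evenly spaced instead of being drawn at random, class centers are segment means, and each frontier is moved to the position that minimizes the within-class squared error of its two neighbouring classes, rather than by the nearest-center rule with the running-maximum distance described above.

```python
import numpy as np

def sse(x):
    """Sum of squared deviations from the segment mean."""
    return float(np.sum((x - x.mean(axis=0)) ** 2)) if len(x) else 0.0

def sequential_kmeans(features, k, max_iter=100):
    """Return frontiers [0, b_1, ..., b_{k-1}, n] of k contiguous classes."""
    n = len(features)
    # Deterministic start: evenly spaced frontiers (requires n >= k).
    bounds = [i * n // k for i in range(k + 1)]
    for _ in range(max_iter):
        moved = False
        for j in range(1, k):
            lo, hi = bounds[j - 1], bounds[j + 1]
            # Try every admissible position of frontier j between its neighbours.
            best = min(range(lo + 1, hi),
                       key=lambda b: sse(features[lo:b]) + sse(features[b:hi]))
            if best != bounds[j]:
                bounds[j], moved = best, True
        if not moved:
            break  # frontiers are stable
    return bounds

# Two plateaus: the true frontier lies between index 3 and index 4.
features = np.array([[0.0], [0.1], [0.0], [0.1],
                     [5.0], [5.1], [5.0], [5.1], [5.0], [5.1]])
bounds = sequential_kmeans(features, k=2)
```

Because every class stays a non-empty interval of indexes, the result is always a valid segmentation, which an unconstrained K-means would not guarantee.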

3.3. Tools for Performance Evaluation

3.3.1. Feature Selection and Dissimilarity Distance

As described in Section 3.1.1, the data have qualitative labels and numeric features. The selection of features is based on correlation analysis, which is extensively discussed in [32]. Dependence between features is evaluated through the Pearson correlation coefficient, which makes it possible to reduce the number of features and the redundancy of information. Given a sample we recall the Pearson correlation coefficient given by

Dependence between the features and the labels is evaluated by the Fisher statistic from the analysis of variance. Given a labeled feature the Fisher statistic is given by

with k the number of labels, the number of times the i-th label appears, n the size of the dataset, and the j-th value of the feature X corresponding to the i-th label.
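Both statistics can be computed in a few lines. The sketch below uses the textbook definitions of the Pearson coefficient and of the one-way ANOVA F statistic (between-group mean square over within-group mean square, with k − 1 and n − k degrees of freedom), which match the quantities named above; the function names and the toy data are illustrative only.

```python
import numpy as np

def pearson(x, y):
    """Pearson correlation coefficient of two samples of equal length."""
    xc, yc = x - x.mean(), y - y.mean()
    return float(np.sum(xc * yc) / np.sqrt(np.sum(xc**2) * np.sum(yc**2)))

def fisher_statistic(values, labels):
    """One-way ANOVA F: between-group over within-group mean squares."""
    groups = [values[labels == g] for g in np.unique(labels)]
    n, k = len(values), len(groups)
    grand = values.mean()
    between = sum(len(g) * (g.mean() - grand) ** 2 for g in groups) / (k - 1)
    within = sum(np.sum((g - g.mean()) ** 2) for g in groups) / (n - k)
    return float(between / within)

# Toy feature with three labels (group means 2, 3 and 6).
values = np.array([1.0, 2.0, 3.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0])
labels = np.array(["smooth"] * 3 + ["bumpy"] * 3 + ["rough"] * 3)
f_value = fisher_statistic(values, labels)
r = pearson(np.array([1.0, 2.0, 3.0, 4.0]), np.array([2.0, 4.0, 6.0, 8.0]))
```

A large F value means that the feature varies much more between road labels than within them, which is the sense in which a feature is called relevant here.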

Although the choice of curvature as a potential feature is motivated by the search for a certain constancy even under change, variation cannot really be avoided. Although the Euclidean metric is widely used to evaluate dissimilarity, here we introduce a new distance that accounts for uncertainties and for the convex structure of classes. Indeed, given three indexes i < k < j in a time series X, if i and j belong to the same class then k also belongs to that class (by convexity). Hence we define the distance

The distance corresponds to the standard deviation of the set

when the mean is zero and to the coefficient of variation when the mean is non-zero. Thus, a class is considered when the relative dispersion is as small as possible.
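A minimal sketch of such a dispersion-based distance is given below. Since the defining formula is not reproduced here, the details are assumptions: the set is taken to be all values between the two indexes (both included), the population standard deviation is used, and the coefficient of variation divides by the absolute value of the mean.

```python
import numpy as np

def dissimilarity(x, i, j):
    """Relative dispersion of the values of x between indexes i and j (inclusive)."""
    window = np.asarray(x[min(i, j):max(i, j) + 1], dtype=float)
    mean, std = window.mean(), window.std()
    # Standard deviation when the mean is zero, coefficient of variation otherwise.
    return float(std) if mean == 0 else float(std / abs(mean))

x = [2.0, 2.0, 2.0, 4.0, 4.0]
d_same = dissimilarity(x, 0, 2)    # constant stretch
d_mixed = dissimilarity(x, 0, 4)   # stretch spanning a change
d_zero_mean = dissimilarity([-1.0, 1.0], 0, 1)
```

Because the distance looks at every intermediate value and not only at the two endpoints, two indexes separated by a change are far apart even when their own values coincide.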

3.3.2. Performance Metrics

Algorithms 4 and 5 are evaluated on the data according to the metrics shown in Table 5. Although grouping matters more than labeling in the problem we tackle, all the components of the confusion matrix (precision, recall, specificity and negative positive rate) are applicable thanks to the binary labeling we adopted. We introduce the “agreement rate on change” to capture whether a classification algorithm over-predicts or under-predicts changes. Note that a change is a nonzero difference between two consecutive binary labels. Further metrics are available in [33,34,35,36], but we have retained only those with simple and meaningful interpretations, such as the coefficient, the Rand index (RI) and its adjusted value.

Table 5.

Table of metrics and their formulas.
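The following sketch shows how a segmentation can be turned into binary labels and scored with the standard confusion-matrix ratios, and how changes can be counted as nonzero differences between consecutive labels. It uses only the textbook definitions of precision, recall, specificity and accuracy; the alternating 0/1 labeling of consecutive segments is an assumption, and the paper's agreement rate on change is not reproduced because its formula is not available here.

```python
import numpy as np

def binary_labels(bounds):
    """Alternate 0/1 over consecutive segments given frontiers [0, ..., n]."""
    labels = np.zeros(bounds[-1], dtype=int)
    for s, (a, b) in enumerate(zip(bounds[:-1], bounds[1:])):
        labels[a:b] = s % 2
    return labels

def ratio(num, den):
    """Safe division: NaN when the denominator is zero."""
    return num / den if den else float("nan")

def confusion_metrics(y_true, y_pred):
    tp = int(np.sum((y_true == 1) & (y_pred == 1)))
    tn = int(np.sum((y_true == 0) & (y_pred == 0)))
    fp = int(np.sum((y_true == 0) & (y_pred == 1)))
    fn = int(np.sum((y_true == 1) & (y_pred == 0)))
    return {
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "accuracy": ratio(tp + tn, len(y_true)),
    }

def n_changes(labels):
    """Number of nonzero differences between consecutive labels."""
    return int(np.count_nonzero(np.diff(labels)))

y_true = binary_labels([0, 4, 10])   # reference frontier at index 4
y_pred = binary_labels([0, 5, 10])   # predicted frontier one step late
scores = confusion_metrics(y_true, y_pred)
```

Comparing `n_changes(y_pred)` with `n_changes(y_true)` gives a first indication of over- or under-prediction of changes, independently of where the frontiers fall.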

The authors in [36] give a very good analysis permitting to choose the adequate adjusted Rand index (ARI) according to the embedded random model. Given the general Formula (20), the key is to get and . is the expectation of RI obtained with a random clustering, while is the maximum possible value of RI.

Since we have the true clustering, the formulas of given in [36] are not applicable and is not necessarily 1. Indeed, since the number of classes is a hyperparameter, it may differ from the real one. As we will see in the following, comparing two clusterings with different numbers of classes may not lead to .

Given a clustering of a set , set if i and j belong to the same class, and otherwise. If K denotes the number of classes in , then there are possibilities of clustering. This follows from the ordered nature of time series. Let us assume that the real clustering is and its number of classes is . Having in mind that for a given event A, if A occurs and otherwise,

In the particular case , the Equation (20) is not determined but its suitable value is 1. In the simpler case we have and

In (22), is a probability measure and when we have

If then we will consider the new reference cluster such as

and we apply the Equations (20)–(22) after replacing by . In that case . The aim here is to check if at least the first classes agree with the reference clustering when the number of classes is limited to . A perfect agreement on these first classes would suggest that, by reducing the limitation on the number of classes, we could still have relatively good performance.

In the last case , we will consider the new reference cluster such as

and we apply the Equations (20)–(22) after replacing by . Similarly to the previous case, the aim here is to check if at least the first classes agree with the reference clustering. A perfect agreement will correspond to , that is the last classes are just existing to satisfy the constraint on the number of classes. Thus, they all have the minimal size 1. With the clustering we have .
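For small series, the Rand index and its expectation under a random clustering can be checked by brute force. The sketch below relies on two assumptions that are not taken from the formulas above: classes are non-empty intervals of consecutive indexes, so that a clustering of n points into K classes is determined by K − 1 cut positions and there are $\binom{n-1}{K-1}$ of them, and the random model is uniform over these clusterings.

```python
from itertools import combinations
from math import comb

def labels_from_cuts(n, cuts):
    """Class index of each of n ordered points, given sorted cut positions."""
    labels, cls = [], 0
    for i in range(n):
        if cls < len(cuts) and i == cuts[cls]:
            cls += 1  # a new class starts at index i
        labels.append(cls)
    return labels

def rand_index(a, b):
    """Share of index pairs on which clusterings a and b agree."""
    pairs = list(combinations(range(len(a)), 2))
    agree = sum((a[i] == a[j]) == (b[i] == b[j]) for i, j in pairs)
    return agree / len(pairs)

def expected_rand_index(reference, k):
    """Mean RI against all contiguous clusterings with k classes (uniform model)."""
    n = len(reference)
    all_cuts = list(combinations(range(1, n), k - 1))
    assert len(all_cuts) == comb(n - 1, k - 1)
    total = sum(rand_index(reference, labels_from_cuts(n, c)) for c in all_cuts)
    return total / len(all_cuts)

reference = labels_from_cuts(5, (2,))        # classes {0, 1} and {2, 3, 4}
expected = expected_rand_index(reference, 2)
```

The enumeration grows combinatorially with n and is only meant as a check on closed-form expressions for short series.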

The coefficient has an objective very similar to that of the adjusted Rand index. It is the ratio

where is the number “True Positive”, is the number of “True Negative”, is the number of “False Positive” and is the number of “False Negative”.

In order to simplify the presentation of the summary Table 5, we set as the real label of the i-th term and as the predicted label of the i-th term.

4. Results and Discussions

Following the methodology described in Section 3, we implemented all experiments in Python (https://www.python.org/, accessed on 25 May 2025) using a custom module designed for sequential road segmentation based on accelerometer data.

4.1. Visualization of Data and Selection of Features

In this section we present the evolution of curvatures as a function of path length. We also study, using analysis of variance, the dependence between road quality and the various curvatures. Finally, we carry out a correlation study between the latter to limit redundancies.

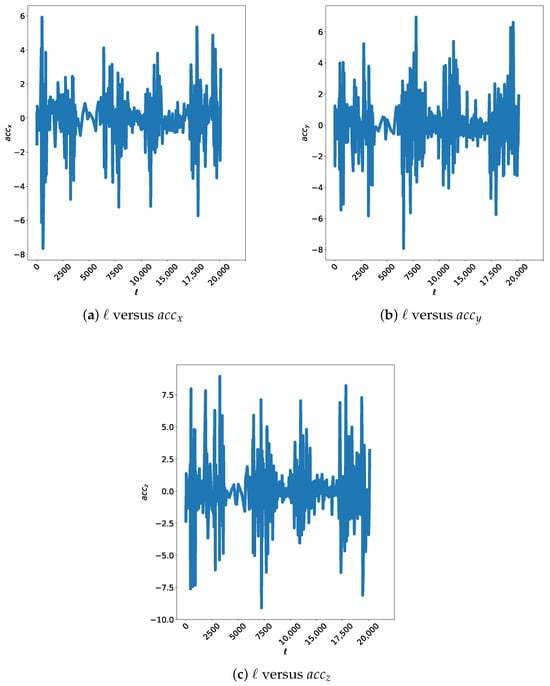

Figure 2 shows the evolution of the three components of the acceleration vector (, , ) as a function of the distance traveled ℓ. This shows changes in driving pace and trajectory. These changes can be explained by the local characteristics of the road, including its quality. Although the shapes of the curves are visually different it is difficult to evaluate the most significant profile.

Figure 2.

Acceleration as function of the length of the path.

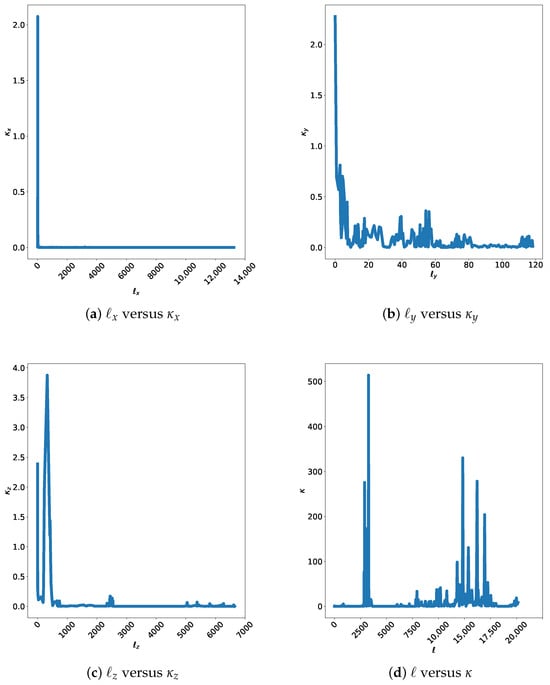

To highlight the irregular variations in trajectories, we evaluate the curvatures , , and . Figure 3 shows that, despite some high peaks, the curves and are relatively regular compared to the curves and . This makes it possible to identify y and more precisely as a potential explanatory variable for road quality. At first sight, this result is rather surprising given the nature of the irregularities considered (smooth, bumpy, rough), which would lead us to think more in terms of through the z component. Although the literature has mainly focused on the z-axis [6,37,38], some works also include the y-axis [5]. Taking into account the common reflex of drivers, which is to dodge the obstacle, the variation in the y component becomes more understandable. Naturally is linked to the y component and is impacted by its change.

Figure 3. Curvature as a function of the path length.

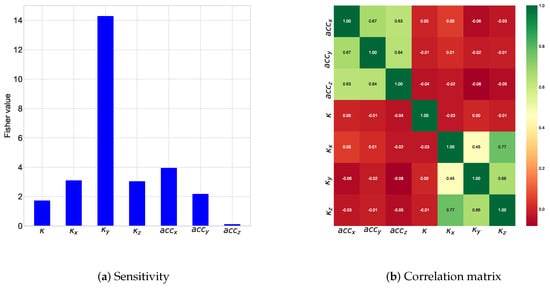

Since Figure 2 and Figure 3 only allow hypotheses to be made about the factors that predict road quality, we ran analyses of variance on the available data. Correlation studies were also carried out to avoid redundant information. Figure 4 shows the Fisher statistics for each potential predictor of road quality and displays a correlation matrix. The most relevant predictor is with a Fisher value of and a p-value of . It is followed by with a Fisher value of and a p-value of . The parameter is barely relevant, with a Fisher value of and a p-value of . The correlation matrix shows correlations within the group of acceleration components and within the group of curvatures . We also find that the curvatures are highly non-linear transformations of the acceleration. Combining the analysis of variance and the correlation analysis, it seems natural to select as the predictor of road quality.
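To make the Fisher statistic concrete, the following minimal sketch computes a one-way ANOVA F value for a single feature grouped by road-quality label. The function name `one_way_anova_f` and the sample values are hypothetical and chosen only for illustration; they are not the values or the code used in this study.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return the one-way ANOVA F statistic for a list of samples (one list per class)."""
    k = len(groups)                      # number of classes
    n = sum(len(g) for g in groups)      # total number of observations
    grand_mean = sum(sum(g) for g in groups) / n
    # Between-class sum of squares: spread of the class means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-class sum of squares: spread of the observations around their class mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Hypothetical curvature samples per road-quality label (illustrative values only).
flat = [0.10, 0.12, 0.11, 0.09]
bumpy = [0.30, 0.28, 0.33, 0.31]
rough = [0.55, 0.60, 0.52, 0.58]
f_value = one_way_anova_f([flat, bumpy, rough])
```

A large F value indicates that the class means differ far more than the within-class scatter would suggest. If SciPy is available, `scipy.stats.f_oneway` returns both the statistic and the associated p-value.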

Figure 4. Correlation analysis.

4.2. Comparison Between AHC and K-Means Performances

In this section, we carry out a comparative study of the two proposed clustering algorithms: AHC and K-means. As mentioned in the methodology, our aim is to detect changes in road quality in an unsupervised way, without specifying the nature of the change. The change points subdivide the data into homogeneous consecutive classes. The algorithms are evaluated both with several classic metrics (based on binary labeling) and with a new metric that we consider relevant: the rate of agreement on the change. We are also interested in the experimental computation time.
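As a rough illustration of how a bottom-up procedure can produce homogeneous consecutive classes, the sketch below merges only adjacent segments, using a Ward-like increase in within-segment squared error as the merge cost. The function name `sequential_ahc`, the merge criterion, and the sample values are assumptions made for illustration; they are not taken from the module used in the experiments.

```python
def sequential_ahc(values, n_classes):
    """Greedily merge adjacent segments until n_classes remain.

    Returns a list of (start, end) index pairs, with end exclusive.
    """
    # Each segment stores [start, end, sum of values, sum of squared values].
    segments = [[i, i + 1, v, v * v] for i, v in enumerate(values)]

    def sse(seg):
        # Within-segment sum of squared errors around the segment mean.
        size = seg[1] - seg[0]
        return seg[3] - seg[2] ** 2 / size

    def merge(a, b):
        return [a[0], b[1], a[2] + b[2], a[3] + b[3]]

    while len(segments) > n_classes:
        best_index, best_cost = 0, float("inf")
        # Only neighbouring segments are candidates, which preserves the ordering.
        for i in range(len(segments) - 1):
            a, b = segments[i], segments[i + 1]
            cost = sse(merge(a, b)) - sse(a) - sse(b)
            if cost < best_cost:
                best_index, best_cost = i, cost
        a, b = segments[best_index], segments[best_index + 1]
        segments[best_index:best_index + 2] = [merge(a, b)]
    return [(seg[0], seg[1]) for seg in segments]


# Hypothetical feature values along the path (illustrative only).
curvature = [0.1, 0.1, 0.1, 5.0, 5.0, 0.2, 0.2, 0.2]
sections = sequential_ahc(curvature, n_classes=3)
```

Restricting merges to neighbours is what distinguishes this from a generic agglomerative clustering: every resulting class is a contiguous stretch of road. This naive version rescans all neighbouring pairs at each step, so it is meant for reading rather than for large datasets.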

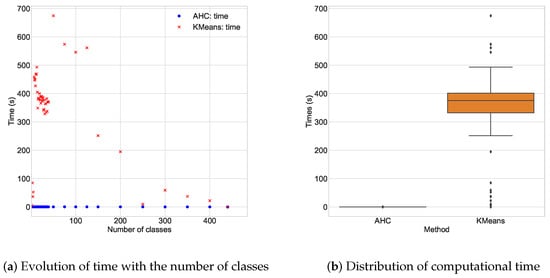

Figure 5 shows the experimental execution times for the K-means and AHC algorithms. Naturally, the fewer the classes, the longer the classification procedure. The execution times of the K-means algorithm are much larger than those of the AHC algorithm. This can be explained by the convergence process of the K-means algorithm, which is exploratory in nature. It was also predictable from the complexities of the two algorithms, which are polynomial for AHC and exponential for K-means.

Figure 5. Distributions of the computational time.

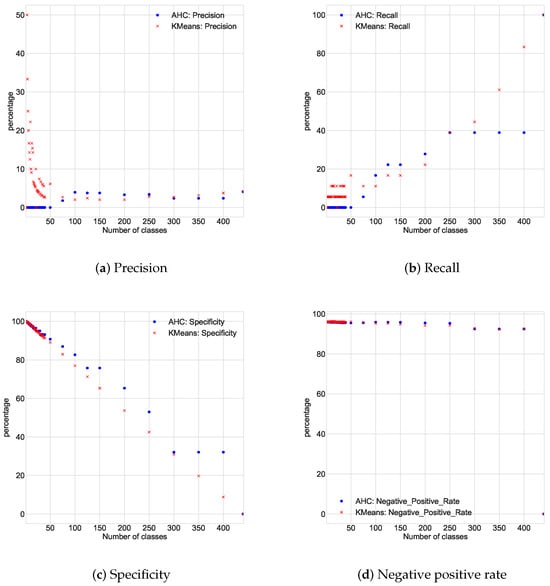

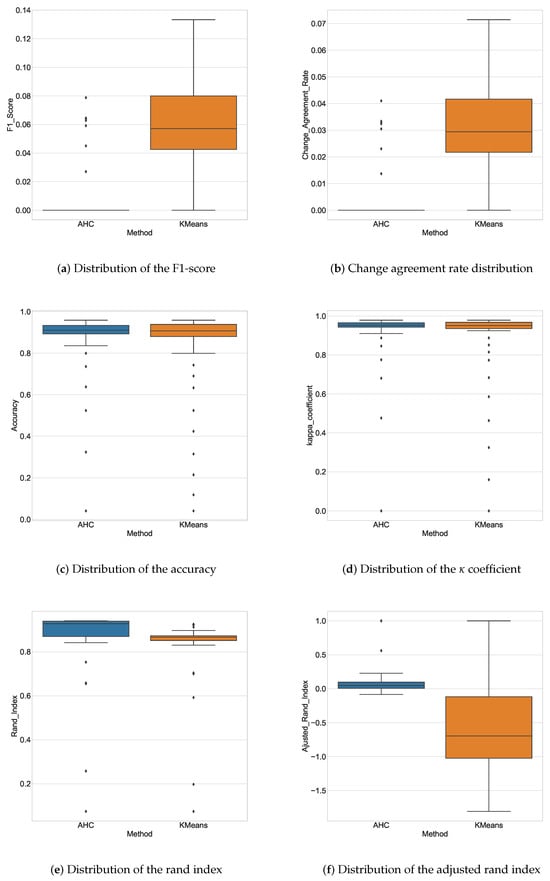

Figure 6, Figure 7 and Figure 8 show the responses of the classification algorithms to the various usual confusion metrics as a function of the number of classes imposed. It should be noted that there are actually 19 classes and that fixing the number of classes (K) imposes the number of change points ().
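The link between classes and change points can be sketched as follows, assuming each class forms a single contiguous run of samples. The helper name `change_indicator` and the label sequence are hypothetical; the sketch only shows how a sequential labeling can be turned into the binary change flags on which the confusion metrics are computed.

```python
def change_indicator(labels):
    """Return 1 where the class label differs from the previous sample, else 0.

    The first sample has no predecessor and is flagged 0.
    """
    if not labels:
        return []
    return [0] + [int(current != previous)
                  for previous, current in zip(labels, labels[1:])]


# Hypothetical sequential labeling with K = 3 contiguous classes.
labels = [0, 0, 0, 1, 1, 2, 2, 2]
flags = change_indicator(labels)
n_change_points = sum(flags)
```

With three contiguous classes, the flags mark two boundaries, so imposing the number of classes also fixes how many change points an algorithm can propose.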

Figure 6. Confusion matrix as a function of the number of classes.

Figure 7. Distributions of the confusion metrics.

Figure 8. Global metrics as a function of the number of classes.

The precision (probability that an announced change will be effective) increases with the number of classes for the K-means algorithm. On the other hand, it increases with the number of classes for the AHC algorithm. However, the performances of the two algorithms stabilize in the middle zone ( on average) for a relatively high number of classes compared to the actual number of classes. In fact, the more change points are proposed, the less likely it is that all these points really are change points. The average precision is for the K-means algorithm and for the AHC algorithm.

The recall rate (probability that a real change is detected) for the K-means and AHC algorithms is low for a small number of classes. It increases overall with the number of classes because the more change points are proposed, the more true change points are included. The recall rate is on average for the K-means algorithm and for the AHC algorithm.

The specificity (probability that a point with no change will be considered as such) decreases overall with the number of classes, because the more change points are proposed, the greater the chances of proposing the wrong ones. It is on average for the K-means algorithm and for the AHC algorithm.

The true negative rate (the probability that a point considered to be without change really is unchanged) increases overall, although only slightly, with the number of classes. It is on average for the K-means algorithm and for the AHC algorithm. The decrease in the true negative rate can be explained by the fact that the fewer change point propositions there are, the fewer wrong propositions there are.
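The four quantities discussed above can be computed from binary change flags as in the following sketch. The function name `confusion_metrics` and the flag sequences are hypothetical. The last ratio follows the definition given in the text for the true negative rate (the share of points declared unchanged that really are unchanged), which is usually called the negative predictive value, hence the key `npv`.

```python
def confusion_metrics(true_flags, pred_flags):
    """Confusion-matrix ratios for binary change flags (1 = change, 0 = no change)."""
    pairs = list(zip(true_flags, pred_flags))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # changes correctly announced
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # changes wrongly announced
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # real changes missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # non-changes correctly ignored

    def ratio(numerator, denominator):
        # A ratio with an empty denominator is undefined and reported as NaN.
        return numerator / denominator if denominator else float("nan")

    return {
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "npv": ratio(tn, tn + fn),
    }


# Hypothetical true and predicted change flags (illustrative only).
true_flags = [0, 0, 0, 1, 0, 1, 0, 0]
pred_flags = [0, 0, 1, 1, 0, 0, 0, 0]
metrics = confusion_metrics(true_flags, pred_flags)
```

In this toy case, one of the two announced changes is real and one of the two real changes is found, so precision and recall are both 0.5, while specificity and the negative predictive value are both 5/6 because most points are unchanged.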

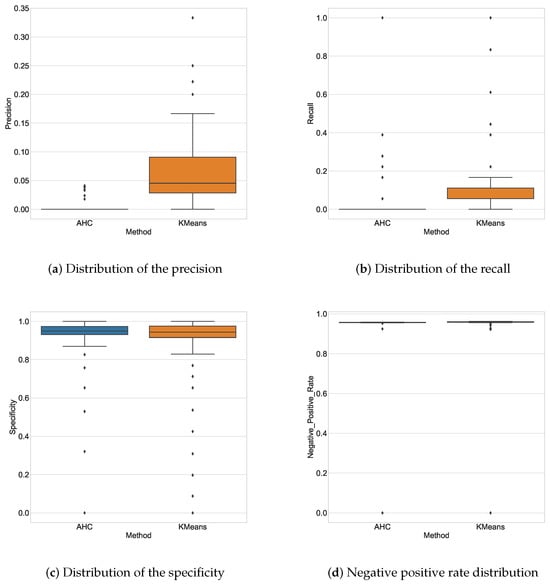

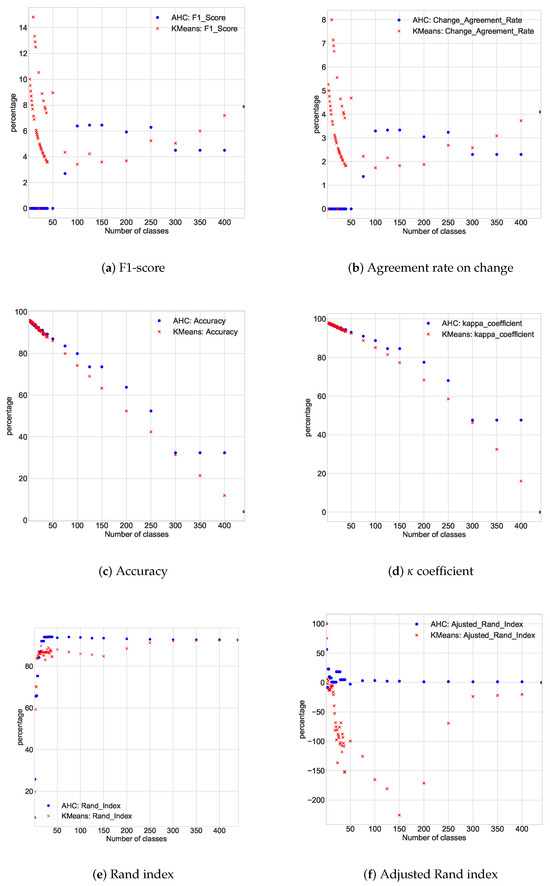

Overall, in terms of the confusion matrix, except for the specificity, the K-means algorithm seems to perform better than the AHC algorithm. We can extend the analysis through more synthetic indicators such as the F1-score, the agreement rate on change, the accuracy, the coefficient, the Rand index and the adjusted Rand index. These indicators are shown in Figure 8 and Figure 9.

Figure 9. Distributions of the global metrics.

The F1-score is the harmonic mean of the precision and the recall rate. It therefore tries to strike a compromise between these two indicators, which must not both be zero. Unsurprisingly, the average F1-score of the K-means algorithm () is higher than the average F1-score of the AHC algorithm (). The value of the F1-score is better for a relatively small number of classes.
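As a small worked illustration of the harmonic mean, the sketch below computes the F1-score from a precision and a recall value. The function name `f1_score` and the input values are hypothetical; the convention of returning 0.0 when both inputs are zero is an assumption made to avoid a division by zero.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero (assumed convention)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Illustrative values only: the same arithmetic mean (0.5), very different F1-scores.
balanced = f1_score(0.5, 0.5)
skewed = f1_score(0.9, 0.1)
```

The balanced pair gives 0.5, whereas the skewed pair gives about 0.18: the harmonic mean penalizes an algorithm that obtains a high precision at the expense of recall, or the reverse.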

The rate of agreement on change (probability that the prognosis of change or not is endorsed) increases with the number of classes, since more class proposals expose unfounded considerations of change. Its average value is for the K-means algorithm and for the AHC algorithm. We note that the F1-score and the rate of agreement on the change have very similar profiles, even if their ranges of values are not the same. On the one hand, this confirms the relevance of the F1-score and, on the other, it validates the new metric of the rate of agreement on the change, which also offers a better interpretation.

The accuracy (probability that a point is correctly considered) decreases significantly with the number of classes, at least because the number of false positives increases. The average value is for the K-means algorithm and for the AHC algorithm.

The coefficient behaves very similarly to the accuracy. Its average value is for the K-means algorithm and for the AHC algorithm. This confirms the relevance of the accuracy, while the coefficient offers a better interpretation in the context of clustering.

The Rand index (the probability that the prediction of whether or not two points in the sequence belong to the same class is correct) appears to be greater when the number of classes imposed is close to the actual number of classes. It is on average for the K-means algorithm and for the AHC algorithm.

The adjusted Rand index reveals that the AHC algorithm is more stable than the K-means algorithm. The former very often guarantees a performance better than the average of all possible performances, while the K-means algorithm can fall well below that average depending on the number of classes. The adjusted Rand index is on average for the K-means algorithm and for the AHC algorithm.
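For reference, the Rand index and its adjusted version can be computed from pair counts as in the sketch below, which follows the standard definitions of Rand [33] and Hubert and Arabie [34]. The function name `rand_indices` and the label sequences are hypothetical, and the code is not the implementation used for the reported results.

```python
from collections import Counter
from math import comb


def rand_indices(true_labels, pred_labels):
    """Return (Rand index, adjusted Rand index) for two labelings of the same points."""
    n = len(true_labels)
    if n < 2 or n != len(pred_labels):
        raise ValueError("need two labelings of the same length, with at least 2 points")
    total_pairs = comb(n, 2)
    # Pairs grouped together in both labelings, in the true one, and in the predicted one.
    together_both = sum(comb(c, 2) for c in Counter(zip(true_labels, pred_labels)).values())
    together_true = sum(comb(c, 2) for c in Counter(true_labels).values())
    together_pred = sum(comb(c, 2) for c in Counter(pred_labels).values())
    # Agreeing pairs: together in both labelings, or separated in both.
    rand = (total_pairs + 2 * together_both - together_true - together_pred) / total_pairs
    # Adjustment: subtract the agreement expected under random labelings.
    expected = together_true * together_pred / total_pairs
    maximum = (together_true + together_pred) / 2
    adjusted = 1.0 if maximum == expected else (together_both - expected) / (maximum - expected)
    return rand, adjusted


# Illustrative labelings only.
perfect = rand_indices([0, 0, 1, 1], [0, 0, 1, 1])
crossed = rand_indices([0, 0, 1, 1], [0, 1, 0, 1])
```

An adjusted value above zero means the segmentation agrees with the reference more than a random labeling would on average, while a negative value, as in the crossed example, means it does worse.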

The low values of the F1-score and of the rate of agreement on the change allow us to conclude that the exact detection of change points is difficult. On the other hand, the relatively good values of the accuracy and the Rand index show that the proposed algorithms do a good job of identifying homogeneous classes or phases. The AHC algorithm appears to offer a good compromise in terms of performance. This is in accordance with [19].

5. Conclusions

This work focused on detecting changes in the characteristics of roads along their route using a vehicle equipped with sensors such as an accelerometer and GPS. The aim was to segment the geographical data collected by the sensors with a view to subsequent labeling that could be used to diagnose the state of the road. To do this, we used a dataset available at https://github.com/simonwu53/RoadSurfaceRecognition/blob/master/data/sensor_data_v2.zip (accessed on 13 November 2024).

From the acceleration data available in the dataset, we determined trajectory curvatures. After an analysis of correlation and variance, we were able to identify the curvature that best explained the changes. Contrary to the usual considerations in the literature, vertical variations only weakly explained these changes. This could be understood by the tendency of drivers to avoid obstacles. We then proposed two adapted algorithms: AHC and K-means clustering. Performance was compared according to several metrics. By treating change detection as binary labeling, we were able to use the metrics derived from the confusion matrix (precision, recall, specificity, overall accuracy, and F1-score). Furthermore, since we are dealing with clustering, we also used some of the more common metrics in this field (Rand index, adjusted Rand index, statistical coefficient ).

The results showed that curvature is a relevant feature for classifying road irregularities. The relative performance of the proposed algorithms varies from one metric to another. However, AHC appears to be more stable in terms of performance and, above all, less costly in computing time. This last observation could be decisive for real-time evaluation of changes. AHC thus appears to offer a good compromise in terms of performance, in accordance with the results discussed in Section 4, which show that AHC achieves faster computation times than K-means, albeit with slightly lower precision. This trade-off makes AHC particularly suitable for deployment in resource-constrained settings (e.g., mobile or embedded systems), where quick detection of irregularities may outweigh the need for high segmentation accuracy. Conversely, K-means remains preferable when precision is prioritized, such as in offline analysis or post-processing stages.

Although the proposed algorithms have demonstrated promising performance, several limitations must be acknowledged. A key limitation is that the number of clusters to be formed is not determined automatically, requiring manual intervention. In future work, we aim to address this by introducing additional features that remain constant within each road segment, facilitating easier and more accurate label assignment, as suggested in [6]. Such features could streamline the automatic labeling process and enhance the robustness of predictions. Furthermore, exploring advanced clustering techniques that dynamically determine the optimal number of clusters could significantly improve the adaptability and efficiency of the algorithms. This work lays a foundation for future research focused on improving road safety and infrastructure maintenance through automated anomaly detection, with the potential to integrate real-time data processing and machine learning advancements for more scalable and accurate solutions.

Author Contributions

Conceptualization, D.J.F.-M. and A.B.N.-W.; methodology, D.J.F.-M., A.B.N.-W., J.M.N.II and M.A.; software, D.J.F.-M. and A.B.N.-W.; validation, D.J.F.-M., J.M.N.II, M.A. and M.T.; writing—original draft preparation, D.J.F.-M. and A.B.N.-W.; writing—review and editing, D.J.F.-M., J.M.N.II, M.A. and M.T.; supervision, J.M.N.II and M.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The dataset will be made available upon reasonable request to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Al-Sabaeei, A.M.; Souliman, M.I.; Jagadeesh, A. Smartphone applications for pavement condition monitoring: A review. Constr. Build. Mater. 2024, 410, 134207.

2. Dhiman, A.; Klette, R. Pothole detection using computer vision and learning. IEEE Trans. Intell. Transp. Syst. 2019, 21, 3536–3550.

3. Guan, J.; Yang, X.; Ding, L.; Cheng, X.; Lee, V.C.; Jin, C. Automated pixel-level pavement distress detection based on stereo vision and deep learning. Autom. Constr. 2021, 129, 103788.

4. Rajamohan, D.; Gannu, B.; Rajan, K.S. MAARGHA: A prototype system for road condition and surface type estimation by fusing multi-sensor data. ISPRS Int. J. Geo-Inf. 2015, 4, 1225–1245.

5. Anaissi, A.; Khoa, N.L.D.; Rakotoarivelo, T.; Alamdari, M.M.; Wang, Y. Smart pothole detection system using vehicle-mounted sensors and machine learning. J. Civ. Struct. Health Monit. 2019, 9, 91–102.

6. Martinez-Ríos, E.A.; Bustamante-Bello, M.R.; Arce-Sáenz, L.A. A review of road surface anomaly detection and classification systems based on vibration-based techniques. Appl. Sci. 2022, 12, 9413.

7. Seraj, F.; Zwaag, B.J.v.d.; Dilo, A.; Luarasi, T.; Havinga, P. RoADS: A road pavement monitoring system for anomaly detection using smart phones. In Big Data Analytics in the Social and Ubiquitous Context; Springer: Berlin/Heidelberg, Germany, 2015; pp. 128–146.

8. Sattar, S.; Li, S.; Chapman, M. Developing a near real-time road surface anomaly detection approach for road surface monitoring. Measurement 2021, 185, 109990.

9. Boateng, F.G.; Klopp, J.M. Beyond bans. J. Transp. Land Use 2022, 15, 651–670.

10. Egaji, O.A.; Evans, G.; Griffiths, M.G.; Islas, G. Real-time machine learning-based approach for pothole detection. Expert Syst. Appl. 2021, 184, 115562.

11. Wu, S.; Hadachi, A. Road surface recognition based on deepsense neural network using accelerometer data. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 305–312.

12. Amarbayasgalan, T.; Pham, V.H.; Theera-Umpon, N.; Ryu, K.H. Unsupervised anomaly detection approach for time-series in multi-domains using deep reconstruction error. Symmetry 2020, 12, 1251.

13. Aminikhanghahi, S.; Cook, D.J. A survey of methods for time series change point detection. Knowl. Inf. Syst. 2017, 51, 339–367.

14. Benevento, A.; Durante, F. Correlation-based hierarchical clustering of time series with spatial constraints. Spat. Stat. 2024, 59, 100797.

15. Tran, D.H. Automated change detection and reactive clustering in multivariate streaming data. In Proceedings of the 2019 IEEE-RIVF International Conference on Computing and Communication Technologies (RIVF), Danang, Vietnam, 20–22 March 2019; pp. 1–6.

16. Bezdan, T.; Stoean, C.; Naamany, A.A.; Bacanin, N.; Rashid, T.A.; Zivkovic, M.; Venkatachalam, K. Hybrid fruit-fly optimization algorithm with k-means for text document clustering. Mathematics 2021, 9, 1929.

17. Javed, A.; Lee, B.S.; Rizzo, D.M. A benchmark study on time series clustering. Mach. Learn. Appl. 2020, 1, 100001.

18. Holder, C.; Middlehurst, M.; Bagnall, A. A review and evaluation of elastic distance functions for time series clustering. Knowl. Inf. Syst. 2024, 66, 765–809.

19. Keogh, E.; Chu, S.; Hart, D.; Pazzani, M. An online algorithm for segmenting time series. In Proceedings of the 2001 IEEE International Conference on Data Mining, San Jose, CA, USA, 29 November–2 December 2001; pp. 289–296.

20. Basavaraju, A.; Du, J.; Zhou, F.; Ji, J. A machine learning approach to road surface anomaly assessment using smartphone sensors. IEEE Sens. J. 2019, 20, 2635–2647.

21. Nguyen, V.K.; Renault, É.; Milocco, R. Environment monitoring for anomaly detection system using smartphones. Sensors 2019, 19, 3834.

22. Wu, C.; Wang, Z.; Hu, S.; Lepine, J.; Na, X.; Ainalis, D.; Stettler, M. An automated machine-learning approach for road pothole detection using smartphone sensor data. Sensors 2020, 20, 5564.

23. Rajput, P.; Chaturvedi, M.; Patel, V. Road condition monitoring using unsupervised learning based bus trajectory processing. Multimodal Transp. 2022, 1, 100041.

24. Bustamante-Bello, R.; García-Barba, A.; Arce-Saenz, L.A.; Curiel-Ramirez, L.A.; Izquierdo-Reyes, J.; Ramirez-Mendoza, R.A. Visualizing street pavement anomalies through fog computing v2i networks and machine learning. Sensors 2022, 22, 456.

25. Kim, G.; Kim, S. A road defect detection system using smartphones. Sensors 2024, 24, 2099.

26. He, D.; Shi, Q.; Xue, J.; Atkinson, P.M.; Liu, X. Very fine spatial resolution urban land cover mapping using an explicable sub-pixel mapping network based on learnable spatial correlation. Remote Sens. Environ. 2023, 299, 113884.

27. van Kuppevelt, D.; Heywood, J.; Hamer, M.; Sabia, S.; Fitzsimons, E.; van Hees, V. Segmenting accelerometer data from daily life with unsupervised machine learning. PLoS ONE 2019, 14, e0208692.

28. Etemad, M.; Júnior, A.S.; Hoseyni, A.; Rose, J.; Matwin, S. A Trajectory Segmentation Algorithm Based on Interpolation-based Change Detection Strategies. In Proceedings of the EDBT/ICDT Workshops, Lisbon, Portugal, 26 March 2019; p. 58.

29. Tapia, G.; Colomé, A.; Torras, C. Unsupervised Trajectory Segmentation and Gesture Recognition through Curvature Analysis and the Levenshtein Distance. In Proceedings of the 2024 7th Iberian Robotics Conference (ROBOT), Madrid, Spain, 6–8 November 2024; pp. 1–8.

30. Chilov, G. Fonctions d’une Variable [Tome 1], 1ère et 2eme Parties; Mir: Moscou, Russie, 1973.

31. Fortin, A. Analyse Numérique pour Ingénieurs; Presses Inter Polytechnique: Montréal, QC, Canada, 2011.

32. Saporta, G. Probabilités, Analyse des Données et Statistique; Editions Technip: Paris, France, 2006.

33. Rand, W.M. Objective criteria for the evaluation of clustering methods. J. Am. Stat. Assoc. 1971, 66, 846–850.

34. Hubert, L.; Arabie, P. Comparing partitions. J. Classif. 1985, 2, 193–218.

35. Zhang, H.; Ho, T.B.; Zhang, Y.; Lin, M.S. Unsupervised feature extraction for time series clustering using orthogonal wavelet transform. Informatica 2006, 30, 305–319.

36. Gates, A.J.; Ahn, Y.Y. The impact of random models on clustering similarity. J. Mach. Learn. Res. 2017, 18, 1–28.

37. Zheng, Z.; Zhou, M.; Chen, Y.; Huo, M.; Sun, L. QDetect: Time series querying based road anomaly detection. IEEE Access 2020, 8, 98974–98985.

38. Zheng, Z.; Zhou, M.; Chen, Y.; Huo, M.; Sun, L.; Zhao, S.; Chen, D. A fused method of machine learning and dynamic time warping for road anomalies detection. IEEE Trans. Intell. Transp. Syst. 2020, 23, 827–839.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).