1. Introduction

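Let $w$ be a given nonnegative and integrable weight function on the interval $[-1,1]$. Let us denote by $P_k$ the monic polynomial of degree $k$ that is orthogonal to $\mathbb{P}_{k-1}$ with respect to $w$, where, hereafter, $\mathbb{P}_k$ denotes the set of polynomials of degree at most $k$; that is,

$$\int_{-1}^{1} x^{j} P_k(x)\, w(x)\, dx = 0, \qquad j = 0, 1, \ldots, k-1.$$

Let us recall that the sequence of polynomials $\{P_k\}_{k \ge 0}$ satisfies a three-term recurrence relation of the form

$$P_{k+1}(x) = (x - \alpha_k)\, P_k(x) - \beta_k\, P_{k-1}(x), \qquad k = 0, 1, \ldots,$$

where $P_{-1}(x) = 0$, $P_0(x) = 1$, and the $\beta_k$ are positive.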
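As a small illustration of the three-term recurrence, the following sketch evaluates monic orthogonal polynomials for the Legendre weight $w(x) = 1$ on $[-1,1]$. The choice of weight, the interval, and the function names `legendre_recurrence` and `monic_values` are assumptions made for this example only; for this weight, $\alpha_k = 0$, $\beta_0 = 2$, and $\beta_k = k^2/(4k^2-1)$ for $k \ge 1$.

```python
def legendre_recurrence(n):
    """Return (alpha, beta) with alpha_k, beta_k for k = 0..n-1 (monic Legendre)."""
    alpha = [0.0] * n
    # beta_0 is the integral of w over [-1, 1]; beta_k = k^2 / (4k^2 - 1) for k >= 1.
    beta = [2.0] + [k * k / (4.0 * k * k - 1.0) for k in range(1, n)]
    return alpha, beta


def monic_values(x, alpha, beta):
    """Return [P_0(x), ..., P_n(x)] via P_{k+1} = (x - alpha_k) P_k - beta_k P_{k-1}."""
    prev, curr = 0.0, 1.0  # P_{-1}(x) = 0, P_0(x) = 1
    values = [curr]
    for a, b in zip(alpha, beta):
        prev, curr = curr, (x - a) * curr - b * prev
        values.append(curr)
    return values


alpha, beta = legendre_recurrence(3)
print(monic_values(0.5, alpha, beta))  # values of P_0, ..., P_3 at x = 0.5
```

Since $P_{-1} = 0$, the value of $\beta_0$ does not affect the polynomials themselves; it only matters later, when quadrature weights are computed.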

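The unique interpolatory quadrature formula with $n$ nodes and the highest possible degree of exactness, $2n-1$, is the Gaussian formula with respect to the weight $w$,

$$\int_{-1}^{1} f(x)\, w(x)\, dx = \sum_{j=1}^{n} w_j f(x_j) + R_n(f). \tag{2}$$

In [1], D.P. Laurie introduced quadrature rules, which he referred to as anti-Gauss rules, associated with the weight $w$,

$$\int_{-1}^{1} f(x)\, w(x)\, dx = \sum_{j=1}^{n+1} \tilde{w}_j f(\tilde{x}_j) + \tilde{R}_{n+1}(f). \tag{3}$$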

Indeed, (3) is an $(n+1)$-point interpolatory formula of degree $2n-1$ that integrates polynomials of degree up to $2n+1$ with an error equal in magnitude, but with opposite sign, to that of the $n$-point Gaussian formula (2). The underlying goal is to estimate the error of the Gaussian quadrature by halving the difference between the results obtained from the two formulas. This quadrature rule and related topics have appeared in several papers in recent years (see, e.g., [2,3,4,5,6,7]). In his original paper, Laurie [1] showed that an anti-Gaussian quadrature formula has positive weights and that its nodes, with the possible exception of two of them, lie in the integration interval and interlace with those of the corresponding Gaussian formula. Moreover, the anti-Gaussian formula is as easy to compute as the $(n+1)$-point Gaussian formula, since it is based on the zeros of the polynomial

$$\tilde{P}_{n+1}(x) = P_{n+1}(x) - \beta_n P_{n-1}(x), \tag{4}$$

formed from the monic orthogonal polynomials $P_k$ and the recurrence coefficient $\beta_n$, which is orthogonal with respect to the linear functional $2\mathcal{I} - G_n$, where $\mathcal{I}(f)$ denotes the integral of $f$ with respect to $w$ and $G_n(f)$ the $n$-point Gaussian sum.
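The construction described above can be sketched numerically. The example below is an illustration for the Legendre weight $w(x) = 1$ on $[-1,1]$, not the authors' code: it builds the Gauss rule with the Golub–Welsch eigenvalue approach and obtains the anti-Gauss rule by doubling the last recurrence coefficient $\beta_n$, which is Laurie's modification [1]. The function names and the choice of weight are assumptions made for this example.

```python
import numpy as np


def legendre_coeffs(m):
    """Recurrence coefficients alpha_k, beta_k (k = 0..m-1) of the monic Legendre polynomials."""
    k = np.arange(1, m)
    alpha = np.zeros(m)
    beta = np.concatenate(([2.0], k**2 / (4.0 * k**2 - 1.0)))
    return alpha, beta


def rule_from_recurrence(alpha, beta):
    """Golub-Welsch: nodes are eigenvalues of the Jacobi matrix, weights are beta_0 * v_1^2."""
    off = np.sqrt(beta[1:])
    jacobi = np.diag(alpha) + np.diag(off, 1) + np.diag(off, -1)
    nodes, vecs = np.linalg.eigh(jacobi)
    return nodes, beta[0] * vecs[0, :] ** 2


def gauss(n):
    """n-point Gauss-Legendre rule."""
    return rule_from_recurrence(*legendre_coeffs(n))


def anti_gauss(n):
    """(n+1)-point anti-Gauss rule: same recurrence, but with beta_n doubled."""
    alpha, beta = legendre_coeffs(n + 1)
    beta[n] *= 2.0
    return rule_from_recurrence(alpha, beta)


n = 4
xg, wg = gauss(n)
xa, wa = anti_gauss(n)
G = np.dot(wg, np.exp(xg))
A = np.dot(wa, np.exp(xa))
print("Gauss value:", G)
print("estimated Gauss error (A - G) / 2:", 0.5 * (A - G))
print("actual Gauss error:", (np.e - 1.0 / np.e) - G)
```

The quantity `(A - G) / 2` is the error estimate obtained by halving the difference between the two rules; for polynomials of degree up to $2n+1$ it reproduces the Gauss error exactly.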

All the cases where $w$ is one of the four Chebyshev weight functions are solved in [8]. In the current paper, $w$ represents a Jacobi weight function,

$$w(x) = w^{(\alpha,\beta)}(x) = (1-x)^{\alpha}(1+x)^{\beta}, \tag{5}$$

where $\alpha, \beta > -1$. In these cases, whenever

we can assure that all nodes of the anti-Gauss quadrature formula (3), i.e., all zeros of the corresponding polynomial $\tilde{P}_{n+1}$, belong to the interval $[-1,1]$ (see [1], Theorem 4); in other words, this quadrature rule is said to be internal. In the cases of the Chebyshev weight functions (see [8]), they are, in turn, the Gauss–Kronrod nodes. In particular, when

, we know that the anti-Gauss quadrature formula (3) is internal whenever

.

In the next section, the remainder term of the anti-Gauss quadrature rule is carefully studied.

2. On the Remainder Term of Anti-Gauss Quadrature Formulas for Analytic Functions

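Let $\Gamma$ be a simple closed curve in the complex plane surrounding the interval $[-1,1]$, and let $D$ be its interior. Suppose that the integrand $f$ is analytic on $D$ and continuous on $\overline{D}$, and that all the nodes of the anti-Gauss quadrature formula belong to the interval $[-1,1]$, i.e., it is an internal rule. Then, following a procedure similar to that used for the Gauss formula, one has that the remainder term $\tilde{R}_{n+1}(f)$ in (3) admits the contour integral representation

$$\tilde{R}_{n+1}(f) = \frac{1}{2\pi i} \oint_{\Gamma} \tilde{K}_{n+1}(z)\, f(z)\, dz, \tag{7}$$

where the kernel is given by

$$\tilde{K}_{n+1}(z) = \frac{\tilde{\varrho}_{n+1}(z)}{\tilde{P}_{n+1}(z)}, \qquad z \notin [-1,1], \tag{8}$$

with

$$\tilde{\varrho}_{n+1}(z) = \int_{-1}^{1} \frac{\tilde{P}_{n+1}(x)}{z - x}\, w(x)\, dx$$

and the polynomial $\tilde{P}_{n+1}$ given in (4). The modulus of the kernel is symmetric with respect to the real axis, i.e., $|\tilde{K}_{n+1}(\bar{z})| = |\tilde{K}_{n+1}(z)|$. Moreover, if the weight function $w$ is even, the modulus of the kernel is symmetric with respect to both axes, i.e., $|\tilde{K}_{n+1}(-\bar{z})| = |\tilde{K}_{n+1}(z)|$ also holds (see [9]).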
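The two symmetry properties can be checked numerically. The sketch below assumes the usual form of the kernel, namely the quotient of $\int_{-1}^{1} \tilde{P}_{n+1}(x)\, w(x)/(z-x)\, dx$ and $\tilde{P}_{n+1}(z)$, and it is restricted to the Legendre weight $w(x) = 1$ (an even weight). The helper names, the 200-node Gauss–Legendre rule used for the numerator, and the test point are choices made for this example only.

```python
import numpy as np


def legendre_beta(m):
    """beta_0, ..., beta_{m-1} of the monic Legendre recurrence (all alpha_k are zero)."""
    k = np.arange(1, m)
    return np.concatenate(([2.0], k**2 / (4.0 * k**2 - 1.0)))


def anti_gauss_poly(z, n):
    """Evaluate P~_{n+1}(z) = P_{n+1}(z) - beta_n P_{n-1}(z) = z P_n(z) - 2 beta_n P_{n-1}(z)."""
    beta = legendre_beta(n + 1)
    prev, curr = 0.0 * z, 1.0 + 0.0 * z  # P_{-1}, P_0 (same shape and dtype as z)
    for k in range(n):
        prev, curr = curr, z * curr - beta[k] * prev
    # Here curr = P_n(z) and prev = P_{n-1}(z).
    return z * curr - 2.0 * beta[n] * prev


def kernel(z, n, m=200):
    """Kernel at a complex point z off [-1, 1]; numerator via an m-point Gauss-Legendre rule."""
    x, w = np.polynomial.legendre.leggauss(m)
    numerator = np.sum(w * anti_gauss_poly(x, n) / (z - x))
    return numerator / anti_gauss_poly(z, n)


u = 1.5 * np.exp(0.7j)
z = 0.5 * (u + 1.0 / u)  # a point on the ellipse with foci -1, +1 and semi-axes sum 1.5
print(abs(kernel(z, 5)), abs(kernel(np.conj(z), 5)), abs(kernel(-np.conj(z), 5)))
```

The three printed moduli should agree up to rounding, which reflects the symmetry with respect to both axes for an even weight. For points very close to $[-1,1]$ the numerator quadrature would need more nodes or higher precision.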

In many papers, error bounds for $|R_n(f)|$, i.e., the modulus of the remainder term in the Gauss quadrature formula (2), where $f$ is an analytic function, are considered. Two choices of the contour $\Gamma$ have been widely used:

- A circle with its center at the origin and radius $r > 1$, i.e., $\Gamma = C_r$, $C_r = \{ z \in \mathbb{C} : |z| = r \}$;
- An ellipse with foci at the points $\mp 1$ and sum of semi-axes $\rho > 1$,

$$\mathcal{E}_\rho = \left\{ z \in \mathbb{C} : z = \tfrac{1}{2}\left( u + u^{-1} \right),\ u = \rho e^{i\theta},\ 0 \le \theta \le 2\pi \right\}.$$

The ellipses $\mathcal{E}_\rho$ are the level curves of the conformal map that takes the complement of the real interval $[-1,1]$ onto the exterior of the unit circle; thus, when $\rho \to 1$, the ellipse shrinks to the interval $[-1,1]$, while as $\rho$ increases, it becomes more and more circle-like. Therefore, the advantage of elliptical contours over circular ones is that such a choice requires the analyticity of $f$ in a smaller region of the complex plane, especially when $\rho$ is near 1. For this reason, in this paper, we take $\Gamma$ to be an ellipse $\mathcal{E}_\rho$.
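The geometry of these contours is easy to verify. The following sketch assumes the standard parametrization $z = \tfrac{1}{2}(u + u^{-1})$, $u = \rho e^{i\theta}$, of the ellipse with foci $\mp 1$ and sum of semi-axes $\rho$; the function names are illustrative.

```python
import cmath
import math


def ellipse_point(rho, theta):
    """Point z = (u + 1/u) / 2 with u = rho * exp(i * theta)."""
    u = rho * cmath.exp(1j * theta)
    return 0.5 * (u + 1.0 / u)


def semi_axes(rho):
    """Semi-axes (a, b): a + b = rho and a^2 - b^2 = 1, so the foci are -1 and +1."""
    return 0.5 * (rho + 1.0 / rho), 0.5 * (rho - 1.0 / rho)


for rho in (1.05, 2.0, 10.0):
    a, b = semi_axes(rho)
    # b / a tends to 0 as rho -> 1 (flat ellipse) and to 1 as rho grows (circle-like).
    print(rho, a, b, b / a)

z = ellipse_point(2.0, math.pi / 3)
print(abs(z - 1) + abs(z + 1))  # sum of focal distances, equal to 2a = rho + 1/rho
```

The ratio of the semi-axes makes the two limiting regimes visible: the ellipse collapses onto $[-1,1]$ as $\rho \to 1$ and approaches a circle of radius about $\rho/2$ for large $\rho$.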

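This way, the integral representation (7) for the remainder term in the anti-Gauss quadrature formula (3) leads, by Hölder's inequality, to a general error estimate of the form

$$|\tilde{R}_{n+1}(f)| \le \frac{1}{2\pi} \left( \oint_{\mathcal{E}_\rho} |\tilde{K}_{n+1}(z)|^{r}\, |dz| \right)^{1/r} \left( \oint_{\mathcal{E}_\rho} |f(z)|^{r'}\, |dz| \right)^{1/r'},$$

that is,

$$|\tilde{R}_{n+1}(f)| \le \frac{1}{2\pi}\, \|\tilde{K}_{n+1}\|_{r}\, \|f\|_{r'},$$

where $1 \le r \le +\infty$, $1/r + 1/r' = 1$, and

$$\|g\|_{r} = \begin{cases} \left( \displaystyle\oint_{\mathcal{E}_\rho} |g(z)|^{r}\, |dz| \right)^{1/r}, & 1 \le r < +\infty, \\[2mm] \displaystyle\max_{z \in \mathcal{E}_\rho} |g(z)|, & r = +\infty. \end{cases}$$

The case $r = +\infty$ ($r' = 1$) yields

$$|\tilde{R}_{n+1}(f)| \le \frac{1}{2\pi} \left( \max_{z \in \mathcal{E}_\rho} |\tilde{K}_{n+1}(z)| \right) \oint_{\mathcal{E}_\rho} |f(z)|\, |dz|,$$

and hence

$$|\tilde{R}_{n+1}(f)| \le \frac{\ell(\mathcal{E}_\rho)}{2\pi} \left( \max_{z \in \mathcal{E}_\rho} |\tilde{K}_{n+1}(z)| \right) \left( \max_{z \in \mathcal{E}_\rho} |f(z)| \right),$$

where $\ell(\mathcal{E}_\rho)$ is the length of the ellipse $\mathcal{E}_\rho$, while for $r = 1$ ($r' = +\infty$), we have

$$|\tilde{R}_{n+1}(f)| \le \frac{1}{2\pi} \left( \oint_{\mathcal{E}_\rho} |\tilde{K}_{n+1}(z)|\, |dz| \right) \left( \max_{z \in \mathcal{E}_\rho} |f(z)| \right).$$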

3. Main Results

The main results are inspired by the approach followed by H. Sugiura and T. Hasegawa in [10]. Wang and Zhang showed [11] that

with

.

The explicit expressions for the coefficients

were derived, and

Starting from equality (7), in which

and

for

given by (5), and

being its orthogonal polynomial of the $n$-th degree, we obtain

In the case where

, in the same manner as in Equation (4.9) of [10], if we define

it holds that

where, from [10],

for each

, with

.

From [10], Equations (4.13) and (4.15), we know that

while

and

Further, using the same argument as in Equation (4.9) of [10], it holds that

From [10], Corollary 3.4, the asymptotic behavior of the modulus of the kernel

for sufficiently large

depends on the sign of the expression

Now, we can formulate the corresponding statement.

Theorem 1. Consider the anti-Gaussian quadrature formula, where , with the weight function , , provided it is internal. Then, there exists such that for each , the modulus of the kernel attains its maximum value on the positive real semi-axis if and on the negative real semi-axis if , that is, for and for .

Let us now focus on the Gegenbauer case, that is, when

, which means that

must hold in order for an internal anti-Gauss quadrature rule to exist. Identity (5.2) in [10] means that

with

given by (14), which implies that

Using Equations (5.3) and (5.4) of [10], where the coefficients

are defined, we obtain

and so the asymptotic behavior of the modulus directly depends on the sign of the following expression:

These expressions are too cumbersome to discuss for each

, but we know that

, and from (5.8) in [10], it follows that

when

Equation (5.6) [10]. Further, from

one obtains

because from Equation (4.14) of [10], we have

while

follows directly from

which can be found, for example, in Chapter IV of [12]. Finally, again from Equation (5.6) of [10], we get

which altogether means that

and therefore, we conclude the following result.

Theorem 2. Consider the anti-Gaussian quadrature formula for the weight function , with . Then, for sufficiently large n, there exists a such that for each , the modulus of the kernel attains its maximum on the imaginary semi-axis , i.e.,

4. Numerical Results

Consider the numerical estimation of the integral given by (3), with

, that is,

According to the previously introduced notation, under the assumption that $f$ is analytic inside

, the error bound of the corresponding quadrature formula can be optimized by

where

Here,

represents the length of the ellipse

, which can be estimated by (see [13])

where

.
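For comparison with such an estimate, the length of the ellipse can also be evaluated directly by numerical integration. The sketch below does not reproduce the formula from [13]; it is a swapped-in alternative that applies the periodic trapezoidal rule to the arc-length integrand, assuming the ellipse has semi-axes $a = \tfrac{1}{2}(\rho + \rho^{-1})$ and $b = \tfrac{1}{2}(\rho - \rho^{-1})$. The function name and the default number of sample points are choices made for this example.

```python
import math


def ellipse_length(rho, m=2000):
    """Length of the ellipse with foci -1, +1 and semi-axes sum rho (trapezoidal rule)."""
    a = 0.5 * (rho + 1.0 / rho)
    b = 0.5 * (rho - 1.0 / rho)
    h = 2.0 * math.pi / m
    # z(theta) = a cos(theta) + i b sin(theta), so |dz/dtheta| = hypot(a sin, b cos).
    return h * sum(math.hypot(a * math.sin(k * h), b * math.cos(k * h)) for k in range(m))


for rho in (1.1, 2.0, 5.0):
    print(rho, ellipse_length(rho))
```

Because the integrand is smooth and periodic for $\rho > 1$, the trapezoidal rule converges quickly; as $\rho \to 1$ the length tends to 4, twice the length of $[-1,1]$.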

Therefore, the expression of the error bound

can be reduced to

where

denotes, as usual, the sup-norm of the function $g$ on the compact set $K$. Next, the maximum of the modulus of the kernel (8) is analyzed by separately considering its numerator and denominator. The denominator

is a complex number calculated with 100 nodes and 50 significant digits. Applying the recurrence relation, we first generate the set of Jacobi orthogonal polynomials

. The numerator

is computed numerically using the functions sgauss.m and sr_jacobi.m (see [14]).

Since and , the modulus of the kernel is calculated for all and . Depending on the values of a and b, the modulus attains its maximum value at , or

To check the proposed error bounds, we performed several tests and compared the bounds with the actual errors. The examples are presented for a function that often appears in the literature. In what follows, “Error” denotes the actual error of the corresponding anti-Gauss quadrature formula.

Example 1. Let

It can be checked that

The infimum (15) is computed on the interval

, where

.

For fixed

and

Table 1 displays the error bounds,

, and the actual errors, Error, corresponding to the quadrature rules (

3) with the Jacobi weight function. Similarly,

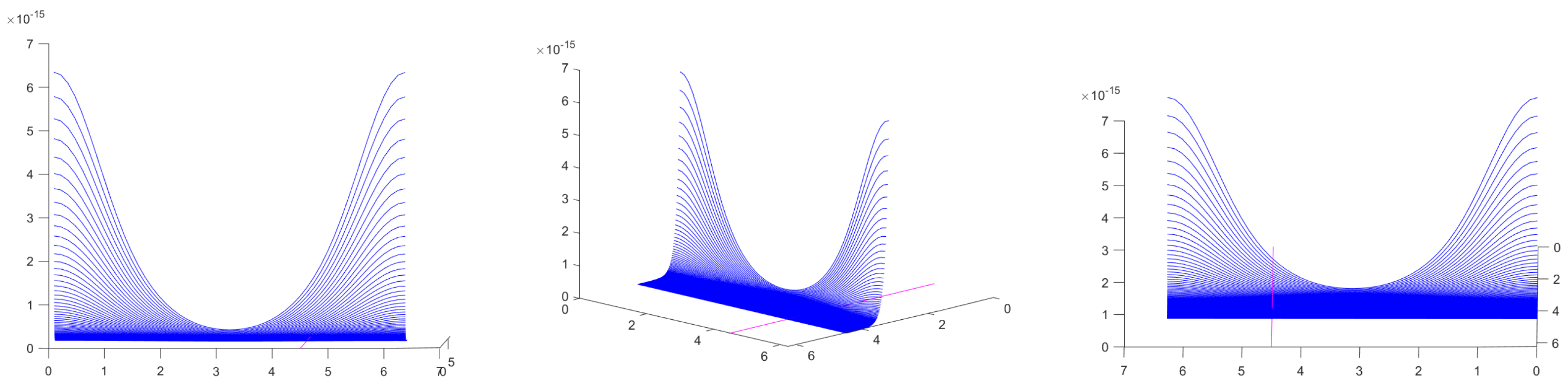

Figure 1 presents the modulus of the kernel

. The moduli are computed for

and for all

. It is evident that the modulus of the kernel attains its maximum value at

, as stated in Theorem 1.

Some values of the maxima of the modulus are given; for instance, if , then , while when , we have

For fixed

and

Table 2 displays the error bounds,

, and the actual errors, Error, corresponding to the anti-Gauss quadrature rules. Similarly,

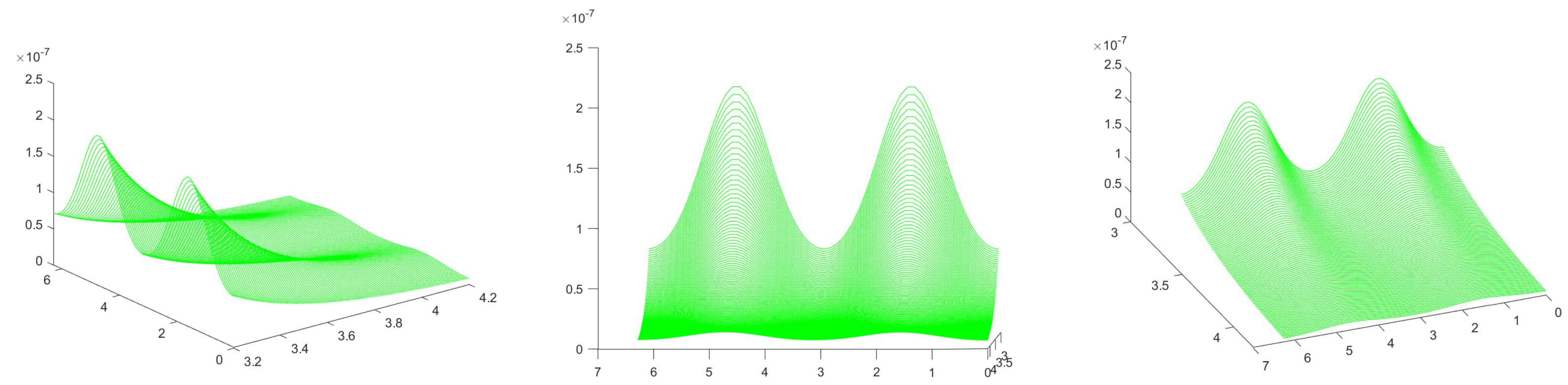

Figure 2 represents the modulus of the kernel computed for

and all

. It is clear that the modulus of the kernel attains its maximum value at

(i.e.,

), as stated in Theorem 1.

Similarly, for the Gegenbauer case, Figure 3 shows that the maximum value is achieved at

in the case where

and

, as predicted by Theorem 2, while Table 3 displays the error bounds and the actual errors for the same case.