Abstract

This research presents modified explicit block methods with fifth-order algebraic accuracy for initial value problems (IVPs). The methods are derived using fitted coefficients that eliminate the phase lag and amplification error, as well as their first derivatives. A stability analysis of the new methods is conducted. Comparisons with existing methods and numerical tests indicate that the proposed algorithms are considerably more accurate than conventional methods for solving IVPs, particularly those with oscillatory solutions.

MSC: 65N35; 65M12; 65M70; 65L04; 34A12

1. Introduction

Differential equations with oscillatory solutions model systems exhibiting periodic or cyclic behavior, such as mechanical vibrations, electrical circuits, and biological rhythms. These equations often involve sinusoidal or other periodic functions, and accurately capturing their oscillations is crucial for understanding natural phenomena. Because of this repetitive nature, solving such equations typically requires specialized numerical methods, which makes this an important area of research [1]. Runge–Kutta and linear multi-step methods are well-known classes of methods that are widely used to approximate the solutions of initial value problems (IVPs). Other classes of methods for integrating IVPs include hybrid methods, adapted Falkner-type methods, exponentially fitted methods, and trigonometrically fitted methods [2,3,4,5,6,7,8,9,10,11,12]. For a detailed survey of the different classes of methods, one can consult the books by Butcher [13] and Hairer et al. [14] and the references therein.

These methods evaluate the numerical solution sequentially, one point at a time. However, more efficient computational schemes can be devised by computing the solution at several points simultaneously. This approach is referred to as the block method. Block methods for the numerical solution of first-order ordinary differential equations (ODEs) have been developed by several researchers. Notable contributions include those by Birta et al. [15], Chu and Hamilton [16], Shampine and Watts [17], and Tam et al. [18]. From the literature, the following key observations motivate our work:

- Hybrid multi-step methods were implemented by Gragg and Stetter [19] to address stiff systems, while the block methods introduced by Milne [20] provide computational efficiency through parallel computing.

- To further address stiffness, hybrid block methods were developed, followed by optimized hybrid block methods [21] that combine the advantages of stability and block structuring. However, these methods primarily focus on reducing local truncation error and are not well-suited for handling highly oscillatory solutions.

- In problems involving high-frequency oscillations, recovering detailed information such as amplitude, energy, envelope, and especially phase becomes crucial. However, achieving this over long time intervals is challenging. Efficiency often requires a trade-off, accepting phase and amplification errors to allow for larger step sizes, as seen in real-time simulations.

- Many existing numerical methods assume a nearly linear problem structure, limiting their applicability. In contrast, block methods can effectively handle non-linear problems, making them suitable for a wide range of real-world applications.

Despite the extensive research in this field, there remains a notable gap in the development of block methods with minimal phase lag, and of amplification-fitted and phase-fitted block methods, designed specifically for first-order IVPs. This work introduces a numerical integrator for initial value problems of the form

$$y'(x) = f(x, y(x)), \qquad y(x_0) = y_0, \tag{1}$$

where $y'$ represents the rate of change in the system, $f$ is a function describing the system’s dynamics, and $y(x_0) = y_0$ provides the initial condition. We develop a zero-stable explicit block method and apply the theory for calculating phase lag and amplification error (or amplification factor) [9]. We also present methodologies for constructing amplification-fitted and phase-fitted block methods.

The paper is structured as follows. In Section 2, we derive an explicit zero-stable five-point block method of order 5 and use the theory from [9] to calculate the phase lag and amplification error (or amplification factor) associated with block methods for first-order IVPs. Sections 2.1–2.5 develop methodologies for optimizing the phase lag and amplification factor and for obtaining phase-fitted and amplification-fitted block methods. Specifically, we present a strategy for minimizing the phase lag of an amplification-fitted block method, an approach for deriving block methods that are both phase-fitted and amplification-fitted, and variants that additionally eliminate the first derivatives of the amplification factor and phase lag. In Section 3, we conduct a stability analysis of the family of newly proposed methods. In Section 4, we provide detailed numerical results to demonstrate the effectiveness and accuracy of the proposed approaches, and Section 5 concludes the paper.

2. Explicit Five-Point Block Method

According to Fatunla [22], the s-point m-step block method for (1) is given by the matrix finite difference equation:

where

where and

The block scheme is explicit if the coefficient matrix is a null matrix. According to [23,24], assuming the function is sufficiently smooth, the linear difference operator associated with the multi-step method is

A Taylor series expansion about yields The order of a linear multi-step method is p if and . Chollom et al. [25] extended this approach to the entire block, and this technique is used here to define the order of a block method of type (2). After Taylor expansion, the local truncation error of the block method (2) in matrix form is:

If and , then the order of the block method is p and is the error constant.
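For reference, the order definition invoked above can be written out in the standard textbook notation of Lambert [24]. The symbols $\alpha_j$, $\beta_j$, $k$ and $C_q$ below are the usual ones for a linear $k$-step method and are an assumption here; they may differ from the notation of the original displays.

```latex
\begin{aligned}
L[y(x); h] &= \sum_{j=0}^{k} \bigl[ \alpha_j\, y(x + jh) - h\, \beta_j\, y'(x + jh) \bigr] \\
           &= C_0\, y(x) + C_1 h\, y'(x) + \cdots + C_q h^{q}\, y^{(q)}(x) + \cdots, \\
\text{order } p \ &\Longleftrightarrow\ C_0 = C_1 = \cdots = C_p = 0, \qquad C_{p+1} \neq 0 .
\end{aligned}
```

In this notation $C_{p+1}$ is the error constant. For a block method, the same conditions are applied to each row of the block, so the constants become vectors.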

For the explicit five-point block method (), we have the matrix finite difference equation:

with the coefficient matrices specified as

Zero-stability ensures that the numerical method does not cause errors to increase as the computation advances. Therefore, for a zero-stable one-step explicit block method of fifth order, we require the following:

1. Local truncation error of $O(h^{6})$.

2. All eigenvalues of A must have magnitudes less than or equal to 1, and eigenvalues with a magnitude of 1 must have multiplicity 1.
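The eigenvalue condition can be checked numerically. The following is a minimal sketch; the function name `is_zero_stable` and the example matrix (ones in the last column, so that every new value is advanced from the last known one) are illustrative assumptions, not necessarily the matrix A chosen in this paper.

```python
import numpy as np

def is_zero_stable(A, tol=1e-9):
    """Root condition: all |eig| <= 1, and eigenvalues with |eig| = 1 are not repeated."""
    eig = np.linalg.eigvals(np.asarray(A, dtype=float))
    mod = np.abs(eig)
    if np.any(mod > 1.0 + tol):
        return False
    on_circle = eig[np.abs(mod - 1.0) <= tol]
    # Treat two unit-modulus eigenvalues closer than 1e-6 as a repeated eigenvalue.
    for i in range(len(on_circle)):
        for j in range(i + 1, len(on_circle)):
            if abs(on_circle[i] - on_circle[j]) <= 1e-6:
                return False
    return True

# Assumed example: a 5x5 matrix whose last column is all ones.
A_example = np.zeros((5, 5))
A_example[:, -1] = 1.0
print(is_zero_stable(A_example))
```

The example matrix has eigenvalues 0 (four times) and 1 (once), so it passes the check, whereas a Jordan block with a double eigenvalue 1 does not.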

Many matrices A satisfy these conditions; one of them is

Next, expanding in a Taylor series and setting the coefficients of to zero in each row of the matrix gives 25 equations in 25 unknowns. Solving this system with MATHEMATICA gives the following matrix

The block method

has local truncation error:
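The 25 order conditions can be reproduced numerically under one explicit assumption: that each new value is advanced from the last known value, so that row j reads y_{n+j} = y_n + h Σ_i B[j, i] f_{n-4+i}. The sketch below solves the resulting five linear systems; the function name `explicit_block_B` is hypothetical, and the matrix it returns is an Adams-type extrapolation matrix that need not coincide with the matrix printed in the paper.

```python
import numpy as np

def explicit_block_B(s=5):
    """Rows of B from exactness for f = 1, x, ..., x^(s-1) (with h = 1, x_n = 0)."""
    nodes = np.arange(-(s - 1), 1, dtype=float)    # -4, -3, -2, -1, 0
    V = np.vander(nodes, N=s, increasing=True).T   # V[k, i] = nodes[i]**k
    B = np.empty((s, s))
    for j in range(1, s + 1):
        # Integral of x^k over [0, j] must equal sum_i B[j-1, i] * nodes[i]**k.
        rhs = np.array([j ** (k + 1) / (k + 1) for k in range(s)])
        B[j - 1] = np.linalg.solve(V, rhs)
    return B

B = explicit_block_B()
print(np.round(720 * B[0]))   # first row, scaled by 720
```

Under this assumption the first row is the classical five-step Adams–Bashforth formula, which gives a convenient sanity check.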

Considering the following scalar test equation:

with the exact solution given as:

we extend the approach used to evaluate the amplification factor for a multi-step method at a single step in the theory presented in [9] to evaluate it for the explicit block method (3). After employing block method (3) on IVP (6), one obtains

Now, considering the substitution , the characteristic equation for the system of difference Equations (8) is as follows:

Taking , one obtains

Definition 1

(Order of phase lag). Given that the theoretical solution of the scalar test Equation (6) at is equal to , or equivalently, , and the numerical solution of the scalar test Equation (6) for is equal to , the phase lag is defined as:

If the quantity as , then it is said that the order of the phase lag is q.
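The two error measures can also be estimated numerically, without the direct formulas. The sketch below assumes the test equation has the form y' = iωy with v = ωh, and that the block method can be written as Y_m = (A + i v B) Y_{m-1}; the principal eigenvalue of that matrix is then compared with exp(5iv), the exact advance over one block. The matrices are the assumed Adams-type ones (ones in the last column of A), and the per-step definitions in `per_step_errors` are one common convention, not necessarily the definitions of [9].

```python
import numpy as np

def explicit_block_matrices(s=5):
    """Assumed Adams-type explicit block method: y_{n+j} = y_n + h * sum_i B[j,i] f_{n-s+1+i}."""
    nodes = np.arange(-(s - 1), 1, dtype=float)
    V = np.vander(nodes, N=s, increasing=True).T
    B = np.array([np.linalg.solve(V, [j ** (k + 1) / (k + 1) for k in range(s)])
                  for j in range(1, s + 1)])
    A = np.zeros((s, s))
    A[:, -1] = 1.0
    return A, B

def per_step_errors(A, B, v):
    """Amplification error and phase lag per step for y' = i*omega*y, v = omega*h."""
    s = A.shape[0]
    eig = np.linalg.eigvals(A + 1j * v * B)
    # Principal eigenvalue: the one closest to the exact block multiplier exp(i*s*v).
    lam = eig[np.argmin(np.abs(eig - np.exp(1j * s * v)))]
    amp_error = 1.0 - abs(lam) ** (1.0 / s)
    phase_lag = v - np.angle(lam) / s
    return amp_error, phase_lag

A, B = explicit_block_matrices()
print(per_step_errors(A, B, 1e-3))
```

Both quantities vanish as v tends to zero; how fast they do so as v shrinks is what the orders of amplification error and phase lag describe.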

Lemma 1.

The following relations are valid:

For the proof, see [9].

Theorem 1

(Direct Formula for the Amplification Factor of a Block Method). For a five-point explicit block method (3), the amplification factor is given by

where and

Proof.

From the established Lemma 1, we substitute the obtained relation (12) directly into the characteristic Equation (10), and one obtains

Now, the imaginary part of the above system is as follows

and further simplifying,

where . The direct formula for the computation of the amplification factor () of the block method (3) is

where .

□

Theorem 2

(Direct Formula for the Phase Lag of a Block Method). For a five-point block method, the phase lag is expressed as

where and .

Proof.

Similar to the proof of Theorem 1, by applying the established Lemma 1, substitute the derived relation (12) into the characteristic Equation (9), leading to (15). Likewise, by comparing the real part of (15), one obtains

where .

Expanding the formula (13) from Theorem 1 for method (5) with in a Taylor series gives

Thus, and the amplification factor of block method (5) is of sixth order. Similarly, applying a Taylor expansion to the direct formula (18) from Theorem 2 for (5) with gives the phase error

Thus, and the block method (5), referred to as M1, has a phase lag of fourth order. □

2.1. Method 2: Amplification-Fitted Block Method with Minimal Phase Lag

Using matrix A from (4) and the direct formula in (13), the following algorithm derives the amplification-fitted block method with minimal phase lag. Algorithm:

1. Eliminate the amplification factor.

2. Calculate the phase lag using the coefficients obtained in the previous step.

3. Perform a Taylor series expansion of the computed phase lag.

4. Solve the system of equations required to minimize the phase lag.

5. Determine the remaining unknown coefficients by minimizing the local truncation error using the updated coefficients.
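The idea of fitting a coefficient so that an error term vanishes is easiest to see on a much smaller method. The sketch below is a toy illustration only, not the five-point block method: it uses the two-step scheme y_{n+1} = y_{n-1} + 2 h b f_n applied to y' = iωy with v = ωh (an assumed test problem), whose characteristic equation is λ² − 2ivbλ − 1 = 0. Requiring λ = exp(iv) to be a root gives the fitted coefficient b = sin(v)/v, which removes the phase lag entirely. The function names are hypothetical.

```python
import numpy as np

def leapfrog_principal_root(v, b):
    """Root of lambda^2 - 2i*v*b*lambda - 1 = 0 that is closest to exp(i*v)."""
    roots = np.roots([1.0, -2j * v * b, -1.0])
    return roots[np.argmin(np.abs(roots - np.exp(1j * v)))]

def fitted_coefficient(v):
    """b(v) = sin(v)/v; np.sinc(x) is sin(pi*x)/(pi*x), so this also equals 1 at v = 0."""
    return np.sinc(v / np.pi)

v = 0.5
lam_classical = leapfrog_principal_root(v, 1.0)
lam_fitted = leapfrog_principal_root(v, fitted_coefficient(v))
print("phase lag, classical:", v - np.angle(lam_classical))
print("phase lag, fitted:   ", v - np.angle(lam_fitted))
```

The fitted coefficient depends on v and tends to the classical value 1 as v tends to zero, which is the same qualitative behavior expected of the fitted entries of B in the block methods.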

In accordance with the algorithm, eliminating the amplification factor of block method (3), with A taken as in (4), leads to the following set of equations

Next, the phase lag is evaluated using the above coefficient values and then minimized by setting the coefficients of , , and to zero, which gives the following

The above set of equations produces the following local truncation error:

The remaining elements are found by optimizing the local truncation error:

Thus, the resulting method with coefficient matrix is named Method 2 (M2) and has the following properties

The local truncation error (LTE)

2.2. Method 3: Amplification-Fitted and Phase-Fitted Block Method

The derivation of the method proceeds with the following steps:

1. Eliminate the amplification factor and the phase error.

2. Evaluate the local truncation error.

3. Determine the remaining 15 unknown coefficients by improving the precision of the local truncation error.

The development process of this algorithm is outlined in Appendix A. The coefficient matrix B in (3) for this method is given as follows

The remaining elements of matrix are given in Appendix A. The method is referred to as M3, and the local truncation error of the block method is

Therefore, Method M3 is of fifth order with and .

2.3. Method 4: Amplification-Fitted and Phase-Fitted Block Method with Vanished First Derivative of Phase Error

The process is carried out in the following steps:

1. First, the amplification factor (AF) and phase error (PhEr) are evaluated.

2. Next, the first derivative of the phase error is calculated.

3. Then, similar steps are repeated to derive the block method, ensuring that the amplification factor, the phase error, and the first derivative of the phase error vanish.

4. Finally, the other 10 undetermined coefficients are evaluated by optimizing the LTE.

The algorithm leads to the following coefficient matrix:

and the values of the elements are given in Appendix A.

The local truncation error of the method is

2.4. Method 5: Amplification-Fitted and Phase-Fitted Block Method with Vanished First Derivative of Amplification Factor

The process is executed in the following steps:

1. Initially, the amplification factor (AF) and phase error (PhEr) are determined.

2. The first derivative of the amplification factor is then computed.

3. Subsequently, analogous steps are repeated to derive the block method, ensuring the elimination of the phase error, the amplification factor, and the first derivative of the amplification factor.

4. The remaining 10 undetermined coefficients are evaluated by optimizing the LTE.

The above algorithm leads to the coefficient matrix for method M5, given as follows:

The rest of the elements of matrix are provided in Appendix A.

The local truncation error of the method is

2.5. Method 6: Amplification-Fitted and Phase-Fitted Block Method with Vanished First Derivative of Amplification Factor and Phase Error

The process is executed in the following steps:

1. The amplification factor (AF) and phase error (PhEr) are calculated.

2. The first derivatives of both the amplification factor and phase error are then computed.

3. Next, similar steps are repeated to derive the block method, ensuring the elimination of the phase error, its first derivative, the amplification factor, and its first derivative.

4. Finally, the remaining 5 undetermined coefficients are determined by optimizing the LTE.
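The numbers of free coefficients quoted in the algorithms of Sections 2.2 to 2.5 follow a simple pattern. Assuming that each fitting condition is imposed once per row of the 5 × 5 matrix B, which is an inference from the quoted counts rather than a statement taken from the derivations, the bookkeeping reads:

```latex
\begin{aligned}
\text{Method 3 } (\mathrm{AF} = 0,\ \mathrm{PhEr} = 0): \quad & 25 - 2 \cdot 5 = 15, \\
\text{Methods 4 and 5 (one first derivative also vanishes)}: \quad & 25 - 3 \cdot 5 = 10, \\
\text{Method 6 (both first derivatives vanish)}: \quad & 25 - 4 \cdot 5 = 5 .
\end{aligned}
```

In each case the leftover coefficients are the ones determined by optimizing the local truncation error.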

This algorithm results in the coefficient matrix :

and the rest of the elements are given in Appendix A.

The local truncation error of the method is

3. Stability

For the explicit block method, we consider a general form that incorporates coefficients A and B multiplied by function evaluations at different time steps as follows:

when applied to the scalar test Equation , this method yields a difference equation

The characteristic polynomial of the method is

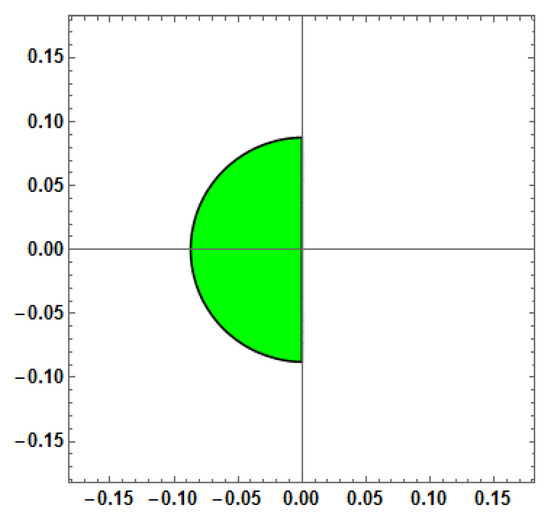

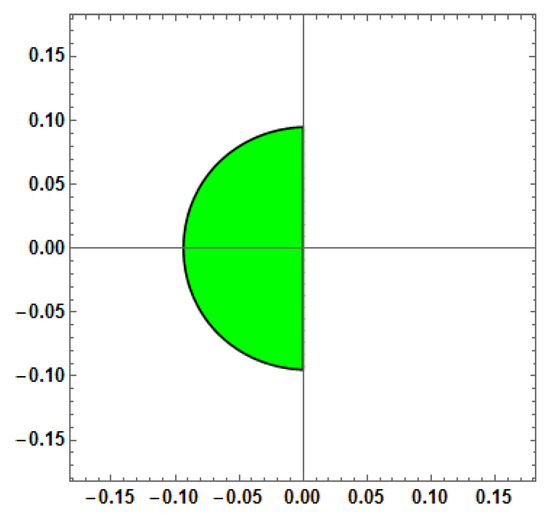

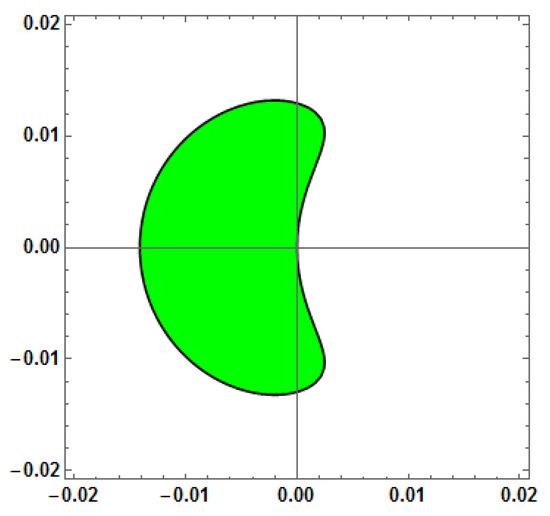

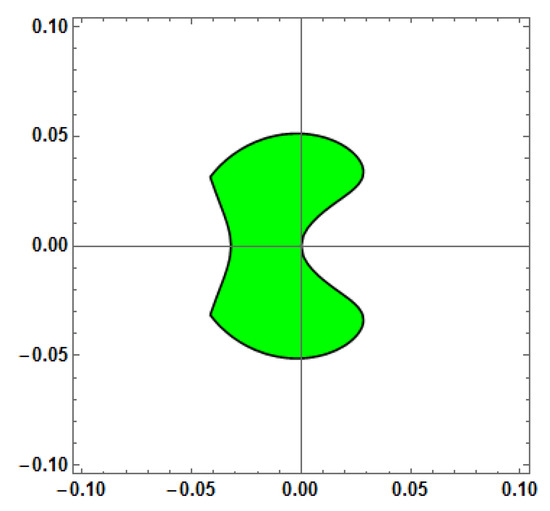

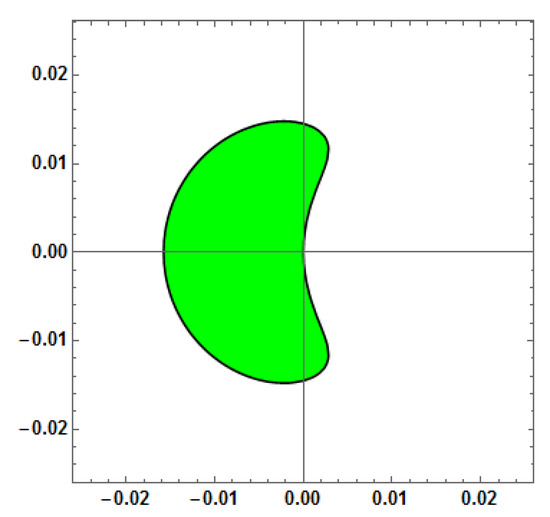

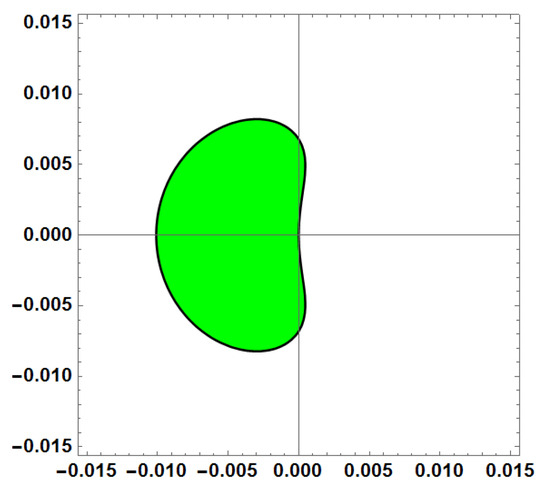

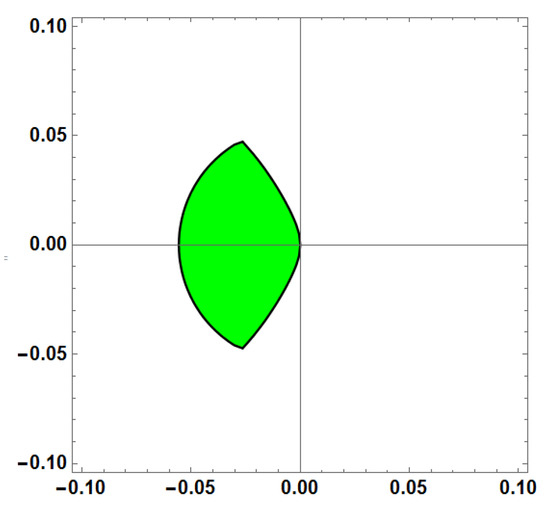

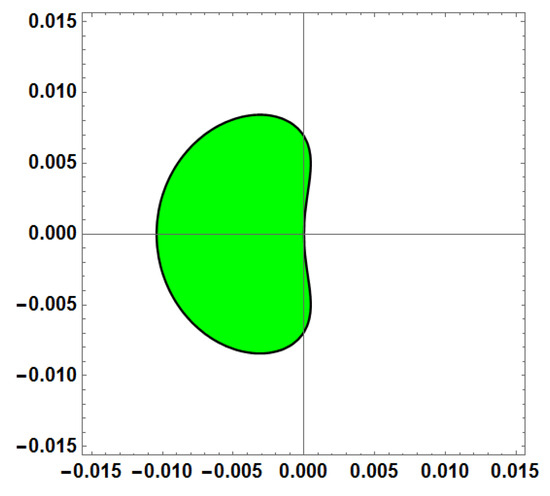

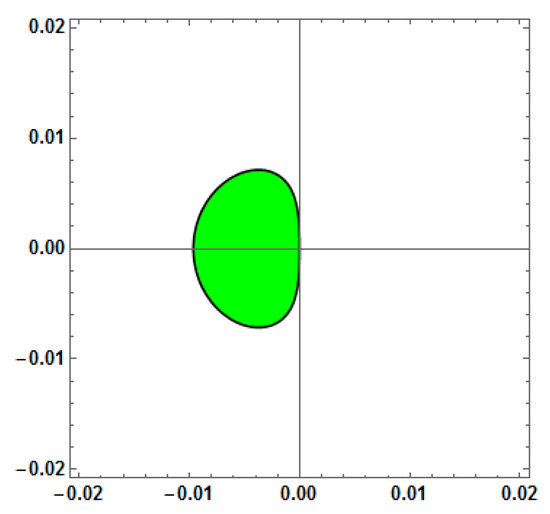

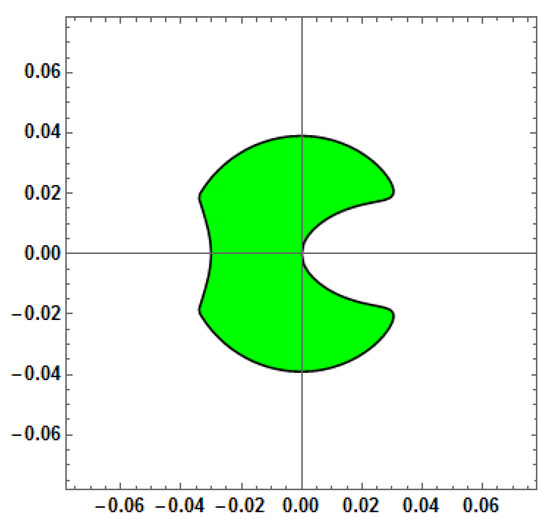

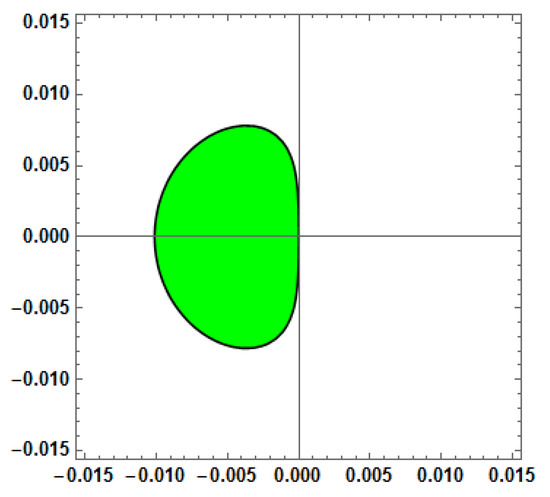

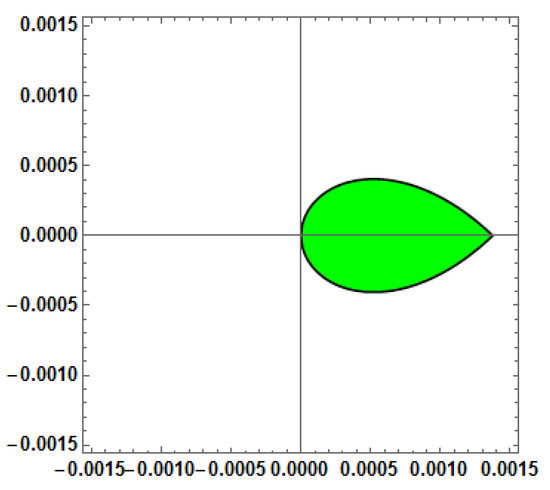

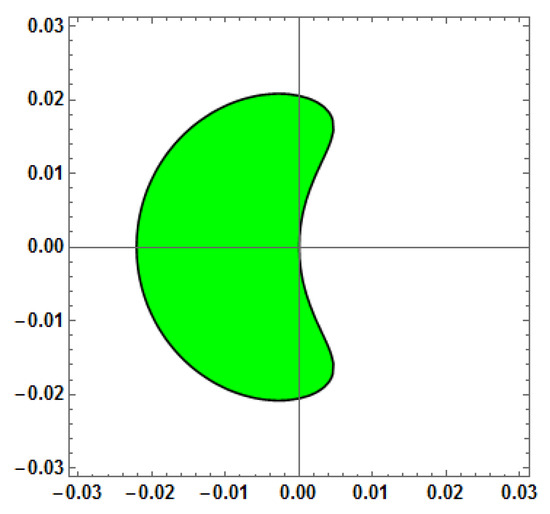

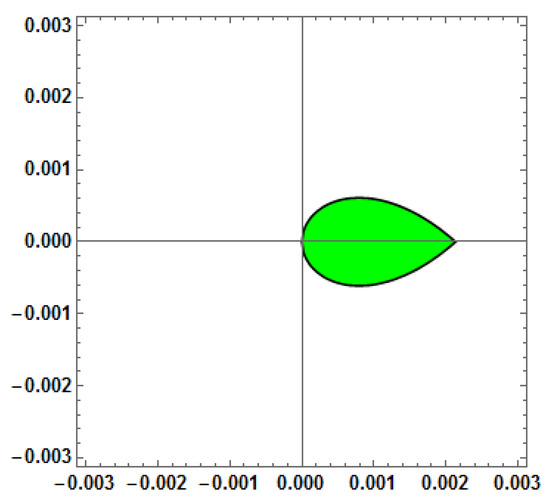

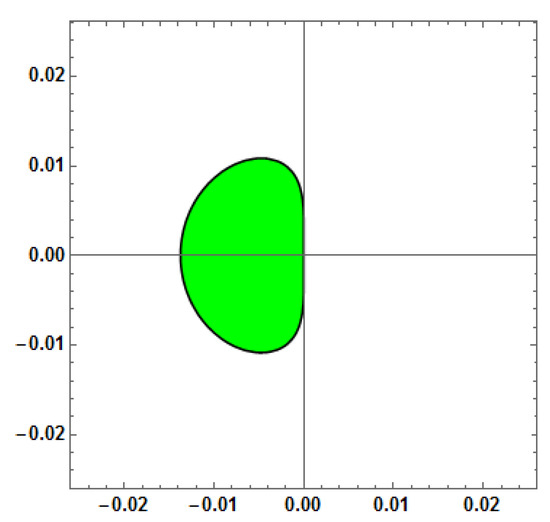

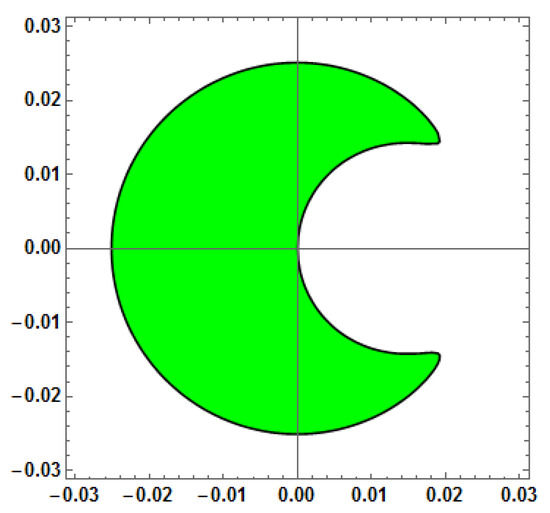

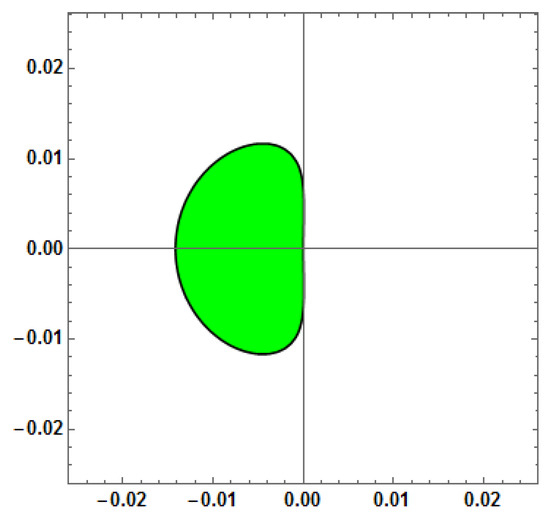

By solving the stability polynomial for , with the condition , using MATHEMATICA, we can visualize the stability regions of the methods in the complex plane, as shown in Figures 1–17. These regions indicate how each method behaves for different step sizes. The analysis covers various parameter values, including different values of v in Methods 2–6, and so gives a comprehensive picture of the stability properties across different scenarios.
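A stability region of this kind can also be approximated by a direct eigenvalue scan, as an alternative to solving the stability polynomial symbolically. The sketch below assumes the method applied to y' = λy takes the form Y_m = (A + H B) Y_{m-1} with H = λh, and marks the grid points where the spectral radius does not exceed 1. Because the coefficient matrices of Methods 1–6 are not reproduced here, explicit Euler written as a 1 × 1 block stands in for them; the function names are hypothetical.

```python
import numpy as np

def spectral_radius(A, B, H):
    """Largest eigenvalue modulus of the amplification matrix A + H*B."""
    M = np.asarray(A, dtype=complex) + H * np.asarray(B, dtype=complex)
    return np.max(np.abs(np.linalg.eigvals(M)))

def stability_mask(A, B, re, im, tol=1e-12):
    """Boolean grid: True where the spectral radius is at most 1 (boundary included)."""
    mask = np.zeros((len(im), len(re)), dtype=bool)
    for r, y in enumerate(im):
        for c, x in enumerate(re):
            mask[r, c] = spectral_radius(A, B, complex(x, y)) <= 1.0 + tol
    return mask

# Stand-in matrices: explicit Euler, whose region is the disc |1 + H| <= 1.
A_euler, B_euler = [[1.0]], [[1.0]]
re = np.linspace(-3.0, 1.0, 81)
im = np.linspace(-2.0, 2.0, 81)
mask = stability_mask(A_euler, B_euler, re, im)
print("stable fraction of the grid:", mask.mean())
```

Passing the 5 × 5 matrices of a block method, evaluated at a fixed v for the fitted methods, gives the corresponding mask, which can then be drawn with any contour plotting routine. The scan does not check whether eigenvalues of modulus exactly 1 are repeated, so points on the boundary should be interpreted with care.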

Figure 1.

Stability region for Method 1 (explicit method).

Figure 2.

Stability region for Method 2 (AF = 0) with v = h (or w = 1).

Figure 3.

Stability region for Method 2 (AF = 0) with v = 1.

Figure 4.

Stability region for Method 2 (AF = 0) with v = 100.

Figure 5.

Stability region for Method 2 (AF = 0) with v = 1000.

Figure 6.

Stability region for Method 3 (AF = 0, PE = 0) with v = 1.

Figure 7.

Stability region for Method 3 (AF = 0, PE = 0) with v = 100.

Figure 8.

Stability region for Method 3 (AF = 0, PE = 0) with v = 1000.

Figure 9.

Stability region for Method 4 (AF = 0, PE = 0, D[PE] = 0) with v = 1.

Figure 10.

Stability region for Method 4 (AF = 0, PE = 0, D[PE] = 0) with v = 100.

Figure 11.

Stability region for Method 4 (AF = 0, PE = 0, D[PE] = 0) with v = 1000.

Figure 12.

Stability region for Method 5 (AF = 0, PE = 0, D[AF] = 0) with v = 1.

Figure 13.

Stability region for Method 5 (AF = 0, PE = 0, D[AF] = 0) with v = 100.

Figure 14.

Stability region for Method 5 (AF = 0, PE = 0, D[AF] = 0) with v = 1000.

Figure 15.

Stability region for Method 6 (AF = 0, PE = 0, D[PE] = 0, D[AF] = 0) with v = 1.

Figure 16.

Stability region for Method 6 (AF = 0, PE = 0, D[PE] = 0, D[AF] = 0) with v = 100.

Figure 17.

Stability region for Method 6 (AF = 0, PE = 0, D[PE] = 0, D[AF] = 0) with v = 1000.

4. Numerical Results

In this section, we solve seven ODE problems with oscillatory solutions and one PDE problem, the telegraph equation. The error is evaluated using the following formula:

The ODEs are solved using MATHEMATICA, while the PDE is solved using MATLAB R2017a. For comparison, we consider three ODE solvers from the Runge–Kutta family [26,27]: the fifth-order Cash–Karp method (RKCash), the fifth-order Fehlberg method (RKFehl), and the fourth-order classical Runge–Kutta method (RK4). To ensure a fair and consistent comparison, all methods, including the developed ones and the benchmark solvers, were implemented in the same software environment, MATHEMATICA (version 11.0.1.0). A uniform step size was used across all methods to allow direct and consistent evaluation of error norms and computational performance.
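To make the fixed-step comparison concrete, the sketch below implements the classical RK4 baseline and measures its error on a simple oscillatory test problem. The test problem y' = iωy, the interval, and the use of the maximum absolute error over the grid are illustrative assumptions; they are not the benchmark problems or the error formula of this section.

```python
import numpy as np

def rk4_solve(f, x0, y0, h, n_steps):
    """Classical fourth-order Runge-Kutta method with a uniform step size (scalar problems)."""
    xs = x0 + h * np.arange(n_steps + 1)
    ys = np.empty(n_steps + 1, dtype=complex)
    ys[0] = y0
    for n in range(n_steps):
        x, y = xs[n], ys[n]
        k1 = f(x, y)
        k2 = f(x + h / 2, y + h * k1 / 2)
        k3 = f(x + h / 2, y + h * k2 / 2)
        k4 = f(x + h, y + h * k3)
        ys[n + 1] = y + h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
    return xs, ys

def max_error(h, omega=1.0, x_end=10.0):
    """Maximum absolute error on y' = i*omega*y, y(0) = 1 (assumed error measure)."""
    n_steps = int(round(x_end / h))
    xs, ys = rk4_solve(lambda x, y: 1j * omega * y, 0.0, 1.0, h, n_steps)
    return np.max(np.abs(ys - np.exp(1j * omega * xs)))

e1, e2 = max_error(0.1), max_error(0.05)
print(e1, e2, e1 / e2)
```

Halving the step size reduces the error by a factor close to 16, as expected for a fourth-order method; the same harness can be pointed at any other fixed-step integrator to compare error against step size.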

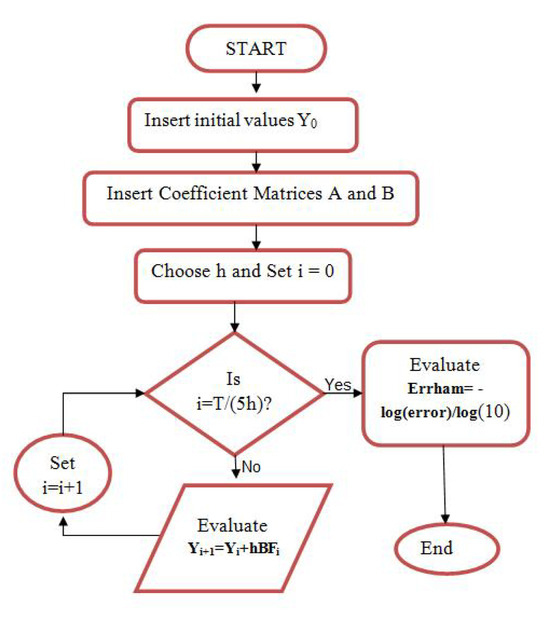

To compute the initial four steps for each problem, the MATLAB built-in function ode45 is used. The flowchart for the implementation of the derived methods is given in Figure 18.
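The way a five-point block method consumes its starting values can be sketched as follows. This is a minimal driver under stated assumptions, not a transcription of the flowchart: it uses the assumed Adams-type matrix B with y_{n+j} = y_n + h Σ_i B[j, i] f_{n-4+i}, it handles scalar problems only, and in the demonstration the five starting values are taken from the exact solution rather than from ode45. The function names are hypothetical.

```python
import numpy as np

def block_coefficients(s=5):
    """Assumed explicit B: each row integrates the interpolant of f through the last s points."""
    nodes = np.arange(-(s - 1), 1, dtype=float)
    V = np.vander(nodes, N=s, increasing=True).T
    return np.array([np.linalg.solve(V, [j ** (k + 1) / (k + 1) for k in range(s)])
                     for j in range(1, s + 1)])

def solve_block(f, xs_start, ys_start, h, n_blocks, B):
    """Advance s points per block from s known starting points (y_0 plus s-1 starting steps)."""
    s = B.shape[0]
    xs, ys = list(xs_start), list(ys_start)
    for _ in range(n_blocks):
        # Function values at the last s grid points, oldest first.
        F = np.array([f(x, y) for x, y in zip(xs[-s:], ys[-s:])])
        x_n, y_n = xs[-1], ys[-1]
        new_y = y_n + h * (B @ F)          # all s new values at once
        for j in range(s):
            xs.append(x_n + (j + 1) * h)
            ys.append(new_y[j])
    return np.array(xs), np.array(ys)

# Demonstration on y' = 5 x^4, y(0) = 0, whose solution y = x^5 is reproduced up to rounding.
B = block_coefficients()
h = 0.1
xs0 = h * np.arange(5)
xs, ys = solve_block(lambda x, y: 5 * x ** 4, xs0, xs0 ** 5, h, 2, B)
print(np.max(np.abs(ys - xs ** 5)))
```

Each pass through the loop needs only five function evaluations and one matrix-vector product, which is where the efficiency of the block approach comes from. For a fitted method, B would be re-evaluated from v = ωh before the loop.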

Figure 18.

Flowchart for the implementation of block methods.

Example 1.

The almost periodic orbit problem studied by Stiefel and Bettis [28] is as follows:

The exact solution is

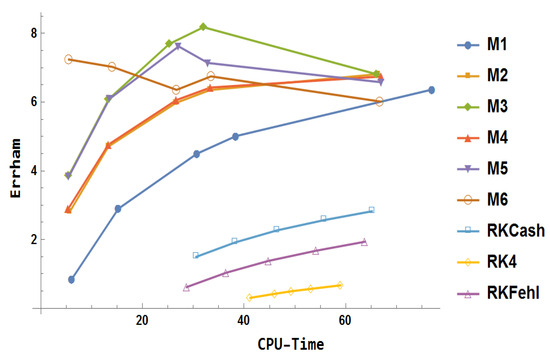

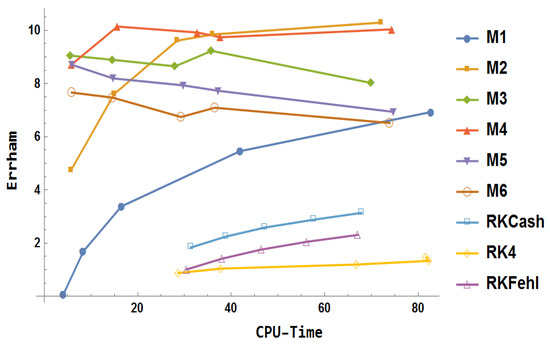

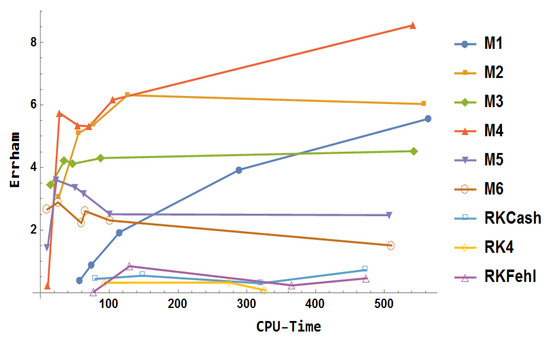

Using the derived methods with , numerical results have been computed and visualized through Figure 19. The key observations regarding their respective behaviors are outlined below:

- Block methods () provide more accurate results compared to the traditional Runge–Kutta methods: Runge–Kutta Fehlberg fifth-order method (RKFehl), Runge–Kutta Cash and Karp fifth-order method (RKCash), and classical fourth-order Runge–Kutta method (RK4).

- Methods and exhibit the same accuracy, both of which outperform .

- The results of are superior to those of and .

- delivers the highest accuracy with a larger step size, achieving this in less CPU time, but it falls behind and when smaller step sizes are considered.

- demonstrates better performance than .

- Overall, achieves the highest accuracy among all the methods.

Figure 19.

Numerical results for example 1.

Example 2.

The following inhomogeneous linear problem, analyzed by Franco et al. [29], is considered:

for which the exact solution is given as follows:

In this case, , the investigation interval is , and the numerical solution is computed with .

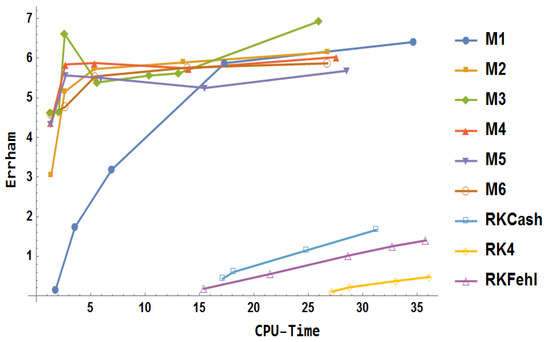

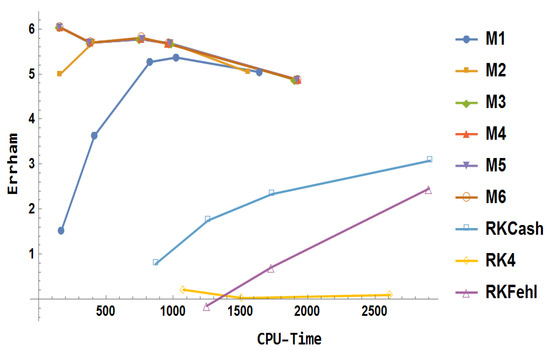

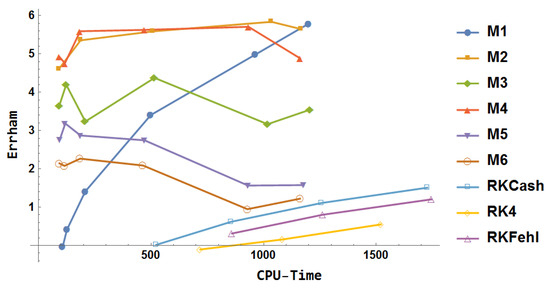

The observations on computational outcomes plotted in Figure 20 are as follows:

- Among the Runge–Kutta methods considered, RK4 is the least accurate, while the explicit block method provides more accurate results.

- RKCash outperforms RKFehl, but M1 surpasses both methods by a significant margin.

- M2 shows higher accuracy than M1.

- Methods M5 and M4 initially exhibit the same accuracy, but M4 slightly outperforms M5 when the step size is reduced.

- M3 performs better than both M5 and M1.

- The results of M6 are marginally better than those of M5.

- On average, M3 achieves the highest accuracy among all the methods.

Figure 20.

Numerical results for example 2.

Example 3.

Consider the Franco and Palacios problem [30]:

The exact solution is

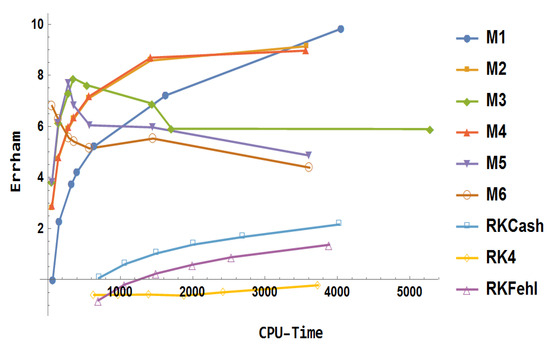

where and . This problem is solved numerically using for .

The key observations from Figure 21 are outlined below:

- RKCash yields better performance than both RKFehl and RK4.

- The block method produces more precise results than the traditional Runge–Kutta methods.

- Method surpasses in terms of performance.

- provides greater accuracy compared to both and .

- achieves the highest accuracy when a large step-size is used, but its accuracy remains unchanged regardless of the step-size.

- demonstrates a steady improvement in accuracy as the step-size decreases.

- Among all methods, is the most accurate.

Figure 21.

Numerical results for example 3.

Example 4.

The nonlinear orbital problem studied by Simos in [31] is as follows:

where . The exact solution is

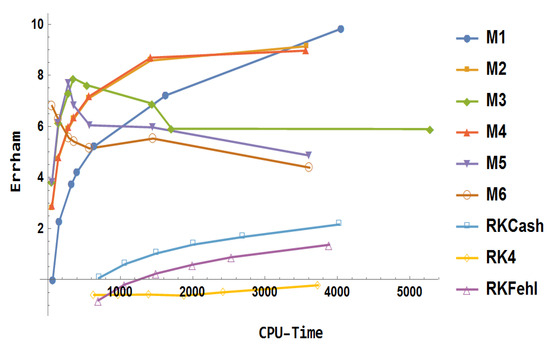

The numerical findings for obtained using are shown in Figure 22, and the following points summarize the observations:

- RK4 demonstrated no significant improvement despite an increase in CPU time.

- RKCash outperforms both RKFehl and RK4 in terms of overall performance.

- The block method delivers more accurate results than the conventional Runge–Kutta methods.

- Method exceeds the performance of in terms of computational efficiency and accuracy.

- Methods , , , and exhibit identical levels of accuracy.

- Methods , , , and provide superior accuracy compared to both and .

Figure 22.

Numerical results for example 4.

Example 5.

Consider the nonlinear first-order differential problem studied by Petzold [32]:

with the analytical solution

where , and the domain of the problem is [0,100]. For this problem, using and plotting results in Figure 23, the following observations are outlined.

- RK4 was unable to achieve an acceptable level of accuracy within the given CPU time range.

- RKCash outperforms RKFehl in terms of overall performance.

- The block method provides more accurate results than RKCash, with accuracy gradually improving as CPU time increases.

- For very large step-sizes, among the block methods, is the least accurate, while provides the highest accuracy.

- Methods and surpass in both computational efficiency and accuracy.

- Methods and demonstrate equivalent performance in terms of accuracy and efficiency.

- Methods and show similar accuracy initially, but exhibits a slight improvement over time.

- Method performs better than the others at the beginning but loses its advantage as the process progresses.

Figure 23.

Numerical results for example 5.

Example 6.

Consider the two-body gravitational problem:

The true solution is

Using , Figure 24 shows numerical results for . The comparison is summarized in the following points.

- The Runge–Kutta methods (RK4, RKCash, and RKFehl) exhibit minimal improvement, maintaining a constant level of performance without significant advancement.

- The block method demonstrates superior accuracy when compared to the conventional Runge–Kutta methods.

- Initially, outperforms , but as the step-size is reduced, reaches a saturation point, while continues to show improvement with smaller step-sizes.

- The block method delivers more precise results than , showing a clear advantage in accuracy.

- Method surpasses in terms of both accuracy and computational efficiency.

- Method outperforms in terms of overall accuracy and efficiency.

- In comparison to other methods, provides the highest accuracy when a very large step size is employed.

Figure 24.

Numerical results for example 6.

Example 7.

We consider the perturbed two-body Kepler’s problem:

The exact solution is:

For this problem, we use:

The domain of the system of differential equations is with .

From Figure 25, it is observed that:

- The Runge–Kutta methods (RK4, RKFehl, and RKCash) show limited improvement in both accuracy and efficiency, with RK4 performing the worst.

- The block method outperforms all Runge–Kutta methods in terms of accuracy.

- delivers superior performance compared to , especially with larger step-sizes, achieving higher accuracy initially, but its advantage diminishes as the process progresses.

- achieves more accurate results than and maintains superior performance in terms of both precision and computational efficiency.

- surpasses with improved results, particularly in accuracy.

- outperforms in both accuracy and efficiency, providing the best overall performance across different step sizes.

- and deliver equivalent performance, both excelling in accuracy and efficiency, making either method an optimal choice for high-precision applications.

Figure 25.

Numerical results for example 7.

Example 8.

Consider the hyperbolic telegraph equation

The initial and boundary conditions are given by:

The parameters are chosen as , , and

The analytic solution is

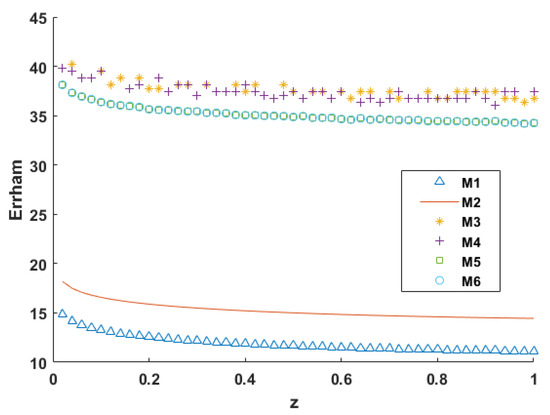

For block methods, the parameter is chosen. The following observations can be made:

- The results in Table 1 demonstrate that the block method, implemented using the algorithm presented in [33], significantly outperforms the results reported in [34], which considered SSP-RK54.

- The error analysis in Figure 26 for indicates that achieves better accuracy compared to .

- Method exhibits a significantly higher accuracy than , with a considerable margin of improvement.

- Methods and display equivalent accuracy over the spatial grid.

- Method surpasses in performance across all considered time values.

- Methods and demonstrate almost comparable accuracy, with a slight advantage observed for .

- The CPU time required for varies among the methods: completes in 0.003172 s, in 0.010569 s, in 0.010235 s, in 0.011391 s, in 0.015868 s, and in 0.013777 s.

- Overall, demonstrated the best performance among all the methods.

Table 1.

Comparison of error.

Figure 26.

Numerical results for example 8.

Based on the numerical problems considered in this study, the overall performance and its interpretation are summarized in Table 2.

Table 2.

Performance Summary of Numerical Methods.

5. Conclusions

Overall, the block methods significantly outperform traditional Runge–Kutta methods, with showing notable improvement over RK4, RKFehl, and RKCash. As we move to more advanced block methods, such as , , , , and , there is a consistent increase in both accuracy and computational efficiency. There was a close competition among , , and , while and performed better than but fell short compared to the other methods. No single method emerged as the definitive winner, as the performance of numerical methods depends heavily on the type of problem being addressed. For partial differential equations (PDEs), and delivered the best results. Among these, consistently demonstrated its effectiveness and superiority in most cases. This study not only evaluates the performance of the methods but also broadens the scope of applications for modified explicit block methods.

Author Contributions

Conceptualization, R.T.A., A.K. and T.E.S.; methodology, R.T.A., A.K. and T.E.S.; software, A.K.; validation, R.T.A., A.K. and T.E.S.; formal analysis, T.E.S.; investigation, R.T.A.; data curation, A.K.; writing—original draft preparation, A.K.; writing—review and editing, R.T.A. and T.E.S.; supervision, T.E.S.; funding acquisition, T.E.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Deanship of Scientific Research at Imam Mohammad Ibn Saud Islamic University (IMSIU) (grant number IMSIU-DDRSP-RP25).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A

Appendix A.1. Amplification-Fitted and Phase-Fitted Block Method: Method 3

For Method M3, eliminating leads to (22), and setting to zero results in the following equations:

To determine the remaining unknown components of the matrix, the local truncation error is minimized by setting the first three leading coefficients in its series expansion to zero.

By using these values, the coefficient matrix obtained after calculation is .

The remaining elements of matrix are as follows:

Appendix A.2. Amplification-Fitted and Phase-Fitted Block Method with Vanished First Derivative of Phase Error: Method 4

The elements of matrix are as follows:

Appendix A.3. Amplification-Fitted and Phase-Fitted Block Method with Vanished First Derivative of Amplification Factor: Method 5

The matrix for the method involves the following:

Appendix A.4. Amplification-Fitted and Phase-Fitted Block Method with Vanished First Derivative of Amplification Factor and Phase Error: Method 6

Method 6 has the following set of elements: where

References

1. Landau, L.D.; Lifshitz, E.M. Quantum Mechanics: Non-Relativistic Theory; Pergamon Press: Oxford, UK, 1965.

2. Ixaru, L.G.; Berghe, G.V.; De Meyer, H. Frequency evaluation in exponential fitting multistep algorithms for ODEs. J. Comput. Appl. Math. 2002, 140, 423–434.

3. Li, J.; Wu, X. Adapted Falkner-type methods solving oscillatory second-order differential equations. Numer. Algorithms 2013, 62, 355–381.

4. Li, J. Trigonometrically fitted multi-step Runge–Kutta methods for solving oscillatory initial value problems. Numer. Algorithms 2017, 76, 237–258.

5. Lee, K.C.; Senu, N.; Ahmadian, A.; Ibrahim, S.N.I. High-order exponentially fitted and trigonometrically fitted explicit two-derivative Runge–Kutta-type methods for solving third-order oscillatory problems. Math. Sci. 2022, 16, 281–297.

6. Ramos, H.; Vigo-Aguiar, J. On the frequency choice in trigonometrically fitted methods. Appl. Math. Lett. 2010, 23, 1378–1381.

7. Raptis, A.; Allison, A. Exponential-fitting methods for the numerical solution of the Schrödinger equation. Comput. Phys. Commun. 1978, 14, 1–5.

8. Senu, N.; Lee, K.; Wan Ismail, W.; Ahmadian, A.; Ibrahim, S.; Laham, M. Improved Runge–Kutta method with trigonometrically fitting technique for solving oscillatory problem. Malays. J. Math. Sci. 2021, 15, 253–266.

9. Simos, T.E. A new methodology for the development of efficient multistep methods for first-order IVPs with oscillating solutions. Mathematics 2024, 12, 504.

10. Thomas, R.; Simos, T. A family of hybrid exponentially fitted predictor-corrector methods for the numerical integration of the radial Schrödinger equation. J. Comput. Appl. Math. 1997, 87, 215–226.

11. Van de Vyver, H. A symplectic exponentially fitted modified Runge–Kutta–Nyström method for the numerical integration of orbital problems. New Astron. 2005, 10, 261–269.

12. Zhai, W.; Fu, S.; Zhou, T.; Xiu, C. Exponentially fitted and trigonometrically fitted implicit RKN methods for solving y″ = f(t, y). J. Appl. Math. Comput. 2022, 68, 1449–1466.

13. Butcher, J.C. The Numerical Analysis of Ordinary Differential Equations: Runge–Kutta and General Linear Methods; Wiley-Interscience: Hoboken, NJ, USA, 1987.

14. Hairer, E.; Wanner, G. Convergence for nonlinear problems. In Solving Ordinary Differential Equations II: Stiff and Differential-Algebraic Problems; Springer: Berlin/Heidelberg, Germany, 1996; pp. 339–355.

15. Birta, L.G.; Abou-Rabia, O. Parallel block predictor-corrector methods for ODE’s. IEEE Trans. Comput. 1987, C-36, 299–311.

16. Chu, M.T.; Hamilton, H. Parallel solution of ODE’s by multiblock methods. SIAM J. Sci. Stat. Comput. 1987, 8, 342–353.

17. Shampine, L.F.; Watts, H. Block implicit one-step methods. Math. Comput. 1969, 23, 731–740.

18. Tam, H.W. Parallel Methods for the Numerical Solution of Ordinary Differential Equations; University of Illinois at Urbana-Champaign: Champaign, IL, USA, 1989.

19. Gragg, W.B.; Stetter, H.J. Generalized multistep predictor-corrector methods. J. ACM 1964, 11, 188–209.

20. Milne, W.E. Numerical Solution of Differential Equations; John Wiley & Sons: New York, NY, USA, 1953.

21. Kaur, A.; Kanwar, V. Numerical solution of generalized Kuramoto-Sivashinsky equation using cubic trigonometric B-spline based differential quadrature method and one-step optimized hybrid block method. Int. J. Appl. Comput. Math. 2022, 8, 1–19.

22. Fatunla, S.O. Numerical Methods for Initial Value Problems in Ordinary Differential Equations; Academic Press: Cambridge, MA, USA, 1988.

23. Fatunla, S.O. Block methods for second order ODEs. Int. J. Comput. Math. 1991, 41, 55–63.

24. Lambert, J.D. Computational Methods in Ordinary Differential Equations; John Wiley & Sons: Hoboken, NJ, USA, 1973.

25. Chollom, J.; Ndam, J.; Kumleng, G. On some properties of the block linear multi-step methods. Sci. World J. 2007, 2, 51747.

26. Cash, J.R.; Karp, A.H. A variable order Runge–Kutta method for initial value problems with rapidly varying right-hand sides. ACM Trans. Math. Softw. 1990, 16, 201–222.

27. Fehlberg, E. Classical Fifth-, Sixth-, Seventh-, and Eighth-Order Runge–Kutta Formulas with Stepsize Control; NASA Technical Report R-287; National Aeronautics and Space Administration: Washington, DC, USA, 1968.

28. Stiefel, E.; Bettis, D. Stabilization of Cowell’s method. Numer. Math. 1969, 13, 154–175.

29. Franco, J.; Gómez, I.; Rández, L. Four-stage symplectic and P-stable SDIRKN methods with dispersion of high order. Numer. Algorithms 2001, 26, 347–363.

30. Franco, J.; Palacios, M. High-order P-stable multistep methods. J. Comput. Appl. Math. 1990, 30, 1–10.

31. Simos, T. New open modified Newton Cotes type formulae as multilayer symplectic integrators. Appl. Math. Model. 2013, 37, 1983–1991.

32. Petzold, L.R. An efficient numerical method for highly oscillatory ordinary differential equations. SIAM J. Numer. Anal. 1981, 18, 455–479.

33. Kaur, A.; Kanwar, V.; Ramos, H. A coupled scheme based on uniform algebraic trigonometric tension B-spline and a hybrid block method for Camassa-Holm and Degasperis-Procesi equations. Comput. Appl. Math. 2024, 43, 16.

34. Mittal, R.; Bhatia, R. Numerical solution of second order one dimensional hyperbolic telegraph equation by cubic B-spline collocation method. Appl. Math. Comput. 2013, 220, 496–506.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).