Advances in Zeroing Neural Networks: Convergence Optimization and Robustness in Dynamic Systems

Abstract

1. Introduction

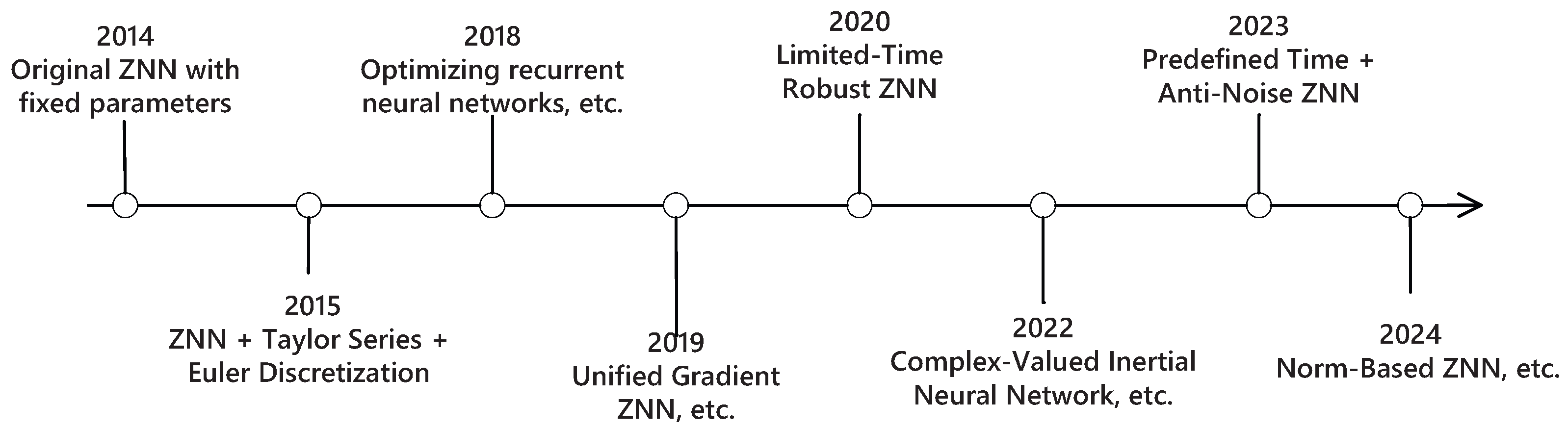

2. The Development of Convergence

2.1. Fixed Parameters

2.2. Variable Parameters

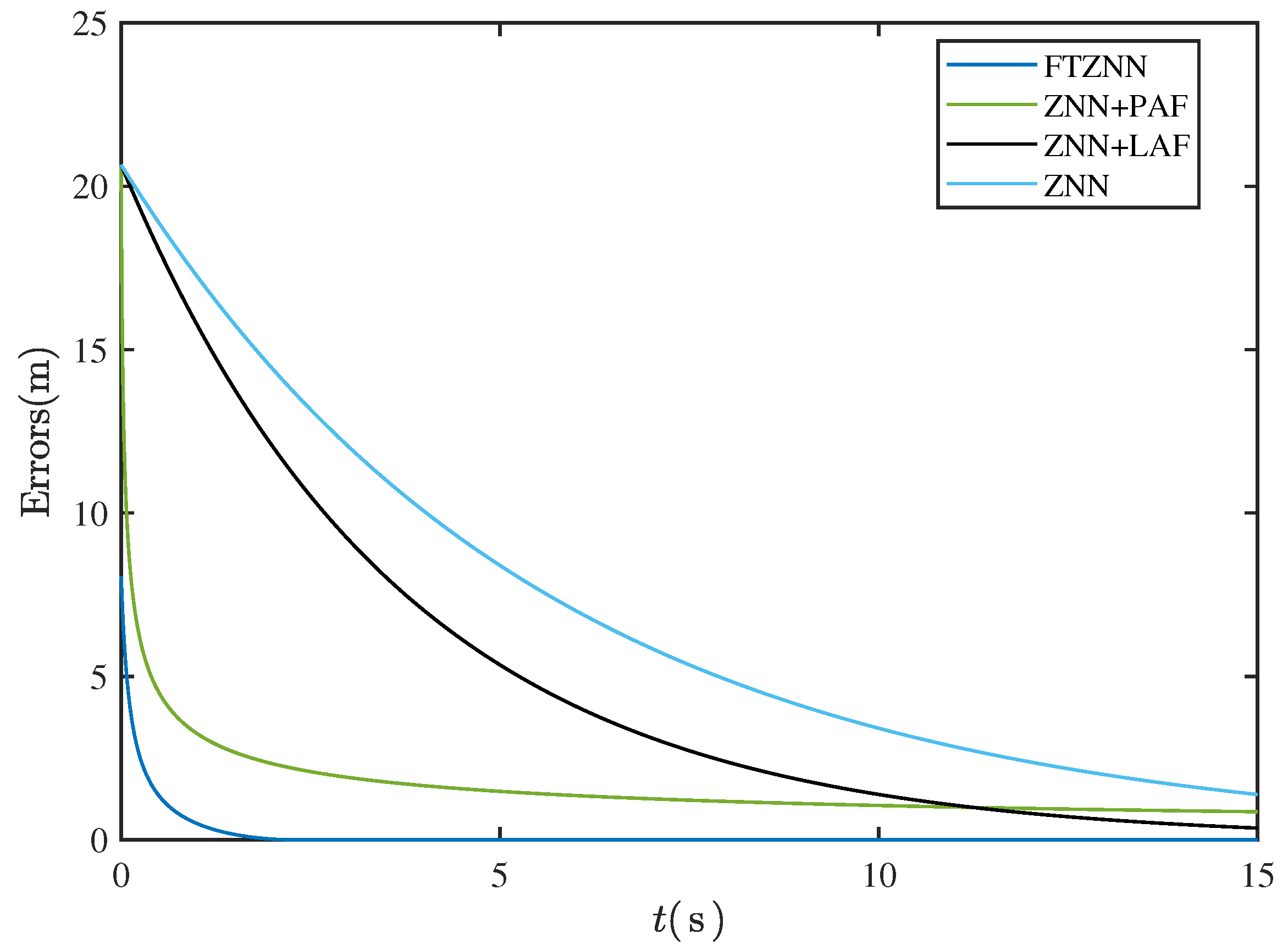

2.3. Activation Function

3. The Development of Robustness

- Structural Adaptations: ZNN incorporates noise factors directly into its structure, enhancing the model’s stability.

- Activation Function Design: Specially designed activation functions within the improved ZNN structure suppress the effect of noise on the output, improving robustness.

- Fuzzy Control Mechanism: A fuzzy inference mechanism smooths noisy input data, improving the model’s adaptability to uncertainty and noise and helping ZNN maintain accuracy and stability in complex environments.
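The first item in the list above can be illustrated with a small numerical sketch. It assumes a scalar time-varying equation a(t)·x(t) = b(t) and compares the plain ZNN error dynamics with a variant that adds an integral feedback term, one structural design found in the noise-tolerant ZNN literature. Whether the models surveyed here use exactly this form is an assumption. The coefficient functions, the gains `gamma` and `lam`, and the constant noise value are all illustrative choices.

```python
import math

# Illustrative (assumed) time-varying coefficients and their derivatives.
def a(t):  return 2.0 + math.sin(t)   # always positive, so division is safe
def da(t): return math.cos(t)
def b(t):  return math.cos(t)
def db(t): return -math.sin(t)

def simulate(gamma=10.0, lam=25.0, noise=0.5, use_integral=True,
             t_end=10.0, dt=1e-3):
    """Euler-integrate a scalar ZNN for a(t)*x(t) = b(t) under constant noise.

    Returns the absolute residual |a(t)*x(t) - b(t)| at t_end.
    """
    x = 0.0          # arbitrary initial state
    integral = 0.0   # running integral of the error e
    t = 0.0
    for _ in range(int(round(t_end / dt))):
        e = a(t) * x - b(t)
        # Perturbed error dynamics: de/dt = -gamma*e - lam*(integral of e) + noise.
        feedback = lam * integral if use_integral else 0.0
        de = -gamma * e - feedback + noise
        # Since de/dt = da*x + a*dx - db, solve for the state derivative dx.
        dx = (de - da(t) * x + db(t)) / a(t)
        x += dt * dx
        integral += dt * e
        t += dt
    return abs(a(t) * x - b(t))

if __name__ == "__main__":
    print("plain ZNN residual:   ", simulate(use_integral=False))
    print("integral ZNN residual:", simulate(use_integral=True))
```

Under these assumptions the plain model settles near a residual of noise/gamma, while the integral term drives the residual toward zero. This is the sense in which a structural change, rather than a larger gain, absorbs a constant disturbance.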

3.1. Structural Adaptations for Stochastic Robustness

3.2. Activation Function Design

3.3. Fuzzy Control Mechanism

4. Application

4.1. Robotic Arm

4.2. Chaotic System

4.3. Multi-Vehicle Cooperation

4.4. Other Aspects of ZNNs

5. Discussion and Conclusions

5.1. Discussion

5.2. Methodological Summary

5.3. Concluding Remarks

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Liu, Z.; Wu, X. Structural analysis of the evolution mechanism of online public opinion and its development stages based on machine learning and social network analysis. Int. J. Comput. Intell. Syst. 2023, 16, 99.

- Zhang, Z.; Ding, C.; Zhang, M.; Luo, Y.; Mai, J. DCDLN: A densely connected convolutional dynamic learning network for malaria disease diagnosis. Neural Netw. 2024, 176, 106339.

- Zhong, J.; Zhao, H.; Zhao, Q.; Zhou, R.; Zhang, L.; Guo, F.; Wang, J. RGCNPPIS: A Residual Graph Convolutional Network for Protein-Protein Interaction Site Prediction. IEEE/ACM Trans. Comput. Biol. Bioinform. 2024, 21, 1676–1684.

- Peng, Y.; Li, M.; Li, Z.; Ma, M.; Wang, M.; He, S. What is the impact of discrete memristor on the performance of neural network: A research on discrete memristor-based BP neural network. Neural Netw. 2025, 185, 107213.

- Zhang, Z.; Zhang, J.; Mai, W. VPT: Video portraits transformer for realistic talking face generation. Neural Netw. 2025, 184, 107122.

- Wei, L.; Jin, L. Collaborative Neural Solution for Time-Varying Nonconvex Optimization with Noise Rejection. IEEE Trans. Emerg. Top. Comput. Intell. 2024, 8, 2935–2948.

- Xiao, R.; Li, W.; Lu, J.; Jin, S. ContexLog: Non-Parsing Log Anomaly Detection with All Information Preservation and Enhanced Contextual Representation. IEEE Trans. Netw. Serv. Manag. 2024, 21, 4750–4762.

- Luo, M.; Wang, K.; Cai, Z.; Liu, A.; Li, Y.; Cheang, C.F. Using Imbalanced Triangle Synthetic Data for Machine Learning Anomaly Detection. Comput. Mater. Contin. 2019, 58, 15–26.

- Chen, S.; Zhou, C.; Li, J.; Peng, H. Asynchronous introspection theory: The underpinnings of phenomenal consciousness in temporal illusion. Minds Mach. 2017, 27, 315–330.

- Jin, J. Resonant amplifier-based sub-harmonic mixer for zero-IF transceiver applications. Integration 2017, 57, 69–73.

- Qin, Z.; Tang, Y.; Tang, F.; Xiao, J.; Huang, C.; Xu, H. Efficient XML query and update processing using a novel prime-based middle fraction labeling scheme. China Commun. 2017, 14, 145–157.

- Zhang, Z.; He, Y.; Mai, W.; Luo, Y.; Li, X.; Cheng, Y.; Huang, X.; Lin, R. Convolutional Dynamically Convergent Differential Neural Network for Brain Signal Classification. IEEE Trans. Neural Netw. Learn. Syst. 2024, 36, 8166–8177.

- Xiang, Q.; Gong, H.; Hua, C. A new discrete-time denoising complex neurodynamics applied to dynamic complex generalized inverse matrices. J. Supercomput. 2025, 81, 1–25.

- Zhang, Z.; Zhu, M.; Ren, X. Double center swarm exploring varying parameter neurodynamic network for non-convex nonlinear programming. Neurocomputing 2025, 619, 129156.

- Zhang, Z.; Yu, H.; Ren, X.; Luo, Y. A swarm exploring neural dynamics method for solving convex multi-objective optimization problem. Neurocomputing 2024, 601, 128203.

- Yan, J.; Jin, L.; Hu, B. Data-driven model predictive control for redundant manipulators with unknown model. IEEE Trans. Cybern. 2024, 54, 5901–5911.

- Tang, Z.; Zhang, Y. Refined self-motion scheme with zero initial velocities and time-varying physical limits via Zhang neurodynamics equivalency. Front. Neurorobot. 2022, 16, 945346.

- Wu, W.; Tian, Y.; Jin, T. A label based ant colony algorithm for heterogeneous vehicle routing with mixed backhaul. Appl. Soft Comput. 2016, 47, 224–234.

- Wang, C.; Wang, Y.; Yuan, Y.; Peng, S.; Li, G.; Yin, P. Joint computation offloading and resource allocation for end-edge collaboration in internet of vehicles via multi-agent reinforcement learning. Neural Netw. 2024, 179, 106621.

- Xiang, Z.; Xiang, C.; Li, T.; Guo, Y. A self-adapting hierarchical actions and structures joint optimization framework for automatic design of robotic and animation skeletons. Soft Comput. 2021, 25, 263–276.

- Liu, M.; Li, Y.; Chen, Y.; Qi, Y.; Jin, L. A Distributed Competitive and Collaborative Coordination for Multirobot Systems. IEEE Trans. Mob. Comput. 2024, 23, 11436–11448.

- Liu, J.; Feng, H.; Tang, Y.; Zhang, L.; Qu, C.; Zeng, X.; Peng, X. A novel hybrid algorithm based on Harris Hawks for tumor feature gene selection. PeerJ Comput. Sci. 2023, 9, e1229.

- Lan, Y.; Zheng, L. Equivalent-input-disturbance-based preview repetitive control for Takagi–Sugeno fuzzy systems. Eur. J. Control 2023, 71, 100781.

- Liu, M.; Jiang, Q.; Li, H.; Cao, X.; Lv, X. Finite-time-convergent support vector neural dynamics for classification. Neurocomputing 2025, 617, 128810.

- Ding, Y.; Mai, W.; Zhang, Z. A novel swarm budorcas taxicolor optimization-based multi-support vector method for transformer fault diagnosis. Neural Netw. 2025, 184, 107120.

- Zhang, Z.; Sun, X.; Li, X.; Liu, Y. An adaptive variable-parameter dynamic learning network for solving constrained time-varying QP problem. Neural Netw. 2025, 184, 106968.

- Chen, L.; Jin, L.; Shang, M. Efficient Loss Landscape Reshaping for Convolutional Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2024, 1–15.

- Liu, J.; Du, X.; Jin, L. A Localization Algorithm for Underwater Acoustic Sensor Networks with Improved Newton Iteration and Simplified Kalman Filter. IEEE Trans. Mob. Comput. 2024, 23, 14459–14470.

- Peng, S.; Zheng, W.; Gao, R.; Lei, K. Fast cooperative energy detection under accuracy constraints in cognitive radio networks. Wirel. Commun. Mob. Comput. 2017, 2017, 3984529.

- Chai, B.; Zhang, K.; Tan, M.; Wang, J. Prescribed time convergence and robust Zeroing Neural Network for solving time-varying linear matrix equation. Int. J. Comput. Math. 2023, 100, 1094–1109.

- Xiao, L.; Zhang, Y. Solving time-varying inverse kinematics problem of wheeled mobile manipulators using Zhang neural network with exponential convergence. Nonlinear Dyn. 2014, 76, 1543–1559.

- Liao, B.; Zhang, Y. From different ZFs to different ZNN models accelerated via Li activation functions to finite-time convergence for time-varying matrix pseudoinversion. Neurocomputing 2014, 133, 512–522.

- Xiao, L. A finite-time convergent neural dynamics for online solution of time-varying linear complex matrix equation. Neurocomputing 2015, 167, 254–259.

- Xiao, L. A new design formula exploited for accelerating Zhang neural network and its application to time-varying matrix inversion. Theor. Comput. Sci. 2016, 647, 50–58.

- Ding, L.; Xiao, L.; Liao, B.; Lu, R.; Peng, H. An improved recurrent neural network for complex-valued systems of linear equation and its application to robotic motion tracking. Front. Neurorobot. 2017, 11, 45.

- Xiao, L.; Zhang, Y.; Li, K.; Liao, B.; Tan, Z. A novel recurrent neural network and its finite-time solution to time-varying complex matrix inversion. Neurocomputing 2019, 331, 483–492.

- Xiao, L.; He, Y.; Wang, Y.; Dai, J.; Wang, R.; Tang, W. A segmented variable-parameter ZNN for dynamic quadratic minimization with improved convergence and robustness. IEEE Trans. Neural Netw. Learn. Syst. 2021, 34, 2413–2424.

- Xiao, L.; He, Y.; Dai, J.; Liu, X.; Liao, B.; Tan, H. A variable-parameter noise-tolerant Zeroing Neural Network for time-variant matrix inversion with guaranteed robustness. IEEE Trans. Neural Netw. Learn. Syst. 2020, 33, 1535–1545.

- Xiao, L.; Li, L.; Tao, J.; Li, W. A predefined-time and anti-noise varying-parameter ZNN model for solving time-varying complex Stein equations. Neurocomputing 2023, 526, 158–168.

- Deng, J.; Li, C.; Chen, R.; Zheng, B.; Zhang, Z.; Yu, J.; Liu, P.X. A Novel Variable-Parameter Variable-Activation-Function Finite-Time Neural Network for Solving Joint-Angle Drift Issues of Redundant-Robot Manipulators. IEEE/ASME Trans. Mechatron. 2024, 30, 1578–1589.

- He, Y.; Xiao, L.; Li, L.; Zuo, Q.; Wang, Y. Two ZNN-Based Unified SMC Schemes for Finite/Fixed/Preassigned-Time Synchronization of Chaotic Systems. IEEE Trans. Emerg. Top. Comput. Intell. 2024, 9, 2108–2121.

- Luo, J.; Gu, Z. Novel Varying-Parameter ZNN Schemes for Solving TVLEIE Under Prescribed Time With UR5 Manipulator Control Application. Int. J. Robust Nonlinear Control 2025.

- Li, X.; Ren, X.; Zhang, Z.; Guo, J.; Luo, Y.; Mai, J.; Liao, B. A varying-parameter complementary neural network for multi-robot tracking and formation via model predictive control. Neurocomputing 2024, 609, 128384.

- Dai, J.; Tan, P.; Xiao, L.; Jia, L.; He, Y.; Luo, J. A Fuzzy Adaptive Zeroing Neural Network Model With Event-Triggered Control for Time-Varying Matrix Inversion. IEEE Trans. Fuzzy Syst. 2023, 31, 3974–3983.

- Xiao, L.; Wang, D.; Luo, L.; Dai, J.; Yan, X.; Li, J. A Double Integral Noise-Tolerant Fuzzy ZNN Model for TVSME Applied to the Synchronization of Chua’s Circuit Chaotic System. IEEE Trans. Fuzzy Syst. 2024, 32, 6214–6223.

- Jagtap, A.D.; Kawaguchi, K.; Karniadakis, G.E. Adaptive activation functions accelerate convergence in deep and physics-informed neural networks. J. Comput. Phys. 2020, 404, 109136.

- Xiao, L. A nonlinearly-activated neurodynamic model and its finite-time solution to equality-constrained quadratic optimization with nonstationary coefficients. Appl. Soft Comput. 2016, 40, 252–259.

- Xiao, L.; Liao, B. A convergence-accelerated Zhang neural network and its solution application to Lyapunov equation. Neurocomputing 2016, 193, 213–218.

- Lv, X.; Xiao, L.; Tan, Z.; Yang, Z. Wsbp function activated Zhang dynamic with finite-time convergence applied to Lyapunov equation. Neurocomputing 2018, 314, 310–315.

- Xiao, L.; Liao, B.; Li, S.; Chen, K. Nonlinear recurrent neural networks for finite-time solution of general time-varying linear matrix equations. Neural Netw. 2018, 98, 102–113.

- Xiao, L.; Li, K.; Tan, Z.; Zhang, Z.; Liao, B.; Chen, K.; Jin, L.; Li, S. Nonlinear gradient neural network for solving system of linear equations. Inf. Process. Lett. 2019, 142, 35–40.

- Lv, X.; Xiao, L.; Tan, Z. Improved Zhang neural network with finite-time convergence for time-varying linear system of equations solving. Inf. Process. Lett. 2019, 147, 88–93.

- Lv, X.; Xiao, L.; Tan, Z.; Yang, Z.; Yuan, J. Improved gradient neural networks for solving Moore–Penrose inverse of full-rank matrix. Neural Process. Lett. 2019, 50, 1993–2005.

- Xiao, L.; Tan, H.; Jia, L.; Dai, J.; Zhang, Y. New error function designs for finite-time ZNN models with application to dynamic matrix inversion. Neurocomputing 2020, 402, 395–408.

- Ye, S.; Zhou, K.; Zain, A.M.; Wang, F.; Yusoff, Y. A modified harmony search algorithm and its applications in weighted fuzzy production rule extraction. Front. Inf. Technol. Electron. Eng. 2023, 24, 1574–1590.

- Qin, F.; Zain, A.M.; Zhou, K.Q. Harmony search algorithm and related variants: A systematic review. Swarm Evol. Comput. 2022, 74, 101126.

- Li, Y.; Yang, G.; Zhu, Y.; Ding, X.; Gong, R. Probability model-based early merge mode decision for dependent views coding in 3D-HEVC. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2018, 14, 1–15.

- Tang, Z.; Zhang, Y. Continuous and discrete gradient-Zhang neuronet (GZN) with analyses for time-variant overdetermined linear equation system solving as well as mobile localization applications. Neurocomputing 2023, 561, 126883.

- Xiao, L.; Yi, Q.; Dai, J.; Li, K.; Hu, Z. Design and analysis of new complex Zeroing Neural Network for a set of dynamic complex linear equations. Neurocomputing 2019, 363, 171–181.

- Liao, B.; Hua, C.; Xu, Q.; Cao, X.; Li, S. Inter-robot management via neighboring robot sensing and measurement using a zeroing neural dynamics approach. Expert Syst. Appl. 2024, 244, 122938.

- Xu, H.; Zhang, B.; Pan, C.; Li, K. Energy-efficient triple modular redundancy scheduling on heterogeneous multi-core real-time systems. J. Parallel Distrib. Comput. 2024, 191, 104915.

- Chu, H.M.; Kong, X.Z.; Liu, J.X.; Zheng, C.H.; Zhang, H. A New Binary Biclustering Algorithm Based on Weight Adjacency Difference Matrix for Analyzing Gene Expression Data. IEEE/ACM Trans. Comput. Biol. Bioinform. 2023, 20, 2802–2809.

- Xie, X.; Peng, S.; Yang, X. Deep Learning-Based Signal-To-Noise Ratio Estimation Using Constellation Diagrams. Mob. Inf. Syst. 2020, 2020, 8840340.

- Maharajan, C.; Sowmiya, C.; Xu, C. Delay dependent complex-valued bidirectional associative memory neural networks with stochastic and impulsive effects: An exponential stability approach. Kybernetika 2024, 60, 317–356.

- Liao, B.; Zhang, Y.; Jin, L. Taylor O(h^3) discretization of ZNN models for dynamic equality-constrained quadratic programming with application to manipulators. IEEE Trans. Neural Netw. Learn. Syst. 2015, 27, 225–237.

- Xiao, L.; Li, S.; Yang, J.; Zhang, Z. A new recurrent neural network with noise-tolerance and finite-time convergence for dynamic quadratic minimization. Neurocomputing 2018, 285, 125–132.

- Xiao, L.; Zhang, Z.; Zhang, Z.; Li, W.; Li, S. Design, verification and robotic application of a novel recurrent neural network for computing dynamic Sylvester equation. Neural Netw. 2018, 105, 185–196.

- Xiao, L.; Li, K.; Duan, M. Computing time-varying quadratic optimization with finite-time convergence and noise tolerance: A unified framework for zeroing neural network. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3360–3369.

- Liao, B.; Xiang, Q.; Li, S. Bounded Z-type neurodynamics with limited-time convergence and noise tolerance for calculating time-dependent Lyapunov equation. Neurocomputing 2019, 325, 234–241.

- Xiang, Q.; Liao, B.; Xiao, L.; Lin, L.; Li, S. Discrete-time noise-tolerant Zhang neural network for dynamic matrix pseudoinversion. Soft Comput. 2019, 23, 755–766.

- Li, W.; Xiao, L.; Liao, B. A finite-time convergent and noise-rejection recurrent neural network and its discretization for dynamic nonlinear equations solving. IEEE Trans. Cybern. 2019, 50, 3195–3207.

- Xiao, L.; Dai, J.; Lu, R.; Li, S.; Li, J.; Wang, S. Design and comprehensive analysis of a noise-tolerant ZNN model with limited-time convergence for time-dependent nonlinear minimization. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 5339–5348.

- Zhang, Y.; Li, S.; Weng, J.; Liao, B. GNN model for time-varying matrix inversion with robust finite-time convergence. IEEE Trans. Neural Netw. Learn. Syst. 2022, 35, 559–569.

- Long, C.; Zhang, G.; Zeng, Z.; Hu, J. Finite-time stabilization of complex-valued neural networks with proportional delays and inertial terms: A non-separation approach. Neural Netw. 2022, 148, 86–95.

- Liao, B.; Han, L.; Cao, X.; Li, S.; Li, J. Double integral-enhanced Zeroing Neural Network with linear noise rejection for time-varying matrix inverse. CAAI Trans. Intell. Technol. 2024, 9, 197–210.

- Dai, L.; Xu, H.; Zhang, Y.; Liao, B. Norm-based zeroing neural dynamics for time-variant non-linear equations. CAAI Trans. Intell. Technol. 2024, 9, 1561–1571.

- Luo, Y.; Li, X.; Li, Z.; Xie, J.; Zhang, Z.; Li, X. A Novel Swarm-Exploring Neurodynamic Network for Obtaining Global Optimal Solutions to Nonconvex Nonlinear Programming Problems. IEEE Trans. Cybern. 2024, 54, 5866–5876.

- Li, W.; Liao, B.; Xiao, L.; Lu, R. A recurrent neural network with predefined-time convergence and improved noise tolerance for dynamic matrix square root finding. Neurocomputing 2019, 337, 262–273.

- Xiao, L.; Zhang, Y.; Dai, J.; Chen, K.; Yang, S.; Li, W.; Liao, B.; Ding, L.; Li, J. A new noise-tolerant and predefined-time ZNN model for time-dependent matrix inversion. Neural Netw. 2019, 117, 124–134.

- Liao, B.; Wang, Y.; Li, J.; Guo, D.; He, Y. Harmonic Noise-Tolerant ZNN for Dynamic Matrix Pseudoinversion and Its Application to Robot Manipulator. Front. Neurorobot. 2022, 16, 928636.

- Dai, Z.; Guo, X. Investigation of E-Commerce Security and Data Platform Based on the Era of Big Data of the Internet of Things. Mob. Inf. Syst. 2022, 2022, 3023298.

- Jiang, W.; Zhou, K.Q.; Sarkheyli-Hägele, A.; Zain, A.M. Modeling, reasoning, and application of fuzzy Petri net model: A survey. Artif. Intell. Rev. 2022, 55, 6567–6605.

- Zhang, Z.; Zheng, L.; Weng, J.; Mao, Y.; Lu, W.; Xiao, L. A new varying-parameter recurrent neural-network for online solution of time-varying Sylvester equation. IEEE Trans. Cybern. 2018, 48, 3135–3148.

- Xie, Y.; Xiao, L. A Fuzzy Adaptive Zeroing Neural Network with Noise Tolerance for Time-varying Stein Matrix Equation Solving. In Proceedings of the 2023 International Annual Conference on Complex Systems and Intelligent Science (CSIS-IAC), Shenzhen, China, 20–22 October 2023; pp. 796–801.

- Jia, L.; Xiao, L.; Dai, J.; Wang, Y. Intensive Noise-Tolerant Zeroing Neural Network Based on a Novel Fuzzy Control Approach. IEEE Trans. Fuzzy Syst. 2023, 31, 4350–4360.

- Liufu, Y.; Jin, L.; Li, S. Adaptive Noise-Learning Differential Neural Solution for Time-Dependent Equality-Constrained Quadratic Optimization. IEEE Trans. Neural Netw. Learn. Syst. 2025, 1–12.

- Ding, L.; Zeng, H.B.; Wang, W.; Yu, F. Improved Stability Criteria of Static Recurrent Neural Networks with a Time-Varying Delay. Sci. World J. 2014, 2014, 391282.

- Xiao, L.; Lu, R. Finite-time solution to nonlinear equation using recurrent neural dynamics with a specially-constructed activation function. Neurocomputing 2015, 151, 246–251.

- Jian, Z.; Xiao, L.; Li, K.; Zuo, Q.; Zhang, Y. Adaptive coefficient designs for nonlinear activation function and its application to Zeroing Neural Network for solving time-varying Sylvester equation. J. Frankl. Inst. 2020, 357, 9909–9929.

- Zhou, K.Q.; Gui, W.H.; Mo, L.P.; Zain, A.M. A bidirectional diagnosis algorithm of fuzzy Petri net using inner-reasoning-path. Symmetry 2018, 10, 192.

- Xiao, L. Accelerating a recurrent neural network to finite-time convergence using a new design formula and its application to time-varying matrix square root. J. Frankl. Inst. 2017, 354, 5667–5677.

- Xiao, L.; Li, L.; Huang, W.; Li, X.; Jia, L. A new predefined time Zeroing Neural Network with drop conservatism for matrix flows inversion and its application. IEEE Trans. Cybern. 2022, 54, 752–761.

- Jia, L.; Xiao, L.; Dai, J.; Qi, Z.; Zhang, Z.; Zhang, Y. Design and application of an adaptive fuzzy control strategy to Zeroing Neural Network for solving time-variant QP problem. IEEE Trans. Fuzzy Syst. 2020, 29, 1544–1555.

- Liu, J.; Qu, C.; Zhang, L.; Tang, Y.; Li, J.; Feng, H.; Zeng, X.; Peng, X. A new hybrid algorithm for three-stage gene selection based on whale optimization. Sci. Rep. 2023, 13, 3783.

- Qu, C.; Zhang, L.; Li, J.; Deng, F.; Tang, Y.; Zeng, X.; Peng, X. Improving feature selection performance for classification of gene expression data using Harris Hawks optimizer with variable neighborhood learning. Briefings Bioinform. 2021, 22, bbab097.

- Sun, L.; Mo, Z.; Yan, F.; Xia, L.; Shan, F.; Ding, Z.; Song, B.; Gao, W.; Shao, W.; Shi, F.; et al. Adaptive feature selection guided deep forest for COVID-19 classification with chest CT. IEEE J. Biomed. Health Inform. 2020, 24, 2798–2805.

- Sun, Q.; Wu, X. A deep learning-based approach for emotional analysis of sports dance. PeerJ Comput. Sci. 2023, 9, e1441.

- Yu, Y.; Wang, D.; Faisal, M.; Jabeen, F.; Johar, S. Decision support system for evaluating the role of music in network-based game for sustaining effectiveness. Soft Comput. 2022, 26, 10775–10788.

- Xiang, Z.; Guo, Y. Controlling melody structures in automatic game soundtrack compositions with adversarial learning guided gaussian mixture models. IEEE Trans. Games 2020, 13, 193–204.

- Cao, X.; Peng, C.; Zheng, Y.; Li, S.; Ha, T.T.; Shutyaev, V.; Katsikis, V.; Stanimirovic, P. Neural networks for portfolio analysis in high-frequency trading. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 18052–18061.

- Jin, L.; Huang, R.; Liu, M.; Ma, X. Cerebellum-Inspired Learning and Control Scheme for Redundant Manipulators at Joint Velocity Level. IEEE Trans. Cybern. 2024, 54, 6297–6306.

- Bao, G.; Ma, L.; Yi, X. Recent advances on cooperative control of heterogeneous multi-agent systems subject to constraints: A survey. Syst. Sci. Control Eng. 2022, 10, 539–551.

- Kaddoum, G. Wireless chaos-based communication systems: A comprehensive survey. IEEE Access 2016, 4, 2621–2648.

- Shao, W.; Fu, Y.; Cheng, M.; Deng, L.; Liu, D. Chaos synchronization based on hybrid entropy sources and applications to secure communication. IEEE Photonics Technol. Lett. 2021, 33, 1038–1041.

- Zhang, Y.; Xiao, Z.; Guo, D.; Mao, M.; Yin, Y. Singularity-conquering tracking control of a class of chaotic systems using Zhang-gradient dynamics. IET Control Theory Appl. 2015, 9, 871–881.

- Xu, H.; Zhang, B.; Pan, C.; Li, K. Energy-efficient scheduling for parallel applications with reliability and time constraints on heterogeneous distributed systems. J. Syst. Archit. 2024, 152, 103173.

- Xiao, L. A nonlinearly activated neural dynamics and its finite-time solution to time-varying nonlinear equation. Neurocomputing 2016, 173, 1983–1988.

- Xu, H.; Li, R.; Pan, C.; Li, K. Minimizing energy consumption with reliability goal on heterogeneous embedded systems. J. Parallel Distrib. Comput. 2019, 127, 44–57.

- Jin, J. Multi-function current differencing cascaded transconductance amplifier (MCDCTA) and its application to current-mode multiphase sinusoidal oscillator. Wirel. Pers. Commun. 2016, 86, 367–383.

- Yang, X.; Lei, K.; Peng, S.; Hu, L.; Li, S.; Cao, X. Threshold setting for multiple primary user spectrum sensing via spherical detector. IEEE Wirel. Commun. Lett. 2018, 8, 488–491.

- Yang, X.; Lei, K.; Peng, S.; Cao, X.; Gao, X. Analytical expressions for the probability of false-alarm and decision threshold of Hadamard ratio detector in non-asymptotic scenarios. IEEE Commun. Lett. 2018, 22, 1018–1021.

- Li, J.; Mao, M.; Zhang, Y.; Chen, D.; Yin, Y. ZD, ZG and IOL controllers and comparisons for nonlinear system output tracking with DBZ problem conquered in different relative-degree cases. Asian J. Control 2017, 19, 1482–1495.

- Charif, F.; Benchabane, A.; Djedi, N.; Taleb-Ahmed, A. Horn & Schunck Meets a Discrete Zhang Neural Networks for Computing 2D Optical Flow. 2013. Available online: https://dspace.univ-ouargla.dz/jspui/handle/123456789/2644 (accessed on 27 May 2025).

- Chen, J.; Sun, W.; Zheng, S. New predefined-time stability theorem and synchronization of fractional-order memristive delayed BAM neural networks. Commun. Nonlinear Sci. Numer. Simul. 2025, 148, 108850.

- Xiao, L.; Zhang, Y.; Liao, B.; Zhang, Z.; Ding, L.; Jin, L. A velocity-level bi-criteria optimization scheme for coordinated path tracking of dual robot manipulators using recurrent neural network. Front. Neurorobot. 2017, 11, 47.

- Cai, J.; Dai, W.; Chen, J.; Yi, C. Zeroing Neural Networks combined with gradient for solving time-varying linear matrix equations in finite time with noise resistance. Mathematics 2022, 10, 4828.

- Liao, B.; Zhang, Y. Different complex ZFs leading to different complex ZNN models for time-varying complex generalized inverse matrices. IEEE Trans. Neural Netw. Learn. Syst. 2013, 25, 1621–1631.

- Zhang, Y.; Chen, K.; Tan, H.Z. Performance analysis of gradient neural network exploited for online time-varying matrix inversion. IEEE Trans. Autom. Control 2009, 54, 1940–1945.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Mayne, D.Q. Model predictive control: Recent developments and future promise. Automatica 2014, 50, 2967–2986.

| Type | Specific Forms | Rate | Application | Literature |
|---|---|---|---|---|
| Constant Parameter | | no | Used for static problems | [31,32,33,34] |
| Varying Parameter | | slow | Used for dynamic optimization problems; can accelerate convergence | [35,36,37,38,39,40,41,42] |
| | | slow | | |
| | | fast | | |
| | | fast | | |
| Fuzzy Parameter | | adaptive | Handles uncertainty | [44,45] |
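The difference between a constant and a varying parameter in the table above can be made concrete with a short worked derivation. It assumes the linear ZNN error dynamics for a scalar error $e(t)$ with a time-dependent gain $\gamma(t) > 0$. The power-type gain $\gamma(t) = t^{p} + p$ is an illustrative choice of varying parameter and is not taken from the table, whose specific forms are not reproduced here.

$$
\dot{e}(t) = -\gamma(t)\,e(t)
\quad\Longrightarrow\quad
e(t) = e(0)\,\exp\!\left(-\int_{0}^{t}\gamma(s)\,\mathrm{d}s\right)
$$

$$
\gamma(t) \equiv p:\quad e(t) = e(0)\,e^{-p t},
\qquad
\gamma(t) = t^{p} + p:\quad e(t) = e(0)\,e^{-p t}\,\exp\!\left(-\frac{t^{p+1}}{p+1}\right)
$$

Under these assumptions the varying gain multiplies the constant-gain solution by an extra factor $\exp\!\left(-t^{p+1}/(p+1)\right)$, which decays faster than any exponential. This is one way to read the claim that a varying parameter can accelerate convergence.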

| Method | Principle | References |
|---|---|---|
| Structure-based | Embeds noise factors into network structure via discretization and design changes, suppressing noise and ensuring finite-time convergence. | [65,66,71,72,73,75] |
| Activation-based | Employs advanced activation functions (e.g., predefined time, harmonic) to enhance robustness and suppress noise effects. | [78,79,80] |
| Fuzzy-based | Integrates fuzzy logic for adaptive parameter adjustment under noise, improving system accuracy and adaptability. | [44,45,84,85] |

| Name | Formulation | Convergence Time | Robustness | Literature |
|---|---|---|---|---|
| Linear activation function (LAF) | | Infinite time | weak | [53,87,88] |
| Power activation function (PAF) | | Infinite time / Finite time | weak | [51,53,89] |
| Bi-power activation function (BPAF) | | Infinite time | weak | [48,53,90] |
| Sign-bi-power activation function (SBPAF) | | Finite time | weak | [49,79,91] |
| Novel sign-bi-power activation function (NSBPAF) | | Predefined time | strong | [79,92,93] |
| Novel exponential activation function (NEAF) | | Predefined time | weak | [39] |
| Hyperbolic sine activation function (HSAF) | | Infinite time | strong | [60] |
| Weighted sigmoid bi-power activation function (WSBPAF) | | Finite time | weak | [49,51,59] |
| Logistic activation function (LAF2) | | Infinite time | strong | [54,55,80] |
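The contrast between "infinite time" and "finite time" convergence in the table above can be sketched numerically. The example integrates the scalar error dynamics de/dt = -gamma·phi(e) for two activation functions, using forms that are common in the ZNN literature: the linear function phi(e) = e and the sign-bi-power function with exponent parameter `r`. These formulas, along with `gamma`, `r`, the tolerance, and the step size, are assumptions for illustration. The table's own formulations are not reproduced here.

```python
import math

def linear(e):
    """Linear activation: phi(e) = e (exponential, i.e. infinite-time, decay)."""
    return e

def sign_bi_power(e, r=0.5):
    """Sign-bi-power activation, assumed form:
    phi(e) = 0.5*|e|^r*sgn(e) + 0.5*|e|^(1/r)*sgn(e), with 0 < r < 1."""
    sgn = math.copysign(1.0, e) if e != 0.0 else 0.0
    return 0.5 * sgn * (abs(e) ** r + abs(e) ** (1.0 / r))

def settling_time(phi, gamma=1.0, e0=1.0, tol=1e-4, dt=1e-3, t_max=50.0):
    """Euler-integrate de/dt = -gamma*phi(e); return the first time |e| < tol."""
    e, t = e0, 0.0
    while abs(e) >= tol:
        if t >= t_max:
            return math.inf  # did not settle within the simulated horizon
        e -= dt * gamma * phi(e)
        t += dt
    return t

if __name__ == "__main__":
    print("linear:       ", settling_time(linear))
    print("sign-bi-power:", settling_time(sign_bi_power))
```

With these defaults the sign-bi-power run reaches the tolerance in roughly 3.3 time units and the linear run in roughly 9.2. Tightening `tol` lengthens the linear settling time without bound, whereas the sign-bi-power time stays below a fixed limit (4/gamma for r = 0.5 and e0 = 1), which is the finite-time property the table refers to.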

| Application Scenario | Model Name | Noise Resistance | Discrete or Continuous | Reference |
|---|---|---|---|---|
| Robotic Arm Control | RNN (Dual-Arm Path Tracking) | No | Continuous | [115] |
| | ZNN (Mobile Manipulator Inverse Kinematics) | No | Continuous | [31] |
| | Discrete Noise-Resistant ZNN (Pseudo-Inverse) | Yes | Discrete | [70] |
| Chaotic Systems | ZNN + Sliding Mode Control | No | Continuous | [41] |
| | Double Integral Fuzzy ZNN | Yes | Continuous | [45] |
| | ZGD (Zhang Gradient Dynamics) | No | Continuous | [105] |
| Multi-Robot Systems | Variable-Parameter ZNN (Inequality Constraints) | No | Continuous | [42] |
| | Cooperative NN (Noise-Resistant Non-Convex Optimization) | Yes | Continuous | [6] |
| | ZND (Multi-Robot Collaboration) | No | Continuous | [60] |
| Spectrum Estimation and Others | ZD, ZG and IOL Controllers | No | Continuous | [112] |
| | Discrete ZNN (Optical Flow Computation) | No | Discrete | [113] |
| | Robust ZNN (Linear Equation Solving) | Yes | Continuous | [30] |
| | Variable-Parameter Noise-Resistant ZNN (Matrix Inversion) | Yes | Continuous | [38] |
| | Predefined-Time Noise-Resistant ZNN (Stein Equation) | Yes | Continuous | [39] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, X.; Liao, B. Advances in Zeroing Neural Networks: Convergence Optimization and Robustness in Dynamic Systems. Mathematics 2025, 13, 1801. https://doi.org/10.3390/math13111801
