Abstract

In the realm of natural language processing (NLP), text classification constitutes a task of paramount significance for large language models (LLMs). Nevertheless, extant methodologies predominantly depend on the output generated by the final layer of LLMs, thereby neglecting the wealth of information encapsulated within neurons residing in intermediate layers. To surmount this shortcoming, we introduce LENS (Linear Exploration and Neuron Selection), an innovative technique designed to identify and sparsely integrate salient neurons from intermediate layers via a process of linear exploration. Subsequently, these neurons are transmitted to downstream modules dedicated to text classification. This strategy effectively mitigates noise originating from non-pertinent neurons, thereby enhancing both the accuracy and computational efficiency of the model. The detection of telecommunication fraud text represents a formidable challenge within NLP, primarily attributed to its increasingly covert nature and the inherent limitations of current detection algorithms. In an effort to tackle the challenges of data scarcity and suboptimal classification accuracy, we have developed the LENS-RMHR (Linear Exploration and Neuron Selection with RoBERTa, Multi-head Mechanism, and Residual Connections) model, which extends the LENS framework. By incorporating RoBERTa, a multi-head attention mechanism, and residual connections, the LENS-RMHR model augments the feature representation capabilities and improves training efficiency. Utilizing the CCL2023 telecommunications fraud dataset as a foundation, we have constructed an expanded dataset encompassing eight distinct categories that encapsulate a diverse array of fraud types. Furthermore, a dual-loss function has been employed to bolster the model’s performance in multi-class classification scenarios. 
Experimental results reveal that LENS-RMHR demonstrates superior performance across multiple benchmark datasets, underscoring its extensive potential for application in the domains of text classification and telecommunications fraud detection.

MSC:

68T50

1. Introduction

As information society develops rapidly, cybercrime, especially telecommunications fraud, has become a significant global challenge. The U.S. Spam and Scam Report indicates that in 2023, over 56 million American adults fell victim to telecommunications fraud [1], with total losses reaching USD 25.4 billion. According to the U.S. National Consumer Law Center and the Electronic Privacy Information Center, Americans receive more than 33 million fraudulent calls daily [2]. In China, the National Anti-Fraud Center has taken comprehensive measures, such as issuing 9.4 million warnings, blocking billions of fraudulent calls and messages, and preventing potential losses amounting to RMB 328.8 billion [3,4]. These efforts highlight the urgent need for continuous innovation and vigilance in combating telecommunications fraud.

Telecommunications fraud has become a global issue that affects countless individuals and organizations, causing significant financial losses and eroding public trust in communication channels [5,6]. As the tactics employed by fraudsters become more sophisticated and diverse [7,8], traditional detection methods struggle to keep pace [9]. The development of advanced detection models and the availability of high-quality datasets are crucial for effectively addressing this challenge [10,11,12]. In this context, the LENS framework and the eight-category fraud dataset we propose aim to provide a more robust and efficient solution for telecommunications fraud detection. Our work seeks to contribute to the ongoing efforts to combat this pressing issue and to advance the field of fraud detection research.

In this article, our model is built on the RoBERTa base model, although many alternatives are available, such as XLNet [13] and DeBERTa. DeBERTa was proposed by Microsoft [14] and has gone through three versions. It uses relative position encoding and includes a generator, which makes it more powerful. Nevertheless, we chose the RoBERTa base model: we believe that its dynamic masking and larger training corpus give it better adaptability and generalization across different text data and tasks. Its complexity and training cost are lower than those of DeBERTa, and as a single model it outperforms XLNet.

The LENS framework represents a novel approach to fraud detection, leveraging the power of large language models while incorporating multi-head attention mechanisms and residual connections to enhance text classification and fraud detection performance. By selecting and sparsely integrating prominent neurons from intermediate layers, the framework effectively reduces noise from irrelevant neurons, thereby improving the model’s accuracy and efficiency. The comprehensive eight-category dataset we have constructed addresses a critical gap in current telecommunications fraud research and provides a valuable resource for further studies in this field. The inclusion of a diverse range of fraud categories ensures that the dataset can support the development of more effective and generalized detection models.

Our proposed loss framework, which combines the inconsistency loss function with the cross-entropy loss function, offers a new perspective for optimizing telecommunications fraud detection models. This innovative approach has the potential to significantly improve fraud detection performance and to inspire further research in this area. The LENS-RMHR model, built upon the LENS framework and incorporating RoBERTa, a multi-head attention mechanism, and residual connections, demonstrates enhanced feature representation and improved training efficiency. This model represents a significant advancement in the field of telecommunications fraud detection and has the potential to make a substantial contribution to the ongoing efforts to combat this pressing issue.

In summary, our work addresses the challenges posed by the rapid evolution of telecommunications fraud tactics and the scarcity of datasets. By proposing the LENS framework, constructing a comprehensive eight-category dataset, developing a novel loss framework, and introducing the LENS-RMHR model, we aim to provide a more effective and efficient solution for telecommunications fraud detection. Our contributions have the potential to significantly advance the field of fraud detection research and to help mitigate the impact of telecommunications fraud on society.

2. Related Work

Gurnee [15] explored how large language models (LLMs) represent interpretable features via internal neuron activations. By training k-sparse linear classifier probes to predict input features, the study demonstrated that LLMs can learn spatial and temporal linear representations across different levels, with these representations becoming sparser as the model size increases. Jiao [16] proposed the SPIN framework, a model-agnostic plug-in approach aimed at improving text classification performance. Unlike traditional methods that rely solely on the final layer’s output, SPIN sparsifies and integrates internal neurons from intermediate layers of LLMs, leveraging internal representations as multi-level features. This approach has led to significant improvements in classification accuracy, efficiency, and interpretability. These studies provide valuable insights into the internal structure and functionality of LLMs, highlighting the importance of sparse probes in advancing model interpretability research.

2.1. Technical Progress and Data Challenges in Chinese Telecom Fraud Detection

Recent developments in natural language processing (NLP) have significantly enhanced the detection of fraudulent Chinese texts. For example, Sun [17] introduced CCL23-Eval Task 6, which focuses on classifying cases of telecommunications and network fraud. The study utilized a dataset from a public anti-fraud database and evaluated various deep learning models, including a Transformer-based architecture, demonstrating strong classification performance. It emphasized the effectiveness of pre-trained models and fine-tuning techniques in domain-specific fraud detection tasks. However, the dataset primarily consists of police transcript data, limiting its applicability to real-world anti-fraud scenarios. Similarly, Xu [8] proposed a hybrid model combining BERT with BiLCNN (bidirectional long short-term memory and convolutional neural network) for classifying fraudulent phone call texts. While this model achieved superior accuracy compared to other methods, the lack of public access to the experimental dataset has hindered further research and development.

Moreover, Zhang [11] released the Fake Base Station (FBS) dataset, which contains 14,000 spam and fraud SMS messages sent from actual fake base stations in China. This dataset offers researchers deeper insights into the FBS spam SMS ecosystem. Li [12] introduced a five-category fraud dataset covering four types of fraud, with a total of 12,000 samples. However, the dataset’s limited size and incomplete categorization restrict its utility. These studies indicate that telecommunications fraud detection still has significant room for improvement. Addressing the increasingly serious problem of telecommunications fraud requires the construction of larger and more comprehensive datasets, as well as the development of more powerful, efficient, and accurate detection models.

2.2. Loss Function Optimization

In deep learning and machine learning, the selection and optimization of loss functions play a pivotal role in determining model performance. Recent research has focused on developing new loss functions and their combinations to enhance model performance on specific tasks. Hajiabadi [18] proposed an innovative loss function that combines multiple individual loss functions linearly and integrates them into a deep neural network. By learning the weights of these loss functions through backpropagation, this approach achieved significant improvements over traditional methods in text classification tasks. Li [19] introduced a novel regularization technique to enhance diversity among attention heads in the multi-head attention mechanism. By incorporating a divergence regularization term, the method encourages the model to capture diverse features across different representation subspaces and locations. These studies highlight the importance of loss function optimization in advancing the performance of deep learning models. In natural language processing tasks like text classification, such optimizations not only improve task-specific performance but also significantly enhance the model’s generalization ability.

3. Methods

Traditional text classification methods typically rely on the final output of a large language model, whereas our approach leverages neurons from the intermediate layers (including those from the last and first layers) for text classification. First, we identify effective neurons in the intermediate layers using a linear detection method and integrate them into subsequent modules, such as multi-head attention and residual connections, to achieve more accurate text classification. In terms of loss function design, traditional methods generally use a single loss function, whereas we combine inconsistency loss with cross-entropy loss to enhance the model’s performance.

3.1. Utilization and Selection of Internal Neurons

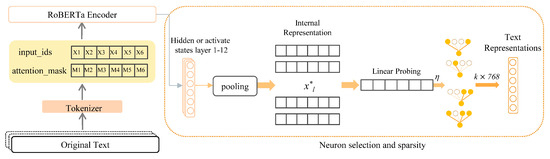

To effectively utilize the intermediate-layer neuron representations in LLMs, we adopt a neuron selection and sparsification strategy [16] to optimize the performance of text classification [20]. All layer representations of the model are extracted in a single forward pass. However, these original representations contain substantial redundant information and need to be further filtered to enhance the accuracy and efficiency of the model.

First, the hidden states and activation values are extracted from each layer and treated as potential feature representations:

$$x_l \in \mathbb{R}^{L \times D}$$

where $x_l$ represents the internal representation of the l-th layer, L is the sentence length, and D is the dimension of the internal representation. However, not all neurons hold equal importance for the target task. Some irrelevant neurons may introduce noise, interfere with the representation of key features, and thereby degrade model performance. To address this issue, we introduce the Linear Probing method to assess the importance of neurons in each layer.

Specifically, we evaluate the saliency of each neuron in the target task by training a linear model with L1 regularization:

Here, σ represents the sigmoid function, λ denotes the regularization coefficient, N is the number of training samples, and $W_l$ and $b_l$ are the weights and biases of the linear model for the l-th layer. The loss function incorporates L1 regularization, which promotes sparsity by driving the weights of neurons with minimal contributions to zero, so that the model automatically disregards them. The magnitude of the remaining weights reflects the relative importance of each neuron.
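A minimal sketch of such a probe is given below, assuming a binary logistic objective (mean log-loss plus an L1 penalty on the weights) fitted by proximal gradient descent. The function names, the toy data, and the values of `lam`, `lr`, and `steps` are illustrative assumptions, not the settings used in the experiments.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def soft_threshold(value, threshold):
    """Proximal step for the L1 penalty: shrink toward zero, clip small values to 0."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0

def train_l1_probe(features, labels, lam=0.2, lr=0.5, steps=500):
    """Fit a binary logistic probe; assumed objective: mean log-loss + lam * sum(|w_j|)."""
    n, d = len(features), len(features[0])
    w, b = [0.0] * d, 0.0
    for _ in range(steps):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y in zip(features, labels):
            residual = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) - y
            for j in range(d):
                grad_w[j] += residual * x[j] / n
            grad_b += residual / n
        # Gradient step on the log-loss, then shrinkage for the L1 term.
        w = [soft_threshold(wj - lr * gj, lr * lam) for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

# Toy data: neuron 0 determines the label; neuron 1 is only weakly correlated with it.
X = [[1, 1], [1, -1], [-1, 1], [-1, -1], [1, 1], [-1, -1]]
y = [1, 1, 0, 0, 1, 0]
weights, bias = train_l1_probe(X, y)
print(weights)
```

On this toy input the penalty keeps the weight of the weakly correlated neuron at exactly zero while the informative neuron receives a clearly positive weight, which is the behavior the selection step relies on.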

Based on these weights, a sparsification threshold is defined to retain the neurons that contribute most to the task:

Here, the threshold is applied to the normalized weights, and the neurons that pass it form the selected subset of significant neurons.
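One way to realize this selection is sketched below, under the assumption that the threshold is a cumulative share of the normalized weight magnitudes: neurons are ranked by magnitude and added until their combined share reaches the threshold. The function name `select_neurons` and the example weights are hypothetical.

```python
def select_neurons(weights, threshold=0.85):
    """Return indices of the most important neurons, most important first.

    Assumed rule: normalize |w_i| to sum to 1, sort in descending order, and
    keep the smallest prefix whose cumulative share reaches `threshold`.
    """
    total = sum(abs(w) for w in weights)
    if total == 0:
        return []
    ranked = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    selected, cumulative = [], 0.0
    for i in ranked:
        selected.append(i)
        cumulative += abs(weights[i]) / total
        if cumulative >= threshold:
            break
    return selected

probe_weights = [0.5, -0.3, 0.1, 0.05, 0.05]
print(select_neurons(probe_weights, threshold=0.85))  # [0, 1, 2]
```

Under this reading, a lower threshold keeps fewer neurons and a higher one keeps more, which matches the trade-off between information loss and redundancy discussed in the experiments.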

The neuron dimensions extracted from different datasets may vary. To ensure consistency in subsequent calculations, it is essential to standardize these dimensions. The extracted dimensions are re-ordered based on their importance. Multiple approaches were tested, and dimensions were ultimately extracted as k × 768:

where x is the original neuron representation, wi is the importance weight of the i-th dimension, and x′ is the extracted neuron representation with a dimension of k × 768.
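The standardization step can be illustrated with the following sketch. It assumes that neurons are reordered by the magnitude of their importance weights and that exactly k × 768 values are kept, with zero-padding when fewer neurons are available; the padding rule and the function name `standardize` are assumptions made here for illustration.

```python
def standardize(x, importance, k, width=768):
    """Reorder neurons by importance and return exactly int(k * width) values."""
    target = int(k * width)
    order = sorted(range(len(x)), key=lambda i: abs(importance[i]), reverse=True)
    kept = [x[i] for i in order[:target]]
    # Zero-pad short inputs so every sample has the same length (assumption).
    return kept + [0.0] * (target - len(kept))

# Small demonstration with width=2 instead of 768.
activations = [10.0, 20.0, 30.0, 40.0, 50.0]
importance = [0.1, 0.9, 0.3, 0.7, 0.5]
print(standardize(activations, importance, k=1.5, width=2))  # [20.0, 40.0, 50.0]
```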

This strategy effectively reduces irrelevant information within the model while enhancing task-specific feature representations. By employing this selection and sparsification approach, both the accuracy of text classification and the efficiency of the model are significantly improved, with notable reductions in computational cost and inference time. The steps of utilizing and selecting internal neurons are shown in Figure 1.

Figure 1.

Constructing text representations using internal neurons.

3.2. Design and Optimization of Loss Function

The choice of loss function plays a crucial role in determining the performance and training stability of deep learning models. While traditional cross-entropy loss performs well in multi-class classification tasks, its effectiveness diminishes when dealing with class imbalance and noisy data. To address these challenges, a hybrid strategy is proposed that combines cross-entropy loss [21] with inconsistency loss to take advantage of the strengths of both and improve the generalization capacity of the model.

Cross-entropy loss is widely used in deep learning for multi-class classification tasks. Its core principle is to minimize the negative log-likelihood by maximizing the similarity between the predicted and true distributions. This sensitivity to probability distributions allows cross-entropy loss to effectively guide the model towards accurate classification. However, its performance may degrade in scenarios with class imbalance or noisy annotations, where the model often overfits to the majority classes, neglecting the minority ones. The formula for cross-entropy loss is as follows:

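$$L_{CE} = -\frac{1}{N}\sum_{i=1}^{N}\sum_{j=1}^{M} y_{ij}\,\log y'_{ij}$$

where N is the number of samples, M is the number of categories, $y_{ij}$ is the true label of sample i for category j, and $y'_{ij}$ is the probability predicted by the model that sample i belongs to category j.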

Inconsistency loss addresses the limitations of traditional loss functions by promoting diversity across different heads of attention. It consists of three main components.

The first component, subspace inconsistency, encourages the model to learn diverse feature representations by minimizing subspace similarity between different heads. This is achieved by calculating the angular difference between head feature vectors, as shown in the following formula:

The second component, position inconsistency, ensures that different heads focus on distinct positions within the input. This prevents the attention mechanism from concentrating on the same areas. By comparing the overlap in attention distributions between heads, the model diversity is enhanced. The corresponding formula is provided below:

The third component, representation inconsistency, ensures that the output representations of different heads remain distinct to reduce redundancy. This is optimized by measuring the negative cosine similarity between the outputs of different heads. The formula is given below:

Here, V denotes the value representation of an attention head, H the number of attention heads, $A_h$ the attention distribution of the h-th attention head, $O_h$ the output of the h-th head, ⊙ element-wise multiplication, and ‖V‖ the L2 norm of the value representation of a head.
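Written out, and assuming the three components follow the disagreement regularization of [19], the terms can be sketched as follows. The names $L_{sub}$, $L_{pos}$, and $L_{rep}$ are labels introduced here for illustration, and $|A_i \odot A_j|$ is taken to mean the sum of the entries of the element-wise product. Each term is written as a penalty to be minimized (an average pairwise similarity); [19] states the negated quantities and maximizes them, which is equivalent.

```latex
% Penalty form: larger values mean more redundant heads.
\begin{align}
L_{sub} &= \frac{1}{H^{2}} \sum_{i=1}^{H} \sum_{j=1}^{H}
           \frac{V_i \cdot V_j}{\lVert V_i \rVert \, \lVert V_j \rVert} \\
L_{pos} &= \frac{1}{H^{2}} \sum_{i=1}^{H} \sum_{j=1}^{H}
           \left| A_i \odot A_j \right| \\
L_{rep} &= \frac{1}{H^{2}} \sum_{i=1}^{H} \sum_{j=1}^{H}
           \frac{O_i \cdot O_j}{\lVert O_i \rVert \, \lVert O_j \rVert}
\end{align}
```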

Our method linearly combines the cross-entropy loss with the inconsistency loss and defines the total loss as the cross-entropy loss, LCE, plus a weighted sum of the three inconsistency losses (subspace, position, and representation).

The three inconsistency weights are designed such that their sum equals 1.
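The combination can be sketched in a few lines of plain Python. This is an illustration under stated assumptions rather than the training code: each head is represented by a flat vector, the inconsistency terms are computed as average pairwise similarities (so that lower is better), the cross-entropy term is given an implicit weight of 1, and the default weights (0.1, 0.1, 0.8) are taken in the order subspace, position, representation. All function names are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def overlap(a, b):
    """Position overlap: sum of the element-wise product of two attention distributions."""
    return sum(x * y for x, y in zip(a, b))

def mean_pairwise(heads, similarity):
    """Average a similarity function over all H x H pairs of heads."""
    h = len(heads)
    return sum(similarity(a, b) for a in heads for b in heads) / (h * h)

def cross_entropy(true_onehot, predicted):
    """Mean negative log-likelihood of the true class."""
    n = len(true_onehot)
    return -sum(y * math.log(p)
                for ys, ps in zip(true_onehot, predicted)
                for y, p in zip(ys, ps) if y > 0) / n

def total_loss(ce, values, attentions, outputs, weights=(0.1, 0.1, 0.8)):
    """Cross-entropy plus weighted subspace, position, and representation penalties."""
    w_sub, w_pos, w_rep = weights
    return (ce
            + w_sub * mean_pairwise(values, cosine)
            + w_pos * mean_pairwise(attentions, overlap)
            + w_rep * mean_pairwise(outputs, cosine))

ce = cross_entropy([[1, 0]], [[0.5, 0.5]])
same = [[1.0, 0.0], [1.0, 0.0]]        # two identical heads
distinct = [[1.0, 0.0], [0.0, 1.0]]    # two orthogonal heads
redundant = total_loss(ce, same, same, same)
diverse = total_loss(ce, distinct, distinct, distinct)
print(redundant > diverse)  # True
```

With the same classification error, the configuration with identical heads is penalized more than the one with orthogonal heads, which is the pressure toward head diversity that the inconsistency loss is meant to provide.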

This combined strategy leverages the precise classification capability of the cross-entropy loss while improving the model’s robustness and sensitivity to minority classes through the inconsistency loss. Experimental results demonstrate that this design performs effectively across multiple datasets with class imbalances and noisy labels, substantially enhancing the model’s generalization performance and stability.

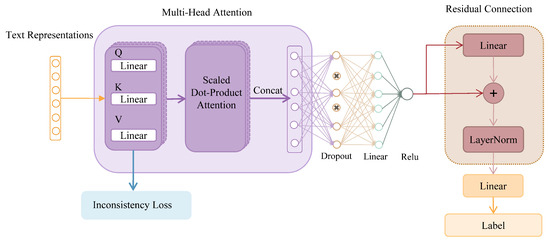

3.3. Model Architecture

In terms of model architecture, we first utilize RoBERTa [22] to generate word embeddings, followed by a neuron selection and sparsification strategy applied to the intermediate layer neurons to extract rich semantic information from the text. Multi-head attention [23] is then employed to simultaneously focus on different parts of the input sequence. Each attention head captures distinct aspects of semantic information, allowing the model to better understand the features and relationships within the text. A dropout layer is introduced to prevent overfitting, and ReLU activation is used to mitigate gradient vanishing and enhance sparsity. The residual connection layer [24] is employed to facilitate gradient flow, accelerate model convergence, and improve stability during training while also helping to prevent issues such as gradient vanishing or explosion. Finally, a linear layer is applied for classification. The entire model architecture is shown in Figure 2.

Figure 2.

The overall model architecture of LENS-RMHR.

In the attention layer, we introduce the query (Q), key (K), and value (V) to calculate the inconsistency loss, which is then combined with the cross-entropy loss to create a novel loss function. In the residual connection section, we constructed a linear detection and sparse integration module that focuses on key classification neurons and reduces the noise impact of irrelevant neurons.

4. Experiment and Analysis

4.1. Datasets

4.1.1. Self-Built Dataset

To build a telecommunications network fraud dataset that closely reflects real-life scenarios, we selected seven common types of fraud found in text messages and phone calls from the CCL2023 telecommunication network fraud dataset [17]. These types include impersonating customer service, impersonating leadership acquaintances, financial fraud (such as loans and credit reporting), public security fraud, fake rebates, dating fraud, and fraudulent online game product transactions. The dataset is available on GitHub (https://github.com/GJSeason/CCL2023-FCC, accessed on 1 May 2025). The original content of this dataset comes from victims’ transcripts on the public security department’s anti-fraud big data platform, which includes brief descriptions of cases. However, since the model needs to identify text messages or phone texts sent by the suspect to the victim, directly using these transcripts presents certain limitations.

To address this issue, we first cleaned the original data by removing sensitive information such as bank card numbers, personal privacy details, and contact information to ensure data security and compliance. We used keyword fuzzy matching against a set of sensitive Chinese terms covering usernames, bank card information, address information, and similar items. We then checked the effectiveness of this step by randomly selecting a portion of the processed data for manual verification. Next, with the help of the large language models [25] Llama3 and DeepSeek [26], guided by carefully designed prompts, we generated text similar to real fraud messages. The generated content was manually screened and reviewed by experts to eliminate any samples that did not align with real-world scenarios or contained deviations, thus ensuring the authenticity and credibility of the data.

The large models serve two main functions here. First, they are used for matching and deleting sensitive words. Second, they are used to generate more complete data: relying on the existing data, they perform data augmentation, expanding existing samples and generating new ones. The generated samples carry a special label. In testing, the original samples and the mixed samples are evaluated separately to better verify the model’s ability.

In addition, to enhance the timeliness and coverage of the dataset, we incorporated recent typical fraud incidents, including telecommunications fraud that occurred frequently during the epidemic. These new samples enriched the diversity of the data, making the dataset more adaptable to evolving fraud patterns. Finally, combining these seven common fraud types with regular texts, we constructed an eight-category text classification dataset with a total of approximately 28,000 samples. This dataset provides strong support for the training and evaluation of models in the telecommunications network fraud detection task. The specific category labels and their quantities are shown in Table 1.

Table 1.

Table of our dataset categories and examples.

4.1.2. Multi-Category News Datasets

To evaluate the model’s generalization and adaptability in text classification tasks, we used two distinct datasets: the FBS dataset, for fraud detection, and the THUCNews dataset [27], for multi-category news classification. These datasets differ in category composition and scale, providing a solid basis for performance evaluation. The specific category labels and their quantities are shown in Table 2.

Table 2.

Table of THUCNews and FBS dataset statistics.

The FBS dataset contains 14,058 samples divided into four categories: illegal promotion, advertising, other, and fraud. Although illegal promotion is a subcategory of advertising, it is treated as an independent category in this study due to its legal status in China. Despite the dataset’s category imbalance, we preserved the original distribution to better reflect real-world scenarios, where dominant categories often exist.

The THUCNews dataset includes ten categories, such as sports, entertainment, politics, and finance, and is widely used in Chinese text classification research. With its large scale and balanced distribution, it offers a good test for the model’s performance in multi-category tasks.

By using these two datasets, our experiments validate the model’s effectiveness in fraud detection and demonstrate its versatility in broader applications.

4.1.3. Fake Rumor News Datasets

To further validate the classification and detection performance of the model on rumor data, we also used the publicly available Twitter datasets released by Ma [28], named Twitter 15 and Twitter 16, and the Weibo rumor event detection dataset released by Huang [29], named Weibo. The Twitter dataset contains a total of 992 labeled events. Each line contains one event together with the IDs of the relevant tweets, in the format event_id, label, tweet_ids; the label is 1 if the event is a rumor and 0 otherwise. The Weibo dataset contains 4664 labeled events in total and uses the same layout: each line contains one event with the IDs of the relevant posts, in the format event_id, label, post_ids, and the label is 1 if the event is a rumor and 0 otherwise. These datasets were created for analyzing social media rumors and include Twitter conversations initiated by rumor tweets, together with the responses to those tweets. The tweets have been annotated for support, certainty, and evidence.

4.2. Data Processing

To ensure the quality and applicability of the dataset, we implemented a series of preprocessing measures. First, the text was tokenized, and word frequency was calculated to better analyze the dataset’s characteristics. A stop word list was then generated based on word frequency to exclude low-information words such as you, I, he, hello, here, and there. Given that the dataset pertains to telecommunications fraud, it often contains sensitive information, including names, ID numbers, phone numbers, bank card details, and social media accounts. To ensure data security, regular expressions were employed to anonymize such information, replacing them with generic placeholders like name, ID number, and phone number.

Additionally, since fraud cases frequently involve persuading victims to download specific software, all software names were standardized to the generic term “software”. URLs present in the text were also identified and replaced with “URL” to further enhance privacy protection. To standardize text length across the dataset, we adjusted the text to ensure consistency.
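The anonymization described above can be sketched with Python's `re` module. The patterns below are hypothetical stand-ins (an 18-character resident ID, a 16 to 19 digit card number, an 11-digit mainland mobile number, and an http(s) URL), not the expressions actually used to build the dataset, and software-name replacement is omitted because it needs a name list.

```python
import re

# Hypothetical patterns. Order matters: longer digit runs are handled first so
# that a phone-number rule never fires inside an ID or card number.
RULES = [
    (re.compile(r"https?://\S+"), "URL"),
    (re.compile(r"(?<!\d)\d{17}[\dXx](?!\d)"), "ID number"),
    (re.compile(r"(?<!\d)\d{16,19}(?!\d)"), "bank card number"),
    (re.compile(r"(?<!\d)1[3-9]\d{9}(?!\d)"), "phone number"),
]

def anonymize(text):
    """Replace each sensitive span with its generic placeholder."""
    for pattern, placeholder in RULES:
        text = pattern.sub(placeholder, text)
    return text

print(anonymize("Call 13812345678 or visit http://example.com/a"))
# Call phone number or visit URL
```

The digit lookarounds `(?<!\d)` and `(?!\d)` keep a rule from matching part of a longer number. One limitation of this sketch is that an 18-digit card number would be labeled as an ID number, since the two cannot be told apart by length alone.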

These preprocessing steps not only improved the dataset’s quality and security but also established a robust foundation for subsequent analysis and modeling. During the experiment, we ensured category balance in both the training and test sets by dividing the dataset proportionally. Specifically, 80% of the data were allocated for training and 20% for testing, with no overlap between the two sets. These carefully designed preprocessing steps allowed us to provide a high-quality, reliable dataset for future research and model training.
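A proportional 80/20 split of this kind can be sketched as a stratified split, in which each category is shuffled and divided separately. The function name `stratified_split`, the seed, and the toy labels are illustrative assumptions.

```python
import random
from collections import defaultdict

def stratified_split(labels, test_ratio=0.2, seed=0):
    """Return (train_indices, test_indices) with per-class proportions preserved."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    rng = random.Random(seed)
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        cut = round(len(indices) * test_ratio)
        test.extend(indices[:cut])
        train.extend(indices[cut:])
    return sorted(train), sorted(test)

labels = ["fraud"] * 10 + ["normal"] * 5
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))  # 12 3
```

Because every index is assigned to exactly one of the two lists, the training and test sets cannot overlap, and each category contributes the same fraction of its samples to the test set.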

4.3. Model Description and Parameter Settings

For the RoBERTa model, we employed the “hfl/chinese-roberta-wwm-ext” [30] variant, which has demonstrated strong performance across various natural language processing benchmarks. Extensive experiments were conducted to optimize the parameter settings and identify the most effective configuration. The final parameter settings include a batch size of 16, 4 training epochs, a learning rate of 1 × 10⁻⁵, a dropout rate of 0.2, and 12 attention heads. These parameters were chosen based on experimental results to enhance model performance.
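For reference, the reported settings can be collected in a small configuration object. The class and field names are hypothetical; the hidden size of 768 is the RoBERTa base width mentioned in Section 4.4.1, and the values are those stated above.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainingConfig:
    pretrained_model: str = "hfl/chinese-roberta-wwm-ext"
    batch_size: int = 16
    epochs: int = 4
    learning_rate: float = 1e-5
    dropout: float = 0.2
    attention_heads: int = 12
    hidden_size: int = 768  # RoBERTa base width

    def head_dim(self) -> int:
        """Per-head width; the hidden size must divide evenly across heads."""
        if self.hidden_size % self.attention_heads:
            raise ValueError("hidden_size must be divisible by attention_heads")
        return self.hidden_size // self.attention_heads

config = TrainingConfig()
print(config.head_dim())  # 64
```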

4.4. Experiment

4.4.1. Determining the Screening Method for Middle Layer Neurons

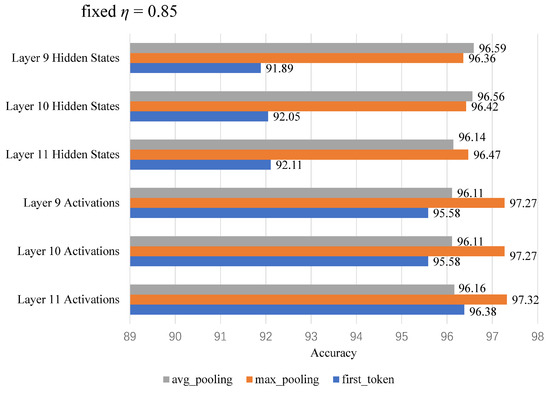

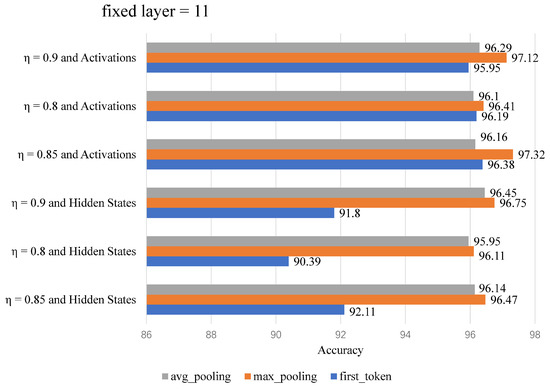

The FBS dataset was first fed into the RoBERTa model to generate the word vector representations of the text. In the pooling step, we explored three different approaches to represent the outputs of the hidden and activation layers: first token, average pooling (taking the average of all word vectors), and maximum pooling (selecting the maximum value across each dimension). Subsequently, we optimized the sparsification threshold through grid search, combined with linear detection techniques, to identify the optimal hyperparameter configuration. Two sets of experiments were performed to determine this configuration. The first set used a fixed threshold while varying the state and the number of layers of neurons, as illustrated in Figure 3. The second set fixed the neurons across all intermediate layers of RoBERTa and varied the threshold and state, as shown in Figure 4. Because of the large number of layers, only a subset is displayed.

Figure 3.

Experiment with fixed neurons and varying thresholds and state.

Figure 4.

Experiment with fixed threshold and varying state and neuron layer count.

In the experiment, we used the RoBERTa base model, which includes a 12-layer Transformer encoder with 768 hidden units per layer, for a total of 9216 neurons (12 × 768). By setting a threshold, we controlled the proportion of neurons that participate in the computation, evaluated different proportions of neurons from the 12 intermediate layers, and found that 0.85 yielded the best results. This ratio strikes an optimal balance between preserving key information and reducing redundant noise. Lower ratios, such as 0.8, result in significant information loss, while higher ratios, like 0.9, increase model complexity and the risk of overfitting. Additionally, the activation layer outperformed the hidden layer due to its ability to capture deeper features and express complex semantics through nonlinear transformations, while the hidden layer’s linear outputs were less effective in feature separation.

For pooling methods, maximum pooling was the most effective, as it emphasizes the strongest features and enhances semantic representation. In contrast, average pooling may dilute important information, and using only the first token fails to capture the sentence’s overall meaning. The optimal configuration for text classification thus involved a 0.85 neuron ratio, activation layer outputs, and maximum pooling.
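The three pooling strategies compared above reduce an L × D layer representation (one row per token) to a single D-dimensional vector. A minimal sketch with a toy 3 × 3 input follows; the function names are illustrative.

```python
def first_token(layer):
    """Use the representation of the first token only."""
    return list(layer[0])

def mean_pool(layer):
    """Average each dimension over all tokens."""
    return [sum(column) / len(layer) for column in zip(*layer)]

def max_pool(layer):
    """Take the maximum of each dimension over all tokens."""
    return [max(column) for column in zip(*layer)]

# Rows are tokens, columns are neuron dimensions.
layer = [[0.1, 0.9, -0.2],
         [0.4, 0.1, 0.6],
         [0.7, 0.2, 0.1]]
print(first_token(layer))  # [0.1, 0.9, -0.2]
print(max_pool(layer))     # [0.7, 0.9, 0.6]
```

Maximum pooling keeps the strongest activation of every dimension regardless of which token produced it, whereas averaging spreads that signal over all tokens and the first-token strategy ignores the remaining tokens entirely.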

4.4.2. Text Classification Task Based on Intermediate Layer Neurons

Using the parameters obtained in the previous section, we conducted a series of controlled experiments to evaluate the impact of different parameter configurations on model performance and explore the contribution of each module. The specific experimental setups are as follows:

1. Baseline model group: The pre-trained RoBERTa model was used without incorporating any additional modules, serving as the experimental benchmark.

2. Internal neuron group: This group incorporated only the internal neuron mechanism to evaluate its standalone contribution to model performance.

3. Internal neuron and k-value combination group: Building on the internal neuron mechanism, this group added modules such as multi-head attention and varied the k-value to analyze its specific impact on model performance: 3.1. k = 5, 3.2. k = 10, 3.3. k = 2.5.

4. Inconsistency parameter combination group: Since k = 5 produced the best results, the effect of different inconsistency parameters on model performance was evaluated based on this configuration. The weights are listed in the order subspace, position, representation: 4.1. Inconsistency (0.1, 0.1, 0.8), 4.2. Inconsistency (0, 0, 1), 4.3. Inconsistency (0.3, 0.1, 0.6).

We also conducted extensive experiments on other inconsistency parameter combinations, but their results were less favorable than those of group 4.1 and are therefore omitted from the final results in Table 3. These detailed experimental designs aim to provide a deeper understanding of the contribution of each parameter and module to model performance, offering valuable insights for future research.

Table 3.

Table of comparison of various model performances on our dataset.

To comprehensively evaluate the performance of our model, we selected several representative methods for comparison on two additional datasets. BERT-GCN [31] combines BERT with Graph Convolutional Networks (GCNs) to capture both local and global features of the text. Att-CNLSTM and Att-BILSTM [32] leverage attention mechanisms and long short-term memory (LSTM) networks to enhance sequence modeling capabilities. KGBGCN [33] is based on knowledge graphs and BERT, employing Graph Convolutional Networks to extract structured knowledge features. TinyBERT-CNN [34] integrates knowledge-distillation techniques with convolutional neural networks (CNNs), achieving model compression while maintaining high accuracy. The experimental results are shown in Table 4.

Table 4.

Table of comparison of various model performances on the FBS and THUCNews datasets.

In the experiment with parameter k, a smaller k = 2.5 limited the model’s ability to capture rich semantic information, while a larger k = 10 increased feature richness but also introduced redundancy, raised complexity, and reduced efficiency. In contrast, k = 5 provided an optimal balance, enhancing both feature representation and computational efficiency by enabling the multi-head attention mechanism to capture global and local dependencies effectively.

The optimal regularization coefficient distribution of 0.1-0.1-0.8 highlighted the importance of representation inconsistency in text classification. By diversifying attention head outputs, inconsistency improved the model’s discriminative ability, suggesting a greater reliance on global semantic representation rather than position-specific features. Low subspace and position inconsistency coefficients show that the model naturally learns feature diversity without strong constraints, emphasizing the task’s preference for global context.

Furthermore, the alternative configuration of 0-0-1 performed worse than 0.1-0.1-0.8, which indicates that moderate regularization in subspace and position is essential. Without it, the attention heads become redundant, which reduces the effectiveness of output regularization. Thus, moderate regularization enhances semantic feature extraction and improves classification performance.
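The role of the three coefficients can be illustrated with a minimal sketch of a combined loss. It assumes that the inconsistency terms follow the disagreement regularization of Li et al. [19], that is, a penalty on the average pairwise similarity between attention heads in the value subspace, the attended positions, and the head outputs. The function names, the plain-list inputs, and the averaging over all ordered head pairs are illustrative assumptions, not the implementation used in the experiments.

```python
import math
from itertools import product


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def overlap(a, b):
    """Sum of the element-wise product of two attention distributions."""
    return sum(x * y for x, y in zip(a, b))


def mean_pairwise(heads, sim):
    """Average similarity over all ordered head pairs (i, j), including i == j."""
    n = len(heads)
    total = sum(sim(heads[i], heads[j]) for i, j in product(range(n), repeat=2))
    return total / (n * n)


def cross_entropy(probs, label):
    """Cross-entropy of a single sample given predicted class probabilities."""
    return -math.log(probs[label])


def dual_loss(probs, label, values, attn, outputs, weights=(0.1, 0.1, 0.8)):
    """Cross-entropy plus weighted head-similarity penalties.

    weights = (subspace, position, output) coefficients (assumed naming).
    Similar heads increase the penalty, so minimizing it encourages diversity.
    """
    w_sub, w_pos, w_out = weights
    penalty = (
        w_sub * mean_pairwise(values, cosine)
        + w_pos * mean_pairwise(attn, overlap)
        + w_out * mean_pairwise(outputs, cosine)
    )
    return cross_entropy(probs, label) + penalty
```

Under this sketch, the 0-0-1 configuration corresponds to `weights=(0.0, 0.0, 1.0)`, in which only the similarity between head outputs is penalized and the subspace and position terms are switched off.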

4.4.3. Rumor Classification Performance

To further verify the classification performance of the model on rumor data, we conducted a rumor classification task. In this experiment, we compared our method with the methods proposed by Ma [28] for the classification problem on the Twitter 15 and Twitter 16 data, namely two rumor-detection methods based on RvNNs (recursive matrix-vector neural networks): bottom-up RvNNs (BU-RvNNs) and top-down RvNNs (TD-RvNNs). We also added the Chinese Weibo dataset, which tests the performance of the model on Chinese text. All models were evaluated on these datasets, and the evaluation indicators included NR (non-rumor), FR (false rumor), TR (true rumor), and UR (unverified rumor). The results are shown in Table 5.

Table 5.

Results of rumor detection on various models based on 3 datasets.

As shown in Table 5, our models, Model 2 and Model 3, achieved the best performance on all three datasets, with the highest accuracy and F1 scores. On the English datasets, Twitter 15 and Twitter 16, the improvement averaged six percentage points. On the Chinese Weibo data, the model shows a significant improvement over the benchmark model. We attribute this to the fact that our method is based on a large amount of Chinese data and therefore performs relatively well in feature extraction and modeling for Chinese text.
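For reference, the accuracy and F1 metrics discussed above can be computed as in the following minimal sketch. It assumes that the per-category indicators (NR, FR, TR, UR) are per-class F1 scores, as is common for the Twitter 15 and Twitter 16 benchmarks; the function names and the toy labels are illustrative and are not taken from the experiments.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the gold label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def per_class_f1(y_true, y_pred, labels):
    """F1 score of each class, treating that class as the positive one."""
    scores = {}
    for c in labels:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        scores[c] = 2 * precision * recall / denom if denom else 0.0
    return scores


# Toy example with the four rumor categories (made-up labels).
labels = ["NR", "FR", "TR", "UR"]
y_true = ["NR", "FR", "TR", "UR", "NR", "FR"]
y_pred = ["NR", "FR", "TR", "NR", "NR", "TR"]
acc = accuracy(y_true, y_pred)
f1 = per_class_f1(y_true, y_pred, labels)
```

In this toy example, four of the six predictions are correct, and the UR class, which is never predicted, receives an F1 of zero.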

Table 6 shows the results of the ablation experiment, in which we tested the model on three different datasets with and without neuron selection. With neuron selection, the accuracy and F1 score of the model improved significantly on all three datasets, which indicates that our method has a degree of adaptability and robustness.

Table 6.

Comparison of neuron selection, before and after, on three datasets.
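The idea behind neuron selection can be sketched as follows, under the assumption that each intermediate-layer neuron is scored by the absolute weight that a linear probe assigns to it and that only the highest-scoring neurons are passed to the downstream classifier. The names `select_neurons`, `sparsify`, and `keep_fraction` are hypothetical and do not correspond to the parameter k or to the implementation used in the experiments.

```python
def select_neurons(probe_weights, keep_fraction):
    """Return the sorted indices of the neurons with the largest |weight|.

    probe_weights: one linear-probe weight per neuron (assumed input).
    keep_fraction: share of neurons to keep, between 0 and 1 (hypothetical).
    """
    n_keep = max(1, round(len(probe_weights) * keep_fraction))
    ranked = sorted(
        range(len(probe_weights)),
        key=lambda i: abs(probe_weights[i]),
        reverse=True,
    )
    return sorted(ranked[:n_keep])


def sparsify(activations, selected):
    """Keep only the activations of the selected neurons."""
    return [activations[i] for i in selected]


# Toy example: four neurons, keep the two most salient ones.
weights = [0.05, -0.9, 0.1, 0.7]
selected = select_neurons(weights, keep_fraction=0.5)
features = sparsify([1.0, 2.0, 3.0, 4.0], selected)
```

Dropping low-weight neurons in this way shrinks the input to the downstream classifier, which is one plausible reason for the gains reported with neuron selection.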

5. Conclusions

This study proposes the LENS framework and its extension, LENS-RMHR, which aim to enhance text classification by utilizing neuron information from the intermediate layers of LLMs. Through linear exploration and sparse integration, we focus on the neurons that are key to classification and reduce noise from irrelevant ones, improving accuracy and efficiency. We also develop an eight-category telecommunications fraud dataset and combine the inconsistency loss function with the cross-entropy loss function to boost multi-class classification performance. Experimental results show that LENS-RMHR performs well across benchmarks, particularly in fraud detection, where it effectively identifies and classifies various fraud types. Thus, this research offers a novel solution for text classification and provides valuable datasets and strategies for telecommunication fraud detection.

However, LENS-RMHR has room for further development regarding generalization across domains and languages. Future research could extend its application to diverse fields and cross-lingual tasks, and could increase dataset diversity through varied cases and data augmentation to enhance robustness. Additionally, exploring new loss functions tailored to specific tasks may yield better results. In conclusion, LENS-RMHR is a promising tool for text classification and fraud detection, although further research and applications are needed to fully realize its potential.

Author Contributions

The authors confirm their contribution to the paper as follows: study conception and design: L.J., C.Z., X.Q. and J.L.; data collection: J.L., G.H., H.L. and X.Q.; analysis and interpretation of results: J.L., G.H., H.L. and Y.Z.; draft manuscript preparation: J.L., G.H., H.L. and X.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the Guangxi Key Research and Development Program (No. Guike AB23075178, No. Guike AB22080047), the National Natural Science Foundation of China (No. 62267002), and the Guangxi Key Laboratory of Image and Graphic Intelligent Processing (No. GIIP2207).

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Liu, A.; Piao, S. Research on Laws, Regulations, and Policies of Internet Fraud. J. Educ. Humanit. Soc. Sci. 2024, 28, 257–264.

- Qu, J.; Cheng, H. Policing telecommunication and cyber fraud: Perceptions and experiences of law enforcement officers in China. Crime Law Soc. Change 2024, 82, 283–305.

- Maras, M.H.; Ives, E.R. Deconstructing a form of hybrid investment fraud: Examining ‘pig butchering’ in the United States. J. Econ. Criminol. 2024, 5, 100066.

- Han, B. Individual Frauds in China: Exploring the Impact and Response to Telecommunication Network Fraud and Pig Butchering Scams. Ph.D. Thesis, University of Portsmouth, Portsmouth, UK, 2023.

- Luo, B.; Zhang, Z.; Wang, Q.; Ke, A.; Lu, S.; He, B. AI-powered fraud detection in decentralized finance: A project life cycle perspective. ACM Comput. Surv. 2024, 57, 1–38.

- Chatterjee, P.; Das, D.; Rawat, D.B. Digital twin for credit card fraud detection: Opportunities, challenges, and fraud detection advancements. Future Gener. Comput. Syst. 2024, 158, 410–426.

- Shungube, P.S.; Bokaba, T.; Ndayizigamiye, P.; Mhlongo, S.; Dogo, E. A Deep Learning Approach for Healthcare Insurance Fraud Detection. Res. Sq. 2024.

- Zhuoxian, L.; Tuo, S.; Xiaofeng, H. A Text Classification Model Combining Adversarial Training with Pre-trained Language Model and neural networks: A Case Study on Telecom Fraud Incident Texts. arXiv 2024, arXiv:2411.06772.

- Cao, J.; Cui, X.; Zheng, C. TFD-GCL: Telecommunications fraud detection based on graph contrastive learning with adaptive augmentation. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–7.

- Wu, J.; Hu, R.; Li, D.; Ren, L.; Huang, Z.; Zang, Y. Beyond the individual: An improved telecom fraud detection approach based on latent synergy graph learning. Neural Netw. 2024, 169, 20–31.

- Zhang, Y.; Liu, B.; Lu, C.; Li, Z.; Duan, H.; Hao, S.; Liu, M.; Liu, Y.; Wang, D.; Li, Q. Lies in the air: Characterizing fake-base-station spam ecosystem in China. In Proceedings of the 2020 ACM SIGSAC Conference on Computer and Communications Security, Virtual Event, 9–13 November 2020; pp. 521–534.

- Li, J.; Zhang, C.; Jiang, L. Innovative Telecom Fraud Detection: A New Dataset and an Advanced Model with RoBERTa and Dual Loss Functions. Appl. Sci. 2024, 14, 11628.

- Yang, Z.; Dai, Z.; Yang, Y.; Carbonell, J.; Salakhutdinov, R.R.; Le, Q.V. XLNet: Generalized autoregressive pretraining for language understanding. Adv. Neural Inf. Process. Syst. 2019, 32. Available online: https://proceedings.neurips.cc/paper_files/paper/2019/file/dc6a7e655d7e5840e66733e9ee67cc69-Paper.pdf (accessed on 1 May 2025).

- He, P.; Gao, J.; Chen, W. DeBERTaV3: Improving DeBERTa using ELECTRA-style pre-training with gradient-disentangled embedding sharing. arXiv 2021, arXiv:2111.09543.

- Gurnee, W.; Nanda, N.; Pauly, M.; Harvey, K.; Troitskii, D.; Bertsimas, D. Finding neurons in a haystack: Case studies with sparse probing. arXiv 2023, arXiv:2305.01610.

- Jiao, D.; Liu, Y.; Tang, Z.; Matter, D.; Pfeffer, J.; Anderson, A. SPIN: Sparsifying and Integrating Internal Neurons in Large Language Models for Text Classification. arXiv 2023, arXiv:2311.15983.

- Sun, C.J.; Ji, J.; Shang, B.; Liu, B. CCL23-Eval 任务 6 总结报告: 电信网络诈骗案件分类 (Overview of CCL23-Eval Task 6: Telecom Network Fraud Case Classification). In Proceedings of the 22nd Chinese National Conference on Computational Linguistics (Volume 3: Evaluations), Harbin, China, 3–5 August 2023; pp. 193–200.

- Hajiabadi, H.; Molla-Aliod, D.; Monsefi, R.; Yazdi, H.S. Combination of loss functions for deep text classification. Int. J. Mach. Learn. Cybern. 2020, 11, 751–761.

- Li, J.; Tu, Z.; Yang, B.; Lyu, M.R.; Zhang, T. Multi-head attention with disagreement regularization. arXiv 2018, arXiv:1810.10183.

- Awasthi, P.; Mao, A.; Mohri, M.; Zhong, Y. H-consistency bounds for surrogate loss minimizers. In Proceedings of the International Conference on Machine Learning. PMLR, London, UK, 8–11 November 2022; pp. 1117–1174.

- Mao, A.; Mohri, M.; Zhong, Y. Cross-entropy loss functions: Theoretical analysis and applications. In Proceedings of the International Conference on Machine Learning. PMLR, Honolulu, HI, USA, 23–29 July 2023; pp. 23803–23828.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A robustly optimized BERT pretraining approach. arXiv 2019, arXiv:1907.11692.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30. Available online: https://proceedings.neurips.cc/paper_files/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf (accessed on 1 May 2025).

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Grattafiori, A.; Dubey, A.; Jauhri, A.; Pandey, A.; Kadian, A.; Al-Dahle, A.; Letman, A.; Mathur, A.; Schelten, A.; Vaughan, A.; et al. The Llama 3 herd of models. arXiv 2024, arXiv:2407.21783.

- Liu, A.; Feng, B.; Xue, B.; Wang, B.; Wu, B.; Lu, C.; Zhao, C.; Deng, C.; Zhang, C.; Ruan, C.; et al. DeepSeek-V3 technical report. arXiv 2024, arXiv:2412.19437.

- Liu, Y.; He, M.; Shi, M.; Jeon, S. A novel model combining transformer and Bi-LSTM for news categorization. IEEE Trans. Comput. Soc. Syst. 2022, 11, 4862–4869.

- Ma, J.; Gao, W.; Wong, K.F. Detect rumors in microblog posts using propagation structure via kernel learning. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (ACL 2017), Vancouver, BC, Canada, 30 July–4 August 2017; Association for Computational Linguistics: Stroudsburg, PA, USA, 2017.

- Huang, X. Weibo Rumor Event Detection Dataset. 2022. Available online: https://www.scidb.cn/en/detail?dataSetId=1085347f720f4cfc97a157e469734a66 (accessed on 1 March 2025).

- Cui, Y.; Che, W.; Liu, T.; Qin, B.; Yang, Z. Pre-training with whole word masking for Chinese BERT. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 29, 3504–3514.

- Zhang, X.; Huang, R.; Jin, L.; Wan, F. A BERT-GCN-Based Detection Method for FBS Telecom Fraud Chinese SMS Texts. In Proceedings of the 2023 4th International Conference on Intelligent Computing and Human-Computer Interaction (ICHCI), Guangzhou, China, 4–6 August 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 448–453.

- Ruan, J.; Caballero, J.M.; Juanatas, R.A. Chinese news text classification method based on attention mechanism. In Proceedings of the 2022 7th International Conference on Business and Industrial Research (ICBIR), Bangkok, Thailand, 19–20 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 330–334.

- Wang, Y.; Wang, Y.; Hu, H.; Zhou, S.; Wang, Q. Knowledge-graph- and GCN-based domain Chinese long text classification method. Appl. Sci. 2023, 13, 7915.

- Yu, H.; Liu, C.; Zhang, L.; Wu, C.; Liang, G.; Escorcia-Gutierrez, J.; Ghoneim, O.A. An intent classification method for questions in “Treatise on Febrile diseases” based on TinyBERT-CNN fusion model. Comput. Biol. Med. 2023, 162, 107075.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).