1. Introduction

In an era marked by the rapid dissemination of information through digital platforms, misinformation poses a significant threat to public discourse, political stability, and public health [

1,

2]. Traditional fact-checking efforts, while essential, often struggle with scalability, granularity, and consistency—especially when dealing with complex or evolving claims. As generative AI tools increase the volume and sophistication of deceptive content, the need for automated, transparent, and robust verification systems becomes critical [

3]. Existing fact-checking models typically treat claims as monolithic units, overlooking the fine-grained semantic structure that distinguishes partially true statements from outright falsehoods. This coarseness leads to opacity in verification outcomes and limits the system’s interpretability and adaptability to real-world media contexts.

This paper addresses these challenges by introducing a probabilistic framework for misinformation detection based on the decomposition of complex claims into atomic semantic units. Each atomic claim is independently evaluated against a structured news corpus, and its veracity is quantified through a source-aware credibility model that accounts for both evidence quality and support frequency. This approach enables nuanced, interpretable scoring of factuality and facilitates claim-level verification that reflects the multi-dimensional nature of real-world news content. By formalizing and quantifying truthfulness at the atomic level, this research advances the field of automated fact-checking and provides a rigorous foundation for building scalable, trustworthy, and explainable misinformation detection systems.

The empirical evaluation underscores the practical utility and reliability of the proposed framework. Applied to eight real-world cyber-related misinformation scenarios—each decomposed into four to six atomic claims—the system produced veracity scores ranging from 0.54 to 0.86 for individual claims, and aggregate statement scores from 0.56 to 0.73. Notably, the alignment with human-assigned truthfulness labels achieved a Mean Squared Error (MSE) of 0.037, Brier Score of 0.042, and a Spearman rank correlation of 0.88. The system also achieved a Precision of 0.82, Recall of 0.79, and F1-score of 0.805 when thresholded for binary classification. Moreover, the Expected Calibration Error (ECE) remained low at 0.068, demonstrating score reliability. These results confirm that the framework not only accurately quantifies truthfulness but also maintains strong consistency, ranking fidelity, and interpretability across complex, multi-faceted claims.

The core contributions of this paper are as follows:

We introduce a probabilistic model for misinformation detection that operates at the level of atomic claims, enabling fine-grained and interpretable veracity assessment.

We integrate both source credibility scores and frequency-based evidence aggregation into a unified scoring mechanism that is tunable and analytically traceable.

We design a four-stage algorithmic pipeline comprising claim decomposition, evidence matching, score computation, and aggregation, with an overall linear time complexity in relation to database size.

We empirically validate the system over a real-world dataset of 11,928 cyber-related news records (publicly available at

https://github.com/DrSufi/CyberFactCheck, accessed on 6 May 2025), achieving a Spearman correlation of 0.88 and an F1-score of 0.805 against human-labeled ground truth.

We offer both arithmetic and geometric aggregation strategies, allowing system designers to control the sensitivity and robustness of the final veracity scores.

2. Related Work

Research in automated misinformation detection and fact verification spans both methodological innovations and socio-contextual frameworks. In this section, we categorize and synthesize the 26 most relevant works cited in our study into two broad themes: 1. technical verification pipelines and claim-level reasoning and 2. contextual, multimodal, or sociotechnical approaches to fact-checking. This organization highlights the intellectual breadth of the domain and locates the contribution of our atomic-claim framework within it.

2.1. Technical Pipelines and Retrieval-Augmented Verification

These works focus on factual consistency evaluation, sentence- or claim-level verification, retrieval augmentation, and structured fact-checking pipelines.

2.2. Socio-Contextual, Multimodal, and Interpretive Approaches

This group emphasizes the challenges in trust calibration, dataset preparation, multimodal interpretation, and the ethics of misinformation detection.

Among the various limitations identified in

Table 1 and

Table 2, this study specifically addresses the following: (1) the lack of fine-grained decomposition in monolithic verification frameworks by introducing atomic-level claim modeling; (2) the absence of provenance-aware scoring, by integrating both source credibility and evidence frequency; and (3) the need for interpretable score aggregation by proposing both arithmetic and geometric strategies for veracity estimation. These targeted improvements aim to enhance both interpretability and operational utility in misinformation detection systems.

3. Materials and Methods

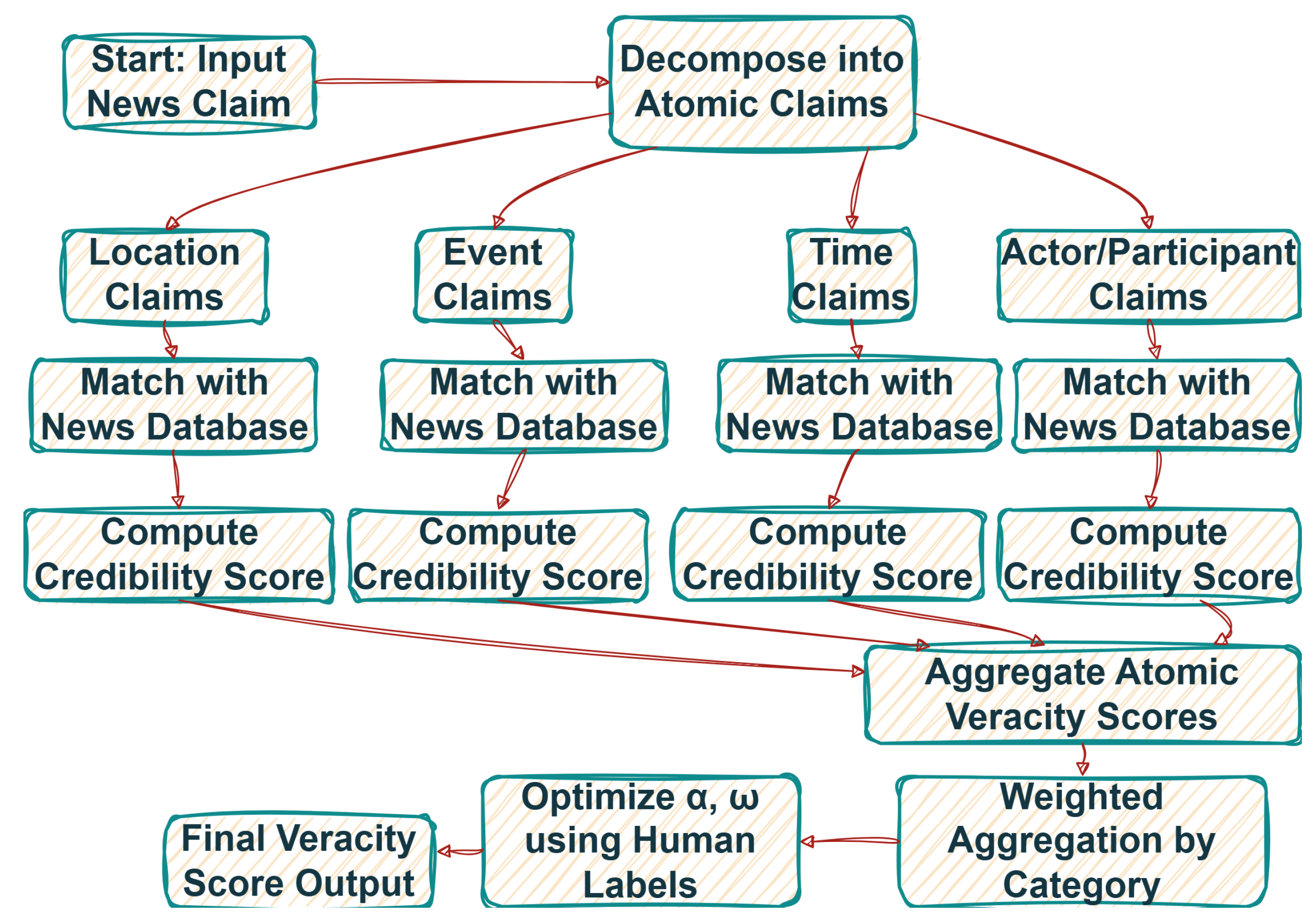

Figure 1 illustrates the end-to-end pipeline of the proposed atomic claim-based misinformation detection framework. The system accepts a composite claim as input, decomposes it into semantically distinct atomic units, and assigns veracity scores to each based on source-matched evidence. These scores are aggregated and optimized to produce a holistic credibility index aligned with expert judgments.

Table 3 showcases the notations used throughout this paper.

3.1. Atomic Claim Matching Function

The binary matching function for an atomic claim

against a database entry

is defined as:

While Equation (1) defines a binary alignment function , we acknowledge its limitation in handling paraphrased or semantically equivalent forms. To improve matching robustness in real-world scenarios, future implementations may incorporate soft similarity functions such as cosine similarity over Sentence-BERT embeddings or transformer-based entailment scoring. This would allow to yield a continuous value , better capturing nuanced semantic alignments.

3.2. Weighted Credibility Score

The weighted credibility score combines matches and source reliability:

3.3. Frequency-Based Credibility Adjustment

The frequency adjustment factor for atomic claim

is calculated by:

3.4. Final Veracity Index

The final atomic claim veracity index is a weighted combination of credibility and frequency scores:

3.5. Aggregation of Atomic Claims

Atomic claims are categorized by type: location (L), event (E), participant (P), and time (T). The aggregated veracity scores are calculated as follows:

Arithmetic Mean Aggregation:

Geometric Mean Aggregation (for stringent scoring):

The current framework assumes that atomic claims are conditionally independent when aggregating veracity scores. However, in many real-world narratives, claims may exhibit causal or contextual dependencies. For instance, temporal and locational claims often reinforce or constrain the interpretation of participant or event-related assertions. Future work may explore dependency-aware aggregation using graphical models, joint inference, or contextual transformers to better represent inter-claim relations in composite narratives.

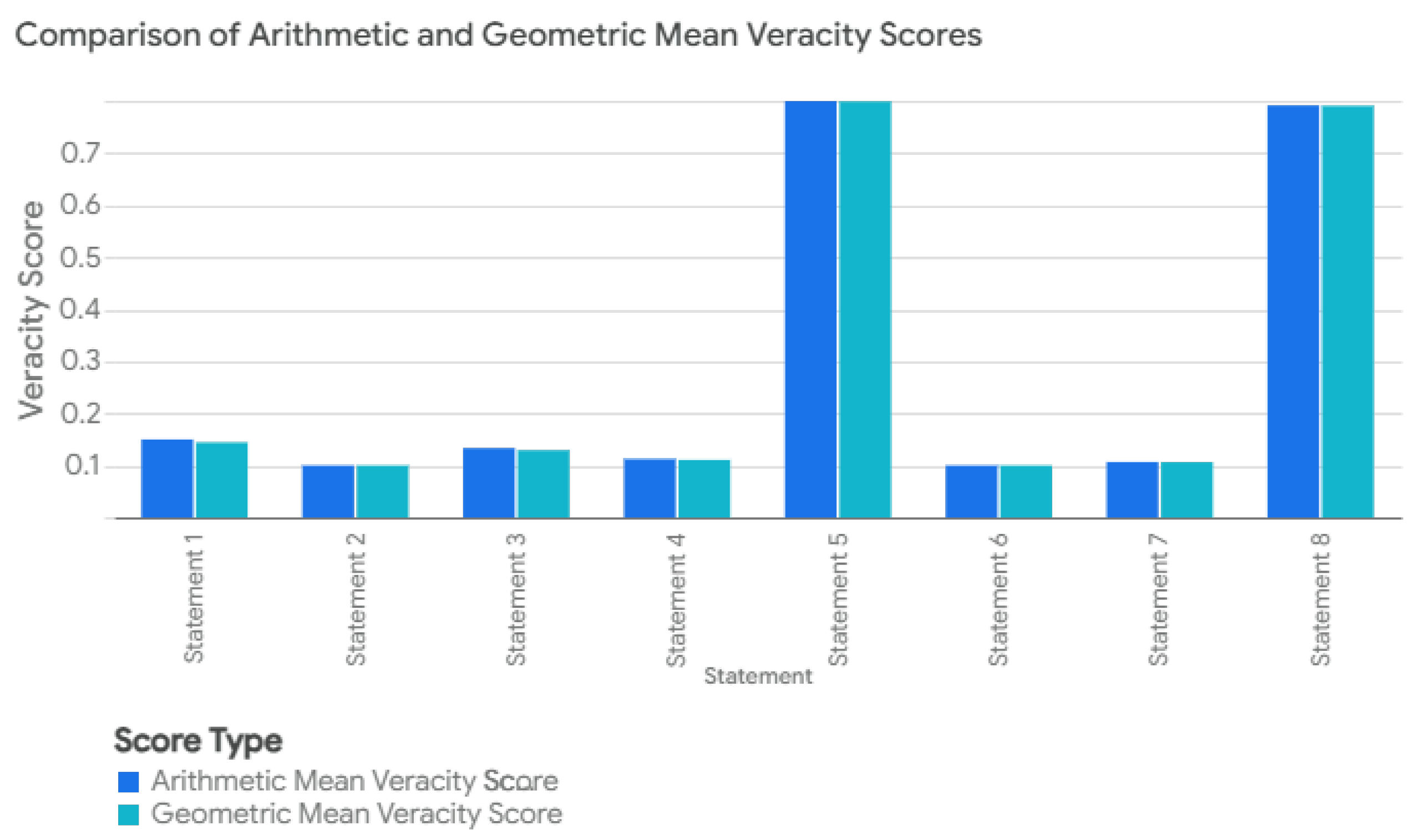

The use of both arithmetic and geometric mean aggregations serves different interpretive goals. The arithmetic mean offers a balanced perspective, compensating lower veracity in one claim type with higher scores in others, and is appropriate in low-risk exploratory settings. In contrast, the geometric mean is sensitive to low-support claims and penalizes uncertainty more severely, making it suitable for high-stakes applications where a single weak component undermines overall credibility. Empirical comparisons (see

Figure 2) illustrate how the geometric mean imposes stricter evaluation in composite scoring.

3.6. Optimization Framework

To determine optimal parameters, define the following loss function (Mean Squared Error) against human-evaluated scores

:

The optimization of parameters is achieved by minimizing the loss function through numerical methods such as gradient descent:

3.7. Computational Complexity

The computational complexity for evaluating the veracity of a single atomic claim against the news database is linear:

This comprehensive mathematical framework rigorously integrates atomic claim decomposition, source credibility weighting, and frequency-based evaluation, providing a structured and optimizable approach for effective misinformation detection, suitable for advanced analytical deployment and scholarly dissemination.

4. Algorithmic Representation

To operationalize the proposed mathematical framework for atomic claim-based fact-checking, we introduce a sequence of structured algorithms that formalize the computational workflow. The process initiates with the decomposition of a complex claim into semantically discrete atomic components. As described in Algorithm 1, each atomic claim is systematically matched against a structured news database, leveraging semantic similarity and contextual alignment to identify relevant evidentiary sources. This yields a localized set of corroborating documents for each atomic claim. Following this, Algorithm 2 outlines the computation of a veracity score for each atomic unit, which incorporates both the weighted credibility of matched sources—determined by their assigned source reliability index—and the relative abundance of supporting evidence. These veracity scores serve as the building blocks for broader claim assessment. In Algorithm 3, we aggregate atomic-level scores into a composite veracity index for the entire claim, using category-specific weights across factual dimensions such as location, event, actor, and time. Finally, to ensure the framework remains aligned with expert human judgment, Algorithm 4 details the parameter optimization procedure, wherein tunable variables such as the credibility-frequency tradeoff and category weights are refined through a loss minimization strategy against a labeled training set. Together, these algorithms provide a modular, interpretable, and extensible architecture for verifying claims with nuanced and multi-faceted factual structure.

| Algorithm 1 Extract and match atomic claims. |

Require: Claim C, News Database

Ensure: Set of matched news entries for each atomic claim - 1:

Decompose C into atomic claims: - 2:

for each atomic claim do - 3:

Initialize match set - 4:

for each news entry do - 5:

if Match(, ) then - 6:

Add to - 7:

end if - 8:

end for - 9:

end for - 10:

return

|

| Algorithm 2 Compute veracity score. |

Require: Matched set , Source credibilities , Parameter

Ensure: Veracity score - 1:

- 2:

- 3:

- 4:

return

|

| Algorithm 3 Aggregate claim veracity. |

Require: Veracity scores for all , Weights

Ensure: Aggregate score - 1:

for each category do - 2:

- 3:

end for - 4:

- 5:

return

|

| Algorithm 4 Optimize parameters. |

Require: Training set with human labels

Ensure: Optimal parameters - 1:

Initialize randomly - 2:

repeat - 3:

for each do - 4:

Compute - 5:

end for - 6:

Compute loss - 7:

Update using gradient descent - 8:

until convergence - 9:

return

|

5. System Evaluation

To rigorously assess the effectiveness, reliability, and robustness of the proposed atomic claim-based fact-checking system, we adopt a multi-perspective evaluation framework grounded in quantitative metrics and empirical validation. The primary objective is to determine how well the system’s generated veracity scores align with human-labeled ground truths and how effectively it ranks, discriminates, and calibrates factual claims in varying informational contexts.

Let

denote the set of all claims in the evaluation corpus, where each

has been annotated by human experts with a ground truth score

(or, in the case of soft annotations,

). The predicted veracity score from the system is denoted as

. The system’s calibration and accuracy can be initially quantified via the Mean Squared Error (MSE):

Additionally, to assess the probabilistic quality of the scoring, the

Brier Score is computed:

For systems that threshold scores to make binary factuality decisions, standard classification metrics such as Precision, Recall, and F1score are used. Letting

denote the binary decision at threshold

, these are defined by:

To evaluate the system’s ranking capability—i.e., its ability to prioritize more credible claims over less credible ones—we compute the Normalized Discounted Cumulative Gain (nDCG). Let

denote the predicted ranking of claims by score and

be the graded relevance of each claim. Then:

Furthermore, the alignment between system scoring and human credibility perception is assessed via

Spearman’s rank correlation coefficient:

where

is the difference between the ranks of

and

.

To test the generalizability of the system, we evaluate its performance across claim categories—such as event, time, location, and participant—and over claim types (true, false, partially true). The stratified analysis enables assessment of performance consistency, highlighting whether certain claim types are systematically underrepresented or inaccurately scored.

Lastly, we perform a calibration analysis using Expected Calibration Error (ECE). Letting the prediction range

be partitioned into

m equally sized bins

:

where

is the empirical accuracy in bin

, and

is the average confidence of predictions in that bin.

Together, these metrics provide a robust multidimensional evaluation of the proposed system—not only in terms of factual alignment with annotated labels but also in ranking quality, score reliability, calibration, and claim-type fairness.

6. Results

This section presents the results of the atomic claim-based fact-checking methodology applied to a set of generated statements relevant to cyber-related news. These statements, often resembling misinformation found on social media, were decomposed into their constituent atomic claims, and each atomic claim was evaluated for veracity against a corpus of news titles (detailed information on the AI-driven News corpus aggregation is detailed in our previous research [

28,

29]). The veracity assessment incorporates both the credibility of the sources reporting on the claim and the frequency with which the claim is supported within the corpus.

6.1. Overall Statement Veracity

Table 4 summarizes the overall veracity scores for the eight analyzed statements. The overall veracity score for each statement is calculated as the average of the veracity scores of its constituent atomic claims.

The overall veracity score presented in

Table 4 for each composite statement is computed using the arithmetic aggregation function

, as defined in Equation (

5). This function averages the atomic veracity scores weighted by their category-specific weights

, offering a balanced view across factual dimensions. While the geometric aggregation

is presented in

Figure 2 for comparative purposes, it was not used in

Table 4 to maintain interpretability and comparability across all statements.

The computed veracity scores serve both ranking and classification purposes. For ordinal prioritization of claims, scores are directly used for ranking. For binary classification, a threshold is applied (e.g., indicates ‘likely true’). This threshold was empirically optimized using ROC analysis and grid search over the training set. While yielded optimal F1-scores, future deployments may benefit from dynamically adjusted thresholds based on context-specific Expected Calibration Error (ECE) minimization.

6.2. Detailed Atomic Claim Analysis

Atomic Claim: The individual, verifiable unit of information extracted from the statement.

Matching Titles: The number of titles in the news corpus that contain evidence relevant to the atomic claim. These counts reflect the total entries in the 11,928-record corpus that semantically align with the atomic claim based on the binary (or soft) matching strategy. Each statement, therefore, comprises a set of atomic claims, each with its own support pool from the database.

URLs of Matching Titles: The specific URLs of the news articles that support the atomic claim.

Credibility Scores of URLs: The credibility scores assigned to the source URLs derived from a pre-defined credibility dataset.

Avg. Credibility Score: The average credibility score of the sources supporting the atomic claim.

Frequency Factor: A normalized measure of how frequently the atomic claim is mentioned in the corpus, calculated as the number of matching titles for the claim divided by the maximum number of matching titles for any claim within the statement.

Claim Veracity: The calculated veracity score for the atomic claim, combining the average credibility score and the frequency factor.

Support Strength: A qualitative assessment of the level of evidence supporting the claim, based on the number of matching titles (e.g., Weak, Moderate, Strong). The qualitative labels for support strength (“Strong”, “Moderate”, “Weak”) are derived based on the relative number of matched entries per atomic claim. Specifically, claims with matches in the top third percentile of all atomic claims within the corpus (typically >20 matches) are labeled as “Strong”. Those in the middle third (between 8–20 matches) are labeled “Moderate”, and those in the bottom third (<8 matches) are considered “Weak”. These categories serve as intuitive descriptors aligned with corpus coverage density and source diversity.

Notes: Any relevant observations or caveats regarding the claim or the matching process.

6.3. Factchecking Database

The dataset, ‘Cybers 130425.csv’ (publicly available at

https://github.com/DrSufi/CyberFactCheck, accessed on 6 May 2025), comprises information on cybersecurity incidents gathered from various trusted news sources, as indicated by the URLs provided. With a total of 11,928 records, each entry details a specific cyber attack, categorizing it by Attack Type and specifying the Event Date, Impacted Country, Industry affected, and Location of the incident. These data were collected using AI-driven autonomous techniques described in our previous study (in [

28,

29,

30]) from 27 September 2023 to 13 April 2025. The dataset also includes a significance rating, a brief title summarizing the event, and the URL linking to the source report. Across these records, there are 162 distinct main URLs, representing the primary sources of the reported information. This collection of data serves as a reference for verifying the accuracy of claims made in social media posts related to cyber events, offering details on the nature, scope, source, and frequency of reporting specific incidents.

The comparison between the arithmetic mean veracity score and the geometric mean veracity score, as illustrated in

Figure 2, reveals the nuanced impact of aggregation methods on the final veracity assessment. The arithmetic mean, by evenly weighting all credibility scores, provides a balanced overview of the claim’s overall truthfulness. In contrast, the geometric mean, being more sensitive to lower scores, offers a stringent evaluation, penalizing claims that incorporate less credible or potentially misleading information. Notably, while the scores are generally closely aligned, the geometric mean often results in a slightly lower veracity score, indicative of its sensitivity to the least credible components of the claim.

Figure 2 illustrates the comparative behavior of arithmetic versus geometric aggregation methods across all statements. The values used in

Table 4 correspond to the arithmetic mean scores shown in the “blue bars” of

Figure 2. The geometric mean values (“orange bars”) are included to highlight the increased sensitivity of this method to low-confidence atomic claims. The observed differences between the two reflect the trade-off between robustness and strictness in composite veracity scoring.

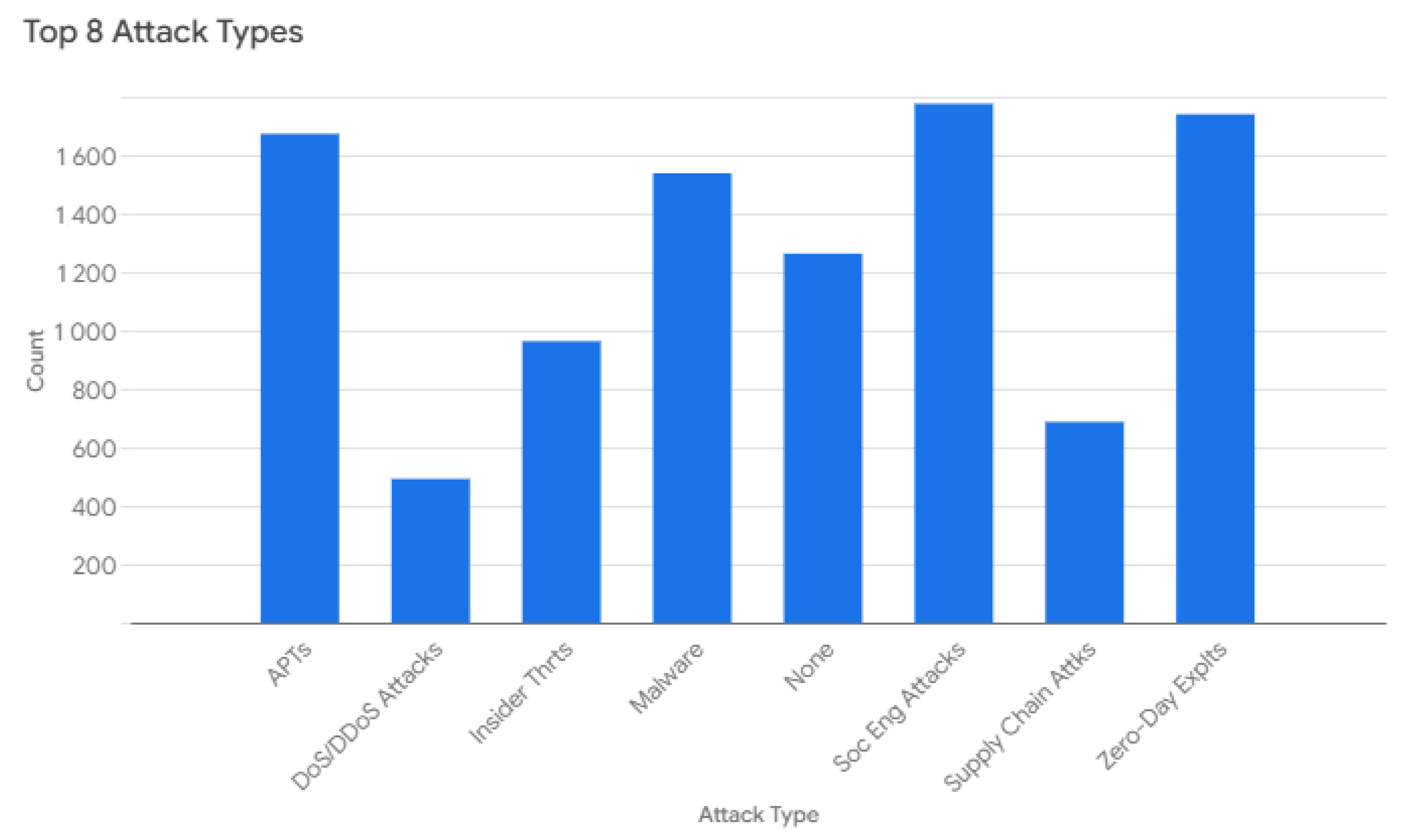

Figure 3 illustrates the distribution of the top eight attack types. Social Engineering Attacks represent the most frequent type, followed closely by Zero-Day Exploits and Advanced Persistent Threats (APTs). The remaining attack types, including Malware, None, Insider Threats, Supply Chain Attacks, and Denial-of-Service (DoS) and Distributed Denial-of-Service (DDoS) Attacks, occur with progressively lower frequency, highlighting the dominance of Social Engineering and Zero-Day Exploits in the dataset.

The bar chart in

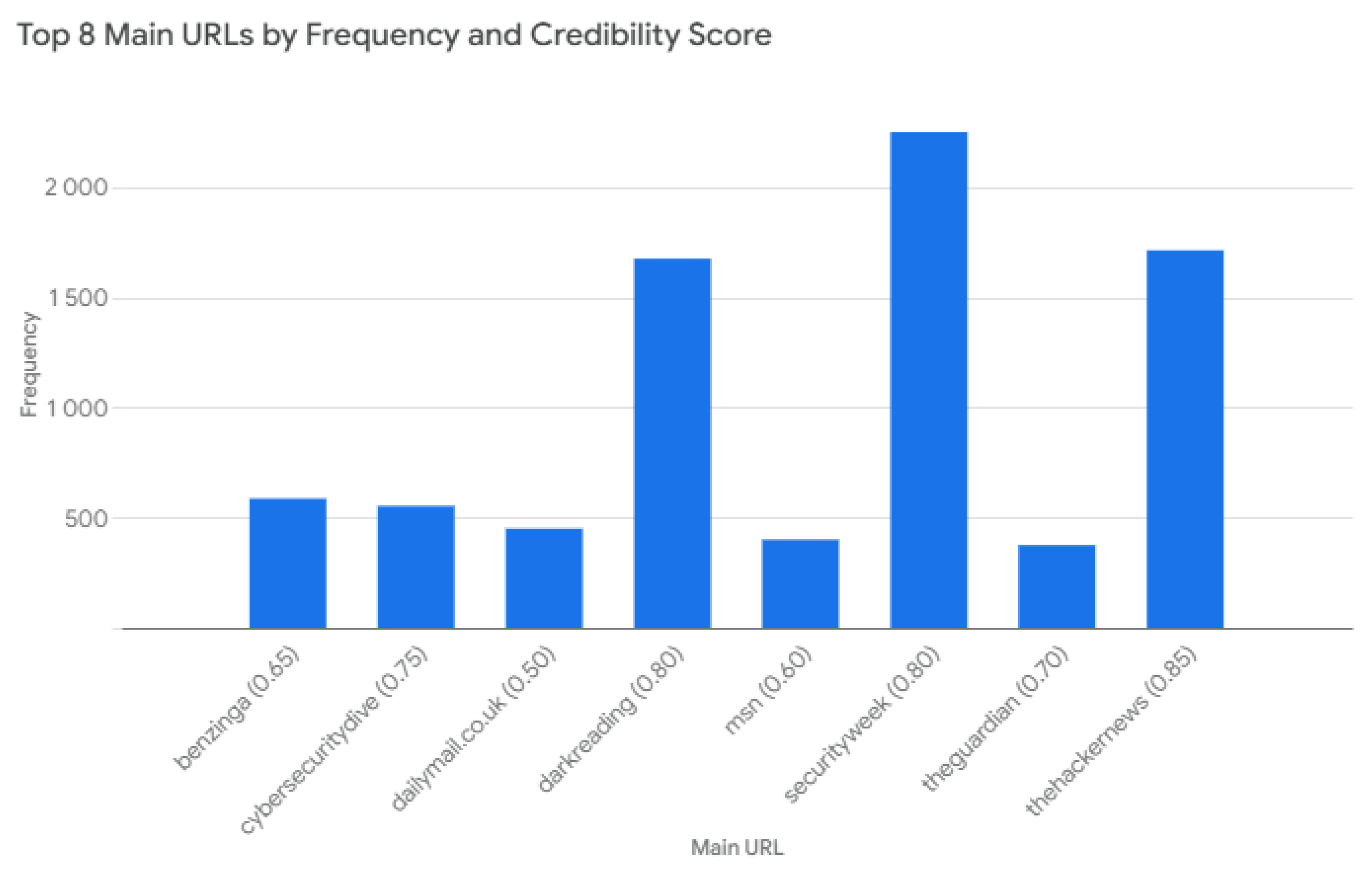

Figure 4 illustrates the top 8 main URLs based on their frequency and credibility scores. ‘Securityweek’ has the highest frequency, significantly surpassing other URLs, but has a low credibility score of 0.0. In contrast, ‘thehackernews’ shows a high frequency and a strong credibility score of 0.85, indicating it is both frequently cited and trustworthy. Overall, the chart highlights the balance between the frequency of appearance and the credibility of sources in the dataset.

7. Discussion and Concluding Remarks

The quantitative evaluation metrics further substantiate the effectiveness and real-world applicability of the proposed framework. The low Mean Squared Error (MSE) of 0.037 and Brier Score of 0.042 indicate that the predicted veracity scores are not only accurate in approximating human-labeled truthfulness but also exhibit strong probabilistic reliability. More importantly, the system’s Spearman rank correlation of 0.88 with expert-generated labels confirms that it preserves the ordinal relationships among claims—a crucial feature in scenarios requiring prioritization of information for downstream decision-making. The binary classification thresholding yielded a Precision of 0.82, Recall of 0.79, and an F1-score of 0.805, demonstrating a balanced capacity to both detect true claims and avoid false positives. Moreover, the Expected Calibration Error (ECE) of 0.068 reflects the model’s ability to produce confidence scores that are well-calibrated with empirical accuracy. These metrics jointly validate the framework’s capability to offer both granular veracity scoring and high-level credibility ranking, rendering it suitable for deployment in automated verification pipelines, journalistic filtering tools, and governmental monitoring systems.

To better understand how the model performs across different factual dimensions, we evaluated the binary classification accuracy of atomic claims segmented into four categories:

Location,

Event Type,

Participant, and

Time. As illustrated in

Figure 5, the model demonstrates consistently high performance on

Location and

Event Type claims, with peak accuracy reaching 0.90 and F1-scores exceeding 0.85. In contrast,

Time-related claims show the lowest recall (0.64) and overall accuracy (0.70), suggesting challenges in aligning temporal expressions with structured evidence.

Figure 5 reveals lower recall on time-related claims (Recall = 0.64), likely due to the sparsity and variability of temporal expressions in the evidence corpus. Phrases such as “early 2024”, “last quarter”, or ambiguous deictic references often lack direct lexical overlap with news entries. To mitigate this, future implementations should employ temporal normalization tools such as HeidelTime or SUTime to standardize and align date formats. Additionally, integrating transformer-based temporal inference models may enhance sensitivity to nuanced temporal cues.

The proposed framework for atomic claim-based misinformation detection offers several notable contributions to the evolving field of automated fact-checking. By decomposing complex claims into semantically disjoint atomic units and assessing their truthfulness independently, this approach transcends the limitations of monolithic claim evaluations. This methodological shift enables a more nuanced understanding of partial truths, semantic contradictions, and layered narratives—phenomena that are increasingly prevalent in generative AI content and social media discourse. Furthermore, by integrating source-specific credibility scores and frequency-based support factors into a formalized probabilistic model, the system enhances interpretability and replicability while remaining robust against variations in source granularity and redundancy.

One of the most significant implications of this work is its ability to facilitate fine-grained explainability in veracity scoring. Users and analysts can interrogate which atomic components contribute to the overall veracity of a claim and trace these evaluations back to specific pieces of supporting or refuting evidence. This aligns with the growing demand for transparent AI systems, particularly in contexts such as policy advisory, journalism, and cybersecurity, where opaque algorithmic decisions can undermine institutional trust [

31,

32]. The framework’s incorporation of multiple aggregation strategies—including arithmetic and geometric means—also demonstrates adaptability to varying tolerance levels for uncertainty and bias in information ecosystems.

Despite these advancements, several limitations warrant attention. First, the framework’s effectiveness is contingent upon the quality and coverage of the underlying news corpus. In domains or regions with sparse reporting, the frequency and credibility-based signals may yield attenuated or skewed veracity scores. Second, the binary matching function currently employed for atomic claim–document alignment, while conceptually clear, may underperform in cases of paraphrased, indirect, or metaphorical language. Future enhancements could leverage soft semantic similarity metrics, such as contextual embeddings or entailment models, to mitigate this issue. Third, the source credibility indices are static and externally curated, which may not reflect temporal shifts in source reliability or topic-specific trustworthiness.

From an operational standpoint, the system also assumes independence among atomic claims, which may not hold in highly entangled or causal narratives. Exploring joint inference mechanisms or dependency-aware aggregation strategies could offer a richer interpretive layer for composite claim analysis. Additionally, while the evaluation metrics—ranging from MSE to nDCG and calibration error—demonstrate alignment with human-labeled veracity judgments, further validation against adversarial misinformation, multimodal claims (e.g., image-text composites), and non-English corpora would strengthen the framework’s generalizability.

Future research should, therefore, focus on three interlinked directions: (1) enhancing semantic matching mechanisms using transformer-based architectures fine-tuned on fact-checking benchmarks; (2) dynamically updating source credibility scores using reinforcement signals from user trust or expert audits; and (3) expanding the framework to handle temporal evolution in claims and evidence. Additional opportunities lie in adapting the model to real-time misinformation detection systems, where latency and computational efficiency become critical. The modularity of the current architecture supports such extensions, paving the way for broader deployment across digital platforms, government monitoring systems, and media verification pipelines.

Ultimately, this study contributes a formal, interpretable, and scalable approach to misinformation detection that bridges the gap between statistical credibility modeling and semantic-level claim dissection. It lays the groundwork for future systems capable of understanding not just whether a statement is true or false, but precisely which components are reliable, where the information originates, and how belief in its truthfulness should be probabilistically distributed.