Abstract

Accurate stock price prediction requires integrating heterogeneous data streams, yet conventional techniques struggle to leverage fine-grained micro-stock features and broader macroeconomic indicators simultaneously. To address this gap, we propose MambaLLM, a novel framework that fuses macro-index and micro-stock inputs through the synergistic use of state-space models (SSMs) and large language models (LLMs). Our two-branch architecture comprises (i) a Micro-Stock Encoder, a Mamba-based temporal encoder that processes granular stock-level data (prices, volumes, and technical indicators), and (ii) a Macro-Index Analyzer, an LLM module built on the distilled DeepSeek-R1 7B model, which interprets market-level index trends (e.g., the S&P 500) and produces textual summaries. These summaries are then encoded into compact embeddings with FinBERT. By merging these multi-scale representations through concatenation and refining them with multi-layer perceptrons (MLPs), MambaLLM captures both asset-specific price behavior and systemic market fluctuations. Extensive experiments on six major U.S. stocks (AAPL, AMZN, MSFT, TSLA, GOOGL, and META) show that MambaLLM delivers up to a 28.50% reduction in RMSE compared with the second-best models, surpassing traditional recurrent neural networks and Mamba-based baselines under volatile market conditions. This performance gain highlights the framework's ability to merge structured financial time series with semantically rich macroeconomic narratives. Altogether, our findings underscore the scalability and adaptability of MambaLLM, offering a powerful tool for financial forecasting and risk management.

MSC: 68T07

1. Introduction

Stock price prediction is a pivotal task in financial analytics, driving advances in algorithmic trading, portfolio optimization, and systemic risk mitigation. Traditional econometric models such as ARIMA and GARCH, however, rely largely on linear assumptions and historical price trajectories, which limits their effectiveness in volatile market environments [1,2,3]. More recent machine learning approaches, including LSTMs and transformers, have improved predictive accuracy by capturing intricate temporal relationships, yet they still face critical bottlenecks [4,5,6,7,8,9,10,11]. Chief among these challenges are (i) the effective fusion of micro-stock inputs (e.g., intraday price–volume data and technical indicators) with macro-scale market signals (e.g., index movements and economic indicators), (ii) the high computational overhead of modeling extensive time series in real-time applications, and (iii) the underutilization of unstructured data, such as macroeconomic narratives and market-related news content.

Encouraging progress in state-space models (SSMs), particularly the Mamba architecture, has begun to address these constraints through hardware-friendly state representations that improve efficiency on long-sequence tasks [12,13,14]. Recent research has applied such architectures to capture temporal dependencies in micro-level stock data, yielding Mamba-based models such as MambaStock [53] and S-Mamba [15]. These models exploit Mamba's state-space formulation, whose cost scales linearly with sequence length, to handle long-range dependencies efficiently, and they outperform traditional Transformer and Long Short-Term Memory (LSTM) architectures in many time series forecasting tasks. However, existing Mamba-based approaches focus primarily on micro-level signals and do not integrate macro-level, text-driven market information, leaving only a partial view of the market's driving forces.

At the same time, large language models (LLMs) have emerged as powerful tools for extracting meaningful insights from unstructured textual data, enabling fine-grained interpretations of economic dynamics and market sentiment. Yet despite the promise of both SSMs and LLMs, there remains a conspicuous gap in research concerning the unification of macro-level information and micro-stock patterns under a single predictive framework. Specifically, the alignment between LLM-derived embeddings for macroeconomic factors and Mamba-based encoders for micro-stock time series has seldom been explored, leaving open questions about how to reconcile differences in temporal resolution and semantic granularity.

To address these challenges, we propose MambaLLM, a novel framework that unifies structured micro-level and unstructured macro-level financial data for enhanced stock price prediction. MambaLLM introduces two key architectural innovations: (i) a tri-directional Mamba-based Micro-Stock Encoder that simultaneously captures temporal, cross-feature, and global interactions across stock-level numerical indicators, improving on prior single-directional or stacked Mamba approaches, and (ii) an LLM-based Macro-Index Analyzer that uses language models, namely DeepSeek and FinBERT, to encode market-wide sentiment and macroeconomic signals extracted from S&P 500 technical indicators. These two components are combined by a hybrid fusion layer that merges the learned representations of the micro and macro streams, enabling the model to account for both local price patterns and broader economic context.

Our main contributions can be summarized as follows:

- Unlike previous Mamba-based models (e.g., MambaStock, which focuses solely on stock-level sequences, and S-Mamba, which targets sector-specific patterns), MambaLLM introduces a novel Micro-Stock Encoder that integrates a Tri-Mamba Modeling Layer with tri-directional Mamba blocks to jointly capture temporal, cross-feature, and global dependencies within stock time series.

- We design a Macro-Index Analyzer module specifically for this work to incorporate high-level market sentiment and trend information. A carefully constructed financial prompt guides the distilled DeepSeek-R1 7B model in generating daily market summaries, which are then fed into FinBERT to obtain domain-specific financial embeddings.

- Combining broad market data (e.g., stock indices) with individual stock cues (e.g., price–volume metrics and technical indicators) enables MambaLLM to generate a dual-perspective market view, capturing both systemic changes and stock-specific fluctuations.

- Comprehensive experiments on six major U.S. stocks (AAPL, AMZN, MSFT, TSLA, GOOGL, META) demonstrate that MambaLLM achieves up to a 28.50% reduction in RMSE relative to the second-best baseline, while maintaining superior resilience amid market turbulence.

The remainder of this paper is structured as follows. Section 2 provides a review of SSM-based and LLM-based methodologies in the context of financial forecasting. Section 3 presents a detailed account of MambaLLM’s architecture, including data preprocessing and model training procedures. Experimental results and analyses are outlined in Section 4, followed by additional discussions in Section 5. Finally, Section 6 concludes the paper and offers directions for future exploration.

2. Related Works

To position the proposed methodology within the literature, we examine two research areas: neural networks for financial time series and LLMs for financial time series. Organizing the related studies along these two lines allows a focused and structured exploration of current research.

2.1. Neural Networks for Financial Time Series

Neural networks have revolutionized financial time series prediction by offering powerful tools to model complex patterns in stock market data. Traditional architectures such as recurrent neural networks (RNNs) and their variants, including Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs), have proven effective at capturing non-linear relationships and long-term dependencies despite challenges with high volatility and non-stationarity [4,5,6,7]. Convolutional Neural Networks (CNNs) not only excel in stock prediction tasks but also outperform traditional statistical methods across a wide range of applications [16,17,18,19,20,21,22].

The evolution of neural networks for time series prediction has seen significant advances with the introduction of Transformer-based architectures. Models like Informer, Autoformer, Pyraformer, and ETSFormer have introduced innovations such as ProbSparse attention, auto-correlation decomposition, pyramid attention modules, and frequency-based attention mechanisms [8,9,10,11]. These approaches have achieved state-of-the-art results in general time series forecasting but face unique challenges when applied to the volatile nature of financial data.

State-space models (SSMs) represent another important class of time series models that have seen remarkable developments recently. The Mamba architecture and its variants have demonstrated exceptional effectiveness in capturing long-range dependencies through mechanisms like forgetting gates, variable scanning along time, and hybrid transformer architectures [23,24,25,26,27]. While these SSM variants have shown promise in various domains, their application to financial time series prediction remains underexplored, creating an opportunity for our proposed MambaLLM framework that combines Mamba with large language models.

The complexity of financial time series has inspired hybrid and multi-scale approaches that combine different methodologies. Recent innovations include CNN-GRU-attention frameworks that separate predictable components of stock returns, XGBoost-BiGRU integrations for futures price forecasting, and seasonal-trend decomposition methods [28,29,30]. These approaches attempt to address the unique challenges of financial data by leveraging the complementary strengths of different architectures.

Emerging models specifically designed for financial applications continue to push the boundaries of stock price prediction. Novel architectures such as SSA-BiGRU-GSCV, DLinear, SegRNN, and TimeMixer demonstrate how specialized designs can improve forecasting accuracy [31,32,33,34]. These developments highlight the ongoing evolution of neural network applications in financial forecasting and underscore the potential for our proposed LLM-augmented Mamba framework to make a significant contribution to this field.

2.2. Large Language Models (LLMs) for Financial Time Series

The recent surge in popularity of large language models (LLMs) has transformed time series analysis by providing powerful tools for various tasks [35,36]. These models not only generate additional features from textual data but also directly analyze time series data through their inherent sequential processing capabilities [36,37].

Several studies have demonstrated the efficacy of LLMs in time series analysis. Zhou et al. showed the versatility of LLMs across forecasting, anomaly detection, classification, and imputation tasks using a GPT-2 backbone [38]. Gruver et al. explored the zero-shot capabilities of pretrained LLMs for time series forecasting through appropriate data tokenization [39]. Jin et al. enhanced LLM performance by translating time series data into more interpretable representations [40]. These advances, along with foundation models specifically designed for time series analysis, establish a new paradigm for capturing complex temporal dependencies [41,42].

In financial time series forecasting, LLMs have shown significant promise. Some approaches directly apply LLMs to stock forecasting, integrating diverse data sources for robust predictions with enhanced explainability [43]. Other methods combine LLMs with neural networks, such as Chen et al.’s framework that uses ChatGPT to enhance graph neural networks for stock movement prediction [44].

Specialized models like FinBERT, fine-tuned for financial sentiment analysis, have proven valuable for incorporating non-numerical data. Li et al. combined FinBERT with LSTM networks to predict stock price movements based on news sentiment, achieving improved short-term accuracy [45]. Zhang et al. enhanced few-shot stock trend prediction using LLM-based sentiment analysis, particularly effective in uncertain markets [46]. Zhou proposed a hybrid framework integrating LLMs, Linear Transformers, and CNNs, leveraging FinBERT for technical analysis [47].

LLMs excel in multimodal data analysis, crucial for comprehensive financial forecasting. Wimmer and Rekabsaz developed models combining textual and visual data for market movement prediction [48]. The RiskLabs framework integrates diverse financial data types, using LLMs to extract insights from multiple sources before modeling risk over different timeframes [49].

Despite these advances, challenges remain. Xie et al. found ChatGPT underperformed in zero-shot multimodal stock prediction tasks [50]. However, Lopez-Lira and Tang demonstrated that advanced LLMs like GPT-4 outperform traditional models, especially with negative news and smaller stocks [51].

Despite these challenges, research reveals considerable promise for LLMs in financial time series forecasting. Explainability, comprehensive news understanding, and multimodal integration remain key areas where further investigation is needed to realize this potential fully.

3. Methods

3.1. Problem Statement

Given the complexity and dynamic nature of financial markets, accurately forecasting stock prices remains a challenging yet critical task. To address this, we propose integrating micro-stock characteristics with macro-index context to enhance predictive performance. Formally, let $y_t$ represent the closing price of a specific target stock on trading day $t$. The task is to predict the next day's closing price $y_{t+1}$ using two distinct historical data sources:

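- Micro-stock Data: This comprises a 12-dimensional feature vector capturing intrinsic stock-specific information on day $t$, defined as

  $$\mathbf{x}_t = \left[O_t, H_t, L_t, C_t, V_t, \mathrm{SMA10}_t, \mathrm{SMA50}_t, \mathrm{EMA10}_t, \mathrm{BB}^{\mathrm{upper}}_t, \mathrm{BB}^{\mathrm{lower}}_t, \mathrm{MACD}_t, \mathrm{RSI}_t\right] \in \mathbb{R}^{12},$$

  where each feature in $\mathbf{x}_t$ is normalized by MinMax scaling to ensure feature comparability and stable model training.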

- Macro-index Context: This involves daily S&P 500 data analyzed by an LLM (DeepSeek R1 7B) to generate textual summaries that reflect broader market conditions. These summaries are then encoded by FinBERT into high-dimensional semantic embeddings $\mathbf{e}_t \in \mathbb{R}^{768}$, providing a context-rich macroeconomic perspective.

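Consequently, given a historical window of length $L$, the learning task is expressed as Equation (1):

$$\hat{y}_{t+1} = f_{\theta}\left(\mathbf{X}_{t-L+1:t},\ \mathbf{E}_{t-L+1:t}\right), \tag{1}$$

where $\mathbf{X}_{t-L+1:t} = [\mathbf{x}_{t-L+1}, \ldots, \mathbf{x}_t]$ represents the temporal sequence of micro-stock data, $\mathbf{E}_{t-L+1:t} = [\mathbf{e}_{t-L+1}, \ldots, \mathbf{e}_t]$ denotes the corresponding macro-index embedding sequence derived from the LLM, and $f_{\theta}$ is the MambaLLM model with parameters $\theta$.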
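The supervised pairs implied by Equation (1) can be built with a sliding window over day-aligned micro and macro rows. The sketch below is a minimal illustration under that alignment assumption; `make_windows` is a hypothetical helper, and a window of 10 would match the input window size reported in Section 4.4.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[List[List[float]], List[List[float]], float]


def make_windows(features: Sequence[Sequence[float]],
                 macro: Sequence[Sequence[float]],
                 close: Sequence[float],
                 window: int) -> List[Sample]:
    """Pair each length-`window` history ending on day t with the close of day t + 1."""
    if not (len(features) == len(macro) == len(close)):
        raise ValueError("all inputs must be aligned by trading day")
    samples: List[Sample] = []
    # t is the last day inside the window; day t + 1 must exist to supply the target.
    for t in range(window - 1, len(close) - 1):
        x_hist = [list(row) for row in features[t - window + 1 : t + 1]]
        e_hist = [list(row) for row in macro[t - window + 1 : t + 1]]
        samples.append((x_hist, e_hist, close[t + 1]))
    return samples
```

With 5 aligned days and `window=3`, two samples are produced, whose targets are the closes of days 4 and 5; only information up to day $t$ enters each input, so the target is never leaked into the window.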

By systematically combining micro-stock dynamics with macro-index sentiment encoded in LLM embeddings, we propose a MambaLLM architecture specialized in capturing multi-dimensional financial market patterns. This dual-mode approach aims to efficiently capture both short-term price fluctuations and long-term trends, thereby significantly enhancing the predictive power of financial time series models.

3.2. Data Representation and Preprocessing

3.2.1. Micro-Stock Features

Each day's micro-stock data comprise five raw price–volume metrics: open (O), high (H), low (L), close (C), and volume (V), along with seven technical indicators: Simple Moving Averages (SMA10, SMA50), Exponential Moving Average (EMA10), Bollinger Bands (upper and lower), Moving Average Convergence Divergence (MACD), and Relative Strength Index (RSI). These indicators are defined as follows:

- SMA: SMAs smooth out price fluctuations by averaging the closing price over a fixed period. They help identify trends: SMA10 captures short-term trends (10-day window), while SMA50 reflects medium-term trends (50-day window):

  $$\mathrm{SMA}_n(t) = \frac{1}{n}\sum_{i=0}^{n-1} C_{t-i}, \qquad n \in \{10, 50\},$$

  where $C_t$ denotes the closing price at time $t$.

- EMA10: EMA10 assigns greater weight to recent prices, making it more sensitive to recent market movements than the SMA. This lets it track price trends while emphasizing new data:

  $$\mathrm{EMA}_{10}(t) = \alpha\, C_t + (1 - \alpha)\, \mathrm{EMA}_{10}(t-1), \qquad \alpha = \frac{2}{10 + 1}.$$

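- Bollinger Bands: Comprising a middle SMA line (typically 20-day) and upper/lower bands based on the standard deviation, Bollinger Bands gauge price volatility. Prices near the upper band may signal overbought conditions, while those near the lower band may indicate oversold conditions:

  $$\mathrm{BB}^{\mathrm{upper}}(t) = \mathrm{SMA}_{20}(t) + k\,\sigma_{20}(t), \qquad \mathrm{BB}^{\mathrm{lower}}(t) = \mathrm{SMA}_{20}(t) - k\,\sigma_{20}(t),$$

  where $\sigma_{20}(t)$ is the standard deviation of closing prices over the past 20 days and $k$ is the band-width multiplier (conventionally $k = 2$).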

- MACD: MACD is a momentum indicator that analyzes the relationship between two EMAs (12-day and 26-day). Crossovers of these EMAs generate buy/sell signals, aiding in identifying trend reversals:

  $$\mathrm{MACD}(t) = \mathrm{EMA}_{12}(t) - \mathrm{EMA}_{26}(t).$$

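- RSI: RSI measures the speed and magnitude of price movements to determine overbought or oversold conditions. Values above 70 generally indicate overbought status, while values below 30 suggest oversold status:

  $$\mathrm{RSI}(t) = 100 - \frac{100}{1 + \mathrm{RS}(t)}, \qquad \mathrm{RS}(t) = \frac{\overline{\mathrm{Gain}}_{14}(t)}{\overline{\mathrm{Loss}}_{14}(t)},$$

  where $\overline{\mathrm{Gain}}_{14}(t)$ and $\overline{\mathrm{Loss}}_{14}(t)$ are the average gains and losses over a 14-day window.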
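The indicator definitions above can be sketched in plain Python. This is a minimal illustration, not the authors' feature pipeline, and it fixes conventions the text leaves open: the EMA is seeded with the first close, the Bollinger bands use the population standard deviation with k = 2, and RSI uses simple (not Wilder-smoothed) 14-day averages, with a flat window mapped to 50. In practice, pandas `rolling` and `ewm` would be the usual tools.

```python
from typing import List, Optional, Sequence, Tuple

Series = List[Optional[float]]


def sma(close: Sequence[float], n: int) -> Series:
    """n-day simple moving average; None until n closes are available."""
    return [sum(close[t - n + 1 : t + 1]) / n if t >= n - 1 else None
            for t in range(len(close))]


def ema(close: Sequence[float], n: int) -> List[float]:
    """EMA with alpha = 2 / (n + 1), seeded with the first close (an assumption)."""
    alpha = 2.0 / (n + 1)
    out = [float(close[0])]
    for price in close[1:]:
        out.append(alpha * price + (1.0 - alpha) * out[-1])
    return out


def bollinger(close: Sequence[float], n: int = 20, k: float = 2.0) -> Tuple[Series, Series]:
    """Upper/lower bands: SMA_n +/- k * population std of the same n closes."""
    mid = sma(close, n)
    upper: Series = []
    lower: Series = []
    for t, m in enumerate(mid):
        if m is None:
            upper.append(None)
            lower.append(None)
            continue
        window = close[t - n + 1 : t + 1]
        std = (sum((p - m) ** 2 for p in window) / n) ** 0.5
        upper.append(m + k * std)
        lower.append(m - k * std)
    return upper, lower


def macd(close: Sequence[float]) -> List[float]:
    """MACD line: EMA12 - EMA26."""
    return [fast - slow for fast, slow in zip(ema(close, 12), ema(close, 26))]


def rsi(close: Sequence[float], n: int = 14) -> Series:
    """RSI from simple averages of gains and losses over the last n price changes."""
    out: Series = [None] * len(close)
    for t in range(n, len(close)):
        diffs = [close[i] - close[i - 1] for i in range(t - n + 1, t + 1)]
        avg_gain = sum(d for d in diffs if d > 0) / n
        avg_loss = sum(-d for d in diffs if d < 0) / n
        if avg_loss == 0.0:
            out[t] = 100.0 if avg_gain > 0 else 50.0  # flat window: neutral by convention
        else:
            out[t] = 100.0 - 100.0 / (1.0 + avg_gain / avg_loss)
    return out
```

Because the 50-day SMA needs 50 prior closes, the first rows of any series have undefined indicators; these rows would typically be dropped before scaling.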

To normalize the input ranges, we apply MinMax scaling to each feature $j$ independently:

$$\tilde{x}_t^{(j)} = \frac{x_t^{(j)} - x_{\min}^{(j)}}{x_{\max}^{(j)} - x_{\min}^{(j)}},$$

where $x_{\min}^{(j)}$ and $x_{\max}^{(j)}$ are the minimum and maximum values of feature $j$ across the training set. The resulting 12-dimensional vector (5 raw metrics + 7 indicators) is fed into the Mamba state-space encoder (Section 3.3.1).
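Because the minimum and maximum come from the training set only, test-period values can fall outside [0, 1]; this is expected and avoids look-ahead. A minimal sketch follows. The helper names are hypothetical, and the inverse transform, which maps a scaled close back to price units (for example, before computing RMSE), is an assumption about how predictions would be reported.

```python
from typing import List, Sequence, Tuple


def fit_minmax(train_rows: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Per-feature minimum and maximum, computed on training rows only."""
    cols = list(zip(*train_rows))
    return [min(c) for c in cols], [max(c) for c in cols]


def apply_minmax(rows: Sequence[Sequence[float]],
                 mins: Sequence[float], maxs: Sequence[float]) -> List[List[float]]:
    """Scale every feature by its training range; constant features map to 0."""
    return [[(v - lo) / (hi - lo) if hi > lo else 0.0
             for v, lo, hi in zip(row, mins, maxs)]
            for row in rows]


def invert_minmax(value: float, lo: float, hi: float) -> float:
    """Map a scaled value (e.g., a predicted close) back to original units."""
    return value * (hi - lo) + lo
```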

3.2.2. Macro-Index Data Processing with LLM

The S&P 500 technical indicators are analyzed by a locally deployed DeepSeek-R1-7B model accessed through the Ollama API. The structured prompt template is as follows:

You are a professional financial analyst. Based on the overall market performance of the S&P 500 Index and its technical indicators (open, high, low, close, volume, 10-day Simple Moving Average (SMA10), 50-day Simple Moving Average (SMA50), 10-day Exponential Moving Average (EMA10), Bollinger Bands upper, Bollinger Bands lower, Moving Average Convergence Divergence (MACD), Relative Strength Index (RSI)), conduct a comprehensive analysis of the overall market trend. For each trading day, provide a detailed technical analysis of the market as a whole, including but not limited to whether the market is in an overbought or oversold condition, the strength of bullish or bearish trends, market volatility based on Bollinger Bands, and other key technical signals that may influence market movements.

Data Input Format: Daily market data are formatted as follows:

Open: 2425.66

High: 2543.21

Low: 2401.34

Close: 2529.19

Volume: 837M

SMA10: 2760.36

SMA50: 3166.31

EMA10: 2713.64

Bollinger Bands Upper: 2984.12

Bollinger Bands Lower: 2345.88

MACD: -185.0

RSI: 36.0
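The daily input format above can be rendered and sent to a local Ollama server roughly as follows. This sketch is not the authors' script. The model tag `deepseek-r1:7b`, the default endpoint `http://localhost:11434/api/generate`, and the non-streaming request are assumptions. The text also does not say whether the `<think>...</think>` reasoning block that R1-style models may emit was removed before FinBERT encoding, so `strip_reasoning` is a hypothetical, optional step.

```python
import json
import re
import urllib.request

# Field order follows the "Data Input Format" listing.
FIELDS = ["Open", "High", "Low", "Close", "Volume", "SMA10", "SMA50", "EMA10",
          "Bollinger Bands Upper", "Bollinger Bands Lower", "MACD", "RSI"]


def format_day(values: dict) -> str:
    """Render one trading day as 'Name: value' lines."""
    return "\n".join(f"{name}: {values[name]}" for name in FIELDS)


def build_prompt(instruction: str, values: dict) -> str:
    """Analyst instruction followed by the day's indicator block."""
    return f"{instruction}\n\n{format_day(values)}"


def strip_reasoning(text: str) -> str:
    """Drop any <think>...</think> block and keep only the final analysis."""
    return re.sub(r"<think>.*?</think>", "", text, flags=re.DOTALL).strip()


def ask_ollama(prompt: str, model: str = "deepseek-r1:7b",
               url: str = "http://localhost:11434/api/generate") -> str:
    """Non-streaming call to a local Ollama server; returns the generated text."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    req = urllib.request.Request(url, data=payload,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

With a running server, `strip_reasoning(ask_ollama(build_prompt(instruction, day)))` would yield one daily summary, where `instruction` is the analyst prompt and `day` is a dictionary keyed by the field names above.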

LLM Output Structure: Typical analysis contains key price movement observations, volume–impact analysis, technical indicator interpretations, volatility assessment, and trading conclusions. A sample is as follows:

Key Observations:

- Bullish intraday movement (Open: 2425.66→Close: 2529.19)

- High volume (837M) signals strong market participation

- SMA50 = 3166.31 maintains long-term bullish trend

- MACD = −185 indicates underlying bearish pressure

Interpretation:

- Divergence between price action and MACD

- RSI = 36 suggests oversold conditions

- Bollinger Band positioning implies volatility

Conclusion: Bullish trend with potential reversal signals. Monitor MACD-RSI convergence.

FinBERT Vectorization: The financial-specific transformer FinBERT (ProsusAI/finbert) converts textual analyses to 768-d embeddings:

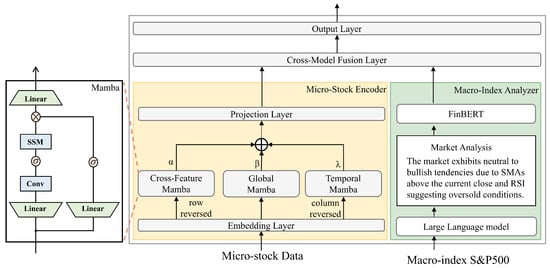

3.3. Proposed MambaLLM Architecture

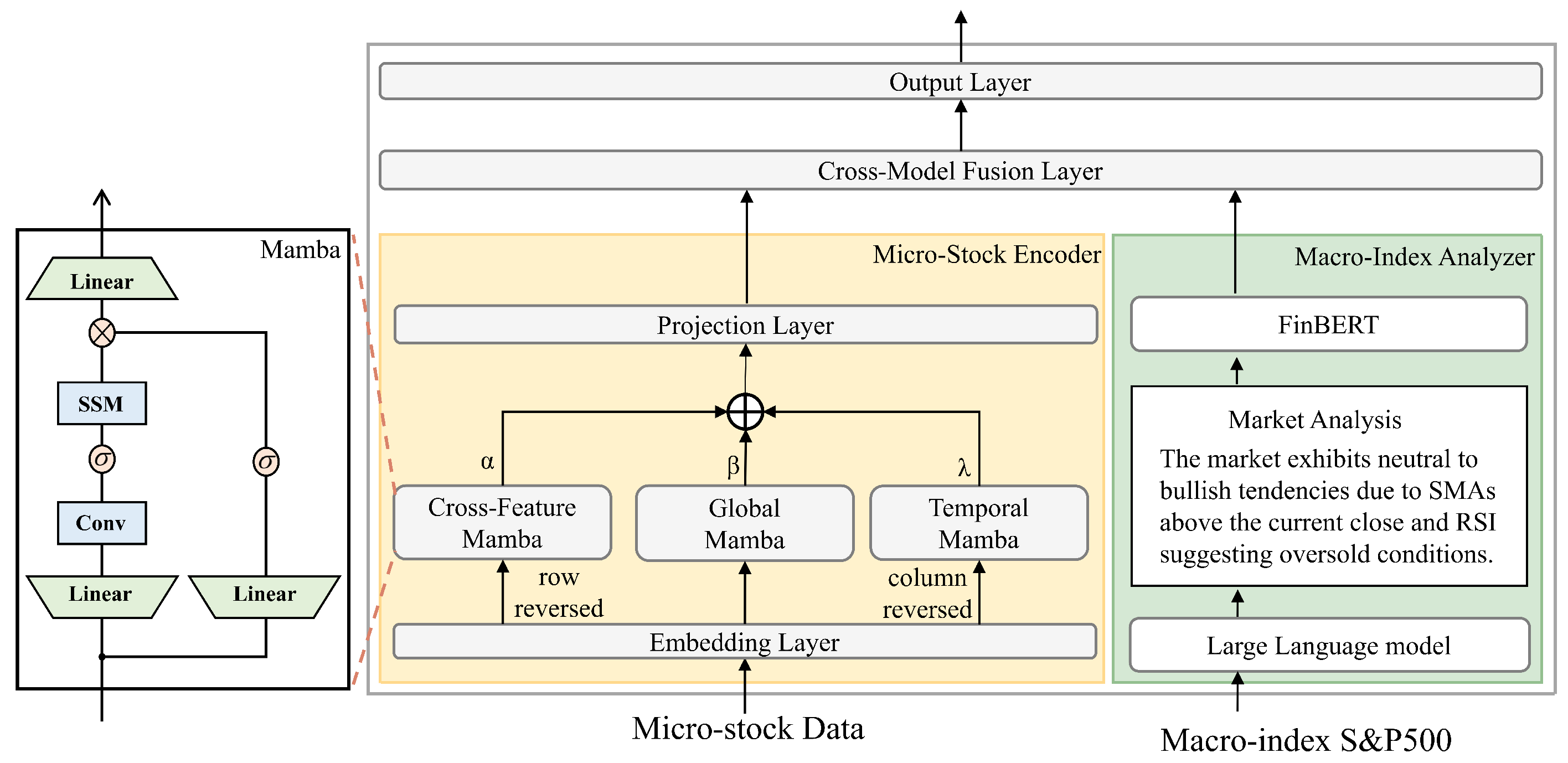

Figure 1 illustrates the three major components of the MambaLLM framework:

Figure 1. Structure of the proposed method.

- Micro-Stock Encoder (Mamba SSM): A selective state-space model that processes normalized micro-stock features.

- Macro-Index Analyzer (DeepSeek R1 7B + FinBERT): DeepSeek-R1-7B generates daily textual insights from S&P 500 data, which are converted into 768-dimensional embeddings via FinBERT.

- Cross-Modal Fusion: A multi-layer perceptron (MLP) integrates outputs from the two previous streams to predict the next day’s closing price.

3.3.1. Micro-Stock Encoder

This section presents the Micro-Stock Encoder, which is designed to capture multi-dimensional dependencies within micro-stock time series. The encoder is composed of three sequential modules: an Embedding Layer, a Tri-Mamba Modeling Layer, and a Projection Layer. By integrating directional information from feature-wise, temporal, and global perspectives, it extracts more comprehensive representations of stock behavior.

- Embedding Layer: Given a batch of multivariate stock time series inputs $\mathbf{X} \in \mathbb{R}^{B \times L \times D}$, where $B$ is the batch size, $L$ denotes the number of time steps, and $D$ represents the number of stock-related features (e.g., open, high, low, close, and volume), a dimension permutation is first performed to rearrange the tensor into shape $B \times D \times L$:

  $$\mathbf{X}' = \mathrm{Permute}(\mathbf{X}) \in \mathbb{R}^{B \times D \times L}.$$

  This operation treats each feature dimension as an independent token sequence across time, which facilitates token-wise encoding by a linear layer. A linear projection is then applied along the temporal axis to map each feature's temporal dynamics into a latent representation of dimension $d$:

  $$\mathbf{H}_0 = \mathbf{X}'\mathbf{W}_e + \mathbf{b}_e \in \mathbb{R}^{B \times D \times d},$$

  where $\mathbf{W}_e \in \mathbb{R}^{L \times d}$ and $\mathbf{b}_e \in \mathbb{R}^{d}$ are learnable parameters. The resulting embedding tensor $\mathbf{H}_0$ encodes time-aware feature representations and is passed to the subsequent Tri-Mamba Modeling Layer.

- Tri-Mamba Modeling Layer: This layer is designed to model dependencies from different perspectives and consists of three parallel Mamba modules, each operating on a different variant of the input:

  - Global Mamba (Original Order): Used to capture holistic dependencies over the original sequence.

  - Cross-Feature Mamba (Row-Reversed): Applied to model inter-feature relationships by reversing the input along the feature (row) dimension.

  - Temporal Mamba (Column-Reversed): Designed to emphasize temporal dependency learning by reversing the input along the time (column) dimension.

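Given the embedded input $\mathbf{H}_0$, each directional branch performs a series of operations within a Mamba Block, whose internal structure can be described as follows. For a block input $\mathbf{H}$, the input is first projected into two streams via a linear transformation:

$$[\mathbf{U}, \mathbf{Z}] = \mathrm{Linear}(\mathbf{H}),$$

followed by a one-dimensional convolution and non-linearity on $\mathbf{U}$:

$$\mathbf{U}' = \phi\left(\mathrm{Conv1D}(\mathbf{U})\right),$$

where $\phi$ is a non-linear activation (SiLU in the original Mamba block [14]). The transformed signal is then passed through a selective state-space model (SSM), parameterized by discretized state matrices $\bar{\mathbf{A}}$ and $\bar{\mathbf{B}}$, an output matrix $\mathbf{C}$, and a learnable step size $\Delta$. The discrete update process is formulated as

$$\mathbf{h}_{\tau} = \bar{\mathbf{A}}\,\mathbf{h}_{\tau-1} + \bar{\mathbf{B}}\,\mathbf{u}'_{\tau}, \qquad \mathbf{y}_{\tau} = \mathbf{C}\,\mathbf{h}_{\tau},$$

with the zero-order-hold discretization $\bar{\mathbf{A}} = \exp(\Delta\mathbf{A})$ and $\bar{\mathbf{B}} = (\Delta\mathbf{A})^{-1}\left(\exp(\Delta\mathbf{A}) - \mathbf{I}\right)\Delta\mathbf{B}$, where $\tau$ indexes the token sequence and $\mathbf{Y}$ stacks the outputs $\mathbf{y}_{\tau}$. A selective gating mechanism is applied to combine the SSM output with the auxiliary stream $\mathbf{Z}$:

$$\mathbf{Y}' = \mathbf{Y} \odot \phi(\mathbf{Z}),$$

and finally, the output of the block is computed via a final linear transformation:

$$\mathrm{Mamba}(\mathbf{H}) = \mathrm{Linear}(\mathbf{Y}'),$$

where $\odot$ denotes element-wise multiplication. The selective state-space operator captures long-range dependencies efficiently by combining structured recurrence with data-dependent selection [14]. Given the embedded input $\mathbf{H}_0$, the outputs of the three Mamba blocks are computed as follows:

$$\mathbf{H}_{g} = \mathrm{Mamba}_{g}(\mathbf{H}_0), \qquad \mathbf{H}_{f} = \mathrm{Mamba}_{f}\left(\mathrm{Rev}_{D}(\mathbf{H}_0)\right), \qquad \mathbf{H}_{t} = \mathrm{Mamba}_{t}\left(\mathrm{Rev}_{d}(\mathbf{H}_0)\right),$$

where $\mathrm{Rev}_{D}$ reverses the feature (row) dimension and $\mathrm{Rev}_{d}$ reverses the column dimension, i.e., the latent axis that the Embedding Layer obtained from the time axis. The outputs from the three directions are then fused using a learnable weighted sum:

$$\mathbf{H}_{\mathrm{tri}} = \alpha\,\mathbf{H}_{g} + \beta\,\mathbf{H}_{f} + \gamma\,\mathbf{H}_{t},$$

where $\alpha$, $\beta$, and $\gamma$ are trainable scalar weights that balance the contributions of the three directional Mamba blocks. A residual connection is applied to enhance representational stability:

$$\mathbf{H} = \mathbf{H}_{\mathrm{tri}} + \mathbf{H}_0.$$

- Projection Layer: To obtain the final encoded representation for fusion and prediction, a two-stage linear projection is applied to the output tensor $\mathbf{H} \in \mathbb{R}^{B \times D \times d}$ of the Tri-Mamba Modeling Layer. In the first stage, a linear transformation along the last dimension (the hidden dimension $d$) reduces it to a scalar:

  $$\mathbf{P}_1 = \mathbf{H}\mathbf{w}_1 + b_1 \in \mathbb{R}^{B \times D \times 1}, \qquad \mathbf{w}_1 \in \mathbb{R}^{d \times 1}.$$

  Next, the singleton dimension is moved to the middle position via permutation, and the number of features $D$ is projected to a user-defined output dimension $d_{\mathrm{out}}$ by a second linear layer:

  $$\mathbf{P}_2 = \mathrm{Permute}(\mathbf{P}_1)\,\mathbf{W}_2 + \mathbf{b}_2 \in \mathbb{R}^{B \times 1 \times d_{\mathrm{out}}}, \qquad \mathbf{W}_2 \in \mathbb{R}^{D \times d_{\mathrm{out}}}.$$

  Finally, the singleton dimension is removed with a squeeze operation to produce the encoder output:

  $$\mathbf{h}_{\mathrm{micro}} = \mathrm{Squeeze}(\mathbf{P}_2) \in \mathbb{R}^{B \times d_{\mathrm{out}}}.$$

  This output vector serves as the micro-level stock representation that is later fused with macro-financial information. The two-step projection enables decoupled control over temporal feature reduction and dimensional alignment.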
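To make the selective recurrence and the tri-directional fusion concrete, here is a toy, pure-Python sketch of a single sample, not the authors' implementation. Its simplifications are all assumptions: a diagonal $\mathbf{A}$ with negative entries; hypothetical scalar per-channel parameters `w_b`, `w_c`, `w_delta`, `b_delta` standing in for the learned projections that produce input-dependent $\mathbf{B}$, $\mathbf{C}$, and $\Delta$; and no convolution, gating, or batch dimension. Following the description in the text, each branch output is summed without being reversed back. Whether the authors flip the reversed branches back is not stated; bidirectional designs such as S-Mamba do. A real model would use a PyTorch Mamba layer (for example, the `Mamba` module of the `mamba_ssm` package) instead of `mix_tokens`.

```python
import math
from typing import Dict, List, Sequence, Tuple

Matrix = List[List[float]]  # rows = D feature tokens, columns = d hidden channels


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x)); keeps the step size positive."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def selective_scan(u: Sequence[float], a: Sequence[float], w_b: Sequence[float],
                   w_c: Sequence[float], w_delta: float, b_delta: float) -> List[float]:
    """One channel of a selective SSM with diagonal A (all entries < 0).

    Delta, B and C are recomputed from each input u_tau (the "selective" part);
    A_bar and B_bar follow the zero-order-hold discretization.
    """
    h = [0.0] * len(a)
    ys = []
    for u_tau in u:
        delta = softplus(w_delta * u_tau + b_delta)        # input-dependent step size
        y_tau = 0.0
        for n, a_n in enumerate(a):
            a_bar = math.exp(delta * a_n)                   # exp(Delta * A)
            b_n = w_b[n] * u_tau                            # input-dependent B
            b_bar = (a_bar - 1.0) / a_n * b_n               # (Delta A)^-1 (exp(Delta A) - 1) Delta B
            h[n] = a_bar * h[n] + b_bar * u_tau             # h_tau = A_bar h_{tau-1} + B_bar u_tau
            y_tau += (w_c[n] * u_tau) * h[n]                # y_tau = C_tau h_tau
        ys.append(y_tau)
    return ys


def mix_tokens(x: Matrix, params: Dict) -> Matrix:
    """Toy stand-in for a Mamba block: scan each hidden channel down the token axis."""
    cols = [selective_scan([row[j] for row in x], **params) for j in range(len(x[0]))]
    return [[cols[j][i] for j in range(len(cols))] for i in range(len(x))]


def tri_directional(h0: Matrix, weights: Tuple[float, float, float],
                    p_global: Dict, p_cross: Dict, p_temporal: Dict) -> Matrix:
    """alpha * global + beta * cross-feature + gamma * temporal, plus the residual H0."""
    alpha, beta, gamma = weights
    g = mix_tokens(h0, p_global)                            # original order
    f = mix_tokens(h0[::-1], p_cross)                       # rows (feature tokens) reversed
    t = mix_tokens([row[::-1] for row in h0], p_temporal)   # columns reversed
    return [[alpha * g[i][j] + beta * f[i][j] + gamma * t[i][j] + h0[i][j]
             for j in range(len(h0[0]))]
            for i in range(len(h0))]
```

Two properties are easy to check: the scan is causal (changing a later input leaves earlier outputs unchanged), and with all three weights at zero the layer reduces to the residual path $\mathbf{H}_0$.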

3.3.2. Macro-Index Analyzer (DeepSeek R1 7B + FinBERT)

To integrate macro-level financial context into the prediction framework, a dedicated Macro-Index Analyzer module is designed. This module extracts market sentiment and trend signals from historical macro indicators using a large language model (LLM) followed by a financial domain-specific encoder.

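Let the daily macro indicator vector of the S&P 500 on day $t$ be denoted as

$$\mathbf{m}_t = \left[O_t, H_t, L_t, C_t, V_t, \mathrm{SMA10}_t, \mathrm{SMA50}_t, \mathrm{EMA10}_t, \mathrm{BB}^{\mathrm{upper}}_t, \mathrm{BB}^{\mathrm{lower}}_t, \mathrm{MACD}_t, \mathrm{RSI}_t\right] \in \mathbb{R}^{12}.$$

For each day $t$, the vector $\mathbf{m}_t$ is first embedded into a structured prompt using a template-based financial instruction. The prompt is then processed by a large language model (DeepSeek R1 7B) to generate a contextualized textual summary:

$$s_t = \mathrm{LLM}\left(\mathrm{Prompt}(\mathbf{m}_t)\right).$$

The generated financial insight $s_t$ is subsequently encoded using FinBERT, yielding a sentiment-aware embedding:

$$\mathbf{e}_t = \mathrm{FinBERT}(s_t) \in \mathbb{R}^{768}.$$

To capture market conditions, the embeddings from the most recent $K$ trading days are aggregated via average pooling:

$$\bar{\mathbf{e}}_T = \frac{1}{K}\sum_{i=0}^{K-1} \mathbf{e}_{T-i},$$

where $T$ is the current trading day. The resulting vector $\bar{\mathbf{e}}_T$ encodes temporal macro-financial sentiment and trend dynamics and serves as a global conditioning input for downstream prediction modules.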
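The average pooling step is simple, but it is the point where look-ahead is easiest to introduce, so a small sketch follows. It assumes the daily embeddings are stored oldest to newest and that only days up to $T$ are passed in. The pooling length is not stated in the text, so `k` is left as a parameter; `pool_recent` is a hypothetical helper.

```python
from typing import List, Sequence


def pool_recent(embeddings: Sequence[Sequence[float]], k: int) -> List[float]:
    """Element-wise mean of the k most recent daily embeddings (rows ordered oldest -> newest)."""
    if not 1 <= k <= len(embeddings):
        raise ValueError("k must be between 1 and the number of available days")
    recent = embeddings[-k:]
    dim = len(recent[0])
    return [sum(row[j] for row in recent) / k for j in range(dim)]
```

For 768-dimensional FinBERT vectors, the output is again a 768-dimensional vector, which is what the fusion module expects.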

3.3.3. Cross-Modal Fusion

To integrate micro-level stock representations with macro-financial sentiment features, a Cross-Modal Fusion module is employed. This module combines the outputs from the Micro-Stock Encoder and the Macro-Index Analyzer to form a unified representation for downstream forecasting.

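Let $\mathbf{h}_{\mathrm{micro}} \in \mathbb{R}^{B \times d_{\mathrm{out}}}$ denote the output of the Micro-Stock Encoder, and let $\bar{\mathbf{e}} \in \mathbb{R}^{B \times 768}$ be the macro-level embedding obtained from the Macro-Index Analyzer. These two representations are concatenated along the feature dimension to form a fused vector:

$$\mathbf{z}_0 = \left[\mathbf{h}_{\mathrm{micro}} \,\Vert\, \bar{\mathbf{e}}\right] \in \mathbb{R}^{B \times (d_{\mathrm{out}} + 768)}.$$

This fused representation is processed through a three-layer multi-layer perceptron (MLP) with ReLU activations:

$$\mathbf{z}_{l} = \mathrm{ReLU}\left(\mathbf{z}_{l-1}\mathbf{W}^{\mathrm{mlp}}_{l} + \mathbf{b}^{\mathrm{mlp}}_{l}\right), \qquad l = 1, 2, 3.$$

The final output $\mathbf{z}_3$ is a compact and expressive cross-modal representation that integrates fine-grained stock dynamics with broader macroeconomic context. This vector is then passed to the final prediction layer.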

3.3.4. Output Layer

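The final prediction is generated by a simple Output Layer, which takes the cross-modal representation $\mathbf{z}_3$ as input and maps it to a scalar output through a fully connected layer:

$$\hat{\mathbf{y}} = \mathbf{z}_3\mathbf{w}_{\mathrm{out}} + b_{\mathrm{out}} \in \mathbb{R}^{B}.$$

The resulting output $\hat{\mathbf{y}}$ contains the model's prediction for each sample in the batch.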
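The fusion and output stages amount to concatenation followed by a small MLP. The single-sample sketch below illustrates the data flow only. The hidden sizes 64, 32, and 16, the encoder output size of 4, and the uniform initialization are hypothetical choices, dropout is omitted because the sketch covers inference only, and ReLU is used as stated in this subsection. Section 4.4 reports GELU, so the activation would be a one-line swap.

```python
import random
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (W with in_dim rows x out_dim columns, b)


def linear(x: Sequence[float], layer: Layer) -> List[float]:
    """Compute x W + b for a single sample."""
    w, b = layer
    return [sum(x_i * w[i][j] for i, x_i in enumerate(x)) + b[j] for j in range(len(b))]


def relu(v: Sequence[float]) -> List[float]:
    return [max(0.0, x) for x in v]


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Uniform(-1/sqrt(n_in), 1/sqrt(n_in)) weights and zero bias (illustrative init)."""
    bound = 1.0 / n_in ** 0.5
    w = [[rng.uniform(-bound, bound) for _ in range(n_out)] for _ in range(n_in)]
    return w, [0.0] * n_out


def predict(h_micro: Sequence[float], e_macro: Sequence[float],
            mlp: Sequence[Layer], out_layer: Layer) -> float:
    """Concatenate micro and macro vectors, apply Linear+ReLU layers, then a scalar head."""
    z = list(h_micro) + list(e_macro)      # z_0 = [h_micro || e_bar]
    for layer in mlp:                      # z_l = ReLU(z_{l-1} W_l + b_l), l = 1, 2, 3
        z = relu(linear(z, layer))
    return linear(z, out_layer)[0]         # y_hat = z_3 w_out + b_out


rng = random.Random(0)
mlp = [init_layer(4 + 768, 64, rng), init_layer(64, 32, rng), init_layer(32, 16, rng)]
head = init_layer(16, 1, rng)
y_hat = predict([0.1] * 4, [0.01] * 768, mlp, head)  # a scaled next-day close
```

Because the target is MinMax-scaled, `y_hat` is in scaled units and would be mapped back to a price before computing error metrics.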

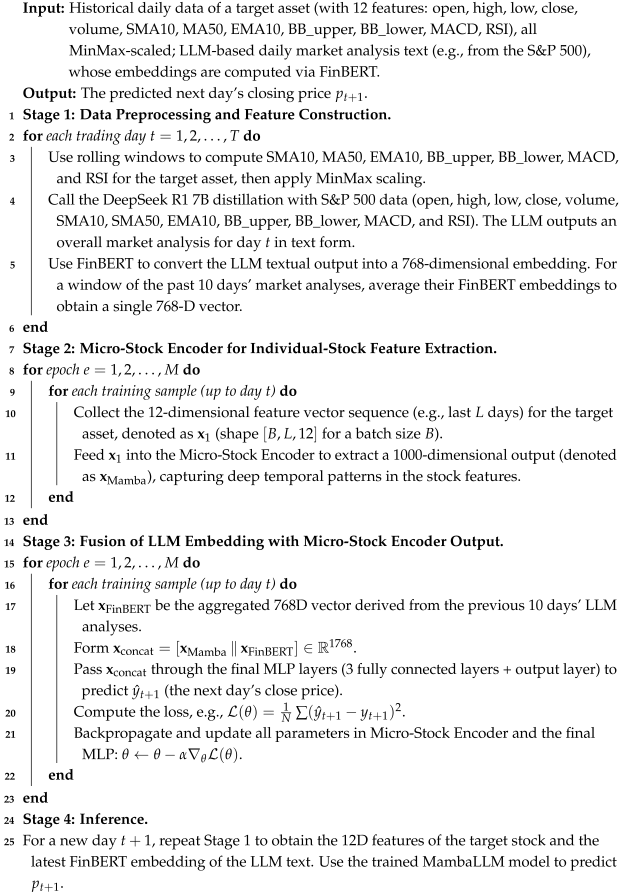

3.4. MambaLLM Training

The training procedure of MambaLLM is summarized in Algorithm 1. The algorithm first constructs a set of stock-specific features (e.g., SMA10, SMA50, and RSI) from historical data and normalizes them, then uses the distilled DeepSeek-R1 7B model to generate textual market overviews from macro-index data, which FinBERT converts into high-dimensional embeddings. Next, a Mamba-based module ingests the preprocessed micro-stock feature sequence to extract deep temporal patterns, while the FinBERT embeddings encapsulate broader market insights. These representations are concatenated and passed through an MLP for final price forecasting, and the model parameters are updated iteratively by minimizing the mean squared error (MSE) loss. At inference time, the same data flow is applied to new samples, and the trained network estimates the target asset's next-day closing price.

Algorithm 1: The process flow of MambaLLM.

4. Experiment

4.1. Dataset

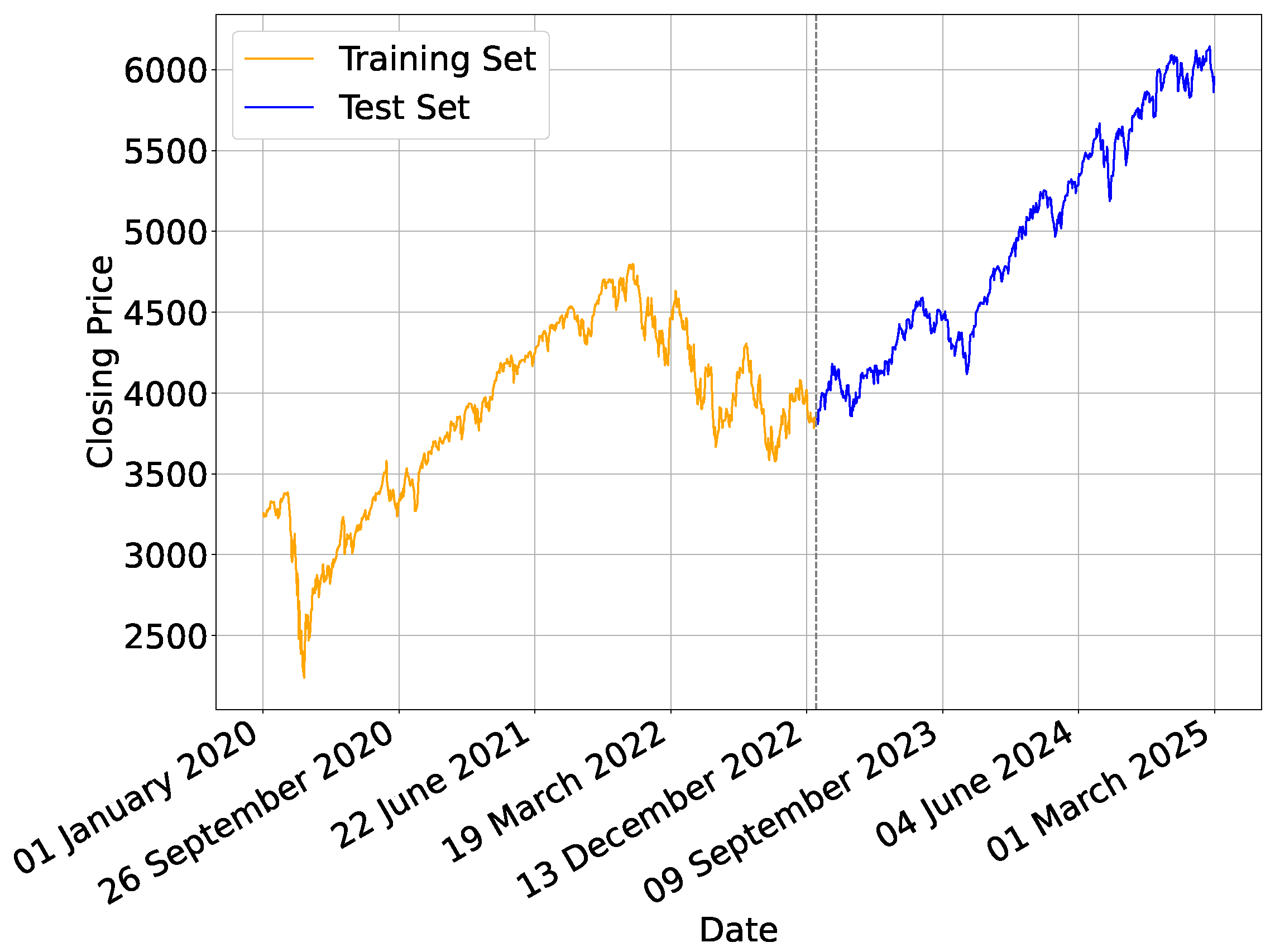

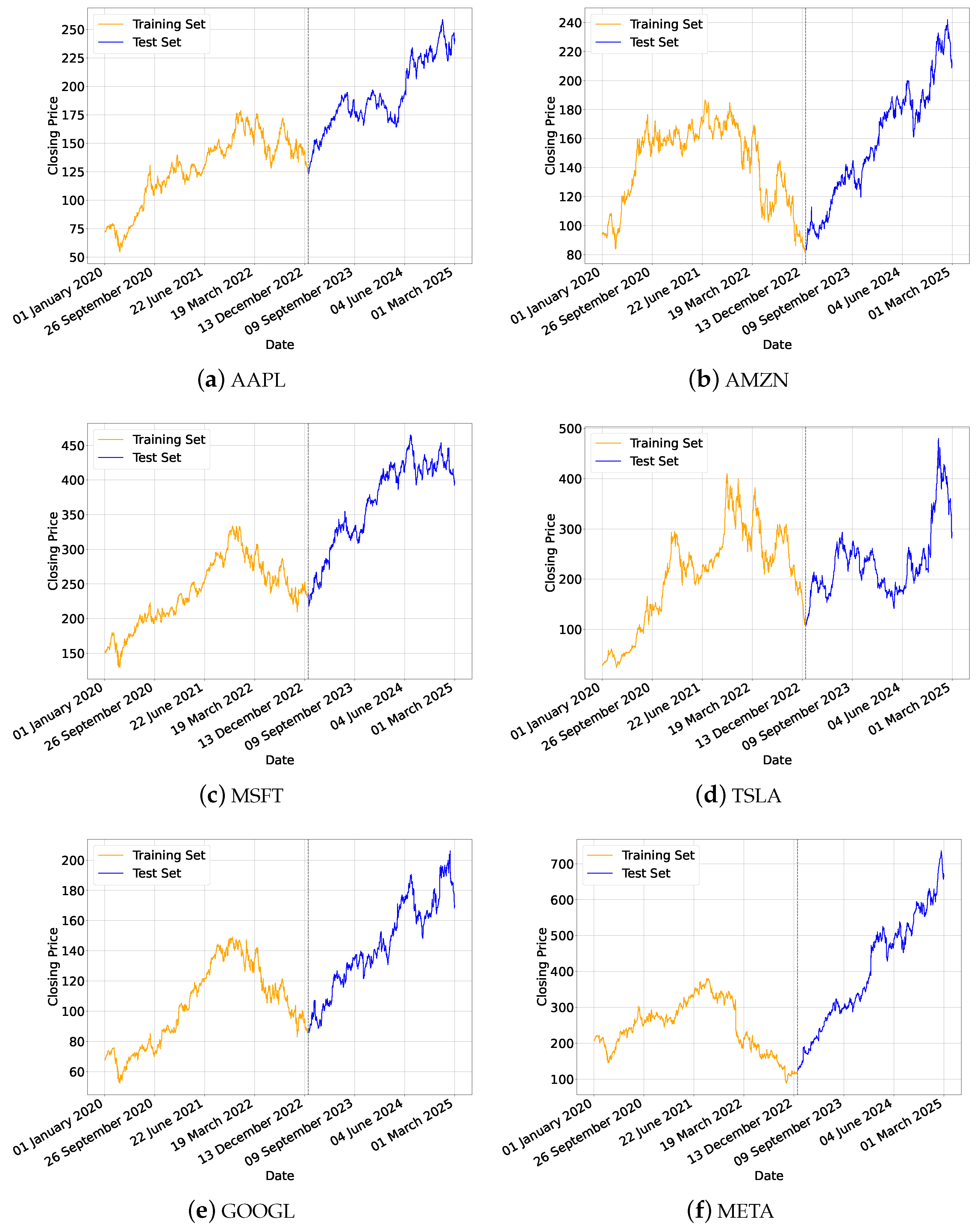

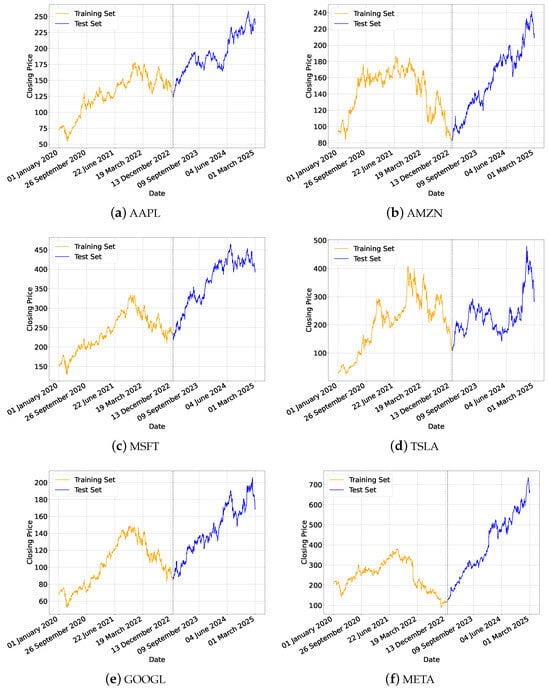

In this study, we use the yfinance library to download historical stock data from the Yahoo Finance platform, with prices adjusted for stock splits, dividends, and major corporate actions. We employ the S&P 500 as the macro-level index and six individual stocks, Apple (AAPL), Amazon (AMZN), Microsoft (MSFT), Meta (META), Tesla (TSLA), and Google (GOOGL), to validate the effectiveness of our proposed approach. The dataset spans 1 January 2020 to 28 February 2025, providing a comprehensive timeframe for both model training and evaluation. As illustrated in Figure 2 and Figure 3, we partition the data into two subsets:

Figure 2. Close price curve of the S&P 500 index.

Figure 3. Close price curves of the six individual stocks.

- Training set (1 January 2020–31 December 2022), used for tuning model parameters.

- Testing set (1 January 2023–28 February 2025), used for final performance evaluation.
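The split is chronological, with no shuffling, which keeps every test day strictly after the training period. A minimal sketch of the date filter is shown below. The optional `download` helper is a hypothetical wrapper around `yfinance.download`. Its `auto_adjust=True` flag returns split- and dividend-adjusted prices, and `^GSPC` is Yahoo Finance's ticker for the S&P 500 index. Whether the authors used exactly these arguments is not stated.

```python
from datetime import date
from typing import List, Sequence, Tuple

TRAIN_START, TRAIN_END = date(2020, 1, 1), date(2022, 12, 31)
TEST_START, TEST_END = date(2023, 1, 1), date(2025, 2, 28)


def chronological_split(days: Sequence[date]) -> Tuple[List[int], List[int]]:
    """Row indices of the training and testing periods; order is preserved, nothing is shuffled."""
    train = [i for i, d in enumerate(days) if TRAIN_START <= d <= TRAIN_END]
    test = [i for i, d in enumerate(days) if TEST_START <= d <= TEST_END]
    return train, test


def download(ticker: str):
    """Optional: fetch adjusted daily bars with yfinance (needs the package and network access)."""
    import yfinance as yf  # imported lazily so the split logic runs without yfinance
    # `end` is exclusive in yfinance, so 2025-03-01 keeps 28 February 2025.
    return yf.download(ticker, start="2020-01-01", end="2025-03-01", auto_adjust=True)
```

Typical calls would be `download("^GSPC")` for the index and `download("AAPL")` for a stock, followed by `chronological_split` on the resulting trading dates.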

4.2. Baseline Methods

In empirical studies, it is standard to compare newly proposed models with a range of established methods. In this work, we evaluate the performance of the following baseline models:

- GRU: The Gated Recurrent Unit (GRU) is a simplified variant of the recurrent neural network (RNN). It uses gating mechanisms to control the flow of information, which helps alleviate the vanishing gradient problem. GRU models are often employed in sequence modeling because they have fewer parameters than LSTMs, converge faster, and offer strong predictive power.

- BiGRU: Bidirectional GRU extends the GRU model by processing the input sequence in both forward and backward directions. This structure allows the model to capture dependencies from past and future time steps simultaneously, improving performance on tasks requiring context from both directions.

- LSTM: Long Short-Term Memory (LSTM) networks are a foundational type of RNN designed to capture long-term dependencies in sequential data. LSTMs use specialized gating units (input, forget, and output gates) to learn what information to keep or discard over time, making them effective in many time series forecasting tasks.

- BiLSTM: Bidirectional LSTM processes input sequences in both the forward and backward directions using separate hidden layers. It is particularly useful for tasks where future context can enhance the prediction of current states.

- Informer: Informer [8] proposes an efficient Transformer architecture tailored for long sequence time series forecasting (LSTF), featuring three key innovations: the ProbSparse self-attention mechanism, attention distillation, and a generative decoder to overcome the computational bottlenecks of vanilla Transformers.

- Autoformer: Autoformer [9] proposes a novel decomposition-based Transformer architecture for long-term time series forecasting, embedding progressive series decomposition into model layers and introducing an auto-correlation mechanism that exploits time series periodicity to enhance dependency discovery and computational efficiency.

- Crossformer: Crossformer [52] is a Transformer-based model that explicitly captures both cross-time and cross-dimension dependencies in multivariate time series through a novel Dimension-Segment-Wise embedding and a Two-Stage Attention mechanism.

- MambaStock: MambaStock [53] is a novel stock prediction model based on the Mamba framework. It leverages the Mamba architecture to effectively utilize historical market data for forecasting future stock prices. By directly learning representations from raw price data (open, high, low, close, etc.), MambaStock aims to reduce the need for hand-crafted features or complex preprocessing steps, showing strong performance on multiple real-world datasets.

- S-Mamba: S-Mamba [15] is an architecture designed for time series forecasting (TSF), also based on the Mamba framework. It incorporates bidirectional Mamba layers to capture both intra- and inter-variate correlations and then applies a feed-forward network to model temporal dependencies. By tokenizing the time points of each variate with a linear layer and producing forecasts through a final linear mapping, S-Mamba achieves state-of-the-art performance across thirteen public TSF datasets. Its efficient design highlights the generalizability and computational benefits of Mamba-based approaches.

4.3. Evaluation Metrics

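In stock price forecasting, it is essential to evaluate the accuracy and performance of predictive models. The following metrics are frequently used, where $n$ is the number of test samples, $y_i$ is the actual closing price for sample $i$, and $\hat{y}_i$ is the corresponding predicted price:

- Root Mean Square Error (RMSE): RMSE is the square root of the mean squared error (MSE). It is easy to interpret because it is expressed in the same units as the original data, giving an intuitive measure of the average magnitude of prediction errors:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

- Mean Absolute Percentage Error (MAPE): MAPE expresses the average deviation between predicted and actual values in percentage terms. Because it measures errors relative to the scale of the data, it enables comparison across different datasets:

$$\mathrm{MAPE} = \frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{y_i - \hat{y}_i}{y_i}\right|$$

- Mean Absolute Error (MAE): MAE is the average absolute deviation between predicted values and their corresponding actual values. Unlike MSE and RMSE, which square the errors and therefore penalize large errors more heavily, MAE lets every error contribute in proportion to its absolute size, making it a straightforward measure of prediction accuracy:

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|$$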
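For readers who want to reproduce these metrics, the functions below are a minimal NumPy sketch of the standard RMSE, MAPE and MAE definitions. They are not taken from the MambaLLM code base; MAPE is returned as a percentage and is assumed to be undefined when an actual value is zero.

```python
import numpy as np


def rmse(y_true, y_pred) -> float:
    """Root mean square error, in the units of the data."""
    y_true, y_pred = np.asarray(y_true, dtype=float), np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def mae(y_true, y_pred) -> float:
    """Mean absolute error, in the units of the data."""
    y_true, y_pred = np.asarray(y_true, dtype=float), np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(y_true - y_pred)))


def mape(y_true, y_pred) -> float:
    """Mean absolute percentage error, expressed in percent."""
    y_true, y_pred = np.asarray(y_true, dtype=float), np.asarray(y_pred, dtype=float)
    if np.any(y_true == 0):
        raise ValueError("MAPE is undefined when an actual value is zero")
    return float(100.0 * np.mean(np.abs((y_true - y_pred) / y_true)))


# Toy example with four made-up closing prices and predictions.
actual = [100.0, 102.0, 101.0, 105.0]
predicted = [101.0, 101.0, 103.0, 104.0]
print(f"RMSE={rmse(actual, predicted):.4f}")  # RMSE=1.3229
print(f"MAPE={mape(actual, predicted):.4f}")  # MAPE=1.2282
print(f"MAE={mae(actual, predicted):.4f}")    # MAE=1.2500
```

In the toy example, the single error of 2 pushes RMSE above MAE, illustrating how squaring weights large errors more heavily.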

4.4. Experiment Setup

The proposed method is trained on each of the six datasets, and its performance is evaluated on the test set of the corresponding dataset. The tuned hyperparameters used in this experiment are listed in Table 1. The experiments were run on a 13th Generation Intel(R) Core(TM) i7-13700KF CPU and an NVIDIA RTX 4090 GPU with 24 GB of memory. The software environment comprises Python 3.10, PyTorch 1.12, and CUDA 12.1 on Windows 10.

Table 1.

The tuned hyperparameters.

The hyperparameters of MambaLLM were tuned to optimize prediction performance. As shown in Table 1, the Cross-Modal Fusion Layer uses three linear layers with the GELU activation function for non-linear feature transformation. The model is optimized with Adam at a learning rate of 0.0005 for stable convergence, and a dropout rate of 0.1 is applied to reduce overfitting. The input window is set to 10 days, allowing the model to capture recent temporal dynamics. A single training epoch takes approximately 0.40 s, and the maximum number of epochs is set to 100; with early stopping, the total training time is typically about 20 to 30 s.
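The windowing and stopping rules described above can be sketched as follows. This is a minimal illustration, not the authors' code: the 10-day window, the next-day closing-price target and the 100-epoch cap come from the text, whereas the patience value, the `min_delta` threshold and the helper names `make_windows`, `EarlyStopping` and `fit` are hypothetical choices made for this example.

```python
import numpy as np


def make_windows(features: np.ndarray, close: np.ndarray, window: int = 10):
    """Pair each run of `window` past days with the following day's close.

    features: (T, F) daily feature matrix; close: (T,) closing prices.
    Returns X with shape (T - window, window, F) and y with shape (T - window,).
    """
    T = features.shape[0]
    if T <= window:
        raise ValueError("need more than `window` days of data")
    X = np.stack([features[t - window:t] for t in range(window, T)])
    y = close[window:T]
    return X, y


class EarlyStopping:
    """Signal a stop once validation loss fails to improve for `patience` epochs."""

    def __init__(self, patience: int = 10, min_delta: float = 0.0):
        self.patience = patience      # assumed value; not reported in the study
        self.min_delta = min_delta    # smallest decrease that counts as an improvement
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def fit(train_one_epoch, evaluate, max_epochs: int = 100, patience: int = 10) -> int:
    """Generic loop: train, validate, stop early. Returns the number of epochs run."""
    stopper = EarlyStopping(patience=patience)
    for epoch in range(max_epochs):
        train_one_epoch()
        if stopper.step(evaluate()):
            return epoch + 1
    return max_epochs
```

At roughly 0.40 s per epoch, the reported 20 to 30 s of total training corresponds to about 50 to 75 epochs before early stopping triggers.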

4.5. Experimental Results

4.5.1. Comparison with Baselines

Table 2 shows that all conventional RNN variants (GRU, BiGRU, LSTM, and BiLSTM) perform worse than the newer Mamba-based models. MambaStock already outperforms the RNN baselines, and S-Mamba, by focusing on individual stock signals, further reduces the error across all metrics. Most notably, MambaLLM achieves the best overall results by incorporating macro-level index information alongside traditional micro-level features.

Table 2.

Comparison on the performance of the proposed method with various time series prediction networks.

We further include three Transformer-based time series models (Informer, Autoformer, and Crossformer) in the comparison. These models offer modest improvements over the RNNs on some datasets; for example, Autoformer achieves an RMSE of 5.39 on AAPL, compared with 6.29 for BiLSTM. However, their performance is less stable across datasets: Informer yields the highest RMSE on AAPL (13.63) and META (53.47), while Crossformer underperforms on MSFT (15.09) and GOOGL (6.01), suggesting limited robustness on volatile stock sequences.

Compared with the Transformer-based models, the Mamba-based models, S-Mamba in particular, generally yield better predictive accuracy. On the AMZN dataset, Autoformer achieves an RMSE of 5.26; S-Mamba lowers it to 5.00, although MambaStock (7.86) does not improve on Autoformer. Similarly, on the MSFT dataset, Autoformer and Crossformer obtain RMSEs of 9.06 and 15.09, respectively; S-Mamba reduces the error to 7.42, while MambaStock (9.12) is roughly on par with Autoformer. These results suggest that Mamba's sequential state modeling can capture stock dynamics more effectively than standard self-attention.

MambaLLM outperforms not only the RNN and Transformer-based baselines but also all Mamba-based models on every dataset. For example, on the AAPL dataset, the RMSE decreases progressively from 6.29 (BiLSTM) to 5.39 (Autoformer), then to 4.61 (S-Mamba), and finally to 2.93 (MambaLLM). On META, MambaLLM reduces the RMSE from 21.57 (MambaStock) and 12.82 (S-Mamba) to 10.41. These consistent gains demonstrate the effectiveness of integrating macro-level information via large language models.

By leveraging an LLM to incorporate broader macroeconomic factors, MambaLLM establishes a new state of the art. Its ability to unify structured stock-level time series with unstructured macroeconomic sentiment enables it to capture both fine-grained price signals and broader market trends, delivering superior performance across all metrics (RMSE, MAPE, and MAE).

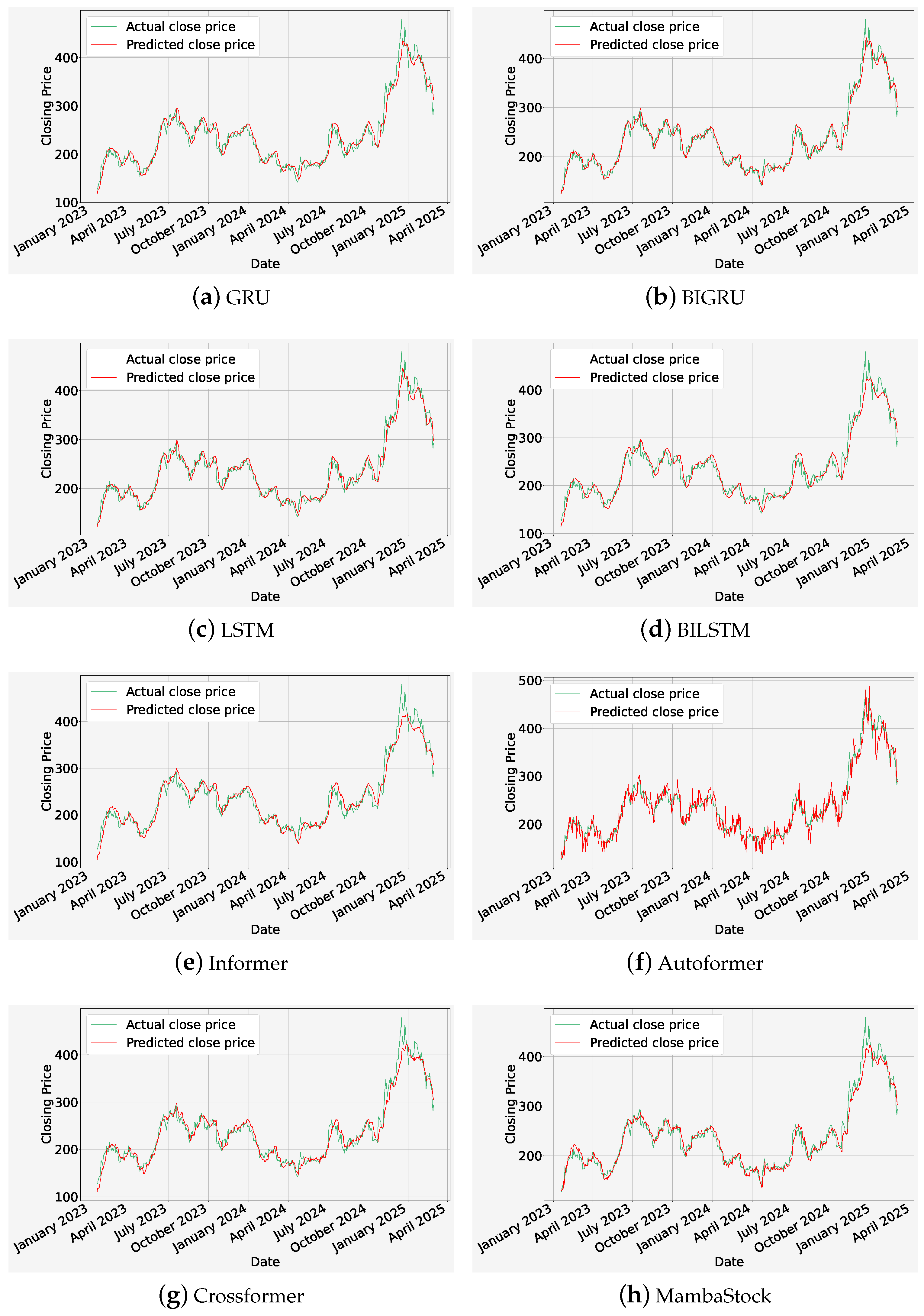

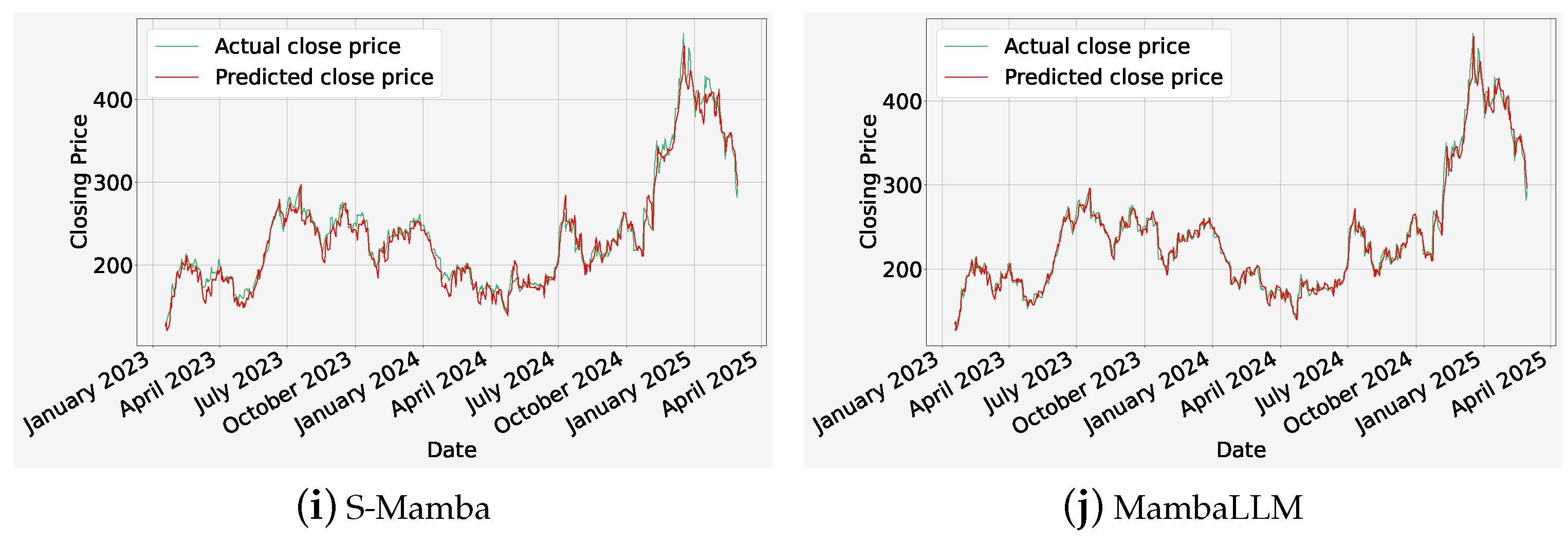

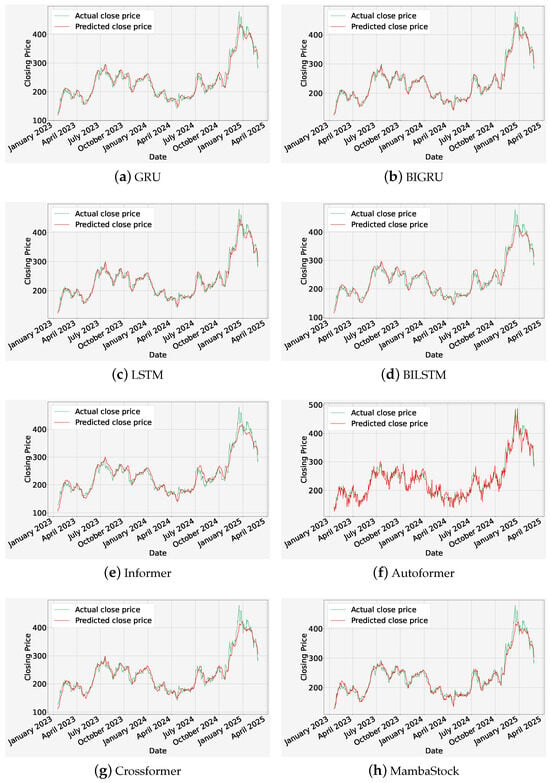

Figure 4 compares the predictions of MambaLLM with those of multiple baselines on the TSLA dataset. MambaLLM receives macro-index data from the S&P 500, which is interpreted by the LLM-based Macro-Index Analyzer, together with TSLA-specific micro-stock features (price–volume records and technical indicators), which are processed by the Mamba-based Micro-Stock Encoder, and predicts the next day's closing price. The baselines comprise four recurrent architectures (GRU, BiGRU, LSTM, and BiLSTM), three Transformer-based time series models (Informer, Autoformer, and Crossformer), and two Mamba-based variants (MambaStock and S-Mamba). Subfigures (a)–(i) show that, while these baselines track the overall trend of Tesla's stock price well (the green curve is the actual closing price), they struggle to maintain predictive accuracy during periods of high market volatility, especially in late 2024 and early 2025. In contrast, subfigure (j) shows that the prices predicted by MambaLLM (red curve) stay much closer to the observed values over the entire time span, including the most volatile segments. By integrating macro-level index signals with finer-grained TSLA data, MambaLLM markedly improves forecast accuracy. This holistic macro–micro framework allows MambaLLM to capture broad market movements without sacrificing sensitivity to individual stock behavior, resulting in more robust and consistent forecasts.

Figure 4.

Comparison of stock price prediction between MambaLLM and other baselines on the TSLA dataset.

4.5.2. Analysis of Mamba-Based Methods

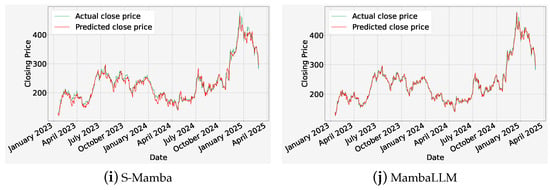

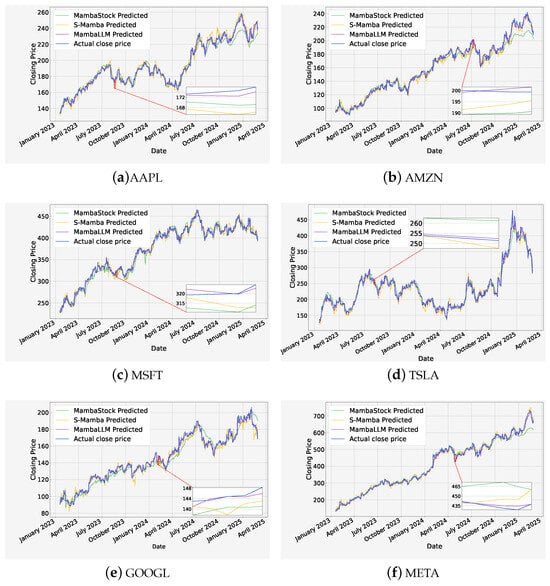

To highlight the distinction between MambaLLM and the other Mamba-based approaches (MambaStock and S-Mamba), their results are compared directly. As shown in Table 3, MambaLLM achieves the lowest error on all evaluation metrics (RMSE, MAPE, and MAE) for every tested dataset: AAPL, AMZN, MSFT, TSLA, GOOGL, and META. For instance, on the AAPL dataset, MambaLLM reduces the RMSE to 2.93, compared with 6.03 for MambaStock and 4.61 for S-Mamba. This is a relative reduction of about 51% over MambaStock and about 36% over S-Mamba, the best-performing baseline. Similar gains are observed on the other datasets, with RMSE reduced to 3.28 on AMZN, 5.66 on MSFT, and 2.89 on GOOGL. These results indicate that by integrating micro-level numerical sequences with macro-level contextual signals in its hybrid architecture, MambaLLM substantially improves predictive accuracy over previous models that rely solely on stock-level features or sector-specific correlations.
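The relative reductions for AAPL can be checked directly from the RMSE values quoted above using the usual formula $100 \times (\text{baseline} - \text{model}) / \text{baseline}$. The helper below is only an arithmetic aid; the input numbers are the AAPL RMSE values reported in Table 3.

```python
def relative_reduction(baseline: float, model: float) -> float:
    """Percentage by which `model` lowers an error metric relative to `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline error must be positive")
    return 100.0 * (baseline - model) / baseline


# AAPL RMSE values from Table 3.
mamballm, mambastock, s_mamba = 2.93, 6.03, 4.61
print(f"vs MambaStock: {relative_reduction(mambastock, mamballm):.2f}%")  # vs MambaStock: 51.41%
print(f"vs S-Mamba:    {relative_reduction(s_mamba, mamballm):.2f}%")     # vs S-Mamba:    36.44%
```

The 36.44% value against S-Mamba is the same figure cited for AAPL in the Discussion.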

Table 3.

Comparison of the performance of Mamba-based methods.

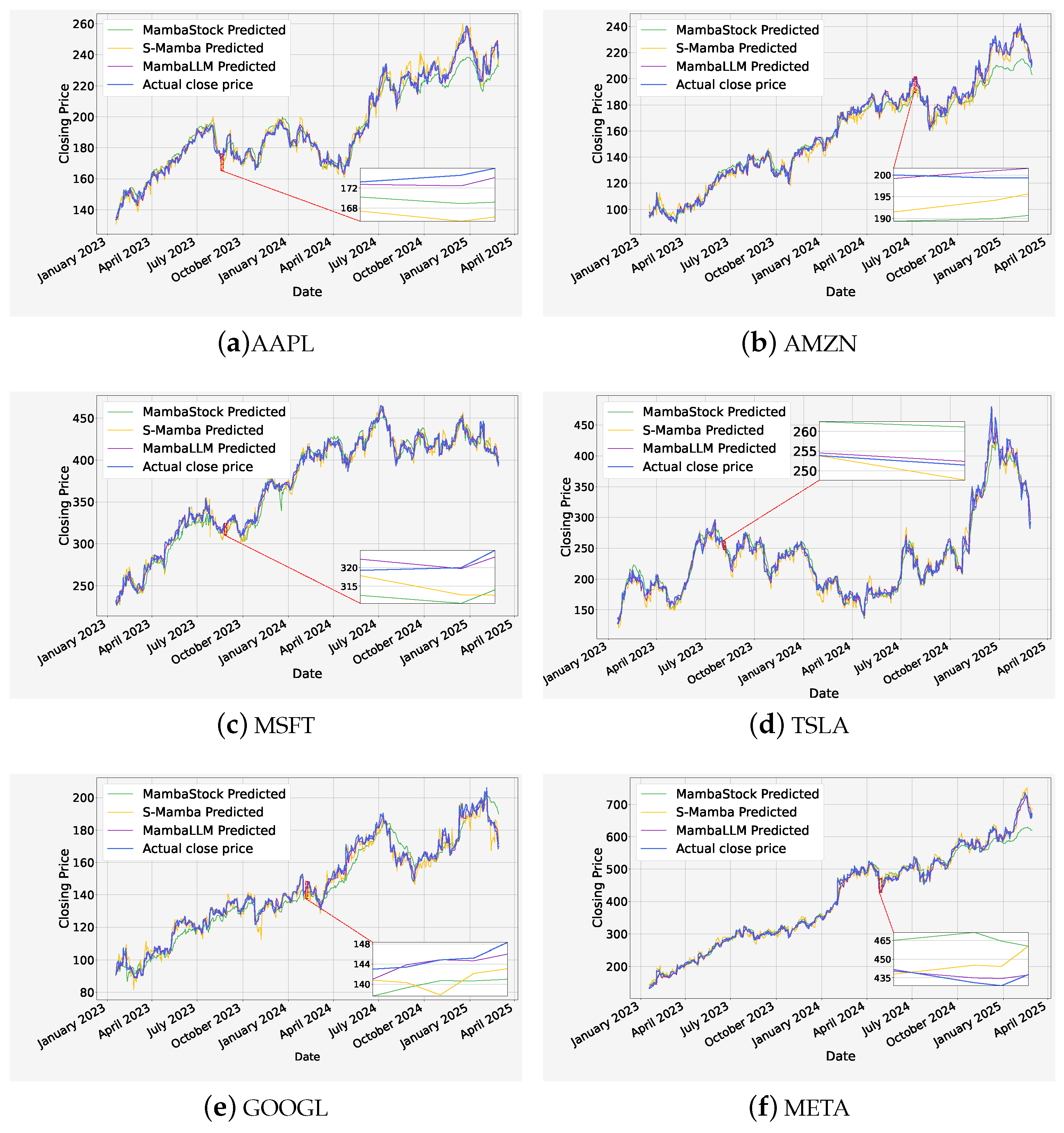

Figure 5 shows the predictions of MambaLLM and two other Mamba-based methods, MambaStock and S-Mamba, on the six datasets. In each subplot, the actual daily closing price (blue) is compared with the prices predicted by MambaStock (green), S-Mamba (yellow), and MambaLLM (purple). MambaStock, which relies mainly on micro-level stock features, often captures overall price movements but sometimes misses abrupt changes. S-Mamba aligns better with local fluctuations. MambaLLM, which combines macro-index inputs interpreted through an LLM-based pipeline with micro-scale stock inputs, consistently produces forecasts that stay closer to the actual prices, both over extended time spans and within the zoomed-in intervals of volatile activity.

Figure 5.

Comparison of stock price prediction between Mamba series methods on six datasets.

Moreover, a magnified lower inset highlights a period of heightened volatility, allowing a closer look at the models’ short-term predictive capabilities. Within these enlarged segments, we can see how MambaStock—relying mainly on individual stock signals—captures broad directional changes but occasionally shows larger deviations from the true price path during rapid market swings. S-Mamba generally narrows these deviations and offers better local accuracy. However, MambaLLM—which integrates a comprehensive range of macro-index data with fine-grained micro-stock features—consistently aligns most closely with the actual prices, including those abrupt shifts captured in the insets. This tighter tracking becomes evident when comparing the purple prediction curve to its counterparts during sudden rises or drops.

Overall, integrating comprehensive macro indicators and detailed stock-specific attributes in a single, LLM-driven framework yields consistently stronger predictive performance. Compared with both MambaStock and S-Mamba, MambaLLM’s unified macro–micro perspective offers improved robustness and accuracy across a range of equities, establishing a new state-of-the-art benchmark for stock price forecasting.

4.5.3. Analysis of MambaLLM Ablation

An ablation study on the GOOGL dataset evaluates the contribution of each module in MambaLLM. As shown in Table 4, removing the Mamba encoder causes a sharp increase in error, with RMSE reaching 29.71, which indicates that micro-level temporal modeling is essential. Excluding the LLM-based macro analyzer raises the RMSE to 4.00, underscoring the importance of macroeconomic context. Fusing the Mamba and LLM branches with attention performs better than either single-branch variant, reducing the RMSE to 3.71. The best performance is obtained when the features are fused by simple concatenation: RMSE drops to 2.89, and MAPE and MAE also reach their lowest values. These findings confirm that both the micro and macro modules are critical and that, in this setup, concatenation is a more effective fusion strategy than attention.
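Because the ablation favours plain concatenation over attention, a minimal forward pass of a concatenation-based fusion head may help picture the design. The sketch follows the settings given for the Cross-Modal Fusion Layer (three linear layers, GELU, dropout of 0.1) but uses NumPy with random, untrained weights, and it omits training and the Adam optimizer. The embedding sizes (64 for the Mamba branch; 768 for FinBERT, matching BERT-base's hidden size), the hidden width of 64 and the class name `ConcatFusionHead` are assumptions; this is not the authors' implementation.

```python
import numpy as np


def gelu(x: np.ndarray) -> np.ndarray:
    # tanh approximation of the GELU activation
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))


def init_linear(d_in: int, d_out: int, rng: np.random.Generator):
    # Random weights scaled by 1/sqrt(d_in); zero bias.
    w = rng.normal(0.0, 1.0 / np.sqrt(d_in), size=(d_in, d_out))
    return w, np.zeros(d_out)


class ConcatFusionHead:
    """Concatenate micro (Mamba) and macro (FinBERT) embeddings, then apply a 3-layer MLP."""

    def __init__(self, d_micro: int, d_macro: int, d_hidden: int = 64,
                 p_drop: float = 0.1, seed: int = 0):
        self.rng = np.random.default_rng(seed)
        self.p_drop = p_drop
        d_in = d_micro + d_macro
        self.layers = [
            init_linear(d_in, d_hidden, self.rng),
            init_linear(d_hidden, d_hidden, self.rng),
            init_linear(d_hidden, 1, self.rng),  # scalar output: next-day close
        ]

    def _dropout(self, x: np.ndarray, training: bool) -> np.ndarray:
        if not training or self.p_drop == 0.0:
            return x
        keep = self.rng.random(x.shape) >= self.p_drop
        return x * keep / (1.0 - self.p_drop)  # inverted dropout keeps the expected scale

    def __call__(self, h_micro: np.ndarray, h_macro: np.ndarray,
                 training: bool = False) -> np.ndarray:
        x = np.concatenate([h_micro, h_macro], axis=-1)  # (batch, d_micro + d_macro)
        for i, (w, b) in enumerate(self.layers):
            x = x @ w + b
            if i < len(self.layers) - 1:  # no activation or dropout on the output layer
                x = self._dropout(gelu(x), training)
        return x.squeeze(-1)  # (batch,)
```

An attention-based variant would replace the `np.concatenate` step with a learned weighting of the two embeddings; the design choice here is simply that the MLP sees both branches side by side and learns their interaction itself.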

Table 4.

Ablation results of MambaLLM on the GOOGL dataset.

4.5.4. Analysis of Computational Efficiency

The computational efficiency of MambaLLM was evaluated on the GOOGL dataset. As shown in Table 5, MambaLLM achieved the lowest RMSE (2.89), indicating superior prediction accuracy. Its inference time of 0.52 s is competitive with the other Mamba-based models and faster than Informer. Its memory usage during inference, 1093 MB, is slightly higher than that of MambaStock and S-Mamba but substantially lower than that of the LSTM-based models. These results suggest that MambaLLM strikes a favorable balance between accuracy and computational cost. Although the LLM-based macro branch adds complexity, we argue that the approach remains feasible for near-real-time applications such as daily closing price prediction, which operate on daily or hourly cycles rather than requiring millisecond-level latency. The framework is therefore practical for trading and decision-support systems that operate at these horizons.

Table 5.

Performance comparison of time series prediction networks: RMSE, runtime, and memory usage on the GOOGL dataset.

5. Discussion

The experimental results highlight the potential of integrating macro-index and micro-stock data in a unified LLM-based framework for stock price prediction. MambaLLM's advantage over traditional RNNs and the intermediate Mamba-based variants points to three important insights. First, traditional RNN architectures (e.g., LSTM and GRU), while effective at modeling temporal dependencies, struggle to coordinate heterogeneous data sources (e.g., macroeconomic trends and fine-grained stock-specific signals) because of their limited ability to parallelize and fuse context-aware features. In contrast, the selective state-space mechanism of the Mamba architecture efficiently models long-range dependencies, while the LLM component provides a scalable pathway for encoding and coordinating multi-scale financial data. This dual capability enables MambaLLM to separate market-wide macroeconomic effects (e.g., S&P 500 index volatility) from localized stock dynamics, thereby reducing forecasting errors in both stable and volatile market environments.
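The selective state-space mechanism mentioned above can be illustrated with its core recurrence in the simplest possible setting: a single input channel, a diagonal state matrix $A$, and a step size $\Delta_t$ and matrices $B_t$, $C_t$ that are computed from the current input. The sketch below is a readable sequential loop written under those assumptions. It is not the hardware-aware parallel scan of the official Mamba implementation, nor the study's Micro-Stock Encoder, and all weights and the function name `selective_scan` are illustrative.

```python
import numpy as np


def softplus(z):
    # Numerically stable log(1 + exp(z)).
    return np.log1p(np.exp(-np.abs(z))) + np.maximum(z, 0.0)


def selective_scan(x, A, w_delta, b_delta, W_B, W_C):
    """Run a simplified selective state-space recurrence over one input channel.

    x        : (T,) input sequence
    A        : (N,) diagonal of the state matrix (negative entries for stability)
    w_delta  : scalar weight, b_delta: scalar bias for the input-dependent step size
    W_B, W_C : (N,) projections producing the input-dependent B_t and C_t
    Returns y with shape (T,).
    """
    x = np.asarray(x, dtype=float)
    h = np.zeros_like(A, dtype=float)  # hidden state, one entry per state dimension
    y = np.empty_like(x)
    for t, x_t in enumerate(x):
        delta = softplus(w_delta * x_t + b_delta)  # step size depends on the input
        B_t = W_B * x_t                            # so does the input matrix...
        C_t = W_C * x_t                            # ...and the output matrix
        A_bar = np.exp(delta * A)                  # zero-order-hold discretisation of A
        h = A_bar * h + delta * B_t * x_t          # simplified (Euler) discretisation of B
        y[t] = C_t @ h
    return y
```

Because each step does a fixed amount of work, the scan is linear in sequence length, and the input-dependent $\Delta_t$ lets the model decide per step how much past state to keep (small $\Delta_t$) or overwrite (large $\Delta_t$).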

Second, the comparison among MambaStock, S-Mamba, and MambaLLM supports the need for holistic macro–micro integration. While MambaStock and S-Mamba achieve competitive performance using micro-level features, their inability to link stock movements to broader market trends results in lower accuracy during systemic shifts, as evidenced by their higher RMSE on volatile datasets such as TSLA and META. MambaLLM, by contrast, combines macro-index and micro-stock signals through learned fusion layers, allowing the model to discern when macroeconomic factors dominate and when stock-specific technical indicators dominate. Compared with the second-best method, S-Mamba, MambaLLM reduces the RMSE by 36.44% for AAPL and by 37.58% for GOOGL.

Third, the LLM's role in parsing unstructured macroeconomic information complements Mamba's structured time series modeling, providing a robust solution to the “heterogeneous data gap” in financial forecasting. As shown in Figure 4, MambaLLM's forecasts continue to closely match ground-truth prices even during the volatile period from late 2024 to early 2025, when the baseline models exhibit significant lags or overshoots. This suggests that the LLM-derived embeddings capture relationships between macro-index movements and individual stock behavior that conventional models miss. Furthermore, the enlarged insets in Figure 5 show that MambaLLM refines its predictions over both long spans and short intervals, a capability that matters for short-horizon trading strategies.

Despite these advantages, the MambaLLM framework remains computationally intensive, largely because it relies on the distilled DeepSeek R1 7B model. A model of this size poses practical challenges for deployment in resource-constrained environments and may hinder accessibility for smaller institutions or real-time systems; this is a limitation of the present work. Future work could adopt lighter or more efficient LLM architectures to reduce computational cost and improve deployment feasibility. In addition, our experiments cover only major U.S. technology stocks, and the effectiveness of MambaLLM on non-U.S. or non-technology stocks has not yet been verified. Future studies could evaluate the model in other markets, such as emerging economies and traditional industries. These markets typically experience higher macroeconomic volatility and more diverse financial shocks, offering a rigorous test of the framework's adaptability.

6. Conclusions

In this paper, we propose MambaLLM, a framework that combines macro-index data and micro-stock features in an LLM-based architecture to improve stock price prediction. Experiments on six major U.S. stocks (AAPL, AMZN, MSFT, TSLA, GOOGL, and META) show that MambaLLM outperforms traditional RNN variants, Transformer-based models, and existing Mamba-based methods, with a 28.50% reduction in RMSE compared with the second-best model. This improvement highlights the framework's ability to capture cross-scale market signals and adapt to sudden price fluctuations, as evidenced by its performance on highly volatile stocks such as GOOGL.

The three main innovations of MambaLLM are as follows:

1. A Micro-Stock Encoder is introduced, incorporating tri-directional Mamba modeling to effectively extract multi-perspective representations from fine-grained financial time series.

2. To integrate macro-financial context, we propose a novel Macro-Index Analyzer designed specifically for this work. Guided by carefully crafted financial prompts, the distilled DeepSeek R1 7B model generates daily textual market summaries, which are then encoded by FinBERT to extract domain-specific financial embeddings.

3. By unifying broad market data (such as stock indices) with individual stock signals (e.g., price–volume information and technical indicators), MambaLLM creates a two-tier view of the market. Systemic changes are tracked through macro indices, while stock-level clues help fine-tune forecasts for specific stocks.
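The financial prompts used by the Macro-Index Analyzer are not reproduced here. As a purely hypothetical illustration of the first step of that pipeline, the function below turns recent S&P 500 daily bars into a text prompt with day-over-day changes. The template wording, the chosen fields and the names `IndexBar`, `PROMPT_TEMPLATE` and `build_macro_prompt` are assumptions. In the described pipeline, such a prompt would be sent to the distilled DeepSeek R1 7B model and its summary encoded by FinBERT; neither step is shown.

```python
from dataclasses import dataclass


@dataclass
class IndexBar:
    """One trading day of index data (hypothetical minimal record)."""
    date: str
    close: float
    volume: float


# Hypothetical template; not the prompt used in the study.
PROMPT_TEMPLATE = (
    "You are a financial analyst. Below are recent daily data for the {index} index.\n"
    "{table}\n"
    "Summarise the prevailing market trend, volatility and risk sentiment "
    "in no more than three sentences."
)


def build_macro_prompt(bars: list[IndexBar], index_name: str = "S&P 500") -> str:
    """Render recent index bars, with day-over-day percentage change, into an LLM prompt."""
    if len(bars) < 2:
        raise ValueError("need at least two bars to compute a daily change")
    rows = []
    for prev, cur in zip(bars, bars[1:]):
        change = 100.0 * (cur.close - prev.close) / prev.close
        rows.append(
            f"{cur.date}: close={cur.close:.2f}, change={change:+.2f}%, volume={cur.volume:,.0f}"
        )
    return PROMPT_TEMPLATE.format(index=index_name, table="\n".join(rows))
```

Precomputing the changes in code, rather than asking the LLM to do arithmetic on raw prices, is one way to keep the generated summaries anchored to the actual numbers.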

This macro-micro integration allows MambaLLM to excel in volatile environments where purely micro-level or partially macro-augmented models often struggle. Beyond demonstrating the practical value of macro–micro fusion for financial forecasting, our results also reaffirm the broader potential of LLM architectures in algorithmic trading and risk management applications. Future directions for MambaLLM include expanding its data sources (e.g., integrating real-time news sentiment or alternative macroeconomic indicators) and optimizing computational requirements for production-level deployment. With its strong empirical performance and versatile structure, MambaLLM sets a new standard for data-driven stock market analytics.

Author Contributions

Conceptualization, methodology, data curation, writing—original draft preparation, J.Y. and Y.H.; software, validation, visualization, investigation, J.Y.; supervision, writing—review and editing, project administration, Y.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Zhaoqing University Natural Science Fund (File no. 2024BSZ018 and qn202529).

Data Availability Statement

Our stock data are available for download at http://finance.yahoo.com (accessed on 1 March 2025). The MambaLLM model is available at https://github.com/ttys0001/MambaLLM (accessed on 5 May 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| SSM | State-Space Model |
| GRU | Gated Recurrent Unit |
| BiGRU | Bidirectional Gated Recurrent Unit |
| LSTM | Long Short-Term Memory |
| BiLSTM | Bidirectional Long Short-Term Memory |
| LLM | Large Language Model |
| SMA | Simple Moving Average |
| EMA | Exponential Moving Average |
| MACD | Moving Average Convergence Divergence |
| RSI | Relative Strength Index |

References

1. Shumway, R.H.; Stoffer, D.S. ARIMA models. In Time Series Analysis and Its Applications: With R Examples; Springer: New York, NY, USA, 2017; pp. 75–163.

2. Franses, P.H.; Van Dijk, D. Forecasting stock market volatility using (non-linear) Garch models. J. Forecast. 1996, 15, 229–235.

3. Chong, C.W.; Ahmad, M.I.; Abdullah, M.Y. Performance of GARCH models in forecasting stock market volatility. J. Forecast. 1999, 18, 333–343.

4. Chen, K.; Zhou, Y.; Dai, F. A LSTM-based method for stock returns prediction: A case study of China stock market. In Proceedings of the 2015 IEEE International Conference on Big Data (Big Data), Santa Clara, CA, USA, 29 October–1 November 2015; pp. 2823–2824.

5. Nelson, D.M.; Pereira, A.C.; De Oliveira, R.A. Stock market’s price movement prediction with LSTM neural networks. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 1419–1426.

6. Baek, Y.; Kim, H.Y. ModAugNet: A new forecasting framework for stock market index value with an overfitting prevention LSTM module and a prediction LSTM module. Expert Syst. Appl. 2018, 113, 457–480.

7. Chen, X.; Ma, X.; Wang, H.; Li, X.; Zhang, C. A hierarchical attention network for stock prediction based on attentive multi-view news learning. Neurocomputing 2022, 504, 1–15.

8. Zhou, H.; Zhang, S.; Peng, J.; Zhang, S.; Li, J.; Xiong, H.; Zhang, W. Informer: Beyond efficient transformer for long sequence time-series forecasting. Proc. AAAI Conf. Artif. Intell. 2021, 35, 11106–11115.

9. Wu, H.; Xu, J.; Wang, J.; Long, M. Autoformer: Decomposition transformers with auto-correlation for long-term series forecasting. Adv. Neural Inf. Process. Syst. 2021, 34, 22419–22430.

10. Liu, S.; Yu, H.; Liao, C.; Li, J.; Lin, W.; Liu, A.X.; Dustdar, S. Pyraformer: Low-complexity pyramidal attention for long-range time series modeling and forecasting. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 4 May 2021.

11. Woo, G.; Liu, C.; Sahoo, D.; Kumar, A.; Hoi, S. Etsformer: Exponential smoothing transformers for time-series forecasting. arXiv 2022, arXiv:2202.01381.

12. Gu, A.; Johnson, I.; Goel, K.; Saab, K.; Dao, T.; Rudra, A.; Ré, C. Combining recurrent, convolutional, and continuous-time models with linear state space layers. Adv. Neural Inf. Process. Syst. 2021, 34, 572–585.

13. Gu, A.; Goel, K.; Ré, C. Efficiently modeling long sequences with structured state spaces. arXiv 2021, arXiv:2111.00396.

14. Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

15. Wang, Z.; Kong, F.; Feng, S.; Wang, M.; Yang, X.; Zhao, H.; Wang, D.; Zhang, Y. Is Mamba effective for time series forecasting? Neurocomputing 2025, 619, 129178.

16. Hoseinzade, E.; Haratizadeh, S. CNNpred: CNN-based stock market prediction using a diverse set of variables. Expert Syst. Appl. 2019, 129, 273–285.

17. Mehtab, S.; Sen, J. Stock price prediction using convolutional neural networks on a multivariate timeseries. arXiv 2020, arXiv:2001.09769.

18. Lu, W.; Li, J.; Wang, J.; Qin, L. A CNN-BiLSTM-AM method for stock price prediction. Neural Comput. Appl. 2021, 33, 4741–4753.

19. Du, L.; Gu, Z.; Wang, Y.; Wang, L.; Jia, Y. A few-shot class-incremental learning method for network intrusion detection. IEEE Trans. Netw. Serv. Manag. 2023, 21, 2389–2401.

20. Jia, Y.; Gu, Z.; Du, L.; Long, Y.; Wang, Y.; Li, J.; Zhang, Y. Artificial intelligence enabled cyber security defense for smart cities: A novel attack detection framework based on the MDATA model. Knowl.-Based Syst. 2023, 276, 110781.

21. Jiang, W. Applications of deep learning in stock market prediction: Recent progress. Expert Syst. Appl. 2021, 184, 115537.

22. Gong, J.; Eldardiry, H. Multi-stage Hybrid Attentive Networks for Knowledge-Driven Stock Movement Prediction. In Proceedings of the Neural Information Processing: 28th International Conference, ICONIP 2021, Sanur, Bali, Indonesia, 8–12 December 2021; Part IV 28, pp. 501–513.

23. Patro, B.N.; Agneeswaran, V.S. Mamba-360: Survey of state space models as transformer alternative for long sequence modelling: Methods, applications, and challenges. arXiv 2024, arXiv:2404.16112.

24. Liang, A.; Jiang, X.; Sun, Y.; Lu, C. Bi-Mamba4TS: Bidirectional Mamba for Time Series Forecasting. arXiv 2024, arXiv:2404.15772.

25. Cai, X.; Zhu, Y.; Wang, X.; Yao, Y. MambaTS: Improved Selective State Space Models for Long-term Time Series Forecasting. arXiv 2024, arXiv:2405.16440.

26. Xu, X.; Liang, Y.; Huang, B.; Lan, Z.; Shu, K. Integrating Mamba and Transformer for Long-Short Range Time Series Forecasting. arXiv 2024, arXiv:2404.14757.

27. Ma, S.; Kang, Y.; Bai, P.; Zhao, Y.B. FMamba: Mamba based on Fast-attention for Multivariate Time-series Forecasting. arXiv 2024, arXiv:2407.14814.

28. Yang, J.; Zhang, M.; Fang, R.; Zhang, W.; Zhou, J. Separating the predictable part of returns with CNN-GRU-attention from inputs to predict stock returns. Appl. Soft Comput. 2024, 165, 112116.

29. Wang, J.; Cheng, Q.; Dong, Y. An XGBoost-based multivariate deep learning framework for stock index futures price forecasting. Kybernetes 2023, 52, 4158–4177.

30. Zhou, T.; Ma, Z.; Wen, Q.; Wang, X.; Sun, L.; Jin, R. Fedformer: Frequency enhanced decomposed transformer for long-term series forecasting. In Proceedings of the International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; pp. 27268–27286.

31. Mao, Z.; Wu, C. Stock price index prediction based on SSA-BiGRU-GSCV model from the perspective of long memory. Kybernetes 2023, 5, 5905–5931.

32. Zeng, A.; Chen, M.; Zhang, L.; Xu, Q. Are transformers effective for time series forecasting? Proc. AAAI Conf. Artif. Intell. 2023, 37, 11121–11128.

33. Lin, S.; Lin, W.; Wu, W.; Zhao, F.; Mo, R.; Zhang, H. Segrnn: Segment recurrent neural network for long-term time series forecasting. arXiv 2023, arXiv:2308.11200.

34. Wang, S.; Wu, H.; Shi, X.; Hu, T.; Luo, H.; Ma, L.; Zhang, J.Y.; Zhou, J. TimeMixer: Decomposable Multiscale Mixing for Time Series Forecasting. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024.

35. Jiang, Y.; Pan, Z.; Zhang, X.; Garg, S.; Schneider, A.; Nevmyvaka, Y.; Song, D. Empowering time series analysis with large language models: A survey. arXiv 2024, arXiv:2402.03182.

36. Zhang, Z.; Sun, Y.; Wang, Z.; Nie, Y.; Ma, X.; Sun, P.; Li, R. Large language models for mobility in transportation systems: A survey on forecasting tasks. arXiv 2024, arXiv:2405.02357.

37. Jin, M.; Zhang, Y.; Chen, W.; Zhang, K.; Liang, Y.; Yang, B.; Wang, J.; Pan, S.; Wen, Q. Position: What Can Large Language Models Tell Us about Time Series Analysis. In Proceedings of the International Conference on Machine Learning, Vienna, Austria, 21–27 July 2024.

38. Zhou, T.; Niu, P.; Wang, X.; Sun, L.; Jin, R. One Fits All: Power General Time Series Analysis by Pretrained LM. In Proceedings of the Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023.

39. Gruver, N.; Finzi, M.; Qiu, S.; Wilson, A.G. Large Language Models Are Zero-Shot Time Series Forecasters. arXiv 2023, arXiv:2310.07820.

40. Jin, M.; Wang, S.; Ma, L.; Chu, Z.; Zhang, J.Y.; Shi, X.L.; Chen, P.Y.; Liang, Y.; Li, Y.F.; Pan, S.; et al. Time-LLM: Time Series Forecasting by Reprogramming Large Language Models. arXiv 2023, arXiv:2310.01728.

41. Jin, M.; Wen, Q.; Liang, Y.; Zhang, C.; Xue, S.; Wang, X.; Zhang, J.Y.; Wang, Y.; Chen, H.; Li, X.; et al. Large Models for Time Series and Spatio-Temporal Data: A Survey and Outlook. arXiv 2023, arXiv:2310.10196.

42. Liang, Y.; Wen, H.; Nie, Y.; Jiang, Y.; Jin, M.; Song, D.; Pan, S.; Wen, Q. Foundation Models for Time Series Analysis: A Tutorial and Survey. arXiv 2024, arXiv:2403.14735.

43. Yu, X.; Chen, Z.; Ling, Y.; Dong, S.; Liu, Z.; Lu, Y. Temporal Data Meets LLM - Explainable Financial Time Series Forecasting. arXiv 2023, arXiv:2306.11025.

44. Chen, Z.; Zheng, L.; Lu, C.; Yuan, J.; Zhu, D. ChatGPT Informed Graph Neural Network for Stock Movement Prediction. arXiv 2023, arXiv:2306.03763.

45. Halder, S. FinBERT-LSTM: Deep Learning based stock price prediction using News Sentiment Analysis. arXiv 2022, arXiv:2211.07392.

46. Deng, Y.; He, X.; Hu, J.; Yiu, S.M. Enhancing Few-Shot Stock Trend Prediction with Large Language Models. arXiv 2024, arXiv:2407.09003.

47. Zhou, L.; Zhang, Y.; Yu, J.; Wang, G.; Liu, Z.; Yongchareon, S.; Wang, N. LLM-Augmented Linear Transformer–CNN for Enhanced Stock Price Prediction. Mathematics 2025, 13, 487.

48. Wimmer, C.; Rekabsaz, N. Leveraging Vision-Language Models for Granular Market Change Prediction. arXiv 2023, arXiv:2301.10166.

49. Cao, Y.; Chen, Z.; Pei, Q.; Dimino, F.; Ausiello, L.; Kumar, P.; Subbalakshmi, K.; Ndiaye, P.M. RiskLabs: Predicting Financial Risk Using Large Language Model Based on Multi-Sources Data. arXiv 2024, arXiv:2404.07452.

50. Xie, Q.; Han, W.; Lai, Y.; Peng, M.; Huang, J. The Wall Street Neophyte: A Zero-Shot Analysis of ChatGPT Over MultiModal Stock Movement Prediction Challenges. arXiv 2023, arXiv:2304.05351.

51. Lopez-Lira, A.; Tang, Y. Can ChatGPT Forecast Stock Price Movements? Return Predictability and Large Language Models. arXiv 2023, arXiv:2304.07619.

52. Zhang, Y.; Yan, J. Crossformer: Transformer utilizing cross-dimension dependency for multivariate time series forecasting. In Proceedings of the Eleventh International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023.

53. Shi, Z. MambaStock: Selective state space model for stock prediction. arXiv 2024, arXiv:2402.18959.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).