Abstract

The primary objective of this article is to enhance the convergence rate of the extragradient method through the careful selection of inertial parameters and the design of a self-adaptive stepsize scheme. We propose an improved version of the extragradient method for approximating a common solution to pseudomonotone equilibrium and fixed-point problems that involve an infinite family of demimetric mappings in real Hilbert spaces. We establish that the iterative sequences generated by our proposed algorithms converge strongly under suitable conditions. These results substantiate the effectiveness of our approach in achieving convergence, marking a significant advancement in the extragradient method. Furthermore, we present several numerical tests to illustrate the practical efficiency of the proposed method, comparing these results with those from established methods to demonstrate the improved convergence rates and solution accuracy achieved through our approach.

Keywords:

equilibrium problems; pseudomonotone bifunction; extragradient method; strongly pseudomonotone bifunction; strong convergence

MSC:

26A33; 34B10; 34B15

1. Introduction

Let $\mathcal{H}$ be a real Hilbert space equipped with the inner product $\langle \cdot, \cdot \rangle$ and the corresponding norm $\|\cdot\|$. Denote by $\mathcal{C}$ a nonempty, closed, and convex subset of $\mathcal{H}$. This paper focuses on identifying a common solution to both equilibrium and fixed-point problems within the framework of real Hilbert spaces. These problems have broad applications in diverse mathematical models, where equilibrium or fixed-point conditions frequently arise. As highlighted in numerous research works [1,2,3,4,5,6], these concepts are particularly relevant in practical fields such as signal processing, composite minimization, optimal control, and image restoration. Consider a bifunction $f: \mathcal{H} \times \mathcal{H} \to \mathbb{R}$ satisfying $f(y, y) = 0$ for all $y \in \mathcal{C}$. The objective of the equilibrium problem (EP) is to find a point $u^* \in \mathcal{C}$ such that

$$f(u^*, y) \ge 0 \quad \text{for all } y \in \mathcal{C}. \tag{1}$$

Equilibrium problems (EPs) provide a comprehensive mathematical framework encompassing a range of problems, such as fixed-point problems, variational inequalities, optimization problems, saddle-point problems, complementarity problems, and Nash equilibria in non-cooperative games. The main goal of EPs is to determine equilibrium conditions that ensure the stability of a system. Solutions to EPs are particularly important in fields like economics and game theory, as demonstrated in Nash’s seminal work [7], where equilibrium is a central concept. Formally represented by (1), the equilibrium problem has garnered considerable attention and has diverse applications, including poroelasticity in petroleum engineering [8], economics [9], image reconstruction [10], telecommunications networks, public infrastructure [11], and Nash equilibria in game theory [12]. Despite their broad applicability, the formal study of EPs only began after the establishment of Ky Fan’s inequality [13]. Following this, Nikaido recognized Nash equilibria as solutions to EPs [14], while Gwinner clarified the relationships among EPs, optimization, and variational inequalities [15]. However, these early studies did not initially identify EPs as a distinct class of mathematical problems.

The primary method for addressing the problem outlined in (1), based on the auxiliary problem principle, was first introduced in [16]. This approach, commonly known as the proximal-like method, established a foundation for subsequent research into its convergence properties, as investigated in [17]. The analysis in [17] extends to cases where the equilibrium bifunction is pseudomonotone and satisfies Lipschitz-type conditions. The extragradient method, an iterative approach for variational inequality and equilibrium problems, extends the classical gradient method by adding an extra step to improve convergence. As presented in [17], this method generates sequences $\{x_k\}$ and $\{y_k\}$ as follows:

1. Initialization: Start from any $x_0$ in the feasible set $\mathcal{C}$.

2. Iterative steps: Compute $y_k$ and $x_{k+1}$ using

$$\begin{cases} y_k = \arg\min_{y \in \mathcal{C}} \left\{ \lambda f(x_k, y) + \tfrac{1}{2}\|x_k - y\|^2 \right\}, \\ x_{k+1} = \arg\min_{y \in \mathcal{C}} \left\{ \lambda f(y_k, y) + \tfrac{1}{2}\|x_k - y\|^2 \right\}, \end{cases} \tag{2}$$

where $\lambda$ is a positive parameter, and $\|\cdot\|$ is the norm in $\mathcal{H}$.

3. Stopping criterion: The process stops when $y_k = x_k$, with $x_k$ as the solution to (1).

The method in (2) requires careful selection of the parameter $\lambda$, which must satisfy $0 < \lambda < \min\left\{\frac{1}{2c_1}, \frac{1}{2c_2}\right\}$, where $c_1$ and $c_2$ are the constants in the Lipschitz-type condition. The extragradient methods, as introduced by Korpelevich in [18] and further developed in [16,17], are effective in solving equilibrium problems and are widely used in optimization and variational analysis.
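For the special case $f(x, y) = \langle F(x), y - x \rangle$, each strongly convex subproblem in (2) reduces to a projection, since $\arg\min_{y \in \mathcal{C}}\{\lambda f(x, y) + \tfrac{1}{2}\|x - y\|^2\} = P_{\mathcal{C}}(x - \lambda F(x))$. The following is a minimal sketch under that assumption, not the implementation used in [17]; the operator `F`, the box constraint, and the step size `lam` are hypothetical choices made only for illustration.

```python
import math

def F(x):
    # Hypothetical affine operator F(x) = M x + q with M = [[2, 1], [-1, 2]]
    # and q = (-1, -1); it is strongly monotone and Lipschitz with L = sqrt(5).
    return [2.0 * x[0] + x[1] - 1.0, -x[0] + 2.0 * x[1] - 1.0]

def project_box(x, lo=0.0, hi=1.0):
    # Euclidean projection onto the box [lo, hi]^n (assumed feasible set).
    return [min(max(v, lo), hi) for v in x]

def distance(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def extragradient(x0, lam=0.3, tol=1e-10, max_iter=1000):
    x = list(x0)
    for k in range(1, max_iter + 1):
        Fx = F(x)
        # First subproblem of (2): y_k = P_C(x_k - lam * F(x_k)).
        y = project_box([xi - lam * g for xi, g in zip(x, Fx)])
        # Stopping criterion y_k = x_k, relaxed to a tolerance.
        if distance(x, y) <= tol:
            return x, k
        Fy = F(y)
        # Second subproblem of (2): x_{k+1} = P_C(x_k - lam * F(y_k)).
        x = project_box([xi - lam * g for xi, g in zip(x, Fy)])
    return x, max_iter

solution, iterations = extragradient([1.0, 1.0])
```

For this operator the Lipschitz-type constants can be taken as $c_1 = c_2 = \sqrt{5}/2$, so `lam = 0.3` respects the bound $\lambda < 1/(2c_1) \approx 0.447$, and the iterates approach the point $(0.2, 0.6)$, where $F$ vanishes inside the box.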

We also consider the fixed-point problem (FPP) associated with a mapping $T: \mathcal{C} \to \mathcal{C}$, defined as finding $u^* \in \mathcal{C}$ such that $T(u^*) = u^*$. The set of fixed points, $\operatorname{Fix}(T)$, consists of solutions to the FPP. The goal is to provide a unified solution for both the EPs and FPPs in real Hilbert spaces, with applications in areas like signal processing, network resource management, and image restoration, where constraints are often modeled as fixed-point problems. The equilibrium problem with a fixed point constraint (EPFPP) has gained significant attention, leading to various proposed solution methods, as shown in studies such as [19,20,21,22,23,24,25,26,27,28,29]. This paper addresses the following problem: find $u^* \in \mathcal{C}$ such that

$$f(u^*, y) \ge 0 \ \text{ for all } y \in \mathcal{C} \quad \text{and} \quad T(u^*) = u^*. \tag{3}$$

The method presented in (2) serves as a foundational reference in the field. Building on the work of Tran et al. [17], recent extragradient-type methods have been developed to address equilibrium problems in infinite-dimensional Hilbert spaces, with significant contributions documented in studies such as [30,31,32,33]. The approach outlined in (2) requires solving a strongly convex programming problem on the set $\mathcal{C}$ twice per iteration, which can substantially increase computational costs, particularly when the set $\mathcal{C}$ has a complicated structure. To overcome this challenge, adaptations of the subgradient extragradient method, as proposed by Censor, Gibali, and Reich [34,35], have been introduced for equilibrium problems in infinite-dimensional Hilbert spaces. Notable improvements in [30,33,36] replace the second problem on $\mathcal{C}$ with an optimization over a half-space at each iteration, thereby improving computational efficiency compared to traditional methods. In addition, the implementation of the method in (2) requires prior knowledge of the Lipschitz-type constants associated with the bifunction $f$, which can be difficult to obtain in practical applications. Self-adaptive methods are designed to operate without this information and are therefore advantageous when the constants are unknown.

Motivated by the methods presented in [37,38], we propose a novel inertial algorithm that is both simple and efficient for approximating solutions to equilibrium and fixed-point problems, utilizing a subgradient extragradient approach. The main contributions of this study are summarized as follows:

- (a) The proposed algorithm incorporates inertial terms, which introduce momentum-like effects and accelerate the convergence of the iterative sequence.

- (b) The method employs a monotonic stepsize scheme that adjusts the stepsize throughout the iterative process. This adaptive strategy eliminates the need for prior knowledge of the Lipschitz constants of the bifunction, which are often difficult to determine in practical applications.

- (c) We prove that the sequence generated by the proposed method converges strongly to a common fixed point of an infinite family of demimetric mappings that is also a solution of an equilibrium problem with a pseudomonotone bifunction. This covers a broad class of equilibrium problems arising in various mathematical models.

- (d) To evaluate the proposed approach, we conduct a series of numerical experiments. These experiments solve several instances of equilibrium and fixed-point problems with our algorithm and compare its performance, in terms of iteration count and execution time, with other methods available in the literature.

2. Preliminaries

Let $\mathcal{C}$ be a nonempty, closed, and convex subset of the real Hilbert space $\mathcal{H}$. We denote weak convergence by $\rightharpoonup$ and strong convergence by $\to$. For any $x, y \in \mathcal{H}$ and scalar $\alpha \in \mathbb{R}$, the following properties hold [39]:

- (i)

- (ii)

- (iii)

Definition 1

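([39]). Let $\mathcal{C}$ be a subset of a real Hilbert space $\mathcal{H}$, and let $\varkappa: \mathcal{C} \to \mathbb{R}$ be a convex function.

- (i) The normal cone at $x \in \mathcal{C}$ is

$$N_{\mathcal{C}}(x) = \{ z \in \mathcal{H} : \langle z, y - x \rangle \le 0 \ \text{for all } y \in \mathcal{C} \}.$$

- (ii) The subdifferential of ϰ at $x \in \mathcal{C}$ is

$$\partial \varkappa(x) = \{ z \in \mathcal{H} : \varkappa(y) - \varkappa(x) \ge \langle z, y - x \rangle \ \text{for all } y \in \mathcal{C} \}.$$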

Definition 2

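([40]). Let $f: \mathcal{H} \times \mathcal{H} \to \mathbb{R}$ be a bifunction and let $\gamma > 0$ be a constant. For all $x, y, z \in \mathcal{C}$, we say that $f$ is

- (i) Strongly monotone if

$$f(x, y) + f(y, x) \le -\gamma \|x - y\|^2;$$

- (ii) Monotone if

$$f(x, y) + f(y, x) \le 0;$$

- (iii) Strongly pseudomonotone if

$$f(x, y) \ge 0 \implies f(y, x) \le -\gamma \|x - y\|^2;$$

- (iv) Pseudomonotone if

$$f(x, y) \ge 0 \implies f(y, x) \le 0;$$

- (v) Lipschitz-type continuous if there exist constants $c_1, c_2 > 0$ such that

$$f(x, z) \le f(x, y) + f(y, z) + c_1 \|x - y\|^2 + c_2 \|y - z\|^2.$$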
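The relations among these monotonicity notions follow directly from the definitions. A short derivation is sketched below, written for a generic bifunction $f$ with modulus $\gamma > 0$ and points $x, y$ in the feasible set (these symbols are notation assumed here):

```latex
% (i) => (ii): strong monotonicity implies monotonicity
f(x,y) + f(y,x) \le -\gamma \|x - y\|^2 \le 0 .

% (ii) => (iv): if f is monotone and f(x,y) \ge 0, then
f(y,x) \le -f(x,y) \le 0 .

% (i) => (iii): if f is strongly monotone and f(x,y) \ge 0, then
f(y,x) \le -\gamma \|x - y\|^2 - f(x,y) \le -\gamma \|x - y\|^2 .

% (iii) => (iv): if f is strongly pseudomonotone and f(x,y) \ge 0, then
f(y,x) \le -\gamma \|x - y\|^2 \le 0 .
```

Pseudomonotonicity is therefore the weakest of the four conditions, which is why results stated for pseudomonotone bifunctions also cover the monotone and strongly monotone cases.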

Definition 3

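([41]). Let $T: \mathcal{C} \to \mathcal{H}$ with $\operatorname{Fix}(T) \neq \emptyset$. The mapping $T$ is classified as follows:

- (i) Nonexpansive if

$$\|Tx - Ty\| \le \|x - y\| \quad \text{for all } x, y \in \mathcal{C};$$

- (ii) Quasi-nonexpansive if

$$\|Tx - p\| \le \|x - p\| \quad \text{for all } x \in \mathcal{C},\ p \in \operatorname{Fix}(T);$$

- (iii) $\rho$-Demicontractive if, for some $\rho \in [0, 1)$,

$$\|Tx - p\|^2 \le \|x - p\|^2 + \rho \|(I - T)x\|^2 \quad \text{for all } x \in \mathcal{C},\ p \in \operatorname{Fix}(T);$$

- (iv) $k$-Demimetric if $\operatorname{Fix}(T) \neq \emptyset$ and, for some $k \in (-\infty, 1)$,

$$\langle x - p, x - Tx \rangle \ge \frac{1 - k}{2} \|x - Tx\|^2 \quad \text{for all } x \in \mathcal{C},\ p \in \operatorname{Fix}(T).$$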

Lemma 1

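([37]). Let $\varkappa: \mathcal{C} \to \mathbb{R}$ be a subdifferentiable, convex, and lower semi-continuous function on $\mathcal{C}$. An element $x \in \mathcal{C}$ is a minimizer of ϰ if and only if

$$0 \in \partial \varkappa(x) + N_{\mathcal{C}}(x),$$

where $\partial \varkappa(x)$ is the subdifferential of ϰ at $x$, and $N_{\mathcal{C}}(x)$ is the normal cone to $\mathcal{C}$ at $x$.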

Lemma 2

([29]). Let be a Hilbert space and a nonempty, closed, and convex subset. Let , and define the mapping satisfying the k-demimetric condition, ensuring that is non-empty. Let , and define . Then,

- (i)

- ;

- (ii)

- is closed and convex;

- (iii)

- is quasi-nonexpansive from to .

Lemma 3

([42]). Let be a Hilbert space and a nonempty closed convex subset of . Consider a sequence of mappings , where each is a -demimetric mapping with and . Let be a positive sequence with . Then, the mapping

is a σ-demimetric mapping with , and

Lemma 4

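([43]). Let $T: \mathcal{C} \to \mathcal{C}$ be a nonexpansive mapping. Then, $I - T$ is demiclosed on $\mathcal{C}$; that is, for any sequence $\{x_k\} \subset \mathcal{C}$ weakly converging to $x \in \mathcal{C}$, with $\{(I - T)x_k\}$ strongly converging to 0, we have $x \in \operatorname{Fix}(T)$.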

Lemma 5

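([44]). Let $\{a_k\}$ be a sequence of non-negative real numbers, and $\{b_k\}$ a sequence in $(0, 1)$ with $\sum_{k=1}^{\infty} b_k = \infty$. Suppose $\{c_k\}$ is a sequence such that for all $k \ge 1$,

$$a_{k+1} \le (1 - b_k) a_k + b_k c_k.$$

If $\limsup_{j \to \infty} c_{k_j} \le 0$ for any subsequence $\{a_{k_j}\}$ of $\{a_k\}$ satisfying

$$\liminf_{j \to \infty} \left( a_{k_j + 1} - a_{k_j} \right) \ge 0,$$

then $\lim_{k \to \infty} a_k = 0$.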

3. Main Results

This section presents a novel algorithm for solving the problem in (3). The proposed method combines a monotonic adaptive step-size rule with an inertial subgradient extragradient framework. By incorporating inertial techniques into a hybrid approach, the algorithm effectively addresses both EPs and FPPs, which play a fundamental role in optimization, game theory, and variational analysis. The algorithm updates the solution iteratively through a sequence of projection and minimization steps, guaranteeing convergence.

The algorithm begins with initial points and generates a sequence as follows. Step 1 dynamically determines the inertial term to balance speed and stability. Step 2 computes an intermediate point and minimizes a function, adjusted by regularization, to obtain . In Step 3, a minimization over the constrained set is performed, followed by a weighted update for . Step 4 adapts the step size based on progress, enhancing efficiency and ensuring convergence.

This method integrates inertial terms with self-adaptive stepsizes, improving convergence rates for complex fixed-point and equilibrium problems. The inclusion of regularization and adaptive parameters ensures robustness and flexibility, making it suitable for diverse optimization applications. To carry out the convergence analysis, we assume that the following conditions hold.

Assumption 1.

Let be a real Hilbert space, and a non-empty, closed, convex set. The following conditions hold:

- (c1)

- The bifunction is pseudomonotone and Lipschitz continuous on .

- (c2)

- is an infinite family of -demimetric mappings, each demiclosed at zero, with . Let . Define , where , and conclude from Lemma 3 that Ψ is a σ-demimetric mapping. Define with .

- (c3)

- The sequence is positive and satisfies , with . Additionally, there exists such that , where , , and .

- (c4)

- The set is non-empty, i.e., .

The following lemma establishes that the sequence defined in (6), which represents the stepsize in the algorithm, is well-defined and bounded. This property is essential for the performance of the algorithm, as the boundedness of ensures the stability of the iterative process and is critical for demonstrating the convergence of the method.

Lemma 6.

The sequence generated by the update rule in (6) from Algorithm 1 is non-increasing and satisfies the following inequality:

Proof.

From (6), it is evident that the sequence is non-increasing. Additionally, by applying the Lipschitz condition to the function , we obtain the following inequality:

Thus, the sequence is non-increasing and bounded below. Furthermore, we have . □
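The behaviour described in Lemma 6 can be illustrated numerically. The update rule (6) is not reproduced in this text, so the sketch below assumes a form that is common in the self-adaptive extragradient literature, $\lambda_{k+1} = \min\{\lambda_k,\ \mu(\|w - y\|^2 + \|z - y\|^2) / (2[f(w, z) - f(w, y) - f(y, z)])\}$ whenever the bracket is positive and $\lambda_{k+1} = \lambda_k$ otherwise. The bifunction, the parameter `mu`, and the random test points are hypothetical.

```python
import math
import random

def F(x):
    # Hypothetical Lipschitz operator with constant L = sqrt(5).
    return [2.0 * x[0] + x[1] - 1.0, -x[0] + 2.0 * x[1] - 1.0]

def f(x, y):
    # Bifunction f(x, y) = <F(x), y - x>; Lipschitz-type with c1 = c2 = L / 2.
    return sum(g * (yi - xi) for g, xi, yi in zip(F(x), x, y))

def sq_dist(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))

def next_stepsize(lam, mu, w, y, z):
    # Assumed rule: shrink the stepsize only when the denominator is positive.
    denom = f(w, z) - f(w, y) - f(y, z)
    if denom > 0.0:
        return min(lam, mu * (sq_dist(w, y) + sq_dist(z, y)) / (2.0 * denom))
    return lam

def stepsize_history(lam0=1.0, mu=0.5, steps=50, seed=1):
    # Feed arbitrary triples (w, y, z) to the rule and record the stepsizes.
    rng = random.Random(seed)
    lam, history = lam0, [lam0]
    for _ in range(steps):
        w = [rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)]
        y = [rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)]
        z = [rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)]
        lam = next_stepsize(lam, mu, w, y, z)
        history.append(lam)
    return history

history = stepsize_history()
lower_bound = min(1.0, 0.5 / math.sqrt(5.0))  # min{lam0, mu / (2 max{c1, c2})}
```

Under the Lipschitz-type condition the denominator is at most $\max\{c_1, c_2\}(\|w - y\|^2 + \|z - y\|^2)$, so every candidate value is at least $\mu / (2\max\{c_1, c_2\})$. This is why the recorded stepsizes never fall below `lower_bound`, regardless of the points supplied.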

Algorithm 1 Inertial Hybrid Algorithm for Fixed-Point and Equilibrium Problems

Remark 1.

From condition (c3) in Assumption 1, we have that , which implies that . Furthermore, from Equation (4), it follows that for all , provided that . Consequently, we obtain

The following lemma is crucial for establishing the boundedness of the iterative sequence, which is essential for confirming the strong convergence of the proposed sequence to a common solution.

Lemma 7.

Consider the sequences , , and generated by Algorithm 1. For every , it holds that

Proof.

Using Lemma 1, we establish that

Consequently, there exist elements and such that

This leads us to the following expression:

Since , we conclude that for all . This yields

Given that , we obtain

Substituting into (10), we obtain

Since , we know that . Given the pseudomonotonicity of the bifunction , it follows that . Therefore, based on (11), we can infer

From the definition of the half-space , we obtain

Since , it follows that

Substituting into this inequality, we obtain

Using the definition of , we derive the following inequality:

which can also be expressed as

Additionally, we have the following:

and

□

The following theorem constitutes the main result of our study. It establishes that the sequence generated by the proposed method is bounded and converges strongly to an element of the solution set of problem (3), which is a common solution of the equilibrium and fixed-point problems.

Theorem 1.

Let be the sequence generated by Algorithm 1 under the conditions outlined in Assumption 1. Then, the sequence converges strongly to an element .

Proof.

Claim 1: The sequence is bounded.

Based on Lemma 7, for and , we can derive the following inequality:

By combining (22) with Lemma 7, for every , we obtain

Considering the definition of the sequence , we find

Given that the sequence converges to zero, as stated in Remark 1, there exists a positive constant such that

for all . Consequently, we have

From the definition of the sequence and utilizing (23), we derive the following inequalities:

Given that satisfies the condition of being a -demimetric as per Assumption 1(c2), and applying Lemma 2, we assert that is a quasinonexpansive mapping. Therefore, from the previous inequality and the definition of , we have

Thus, this sequence of inequalities demonstrates that is bounded.

Claim 2.

Claim 3.

By employing the definition of and applying Lemma 7, we derive

Thus, by using (24), we establish Claim 3.

Claim 4: The sequence generated by Algorithm 1 converges strongly to an element in Γ.

Let be a subsequence of such that

Thus, we obtain

We now define the sequence as follows:

By employing Claim 3, we obtain

Consequently, we obtain

which leads to the following result:

Using the definition of the sequence and (7), we obtain

Moreover, by the definition of and Assumption 1 (c3), we obtain

Additionally, we find

Furthermore, from Assumption 1 condition and (26), we conclude

Given that is bounded, there exists a subsequence of such that weakly converges to as . From expression (30), it follows that also weakly converges to . Moreover, by Assumption 1 condition , the demimetric and demiclosed properties of at zero, and expression (31), we conclude that . Furthermore, applying Lemma 3 yields . Additionally, we establish that . From expressions (11) and (16), we obtain

Since and we have

Thus, , and hence . We now show that the sequence converges strongly to . Next, we have

Since the sequence converges weakly to u as , we have . This implies that

Application to Solve Variational Inequalities

In this section, we apply our findings to address variational inequality problems that involve a pseudomonotone and Lipschitz continuous operator, as well as an infinite family of demimetric mappings, within the context of real Hilbert spaces. The primary objective is to establish a theorem that identifies a common solution for both the variational inequalities and the infinite collection of demimetric mappings. Let $G: \mathcal{H} \to \mathcal{H}$ be an operator. We begin by defining the classical variational inequality problem as follows: find $u^* \in \mathcal{C}$ such that

$$\langle G(u^*), y - u^* \rangle \ge 0 \quad \text{for all } y \in \mathcal{C}.$$

Additionally, consider the bifunction $f$ defined by

$$f(x, y) = \langle G(x), y - x \rangle \quad \text{for all } x, y \in \mathcal{C}.$$

With this choice, the equilibrium problem reduces to the variational inequality, and the Lipschitz constant $L$ of the mapping $G$ is related to the Lipschitz-type constants of $f$ by $L = 2c_1 = 2c_2$. The subsequent corollary is derived from Algorithm 1, whose minimization subproblems reduce to projections onto convex sets for this bifunction. This result facilitates the identification of a common solution for both the variational inequality and the fixed-point problem.
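The relation between the Lipschitz constant of the operator and the Lipschitz-type constants of the bifunction can be checked directly. Assuming the standard form $f(x, y) = \langle G(x), y - x \rangle$ with $G$ being $L$-Lipschitz (the symbols $G$, $L$, $c_1$, and $c_2$ are notation assumed here), a short derivation gives $c_1 = c_2 = L/2$:

```latex
\begin{aligned}
f(x,y) + f(y,z) - f(x,z)
  &= \langle G(x), y - x \rangle + \langle G(y), z - y \rangle
     - \langle G(x), z - x \rangle \\
  &= \langle G(y) - G(x), z - y \rangle \\
  &\ge -\|G(x) - G(y)\| \, \|y - z\| \\
  &\ge -L \, \|x - y\| \, \|y - z\| \\
  &\ge -\tfrac{L}{2} \|x - y\|^2 - \tfrac{L}{2} \|y - z\|^2 .
\end{aligned}
```

The last step uses the elementary inequality $2ab \le a^2 + b^2$.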

Corollary 1.

Let be a pseudomonotone mapping satisfying the L-Lipschitz continuity condition for some . Assume that the intersection is non-empty. Let , , and . Define , where is given by

Additionally, assume there exists a positive sequence such that . Compute the following:

where . Next, compute

where the stepsize is updated as

The sequence converges strongly to an element of .
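The update formulas of Corollary 1 are not reproduced in this text, so the following is only a sketch of the general pattern it describes: an inertial extrapolation, a projection onto the feasible set, a projection onto a half-space, and a non-increasing stepsize. It is not the authors' algorithm. The relaxation with the demimetric mappings is omitted, and the operator `G`, the box constraint, the inertial bound `theta_max`, the sequence $\epsilon_k = 1/(k+1)^2$, and the stepsize rule are all assumptions made for illustration.

```python
import math

def G(x):
    # Hypothetical strongly monotone, Lipschitz operator (L = sqrt(5)).
    return [2.0 * x[0] + x[1] - 1.0, -x[0] + 2.0 * x[1] - 1.0]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def sub(u, v):
    return [a - b for a, b in zip(u, v)]

def project_box(x, lo=0.0, hi=1.0):
    return [min(max(v, lo), hi) for v in x]

def project_halfspace(p, a, y):
    # Projection of p onto {v : <a, v - y> <= 0}; identity if a = 0.
    aa, s = dot(a, a), dot(a, sub(p, y))
    if aa == 0.0 or s <= 0.0:
        return list(p)
    return [pi - (s / aa) * ai for pi, ai in zip(p, a)]

def inertial_subgradient_extragradient(x0, lam=0.5, mu=0.5, theta_max=0.3,
                                       tol=1e-10, max_iter=500):
    x_prev, x = list(x0), list(x0)
    for k in range(1, max_iter + 1):
        # Inertial step; theta is capped so that theta * ||x - x_prev|| <= eps_k.
        d = sub(x, x_prev)
        nd = math.sqrt(dot(d, d))
        eps = 1.0 / (k + 1) ** 2
        theta = theta_max if nd == 0.0 else min(theta_max, eps / nd)
        w = [xi + theta * di for xi, di in zip(x, d)]
        gw = G(w)
        t = [wi - lam * gi for wi, gi in zip(w, gw)]
        y = project_box(t)                      # projection onto C
        if math.sqrt(dot(sub(w, y), sub(w, y))) <= tol:
            return y, k
        gy = G(y)
        # Second step uses a half-space containing C instead of C itself.
        z = project_halfspace([wi - lam * gi for wi, gi in zip(w, gy)],
                              sub(t, y), y)
        # Assumed self-adaptive rule: the stepsize can only decrease.
        denom = dot(sub(gw, gy), sub(z, y))
        if denom > 0.0:
            num = mu * (dot(sub(w, y), sub(w, y)) + dot(sub(z, y), sub(z, y)))
            lam = min(lam, num / (2.0 * denom))
        x_prev, x = x, z
    return x, max_iter

solution, iterations = inertial_subgradient_extragradient([0.5, 0.5])
```

The half-space projection has a closed form, which is the practical advantage of the subgradient extragradient idea over solving a second problem on the feasible set. For this test operator the iterates approach $(0.2, 0.6)$, the point where `G` vanishes inside the box.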

4. Numerical Experiments

This section demonstrates the effectiveness of the newly proposed low-cost inertial extragradient method through a series of carefully selected numerical examples. These examples underscore the method’s efficient convergence and its capability to address computational challenges in problems related to equilibrium and fixed points under convex constraints. The numerical results affirm that our method represents not only a theoretical advancement but also a practical and adaptable tool, providing valuable insights for researchers in this domain.

All computations were conducted using MATLAB R2018b on a standard HP laptop equipped with an Intel Core i5-6200 processor and 8 GB of RAM, a configuration representative of typical research computing environments.

Example 1.

Consider the HpHard problem, which has been thoroughly investigated in numerical experiments by Harker and Pang [45]. Define the operator as where , and

In our numerical experiments, we use a random matrix , a skew-symmetric matrix defined as , where , and a diagonal matrix . Define the bifunction as

The feasible set is given by It is evident that is both monotone and Lipschitz continuous, with constants . Additionally, consider the mapping defined by . In our experiments, we initialize with .
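The structure of the test matrix can be illustrated with a small construction. The sketch below assumes the usual HpHard form $M = NN^{\top} + B + D$, with $N$ random, $B$ skew-symmetric, and $D$ diagonal with positive entries; the sampling ranges, the dimension, and the helper names are hypothetical, since the exact values are not reproduced in this text.

```python
import random

def build_hphard_matrix(n, seed=0):
    rng = random.Random(seed)
    # Random matrix N (range assumed for illustration).
    N = [[rng.uniform(-2.0, 2.0) for _ in range(n)] for _ in range(n)]
    # Skew-symmetric B = U - U^T built from a strictly upper-triangular U.
    U = [[rng.uniform(-2.0, 2.0) if j > i else 0.0 for j in range(n)]
         for i in range(n)]
    B = [[U[i][j] - U[j][i] for j in range(n)] for i in range(n)]
    # Positive diagonal entries of D (range assumed for illustration).
    d = [rng.uniform(0.1, 1.0) for _ in range(n)]
    # M = N N^T + B + D
    M = [[sum(N[i][k] * N[j][k] for k in range(n)) + B[i][j]
          + (d[i] if i == j else 0.0) for j in range(n)] for i in range(n)]
    return M, B

def quad_form(M, x):
    # Computes <M x, x>; nonnegative values indicate monotonicity of x -> M x + q.
    n = len(x)
    return sum(x[i] * M[i][j] * x[j] for i in range(n) for j in range(n))

def frobenius_norm(M):
    # Upper bound on the spectral norm, hence on the Lipschitz constant.
    return sum(v * v for row in M for v in row) ** 0.5

M, B = build_hphard_matrix(5)
lipschitz_bound = frobenius_norm(M)
```

Because $\langle Bx, x \rangle = 0$ for any skew-symmetric $B$, the quadratic form equals $\|N^{\top}x\|^2 + \langle Dx, x \rangle \ge 0$, which is the reason the resulting affine operator is monotone.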

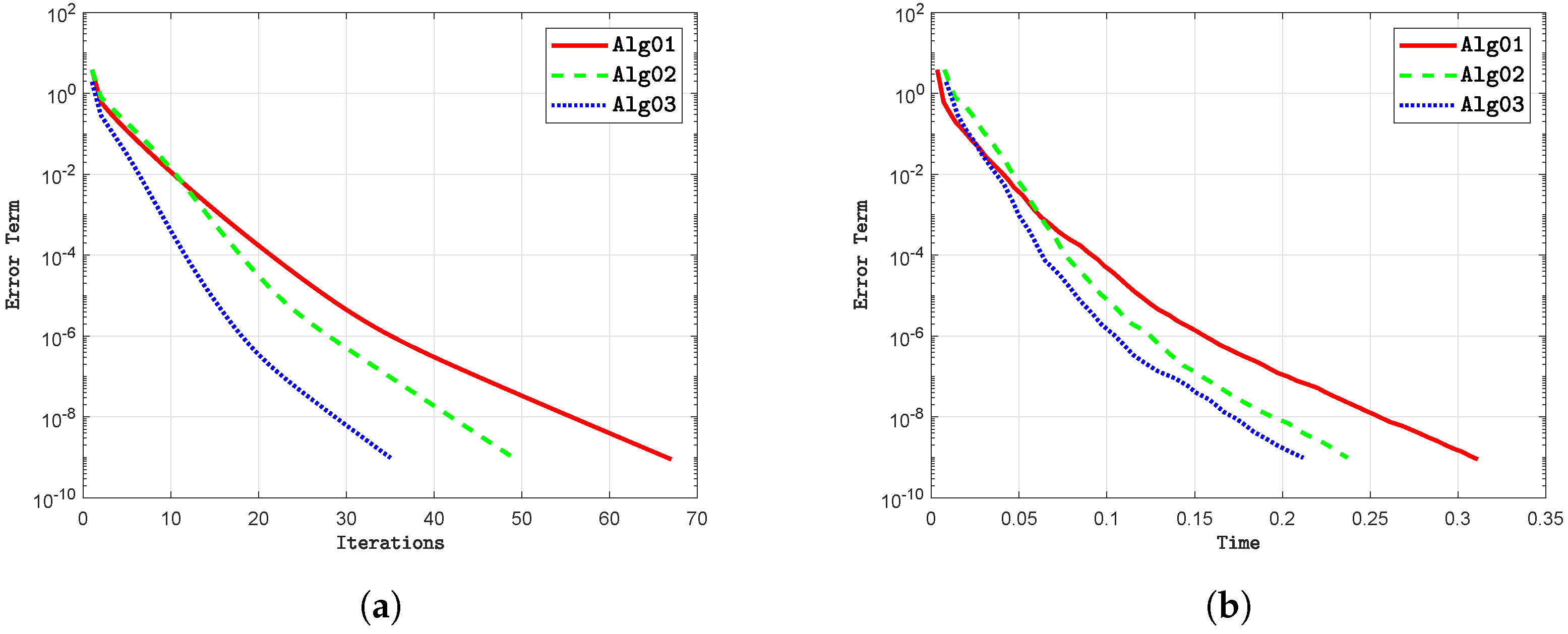

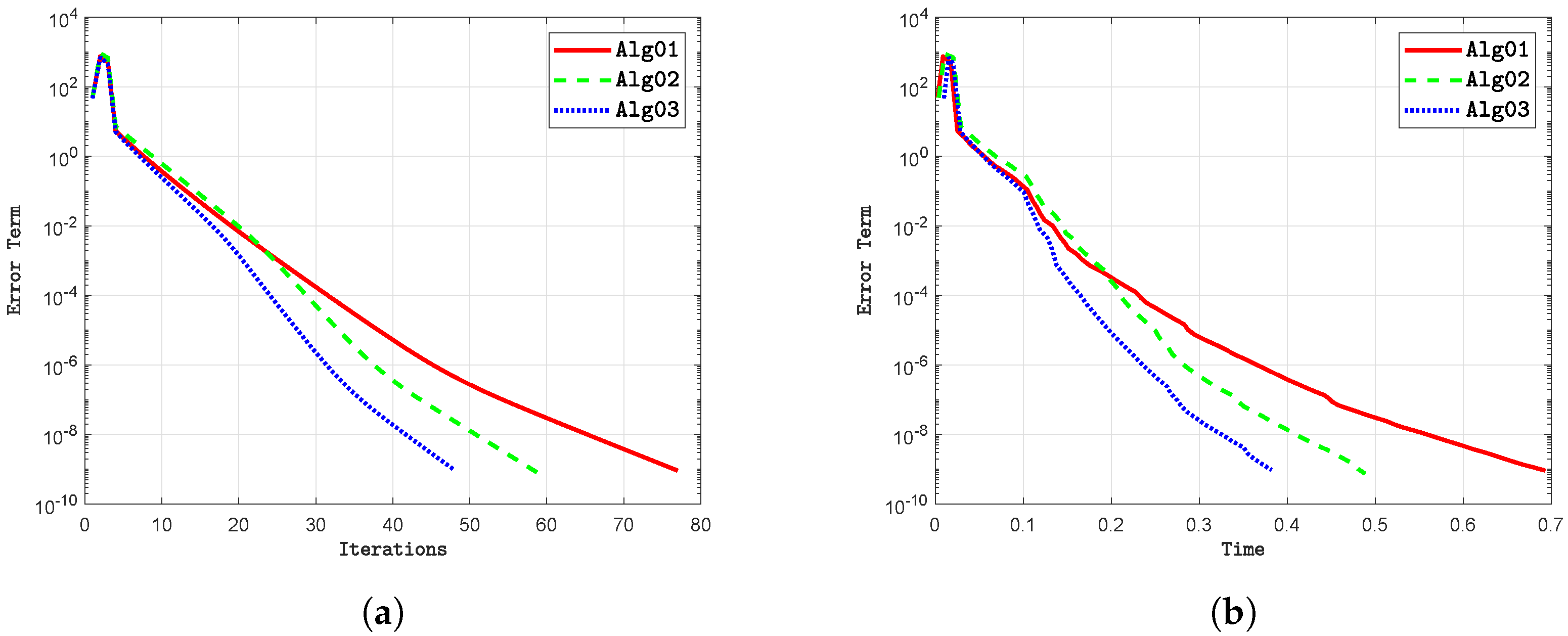

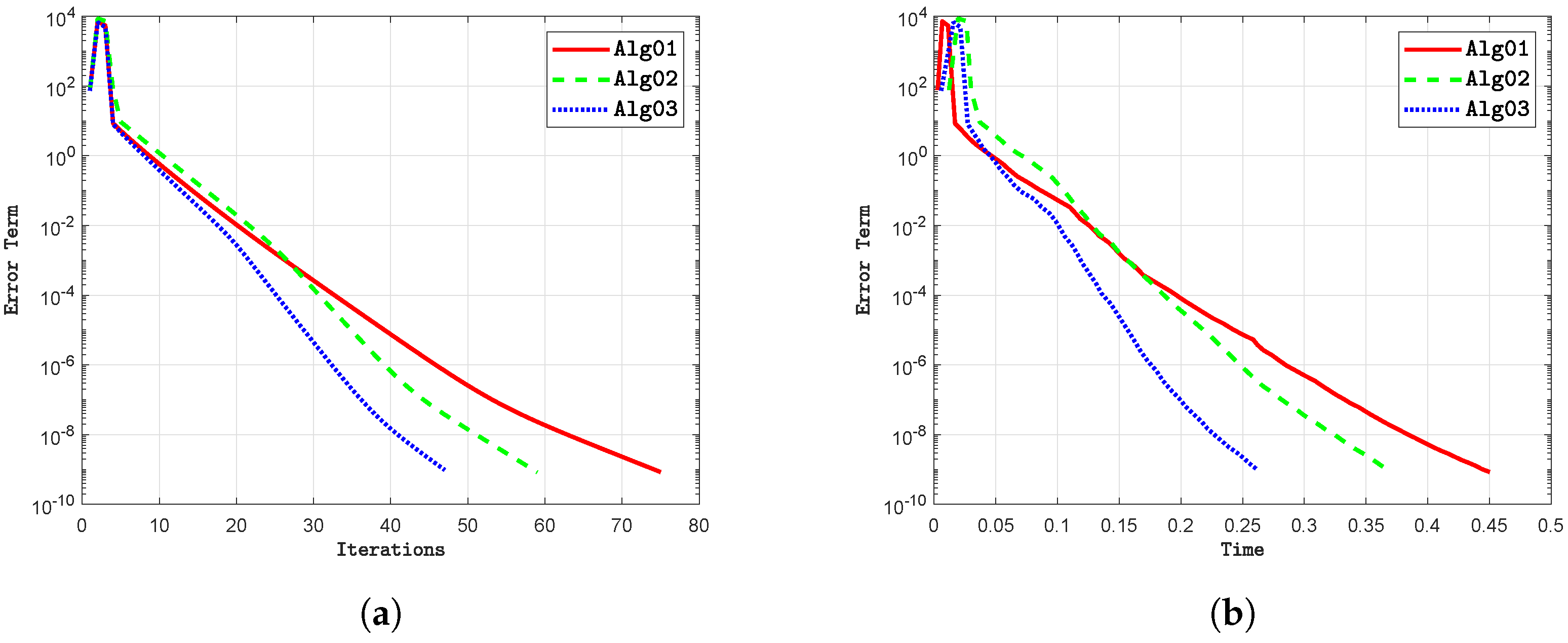

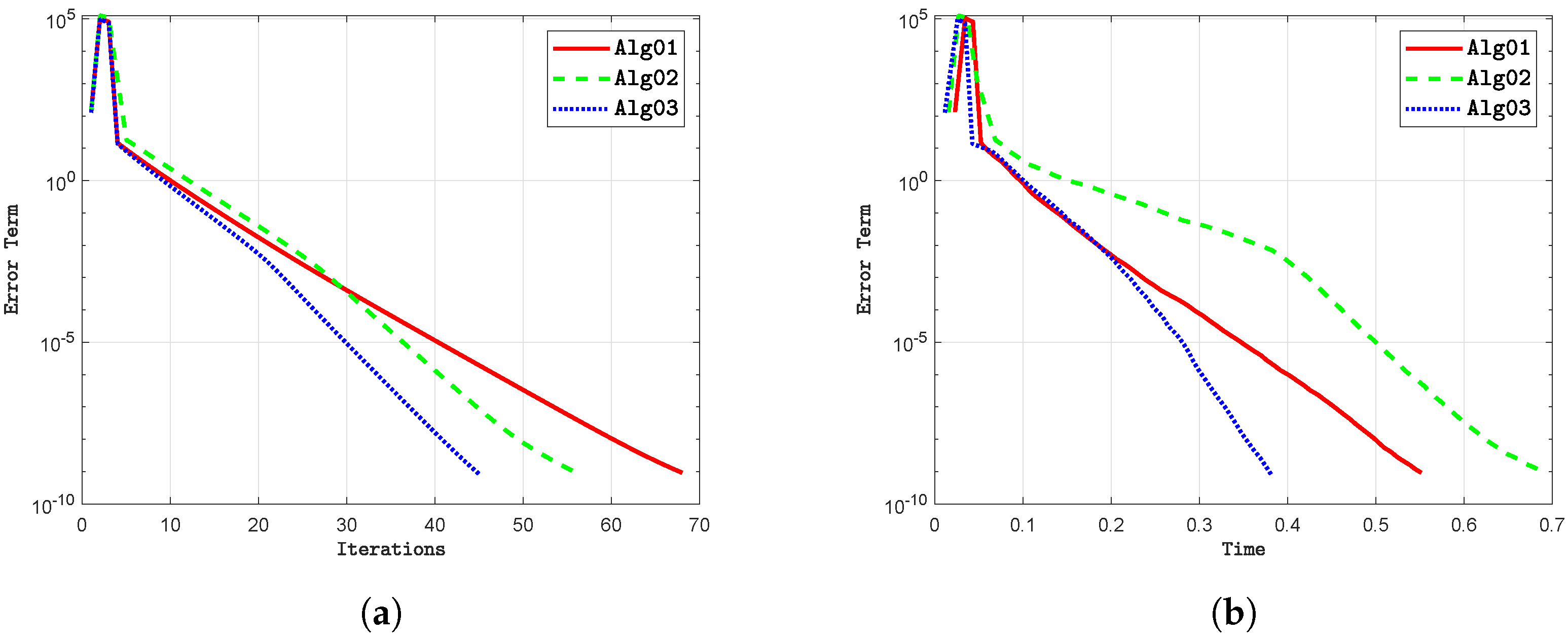

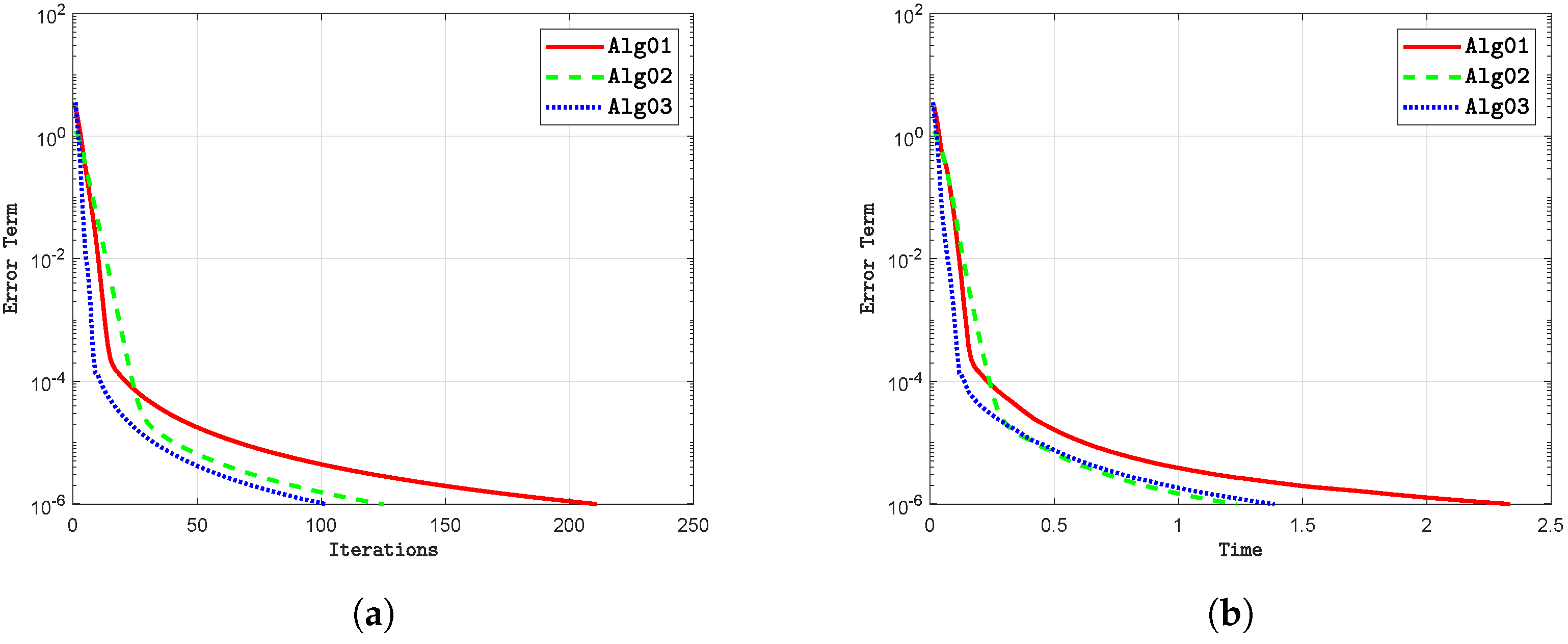

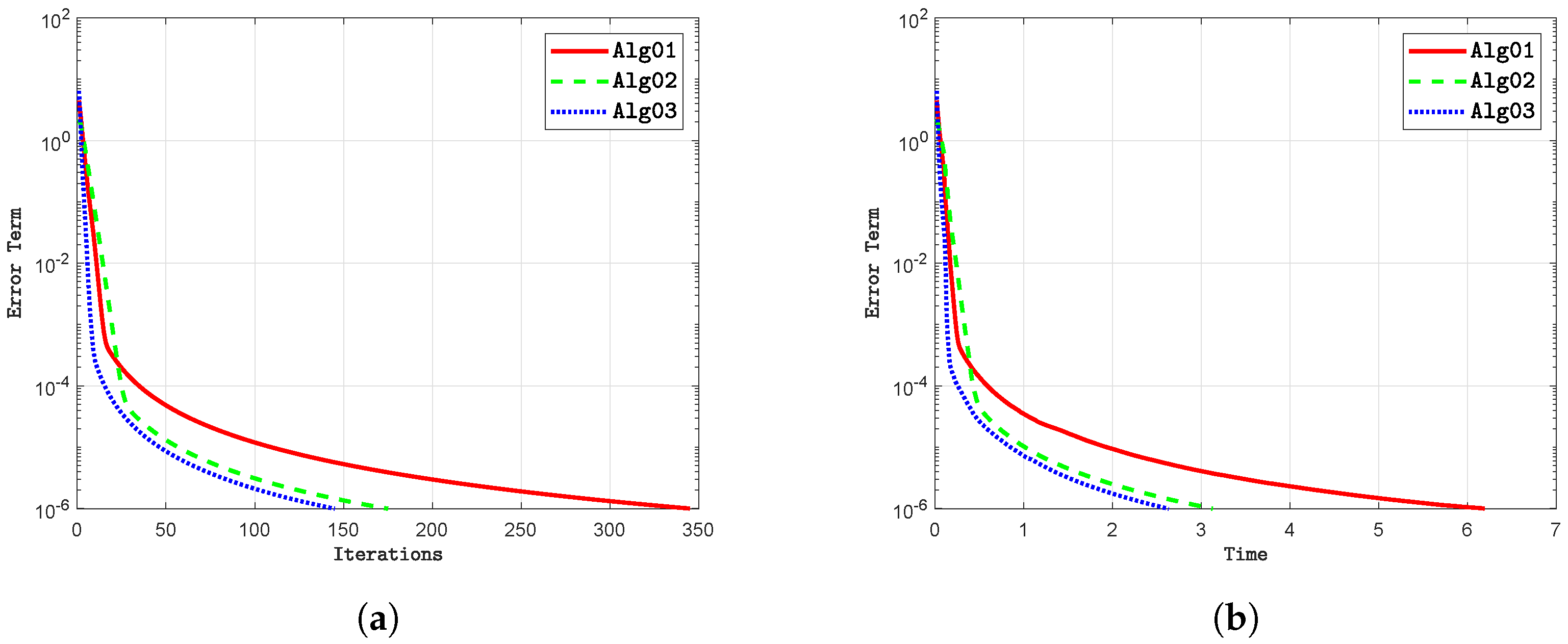

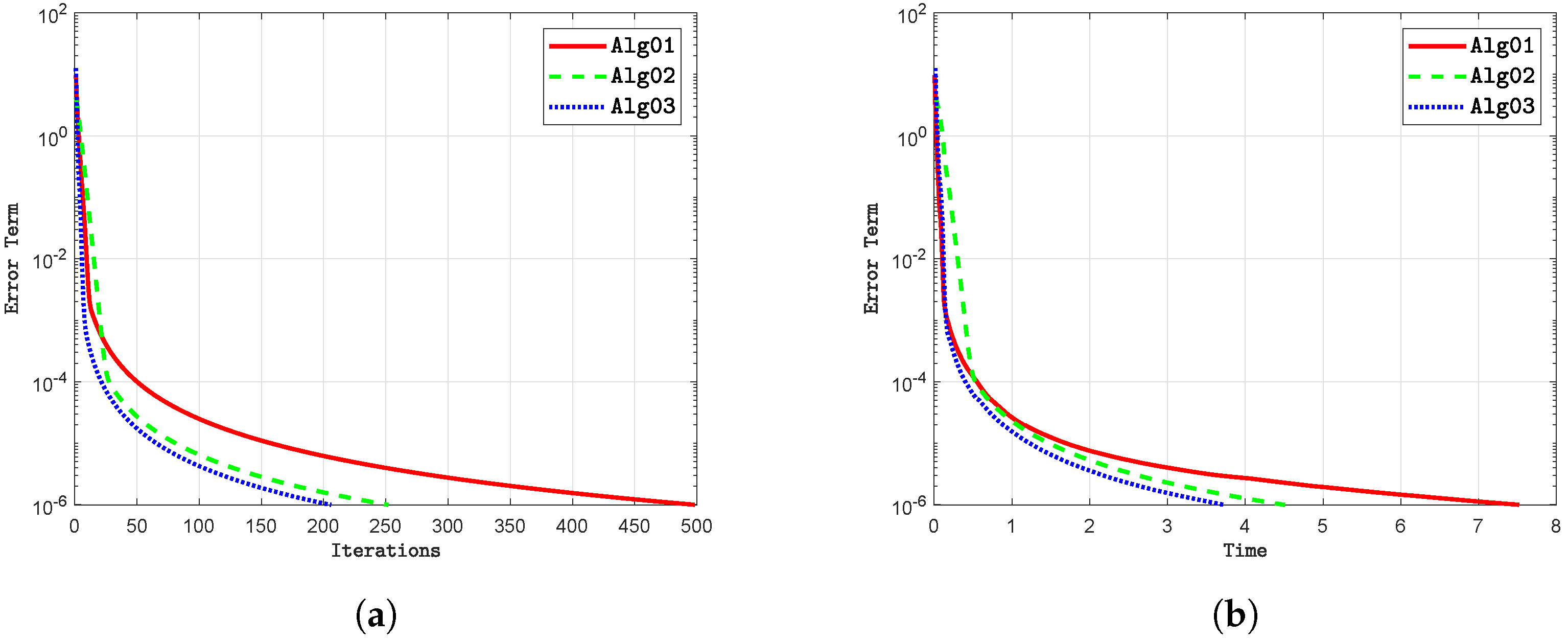

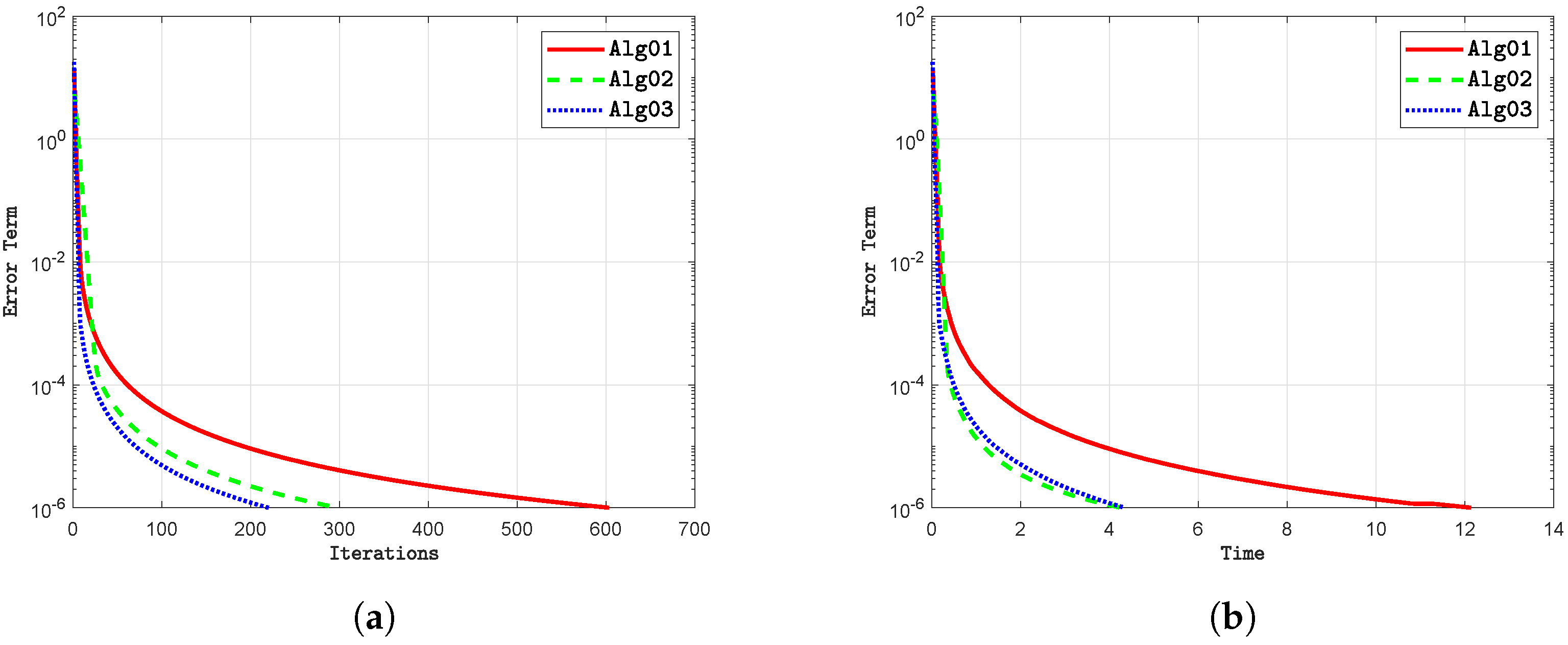

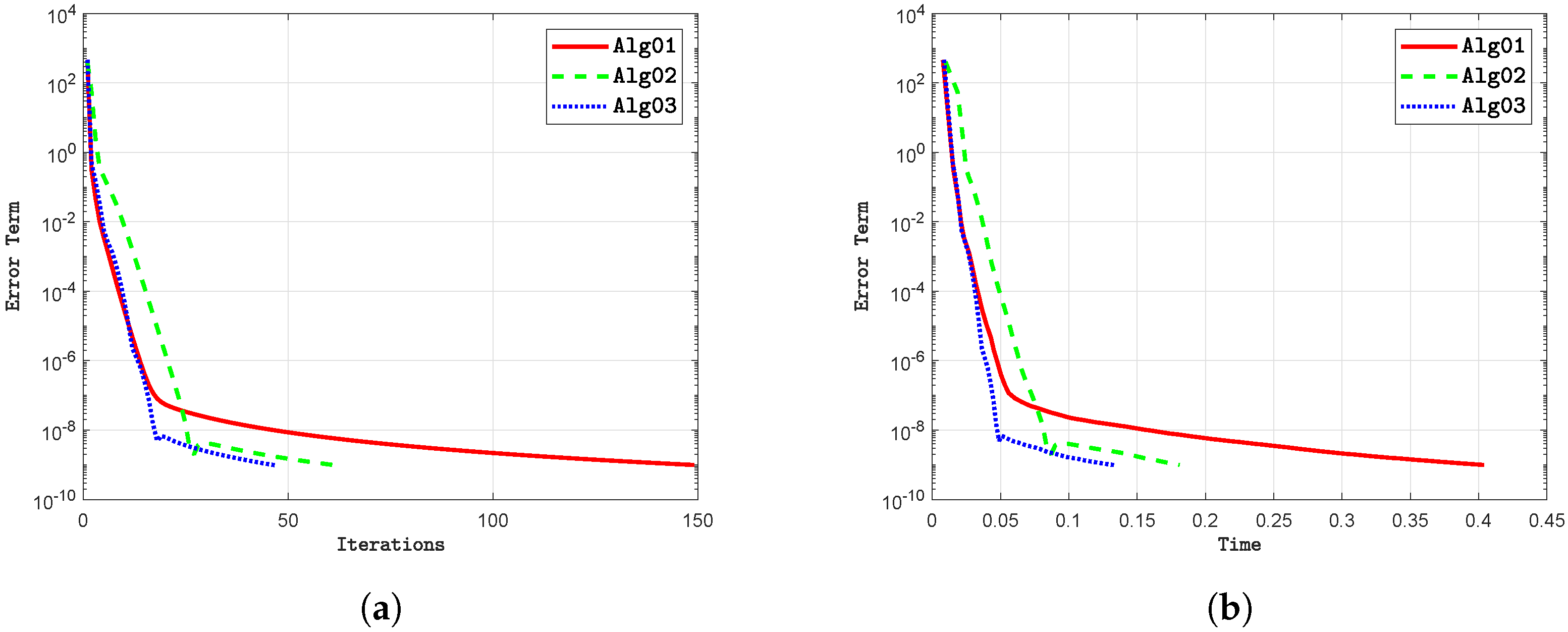

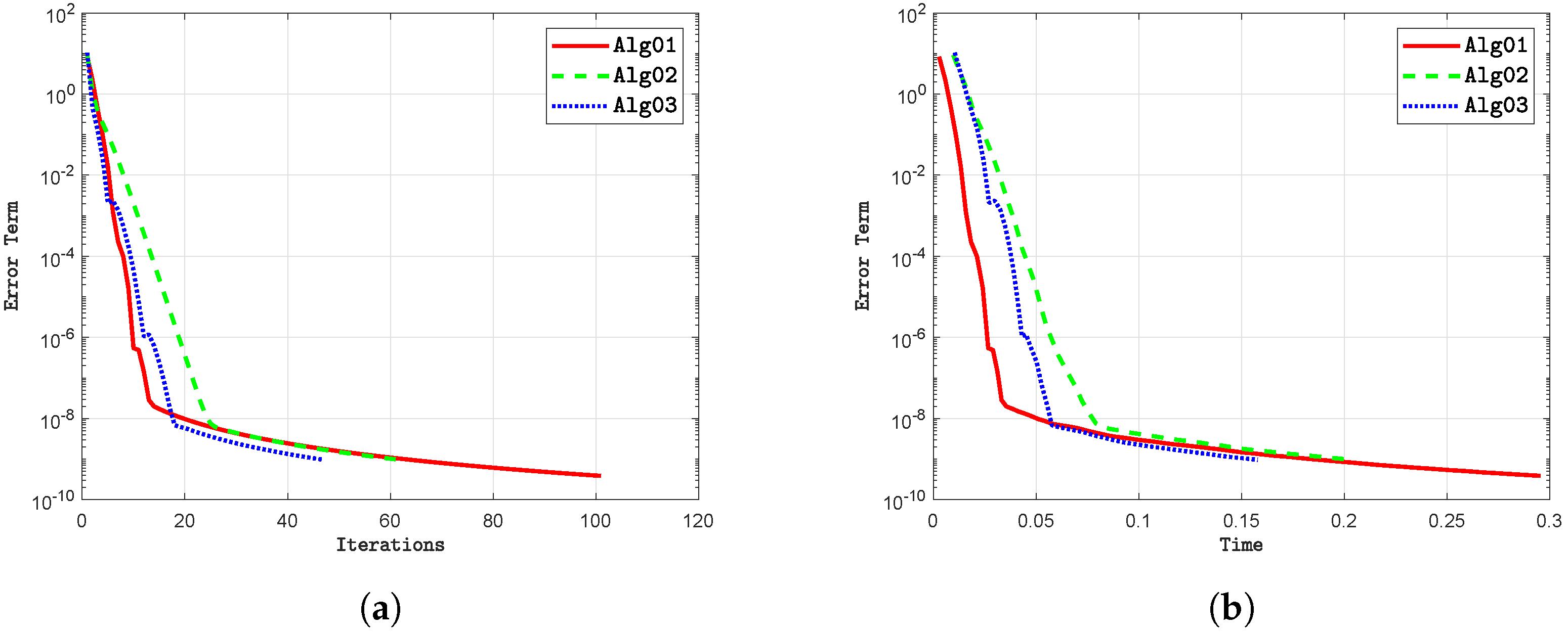

The numerical results for Example 1 are shown in Figure 1, Figure 2, Figure 3 and Figure 4, as well as in Table 1. These results were obtained by varying the dimensions within the Hilbert space . Upon analyzing the figures and the table, the following observations can be made:

Figure 1.

Comparison of algorithms using Example 1 with . (a) Total number of iterations executed with . (b) Execution time in seconds with .

Figure 2.

Comparison of algorithms using Example 1 with . (a) Total number of iterations executed with (b) Execution time in seconds with

Figure 3.

Comparison of algorithms using Example 1 with . (a) Total number of iterations executed with (b) Execution time in seconds with

Figure 4.

Comparison of algorithms using Example 1 with . (a) Total number of iterations executed with (b) Execution time in seconds with

- (i) Varying the dimension of the Hilbert space has minimal impact on the iteration count for all algorithms, and the proposed algorithm requires fewer iterations than the alternative methods in all scenarios.

- (ii) The execution time varies slightly with the dimension, even when the number of iterations remains constant. The proposed algorithm generally finishes in less time (measured in seconds) than the other methods, but no clear trend in execution time with respect to the dimension emerges from these results.

The execution of the algorithms follows specific configurations of control parameters. First, we apply Algorithm 1 from [37], denoted as (Alg01), with the following parameter settings Second, we utilize Algorithm 3.1 from [38], referred to as (Alg02), with the following parameter specifications: Lastly, we implement Algorithm 1, denoted as (Alg03), with the following parameter values

Example 2.

The second problem under consideration arises from the Nash–Cournot Oligopolistic Equilibrium model, as discussed in [17]. Let us consider a function defined as

The subgradient projection is defined by

In this context, let . As a result, is quasi-nonexpansive, demiclosed at zero, and its fixed point set is given by . Additionally, let us define the bifunction as

where and are matrices. The matrix is symmetric and negative semidefinite, whereas P is symmetric and positive semidefinite, with Lipschitz constants (for further details, see [17]).
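A small numerical check can make the monotonicity of this model concrete. The sketch assumes the form commonly used for the Nash-Cournot bifunction in [17], $f(x, y) = \langle Px + Qy + q, y - x \rangle$, with $P$ symmetric positive semidefinite and $Q - P$ symmetric negative semidefinite; the specific $2 \times 2$ matrices and the vector `q` below are hypothetical.

```python
def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

# Hypothetical data: P has eigenvalues 2 and 4, Q - P has eigenvalues -1 and -3.
P = [[3.0, 1.0], [1.0, 3.0]]
Q = [[1.0, 0.0], [0.0, 1.0]]
q = [-1.0, 2.0]

def f(x, y):
    # Assumed Nash-Cournot bifunction f(x, y) = <P x + Q y + q, y - x>.
    g = [a + b + c for a, b, c in zip(matvec(P, x), matvec(Q, y), q)]
    return dot(g, [yi - xi for xi, yi in zip(x, y)])

def monotonicity_gap(x, y):
    # Equals (x - y)^T (Q - P) (x - y), which is <= 0 when Q - P is
    # negative semidefinite.
    return f(x, y) + f(y, x)

gap = monotonicity_gap([1.0, 0.0], [0.0, 1.0])
```

Expanding the definition gives $f(x, y) + f(y, x) = (x - y)^{\top}(Q - P)(x - y)$, so the sign condition on $Q - P$ is exactly what makes the bifunction monotone.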

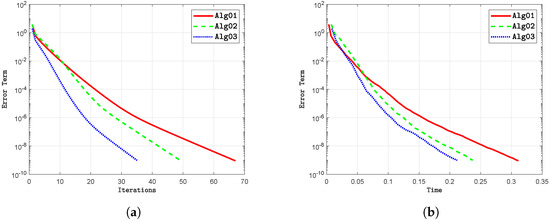

In the numerical study, the initial points are defined as . The dimensionality of the space varies, and the stopping condition is established by the error term . Figure 5, Figure 6, Figure 7 and Figure 8, along with Table 2, show the numerical results from analyzing different dimensions in the Hilbert space . Upon reviewing these visual representations and the data presented in the table, we can draw the following conclusions:

Figure 5.

Comparison of algorithms using Example 2 with . (a) Total number of iterations with (b) Execution time in seconds with

Figure 6.

Comparison of algorithms using Example 2 with . (a) Total number of iterations with (b) Execution time in seconds with

Figure 7.

Comparison of algorithms using Example 2 with . (a) Total number of iterations with (b) Execution time in seconds with

Figure 8.

Comparison of algorithms using Example 2 with . (a) Total number of iterations with (b) Execution time in seconds with

- (i)

- The proposed algorithm consistently demonstrates a lower number of iterations compared to alternative methods across different scenarios. It is evident that, regardless of the algorithm employed, the dimensionality of the Hilbert space significantly influences the iteration count. Notably, as the dimensionality increases, the iteration count also increases for all algorithms.

- (ii)

- The proposed algorithm consistently exhibits shorter execution times, measured in seconds, compared to alternative methods across various scenarios. It is apparent that, irrespective of the algorithm utilized, the dimensionality of the Hilbert space has a significant impact on execution time. Importantly, as the dimensionality increases, execution time also tends to increase for all algorithms.

The code is executed using the following control criterion settings. First, we apply Algorithm 1 from [37], denoted as (Alg01), with the parameter values Next, we use Algorithm 3.1 from [38], referred to as (Alg02), with the following parameter settings Finally, we implement Algorithm 1, denoted as (Alg03), with the following parameter values

Example 3.

Let denote the real Hilbert space , with an inner product given by

The corresponding norm is

Let the operator be defined by

where represents the unit ball in , and

Let us define the bifunction as

The bifunction is Lipschitz continuous with constants and satisfies the monotonicity property as described in [46]. The metric projection onto is defined as

Now, consider the mapping defined by

A straightforward calculation shows that is 0-demicontractive, with the solution given by .
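The projection onto a unit ball has a simple closed form, $P_{\mathcal{C}}(x) = x / \|x\|$ if $\|x\| > 1$ and $P_{\mathcal{C}}(x) = x$ otherwise. The sketch below assumes that the space is $L^2([0, 1])$ and discretizes functions on a uniform midpoint grid; the grid size and the test function are hypothetical choices.

```python
import math

def l2_norm(values, h):
    # Midpoint-rule approximation of the L^2([0, 1]) norm.
    return math.sqrt(h * sum(v * v for v in values))

def project_unit_ball(values, h):
    # Rescale only when the function lies outside the unit ball.
    nrm = l2_norm(values, h)
    if nrm <= 1.0:
        return list(values)
    return [v / nrm for v in values]

n = 1000
h = 1.0 / n
grid = [(i + 0.5) * h for i in range(n)]

outside = [3.0 * t for t in grid]   # x(t) = 3t has norm sqrt(3) > 1
inside = [0.5 * t for t in grid]    # x(t) = t/2 has norm < 1

projected = project_unit_ball(outside, h)
unchanged = project_unit_ball(inside, h)
```

Points already in the ball are returned unchanged, while points outside are scaled back to the boundary, so the projected function has discrete norm equal to one.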

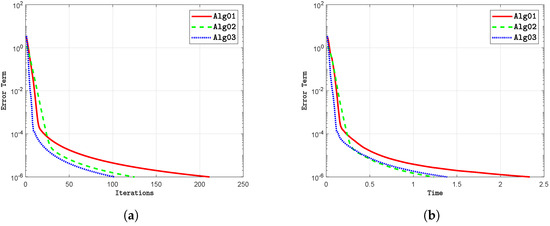

Figure 9 and Figure 10 present the numerical results obtained from analyzing two sets of initial points, and , selected from the set . The following observations can be made:

Figure 9.

Comparison of algorithms using Example 3: performance evaluation with (a) Total number of iterations executed with (b) Execution time in seconds with

Figure 10.

Comparison of algorithms using Example 3: performance evaluation with (a) Total number of iterations executed with (b) Execution time in seconds with

- (i) Changing the initial points influences the number of iterations required by the algorithms. Our proposed method generally requires fewer iterations than the existing approaches.

- (ii) The execution time for each algorithm varies with the selected initial points; however, our method typically completes in less time than the alternatives.

The algorithms are executed with the specified control criterion settings. Firstly, we implement Algorithm 1 from [37], referred to as (Alg01), with the following parameter settings Secondly, we utilize Algorithm 3.1 from [38], denoted as (Alg02), with the following parameter specifications: Finally, we implement Algorithm 1, referred to as (Alg03), with the following parameter values:

5. Conclusions

This paper introduces a new inertial hybrid algorithm designed to address equilibrium and fixed-point problems within real Hilbert spaces. The proposed algorithm improves upon the classical extragradient method by incorporating inertial terms and implementing a self-adaptive stepsize, leading to enhanced convergence speed and computational efficiency. Under appropriate conditions, the algorithm exhibits strong convergence, thereby providing a solid theoretical foundation. The main contributions of this work include the development of an algorithm that integrates inertial momentum with a dynamically adjusted stepsize. This feature makes the method particularly effective in scenarios where Lipschitz constants are not readily available. The algorithm guarantees convergence to a common fixed point under conditions of pseudomonotonicity and other pertinent assumptions, demonstrating its versatility for a variety of equilibrium and fixed-point problems.

Numerical experiments indicate that the proposed method outperforms existing algorithms in terms of convergence rate, accuracy, and robustness, underscoring its practical applicability in optimization, game theory, and variational analysis. Future research may explore extending the algorithm to infinite-dimensional spaces and non-convex problems, as well as applying inertial techniques and adaptive stepsizes to other optimization challenges.

Author Contributions

All authors contributed equally to this work. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

No new data were created or analyzed in this study.

Acknowledgments

J. Alzabut and M. A. Azim would like to thank Prince Sultan University for its endless support. Habib ur Rehman acknowledges financial support from Zhejiang Normal University, Jinhua 321004, China.

Conflicts of Interest

The authors declare that they have no competing interests.

References

- Qin, X.; An, N.T. Smoothing algorithms for computing the projection onto a Minkowski sum of convex sets. Comput. Optim. Appl. 2019, 74, 821–850. [Google Scholar] [CrossRef]

- An, N.T.; Nam, N.M.; Qin, X. Solving k-center problems involving sets based on optimization techniques. J. Glob. Optim. 2019, 76, 189–209. [Google Scholar] [CrossRef]

- Hussien, A.G.; Hassanien, A.E.; Houssein, E.H.; Amin, M.; Azar, A.T. New binary whale optimization algorithm for discrete optimization problems. Eng. Optim. 2019, 52, 945–959. [Google Scholar] [CrossRef]

- Elmorshedy, M.F.; Almakhles, D.; Allam, S.M. Improved performance of linear induction motors based on optimal duty cycle finite-set model predictive thrust control. Heliyon 2024, 10, e34169. [Google Scholar] [CrossRef] [PubMed]

- Banerjee, P.; Roy, S.; Sinha, A.; Hassan, M.M.; Burje, S.; Agrawal, A.; Bairagi, A.K.; Alshathri, S.; El-Shafai, W. MTD-DHJS: Makespan-Optimized Task Scheduling Algorithm for Cloud Computing with Dynamic Computational Time Prediction. IEEE Access 2023, 11, 105578–105618. [Google Scholar] [CrossRef]

- Debnath, P.; Torres, D.F.M.; Cho, Y.J. Advanced Mathematical Analysis and Its Applications; Chapman and Hall/CRC: Boca Raton, FL, USA, 2023. [Google Scholar] [CrossRef]

- Nash, J.F. Equilibrium points in n-person games. Proc. Natl. Acad. Sci. USA 1950, 36, 48–49. [Google Scholar] [CrossRef]

- Long, G.; Liu, S.; Xu, G.; Wong, S.W.; Chen, H.; Xiao, B. A Perforation-Erosion Model for Hydraulic-Fracturing Applications. SPE Prod. Oper. 2018, 33, 770–783. [Google Scholar] [CrossRef]

- Arrow, K.J.; Debreu, G. Existence of an Equilibrium for a Competitive Economy. Econometrica 1954, 22, 265–290. [Google Scholar] [CrossRef]

- Kundur, D.; Hatzinakos, D. Blind image deconvolution. IEEE Signal Process. Mag. 1996, 13, 4–64. [Google Scholar] [CrossRef]

- Patriksson, M. The Traffic Assignment Problem: Models and Methods; Courier Dover Publications: Chicago, IL, USA, 2015. [Google Scholar]

- Nash, J. Non-Cooperative Games. Ann. Math. 1951, 54, 286. [Google Scholar] [CrossRef]

- Fan, K. A Minimax Inequality and Applications. In Inequalities III; Shisha, O., Ed.; Academic Press: New York, NY, USA, 1972. [Google Scholar]

- Nikaidô, H.; Isoda, K. Note on non-cooperative convex game. Pac. J. Math. 1955, 5, 807–815. [Google Scholar] [CrossRef]

- Gwinner, J. On the penalty method for constrained variational inequalities. Optim. Theory Algorithms 1981, 86, 197–211. [Google Scholar]

- Flåm, S.D.; Antipin, A.S. Equilibrium programming using proximal-like algorithms. Math. Program. 1996, 78, 29–41. [Google Scholar] [CrossRef]

- Tran, D.Q.; Dung, M.L.; Nguyen, V.H. Extragradient algorithms extended to equilibrium problems. Optimization 2008, 57, 749–776. [Google Scholar] [CrossRef]

- Korpelevich, G. The extragradient method for finding saddle points and other problems. Matecon 1976, 12, 747–756. [Google Scholar]

- Rehman, H.U.; Kumam, W.; Sombut, K. Inertial modification using self-adaptive subgradient extragradient techniques for equilibrium programming applied to variational inequalities and fixed-point problems. Mathematics 2022, 10, 1751. [Google Scholar] [CrossRef]

- Ceng, L.C.; Yao, J.C. A hybrid iterative scheme for mixed equilibrium problems and fixed point problems. J. Comput. Appl. Math. 2008, 214, 186–201. [Google Scholar] [CrossRef]

- Zeng, L.C.; Yao, J.C. Strong convergence theorem by an extragradient method for fixed point problems and variational inequality problems. Taiwan. J. Math. 2006, 10, 1293–1303. [Google Scholar] [CrossRef]

- Peng, J.W.; Yao, J.C. A new hybrid-extragradient method for generalized mixed equilibrium problems, fixed point problems and variational inequality problems. Taiwan. J. Math. 2008, 12, 1401–1432.

- Kumam, W.; Rehman, H.U.; Kumam, P. A new class of computationally efficient algorithms for solving fixed-point problems and variational inequalities in real Hilbert spaces. J. Inequalities Appl. 2023, 2023, 48.

- Rehman, H.; Kumam, P.; Suleiman, Y.I.; Kumam, W. An adaptive block iterative process for a class of multiple sets split variational inequality problems and common fixed point problems in Hilbert spaces. Numer. Algebr. Control Optim. 2023, 13, 273–298.

- Khunpanuk, C.; Panyanak, B.; Pakkaranang, N. A New Construction and Convergence Analysis of Non-Monotonic Iterative Methods for Solving ρ-Demicontractive Fixed Point Problems and Variational Inequalities Involving Pseudomonotone Mapping. Mathematics 2022, 10, 623.

- Anh, P.N. A hybrid extragradient method extended to fixed point problems and equilibrium problems. Optimization 2013, 62, 271–283.

- Vuong, P.T.; Strodiot, J.J.; Nguyen, V.H. Extragradient methods and linesearch algorithms for solving Ky Fan inequalities and fixed point problems. J. Optim. Theory Appl. 2012, 155, 605–627.

- Vuong, P.T.; Strodiot, J.J.; Nguyen, V.H. On extragradient-viscosity methods for solving equilibrium and fixed point problems in a Hilbert space. Optimization 2015, 64, 429–451.

- Takahashi, W.; Wen, C.; Yao, J. The shrinking projection method for a finite family of demimetric mapping with variational inequality problems in a Hilbert space. Fixed Point Theor. 2018, 19, 407–420.

- Dadashi, V.; Iyiola, O.S.; Shehu, Y. The subgradient extragradient method for pseudomonotone equilibrium problems. Optimization 2020, 69, 901–923.

- Hieu, D.V.; Gibali, A. Strong convergence of inertial algorithms for solving equilibrium problems. Optim. Lett. 2020, 14, 1817–1843.

- Hieu, D.V.; Quy, P.K.; Vy, L.V. Explicit iterative algorithms for solving equilibrium problems. Calcolo 2019, 56, 11.

- Shehu, Y.; Iyiola, O.S.; Thong, D.V.; Van, N.T.C. An inertial subgradient extragradient algorithm extended to pseudomonotone equilibrium problems. Math. Meth. Oper. Res. 2021, 93, 213–242.

- Censor, Y.; Gibali, A.; Reich, S. The subgradient extragradient method for solving variational inequalities in Hilbert space. J. Optim. Theory Appl. 2011, 148, 318–335.

- Censor, Y.; Gibali, A.; Reich, S. Strong convergence of subgradient extragradient methods for the variational inequality problem in Hilbert space. Optim. Methods Softw. 2011, 26, 827–845.

- Thong, D.V.; Cholamjiak, P.; Rassias, M.T.; Cho, Y.J. Strong convergence of inertial subgradient extragradient algorithm for solving pseudomonotone equilibrium problems. Optim. Lett. 2021, 73, 1329–1354.

- Hieu, D.V. Strong convergence of a new hybrid algorithm for fixed point problems and equilibrium problems. Math. Model. Anal. 2018, 24, 1–19.

- Tan, B.; Cho, S.Y.; Yao, J.C. Accelerated inertial subgradient extragradient algorithms with non-monotonic stepsizes for equilibrium problems and fixed point problems. J. Nonlinear Var. Anal. 2022, 6, 89–122.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces, 2nd ed.; CMS Books in Mathematics; Springer International Publishing: Berlin/Heidelberg, Germany, 2017.

- Bianchi, M.; Schaible, S. Generalized monotone bifunctions and equilibrium problems. J. Optim. Theory Appl. 1996, 90, 31–43.

- Takahashi, W. A general iterative method for split common fixed point problems in Hilbert spaces and applications. Pure Appl. Funct. Anal. 2018, 3, 349–370.

- Song, Y. Iterative methods for fixed point problems and generalized split feasibility problems in Banach spaces. J. Nonlinear Sci. Appl. 2018, 11, 198–217.

- Goebel, K.; Kirk, W. Topics in Metric Fixed Point Theory; Cambridge University Press: Cambridge, UK, 1990.

- Saejung, S.; Yotkaew, P. Approximation of zeros of inverse strongly monotone operators in Banach spaces. Nonlinear Anal. Theor. 2012, 75, 742–750.

- Harker, P.T.; Pang, J.S. A Damped-Newton Method for the Linear Complementarity Problem; Lectures in Applied Mathematics; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 1990; Volume 26.

- Hieu, D.V.; Anh, P.K.; Muu, L.D. Modified hybrid projection methods for finding common solutions to variational inequality problems. Comput. Optim. Appl. 2016, 66, 75–96.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).