Time Series Prediction Based on Multi-Scale Feature Extraction

Abstract

1. Introduction

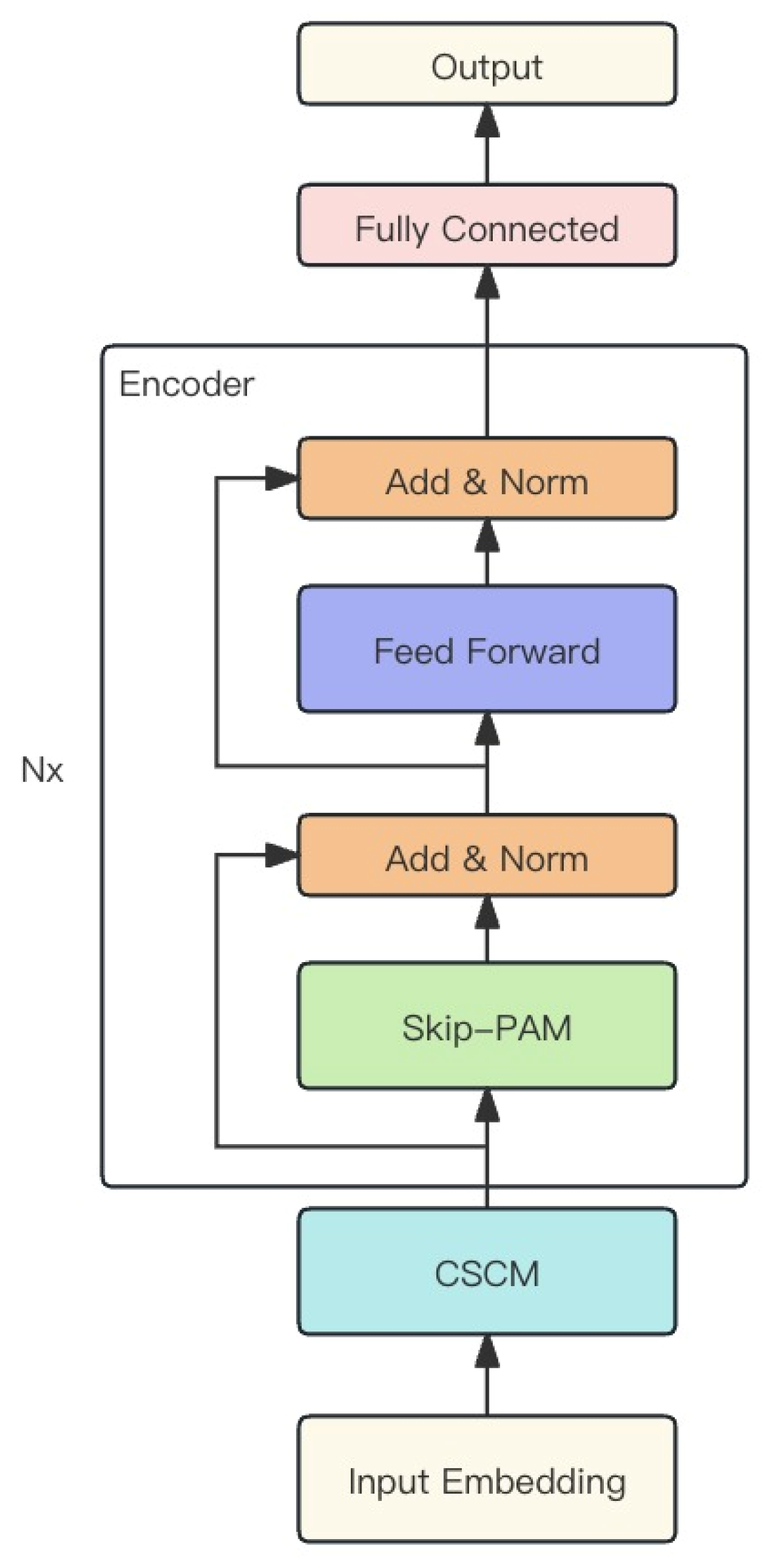

- Introduction of the Skip-PAM aimed at enabling deep learning models to effectively capture long-term and short-term features in long time series. In the encoder, the implementation of a pyramid attention mechanism processes previous feature vectors to obtain long-term dependencies and local features.

- Improvement of the CSCM aimed at establishing a pyramid data structure for the Skip-PAM. After encoding the input data, coarse-grained feature information across different time step lengths is obtained through the proposed feature convolution.

- Based on three time series datasets, our approach is compared with three baseline methods, achieving a favorable performance.

2. Recent Work

2.1. Time Series Prediction Based on CNN and LSTM

2.2. Transformer-Based Time Series Prediction Approaches

3. Model

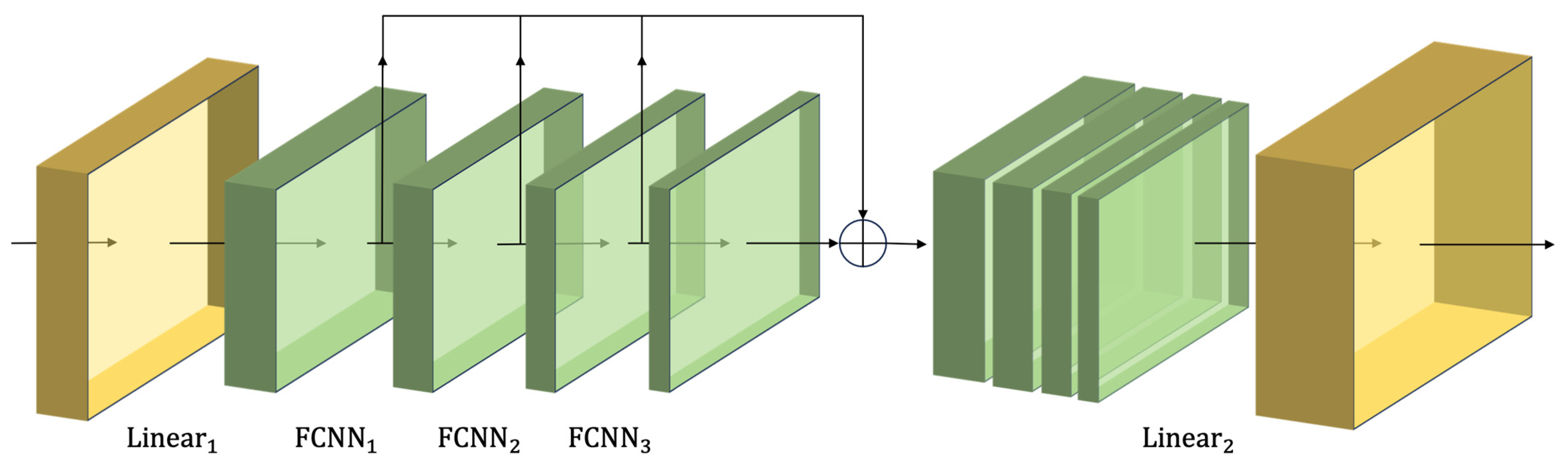

3.1. Skip-PAM

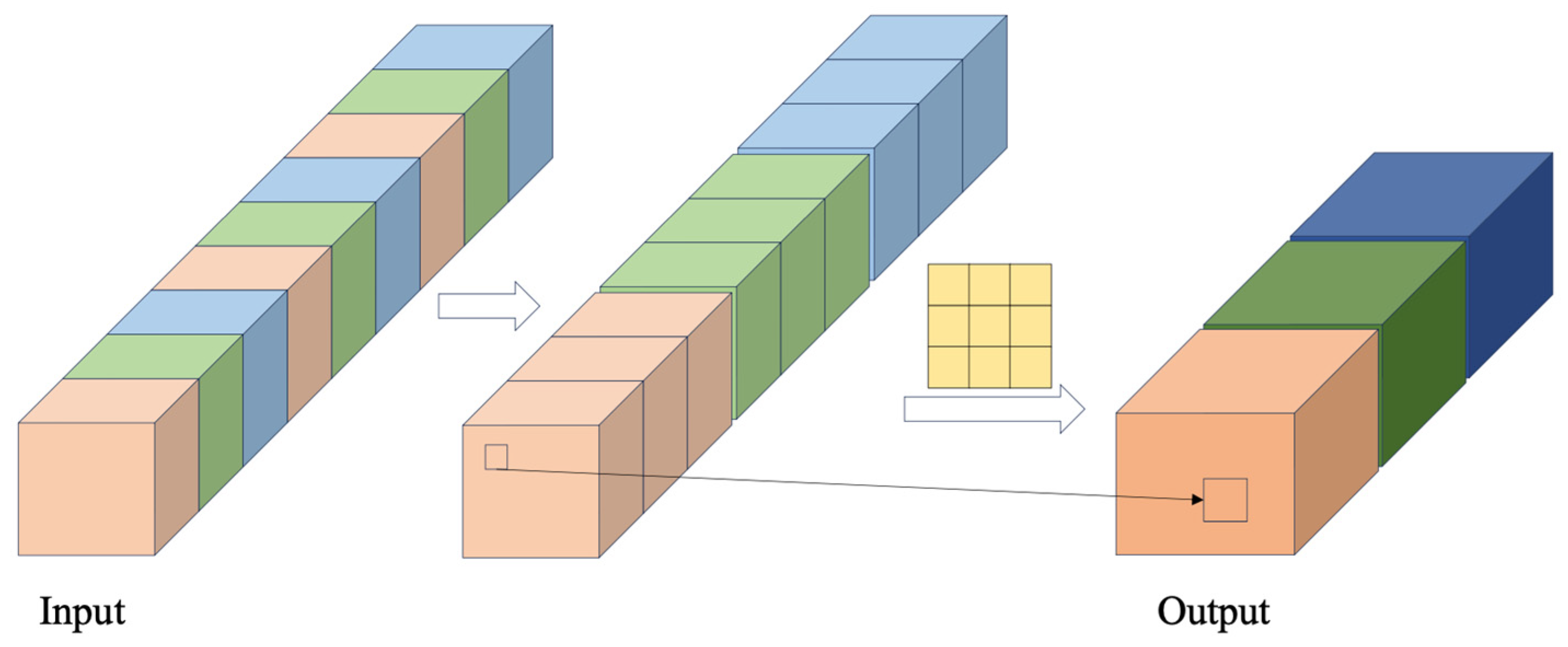

3.2. Coarser-Scale Construction Module

4. Experiment

4.1. Experimental Data

- ETTh1: The ETT dataset [24] comprises temperature records collected from transformers and six indicators related to voltage load in the period from July 2016 to July 2018. The ETTh1 data, with their hourly collection frequency, are ideal for evaluating the model’s capability to capture cyclical daily patterns and longer-term seasonal trends in energy usage related to environmental temperature fluctuations.

- ETTm1: As a more granular subset of the ETT dataset, ETTm1 provides data at 15 min intervals. This higher-resolution dataset challenges the model to discern subtler short-term variations and abrupt changes in electrical load, which are critical for operational decisions in energy distribution and for responding to rapid demand shifts.

- Electricity [29]: This dataset records the hourly electricity consumption from 2012 to 2014 for 321 customers. It serves as a rich source for examining consumer behavior over multiple years, including variations in consumption due to individual lifestyle patterns, societal events, and differing business operations.

4.2. Evaluation Metrics

4.3. Parameter Settings and Experiment Details

4.4. Comparative Experiments

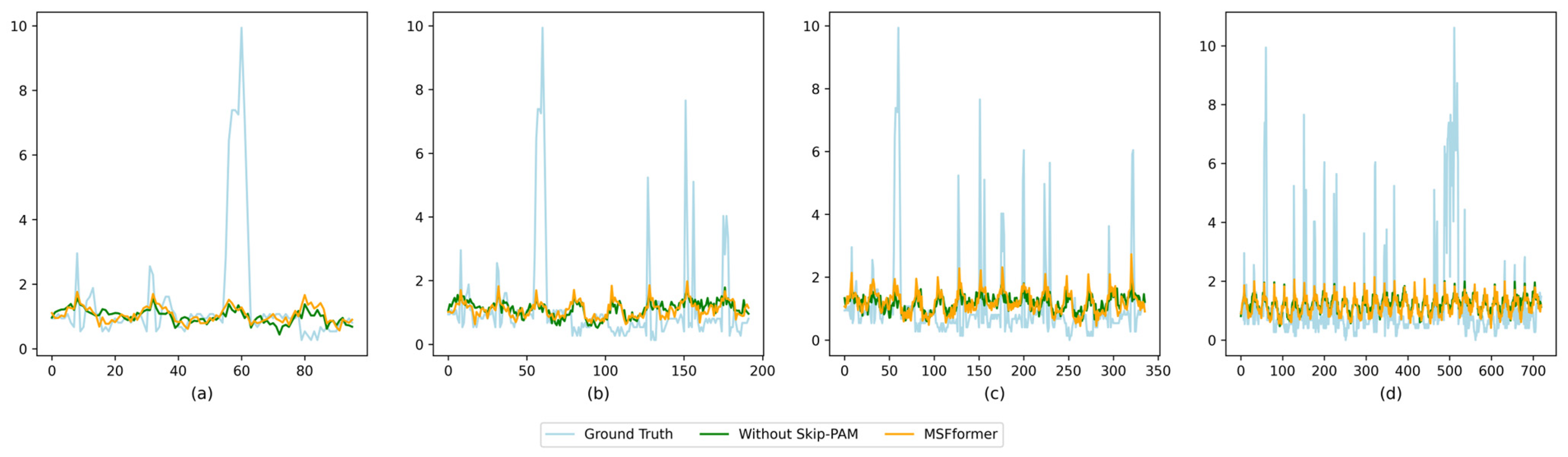

4.5. Ablation Experiments

4.6. Hyperparameter Study

- (1)

- Study on Stride Window Size Parameter

- (2)

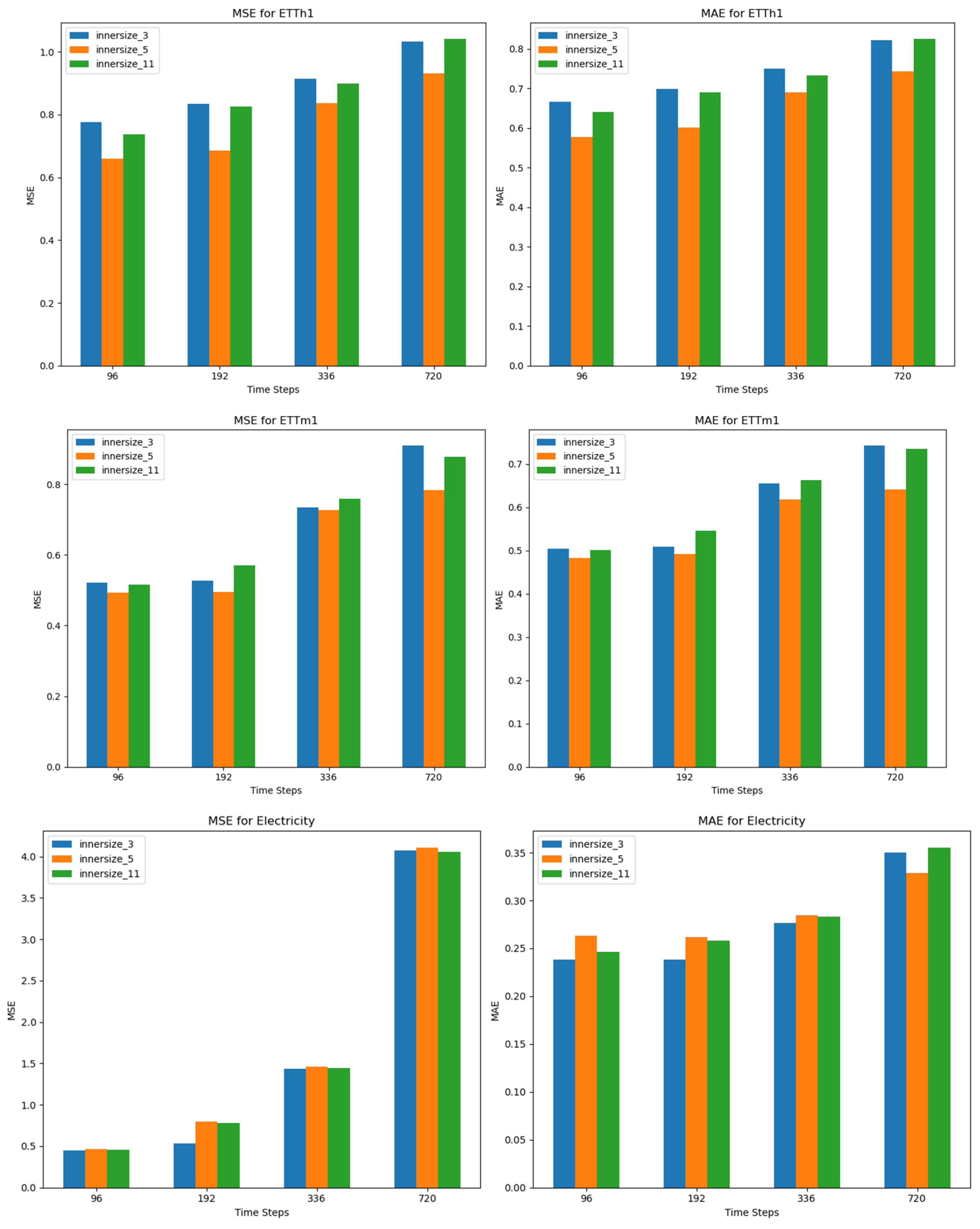

- Inner Size Parameter Study

- (3)

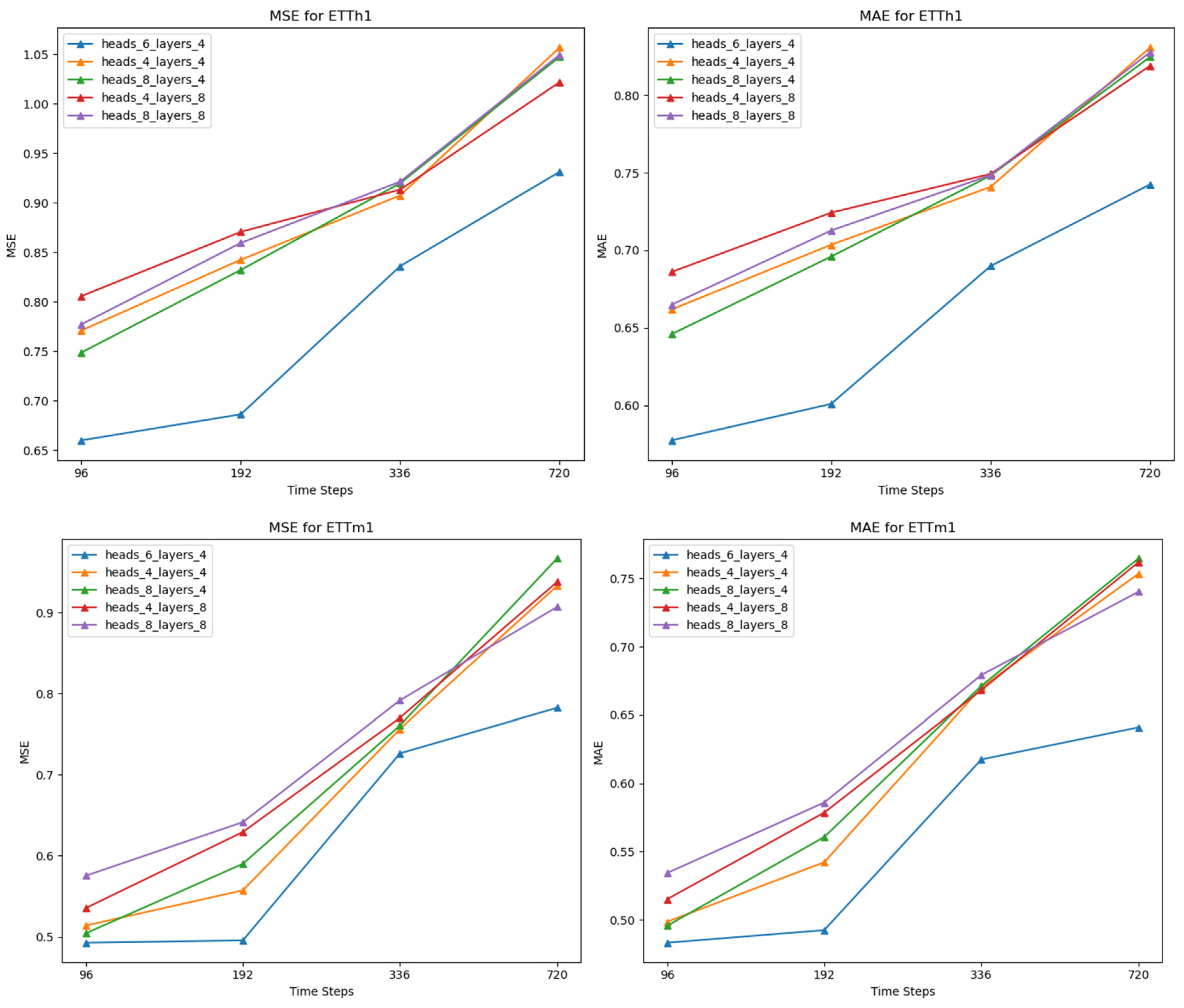

- Study on Attention Heads and Encoder Layers Parameters

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Bao, W.; Yue, J.; Rao, Y. A deep learning framework for financial time series using stacked autoencoders and long-short term memory. PLoS ONE 2017, 12, e0180944. [Google Scholar] [CrossRef] [PubMed]

- Chase, C.W. Demand-Driven Forecasting: A Structured Approach to Forecasting; John Wiley & Sons: Hoboken, NJ, USA, 2013. [Google Scholar]

- McGovern, A.; Elmore, K.L.; Gagne, D.J.; Haupt, S.E.; Karstens, C.D.; Lagerquist, R.; Smith, T.; Williams, J.K. Using artificial intelligence to improve real-time decision-making for high-impact weather. Bull. Am. Meteorol. Soc. 2017, 98, 2073–2090. [Google Scholar] [CrossRef]

- Hyndman, R.J.; Athanasopoulos, G. Forecasting: Principles and Practice; OTexts: Melbourne, Australia, 2018. [Google Scholar]

- Tran, T.H.; Nguyen, L.M.; Yeo, K.; Nguyen, N.; Phan, D.; Vaculin, R.; Kalagnanam, J. An End-to-End Time Series Model for Simultaneous Imputation and Forecast. arXiv 2023, arXiv:2306.00778. [Google Scholar]

- Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324. [Google Scholar] [CrossRef]

- Zaremba, W.; Sutskever, I.; Vinyals, O. Recurrent neural network regularization. arXiv 2014, arXiv:1409.2329. [Google Scholar]

- Ismail Fawaz, H.; Forestier, G.; Weber, J.; Idoumghar, L.; Muller, P.A. Deep learning for time series classification: A review. Data Min. Knowl. Discov. 2019, 33, 917–963. [Google Scholar] [CrossRef]

- Borovykh, A.; Bohte, S.; Oosterlee, C.W. Conditional time series forecasting with convolutional neural networks. arXiv 2017, arXiv:1703.04691. [Google Scholar]

- Lipton, Z.C.; Kale, D.C.; Elkan, C.; Wetzel, R. Learning to diagnose with LSTM recurrent neural networks. arXiv 2015, arXiv:1511.03677. [Google Scholar]

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef]

- Graves, A.; Schmidhuber, J. Framewise phoneme classification with bidirectional LSTM and other neural network architectures. Neural Netw. 2005, 18, 602–610. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30, Long Beach, CA, USA, 4–9 December 2017. [Google Scholar]

- Zeng, A.; Chen, M.; Zhang, L.; Xu, Q. Are transformers effective for time series forecasting? In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37. [Google Scholar]

- Shih, S.Y.; Sun, F.K.; Lee, H.Y. Temporal pattern attention for multivariate time series forecasting. Mach. Learn. 2019, 108, 1421–1441. [Google Scholar] [CrossRef]

- Liu, M.; Zeng, A.; Chen, M.; Xu, Z.; Lai, Q.; Ma, L.; Xu, Q. Scinet: Time series modeling and forecasting with sample convolution and interaction. Adv. Neural Inf. Process. Syst. 2022, 35, 5816–5828. [Google Scholar]

- Van Den Oord, A.; Dieleman, S.; Zen, H.; Simonyan, K.; Vinyals, O.; Graves, A.; Kalchbrenner, N.; Senior, A.; Kavukcuoglu, K. Wavenet: A generative model for raw audio. arXiv 2016, arXiv:1609.03499. [Google Scholar]

- Budhathoki, K.; Vreeken, J. Origo: Causal inference by compression. Knowl. Inf. Syst. 2018, 56, 285–307. [Google Scholar] [CrossRef]

- Bai, S.; Kolter, J.Z.; Koltun, V. An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv 2018, arXiv:1803.01271. [Google Scholar]

- Chen, Y.; Lin, W.; Wang, J.Z. A dual-attention-based stock price trend prediction model with dual features. IEEE Access 2019, 7, 148047–148058. [Google Scholar] [CrossRef]

- Wagner, A.K.; Soumerai, S.B.; Zhang, F.; Ross-Degnan, D. Segmented regression analysis of interrupted time series studies in medication use research. J. Clin. Pharm. Ther. 2002, 27, 299–309. [Google Scholar] [CrossRef]

- Lai, G.; Chang, W.C.; Yang, Y.; Liu, H. Modeling long-and short-term temporal patterns with deep neural networks. In Proceedings of the 41st International ACM SIGIR Conference on Research & Development in Information Retrieval, Ann Arbor, MI, USA, 8–12 July 2018. [Google Scholar]

- Woo, G.; Liu, C.; Sahoo, D.; Kumar, A.; Hoi, S. CoST: Contrastive learning of disentangled seasonal-trend representations for time series forecasting. arXiv 2022, arXiv:2202.01575. [Google Scholar]

- Zhou, H.; Zhang, S.; Peng, J.; Zhang, S.; Li, J.; Xiong, H.; Zhang, W. Informer: Beyond efficient transformer for long sequence time-series forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 2–9 February 2021; Volume 35. [Google Scholar]

- Wu, H.; Xu, J.; Wang, J.; Long, M. Autoformer: Decomposition transformers with auto-correlation for long-term series forecasting. Adv. Neural Inf. Process. Syst. 2021, 34, 22419–22430. [Google Scholar]

- Kitaev, N.; Kaiser, Ł.; Levskaya, A. Reformer: The efficient transformer. arXiv 2020, arXiv:2001.04451. [Google Scholar]

- Liu, S.; Yu, H.; Liao, C.; Li, J.; Lin, W.; Liu, A.X.; Dustdar, S. Pyraformer: Low-complexity pyramidal attention for long-range time series modeling and forecasting. In Proceedings of the International Conference on Learning Representations, Virtually, 3–7 May 2021. [Google Scholar]

- Zhou, T.; Ma, Z.; Wen, Q.; Wang, X.; Sun, L.; Jin, R. Fedformer: Frequency enhanced decomposed transformer for long-term series forecasting. In Proceedings of the International Conference on Machine Learning, PMLR, Baltimore, MD, USA, 17–23 July 2022. [Google Scholar]

- Electricity Load Diagrams 2011–2014. Available online: https://archive.ics.uci.edu/ml/datasets/ElectricityLoadDiagrams20112014 (accessed on 1 November 2023).

- Willmott, C.J.; Matsuura, K. Advantages of the mean absolute error (MAE) over the root mean square error (RMSE) in assessing average model performance. Clim. Res. 2005, 30, 79–82. [Google Scholar] [CrossRef]

- Chai, T.; Draxler, R.R. Root mean square error (RMSE) or mean absolute error (MAE)?–Arguments against avoiding RMSE in the literature. Geosci. Model Dev. 2014, 7, 1247–1250. [Google Scholar] [CrossRef]

- Hyndman, R.J.; Koehler, A.B. Another look at measures of forecast accuracy. Int. J. Forecast. 2006, 22, 679–688. [Google Scholar] [CrossRef]

| Dataset | Feature Counts | Sampling Frequencies | Data Point Numbers | Mean | Std | Min | Max |

|---|---|---|---|---|---|---|---|

| ETTh1 | 7 | 1 h | 121,940 | 4.58 | 25.59 | −25.09 | 46.01 |

| ETTm1 | 7 | 15 min | 487,760 | 4.59 | 25.62 | −26.37 | 46.01 |

| Electricity | 321 | 1 h | 7,506,264 | 2551.88 | 231,879,417.19 | 0.00 | 764,500.00 |

| Model | Transformer [13] | Informer [24] | Pyraformer [27] | MSFformer (Ours) | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Metric | MSE | MAE | MAPE | MSE | MAE | MAPE | MSE | MAE | MAPE | MSE | MAE | MAPE | |

| ETTh1 | 96 | 0.9306 | 0.7750 | 9.2998 | 0.9749 | 0.7814 | 7.9607 | 0.7671 | 0.6515 | 9.1344 | 0.6598 | 0.5774 | 8.3353 |

| 192 | 1.0529 | 0.8226 | 8.7659 | 1.0265 | 0.7917 | 10.8025 | 0.8567 | 0.7038 | 8.7809 | 0.6861 | 0.6009 | 8.3445 | |

| 336 | 1.1429 | 0.8757 | 11.4834 | 1.0334 | 0.7751 | 14.3382 | 0.9408 | 0.7564 | 12.9577 | 0.8359 | 0.6898 | 12.2144 | |

| 720 | 1.0951 | 0.8278 | 18.3381 | 1.2032 | 0.8694 | 18.3253 | 1.0680 | 0.8346 | 23.7789 | 0.9312 | 0.7424 | 24.5435 | |

| ETTm1 | 96 | 0.6548 | 0.5905 | 3.0617 | 0.6374 | 0.5635 | 2.5444 | 0.5293 | 0.5002 | 2.4246 | 0.4926 | 0.4831 | 2.3761 |

| 192 | 0.8633 | 0.7138 | 3.7736 | 0.7303 | 0.6198 | 2.5095 | 0.5432 | 0.5167 | 2.2955 | 0.4955 | 0.4923 | 2.3117 | |

| 336 | 1.0364 | 0.7898 | 3.8568 | 1.1851 | 0.8039 | 3.5393 | 0.6930 | 0.6307 | 3.3065 | 0.7266 | 0.6175 | 3.1333 | |

| 720 | 1.1136 | 0.8301 | 3.9590 | 1.2199 | 0.8373 | 3.5921 | 0.9290 | 0.7305 | 3.6573 | 0.7825 | 0.6410 | 3.2721 | |

| Electricity | 96 | 0.3326 | 0.3971 | 4.0659 | 0.4854 | 0.4917 | 5.4207 | 0.4965 | 0.2939 | 1.7223 | 0.4698 | 0.2636 | 1.4362 |

| 192 | 0.3434 | 0.4083 | 4.6065 | 0.5001 | 0.5102 | 5.7366 | 0.5854 | 0.3061 | 1.9153 | 0.7943 | 0.2619 | 1.6628 | |

| 336 | 0.3631 | 0.4271 | 5.1759 | 0.4352 | 0.4734 | 5.3652 | 1.4643 | 0.3134 | 2.3761 | 1.4602 | 0.2843 | 2.1562 | |

| 720 | 0.3832 | 0.4244 | 5.2572 | 0.5197 | 0.5311 | 5.7170 | 4.0697 | 0.3762 | 3.1227 | 4.1038 | 0.3290 | 2.5450 | |

| Model | MSE | MAE |

|---|---|---|

| Transformer | 3.550 | 1.477 |

| Informer | 7.546 | 2.092 |

| Pyraformer | 1.176 | 0.849 |

| 1.103 | 0.818 | |

| 1.054 | 0.802 |

| Model | MSFformer | Without Skip-PAM | |||

|---|---|---|---|---|---|

| Metric | MSE | MAE | MSE | MAE | |

| ETTh1 | 96 | 0.6598 | 0.5774 | 0.7530 | 0.6487 |

| 192 | 0.6861 | 0.6009 | 0.8308 | 0.6957 | |

| 336 | 0.8359 | 0.6898 | 0.9078 | 0.7418 | |

| 720 | 0.9312 | 0.7424 | 1.0365 | 0.8223 | |

| ETTm1 | 96 | 0.4926 | 0.4831 | 0.5541 | 0.5082 |

| 192 | 0.4955 | 0.4923 | 0.5365 | 0.5071 | |

| 336 | 0.7266 | 0.6175 | 0.7585 | 0.6262 | |

| 720 | 0.7825 | 0.6410 | 0.8831 | 0.7326 | |

| Electricity | 96 | 0.4698 | 0.2636 | 0.4816 | 0.2791 |

| 192 | 0.7943 | 0.2619 | 0.8023 | 0.2667 | |

| 336 | 1.4602 | 0.2843 | 1.4744 | 0.3011 | |

| 720 | 4.1038 | 0.3290 | 4.1433 | 0.3576 | |

| Window Size | {4, 4, 6} | {3, 6, 6} | {3, 4, 8} | ||||

|---|---|---|---|---|---|---|---|

| Metric | MSE | MAE | MSE | MAE | MSE | MAE | |

| ETTh1 | 96 | 0.6598 | 0.5774 | 0.7577 | 0.6531 | 0.7696 | 0.6595 |

| 192 | 0.6861 | 0.6009 | 0.8374 | 0.6982 | 0.8262 | 0.6923 | |

| 336 | 0.8359 | 0.6898 | 0.9116 | 0.7431 | 0.9023 | 0.7401 | |

| 720 | 0.9312 | 0.7424 | 1.0564 | 0.8337 | 1.0450 | 0.8190 | |

| ETTm1 | 96 | 0.4926 | 0.4831 | 0.5125 | 0.4993 | 0.5048 | 0.4933 |

| 192 | 0.4955 | 0.4923 | 0.5893 | 0.5589 | 0.5592 | 0.5474 | |

| 336 | 0.7266 | 0.6175 | 0.6934 | 0.6302 | 0.7043 | 0.6334 | |

| 720 | 0.7825 | 0.6410 | 0.9332 | 0.7443 | 0.8564 | 0.7175 | |

| Electricity | 96 | 0.4698 | 0.2636 | 0.4509 | 0.2401 | 0.4443 | 0.2311 |

| 192 | 0.7943 | 0.2619 | 0.7830 | 0.2568 | 0.7783 | 0.2483 | |

| 336 | 1.4602 | 0.2843 | 1.4362 | 0.2740 | 1.4341 | 0.2713 | |

| 720 | 4.1038 | 0.3290 | 4.0574 | 0.3261 | 4.0695 | 0.3472 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, R.; Hao, Y. Time Series Prediction Based on Multi-Scale Feature Extraction. Mathematics 2024, 12, 973. https://doi.org/10.3390/math12070973

Zhang R, Hao Y. Time Series Prediction Based on Multi-Scale Feature Extraction. Mathematics. 2024; 12(7):973. https://doi.org/10.3390/math12070973

Chicago/Turabian StyleZhang, Ruixue, and Yongtao Hao. 2024. "Time Series Prediction Based on Multi-Scale Feature Extraction" Mathematics 12, no. 7: 973. https://doi.org/10.3390/math12070973

APA StyleZhang, R., & Hao, Y. (2024). Time Series Prediction Based on Multi-Scale Feature Extraction. Mathematics, 12(7), 973. https://doi.org/10.3390/math12070973