Abstract

Particle Swarm Optimization (PSO) faces increasing challenges when solving high-dimensional global optimization problems. To overcome this difficulty, this paper proposes a novel PSO variant hybridized with a Sine Cosine Algorithm (SCA) strategy, named Velocity Four Sine Cosine Particle Swarm Optimization (VFSCPSO). Introducing the SCA strategy into the velocity formula helps the algorithm locate the global optimal solution more accurately and increases the flexibility of PSO. A series of experiments is conducted on the CEC2005 test suite, comparing VFSCPSO with its component algorithms, algorithm variants, and other well-performing intelligent algorithms. The experimental results show that the algorithm effectively improves on the overall performance of its component algorithms, and the Friedman test shows that it is highly competitive. The algorithm also performs well in PID parameter tuning. Therefore, VFSCPSO solves high-dimensional global optimization problems more effectively.

Keywords:

Particle Swarm Optimization; Sine Cosine Algorithm; hybrid algorithm; high-dimensional global optimization problem

MSC:

68W50

1. Introduction

Over the past half century, researchers have drawn inspiration from biological behavior and natural phenomena to propose a variety of intelligent algorithms, which are now widely applied in many fields. Compared with traditional optimization algorithms, intelligent algorithms generally offer stronger global search capability and adaptability, which lets them handle a wide variety of optimization problems and overcome many limitations of traditional methods. As a result, they outperform traditional optimization algorithms on many optimization problems and are often the more effective and practical choice.

According to their sources of inspiration, intelligent algorithms can be roughly divided into four types. Firstly, evolutionary algorithms are derived from abstractions of the laws of biological evolution; these include Genetic Algorithm (GA) [1], Differential Evolution (DE) [2], Dandelion Optimizer (DO) [3], and Biogeography-Based Optimization (BBO) [4]. Secondly, swarm intelligence optimization algorithms incorporate the characteristics of biological groups into the solution of optimization problems; these include Particle Swarm Optimization (PSO) [5], Shuffled Frog Leaping Algorithm (SFLA) [6], Firefly Algorithm (FA) [7], Mayfly Algorithm (MA) [8], and Peafowl Optimization Algorithm (POA) [9]. Thirdly, physics-based algorithms introduce natural laws into the solution of optimization problems; these include Simulated Annealing (SA) [10] and the Sine Cosine Algorithm (SCA) [11]. Fourthly, human-based algorithms introduce characteristics of human behavior, such as Student Psychology-Based Optimization (SPBO) [12] and the Optical Microscope Algorithm (OMA) [13].

PSO [14] is an iterative, population-based optimization technique that has been used to solve real-world optimization problems in many fields, such as path planning [15], parameter identification [16], image processing [17], and biomedical technology [18]. The advantages of PSO lie mainly in four aspects. Firstly, PSO emulates biological behavior, its theory and structure are relatively simple, and it has few control parameters, so extensive parameter tuning is unnecessary and the parameters are relatively easy to adjust for a given problem [19]. Secondly, PSO has strong global search ability: through cooperation and information sharing, the particles can quickly search a wide region for potential optimal solutions and approach the global optimal solution. Thirdly, PSO does not rely on gradient information of the objective function, which gives it an advantage on complex optimization problems, makes it more flexible than traditional optimization algorithms, and allows it to handle large-scale problems. Fourthly, PSO performs well on continuous optimization problems and usually finds high-quality solutions. These properties make PSO particularly attractive for high-dimensional optimization problems, so we adopt PSO as the basis for resolving such problems effectively.

Like other intelligent algorithms, PSO has some disadvantages, such as slow convergence, premature convergence, and a tendency to fall into local optima, and researchers have proposed many improvements. Among current inertia weight selection strategies, the most popular is still the linearly decreasing inertia weight strategy of Shi et al. [20], which balances exploration and exploitation during evolution. Liu et al. [21] proposed Adaptive Weighted Particle Swarm Optimization (AWPSO) to improve the convergence velocity of the algorithm. Ahmed et al. [22] proposed a novel tracking technique based on Self-Tuning Particle Swarm Optimization (ST-PSO), which maximizes the power output of a proton-exchange membrane fuel cell. Zhang et al. [23] combined a self-corrective strategy with dimension-by-dimension learning to propose Particle Swarm Optimization with Self-Corrective and Dimension-by-Dimension Learning Capabilities (SCDLPSO), which is more competitive on optimization problems of different dimensions. Xue et al. [24] constructed a strategy pool of four strategies for solving high-dimensional feature selection problems. In addition, introducing features of other intelligent algorithms can yield hybrid algorithms with better performance. Kaseb et al. [25] added the crossover and mutation operators of GA to PSO, which further balanced the exploration and exploitation capability of the algorithm and improved its ability to handle urban wind conditions. Shams et al. [26] proposed a hybrid Dipper-Throated Optimization and Particle Swarm Optimization (DTPSO), which combines the advantages of both algorithms for hepatocellular carcinoma prediction.
Despite the large number of improvement studies, there is still room to enhance the overall performance of PSO [27], and remedying its shortcomings while maintaining its advantages [28] requires further work. Although PSO performs well on many problems, it still tends to fall into local optima and converges slowly. Meanwhile, different optimization problems have different characteristics and requirements, so a PSO variant suited to one problem may not suit others or a broader range of application scenarios. Improving PSO can not only increase the overall performance of the algorithm but also adapt it to new research trends and requirements. Therefore, although the classical PSO has many variants, many aspects of it can still be improved. Improving PSO remains an important research direction that can promote algorithmic innovation and bring new ideas to the field of optimization algorithms.

SCA is an optimization technique based on the mathematical properties of the sine and cosine functions: it searches by exploiting their periodic behavior, and it has been applied to engineering design optimization [29], neural networks [30], path planning [31], and other problems. SCA has three clear advantages. Firstly, SCA makes full use of the periodic property of the sine–cosine function to balance exploration and exploitation, which gives the algorithm good overall performance. Secondly, SCA does not rely on gradient information [32], so it is widely applicable. Thirdly, SCA has few parameters to adjust, which reduces the tedious work of parameter tuning and makes the algorithm convenient to use. Although SCA performs well on many optimization problems, it may need more time and computational resources to find the optimal solution as problem complexity increases, and researchers have therefore improved it in various ways. Zhou et al. [33] used Gaussian mutation to increase the diversity of SCA, and the resulting FGSCA became a powerful tool. Ma et al. [34] used a nonlinear strategy and added hill climbing to the local search; their ISCA optimized the learning rate and the number of hidden-layer neurons of a GRU network to predict the storage and utilization of solar energy in photovoltaic power generation more accurately. Hamad et al. [35] proposed QLESCA, which obtained superior fitness values. Based on the advantages above, we selected SCA as our hybrid algorithmic strategy.

Most current intelligent algorithms cannot effectively solve large-scale problems [36]. Hence, enhancing the ability of intelligent algorithms to tackle high-dimensional global optimization problems [37] is a highly valuable research area. Problems with more than 100 dimensions are considered high-dimensional optimization problems; this category includes large-scale problems, which are abstracted from complex real-world optimization problems and have gradually become a research hotspot. As the number of decision variables increases, the cost of computing the objective function value and the constraints rises dramatically, making the problem harder to solve. The solution space of such a problem is vast and contains a massive number of potential solutions, of which only a small portion are feasible and meaningful. Algorithms therefore easily fall into local optima, and efficient global search is required. Xu et al. [38] constructed a strategy pool from PSO variants and derived new PSO strategy sets, which improved the overall performance of PSO on large-scale problems. Wang et al. [39] proposed a PSO based on a reinforcement learning layer, which adopts a hierarchical number-controlled reinforcement learning strategy and introduces a level competition mechanism, effectively improving PSO convergence on large-scale problems. Yi et al. [40] improved a GA variant that performs well on large-scale problems in human electroencephalography (EEG) signal processing. The complexity of the problems we face keeps increasing, yet although the algorithms in the above studies perform better on large-scale problems, they can only solve problems with at most a thousand dimensions.
Solving large-scale problems is a challenging task that requires a combination of domain knowledge, a suitable algorithm, and computational resources to obtain a feasible solution. In this context, it remains necessary to improve existing intelligent algorithms for high-dimensional global optimization problems.

With increasing automation, real-world requirements for motor control are also rising. Servo motor systems usually use a PID controller, whose tuned parameters determine the behavior of the entire system. Since Ziegler and Nichols proposed the Ziegler–Nichols tuning method [41] in 1942, many classical parameter-tuning methods have emerged. Selecting appropriate PID parameters is a critical step in the control process, and PID controllers tuned by intelligent algorithms can play an important role. Many intelligent algorithms are suitable for optimizing the PID parameters of servo motors, helping to determine the optimal parameters and significantly improving the performance of the whole control system. GA searches for the best parameter combination by applying selection and mutation to different PID parameter sets [42] so as to minimize performance metrics such as system error or vibration, giving the system good response characteristics. SA [43] finds more appropriate PID parameters by progressively decreasing the probability of accepting a worse solution during the search. The improved FA [44] self-tunes the PID parameters by introducing inertia weights and tuning factors, which ultimately improves the capability of the PID controller. Although the application of intelligent algorithms to PID parameter tuning is relatively mature, the problem has not been fully solved, and improved intelligent algorithms still leave room for further enhancement.

Over the past three decades, the theory of PSO has been continuously enriched. Although PSO has been widely used in different fields, most PSO variants target general global optimization problems; relatively little research has addressed high-dimensional problems, especially large-scale ones, even though many complex optimization problems of this kind urgently need to be solved and existing PSO variants cannot solve them efficiently. SCA is a novel intelligent algorithm, and introducing its advantages into PSO can open up more possibilities in the field of optimization, yielding a hybrid algorithm that performs better and solves optimization problems more efficiently. PSO suffers from weak local search ability, whereas SCA suffers from low stability and poor adaptability in high-dimensional environments. Although there has been a great deal of research, no algorithm is best for all problems; there are only better ones. To address this challenge, this paper proposes a new method, the Velocity Four Sine Cosine Particle Swarm Optimization (VFSCPSO) algorithm, which introduces an SCA strategy into PSO. The new algorithm achieves high optimization accuracy and stability and solves optimization problems effectively. The primary contributions of this paper are summarized as follows:

- A new SCA strategy is proposed that achieves high flexibility by regulating the search range through the periodic variation of the sine–cosine function.

- A novel framework of PSO is proposed, which is more efficient than the original PSO algorithm. Meanwhile, VFSCPSO does not increase the computational complexity compared to PSO.

- The scalability of VFSCPSO in solving large-scale problems is verified through 10,000-dimensional numerical experiments on the CEC2005 test suite, and its robustness in the PID parameter-tuning problem is verified through simulation experiments.

2. Standard PSO and SCA

2.1. Particle Swarm Optimization (PSO)

In 1995, Eberhart and Kennedy proposed PSO, a swarm intelligence algorithm that abstracts the foraging behavior of bird flocks into a mathematical model. In the standard PSO [45], a set of random solutions is first generated. Assume that there are N particles in total and that the information of particle i is represented as a D-dimensional vector: its position is denoted as $X_i = (x_{i1}, x_{i2}, \ldots, x_{iD})$, its velocity as $V_i = (v_{i1}, v_{i2}, \ldots, v_{iD})$, its individual extreme position as $P_i = (p_{i1}, p_{i2}, \ldots, p_{iD})$, and the global extreme position of the population as $P_g = (p_{g1}, p_{g2}, \ldots, p_{gD})$. The velocity and position of particle i in the (k+1)th iteration are updated as

$$v_{id}^{k+1} = w\,v_{id}^{k} + c_1 r_1 \left(p_{id}^{k} - x_{id}^{k}\right) + c_2 r_2 \left(p_{gd}^{k} - x_{id}^{k}\right) \tag{1}$$

$$x_{id}^{k+1} = x_{id}^{k} + v_{id}^{k+1} \tag{2}$$

The velocity update formula, Equation (1), is one of the core formulas of the standard PSO. Here, w is the inertia weight, which maintains the motion inertia of the particle and reflects how much of its previous velocity is inherited. At the kth iteration, $v_{id}^{k}$ denotes the velocity of particle i in the dth dimension of the search space; $x_{id}^{k}$ denotes the position of particle i in the dth dimension; $p_{id}^{k}$ denotes the individual extreme position of particle i; and $p_{gd}^{k}$ denotes the global extreme position of the whole population. $c_1$ and $c_2$ are the learning factors, and $r_1$ and $r_2$ are random numbers in $[0, 1]$.
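To make Equations (1) and (2) concrete, the following minimal Python sketch updates a single particle. It is an illustration only, not the authors' MATLAB code; the function name `pso_update` is hypothetical, and drawing $r_1$ and $r_2$ independently for every dimension is an assumption.

```python
import random


def pso_update(x, v, pbest, gbest, w, c1, c2, rng=random):
    """Eqs. (1)-(2) for one particle; x, v, pbest, gbest are lists of length D."""
    new_x, new_v = [], []
    for xd, vd, pd, gd in zip(x, v, pbest, gbest):
        r1, r2 = rng.random(), rng.random()  # assumed: fresh U[0, 1) draws per dimension
        # Eq. (1): inertia term + individual cognitive term + group cognitive term
        vd_next = w * vd + c1 * r1 * (pd - xd) + c2 * r2 * (gd - xd)
        new_v.append(vd_next)
        new_x.append(xd + vd_next)  # Eq. (2): move by the new velocity
    return new_x, new_v
```

When a particle already sits on both $p_{id}^{k}$ and $p_{gd}^{k}$, only the inertia term remains, so the particle simply keeps moving with velocity scaled by w.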

The other core formula of PSO, Equation (2), consists of two key components. The first part, $x_{id}^{k}$, is the position of particle i, i.e., its candidate solution at the kth iteration; the second part, $v_{id}^{k+1}$, determines the adjustment of the position of particle i in the (k+1)th iteration, i.e., the direction of its motion. Together, the two parts determine the position, and hence the candidate solution, of the particle in the (k+1)th iteration. The velocity and position boundary conditions of particle i in the (k+1)th iteration are

$$v_{id}^{k+1} = \begin{cases} v_{\max}, & v_{id}^{k+1} > v_{\max} \\ v_{\min}, & v_{id}^{k+1} < v_{\min} \end{cases} \tag{3}$$

$$x_{id}^{k+1} = \begin{cases} x_{\max}, & x_{id}^{k+1} > x_{\max} \\ x_{\min}, & x_{id}^{k+1} < x_{\min} \end{cases} \tag{4}$$

In Equations (3) and (4), before the position of particle i is updated, the algorithm checks whether the current velocity exceeds the given velocity range; if it does, the velocity is replaced by the corresponding boundary value. The same check is applied to the current position. These boundary conditions improve the search ability and efficiency of the particles and prevent the population from dispersing when particles jump out of the search range. For a minimization problem, the individual and global extreme positions are updated as

$$P_i^{k+1} = \begin{cases} X_i^{k+1}, & f(X_i^{k+1}) < f(P_i^{k}) \\ P_i^{k}, & \text{otherwise} \end{cases} \tag{5}$$

$$P_g^{k+1} = \begin{cases} P_i^{k+1}, & f(P_i^{k+1}) < f(P_g^{k}) \\ P_g^{k}, & \text{otherwise} \end{cases} \tag{6}$$

In Equations (5) and (6), the individual and global extreme values of the population are updated according to the objective function f, which guides the population to search iteratively toward the global optimal solution. In comparison experiments, the termination condition is usually reaching the maximum number of iterations. The pseudocode of PSO is shown in Algorithm 1.

Algorithm 1 Particle Swarm Optimization

Input: population size N, dimension D, iterations K, learning factors $c_1$, $c_2$, inertia weight w, velocity boundaries $v_{\min}$, $v_{\max}$, position boundaries $x_{\min}$, $x_{\max}$
Output: optimal fitness value
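As a rough, runnable illustration of Algorithm 1, the sketch below minimizes the sphere function. The names `pso` and `sphere`, the linearly decreasing inertia weight from 0.9 to 0.4 (in the spirit of Shi et al. [20]), $c_1 = c_2 = 2$, and a velocity bound of 20% of the position range are all illustrative assumptions, not the settings used in the experiments.

```python
import random


def sphere(x):
    """Benchmark objective: sum of squares, minimum 0 at the origin."""
    return sum(xi * xi for xi in x)


def pso(f, dim, n=30, iters=300, c1=2.0, c2=2.0, w_max=0.9, w_min=0.4,
        x_bounds=(-10.0, 10.0), seed=0):
    """Minimal PSO sketch for minimizing f over a box."""
    rng = random.Random(seed)
    x_min, x_max = x_bounds
    v_max = 0.2 * (x_max - x_min)  # assumed velocity bound
    v_min = -v_max
    # Random initialization of positions and velocities
    X = [[rng.uniform(x_min, x_max) for _ in range(dim)] for _ in range(n)]
    V = [[rng.uniform(v_min, v_max) for _ in range(dim)] for _ in range(n)]
    P = [x[:] for x in X]                     # individual extreme positions
    P_fit = [f(x) for x in X]
    g = min(range(n), key=lambda i: P_fit[i])
    G, G_fit = P[g][:], P_fit[g]              # global extreme position
    for k in range(iters):
        w = w_max - (w_max - w_min) * k / iters  # linearly decreasing inertia weight
        for i in range(n):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vd = (w * V[i][d] + c1 * r1 * (P[i][d] - X[i][d])
                      + c2 * r2 * (G[d] - X[i][d]))                  # Eq. (1)
                V[i][d] = min(max(vd, v_min), v_max)                 # Eq. (3)
                X[i][d] = min(max(X[i][d] + V[i][d], x_min), x_max)  # Eqs. (2), (4)
            fit = f(X[i])
            if fit < P_fit[i]:                                       # Eq. (5)
                P[i], P_fit[i] = X[i][:], fit
                if fit < G_fit:                                      # Eq. (6)
                    G, G_fit = X[i][:], fit
    return G, G_fit
```

For example, `best_x, best_f = pso(sphere, dim=5)` returns the best position and fitness found. This sketch updates the global best immediately after each particle improves (an asynchronous update); updating it once per iteration is an equally common choice.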

2.2. Sine Cosine Algorithm (SCA)

Mirjalili proposed SCA in 2016, inspired by the properties of the sine and cosine functions. Its central idea is to find the optimal solution by combining the periodic variation of the sine–cosine function in the search space with a random search strategy. First, a set of candidate solutions is randomly initialized in the search space, the fitness of each candidate solution is calculated, and the current optimal solution is recorded. The optimization process is then divided into two phases, exploration and exploitation. In the exploration phase, the random perturbations are large, so the solutions in the solution set change markedly and unknown regions of the solution space are searched rapidly. In the exploitation phase, the random variations are smaller, and weak random perturbations are applied to the solution set to search the neighborhood of the current solutions thoroughly. The position update formula of SCA is

$$X_i^{k+1} = \begin{cases} X_i^{k} + r_1 \sin(r_2)\,\left| r_3 P_i^{k} - X_i^{k} \right|, & r_4 < 0.5 \\ X_i^{k} + r_1 \cos(r_2)\,\left| r_3 P_i^{k} - X_i^{k} \right|, & r_4 \geq 0.5 \end{cases} \tag{7}$$

The position of the solution is updated using Equation (7), which is the core formula of SCA. $X_i^{k}$ is the position of the current particle i at the kth iteration; $P_i^{k}$ is the historical individual optimal solution; $r_1$ is an adaptive parameter; $r_2$, $r_3$, and $r_4$ are random numbers; and $|\cdot|$ denotes the absolute value.

The role of $r_1$ is to balance the range of variation of the sine and cosine terms so that the algorithm shifts gradually from global to local search: a larger $r_1$ improves the global search capability, whereas a smaller $r_1$ facilitates local exploitation. $r_2$ is a random number in $[0, 2\pi]$ that defines how far the updated solution moves toward or away from the optimal solution, and hence the direction of movement and the maximum achievable step size. $r_3$ is a random number in $[0, 2]$ that gives the optimal solution a random weight, stochastically emphasizing ($r_3 > 1$) or weakening ($r_3 < 1$) the influence of the target point on the distance the candidate solution moves. $r_4$ is a random number in $[0, 1]$ that selects the sine or cosine update; switching randomly between the two with equal probability breaks the coupling between the iterative step size and direction.
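A minimal sketch of the SCA position update in Equation (7) for one solution follows. The function name `sca_update` is hypothetical, and drawing $r_2$, $r_3$, and $r_4$ independently for each dimension is an assumption rather than something stated above.

```python
import math
import random


def sca_update(x, p, r1, rng=random):
    """Eq. (7): move solution x relative to target point p (lists of length D)."""
    new_x = []
    for xd, pd in zip(x, p):
        r2 = rng.uniform(0.0, 2.0 * math.pi)  # how far toward/away from the target
        r3 = rng.uniform(0.0, 2.0)            # random weight on the target point
        r4 = rng.random()                     # selects the sine or cosine branch
        trig = math.sin(r2) if r4 < 0.5 else math.cos(r2)
        new_x.append(xd + r1 * trig * abs(r3 * pd - xd))
    return new_x
```

Because $|\sin(r_2)|$ and $|\cos(r_2)|$ never exceed 1, each coordinate moves by at most $r_1 \left| r_3 p_d - x_d \right|$, so shrinking $r_1$ directly shrinks the step.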

The adaptive parameter $r_1$ is computed as

$$r_1 = a - k\frac{a}{K} \tag{8}$$

where k is the current iteration and K is the maximum number of iterations. Equation (8) makes $r_1$ decrease linearly with the iterations, so the amplitude of the sine and cosine terms, and hence the step of the next move, shrinks gradually. The constant a is the initial amplitude of the sine–cosine terms and therefore determines the maximum distance a solution can move in the search space; a is usually set to 2. Because $r_1$ governs the transition from exploration to exploitation, a reasonable setting of $r_1$ is crucial for the performance and convergence of the algorithm. The SCA pseudocode is shown in Algorithm 2.
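Equation (8) can be checked numerically with a one-line helper; the name `r1_schedule` is hypothetical. With a = 2 and K = 4, $r_1$ falls linearly from 2 at the first iteration to 0 at the last.

```python
def r1_schedule(k, K, a=2.0):
    """Eq. (8): r1 decreases linearly from a (at k = 0) to 0 (at k = K)."""
    return a - k * a / K


print([r1_schedule(k, 4) for k in range(5)])  # [2.0, 1.5, 1.0, 0.5, 0.0]
```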

Algorithm 2 Sine Cosine Algorithm

Input: dimension D, iterations K, constant parameter a
Output: optimal fitness value
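The sketch below assembles Equations (7) and (8) into a complete SCA loop on the sphere function. It assumes, as in Mirjalili's original formulation, that the target point is the best solution found so far by the whole population; this differs in wording from the individual optimum $P_i^{k}$ named above, so treat it as an assumption. Clamping to the search bounds, the population size, and the iteration count are illustrative choices.

```python
import math
import random


def sphere(x):
    """Benchmark objective: sum of squares, minimum 0 at the origin."""
    return sum(xi * xi for xi in x)


def sca(f, dim, n=30, iters=500, a=2.0, bounds=(-10.0, 10.0), seed=0):
    """Minimal SCA sketch for minimizing f over a box."""
    rng = random.Random(seed)
    lo, hi = bounds
    X = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n)]
    fit = [f(x) for x in X]
    b = min(range(n), key=lambda i: fit[i])
    best, best_fit = X[b][:], fit[b]  # assumed target point: best solution so far
    for k in range(iters):
        r1 = a - k * a / iters        # Eq. (8)
        for i in range(n):
            for d in range(dim):
                r2 = rng.uniform(0.0, 2.0 * math.pi)
                r3 = rng.uniform(0.0, 2.0)
                r4 = rng.random()
                trig = math.sin(r2) if r4 < 0.5 else math.cos(r2)
                step = r1 * trig * abs(r3 * best[d] - X[i][d])  # Eq. (7)
                X[i][d] = min(max(X[i][d] + step, lo), hi)      # keep inside bounds
            fit_i = f(X[i])
            if fit_i < best_fit:
                best, best_fit = X[i][:], fit_i
    return best, best_fit
```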

3. Proposed Method

3.1. Proposed SCA Strategy

SCA inherently has strong exploration ability, which helps it avoid local optima, and the adaptive range of the sine–cosine terms allows a smooth transition from exploration to exploitation as the algorithm explores different regions of the search space. At the same time, each solution always updates its position around the current best solution, so the population moves toward the most promising region of the search space during optimization. In SCA, the current optimal solution is stored as a target point and retained throughout the optimization process, ultimately yielding the global optimal value. In the proposed strategy, the current optimal solution is replaced with the global optimal solution, so that more particles search around the global optimal solution and converge to it faster. Firstly, the particles are guided by collective intelligence: the global optimal solution represents the best position of the whole population, so introducing it into the SCA strategy lets the strategy make better use of global information. Secondly, the global optimal solution corresponds to a better position in the solution space, and using it as a guide helps SCA avoid falling into local optima, improving its global search ability. Thirdly, integrating the global optimal solution into the SCA strategy strengthens information exchange within the population, making the particles more likely to search along the direction of the global optimal solution and helping them find it more rapidly.
Finally, introducing the global optimal solution into SCA accelerates the convergence of the SCA strategy, increasing the efficiency of the search. In summary, replacing the current optimal solution of SCA with the global optimal solution enhances global search capability and information exchange, accelerates convergence, and helps avoid local optima, thereby improving the performance and efficiency of the algorithm. The proposed SCA strategy is defined as

In Equation (9), stands for the learning factor, is a random number in the range of , and are random numbers that control the direction in which the particles move and determine the region where the solution will be located, controls the degree of influence of the global optimal solution , and ensures that the velocity update switches with equal probability between the sine and cosine terms. Parameter is a random number in the range and a is a constant equal to 2. and are composed of SCA influence terms.
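The sketch below illustrates one plausible reading of the SCA term S described above. It assumes that S takes the form of the SCA step in Equation (7), with the global optimal solution $P_g$ as the target and a third learning factor $c_3$ as a scale; this form is a hypothetical reading of the description, not necessarily the exact Equation (9), and the function name `sca_term` is also hypothetical.

```python
import math
import random


def sca_term(x, gbest, c3, r1, rng=random):
    """Hypothetical SCA term S: an Eq. (7)-style step toward gbest, scaled by c3."""
    s = []
    for xd, gd in zip(x, gbest):
        r2 = rng.uniform(0.0, 2.0 * math.pi)  # direction / extent of the move
        r3 = rng.uniform(0.0, 2.0)            # random weight on the global optimum
        r4 = rng.random()                     # equal-probability sine/cosine switch
        trig = math.sin(r2) if r4 < 0.5 else math.cos(r2)
        s.append(c3 * r1 * trig * abs(r3 * gd - xd))
    return s
```

A particle sitting exactly on a global optimum at the origin receives no SCA push, and the term vanishes as $r_1$ decays toward 0, so late iterations revert to plain PSO behavior under this assumed form.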

3.2. Proposed Velocity Four Sine Cosine Particle Swarm Optimization (VFSCPSO)

The velocity formula, Equation (1), is the core formula of PSO and guides each particle toward the global optimal solution. When its velocity is updated, a particle is affected by two parts, the individual cognitive part and the group cognitive part; weighting the individual historical optimal term and the group historical optimal term balances exploration and exploitation during optimization, so that the swarm ultimately converges rapidly to the global optimal solution. PSO has strong global search ability, but its ability to escape local optima in the later stages of the search is poor, because the possible influence of other factors on the particles is not taken into account. To improve diversity and avoid local optima, we integrate the influence factors of SCA into the velocity update. This increases the diversity of PSO throughout the search, makes the particles more flexible in searching for the global optimal solution, increases their search capability, and further guides them toward the global optimal solution. We call the resulting algorithm Velocity Four Sine Cosine Particle Swarm Optimization (VFSCPSO). Its velocity update formula for the (k+1)th iteration is

$$v_{id}^{k+1} = w\,v_{id}^{k} + c_1 r_1 \left(p_{id}^{k} - x_{id}^{k}\right) + c_2 r_2 \left(p_{gd}^{k} - x_{id}^{k}\right) + S \tag{10}$$

Equation (10) is the velocity update formula of the VFSCPSO model: it consists of the PSO velocity formula, Equation (1), plus the SCA strategy S. The other steps and formulas of VFSCPSO are the same as in PSO and are not described further here. By combining the PSO framework with the SCA strategy, VFSCPSO forms a new algorithmic framework that improves the flexibility of PSO. The main steps of VFSCPSO are as follows:

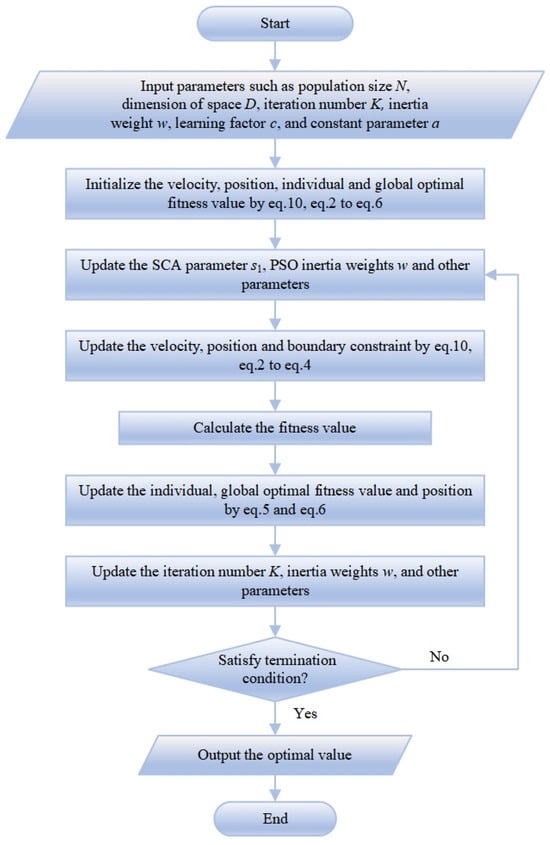

Step 1: Input the required parameters, such as the population size N, search space dimension D, iterations K, inertia weight w, learning factors $c_1$, $c_2$, $c_3$, velocity boundaries $v_{\min}$, $v_{\max}$, position boundaries $x_{\min}$, $x_{\max}$, and SCA constant parameter a;

Step 2: Randomly initialize the velocities and positions of the particles, and use Equation (10) and Equations (2)–(6) to obtain the initial individual and global optimal positions and the individual and global fitness values;

Step 3: After entering the iterative loop, update the SCA adaptive parameter (Equation (8)), the inertia weight w, and other parameters;

Step 4: Update the velocity of each particle using Equation (10) and its position using Equation (2), and check the boundaries with Equations (3) and (4): if the velocity crosses its boundary, set it to the boundary value, and check the position in the same way;

Step 5: Calculate the fitness value;

Step 6: Update the individual and global optimal fitness values and positions using the conditions in Equations (5) and (6);

Step 7: Update parameters such as the iteration counter;

Step 8: Determine whether the termination condition is reached; if not, return to Step 3;

Step 9: Output the result when the termination condition is reached.
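Steps 1–9 can be sketched in Python as follows. This is a hypothetical illustration, not the authors' implementation: the SCA term S is assumed to be an Equation (7)-style step with $P_g$ as the target, scaled by $c_3$, since the exact Equation (9) may differ, and $c_3 = 1$, the inertia weight schedule, and the velocity bound are illustrative assumptions.

```python
import math
import random


def sphere(x):
    """Benchmark objective: sum of squares, minimum 0 at the origin."""
    return sum(xi * xi for xi in x)


def vfscpso(f, dim, n=30, iters=300, c1=2.0, c2=2.0, c3=1.0, a=2.0,
            w_max=0.9, w_min=0.4, x_bounds=(-10.0, 10.0), seed=0):
    """Hypothetical VFSCPSO sketch (minimization) following Steps 1-9."""
    # Step 1: parameters (values above are illustrative assumptions)
    rng = random.Random(seed)
    x_min, x_max = x_bounds
    v_max = 0.2 * (x_max - x_min)  # assumed velocity bound
    v_min = -v_max
    # Step 2: random initialization, initial individual and global optima
    X = [[rng.uniform(x_min, x_max) for _ in range(dim)] for _ in range(n)]
    V = [[rng.uniform(v_min, v_max) for _ in range(dim)] for _ in range(n)]
    P = [x[:] for x in X]
    P_fit = [f(x) for x in X]
    g = min(range(n), key=lambda i: P_fit[i])
    G, G_fit = P[g][:], P_fit[g]
    for k in range(iters):
        # Step 3: SCA adaptive parameter (Eq. (8)) and inertia weight
        r1_sca = a - k * a / iters
        w = w_max - (w_max - w_min) * k / iters
        for i in range(n):
            for d in range(dim):
                # Step 4: Eq. (10) = PSO velocity (Eq. (1)) + assumed SCA term S
                q1, q2 = rng.random(), rng.random()
                v_pso = (w * V[i][d] + c1 * q1 * (P[i][d] - X[i][d])
                         + c2 * q2 * (G[d] - X[i][d]))
                r2 = rng.uniform(0.0, 2.0 * math.pi)
                r3 = rng.uniform(0.0, 2.0)
                trig = math.sin(r2) if rng.random() < 0.5 else math.cos(r2)
                s = c3 * r1_sca * trig * abs(r3 * G[d] - X[i][d])
                V[i][d] = min(max(v_pso + s, v_min), v_max)          # Eq. (3)
                X[i][d] = min(max(X[i][d] + V[i][d], x_min), x_max)  # Eqs. (2), (4)
            # Steps 5-6: evaluate, then update individual and global optima
            fit = f(X[i])
            if fit < P_fit[i]:                                       # Eq. (5)
                P[i], P_fit[i] = X[i][:], fit
                if fit < G_fit:                                      # Eq. (6)
                    G, G_fit = X[i][:], fit
    # Steps 7-9: the for-loop advances k and stops at the iteration limit
    return G, G_fit
```

For example, `best_x, best_f = vfscpso(sphere, dim=5)` runs the sketch on a 5-dimensional sphere. Because $r_1$ decays to 0, the assumed SCA term dominates only early in the search, which matches the intended role of adding diversity before the swarm settles.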

Figure 1 shows the flowchart of VFSCPSO derived from these main steps.

Figure 1.

Flowchart of VFSCPSO.

Finally, the pseudocode of VFSCPSO is given in Algorithm 3.

Algorithm 3 Velocity Four Sine Cosine Particle Swarm Optimization

Input: population size N, dimension D, iterations K, learning factors $c_1$, $c_2$, $c_3$, inertia weight w, velocity boundaries $v_{\min}$, $v_{\max}$, position boundaries $x_{\min}$, $x_{\max}$, constant parameter a
Output: optimal fitness value

3.3. Complexity Discussion

In this subsection, we analyze the computational complexity of VFSCPSO and compare it with those of the standard PSO and SCA. We use O to denote an asymptotic upper bound on the time complexity. The population size of VFSCPSO is N, the dimension of the optimization problem is D, the maximum number of iterations is T, and the time required to compute the fitness function is . The complexity of VFSCPSO is calculated as follows:

Step 1: The initialization phase takes time to set the parameters of the algorithm; the time complexity of this phase is .

Step 2: It takes time to randomly generate the positions and velocities of the particles, time to compute the fitness values, and time to find and record the optimal individuals, so the time complexity of this phase is .

Step 3: It takes time to record the initialization values and initialize the SCA adaptive parameter , inertia weight w, and other parameters; the time complexity of this phase is .

Step 4: The optimization phase takes the same total time as PSO, and its time complexity is .

Steps 5–9: The computation, update, judgment, and output take constant time, so their time complexity is .

To sum up, the computational complexity of VFSCPSO can be expressed as

The random number ensures that the sine and cosine branches are selected with approximately equal probability, and the two branches of Equation (11) have the same complexity, so the time complexity of VFSCPSO is . For an objective comparison, the time complexity of PSO and SCA is ; the complexity of VFSCPSO is only increased by an order of magnitude compared with that of PSO and SCA. In this paper, we verify the feasibility of VFSCPSO through experiments; VFSCPSO converges to higher-quality values faster, which gives it greater practical value.

4. Experiment and Analysis

4.1. Experimental Settings

The experiments were run on an Intel(R) Core(TM) i7-10700 CPU @ 2.90 GHz using MATLAB 2021b. To ensure effective and fair comparisons, we selected the test suite of the IEEE Congress on Evolutionary Computation 2005 (CEC2005) [46] for comprehensive validation. The CEC2005 test suite was designed for an optimization algorithm competition and provides 23 benchmark functions for comparing and evaluating the performance and efficiency of different algorithms, revealing their strengths and weaknesses on different optimization problems. Testing and comparing algorithms on these functions gives researchers a more comprehensive understanding of the strengths and limitations of each algorithm. Details of the CEC2005 test suite are presented in Table 1 and Appendix A.

Table 1.

CEC2005 test suite information.

To make the evaluation of VFSCPSO more convincing, the comparison experiment covers a total of 12 intelligent algorithms: the classical algorithms GA [1] and DE [2]; the component algorithms PSO [5] and SCA [11], which verify the validity of the hybrid VFSCPSO; and the recent, well-performing PSO variants AWPSO [21] and SCDLPSO [23], which verify its superiority. AWPSO was published in IEEE Transactions on Cybernetics (TCYB), and AWPSO and SCDLPSO are representative PSO variants. These algorithms have high influence, convergence accuracy, overall performance, and scope of application, as well as low reproduction error. In addition, the newer, better-performing algorithms SFLA [6] and BBO [4] and the advanced algorithms MA [8], POA [9], DO [3], and OMA [13], proposed between 2020 and 2023, are included to verify the advancement of VFSCPSO. The parameter settings of the comparison algorithms are taken from their original studies, and those of VFSCPSO follow the settings in Section 3. The evaluation indexes are the best, mean, worst, and standard deviation (std), with the best results shown in bold. Finally, the performance of VFSCPSO is further assessed with the Friedman test.
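A minimal sketch of how the four evaluation indexes and the bold marking could be computed for one benchmark function follows. The helper names are hypothetical, minimization is assumed (so the best value is the smallest), and the std uses the sample (N - 1) normalization, which is MATLAB's default for `std` but is an assumption about this paper.

```python
import statistics


def summarize(final_values):
    """Best, mean, worst, and std of one algorithm's final values on one function
    (minimization: best = smallest, worst = largest)."""
    return {
        "best": min(final_values),
        "mean": statistics.mean(final_values),
        "worst": max(final_values),
        "std": statistics.stdev(final_values),  # sample std, N - 1 normalization
    }


def bold_winners(summaries, metric):
    """Algorithms reaching the smallest value of a metric; ties are all marked."""
    best = min(s[metric] for s in summaries.values())
    return sorted(name for name, s in summaries.items() if s[metric] == best)


# Usage with two hypothetical algorithms and three runs each.
summaries = {"A": summarize([1.0, 2.0, 3.0]), "B": summarize([0.0, 2.0, 4.0])}
print(bold_winners(summaries, "mean"))  # ['A', 'B']
print(bold_winners(summaries, "best"))  # ['B']
```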

The Friedman test is a simple, flexible, and widely applicable nonparametric statistical method, especially suited to comparing three or more related samples. It remains effective for data that are not normally distributed or are skewed. Compared with parametric tests, it requires relatively small samples and still produces reliable results with them, and it is one of the most commonly used methods in experimental design and data analysis. A detailed tutorial on using this nonparametric test to compare the strengths and weaknesses of intelligent algorithms can be found in [47]. The Friedman test proceeds as follows:

Step 1: Gather observed results for each algorithm/problem pair.

Step 2: For each problem $i$, rank the values from 1 (best result) to $k$ (worst result). Denote these ranks as $r_i^j$ ($1 \le j \le k$).

Step 3: For each algorithm $j$, average the ranks obtained over all $n$ problems to obtain the final rank $R_j = \frac{1}{n}\sum_{i=1}^{n} r_i^j$.
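The three steps can be sketched in Python as follows. Ties receive the average of the ranks they span, and `friedman_statistic` adds the usual Friedman chi-square statistic computed from the average ranks, a step the list above does not spell out. The function names are hypothetical, minimization is assumed, and no tie correction is applied to the statistic.

```python
def rank_row(values):
    """Step 2: rank one problem's results, 1 = best (smallest); ties share the mean rank."""
    order = sorted(range(len(values)), key=lambda j: values[j])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # extend the group while the next sorted value ties with the current one
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # sorted positions start..end hold ranks start+1..end+1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def friedman_average_ranks(results):
    """Steps 1-3: results[i][j] is the result of algorithm j on problem i."""
    n = len(results)
    k = len(results[0])
    rows = [rank_row(row) for row in results]
    return [sum(row[j] for row in rows) / n for j in range(k)]


def friedman_statistic(avg_ranks, n):
    """Friedman chi-square statistic (k - 1 degrees of freedom), without tie correction."""
    k = len(avg_ranks)
    return 12 * n / (k * (k + 1)) * (sum(r * r for r in avg_ranks) - k * (k + 1) ** 2 / 4)


# Usage: 3 problems (rows) x 3 algorithms (columns), minimization.
results = [[1.0, 2.0, 3.0],
           [0.5, 0.5, 2.0],
           [3.0, 1.0, 2.0]]
avg = friedman_average_ranks(results)
print([round(r, 4) for r in avg])                       # [1.8333, 1.5, 2.6667]
print(round(friedman_statistic(avg, len(results)), 4))  # 2.1667
```

In this example the algorithm in the second column obtains the lowest average rank, 1.5, and would therefore be ranked first overall.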

Reasonable experimental design, cross-validation, and evaluation methods are essential for verifying the overall performance of the hybrid algorithm, so a series of numerical experiments is carried out and the results are analyzed in detail. The maximum number of function evaluations (MNFEs) is used as the termination condition for a more accurate comparison of algorithmic performance. Because VFSCPSO requires no additional function evaluations per iteration compared with the other 12 algorithms, the same number of iterations corresponds to the same MNFEs; the maximum number of iterations is therefore set to 1000 as the termination condition. To avoid the influence of chance on the results, each algorithm is run independently 30 times on each benchmark function.
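The run protocol can be sketched as follows, assuming that every algorithm evaluates each individual once per iteration, so that the MNFEs equal the population size times the maximum number of iterations. The random-search optimizer, the sphere objective, and the population size are hypothetical stand-ins, since the population size is not stated in this section; only the 1000-iteration budget and the 30 independent runs come from the text.

```python
import random


def sphere(x):
    """Toy objective used only for this illustration."""
    return sum(v * v for v in x)


def random_search(f, dim, lower, upper, pop_size, max_iter, rng):
    """Hypothetical stand-in optimizer: evaluates pop_size random points per
    iteration, so it uses exactly pop_size * max_iter function evaluations."""
    best, evals = float("inf"), 0
    for _ in range(max_iter):
        for _ in range(pop_size):
            x = [rng.uniform(lower, upper) for _ in range(dim)]
            best = min(best, f(x))
            evals += 1
    return best, evals


def run_protocol(f, dim, lower, upper, pop_size, max_iter=1000, runs=30):
    """Independent, seeded runs under a fixed iteration budget; returns the
    final best value of each run and the set of evaluation counts observed."""
    finals, evals_per_run = [], set()
    for seed in range(runs):
        rng = random.Random(seed)  # a separate generator for each independent run
        best, evals = random_search(f, dim, lower, upper, pop_size, max_iter, rng)
        finals.append(best)
        evals_per_run.add(evals)
    return finals, evals_per_run


# Usage: a small budget so the example runs quickly.
finals, evals = run_protocol(sphere, 5, -100.0, 100.0, pop_size=10, max_iter=20)
print(len(finals), evals)  # 30 {200}
```

Every run uses the same number of evaluations, which is the property that makes an iteration budget equivalent to an MNFE budget under the stated assumption.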

4.2. Experimental Results and Analysis

4.2.1. Performance of Algorithms in Standard Environment

This series of experiments assesses the efficacy of VFSCPSO through a comparative analysis with the 12 other algorithms, using the best, mean, worst, and std values. The corresponding experimental and test results are presented in Table 2, Table 3, Table 4 and Table 5.

Table 2.

Experimental results and Friedman test results of VFSCPSO on CEC2005 test suite –.

Table 3.

Experimental results and Friedman test results of VFSCPSO on CEC2005 test suite –.

Table 4.

Experimental results and Friedman test results of VFSCPSO on CEC2005 test suite –.

Table 5.

Experimental results and Friedman test results of VFSCPSO on CEC2005 test suite –.

As Table 2 and Table 3 show, GA performs worse than the other algorithms on most benchmark functions. GA exhibits a notably high best value and a relatively low worst value, especially on functions such as and , showing a significant performance gap relative to the other algorithms. GA also has a relatively large std, reflecting substantial fluctuations in performance. DE performs well on some functions, with low fitness values on functions such as , , and –. However, its performance on the other functions is average, suggesting that DE is better suited to optimization problems with fewer dimensions. PSO performs moderately well on most functions: its best, mean, and worst values fall in the middle of the range, and its small std indicates consistent behavior. SCA also performs moderately well on low-dimensional problems, excelling in particular on the single-peak function and the multi-peak function ; however, its larger std indicates lower stability. Given the convergence accuracy and stability of PSO and SCA, combining them effectively could yield a hybrid algorithm with better performance. AWPSO performs well on most functions, significantly improving on PSO while inheriting its higher stability (smaller std). SCDLPSO achieves relatively good results, with low fitness values, on functions such as and . However, its std is larger than that of AWPSO on functions such as and , indicating greater performance fluctuation: SCDLPSO improves the accuracy of PSO at the cost of stability. VFSCPSO achieves superior best, mean, and worst values on functions such as , , , and .
It outperforms the other algorithms while keeping a relatively small std, indicating high stability. In summary, each algorithm performs differently across functions, and no single algorithm excels in all scenarios. Across this series of experiments, VFSCPSO performs better than the other algorithms: it excels on both single-peak and multi-peak functions and shows superior global search ability and stability. Although it achieves favorable results on functions –, its performance on the other fixed-dimensional functions is relatively poorer, possibly because of their low dimensionality; VFSCPSO may therefore be better suited to high-dimensional optimization problems. The Friedman test results in Table 3 show that VFSCPSO has an average rank of 2.60 on the 23 test functions and ranks first overall, indicating the best overall performance. GA has an average rank of 5.69 and ranks seventh, performing worst in this group. DE has an average rank of 2.65, indicating that it also performs well, though not best. PSO ranks sixth, with an average rank near the bottom of the group, which shows that VFSCPSO substantially improves on PSO, although PSO itself is average on the other metrics. SCA and SCDLPSO tie for fourth with mid-range average ranks, placing their performance between that of VFSCPSO and GA. AWPSO ranks third with a mid-range average rank, suggesting good overall performance.

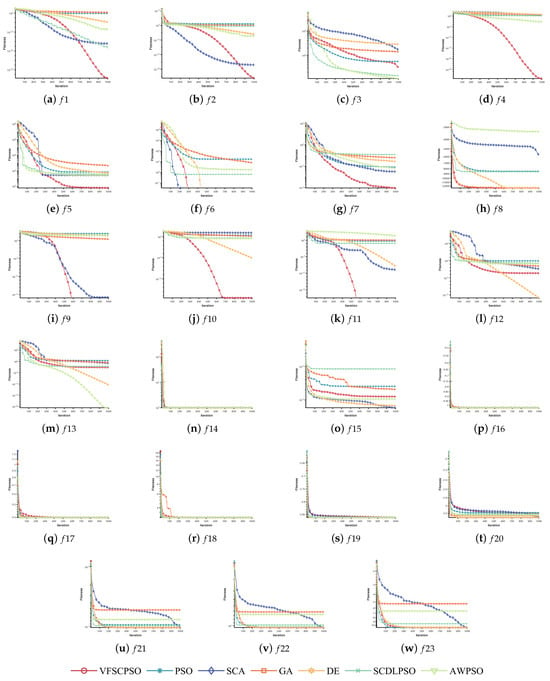

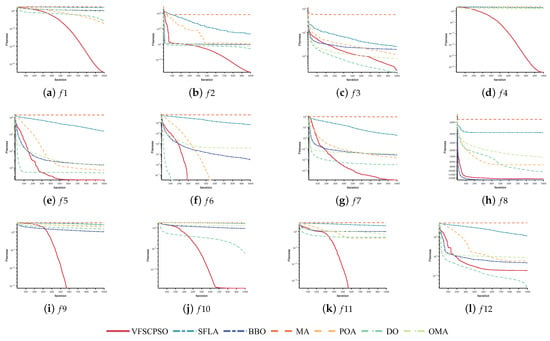

Mean convergence curves, computed from 30 independent runs of each of the seven algorithms on the 23 benchmark functions of the CEC2005 test suite, are used to compare the convergence performance of the algorithms, as shown in Figure 2.
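A minimal sketch of how such a mean curve could be computed: each run's per-iteration best fitness is first turned into a best-so-far curve, and the curves are then averaged element-wise across the runs. The helper names are hypothetical, and equal-length runs (the same iteration budget for every run) are assumed.

```python
def best_so_far(history):
    """Turn per-iteration best fitness values into a non-increasing curve."""
    curve, current = [], float("inf")
    for value in history:
        current = min(current, value)
        curve.append(current)
    return curve


def mean_convergence_curve(histories):
    """Element-wise mean of the best-so-far curves of independent runs
    (all runs are assumed to have the same number of iterations)."""
    curves = [best_so_far(h) for h in histories]
    n_runs = len(curves)
    return [sum(c[t] for c in curves) / n_runs for t in range(len(curves[0]))]


# Usage: two hypothetical runs of four iterations each.
print(mean_convergence_curve([[5, 3, 4, 1], [6, 6, 2, 2]]))  # [5.5, 4.5, 2.5, 1.5]
```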

Figure 2.

Convergence curves of VFSCPSO, PSO, SCA, GA, DE, SCDLPSO, and AWPSO on the CEC2005 test suite.

Figure 2 shows substantial differences among the algorithms on –, reflecting their distinct strengths. In contrast, on –, every algorithm declines rapidly in the initial phase and converges to similar values by the termination condition; notable exceptions are , , and , where the algorithms exhibit minimal differences. This can be attributed to – being fixed-dimensional functions of relatively low difficulty, which most of the compared algorithms solve effectively. GA and PSO decrease more slowly and are not competitive. DE performs relatively well on , , and –, which agrees with Table 2 and Table 3, where it excels on fixed-dimensional functions. However, Figure 2 shows that DE does not clearly distinguish itself from the other algorithms, and its advantages are less pronounced than those of VFSCPSO. SCA performs inconsistently across functions: for instance, it performs well on but comparatively worse on . Its descent is also relatively slow on the fixed-dimensional functions –, resulting in poorer performance there. The PSO variants AWPSO and SCDLPSO outperform the classical algorithms GA and DE, as well as PSO and SCA, in most of the search processes. Both variants descend faster on and , with relatively small performance differences on and , indicating that both improved algorithms are more competitive.

The results in Table 4 and Table 5 reveal diverse algorithmic performance. SFLA performs moderately on all metrics, with average performance on functions – and no discernible advantage. BBO performs poorly on most functions except , where it outperforms the other algorithms with notably low best, mean, and worst values and a relatively low std, indicating good stability; BBO thus appears better suited to complex optimization problems. MA performs poorly on all metrics, particularly on and , with a relatively large std and poor stability, suggesting that MA is less versatile and better suited to a specific class of optimization problems. POA and DO perform comparably on , with relatively low best, mean, and worst values. Both also perform well on and , with low mean values, but only moderately on , , and , with higher mean values. POA is relatively more stable and applicable to various optimization problems, while DO is slightly less stable. OMA performs moderately well on most functions, with relatively high best and mean values and low worst values. Although its overall performance on and is suboptimal, it excels on –, indicating suitability for lower-dimensional optimization problems. VFSCPSO stands out with excellent performance on most functions, achieving low best, mean, worst, and std values. It excels in particular on multi-peak functions such as –, with superior convergence accuracy and stability compared with the other algorithms. The Friedman test results in Table 5 show that VFSCPSO has an average rank of 2.47 and ranks first overall, so its overall performance remains the best.
BBO has an average rank of 4.65 and ranks sixth overall, performing poorly in this group. MA has an average rank of 7, the worst possible among seven algorithms, indicating poor overall performance and probably low efficiency in practical applications. POA, DO, and OMA rank in the middle both overall and by average rank, indicating that these recent algorithms perform competitively. In summary, VFSCPSO excels, especially on multi-peak functions, while the performance of the other algorithms varies significantly across objective functions, underscoring that optimization algorithms are problem-specific. Algorithm selection should therefore consider the characteristics of each algorithm and its applicability to the problem type at hand.

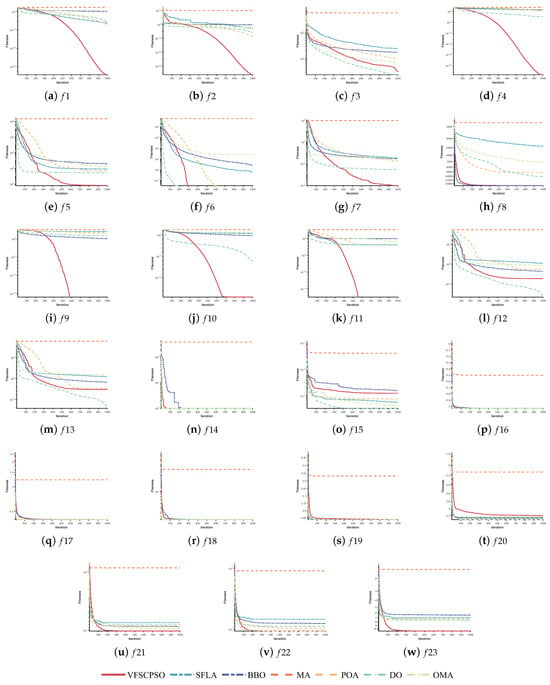

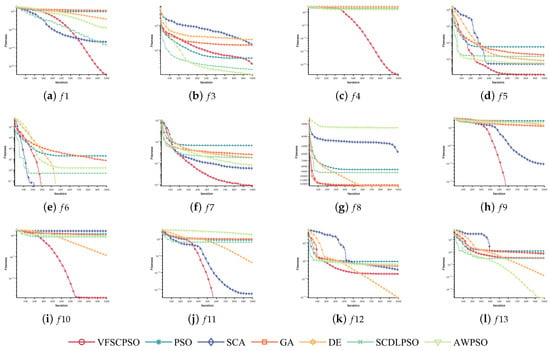

Mean convergence curves, computed from 30 independent runs of each of the seven algorithms on the 23 benchmark functions of the CEC2005 test suite, are used to compare the convergence performance of the algorithms, as shown in Figure 3.

Figure 3.

Convergence curves of VFSCPSO, SFLA, BBO, MA, POA, DO, and OMA on the CEC2005 test suite.

In Figure 3, the convergence curves of VFSCPSO on the single-peak functions , , , , and and the multi-peak functions – descend notably rapidly, giving it a distinct advantage over the other algorithms. However, DO converges faster than VFSCPSO on , , , and , showing that it is competitive on these functions. POA converges remarkably quickly on . On the difficult function , both VFSCPSO and BBO are highly competitive, indicating their suitability for complex optimization problems. Nevertheless, no algorithm dominates all problem instances: VFSCPSO is relatively weak on and . Conversely, it shows clear advantages on and , suggesting that it applies not only to demanding problems like but also to simpler ones. Overall, VFSCPSO performs best across the range of problem complexities. In contrast, MA falls into local optima on every convergence curve, making it less competitive than the other algorithms.

4.2.2. Performance of Algorithms in High-Dimensional Environment

This series of experiments verifies the performance of VFSCPSO in a high-dimensional environment. Real-world optimization problems place heightened demands on algorithm performance, requiring fast convergence to solve high-dimensional problems within predefined time constraints. The mean and std of each algorithm are computed and recorded. The experimental results are given in Table 6, Table 7, Table 9 and Table 10, and the corresponding Friedman test results in Table 8 and Table 11.

Table 6.

Experimental results of VFSCPSO on CEC2005 test suite – (D = 50, 100, and 500).

Table 7.

Experimental results of VFSCPSO on CEC2005 test suite – (D = 50, 100, and 500).

Table 9.

Experimental results and Friedman test results of VFSCPSO on CEC2005 test suite – (D = 50, 100, and 500).

Table 10.

Experimental results and Friedman test results of VFSCPSO on CEC2005 test suite – (D = 50, 100, and 500).

The results in Table 6 and Table 7 reveal distinctive performance across 50, 100, and 500 dimensions. GA performs relatively well on but poorly on the other functions. SCA performs well on , , , , and , and its excellence on in particular is inherited by VFSCPSO. DE holds a clear advantage on , , and , but its overall performance is slightly lower than that of SCA, an advantage to which the sine–cosine strategy of SCA contributes significantly. AWPSO performs well on , , , and , with good overall performance and remarkable stability. SCDLPSO excels on and performs relatively well on , indicating suitability for single-peak problems; in terms of generality, AWPSO outperforms SCDLPSO. VFSCPSO achieves lower means across dimensions, although it performs relatively poorly on . It outperforms the other algorithms especially on , , , and , with significantly better results and lower std, indicating superior global search ability and stability. Despite its higher average rank in higher dimensions, the results obtained with the fixed-dimensional functions removed confirm that VFSCPSO suits complex high-dimensional optimization problems. AWPSO and SCDLPSO outperform GA and DE on functions such as and , demonstrating the specific advantages of the algorithm variants. In the Friedman test results in Table 8, GA and PSO still rank low. After the dimensionality increase, DE becomes less competitive than SCA, suggesting that SCA is better suited to high-dimensional optimization problems. The two variants, AWPSO and SCDLPSO, perform comparably and competitively.
In summary, VFSCPSO proves effective, particularly in complex optimization problems of higher dimensions, demonstrating improved global search ability and stability.

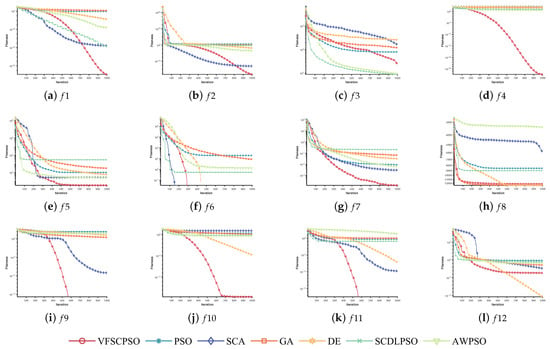

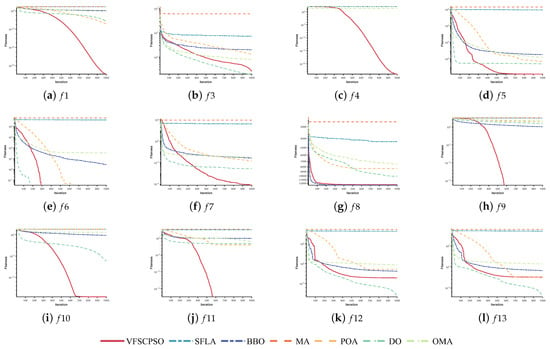

Mean convergence curves, computed from 30 independent runs of each of the seven algorithms on the 12 benchmark functions of the CEC2005 test suite in a high-dimensional environment, are used to compare the convergence performance of the algorithms, as shown in Figure 4.

Figure 4.

Convergence curves of VFSCPSO, PSO, SCA, GA, DE, SCDLPSO, and AWPSO on the CEC2005 test suite (D = 100).

Figure 4 shows that, compared with the standard CEC2005 test environment, the convergence rates of the algorithms remain relatively stable at 100 dimensions, with some function-specific changes. On , the search accuracy of SCA decreases and falls below that of SCDLPSO after the dimensionality increase. On and , VFSCPSO achieves better search accuracy despite the higher dimensionality. On , AWPSO is more accurate than SCDLPSO, but the overall convergence accuracy is lower than in the 30-dimensional experiment. On , every algorithm except VFSCPSO loses search accuracy. On , SCDLPSO is less able to escape local optima, indicating that it is unsuitable for high-dimensional optimization problems. On , DE is somewhat competitive, but its search performance on decreases notably. SCA achieves slightly higher search accuracy on , indicating that search performance is affected to varying degrees by problem characteristics and dimensionality. The convergence velocity of the algorithms does not change significantly on and . Notably, VFSCPSO shows no significant loss of accuracy and even improves on individual functions, suggesting that it is well suited to higher-dimensional optimization problems.

According to Table 9 and Table 10, SFLA performs satisfactorily overall and is relatively stable, particularly on . MA performs slightly worse than SFLA on various functions. BBO performs poorly on single-peak functions such as , , and , but excels on multi-peak functions, achieving the best performance on . DO outperforms the others on , , , and and is competitive, but still falls short of VFSCPSO. OMA is highly competitive on but performs poorly on the other tests. POA stands out, with strong competitiveness on and commendable performance on . In contrast, VFSCPSO attains the best overall performance across the test functions and dimensions, with a small std indicating remarkable stability. In summary, algorithmic performance varies significantly across test functions and dimensions, and some functions call for problem-specific considerations. The Friedman test results in Table 11 show that MA still lacks an overall advantage, suggesting that it suits specific problem types rather than high-dimensional optimization. The advantage of POA becomes more pronounced, raising its rank from third to second. DO and BBO struggle to adapt to the high-dimensional environment, dropping from second to fifth and from third to sixth, respectively. The rank of OMA changes little. VFSCPSO ranks first throughout this series of experiments, clearly distinguishing itself from the other algorithms and proving highly suitable for high-dimensional optimization problems.

Mean convergence curves, computed from 30 independent runs of each of the seven algorithms on the 12 benchmark functions of the CEC2005 test suite in a high-dimensional environment, are used to compare the convergence performance of the algorithms, as shown in Figure 5.

Figure 5.

Convergence curves of VFSCPSO, SFLA, BBO, MA, POA, DO, and OMA on the CEC2005 test suite (D = 100).

As Figure 5 shows, compared with the 30-dimensional experiments, the search velocity and accuracy of SFLA change notably on several functions, such as , , , , , and , and the decrease is conspicuous on , , and . In contrast, VFSCPSO improves its convergence accuracy substantially on , whereas the other algorithms change little. Although performance declines slightly on , the search velocity of each algorithm remains relatively stable, and no significant changes occur on individual functions such as . DO, however, changes significantly on . In summary, the convergence velocity and accuracy of SFLA change most as the dimensionality rises, whereas the convergence rates of the other algorithms decrease only marginally at 100 dimensions. VFSCPSO outperforms the others in every aspect of the search, highlighting its superior convergence performance on more complex optimization problems.

4.2.3. Performance of Algorithms in Large-Scale Environment

This series of experiments verifies the performance of VFSCPSO in a large-scale environment. The mean and std of each algorithm are calculated and recorded. The experimental results are given in Table 12, Table 13, Table 15 and Table 16, and the Friedman test results in Table 14 and Table 17.

Table 12.

Experimental results and Friedman test results of VFSCPSO on CEC2005 test suite – (D = 1000, 2000, and 5000).

Table 13.

Experimental results and Friedman test results of VFSCPSO on CEC2005 test suite – (D = 1000, 2000, and 5000).

Table 15.

Experimental results and Friedman test results of VFSCPSO on CEC2005 test suite – (D = 1000, 2000, and 5000).

Table 16.

Experimental results and Friedman test results of VFSCPSO on CEC2005 test suite – (D = 1000, 2000, and 5000).

The results in Table 12 and Table 13 show substantial variation in algorithmic performance across functions and dimensions, from to . On , the ranking of the algorithms does not change significantly with the number of dimensions. At 1000 dimensions, VFSCPSO performs best, with the smallest mean and std, indicating good stability. GA excels in mean performance, while both GA and PSO exhibit relatively poorer results. The results at 2000 dimensions are similar to those at 1000 dimensions, but the differences become more pronounced as the dimensionality increases. At 5000 dimensions, VFSCPSO achieves a good mean, with SCDLPSO and SCA also competitive. All algorithms have a relatively small std on at this dimensionality. While the other algorithms struggle with the dimensionality, VFSCPSO maintains robust performance, as is particularly evident on at 5000 dimensions. On both and , SCA and GA perform relatively poorly and unstably. On , VFSCPSO has no competitive advantage, and AWPSO performs best. On , VFSCPSO pulls away from the other algorithms, performing best at 1000 and 2000 dimensions, although AWPSO achieves a better mean. On , DE and AWPSO consistently achieve good means with relatively small stds across all dimensions. Three algorithms, SCA, DE, and VFSCPSO, still converge to the global optimum on . DE and AWPSO also lead in mean performance on , while PSO and SCA consistently achieve good mean values on . On functions –, –, and –, VFSCPSO consistently attains the best results and is stable across all dimensions. DE also achieves good means with relatively small stds on some functions. On and , DE has good means at 1000 and 2000 dimensions, while AWPSO has a relatively small mean at 5000 dimensions.
SCA and AWPSO are more competitive on and . AWPSO, SCDLPSO, and VFSCPSO perform well and remain relatively stable across several functions and dimensions, making them candidate algorithms for large-scale optimization problems, whereas PSO is affected by dimensionality in some cases. The Friedman test results in Table 14 show that VFSCPSO consistently achieves the best overall rank, as in the 50-, 100-, and 500-dimensional settings.

Mean convergence curves, computed from 30 independent runs of each of the seven algorithms on the 12 benchmark functions of the CEC2005 test suite in a large-scale environment, are used to compare the convergence performance of the algorithms, as shown in Figure 6.

Figure 6.

Convergence curves of VFSCPSO, PSO, SCA, GA, DE, SCDLPSO, and AWPSO on the CEC2005 test suite (D = 2000).

Figure 6 shows the evident impact of increasing dimensionality on convergence accuracy. As the dimensionality rises, the convergence accuracy of SCA on falls notably behind that of AWPSO, suggesting that AWPSO may be better suited to large-scale optimization problems. On , the convergence accuracy of all algorithms decreases, with SCA and PSO declining most. Some differences are observable among the algorithm variants, with VFSCPSO relatively better, though the differences are small. On , the convergence accuracy of all algorithms decreases while the convergence velocity remains relatively stable. The search rate of PSO drops markedly on , while and – change little. On , PSO falls directly to the lowest rank, converging more slowly and less accurately than in low-dimensional searches, and SCDLPSO declines similarly. On , VFSCPSO becomes more competitive as the dimensionality increases. In summary, VFSCPSO excels in overall performance and remains highly competitive on large-scale problems.

The results in Table 15 and Table 16 show that VFSCPSO performs better across all dimensions on , with advantages in mean and std and good stability. POA also handles well, maintaining consistent performance as the dimensionality rises. The other algorithms decline relatively as the number of dimensions increases, reaffirming the sustained lead of VFSCPSO. On , none of the algorithms except VFSCPSO achieves any decrease in the objective value, highlighting the unique effectiveness of VFSCPSO on this function. On , DO has the best mean, indicating that it handles this function well across dimensions, while VFSCPSO is competitive and more stable. MA, conversely, lags in mean performance. On , , , , , and , VFSCPSO is consistently superior in mean and std across dimensions, and it is particularly well suited to . On and , DO excels in both metrics, showing its adaptability to these functions, while VFSCPSO remains competitive and more stable. The algorithms thus perform differently in different environments: SFLA, BBO, MA, DO, OMA, and POA perform better in some specific contexts, but VFSCPSO is relatively more competitive, especially in mean and std. Selecting the most suitable algorithm still requires considering the characteristics of the specific problem and the practical application requirements. The Friedman test results in Table 17 show that VFSCPSO ranks first overall at every dimension. DO has the next best overall rank at 2000 and 5000 dimensions, placing second, and BBO and POA also rank well, placing third and fourth.
VFSCPSO has the highest count at every dimension, indicating that it achieves the best performance at each dimension. DO does not achieve the best performance at 1000 dimensions but performs well at all other dimensions. The other algorithms fail to achieve the best performance at some dimensions; overall, VFSCPSO and DO perform relatively well at all dimensions.

Mean convergence curves, computed from 30 independent runs of each of the seven algorithms on the 12 benchmark functions of the CEC2005 test suite in a large-scale environment, are used to compare the convergence performance of the algorithms, as shown in Figure 7. Because no algorithm other than VFSCPSO decreases the objective value on , the convergence curve of is not shown.

Figure 7.

Convergence curves of VFSCPSO, SFLA, BBO, MA, POA, DO, and OMA on the CEC2005 test suite (D = 2000).

Figure 7 shows that, as the dimensionality increases, the convergence trend of each algorithm on – is almost the same as at 100 dimensions. On , although some algorithms still reach the global optimum, DO and POA need more iterations to do so, whereas the number of iterations needed by VFSCPSO is almost unchanged. SFLA changes its convergence trend on some functions: its accuracy decreases on , –, and –, and its convergence velocity and accuracy on decrease more noticeably, whereas the other algorithms change little. DO is also competitive: it converges fast on and needs the fewest iterations to reach the global optimum, and although it does not reach the global optimum on , it achieves the best result among the comparison algorithms there. The convergence curves of VFSCPSO follow almost the same trend as at 100 dimensions, and it ranks first overall.
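The iteration counts discussed above can be read off a best-so-far curve as the first iteration whose value lies within a tolerance of the known optimum. The helper name and the default tolerance of 1e-8 are hypothetical choices for this sketch; the threshold used in this paper is not stated in this section.

```python
def iterations_to_optimum(curve, f_opt, tol=1e-8):
    """First iteration (1-based) at which the best-so-far value is within tol
    of the known optimum f_opt; None if the run never gets that close."""
    for t, value in enumerate(curve, start=1):
        if abs(value - f_opt) <= tol:  # tolerance is an assumed threshold
            return t
    return None


# Usage: a hypothetical best-so-far curve that reaches the optimum 0 at iteration 4.
print(iterations_to_optimum([10.0, 1.0, 1e-3, 1e-9, 0.0], 0.0))  # 4
```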

4.2.4. Performance of Algorithms in Ultra-Large-Scale Environment

To analyze more deeply the performance of the hybrid algorithm on large-scale optimization problems and to demonstrate the superiority of the proposed VFSCPSO, this series of experiments verifies its performance in an ultra-large-scale environment with D = 10,000. The best, mean, worst, and std of each algorithm are calculated and recorded; Table 18 and Table 19 show the experimental results and Friedman test results.

Table 18.

Experimental results and Friedman test results of VFSCPSO on CEC2005 test suite – (D = 10,000).

Table 19.

Experimental results and Friedman test results of VFSCPSO on CEC2005 test suite – (D = 10,000).

According to the experimental results in Table 18 and Table 19, the twelve comparison algorithms differ significantly in the 10,000-dimensional environment. VFSCPSO achieves the best value of every metric on , , , , , , , , and , so it performs well on most of the test functions. It is highly competitive in most cases, adapts to different environments, and shows high convergence accuracy and stability. On , only VFSCPSO is able to descend, while all the comparison algorithms stagnate completely. Even on this hard-to-optimize function, the hybrid model retains good global search ability and finds a relatively good solution. On , DE, SCA, DO, POA, and VFSCPSO still converge to the global optimal solution. Different algorithms show their strengths on different benchmark functions and metrics. PSO and SCA perform well on some functions, but as constituent algorithms they fall short of VFSCPSO in most cases. At 10,000 dimensions, the results of VFSCPSO on the 12 benchmark functions still change little. Its solution accuracy fluctuates only slightly, its stability is high, and its performance is consistent across runs. This further shows that VFSCPSO has excellent overall performance on ultra-large-scale optimization problems and copes effectively with the challenges of large-scale environments. The Friedman test results likewise show that VFSCPSO performs best and is suitable for solving large-scale optimization problems. In the Friedman test results in Table 18, GA and PSO rank close to each other and near the bottom, suggesting that they may not be suitable for ultra-large-scale problems. DE ranks second overall, consistent with the previous experiments, which indicates that DE is relatively stable.
SCA and SCDLPSO rank in the middle, indicating reasonably good overall performance, but they remain behind VFSCPSO. AWPSO ranks fifth in both overall and average rank, and its overall performance drops off more quickly, indicating that it is less stable. VFSCPSO ranks first in both the overall and the average ranking, so its performance remains excellent. In the Friedman test results in Table 19, SFLA, MA, and OMA have high (poor) average ranks and correspondingly poor overall ranks. These algorithms may not be suitable for ultra-large-scale problems. BBO, POA, and OMA show moderate overall performance with no particularly outstanding results, and they are relatively close to one another. DO has an average rank of 2.15 and an overall rank of 2, a strong overall result. VFSCPSO has an average rank of 1.38 and an overall rank of 1, the best on both measures. This shows that it performs excellently across all indicators and is the most suitable of the compared algorithms for ultra-large-scale optimization problems. This is attributed to the SCA strategy, which increases the flexibility of the algorithm and helps it avoid local optima. The 10,000-dimensional runs show this advantage of VFSCPSO even more clearly.
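The average and overall ranks discussed above follow the usual Friedman procedure. Algorithms are ranked on each function, with rank 1 as the best, and the ranks are then averaged across functions. A lower average rank is therefore better. The minimal sketch below assumes that ties share the mean of their positions and that the ranked metric is a "smaller is better" value such as the mean error. Which metric the tables rank on is not restated here. The toy table is hypothetical. For a p-value, `scipy.stats.friedmanchisquare` can be applied to the raw per-function results.

```python
def rank_row(scores):
    """Rank one row of scores (lower is better -> rank 1); tied scores share the mean of their positions."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of entries tied with order[i].
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def friedman_average_ranks(table):
    """table[f][a] is the metric of algorithm a on function f; returns the mean rank of each algorithm."""
    ranked = [rank_row(row) for row in table]
    n_alg = len(table[0])
    return [sum(r[a] for r in ranked) / len(ranked) for a in range(n_alg)]


def friedman_statistic(avg_ranks, n_functions):
    """Friedman chi-square statistic for k algorithms ranked over n_functions problems."""
    k = len(avg_ranks)
    return 12.0 * n_functions / (k * (k + 1)) * (
        sum(r * r for r in avg_ranks) - k * (k + 1) ** 2 / 4.0
    )


# Toy example: 3 functions x 3 algorithms (smaller is better).
table = [[1.0, 2.0, 3.0], [2.0, 1.0, 3.0], [1.0, 1.0, 2.0]]
avg = friedman_average_ranks(table)  # mean rank per algorithm
overall = rank_row(avg)              # overall rank derived from the mean ranks
stat = friedman_statistic(avg, len(table))
print(avg, overall, stat)
```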

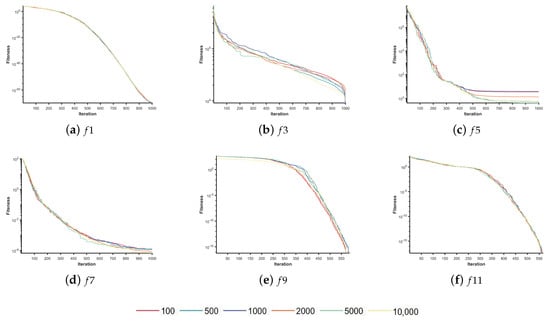

To show how the performance of VFSCPSO scales with dimension, its convergence curves on the benchmark functions in 100, 500, 1000, 2000, 5000, and 10,000 dimensions are plotted in Figure 8.

Figure 8.

Convergence curves of VFSCPSO in different dimensions of the CEC2005 test suite.

As Figure 8 shows, the convergence curves of VFSCPSO remain essentially the same as the number of dimensions increases. The curves do not separate noticeably even at the highest dimensions. VFSCPSO therefore has strong search capability and scalability. Its performance is essentially unaffected by the dimension, so it can effectively solve ultra-large-scale problems.

4.2.5. Application in PID Parameter Tuning

An optimization problem consists of four elements, described below using PID parameter tuning as an example.

- Variables: In an optimization problem, variables are the quantities that can be adjusted. They are the decision variables, or parameters, whose optimal values the optimization method seeks. In engineering, science, or economics, variables can be adjustable parameters such as size, velocity, or temperature. In the PID parameter-tuning problem, the variables are usually the parameters of the PID controller, i.e., the proportional gain, the integral time, and the derivative time. Tuning these parameters shapes the response characteristics of the PID controller.

- Objective function: The objective function is the quantity to be maximized or minimized in an optimization problem, and so it expresses the goal of the optimization. Its form depends on the specific problem. It can represent cost, benefit, efficiency, distance, or any other metric to be optimized, with the aim of driving these metrics to their optimal values or satisfying certain requirements. In PID parameter-tuning problems, the objective function is usually a performance metric of the control system.

- Constraints: Constraints are conditions or restrictions that a solution must satisfy to be considered feasible or acceptable. They define the feasible solution space within which the optimal solution is sought. They usually arise from physical or technical limitations, regulations, or other requirements. In PID parameter-tuning problems, constraints may come from the stability requirements of the control system. There may also be engineering constraints from practical applications, such as the allowed range of the PID parameters or the required performance of the control system. In this paper, the constraints are handled with a penalty function approach. The penalty function incorporates the constraints into the objective function, which transforms the original problem into an unconstrained optimization problem.

- Optimization method: An optimization method is a technique for searching for solutions that minimize or maximize an objective function. The choice of an appropriate method depends on the nature of the problem, the constraints, the objective function, and the available computational resources. The optimization methods described in Section 1 can be applied to the PID parameter-tuning problem and are therefore not repeated here.
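The penalty function approach mentioned under Constraints can be illustrated with a static exterior penalty. The text does not give the exact penalty form. This minimal sketch assumes constraints written as g(x) <= 0 and a quadratic penalty on each violation. The weight value and the toy bound constraints on a single gain are hypothetical.

```python
def penalized_objective(objective, constraints, penalty_weight=1e6):
    """Wrap an objective so that violated constraints g(x) <= 0 add a quadratic penalty.

    Static exterior penalty: f(x) + penalty_weight * sum(max(0, g(x)) ** 2).
    """
    def wrapped(x):
        violation = sum(max(0.0, g(x)) ** 2 for g in constraints)
        return objective(x) + penalty_weight * violation
    return wrapped


# Hypothetical example: one gain restricted to 0 <= x[0] <= 10.
f = penalized_objective(
    lambda x: (x[0] - 3.0) ** 2,
    [lambda x: -x[0], lambda x: x[0] - 10.0],
    penalty_weight=1000.0,
)
print(f([3.0]), f([12.0]))  # 0.0 4081.0
```

Feasible points keep their original objective value, so any unconstrained optimizer, including a swarm algorithm, can be applied to the wrapped function directly.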

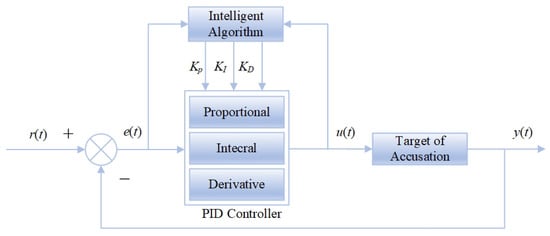

These four elements together determine the nature of an optimization problem and the ways it can be solved. The influence of each element must therefore be considered carefully when studying and solving an optimization problem. Figure 9 shows the structural diagram of PID tuning based on intelligent algorithms.

Figure 9.

Structural diagram of PID tuning.

The main goal of the optimal design of PID controller parameters is to optimize certain performance indicators of the control system. A single error performance index can hardly satisfy the requirements for speed, stability, and robustness at the same time. The integral of absolute error (IAE) is therefore used as the basis of the fitness function. The IAE criterion accounts for the dynamics of the transient response. It integrates the absolute value of the system error over time and thus quantifies how well the system tracks its setpoint or target value. The parameters are then optimized with an intelligent algorithm. To meet the dynamic requirements of the transient process, the IAE is used as the objective function f, and the squared control input is added to f to avoid excessive control effort. The final objective function is Equation (12):

$$ f = \int_0^{\infty} \left( w_1 |e(t)| + w_2 u^2(t) \right) \mathrm{d}t \qquad (12) $$

In Equation (12), $e(t)$ is the system deviation, i.e., the error between the reference input and the output. $u^2(t)$ is the squared output of the PID controller, i.e., the squared control value. The weights $w_1$ and $w_2$ take values in $[0, 1]$, and normally $w_1 = 0.999$ and $w_2 = 0.001$. A penalty function is also needed to avoid overshoot. Once overshoot occurs, the system deviation is added as an extra term of the performance index, and Equation (13), which introduces the overshoot term, is used as f:

$$ f = \int_0^{\infty} \left( w_1 |e(t)| + w_2 u^2(t) + w_3 \max\{0, -e(t)\} \right) \mathrm{d}t \qquad (13) $$

In Equation (13), $w_3$ is also a weight, usually set to $w_3 = 100$. $w_3 \max\{0, -e(t)\}$ is the penalty term, which is nonzero only while the output exceeds the setpoint, i.e., while overshoot is present. Combining the penalty function with the IAE gives a more comprehensive measure of control performance, so the tuned PID parameters yield more stable and accurate control.
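The sketch below shows how an IAE-based fitness with a control-effort term and an overshoot penalty might be evaluated numerically. It is a minimal sketch under several assumptions. The servo motor model used in the paper is not reproduced here; a hypothetical motor-like plant y'' + a*y' = b*u is used instead, with illustrative values of a and b. The infinite integral is truncated at `t_end` and discretized with step `dt`. The penalty w3*|e| is applied only on steps where e < 0, i.e., the output is above the setpoint. All function names are illustrative.

```python
def simulate_pid(kp, ki, kd, t_end=1.0, dt=1e-3, setpoint=1.0, a=10.0, b=100.0):
    """Euler simulation of a unit-step PID loop around a hypothetical plant y'' + a*y' = b*u.

    a and b are illustrative values, not the paper's servo motor parameters.
    Returns the per-step error and control signals.
    """
    y = dy = 0.0
    integral = 0.0
    prev_error = setpoint - y  # avoids a derivative kick at t = 0
    errors, controls = [], []
    for _ in range(int(round(t_end / dt))):
        error = setpoint - y
        integral += error * dt
        derivative = (error - prev_error) / dt
        u = kp * error + ki * integral + kd * derivative
        prev_error = error
        # Semi-implicit Euler step of the plant dynamics.
        dy += (-a * dy + b * u) * dt
        y += dy * dt
        errors.append(error)
        controls.append(u)
    return errors, controls


def pid_fitness(kp, ki, kd, w1=0.999, w2=0.001, w3=100.0, dt=1e-3):
    """Discretized fitness: sum of (w1*|e| + w2*u^2)*dt, plus w3*|e|*dt on steps with e < 0 (overshoot)."""
    errors, controls = simulate_pid(kp, ki, kd, dt=dt)
    f = 0.0
    for e, u in zip(errors, controls):
        f += (w1 * abs(e) + w2 * u * u) * dt
        if e < 0:  # output above setpoint: overshoot penalty
            f += w3 * abs(e) * dt
    return f


# A well-damped gain set scores lower (better) than a sluggish or an oscillatory one.
print(pid_fitness(1.0, 0.0, 0.1), pid_fitness(0.1, 0.0, 0.0), pid_fitness(5.0, 0.0, 0.0))
```

Because w3 is about 100 times larger than w1, even a small overshoot dominates the fitness. This pushes the optimizer toward well-damped gain sets.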

This paper introduces the basic concepts of servo motor systems and PID controllers and describes the basic model of a servo motor system. A PID controller is a feedback control algorithm commonly used in control systems. Its parameters must be tuned to optimize system performance, and selecting appropriate values can improve that performance significantly. Because VFSCPSO adapts well to optimization problems, it can be used to optimize the PID control parameters of a servo motor. In this experiment, the maximum number of iterations is set to 20, the population size to 30, and the variable dimension to 3. The remaining VFSCPSO parameters are set as in the numerical experiments, and the parameters of the other algorithms follow their original studies.
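The tuning loop in Figure 9 pairs an optimizer with a fitness function. The optimizer proposes a three-dimensional gain vector, the closed loop is simulated, and the fitness value guides the next proposal. The minimal sketch below uses the experiment's settings of 30 particles and 20 iterations. It runs a standard inertia-weight global-best PSO, one of the two constituent algorithms. It is not VFSCPSO, whose SCA-based velocity update is defined elsewhere in the paper. The coefficients w, c1, and c2 are common defaults assumed here. The gain ranges and the quadratic stand-in objective are hypothetical; in practice, the closed-loop PID fitness would be passed as `objective`.

```python
import random


def pso_minimize(objective, bounds, pop_size=30, max_iter=20, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Standard global-best PSO with inertia weight (a baseline sketch, not VFSCPSO)."""
    rng = random.Random(seed)
    dim = len(bounds)
    lo = [b[0] for b in bounds]
    hi = [b[1] for b in bounds]
    pos = [[rng.uniform(lo[d], hi[d]) for d in range(dim)] for _ in range(pop_size)]
    vel = [[0.0] * dim for _ in range(pop_size)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(pop_size), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]
    history = []  # best fitness after each iteration (convergence curve)
    for _ in range(max_iter):
        for i in range(pop_size):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Move, then clamp to the search range (simple boundary handling).
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo[d]), hi[d])
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:  # asynchronous global-best update
                    gbest, gbest_val = pos[i][:], val
        history.append(gbest_val)
    return gbest, gbest_val, history


bounds = [(0.0, 10.0), (0.0, 5.0), (0.0, 1.0)]  # hypothetical gain ranges


def stand_in(x):
    """Hypothetical stand-in for the closed-loop fitness, minimized at (2.0, 0.5, 0.1)."""
    return (x[0] - 2.0) ** 2 + (x[1] - 0.5) ** 2 + (x[2] - 0.1) ** 2


best, best_val, history = pso_minimize(stand_in, bounds)
print(best, best_val)
```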

Tuning the PID controller parameters is a key part of control system design. It usually requires determining the values of , , and  according to the characteristics of the controlled process. Many effective tuning methods have emerged since the PID controller was proposed, yet the search for more effective ones continues. Traditional methods of PID parameter optimization suffer from poor adaptivity, complex control processes, and insufficient accuracy, and VFSCPSO addresses these shortcomings more effectively. In addition to the fitness value , the following metrics are selected:

The overshoot $\sigma\%$ is the difference between the peak of the output step response $y(t_p)$ and the steady-state value $y(\infty)$, expressed as a percentage of the steady-state value: $\sigma\% = \frac{y(t_p) - y(\infty)}{y(\infty)} \times 100\%$. The settling time (adjustment time) $t_s$ is the time after which the output step response enters and remains within a specified error band around the steady-state value. The band is generally taken as $\pm 2\%$ or $\pm 5\%$ of the steady-state value.
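Both metrics can be read off a sampled step response, as in the minimal sketch below. It assumes the steady-state value is known, for example the setpoint or the final sample of a long simulation. The sampled response in the example is hypothetical. Settling time is taken as the first sample after the last excursion outside the band, and `None` is returned if the response is still outside the band at the end.

```python
def overshoot_percent(y, y_ss):
    """sigma% = (y_peak - y_ss) / y_ss * 100, clipped at 0 when the response never exceeds y_ss."""
    return max(0.0, (max(y) - y_ss) / y_ss * 100.0)


def settling_time(t, y, y_ss, band=0.02):
    """Earliest sample time after which y stays within +/- band * |y_ss| of y_ss (None if it never settles)."""
    tol = band * abs(y_ss)
    last_outside = -1
    for k, v in enumerate(y):
        if abs(v - y_ss) > tol:
            last_outside = k
    if last_outside == len(y) - 1:
        return None
    return t[last_outside + 1]


# Hypothetical sampled step response with steady-state value 1.0.
t = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]
y = [0.0, 0.6, 1.1, 0.97, 1.01, 1.0]
print(overshoot_percent(y, 1.0))            # about 10 (%)
print(settling_time(t, y, 1.0))             # 0.4 with the 2% band
print(settling_time(t, y, 1.0, band=0.05))  # 0.3 with the 5% band
```

The 5% band gives an earlier settling time than the 2% band for the same response, so the chosen band should be reported alongside the settling time.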

To evaluate objectively how well these five intelligent algorithms optimize the PID control system of a DC servo motor, three evaluation metrics are selected based on the fitness function: the best fitness value , the overshoot , and the settling time . The experimental results are shown in Table 20.

Table 20.

System performance parameters tuned by the five algorithms.

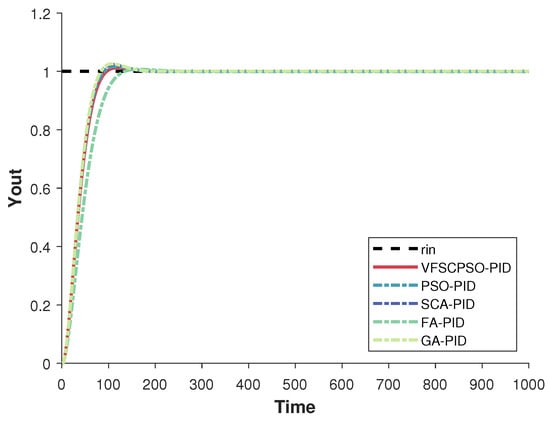

The simulation results in Table 20 and Figure 10 show that the PID controller optimized by VFSCPSO fully exploits the complementary strengths of proportional, integral, and derivative control. The intelligent algorithms substantially improve the performance of the DC servo motor system and improve the step response of the whole system in terms of stability, speed, and accuracy. Adjusting  helps speed up the response and increase the stability of the system, and adjusting  further accelerates the system response. On the  metric, VFSCPSO finds the best solution, followed by PSO and SCA. FA and GA have relatively high values and thus perform relatively poorly in finding the optimal parameters. On the  metric, GA has the smallest overshoot, indicating better control performance, and VFSCPSO is second. FA has a larger overshoot and needs more time to stabilize. On the  metric, VFSCPSO takes less time to settle from the initial state to the steady state, so the system responds faster, while FA has a longer settling time. Among the five algorithms, FA produces the least stable response curve, and GA produces a smoother but slower response. Overall, VFSCPSO outperforms its two constituent algorithms and achieves the best overall performance.

Figure 10.

PID simulation result diagram.

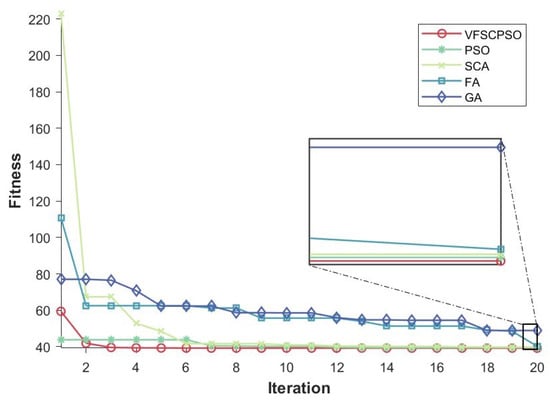

The convergence curves in Figure 11 show that the curve of VFSCPSO decreases faster than those of GA and FA, indicating that VFSCPSO achieves better results than these algorithms on this type of problem. SCA gives worse results in the early iterations but performs better in the later stage, which is exactly the opposite of PSO. In summary, VFSCPSO combines the advantages of its two constituent algorithms and achieves higher accuracy and faster convergence on the PID parameter-tuning problem. This indicates that it is feasible for practical engineering optimization problems.

Figure 11.

Convergence curves of five algorithms in PID parameter tuning of direct current servo motor.

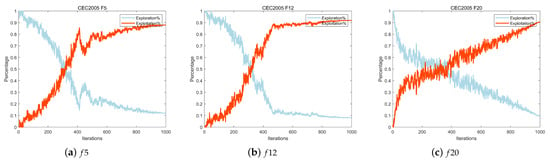

4.2.6. Analysis of VFSCPSO’s Exploration and Exploitation