Abstract

Software evolution is driven by changes made during software development and maintenance. While source control systems effectively manage these changes at the commit level, the intent behind them is often inadequately documented, making their rationale difficult to understand. Existing commit intent classification approaches, largely reliant on commit messages, only partially capture the underlying intent, predominantly because of the messages' inadequate content and the neglect of the semantic nuances in code changes. This paper presents a novel method for extracting semantic features from commits based on modifications in the source code, where each commit is represented by one or more fine-grained conjoint code changes, e.g., file-level or hunk-level changes. To address the unstructured nature of code, the method leverages a pre-trained transformer-based code model, further trained through task-adaptive pre-training and fine-tuning on the downstream task of intent classification. This fine-tuned, task-adapted, pre-trained code model is then used to embed the fine-grained conjoint changes in a commit, which are aggregated into a unified commit-level vector representation. The proposed method was evaluated using two BERT-based code models, i.e., CodeBERT and GraphCodeBERT, and various aggregation techniques on data from open-source Java software projects. The results show that the proposed method can effectively extract commit embeddings as features for commit intent classification and outperforms current state-of-the-art methods of code commit representation for intent categorization in terms of the software maintenance activities undertaken by commits.

Keywords:

software maintenance; code commit; mining software repositories; adaptive pre-training; fine-tuning; semantic code embedding; CodeBERT; GraphCodeBERT; classification; code intelligence

MSC:

68T01; 68N01

1. Introduction

Software changes serve as the core building blocks of software evolution [1] in both the development and maintenance phases of the software development life cycle. Throughout the development of a software system, every change aims to take a step towards producing and delivering a software product that meets the users' needs [2]. In contrast, changes performed after the software is delivered, i.e., during software maintenance, are necessary to ensure the product's continued usefulness to its users and, ultimately, its survival [3,4,5]. For these reasons, software changes are viewed as being at the core of software engineering practice. To efficiently manage the changes made to source code during a software system's lifetime, source control systems, such as Git and SVN, have become the de facto standard in modern software engineering [6]. In such systems, tracked changes are organized as commits. Commits are stored in a software project's code repository and represent a snapshot of the source code at a certain point in the project's version history.

Behind every commit in a software repository lies a reason that drove that particular set of code changes. This underlying rationale can provide insightful information for both software researchers and practitioners; however, when changes are committed to the repository, their intent is rarely properly documented [7,8]. One way to identify the intent of the changes in a commit is to inspect the commit manually, for example, by examining the modifications to the code, the committer's notes in the form of a commit message, the software documentation, and external documentation. However, prior studies have shown that such an approach is labor-intensive, time-consuming, and expensive, and have raised doubts about its scalability to large software projects in real-world use cases [6,9]. Furthermore, when intent identification is performed by humans for a large number of commits and projects, particularly retrospectively, it tends to be error-prone [7]. To address the need for automated intent identification, many researchers have constructed machine-learning-based commit classification models as an alternative to manual effort. Recent studies in the field have demonstrated that such models are adequate for the task [6,7,8,10,11,12,13,14,15,16,17].

In this context, most existing research focuses on identifying the intent of a commit in terms of the type of maintenance activity carried out in it. Maintenance activities receive this attention mainly because of the substantial resources they consume, ranging from approximately 50% [18] to as much as 90% [19] of the total expenses of a software project. There is therefore significant interest in deepening the understanding of software maintenance as a crucial part of software evolution and in improving maintenance-related software practices, and the commit classification models discussed above can assist in several ways. Firstly, with a historical record of the maintenance activities performed over time, managers can better understand how effectively they plan maintenance tasks, manage maintenance expenses, and allocate available resources [11,14,15,20]. Beyond retrospective analysis, commit intent information can assist managers in planning future activities, allocating resources, assessing the project's health, managing technical debt, etc., all of which can collectively make maintenance more efficient, minimize costs and effort, and preempt potential issues [11,14,15,20]. For example, insight into the resources that a type of maintenance activity required in the past makes it easier to plan for future maintenance needs [6]. As another example, with current data on the number of commits associated with each type of maintenance activity, managers can reallocate resources accordingly or investigate the underlying causes of unexpected increases in commits related to a specific activity type [11].

The classifications produced by an accurate commit intent classification model can also be used to identify anomalies in software evolution, such as verifying whether an unplanned type of activity is being carried out. For instance, in projects presumed to be in a feature freeze phase, no work on adding new features should be undertaken [7,21]. Additionally, many research endeavors use commit intent information to support empirical investigations of software changes, evolution, and maintenance [8] or to improve maintenance processes in software practice. For example, some researchers focus on only a specific type of maintenance activity [6], for which commit classification models can serve as a starting point. Similarly, such models can help direct a developer to a specific type of software change [6]. Commit intent information can also be used to build developers' maintenance profiles [22], to prioritize code reviews [8], and to aid in the early detection of software projects with degrading software quality [7] or lacking proper maintenance support [23]. Hence, there is a need for robust and reliable automated approaches to commit intent classification, as their ability to determine the intent of a change accurately directly affects the aforementioned use cases and applications.

While existing approaches to commit intent classification have proven somewhat successful, many open challenges remain, stemming from the complex nature of software changes and the subtle distinctions between different intent types. Models with high accuracy, robustness, and reliability are still lacking. Existing models that base their classification entirely on the content of a commit message are likely to perform poorly when messages are missing, incomplete, or of low quality [5,7,8]. Moreover, these models do not consider the changes made to the source code at all, even though the code is where the actual changes take place. Approaches that do leverage the changes in the source code, which is unstructured by nature, mainly rely on code change metrics that capture size-related characteristics of the code or patterns identified by code change distilling tools. However, the potential benefits of using code semantics for commit intent classification have yet to be fully explored [24].

To address this research gap, this work is the first to present an approach to commit-level software change intent classification into software maintenance activities based on embedding code changes using a pre-trained transformer-based code model. To tackle the unstructured nature of source code, we build upon recent advances in code representation learning and exploit the ability of these models to capture code semantics. Our proposed method is thus based on a pre-trained code model that is further trained using task-adaptive pre-training and then fine-tuned to classify code changes according to the maintenance activities performed in them. The resulting model is then employed as a semantic embedding extractor, i.e., it encodes fine-grained conjoint code changes, e.g., file-level or hunk-level changes represented as additions and deletions of code lines, as code change embeddings. Finally, the code change embeddings representing the fine-grained conjoint changes performed by a commit operation are aggregated into a unified commit-level embedding using a chosen aggregation technique.
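The final aggregation step can be illustrated with a minimal sketch. It assumes that each fine-grained conjoint change (a file or a hunk) has already been embedded as a 768-dimensional vector and uses element-wise mean and max pooling as two example aggregation techniques; the helper name `aggregate_commit` and the choice of pooling functions are illustrative assumptions, not necessarily the techniques evaluated in this work.

```python
import numpy as np


def aggregate_commit(change_embeddings: np.ndarray, method: str = "mean") -> np.ndarray:
    """Aggregate per-change embeddings of shape (num_changes, dim) into one commit vector.

    Hypothetical helper: 'mean' and 'max' pooling are illustrative choices only.
    """
    if change_embeddings.ndim != 2 or change_embeddings.shape[0] == 0:
        raise ValueError("expected a non-empty 2-D array of change embeddings")
    if method == "mean":
        return change_embeddings.mean(axis=0)  # average over fine-grained changes
    if method == "max":
        return change_embeddings.max(axis=0)  # strongest activation per dimension
    raise ValueError(f"unknown aggregation method: {method}")


# Example: a commit touching three hunks, each embedded as a 768-dimensional vector
rng = np.random.default_rng(0)
hunk_embeddings = rng.normal(size=(3, 768))
commit_vector = aggregate_commit(hunk_embeddings, method="mean")
print(commit_vector.shape)  # (768,)
```

Mean pooling keeps the commit vector on the same scale regardless of how many changes a commit contains, whereas max pooling emphasizes the strongest signal among the changes.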

This research aims to investigate whether the proposed method is effective for commit intent classification and how it compares to the current state of the art (SOTA). To this end, we evaluated the proposed method using two BERT-based code models, i.e., CodeBERT and GraphCodeBERT. These two code models were selected because existing studies indicate their success in capturing code syntax and semantics, making them suitable for tasks requiring source code understanding [25,26,27,28,29,30,31,32]. To further train the pre-trained CodeBERT and GraphCodeBERT models in a self-supervised manner, i.e., to perform task-adaptive pre-training, we constructed a dataset of more than 45,000 files affected by commit operations. To fine-tune the resulting models and build supervised machine-learning-based models for commit intent classification, we used an existing dataset of 935 commits from eleven open-source Java software projects, labeled with intent categories in terms of the maintenance activities performed in the commits. For commit intent classification, the commit-level embeddings obtained with the proposed method were used as inputs. Code-based commit representation features from prior studies were also considered as inputs. The output of the classification is the type of maintenance activity performed in a commit. The experimental results show that classification models built on the proposed method can accurately classify commits and outperform the SOTA. We also highlight several potential directions for future research.

In summary, this research paper makes several contributions to the field:

- (1) We propose a novel method for extracting semantic features of code changes in order to represent a commit as a vector embedding for commit intent classification into software maintenance activities.

- (2) We provide an ablation study and in-depth analyses demonstrating the effectiveness of the proposed method and its steps when used for commit intent classification into software maintenance activities.

- (3) We provide empirical evaluations of commit intent classification performance when using the proposed method compared to existing methods of code-based commit representation for intent categorization.

The rest of this paper is organized as follows. Section 2 gives an overview of related work on commit classification and the usage of pre-trained models for code-related tasks. Section 3 introduces background knowledge relevant to our work, including the notions of commit-level software changes, commit intent classification, intent-based categorization of software maintenance activities, and transformer-based models for programming languages. Section 4 details the semantic commit feature extraction method proposed in this work. Section 5 presents the experimental setup, while Section 6 reports and discusses the obtained experimental results. Section 7 discusses threats to construct, internal, external, and conclusion validity. Section 8 concludes the paper by summarizing our findings and highlighting future research directions.

2. Related Work

2.1. Commit Classification

With the widespread adoption of source control in software development, commits have emerged as a valuable unit of analysis for improving the understanding of software changes, collaborative development, and software evolution [33,34,35,36]. In addition, the classification of commits has been found beneficial for various software-engineering-related tasks, including detecting security-relevant commits [37,38], predicting the defect-proneness of commits [39,40], and demystifying the intent behind commits [6,7,8,10,11,12,13,14,15,16,17]. To tackle such challenges, the research community has extensively leveraged data associated with commits, namely, the committed code [6,7,8,11,14,37,38,41] and data from commit logs, such as commit messages [10,12,13,16,17].

In early attempts to construct commit intent classification models, researchers mainly relied on word frequency analysis with text normalization of commit messages to extract keywords indicative of maintenance categories [10]. Also using commit messages as the basis for classification, other researchers employed models based on word embedding methods, notably Word2Vec [16] and different BERT variants [12,13,17]. Our work is related to recent advances in commit intent classification, particularly those that base classification on a commit's source code.

Levin and Yehudai [11] proposed a set of 48 code change metrics as defined by the taxonomy proposed by Fluri [42], each representing a meaningful action performed by developers. The set of metrics encompasses changes made to both the body and declaration parts of classes, methods, etc. Some examples of metrics include statement insertion/deletion and object state addition/deletion for the former, and method overridability addition/deletion and attribute modifiability addition/deletion for the latter. The authors combined the change metrics obtained using the ChangeDistiller tool with a set of 20 indicative keywords extracted from commit messages to build compound commit classification models. The approach was evaluated on a dataset the authors created using commits from GitHub repositories of eleven Java software projects. The authors manually labeled 1151 commits as corrective, adaptive, or perfective following the classification scheme suggested by Swanson [20] with the help of the committed code snippets, commit messages provided by the developer performing the changes, and information from issues tracked in the projects’ issue-tracking system.

Meqdadi et al. [6] proposed a binary commit classification model based on eight code change metrics that count the number of changed code lines, comment lines, include statements, new statements, enum statements, hunks, methods, and files. The study was performed on a manually curated dataset of 70,226 adaptive and non-adaptive commits from six C++ software projects, which the authors created themselves by inspecting commit messages.

Hönel et al. [7] proposed 20 size-based code change metrics, e.g., the numbers of added, deleted, renamed, and modified files. Each of them was presented in two variations: the first considered only unique code that affects functionality (i.e., excluding whitespace, comments, and code clones), while the second included all code. Two further metrics, representing ratios between the two size-based variations at the level of affected files and at the level of affected lines, were proposed as well. The study was conducted on the existing dataset of Levin and Yehudai [11], with the proposed code change metrics extracted from the source code using the Git Density tool. Furthermore, the authors investigated the metrics from the perspective of also considering the code change metric values of preceding commits.

Ghadhab et al. [14] proposed 23 refactoring code change metrics, e.g., the presence of extract method, move method, and rename variable operations, gathered from the code using the RefactoringMiner tool. In addition, they proposed one bug-fixing code change metric, i.e., the presence of a bug-fixing pattern, gathered using the FixMiner tool. Together with the independent variables already proposed by Levin and Yehudai [11] and a fine-tuned DistilBERT model for encoding commit messages, the authors assessed the performance of the proposed approach on a dataset of 1793 commits. The dataset was created from three existing datasets: labeled instances from the data of Levin and Yehudai [11], single-labeled instances from the data of Mauczka et al. [43], and unlabeled instances from the data of AlOmar et al. [44], which the authors labeled manually based on the content of the commit messages.

Mariano et al. [15] proposed three code change metrics, i.e., the number of added lines, deleted lines, and changed files, extracted from the source code using the GitHub GraphQL API. The authors evaluated the proposed approach using the existing dataset of Levin and Yehudai [11].
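Simple size-based metrics of this kind (added lines, deleted lines, changed files) can also be approximated locally from Git. The sketch below assumes text in the format printed by `git show --numstat --format=` for a single commit, where each line reads `added<TAB>deleted<TAB>path` and binary files report `-` instead of counts; this is a swapped-in offline alternative to the GitHub GraphQL API used in [15], and the helper name `size_metrics` is hypothetical.

```python
def size_metrics(numstat_output: str) -> dict:
    """Compute simple size-based metrics from `git --numstat`-style text.

    Hypothetical helper; each non-empty line is expected to be
    '<added>\t<deleted>\t<path>', with '-' for binary files.
    """
    added = deleted = files = 0
    for line in numstat_output.splitlines():
        if not line.strip():
            continue  # skip blank separator lines
        add_str, del_str, _path = line.split("\t", 2)
        files += 1
        # Binary files report '-' instead of line counts; count the file only.
        added += int(add_str) if add_str != "-" else 0
        deleted += int(del_str) if del_str != "-" else 0
    return {"added_lines": added, "deleted_lines": deleted, "changed_files": files}


example = "12\t3\tsrc/main/java/Foo.java\n0\t7\tsrc/test/java/FooTest.java\n-\t-\tdocs/logo.png\n"
print(size_metrics(example))
# {'added_lines': 12, 'deleted_lines': 10, 'changed_files': 3}
```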

Meng et al. [8] proposed a graph representation of commits in which changed entities in a commit were represented as nodes in the graph and their relationships (i.e., dependencies recognized using static program analysis) as edges, while other characteristics were encoded as attributes of the graph’s nodes and edges. The proposed approach identified a set of relevant nodes in each graph and created the graph’s vector representation that served as input to a convolutional neural network. The approach was evaluated on a dataset of 7414 commits from five Java software projects that the authors built based on category labels defined and assigned to commits in an issue-tracking system by developers of the subject software projects. In contrast to the aforementioned related work, the authors here used their own label classification scheme; however, they claim it can be mapped directly to the one suggested by Swanson [20].

A concise overview of relevant related work that has proposed features obtained from the commits' source code is presented in Table 1. Note that, for each study, the table lists only the novel independent variables extracted from the source code (i.e., not from other data sources) that were not previously proposed by others. The authors of these studies may, however, have combined their proposed features with ones already proposed by others in order to achieve better overall performance. Regarding classification performance, the table reports the highest evaluation metric scores achieved in cross-project settings both for models based only on features extracted from code and for hybrid models based on all available features (which may include features extracted from data sources other than code, e.g., commit messages). The classifiers used for the best-performing classification models are reported as well. As many different classifiers were employed for the task, we report only the best-fitting high-level category to which each classifier belongs, distinguishing six groups: decision-tree-based, rule-based, probabilistic, support vector machine, instance-based, and neural network classifiers [45].

Table 1.

Overview of related work utilizing features extracted from the source code for commit-level software change intent classification into software maintenance activities.

In addition to the related work reported here, a more comprehensive overview may be found in a recent systematic literature review on supervised-learning-based commit classification into maintenance activities [5]. Our research builds on these previous efforts to represent software commits based on the source code changes carried out in them. One key difference distinguishing our work from existing work is that no prior study has attempted to employ code-representation-learning models to tackle this challenge.

2.2. Pre-Trained Models in Code-Related Tasks

Because pre-trained code-representation-learning models perform well on code-related tasks without requiring enormous quantities of data, such models have been employed across a wide variety of tasks in software engineering. Pan et al. [25] encoded Java source code files using a pre-trained CodeBERT model to aid software defect prediction. Kovačević et al. [46] conducted experiments with the Code2Vec, Code2Seq, and CuBERT models to represent Java methods or classes as code embeddings, facilitating machine-learning-based detection of two code smells, i.e., long method and god class, while Ma et al. [26] leveraged the CodeT5, CodeGPT, and CodeBERT models to detect the feature envy code smell. To compare software systems, Karakatič et al. [47] utilized a pre-trained Code2Vec model to embed Java methods. Fatima et al. [27] employed the CodeBERT model to represent Java test cases, assisting in the prediction of flaky (i.e., non-deterministic) test cases. The CodeBERT model was also used by Zeng et al. [28] to represent C functions, aiding in detecting vulnerabilities at the function level. Mashhadi and Hemmati [29] used the CodeBERT model to represent single-line Java statements, enabling automated bug repair, while Huang et al. [48] experimented with CodeBERT and GraphCodeBERT to encode single- and multi-line segments of C code for automated repair of security vulnerabilities. The pre-trained CodeBERT model was also utilized to embed code changes to help predict a commit's defectiveness [30] and to identify silent vulnerability fixes [31]. Our research closely relates to these recent attempts to employ pre-trained code models to tackle software engineering challenges. To add to this body of knowledge, our research examines the suitability and effectiveness of code models within the specific problem domain of commit intent classification.

3. Background

3.1. Commit-Level Software Changes

A commit refers to a set of code changes a developer makes to the codebase that are tracked together as a single unit of change within a software project's repository. Given a project's version history containing $n$ commits, which can be defined formally as

$$H = \{c_1, c_2, \ldots, c_n\},$$

any specific commit $c_i \in H$ represents a snapshot of the codebase at a given time in the project's history. Each commit starts from the existing state of the codebase and makes modifications according to the intent of the required changes; hence, the changes introduced in a commit $c_i$ can be defined as the differences between the preceding and current states of the codebase, $S_{i-1}$ and $S_i$, where each change to the code can be thought of as either the addition or the deletion of a code line. Note that a modification of a code line effectively combines both an addition and a deletion. Accordingly, a commit $c_i$ can be formalized as a set of changed lines of code distributed across one or more files affected by the commit as

$$c_i = \{(A_f, D_f) \mid f = 1, \ldots, N\},$$

where $A_f$ and $D_f$ represent the added and deleted lines of code in a file $f$, respectively, and $N$ represents the total number of files affected by the commit operation. In the context of Git source control, changes spread across files are organized into hunks, i.e., contiguous blocks of code changes within a file. Thus, a commit can also be formalized as a set of changed lines of code distributed across one or more hunks as

$$c_i = \{(A_{f,h}, D_{f,h}) \mid f = 1, \ldots, N;\; h = 1, \ldots, M_f\},$$

where $A_{f,h}$ and $D_{f,h}$ represent the lines of code added to and deleted from a hunk $h$ of file $f$, respectively, $N$ represents the total number of files affected by the commit operation, and $M_f$ represents the total number of hunks within file $f$. The two formalizations are consistent, since $A_f = \bigcup_{h=1}^{M_f} A_{f,h}$ and $D_f = \bigcup_{h=1}^{M_f} D_{f,h}$.
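The file-level and hunk-level views of a commit map naturally onto the unified diff format that Git produces. The sketch below assumes a plain unified diff for one commit (for example, the kind printed by `git show --format=`) and ignores edge cases such as renames, binary files, and `\ No newline at end of file` markers; for every hunk of every file, it collects the added and deleted lines as a pair. The helper name `parse_commit_diff` is hypothetical.

```python
def parse_commit_diff(diff_text: str) -> dict[str, list[tuple[list[str], list[str]]]]:
    """Group changed lines of a unified diff into per-file, per-hunk (added, deleted) pairs.

    Hypothetical helper: returns {path: [(added, deleted), ...]}, one tuple per hunk,
    where added / deleted hold the changed lines without their '+' / '-' prefix.
    """
    commit: dict[str, list[tuple[list[str], list[str]]]] = {}
    path, old_path, in_header = None, None, False
    for line in diff_text.splitlines():
        if line.startswith("diff --git "):
            path, old_path, in_header = None, None, True  # a new file section begins
        elif in_header and line.startswith("--- "):
            old_path = line[4:].removeprefix("a/")
        elif in_header and line.startswith("+++ "):
            new_path = line[4:].removeprefix("b/")
            # Deleted files have '+++ /dev/null'; fall back to the old path.
            path = old_path if new_path == "/dev/null" else new_path
            commit.setdefault(path, [])
        elif line.startswith("@@") and path is not None:
            in_header = False
            commit[path].append(([], []))  # open a new hunk h in file f
        elif not in_header and path is not None and commit[path]:
            added, deleted = commit[path][-1]
            if line.startswith("+"):
                added.append(line[1:])
            elif line.startswith("-"):
                deleted.append(line[1:])
            # Context lines (' ') and other markers are ignored.
    return commit


diff = """diff --git a/src/Foo.java b/src/Foo.java
index 1111111..2222222 100644
--- a/src/Foo.java
+++ b/src/Foo.java
@@ -1,3 +1,3 @@
 class Foo {
-    int x = 1;
+    int x = 2;
 }
@@ -10,2 +10,3 @@
     void run() {
+        log();
     }
"""
hunks = parse_commit_diff(diff)
print({f: [(len(a), len(d)) for a, d in hs] for f, hs in hunks.items()})
# {'src/Foo.java': [(1, 1), (1, 0)]}
```

File-level pairs follow by concatenating the added and deleted lists of all hunks belonging to the same file.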

3.2. Commit Intent Classification

Commit intent classification models are supervised-learning-based models that use labeled data to learn how to predict the intent of a commit, i.e., the dependent variable, based on data associated with the commit, i.e., the independent variables [24]. Formally, the problem of commit intent classification can be formulated as follows. A set of labeled commits $C$ can be defined as

$$C = \{(\mathbf{x}_i, y_i) \mid i = 1, \ldots, m\},$$

where every commit instance in $C$ is presented as a pair $(\mathbf{x}_i, y_i)$, with $m$ representing the total number of labeled instances. The first part of the pair, $\mathbf{x}_i \in \mathbb{R}^d$, is a feature vector that describes the commit. The feature vector has $d$ dimensions, each representing a certain feature. In the context of commit intent classification, features can be extracted from a variety of commit artifacts, including commit messages, source code, commit metadata, and data from external systems, such as issue-tracking tools (e.g., Jira) and software quality tools (e.g., SonarQube) [5]. The second part of the pair, $y_i$, is a label that represents the intent of the commit. The label is one of a predefined set of class labels $\mathcal{Y} = \{l_1, l_2, \ldots, l_k\}$, with $k$ representing the total number of classes. Class labels are categories of maintenance activities, and the classification can be either binary ($k = 2$) or multi-class ($k > 2$), following a specific intent-based categorization scheme of software maintenance activities, such as the one introduced by Swanson [20], which is the most commonly used [5]. Finally, given such a labeled set $C$, we aim to train a model $M$, defined formally as

$$M : \mathbb{R}^d \rightarrow \mathcal{Y}, \qquad \hat{y}_i = M(\mathbf{x}_i),$$

where model $M$ predicts the intent class $\hat{y}_i$ of a commit from its feature vector $\mathbf{x}_i$ such that $\hat{y}_i$ is a sufficiently good prediction of the true class $y_i$. The trained model $M$ can then be used to classify new, unseen commits into their respective classes.
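To make the idea of a model that maps a $d$-dimensional commit feature vector to one of $k$ class labels concrete, the sketch below trains a nearest-centroid classifier. It was chosen here only because it fits in a few lines of dependency-free Python; it is not one of the classifiers used in the studies discussed above, and the two-dimensional feature vectors and labels are made up for illustration.

```python
import math
from collections import defaultdict


def train_nearest_centroid(commits):
    """Learn one centroid per class from labeled (feature_vector, label) pairs."""
    sums, counts = {}, defaultdict(int)
    for x, y in commits:
        if y not in sums:
            sums[y] = [0.0] * len(x)
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict(centroids, x):
    """Return the class whose centroid is closest to x in Euclidean distance."""
    return min(centroids, key=lambda y: math.dist(centroids[y], x))


# Toy labeled set with d = 2 made-up features per commit.
C = [
    ([0.9, 0.1], "corrective"),
    ([0.8, 0.2], "corrective"),
    ([0.1, 0.9], "perfective"),
    ([0.2, 0.7], "perfective"),
    ([0.5, 0.5], "adaptive"),
]
model = train_nearest_centroid(C)
print(predict(model, [0.85, 0.15]))  # corrective
```

Any supervised classifier with a fit-and-predict interface could play the same role; in practice, the feature vectors would come from commit artifacts such as those listed above.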

3.3. Intent-Based Categorization of Software Maintenance Activities

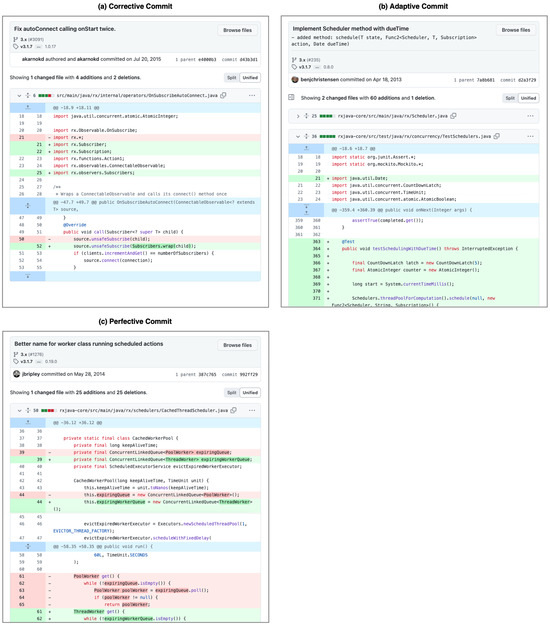

Considering what was intended to be achieved when performing maintenance activities, Swanson [20] discussed the three types of maintenance activities carried out during software maintenance: corrective maintenance, which is performed to correct functional and non-functional faults; adaptive maintenance, which is performed in response to changes in the environment and requirements; and perfective maintenance, which is performed to improve software performance and quality attributes [20,49]. According to Lientz et al. [50], 60.3% of maintenance tasks are perfective, 18.2% adaptive, and only 17.4% corrective. A follow-up study by Schach et al. [51] observed that corrective tasks are more prevalent (56.7%), while fewer tasks are perfective (39%) and adaptive (2.2%) in their nature. Figure 1 illustrates the three maintenance activities performed with a commit operation on real-world software.

Figure 1.

Three examples of commit-level software changes, extracted from a dataset made available by Levin and Yehudai [11], each illustrating a different type of software maintenance activity performed on the RxJava software project: (a) corrective commit (bug fixing); (b) adaptive commit (new feature implementation); (c) perfective commit (refactoring operation). In each commit, additions are highlighted in green with a “+” symbol, signifying added code lines, while deletions are marked in red with a “-” symbol, signifying deleted code lines.

3.4. Transformer-Based Models for Programming Languages

The advent of transformers, a neural network architecture initially proposed by Vaswani et al. [52], has revolutionized the field of natural language processing. Transformers are a class of deep learning models that rely on self-attention mechanisms and positional encodings to model sequences. By enabling parallel processing, and thereby improving scalability to large data, transformer models addressed some key limitations of the previously used recurrent neural networks. At a high level, transformers traditionally consist of two main parts, i.e., the encoder and the decoder. The former processes the input sequence in order to build a representation of it, while the latter generates the target output based on that representation [52]. Transformer-based models have significantly advanced the SOTA in a wide range of natural language processing tasks, e.g., text classification, machine translation, text generation, sentiment analysis, question answering, and text summarization, across various domains. In software engineering, transformer models have proven effective at understanding and generating not only human language but also programming code. Several models designed specifically for programming languages have been proposed that leverage transformers to encapsulate the syntactic structure and semantics of code by training on vast amounts of source code. Notable representatives of such code models are CodeBERT [53], GraphCodeBERT [54], CuBERT [55], CodeT5 [56], and Codex [57].
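The self-attention mechanism mentioned above can be sketched in a few lines of NumPy. The example implements single-head scaled dot-product attention, $\mathrm{softmax}(QK^\top / \sqrt{d_k})\,V$, as defined by Vaswani et al. [52]; the random projection matrices stand in for learned weights, and multi-head attention, masking, and positional encodings are omitted for brevity.

```python
import numpy as np


def scaled_dot_product_attention(Q, K, V):
    """Single-head attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)  # pairwise token similarities
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # each row sums to 1
    return weights @ V, weights


rng = np.random.default_rng(42)
seq_len, d_model, d_k = 5, 16, 8
X = rng.normal(size=(seq_len, d_model))  # token representations
# Random matrices stand in for the learned projections W_Q, W_K, W_V.
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))
output, attn = scaled_dot_product_attention(X @ W_q, X @ W_k, X @ W_v)
print(output.shape, attn.shape)  # (5, 8) (5, 5)
```

Because every row of attention weights is computed independently from the same matrices, all positions can be processed in parallel, which is the property contrasted with recurrent networks above.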

CodeBERT and GraphCodeBERT

CodeBERT is an encoder-only transformer-based model proposed by Feng et al. [53]. It is a language model specifically designed to support understanding and generation tasks related to programming languages. By pre-training on a large and diverse corpus of both uni-modal and bi-modal data, the model produces general-purpose contextual representations that encapsulate the syntactic and semantic patterns inherent in programming code. Uni-modal data refers to inputs consisting of source code snippets from various software projects and programming languages, while bi-modal data refers to paired inputs of code snippets and their natural language documentation [53]. GraphCodeBERT, proposed by Guo et al. [54], enhances CodeBERT by also encapsulating the inherent structure of programming code: it includes the data flow of code, which encodes the dependency relations between variables, in the pre-training of code representations, which can further benefit the code-understanding process.

Both CodeBERT and GraphCodeBERT use a multi-layer transformer-based neural architecture following BERT (Bidirectional Encoder Representations from Transformers); more specifically, they are based on the architecture of RoBERTa [53,54]. In CodeBERT, each input to the model is represented as a sequence of two distinct subsequences of tokens as $\{[CLS], w_1, w_2, \ldots, w_p, [SEP], c_1, c_2, \ldots, c_q, [SEP]\}$, where $w_j$ and $c_j$ represent tokens of the two subsequences, corresponding to natural language inputs, i.e., words, and programming language inputs, i.e., code, respectively; $[CLS]$ represents a special classification token at the beginning; and $[SEP]$ represents a special separation token that separates the two subsequences or signifies the end of the sequence [53]. In GraphCodeBERT, the input to the model is extended with an additional subsequence of variables as $\{[CLS], w_1, \ldots, w_p, [SEP], c_1, \ldots, c_q, [SEP], v_1, \ldots, v_r\}$, where $v_j$ represents a token corresponding to a variable. The subsequence of variables follows the subsequence of tokens of the paired natural language-programming language inputs [54]. The output of both CodeBERT and GraphCodeBERT includes the contextual vector representation of each token in the sequence and the representation of the special classification token $[CLS]$, which is considered the aggregated representation of the entire sequence [53,54].
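The input layouts described above can be illustrated with plain token lists. The sketch assumes already-tokenized inputs and uses the literal strings `[CLS]` and `[SEP]` as placeholders; the RoBERTa-based tokenizers of the released models use their own special-token strings (`<s>` and `</s>`), so this is a schematic illustration rather than the models' exact preprocessing. The helper `build_input` and the example tokens are hypothetical.

```python
def build_input(nl_tokens, code_tokens, variables=None):
    """Assemble a CodeBERT-style sequence [CLS] W [SEP] C [SEP],
    optionally followed by a GraphCodeBERT-style variable subsequence V."""
    sequence = ["[CLS]", *nl_tokens, "[SEP]", *code_tokens, "[SEP]"]
    if variables:
        sequence += variables  # variable tokens follow the NL-PL pair
    return sequence


nl = ["return", "max"]
code = ["return", "a", "if", "a", ">", "b", "else", "b"]
codebert_input = build_input(nl, code)
graphcodebert_input = build_input(nl, code, variables=["a", "b"])
print(codebert_input)
print(graphcodebert_input)
```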

During pre-training of CodeBERT, the model is trained in an unsupervised manner on large-scale unlabeled data using a hybrid self-supervised objective that combines two pre-training objectives, $\min_{\theta} \mathcal{L}_{MLM}(\theta) + \mathcal{L}_{RTD}(\theta)$, where $\mathcal{L}_{MLM}$ is the loss of the masked language modeling (MLM) pre-training objective and $\mathcal{L}_{RTD}$ is the loss of the replaced token detection (RTD) pre-training objective [53]. The MLM objective uses bi-modal data and masks out a percentage of the tokens in both subsequences at randomly selected positions; the pre-training task is to predict the original tokens that were masked out given their surrounding context. The loss function of MLM is defined as

$$\mathcal{L}_{MLM}(\theta) = \sum_{i \in \mathbf{m}^{w} \cup \mathbf{m}^{c}} -\log p^{D_1}\left(x_i \mid \mathbf{w}^{masked}, \mathbf{c}^{masked}\right),$$

where $p^{D_1}$ acts as the discriminator predicting the probability of a token given the masked context, $\mathbf{m}^{w}$ and $\mathbf{m}^{c}$ are the random sets of positions to mask in the natural language and programming language subsequences, respectively, $\mathbf{w}^{masked}$ and $\mathbf{c}^{masked}$ are the corresponding subsequences in which the tokens at these positions are replaced by a special mask token, and $x_i$ is the original token at position $i$ [53,58]. The second pre-training objective of CodeBERT, RTD, uses both bi-modal and uni-modal data: generators produce plausible alternatives for the masked-out tokens, and the pre-training task is to detect which tokens have been replaced by such alternatives [53,59]. During pre-training of GraphCodeBERT, three self-supervised training objectives are used. In addition to the MLM pre-training objective, GraphCodeBERT introduces two structure-aware objectives, i.e., data-flow edge prediction and node alignment. The former is designed to learn representations from data flow, while the latter is designed to align variable representations between source code and data flow [54]. To keep this paper concise, we present only the loss function of the MLM pre-training objective, as it is directly relevant to this study. For additional information on the other objectives, please refer to the original papers by Feng et al. [53] and Guo et al. [54].
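For illustration, the Python sketch below evaluates the MLM loss above on a toy example. It assumes that the model’s predicted distribution at each masked position is already available as a token-to-probability mapping; the function `mlm_loss`, the toy tokens, and the probabilities are hypothetical and not taken from CodeBERT.

```python
import math
from typing import Dict, List, Set


def mlm_loss(
    original_tokens: List[str],
    masked_positions: Set[int],
    predicted: Dict[int, Dict[str, float]],
) -> float:
    """Sum of -log p(x_i | masked context) over the masked positions.

    `predicted[i]` is the model's distribution over the vocabulary at
    position i (a hypothetical input format chosen for this sketch).
    """
    loss = 0.0
    for i in sorted(masked_positions):
        p_true = predicted[i][original_tokens[i]]  # probability of the original token
        loss += -math.log(p_true)
    return loss


tokens = ["int", "x", "=", "2", ";"]
masked = {1, 3}  # positions replaced by the mask token in the model input
predicted = {
    1: {"x": 0.5, "y": 0.5},
    3: {"2": 0.25, "1": 0.75},
}
print(round(mlm_loss(tokens, masked, predicted), 4))  # 2.0794, i.e., -log(0.5) - log(0.25)
```

Practical implementations, such as PyTorch’s cross-entropy loss with its default reduction, average rather than sum over the masked positions, which only rescales the objective.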

The publicly available pre-trained CodeBERT and GraphCodeBERT models were pre-trained on the large-scale CodeSearchNet dataset [60], which consists of data from publicly available, open-source, non-fork GitHub code repositories, including 6.4 million uni-modal and 2.1 million bi-modal data points. Uni-modal data points are function-level source code snippets, while bi-modal data points pair function-level code snippets with their natural language documentation, i.e., code comments. The pre-trained models are designed to process input sequences of at most 512 tokens, including special tokens, and output 768-dimensional embeddings [53,54].

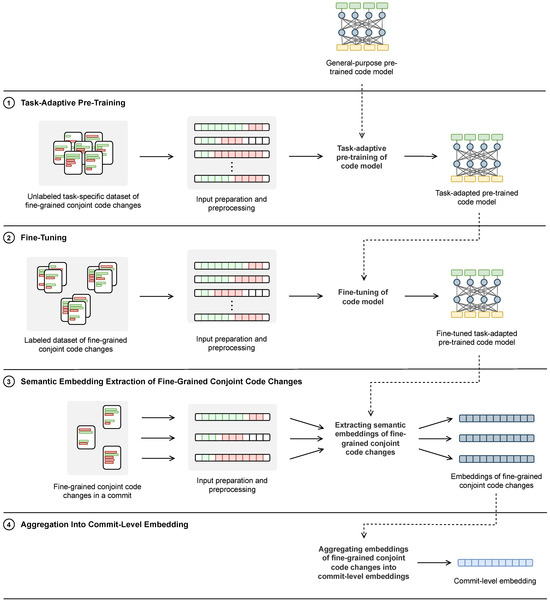

4. Proposed Semantic Commit Feature Extraction Method

From a high-level overview, the proposed method of semantic feature extraction from code changes in a commit consists of four steps: ➀ task-adaptive pre-training, ➁ fine-tuning, ➂ semantic embedding extraction of fine-grained conjoint code changes, and ➃ aggregation into commit-level embedding, as illustrated in Figure 2. In the following subsections, we outline each step of the proposed method and provide implementation details for CodeBERT and GraphCodeBERT, the models employed in this study.

Figure 2.

An overview of the proposed semantic commit feature extraction method.

4.1. Task-Adaptive Pre-Training

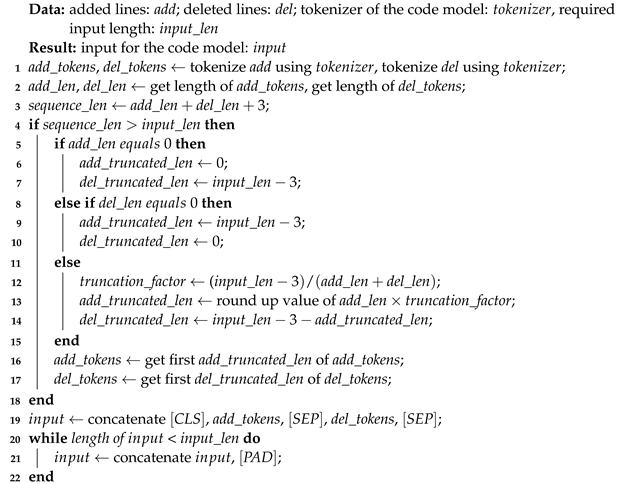

In the first step, a selected pre-trained code model, trained on a large and general dataset, undergoes a second phase of training on a smaller, unlabeled dataset relevant to the task under study. This adaptation strategy aims to refine the code model’s capabilities for a particular task [61]. In our case, task-adaptive pre-training aims to adjust the model to code changes, i.e., to capture the semantics of paired inputs of added and deleted lines of code at the level of fine-grained conjoint code changes, e.g., file-level or hunk-level code changes, affected by a commit operation. This differs from the initial training of CodeBERT and GraphCodeBERT, which used paired inputs of function-level natural language code comments and code snippets. To perform task-adaptive pre-training, a corpus closely related to the task is necessary, ideally drawn from the task distribution [61]. In our context, the dataset should consist of representative code additions and deletions within a single commit at the level of fine-grained conjoint changes. Such datasets can be gathered from the unlabeled code repository data of the software projects on which commit intent classification models are built, or from data available in open-source code repositories. To be usable in the training process, the dataset needs to be prepared and preprocessed according to the selected model’s requirements, including tokenization, truncation, and padding. Specifically, for BERT-based code models, the input sequence should be constructed as detailed in Algorithm 1. This first involves converting added and deleted lines of code into tokens using the tokenizer provided with the selected code model. If these tokenized lines, together with the necessary special tokens, i.e., [CLS] at the beginning of the sequence and [SEP] between the two subsequences and at the end, exceed the maximum input size that the model is designed to process, the added and deleted tokens must be truncated.
Truncation is performed proportionally for added and deleted code lines to ensure their balanced representation in the sequence; alternatively, tail or head truncation can be performed. If the sequence is shorter than the required length, it is padded with the model’s special padding token so that all input sequences have the same length. Using such a task-specific dataset, task-adaptive pre-training starts from the parameters learned through general pre-training and adjusts them through a selected self-supervised learning objective, thus building upon the existing knowledge and adapting it to the given task.

Algorithm 1.

Input preparation and processing for a BERT-based code model with dynamic token length allocation, truncation, and padding.
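The body of Algorithm 1 is not reproduced in this text. The Python sketch below illustrates the input layout described above under stated assumptions: tokens are already produced by the model’s tokenizer, added lines precede deleted lines, "proportional" truncation means that each subsequence keeps a share of the token budget proportional to its length with its tail cut off, and the special-token strings are placeholders. The function name `prepare_change_input` is hypothetical.

```python
from typing import List, Tuple


def prepare_change_input(
    added: List[str],
    deleted: List[str],
    max_len: int = 512,
    cls: str = "[CLS]",
    sep: str = "[SEP]",
    pad: str = "[PAD]",
) -> Tuple[List[str], List[int]]:
    """Build [CLS] added [SEP] deleted [SEP], truncated and padded to max_len.

    Returns the token sequence and an attention mask (1 = real token, 0 = padding).
    """
    budget = max_len - 3  # room left after [CLS] and the two [SEP] tokens
    total = len(added) + len(deleted)
    if total > budget:
        # Proportional truncation (assumed reading): each side keeps a share of
        # the budget proportional to its length; the rest is cut from its tail.
        keep_added = budget * len(added) // total
        keep_deleted = min(len(deleted), budget - keep_added)
        added, deleted = added[:keep_added], deleted[:keep_deleted]
    tokens = [cls] + added + [sep] + deleted + [sep]
    attention_mask = [1] * len(tokens)
    n_pad = max_len - len(tokens)  # pad so every sequence has exactly max_len tokens
    tokens += [pad] * n_pad
    attention_mask += [0] * n_pad
    return tokens, attention_mask


# Usage: 10 added and 30 deleted tokens with a 23-token limit (20-token budget)
tokens, mask = prepare_change_input(
    [f"a{i}" for i in range(10)], [f"d{i}" for i in range(30)], max_len=23
)
print(tokens.count("[PAD]"), sum(1 for t in tokens if t.startswith("a")))  # 0 5
```

With a Hugging Face tokenizer, the token lists could come from `tokenizer.tokenize(...)` and the special tokens from `tokenizer.cls_token`, `tokenizer.sep_token`, and `tokenizer.pad_token`. Note that RoBERTa-style tokenizers, on which CodeBERT and GraphCodeBERT build, insert a double separator between paired segments when encoding pairs natively; the sketch follows the single-separator layout described in this section.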

4.2. Fine-Tuning

In the second step, the task-adapted pre-trained code model from the first step undergoes further training through fine-tuning for a classification task. In our case, this involves training the model on a labeled, task-specific dataset of code changes at the level of fine-grained conjoint changes, represented as additions and deletions of code lines, each with a known label corresponding to the intent of the change. The goal of fine-tuning is to enhance the model’s ability to accurately reflect the various intents behind code changes, represented by pairs of added and deleted code lines. To achieve this, a classification head is added on top of the task-adapted pre-trained code model, and the model’s parameters are then slightly adjusted to the given task using the provided labeled dataset. Note that the dataset should be prepared and preprocessed using the same process as outlined in the previous subsection, ensuring that the inputs are suitably formatted to meet the requirements of the selected code model.
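To show what such a classification head computes, the NumPy sketch below maps [CLS] embeddings to class probabilities at inference time. The layout (a dense layer with tanh, followed by an output projection and softmax) mirrors the RoBERTa-style sequence-classification heads in Hugging Face Transformers and is an assumption here; the weights are random placeholders, and dropout is omitted because it is inactive at inference. During fine-tuning, these head parameters are trained jointly with the unfrozen encoder parameters.

```python
import numpy as np

HIDDEN, NUM_CLASSES = 768, 3  # [CLS] size; intents: corrective, adaptive, perfective

rng = np.random.default_rng(0)
# Hypothetical, randomly initialized head parameters (learned during fine-tuning).
W_dense = rng.normal(0.0, 0.02, size=(HIDDEN, HIDDEN))
b_dense = np.zeros(HIDDEN)
W_out = rng.normal(0.0, 0.02, size=(HIDDEN, NUM_CLASSES))
b_out = np.zeros(NUM_CLASSES)


def classify_head(cls_embeddings: np.ndarray) -> np.ndarray:
    """Map (batch, 768) [CLS] embeddings to (batch, 3) class probabilities."""
    hidden = np.tanh(cls_embeddings @ W_dense + b_dense)
    logits = hidden @ W_out + b_out
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability for softmax
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)


probs = classify_head(rng.normal(size=(4, HIDDEN)))
print(probs.shape)  # (4, 3)
```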

4.3. Semantic Embedding Extraction of Fine-Grained Conjoint Code Changes

In the third step, the fine-tuned task-adapted pre-trained code model serves as a semantic embedding extractor through model inference. The added and deleted lines of code of fine-grained conjoint code changes, prepared and preprocessed as outlined in the previous subsections, are used as inputs to the model. For each input, the embedding of the model’s special classification token [CLS] is extracted. This embedding acts as a contextual aggregated vector representation of the entire input sequence, encapsulating the nuanced semantic information pertinent to the code changes.
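In Hugging Face Transformers, the last hidden layer of a RoBERTa-based model has shape (batch, sequence length, hidden size), and the [CLS] token occupies position 0. The NumPy sketch below isolates that selection step on a stand-in array; the commented lines indicate how the array might be obtained, assuming the `microsoft/codebert-base` checkpoint from the Hugging Face Hub, which this section does not name explicitly.

```python
import numpy as np


def cls_embeddings(last_hidden_state: np.ndarray) -> np.ndarray:
    """Return the [CLS] vector (position 0) of each sequence in the batch."""
    return last_hidden_state[:, 0, :]


# With Hugging Face Transformers (assumed setup, not executed here):
#   model = AutoModel.from_pretrained("microsoft/codebert-base")
#   with torch.no_grad():
#       out = model(input_ids=ids, attention_mask=mask)
#   hidden = out.last_hidden_state.numpy()          # (batch, 512, 768)
# For a fine-tuned sequence-classification model, pass output_hidden_states=True
# and use out.hidden_states[-1] instead of out.last_hidden_state.
hidden = np.random.default_rng(0).normal(size=(2, 512, 768))  # stand-in output
file_vectors = cls_embeddings(hidden)
print(file_vectors.shape)  # (2, 768)
```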

4.4. Aggregation into Commit-Level Embedding

In the fourth and final step, since a commit may involve changes across several fine-grained conjoint change units, e.g., files or hunks, the embeddings of the code changes affected by a specific commit operation are aggregated into a single, unified vector representation. This is achieved using a selected aggregation technique, such as simple dimension-wise statistical aggregation, graph-based aggregation, or clustering-based aggregation. The result of this step, i.e., the output of the proposed method, is a commit-level embedding representation. Such commit embeddings, derived from source code changes, can subsequently be used for a downstream task. For instance, a classifier utilizing these commit embeddings can be trained on a labeled dataset to categorize commits according to their intents, e.g., by the type of maintenance activity performed within a commit, thereby performing commit intent classification.

5. Experiments

5.1. Research Questions

With this research, our objective is to investigate and provide answers to two research questions (RQs), formulated as follows, alongside the rationales behind them:

- RQ1 How effective is the proposed method when used for commit intent classification? This question aims to empirically assess the effectiveness of the proposed semantic commit feature extraction method for commit intent classification. By focusing on classification performance, this evaluation aims to determine the resulting model’s ability to accurately distinguish between various types of intents behind commit-level software changes. Such an assessment is crucial as it directly relates to the practical applicability and relevance of the method in the field of software engineering.

- RQ2 How does the proposed method compare to the SOTA when used for commit intent classification? This question aims to benchmark the proposed method against the current SOTA in commit intent classification. By conducting this comparative analysis, the research aims to position the proposed method within the broader context of commit intent classification.

5.2. Datasets

To address the research questions, several experiments were conducted on the dataset made available by Ghadhab et al. [14], which was originally prepared by Levin and Yehudai [11]. The dataset used in the experiments consists of 935 commits from eleven open-source Java software projects, with manually annotated commit intent labels in terms of the three software maintenance activities. Of all commits, 49.4%, 26.1%, and 24.5% are corrective, adaptive, and perfective, respectively. More detailed commit-level characteristics of the dataset used in our experiments are presented in Table 2.

Table 2.

Commit-level characteristics of the dataset used in the experiments.

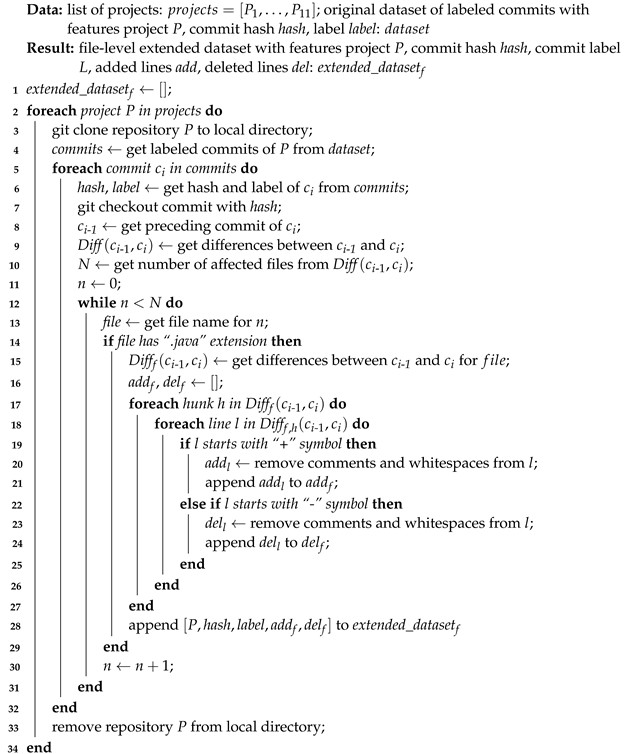

As the original dataset only contains a commit message and label for each commit, we had to extend it with data on fine-grained conjoint code changes to be able to apply the proposed method. In the experiments, we considered one fine-grained conjoint code change unit, i.e., file-level code changes. The steps taken to prepare and preprocess the data to obtain the extended dataset used in the experiments are presented in Algorithm 2. First, we cloned each software project’s repository to a local directory. For each labeled commit of that project, we retrieved the source code of the commit and extracted the differences in the source code between the commit and its preceding commit. For each Java file affected by the commit operation, we obtained the differences in code lines. It should be noted that different diff algorithms available in Git can produce different results [62]; we used the Myers algorithm, which is Git’s default. We parsed the obtained differences in each file to obtain hunks with the modified lines of code of a commit compared to the preceding commit, simultaneously determining whether each line was an addition or a deletion. Each modified code line was preprocessed to remove code comments and whitespace, i.e., indentation and empty lines. Thus, the resulting code lines represent only the actual meaningful changes to the code itself, without the natural language documentation in the code or the code layout and formatting. Each file was assigned the same label as the commit it was part of. The resulting extended dataset contains detailed file-level information on the source code changes of each commit in the original dataset. Altogether, the extended dataset consists of 4513 files that were affected by a commit operation across 935 commits in eleven software projects.
Across all projects, a median of two files (IQR = 3.00) were affected by a single commit, and a median of eight lines of code (IQR = 20.00) were modified, i.e., either added or deleted, in a single file. More detailed file-level characteristics of the dataset used in the experiments are available in Appendix A in Table A1.

For task-adaptive pre-training, we prepared our own representative datasets of file-level code changes. From the code repositories of the eleven software projects included in the study, we randomly selected 1000 non-initial and non-merge commits. For each commit, files affected by a commit operation were extracted. The same steps presented in Algorithm 2, used to extend the labeled dataset, were undertaken herein to obtain the additions and deletions in each file, with the exception of assigning a label, as the dataset in this context is unlabeled. Altogether, the dataset for task-adaptive pre-training comprises 45,352 files.

Algorithm 2.

Data preparation and preprocessing steps to obtain the extended dataset used in the experiments.
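The body of Algorithm 2 is not reproduced in this text. The Python sketch below approximates its per-file part: it splits the unified diff of one Java file into added and deleted lines and strips comments, indentation, and empty lines. It assumes the diff text is already available (for example, from GitPython’s `repo.git.diff(parent_sha, commit_sha, "--", path)`, which runs `git diff` with Git’s default Myers algorithm), and it uses simple regular expressions instead of the codeprep library used in the study; these expressions do not handle comment markers inside string literals or comments split across hunks. All function names are hypothetical.

```python
import re
from typing import List, Tuple

_BLOCK_COMMENT = re.compile(r"/\*.*?\*/", re.DOTALL)
_LINE_COMMENT = re.compile(r"//.*")


def clean_java_lines(lines: List[str]) -> List[str]:
    """Drop comments, indentation, and empty lines (naive: ignores string literals)."""
    text = _BLOCK_COMMENT.sub("", "\n".join(lines))
    cleaned = (_LINE_COMMENT.sub("", line).strip() for line in text.splitlines())
    return [line for line in cleaned if line]


def split_file_diff(diff_text: str) -> Tuple[List[str], List[str]]:
    """Split a unified diff of one file into cleaned added and deleted lines."""
    added, deleted = [], []
    in_hunk = False
    for line in diff_text.splitlines():
        if line.startswith("@@"):
            in_hunk = True  # hunk header; content lines follow
        elif line.startswith("diff --git"):
            in_hunk = False  # headers of the next file follow
        elif in_hunk and line.startswith("+"):
            added.append(line[1:])
        elif in_hunk and line.startswith("-"):
            deleted.append(line[1:])
        # context lines (" ...") and "\ No newline at end of file" are skipped
    return clean_java_lines(added), clean_java_lines(deleted)


example = """diff --git a/A.java b/A.java
--- a/A.java
+++ b/A.java
@@ -1,3 +1,4 @@
 class A {
-    int x = 1; // old value
+    int x = 2;
+
+    /* note */ int y = 3;
 }"""
print(split_file_diff(example))  # (['int x = 2;', 'int y = 3;'], ['int x = 1;'])
```

Tracking whether the parser is inside a hunk, rather than skipping every line that starts with `---` or `+++`, keeps deleted or added code lines that themselves begin with `--` or `++` from being mistaken for file headers.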

5.3. Experimental Settings of the Proposed Method

5.3.1. Task-Adaptive Pre-Training

For task-adaptive pre-training, we used the prepared task-specific datasets of file-level code changes. Two code models were considered for task-adaptive pre-training, i.e., a pre-trained CodeBERT and a pre-trained GraphCodeBERT model. A tokenizer specific to each code model was utilized for tokenizing inputs, i.e., sequences of added and deleted code lines, with the maximum input sequence length set to 512. Of the available data, 80% were used for training and 20% for validation, with a random seed value of 42 used when splitting the data to ensure reproducibility. We used the MLM pre-training objective and randomly masked out 15% of the tokens. We employed a training strategy with four epochs, a batch size of 32, and gradient accumulation over four mini-batches. The training utilized the AdamW optimizer with a learning rate of 2e-5, complemented by a weight decay of 1e-2 and 100 warmup steps. Two training strategies were employed, i.e., full-model and partial-model task-adaptive pre-training. For the former, which was primarily used, all parameters were trainable, while for the latter, the encoder layers were frozen; thus, only the parameters in the embedding and dense layers were trainable, as suggested by Ladkat et al. [63].
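As an illustration of the MLM masking step, the sketch below masks 15% of the non-special tokens of one sequence and builds the label vector used by the loss, marking unmasked positions with -100 (the index that PyTorch’s cross-entropy loss ignores by default). It is a simplification: it always substitutes the mask token and masks a fixed count. Hugging Face’s `DataCollatorForLanguageModeling` with `mlm_probability=0.15`, a likely but unconfirmed tool for this setup, instead selects each token independently with that probability and replaces 80% of the selected tokens with the mask token, 10% with random tokens, and leaves 10% unchanged. The function name and token ids are hypothetical.

```python
import random
from typing import List, Set, Tuple

IGNORE_INDEX = -100  # label value skipped by PyTorch's cross-entropy loss by default


def mask_tokens(
    token_ids: List[int],
    special_ids: Set[int],
    mask_id: int,
    mlm_prob: float = 0.15,
    seed: int = 42,
) -> Tuple[List[int], List[int]]:
    """Replace about mlm_prob of the non-special tokens with mask_id.

    Returns the model inputs and the labels (original id at masked
    positions, IGNORE_INDEX elsewhere).
    """
    rng = random.Random(seed)
    candidates = [i for i, t in enumerate(token_ids) if t not in special_ids]
    n_mask = round(mlm_prob * len(candidates))
    positions = set(rng.sample(candidates, n_mask))
    inputs = [mask_id if i in positions else t for i, t in enumerate(token_ids)]
    labels = [t if i in positions else IGNORE_INDEX for i, t in enumerate(token_ids)]
    return inputs, labels


# Hypothetical ids: 0 and 2 act as special tokens, 4 as the mask token.
ids = [0] + list(range(10, 30)) + [2]
inputs, labels = mask_tokens(ids, special_ids={0, 2}, mask_id=4)
print(inputs.count(4))  # 3 of the 20 maskable tokens
```

With a batch size of 32 and gradient accumulation over four mini-batches, each optimizer step aggregates gradients from 32 × 4 = 128 sequences.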

5.3.2. Fine-Tuning

For fine-tuning the code models, we utilized the extended dataset with file-level code changes and their respective labels. Four code models were fine-tuned, i.e., a pre-trained CodeBERT, a task-adapted pre-trained CodeBERT, a pre-trained GraphCodeBERT, and a task-adapted pre-trained GraphCodeBERT. The inputs to the code models, consisting of sequences of added and deleted code lines, were tokenized using the respective model-specific tokenizer with a maximum input sequence length of 512. The available data were split into 80% for training and 20% for validation using label-stratified splitting with a random seed value of 42. Random undersampling was performed to tackle the imbalanced nature of the dataset. We employed a training strategy with 15 epochs, a batch size of 16, gradient accumulation over two mini-batches, and the AdamW optimizer with a learning rate of 1e-5, complemented by a weight decay of 1e-2 to regulate model complexity. To ensure a gradual adaptation of the learning rate, we incorporated 500 warmup steps. To prevent overfitting, early stopping was implemented with a patience of three epochs. Of the twelve encoder layers, we froze the bottom nine, along with the embedding layer, under the assumption that these layers encapsulate more general knowledge [64,65], and trained only the top three encoder layers of the model. A classification head with a dense layer and a dropout of 0.1 was added to the model for the classification task used in fine-tuning.
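The layer-freezing scheme can be expressed as a predicate over parameter names. The sketch assumes the parameter naming of Hugging Face’s `RobertaForSequenceClassification` (embeddings under `roberta.embeddings`, encoder layers under `roberta.encoder.layer.<i>`, and the head under `classifier`); the function name is hypothetical.

```python
import re

_LAYER = re.compile(r"encoder\.layer\.(\d+)\.")


def is_trainable(param_name: str, num_layers: int = 12, top_k: int = 3) -> bool:
    """True for the classification head and the top `top_k` encoder layers."""
    if param_name.startswith("classifier."):
        return True  # newly added classification head
    match = _LAYER.search(param_name)
    if match:
        return int(match.group(1)) >= num_layers - top_k  # layers 9, 10, 11 by default
    return False  # embeddings and any other parameters stay frozen


for name in [
    "roberta.embeddings.word_embeddings.weight",
    "roberta.encoder.layer.8.attention.self.query.weight",
    "roberta.encoder.layer.9.output.dense.weight",
    "classifier.out_proj.weight",
]:
    print(name, is_trainable(name))
```

In PyTorch, the predicate would be applied as `for name, param in model.named_parameters(): param.requires_grad = is_trainable(name)`, so that the optimizer only updates the top three encoder layers and the head.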

5.3.3. Semantic Embedding Extraction of Fine-Grained Conjoint Code Changes

For extracting file-level embeddings of code changes in the extended dataset, six code models were included in the experiment, i.e., a pre-trained CodeBERT (CodeBERTPT), a fine-tuned pre-trained CodeBERT (CodeBERTPT+FT), a fine-tuned task-adapted pre-trained CodeBERT (CodeBERTPT+TAPT+FT), a pre-trained GraphCodeBERT (GraphCodeBERTPT), a fine-tuned pre-trained GraphCodeBERT (GraphCodeBERTPT+FT), and a fine-tuned task-adapted pre-trained GraphCodeBERT (GraphCodeBERTPT+TAPT+FT). Additionally, we included CodeBERT with randomly initialized weights (CodeBERTrand) and GraphCodeBERT with randomly initialized weights (GraphCodeBERTrand) as baselines, using a random state of 1 for reproducibility. Inputs were again prepared with the tokenizer corresponding to each code model. The 768-dimensional vector representation of the special classification token [CLS], obtained from a code model, was considered the aggregated semantic embedding of the code changes in each file.

5.3.4. Aggregation into Commit-Level Embedding

Based on the output file-level embeddings of each commit, three simple dimension-wise aggregation techniques, i.e., AggMin, AggMax, and AggMean, and their four concatenation variants, i.e., AggMin⊕Max, AggMin⊕Mean, AggMax⊕Mean, and AggMin⊕Max⊕Mean, were used for aggregation into commit-level embeddings, following the work of Compton et al. [66]. The formulas for each aggregation technique used in commit aggregation are presented in Table 3. The symbol ⊕ denotes the concatenation of vectors, i.e., combining the outputs of two or more aggregation techniques into a single vector representation. The resulting commit embeddings have 768 dimensions when using the AggMin, AggMax, or AggMean aggregation techniques; 1536 dimensions when using the AggMin⊕Max, AggMin⊕Mean, or AggMax⊕Mean aggregation techniques; and 2304 dimensions when using the AggMin⊕Max⊕Mean aggregation technique. To ensure uniform dimension scales in the resulting commit embedding representations, we normalized each dimension to a range between −1 and 1.

Table 3.

Overview of aggregation techniques used in the experiments.
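Assuming the usual dimension-wise definitions, i.e., AggMin, AggMax, and AggMean take the minimum, maximum, and mean of each dimension over a commit’s file embeddings, the aggregation and the subsequent scaling to [−1, 1] can be sketched with NumPy as follows. The function names are hypothetical. scikit-learn’s `MinMaxScaler(feature_range=(-1, 1))` performs the same kind of min-max scaling; fitting the range on training data only is our assumption about how normalization interacted with the cross-validation folds.

```python
from typing import Sequence, Tuple

import numpy as np

# Dimension-wise statistics over the file axis (axis 0) of an (n_files, 768) array.
AGGREGATORS = {"Min": np.min, "Max": np.max, "Mean": np.mean}


def aggregate_commit(
    file_embeddings: np.ndarray, parts: Sequence[str] = ("Min", "Max", "Mean")
) -> np.ndarray:
    """Aggregate one commit's file embeddings; several parts are concatenated (⊕)."""
    return np.concatenate([AGGREGATORS[name](file_embeddings, axis=0) for name in parts])


def scale_to_unit_range(
    train: np.ndarray, test: np.ndarray
) -> Tuple[np.ndarray, np.ndarray]:
    """Min-max scale each dimension to [-1, 1] using ranges fitted on `train` only."""
    lo, hi = train.min(axis=0), train.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)  # avoid division by zero for constant dims
    return 2.0 * (train - lo) / span - 1.0, 2.0 * (test - lo) / span - 1.0


# A commit touching three files yields a 2304-dimensional AggMin⊕Max⊕Mean vector.
commit = aggregate_commit(np.random.default_rng(0).normal(size=(3, 768)))
print(commit.shape)  # (2304,)
```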

5.4. Commit Intent Classification

Following existing research [5], we formulated commit intent classification as a single-label multi-class problem. This means that each commit within our dataset is exclusively categorized into one of three distinct and non-overlapping classes, i.e., corrective, adaptive, or perfective maintenance. Several classifiers were employed for the task, selected based on the findings from related work. From each category of classifiers that has been shown to provide good results for the task under study, i.e., neural network, probabilistic, and decision-tree-based, we opted for one representative, namely, the Neural, Gaussian Naive Bayes, and Random Forest Classifiers, respectively.

For RQ1, the Neural Classifier was configured with three hidden layers, employing the ReLU activation function. For input embeddings of 768 dimensions, the hidden layers were configured to sizes 512, 256, and 128; for embeddings of 1536 dimensions, to 1024, 512, and 256; and for embeddings of 2304 dimensions, to 2048, 1024, and 512. The models utilized the Adam optimizer with a learning rate of 1e-3 and a batch size of 32. The maximum number of iterations was set to 500, and L2 regularization was set to 1e-4 to prevent overfitting. To aid reproducibility, the random state was set at 42. The loss function employed was categorical cross-entropy, appropriate for our multi-class classification task. The Gaussian Naive Bayes models were employed with a variance smoothing parameter of 1e-9. The Random Forest models were configured with 100 trees, using the entropy criterion for splitting. The minimum number of samples required to split a node was set to 2, whereas each leaf node was required to have at least five samples. The random state was set at 42 to ensure the reproducibility of results. To compare the built commit intent classification models with a simple baseline, we additionally employed a majority class classifier that always predicts the most frequent class in the dataset, which in our case is corrective maintenance.
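Since the classifiers were implemented with scikit-learn, the RQ1 configuration can be sketched as the constructor calls below. The mapping of the described settings to parameter names (e.g., `alpha` as the L2 regularization term of `MLPClassifier`, and `DummyClassifier` with `strategy="most_frequent"` as the majority class baseline) is our reading, not code from the study; `build_classifiers` is a hypothetical helper.

```python
from sklearn.dummy import DummyClassifier
from sklearn.ensemble import RandomForestClassifier
from sklearn.naive_bayes import GaussianNB
from sklearn.neural_network import MLPClassifier

# Hidden layer sizes per commit-embedding dimensionality, as described above.
HIDDEN_LAYERS = {768: (512, 256, 128), 1536: (1024, 512, 256), 2304: (2048, 1024, 512)}


def build_classifiers(embedding_dim: int) -> dict:
    return {
        "neural": MLPClassifier(
            hidden_layer_sizes=HIDDEN_LAYERS[embedding_dim],
            activation="relu",
            solver="adam",
            learning_rate_init=1e-3,
            batch_size=32,
            max_iter=500,
            alpha=1e-4,  # L2 penalty; MLPClassifier minimizes cross-entropy (log-loss)
            random_state=42,
        ),
        "gaussian_nb": GaussianNB(var_smoothing=1e-9),
        "random_forest": RandomForestClassifier(
            n_estimators=100,
            criterion="entropy",
            min_samples_split=2,
            min_samples_leaf=5,
            random_state=42,
        ),
        "majority": DummyClassifier(strategy="most_frequent"),
    }


classifiers = build_classifiers(1536)
print(classifiers["neural"].hidden_layer_sizes)  # (1024, 512, 256)
```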

For RQ2, the hyperparameters of the three classifiers were tuned for the classification models using both the proposed and the SOTA methods. The aim was to improve classification performance and to ensure that any observed differences in performance were attributable to the methods’ effectiveness rather than to biased hyperparameter configurations. To find the optimal hyperparameters for each classification model, we used grid search with nested 10-fold cross-validation and the weighted F-score as the performance measure. The search space that was systematically explored for each classifier is reported in Appendix B in Table A2, Table A3 and Table A4 for the Neural, Gaussian Naive Bayes, and Random Forest Classifiers, respectively.

5.5. Evaluation

To evaluate commit intent classification models in a cross-project setting, we employed group 11-fold cross-validation, where the number of folds directly corresponds to the number of software projects included in the dataset. This ensures that all data from one project are either in the train set or in the test set, but not both. We opted for this evaluation strategy to resemble a real-world scenario in which classification models are applied to projects they have not been trained on, thereby providing a more realistic measure of their generalizability and effectiveness in cross-project contexts. More detailed information on each fold is available in Appendix C in Table A5. In each fold, the train set was used to train the models, i.e., to perform fine-tuning and to train the commit intent classifiers, while the evaluations were performed on the test set. As evaluations were performed for each fold, the reported values of performance metrics are descriptive statistics, e.g., the average and standard deviation, computed over the performance results from all folds. Due to the imbalanced nature of the dataset, we primarily employed the F-score (weighted and per-class) and Cohen’s Kappa Coefficient as measures of classification performance. Another reason for using these two measures is that they have been commonly employed in related work, as presented in Section 2.
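The cross-project folds and the two performance measures can be stated precisely in a few lines. The pure-Python sketch below yields one fold per held-out project, which is equivalent to scikit-learn’s `LeaveOneGroupOut` (and to `GroupKFold(n_splits=11)` when there are exactly eleven projects), and computes the weighted F-score and Cohen’s Kappa from their standard definitions; scikit-learn’s `f1_score(..., average="weighted")` and `cohen_kappa_score` compute the same quantities. Function names are hypothetical.

```python
from collections import Counter
from typing import Hashable, Iterator, List, Sequence, Tuple


def leave_one_project_out(
    projects: Sequence[str],
) -> Iterator[Tuple[str, List[int], List[int]]]:
    """Yield (held-out project, train indices, test indices), one fold per project."""
    for held_out in sorted(set(projects)):
        train = [i for i, p in enumerate(projects) if p != held_out]
        test = [i for i, p in enumerate(projects) if p == held_out]
        yield held_out, train, test


def weighted_f1(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Per-class F1 averaged with weights equal to each class's share of y_true."""
    n = len(y_true)
    score = 0.0
    for c, support in Counter(y_true).items():
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = support - tp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        score += (support / n) * f1
    return score


def cohen_kappa(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """(observed agreement - chance agreement) / (1 - chance agreement)."""
    n = len(y_true)
    observed = sum(t == p for t, p in zip(y_true, y_pred)) / n
    true_counts, pred_counts = Counter(y_true), Counter(y_pred)
    chance = sum(true_counts[c] * pred_counts[c] for c in true_counts) / n**2
    # Undefined when chance agreement is 1 (one label everywhere); 0.0 by convention here.
    return 0.0 if chance == 1 else (observed - chance) / (1 - chance)


y_true = ["corrective", "corrective", "adaptive", "perfective"]
y_pred = ["corrective", "adaptive", "adaptive", "corrective"]
print(round(weighted_f1(y_true, y_pred), 4), round(cohen_kappa(y_true, y_pred), 4))
```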

5.6. Experiment Setup and Implementation Details

The experiments were conducted with Python3. The pandas library [67] was used to work with the data. To implement the data preparation and preprocessing of the extended labeled dataset and the task-adaptive pre-training dataset, we used the GitPython [68] and codeprep [69] libraries. In addition, the GitHub REST API [70] was used to obtain the sets of commits for the task-adaptive pre-training datasets. The two code models, i.e., CodeBERT and GraphCodeBERT, were obtained from the Hugging Face Transformers library [71], which was also used to work with the code models (e.g., tokenization, training, fine-tuning, and inference). For embedding aggregation, we used the NumPy library [72]. Commit intent classification using the three classifiers, i.e., Neural, Gaussian Naive Bayes, and Random Forest, and the evaluations were implemented using the scikit-learn library [73]. This library was also used for data preprocessing, e.g., normalizing embeddings and encoding labels, and to build the majority class classifier as a baseline. To address the imbalanced nature of the data through sampling, we employed imbalanced-learn [74]. Our experiments were conducted using Google Colab with an Intel Xeon CPU @ 2.00 GHz. Additionally, NVIDIA A100-SXM4-40 GB GPU resources were employed for task-adaptive pre-training, while NVIDIA V100-SXM2-16 GB GPU resources were used for fine-tuning.

To compare the proposed method with the current SOTA of code-based commit representations for commit intent classification, we reproduced related work on the dataset used in our experiments. To obtain the values of the 48 code change metrics proposed by Levin and Yehudai [11], we used their publicly available replication package [75]. For the 22 code change metrics proposed by Hönel et al. [7], we utilized the provided replication package [76]. Additionally, the 24 metrics suggested by Ghadhab et al. [14] were extracted using their replication package [77]. To obtain the three metrics proposed by Mariano et al. [15], we followed the extraction procedure presented by the authors, using the GitHub GraphQL API [78].

Data analysis of the experimental results was performed using Python3 and IBM SPSS Statistics [79]. The visualizations were made using R and the ggplot2 package [80].

6. Results and Discussion

6.1. RQ1 How Effective Is the Proposed Method When Used for Commit Intent Classification?

First, an ablation study was conducted to evaluate the effectiveness of the proposed semantic embedding extraction method and each of its steps for commit intent classification. To explore how including each of the method’s steps affects classification performance, commit intent classification was performed based on CodeBERT and GraphCodeBERT models with randomly initialized weights (CodeBERTrand, GraphCodeBERTrand), general-purpose pre-trained CodeBERT and GraphCodeBERT models (CodeBERTPT, GraphCodeBERTPT), fine-tuned pre-trained CodeBERT and GraphCodeBERT models (CodeBERTPT+FT, GraphCodeBERTPT+FT), and fine-tuned task-adapted pre-trained CodeBERT and GraphCodeBERT models (CodeBERTPT+TAPT+FT, GraphCodeBERTPT+TAPT+FT). The results of the ablation study are reported in Table 4 as the average F-score and standard deviation on the test datasets across folds, separated by classifier and by the technique used for aggregation into commit-level embeddings. In general, they show that, across code models, aggregation techniques, and classifiers, classification performance tends to improve with each step of the proposed method, with the few exceptions noted below. When the Neural Classifier is used, on average, CodeBERTPT+TAPT+FT provides an 18.88%, 5.26%, and 2.49% improvement over CodeBERTrand, CodeBERTPT, and CodeBERTPT+FT, respectively, while GraphCodeBERTPT+TAPT+FT shows a 12.33% improvement over GraphCodeBERTrand, a 2.24% decrease compared to GraphCodeBERTPT, and a 0.66% improvement over GraphCodeBERTPT+FT. With the Gaussian Naive Bayes Classifier, on average, the improvements with CodeBERTPT+TAPT+FT are 17.69%, 5.56%, and 1.64% over CodeBERTrand, CodeBERTPT, and CodeBERTPT+FT, respectively, while GraphCodeBERTPT+TAPT+FT improves by 8.87% over GraphCodeBERTrand and by 5.99% over GraphCodeBERTPT, with a 0.23% decrease compared to GraphCodeBERTPT+FT.
For the Random Forest Classifier, on average, CodeBERTPT+TAPT+FT shows a 14.01% improvement over CodeBERTrand and a 2.59% improvement over CodeBERTPT, yet a 1.59% decrease compared to CodeBERTPT+FT, while GraphCodeBERTPT+TAPT+FT shows an 8.35%, 7.37%, and 0.75% improvement over GraphCodeBERTrand, GraphCodeBERTPT, and GraphCodeBERTPT+FT, respectively. These results demonstrate the effectiveness of the defined steps of the proposed method, i.e., using a pre-trained model, fine-tuning, and task-adaptive pre-training, in improving commit intent classification performance, and they show the impact of pre-training on a general corpus, as well as of fine-tuning and task-adaptive pre-training on data directly relevant to the classification task, on the overall classification performance. It can also be observed that CodeBERT-based models appear to perform better than GraphCodeBERT-based models. This suggests that the semantic features captured by CodeBERT are more effective for commit intent classification than those captured by GraphCodeBERT. Thus, the relationships and dependencies within code that GraphCodeBERT additionally captures might not aid in identifying the various types of change intent with the semantic embedding extraction method proposed herein. Given these findings, the subsequent analyses presented in the paper focus only on CodeBERT-based classification models.

Table 4.

Performance of commit intent classification, measured by F-score, using the proposed semantic embedding extraction method with different aggregation techniques and across various classifiers, with an ablation study of the methods’ steps on classification performance.

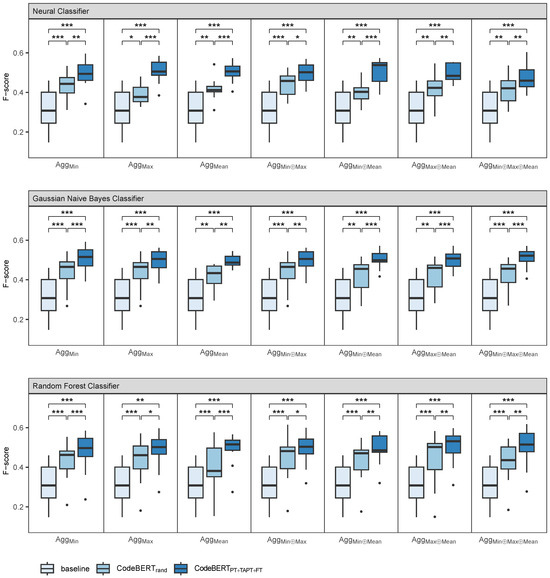

A more comprehensive analysis examined the differences in commit intent classification performances. Figure 3 presents classification performances reported by the F-score of a simple baseline model, i.e., majority class classifier, serving as a reference, and classification models using CodeBERTrand and CodeBERTPT+TAPT+FT with different aggregation techniques and classifiers. Annotations in the figure indicate the statistically significant differences among the three comparison groups. Detailed results of the statistical tests are provided in Appendix D in Table A6, Table A7 and Table A8 for the Neural, Gaussian Naive Bayes, and Random Forest Classifiers, respectively. Depending on the data distribution, appropriate tests were applied. Where the Shapiro–Wilk test showed that the distribution of the differences in F-score was not significantly different from a normal distribution (e.g., W(11) = 0.950, p = 0.648 in the case of baseline-CodeBERTPT+TAPT+FT using AggMin and the Neural Classifier), the Student’s paired-samples t-test was used to assess the differences in classification performance. Alternatively, where the Shapiro–Wilk test showed that the differences in F-score did not meet the assumption of normality (e.g., W(11) = 0.849, p = 0.042 for baseline-CodeBERTPT+TAPT+FT using AggMax and the Random Forest Classifier), the Wilcoxon signed-rank test was used. The analysis confirms statistically significant performance differences across all classifiers and aggregation techniques between the three classification models, i.e., between the baseline and CodeBERTrand, baseline and CodeBERTPT+TAPT+FT, as well as between CodeBERTrand and CodeBERTPT+TAPT+FT. In each paired comparison, the classification performance of the latter model is statistically significantly better. 
These findings reveal that even when a code model with randomly initialized weights is used, the resulting classification models can distinguish between various types of intents to a limited extent, outperforming the majority class baseline. Thus, incorporating a code model, even an untrained one, leads to better performance than the baseline reference. At the same time, these findings indicate that the inherent architecture of the code model, even without pre-training, can capture certain patterns in the data relevant to change intent, a phenomenon of language models with random parameterizations that prior studies have already discussed [65,81,82]. Nevertheless, employing a fine-tuned task-adapted pre-trained code model yields significantly better performance than its randomly initialized counterpart.
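The test-selection procedure described above can be sketched with SciPy: a Shapiro–Wilk test on the paired F-score differences decides between the paired-samples t-test and the Wilcoxon signed-rank test, and the resulting p-value is mapped to the star annotations used in Figure 3. The 0.05 threshold for the normality check, the function names, and the per-fold scores below are assumptions for illustration only, not results of the study.

```python
from typing import Sequence, Tuple

from scipy import stats


def compare_paired(
    a: Sequence[float], b: Sequence[float], alpha: float = 0.05
) -> Tuple[str, float]:
    """Choose the paired test from a Shapiro-Wilk check on the differences a - b."""
    diffs = [x - y for x, y in zip(a, b)]
    _, p_normal = stats.shapiro(diffs)
    if p_normal > alpha:  # normality of the differences is not rejected
        return "paired t-test", float(stats.ttest_rel(a, b).pvalue)
    return "Wilcoxon signed-rank", float(stats.wilcoxon(a, b).pvalue)


def significance_stars(p: float) -> str:
    """Annotation convention used in Figure 3."""
    if p < 0.001:
        return "***"
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    return ""


# Illustrative per-fold F-scores for 11 folds (made-up numbers, not study results)
model_a = [0.40, 0.42, 0.45, 0.38, 0.50, 0.47, 0.44, 0.41, 0.39, 0.46, 0.43]
shift = [0.10, 0.12, 0.09, 0.11, 0.13, 0.08, 0.10, 0.12, 0.11, 0.09, 0.10]
model_b = [x + d for x, d in zip(model_a, shift)]
test_name, p_value = compare_paired(model_a, model_b)
print(test_name, significance_stars(p_value))
```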

Figure 3.

Boxplots representing the commit intent classification performance across folds, measured by F-score, for three models (baseline model, and CodeBERTrand- and CodeBERTPT+TAPT+FT-based models with various aggregation techniques) using Neural, Gaussian Naive Bayes, and Random Forest Classifiers, annotated with statistically significant performance differences between the models (* p < 0.05, ** p < 0.01, *** p < 0.001).

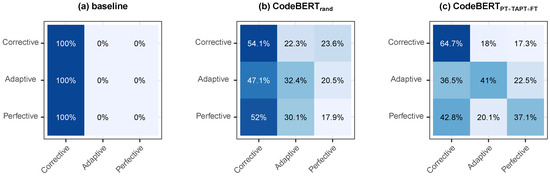

To further analyze how well the three models distinguish between the three classes of commit intents, Figure 4 presents the normalized confusion matrices for the test dataset across all folds. These matrices compare a majority class baseline model with classification models based on CodeBERTrand and CodeBERTPT+TAPT+FT. The AggMax aggregation technique and the Neural Classifier are used as an example. The baseline model classifies all commits as corrective. Since it does not correctly identify any commit of the other two classes, it cannot distinguish between change intents. The CodeBERTrand-based classification model achieves a more balanced classification across the three classes, though with a persistent bias towards the corrective class. It correctly classifies 54.1% of corrective, 32.4% of adaptive, and 17.9% of perfective commits. Yet it misclassifies 47.1% of adaptive and 52% of perfective commits as corrective. The CodeBERTPT+TAPT+FT-based classification model achieves better per-class performance than both previously discussed models. It correctly classifies 64.7% of corrective, 41% of adaptive, and 37.1% of perfective commits. Considerable confusion between classes remains, but it is reduced compared to the CodeBERTrand-based model. Overall, classification performance improves from the baseline through CodeBERTrand to CodeBERTPT+TAPT+FT, and the models become progressively better at distinguishing between types of intents. However, considerable misclassifications remain, which suggests that the proposed method has room for further improvement.
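The matrices are normalized per true class. Each row is divided by the number of commits of that class, so the diagonal gives per-class recall and each row sums to roughly 100%. For example, in the CodeBERTrand adaptive row, 32.4% of commits are predicted adaptive and 47.1% corrective, leaving about 20.5% perfective. Below is a minimal NumPy sketch of this normalization. It assumes that predictions from all test folds are pooled before normalizing. The function name and the toy labels are hypothetical.

```python
import numpy as np

CLASSES = ("corrective", "adaptive", "perfective")


def normalized_confusion_matrix(y_true, y_pred, labels=CLASSES):
    """Row-normalized confusion matrix: rows are true classes, columns predictions.

    Entry (i, j) is the share of commits of true class i predicted as class j,
    so each non-empty row sums to 1 and the diagonal holds per-class recall.
    """
    index = {label: i for i, label in enumerate(labels)}
    counts = np.zeros((len(labels), len(labels)))
    for t, p in zip(y_true, y_pred):
        counts[index[t], index[p]] += 1
    row_sums = counts.sum(axis=1, keepdims=True)
    # Leave rows of classes absent from y_true as zeros instead of dividing by 0.
    return np.divide(counts, row_sums, out=np.zeros_like(counts), where=row_sums > 0)


# A majority class baseline predicts "corrective" for every commit.
y_true = ["corrective"] * 5 + ["adaptive"] * 3 + ["perfective"] * 2
y_pred = ["corrective"] * len(y_true)
print(normalized_confusion_matrix(y_true, y_pred))
```

For the baseline, every row puts all of its mass in the corrective column, which is the pattern described for panel (a).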

Figure 4.

Overall normalized confusion matrices for (a) the baseline model and for classification models using (b) CodeBERTrand and (c) CodeBERTPT+TAPT+FT with the AggMax aggregation technique and the Neural Classifier.

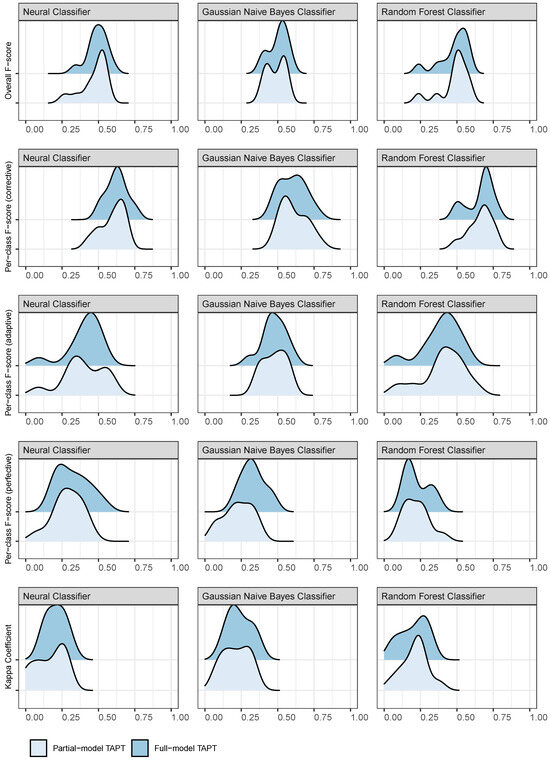

Task-adaptive pre-training can be computationally heavy. Studies have shown that training only part of the language model, leaving some parameters frozen during task adaptation, can still provide results comparable to training the entire model. To assess this for commit intent classification, we analyzed how the two task-adaptive pre-training strategies, full-model and partial-model training, influence commit intent classification performance. To this end, we compared performance under identical experimental settings except for the training strategy. Performance was measured by the overall F-score, the per-class F-score (for the corrective, adaptive, and perfective classes), and the Kappa Coefficient. In our experimental design, partial-model training updated only 31.8% of the model's parameters during task adaptation. As before, the statistical test was chosen according to the data distribution. The Student's paired-samples t-test was used when the normality assumption, assessed by the Shapiro–Wilk test, was met. Otherwise, its non-parametric counterpart, the Wilcoxon signed-rank test, was used. Detailed results of the statistical tests are provided in Appendix D in Table A9, Table A10 and Table A11 for the Neural, Gaussian Naive Bayes, and Random Forest Classifiers, respectively. Figure 5 illustrates the differences between the two training approaches. It shows the performance distributions of commit intent classification models based on CodeBERTPT+TAPT+FT with the AggMin aggregation technique, using full-model and partial-model task adaptation. For the large majority of observations, the results show no statistically significant differences in classification performance between the two training strategies.
More specifically, no statistically significant differences were observed in 95.2% of observations for the overall F-score. The same holds for 100% of observations for the per-class F-score of the corrective class, 95.2% for the adaptive class, and 71.4% for the perfective class, and for 90.5% of observations for the Kappa Coefficient. The overall F-score was statistically significantly higher for full-model training than for partial-model training in only one observation (AggMin⊕Max using the Gaussian Naive Bayes Classifier). The Kappa Coefficient was statistically significantly higher for full-model training in two cases (AggMin⊕Max and AggMin⊕Max⊕Mean using the Gaussian Naive Bayes Classifier). For full-model training, the per-class F-score was statistically significantly higher in one case for the adaptive class and in six cases for the perfective class. These findings suggest that task-adaptive pre-training can be conducted effectively with only partial-model training. This reduces the computational resources needed without significantly sacrificing classification performance, which is especially relevant for environments with limited computational resources. A possible explanation is the similarity between the pre-training and target tasks. The representations learned during pre-training may already be well-suited to the target task, so adjusting only part of the weights is almost as effective as adapting all of them.
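The reported percentages are consistent with 21 paired comparisons per metric, i.e., seven aggregation techniques times three classifiers. That denominator is our inference from the section and is not stated explicitly. Under this assumption, the counts of significant differences given above map to the reported percentages as follows. All names in the snippet are illustrative.

```python
N_TECHNIQUES = 7   # AggMin, AggMax, AggMean and their four ⊕-combinations
N_CLASSIFIERS = 3  # Neural, Gaussian Naive Bayes, Random Forest
N_OBS = N_TECHNIQUES * N_CLASSIFIERS  # assumed 21 comparisons per metric

# Number of statistically significant differences per metric, as reported above.
SIGNIFICANT = {
    "F-score": 1,
    "F-score (corrective)": 0,
    "F-score (adaptive)": 1,
    "F-score (perfective)": 6,
    "Kappa Coefficient": 2,
}


def share_not_significant(n_significant, n_obs=N_OBS):
    """Percentage of comparisons without a significant difference."""
    return 100 * (n_obs - n_significant) / n_obs


for metric, k in SIGNIFICANT.items():
    print(f"{metric}: {share_not_significant(k):.1f}%")
# F-score: 95.2%
# F-score (corrective): 100.0%
# F-score (adaptive): 95.2%
# F-score (perfective): 71.4%
# Kappa Coefficient: 90.5%
```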

Figure 5.

Density plots of classification performance, measured by the overall F-score, per-class F-score (for the corrective, adaptive, and perfective classes), and Kappa Coefficient, of commit intent classification models based on CodeBERTPT+TAPT+FT with the AggMin aggregation technique using full-model and partial-model task-adaptive pre-training strategies.

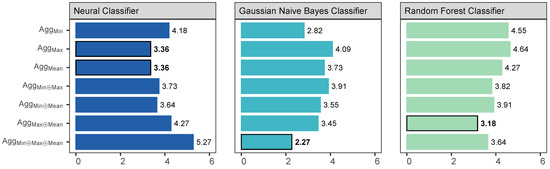

Lastly, we evaluated the different aggregation techniques used within the proposed method. Separately for each classifier, each aggregation technique was assigned a rank from one to seven in every fold, based on its F-score with CodeBERTPT+TAPT+FT. Rank one represents the highest performance in a fold and rank seven the lowest. To gain deeper insight into the effectiveness of the aggregation techniques, we averaged these per-fold ranks across all folds. The resulting average ranks, presented in Figure 6, provide a rough estimate of each aggregation technique's overall performance. A lower rank signifies better average performance across folds for a specific classifier. The previous analyses already showed that performance varies across aggregation techniques. The ranks presented here further show that no single technique consistently outperforms the others across all three classifiers. For the Neural Classifier, the best-performing techniques are AggMax and AggMean. The top technique for the Gaussian Naive Bayes Classifier is AggMin⊕Max⊕Mean. For the Random Forest Classifier, AggMax⊕Mean performs best. The techniques that perform best with the Neural Classifier appear to be the least effective for the Gaussian Naive Bayes Classifier, and vice versa. These observations suggest that certain combinations of classifiers and aggregation techniques may yield better results for commit intent classification. A systematic exploration of these combinations is, however, beyond the scope of this paper. Furthermore, our findings underscore a potential need for task-specific aggregation techniques that address the target task more effectively. Such techniques could, for instance, consider relationships between changes, as opposed to the simple dimension-wise aggregation approaches employed in this study.
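The rank-averaging procedure can be sketched as below. This is a minimal sketch assuming SciPy's `rankdata`. It also assumes `rankdata`'s default handling of ties, where tied techniques share the average of their ranks; the section does not specify how ties were treated. The function name, technique subset, and F-scores in the example are hypothetical.

```python
import numpy as np
from scipy.stats import rankdata


def average_ranks(fold_scores):
    """Average per-fold ranks of aggregation techniques for one classifier.

    `fold_scores` maps each technique name to its F-scores, one per fold,
    listed in the same fold order for every technique.
    """
    names = list(fold_scores)
    scores = np.array([fold_scores[name] for name in names])  # (techniques, folds)
    # Rank techniques within each fold (column); negating the scores gives
    # rank 1 to the highest F-score.
    ranks = rankdata(-scores, axis=0)
    return dict(zip(names, ranks.mean(axis=1)))


example = {
    "AggMax": [0.50, 0.60, 0.55],
    "AggMean": [0.40, 0.70, 0.50],
    "AggMin": [0.30, 0.20, 0.45],
}
print(average_ranks(example))
```

Averaging ranks instead of raw F-scores keeps one unusually easy or hard fold from dominating the comparison.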

Figure 6.

Average ranks of aggregation techniques across folds using Neural, Gaussian Naive Bayes, and Random Forest Classifiers.
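The seven aggregation techniques ranked in Figure 6 combine three dimension-wise pooling operations over the embeddings of a commit's fine-grained changes. The sketch below assumes that ⊕ denotes vector concatenation and writes it as `+` in technique names. The function and variable names are hypothetical. In the proposed method, the embeddings would come from the fine-tuned code model rather than the toy values used here.

```python
from itertools import combinations

import numpy as np

POOLINGS = ("Min", "Max", "Mean")
# All non-empty combinations of the three poolings: seven techniques in total.
TECHNIQUES = ["+".join(c) for r in (1, 2, 3) for c in combinations(POOLINGS, r)]


def aggregate(change_embeddings, technique):
    """Collapse an (n_changes, dim) array of change embeddings into one commit vector.

    Each pooling is applied dimension-wise across the changes; a combined
    technique such as "Min+Max" concatenates the pooled vectors in order.
    """
    emb = np.asarray(change_embeddings, dtype=float)
    pooled = {"Min": emb.min(axis=0), "Max": emb.max(axis=0), "Mean": emb.mean(axis=0)}
    return np.concatenate([pooled[name] for name in technique.split("+")])


# Two hypothetical 2-dimensional change embeddings belonging to one commit.
changes = [[1.0, 4.0], [3.0, 2.0]]
print(TECHNIQUES)
print(aggregate(changes, "Min+Max"))  # [1. 2. 3. 4.]
```

Concatenation multiplies the commit vector's dimensionality by the number of combined poolings. This is one reason the best technique may depend on the downstream classifier.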

6.2. RQ2 How Does the Proposed Method Compare to the SOTA When Used for Commit Intent Classification?

We benchmarked classification models using the proposed semantic commit feature extraction method against the current SOTA. To build these models, we employed the best-performing aggregation technique for each classifier, as identified in the RQ1 analysis. Specifically, for the Neural Classifier, we used CodeBERTPT+TAPT+FT with AggMax. For Gaussian Naive Bayes, we combined CodeBERTPT+TAPT+FT with AggMin⊕Max⊕Mean, and for Random Forest, we used CodeBERTPT+TAPT+FT with AggMax⊕Mean. Table 5 presents the average classification performance, measured by F-score and Kappa Coefficient, of these models and of the SOTA-based models. It also reports a comparative analysis of the performance differences for each paired comparison. As in RQ1, either the Student's paired-samples t-test or the Wilcoxon signed-rank test was used to evaluate performance differences, depending on the result of the Shapiro–Wilk test. Across all comparisons, the classification models based on our proposed method performed comparably to, and in several instances better than, the SOTA-based models. In the majority of comparisons, the proposed method achieved higher classification performance than the SOTA. Notably, in 50.0% of these comparisons, the difference in favor of the proposed method was statistically significant. At the same time, in no comparison did a SOTA-based classification model statistically significantly outperform a model based on the proposed method. For instance, with the Gaussian Naive Bayes Classifier, the models based on the proposed method outperform all SOTA-based models. The difference is statistically significant compared to classification models based on features proposed by Hönel et al. [7], Ghadhab et al. [14], and Mariano et al. [15]. Compared to the model based on features proposed by Levin and Yehudai [22], the proposed method yielded a higher average F-score and Kappa Coefficient, but the differences were not statistically significant. These results demonstrate the effectiveness of the proposed method, even though it represents the first attempt to apply semantic source code embeddings for commit intent classification. Further research is necessary to build upon these initial findings. Still, the work presented in this paper lays a strong foundation, with performance that is not only comparable but, in many cases, superior to the existing SOTA.

Table 5.

Comparative analysis of the classification performance, measured by F-score and Kappa Coefficient, of commit intent classification models based on the proposed method in comparison to the SOTA.

7. Threats to Validity

7.1. Threats to Construct Validity

To address the threat to construct validity related to inadequately defined concepts, we provide precise definitions of software change intents. These definitions refer to the existing, widely accepted, and most frequently used intent-based categorization scheme of software maintenance activities, introduced by Swanson [20]. The selected categorization scheme and the presented definitions align with those used to prepare the labeled dataset on which our work is built. Our work has a construct representation bias regarding how software changes are represented. When embedding commits with a fine-tuned task-adapted pre-trained transformer-based code model, only modified code lines are taken into account. This might neglect the surrounding context in which the changes are made. Examples include the project's domain, the part of the modified codebase (i.e., production or test code), and the code context of the modified lines. To address the threat of construct under-representation related to mono-operation bias, we operationalize classification model performance with several measures, including the F-score and Cohen's Kappa Coefficient. Different metrics capture different aspects of classification performance. Using several of them avoids a narrow interpretation of the models' performance and yields a more comprehensive evaluation. However, we do not consider metrics that address the cost of training and using the model, e.g., model complexity, time complexity of training, and inference time. These may present intriguing directions for future studies.
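For reference, both measures can be computed from the true and predicted intent labels as sketched below. The section does not state how the multi-class F-score is averaged, so the sketch assumes an unweighted (macro) average over classes. The function names and labels are hypothetical. The example also shows why a majority class classifier obtains a Kappa Coefficient of zero: its observed agreement equals the agreement expected by chance.

```python
from collections import Counter


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores over all classes that occur."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    f1_scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        denom = 2 * tp + fp + fn
        f1_scores.append(2 * tp / denom if denom else 0.0)
    return sum(f1_scores) / len(f1_scores)


def cohen_kappa(y_true, y_pred):
    """Cohen's Kappa: agreement corrected for the agreement expected by chance."""
    n = len(y_true)
    p_observed = sum(t == p for t, p in zip(y_true, y_pred)) / n
    true_counts, pred_counts = Counter(y_true), Counter(y_pred)
    p_expected = sum(true_counts[c] * pred_counts[c] for c in true_counts) / n**2
    if p_expected == 1:
        raise ValueError("Kappa is undefined when chance agreement is 1")
    return (p_observed - p_expected) / (1 - p_expected)


y_true = ["corrective"] * 5 + ["adaptive"] * 3 + ["perfective"] * 2
majority = ["corrective"] * len(y_true)
print(macro_f1(y_true, majority), cohen_kappa(y_true, majority))
```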

7.2. Threats to Internal Validity

There is a threat to internal validity related to anomalies in instrumentation, i.e., tools and procedures. To ensure reliability and consistency in the data preparation procedure, data are gathered using the GitHub REST API, GitPython, and codeprep, along with our custom implementations when needed. We prefer existing tools because popular, actively maintained tools used by prior studies give us high confidence in the correctness of the retrieved data. We automate the data preparation procedure to mitigate inconsistencies due to human error. We manually verified that the data preparation procedure is performed correctly. However, only one tool is used for each task, so tool-related errors in the procedure remain possible. There is also a threat of overfitting and underfitting the classification models, and k-fold cross-validation is performed to help identify these two issues. Another threat to internal validity is data leakage. In a cross-project setting, we use project-based train–test data splits to mitigate this threat. Because all data from a specific project are either in the training or the test set, but not both, a model does not learn about the test data during training.
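Project-based splitting can be expressed with scikit-learn's `GroupKFold`, using each commit's project as its group. This ensures that no project contributes commits to both the training and the test fold. This is a sketch of the idea rather than the authors' pipeline. The function name, the number of splits, and the project labels are hypothetical.

```python
import numpy as np
from sklearn.model_selection import GroupKFold


def project_based_folds(projects, n_splits):
    """Yield (train_idx, test_idx) pairs in which no project spans both sets."""
    projects = np.asarray(projects)
    placeholder_X = np.zeros((len(projects), 1))  # features are not needed to split
    splitter = GroupKFold(n_splits=n_splits)
    for train_idx, test_idx in splitter.split(placeholder_X, groups=projects):
        yield train_idx, test_idx


# Hypothetical project label for each commit.
commit_projects = ["p1", "p1", "p2", "p2", "p2", "p3", "p4", "p4"]
for train_idx, test_idx in project_based_folds(commit_projects, n_splits=4):
    train_projects = {commit_projects[i] for i in train_idx}
    test_projects = {commit_projects[i] for i in test_idx}
    assert train_projects.isdisjoint(test_projects)
    print(sorted(test_projects))
```

Each commit appears in exactly one test fold, so every project is evaluated once as unseen data.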

7.3. Threats to External Validity