Adaptive Graph Attention and Long Short-Term Memory-Based Networks for Traffic Prediction

Abstract

1. Introduction

- Generally, the prediction accuracy decreases as the prediction horizon increases, with this trend noted in almost all prediction models.

- Super parameters configured for a specific model may have significant impacts on its prediction performance. Researchers often need to spend a great deal of time and effort to tune model parameters and obtain the optimal modeling results based on certain expert experience.

- Furthermore, when varying the spatial and temporal dependencies between one traffic network and another, a well-tuned model with specific parameters may have different performance for a new dataset application scenario.

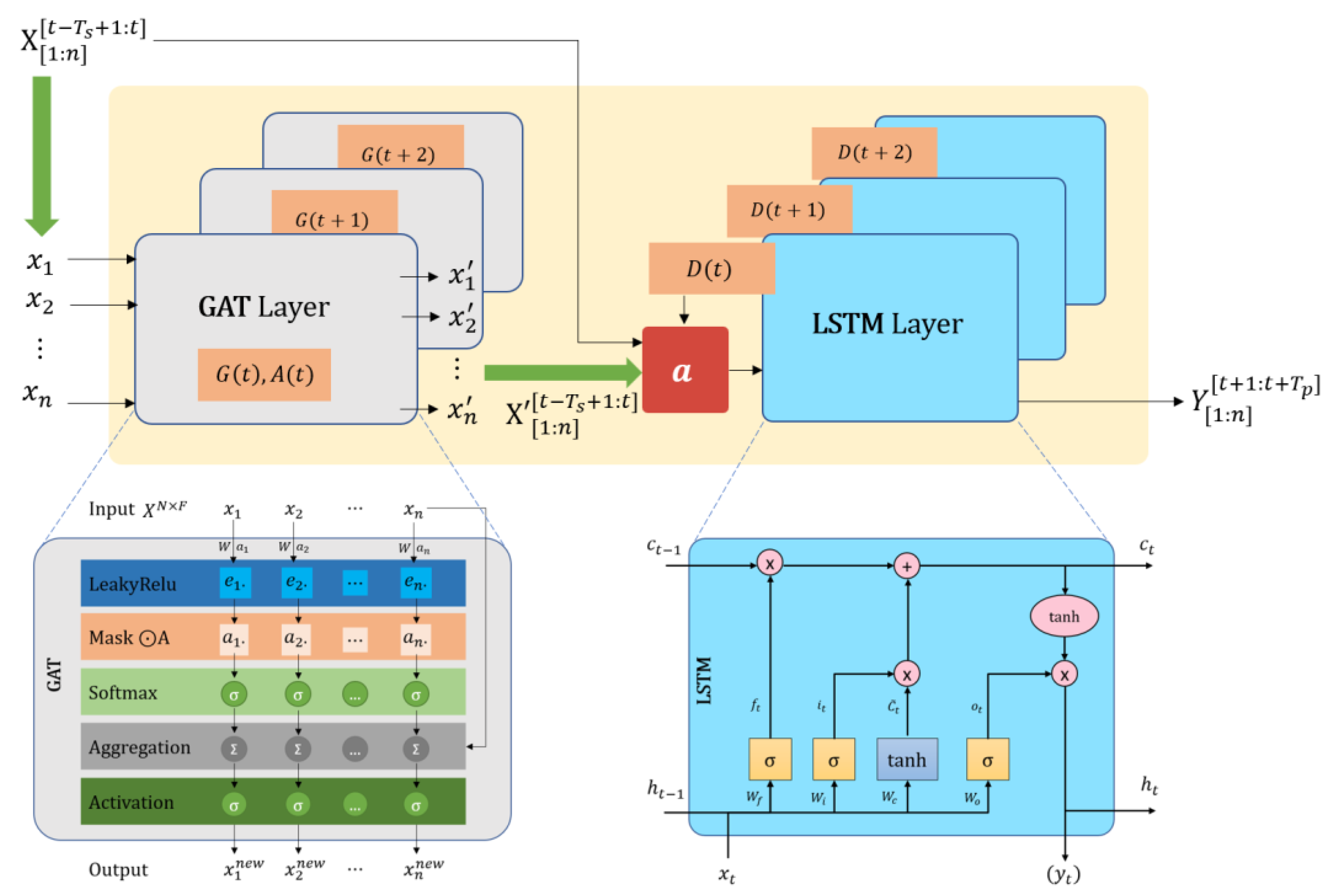

- We propose a novel GAT-LSTM architecture that is applicable to both global and local graphs, according to the road network and prediction tasks. In this architecture, an extra input called Dayfeature is designed to include external factors affecting traffic conditions, such as extreme weather, public holidays, and other special uncertainties that may arise over time, which greatly improves the prediction accuracy.

- The GAT network and LSTM network are not simply connected in series within the model. An attention block is designed to learn the weights of the GAT network, original traffic data, and Dayfeature before passing them into the LSTM network. These weights vary from one node to another. Thus, within the global GAT-LSTM model, the GAT network and LSTM network may automatically have different combinations to adaptively predict traffic conditions for each local sensor. This design also allows the model to be easily applied to other traffic networks using new datasets.

- The proposed model achieved state-of-the-art performance in traffic flow prediction using the PeMS08 open dataset (also known as PeMSD8 in some literature). In addition, weaker nodes within the traffic network can be detected, and local adaption algorithms can be designed to further improve the local performance of the model.

2. Methods

2.1. Problem Description

2.1.1. Traffic Network Graphs

2.1.2. Traffic States

2.1.3. Temporal and Other External Features

2.1.4. Problem

2.2. GAT-LSTM Model

3. Experiments

3.1. Dataset and Baselines

3.2. Evaluation Metrics

- Mean absolute error (MAE):

- Rooted mean square error (RMSE):

- Mean absolute percentage error (MAPE):

- However, the traffic state (flow, speed, or occupancy) can be equal to zero. To include these zero values, we introduce another metric to include all traffic states, namely the symmetric mean absolute percentage error (SMAPE):

3.3. Experimental Design

3.4. Results

3.4.1. Overall Performance on the Traffic Network

3.4.2. Node-Wise Performance

3.4.3. Adaptive Attentions

4. Discussion

4.1. Impact of the Historical Data Time Window

4.2. Weak Nodes Detection for Further Optimization

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

References

- Lee, S.; Fambro, D.B. Application of subset autoregressive integrated moving average model for short-term freeway traffic volume forecasting. Transp. Res. Rec. 1999, 1678, 179–188. [Google Scholar] [CrossRef]

- Dervoort, M.; Dougherty, M.; Watson, S. Combining kohonen maps with ARIMA time series models to forecast traffic flow. Transp. Res. Part C Emerg. Technol. 1996, 4, 307–318. [Google Scholar] [CrossRef]

- Williams, B.M.; Asce, M.; Hoel, L.A.; Asce, F. Modeling and forecasting vehicular traffic flow as a seasonal ARIMA process: Theoretical basis and empirical results. J. Transp. Eng. 2003, 129, 664–672. [Google Scholar] [CrossRef]

- Wu, C.-H.; Ho, M.-J.; Lee, D.T. Travel-time prediction with support vector regression. IEEE Trans. Intell. Transp. Syst. 2004, 5, 276–281. [Google Scholar] [CrossRef]

- Castro-Neto, M.; Jeong, Y.; Jeong, M.; Han, L.D. Online-SVR for short-term traffic flow prediction under typical and atypical traffic conditions. Expert Syst. Appl. 2009, 36, 6164–6173. [Google Scholar] [CrossRef]

- Zhang, L.; Liu, Q.; Yang, W.; Wei, N.; Dong, D. An improved K-Nearest Neighbor model for short-term traffic flow prediction. Procedia Soc. Behav. Sci. 2013, 96, 653–662. [Google Scholar] [CrossRef]

- Mallek, A.; Klosa, D.; Buskens, C. Enhanced K-Nearest Neighbor model for multi-steps traffic flow forecast in urban roads. In Proceedings of the 2022 IEEE International Smart Cities Conference (ISC2), Pafos, Cyprus, 26–29 September 2022; pp. 1–5. [Google Scholar] [CrossRef]

- Lv, Y.; Duan, Y.; Kang, W.; Li, Z.; Wang, F. Traffic flow prediction with big data: A deep learning approach. IEEE Trans. Intell. Transp. Syst. 2015, 16, 865–873. [Google Scholar] [CrossRef]

- Ma, X.; Tao, Z.; Wang, Y.; Yu, H.; Wang, Y. Long Short-Term Memory neural network for traffic speed prediction using remote microwave sensor data. Transp. Res. Part C Emerg. Technol. 2015, 54, 187–197. [Google Scholar] [CrossRef]

- Mou, L.; Zhao, P.; Xie, H.; Chen, Y. T-LSTM: A Long Short-Term Memory neural network enhanced by temporal information for traffic flow prediction. Access 2019, 7, 98053–98060. [Google Scholar] [CrossRef]

- Karimzadeh, M.; Aebi, R.; Souza, A.M.d.; Zhao, Z.; Braun, T.; Sargento, S.; Villas, L. Reinforcement learning-designed LSTM for trajectory and traffic flow prediction. In Proceedings of the 2021 IEEE Wireless Communications and Networking Conference (WCNC), Nanjing, China, 29 March–1 April 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Zhuang, W.; Cao, Y. Short-term traffic flow prediction based on a K-Nearest Neighbor and bidirectional Long Short-Term Memory model. Appl. Sci. 2023, 13, 2681. [Google Scholar] [CrossRef]

- Li, Z.; Xiong, G.; Chen, Y.; Lv, Y.; Hu, B.; Zhu, F.; Wang, F. A hybrid deep learning approach with GCN and LSTM for traffic flow prediction. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 1929–1933. [Google Scholar] [CrossRef]

- Han, X.; Gong, S. LST-GCN: Long Short-Term Memory embedded graph convolution network for traffic flow forecasting. Electronics 2022, 11, 2230. [Google Scholar] [CrossRef]

- Kumar, R.; Mendes Moreira, J.; Chandra, J. DyGCN-LSTM: A dynamic GCN-LSTM based encoder-decoder framework for multistep traffic prediction. Appl. Intell. 2023, 53, 25388–25411. [Google Scholar] [CrossRef]

- Wu, T.; Chen, F.; Wan, Y. Graph attention LSTM network: A new model for traffic flow forecasting. In Proceedings of the 5th International Conference on Information Science and Control Engineering (ICISCE), Zhengzhou, China, 20–22 July 2018; pp. 241–245. [Google Scholar] [CrossRef]

- Si, C.; Chen, W.; Wang, W.; Wang, L.; Tan, T. An attention enhanced graph convolutional LSTM network for Skeleton-based action recognition. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1227–1236. [Google Scholar] [CrossRef]

- Ma, X.; Dai, Z.; He, Z.; Ma, J.; Wang, Y.; Wang, Y. Learning traffic as images: A deep convolutional neural network for large-scale transportation network speed prediction. Sensors 2017, 17, 818. [Google Scholar] [CrossRef]

- Karimzadeh, M.; Esposito, A.; Zhao, Z.; Braun, T.; Sargento, S. RL-CNN: Reinforcement learning-designed convolutional neural network for urban traffic flow estimation. In Proceedings of the 2021 International Wireless Communications and Mobile Computing (IWCMC), Harbin City, China, 28 June–2 July 2021; pp. 29–34. [Google Scholar] [CrossRef]

- Méndez, M.; Merayo, M.G.; Núñez, M. Long-term traffic flow forecasting using a hybrid CNN-BiLSTM model. Eng. Appl. Artif. Intell. 2023, 121, 106041. [Google Scholar] [CrossRef]

- Wu, Y.; Tan, H. Short-term traffic flow forecasting with spatial-temporal correlation in a hybrid deep learning framework. arXiv 2016, arXiv:1612.01022. [Google Scholar]

- Li, Y.; Yu, R.; Shahabi, C.; Liu, Y. Diffusion convolutional recurrent neural network: Data-driven traffic forecasting. arXiv 2017, arXiv:1707.01926. [Google Scholar]

- Zhao, L.; Song, Y.; Zhang, C.; Liu, Y.; Wang, P.; Lin, T.; Deng, M.; Li, H. T-GCN: A temporal graph convolutional network for traffic prediction. IEEE Trans. Intell. Transp. Syst. 2020, 21, 3848–3858. [Google Scholar] [CrossRef]

- Yu, B.; Yin, H.; Zhu, Z. Spatio-temporal graph convolutional networks: A deep learning framework for traffic forecasting. arXiv 2017, arXiv:1709.04875. [Google Scholar]

- Pan, C.; Zhu, J.; Kong, Z.; Shi, H.; Yang, W. DC-STGCN: Dual-channel based graph convolutional networks for network traffic forecasting. Electronics 2021, 10, 1014. [Google Scholar] [CrossRef]

- Song, C.; Lin, Y.; Guo, S.; Wan, H. Spatial-temporal synchronous graph convolutional networks: A new framework for spatial-temporal network data forecasting. Proc. AAAI Conf. Artif. Intell. 2020, 34, 914–921. [Google Scholar] [CrossRef]

- Wu, Z.; Pan, S.; Long, G.; Jiang, J.; Zhang, C. Graph wavenet for deep spatial-temporal graph modeling. arXiv 2019, arXiv:1906.00121. [Google Scholar]

- Guo, S.; Lin, Y.; Feng, N.; Song, C.; Wan, H. Attention based spatial-temporal graph convolutional networks for traffic flow forecasting. Proc. AAAI Conf. Artif. Intell. 2019, 33, 922–929. [Google Scholar] [CrossRef]

- Zheng, C.; Fan, X.; Wang, C.; Qi, J. GMAN: A graph multi-attention network for traffic prediction. Proc. AAAI Conf. Artif. Intell. 2020, 34, 1234–1241. [Google Scholar] [CrossRef]

- Wang, Z.; Ding, D.; Liang, X. TYRE: A dynamic graph model for traffic prediction. Expert Syst. Appl. 2023, 215, 119311. [Google Scholar] [CrossRef]

- Liu, S.; Feng, X.; Ren, Y.; Jiang, H.; Yu, H. DCENet: A dynamic correlation evolve network for short-term traffic prediction. Phys. A 2023, 614, 128525. [Google Scholar] [CrossRef]

- Yin, X.; Zhang, W.; Jing, X. Static-dynamic collaborative graph convolutional network with meta-learning for node-level traffic flow prediction. Expert Syst. Appl. 2023, 227, 120333. [Google Scholar] [CrossRef]

- Zhang, Z.; Li, Y.; Song, H.; Dong, H. Multiple dynamic graph based traffic speed prediction method. Neurocomputing 2021, 461, 109–117. [Google Scholar] [CrossRef]

- Lee, K.; Rhee, W. DDP-GCN: Multi-graph convolutional network for spatiotemporal traffic forecasting. Transp. Res. Part C Emerg. Technol. 2022, 134, 103466. [Google Scholar] [CrossRef]

- Ni, Q.; Zhang, M. STGMN: A gated multi-graph convolutional network framework for traffic flow prediction. Appl. Intell. 2022, 52, 15026–15039. [Google Scholar] [CrossRef]

- Li, H.; Yang, S.; Song, Y.; Luo, Y.; Li, J.; Zhou, T. Spatial dynamic graph convolutional network for traffic flow forecasting. Appl. Intell. 2022, 53, 14986–14998. [Google Scholar] [CrossRef]

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903. [Google Scholar]

| Metrics | MAE | RMSE | MAPE (%) | SMAPE (%) | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Models | 15 min | 30 min | 60 min | 15 min | 30 min | 60 min | 15 min | 30 min | 60 min | 15 min | 30 min | 60 min | |

| ASTGCN * | 18.61 | 28.16 | 13.08 | -- | |||||||||

| STSGCN * | 17.13 | 26.80 | 10.96 | -- | |||||||||

| LST-GCN ** | 17.93 | 27.47 | 12.81 | -- | |||||||||

| LSTM | 15.96 | 17.87 | 21.08 | 22.34 | 24.90 | 29.08 | 10.39 | 11.55 | 13.60 | 10.03 | 11.04 | 12.76 | |

| LSTM_D | 17.95 | 18.38 | 18.99 | 24.96 | 25.60 | 26.34 | 11.73 | 11.90 | 12.26 | 11.21 | 11.36 | 11.67 | |

| GAT-LSTM_D | 16.23 | 17.08 | 18.27 | 22.06 | 23.20 | 24.79 | 11.02 | 11.46 | 12.05 | 10.56 | 10.89 | 11.44 | |

| GAT-LSTM_D_a | 15.32 | 16.24 | 17.16 | 21.05 | 22.69 | 24.21 | 10.39 | 11.02 | 11.51 | 10.39 | 10.56 | 10.93 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, T.; Boada, M.J.L.; Boada, B.L. Adaptive Graph Attention and Long Short-Term Memory-Based Networks for Traffic Prediction. Mathematics 2024, 12, 255. https://doi.org/10.3390/math12020255

Zhu T, Boada MJL, Boada BL. Adaptive Graph Attention and Long Short-Term Memory-Based Networks for Traffic Prediction. Mathematics. 2024; 12(2):255. https://doi.org/10.3390/math12020255

Chicago/Turabian StyleZhu, Taomei, Maria Jesus Lopez Boada, and Beatriz Lopez Boada. 2024. "Adaptive Graph Attention and Long Short-Term Memory-Based Networks for Traffic Prediction" Mathematics 12, no. 2: 255. https://doi.org/10.3390/math12020255

APA StyleZhu, T., Boada, M. J. L., & Boada, B. L. (2024). Adaptive Graph Attention and Long Short-Term Memory-Based Networks for Traffic Prediction. Mathematics, 12(2), 255. https://doi.org/10.3390/math12020255