Abstract

Two problems concerning detecting change-points in linear regression models are considered. One involves discontinuous jumps in a regression model and the other involves regression lines connected at unknown places. Significant literature has been developed for estimating piecewise regression models because of their broad range of applications. The segmented (SEG) regression method with an R package has been employed by many researchers since it is easy to use, converges fast, and produces sufficient estimates. The SEG method allows for multiple change-points but is restricted to continuous models. Such a restriction really limits the practical applications of SEG when it comes to discontinuous jumps encountered in real change-point problems very often. In this paper, we propose a piecewise regression model, allowing for discontinuous jumps, connected lines, or the occurrences of jumps and connected change-points in a single model. The proposed segmentation approach can derive the estimates of jump points, connected change-points, and regression parameters simultaneously, allowing for multiple change-points. The initializations of the proposed algorithm and the decision on the number of segments are discussed. Experimental results and comparisons demonstrate the effectiveness and superiority of the proposed method. Several real examples from diverse areas illustrate the practicability of the new method.

Keywords:

piecewise regression model; change-point; discontinuous jump; segmented regressions; break-point; threshold MSC:

62-08

1. Introduction

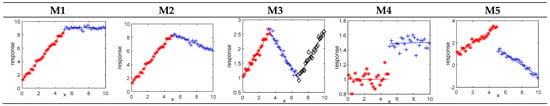

Piecewise regression models have been widely used in many practical situations where the relationship between the response and explanatory variables changes abruptly at certain points. These places are called change-points, break-points, or thresholds. Figure 1 illustrates some possible piecewise regressions between the response and generic covariate x.

Figure 1.

Some possible continuous or discontinuous broken-line regressions with one or two break- points.

In analyzing regressions with change-points, spline methods and piecewise regressions have been commonly adopted in the literature. Spline regressions are mainly used to approximate smooth regression functions. A spline function (q-spline) is a piecewise polynomial of degree q with q − 1 continuous derivatives at the change-point (c.f. [1]). The change-points are called knots in spline regressions. Seber [1] commented that regression splines are appropriate when one aims at finding the best fitted model to utilize in prediction or in finding extreme values of the regression curve. More applications and discussion on spline methods can be found in references [1,2,3]. The emphasis of regression spline is on modelling smooth regression relationships rather than illustrating structural changes in the regression relations (c.f. [1]). Thus, the regression coefficients resulted from splines may not measure the effect on the response and the estimated parameters are not explicitly interpretable (c.f. [4]).

By contrast, piecewise regression analyses focus on addressing the tendency relationships between response and explanatory variables, as indicated by abrupt changes between well-mannered regimes [1]. Therefore, locating change-points and obtaining adequate and meaningful estimates of parameters are the primary purpose for fitting piecewise regression models. The estimations for change-points and regression coefficients are important in piecewise regressions because they are often the sign for knowing when and how the trend of data changes. This information is critical to decision making. Change-point detection has been identified as a challenging problem for modern, big data applications [5].

Multiphase models provide a simple and interpretable way to deal with non-linear problems and provide meaningful regression parameters. Therefore, researchers have advocated piecewise regression models as better alternatives to solve regression problems which involve unknow change-points. This paper aims at dealing with regression problems involving abrupt changes, with continuous or discontinuous jumps at unknown places. This approach involves piecewise regression analyses.

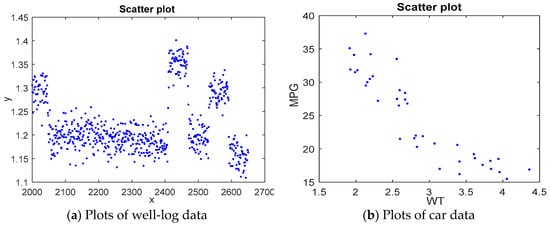

Change-point regression problems occur in many fields such as molecular biology, medicine, climatology, engineering, and econometrics [4,6,7,8]. In medical science, segmented regression models are employed to see whether the effect of some risk factor on the response alters abruptly. For example, the risk of cancer is low for young people, but the risk rises quickly for people of age beyond a certain age threshold [9]. In the oil and gas industry, sequential well-log data often appear as abrupt jumps at the transitions of rock strata. Each change-point in the well-log sequence indicates that a new rock type is encountered. Thus, change-point detection is crucial in oil-drilling. An example of well-log data from [10] was analyzed via many different change-point methods [5,11,12,13]. Figure 2a displays data plots of the indexed measurements from 2000 to 2650 in the well-log data set and discloses five discontinuous jumps in the data series. Moreover, piecewise regressions are also serviceable for clustering objects into groups based on a certain explanatory variable. For instance, one may want to classify cars based on the relationship between fuel efficiency (measured by miles per gallon, denoted by MPG), weights (WTs), and horsepower (HP). The graph of a data set taken from [8] shows a discontinuous and nonlinear relationship between MPG and WT (see Figure 2b).

Figure 2.

Scatter plots of well-log data and the car data with MPG vs. WT.

This paper is presented as follows. Section 2 presents the literature review. Section 3 describes Muggeo’s segmented method and proposes an advanced segmentation approach for piecewise regression models free of the continuity restriction. The IJD method is introduced for choosing proper initial values. Section 4 provides experimental examples and comparisons to demonstrate the effectiveness and superiority of the proposed method. Severa real data sets are used to illustrate the practicability of the new approach. Finally, Section 5 provides concluding remarks.

2. Literature Review

Change-point problems, first introduced in the quality control context, have developed into a fundamental issues in many areas. Numerous methodologies have been introduced for solving change-point problems. Maximum likelihood approaches, Bayesian analyses, grid-searching approaches, quantile regression, and non-parametric methods are among the approaches which have been employed to resolve change-point problems. A major difficulty in the estimations for change-point regression models is the non-smoothness of the likelihood function with respect to the change-point parameter. Many authors have tried to bypass this problem through various smooth transitions between two adjoining segments. Muggeo [4] presented a segmented (SEG) regression method thorough a linear transition to estimate change-points and the regression model. The SEG method is available for simultaneous inference on change-points and regression coefficients, but it is only feasible for connected regression models. Lu and Chang [12] (2018) adopted mixture modelling approaches to bypass the non-smooth difficulty and created two algorithms, called EM-BP and FCM-BP, to obtain estimates. These two algorithms can produce maximum likelihood estimates for change-points and regression coefficients simultaneously, but they are computationally intensive in cases of multiple change-points or large data sets due to the calculations required for each possible collection of change-points. Bayesian approaches are also considered for change-point regressions [14,15]. One concern with Bayesian analysis is the computational effort, due to a large number of iterations required in Markov chain Monte Carlo simulations.

Nonparametric methods are needed when data cannot be well approximated through parametric distributions. An efficient nonparametric maximum likelihood approach was proposed via dynamic programming (DP) [16]. Garreau and Arlot [17] proposed a nonparametric kernel change-point algorithm (KCP) to locate CPs through model selection associated with a penalized kernel criterion. Thus, the performance of KCP depends on a suitable penalty. Moreover, Frick et al. [18] introduced a simultaneous multiscale change-point estimator (SMUCE) method which does not only give the estimates of the change-point but also the confidence intervals. Pein et al. [19] further advanced SMUCE to be used for heterogeneous models. Moreover, some authors proposed a wild binary segmentation (WBS) method for improving the binary segmentation (BS) approach [20,21]. These two methods assume that regression errors are normally distributed; hence, they are not robust to atypical data. Some researchers focused on the issue of computational efficiency. Haynes et al. [22] presented an efficient algorithm to find a suitable penalty choice so that the computational cost can be reduced. Recently, the resistance of algorithms in the presence of abnormal observations has been studied [23,24,25,26]. A common aspect of these methods is the computational effort. For a review of more research on change-point issues, we refer to articles [27] and the references therein.

There are often some restrictions on the aforementioned methods. Muggeo [4] proposed a segmented regression method by using Taylor expansions to deal with change-point regressions. The segmented method is allowable for multiple change-points but restricted to continuous regression lines. Muggeo’s segmented (SEG) method is easy to implement, converges fast, and produces adequate estimators. Particularly, Muggeo [28] further introduced an R package called “segmented” to facilitate the use of the segmented regression method. The SEG method has been widely employed in a great many applications [28,29,30,31,32], especially in several recent analyses of the COVID-19 epidemic curve [33,34,35]. Because of its easiness and high efficiency, the segmented regression method was further combined with rank-based estimation to make the segmented method more resistant against outliers (c.f. [7,32]). Many researchers [7,12,32,36] recognized that the SEG method works well when the piecewise model is known to be continuous at change-points. But small deviations from continuity in the regression lines may distort the estimations made by SEG method. For real applications, continuity cannot be simply assumed when solving a change-point problem. Continuity may be very probable and reasonable for medical or biological problems, while such a supposition may not be appropriate in economics, finance, and quality control [36]. As shown in Figure 2, continuity is clearly infeasible for both well-log data and car data; hence, the segmented method is not attainable for these real problems. The restriction of continuity really limits the practical applications of the SEG method. This motivates us to develop a new method for change-point regressions, without requiring continuity limitation.

In this paper, we introduce an advanced segmented regression model to solve piecewise regression problems. We propose a segmentation procedure to fit the piecewise regression model, allowing for multiple change-points free of the continuity restriction. We further propose an intuitive jump detection (IJD) method to make the new proposed method more robust to initializations and, hence, increase the precision of estimates. The proposed method is applicable to problems in diverse areas. It is useful for areas where jumps are very likely to occur, such as applications in finance and engineering [37,38,39].

3. Methodology

3.1. Muggeo’s Segmented Regression Method

We first review Muggeo’s [4] segmented (SEG) regression method. Suppose a piecewise regression model of K segments is represented by

where is the response, represents the link function for , is the predictor allowing any variable with a linear parameter, and is the non-linear term for the Z variable with parameter . For simplicity, the predictor will be omitted because there is no difference among regression models in the following. Thus, a segmented regression model can be represented by

where are change-points and for . Muggeo [4] proposed to approximate the non-linear term using a first-order Taylor’s expansion around :

Thus, Equation (1) can be rewritten as

where

Hence, in the (s + 1)th iteration,

We summarize the SEG method as follows.

Given the initial and stop criterion , perform the following steps in the (s + 1)th iteration:

- Calculate and by (3).

- Fit the model given by (2) to derive .

- Update by (4).

- Repeat steps 1–3 until .

3.2. The Proposed DSR Method

3.2.1. The New Defined Piecewise Regression Model

Following the previous illustrations, Muggeo [4] introduced a segmented regression model with K change-points, as given by Equation (1). For simplicity, other predictors are omitted. Next, we propose an approach to deal with the infeasibility of SEG in detecting discontinuous jumps. The new proposed method is attainable for locating both continuous change-points and discontinuous jump points simultaneously.

Since disjoint jump points are exactly the break-points of piecewise regression models, we define a piecewise regression model allowing for the occurrence of jumps at change-points by

where are change-points and Similar to Muggeo’s SEG method, the other predictors are omitted for simplicity. In accordance with the parameterization in (5), and , respectively, represent the intercept and the slope of the leftmost segment line (i.e., for ), is the jump of the regression line at , and is the difference-in-slopes parameter between the (k + 1)th and the kth slopes. Note that when the segmented regression model is continuous at . Since the log-likelihood function for estimating the piecewise model given by (5) is not differentiable, with change-points regarded as parameters, estimations for model (5) cannot be solved analytically but require iterative methods. Referring to [1,4], we approximate the nonlinear term through a first-order Taylor expansion around :

Thus,

At the (s + 1)th iteration, we define

The model given by (6) is suitable for model errors following normal distributions or heavy tailed distributions, such as t or Laplace distributions. As an illustration, we assume the model error follows a normal distribution and fit the model by the least square method as follows:

where the design matrix is

After fitting the regression model by (9), we can derive the updated estimates , , , and . To update the estimates of and , we can use the Equation (8) only because these two parameters merely appear in (8). However, a single equation is not enough for solving two unknowns. This is the difficulty of Muggeo’s segmented regression model in estimating piecewise models with jumps at unknown places. This may be the reason why the SEG method requires the restriction of continuity. To solve this difficulty, we consider updating through Equation (8) as follows:

For updating , one possible solution is based on traditional regression methods. Considering the (k + 1)th sub-model,

Note that, for a simple linear regression model, , the least square estimate for the intercept is . Hence, the intercept of the (k + 1)th sub-model can be estimated by

Thus, the updated equations for s are

where and are the means of response and independent variables, respectively, for data lying in the kth sub-segments. By giving initial values and , iteratively refit the model to derive new estimates until the algorithm converges. The estimates for the regression parameters and the change-points are obtained simultaneously. However, the segmented algorithm is highly dependent on the initial values and hence it is likely to result in unsatisfactory estimates due to improper initial values. Thus, we further propose an intuitive jump detection (IJD) method for selecting suitable initial values of jump points .

3.2.2. The Proposed IJD Method

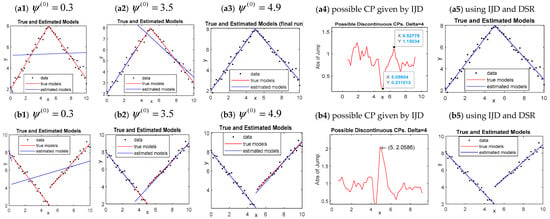

It is a common problem for most iterative approaches that the performance of the algorithm depends on initial values [4,40]. This problem may be stressed in situations where the objective function is not always concave, and hence the algorithm may converge to local maxima with improper initials used. We illustrate the dependence of DSR on initialization by using models (12) and (13),

and

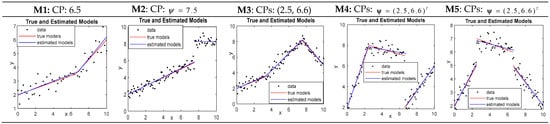

Two samples were generated individually from the above two models with each having 50 points and regression errors following normal distributions, with . From extensive simulation results, we observe that when the initial values of x are far away from the true change-point 5 such as or , DSR fails because data points are too few to obtain estimates of regression parameters. For , the DSR algorithm can converge, but it may result in inadequate estimates, such as the fitted results shown in Figure 3a1,a2,b1,b2; on the other hand, if initial values are close enough to the true value, DSR produces an estimated model of good fit such as the cases depicted in Figure 3a3,b3. These results illustrate that proper initial values are critical to the success of DSR. Next, we describe the IJD method for selecting suitable initial values to be used in DSR.

Figure 3.

DSR depends on initialization using continuous and discontinuous models, respectively, and IJD works for providing suitable initials.

Intuitively, if a regression model exhibits an abrupt jump at some point, the difference between the regression means before and after the jump point should be much larger than the differences between data points lying on the same segment. According to Equation (5), the change-points in a piecewise regression model are defined in terms of the regressor z. Assume that the sample has been arranged in the increasing order of z, with denoting the response of the ith sample point. Given a positive integer , , and , define as

where and . Let and r = [], i.e., the largest integer that is less than or equal to . Sort the s and select the smallest r of them as

Given , define a critical value for ‘jump’ as

where and are the sample mean and sample standard deviation. If

a possible jump is considered to be around data point i.

Since neighboring s are highly correlated, and it is impractical to have two change-points close together, deleting nearby jumps is necessary. Assume each of is no less than , one way of deleting nearby jumps is as follows:

- (i)

- Select the largest on and delete every in such that and .

- (ii)

- Select the second largest on the remaining set of and delete every nearby in the remaining set of as (i).

- (iii)

- Continue the process on the next largest on the remaining set of as (a) and so on until no more nearby jumps in .

Since it is likely to find more jump points than the assumed change-point number, deleting overestimated jumps is required. Simply remove the extra number of those smallest jumps s in the remaining .

Assume is the remaining set; then are the preliminary estimates of change-points with jumps, where

The steps of the IJD method:

- Calculate by (14).

- Calculate by (15).

- Test to find possible jumps by (16).

- Delete nearby jumps.

- Remove extra jumps.

- Calculate and output preliminary estimates of change-points s with jumps by (17).

For easy understanding, we illustrate the IJD method via an example with graphic representation.

The model considered is specified as

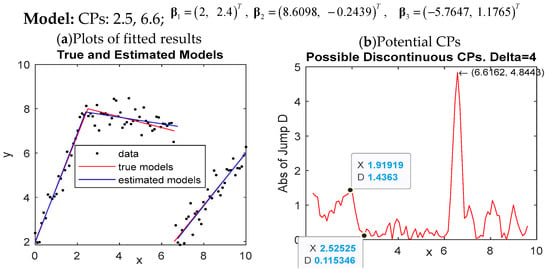

where and follow the normal distribution, with , and the model includes two change-points (one continuous change-point at x = 2.5 and one jump at x = 6.6). Figure 4a displays the scatter plots of one simulated sample of 100 data points (i.e., n = 100), the true model, the estimated model, and the detected possible jump at x = 6.6162. To apply IJD to the simulated sample, we set

Figure 4.

Graphic presentation of the IJD method for detecting possible jump points at the point indicated by an arrow with the corresponding coordinates.

We have and . Therefore, those places with will be considered as possible jumps. Figure 4b shows at x = 6.6162 is the only one larger than the critical value 1.888674; therefore, there is a possible jump at x = 6.6162, which is very close to the true place 6.6. The detected potential jump point is pointed out with black coordinates on the right plot in Figure 4. Except for the extreme large at x = 6.6162, we notice a relatively larger difference in Di for , where Di = 1.4363 (at x = 1.91919) decreases to Di = 0.115346 (at x = 2.52525). Note that the absolute difference in Di is relatively larger than those at the other places. This evidence indicates the existence of a possible connected change-point for . In fact, there is indeed a continuous change-point at x = 2.5. Moreover, there are approximately two smaller protuberances in the interval (7.5, 9.5). Thus, we fitted data with models having K = 1, 2, 3, 4, respectively. It resulted in the model of 2 change-points fitting the data set best for the smallest mean squared errors defined by

where RSS is the residual sum of squared errors and K is the number of change-points. Thus, the proposed IJD method is not only helpful for recognizing possible jumps but also useful for finding potential continuous change-points. Furthermore, the closeness of the detected possible change-points by IJD to the true ones is beneficial for speeding up the convergence of the algorithm and increasing the precisions of the estimations. This example shows the proposed IJD method is useful for finding appropriate initial values to derive sufficient estimates. Summarize the rule for determining the possible jump points and connected change-points as follows: consider those points having , , with a fixed m ( and were defined above in this sub-section) as possible jump points, such as those denoted by black coordinates in Figure 4. Then, find possible connected change-points in the interval where the variations of are relatively larger than those in other places. As noted by Seber [1], piecewise regression models are intended for circumstances where the number of change-points is small. Muggeo [4] also commented that a few change-points (maybe from one to three) are probably enough for handling realistic situations since the meaning of change-points may become doubtful as the number of regimes increases. Therefore, the IJD method should be serviceable for finding potential jump points and connected change-points.

When using IJD, one needs to select the numbers for , , and m, respectively. Usually, the selections depend on the sample size and the deviations of the data set. From our numerous experiments with samples of different sizes generated from various types of models, we found that the setting of , and m = 4 is workable for most data of moderate size. For sample sizes larger than 500, a larger may be needed, and it may be even larger than 15. One may try different K (the number of change-points), using IJD for each individual K to select initial values. For each individual K, the estimates of change-points and regression parameters can be derived by iteratively fitting the model given by Equation (6) until the algorithm converges. Accordingly, the best fitted model can be chosen based on the rules of model selection in regression analyses. The set of and m = 4 had been used with the IJD method in almost all our simulations and real applications, resulting in adequate estimates for all data sets.

Now, we return to Figure 3. The results in Figure 3a4,b4 show that IJD detected a potential connected change-point and a jump near to 5 for models (a) and (b), respectively. Figure 3a5,b5 show that, with the use of the initial values obtained by IJD, DSR resulted in a well-fitted model for both continuous and discontinuous models, respectively. These results support that IJD is helpful for finding proper initial values for DSR to make adequate estimations for piecewise regression models.

For the clarity of our method, we denote for and non-negative integer by

Based on the estimates of (8), we can update and by the following equations:

We denote the proposed segmentation regression method by DSR and summarize the DSR algorithm as follows:

- 0.

- Given dataset , with , integer , , , , , and CP number K:

- 1.

- Employ the IJD method to select initial values with a jump.

- 2.

- If the number of , , is less than K, randomly assign continuous change-pointsat the (s + 1)th iteration.

- 3.

- Calculate and by (7).

- 4.

- Fit the model given by (9) for obtaining and

- 5.

- (i) Alternatively update and by (19), (20) for discontinuous change-points.(ii) Update and by (21) for continuous change–points.

- 6.

- Update by and repeat steps 3–5 until , is fix to be a stop criterion.

3.3. The Determination of the Number of Segments

The decision on the number of subdivisions has been recognized as an important but challenging issue because the commonly used likelihood test statistic does not have an asymptotic chi-squared distribution, due to the failure of Cramér regularity conditions. As noted by Hawkins [41], determining the number of segments is technically difficult because little is known about the asymptotic distribution of the likelihood tests.

Even for the easiest case where regression errors are normally distributed, with unknown the generalized likelihood test statistic for testing the existence of a change-point is far from having an asymptotic distribution; even worse, these statistics may increase without limit. Although several researchers have studied “testing for the existence of change-points” [4,6,42], they confirmed that the statistic test is rather complicated, and it also depends on the alternative hypotheses. Thus, information criterion-based methods have been adopted often in the literature for determining the number of change-points [8,43,44]. Moreover, in practice, several techniques may be useful, such as the smoothed scatter plot suggested by [4] and the intuitive approaches introduced by [41,45].

In this paper, we consider incorporating the proposed IJD with the idea of Venter and Steel [45] and Hawkins [41] to decide the number of segmentations. The rationale of these authors is that, when the real segment borders are fitted, the residual sum of squared errors (RSS) should decrease significantly, but once all real segment borders have been detected, the reduction will be more or less. In fact, it is likely that an overfitted model (using the number of change-points, K, larger than the true one, ) results in larger RSS(K) than or just close to that of the data fitted with the model of the true . In particular, for K much larger than , RSS(K) is usually larger than RSS(), to some extent. (This fact can be seen from Table 1).

Table 1.

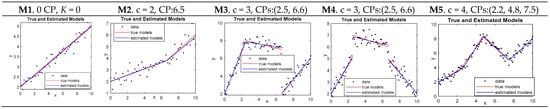

Determine the number of segments for the models shown in Figure 5.

Here, we assess the fit of the segmented regression model based on the changes in RSS and the mean of RSS (MRS). Recall:

where K is the number of segments of the model fitted to data. As commented by Hawkins [41], although this heuristic method does not rely on a substantial foundation, it can serve as a rough and practical tool. In this paper, we propose to use IJD first to find potential change-points and a possible number of change-points, say . Then, fit the data with several values K around (including those possible K larger or smaller than , as many as possible) and choose the most likely number of change-points based on the changes in MRS(K) through the idea of [41,45]. The incorporation of IJD with the changes in MRS makes the estimation for the number of segments easier and more precise. For illustrations, we employ the compounded method to five models shown in Figure 5. The generalized Bayesian information criterion (BIC) by [44] is also considered, in which the smallest BIC indicates the best fitted model. Table 1 presents the results in which those values corresponding to the optimum K are depicted with fluorescent yellow. It can be seen from Table 1 that, for the detection of jump points in the models M3 and M4, the IJD method produces one and two possible jumps for M3 and M4, respectively, which match with the true values, and the detected possible jump points are very close the true ones. On the other hand, as illustrated previously, the absolute difference in the regression mean Di (recall:

shows relatively larger changes around the connected change-points compared with those Dis associated with the points where no change occurs, for instance, the areas around those points with blue coordinates in Figure 5. For example, model M2 actually has a connected change-point at x = 6.5. The plots of Di resulted from IJD show relatively large changes in Di from almost Di = 0 around x = 5 to the highest Di = 0.64791 at x = 6.73469, which indicates a possible continuous change-point in the interval (5, 7) approximately. Similar results can be seen from Figure 5 for models M3 and M5. IJD produces quite large changes in Di around those points with blue coordinates, which are possible continuous change-points and are really close to the true ones. Accordingly, a possible number of change-points can be speculated from the results of IJD. Then, the idea from [41,45] can be utilized by using several different values around the speculated K. For instance, the plots of Di associated with Model M2 indicate a possible connected change-point, i.e., the speculated K equal to 1. Table 1 shows that the largest change in MRS (MRSch) is at K = 1, and MRSch becomes more or less for K greater than 1. Similar conclusions can be derived from the results of the other models in Figure 5. Moreover, if data are fitted with some K larger than the true , Table 1 shows the resulted RSS(K) is larger or just close to RSS(), (e.g., the comparison of RSS(5) with RSS() for all models in Table 1). This evidence implies the fit of overfitted models with is not so good as that of the model with the true . These results corroborate the rationale of our proposed method to select the change-point number. Thus, the incorporation of IJD with the idea of [41,45] can be a practical tool for deciding the number of segmentations.

Figure 5.

Models used for illustrating the determination of the number of segments by the suggested indices.

4. Experiments and Real Applications

4.1. Simulation Study

We demonstrate the efficacy and superiority of the proposed method through a simulation study. We consider both continuous and dis-continuous models with single and multiple change-points, respectively. The scheme for generating data is as follows. First, n uniformly distributed values over the interval [0,10] were chosen as the values of the partition variable x. Then, the values of the response y were computed according to the settings of the model, where the regression noises follow a normal distribution In following simulations, we set , m = 4, and let be the stop criterion.

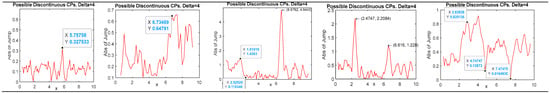

The performance of DSR is first examined based on continuous and discontinuous frames with five different types of models described in Table 2. For all models in Table 2, ,, and are independent and normally distributed . For illustrative purposes, plots of one simulated sample with the estimated model associated with each individual model in Table 2 are shown in Figure 6.

Table 2.

Descriptions of four models used in the simulation study.

Figure 6.

Plots of a simulated sample associated with each individual model used for comparisons.

We first evaluate the performance of DSR using Model 4 with simulations carried out under different scenarios: different sample sizes (n), different change-point locations (), different standard deviations (). The behavior of change-point estimators is observed on the basis of 1000 replicates through the mean (CPhat) and the standard deviation (stdCP). The IJD was used with the values of (see Section 3.2.1) chosen as 4, 8, and 15 for the sample sizes n = 150, 300, and 600, respectively. The performance of the parameter estimator was accessed by means of 1000 replicates for each model through several measures, defined as follows:

- (1)

- , is the estimator of , in the rth replication;

- (2)

- ;

- (3)

- , , is the estimator of in the rth replication;

- (4)

- RSS and the time (in seconds) consumed by DSR over 1000 replications.

Moreover, the goodness of fit of the fitted model is accessed through a criterion using the concept of statistical tests. Since the considered models are assumed to be normally distributed, the estimator of regression coefficients follows a multivariate normal distribution (c.f. [46]), where is a matrix in , with as its jth row, given , , (K is the number of change-points), and is the number of data in the ith segment. Thus, based on the properties of multivariate normal distributions, the random variable follows a distribution (c.f. [46]). Utilizing the concept of statistical tests, we think is acceptable if . Let . Then, a fitted model can be viewed as acceptable only if , which is quite strict. Table 3 reports the simulation results.

Table 3.

Simulation results of DSR employed to Model M4 in Figure 6 for estimating change-points and regression coefficients (1000 replicates) under different scenarios.

As expected, the estimations generally get better when n increases. For instance, under the case , the change point estimators with n = 300 are much less varied than those with n = 150, for less stdCP1 (0.043 < 0.115) and stdCP2 (0.078 < 0.248), resulting in a much better fitted model with n = 300, for less SSEC (1.365 < 4.828). Moreover, as increases to 0.8, the impact of large deviation on the estimators can be alleviated by a larger sample size. For instance, the estimations with n = 600 and are almost as good as those with n = 150 and in the case of . The locations of change-points also affect the estimations. Based on many simulations, we found that, if the true change-points were at , the estimates of change-points and regression coefficients were inferior to those with the true change-points different from . For example, the estimations with the true change-points at are better than those with in all considered cases (see Table 3). Furthermore, in all cases with , the acceptable proportions of the estimates for regression coefficients are above 85%, which is much higher than those in cases of (see Table 3). Note that the case means two change-points evenly divide the whole range of independent variable x, , i.e., the lengths of three sub-segments are equal. It seems reasonable that change-point detection is harder when the length of each sub-segment is the same than when the length of each sub-segment is different. Additionally, the time taken by DSR over 1000 replications with the sample size equal to 600 is very short, and the time increases mildly as n increases (the computational complexity will be discussed later). Therefore, DSR could be applicable to most real data sets effortlessly. Specifically, Seber [1] commented that piecewise regression models are intended for circumstances where the number of regimes is small and there are fairly sharp changes between sub-segments. Muggeo [4] also mentioned that a few change-points might be enough for dealing with practical situations, and the significance of change-points may become disputable as the number of change-points gets large. Thus, DSR could be a very practical technique for solving change-point regression problems.

Next, we compare the proposed DSR with the following four methods: the segmented method (SEG) [4], the EM-BP algorithm [12], the FCP algorithm [47], and the FCM-BP method (FCM) [12]. The estimations for change-points and regression coefficients are observed on the basis of 1000 and 200 replicates with the sample of size equal to 50 and 100 for models of one and multiple change-points, respectively. Note that the sample sizes utilized in the comparisons are small because the existing methods are very time-consuming due to their high computational cost. The measures used for making comparisons are the same as those used earlier for evaluating DSR. Table 4 and Table 5 report the estimations for the five models given in Table 2 by using the five methods, respectively.

Table 4.

Comparisons of DSR with existing methods using 1 CP Models (M1 and M2) in Figure 6.

Table 5.

Comparisons of DSR with existing methods using 2 CP Models (M3-M5) in Figure 6.

Table 4 shows that SEG performs best in the estimations for model M1 because SEG produced a change-point estimator with very small biases and variations (note that the stdCP obtained by SEG equal to 0.315 is the least small among the five methods). Furthermore, SEG results in the best fitted model with the least SSEC (0.208) and the highest acceptance rate (0.837). However, SEG is totally unworkable for the discontinuous model M2. On the other hand, the other four methods also perform well in all cases reported in Table 4. They are competitive in the estimations for models M1 and M2 regarding the precision and variation of the change-point estimators and the adequacy of the estimated model.

For the estimations of multiple change-point models M3-M5 shown in Table 5, DSR generally outperforms the other four methods for producing highly accurate estimates of change-points and regression coefficients in a very short time. In particular, DSR is much more economical in terms of time-consumption than the EM-BP, FCP, and FCM. In particular, the time consumed by EM-BP is extremely high. Even worse, EM-BP could not produce adequate estimates for model M5 due to a very large bias with the estimate of (bias = 6.6 − 3.741 = 2.859) and the poor fit of the estimated model with a large SSEC (5.836) and a very low acceptance rate (0.04). Although the estimations made by FCP and FCM for M3-M5 are satisfactory, they are also much more computationally expensive than DSR. Notice that the time taken by FCP and FCM looks tolerable because of the small sample size, n, and the small number of change-points, K. In fact, their computational complexity increases drastically with n and k, and the computational time would increase drastically even if n or K increased slightly. This evidence can be understood through the computational complexity analysis below.

We noted from the above comparisons that the superiority of DSR over EM-BP, FCP, and FCM is particularly significant in view of time consumption. This fact can be attributed to the incorporation of IJD with DSR and the much less complexity of DSR. IJD helps DSR converge faster by producing suitable initial values to be used (see the illustrations of IJD in Section 3.2). But the computational complexity may account for the preference of DSR mostly. The computational complexity of DSR is where n is the data size, K is the number of regimes, d is the data dimension, and i is the number of iterations. For EM-BP, FCP, and FCM, the computations for estimated parameters must be performed for each possible collection of change-points which include combinations. Thus, the computational complexity of EM-BP, FCP, and FCM is . That may be the reason why the time consumed by DSR is much less than that of the other three methods as the number of change-points increases. Table 6 summaries the time consumed by DSR, EM-BP, FCP, and FCM in the cases used for comparisons previously. Note the simulation results shown in Table 4 and Table 5 are based on 1000 and 200 replications for one and multiple change-points in the models, respectively. The results in Table 6 indicate that the time taken by DSR increases slowly with the number of change-points, K. But the time taken by the other three methods increases sharply as K increases, especially for EM-BP. Notice that the time consumed by DSR in case M2 is much higher due to the detection of jumps.

Table 6.

Comparisons between DSR and EM-BP in the time (in seconds) consumed over 1000 replicates or fewer.

To sum up, the comparison results obtained with the models in Table 2 reveal that DSR can work well in all cases of continuous and discontinuous models. More importantly, DSR produces better estimates for change-points and regression coefficients in virtue of smaller biases and variations of change-point estimators, as well as the small mean squared errors of estimated regression coefficients in almost all cases of continuous and discontinuous models. Moreover, DSR is much more economic in terms of the time consumed than EM-BP, FCP, and FCM, especially in cases involving multiple change-points. By contrast, SEG performs well for continuous models but is totally unworkable for discontinuous models. Simulation results show that the time consumed by DSR over 1000 replications increases slowly with K. This fact indicates that the computational effort of DSR increases mildly with the complexity of the model. As remarked by Muggeo [4] and Seber [1], piecewise regression models with a few change-points are sufficient for handling many practical situations because the meaning of change-points may become questionable as the number of regimes increases. Thus, the proposed DSR is feasible for most practical problems.

4.2. Real Applications

We demonstrate the practicability of the proposed DSR through five real examples from different areas.

Example 1.

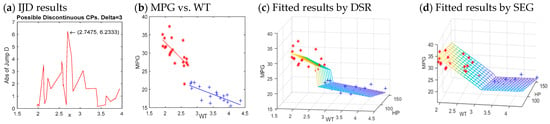

We employed the proposed DSR to a data set previously analyzed by [8,12,47]. The data set includes the measurements of three variables, miles per gallon (MPG), weight (WT), and horsepower (HP) on 38 automobiles of different models made in 1978–1979. The three papers concluded that the following segmented representation given by (22) is the best model based on the modified Schwarz’ Criterion (c.f. [8]) and the mean of the residual sum of squares (MRS) defined in (23) respectively.

The mean of the residual sum of squares (MRS) is

where K is the number of change-points. They also concluded that the dependence of MPG on WT and HP shows an abrupt change after WT becomes larger than 2.7 (in 1000 lbs). The graphical display of data in Figure 7b,c also discloses a jump in the relationship between MPG and WT when. As suggested by these three previous papers, we applied DSR to the car data using model (22). A possible discontinuous jump at (2.7475, 6.2333) is detected (see Figure 7a) by using IJD and DSR and the resulted model is

Figure 7.

The fitted results of the car data produced by DSR.

The model produced by DSR is very close to those obtained by [8,12,47]. Moreover, we also utilized Muggeo’s SEG to the car data with model (22), resulting in a fitted model graphically shown in Figure 7d, with a change-point at WT = 3.1375. Apparently, the estimated change-point by SEG (see Figure 7b,c) is irrational. This further illustrates the failure of SEG in detecting discontinuous jumps.

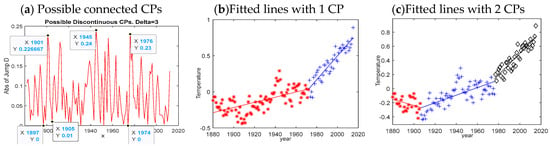

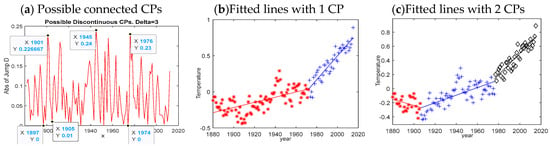

Example 2.

The climate trend research is important for understanding the development of global warming, which has been a world-wide issue and received great attention. Regression analysis has been adopted by several researchers [48,49] to analyze the changes in climate tendency. Many studies pointed out that the most remarkable change in the time series of global temperature occurred during the 1970s. We implemented DSR to the global temperature data, HadCRUT3, collected by the Met Office Hadley Center, also studied by [12,49]. The irregularities of the global temperature were re-computed, with the mean of temperatures over the period 1961–1990 regarded as the baseline. Figure 8 exhibits the estimated results obtained by DSR. Figure 8a indicates that there is no significant jump in the temperature series, but relatively large deviations appear at several places; for example, around the years 1901, 1945, and 1978, connected change-points may exist. Referring to the comments made by [4,6], piecewise models are intended for situations where the number of segments is small. Muggeo [4] suggested that a few change-points (e.g., one to three) for a sample of moderate size may be sufficient for dealing with several practical circumstances, since the implication of change-points might become questionable as the number of change-points increases. Thus, we fitted the data using piecewise regression models with the number of change-points K = 1, 2, and 3, respectively. According to the illustrations on the use of IJD in Section 3.2.2, the plots in Figure 8a indicate that no discontinuous jump was detected, but Di shows three relatively large variations around the years 1901, 1945, and 1978, suggesting the existence of three connected change-points. Table 7 reports the estimations for models with different K values. With K = 1, DSR produced a noteworthy change in 1971, which is in accordance with the finding of previous research [49]. However, when comparing the MRS resulted from models with different K values, the three-segment model in Figure 8c is preferable because it presents the smallest MRS among the three models considered in Table 7. Furthermore, the development of temperature changes can be seen more clearly and completely through the three-segment model. The fitted lines in Figure 8c and the estimations in Table 7 indicate there is no significant relation between global temperature and time before 1905. After 1905, the temperature started increasing with time gradually and the increasing speed became much faster after 1975. These discoveries illustrate the much faster rate of global warming effect in recent years.

Figure 8.

The fitted results of the global temperature data (HadCRUT3) by DSR.

Table 7.

The estimates of HadCRUT3 data derived via DSR with K = 1, 2, and 3, respectively.

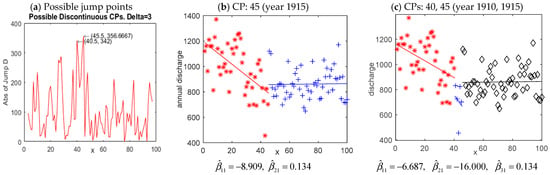

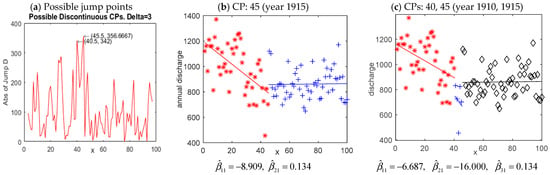

Example 3.

We employ DSR to the data set including measurements of the annual volume (in 108 m3) of discharge from the Nile River at Aswan, from year 1871 to 1970. The researchers [50,51] wanted to know whether abrupt changes in the mean of the annual discharge occur at some time points during this period. The plot of the data shown in Figure 9b reveals that the mean discharges decreased with time from the beginning (year 1871), followed a markedly large decrease around point 40 (year 1940), and then remains constant after the time of extremely low discharge. Figure 9a shows two possible jumps at time points 40.5 and 45.5. Thus, we fitted the data with models of two and three segments, respectively. Figure 9c indicates that the annual mean discharge decreased with time significantly () before point 40, i.e., year 1910. Note that the jump detected at 40.5 is because of the occurrence of an exceptionally low discharge around point 40. As commented by Cobb [50], the abnormally low change is real and can be found in the records of tropical weather stations. Both models (b) and (c) in Figure 9 demonstrate that the mean annual discharge remains constant around 861 (in 108 m3) after point 45 (year 1915). As remarked by Zeileis et al. [51], the unchanged mean discharge from the Nile River after 1915 may be partly attributed to the operation of the Aswan dam, starting from 1902.

Figure 9.

The fitted results of the Nile River data by DSR.

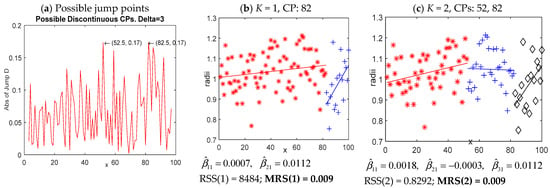

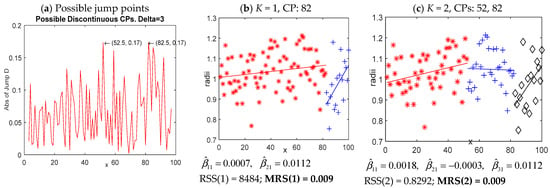

Example 4.

Change-point detection is important in the context of quality control. This example considers a dataset from manufacturing. The data analyzed by Lombard [52], Lu and Chang [11,13] contain 100 radii of consecutive circular indentations made by a milling machine, which were obtained for comparing the effects of two service routines on the productions of the machine. The estimated results by DSR are shown in Figure 10. IJD produced two potential jumps at time point 52.5 and 82.5 respectively. Therefore, we fitted the data with models including two and three pieces, respectively. Both models in Figure 10b,c present a precipitous change at point 82, after which the radii significantly increase with time for the estimated slope, which is significantly different from 0. Moreover, the estimated slope of the second line inFigure 10c is very small (), and the mean of the residual sum of squared errors for the two models is the same, i.e., MRS(2) equals MRS((1). Thus, in view of the parsimony in model selections, the two-segment model in Figure 10b is an adequate model to the milling machine data. The resulted model by DSR indicates a sharp jump in the radii data at time point 82. After the change-point at 82, the radii data significantly increase with time.

Figure 10.

The fitted results of milling machine data by DSR.

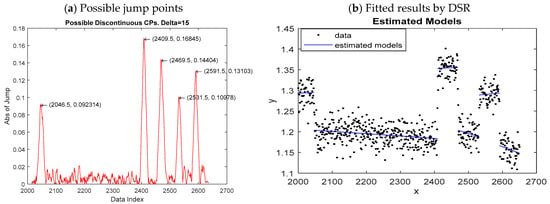

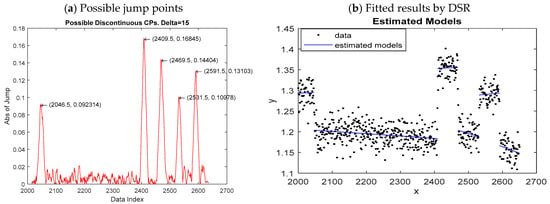

Example 5.

In this example, we employ DSR to the well-log data (c.f. [53]), which include 4050 measurements of nuclear magnetic response obtained when digging a well. Sharp shifts in the mean of the process measurements often occur at the transitions of rock strata. Thus, locating change-points is crucial for oil drilling (c.f. [13,53]). We applied DSR to a subset of indexed measurements from 2000 to 2650. DSR resulted in five potential discontinuous change-points at 2046.5, 2409.5, 2469.5, 2531.5, and 2591.5, respectively, which are close to the apparent five jumps shown in Figure 11b. The final estimated model with five abrupt jumps at 2046, 2409, 2469, 2531, 2591 is reasonable. Thus, the proposed DSR identified the five jump points properly.

Figure 11.

The fitted results of well-log data by DSR.

5. Conclusions and Discussion

In this paper, we have introduced an advanced piecewise regression model for dealing with regression relationships with abrupt changes. The main advantage of the proposed piecewise model is its availability for problems with discontinuous and/or continuous change-points. Fong et al. [54] emphasized that it is really challenging to recognize whether any jump occurs somewhere, making it difficult to choose proper methods to analyze data. This indicates the difficulty of jump point detection. It may be the reason why many existing methods are restricted to continuous models, e.g., [4,7,28,30,32]. However, continuity cannot be simply assumed in a real problem, unless it is known theoretically. In practice, continuity may be highly probable in medical or biological problems, but it may not be appropriate for problems in economics, finance, or geological prospecting (c.f. [36]). Thus, the flexibility of the proposed piecewise model in dealing with both continuous and discontinuous change-point locations is very useful in practice.

A new algorithm, called DSR, has been proposed for estimating piecewise regression models. A linear Taylor expansion has been adopted to circumvent the non-smoothness of the likelihood function. Accordingly, the estimation for piecewise models is simplified to the iterative fitting of linear regression models. The estimates of change-points and regression parameters can be obtained. The new approach has the advantages of being simple, fast, and highly efficient, regardless of whether the piecewise regression model is continuous or not. Furthermore, the proposed model is feasible for fitting generalized linear models, whereas the IJD method is less sensitive in the cases of generalized linear models. The regression errors can follow other distributions such as t, Laplace, or a mixture of normal distributions. The least squared error estimations can be replaced by the least absolute deviation criterion for obtaining more robust estimators. With the utilization of first-order Taylor approximations, the DSR algorithm is like a Gauss-Newton-based method. Thus, DSR is convergent provided that suitable initial values are used (c.f. [1], pp. 25–26). Muggeo [4] also noted that the segmented approach using linear approximations can be shown to be exact, ensuring so that it will always converge in a deterministic model provided that the change-point exists. Simulation results have demonstrated that for Gaussian data with moderate variance, DSR, with appropriate starting points, always attains the correct solutions with negligible biases. Therefore, if the DSR algorithm fails in some cases, it may be due to improper initial values or a particular configuration of data (for instance, too few data points contained in a regime to estimate parameters, or when the relationship is unstable).

Since the performance of DSR depends on initialization, the IJD method has been created for detecting possible jump points. The IJD method is helpful for accelerating the convergence of DSR because of the proper starting values chosen. The IJD method may be also informative in finding potential connected change-points. The incorporation of IJD with the idea of [41,45] is a practical tool for determining the number of change-points.

Several examples with numerical datasets demonstrated the effectiveness and superiority of the proposed DSR. The preference of DSR was particularly significant in the aspect of computational efficiency, especially in cases of multiple change-points. The applications to several real datasets from diverse areas indicate the wide applicability of DSR.

Multicollinearity is an issue often considered in regression analysis. Specifically, for fitting piecewise regression models, there is a chance to have multicollinearity on the design matrix of variables, which leads to the problem that the matrix in the normal equation is singular or close to singular. There are three causes of the multicollinearity problem in piecewise regressions:

- A poor dataset;

- Overfitting due to the number of change-points used for estimations larger than the true one;

- Too few data points contained in one (or more than one) regime to estimate regression coefficients during the iteration process.

Possible strategies for handling multicollinearity problems include:

- Re-assign new initial values (change points), especially for those problems resulting from cause 3;

- Apply the Ridge regression estimators (c.f. [55]).

An important merit of the Ridge regression strategy is that it always works for a singular or close to singular matrix only if a proper nonnegative biasing constant c is chosen. Since the biasing constant c reflects the amount of bias in the estimations, we apply Ridge regressions with a very small c (say, less than 1.0 × 10−10) for cases having a singular matrix, with c = 0 for cases without having a singular matrix. It is possible that a singular matrix occurs in one fitting iteration, and it turns out to be the occurrence of a non-singular matric in the following fitting iteration after the application of the Ridge method.

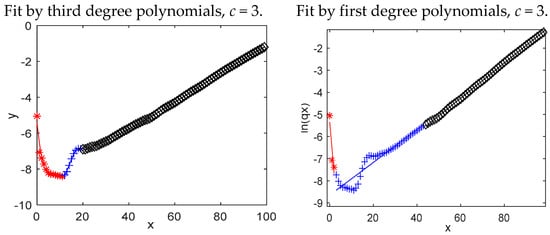

For our future work, we consider relaxing the linearity assumption. For some real data, linear regression lines cannot work well, but higher orders of polynomial may perform better. For example, the results in Figure 12 show that simple linear regression lines cannot produce adequate estimates for change-points and regression parameters, but third-degree models fit the data well and provide reasonable estimates for change-points. Moreover, the variance is assumed to be constant over all subdivisions, but the heterogeneity of variances occurs often in change-point problems. We consider relaxing these assumptions in the future work. The weighted least squares estimations may be helpful for handling the problem of heterogeneity. As to the approximation by higher order polynomials, it is much more complicated than first-order approximation. Just as stated in [56], higher order polynomials are much more difficult to deal with. More effort and deeper thinking are needed to find a feasible approach.

Figure 12.

Results of the same data fitted by first- and third-degree polynomials.

Moreover, the problems of outliers and missing data are often encountered in regression analysis. Possible feasible methods for alleviating the influence of outliers include using the absolute least deviation method instead of the least squares estimations or assuming model errors having t or Laplace distributions to increase the resistance of estimators against outliers. As to the problem of missing data, several techniques of dealing with missing data may be useful, but they need further research. These are important and difficult problems in regression analysis. Further studies on these issues are required in the future.

Author Contributions

S.-T.C.: Conceptualization and methodology, software, formal analysis, visualization, funding acquisition; K.-P.L.: formal analysis, investigation, resources, data curation, writing—original draft, writing—review and editing, supervision, project administration, and funding acquisition. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the National Science and Technology Council, Taiwan (Grant numbers MOST 111-2118-M-025-001, MOST 111-2118-M-003-002, NSTC 112-2118-M-025-001, and NSTC 112-2118-M-003-001).

Data Availability Statement

The sources of the datasets are respectively stated in each example in which they are considered.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Seber, G.A.F.; Wild, C.J. Nonlinear Regression; Wiley: New York, NY, USA, 2003. [Google Scholar]

- Perperoglou, A.; Sauerbrei, W.; Abrahamowicz, M.; Schmid, M. A review of spline function procedures in R. BMC Med. Res. Methodol. 2019, 19, 1–16. [Google Scholar] [CrossRef] [PubMed]

- Ren, J.; Tapert, S.; Fan, C.C.; Thompson, W.K. A semi-parametric Bayesian model for semi-continuous longitudinal data. Stat. Med. 2022, 41, 2354–2374. [Google Scholar] [CrossRef] [PubMed]

- Muggeo, V.M.R. Estimating regression models with unknown breakpoints. Stat. Med. 2003, 22, 3055–3071. [Google Scholar] [CrossRef] [PubMed]

- Fearnhead, P.; Rigaill, G. Changepoint Detection in the Presence of Outliers. J. Am. Stat. Assoc. 2019, 114, 169–183. [Google Scholar] [CrossRef]

- Ulm, K. A statistical methods for assessing a threshold in epidemiological studies. Stat. Med. 1991, 10, 341–349. [Google Scholar] [CrossRef] [PubMed]

- Zhang, F.; Li, Q. Robust bent line regression. J. Stat. Plan. Inference 2017, 185, 41–55. [Google Scholar] [CrossRef] [PubMed]

- Liu, J.; Wu, S.; Zidek, J.V. On segmented multivariate regression. Stat. Sin. 1997, 7, 497–525. [Google Scholar]

- MacNeill, I.B.; Mao, Y. Change-point analysis for mortality and morbidity rate. In Applied Change Point Problems in Statistics; Sinha, B., Rukhin, A., Ahsanullah, M., Eds.; Nova Science: Hauppauge, NY, USA, 1995; pp. 37–55. [Google Scholar]

- Ruanaidh, J.J.K.Ó.; Fitzgerald, W.J. Numerical Bayesion Methods Applied to Signal Processing; Springer: New York, NY, USA, 1996. [Google Scholar]

- Lu, K.P.; Chang, S.T. Change-point detection for shifts in control charts using fuzzy shift change-point algorithms. Comput. Ind. Eng. 2016, 93, 12–27. [Google Scholar] [CrossRef]

- Lu, K.P.; Chang, S.T. A fuzzy classification approach to piecewise regression models. Appl. Soft Comput. 2018, 69, 671–688. [Google Scholar] [CrossRef]

- Lu, K.P.; Chang, S.T. Fuzzy maximum likelihood change-point algorithms for identifying the time of shifts in process data. Neural Comput. Appl. 2019, 31, 2431–2446. [Google Scholar] [CrossRef]

- Bardwell, L.; Fearnhead, P. Bayesian detection of abnormal segments in multiple time series. Bayesian Anal. 2017, 12, 193–218. [Google Scholar] [CrossRef]

- Melnyk, I.; Banerjee, A. A spectral algorithm for inference in hidden semi-Markov models. J. Mach. Learn. Res. 2017, 18, 1–39. [Google Scholar]

- Haynes, K.; Fearnhead, P.; Eckley, I.A. A computationally efficient nonparametric approach for changepoint detection. Stat. Comput. 2017, 27, 1293–1305. [Google Scholar] [CrossRef] [PubMed]

- Garreau, D.; Arlot, S. Consistent change-point detection with kernels. arXiv 2016, arXiv:1612.04740. [Google Scholar] [CrossRef]

- Frick, K.; Munk, A.; Sieling, H. Multiscale change point inference. J. R. Stat. Soc. Ser. B Stat. Methodol. 2014, 76, 495–580. [Google Scholar] [CrossRef]

- Pein, F.; Sieling, H.; Munk, A. Heterogeneuous change point inference. J. R. Stat. Soc. Ser. B Stat. Methodol 2017, 79, 1207–1227. [Google Scholar] [CrossRef]

- Fryzlewicz, P. Wild binary segmentation for multiple change-point detection. Ann. Stat. 2014, 42, 2243–2281. [Google Scholar] [CrossRef]

- Korkas, K.; Fryzlewicz, P. Multiple change-point detection for non-stationary time series using wild binary segmentation. Stat. Sin. 2017, 27, 287–311. [Google Scholar] [CrossRef]

- Haynes, K.; Eckley, I.A.; Fearnhead, P. Computationally Efficient Changepoint Detection for a Range of Penalties. J. Comput. Graph. Stat. 2017, 26, 134–143. [Google Scholar] [CrossRef]

- Elhanashi, A.; Gasmi, K.; Begni, A.; Dini, P.; Zheng, Q.; Saponara, S. Machine Learning Techniques for Anomaly-Based Detection System on CSE-CIC-IDS2018 Dataset. In Applications in Electronics Pervading Industry, Environment and Society. ApplePies 2022. Lecture Notes in Electrical Engineering; Springer: Cham, Switzerland, 2023; p. 1036. [Google Scholar] [CrossRef]

- Lu, K.P.; Chang, S.T. Robust algorithms for multiphase regression models. Appl. Math. Model. 2020, 77, 1643–1661. [Google Scholar] [CrossRef]

- Lu, K.P.; Chang, S.T. Robust Algorithms for Change-Point Regressions Using the t-Distribution. Mathematics 2021, 9, 2394. [Google Scholar] [CrossRef]

- Lu, K.P.; Chang, S.T. Robust Switching Regressions Using the Laplace Distribution. Mathematics 2022, 10, 4722. [Google Scholar] [CrossRef]

- Truong, C.; Oudre, L.; Vayatis, N. Selective review of offline change point detection methods. Signal Process. 2010, 167, 107299. [Google Scholar] [CrossRef]

- Muggeo, V.M.R. Segmented: An R package to fit regression models with broken-line relationships. News. R Proj. 2008, 8, 20–25. [Google Scholar]

- Muggeo, V.M.R.; Attanasio, M.; Porcu, M. A segmented regression model for event history data: An Application to fertality Patterns in Italy. J. Appl. Stat. 2009, 36, 973–988. [Google Scholar] [CrossRef]

- Muggeo, V.M.R.; Adelfio, G. Efficient change point detection for genomic sequences of continuous measurements. Bioinformatics 2011, 27, 161–166. [Google Scholar] [CrossRef] [PubMed]

- Muggeo, V.M.R.; Atkins, D.C.; Gallop, R.J.; Dimidjian, S. Segmented mixed models with random changepoints: A maximum likelihood approach with application to treatment for depression study. Stat. Model. 2014, 14, 293–313. [Google Scholar] [CrossRef]

- Shi, S.; Li, Y.; Wan, C. Robust continuous piecewise linear regression model with multiple change points. J. Supercomput. 2018, 76, 1–23. [Google Scholar] [CrossRef]

- Muggeo, V.M.R.; Sottile, G.; Porcu, M. Modelling COVID-19 outbreak: Segmented regression to assess lockdown effectiveness. Tech. Rep. 2020, 10, 16. [Google Scholar] [CrossRef]

- Küchenhoff, H.; Günther, F.; Höhle, M.; Bender, A. Analysis of the early Covid-19 epidemic curve in Germany by regression models with change points. Epidemiol. Infect. 2020, 149, e68. [Google Scholar] [CrossRef]

- Shaukat, M.H.; Alotaibi, N.; Hussain, I.; Shrahili, M. The Analysis of the Incidence Rate of the COVID-19 Pandemic Based on Segmented Regression for Kuwait and Saudi Arabia. Hindawi Math. Problems Eng. 2021, 2021, 2644506. [Google Scholar] [CrossRef]

- Chen, C.W.S.; Chan, J.S.; Gerlach, K.R.; Hsieh, W.Y.L. A comparison of estimators for regression models with change points. Stat. Comput. 2011, 21, 395–414. [Google Scholar] [CrossRef]

- Alipour, P.; Bastani, A.F. Value-at-Risk-Based Portfolio Insurance: Performance Evaluation and Benchmarking Against CPPI in a Markov-Modulated Regime-Switching Market. arXiv 2023, arXiv:2305.12539. [Google Scholar]

- Yang, H.Q.; Xing, S.G.; Wang, Q.; Li, Z. Model test on the entrainment phenomenon and energy conversion mechanism of flow-like landslides. Eng. Geol. 2018, 239, 119–125. [Google Scholar] [CrossRef]

- Yang, H.; Wang, Z.; Song, K. A new hybrid grey wolf optimizer-feature weighted-multiple kernel-support vector regression technique to predict TBM performance. Eng. Comput. 2020, 38, 2469–2485. [Google Scholar] [CrossRef]

- Yang, M.S.; Nataliani, Y. Robust-learning fuzzy c-means clustering algorithm with unknown number of clusters. Pattern Recognit. 2017, 71, 45–59. [Google Scholar] [CrossRef]

- Hawkins, D.M. Fitting multiple change-point models to data. Comput. Stat. Data Anal. 2001, 37, 323–341. [Google Scholar] [CrossRef]

- Beckman, R.J.; Cook, R.D. Testing for two-phase regressions. Technometrics 1979, 21, 65–69. [Google Scholar] [CrossRef]

- Yao, Y.C. Estimating the number of change-points via Schwarz’ criterion. Statist. Probab. Lett. 1988, 6, 181–189. [Google Scholar] [CrossRef]

- Ciuperca, G. A general criterion to determine the number of change-points. Statist. Probab. Lett. 2011, 81, 1267–1275. [Google Scholar] [CrossRef]

- Venter, J.H.; Steel, S.J. Finding multiple abrupt change points. Comput. Stat. Data Anal. 1996, 22, 481–504. [Google Scholar] [CrossRef]

- Hogg, R.V.; McKean, J.W.; Craig, A.T. Introduction to Mathematical Statistics, 7th ed.; Pearson: London, UK, 2013. [Google Scholar]

- Chang, S.T.; Lu, K.P.; Yang, M.S. Fuzzy Change-point algorithms for regression models. IEEE Trans. Fuzzy Syst. 2015, 23, 2343–2357. [Google Scholar] [CrossRef]

- Menne, J.M. Abrupt global temperature change and the instrumental record. In Proceedings of the 18th Conference on Climate Variability and Change, Atlanta, GA, USA, 29 January–2 February 2005; p. 4. [Google Scholar]

- Werner, R.; Valev, D.; Danov, D.; Guineva, V. Study of structural break points in global and hemispheric temperature series by piecewise regression. Adv. Space Res. 2015, 56, 2323–2334. [Google Scholar] [CrossRef]

- Cobb, G.W. The Problem of the Nile: Conditional solution to a changepoint problem. Biometrika 1978, 65, 243–251. [Google Scholar] [CrossRef]

- Zeileis, A.; Kleiber, C.; Krämer, W. Testing and dating of structural changes in practice. Comput. Stat. Data Anal. 2003, 44, 109–123. [Google Scholar] [CrossRef]

- Lombard, F. Rank tests for changepoint problems. Biometrika 1987, 74, 15–624. [Google Scholar] [CrossRef]

- Neubauer, J.; Vesely, V. Detection of multiple changes in mean by sparse parameter estimation. Nonlinear Anal. Model. Control 2013, 18, 177–190. [Google Scholar] [CrossRef]

- Fong, Y.; Huang, Y.; Gilbert, P.B.; Permar, S.R. chngpt: Threshold regression model estimation and inference. BMC Bioinform. 2017, 18, 454. [Google Scholar] [CrossRef]

- Kutner, M.H.; Nachtseim, C.J.; Neter, J.; Li, W. Applied Linear Statistical Models; McGraw-Hill Irwin: New York, NY, USA, 2005. [Google Scholar]

- Box, G.E.P.; Tidwell, P.W. Transformation of the Independent Variables. Technometrics 1962, 4, 531–550. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).