1. Introduction

Artistic sketch is a genre of fine arts that has long been beloved. Many researchers have proposed various schemes for synthesizing sketches from images [

1,

2,

3,

4,

5,

6,

7,

8,

9,

10,

11,

12,

13,

14,

15,

16,

17,

18,

19]. However, many of them focus on synthesizing portrait sketches [

10,

11,

13,

14,

15,

20]. Furthermore, many sketch studies produce doodle-like sketches, which can be used for sketch-based retrieval [

21,

22,

23,

24,

25]. Therefore, we focus on synthesizing artistic landscape sketch, which is one of the important artistic sketch genres.

Our primary goal is to develop a diffusion model-based sketch synthesizing model that mimics artistic landscape sketch drawing techniques. Important considerations on the techniques include vanishing points and perspective levels of detail. In artistic landscape sketch, the objects in a scene are aligned along vanishing points. Artists can control the number of vanishing points for their artworks. Perspective level of detail is a technique that artists use to draw objects in detail or in abstract according to perspective level.

Another goal is to build a sketch generation framework that relieves the burden of collecting datasets. Generative models that synthesize sketches have long suffered from the lack of data, since a well-drawn sketch requires a lot of time and effort from an artist. Recently, the progress of diffusion models has greatly improved the generative models. Since existing diffusion models are trained with vast datasets, they have rich prior knowledge of objects and they produce impressive image synthesis results with zero-shot learning. Therefore, we employ the pre-trained diffusion model for the backbone of our framework to resolve the lack of sketch datasets.

To facilitate vanishing points and perspective levels of detail, we propose a three-channel perspective map (3CPM). Landscape sketch artists depict a scene by decomposing it into three parts: background, midrange, and foreground. The objects in the foreground are depicted in detail and those in background in abstract. The objects in the midrange are depicted gradually. 3CPM arranges objects in the background, midrange, and foreground in different maps. Then, we train a module that feeds 3CPM and pass representation of it to our framework.

3CPM is technically a semantic segmentation map which segments objects in a scene into three categories: foreground, midrange and background. 3CPM can be produced by manually segment sketches or images or can be synthesized by a depth estimator. 3CPMs with paired sketches are used to train our backbone model.

A serious challenge of deep learning-based sketch generation approach is lack of data. To resolve this challenge, we employ a pre-trained diffusion model as a backbone network of our framework. Since well-known diffusion models are pre-trained using a variety of datasets, most of them can generate convincing images. Among diffusion models, we employ Stable Diffusion [

26], which is recognized as producing visually pleasing images and easily trainable framework. However, vanilla Stable Diffusion shows limitations for synthesizing artistic sketch. The synthesized sketches show many artifacts such as unwanted tiny strokes or a color-remaining artifact on the result image. Therefore, we improve vanilla Stable Diffusion in order to synthesize artistic landscape sketch images.

For effective sketch synthesis, we consider both text-to-sketch and image-to-sketch frameworks for sketch synthesis. Although vanilla Stable Diffusion supports the image-to-image framework using CLIP that converts images into semantic tokens, the image-to-sketch framework of vanilla Stable Diffusion is heavily affected by the texture or colors of input images. In order to preserve salient visual information of the input image, we employ edge maps for the image-to-sketch framework.

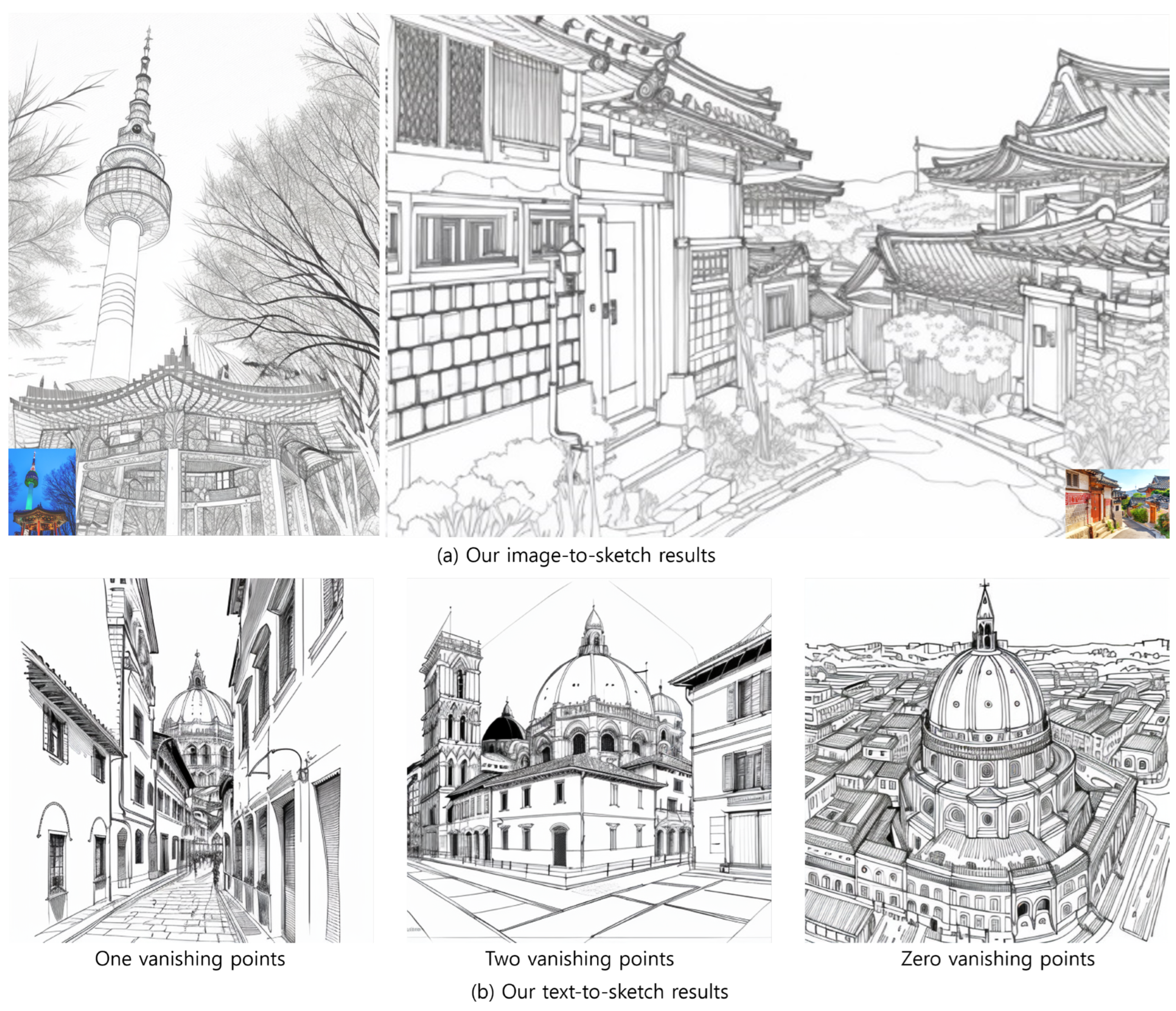

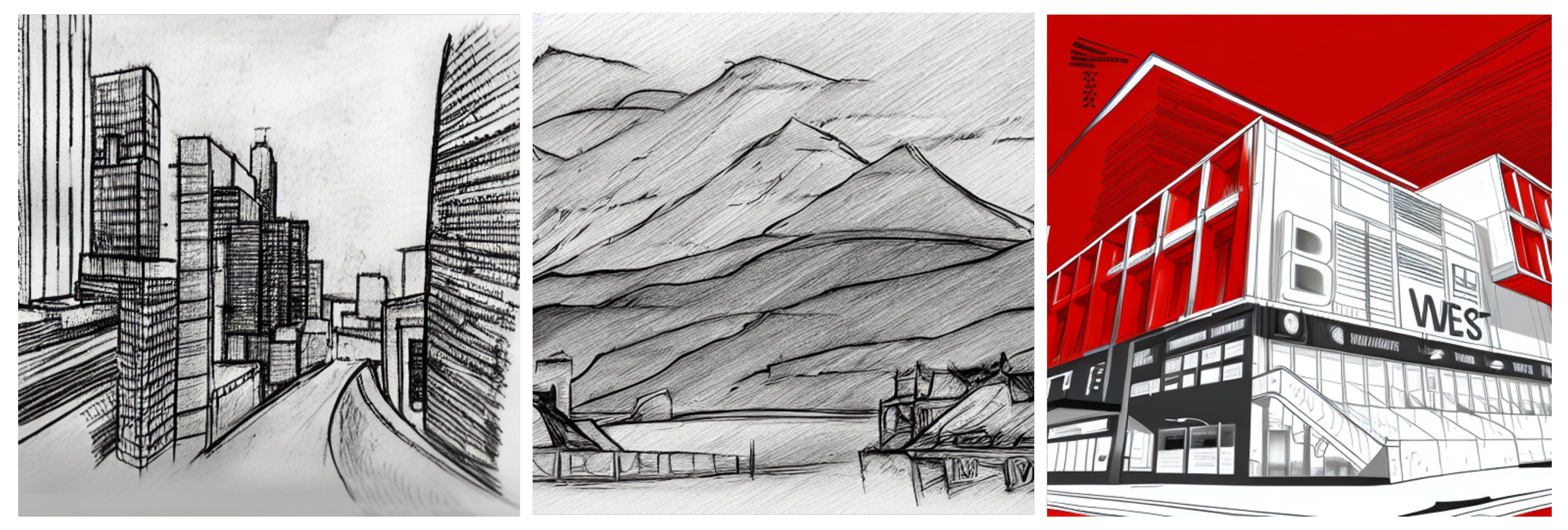

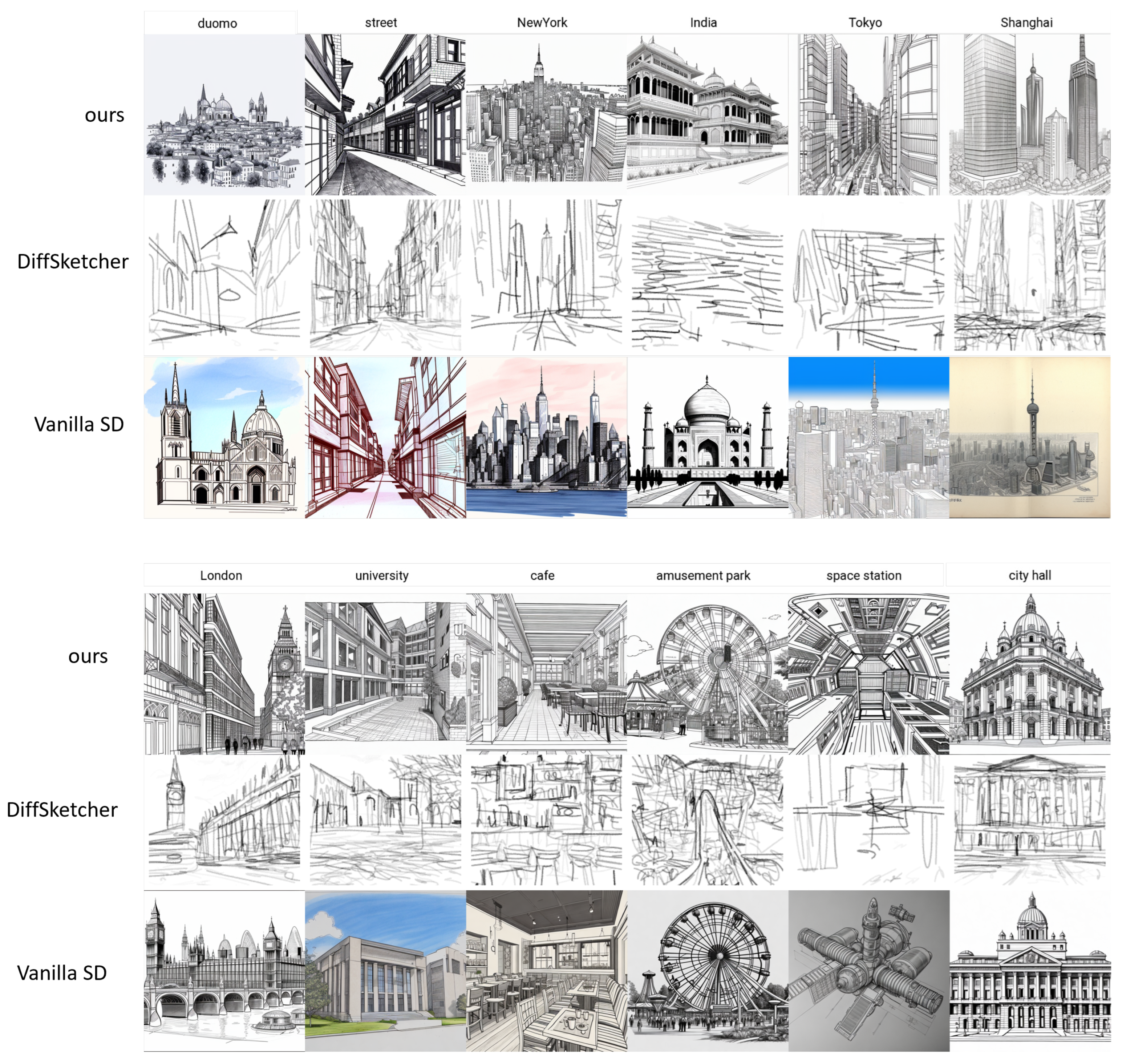

We present landscape sketch image using both text-to-sketch and image-to-sketch approaches. We present some results of our frameworks in

Figure 1. We also evaluate our results using a quantitative approach by estimating various metrics including Frechet Inception Distance (FID), Art Frechet Inception Distance (ArtFID) [

27], Contrastive Language-Image Pre-training (CLIP) score, Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index (SSIM) and Multi-Scale Structural Similarity Index (MS-SSIM). We also execute a qualitative approach using a user study.

Our contributions are listed as follows:

We present a three-channel perspective map (3CPM), which controls the perspective levels of detail for the landscape sketch, which has not been resolved in the existing sketch generation schemes.

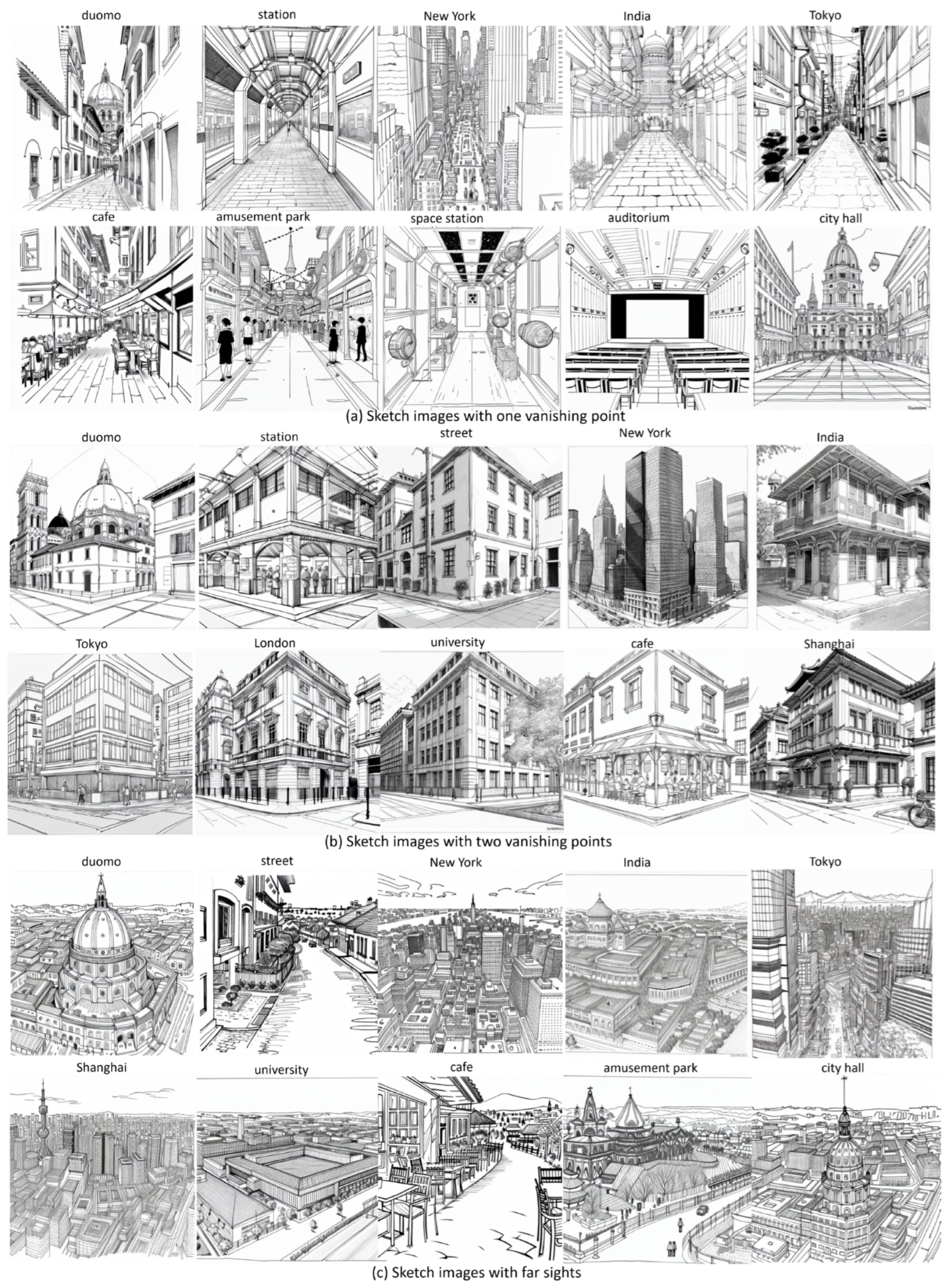

We present a landscape sketch generation framework that controls the number of vanishing points, which has not been controlled in the existing schemes.

We present a bi-modal sketch generation framework, which allows both image-to-sketch and text-to-sketch frameworks.

2. Related Work

Various sketch synthesizing methods can be categorized into four groups: (1) pre-deep learning methods [

1,

2,

3,

28] that use mathematical principles to extract prominent lines and to create hatching patterns using kernel-based techniques, (2) general deep learning schemes [

4,

5,

6,

7,

8,

9,

10,

11] including neural style transfer (NST) or generative adversarial networks (GAN) that synthesize sketch images from input images, (3) specialized deep learning schemes [

12,

13,

14,

15,

16,

17,

18,

19,

20] designed for sketch synthesis, such as portrait sketch synthesis, forensic facial sketch synthesis, and architectural sketch synthesis, and (4) diffusion model-based methods [

24,

25,

26,

29,

30,

31,

32,

33,

34], which are relatively less explored for sketch synthesis due to the dearth of high-quality sketch datasets.

In the following sections, we explain those groups in detail and discuss seminal and recent works with their contributions.

2.1. Pre-Deep Learning Schemes

A notable approach in this category is the Canny edge detection algorithm [

28], which has been widely used in computer vision society. With agreement that edges contain salient lines of objects, the edges extracted by Canny edge detection algorithm could be regarded as a sketch.

Some non-photorealistic rendering schemes apply mathematical principles to depict images. The coherent line [

1] was designed to produce coherent and salient lines on an input image that portray the shape of prominent objects in an image in an aesthetic style.

A successive approach [

2] describes an image abstraction and stylization method that acknowledges the direction of the local image structure in shape or color filtering. Notably, the Flow-based bilateral (FBL) smoothing and Edge Tangent Flow (ETF) presented in this work enhance the quality of the extracted lines.

Additionally, the innovative XDoG filter [

3] extended the Difference-of-Gaussian (DoG) filter to a framework that synthesizes diverse and controllable sketch from a photograph. This technique can produce a range of styles, including hatching, pastel, and monotone images that resemble sketches.

Pre-deep learning sketch generation schemes can produce visually convincing sketch images in a relatively low computational load. However, they heavily depend on their sketch generation models. Furthermore, they are very sensitive for noise or obscure objects. They also have limitation in presenting a text-to-sketch framework.

2.2. General Deep Learning Schemes

Gatys et al. [

4] present a seminal work for producing artistic image synthesis using a deep neural network. Their work demonstrates the separation and recombination of style and content using convolutional neural networks (CNN). However, the model has limitations in generating sketch images, since it applies style in a texture-based approach.

Many image synthesis frameworks are devised using GAN [

5] which consists of two networks: a generator that synthesizes images and a discriminator that distinguishes whether the images are real or fake.

Pix2Pix [

6] employs ConditionalGAN [

35] to address an image-to-image translation problem. This model learns the mapping from input images to output images directly from paired images. They can produce sketch images from photographs. However, Pix2Pix is trained only through paired datasets, which consist of a corresponding pair of images from each domain. In addition, Zhu et al. [

7] present CycleGAN which introduces cycle consistency to resolve the burden of a paired dataset from Pix2Pix.

Furthermore, Liu et al. [

8] present an unsupervised image-to-image translation (UNIT) framework which implements an unsupervised image-to-image translation methodology based on CoupledGAN [

36]. Huang et al. [

9] extend this work to a multimodal unsupervised image-to-image (MUNIT) framework by applying novel losses and architecture to handle more complex image translation problems.

As of recent, Yeom et al. [

10] employ an attention-based mechanism [

11] and RCCL [

37] to produce illustrative sketches from input photographs.

General deep learning-based sketch generation schemes can produce sketch images of improved details. They also present robust generation schemes that can avoid a lot of unwanted artifacts, which are observed in the results of pre-deep learning schemes. However, they still suffer from unwanted artifacts. Another limitation is that their results do not reflect the structure of a sketch such as vanishing points or level of detail.

2.3. Sketch-Specific Deep Learning Schemes

Li et al. [

12] highlighted the importance of edges, boundaries, and contours in sketches, and collected several human-drawn contour images to facilitate better sketch synthesis. They exploited ConditionalGAN [

35] and compared a synthesized sketch with various ground-truth sketch images.

Yi et al. [

13] presented APDrawingGAN, which is a unique structure comprising hierarchical GANs for individual facial components such as hair, eyes, nose and lips. The sketches of the components are synthesized to produce a sketch of higher-quality. Su et al. [

14] proposed MangaGAN to apply a manga-styled sketch from an input image. They observed that experienced manga artists complete a manga-styled face by combining facial parts such as eyes, nose and mouth, etc. Their architecture mimics this observation for producing manga-styled sketches from an input face photo. In order to enhance the fidelity of line strokes, Yi et al. [

13] employed DT loss and Su et al. [

14] employed structural smoothing loss, respectively. Kim et al. [

15] presented a transfer learning scheme that leverages a pseudo face sketch dataset for face sketch synthesis. This technique introduces an innovative approach leveraging existing knowledge from similar domains. Peng et al. [

16] introduced a two-stage strategy for face sketch synthesis, while the Dual Conditional Normalization Pyramid (DCNP) [

17] was proposed to synthesize face sketch images using reference samples.

In addition, progress in other domains is observed with the introduction of the Self-Sparse GAN [

19] and Koh et al.’s work [

18] for architectural design sketch synthesis. Even though the results from these studies are visually convincing, they still have limitations in aligning lines in their sketch images and those in real scene collected from Google maps.

Sketch-specific deep learning-based generation schemes successfully produce visually pleasing sketch images in a specific domain such as portrait. However, they require a paired dataset composed of a portrait image and its corresponding sketch to train these models. They also do not support various domains such as landscape.

2.4. Diffusion Model

Recently, diffusion models have been showing remarkable performance in image synthesis. A consensus has been reached that diffusion models beat GANs [

29] in fidelity and diversity.

DDPM [

30], DDIM [

31], and SDE [

32] provide novel methods that process to transform a simple initial random distribution (like Gaussian noise) into a complex data distribution (like the distribution of natural images).

Relatively few studies have been devoted to sketch synthesis using a diffusion model. Jain et al. [

33] introduced Vectorfusion that synthesizes an image as a scalable vector graphics (SVG) format leveraging text-to-image diffusion model trained with vast captioned datasets. They achieved their goal using an optimization rasterizer which converts an SVG image to a pixel image. Wang et al. [

24] presented SketchKnitter that applies the diffusion process on pen states and stroke points. A sketch is synthesized from noisy scattered stroke points. These points are gradually deformed and then finally become a recognizable sketch. Xing et al. [

25] developed DiffSketcher that demonstrates impressive results in synthesizing a vectorized sketch. They successfully conveyed prior knowledge of a pretrained diffusion model to its differentiable rasterizer to synthesize a vectorized sketch.

“Stable Diffusion” [

26] has shown remarkable results in txt2img generation. “Stable Diffusion” successfully generates images from texts and some other options explored by members of the open community, such as Automatic1111 [

38] and civitai [

39]. Automatic1111 flourishes Stable Diffusion by attaching various extensions. Among the various extensions, we highlight ControlNet [

34] and LoRA extensions [

40,

41].

ControlNet [

34] offers controllability to Stable Diffusion to synthesize images with “hints”, such as canny edge, depth map, etc. LoRA [

40,

41] offers the ability of customization to Stable Diffusion to learn new objects, persons and styles efficiently.

Diffusion model-based schemes can produce a semantically meaning sketch without a sketch dataset. They also present both image-to-sketch and text-to-sketch generation frameworks. Their results are more visually pleasing than those from the schemes belonging to other categories. However, they show unwanted artifacts such as color-remaining problems. They also do not reflect artistic sketch drawing techniques such as vanishing points or level of detail.

3. Overview

Our model employs Stable Diffusion as a backbone network and ControlNet to control the edge map and 3CPM. We further improve our model using LoRA to enhance the quality of the results. Our approach resembles Ryu et al.’s work [

41], where LoRA components are added to calculate query, the key value in every attention module.

We implement both text-to-sketch and image-to-sketch approaches. In both case, a guide token, “*”, is used so that the backbone network synthesizes a sketch. During the inference period, we use “ldsktch” as “*”.

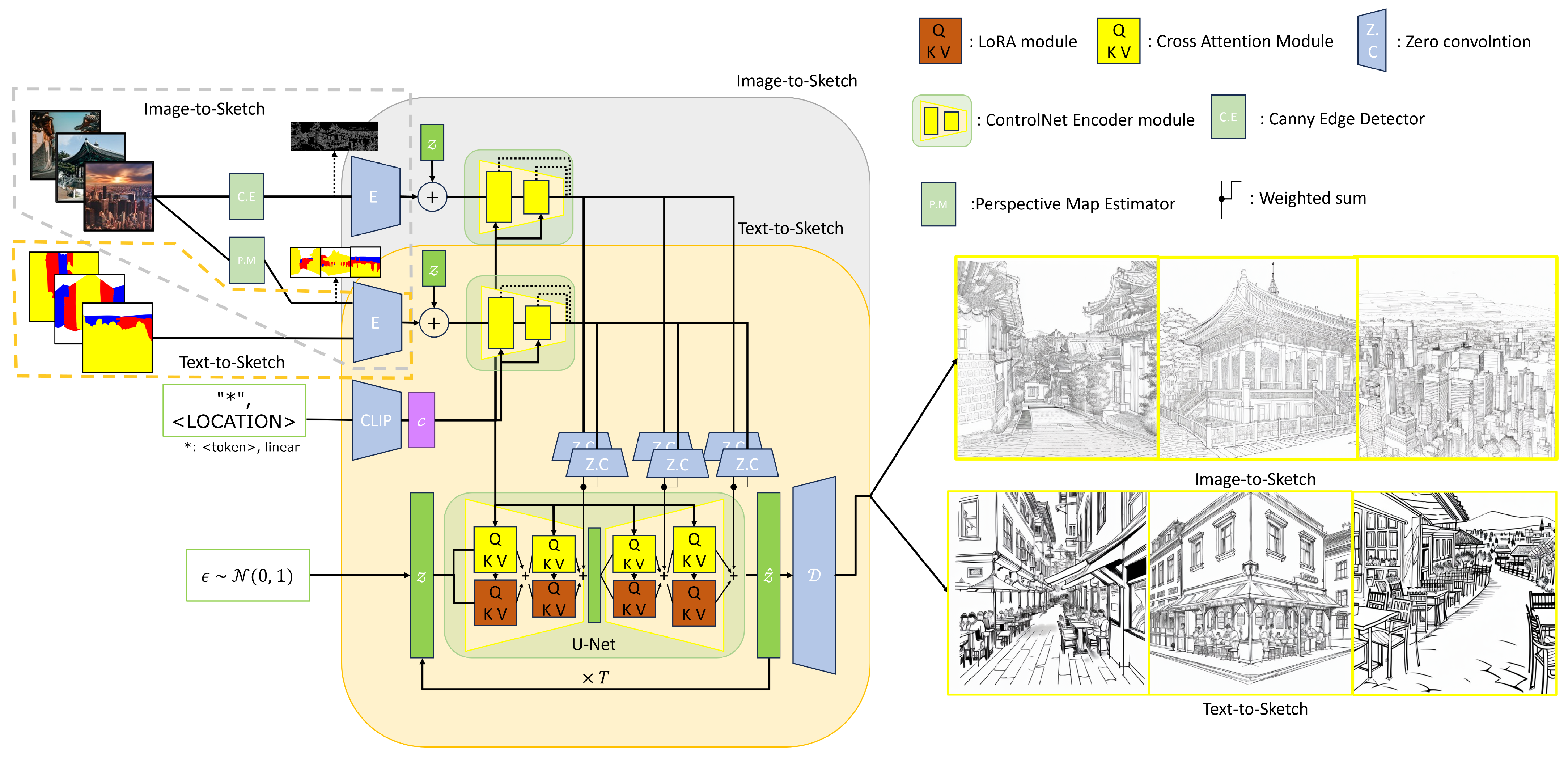

Figure 2 illustrates the overview of our framework. In text-to-sketch generation, which is in the orange box, prompt and 3CPM are fed into our model. The prompt, which is given as

“*, cafe”, is encoded into tokens and the tokens are fed into both ControlNet and Stable Diffusion. 3CPM is optional and can be constructed by a perspective map estimator (PM) or an artist.

In image-to-sketch generation, prompt and an image are given as an input. A prompt is given as “*”. An image is fed into two preprocessors, a canny edge detector and a perspective map estimator, and then a preprocessed edge map and a 3CPM are fed into two different ControlNet models.

In the denoising step, noise which is fed into UNet of Stable Diffusion is considered as a latent seed, .

Text tokens,

and

, are fed into cross-attention and LoRA. Two results of each module are added and a feature from ControlNet is added to the outcome of cross-attention and the LoRA module of the decoder of UNet in Stable Diffusion. After several denoising steps, the latent vector is gradually denoised. The results from both text-to-sketch and image-to-sketch frameworks are presented in

Figure 2.

4. Method

4.1. Preliminaries on Stable Diffusion

The architecture of Stable Diffusion is a variation of variational auto-encoder (VAE) [

42], which employs a re-parameterization strategy. On the contrary, Stable Diffusion employs a denoising process.

Stable Diffusion belongs to the category of Latent Diffusion Models (LDM) where a denoising process is executed in latent space to reduce training time and to enhance training stability. Stable Diffusion encodes a

image in a shape of

and then diffuses to finally form

resolution. The diffusion process is performed in the inner UNet [

43]. The UNet consists of an encoder,

, a decoder,

, and a middle block, which consists of several ResNets [

44] and ViTs [

45] models. To synthesize a sketch, conditions are fed into cross-attention modules in the ViTs. Every cross-attention module in transformers in each layer is calculated as follows:

where

, and

is a condition vector such as text embedding.

The training phase is composed of two steps. The first step is the training of the encoder that maps input images to the latent space and the decoder that recovers the latent vector to the image.

where

is an input image and

is an reconstructed image.

The second step is the inner UNet, which is trained minimizing loss term such that

where

is an encoder as VAE,

is sampled from normal distribution and

is the UNet of Stable Diffusion.

Since Stable Diffusion is basically a text-to-image model, it processes text as a condition. For this purpose, Equation (

3) is manipulated as follows:

where

is the text condition.

4.2. A Three-Channel Perspective Map (3CPM)

Artists who draw landscape sketches align objects in their sketch along the vanishing points of the scene and draw the objects in perspective levels of detail. For example, they view a scene and determine vanishing points. Afterward, they draw a horizontal line and perspective lines extended from the vanishing points, explicitly or implicitly. Finally, they split the objects into perspective levels of detail by distance and draw them according to their lines and separations.

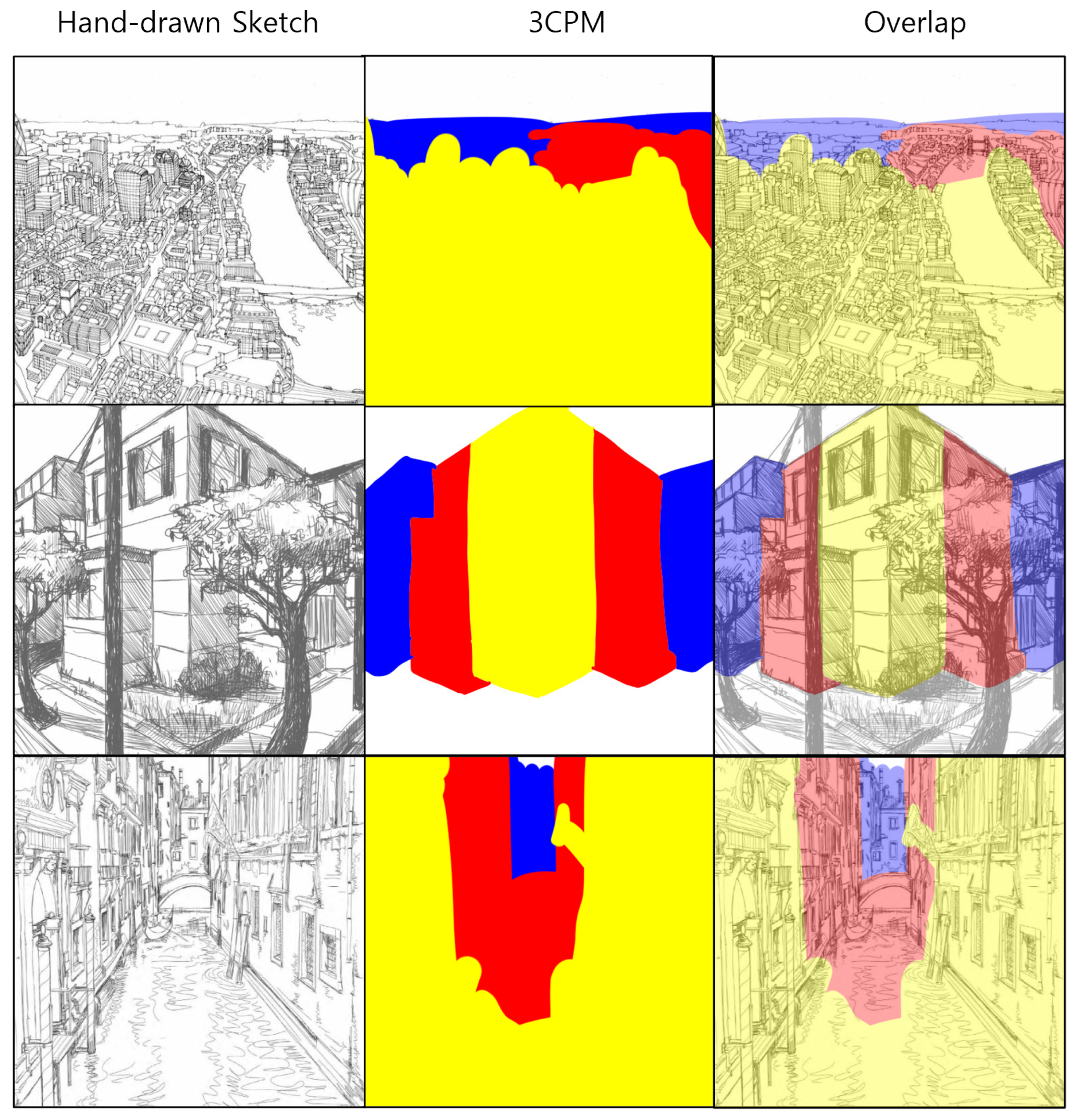

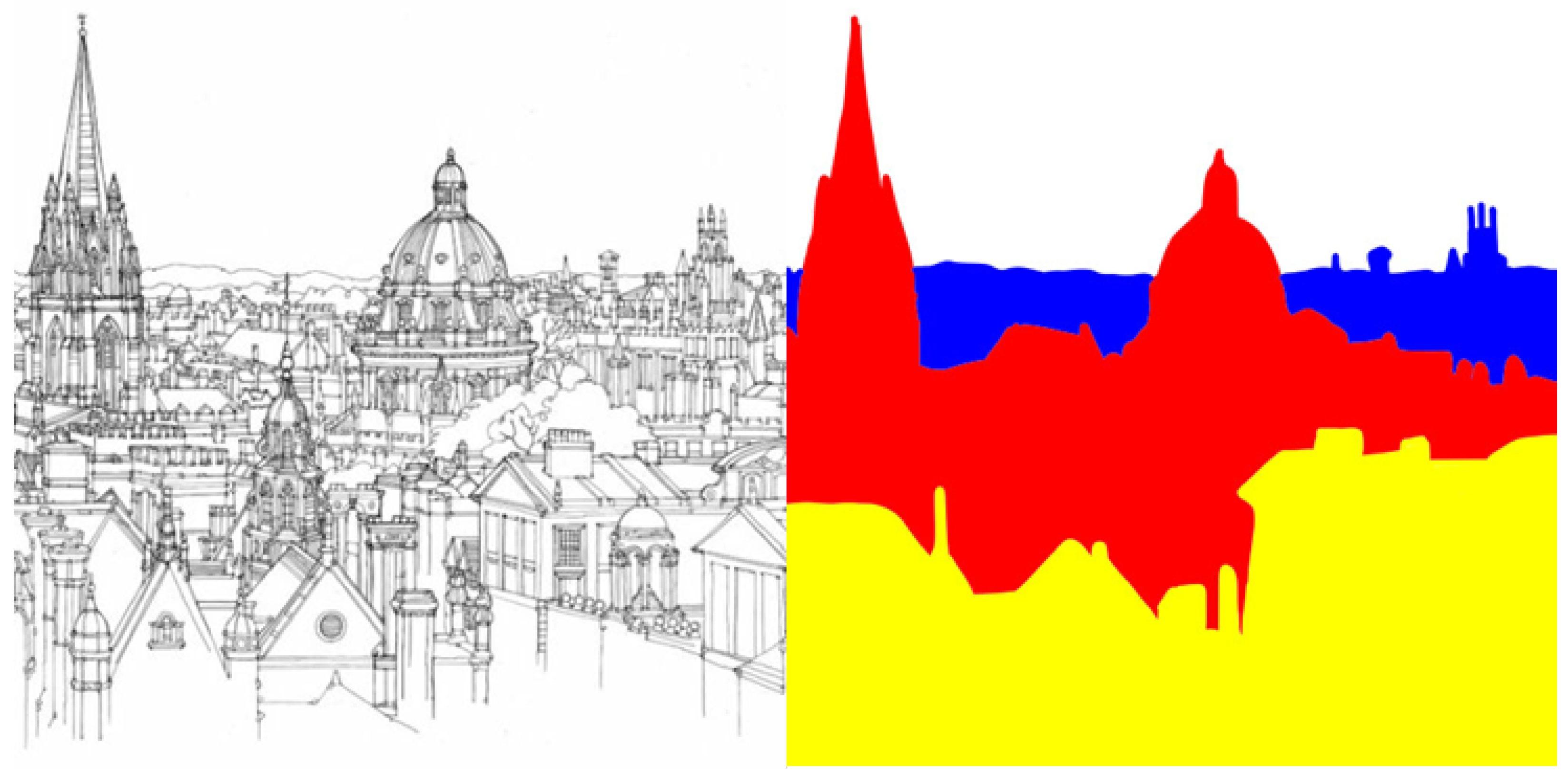

We propose the 3-channel perspective map (3CPM) in order to the artistic sketch drawing technique. 3CPM is a segmentation map which organizes the scene into three categories: background, midrange, foreground. They are represented in different colors. Yellow is for the foreground, red is for the midrange and blue for the background (see

Figure 3). 3CPM can be easily crafted by hand or can be produced by segmenting the depth map of a scene into 3 parts with respect to pixel value of the distance map extracted by a depth map estimator.

In our study, we train ControlNet by collecting 3CPMs from hundreds of artistic open-source sketches. An example pair of our dataset is illustrated in

Figure 4. ControlNet incorporates Stable Diffusion for sketch image generation.

The inner UNet

consists of an encoder and a decoder. Since this architecture is designed following UNet [

43] architecture, each layer of the encoder is connected to each corresponding layer of the decoder via skip connection. Therefore, we can say that

is the corresponding pair of

.

For the training phase of ControlNet, encoder

E of

is copied as

, and we denote each layer of

as

. A specific condition is that 3CPM or a Canny edge map, which is notated as

.

, are fed into the zero convolution layer,

, and added to latent vector

. Finally, it is fed into

.

is the notation of every intermediate term of every

for brevity.

where

consists of intermediate terms

of every

and

is fed into the corresponding

layer in order to feed into the corresponding decoder layer,

. We can formulate this process as follows:

where

is the intermediate term of

and ControlNet is trained with a loss term that is employed in Stable Diffusion. The loss term to train ControlNet for 3CPM is defined as follows:

where

.

4.3. Enhancing Sketch Quality

We aim to implement a framework that synthesizes artistic landscape sketch. As a backbone network that fits into our framework, we employ a diffusion model able to synthesize high fidelity and diverse images, specifically widely used and released as an open-source model, Stable Diffusion. However, vanilla Stable Diffusion is less feasible to synthesize artistic sketch.

In

Figure 5, four sketches in Columns 1 and 2 are synthesized with vanilla Stable Diffusion with prompt “<LOCATION>, sketch, lineart”. <LOCATION> is “duomo”, “landscape”, “city”, “cafe” for each result. These four results have low quality, and two sketches in Column 3 are synthesized by vanilla Stable Diffusion with ControlNet that uses canny edge map. Unfortunately, colors are left on the sketches.

To address these two problems, (1) low quality and (2) colors on the sketch, we fine-tune our backbone model following an efficient fine tuning method, LoRA, using collected artistic landscape sketches.

Low Rank Adaptation, LoRA, is proposed in the paper of Hu et al. [

40]. They propose a method that trains its tiny LoRA modules with gigantic weights. A tiny LoRA module can be formulated as follows:

, where

and

, and a gigantic weight

. Finally, a layer that LoRA modules adapt can be formulated such that

In the fine-tuning phase, a gigantic weight, W, is locked, and only LoRA modules, , are trained.

Ryu et al. [

41] provide scripts that adapt this method to Stable Diffusion. They implement

and

V of cross-attention modules of the inner UNet of Stable Diffusion which are added by LoRA modules.

and

v can be formulated as follows:

The loss term is the same as Stable Diffusion, since it is a fine-tuning procedure.

As a result, we fine-tune vanilla Stable Diffusion that works efficiently and resolves the existing limitations of the existing diffusion-based sketch generation schemes including low quality and color blurring on a sketch.

4.4. Multimodal Sketch Generation

We implement text-to-sketch and image-to-sketch functions using ControlNet and LoRA trained in the previous stage. Both text-to-sketch and image-to-sketch functions are commonly assigned prompt “∗” in order to guide sketch synthesis. In the text-to-sketch mode, location words such as “cafe”, “station”, and “NewYork” can be attached after a basic prompt. In the case of the image-to-sketch mode, a prompt is fixed as “∗” and information of a scene is given through preprocessed information such as 3CPM or canny edge map.

4.4.1. Text to Sketch

In the text-to-sketch mode, a basic prompt such as “*, lineart” and a location prompt such as “cafe” or “city” are assigned as an input. To synthesize a sketch without an input photograph, only seed noise and a prompt are fed into our framework. Sampling formulation for this process is presented as follows:

Sampling formulation with 3CPM can be presented as follows:

where

is a denoising scheduling parameter with respect to time

t and

.

is a latent vector,

is a text condition,

is an encoder of the inner UNet,

t is time sampled from

,

is an encoder of ControlNet, and

is 3CPM.

is variance according to time step

t.

4.4.2. Image to Sketch

In the image-to-sketch mode, a basic prompt and an input photograph are given to our framework. The input photograph is fed into the preprocessing modules including a Canny edge estimator and a perspective map estimator. The preprocessed information is fed into ControlNet. Notably, since two ControlNet models trained using different schemes are employed, two outcomes from ControlNets are merged through a weighted average scheme and are fed into the inner UNet of our framework. The weights for different ControlNets are and , respectively.

Finally, a sketch is synthesized using the following sampling formulation:

where

and

is 3CPM,

is Canny edge map,

is encoder of ControlNet trained with canny edge map. Other variables are identical to the case of text-to-image generation.

Finally, latent vector, which is recovered by decoder of VAE

, is defined as follow:

where

is sampled from

, which is noise, by

T times.

5. Implementation and Results

We train and test our framework in Naver Clova NSML cloud environment. CPU is Intel(R) Xeon(R) Gold 5220 CPU @ 2.20GHz with 16 cores, GPUs are two Tesla V100-SXM2-32GB.

To fine-tune and test our model, we employ the Automatic1111 [

38] open-source framework, which supports not only web UI but also API so that we can use Stable Diffusion with some snippets. Furthermore, it provides various extensions by combining ControlNet and LoRA with Stable Diffusion.

5.1. Results

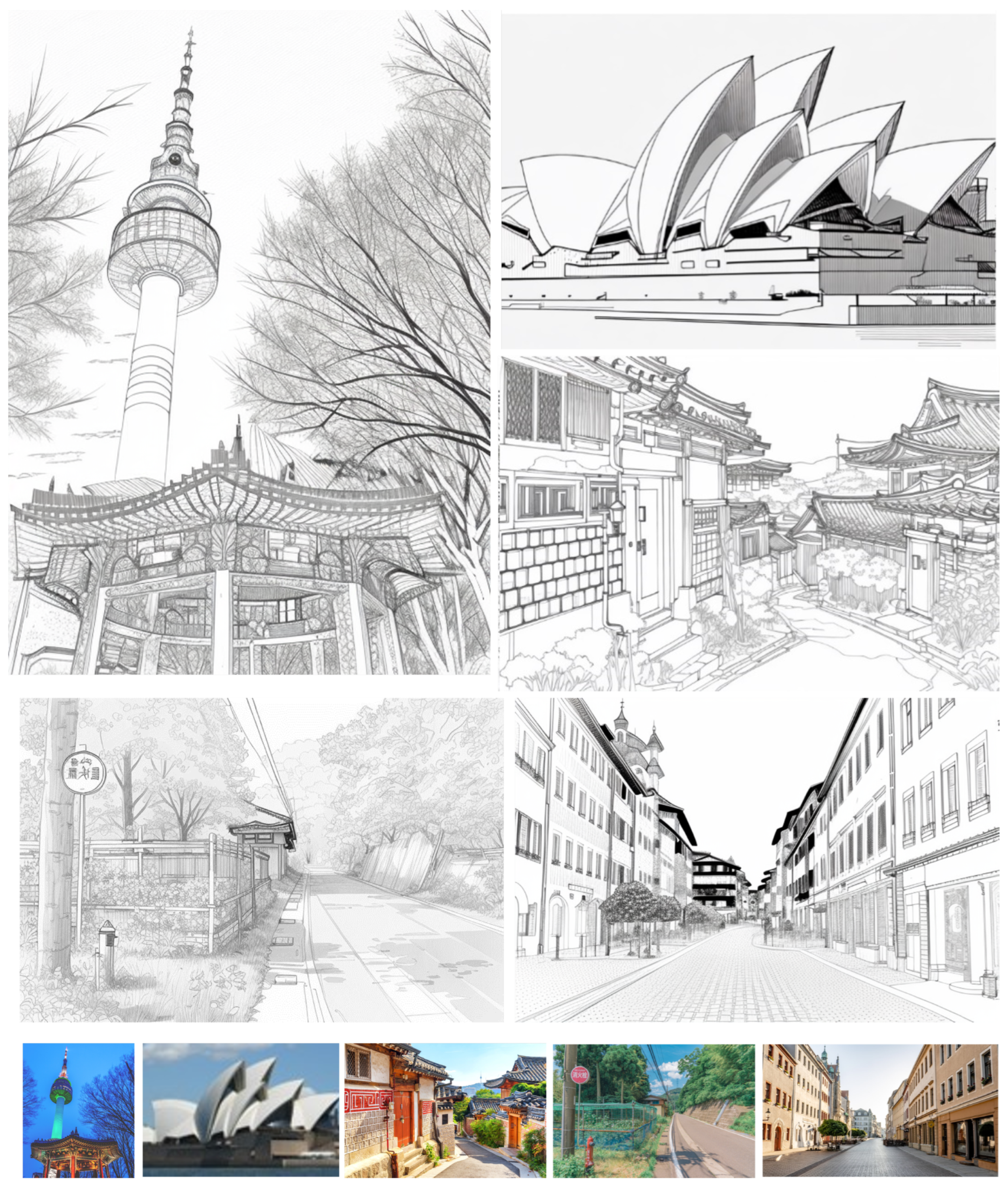

We present two categories of sketch images synthesized using our framework. In

Figure 6, sketch images synthesized from input images are presented. We apply five landscape photographs and produce sketch images. The details of the photograph such as trees and brick tiles are successfully generated. The objects in the foreground are illustrated in detail and those in the background are abstracted. In

Figure 7, sketch images generated from keywords are suggested. As input, we employ the following keywords in different 3CPMs and numbers of viewpoints: “duomo”, “station”, “New York”, “India”, “Tokyo”, “Shanghai”, “London”, “cafe”, “amusement park”, “space station”, “auditorium”, “city hall”, and “university”. In addition to these keywords, we present three different 3CPMs, which lead different sketch images.

5.2. Dataset

We collect 147 artistic landscape sketches for training and 12 images for testing from [

46,

47]. Additionally, we produce hand-crafted 3CPMs for each collected sketch to train ControlNet. Therefore, we use 147 sketches to train LoRA and 147 pairs of sketches and their corresponding 3CPMs to train ControlNet, and we use 12 landscape images to test our framework.

To evaluate our framework, we estimate Sketch-FID. We download an imagenet-sketch dataset from kaggle [

48] and train ResNet50 [

44].

5.3. Hyperparameters

In order to train LoRA, we set the rank of LoRA, r, to eight and attach LoRA modules only on of UNet of our framework. We set the batch size as four, epochs as 500, learning rate as 0.0001, and network as one. We employ a cosine LR Scheduler whose input image size is .

A prompt used for training LoRA is “ldsktch”, which is a guide token. When it comes to synthesizing, text-to-sketch and image-to-sketch modes use a default prompt, “ldsktch”, that guides our framework to synthesize the result in an artistic landscape sketch style. The text-to-sketch mode uses a location prompt in addition to the default prompt. A location prompt specifies the keyword of a place including “cafe”, “city” or “beach”, etc.

To train ControlNet, we employ Stable Diffusion v1.5. We set the batch size as four, epochs as 1000, learning rate as 0.00085, which is gradually increased to 0.012 using linear scheduler, image size as 512 by 512, and latent size as 64.

A prompt used for training ControlNet is “landscape sketch line-art, drawing detail is separated by distance”.

5.4. Comparison

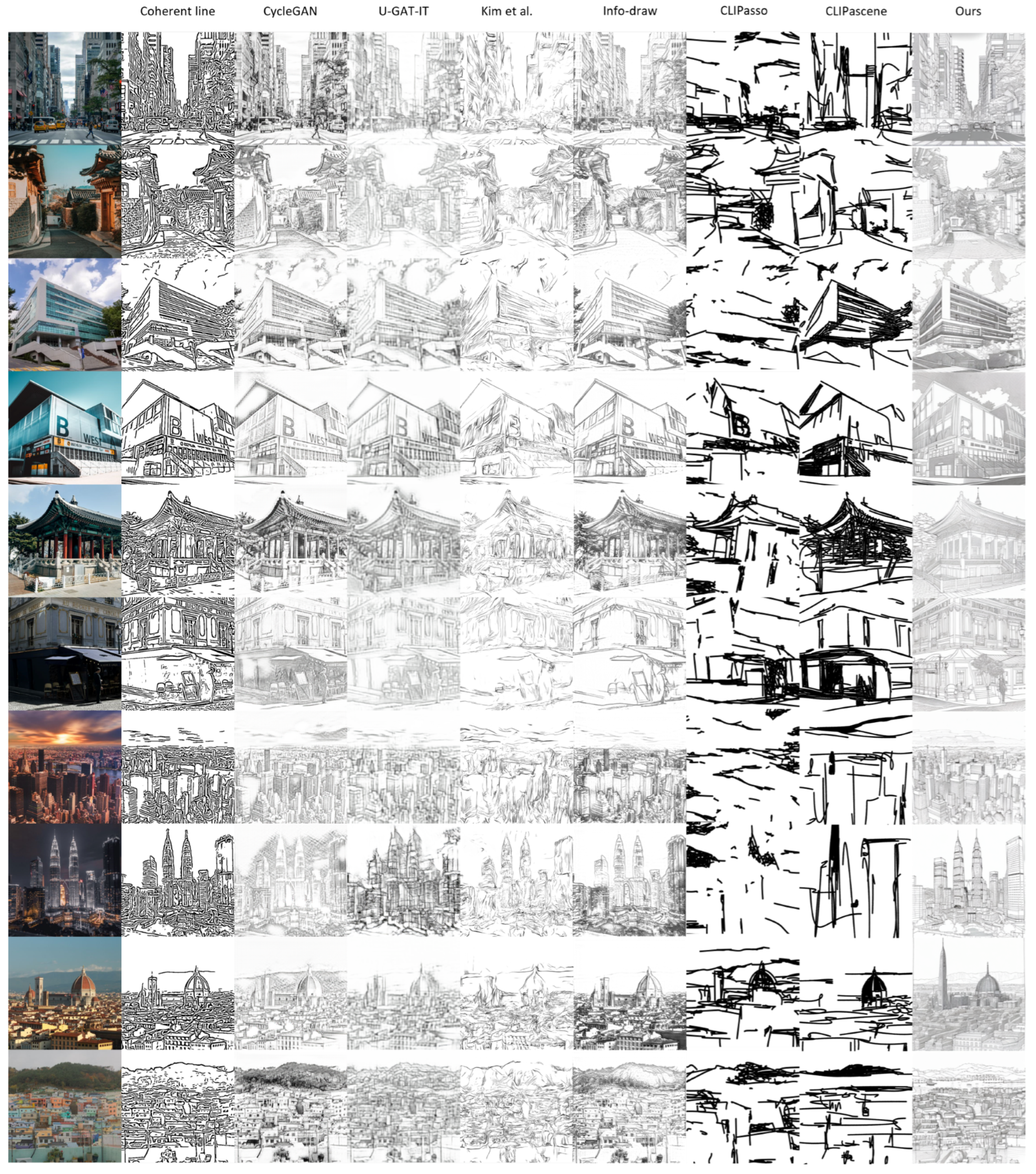

We compare our results produced by the image-to-sketch framework and the text-to-sketch framework. For the comparison of image-to-sketch framework, we sample seven important studies that generate sketch images [

1,

7,

11,

15,

21,

22,

23]. Among various categories of sketch synthesis studies, a Coherent Line [

1] is selected from pre-deep learning schemes, and CycleGAN [

7] is from general deep learning schemes. U-GAT-IT [

11] and Kim et al.’s [

15] are from sketch-specific deep learning schemes. Chan et al.’s [

21], Vinker et al.’s [

22] and Vinker et al.’s [

23] are from diffusion model-based schemes.

Figure 8 illustrates the sketch images from these schemes and ours. For the comparison of text-to-sketch framework, we sample two important studies [

25,

26].

Figure 9 illustrates the sketch images produced by these schemes and ours.

6. Evaluation

We evaluate sketch images synthesized using our framework using both a quantitative approach and a qualitative approach. In quantitative evaluation, we compare the results of the sampled studies [

1,

7,

11,

15,

21,

22,

23] with ours. Since these studies produce sketch images from an input photograph, we evaluate sketch images produced using our image-to-sketch framework. In qualitative evaluation, we evaluate both image-to-sketch results and text-to-sketch results. We hire twenty human participants and execute surveys on the results.

6.1. Quantitative Evaluation

For the seven important studies presented in

Figure 8, we estimate the following metrics, which have been frequently used for the evaluation of the synthesized images in many studies:

FID (Frechet Inception Distance): a metric that evaluates the quality and diversity of generated images, particularly in the context of generative models including GANs and diffusion models. FID (Frechet Inception Distance) is estimated for trained ResNet50 [

44] with an ImageNet-Sketch [

49] dataset.

ArtFID: an enhanced FID metric that measures the similarity of the contents of a stylized image and its original image. It not only calculates FID but also considers identity consistency between an input image and a synthesized sketch.

CLIP (Contrastive Language-Image Pre-training) score: a metric that measures text-to-image framework similarity. We extract features from both input photograph and sketch image using the CLIP encoder and compute a cosine similarity from the features.

PSNR (Peak Signal-to-Noise Ratio): a metric that measures the quality of a reconstructed image by comparing it to the original image.

SSIM (Structural Similarity Index): a metric that evaluates the similarity between two images considering luminance, contrast, and structure.

MS-SSIM (Multi-Scale Structural Similarity Index): an extension of the traditional SSIM metric. MS-SSIM takes into account variations in structure and texture across multiple scales of two images.

We estimate these metrics on the images in

Figure 8 and present the values in

Table 1. According to

Table 1, our result shows best scores for three metrics including FID, ArtFID and CLIP scores and a second-best score for PSNR. Our scheme is ranked low for SSIM and MS-SSIM. Among the six metrics, our result records three best ranks and one second best rank. Therefore, we conclude that our scheme produces higher-quality sketch images than the compared existing studies.

According to our comparison in

Table 1, our results show lower FID and ArtFID scores than other compared models. In our analysis, both FID and ArtFID measure the distances of two images. Therefore, the coincidence of the structures of the images affects both FID and ArtFID. Furthermore, the absence of unwanted artifacts in the sketch image also affects them. Since the structures of our results show best coincidence with those of the input photographs and the unwanted artifacts of our results are very rare, our results show lowest FID and ArtFID scores.

6.2. Qualitative Evaluation

As a qualitative evaluation, we execute a user study by hiring twenty participants. Seventeen of them are in their twenties and three are in their thirties. Eleven of them are female and nine are male. We present three questions as follows:

- Q1:

Evaluate the visual quality of the sketch image in a ten-point metric. Mark 10 for the best and mark 1 for the worst.

- Q2:

Evaluate the artifact in the sketch image in a ten-point metric. Mark 10 for an image free from artifacts and mark 1 for the image full of artifacts.

- Q3:

Evaluate the preservation of the content in the sketch image in a ten-point metric. Mark 10 for the image whose content coincides with that of the input and mark 1 for the image whose content is not preserved at all.

We execute our user study on both image-to-sketch and text-to-sketch frameworks.

6.2.1. Image to Sketch

For the user study on image-to-sketch frameworks, we sample the images in

Figure 8 and pose three questions to the participants. The scores averaged over the images for a sketch generation scheme are presented in

Table 2. In

Table 2, we can conclude that our scheme shows best results for the sampled image-to-sketch studies [

1,

7,

11,

15,

21,

22,

23].

6.2.2. Text to Sketch

For the user study on text-to-sketch frameworks, we sample the images in

Figure 9 and pose questions Q1 and Q2 to the participants. Since the text-to-sketch framework does not require an input photograph, question Q3 is not valid. The scores averaged over the images for a sketch generation scheme are presented in

Table 3. In

Table 3, we can conclude that our scheme show best results for the sampled text-to-sketch studies [

25,

26].

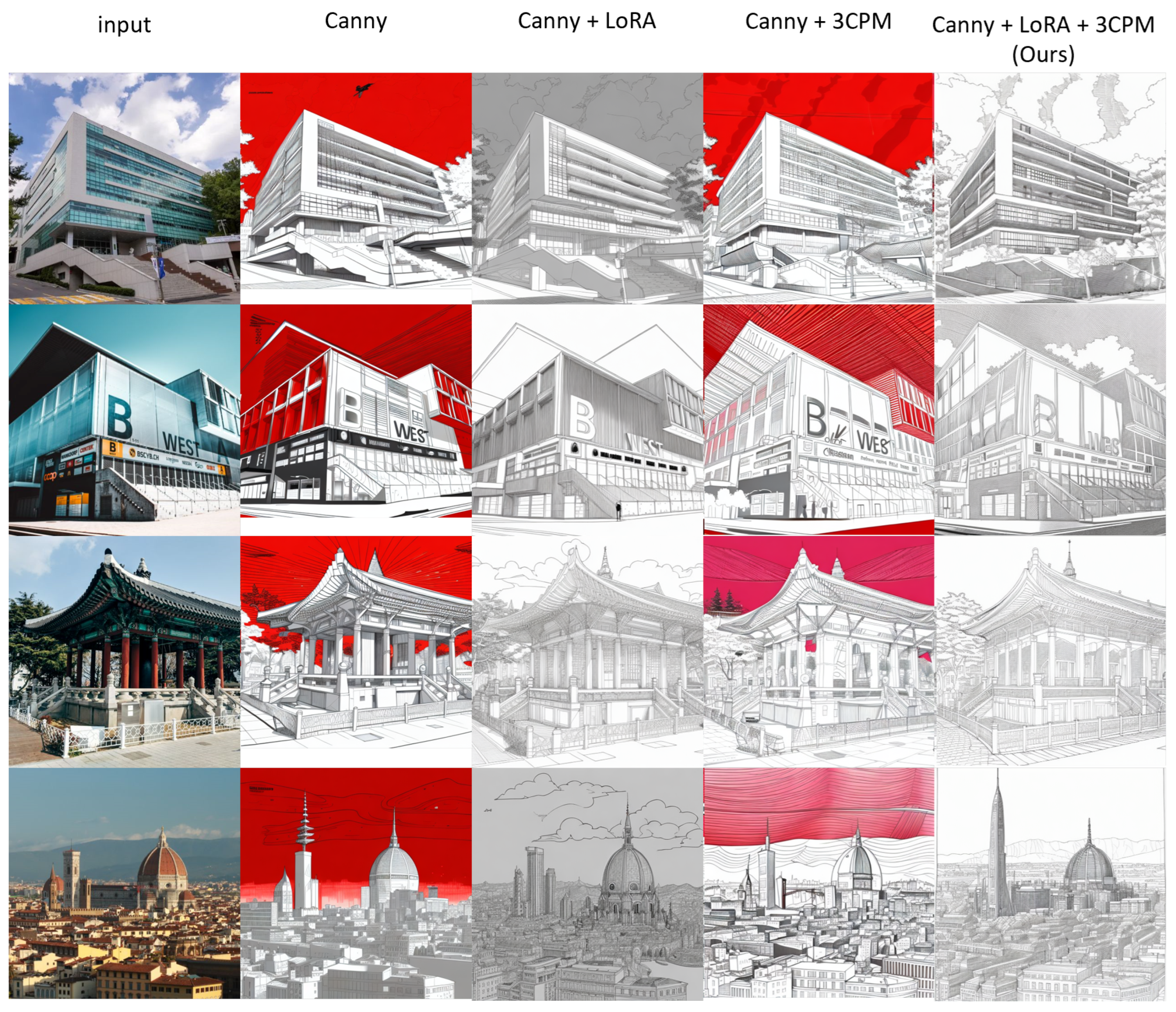

6.3. Ablation Study

In the ablation study, we combine various configurations of our framework and synthesize sketch images. We identify the configurations as Canny edge, LoRA, and 3CPM. We combine these configurations as Canny only, Canny + LoRA, Canny + 3CPM and Canny + LoRA + 3CPM. The results of this ablation study are illustrated in

Figure 10 and presented in

Table 4. As illustrated in

Figure 10, our framework with Canny only and Canny + 3CPM cannot successfully manage the color remaining problem, which results in red sky. The Configuration of Canny+LoRA resolves the color remaining problem. However, they cannot successfully produce details of sketch images. Our approach that combines Canny and LoRA and 3CPM simultaneously can produce sketch images that describe the details of the objects and resolves the color remaining problem.

6.4. Limitation

Our framework shows several limitations. The major limitation is that the results of our framework depend on the Canny edge. The scenes whose Canny edges are clear show better sketch results than those whose Canny edges are obscure and noisy. Another limitation comes from the pre-trained Stable Diffusion model, which is the backbone network of our framework. Since the backbone network is pre-trained, those scenes in the dataset used for the pre-training affect our sketch images. Therefore, our framework sometimes produces unexpected objects in the sketch. Finally, our framework is short for controlling the level of abstraction or the sketch style in sketch synthesis.

7. Conclusions and Future Work

In this paper, we present DALS: a Diffusion-based Artistic Landscape Sketch generation framework. Even though landscape sketch is an important genre of sketch artwork, few studies have tried to learn and apply artistic landscape sketch drawing techniques such as vanishing point and level of detail. The pre-deep learning schemes have limitations in conducting artistic techniques and in controlling unwanted artifacts. The deep learning schemes have limitations in collecting proper training datasets for sketch generation models. Our framework analyzes artistic landscape drawing techniques and applies techniques such as vanishing points and a three-channel perspective map (3CPM) for generating sketch images. Furthermore, since our framework requires a relatively small-sized dataset, we employ a pre-trained Stable Diffusion model as a backbone network in our framework. Our framework can produce sketch images in bi-modal frameworks: image to sketch and text to sketch.

Our first plan is to extend the capability of our framework to cover more diverse landscape images by collecting diverse sketch datasets. We also plan to devise and implement an optimal structure that can produce and control the sketch images in various formats including vectorized sketch. We also plan to enhance our framework to control the levels of abstraction and style of sketch.