1. Introduction

The era of information technology allows the collection of massive amounts of data, and association networks between variables have gained wide attention from researchers. Under the most common assumption in statistics and machine learning applications, namely that variables follow a multivariate normal distribution, Gaussian graphical models have been widely applied to measure association networks with complex interaction patterns among a large number of continuous variables. In particular, a Gaussian graphical model describes the conditional dependencies among random variables using the inverse of the covariance matrix, also known as the precision matrix or concentration matrix. When the number of observations n is larger than the number of variables p, the inverse of the maximum likelihood estimate of the covariance matrix is considered an optimal estimate of the precision matrix. However, under high-dimensional settings with p > n, the precision matrix is usually sparse, with many zero elements due to conditional independencies, and the maximum likelihood estimate of the covariance matrix is not invertible, so this estimate of the precision matrix is unavailable.
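The non-invertibility under p > n can be checked numerically. The following is a minimal sketch with simulated data; the sizes `n = 20` and `p = 50` are arbitrary assumptions, and `np.cov` uses the n - 1 divisor rather than the maximum likelihood divisor n, which does not change the rank.

```python
import numpy as np

rng = np.random.default_rng(0)
n, p = 20, 50                      # fewer observations than variables
X = rng.standard_normal((n, p))    # simulated data, one row per observation

S = np.cov(X, rowvar=False)        # p x p sample covariance matrix
rank = np.linalg.matrix_rank(S)    # at most n - 1 after centering

# A p x p matrix with rank below p is singular, so it has no inverse.
print(rank, "<", p)
```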

Most existing methods for sparse precision matrix estimation and structure learning can be categorized as either a likelihood-based approach, where a penalized likelihood function is maximized for estimation, as in the graphical Lasso [1,2], or a regression-based approach, which estimates sparse regression models separately through neighborhood selection, as in [3,4]. Under the Bayesian framework, algorithms have been proposed through both approaches with applications of the horseshoe prior [5], the state-of-the-art shrinkage prior, using multivariate normal scale mixtures. Wang [6] proposed a fully Bayesian treatment with a block Gibbs sampler to maximize the penalized log-likelihood through column-wise updating. Li et al. [7] incorporated horseshoe priors into Wang’s work. Williams et al. [8] used the horseshoe estimates of projection predictive selection given in Piironen and Vehtari [9] to determine the precision matrix structure. Most selection methods using shrinkage priors cannot shrink small values to exactly 0. Selection is therefore usually implemented with a pre-specified threshold on the parameters or with cross-validation to choose a tuning parameter that maximizes the log-likelihood of either the precision matrix or the regression models.

When practicing statistics on real-world data, missing data are ubiquitous and inevitably obstruct the application of existing methods. Simply ignoring missing data is not recommended, especially for high-dimensional data with a small number of observations. In addition to the loss of information, the complete cases or the available cases of data missing at random (MAR) do not correctly represent the whole population, as they are not sampled at random [10,11]. Single imputation, which replaces each missing value by a single number such as the mean of the observed data, tends to distort the data distribution and underestimate the variance, which is crucial for covariance or precision matrix estimation. Thus, it is necessary to find an appropriate approach to deal with the missing values and to estimate the model.

Under incomplete high-dimensional data settings, only a few methods have been proposed for precision matrix estimation and covariance selection. Employing the graphical lasso with an $\ell_1$-penalized term, missGLasso, proposed by Städler and Bühlmann [12], is a likelihood-based method that estimates the precision matrix with missing values imputed from conditional distributions. Kolar and Xing [13] improved this work by plugging the sample covariance matrix into the penalized log-likelihood maximization problem. Following this, the cGLasso proposed by Augugliaro et al. [14,15,16] handles censored data, missing data, or a combination of both. As a local optimization method, the EM algorithm used by such likelihood-based methods can be slow in handling high-dimensional data, and its stability is not guaranteed.

To resolve this challenge, we develop an approach that estimates the precision matrix, imputes missing values, and performs covariance selection. Yang et al. [17] showed that the simultaneously impute and select (SIAS) method outperforms the impute then select (ITS) method for the problem of fitting a linear regression model using a traditional dataset with n > p. Inheriting the advantage of SIAS, Zhang and Kim [18] proposed an approach to imputation and selection for the problem of fitting linear regression models using high-dimensional data. We extend these approaches to the problem of estimating a sparse precision matrix on high-dimensional data. Similar to SIAS and the approach proposed in Zhang and Kim [18], the key feature of this paper is to nest both data imputation and model shrinkage estimation in a combined Gibbs sampling process, such that we optimize imputation for model estimation without losing valuable information. We use multiple imputation for the missing values and the horseshoe shrinkage prior for precision matrix estimation. After that, we introduce 2-means clustering into the covariance selection by clustering shrinkage factors into signal and noise groups, which, as a post-iteration selection, is fast and efficient. This study fills the gap in sparse precision matrix estimation and covariance selection for incomplete high-dimensional data under the Bayesian framework.

The remainder of this paper is organized as follows. In Section 2, we start with a brief review of regression-based precision matrix estimation for the Gaussian graphical model. Then, we implement the linear regression model estimation using the horseshoe prior in the precision matrix estimation and introduce the covariance selection using 2-means clustering. After that, we propose our approach to simultaneously impute missing values and estimate the precision matrix, followed by the covariance selection. In Section 3, we conduct extensive simulation analyses to show the efficiency and accuracy of our proposed algorithm in comparison to available methods. In Section 4, we illustrate the algorithm on real data and show its necessity. In Section 5, we provide further discussion.

2. Materials and Methods

In this section, we define notation with a brief review of the regression-based precision matrix estimation of the Gaussian graphical model and its covariance selection through neighborhood selection. Our approach to estimating the sparse precision matrix is described together with 2-means clustering covariance selection. We then propose the multiple imputation with Gaussian graphical model estimation by horseshoe (MI-GEH) algorithm.

2.1. Gaussian Graphical Model Estimation and Neighborhood Selection

Consider random variables $X = (X_1, \dots, X_p)$ following a multivariate normal distribution with mean $\mu$ and covariance matrix $\Sigma$. The precision matrix $\Omega = (\omega_{ij})$ is the inverse of the covariance matrix, $\Omega = \Sigma^{-1}$.

In the form of an undirected graph $G = (V, E)$ with a set of vertices $V = \{1, \dots, p\}$ and a set of edges $E$, the Gaussian graphical model characterizes the conditional relationships of the variables $X_1, \dots, X_p$. If two variables $X_i$ and $X_j$, $i \neq j$, are conditionally dependent given all remaining variables, which corresponds to a non-zero element $\omega_{ij} \neq 0$ in the precision matrix, then an edge in $E$ connects vertices i and j as neighbors in $G$. Conversely, any unconnected pair of vertices indicates conditional independence, corresponding to a zero element in the precision matrix.

Neighborhood selection, a method for covariance selection proposed by Meinshausen and Bühlmann [3], aims to identify, for each node, the smallest subset of vertices such that, given the variables indexed by the selected vertices, the node is independent of all remaining variables. This can be approached by fitting node-wise regression models. A linear regression of $X_i$ on all remaining variables is

$$X_i = \beta_{i0} + \sum_{j \neq i} \beta_{ij} X_j + \varepsilon_i, \tag{1}$$

where $\beta_{ij}$ are the regression coefficients and the residual $\varepsilon_i \sim N(0, \sigma_i^2)$. Then, using the conditional normal distribution, the elements of the precision matrix $\Omega = (\omega_{ij})$ can be written in terms of the regression coefficients and residual variance [19]:

$$\omega_{ii} = \frac{1}{\sigma_i^2}, \qquad \omega_{ij} = -\frac{\beta_{ij}}{\sigma_i^2}, \quad j \neq i. \tag{2}$$

Thus, if $\beta_{ij} = 0$, in other words if variable $X_j$ is not selected into the regression of $X_i$, then $\omega_{ij} = 0$, so that $X_i$ and $X_j$ are not connected in $G$. Recognizing the potential difference between the estimates $\hat{\omega}_{ij}$ and $\hat{\omega}_{ji}$, the final estimate of each off-diagonal element of the precision matrix is their average.
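The link between node-wise regressions and the precision matrix can be verified numerically. The sketch below assumes the standard identities: diagonal entries equal the reciprocal residual variance, and off-diagonal entries equal the negative regression coefficient divided by the residual variance. It uses population quantities computed from a known covariance matrix rather than estimates; the function name `precision_from_regressions` and the 3 x 3 example matrix are illustrative assumptions.

```python
import numpy as np

def precision_from_regressions(Sigma):
    """Rebuild the precision matrix from population node-wise regressions."""
    p = Sigma.shape[0]
    Omega = np.zeros((p, p))
    for i in range(p):
        rest = [j for j in range(p) if j != i]
        # Population coefficients of the regression of X_i on the other variables
        beta = np.linalg.solve(Sigma[np.ix_(rest, rest)], Sigma[rest, i])
        # Residual variance of that regression
        sigma2 = Sigma[i, i] - Sigma[i, rest] @ beta
        Omega[i, i] = 1.0 / sigma2
        Omega[i, rest] = -beta / sigma2
    # With estimated coefficients the two triangles can differ, so average them
    return (Omega + Omega.T) / 2

# Hypothetical sparse precision matrix: variables 0 and 2 are conditionally independent
Omega_true = np.array([[2.0, 0.6, 0.0],
                       [0.6, 2.0, 0.5],
                       [0.0, 0.5, 2.0]])
Sigma = np.linalg.inv(Omega_true)
Omega_rebuilt = precision_from_regressions(Sigma)
```

With exact population inputs the rebuilt matrix matches the original, including the zero entry; with sampled data the averaging step is what reconciles the two estimates of each off-diagonal element.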

Moreover, a difficulty in estimating the precision matrix lies in its positive definiteness constraint. Several works [20,21] have shown that the node-wise regression estimates of the precision matrix are asymptotically positive definite under regularity conditions.

2.2. Gaussian Graphical Model Estimation by Horseshoe Prior

Given the advantages of horseshoe priors in identifying signals in high-dimensional settings by utilizing half-Cauchy distributions for local and global shrinkage parameters [5], Makalic [22] proposed a Gibbs sampler for the linear regression model on standardized data with horseshoe priors on the regression coefficients, and Zhang and Kim [18] modified it for data without standardization. We apply Zhang and Kim’s work to our node-wise linear regressions: for each variable $X_i$, the parameters of model (1) are drawn from their full conditionals, Equation (3). Here, $\lambda_{ij}$ is the local shrinkage parameter of variable $X_j$ with variance $s_j^2$, $\tau_i$ is the global shrinkage parameter in the regression of variable $X_i$, and $\nu_{ij}$ and $\xi_i$ are auxiliary variables for $\lambda_{ij}$ and $\tau_i$. Through the use of horseshoe priors, the local and global shrinkage parameters $\lambda_{ij}$ and $\tau_i$ shrink the coefficients of noise variables towards zero while allowing the coefficients of signals to remain relatively large [5,18,22].
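For orientation, the following is a minimal sketch of a horseshoe Gibbs sampler for a single regression, written in the widely used auxiliary-variable form for standardized data, which is assumed to correspond to the sampler attributed to [22]. The modification of [18] for unstandardized data is not reproduced here. The function names `inv_gamma` and `horseshoe_gibbs`, the arguments, and the initial values are illustrative assumptions, and the response and predictors are assumed to be centered so that no intercept is drawn.

```python
import numpy as np

def inv_gamma(rng, shape, scale):
    """Draw from an inverse-gamma(shape, scale) distribution."""
    return 1.0 / rng.gamma(shape, 1.0 / scale)

def horseshoe_gibbs(X, y, n_iter=1000, burn_in=500, seed=0):
    """Posterior mean of the coefficients under a horseshoe prior (sketch)."""
    rng = np.random.default_rng(seed)
    n, p = X.shape
    sigma2, tau2, xi = 1.0, 1.0, 1.0        # residual variance, global scale, its auxiliary
    lam2, nu = np.ones(p), np.ones(p)       # local scales and their auxiliaries
    XtX, Xty = X.T @ X, X.T @ y
    draws = []
    for it in range(n_iter):
        # beta | rest ~ N(A^-1 X'y, sigma2 A^-1), A = X'X + diag(1 / (tau2 * lam2))
        A_inv = np.linalg.inv(XtX + np.diag(1.0 / (tau2 * lam2)))
        A_inv = (A_inv + A_inv.T) / 2       # remove round-off asymmetry
        beta = rng.multivariate_normal(A_inv @ Xty, sigma2 * A_inv)
        resid = y - X @ beta
        # sigma2 | rest ~ IG((n + p) / 2, RSS / 2 + sum(beta^2 / (tau2 lam2)) / 2)
        sigma2 = inv_gamma(rng, (n + p) / 2,
                           resid @ resid / 2 + np.sum(beta**2 / (tau2 * lam2)) / 2)
        # Local scales, global scale, then the auxiliary variables
        lam2 = inv_gamma(rng, 1.0, 1.0 / nu + beta**2 / (2 * tau2 * sigma2))
        tau2 = inv_gamma(rng, (p + 1) / 2,
                         1.0 / xi + np.sum(beta**2 / lam2) / (2 * sigma2))
        nu = inv_gamma(rng, 1.0, 1.0 + 1.0 / lam2)
        xi = inv_gamma(rng, 1.0, 1.0 + 1.0 / tau2)
        if it >= burn_in:
            draws.append(beta)
    return np.mean(draws, axis=0)
```

In a node-wise setting, a sampler of this kind would be run once per variable, with that variable as the response and all remaining variables as predictors.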

If no thresholding is applied, implementing the above Gibbs sampler with Equation (1) produces an estimate of the precision matrix with many entries close to, but not exactly, 0. Fan et al. [21] elaborated on the thresholds usually applied in precision matrix estimation. Given that soft-thresholding usually outperforms hard-thresholding with more flexible regularization methods, we propose a post-iteration 2-means clustering strategy that clusters the variables by the value of their shrinkage factors. The shrinkage factor $\kappa_{ij}$, Equation (4), measures the magnitude of shrinkage of each coefficient $\beta_{ij}$, which corresponds to variable $X_j$ in the regression of $X_i$, from its maximum likelihood estimate. When $\kappa_{ij}$ is close to 0, the corresponding variable $X_j$ is an important variable that should be selected for the regression of $X_i$. On the contrary, when $\kappa_{ij}$ is close to 1, variable $X_j$ is a noise variable that should not be selected for the regression of $X_i$. We select $q_i$ variables for $X_i$ through 2-means clustering by minimizing the objective in Equation (5), where $S_i$ is the set of $\kappa_{ij}$ of selected variables, $\kappa_i^{\max}$ is the maximum of $\kappa_{ij}$ in the regression of $X_i$, and $N_i$ is the set of $\kappa_{ij}$ of unselected variables. The sets $S_i$ and $N_i$ are determined by the number of selected variables $q_i$: $S_i$ contains the $q_i$ smallest $\kappa_{ij}$s, and $N_i$ contains the remaining $\kappa_{ij}$s. Through a greedy search on Equation (5), we minimize the sum of the distances between the expected shrinkage factors and their respective cluster centers, while keeping the cluster centers fixed at 0 and $\kappa_i^{\max}$. To satisfy the symmetry constraint of the precision matrix, we consider $X_i$ and $X_j$ conditionally dependent in the final covariance selection only if each of $X_i$ and $X_j$ is selected in the regression of the other.
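The selection rule described above can be sketched as follows. This is an illustration under stated assumptions, not the exact objective: the distance to each cluster center is taken as the absolute difference (a squared distance is equally plausible), the search simply scans every possible number of selected variables, and the names `two_means_select`, `select_edges`, and the matrix `K` of posterior-mean shrinkage factors are hypothetical.

```python
import numpy as np

def two_means_select(kappa):
    """Positions of the shrinkage factors assigned to the cluster centered at 0."""
    kappa = np.asarray(kappa, dtype=float)
    order = np.argsort(kappa)
    sorted_k = kappa[order]
    k_max = sorted_k[-1]                      # second cluster center, fixed
    best_q, best_cost = 0, np.inf
    for q in range(len(kappa) + 1):           # q = number of selected variables
        # Distance of the q smallest factors to 0, and of the rest to k_max
        cost = np.sum(sorted_k[:q]) + np.sum(k_max - sorted_k[q:])
        if cost < best_cost:
            best_q, best_cost = q, cost
    return order[:best_q]

def select_edges(K):
    """K[i, j]: shrinkage factor of X_j in the regression of X_i (diagonal ignored)."""
    p = K.shape[0]
    chosen = np.zeros((p, p), dtype=bool)
    for i in range(p):
        others = np.array([j for j in range(p) if j != i])
        chosen[i, others[two_means_select(K[i, others])]] = True
    # Keep an edge only if each variable is selected in the other's regression
    return chosen & chosen.T

picked = two_means_select([0.05, 0.9, 0.95, 0.1, 0.99])   # positions 0 and 3
```

Because both centers are fixed, no iterative re-estimation of cluster means is needed, which is what makes this post-iteration step cheap.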

2.3. Multiple Imputation with Graphical Model Estimation by Horseshoe (MI-GEH)

When there are missing values, we optimize the data imputation with consideration of the Gaussian graphical model estimation. To simplify notation, we use $-i$ to indicate the set $\{1, \dots, p\} \setminus \{i\}$. Considering an incomplete variable $X_i$ and the remaining variables $X_{-i}$, we impute the missing values of $X_i$ from the conditional normal distribution of $X_i$ given $X_{-i}$, Equation (6).

Sweeping through all regression and imputation parameters, with the precision and covariance matrices calculated within the MCMC procedure, we present the MI-GEH algorithm as follows.

In each chain, initial values may be set as follows: 0 for , a random number of either 0 or 1 for , 1 for , and . Then:

- 1. Regression Parameters.
  - (a) For each of the p variables, update the horseshoe estimates and obtain p sets of regression parameters following the conditional distributions in (3).
  - (b) Calculate the shrinkage factors using Equation (4).
- 2. Precision Matrix and Covariance Matrix.
  - (a) Update the precision matrix with the regression estimates of the coefficients and residual variances following their relationship in Equation (2).
  - (b) Symmetrize the precision matrix and calculate the covariance matrix as its inverse.
- 3. Imputation of data. Draw random numbers from the normal distribution described in (6) to impute the missing data and obtain the completed data.
- 4. Iteration of steps 1–3. Repeat the above steps to produce a Markov chain of regression and imputation parameters.
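The imputation draw in step 3 can be sketched with the standard conditional distribution of one coordinate of a multivariate normal vector given the others. This is an assumption about the form of the imputation model, and the function name `impute_variable`, its arguments, and the boolean missingness matrix `miss` are hypothetical. The other columns are assumed to hold current values, observed or previously imputed.

```python
import numpy as np

def impute_variable(X, miss, i, mu, Sigma, rng):
    """Redraw the missing entries of column i given all other columns."""
    p = X.shape[1]
    rest = [j for j in range(p) if j != i]
    # Regression weights of X_i on the remaining variables
    w = np.linalg.solve(Sigma[np.ix_(rest, rest)], Sigma[rest, i])
    # Conditional variance is the same for every row
    cond_var = Sigma[i, i] - Sigma[i, rest] @ w
    rows = np.where(miss[:, i])[0]
    # Conditional mean depends on each row's values of the other variables
    cond_mean = mu[i] + (X[np.ix_(rows, rest)] - mu[rest]) @ w
    X_new = X.copy()
    X_new[rows, i] = cond_mean + np.sqrt(cond_var) * rng.standard_normal(len(rows))
    return X_new
```

Within one sweep, a function like this would be called for each incomplete variable, using the covariance matrix computed in step 2(b).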

After iteration, calculate the posterior means of the regression parameters, precision matrix, and covariance matrix, then perform covariance selection following the 2-means clustering strategy described in Section 2.2. Edges that have been selected in all chains are included in the final model. Lastly, set all entries of the precision and covariance matrices that were not selected to zero.
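The final combination across chains can be sketched as below for the precision matrix. Averaging the per-chain posterior means is an assumption made for illustration, as are the function name `consensus_precision` and its inputs: a list of per-chain posterior-mean precision matrices and a list of per-chain boolean edge-selection matrices.

```python
import numpy as np

def consensus_precision(omega_means, edge_masks):
    """Keep only edges selected in every chain and zero out the rest."""
    keep = np.logical_and.reduce(edge_masks)   # True where all chains agree
    np.fill_diagonal(keep, True)               # diagonal entries are always kept
    omega = np.mean(omega_means, axis=0)       # assumed pooling rule: average over chains
    omega = (omega + omega.T) / 2              # enforce symmetry
    return np.where(keep, omega, 0.0)
```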

4. Application

We now illustrate the MI-GEH on a high-dimensional dataset of Utah residents with northern and western European ancestry (CEU). A detailed description of this dataset is given in Bhadra and Mallick [

26], and it is available on Wellcome Sanger Institute website (

ftp://ftp.sanger.ac.uk/pub/genevar (accessed on 20 February 2024) and R package

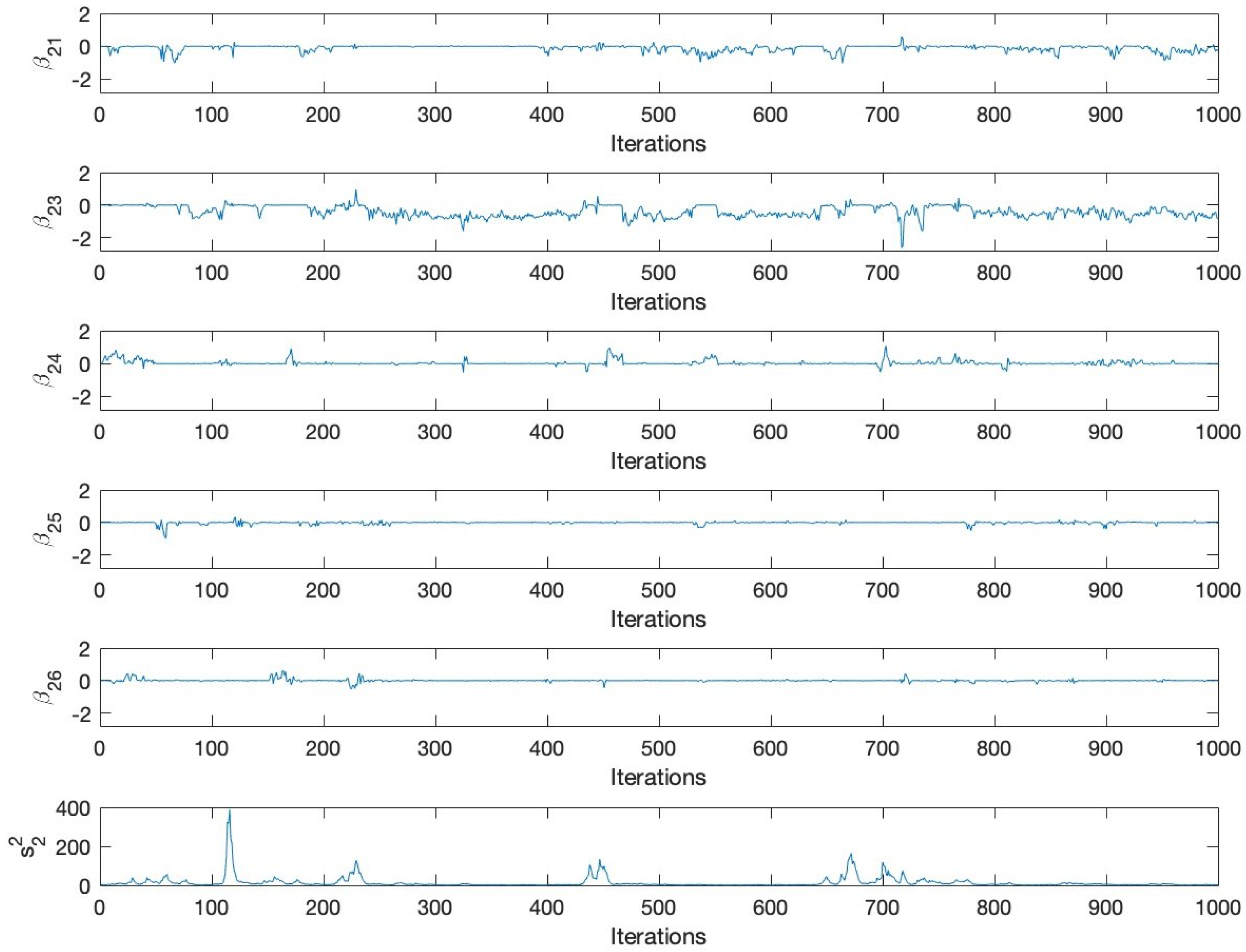

BDgraph. This dataset is fully observed with 60 observations and 100 gene variables. To examine the performance of MI-GEH, we generate missing values for 5 gene variables with 10% and 20% missing rates under MCAR and MAR mechanisms. The probability of missing under MAR follows a logistic regression model depending on the fully observed variable with a coefficient of 1. We replicate each of the 4 scenarios 100 times.
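The two missingness mechanisms can be sketched as follows. The text fixes the logistic slope at 1 but does not state the intercept, so the sketch assumes the intercept is tuned by bisection until the average missingness probability equals the target rate; the function names `mcar_mask` and `mar_mask` are hypothetical.

```python
import numpy as np

def mcar_mask(n, rate, rng):
    """Missingness indicators that ignore the data entirely."""
    return rng.random(n) < rate

def mar_mask(z, rate, rng, slope=1.0):
    """Missingness indicators with log-odds intercept + slope * z, z fully observed."""
    lo, hi = -20.0, 20.0
    for _ in range(60):                        # bisection on the intercept
        mid = (lo + hi) / 2
        if np.mean(1 / (1 + np.exp(-(mid + slope * z)))) < rate:
            lo = mid
        else:
            hi = mid
    prob = 1 / (1 + np.exp(-(lo + slope * z)))
    return rng.random(len(z)) < prob, prob
```

Under this construction, observations with larger values of the fully observed variable are more likely to have the target variable missing, which is what distinguishes MAR from MCAR.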

In this application, we generate 5 chains with 1000 iterations each, including a burn-in period of 500 iterations, for MI-GEH. To summarize the results, we take the mean of each entry over all estimated precision matrices. In addition, we applied the GHS approach of Li et al. [27] and GLasso with cross-validation [25] to the original dataset without missing values.

Figure 2 displays the inferred graphs and Table 6 gives the numbers of vertices and edges selected. Among the three methods, MI-GEH provides the most refined neighborhood selection for most variables in the dataset. It reduces the graphical structures to the smallest number of edges for 99 out of 100 vertices. Although GHS selected 30 more edges than MI-GEH, it covered 15 fewer vertices. Moreover, in a real data analysis, it is difficult to know from the dataset itself which selected edges are true positives. To further examine the selection, we compare the results of MI-GEH and GHS with those of GLasso, the most widely used method for Gaussian graphical models (GGM). MI-GEH and GLasso select 85 edges in common, which is 87.6% of the edges selected by MI-GEH, and GHS and GLasso select 109 edges in common, which is 85.8% of the edges selected by GHS. The overlap is therefore substantial, suggesting that the selection precision of MI-GEH is potentially no smaller than that of GHS.
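The reported overlap percentages can be checked with a short calculation. The edge totals used below, 97 for MI-GEH and 127 for GHS, are not quoted in the text; they are the values implied by the reported counts and percentages, and they agree with the stated difference of 30 edges. The function name `overlap_percent` is hypothetical.

```python
def overlap_percent(shared_edges, selected_edges):
    """Share of a method's selected edges that GLasso also selects, in percent."""
    return 100.0 * shared_edges / selected_edges

# Edge totals implied by the reported overlaps; derived here, not read from Table 6
mi_geh_edges, ghs_edges = 97, 127

mi_geh_share = round(overlap_percent(85, mi_geh_edges), 1)   # 87.6
ghs_share = round(overlap_percent(109, ghs_edges), 1)        # 85.8
```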

5. Discussion

This study proposes a Bayesian approach to sparse precision matrix estimation and selection for high-dimensional incomplete data. The proposed MI-GEH optimizes multiple imputation for precision matrix estimation by nesting both in one MCMC procedure. The post-iteration selection with 2-means clustering on shrinkage factors lets the data speak for themselves and offers efficient selection, as demonstrated by the simulation analysis and the genetic data analysis. The selection results of the simulation analysis show the advantage of the proposed method in covariance selection over the three commonly used alternative approaches, mean-GLasso, MF-GLasso, and missGLasso. Both mean-GLasso and MF-GLasso are ITS approaches and have similar performance. MissGLasso is a useful procedure and performs well in many settings, provided that the mean and variance of the complete cases are representative of the data. However, in real settings, complete cases are sometimes only a small proportion of the full data and are then not representative of it. The proposed MI-GEH outperforms the other approaches in most settings, including these challenging conditions: it performs well in selection and has adequate performance in matrix accuracy, as is typical of shrinkage methods. The illustration of the proposed MI-GEH on genetic data, compared with GLasso [1] and GHS [27] on the complete data, shows that the method is useful for real-world data.

In line with the work of Yang et al. [17] and Zhang and Kim [18], our simulation results provide further evidence that, for regression-based precision matrix estimation, the simultaneous imputation and selection strategy performs better than conducting imputation and selection sequentially. The multiple imputation employed in these methods is designed to provide valid inference for model estimation. The horseshoe priors, which scale regression parameters unequally, allow shrinkage factors to vary among variables, so that applying a post-iteration 2-means clustering gives efficient selection.

Future research can take several directions, including the consideration of estimation accuracy. While the shrinkage estimator provides efficient model selection, it yields biased estimates. It would also be interesting to combine multiple imputation approaches with different priors applied to complex precision matrix structures. Notably, the proposed MI-GEH algorithm might also be applicable to complete datasets by omitting the imputation steps. Further investigation of its performance in such contexts, along with a thorough evaluation of common variable selection issues such as overfitting, may therefore be warranted.