Optimal Combination of the Splitting–Linearizing Method to SSOR and SAOR for Solving the System of Nonlinear Equations

Abstract

1. Introduction

2. Reviews of Two Symmetric Iterative Methods for Linear Equations

3. Nonlinear Equations

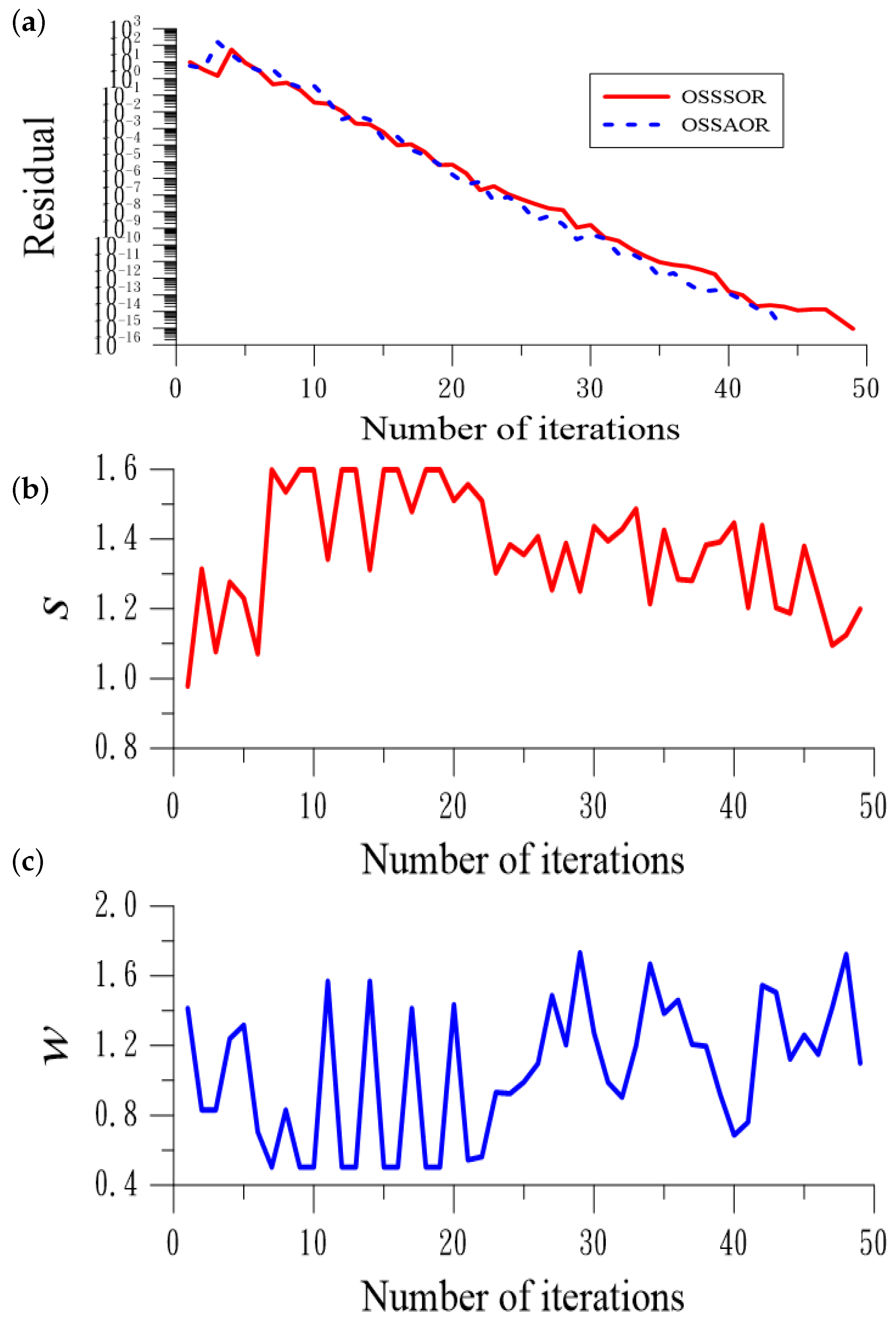

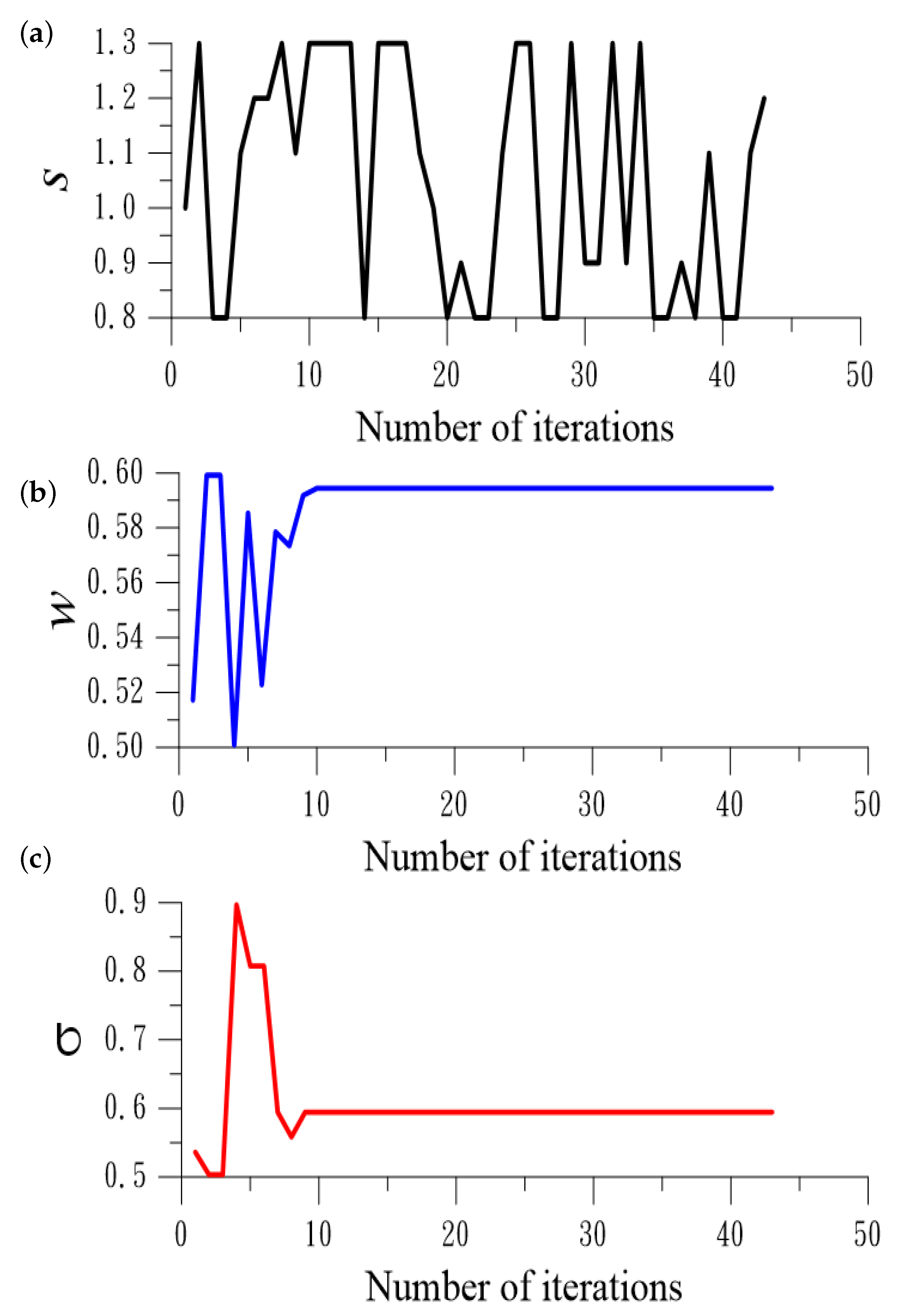

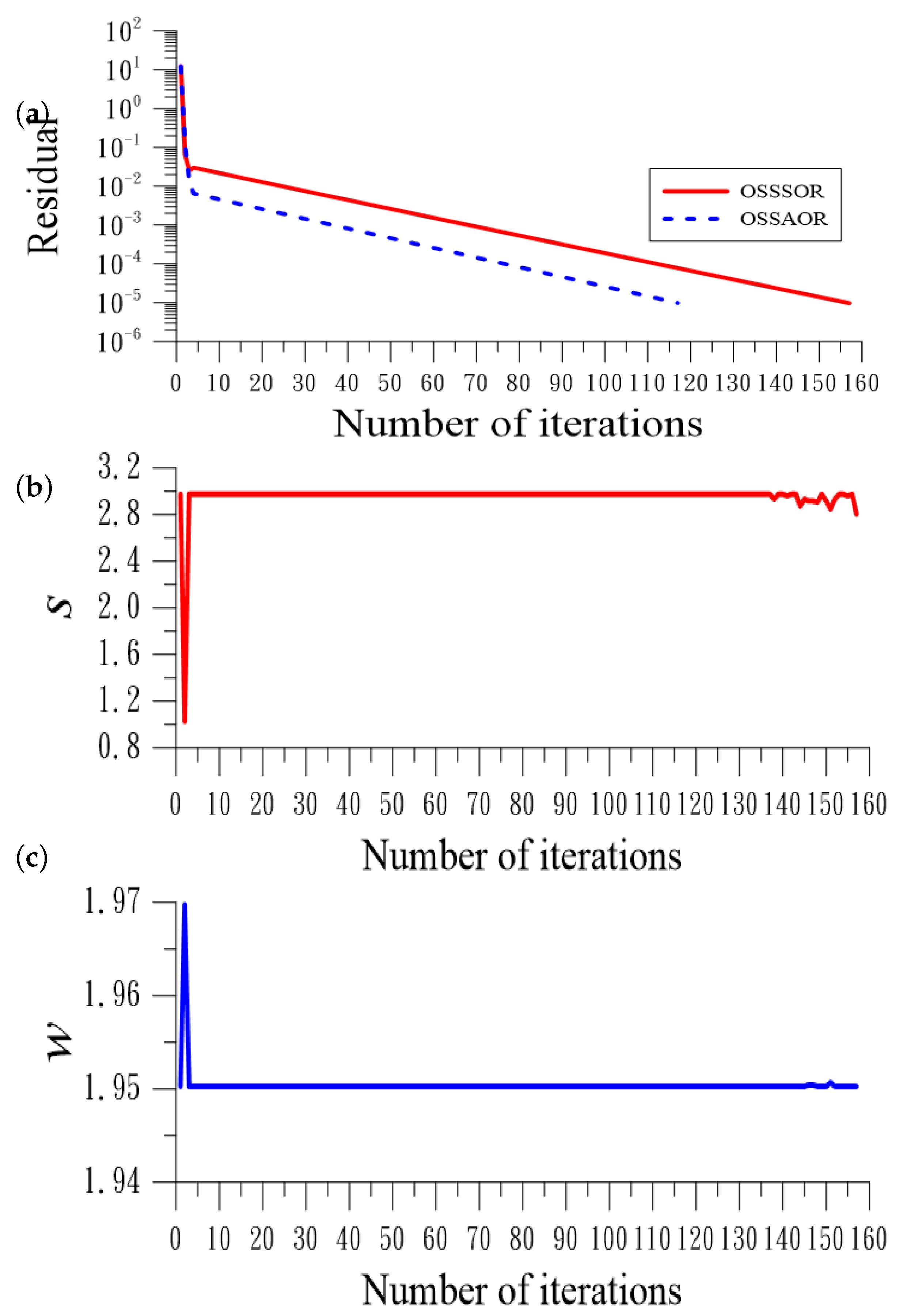

3.1. Optimal Splitting Symmetric Successive Over-Relaxation Method

3.2. Optimal Splitting Symmetric Accelerated Over-Relaxation Method

4. Numerical Algorithm

| Algorithm 1: FSOL (n, A, b, c) |
|---|
| Give n, A, and b |
| DO I = 1, n |
| SUM = 0 |
| DO J = 1, I − 1 |
| SUM = SUM + A(I,J) × c(J) |
| Enddo of J |
| c(I) = (b(I) − SUM)/A(I,I) |
| Enddo of I |
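The forward-substitution loop of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration, assuming A is a lower-triangular matrix stored as a list of rows with nonzero diagonal entries; the function name `fsol` and the 3 × 3 test system are hypothetical and chosen only for the example.

```python
def fsol(A, b):
    """Solve the lower-triangular system A c = b by forward substitution."""
    n = len(b)
    c = [0.0] * n
    for i in range(n):
        # Contribution of the unknowns c[0..i-1] that are already known.
        s = sum(A[i][j] * c[j] for j in range(i))
        c[i] = (b[i] - s) / A[i][i]
    return c


# Hypothetical test system whose exact solution is (1, 2, 2).
A = [[2.0, 0.0, 0.0],
     [1.0, 3.0, 0.0],
     [4.0, -1.0, 5.0]]
b = [2.0, 7.0, 12.0]
print(fsol(A, b))
```

Only the entries on and below the diagonal are read, so the same routine can be applied to a full matrix when just its lower-triangular part is wanted.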

| Algorithm 2: BSOL (n, A, b, c) |
|---|
| Give n, A, and b |
| DO K = 1, n |
| I = n − K + 1 |
| SUM = 0 |
| DO J = I + 1, n |
| SUM = SUM + A(I,J) × c(J) |
| Enddo of J |
| c(I) = (b(I) − SUM)/A(I,I) |
| Enddo of K |
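Algorithm 2 runs the same recurrence from the last row upward. A minimal Python sketch, assuming A is upper triangular with nonzero diagonal entries and stored as a list of rows; the name `bsol` and the test system are hypothetical.

```python
def bsol(A, b):
    """Solve the upper-triangular system A c = b by back substitution."""
    n = len(b)
    c = [0.0] * n
    # i plays the role of I = n - K + 1 in the pseudocode (0-based here).
    for i in range(n - 1, -1, -1):
        # Contribution of the unknowns c[i+1..n-1] that are already known.
        s = sum(A[i][j] * c[j] for j in range(i + 1, n))
        c[i] = (b[i] - s) / A[i][i]
    return c


# Hypothetical test system whose exact solution is (1, 2, 3).
U = [[2.0, 1.0, -1.0],
     [0.0, 3.0, 2.0],
     [0.0, 0.0, 4.0]]
b = [1.0, 12.0, 12.0]
print(bsol(U, b))
```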

| Algorithm 3: OSSSOR |
|---|
| 1: Give n, , initial value , and |
| 2: Give a, b, c and d |
| 3: Do |
| 4: Call GOLDEN(a, b, c, d, , , ) |
| 5: Compute |
| 6: Compute |
| 7: Compute , and |
| 8: Compute |
| 9: Compute |
| 10: Call FSOL(n,,,) |
| 11: Compute |
| 12: Compute |
| 13: Call BSOL(n,,,) |
| 14: If , stop |
| 15: Otherwise, go to 3 |
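Step 4 of Algorithm 3 relies on a golden section search. The sketch below is a generic textbook version for minimizing a unimodal function of one variable on an interval; it is not the paper's GOLDEN routine, whose argument list is not recoverable here. The function name `golden_section_min`, the tolerance, the search interval [0, 2], and the quadratic stand-in objective are all assumptions made for illustration.

```python
import math


def golden_section_min(f, lo, hi, tol=1e-8, max_iter=200):
    """Approximate the minimizer of a unimodal function f on [lo, hi]."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0  # 1/phi, about 0.618
    x1 = hi - inv_phi * (hi - lo)
    x2 = lo + inv_phi * (hi - lo)
    f1, f2 = f(x1), f(x2)
    for _ in range(max_iter):
        if hi - lo < tol:
            break
        if f1 < f2:
            # Minimum lies in [lo, x2]; the old x1 becomes the new x2.
            hi, x2, f2 = x2, x1, f1
            x1 = hi - inv_phi * (hi - lo)
            f1 = f(x1)
        else:
            # Minimum lies in [x1, hi]; the old x2 becomes the new x1.
            lo, x1, f1 = x1, x2, f2
            x2 = lo + inv_phi * (hi - lo)
            f2 = f(x2)
    return 0.5 * (lo + hi)


# Stand-in objective with its minimum at w = 1.3 (purely illustrative).
w_opt = golden_section_min(lambda w: (w - 1.3) ** 2, 0.0, 2.0)
print(w_opt)
```

Each pass reuses one of the two interior function values, so only one new evaluation of the objective is needed per iteration, which is why the search costs only a few operations per outer step.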

5. Local Convergence

6. Numerical Tests of Nonlinear Equations

6.1. Example 1

6.2. Example 2

6.3. Example 3

6.4. Example 4

6.5. Example 5

6.6. Example 6

7. Conclusions

- The OSSSOR has two parameters, whereas the OSSAOR has three.

- Using the maximal projection technique, we derived optimal values of the parameters in the OSSSOR and OSSAOR to accelerate convergence.

- The minimum within a preferred range is easily found with a few operations of the golden section search algorithm.

- The new methods could provide a good choice of the optimal values of the parameters at each iteration.

- Numerical tests indicated that the OSSAOR converges faster than the OSSSOR; however, the OSSAOR is more expensive because it must compute three parameters.

- The proposed OSSSOR and OSSAOR provide very accurate solutions within a few iterations, as reflected in the very small residuals and the high values of COC.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| n | 4 | 5 | 6 | 7 | 10 | 15 |
|---|---|---|---|---|---|---|
| NI (Newton) | 12 | 15 | 15 | 8 | >1000 | >1000 |
| NI (OSSSOR) | 28 | 41 | 55 | 71 | 134 | 199 |
| ME (Newton) | 7.9 | 11.59 | 10 | 15 | | |
| ME (OSSSOR) | | | | | | |

| m | 3 | 5 | 10 | 15 | 20 | 25 |
|---|---|---|---|---|---|---|
| NI | 13 | 17 | 25 | 32 | 38 | 42 |
| ME | | | | | | |
| RES | | | | | | |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, C.-S.; El-Zahar, E.R.; Chang, C.-W. Optimal Combination of the Splitting–Linearizing Method to SSOR and SAOR for Solving the System of Nonlinear Equations. Mathematics 2024, 12, 1808. https://doi.org/10.3390/math12121808