Abstract

Transformer-based models for action segmentation have achieved high frame-wise accuracy on challenging benchmarks. However, they rely on multiple decoders and self-attention blocks to obtain informative representations, and the resulting computing and memory costs remain an obstacle to handling long video sequences and to practical deployment. To address these issues, we design a light transformer model for the action segmentation task, named LASFormer, with a novel encoder–decoder structure based on three key designs. First, we propose a receptive field-guided distillation to realize model reduction, which more generally overcomes the gap in semantic feature structure between the intermediate features of the teacher and student by means of an aggregated temporal dilation convolution (ATDC). Second, we propose a simplified implicit attention to replace self-attention and avoid its quadratic complexity. Third, we design an efficient action relation encoding module embedded after the decoder, in which temporal graph reasoning introduces the inductive bias that adjacent frames are more likely to belong to the same class in order to model global temporal relations, and a cross-model fusion structure integrates frame-level and segment-level temporal clues. This module avoids over-segmentation without relying on multiple decoders, further reducing computational complexity. Extensive experiments verify the effectiveness and efficiency of the framework. On the challenging 50Salads, GTEA, and Breakfast benchmarks, LASFormer significantly outperforms the current state-of-the-art methods in accuracy, edit score, and F1 score.

MSC:

68T01; 68T07

1. Introduction

Action segmentation algorithms, which automatically recognize and segment human activities, are crucial due to their wide range of applications, such as robotic automation, video surveillance, and skill assessment [1]. Their goal is to assign an action category to every frame of an untrimmed video. To obtain a global temporal understanding of video sequences, most action segmentation methods [2,3,4] focus on capturing complex temporal structures with various multi-stage temporal models. They mainly use temporal convolution networks (TCNs) [5,6] to model the temporal relations among frames. TCNs enlarge their receptive fields to capture long-range dependencies by stacking layers with increasingly large temporal kernels; however, the fine-grained information needed for frame recognition is reduced as the convolutional layers become deeper. Thus, some works [7] have introduced the transformer architecture into action segmentation in view of its strong sequence modeling ability.

Transformers, which originate from natural language processing (NLP) tasks [8], have achieved state-of-the-art performance in many vision tasks, including image classification [9], object detection [10,11,12], and semantic segmentation [13]. ASFormer [7] is the first transformer architecture for action segmentation; it explicitly introduces a local connectivity inductive bias and a hierarchical representation pattern to rebuild the transformer, obtaining impressive improvements, and its self-attention mechanism contributes substantially to the performance gain. However, existing transformer-based models [7,14] rely on multiple decoders built with multiple self-attention blocks and a large number of input tokens, and their huge computational complexity and memory cost limit their application to long video sequences. In addition, they struggle to capture the contextual relations among adjacent actions, which leads to incorrect predictions in local temporal regions. Other research fields have pursued three kinds of approaches for reducing computational complexity. The first is reducing redundant tokens by sampling [15,16,17]; however, this can easily lead to temporally discontinuous outputs. The second is replacing self-attention with other operations, which generally comes at the cost of reduced performance [18,19]. The third is integrating convolution structures with the transformer [20,21]. This strategy has been adopted in previous action segmentation approaches [7]; however, because they do not overcome the fundamental problem of the quadratic complexity caused by self-attention, their computational complexity remains very high.

In this work, we propose a light yet effective transformer framework for action segmentation called LASFormer. LASFormer is motivated by two observations. First, we found that self-attention essentially obtains channel attention from token connections, which motivated us to design a simplified implicit attention (SIA) and a simplified implicit cross-attention (SICA) that replace self-attention and cross-attention, respectively, and avoid their quadratic complexity. Second, we found that the main function of multiple decoders is to improve the Edit score and avoid over-segmentation while maintaining frame-wise accuracy. Thus, we design an action relation encoding (ARE) module that realizes the same function without stacking decoders, using temporal graph reasoning (TGR) and a cross-model fusion (CMF) structure, which model segment-level temporal relations and integrate frame-level feature clues to increase the representation capability. By jointly training the encoder and decoder, LASFormer obtains better performance than existing transformer-based methods with less computation and a lower memory cost. In summary, the main contributions of this work are as follows:

- We propose a light yet effective transformer framework for action segmentation called LASFormer, which effectively learns temporal structure representations at a lower computational cost and is thus more efficient for handling long video sequences.

- We propose receptive field-guided distillation (RFD) to realize model reduction, which more generally overcomes the gap in semantic feature structure between the intermediate features of the teacher and student by means of the aggregated temporal dilation convolution (ATDC).

- We propose SIA and SICA to replace self-attention (SA) and cross-attention (CA), respectively, thus reducing the inference latency. We also propose an ARE module embedded after the decoder, which uses the designed TGR and CMF to capture contextual relations among actions more accurately, reducing over-segmentation errors.

- Our approach achieves state-of-the-art performance in accuracy, edit score, and F1 score on three popular benchmarks, 50Salads, GTEA, and Breakfast, and LASFormer is demonstrated to be more efficient than existing transformer-based methods in the number of model parameters, FLOPs, and GPU memory cost.

2. Related Work

2.1. Action Segmentation

We focus on fully supervised action segmentation, a distinctive video understanding task on complex videos, rather than other learning settings [22,23]. Early works [24] on this task mainly focused on capturing short-term temporal dependencies, extracting spatial and temporal features with the sliding-window paradigm and Markov models for multi-stream neural networks. Since then, perceiving long-range temporal dependencies has become a primary goal. To achieve it, sequential models [25,26] that process frames iteratively have been designed, but they are limited by information forgetting [5,6]. To solve this issue, a rich variety of temporal models have been employed, among which TCNs [3,27,28] are favored because stacking them efficiently captures long-term temporal dependencies; complementary techniques [29,30] have subsequently been explored on this basis, for example, representation learning with hierarchical patterns [31], text prompts [32], domain adaptation [2], structure search [4], uncertainty awareness by Monte Carlo sampling [33], and boundary awareness [34,35]. Compared with TCNs, transformer-based structures [7,14] are more competitive due to their strong sequence modeling ability, but they carry huge computational costs. For example, ASFormer [7] relies on multiple decoders and self-attention (SA) modules with high computational complexity to achieve good performance. TUT [14] employs a pure transformer model that adopts temporal sampling to reduce computational costs, but the reduction is limited because of its multiple SA modules and other pure transformer structures. These concerns inspired us to explore an effective light transformer structure that reduces the computational cost and, in particular, resolves the huge cost of SA. We also explore relational reasoning at the segment level in the light transformer, which existing transformers fail to realize.
Although GTRM [36] and UVAST [22] realize this by leveraging graph neural networks and sequence-to-sequence translation, respectively, they ignore frame-level temporal details, which leads to many over-segmentation errors. Our action relational reasoning takes frame-level temporal details into account and can overcome over-segmentation errors.

2.2. Study on Efficient Transformers

Transformers, which originate from NLP tasks [8], utilize a self-attention (SA) mechanism to capture dependencies among elements. Owing to their strong sequence modeling capability, hybrid transformers have been successfully applied to many vision tasks [9,10,13], including image classification, object detection, and semantic segmentation. However, few works [7,14] have applied them to action segmentation, because of their huge computational costs. Some researchers in other fields have studied efficient transformer models that restrict attention to local windows, such as SPViT [37], A-ViT [17], and patch slimming [16], which usually adopt a sampling strategy [15,16,17]; however, such methods also easily lead to spatially discontinuous outputs. Integrating convolution structures with a transformer [7,21,38] is a typical way of lightening the transformer, as with MobileNet in UniFormer [20] and MobileNetV2 in MobileViT [39]; however, these models fail to overcome the fundamental problem of the quadratic complexity caused by SA. Other works explore alternatives to SA, such as the cyclic shift in Swin [40] and the spatial pooling operator in MetaFormer [19]. However, they generally come at the cost of reduced performance [18,19]. This issue inspired us to design an effective and efficient alternative to SA.

3. Methods

3.1. Overview

3.1.1. Main Components

In this work, we propose LASFormer, a light transformer built with a novel encoder–decoder structure, to tackle the action segmentation task based on three main designs. First, we propose SIA and SICA, which replace self-attention and cross-attention, respectively, to avoid their quadratic complexity; they are essentially attention mechanisms like SA but are implemented with lower complexity and almost no loss in performance. Second, we propose RFD to realize model reduction from the teacher LASFormerV2 to the student LASFormer, which overcomes the gap in semantic feature structure between the intermediate features of the teacher and student by means of the aggregated temporal dilation convolution (ATDC). Third, we design an ARE module to facilitate action relational reasoning at the segment level, which integrates frame-level and segment-level temporal clues to overcome over-segmentation effectively without stacking multiple decoders, thus reducing computational and memory costs. Compared with existing transformer-based methods, LASFormer is very light and applicable to long input sequences. Each technique is detailed in the following sections.

3.1.2. The Pipeline of Proposed Framework

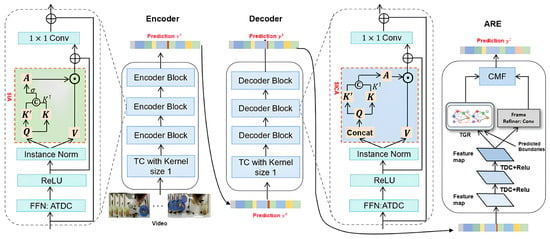

Figure 1 shows the structure of the student network, LASFormer. The T-frame untrimmed video is first fed to a pre-trained I3D network [24] to obtain frame-wise features, which are then fed to an encoder that outputs the initial action probabilities of every frame; a decoder follows to refine these predictions, and an action relation encoding (ARE) module then performs a further refinement. Both the encoder and decoder consist of a stack of blocks, and the feed-forward network (FFN) in every block is built with an ATDC. The first layer of the encoder is a temporal convolution (TC) with kernel size 1 for feature compression, followed by several encoder blocks. Then, a TC with kernel size 1 predicts the initial segmentation, i.e., frame-wise scores over the C action categories, from the output of the last encoder block. To keep the hypothesis space within a reliable scope, we constrain each SIA and FFN with a hierarchical representation pattern (HRP) based on temporal dilation convolution (TDC) layers, which build local-to-global dependencies as the layers deepen by gradually enlarging their receptive fields. The initial segmentation is further refined by the decoder, where a TC first adjusts the dimension of the input features and a series of decoder blocks follows. The only structural difference between an encoder block and a decoder block is that SIA is replaced with SICA, which brings in an informative clue from the encoder for discriminative features. Like the encoder, the decoder adopts the same hierarchical pattern, implemented with the FFN and SICA in the decoder blocks. The decoder output is finally refined by the ARE module.

Figure 1.

The LASFormer framework. LASFormer is a light transformer built with a novel encoder–decoder structure based on the designed sub-structures for action segmentation.

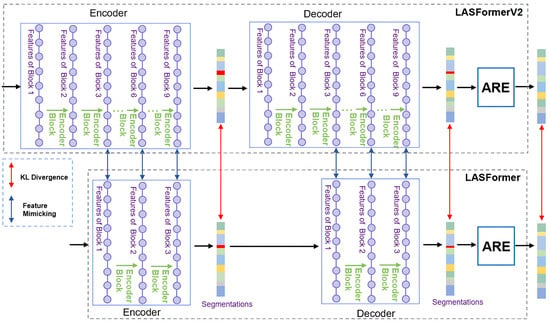

The teacher LASFormerV2 follows the same pattern as LASFormer, a “one encoder + one decoder + ARE” structure, where the encoder and decoder have the same structure as those of ASFormer [7] and each consists of a stack of blocks. This design lays the groundwork for conducting RFD, as described in detail in Section 3.4. In our settings, the encoder and decoder each contain nine blocks in the teacher and three blocks in the student (see Section 4.4.1). During training, the outputs of the encoder, decoder, and ARE are jointly supervised by a classification loss built from a cross-entropy term and a smoothing term, as formulated by

where the cross-entropy term uses the predicted probability of the c-th item (the ground-truth category) of the t-th frame output, a hyper-parameter weights the smoothing term, and the smoothing term keeps temporal consistency and is given by

where a second hyper-parameter controls this term. To conduct RFD, the student is also trained under the loss functions (9) and (10); thus, the total training loss is given by

where a hyper-parameter adjusts the weighting of the loss terms, and two sets contain the matched layer pairs that need to be supervised. The output of the ARE is the final prediction.
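The two-term classification loss described above can be sketched as follows. This is a minimal sketch that assumes the formulation popularized by MS-TCN [5] (cross-entropy plus a truncated mean-squared error on frame-to-frame log-probability differences), which is consistent with the hyper-parameter values 0.15 and 4 reported in Section 4.2. The function name `classification_loss` and its arguments `lam` (weight of the smoothing term) and `tau` (truncation threshold) are hypothetical, and the authors' exact normalization may differ.

```python
import math


def classification_loss(probs, labels, lam=0.15, tau=4.0):
    """Cross-entropy + truncated smoothing loss (MS-TCN-style sketch; assumed).

    probs:  T x C nested list of per-frame class probabilities (rows sum to 1).
    labels: length-T list of ground-truth class indices.
    lam:    weight of the smoothing term (hypothetical name).
    tau:    truncation threshold for log-probability differences (hypothetical name).
    """
    T, C = len(probs), len(probs[0])
    # Cross-entropy: mean negative log-probability of each frame's ground-truth class.
    ce = -sum(math.log(probs[t][labels[t]]) for t in range(T)) / T
    if T < 2:
        return ce
    # Smoothing: squared, truncated differences of log-probabilities between
    # consecutive frames, averaged over all (T - 1) x C terms.
    smooth = 0.0
    for t in range(1, T):
        for c in range(C):
            delta = abs(math.log(probs[t][c]) - math.log(probs[t - 1][c]))
            smooth += min(delta, tau) ** 2
    smooth /= (T - 1) * C
    return ce + lam * smooth
```

In the training scheme described above, this loss would be applied to each of the encoder, decoder, and ARE outputs; truncating the differences at `tau` keeps genuine action boundaries from being penalized too heavily.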

3.2. Encoder Block

Each encoder block contains two residual connections and consists of an FFN followed by instance normalization (IN), an SIA layer, and a TC layer with kernel size 1, as shown in Figure 1. Given the input features of the m-th encoder block, the computation of the encoder block can be formulated by

where the SIA layer uses pure temporal convolution operations to obtain implicit attention for updating the features, which avoids the quadratic computational complexity in the number of temporal locations that SA suffers from, as analyzed in Section 4.4.1. Like the SA layer, the SIA layer constrains each SIA operation to a local window of size n, as given by

where the value, query, and key embeddings of window size n are computed by three independent TC layers with kernel size 1; two of them are derived from the input features, and the third is derived from Q. The first function maps its input features to new features and then expands them with a repeating operation, which builds correlations among local tokens. The second function is a TC layer with kernel size 1 that obtains the attention weights from these correlations, based on the observation stated in Section 1. Both SA and SIA obtain attention from token correlations, so SIA plays a similar role to SA.

Considering that a transformer with a series of SIA layers is not effective enough at learning representations over long videos, we constrain each SIA and FFN with an HRP: the low-level SIA layers capture local temporal relations, and the temporal range of the relations gradually enlarges as the block depth increases. Thus, in the teacher, all the TDC layers in each block share the same dilation rate, which constrains their receptive fields, and the dilation rate S doubles as the block index m increases, building an HRP; the FFN is built with a TDC with kernel size 3 followed by a ReLU activation. In the student, the FFN is built with an ATDC for conducting RFD. The dilation rate adopted by all the TDC layers in SIA is set according to the maximum dilation rate of the ATDC in the same block, and the minimum dilation rate of the ATDC in the next block is set accordingly. This setting builds an HRP and lays the groundwork for RFD, as described in detail in Section 3.4. Such a pattern makes the SIA blocks cooperate to improve performance.
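To make the dilation assignment concrete, the sketch below enumerates one possible schedule. It assumes, as hypotheses not confirmed by the text, that teacher block m uses dilation 2^(m-1), that the k branches of the i-th student ATDC cover the dilations of k successive teacher blocks, that the student's SIA layers reuse the largest ATDC dilation, and that the block counts are nine (teacher) and three (student). The functions `teacher_dilations`, `student_schedule`, `matched_pairs`, and `receptive_field` are hypothetical.

```python
def teacher_dilations(num_blocks):
    """Teacher HRP (assumed): block m (1-indexed) uses dilation 2 ** (m - 1)."""
    return [2 ** (m - 1) for m in range(1, num_blocks + 1)]


def student_schedule(num_blocks, k):
    """Student HRP (assumed): block i's ATDC has k branches that cover the
    dilations of k successive teacher blocks; its SIA reuses the largest one."""
    schedule = []
    for i in range(num_blocks):
        branches = [2 ** (i * k + j) for j in range(k)]
        schedule.append({"atdc": branches, "sia": max(branches)})
    return schedule


def matched_pairs(num_student_blocks, k):
    """(student block, teacher block) pairs with equal largest dilation, 1-indexed."""
    return [(i, i * k) for i in range(1, num_student_blocks + 1)]


def receptive_field(dilations, kernel_size=3):
    """Receptive field (in frames) of stacked 1-D convs with the given dilations."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


teacher = teacher_dilations(9)        # [1, 2, 4, ..., 256]
student = student_schedule(3, k=3)    # ATDC branches [1, 2, 4], [8, 16, 32], [64, 128, 256]
for s, t in matched_pairs(3, k=3):
    # The teacher target block has the same dilation as the student's largest branch.
    assert teacher[t - 1] == student[s - 1]["sia"]
```

Under these assumptions, each student block's largest dilation equals the dilation of teacher block 3i, and the next student block starts at twice that value, so the stack still doubles its dilation from block to block.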

3.3. Decoder Block

The decoder block has a similar structure to the encoder block, as shown in Figure 1; the only difference is that SIA is replaced with SICA, which brings in the output of the last encoder block for discriminative features. In SICA, the query embedding Q is obtained by feeding the concatenation of the decoder features and the encoder output to a TC layer with kernel size 1, while the value embedding V is derived only from the decoder features. This design is inspired by [7], which demonstrates that the refinement process is highly sensitive to the feature space of the predictions. Thus, SICA is formulated as

where K and a further embedding are derived from Q by two different TC layers with kernel size 1, serving the same functions as their counterparts in SIA, and the mapping function of SICA has the same pattern and function as that of SIA, building the same hierarchical representation pattern through the same strategy of dilation rate assignment.

3.4. Receptive Field-Guided Distillation

RFD is a knowledge distillation method for model reduction based on feature mimicking and logit distillation. Feature mimicking distills intermediate features to guide the student, but its positive effect on performance is greatly diminished by the gap in semantic feature structure between the features of the teacher and student, which is mainly due to their different perceptual information [41]. RFD narrows this gap by conducting feature mimicking under the same receptive fields, as shown in Figure 2. To achieve this goal, the feed-forward layer of each block in LASFormer is built with the ATDC. The ATDC first uses k parallel TDC layers with kernel size 3 and different dilations to obtain information from different receptive fields; their outputs are concatenated and then fed to a TDC with kernel size 1 that adaptively extracts informative features, as formulated by

where the activation function is ReLU.
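A single-channel sketch of the ATDC computation described above: k parallel kernel-3 dilated convolutions with zero "same" padding, whose outputs are stacked as k channels and fused per frame by a kernel-size-1 convolution. The placement of the ReLU (after the fusion), the single input channel, and the names `dilated_conv1d` and `atdc` are assumptions for illustration; a practical implementation would use multi-channel convolutions such as `torch.nn.Conv1d` with its `dilation` argument.

```python
def dilated_conv1d(x, weights, dilation, bias=0.0):
    """Single-channel 1-D convolution with zero 'same' padding.

    For kernel size 3: y[t] = w0*x[t-d] + w1*x[t] + w2*x[t+d] + bias."""
    half = (len(weights) - 1) // 2
    T = len(x)
    out = []
    for t in range(T):
        acc = bias
        for i, w in enumerate(weights):
            idx = t + (i - half) * dilation
            if 0 <= idx < T:  # frames outside the video count as zeros
                acc += w * x[idx]
        out.append(acc)
    return out


def atdc(x, branch_weights, dilations, fuse_weights, fuse_bias=0.0):
    """ATDC sketch: k parallel kernel-3 dilated convs, stacked as k channels,
    fused per frame by a kernel-size-1 conv (a weighted sum), then ReLU (assumed)."""
    branches = [dilated_conv1d(x, w, d) for w, d in zip(branch_weights, dilations)]
    out = []
    for t in range(len(x)):
        fused = fuse_bias + sum(a * branch[t] for a, branch in zip(fuse_weights, branches))
        out.append(max(0.0, fused))
    return out
```

Because every branch sees the same input but with a different dilation, the fused output mixes several receptive fields at once, which is what lets one student block stand in for several teacher blocks.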

Figure 2.

Receptive field-guided distillation (RFD). LASFormerV2 and LASFormer are the teacher and student, respectively. RFD narrows the gap in the semantic feature structure of two network outputs by conducting feature mimicking under the same receptive fields.

Since the feed-forward layer in the teacher is built with a TDC layer followed by a ReLU activation and follows the HRP criterion described in Section 3.2, one ATDC layer corresponds to k successive teacher blocks, whose dilation rates range over those of the ATDC branches. Thus, for conducting RFD, the output of the teacher block whose dilation rate equals the maximum dilation rate of a student block's ATDC is regarded as the target feature for that student block's output, and a feature mimicking loss is applied to realize the supervision:

where the two terms are the j-th elements of the i-th frame in the matched teacher and student features. This loss aims to keep the effective receptive fields of the two channel sets as consistent as possible. For logit distillation, the outputs of the encoder, decoder, and ARE from the teacher serve as labels for supervising the corresponding outputs of the student via the KL divergence:

where the two distributions are the softmax outputs of each frame in the student and teacher, respectively.
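The two distillation terms can be sketched as below. This sketch assumes a mean-squared (L2) feature mimicking loss averaged over all frames and channels of a matched layer pair, and a frame-averaged KL(teacher ‖ student) on the softmax outputs; the normalization, the KL direction, and the names `feature_mimic_loss` and `kl_distillation_loss` are assumptions rather than the authors' definitions.

```python
import math


def feature_mimic_loss(student_feat, teacher_feat):
    """Mean squared difference between matched T x D feature maps (L2 mimicking, assumed)."""
    total, count = 0.0, 0
    for s_row, t_row in zip(student_feat, teacher_feat):
        for s, t in zip(s_row, t_row):
            total += (s - t) ** 2
            count += 1
    return total / count


def kl_distillation_loss(student_probs, teacher_probs, eps=1e-12):
    """Frame-averaged KL(teacher || student) between T x C softmax outputs."""
    total = 0.0
    for p_s, p_t in zip(student_probs, teacher_probs):
        # Classes with zero teacher probability contribute nothing to the sum.
        total += sum(pt * math.log((pt + eps) / (ps + eps)) for ps, pt in zip(p_s, p_t))
    return total / len(student_probs)
```

Under this reading, the feature term would be summed over the matched layer pairs and the KL term over the encoder, decoder, and ARE outputs.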

3.5. Action Relation Encoding

ARE first updates the features with the first four layers of SSTCN [5] to learn long-range temporal relations, followed by two branches. One branch refines the frame representations with the frame refiner and provides features for CMF. The other branch first performs relational reasoning at the segment level with temporal graph reasoning (TGR) and then provides features for CMF, which adaptively integrates frame-level and segment-level clues to update the features. As such, the design introduces the inductive bias that adjacent frames are more likely to belong to the same class and can thus overcome over-segmentation errors.

3.5.1. Temporal Graph Reasoning

TGR conducts reasoning on a graph whose node set contains N nodes, each denoting a segment; the representation of the i-th segment is given by

where the i-th segment spans from its start frame to its end frame, which are derived from the detected boundaries, and the mapped feature of the t-th frame is computed by a temporal convolution followed by a ReLU function. The edge weight between node i and node j models the relationship between adjacent actions and is constituted by the inter-node similarity, as defined by

which is defined over the neighborhood of node i. Given the representation of node i in the m-th layer, TGR updates the node representations with learnable parameters:

where exp(·) denotes the exponential function.
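The node construction and one TGR reasoning step can be sketched as below. The sketch assumes that boundaries are taken where the frame-wise predicted label changes, that each node is the average of the (already mapped) frame features in its segment, that the inter-node similarity is a dot product normalized with a softmax over a temporal neighborhood of at most five nodes (the node itself and two on each side, matching the neighborhood size mentioned in Section 4.4.1), and that the update is a linear projection `W` followed by ReLU. All of these choices and the function names are hypothetical.

```python
import math


def segments_from_labels(labels):
    """Split frame-wise labels into (label, start, end) runs, end inclusive."""
    segs, start = [], 0
    for t in range(1, len(labels) + 1):
        if t == len(labels) or labels[t] != labels[start]:
            segs.append((labels[start], start, t - 1))
            start = t
    return segs


def segment_nodes(frame_feats, segs):
    """Node features: average of the (already mapped) frame features in each segment."""
    dim = len(frame_feats[0])
    return [[sum(frame_feats[t][c] for t in range(s, e + 1)) / (e - s + 1) for c in range(dim)]
            for _, s, e in segs]


def edge_weights(nodes, radius=2):
    """Softmax of dot-product similarity over each node's temporal neighbourhood
    (itself plus up to `radius` nodes on each side)."""
    weights = []
    for i, h_i in enumerate(nodes):
        nbrs = range(max(0, i - radius), min(len(nodes), i + radius + 1))
        scores = {j: sum(a * b for a, b in zip(h_i, nodes[j])) for j in nbrs}
        m = max(scores.values())  # subtract the max for numerical stability
        exps = {j: math.exp(s - m) for j, s in scores.items()}
        z = sum(exps.values())
        weights.append({j: e / z for j, e in exps.items()})
    return weights


def tgr_layer(nodes, W, radius=2):
    """One reasoning step: weighted neighbour aggregation, projection by W, ReLU."""
    dim = len(nodes[0])
    out = []
    for w_i in edge_weights(nodes, radius):
        agg = [sum(w * nodes[j][c] for j, w in w_i.items()) for c in range(dim)]
        out.append([max(0.0, sum(row[c] * agg[c] for c in range(dim))) for row in W])
    return out
```

Because the graph has one node per segment rather than per frame, a video with about 20 segments needs only about 20 × 5 similarity scores per reasoning step.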

3.5.2. Cross-Model Fusion

CMF aims to combine two clues: the representations from TGR, which contain segment-level temporal relations, and the representations from the frame refiner, which contain frame-level temporal relations. CMF first uses a cross-attention with learnable parameters to summarize the relations between a segment and the nearby frame features from the frame refiner by

where the nearby frames are associated through a window hyper-parameter, and the key and value embeddings of the frames within this window around the segment are computed by two 1×1 temporal convolutions applied to the frame-refiner features. Then, CMF obtains frame-level representations by

where the weights are learnable parameters.
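The first CMF step, in which a segment attends to the frame-refiner features near it, can be sketched as scaled dot-product attention restricted to a window. The sketch assumes that the window runs from δ frames before the segment's start to δ frames after its end (δ = 25 in Section 4.2), clipped to the video, and that the TGR segment representation serves as the query. The key/value projections are omitted, and the name `segment_frame_attention` is hypothetical.

```python
import math


def segment_frame_attention(seg_query, frame_keys, frame_values, start, end, delta=25):
    """Scaled dot-product attention from one segment to the frames in
    [start - delta, end + delta], clipped to the video (window assumed).

    seg_query: length-d list; frame_keys: T x d; frame_values: T x d_v."""
    T = len(frame_keys)
    lo, hi = max(0, start - delta), min(T - 1, end + delta)
    idx = list(range(lo, hi + 1))
    scale = math.sqrt(len(seg_query))
    scores = [sum(q * k for q, k in zip(seg_query, frame_keys[t])) / scale for t in idx]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    attn = [e / z for e in exps]
    summary = [sum(a * frame_values[t][c] for a, t in zip(attn, idx))
               for c in range(len(frame_values[0]))]
    return summary, (lo, hi)
```

Restricting the keys to a window keeps the cost proportional to the segment length plus 2δ, and it matches the observation in Section 4.2 that faraway interactions in CMF are harmful.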

4. Experiments and Discussion

4.1. Datasets and Evaluation Metrics

The 50Salads [42] dataset contains 50 videos with 17 action categories, recording salad preparation activities by 25 actors, each of whom prepares two kinds of salads; each video has an average duration of 6.4 min and usually contains 20 action instances of various temporal durations. The GTEA [43] dataset contains 28 egocentric videos of 11 action categories, recording seven kinds of daily activities conducted by four actors; each video has an average duration of about one minute and usually contains 20 action instances of various temporal durations. The Breakfast [44,45] dataset contains 1712 videos of 48 action categories about breakfast activities in 18 different kitchens, with six action instances per video on average. Following the default setting [5], we use five-fold, four-fold, and four-fold cross-validation and report the average values for 50Salads, GTEA, and Breakfast, respectively.

For quantitative evaluation, we adopt the following metrics, as in previous works [27]: frame-wise accuracy (Acc), segmental edit score (Edit), and F1 score at overlapping thresholds of 0.1, 0.25, and 0.5 (F1@0.1, F1@0.25, and F1@0.5). Acc directly assesses the frame-level results, while the Edit and F1 scores assess the temporal consistency between the ground truth and the inference at the segment level, which reflects over-segmentation errors. Edit is computed from the Levenshtein distance, i.e., the minimum number of segment insertions, deletions, and substitutions [2] needed to convert the predicted segment list into the ground-truth list; it evaluates the ordering of actions and thus indirectly evaluates over-segmentation errors. F1 is the harmonic mean of precision and recall, where a predicted segment counts as correct when its IoU with a ground-truth segment reaches the threshold, which makes the score dependent on the number of actions [5]. We report the peak GPU memory (GPU) for batch size 1 during training and the inference latency (Latency) during testing. Specifically, we record the time cost of inferring each video in the test sets and take the peak time as the inference time (Latency). We use Pam to denote the number of model parameters.
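The segment-level metrics can be sketched as follows. The sketch follows the commonly used MS-TCN-style evaluation logic (Edit as one minus the Levenshtein distance between segment label sequences, normalized by the longer sequence; F1@k with greedy one-to-one matching of same-class segments at an IoU threshold). It is an illustration, not the evaluation code behind the reported numbers, and it omits the background-class handling that some benchmarks apply. The function names are hypothetical.

```python
def get_segments(labels):
    """(label, start, end_exclusive) runs of a frame-wise label list."""
    segs, start = [], 0
    for t in range(1, len(labels) + 1):
        if t == len(labels) or labels[t] != labels[start]:
            segs.append((labels[start], start, t))
            start = t
    return segs


def edit_score(pred, gt):
    """Segmental edit score: 100 * (1 - Levenshtein distance / longer length)."""
    p = [lab for lab, _, _ in get_segments(pred)]
    g = [lab for lab, _, _ in get_segments(gt)]
    # D[i][j]: edits turning the first i predicted segments into the first j true ones.
    D = [[0] * (len(g) + 1) for _ in range(len(p) + 1)]
    for i in range(len(p) + 1):
        D[i][0] = i
    for j in range(len(g) + 1):
        D[0][j] = j
    for i in range(1, len(p) + 1):
        for j in range(1, len(g) + 1):
            sub = 0 if p[i - 1] == g[j - 1] else 1
            D[i][j] = min(D[i - 1][j] + 1,        # deletion
                          D[i][j - 1] + 1,        # insertion
                          D[i - 1][j - 1] + sub)  # substitution or match
    return 100.0 * (1 - D[-1][-1] / max(len(p), len(g)))


def f1_at(pred, gt, iou_threshold):
    """F1@k: each predicted segment is matched to the same-class ground-truth
    segment with the highest IoU, if that segment is unused and IoU >= threshold."""
    ps, gs = get_segments(pred), get_segments(gt)
    used = [False] * len(gs)
    tp = 0
    for lab, s, e in ps:
        best_iou, best_j = 0.0, -1
        for j, (g_lab, g_s, g_e) in enumerate(gs):
            if g_lab != lab:
                continue
            inter = max(0, min(e, g_e) - max(s, g_s))
            iou = inter / (max(e, g_e) - min(s, g_s))
            if iou > best_iou:
                best_iou, best_j = iou, j
        if best_j >= 0 and best_iou >= iou_threshold and not used[best_j]:
            used[best_j] = True
            tp += 1
    precision = tp / len(ps) if ps else 0.0
    recall = tp / len(gs) if gs else 0.0
    if precision + recall == 0:
        return 0.0
    return 100.0 * 2 * precision * recall / (precision + recall)
```

For example, a ground truth with one long segment predicted as three segments (the middle one spurious) scores 100/3 in Edit and 50 at F1@0.1, while Acc can still be high; this is why Edit and F1 expose over-segmentation that Acc hides.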

4.2. Implementation Details

Consistent with [5,34], we sample the videos of all datasets at a temporal resolution of 15 frames per second (fps). We set the two hyper-parameters of the classification loss to 0.15 and 4, respectively; they control the smoothing loss, which affects the temporal structures of predictions. The encoder/decoder blocks have 64, 64, and 256 feature maps for GTEA, 50Salads, and Breakfast, respectively. According to the ablation studies, the best value of the CMF window hyper-parameter is 25; it determines the temporal locations of the frames associated with each segment in CMF, and this setting indicates that frames near a segment are beneficial for frame recognition, while faraway interactions in CMF are harmful. All the models are trained with a learning rate of 0.005 using the Adam optimizer and are implemented in PyTorch 1.6.0 [46].

4.3. Comparison with State-of-the-Art Methods

To evaluate the overall performance of our framework, we compare LASFormer with recent state-of-the-art methods in Table 1 and Table 2. ASFormer [7] is a transformer-based method, but it is not efficient because it relies on deep decoders and self-attention blocks. Most other models are built on TCNs [5] and usually introduce complementary techniques to improve performance, such as structure search [4] and temporal domain adaptation [2]; such operations usually incur high computational costs, and these models are less competitive than ASFormer, as shown in Table 1 and Table 2. LASFormer achieves the best performance on all metrics compared with recent state-of-the-art methods, with significant improvements over the baseline ASFormer. For example, on the GTEA dataset, LASFormer outperforms ASFormer by 2.2%, 1.4%, and 0.5% in Acc, Edit, and F1@50, respectively. Moreover, our framework is very light and efficient compared with existing transformer-based methods, as shown in Table 3: LASFormer requires 0.667 M model parameters, 4.1 G FLOPs, and 1.9 G GPU memory, whereas ASFormer requires 1.146 M model parameters, 7.5 G FLOPs, and 2.7 G GPU memory. Notably, LASFormer requires even fewer model parameters than many TCN-based methods; for example, ASRF, SSTDA, and BCN require 2.31 M, 4.60 M, and 5.72 M parameters, respectively. LASFormer thus achieves better performance with fewer parameters, which demonstrates the efficiency and effectiveness of our framework.
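The relative savings implied by the Table 3 figures quoted above can be computed directly. This small helper, whose names are illustrative, only re-expresses the reported numbers as percentage reductions relative to ASFormer.

```python
# Resource figures quoted in the text above (Table 3, 50Salads).
lasformer = {"params_M": 0.667, "flops_G": 4.1, "gpu_G": 1.9}
asformer = {"params_M": 1.146, "flops_G": 7.5, "gpu_G": 2.7}


def relative_reduction(new, old):
    """Percentage by which `new` is smaller than `old`."""
    return (old - new) / old * 100


reductions = {k: round(relative_reduction(lasformer[k], asformer[k]), 1) for k in asformer}
print(reductions)
```

This yields reductions of about 41.8% in parameters, 45.3% in FLOPs, and 29.6% in peak training GPU memory.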

Table 1.

Comparisons with recent state-of-the-art methods on 50Salads and GTEA. All results are in percentage (%), and the best result for each metric is shown in bold.

Table 2.

Comparison with recent state-of-the-art methods on the Breakfast dataset (in %). The best result for each metric is shown in bold.

Table 3.

Performance comparison on the 50Salads dataset. The best result for each metric is shown in bold.

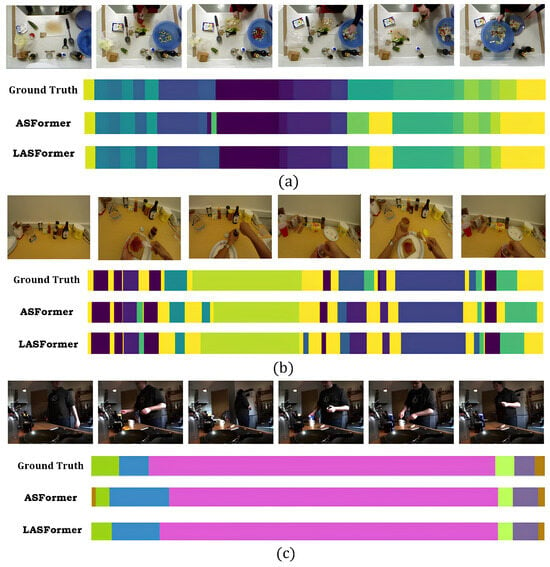

Compared with existing transformer-based models for action segmentation, LASFormerV1, LASFormerV2, and LASFormer all require less computation, less GPU memory, and fewer model parameters, as shown in Table 3. For further verification, we visualize some high-quality results predicted by LASFormer and other methods in Figure 3. The predictions of LASFormer are less over-segmented and more accurate than those of ASFormer, which we attribute to the SIA, SICA, ATDC, and ARE structures, as analyzed in Section 4.4. Furthermore, we analyze the efficiency of our model by comparing it with recent state-of-the-art models in Table 3, where ASFormer+KARI+BEP [50] introduces an effective activity grammar to improve performance by employing ASFormer, BEP, and KARI, with ASFormer providing the frame-level class predictions for conducting BEP. Reference [50] shows that BEP and KARI incur high computational complexity; thus, the FLOPs and Latency values of ASFormer+KARI+BEP must be larger than those of ASFormer. We use “number+” to denote a value that is greater than or equal to the number. Table 3 shows that LASFormer outperforms UARL + MSTCN++ in segmentation results with a smaller model size and GPU cost; the large GPU value of UARL + MSTCN++ is due to training with multiple samplings. LASFormer obtains predictions of quality equivalent to those of ASFormer+KARI+BEP while requiring less inference time, much lower memory costs, and fewer model parameters and FLOPs. These comparisons demonstrate the ability of our method to address the computational and memory challenges of existing video action segmentation models while improving recognition performance.

Figure 3.

Qualitative results of action segmentation with color coding for three datasets: (a) 50Salads; (b) GTEA; (c) Breakfast.

4.4. Ablation Study

4.4.1. Overall Analysis of the Effect of Three Main Components

We test the effect of RFD, SIA, and ARE on computational time and memory cost; the results are shown in Table 3, where ASFormer [7] relies on three decoders to improve the Edit score, and LASFormerV2 replaces the last two decoders of ASFormer [7] with one ARE. Table 3 shows that LASFormerV2 obtains segmentations of quality comparable to those of ASFormer but with smaller GPU, FLOPs, and Latency values. Thus, employing ARE reduces the inference time and memory cost, which is consistent with the following analysis.

In ARE, the computational cost of the first four convolution layers and the frame refiner is consistent with that of the FFN layers in the first five blocks of an ASFormer decoder because their calculations are the same. The computational complexity of TGR is proportional to the numbers of edges and nodes. The number of nodes is small because each node represents an action segment, and the number of edges is also small because a neighborhood of five nodes is enough to cover a large range of video frames; thus, TGR has a small computational cost. For example, for a random video in 50Salads, a self-attention layer usually needs to process over 8000 frames, whereas TGR may only need to process 20 nodes. The small number of nodes also gives the CMF operation in ARE a small computational complexity. Thus, the complexity of ARE is much smaller than that of a decoder, which results in less inference time. By substituting ARE for two decoders, LASFormerV2 also has fewer model parameters (Table 3), which contributes to the reduction in GPU cost.

In Table 3, LASFormerV1 is the model obtained by RFD with LASFormerV2 as the teacher; it obtains segmentation results of quality comparable to those of LASFormerV2 while having smaller Pam and Latency values and a larger GPU value. The smaller Pam and Latency values come from RFD, which realizes model reduction by allowing both the encoder and decoder to contain three blocks instead of nine, which also reduces the inference time. Although RFD uses an ATDC as the FFN layer, the total time and space complexity is still smaller than that of the original blocks. The greater GPU cost of LASFormerV1 is caused by distillation learning. These results demonstrate the positive effect of RFD in reducing the inference time and the number of model parameters.

In Table 3, LASFormer replaces the SA and CA in LASFormerV1 with SIA and SICA, respectively; it obtains better segmentation results than LASFormerV1 with an equivalent GPU memory cost and a smaller inference time (Latency). Their GPU values are equivalent because of their similar numbers of model parameters. One reason for the lower inference time is that SIA avoids the quadratic complexity of SA, as analyzed below.

Complexity: self-attention (SA) layer vs. SIA layer. Suppose that an SA layer contains q self-attention operations, each with its receptive field restricted to a local window of size n, and that the SA operation is formulated as , where , and are the query, key, and value embeddings of the local temporal regions. This results in a complexity of , which is quadratic in n: n should not be small, because long-term dependencies must be captured effectively, but it should also not be too large, because the model must be trained adequately on small-scale data. In addition, computing the query, key, and value embeddings has a complexity of . For a fair comparison, the SIA layer constrains the receptive field of each SIA operation to the same local window size n. In each SIA layer, computing the key, query, and value embeddings that generate , and V has a complexity of , which is smaller than that of the corresponding embeddings in the SA layer because . For the SIA operation, the complexities of , , and are , , and , respectively, so the total complexity of each SIA operation is , where . Since can be satisfied by adjusting , where is also feasible on some datasets, the complexity of the SIA layer can be smaller than that of the SA layer.
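The quadratic dependence on n can be checked by counting multiply-accumulates for standard scaled dot-product attention inside local windows. This is a generic SA cost model, not the paper's exact expressions; the symbol c for the embedding dimension, and the choice to ignore softmax, scaling, and output projections, are assumptions.

```python
def windowed_sa_macs(q: int, n: int, c: int) -> dict:
    """Multiply-accumulate count of one windowed self-attention layer.

    q: number of local windows (attention operations) in the layer
    n: window size (frames per window)
    c: embedding dimension (assumed equal for Q, K, and V)
    Softmax, scaling, and output projections are ignored for simplicity.
    """
    projections = q * 3 * n * c * c      # Q, K, V = X W_q, X W_k, X W_v
    attention = q * 2 * n * n * c        # Q K^T, then softmax(.) V
    return {"projections": projections, "attention": attention}

base = windowed_sa_macs(q=1, n=64, c=64)
wide = windowed_sa_macs(q=1, n=128, c=64)
print(base)                                        # {'projections': 786432, 'attention': 524288}
print(wide["attention"] // base["attention"])      # 4: quadratic in n
print(wide["projections"] // base["projections"])  # 2: linear in n
```

Doubling the window quadruples the attention term of each operation while only doubling the embedding cost; this n² term is the part that SIA is designed to avoid.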

4.4.2. Ablations of the Receptive Field-Guided Distillation

Comparison with other distillation methods. To analyze distillation methods for action segmentation in detail, we conducted an ablation study on RFD, as shown in Table 4. The results in the second and third rows are predicted by a student whose FFNs use the same computation as those of the teacher, and the results in the fourth row are predicted by a student whose FFNs are each built with an ATDC. Table 4 supports two conclusions. (i) Feature mimicking and logit distillation have a positive effect on action segmentation; for example, logit distillation and feature mimicking improve on the baseline by 0.3% Acc, 0.4% Edit, and 0.7% F1@10. (ii) RFD is more effective than the other distillation methods, since the last row achieves the best performance. Thus, keeping the receptive fields of the student's intermediate layers consistent with those of the teacher overcomes the difficulty of feature mimicking and makes distillation more effective for action segmentation.
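For reference, the two baseline objectives compared in Table 4 are commonly implemented as a temperature-scaled KL divergence on frame-wise class probabilities (logit distillation) and a mean squared error between paired intermediate features (feature mimicking). The NumPy sketch below shows these generic forms only; the temperature, the summation over block pairs, and all tensor sizes are assumptions, and RFD itself, which additionally aligns receptive fields via ATDC, is not implemented here.

```python
import numpy as np

def log_softmax(x: np.ndarray) -> np.ndarray:
    """Numerically stable log-softmax over the last axis (action classes)."""
    x = x - x.max(axis=-1, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=-1, keepdims=True))

def logit_distillation(student_logits, teacher_logits, tau: float = 2.0) -> float:
    """Frame-wise KL(teacher || student) on temperature-softened class
    distributions, averaged over frames. Logits have shape (T, C)."""
    log_p_t = log_softmax(teacher_logits / tau)
    log_p_s = log_softmax(student_logits / tau)
    kl_per_frame = (np.exp(log_p_t) * (log_p_t - log_p_s)).sum(axis=-1)
    return float(tau ** 2 * kl_per_frame.mean())

def feature_mimicking(student_feats, teacher_feats) -> float:
    """Mean squared error summed over (student, teacher) feature pairs, each of
    shape (T, D); which blocks are paired is chosen by the caller."""
    return float(sum(np.mean((s - t) ** 2) for s, t in zip(student_feats, teacher_feats)))

rng = np.random.default_rng(0)
t_logits = rng.standard_normal((100, 10))   # hypothetical: 100 frames, 10 classes
s_logits = t_logits + 0.1 * rng.standard_normal((100, 10))
feats_t = [rng.standard_normal((100, 64)) for _ in range(3)]   # three block pairs
feats_s = [f + 0.1 for f in feats_t]
loss = logit_distillation(s_logits, t_logits) + feature_mimicking(feats_s, feats_t)
print(round(loss, 4))
```

Both terms vanish when the student reproduces the teacher exactly; the difficulty noted above is that a shallower student with mismatched receptive fields cannot easily drive the feature term toward zero.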

Table 4.

Performance comparison (%) of distillation methods on the Breakfast dataset. The best result for each metric is shown in bold.

Ablations of the number of blocks in the student network. The number of blocks in the encoder/decoder is an important hyper-parameter: more blocks provide more pairs for feature mimicking, so knowledge is distilled in more detail, but they also increase memory costs. We conducted an ablation study on  of RFD, as shown in Table 5, where the first row shows the test results without RFD. Comparing the first and second rows of Table 5 shows that RFD greatly reduces the number of model parameters; for example, on 50Salads, the parameters decrease from 1.146 M to 0.667 M. Table 5 also shows that performance increases with the number of blocks, but so does the memory cost; the best setting is the one that balances memory cost and performance. We did not test the setting of  because the results from  were almost the same as those of the teacher, and the GPU memory cost would increase dramatically as the value increases. Thus, we chose  for all our experiments.

Table 5.

Comparison of different numbers of blocks on the 50Salads and GTEA datasets. The best result for each metric is shown in bold.

4.4.3. Analysis of Simplified Implicit Attention and Cross-Attention

We conducted an ablation study on SIA and SICA by controlling whether SA and CA are replaced with SIA and SICA, as shown in Table 6, where the first row gives the results of LASFormer using SA and CA. The second row achieves nearly the same performance as the first while requiring less inference time, so SIA and SICA reduce the inference time of the model. Table 3 gives more detail on the efficiency of SIA and SICA: comparing LASFormerV1 and LASFormer shows that SIA and SICA require fewer FLOPs and lower Latency values. For example, LASFormer needs 4.1 G FLOPs, whereas LASFormerV1 needs 4.3 G FLOPs. Thus, SIA and SICA achieve performance similar to that of SA and CA at a lower computational cost, which demonstrates their effectiveness and efficiency in extracting informative features. Computing channel attention from inter-token communication is effective for extracting informative features and, combined with RFD, can approximate the performance of SA and CA.

Table 6.

Effect of simplified implicit attention (SIA) and simplified implicit cross-attention (SICA) on the 50Salads and GTEA datasets. The best result for each metric is shown in bold.

4.4.4. Analysis of the Action Relation Encoding and the Number of Decoders

We conducted ablation studies on ARE and the number of decoders, as shown in Table 7. The results show that the main benefit of stacking decoders is to improve the Edit and F1 scores, since both improve gradually as the number of decoders increases; adding an ARE after the decoder achieves the same effect efficiently at a lower computational cost. Thus, the main function of ARE is to improve the Edit and F1 scores. Table 7 also lists the costs of different operations and shows that stacking decoders is computationally expensive. The best setting is one decoder followed by an ARE, which has a very low memory cost and good performance; for example, this setting has 0.766 M parameters, whereas ASFormer requires 1.146 M. Figure 3 clearly illustrates the effect of ARE: the model with ARE predicts more accurate action boundaries and greatly reduces over-segmentation errors.

Table 7.

Comparison of different numbers of decoders and action relation encoding (ARE) for LASFormerV2 on 50Salads. The best result for each metric is shown in bold.

We attribute the positive effect of ARE to TGR and CMF, and we conducted an ablation study on the two components by replacing each with another structure, as shown in Table 8. The first row shows the results of LASFormer with TGR replaced by stacked TDCs with ReLU activation functions, and the second row shows the results of LASFormer with CMF replaced by an addition operation, given by

where  is the representation of the i-th segment,  is the representation of the i-th frame, and all other symbols have the same meaning as in Section 3.4. Table 8 shows that ARE built with both TGR and CMF achieves better performance on all metrics than the variants that use only one of them. TGR improves the Edit score, since both the second and third rows show higher Edit scores than the first row, and CMF improves Acc. For example, TGR+CMF outperforms Stacking TDC+CMF by 2.4% Edit and 1.1% frame-wise accuracy, and it outperforms TGR+ADD by 2.4% Edit and 1.1% frame-wise accuracy. We conclude that action relational reasoning at the segment level with TGR greatly reduces over-segmentation errors, and that CMF has a structural advantage in extracting informative features.
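As an illustration of the ADD baseline in Table 8, the sketch below assumes that each frame is paired with the segment it belongs to and that fusion is a plain element-wise sum. The function name add_fusion and the frame-to-segment index array are hypothetical, and CMF, which replaces this sum in the full model, is not reproduced.

```python
import numpy as np

def add_fusion(frame_feats: np.ndarray, seg_feats: np.ndarray,
               frame_to_seg: np.ndarray) -> np.ndarray:
    """Hypothetical ADD baseline: each frame feature (T, D) is summed with the
    feature of the segment it belongs to; seg_feats has shape (N, D) and
    frame_to_seg[t] is the segment index of frame t."""
    return frame_feats + seg_feats[frame_to_seg]

frame_to_seg = np.array([0, 0, 0, 1, 1, 2])        # 6 frames in 3 segments
frames = np.zeros((6, 2))
segments = np.array([[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]])
print(add_fusion(frames, segments, frame_to_seg)[:, 0])  # [1. 1. 1. 2. 2. 3.]
```

Such a sum treats frame-level and segment-level cues as interchangeable, which is one plausible reason a learned fusion structure such as CMF yields higher accuracy.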

Table 8.

Effect of temporal graph reasoning (TGR) and the cross-model fusion structure (CMF) on the 50Salads and GTEA datasets. The best result for each metric is shown in bold.

5. Conclusions

In this work, we propose LASFormer, a lightweight transformer framework for action segmentation that explores a novel encoder–decoder structure based on three main designs for processing long input sequences. First, SIA and SICA replace SA and CA, respectively, at a lower computational cost. Second, RFD realizes model reduction and overcomes the gap in semantic feature structure between the intermediate features of the teacher and the student, and thus achieves a better distillation effect. Third, ARE facilitates action relational reasoning at the segment level and reduces over-segmentation without stacking multiple decoders, which greatly reduces computational and memory costs. Extensive experiments and ablation studies demonstrate the efficiency and effectiveness of LASFormer, which outperforms state-of-the-art methods on the 50Salads, GTEA, and Breakfast datasets.

Author Contributions

Conceptualization, Z.M.; methodology, Z.M.; software, Z.M.; validation, Z.M.; formal analysis, Z.M.; investigation, Z.M.; resources, K.L.; data curation, Z.M.; writing—original draft preparation, Z.M.; writing—review and editing, Z.M. and K.L.; visualization, Z.M.; supervision, K.L.; project administration, K.L.; funding acquisition, K.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Beijing Natural Science Foundation, China (No.4222037, L181010), and the National Natural Science Foundation of China (No. 61972035).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Liu, D.; Li, Q.; Jiang, T.; Wang, Y.; Miao, R.; Shan, F.; Li, Z. Towards Unified Surgical Skill Assessment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Computer Vision Foundation, IEEE, Nashville, TN, USA, 20–25 June 2021; pp. 9522–9531.

- Chen, M.H.; Li, B.; Bao, Y.; AlRegib, G.; Kira, Z. Action Segmentation With Joint Self-Supervised Temporal Domain Adaptation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 9451–9460.

- Chen, W.; Chai, Y.; Qi, M.; Sun, H.; Pu, Q.; Kong, J.; Zheng, C. Bottom-up improved multistage temporal convolutional network for action segmentation. Appl. Intell. 2022, 52, 14053–14069.

- Gao, S.H.; Han, Q.; Li, Z.Y.; Peng, P.; Wang, L.; Cheng, M.M. Global2Local: Efficient Structure Search for Video Action Segmentation. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 16800–16809.

- Farha, Y.A.; Gall, J. MS-TCN: Multi-Stage Temporal Convolutional Network for Action Segmentation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–19 June 2019; pp. 3570–3579.

- Li, S.J.; AbuFarha, Y.; Liu, Y.; Cheng, M.M.; Gall, J. MS-TCN++: Multi-Stage Temporal Convolutional Network for Action Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 45, 6647–6658.

- Yi, F.; Wen, H.; Jiang, T. ASFormer: Transformer for Action Segmentation. In Proceedings of the British Machine Vision Conference, London, UK, 20–25 November 2021.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the 9th International Conference on Learning Representations, ICLR 2021, Virtual Event, Austria, 3–7 May 2021.

- Zhou, Q.; Li, X.; He, L.; Yang, Y.; Cheng, G.; Tong, Y.; Ma, L.; Tao, D. TransVOD: End-to-End Video Object Detection with Spatial-Temporal Transformers. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 7853–7869.

- Kim, B.; Lee, J.; Kang, J.; Kim, E.; Kim, H.J. HOTR: End-to-End Human-Object Interaction Detection With Transformers. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR, Virtual Event, Nashville, TN, USA, 20–25 June 2021; pp. 74–83.

- Bao, L.; Zhou, X.; Zheng, B.; Yin, H.; Zhu, Z.; Zhang, J.; Yan, C. Aggregating transformers and CNNs for salient object detection in optical remote sensing images. Neurocomputing 2023, 553, 126560.

- Vecchio, G.; Prezzavento, L.; Pino, C.; Rundo, F.; Palazzo, S.; Spampinato, C. MeT: A graph transformer for semantic segmentation of 3D meshes. Comput. Vis. Image Underst. 2023, 235, 103773.

- Du, D.; Su, B.; Li, Y.; Qi, Z.; Si, L.; Shan, Y. Do we really need temporal convolutions in action segmentation? arXiv 2022, arXiv:cs.CV/2205.13425.

- Fayyaz, M.; Koohpayegani, S.A.; Jafari, F.R.; Sengupta, S.; Joze, H.R.V.; Sommerlade, E.; Pirsiavash, H.; Gall, J. Adaptive Token Sampling for Efficient Vision Transformers. In Proceedings of the Computer Vision—ECCV 2022—17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Volume 13671, pp. 396–414.

- Tang, Y.; Han, K.; Wang, Y.; Xu, C.; Guo, J.; Xu, C.; Tao, D. Patch Slimming for Efficient Vision Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition CVPR, New Orleans, LA, USA, 18–24 June 2022; pp. 12155–12164.

- Yin, H.; Vahdat, A.; Álvarez, J.M.; Mallya, A.; Kautz, J.; Molchanov, P. A-ViT: Adaptive Tokens for Efficient Vision Transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10799–10808.

- Chu, X.; Tian, Z.; Wang, Y.; Zhang, B.; Ren, H.; Wei, X.; Xia, H.; Shen, C. Twins: Revisiting the Design of Spatial Attention in Vision Transformers. In Proceedings of the Advances in Neural Information Processing Systems 34: Annual Conference on Neural Information Processing Systems 2021, Vancouver, BC, Canada, 6–21 December 2021; pp. 9355–9366.

- Yu, W.; Luo, M.; Zhou, P.; Si, C.; Zhou, Y.; Wang, X.; Feng, J.; Yan, S. MetaFormer is Actually What You Need for Vision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10809–10819.

- Li, K.; Wang, Y.; Gao, P.; Song, G.; Liu, Y.; Li, H.; Qiao, Y. UniFormer: Unified Transformer for Efficient Spatial-Temporal Representation Learning. In Proceedings of the Tenth International Conference on Learning Representations, ICLR, Virtual, 25–29 April 2022.

- Pan, J.; Bulat, A.; Tan, F.; Zhu, X.; Dudziak, L.; Li, H.; Tzimiropoulos, G.; Martínez, B. EdgeViTs: Competing Light-Weight CNNs on Mobile Devices with Vision Transformers. In Proceedings of the Computer Vision—ECCV 2022—17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Avidan, S., Brostow, G.J., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Volume 13671, pp. 294–311.

- Behrmann, N.; Golestaneh, S.A.; Kolter, Z.; Gall, J.; Noroozi, M. Unified Fully and Timestamp Supervised Temporal Action Segmentation via Sequence to Sequence Translation. In Proceedings of the Computer Vision—ECCV 2022—17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Volume 13695, pp. 52–68.

- Liu, Z.; Wu, Y.; Yin, Z.; Gao, C. Unsupervised video segmentation for multi-view daily action recognition. Image Vis. Comput. 2023, 134, 104687.

- Carreira, J.; Zisserman, A. Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4724–4733.

- Kuehne, H.; Richard, A.; Gall, J. A Hybrid RNN-HMM Approach for Weakly Supervised Temporal Action Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 765–779.

- Richard, A.; Kuehne, H.; Gall, J. Weakly Supervised Action Learning with RNN Based Fine-to-Coarse Modeling. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1273–1282.

- Lea, C.; Flynn, M.D.; Vidal, R.; Reiter, A.; Hager, G.D. Temporal Convolutional Networks for Action Segmentation and Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1003–1012.

- Lei, P.; Todorovic, S. Temporal Deformable Residual Networks for Action Segmentation in Videos. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6742–6751.

- Ishikawa, Y.; Kasai, S.; Aoki, Y.; Kataoka, H. Alleviating Over-segmentation Errors by Detecting Action Boundaries. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision (WACV), Montreal, QC, Canada, 10–17 October 2021; pp. 2321–2330.

- Zhang, Y.; Tang, S.; Muandet, K.; Jarvers, C.; Neumann, H. Local Temporal Bilinear Pooling for Fine-Grained Action Parsing. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 11997–12007.

- Ahn, H.; Lee, D. Refining Action Segmentation with Hierarchical Video Representations. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 16282–16290.

- Li, M.; Chen, L.; Duan, Y.; Hu, Z.; Feng, J.; Zhou, J.; Lu, J. Bridge-Prompt: Towards Ordinal Action Understanding in Instructional Videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, IEEE, New Orleans, LA, USA, 18–24 June 2022; pp. 19848–19857.

- Chen, L.; Li, M.; Duan, Y.; Zhou, J.; Lu, J. Uncertainty-Aware Representation Learning for Action Segmentation. In Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, IJCAI-22, Vienna, Austria, 23–29 July 2022; pp. 820–826.

- Wang, Z.; Gao, Z.; Wang, L.; Li, Z.; Wu, G. Boundary-Aware Cascade Networks for Temporal Action Segmentation. In Proceedings of the Computer Vision—ECCV 2020, Glasgow, UK, 23–28 August 2020; pp. 34–51.

- Long, F.; Yao, T.; Qiu, Z.; Tian, X.; Luo, J.; Mei, T. Gaussian Temporal Awareness Networks for Action Localization. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 344–353.

- Huang, Y.; Sugano, Y.; Sato, Y. Improving Action Segmentation via Graph-Based Temporal Reasoning. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 14021–14031.

- Kong, Z.; Dong, P.; Ma, X.; Meng, X.; Niu, W.; Sun, M.; Shen, X.; Yuan, G.; Ren, B.; Tang, H.; et al. SPViT: Enabling Faster Vision Transformers via Latency-Aware Soft Token Pruning. In Proceedings of the Computer Vision—ECCV 2022—17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Volume 13671, pp. 620–640.

- Liu, Z.; Mao, H.; Wu, C.; Feichtenhofer, C.; Darrell, T.; Xie, S. A ConvNet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, IEEE, New Orleans, LA, USA, 18–24 June 2022; pp. 11966–11976.

- Mehta, S.; Rastegari, M. MobileViT: Light-weight, General-purpose, and Mobile-friendly Vision Transformer. In Proceedings of the Tenth International Conference on Learning Representations, ICLR, Virtual, 25 April 2022.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, ICCV 2021, Montreal, QC, Canada, 10–17 October 2021; pp. 9992–10002.

- Heo, B.; Kim, J.; Yun, S.; Park, H.; Kwak, N.; Choi, J.Y. A Comprehensive Overhaul of Feature Distillation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1921–1930.

- Stein, S.; Mckenna, S.J. Combining Embedded Accelerometers with Computer Vision for Recognizing Food Preparation Activities. In Proceedings of the 2013 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Zurich, Switzerland, 8–12 September 2013; Volume 33, pp. 3281–3288.

- Fathi, A.; Ren, X.; Rehg, J.M. Learning to recognize objects in egocentric activities. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 3281–3288.

- Kuehne, H.; Arslan, A.; Serre, T. The Language of Actions: Recovering the Syntax and Semantics of Goal-Directed Human Activities. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 780–787.

- Kuehne, H.; Gall, J.; Serre, T. An end-to-end generative framework for video segmentation and recognition. In Proceedings of the 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016; pp. 1–8.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Proceedings of the Advances in Neural Information Processing Systems 32, Vancouver, BC, Canada, 8–14 December 2019; pp. 8024–8035.

- Wang, D.; Hu, D.; Li, X.; Dou, D. Temporal Relational Modeling with Self-Supervision for Action Segmentation. In Proceedings of the Thirty-Fifth AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; pp. 2729–2737.

- Xu, Z.; Rawat, Y.S.; Wong, Y.; Kankanhalli, M.S.; Shah, M. Don’t Pour Cereal into Coffee: Differentiable Temporal Logic for Temporal Action Segmentation. In Proceedings of the NeurIPS, New Orleans, LA, USA, 28 November–9 December 2022.

- Yang, D.; Cao, Z.; Mao, L.; Zhang, R. A temporal and channel-combined attention block for action segmentation. Appl. Intell. 2023, 53, 2738–2750.

- Tian, X.; Jin, Y.; Tang, X. TSRN: Two-stage refinement network for temporal action segmentation. Pattern Anal. Appl. 2023, 26, 1375–1393.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).