Abstract

In the field of rough sets, feature reduction is a hot topic. To better guide the exploration of this topic, various feature reduction devices have been developed. Nevertheless, some challenges regarding these devices should not be ignored: (1) the viewpoint provided by a single fixed measure is insufficient; (2) the final reduct derived from a single constraint is sometimes not robust to data perturbation; (3) the efficiency of deriving the final reduct is low. In this study, to improve the effectiveness and efficiency of feature reduction algorithms, a novel framework named parallel selector for feature reduction is reported. Firstly, the granularity of raw features is quantitatively characterized. Secondly, based on these granularity values, the raw features are sorted. Thirdly, the reordered features are evaluated again by another measure. Finally, following these two evaluations, the reordered features are divided into groups, and the features satisfying the given constraints are selected in parallel. Our framework not only yields a relatively stable feature sequence when data perturbation occurs but also reduces the time consumption of feature reduction. Experimental results over 25 UCI data sets with four different ratios of noisy labels demonstrate the superiority of our framework in comparison with eight state-of-the-art algorithms.

MSC:

68U35

1. Introduction

In the real world, data record abundant information about objects. An open issue is therefore how to effectively extract valuable information from high-dimensional data.

Thus far, various popular feature selection devices based on different deep learning models have been proposed to address this issue. For instance, Gui et al. [1] proposed a neural network-based feature selection architecture that employs attention and learning modules, aiming to reduce computational complexity and improve stability on noisy data. Li et al. [2] proposed a two-step nonparametric approach that combines the strengths of neural networks and feature screening, aiming to overcome the challenges of feature selection in high-dimensional, low-sample-size data. Chen et al. [3] proposed a deep learning-based method for selecting important features from high-dimensional, low-sample-size data. Xiao et al. [4] reported a federated learning system with enhanced feature selection, aiming to achieve high recognition accuracy in wearable sensor-based human activity recognition. In addition, some classical mathematical models based on probability theory have also been used to obtain a qualified feature subset. For example, a Bayesian model was employed for mixture model training and feature selection [5], and the trace of the conditional covariance operator was used to perform feature selection [6].

Currently, in rough set theory [7,8,9,10], feature reduction [11,12,13,14,15,16,17] is drawing considerable attention with regard to this issue by virtue of its effectiveness in alleviating overfitting [18,19], reducing the complexity of learners [20,21,22], and so on. It has been widely employed in general data preprocessing [23] because of a typical advantage: redundant features can be removed from the data without altering the remaining raw features. A key point of traditional feature reduction devices is the search strategy. After an extensive review, we find that most accepted search strategies employed in previous devices fall into the following three fundamental categories.

- Forward searching. The core of this strategy is to identify appropriate features in each iteration and add them into a feature subset called the reduct pool. The process of one popular forward search strategy, forward greedy searching (FGS) [24,25,26,27,28,29,30], is as follows: (1) given a predefined constraint, each candidate feature is evaluated by a measure [31,32,33,34], and the most qualified feature is selected; (2) the selected feature is added into the reduct pool; (3) if the constraint is satisfied, the search process is terminated; otherwise, steps (1) and (2) are repeated. Thus, the features selected as most effective in successive iterations together constitute the final feature subset.

- Backward searching. The core of this strategy is to identify features of inferior quality and remove them from the raw features. The process of one popular backward strategy, backward greedy searching (BGS) [35,36], is as follows: (1) given a predefined constraint, each of the remaining features is evaluated by a measure, and the unqualified features are identified; (2) the identified features are removed from the remaining features; (3) if the constraint is satisfied by the remaining features, the search process is terminated.

- Random searching. The core of this strategy is to randomly select qualified features from candidate features and add them into a reduct pool. The process of one classic random searching strategy, simulated annealing (SA) [37,38], is as follows: (1) given a predefined constraint, a randomly generated binary sequence is used to represent the features (“1” indicates that the corresponding feature is selected, “0” indicates that it is not, and the length of the sequence equals the number of raw features); (2) multiple random changes are applied to the sequence, and the corresponding fitness values are recorded, i.e., the selected features are evaluated; (3) the sequence moves to the new state with the highest fitness value; (4) steps (2) and (3) are executed iteratively until the given constraint is satisfied.
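The forward and backward greedy procedures above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation of any cited algorithm: the measure is passed in as a hypothetical function (higher scores are better), and the constraint is assumed to take the form measure(subset) ≥ target.

```python
from typing import Callable, FrozenSet, List

# Hypothetical signature: a measure maps a feature subset to a score (higher is better).
Measure = Callable[[FrozenSet[int]], float]


def forward_greedy_search(features: List[int], measure: Measure,
                          target: float) -> List[int]:
    """FGS sketch: add the best candidate each round until measure(pool) >= target."""
    pool: List[int] = []
    candidates = sorted(features)
    while candidates and measure(frozenset(pool)) < target:
        # Every remaining candidate is re-evaluated together with the current pool.
        best = max(candidates, key=lambda a: measure(frozenset(pool + [a])))
        pool.append(best)
        candidates.remove(best)
    return pool


def backward_greedy_search(features: List[int], measure: Measure,
                           target: float) -> List[int]:
    """BGS sketch: repeatedly drop the feature whose removal keeps the score highest,
    as long as the remaining features still satisfy measure(...) >= target."""
    kept = sorted(features)
    while len(kept) > 1:
        scores = {a: measure(frozenset(f for f in kept if f != a)) for a in kept}
        least_useful = max(kept, key=lambda a: scores[a])
        if scores[least_useful] < target:
            break  # removing any further feature would violate the constraint
        kept.remove(least_useful)
    return kept
```

Each pass of either loop re-scores every remaining feature, which is exactly the repeated evaluation that makes greedy searching expensive on high-dimensional data.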

There are three main limitations of previous feature reduction algorithms. (1) Lack of diverse evaluations. Evaluations from different measures may disagree; that is, features selected by a single measure are likely to be ineffective when evaluated by other measures with different semantic explanations. (2) Lack of stable selection. When data perturbation occurs, previously selected features based on a single constraint may seriously mislead subsequent selection. (3) Lack of efficient selection. In each iteration, all candidate features must be evaluated; thus, the time consumption tends to become unsatisfactory as the feature dimensionality increases.

Based on the three limitations discussed above, a novel framework named parallel selector for feature reduction is reported in this paper. Our framework differs from previous research in three main respects: (1) more viewpoints for evaluating features are introduced; that is, different measures are employed to acquire more qualified features; (2) data perturbation exerts no obvious effect on our framework, because constraints related to different measures are employed and a stable feature sequence sorted by granularity values is used; (3) the iterative selection process is abandoned and replaced by a parallel selection mechanism, which improves the efficiency of deriving a final reduct.

The calculation process of our framework is as follows. Firstly, to reveal the ability of different features to distinguish samples, feature granularity is incorporated into our framework; that is, the granularity value of each feature is calculated. Secondly, all features are sorted according to the obtained granularity values. Note that a smaller granularity value means that the corresponding feature makes samples more distinguishable. Thirdly, another measure is used to evaluate the importance of the reordered features. Fourthly, the features are divided into groups by considering their comprehensive performance. Finally, the qualified features are selected in parallel from these groups according to the required constraints. Such a framework offers several distinct advantages.

- Providing diverse viewpoints for feature evaluation. In most existing search strategies, the richness of measures is hardly taken into account; that is, the importance of candidate features derived from a single measure is usually deemed sufficient. For example, the final reduct of the greedy-based forward searching algorithm proposed by Hu et al. [39] is derived from a measure named dependency and its corresponding constraint. From this point of view, the selected feature subset may be unqualified if another independent measure is used to evaluate the importance of features. In our framework, by contrast, different measures are employed to evaluate features, so more comprehensive evaluations can be obtained. In view of this, our framework is expected to be more effective than previous feature reduction strategies.

- Improving stability against data perturbation. In previous studies, the reduct pool is composed of the qualified features selected in each iteration, which means that the reduct pool is updated iteratively. It should be pointed out that, in each iteration, all features already added into the reduct pool take part in the next evaluation; e.g., the construction of the final feature subset in the feature reduction strategy proposed by Yang et al. [40] is affected by the previously selected features. From this point of view, if data perturbation occurs, the selected features will mislead subsequent selection. In our framework, by contrast, each feature is weighted by its granularity value, and the resulting feature sequence is relatively stable in the face of data perturbation.

- Accelerating the search process for feature reduction. In most search strategies for selecting features, e.g., heuristic algorithms and the backward greedy algorithm, all candidate features must be evaluated in every iteration to characterize their importance. Such repeated evaluation is inevitably redundant and brings extra time consumption when selection is performed on higher-dimensional data. In our framework, however, feature evaluation is carried out only once for each measure. Moreover, the introduction of a grouping mechanism makes it possible to select qualified features in parallel.

In summary, the main contributions of our framework are as follows: (1) a diverse evaluation mechanism is designed, which provides different viewpoints for evaluating features; (2) granularity is used not only for evaluating features but also for improving the stability of the selection results when data perturbation occurs; (3) an efficient parallel selection mechanism is developed to accelerate the derivation of a final reduct; (4) a novel feature reduction framework is reported, which can be combined with various existing feature reduction strategies to improve the quality of their final reducts.

The remainder of this paper is organized as follows. Section 2 reviews some basic concepts concerning feature reduction. Section 3 details the basic contents of our framework and elaborates on its application to feature reduction. The results of comparative experiments and the corresponding analysis are reported in Section 4. Finally, conclusions and future prospects are outlined in Section 5.

2. Preliminaries

2.1. Neighborhood Rough Set

In the field of rough sets, a decision system can be represented by a pair $DS = \langle U, AT \cup \{d\} \rangle$, in which $U$ is a nonempty set of samples, $AT$ is a nonempty set of conditional features, and $d$ is a decision feature that records the labels of samples. Particularly, the set of all distinct labels in $DS$ is $U_d = \{d_1, d_2, \ldots, d_q\}$, and $\forall x \in U$, $d(x)$ represents the label of sample $x$.

Given a decision system $DS$ and a classification task, an equivalence relation over $U$ can be established with $d$ such that $IND_d = \{(x, y) \in U \times U : d(x) = d(y)\}$. Immediately, $U$ is separated into a set of disjoint blocks $U/IND_d = \{X_1, X_2, \ldots, X_q\}$, in which $X_p = \{x \in U : d(x) = d_p\}$ is the $p$-th decision class, containing all samples with label $d_p$. This process is regarded as information granulation in the field of granular computing [41,42,43,44].

Nevertheless, an equivalence relation may be powerless to perform information granulation over conditional features, mainly because such features frequently record continuous rather than categorical values. In view of this, various substitutions have been proposed. For instance, the fuzzy relation [45,46] induced by a kernel function and the neighborhood relation [47,48] based on a distance function are two widely accepted devices. Both of them can perform information granulation at different scales, and the parameter used in these two binary relations is the key to offering multiple scales. Given a decision system $DS$ and a radius $\delta \ge 0$, $\forall A \subseteq AT$, the neighborhood relation over $A$ is

$$N_A^{\delta} = \{(x, y) \in U \times U : \Delta_A(x, y) \le \delta\}, \tag{1}$$

in which $\Delta_A(x, y)$ is the distance between $x$ and $y$ over $A$.

A larger value of $\delta$ produces larger neighborhoods; conversely, a smaller value of $\delta$ generates smaller neighborhoods. The neighborhood of $x \in U$ is then given by $\delta_A(x) = \{y \in U : (x, y) \in N_A^{\delta}\}$.

In the field of rough sets, one of the important tasks is to approximate target concepts by the results of information granulation. Generally speaking, the targets to be approximated are the decision classes in $U/IND_d$. Given a decision system $DS$ and a radius $\delta$, $\forall A \subseteq AT$ and $\forall X_p \in U/IND_d$, where $X_p$ is the $p$-th decision class related to label $d_p$, the neighborhood lower and upper approximations of $X_p$ are

$$\underline{N_A^{\delta}}(X_p) = \{x \in U : \delta_A(x) \subseteq X_p\}, \qquad \overline{N_A^{\delta}}(X_p) = \{x \in U : \delta_A(x) \cap X_p \neq \emptyset\}.$$

Following the above definition, the approximations related to the decision feature $d$ can be presented. Given a decision system $DS$ and a radius $\delta$, $\forall A \subseteq AT$, the neighborhood lower and upper approximations of $d$ are

$$\underline{N_A^{\delta}}(d) = \bigcup_{p=1}^{q} \underline{N_A^{\delta}}(X_p), \qquad \overline{N_A^{\delta}}(d) = \bigcup_{p=1}^{q} \overline{N_A^{\delta}}(X_p).$$

2.2. Neighborhood-Based Measures

2.2.1. Granularity

Information granules with adjustable granularity are becoming one of the main goals of data transformation for two fundamental reasons: (1) granular computing with fitted granularity leads to processing that is less time-demanding when dealing with detailed numeric problems; (2) information granules with fitted granularity have emerged as a sound conceptual and algorithmic vehicle because they offer a more holistic view of the data, supporting a level of abstraction aligned with the nature of specific problems. Thus, granularity has become a significant concept, and various models regarding it have been developed and utilized.

Given a pair $\langle U, R \rangle$ in which $U$ is a finite nonempty set of samples and $R$ is a binary relation over $U$, $\forall x \in U$, the $R$-related set [34] of $x$ is

$$R(x) = \{y \in U : (x, y) \in R\}.$$

Given a pair $\langle U, R \rangle$, the granularity [49] related to $R$ can be defined as

$$GR(R) = \frac{|R|}{|U|^2} = \frac{1}{|U|^2} \sum_{x \in U} |R(x)|, \tag{7}$$

in which $|X|$ is the cardinality of set $X$.

Following Equation (7), it is not difficult to see that $0 \le GR(R) \le 1$. Without loss of generality, the binary relation $R$ can be regarded as one of the most intuitive representations of information granulation over $U$. The granularity corresponding to $R$ then plainly reveals the discriminability of the information granulation results (all $R$-related sets). A smaller value of $GR(R)$ means that $R$ contains fewer ordered pairs, so $R$ becomes more discriminative; that is, most samples in $U$ can be distinguished from each other.

Note that $N_A^{\delta}$ mentioned in Equation (1) is also a binary relation, which makes it possible to propose the following concept of granularity based on the neighborhood relation. Given a decision system $DS$ and a radius $\delta$, $\forall A \subseteq AT$, the $N_A^{\delta}$-based granularity can be defined as follows:

$$GR(N_A^{\delta}) = \frac{|N_A^{\delta}|}{|U|^2}.$$

Since $N_A^{\delta}$ is reflexive, $\frac{1}{|U|} \le GR(N_A^{\delta}) \le 1$.

Granularity characterizes the inherent performance of information granulation from the perspective of feature distinguishability. However, it should be emphasized that sample labels do not participate in this evaluation of features, which may bring potential limitations to subsequent learning tasks. In view of this, a classical measure called conditional entropy can be considered.
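The neighborhood relation, the lower and upper approximations, and the neighborhood-based granularity of this section can be computed directly from their set definitions. The following Python sketch assumes a Euclidean distance over normalized features (the text only requires some distance function), stores neighborhoods as sets of sample indices, and computes granularity as the proportion of ordered sample pairs that belong to the neighborhood relation; all function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Set, Tuple


def neighborhoods(data: List[Sequence[float]], attrs: Sequence[int],
                  delta: float) -> List[Set[int]]:
    """Neighborhood of each sample over the features in attrs (as index sets).

    Assumption: Euclidean distance; features are assumed to be normalized.
    """
    def dist(x: Sequence[float], y: Sequence[float]) -> float:
        return math.sqrt(sum((x[a] - y[a]) ** 2 for a in attrs))

    n = len(data)
    return [{j for j in range(n) if dist(data[i], data[j]) <= delta} for i in range(n)]


def approximations(nbrs: List[Set[int]], labels: Sequence[int]
                   ) -> Tuple[Dict[int, Set[int]], Dict[int, Set[int]]]:
    """Neighborhood lower and upper approximations of every decision class."""
    classes: Dict[int, Set[int]] = {}
    for i, label in enumerate(labels):
        classes.setdefault(label, set()).add(i)
    # Lower: the neighborhood lies entirely inside the class; upper: it meets the class.
    lower = {p: {i for i, nb in enumerate(nbrs) if nb <= cls} for p, cls in classes.items()}
    upper = {p: {i for i, nb in enumerate(nbrs) if nb & cls} for p, cls in classes.items()}
    return lower, upper


def granularity(nbrs: List[Set[int]]) -> float:
    """Share of ordered pairs (x, y) with y in the neighborhood of x: sum |nb| / |U|^2."""
    n = len(nbrs)
    return sum(len(nb) for nb in nbrs) / (n * n)


if __name__ == "__main__":
    data = [[0.0], [0.1], [0.5], [0.6]]
    nbrs = neighborhoods(data, attrs=[0], delta=0.2)
    lower, upper = approximations(nbrs, labels=[0, 0, 1, 0])
    print(lower, upper, granularity(nbrs))
```

On this toy input, every neighborhood holds two of the four samples, so the granularity is 8/16 = 0.5; with δ = 0 each sample is its own only neighbor and the granularity falls to 1/|U| = 0.25. The lower approximation of d is the union of the class-wise lower approximations.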

2.2.2. Conditional Entropy

Conditional entropy is another important measure in the neighborhood rough set, which characterizes the discriminating performance of $A$ with respect to $d$. Thus far, various forms of conditional entropy [50,51,52,53] have been proposed for different requirements. A widely used form is shown below.

Given a decision system $DS$, $\forall A \subseteq AT$, and a radius $\delta$ such that $\delta \ge 0$, the conditional entropy [54] of $d$ with respect to $A$ is defined as follows:

Obviously, holds. A lower value of conditional entropy indicates a better discrimination performance of $A$. Immediately, . Supposing , we then have ; that is, the conditional entropy decreases monotonically as $A$ grows.

2.3. Feature Reduction

In the field of rough sets, one of the most significant tasks is to abandon redundant or irrelevant conditional features, which is known as feature reduction. Various measures have been utilized to construct constraints for different requirements [55,56], and various feature reduction approaches have subsequently been explored. A general form of feature reduction presented by Yao et al. [57] is introduced as follows.

Given a decision system $DS$, $\forall A \subseteq AT$, and a radius $\delta \ge 0$, let the $\rho$-constraint be a constraint based on a measure $\rho$ related to radius $\delta$; $A$ is referred to as a $\rho$-based qualified feature subset ($\rho$-reduct) if and only if the following conditions are satisfied:

- $A$ meets the $\rho$-constraint;

- $\forall B \subset A$, $B$ does not meet the $\rho$-constraint.

It is not difficult to observe that $A$ is actually a minimal subset of $AT$ that satisfies the constraint. To obtain such a subset, various search strategies have been proposed. For example, an efficient search strategy named forward greedy searching is widely accepted, whose core process is to evaluate all candidate features and select qualified features according to a measure and the corresponding constraint. Based on such a strategy, we can determine which feature should be added into or removed from $A$. For more details about the forward greedy searching strategy, readers can refer to [58].
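The two conditions of the reduct definition can be checked mechanically. The sketch below is a brute-force illustration, assuming the constraint is given as a hypothetical Python predicate on feature subsets; enumerating all proper subsets is exponential, so a second function shows the usual shortcut that is valid only when the constraint is monotone (any superset of a satisfying subset also satisfies it).

```python
from itertools import combinations
from typing import Callable, FrozenSet, Iterable

# Hypothetical: a constraint is any predicate on a feature subset,
# e.g. "the measure of the subset reaches that of all raw features".
Constraint = Callable[[FrozenSet[int]], bool]


def is_reduct(features: Iterable[int], meets: Constraint) -> bool:
    """Check both conditions of the reduct definition by brute force."""
    subset = frozenset(features)
    if not meets(subset):                       # condition 1: A meets the constraint
        return False
    for k in range(len(subset)):                # condition 2: no proper subset B does
        for b in combinations(sorted(subset), k):
            if meets(frozenset(b)):
                return False
    return True


def is_reduct_monotone(features: Iterable[int], meets: Constraint) -> bool:
    """Shortcut valid only for monotone constraints: test subsets missing one feature."""
    subset = frozenset(features)
    return meets(subset) and not any(meets(subset - {a}) for a in subset)
```

Under monotonicity, if some proper subset B of A satisfied the constraint, then so would A minus any feature outside B, so testing the |A| one-feature removals is enough.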

3. The Construction of a Parallel Selector for Feature Reduction

3.1. Isotonic Regression

In the field of statistical analysis, isotonic regression [59,60] has become a typical topic of statistical inference. For instance, in a medical clinical trial, it can be assumed that as the dose of a drug increases, so too do its efficacy and toxicity. However, the estimated ratio of patient toxicity at each dose level may be inaccurate; that is, the estimated probability of toxicity may not be a nondecreasing function of the dose level, which hinders the statistical observation of the average reaction of patients as the drug dosage increases. In view of this, isotonic regression can be employed to reveal the variation rule of clinical data. Generally, given a nonempty and finite set $X$, an ordering relation “⪯” over $X$ can be defined as follows.

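The ordering relation “⪯” is a total order over $X$ if and only if the following conditions are satisfied:

- Reflexivity: $\forall x \in X$, $x \preceq x$.

- Transitivity: $\forall x, y, z \in X$, if $x \preceq y$ and $y \preceq z$, then $x \preceq z$.

- Antisymmetry: $\forall x, y \in X$, if $x \preceq y$ and $y \preceq x$, then $x = y$.

- Comparability: $\forall x, y \in X$, we always have $x \preceq y$ or $y \preceq x$.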

Without loss of generality, the ordering relation “⪰” can be defined in a similar way. Specifically, if an ordering relation “⪯” or “⪰” over $X$ satisfies only reflexivity, transitivity, and antisymmetry, it is considered a partial order. Now we take “⪯” into discussion. Suppose that $X = \{x_1, x_2, \ldots, x_n\}$; the definition of an isotonic function can then be obtained as follows.

Given a function $Y: X \to \mathbb{R}$, if $\forall x_i, x_j \in X$, $x_i \preceq x_j$ implies $Y(x_i) \le Y(x_j)$, then $Y$ is called an isotonic function according to the ordering relation “⪯” over $X$.

Let $\mathcal{F}$ denote the set of all isotonic functions over $X$; then we can obtain the following definition of isotonic regression.

Given a function $Y: X \to \mathbb{R}$, $Y^* \in \mathcal{F}$ is the isotonic regression of $Y$ if it satisfies

$$\sum_{x \in X} w(x) \big(Y(x) - Y^*(x)\big)^2 = \min_{F \in \mathcal{F}} \sum_{x \in X} w(x) \big(Y(x) - F(x)\big)^2, \tag{10}$$

in which $w(x)$ is the weight coefficient and $w(x) > 0$.

Following Equation (10), we can observe that $Y^*$, i.e., the solution of isotonic regression, can be viewed as the projection of $Y$ onto $\mathcal{F}$ under the inner product $\langle Y, F \rangle = \sum_{x \in X} w(x) Y(x) F(x)$. An open problem is then how to find such a projection. Thus far, various algorithms [61,62] have been proposed to address this issue, among which the pool adjacent violators algorithm (PAVA) proposed by Ayer et al. [62] is considered the most widely utilized under a total order. Algorithm 1 gives the detailed process of PAVA for obtaining the $Y^*$ shown in Equation (10).

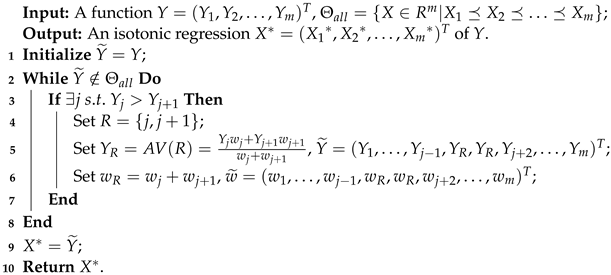

Algorithm 1: Pool adjacent violators algorithm (PAVA).
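Algorithm 1 is given as an image. The following Python sketch of the standard stack-based PAVA solves the weighted problem in Equation (10) for a nondecreasing fit under a total order; the function name and signature are our own, and the bookkeeping (a stack of pooled blocks) is one common implementation choice that may differ from the steps of Algorithm 1.

```python
from typing import List, Optional, Sequence


def pava(y: Sequence[float], w: Optional[Sequence[float]] = None) -> List[float]:
    """Weighted isotonic (nondecreasing) regression under a total order.

    Minimizes sum_i w_i * (y_i - f_i)^2 subject to f_1 <= f_2 <= ... <= f_n.
    """
    if w is None:
        w = [1.0] * len(y)
    # Stack of pooled blocks: [weighted mean, total weight, number of elements].
    blocks: List[List[float]] = []
    for yi, wi in zip(y, w):
        blocks.append([float(yi), float(wi), 1])
        # Pool adjacent violators: merge while the last two block means decrease.
        while len(blocks) > 1 and blocks[-2][0] > blocks[-1][0]:
            m2, w2, c2 = blocks.pop()
            m1, w1, c1 = blocks.pop()
            total = w1 + w2
            blocks.append([(m1 * w1 + m2 * w2) / total, total, c1 + c2])
    fitted: List[float] = []
    for mean, _, count in blocks:
        fitted.extend([mean] * int(count))
    return fitted
```

Each element is pooled at most once, so this variant runs in amortized linear time. Passing per-point sample sizes as `w` yields the weighted fit used for estimated proportions, such as the dose-response setting of Example 1.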

In Algorithm 1, each element of $Y$ may need to be considered for value correction. In the worst case, if all elements of $Y$ need to be corrected, the time complexity of Algorithm 1 is . To further facilitate the understanding of the above process, an example is presented below.

Example 1.

Let us introduce the statistical model through a medical example.

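1. Suppose the dosage administered to a kind of animal is gradually increased such that $t_1 < t_2 < \cdots < t_k$, and $N$ animals are tested at each dosage $t_i$. Let $y_{ij} \in \{0, 1\}$ denote the reaction of the $j$-th animal at dosage $t_i$; $\Pr(y_{ij} = 1) = P_i$ means that the active proportion at dosage $t_i$ is $P_i$, which is usually estimated by the sample proportion

$$\hat{P}_i = \frac{1}{N} \sum_{j=1}^{N} y_{ij}. \tag{11}$$

2. Following Equation (11), suppose that $P_1 \le P_2 \le \cdots \le P_k$, i.e., the active proportions have the same order as the dosages. Since $N\hat{P}_i$ follows the binomial distribution $B(N, P_i)$, the likelihood function of $P = (P_1, P_2, \ldots, P_k)$ is

$$L(P) = \prod_{i=1}^{k} \binom{N}{N\hat{P}_i} P_i^{N\hat{P}_i} (1 - P_i)^{N - N\hat{P}_i}.$$

3. To give a further explanation, suppose ; the specific calculation process is shown in Table 1.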

Table 1. A specific calculation process.

- (a) ;

- (b) .

Due to , we have , ; that is, , , and so Equation (14) holds, which facilitates the general statistical analysis of the medicine’s effects.

3.2. Isotonic Regression-Based Numerical Correction

It should be emphasized that isotonic regression can be understood as a general framework, which has proven valuable not only in providing inexpensive technical support for data analysis in the medical field but also in bringing new motivation to other research in the academic community. Correspondingly, by reviewing the two feature measures introduced in Section 2.2, we find that, although both measures have been specifically introduced, the statistical correlation between them still lacks explanation. This naturally raises an interesting question: can we explore and analyze the statistical laws between these two measures by means of isotonic regression? Such an analysis is not difficult to realize.

Given a decision system , , and a radius such that , , we have the -based granularity and the conditional entropy . Now, we sort the conditional features in ascending order of their granularity values such that . In particular, a conditional entropy-based function following the same feature order as can be obtained.

Definition 1.

Given a function such that which is based on , if we have or , then is called an isotonic function according to the ordering relation “⪯” over .

is employed in representing all isotonic functions over such that . Likewise, we can obtain the following definition.

Definition 2.

where is the weight coefficient, .Given a function , , is the isotonic regression of if it satisfies

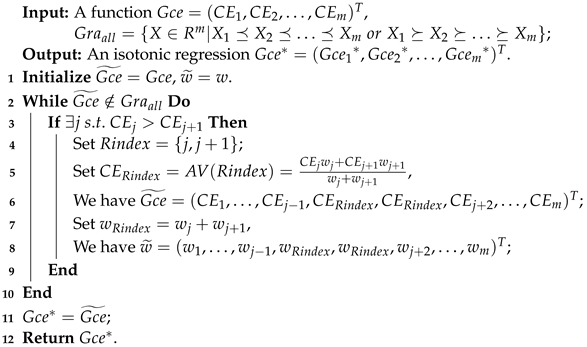

Similarly, we can still apply the PAVA shown in Section 3.1 to calculate , which can be denoted by Algorithm 2.

Algorithm 2: Pool Adjacent Violators Algorithm for Feature Measure (PAVA_FM).

Since Algorithm 2 follows the same process as Algorithm 1, its time complexity is similar to that of Algorithm 1, i.e., . Specifically, the time complexity of Algorithm 2 can also be written as , where m represents the number of raw features.

3.3. Isotonic Regression-Based Parallel Selection

Reviewing traditional feature reduction algorithms, we observe that all candidate features must be evaluated in the process of selecting qualified features, which results in a redundant evaluation process. Additionally, although various measures have been explored and corresponding constraints can be constructed, it should not be forgotten that a single evaluation viewpoint is insufficient and that reducts derived from a single constraint are relatively unstable. Therefore, resolving these issues becomes urgent. In view of this, motivated by Section 3.2, we introduce the following framework for feature reduction.

- (1) Calculate the granularity of each conditional feature in turn, sort these features in ascending order of granularity value, and record the original location index of each sorted feature.

- (2) Based on (1), calculate the conditional entropy of each sorted feature according to the recorded location index.

- (3) Based on (2), obtain the isotonic regression of the conditional entropy according to Definitions 1 and 2. Inspired by Example 1, we group features by their updated conditional entropy; that is, features with the same updated value of conditional entropy are placed into one group. Assume that the number of groups is , and m is the number of raw features.

- (4) Based on (3), when becomes too large, i.e., approaches m, the grouping mechanism becomes meaningless. To prevent this, we propose a mechanism to reduce the number of groups. That is, beginning with , calculate the D-value (difference) between and (the value of a group is the corrected conditional entropy of the features in the group, obtained via isotonic regression), obtain the sum of all D-values such that , and calculate the mean D-value of . Beginning with again, if , merge with .
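Steps 1 to 4 above can be sketched as follows. This is a hedged illustration: per-feature granularity and conditional entropy values are taken as precomputed inputs; the merge condition in step 4 is elided in the text, so the sketch assumes that two adjacent groups are merged when their D-value is smaller than the mean D-value; and the merge step is applied unconditionally instead of only when the number of groups approaches m. Function names are hypothetical.

```python
from typing import List, Sequence


def pava(values: Sequence[float]) -> List[float]:
    """Unweighted nondecreasing isotonic regression (pool adjacent violators)."""
    blocks: List[List[float]] = []  # each block: [pooled mean, size]
    for v in values:
        blocks.append([float(v), 1.0])
        while len(blocks) > 1 and blocks[-2][0] > blocks[-1][0]:
            m2, n2 = blocks.pop()
            m1, n1 = blocks.pop()
            blocks.append([(m1 * n1 + m2 * n2) / (n1 + n2), n1 + n2])
    fitted: List[float] = []
    for mean, size in blocks:
        fitted.extend([mean] * int(size))
    return fitted


def group_features(granularity: Sequence[float],
                   entropy: Sequence[float]) -> List[List[int]]:
    """Return groups of original feature indices following steps 1 to 4."""
    # Step 1: sort features by ascending granularity, keeping original indices.
    order = sorted(range(len(granularity)), key=lambda i: granularity[i])
    # Steps 2 and 3: isotonic regression of the reordered conditional entropies.
    fitted = pava([entropy[i] for i in order])
    groups: List[List[int]] = []
    values: List[float] = []
    for idx, val in zip(order, fitted):
        if values and val == values[-1]:
            groups[-1].append(idx)  # same corrected entropy: same group
        else:
            groups.append([idx])
            values.append(val)
    if len(groups) < 2:
        return groups
    # Step 4 (assumed rule): merge neighboring groups whose D-value is below the mean.
    diffs = [values[k + 1] - values[k] for k in range(len(values) - 1)]
    mean_diff = sum(diffs) / len(diffs)
    merged = [list(groups[0])]
    for k, diff in enumerate(diffs):
        if diff < mean_diff:
            merged[-1].extend(groups[k + 1])
        else:
            merged.append(list(groups[k + 1]))
    return merged
```

Because the fitted entropies are nondecreasing along the granularity order, every D-value is nonnegative, and merging only joins neighboring groups whose corrected entropies are comparatively close.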

The main contributions of the above framework are as follows: (1) different measures can be combined in the form of grouping, and (2) a parallel selection mechanism for selecting features is provided. Furthermore, the related reduction strategy is called isotonic regression-based fast feature reduction (IRFFR), and its process is as follows: (1) from to , select the features with the minimum granularity in each group and put them into a reduct pool; (2) from group to , select the features with the minimum original conditional entropy in each group and put them into the reduct pool.
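The selection rule of IRFFR can be sketched as follows. The boundary between the groups handled by granularity and those handled by original conditional entropy is elided in the text, so it appears here as a hypothetical `split` parameter; one feature is taken per group, with ties resolved by the first feature in the group. Because each group is processed independently, the picks are mapped over a thread pool to mirror the parallel selection idea; this is an illustrative choice, not a claim about how the authors parallelized their implementation.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def irffr_select(groups: List[List[int]], granularity: Sequence[float],
                 entropy: Sequence[float], split: int) -> List[int]:
    """One pick per group: lowest granularity in groups[:split], lowest original
    conditional entropy in groups[split:]. `split` is a hypothetical parameter."""
    def pick(k: int) -> int:
        score = granularity if k < split else entropy
        return min(groups[k], key=lambda a: score[a])

    # The groups do not depend on each other, so they can be processed in parallel;
    # pool.map returns the results in group order.
    with ThreadPoolExecutor() as pool:
        return list(pool.map(pick, range(len(groups))))
```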

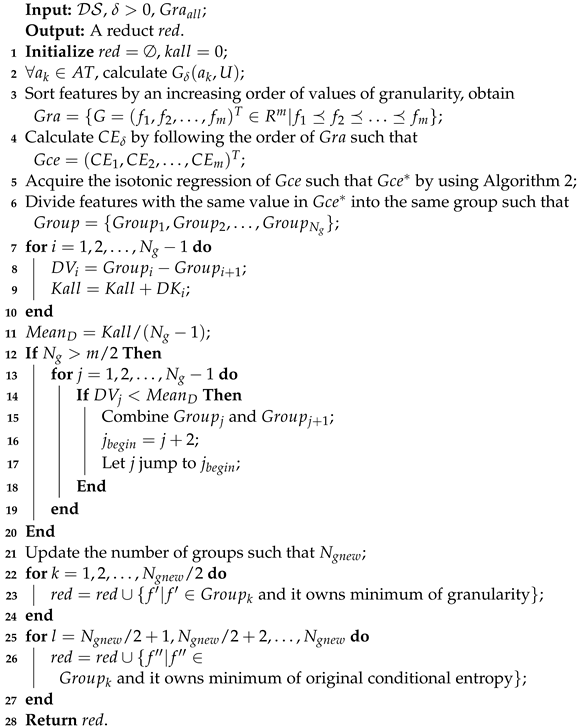

Based on the above discussion, further details of IRFFR are shown in Algorithm 3.

Algorithm 3: Isotonic Regression-based Fast Feature Reduction (IRFFR).

Unlike the greedy-based forward searching strategy, IRFFR adds raw features in groups. Notably, IRFFR offers a parallel selection pattern, which can greatly reduce the corresponding time consumption.

The time complexity of IRFFR mainly comprises three components. (1) Obtaining the isotonic regression feature sequence and dividing features into groups (Steps 2 to 6): in the worst case, all features in are required to be queried, and the number of scans for feature sorting is , i.e., ; thus, the time complexity of this phase is , where is the set of raw conditional features over . (2) Updating the number of groups (Steps 7 to 21): in the worst case, considering that holds, the time complexity of this phase can be ignored. (3) Selecting features from groups (Steps 22 to 27): this requires times (), and the time complexity of this phase can also be ignored. Therefore, in general, the time complexity of IRFFR is . It is worth noting that the time complexity of the forward greedy searching strategy is [16]. From this point of view, IRFFR can improve the efficiency of feature reduction.

Example 2.

The following toy data set, which contains 12 samples and 11 features, is given to further explain Algorithm 3; all samples are classified into four categories by d (see Table 2).

Table 2.

A toy data set.

1. For each feature, we have , , , , , , , , , , .

2. Sort the features in ascending order such that , , , , , , , , , , .

3. Calculate the corresponding conditional entropy such that , , , , , , , , , , .

4. The isotonic regression of is , then , , , , , .

5. , , , , , , we then have , , , .

6. For , we put and into a reduct pool; for , we put and into a reduct pool. That is, we have the final reduct .

4. Experiments

4.1. Datasets

To demonstrate the effectiveness of our proposed framework for feature reduction, 25 UCI data sets were used in the experiments. Table 3 shows the details of these data sets.

Table 3.

Data description.

During the experiments, each data set is represented as a two-dimensional table: the rows represent samples, so the number of rows is the number of samples involved in the calculation; the columns represent the features of samples, so the number of columns is the number of features of each sample.

It is worth noting that data perturbation is sometimes inevitable in practical applications. Therefore, it is necessary to investigate the robustness of the proposed algorithm when data perturbation occurs. In our experiments, label noise is used to generate data perturbation. Specifically, perturbations are injected into the raw labels: if the perturbation ratio is given as , the injection is realized by randomly selecting a number of samples determined by this ratio and injecting white Gaussian noise (WGN) [63] into their labels. It should be emphasized that an excessive WGN ratio will cause the data to lose their original semantics, which may make the experimental results meaningless. Thus, in the following experiments, to better observe the performance of our proposed algorithm as the noise ratio of raw labels increases, we consider four WGN ratios such that , , and .
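Injecting Gaussian noise into categorical labels requires mapping the noisy values back to valid labels, and the text does not specify how this is done. The sketch below is therefore one possible reading, with every detail an assumption: labels are encoded as integers 0 to q − 1, round(ratio × |U|) samples are chosen uniformly at random, zero-mean Gaussian noise with a hypothetical standard deviation `sigma` is added to their labels, and the result is rounded and clipped to the valid range.

```python
import random
from typing import List, Sequence


def inject_label_noise(labels: Sequence[int], ratio: float, num_classes: int,
                       sigma: float = 1.0, seed: int = 0) -> List[int]:
    """Perturb the labels of round(ratio * n) randomly chosen samples.

    Assumptions (not from the text): labels are integers 0..num_classes-1, the
    noise is zero-mean Gaussian with standard deviation `sigma`, and the noisy
    value is rounded and clipped back into the valid label range.
    """
    rng = random.Random(seed)
    noisy = list(labels)
    n_noisy = round(ratio * len(labels))
    for i in rng.sample(range(len(labels)), n_noisy):
        value = labels[i] + rng.gauss(0.0, sigma)
        noisy[i] = min(num_classes - 1, max(0, round(value)))
    return noisy
```

Because rounding can map a noisy value back to the original label, the number of labels that actually change is at most round(ratio × |U|).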

4.2. Experimental Configuration

In this experiment, the neighborhood rough set is constructed with 20 different neighborhood radii such that . Moreover, 10-fold cross-validation [64] is applied to the calculation of each reduct, as follows: (1) each data set is randomly partitioned into two groups of equal size, with the first group regarded as the testing samples and the second group regarded as the training samples; (2) the set of training samples is further partitioned into 10 groups of equal size such that ; in the first round of computation, is combined such that , which is used to derive a reduct, and the derived reduct is then used to predict the labels of the testing samples; …; in the last round of computation, is used to derive the reduct, and, in the same way, the derived reduct is used to predict the labels of the testing samples.
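The partitioning protocol described above can be sketched as index bookkeeping. Assumptions: the shuffle uses a fixed seed, the 10 training parts are formed by striding so their sizes differ by at most one, and round k derives the reduct from all training parts except the k-th, as in standard 10-fold cross-validation; the function name is hypothetical.

```python
import random
from typing import List, Tuple


def evaluation_splits(n: int, folds: int = 10,
                      seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Return (reduct_training_indices, test_indices) for each round.

    Half of the samples form a fixed test set; the other half is cut into
    `folds` parts, and round k derives the reduct from all parts except part k.
    """
    rng = random.Random(seed)
    idx = list(range(n))
    rng.shuffle(idx)
    test, train = idx[: n // 2], idx[n // 2:]
    parts = [train[k::folds] for k in range(folds)]
    rounds = []
    for k in range(folds):
        used = [i for j, part in enumerate(parts) if j != k for i in part]
        rounds.append((used, test))
    return rounds
```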

All experiments were carried out on a personal computer with Windows 10 and an Intel Core i9-10885H CPU (2.40 GHz) with 16.00 GB memory. The programming language used was MATLAB R2017b.

4.3. The First Group of Experiments

In the first group of experiments, Algorithm 3 is employed to derive the final reducts of IRFFR. Based on these reducts, we verify the effectiveness of IRFFR by comparing it with six state-of-the-art feature reduction methods in three aspects: classification accuracy, classification stability, and elapsed time. It is worth noting that the comparative method Ensemble Selector for Attribute Reduction (ESAR) is based on the ensemble [65] framework. The comparative methods are as follows:

- Knowledge Change Rate (KCR) [33].

- Forward Greedy Searching (FGS) [39].

- Self Information (SI) [32].

- Attribute Group (AG) [66].

- Ensemble Selector for Attribute Reduction (ESAR) [40].

- Novel Fitness Evaluation-based Feature Reduction (NFEFR) [67].

4.3.1. Comparison of Classification Accuracy

The classification accuracy was employed to measure the classification performance of the seven algorithms. Three classic classifiers, KNN (K-nearest neighbor, K = 3) [68], CART (classification and regression tree) [69], and SVM (support vector machine) [70], were employed to reflect the classification performance. Given a decision system with universe $U$ and decision attribute $d$, assume that $U$ is divided into $z$ disjoint groups of the same size, i.e., $U = U_1 \cup U_2 \cup \cdots \cup U_z$ with $U_i \cap U_j = \emptyset$ for $i \neq j$ (as 10-fold cross-validation was employed in this experiment, $z = 10$). The classification accuracy related to reduct $red_k$ ($red_k$ is the reduct derived over $U - U_k$) is

$$\mathrm{Acc}(red_k) = \frac{\left|\{x \in U_k : \mathrm{Pre}_{red_k}(x) = d(x)\}\right|}{|U_k|},$$

in which $\mathrm{Pre}_{red_k}(x)$ is the predicted label of $x$ obtained by employing reduct $red_k$.
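The accuracy of a reduct on a held-out group is simply the share of samples whose predicted label matches the true decision label, and the reported figures are means over the groups. The sketch below assumes the predictions have already been produced by a classifier (KNN, CART or SVM) trained on the reduct; the function names are hypothetical and the predictions are passed in directly.

```python
def fold_accuracy(true_labels, predicted_labels):
    """Share of samples in one held-out group whose predicted label equals d(x)."""
    if not true_labels or len(true_labels) != len(predicted_labels):
        raise ValueError("need two non-empty label lists of equal length")
    hits = sum(1 for d, p in zip(true_labels, predicted_labels) if d == p)
    return hits / len(true_labels)


def mean_accuracy(per_fold):
    """Average the per-group accuracies; per_fold is a list of (true, predicted) pairs."""
    scores = [fold_accuracy(t, p) for t, p in per_fold]
    return sum(scores) / len(scores)


# Two toy groups: 3 of 4 correct, then 4 of 4 correct.
per_fold = [([0, 1, 1, 0], [0, 1, 0, 0]), ([1, 1, 0, 0], [1, 1, 0, 0])]
print(mean_accuracy(per_fold))
```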

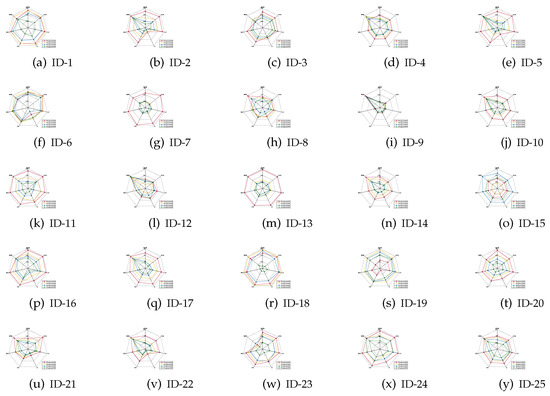

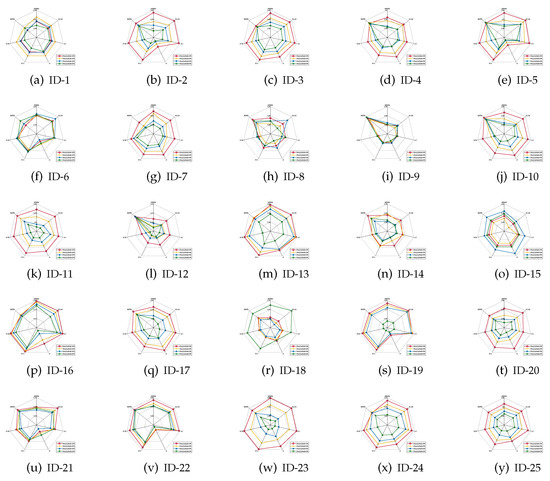

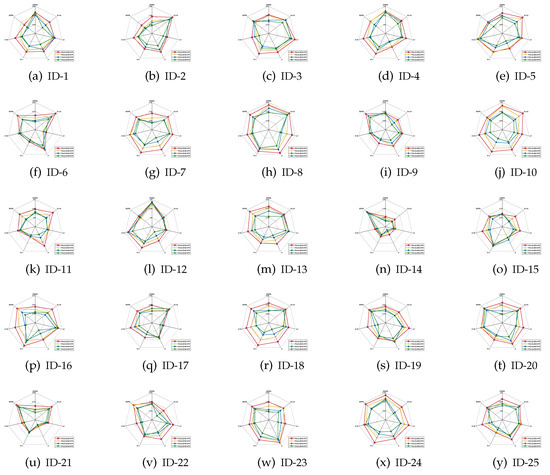

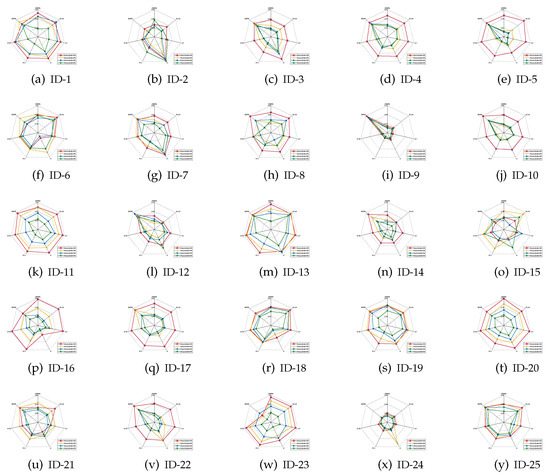

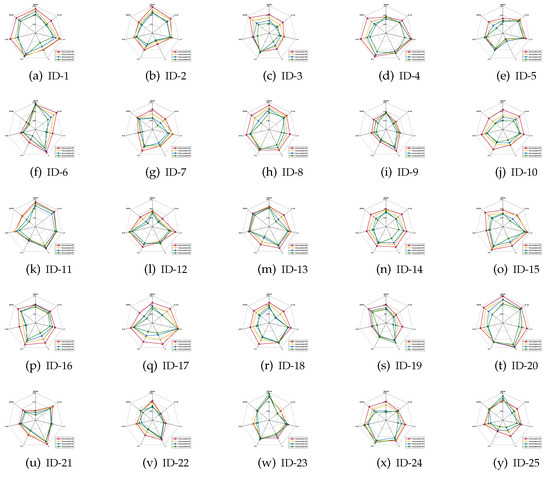

The mean classification accuracies are shown as radar charts in Figure 1, Figure 2 and Figure 3, in which four different colors represent the four ratios of noisy labels.

Figure 1.

Classification accuracies (KNN).

Figure 2.

Classification accuracies (CART).

Figure 3.

Classification accuracies (SVM).

Based on the experimental results shown in Figure 1, Figure 2 and Figure 3, the following observations can be made.

- For most data sets, no matter which ratio of label noise is injected into the raw data, the predictions generated by the reducts derived by our IRFFR are superior to those of the six popular comparative algorithms. The essential reason is that the granularity-based feature sequence helps to select more stable features. Taking “Parkinson Multiple Sound Recording” (ID-10, Figure 1j) as an example, all classification accuracies of IRFFR over the four label noise ratios are greater than 0.6; in contrast, when the label noise ratio reaches 20%, 30%, and 40%, all classification accuracies of the six comparative algorithms are less than 0.6. Moreover, for some data sets, no matter which classifier is adopted, the classification accuracies of our IRFFR are greatly superior to those of the six comparative algorithms. The essential reason is that diverse evaluations help to select more qualified features. Taking “QSAR Biodegradation” (ID-12, Figure 1l and Figure 2l) as an example, in KNN, all classification accuracies of IRFFR are greater than 0.66 over the four label noise ratios, whereas those of all comparative algorithms are less than 0.66; in CART, all classification accuracies of IRFFR are greater than 0.67 over the four label noise ratios, whereas those of all comparative algorithms are less than 0.67. In SVM, taking “Sonar” (ID-14, Figure 3n) as an example, all classification accuracies of IRFFR are greater than 0.76 over the four label noise ratios, whereas those of all comparative algorithms are around 0.68. Therefore, our IRFFR can derive reducts with outstanding classification accuracy.

- For most data sets, a higher label noise ratio has a negative impact on the classification accuracies of all seven algorithms. In other words, as the label noise ratio increases from 10% to 40%, the classification accuracies of all seven algorithms decrease significantly, as can be seen in Figure 1, Figure 2 and Figure 3. Taking “Twonorm” (ID-20, Figure 1t and Figure 2t) as an example, increasing the label noise ratio clearly separates the stripes of different colors. However, for some data sets, such as “LSVT Voice Rehabilitation” (ID-8, Figure 1h and Figure 2h), “SPECTF Heart” (ID-15, Figure 1o and Figure 2o) and “QSAR Biodegradation” (ID-12, Figure 3l), the changes are quite unexpected, which may be attributed to the lower stability of the classification results under a higher label noise ratio. Furthermore, for some data sets, such as “Diabetic Retinopathy Debrecen” (ID-4, Figure 1d and Figure 2d), “Parkinson Multiple Sound Recording” (ID-10, Figure 1j, Figure 2j and Figure 3j) and “Statlog (Vehicle Silhouettes)” (ID-17, Figure 1q and Figure 2q), the increasing label noise ratio does not have a significant effect on the classification accuracies of our IRFFR. In other words, compared with the other algorithms, our IRFFR has a better antinoise ability.
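To make the notion of a label noise ratio concrete, the sketch below corrupts a given fraction of labels. The injection scheme is not restated in this section, so the sketch assumes one common choice: the corrupted samples are drawn uniformly without replacement, and each is reassigned uniformly to one of the other observed classes. The function name `inject_label_noise` is hypothetical.

```python
import random


def inject_label_noise(labels, ratio, seed=0):
    """Return a copy of labels in which round(ratio * n) samples get a wrong class.

    Assumption: selected samples are drawn uniformly without replacement and
    each is reassigned uniformly to one of the *other* observed classes.
    """
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must lie in [0, 1]")
    classes = sorted(set(labels))
    if len(classes) < 2:
        raise ValueError("need at least two classes to corrupt labels")
    rng = random.Random(seed)
    noisy = list(labels)
    n_noisy = round(ratio * len(labels))
    for i in rng.sample(range(len(labels)), n_noisy):
        alternatives = [c for c in classes if c != noisy[i]]
        noisy[i] = rng.choice(alternatives)
    return noisy


clean = [0, 1, 2] * 40
for r in (0.1, 0.2, 0.3, 0.4):
    noisy = inject_label_noise(clean, r, seed=1)
    print(r, sum(a != b for a, b in zip(clean, noisy)))
```

Because every selected sample is moved to a different class, the number of changed labels equals the requested fraction exactly.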

4.3.2. Comparison of Classification Stability

In this subsection, the classification stability [40] is discussed; it is computed over the classification results of the different reducts obtained by each of the seven algorithms. As with the classification accuracy, all experimental results are based on the CART, KNN, and SVM classifiers. Given a decision system with universe $U$, suppose that $U$ is divided into $z$ disjoint groups of the same size such that $U = U_1 \cup U_2 \cup \cdots \cup U_z$ (since 10-fold cross-validation is employed, $z = 10$). Then, the classification stability related to the reducts $red_1, \ldots, red_z$ ($red_k$ is the reduct derived over $U - U_k$) is

$$\mathrm{Stab} = \frac{2}{z(z-1)} \sum_{k=1}^{z-1} \sum_{k'=k+1}^{z} \mathrm{Exa}\left(\mathrm{Pre}_{red_k}, \mathrm{Pre}_{red_{k'}}\right),$$

in which $\mathrm{Exa}(\mathrm{Pre}_{red_k}, \mathrm{Pre}_{red_{k'}})$ represents the agreement of the classification results and can be defined based on Table 4.

Table 4.

Joint distribution of classification results.

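In Table 4, $\mathrm{Pre}_{red_k}(x)$ denotes the predicted label of $x$ obtained by $red_k$, and each cell of the table records the number of samples meeting the corresponding conditions. Following this, $\mathrm{Exa}(\mathrm{Pre}_{red_k}, \mathrm{Pre}_{red_{k'}})$ is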

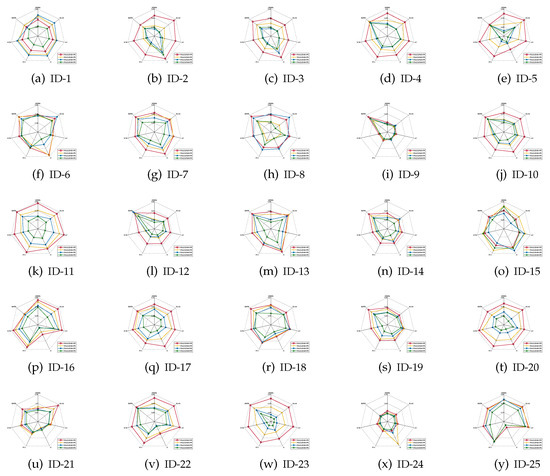
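Classification stability averages a pairwise agreement score over all pairs of reducts. The sketch below assumes the reading of Table 4 used in the ensemble-selector literature: two reducts agree on a sample when both predict it correctly or both predict it incorrectly, and the agreement is the share of such samples. Function names are hypothetical, and all reducts are assumed to predict the same evaluation samples.

```python
from itertools import combinations


def agreement(true_labels, pred_a, pred_b):
    """Share of samples on which two reducts are both right or both wrong."""
    n = len(true_labels)
    if n == 0 or len(pred_a) != n or len(pred_b) != n:
        raise ValueError("label lists must be non-empty and of equal length")
    same = sum(1 for d, a, b in zip(true_labels, pred_a, pred_b)
               if (a == d) == (b == d))
    return same / n


def classification_stability(true_labels, predictions):
    """Average pairwise agreement over the z * (z - 1) / 2 pairs of reducts."""
    pairs = list(combinations(predictions, 2))
    if not pairs:
        raise ValueError("need predictions from at least two reducts")
    return sum(agreement(true_labels, a, b) for a, b in pairs) / len(pairs)


truth = [0, 1, 0, 1]
preds = [[0, 1, 0, 1], [0, 1, 1, 1], [0, 1, 0, 1]]
print(classification_stability(truth, preds))
```

Averaging over unordered pairs is equivalent to the factor $2/(z(z-1))$ in front of the double sum.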

It should be emphasized that the classification stability describes the degree of deviation of the predicted labels when data perturbation occurs. A higher classification stability indicates that the predicted labels are more stable, i.e., that the corresponding reduct is of better quality. The mean classification stabilities are shown in Figure 4, Figure 5 and Figure 6.

Figure 4.

Classification stabilities (KNN).

Figure 5.

Classification stabilities (CART).

Figure 6.

Classification stabilities (SVM).

Based on the experimental results reported in Figure 4, Figure 5 and Figure 6, the following conclusions can be drawn.

- For most data sets, regardless of which ratio of label noise was injected into the raw data, the classification stabilities of the reducts derived by our IRFFR were not the best in SVM, but they were superior to those of the six popular algorithms in KNN and CART. In particular, for some data sets, the predictions made with the reducts of our IRFFR were clearly dominant. Taking “Musk (Version 1)” (ID-9, Figure 4i and Figure 5i) as an example, regarding KNN and CART, the classification stabilities of our IRFFR are greater than 0.66 and 0.65, respectively; in contrast, those of the six comparative algorithms are only around 0.56 and 0.58. Therefore, by introducing the new grouping mechanism proposed in Section 3.3, our IRFFR is effective in improving the classification performance from the viewpoint of both stability and accuracy.

- From Figure 4 and Figure 5, similar to the classification accuracy, we can also observe that a higher label noise ratio has a negative impact on the classification stability. Moreover, as with the classification accuracy, the classification stability of our IRFFR shows a superior antinoise ability. Taking “Parkinson Multiple Sound Recording” (ID-10, Figure 4j and Figure 5j) and “QSAR Biodegradation” (ID-12, Figure 4l and Figure 5l) as examples, although increasing ratios of label noise were injected into the raw data, the classification stabilities of our IRFFR over the four label noise ratios do not change dramatically.

4.3.3. Comparison of Elapsed Time

In this section, the elapsed times for deriving reducts with the different approaches are compared. The detailed results are shown in Table 5 and Table 6, where bold values indicate the best method in each row.

Table 5.

The elapsed time of deriving reducts (noisy label ratios of 10% and 20%; unit: s).

Table 6.

The elapsed time of deriving reducts (noisy label ratios of 30% and 40%; unit: s).

From a detailed investigation of Table 5 and Table 6, the following conclusions can be drawn.

- The time consumption for selecting features by our IRFFR was much less than that of all the comparative algorithms. The essential reason is that IRFFR reduces the search space of candidate features, which indicates that our IRFFR has superior efficiency. Taking the “Wine quality” (ID-24, Table 5) data set as an example, at one of the label noise ratios reported in Table 5, the time consumptions to obtain the reducts of IRFFR, KCR, FGS, SI, AG, ESAR, and NFEFR are 2.5880, 59.9067, 1480.2454, 19.2041, 9.1134, 10.0511, and 98.9501 s, respectively; our IRFFR requires only 2.5880 s.

- It should be pointed out that the difference in time consumption is largest between IRFFR and FGS. Taking “Pen-Based Recognition of Handwritten Digits” (ID-11) as an example, the elapsed times of our IRFFR over the four noisy label ratios are 25.2519, 17.5276, 16.1564, and 19.5612 s, respectively; in contrast, the elapsed times of FGS over the four noisy label ratios are 1083.2167, 4033.1561, 4023.1564, and 4057.2135 s, respectively. Therefore, the mechanism of parallel selection can significantly improve the efficiency of selecting features.

- As the label noise ratio increases, the elapsed times of the seven algorithms show different tendencies. For example, when the label noise ratio increases from 10% to 20%, the elapsed times of all seven algorithms over the data set “Breast Cancer Wisconsin (Diagnostic)” (ID-1) decrease. However, the situation is quite different when the ratio is 30% or 40%; that is, some algorithms require more time for reduct construction. In addition, in terms of the average elapsed time, the changes of the six comparative algorithms are gradual, whereas the elapsed time of our IRFFR shows a clear descending trend. Therefore, a higher noisy label ratio does not increase the time consumption of our IRFFR.

To further show the efficiency of our IRFFR, the speed-up ratios are presented in Table 7 and Table 8.
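The speed-up ratio is not formally defined in this section. The sketch below assumes the usual definition in the attribute-reduction literature, the fraction of a comparative algorithm's elapsed time that IRFFR saves, and applies it to the “Wine quality” (ID-24) timings quoted earlier in this subsection. The function name is hypothetical.

```python
def speed_up_ratio(t_baseline, t_proposed):
    """Assumed definition: (t_baseline - t_proposed) / t_baseline."""
    if t_baseline <= 0:
        raise ValueError("baseline time must be positive")
    return (t_baseline - t_proposed) / t_baseline


# Elapsed times (s) on "Wine quality" (ID-24), as quoted in the text.
t_irffr = 2.5880
baselines = {"KCR": 59.9067, "FGS": 1480.2454, "SI": 19.2041,
             "AG": 9.1134, "ESAR": 10.0511, "NFEFR": 98.9501}
ratios = {name: speed_up_ratio(t, t_irffr) for name, t in baselines.items()}
for name, r in ratios.items():
    print(f"{name}: {r:.1%}")
```

Under this definition a ratio close to 1 means IRFFR needs only a small fraction of the comparative algorithm's time, and a negative value would mean IRFFR is slower.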

Table 7.

The speed-up ratio related to the elapsed time of obtaining reducts (noisy label ratios of 10% and 20%).

Table 8.

The speed-up ratio related to the elapsed time of obtaining reducts (noisy label ratios of 30% and 40%).

From Table 7 and Table 8, it can be observed that, compared with the other six well-known devices, all speed-up ratios over the 25 data sets and the four noisy label ratios are much higher than 35%, and all average speed-up ratios exceed 45%. Therefore, our IRFFR does accelerate the process of deriving reducts. Moreover, the Wilcoxon signed rank test [71] was used to compare the algorithms. The p-values obtained by comparing our IRFFR with each of the other six devices are all far less than 0.05. In fact, the obtained p-values reach the lower bound reported by MATLAB, which suggests a tremendous difference in efficiency between our IRFFR and the other six state-of-the-art devices.
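For readers who want to reproduce this kind of paired comparison outside MATLAB, the following is a minimal sketch of the two-sided Wilcoxon signed rank test using the large-sample normal approximation. It is not MATLAB's `signrank` routine: it drops zero differences, averages ranks over tied magnitudes, and omits the continuity and tie-variance corrections. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed rank test (normal approximation). Returns (W, p)."""
    if len(x) != len(y):
        raise ValueError("paired samples must have equal length")
    diffs = [a - b for a, b in zip(x, y) if a != b]  # drop zero differences
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    # Rank |d| in ascending order; tied magnitudes share their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mean) / sd  # z <= 0 because w is the smaller rank sum
    return w, min(2 * NormalDist().cdf(z), 1.0)
```

Usage: pass the per-data-set elapsed times of a comparative algorithm as `x` and those of IRFFR as `y`; a small `p` indicates a systematic difference between the paired timings.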

On the whole, it can be concluded that our proposed IRFFR possesses a significant advantage in time efficiency over the other six algorithms.

4.4. The Second Group of Experiments

In the second group of experiments, to further verify the performance of IRFFR, two well-known accelerators for feature reduction were compared with our framework.

- Quick Random Sampling for Attribute Reduction (QRSAR) [58].

- Dissimilarity-Based Searching for Attribute Reduction (DBSAR) [72].

4.4.1. Comparison of Elapsed Time

In this section, the elapsed times of the feature reduction algorithms are compared. Table 9 and Table 10 show the mean elapsed times obtained over the 25 data sets, and Table 11 shows the corresponding speed-up ratios.

Table 9.

The elapsed time of deriving reducts (label noise ratios of 10% and 20%; unit: s).

Table 10.

The elapsed time of deriving reducts (label noise ratios of 30% and 40%; unit: s).

Table 11.

The speed-up ratio related to the elapsed time of obtaining reducts (label noise ratios of 10% to 40%).

Based on an in-depth analysis of Table 9, Table 10 and Table 11, the following conclusions can be drawn.

- Compared with the other advanced accelerators, our IRFFR required considerably less time to derive the final reduct, meaning that the mechanism of grouping and parallel selection does improve the efficiency of selecting features. In other words, our IRFFR substantially reduces the time needed to complete the process of selecting features. Taking the data set “Pen-Based Recognition of Handwritten Digits” (ID-11) as an example, at one of the label noise ratios, the elapsed times of the three algorithms are 16.2186, 76.8057, and 99.5899 s, respectively. Moreover, for the other three ratios, the elapsed times also differ greatly.

- Taking IRFFR and QRSAR as examples, changing the label noise ratio does not cause distinct oscillation in the elapsed time of our IRFFR. The essential reason is that the mechanism of diverse evaluation is especially significant for selecting more qualified features when data perturbation occurs. However, this mechanism does not exist in QRSAR, which may result in some abnormal changes for QRSAR. For instance, when the label noise ratio changes from 10% to 30%, the elapsed times of QRSAR on “Twonorm” (ID-20) are 50.6085, 35.4501, and 42.5415 s, respectively.

- Although our IRFFR is not faster than the two comparative algorithms in all cases, the speed-up ratios related to elapsed time of IRFFR are all higher than 40%. This is mainly because IRFFR selects the qualified features in parallel; that is, IRFFR places the optimal feature at a specific location in each group, and the final feature subset is then derived. From this point of view, QRSAR and DBSAR are more complicated than IRFFR.
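The efficiency argument above rests on grouping the sorted features and selecting within groups in parallel. The toy sketch below only illustrates that general idea and is not the paper's Algorithm 3. It assumes a precomputed granularity value per feature (sorted ascending), contiguous groups of the sorted sequence, and a hypothetical `score` callable standing in for the second evaluation; each group contributes its highest-scoring feature, and the groups are evaluated concurrently.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_groups(features, n_groups):
    """Hypothetical grouping: contiguous blocks of an already-sorted feature list."""
    if not features:
        return []
    size = -(-len(features) // max(n_groups, 1))  # ceiling division
    return [features[i:i + size] for i in range(0, len(features), size)]


def best_in_group(group, score):
    """Pick the highest-scoring feature of one group."""
    return max(group, key=score)


def grouped_parallel_select(features, granularity, score, n_groups=3):
    # 1) order features by their precomputed granularity value (assumed ascending)
    ordered = sorted(features, key=lambda f: granularity[f])
    # 2) divide the ordered sequence into groups
    groups = split_into_groups(ordered, n_groups)
    # 3) evaluate the groups concurrently; pool.map keeps the group order
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda g: best_in_group(g, score), groups))


feats = ["a", "b", "c", "d", "e", "f"]
gran = {"a": 0.6, "b": 0.1, "c": 0.4, "d": 0.3, "e": 0.5, "f": 0.2}
quality = {"a": 0.2, "b": 0.9, "c": 0.7, "d": 0.1, "e": 0.8, "f": 0.3}
print(grouped_parallel_select(feats, gran, quality.get, n_groups=3))
```

A thread pool keeps the sketch short; for CPU-bound scoring in Python, a `ProcessPoolExecutor` with a module-level scoring function would be needed to obtain real parallel speed-up.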

4.4.2. Comparison of Classification Performances

In this section, the classification performances of the features selected by the three feature reduction approaches are examined. The classification accuracies and classification stabilities are recorded in Table 12, Table 13, Table 14, Table 15, Table 16 and Table 17. Note that the classifiers are KNN, CART and SVM.

Table 12.

The KNN classification accuracies (label noise ratio of 10% to 40%).

Table 13.

The CART classification accuracies (label noise ratio of 10% to 40%).

Table 14.

The SVM classification accuracies (label noise ratio of 10% to 40%).

Table 15.

The KNN classification stabilities (label noise ratio of 10% to 40%).

Table 16.

The CART classification stabilities (label noise ratio of 10% to 40%).

Table 17.

The SVM classification stabilities (label noise ratio of 10% to 40%).

From Table 12, Table 13, Table 14, Table 15, Table 16 and Table 17, the following conclusions can be drawn.

- Compared with QRSAR and DBSAR, at a low label noise ratio, our IRFFR achieves slightly superior rising rates of KNN classification accuracy, namely 2.36% and 0.59%, respectively (see Table 12). As the label noise ratio increases, the advantage of our IRFFR is gradually revealed. For instance, at a higher label noise ratio, the rising rates of KNN classification accuracy with respect to the two comparative algorithms are 6.93% and 4.49%, respectively, which is a significant increase. The essential reason is that granularity is introduced into our framework, so that the corresponding feature sequence is obtained and the final subset is relatively stable. Although the rising rates over QRSAR and DBSAR are slightly lower when the label noise ratio increases from 30% to 40%, the improvement of our IRFFR remains considerably larger than at the lower label noise ratio.

- Unlike the classification accuracy, regardless of which label noise ratio is injected and which classifier is employed, the classification stabilities of our IRFFR show steady improvement (see Table 15, Table 16 and Table 17). Specifically, at one of the label noise ratios, the rising rates of the average classification stabilities exceed 5.0% for all three classifiers. Such an improvement is especially significant at higher label noise ratios because diverse evaluation helps to derive a more stable reduct, so that our IRFFR possesses a better classification performance when data perturbation occurs.
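The rising rates quoted above are not formally defined in this section. A common reading, assumed here, is the relative improvement of IRFFR over a comparative algorithm, and for the stabilities the averages over data sets are taken first. The function name and the score lists in the usage lines are hypothetical and are not taken from Table 12 to Table 17.

```python
def rising_rate(score_proposed, score_baseline):
    """Assumed definition: (proposed - baseline) / baseline."""
    if score_baseline <= 0:
        raise ValueError("baseline score must be positive")
    return (score_proposed - score_baseline) / score_baseline


def mean(values):
    return sum(values) / len(values)


# Hypothetical per-data-set scores, for illustration only.
ours = [0.81, 0.74, 0.92]
base = [0.78, 0.70, 0.90]
print(f"{rising_rate(mean(ours), mean(base)):.2%}")
```

Averaging first and then taking the ratio is not the same as averaging per-data-set ratios; the sketch follows the former because the text speaks of rising rates of average stabilities.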

In addition, Table 18 and Table 19 show the counts of wins, ties, and losses regarding the classification stabilities and accuracies for the different classifiers. As reported in [73], under the null hypothesis of the sign test, the number of wins of a given learning algorithm over the data sets approximately follows a normal distribution. IRFFR is regarded as significantly better than a comparative algorithm at significance level $\alpha$ when the number of wins is at least $\frac{n}{2} + z_{\alpha/2}\frac{\sqrt{n}}{2}$, where $n$ is the number of data sets and $z_{\alpha/2}$ is the corresponding critical value of the standard normal distribution. In our experiments, $n = 25$, and the resulting threshold is 17. This implies that our IRFFR achieves statistical superiority if the number of wins and ties over the 25 data sets reaches 17.
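The sign-test threshold can be computed directly from the normal approximation: with $n$ data sets, an algorithm needs at least $n/2 + z_{\alpha/2}\sqrt{n}/2$ wins. The sketch below rounds that bound up to the next integer. For $n = 25$ it gives 18 at $\alpha = 0.05$ and 17 at $\alpha = 0.10$, so the cut-off depends on the chosen significance level. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def sign_test_threshold(n_datasets, alpha=0.05):
    """Smallest integer number of wins reaching n/2 + z_{alpha/2} * sqrt(n) / 2."""
    z = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    return math.ceil(n_datasets / 2 + z * math.sqrt(n_datasets) / 2)


for a in (0.05, 0.10):
    print(a, sign_test_threshold(25, a))
```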

Table 18.

Counts of wins, ties, and losses regarding the classification stabilities.

Table 19.

Counts of wins, ties and losses regarding classification accuracies.

Considering the above discussions, we can clearly conclude that our IRFFR can not only accelerate the process of deriving reducts but can also provide qualified reducts with better classification performance.

5. Conclusions

In this study, considering the foreseeable shortcomings of using a single feature measure, we developed a novel parallel selector that includes (1) the evaluation of features from diverse viewpoints and (2) a reliable paradigm for improving the effectiveness and efficiency of the final selected features. In this way, the additional time consumed by the incremental evaluation is reduced. Unlike previous devices, which only consider single measure-based constraints for deriving qualified reducts, our selector pays considerable attention to fusing different measures to attain reducts with better generalization performance. Furthermore, it is worth emphasizing that our new selector can be seen as an effective framework that can be easily combined with other recent measures and other acceleration strategies. The experimental results and the corresponding analysis demonstrate the superiority of our selector.

Several follow-up studies can build on our strategy; the following items warrant further exploration.

- Problems arising from multilabel data have aroused extensive discussion in the academic community. Therefore, it is worthwhile to extend the proposed method to dimension reduction problems on multilabel data sets.

- The data perturbation considered in this paper involves only labels. Therefore, future work can simulate other forms of data perturbation, such as injecting feature noise [74], to make the proposed algorithm more robust.

Author Contributions

Conceptualization, Z.Y. and Y.F.; methodology, J.C.; software, Z.Y.; validation, Y.F., P.W. and J.C.; formal analysis, J.C.; investigation, J.C.; resources, P.W.; data curation, Z.Y.; writing—original draft preparation, Z.Y.; writing—review and editing, J.C.; visualization, J.C.; supervision, P.W.; project administration, P.W.; funding acquisition, J.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (no. 62076111), the Key Research and Development Program of Zhenjiang-Social Development (grant no. SH2018005), the Industry-School Cooperative Education Program of the Ministry of Education (grant no. 202101363034), and the Postgraduate Research & Practice Innovation Program of Jiangsu Province (no. SJCX22_1905).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gui, N.; Ge, D.; Hu, Z. AFS: An attention-based mechanism for supervised feature selection. Proc. AAAI Conf. Artif. Intell. 2019, 33, 3705–3713.

- Li, K.; Wang, F.; Yang, L.; Liu, R. Deep feature screening: Feature selection for ultra high-dimensional data via deep neural networks. Neurocomputing 2023, 538, 126186.

- Chen, C.; Weiss, S.T.; Liu, Y.Y. Graph Convolutional Network-based Feature Selection for High-dimensional and Low-sample Size Data. arXiv 2022, arXiv:2211.14144.

- Xiao, Z.; Xu, X.; Xing, H.; Song, F.; Wang, X.; Zhao, B. A federated learning system with enhanced feature extraction for human activity recognition. Knowl.-Based Syst. 2021, 229, 107338.

- Constantinopoulos, C.; Titsias, M.K.; Likas, A. Bayesian feature and model selection for Gaussian mixture models. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 1013–1018.

- Chen, J.; Stern, M.; Wainwright, M.J.; Jordan, M.I. Kernel feature selection via conditional covariance minimization. Adv. Neural Inf. Process. Syst. 2017, 30, 6946–6955.

- Zhang, X.; Yao, Y. Tri-level attribute reduction in rough set theory. Expert Syst. Appl. 2022, 190, 116187.

- Gao, Q.; Ma, L. A novel notion in rough set theory: Invariant subspace. Fuzzy Sets Syst. 2022, 440, 90–111.

- Jiang, Z.; Liu, K.; Yang, X.; Yu, H.; Fujita, H.; Qian, Y. Accelerator for supervised neighborhood based attribute reduction. Int. J. Approx. Reason. 2020, 119, 122–150.

- Liu, K.; Yang, X.; Yu, H.; Fujita, H.; Chen, X.; Liu, D. Supervised information granulation strategy for attribute reduction. Int. J. Mach. Learn. Cybern. 2020, 11, 2149–2163.

- Kar, B.; Sarkar, B.K. A Hybrid Feature Reduction Approach for Medical Decision Support System. Math. Probl. Eng. 2022, 2022, 3984082.

- Sun, L.; Zhang, J.; Ding, W.; Xu, J. Feature reduction for imbalanced data classification using similarity-based feature clustering with adaptive weighted K-nearest neighbors. Inf. Sci. 2022, 593, 591–613.

- Sun, L.; Wang, X.; Ding, W.; Xu, J. TSFNFR: Two-stage fuzzy neighborhood-based feature reduction with binary whale optimization algorithm for imbalanced data classification. Knowl.-Based Syst. 2022, 256, 109849.

- Xia, Z.; Chen, Y.; Xu, C. Multiview pca: A methodology of feature extraction and dimension reduction for high-order data. IEEE Trans. Cybern. 2021, 52, 11068–11080.

- Su, Z.g.; Hu, Q.; Denoeux, T. A distributed rough evidential K-NN classifier: Integrating feature reduction and classification. IEEE Trans. Fuzzy Syst. 2020, 29, 2322–2335.

- Ba, J.; Liu, K.; Ju, H.; Xu, S.; Xu, T.; Yang, X. Triple-G: A new MGRS and attribute reduction. Int. J. Mach. Learn. Cybern. 2022, 13, 337–356.

- Liu, K.; Yang, X.; Yu, H.; Mi, J.; Wang, P.; Chen, X. Rough set based semi-supervised feature selection via ensemble selector. Knowl.-Based Syst. 2019, 165, 282–296.

- Li, Z.; Kamnitsas, K.; Glocker, B. Analyzing overfitting under class imbalance in neural networks for image segmentation. IEEE Trans. Med. Imaging 2020, 40, 1065–1077.

- Park, Y.; Ho, J.C. Tackling overfitting in boosting for noisy healthcare data. IEEE Trans. Knowl. Data Eng. 2019, 33, 2995–3006.

- Ismail, A.; Sandell, M. A Low-Complexity Endurance Modulation for Flash Memory. IEEE Trans. Circuits Syst. II Express Briefs 2021, 69, 424–428.

- Wang, P.X.; Yao, Y.Y. CE3: A three-way clustering method based on mathematical morphology. Knowl.-Based Syst. 2018, 155, 54–65.

- Tang, Y.J.; Zhang, X. Low-complexity resource-shareable parallel generalized integrated interleaved encoder. IEEE Trans. Circuits Syst. I Regul. Pap. 2021, 69, 694–706.

- Ding, W.; Nayak, J.; Naik, B.; Pelusi, D.; Mishra, M. Fuzzy and real-coded chemical reaction optimization for intrusion detection in industrial big data environment. IEEE Trans. Ind. Inform. 2020, 17, 4298–4307.

- Jia, X.; Shang, L.; Zhou, B.; Yao, Y. Generalized attribute reduct in rough set theory. Knowl.-Based Syst. 2016, 91, 204–218.

- Ju, H.; Yang, X.; Yu, H.; Li, T.; Yu, D.J.; Yang, J. Cost-sensitive rough set approach. Inf. Sci. 2016, 355, 282–298.

- Qian, Y.; Liang, J.; Pedrycz, W.; Dang, C. An efficient accelerator for attribute reduction from incomplete data in rough set framework. Pattern Recognit. 2011, 44, 1658–1670.

- Ba, J.; Wang, P.; Yang, X.; Yu, H.; Yu, D. Glee: A granularity filter for feature selection. Eng. Appl. Artif. Intell. 2023, 122, 106080.

- Gong, Z.; Liu, Y.; Xu, T.; Wang, P.; Yang, X. Unsupervised attribute reduction: Improving effectiveness and efficiency. Int. J. Mach. Learn. Cybern. 2022, 13, 3645–3662.

- Jiang, Z.; Liu, K.; Song, J.; Yang, X.; Li, J.; Qian, Y. Accelerator for crosswise computing reduct. Appl. Soft Comput. 2021, 98, 106740.

- Chen, Y.; Wang, P.; Yang, X.; Mi, J.; Liu, D. Granular ball guided selector for attribute reduction. Knowl.-Based Syst. 2021, 229, 107326.

- Qian, W.; Xiong, C.; Qian, Y.; Wang, Y. Label enhancement-based feature selection via fuzzy neighborhood discrimination index. Knowl.-Based Syst. 2022, 250, 109119.

- Wang, C.; Huang, Y.; Shao, M.; Hu, Q.; Chen, D. Feature selection based on neighborhood self-information. IEEE Trans. Cybern. 2019, 50, 4031–4042.

- Jin, C.; Li, F.; Hu, Q. Knowledge change rate-based attribute importance measure and its performance analysis. Knowl.-Based Syst. 2017, 119, 59–67.

- Qian, Y.; Liang, J.; Pedrycz, W.; Dang, C. Positive approximation: An accelerator for attribute reduction in rough set theory. Artif. Intell. 2010, 174, 597–618.

- Hu, Q.; Yu, D.; Xie, Z.; Li, X. EROS: Ensemble rough subspaces. Pattern Recognit. 2007, 40, 3728–3739.

- Liu, K.; Li, T.; Yang, X.; Yang, X.; Liu, D.; Zhang, P.; Wang, J. Granular cabin: An efficient solution to neighborhood learning in big data. Inf. Sci. 2022, 583, 189–201.

- Pashaei, E.; Pashaei, E. Hybrid binary COOT algorithm with simulated annealing for feature selection in high-dimensional microarray data. Neural Comput. Appl. 2023, 35, 353–374.

- Tang, Y.; Su, H.; Jin, T.; Flesch, R.C.C. Adaptive PID Control Approach Considering Simulated Annealing Algorithm for Thermal Damage of Brain Tumor During Magnetic Hyperthermia. IEEE Trans. Instrum. Meas. 2023, 72, 1–8.

- Hu, Q.; Pedrycz, W.; Yu, D.; Lang, J. Selecting discrete and continuous features based on neighborhood decision error minimization. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 2009, 40, 137–150.

- Yang, X.; Yao, Y. Ensemble selector for attribute reduction. Appl. Soft Comput. 2018, 70, 1–11.

- Niu, J.; Chen, D.; Li, J.; Wang, H. A dynamic rule-based classification model via granular computing. Inf. Sci. 2022, 584, 325–341.

- Yang, X.; Li, T.; Liu, D.; Fujita, H. A temporal-spatial composite sequential approach of three-way granular computing. Inf. Sci. 2019, 486, 171–189.

- Han, Z.; Huang, Q.; Zhang, J.; Huang, C.; Wang, H.; Huang, X. GA-GWNN: Detecting anomalies of online learners by granular computing and graph wavelet convolutional neural network. Appl. Intell. 2022, 52, 13162–13183.

- Xu, K.; Pedrycz, W.; Li, Z. Granular computing: An augmented scheme of degranulation through a modified partition matrix. Fuzzy Sets Syst. 2022, 440, 131–148.

- Rao, X.; Liu, K.; Song, J.; Yang, X.; Qian, Y. Gaussian kernel fuzzy rough based attribute reduction: An acceleration approach. J. Intell. Fuzzy Syst. 2020, 39, 679–695.

- Yang, B. Fuzzy covering-based rough set on two different universes and its application. Artif. Intell. Rev. 2022, 55, 4717–4753.

- Sun, L.; Wang, T.; Ding, W.; Xu, J.; Lin, Y. Feature selection using Fisher score and multilabel neighborhood rough sets for multilabel classification. Inf. Sci. 2021, 578, 887–912.

- Chen, Y.; Yang, X.; Li, J.; Wang, P.; Qian, Y. Fusing attribute reduction accelerators. Inf. Sci. 2022, 587, 354–370.

- Liang, J.; Shi, Z. The information entropy, rough entropy and knowledge granulation in rough set theory. Int. J. Uncertain. Fuzziness Knowl.-Based Syst. 2004, 12, 37–46.

- Xu, J.; Yang, J.; Ma, Y.; Qu, K.; Kang, Y. Feature selection method for color image steganalysis based on fuzzy neighborhood conditional entropy. Appl. Intell. 2022, 52, 9388–9405.

- Sang, B.; Chen, H.; Yang, L.; Li, T.; Xu, W. Incremental feature selection using a conditional entropy based on fuzzy dominance neighborhood rough sets. IEEE Trans. Fuzzy Syst. 2021, 30, 1683–1697.

- Américo, A.; Khouzani, M.; Malacaria, P. Conditional entropy and data processing: An axiomatic approach based on core-concavity. IEEE Trans. Inf. Theory 2020, 66, 5537–5547.

- Gao, C.; Zhou, J.; Miao, D.; Yue, X.; Wan, J. Granular-conditional-entropy-based attribute reduction for partially labeled data with proxy labels. Inf. Sci. 2021, 580, 111–128.

- Zhang, X.; Mei, C.; Chen, D.; Li, J. Feature selection in mixed data: A method using a novel fuzzy rough set-based information entropy. Pattern Recognit. 2016, 56, 1–15.

- Ko, Y.C.; Fujita, H. An evidential analytics for buried information in big data samples: Case study of semiconductor manufacturing. Inf. Sci. 2019, 486, 190–203.

- Huang, H.; Oh, S.K.; Wu, C.K.; Pedrycz, W. Double iterative learning-based polynomial based-RBFNNs driven by the aid of support vector-based kernel fuzzy clustering and least absolute shrinkage deviations. Fuzzy Sets Syst. 2022, 443, 30–49.

- Yao, Y.; Zhao, Y.; Wang, J. On reduct construction algorithms. Trans. Comput. Sci. II 2008, 100–117.

- Chen, Z.; Liu, K.; Yang, X.; Fujita, H. Random sampling accelerator for attribute reduction. Int. J. Approx. Reason. 2022, 140, 75–91.

- Fokianos, K.; Leucht, A.; Neumann, M.H. On integrated l 1 convergence rate of an isotonic regression estimator for multivariate observations. IEEE Trans. Inf. Theory 2020, 66, 6389–6402.

- Wang, H.; Liao, H.; Ma, X.; Bao, R. Remaining useful life prediction and optimal maintenance time determination for a single unit using isotonic regression and gamma process model. Reliab. Eng. Syst. Saf. 2021, 210, 107504.

- Balinski, M.L. A competitive (dual) simplex method for the assignment problem. Math. Program. 1986, 34, 125–141.

- Ayer, M.; Brunk, H.D.; Ewing, G.M.; Reid, W.T.; Silverman, E. An empirical distribution function for sampling with incomplete information. Ann. Math. Stat. 1955, 26, 641–647.

- Oh, H.; Nam, H. Maximum rate scheduling with adaptive modulation in mixed impulsive noise and additive white Gaussian noise environments. IEEE Trans. Wirel. Commun. 2021, 20, 3308–3320.

- Hu, Q.; Yu, D.; Liu, J.; Wu, C. Neighborhood rough set based heterogeneous feature subset selection. Inf. Sci. 2008, 178, 3577–3594.

- Wu, T.F.; Fan, J.C.; Wang, P.X. An improved three-way clustering based on ensemble strategy. Mathematics 2022, 10, 1457.

- Chen, Y.; Liu, K.; Song, J.; Fujita, H.; Yang, X.; Qian, Y. Attribute group for attribute reduction. Inf. Sci. 2020, 535, 64–80.

- Ye, D.; Chen, Z.; Ma, S. A novel and better fitness evaluation for rough set based minimum attribute reduction problem. Inf. Sci. 2013, 222, 413–423.

- Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

- Breiman, L. Classification and Regression Trees; Routledge: Cambridge, MA, USA, 2017.

- Fu, C.; Zhou, S.; Zhang, D.; Chen, L. Relative Density-Based Intuitionistic Fuzzy SVM for Class Imbalance Learning. Entropy 2022, 25, 34.

- Demšar, J. Statistical comparisons of classifiers over multiple data sets. J. Mach. Learn. Res. 2006, 7, 1–30.

- Rao, X.; Yang, X.; Yang, X.; Chen, X.; Liu, D.; Qian, Y. Quickly calculating reduct: An attribute relationship based approach. Knowl.-Based Syst. 2020, 200, 106014.

- Cao, F.; Ye, H.; Wang, D. A probabilistic learning algorithm for robust modeling using neural networks with random weights. Inf. Sci. 2015, 313, 62–78.

- Xu, S.; Ju, H.; Shang, L.; Pedrycz, W.; Yang, X.; Li, C. Label distribution learning: A local collaborative mechanism. Int. J. Approx. Reason. 2020, 121, 59–84.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).