Abstract

This paper considers an iterative method for solving the absolute value equation (AVE). We propose a two-step method in which the generalized Newton method serves as the predictor step and the well-known Gauss quadrature rule as the corrector step. The convergence of the proposed method is established under suitable conditions. Numerical examples illustrate the consistency and capability of the new method.

Keywords:

Gauss quadrature method; absolute value equation; convergence analysis; numerical analysis; Euler–Bernoulli equation

MSC:

65F10; 65H10

1. Introduction

Consider the AVE of the form:

$$Ax - |x| = b, \tag{1}$$

where $A \in \mathbb{R}^{n \times n}$, $x, b \in \mathbb{R}^{n}$, and $|x|$ represents a vector in $\mathbb{R}^{n}$ whose components are $|x_i|$, $i = 1, \dots, n$. AVE (1) is a particular case of

$$Ax + B|x| = b, \tag{2}$$

and was introduced by Rohn [1]. AVE (1) arises in linear and quadratic programming, network equilibrium problems, complementarity problems, and economies with institutional restrictions on prices. Recently, several iterative methods have been investigated for finding an approximate solution of (1). For instance, Khan et al. [2] proposed a new technique based on Simpson's rule for solving the AVE. Feng et al. [3,4] considered certain two-step iterative techniques to solve the AVE. Shi et al. [5] proposed a two-step Newton-type method for solving the AVE and discussed its linear convergence. Noor et al. [6,7] studied the solution of the AVE using minimization techniques and proved the convergence of these techniques. The Gauss quadrature method is a powerful technique for evaluating integrals. In [8], it was used to solve systems of nonlinear equations. For other methods for solving the AVE, see [9,10,11,12,13,14,15,16,17,18].

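The notation is defined as follows. For $x \in \mathbb{R}^{n}$, $\|x\|$ denotes the two-norm $(x^{T}x)^{1/2}$. Let $\operatorname{sign}(x)$ be a vector with entries $0$, $1$, or $-1$ according to whether the corresponding entries of $x$ are zero, positive, or negative. Let $\operatorname{diag}(\operatorname{sign}(x))$ denote the diagonal matrix whose diagonal is $\operatorname{sign}(x)$. A generalized Jacobian of $|x|$ is given by

$$D(x) = \partial|x| = \operatorname{diag}(\operatorname{sign}(x)). \tag{3}$$

For $A \in \mathbb{R}^{n \times n}$, $\operatorname{svd}(A)$ will represent the n singular values of A.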
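The notation above can be illustrated with a minimal NumPy sketch. It assumes the generalized Jacobian of $|x|$ is $D(x) = \operatorname{diag}(\operatorname{sign}(x))$ and that the singular-value condition means every singular value of A is greater than one; the function names `D` and `singular_values_exceed_one` are hypothetical and chosen only for this illustration.

```python
import numpy as np

def D(x):
    """Diagonal matrix diag(sign(x)); entries are -1, 0, or 1."""
    return np.diag(np.sign(x))

def singular_values_exceed_one(A):
    """True if every singular value of A is strictly greater than 1."""
    return np.linalg.svd(A, compute_uv=False).min() > 1.0

# D(x) acts on x exactly like the absolute value: D(x) x = |x|.
x = np.array([2.0, 0.0, -3.0])
abs_via_D = D(x) @ x

# A small, arbitrarily chosen matrix used only to exercise the check.
A = np.array([[4.0, 1.0],
              [1.0, 3.0]])
ok = singular_values_exceed_one(A)
```

The identity $D(x)\,x = |x|$ is what allows the AVE to be treated as a piecewise linear system.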

In the present paper, the Gauss quadrature method with the generalized Newton method is considered to solve (1). Under the condition that , we establish the proposed method’s convergence. A few numerical examples are given to demonstrate the performance of the proposed method.

2. Gauss Quadrature Method

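Consider

$$g(x) = Ax - |x| - b. \tag{4}$$

A generalized Jacobian of $g$ is given by

$$g'(x) = \partial g(x) = A - D(x), \tag{5}$$

where $D(x)$ is as defined in (3). Let $\zeta$ be a solution of (1). The two-point Gauss quadrature rule is

$$\int_{0}^{1} g'(x + t(\zeta - x))\,dt \approx \frac{1}{2}\left[g'(x + t_{1}(\zeta - x)) + g'(x + t_{2}(\zeta - x))\right], \qquad t_{1,2} = \frac{1}{2}\left(1 \mp \frac{1}{\sqrt{3}}\right). \tag{6}$$

Now, by the fundamental theorem of calculus, we have

$$\int_{0}^{1} g'(x + t(\zeta - x))(\zeta - x)\,dt = g(\zeta) - g(x). \tag{7}$$

As $\zeta$ is a solution of (1), that is, $g(\zeta) = 0$, (7) can be written as

$$\int_{0}^{1} g'(x + t(\zeta - x))(\zeta - x)\,dt = -g(x). \tag{8}$$

From (6) and (8), we obtain

$$\frac{1}{2}\left[g'(x + t_{1}(\zeta - x)) + g'(x + t_{2}(\zeta - x))\right](\zeta - x) \approx -g(x). \tag{9}$$

Thus,

$$\zeta \approx x - 2\left[g'(x + t_{1}(\zeta - x)) + g'(x + t_{2}(\zeta - x))\right]^{-1} g(x). \tag{10}$$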

From the above, the Gauss quadrature method (GQM) can be written as follows (Algorithm 1):

| Algorithm 1: Gauss Quadrature Method (GQM) |

| 1: Select |

| 2: For k, calculate |

| 3: Using Step 2, calculate |

| 4: If , then stop. If not, move on to Step 2. |
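The steps of Algorithm 1 can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' MATLAB code: the AVE is taken as $Ax - |x| = b$, the predictor is the generalized Newton step $(A - D(x^{k}))^{-1} b$ of [14], and the corrector evaluates the generalized Jacobian $A - D(\cdot)$ at the two Gauss-Legendre nodes $t_{1,2} = \tfrac{1}{2}(1 \mp 1/\sqrt{3})$ on the segment from $x^{k}$ to the predictor. The residual-based stopping test and the names `gqm`, `tol`, and `max_iter` are assumptions made for this sketch.

```python
import numpy as np

def gen_jacobian(A, x):
    """Generalized Jacobian of g(x) = Ax - |x| - b, i.e. A - diag(sign(x))."""
    return A - np.diag(np.sign(x))

def residual(A, b, x):
    """g(x) = Ax - |x| - b."""
    return A @ x - np.abs(x) - b

def gqm(A, b, x0, tol=1e-10, max_iter=50):
    """Two-step sketch: generalized Newton predictor, Gauss quadrature corrector."""
    t1 = 0.5 * (1.0 - 1.0 / np.sqrt(3.0))
    t2 = 0.5 * (1.0 + 1.0 / np.sqrt(3.0))
    x = np.asarray(x0, dtype=float)
    for k in range(1, max_iter + 1):
        # Predictor: generalized Newton step.
        eta = np.linalg.solve(gen_jacobian(A, x), b)
        # Corrector: sum of generalized Jacobians at the two quadrature nodes.
        d = eta - x
        J = gen_jacobian(A, x + t1 * d) + gen_jacobian(A, x + t2 * d)
        x = x - 2.0 * np.linalg.solve(J, residual(A, b, x))
        # Assumed stopping rule: small residual norm.
        if np.linalg.norm(residual(A, b, x)) < tol:
            return x, k
    return x, max_iter

# Usage on a small, arbitrarily chosen problem with a known solution.
A = 4.0 * np.eye(5) - np.eye(5, k=1) - np.eye(5, k=-1)
x_true = np.array([1.0, -2.0, 3.0, -4.0, 5.0])
b = A @ x_true - np.abs(x_true)
x, k = gqm(A, b, np.zeros(5))
```

The test matrix is symmetric with eigenvalues between 2 and 6, so its singular values exceed one; it is not taken from any of the paper's examples.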

3. Analysis of Convergence

In this section, the convergence of the suggested technique is investigated. The predictor step:

is well defined; see Lemma 2 [14]. To prove that

is nonsingular, first we assume that

and

Then,

where and are diagonal matrices with entries 0 or .

Lemma 1.

If exceeds 1, then exists for any diagonal matrix D defined in (3).

Proof.

If is singular, then for some . As the singular values of A are greater than one, therefore, using Lemma 1 [14], we have

which is a contradiction; hence, is nonsingular, and the sequence in Algorithm 1 is well defined. □
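As a side note on why a singular-value bound rules out singularity, the following is a short sketch based on the triangle inequality; it is not the authors' proof. Here $\sigma_{\min}(A)$ (notation introduced only for this sketch) denotes the smallest singular value of A, and $D$, $D_{1}$, $D_{2}$ are assumed to be diagonal matrices with entries in $\{-1, 0, 1\}$.

```latex
% Assumptions: \sigma_{\min}(A) > 1, and D, D_1, D_2 are diagonal with entries
% in \{-1, 0, 1\}, so that \|Dx\| \le \|x\| in the two-norm.
\begin{align*}
\|(A - D)x\| &\ge \|Ax\| - \|Dx\| \ge (\sigma_{\min}(A) - 1)\,\|x\| > 0, \\
\|(2A - D_1 - D_2)x\| &\ge 2\|Ax\| - \|D_1 x\| - \|D_2 x\|
  \ge 2(\sigma_{\min}(A) - 1)\,\|x\| > 0,
\end{align*}
% for every x \ne 0; hence neither matrix has a nontrivial null vector.
```

The second line covers a sum of two generalized Jacobians, which is the kind of matrix inverted in a two-node corrector step.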

Lemma 2.

If then the sequence of GQMs is bounded and well defined. Hence, an accumulation point exists such that

and

Proof.

The proof is in accordance with [14]. It is hence omitted. □

Theorem 1.

If , then the GQM converges to a solution ζ of (1).

Proof.

Theorem 2.

Proof.

The unique solvability directly follows from ; see [13]. Since exists, therefore, by Lemma 2.3.2 (p. 45) [16], we have

Hence, the proof is complete. □

4. Numerical Results

In this section, we compare the GQM with other approaches that are already in use. The initial points are taken from the references cited in each example. K, CPU, and RES denote the number of iterations, the CPU time in seconds, and the norm of the residual, respectively. All computations were carried out in MATLAB (R2018a) on an Intel(R) Core (TM)-i5-3327, 1.00 GHz, CPU @0.90GHz, with 4 GB RAM.

Example 1

The comparison of the GQM with the MSOR-like method [11], the GNM [14], and the residual method (RIM) [15] is given in Table 1.

Table 1.

Numerical comparison of the GQM with the RIM and MSOR-like method.

From the last row of Table 1, it can be seen that the GQM converges to the solution of (1) very quickly. The residuals show that the GQM is more accurate than the MSOR-like method [11] and the RIM [15].

Example 2

([3]). Consider

Select a random and .

Table 2.

Comparison of the GQM with the TSI and INM.

From Table 2, we see that the suggested method converges to the approximate solution of (1) in two iterations with high accuracy. The other two methods are also two-step methods but performed slightly worse on this problem.

Example 3

([10]). Let

with the same initial vector as given in [10].

We compared our proposed method with the modified iteration method (MIM) [9] and the generalized iteration methods (GIMs) [10].

The last row of Table 3 shows that the GQM converges to the solution of (1) in two iterations. Moreover, the residuals indicate that the GQM is more accurate than the MIM and GIM.

Table 3.

Comparison of the GQM with the MIM and GIM.

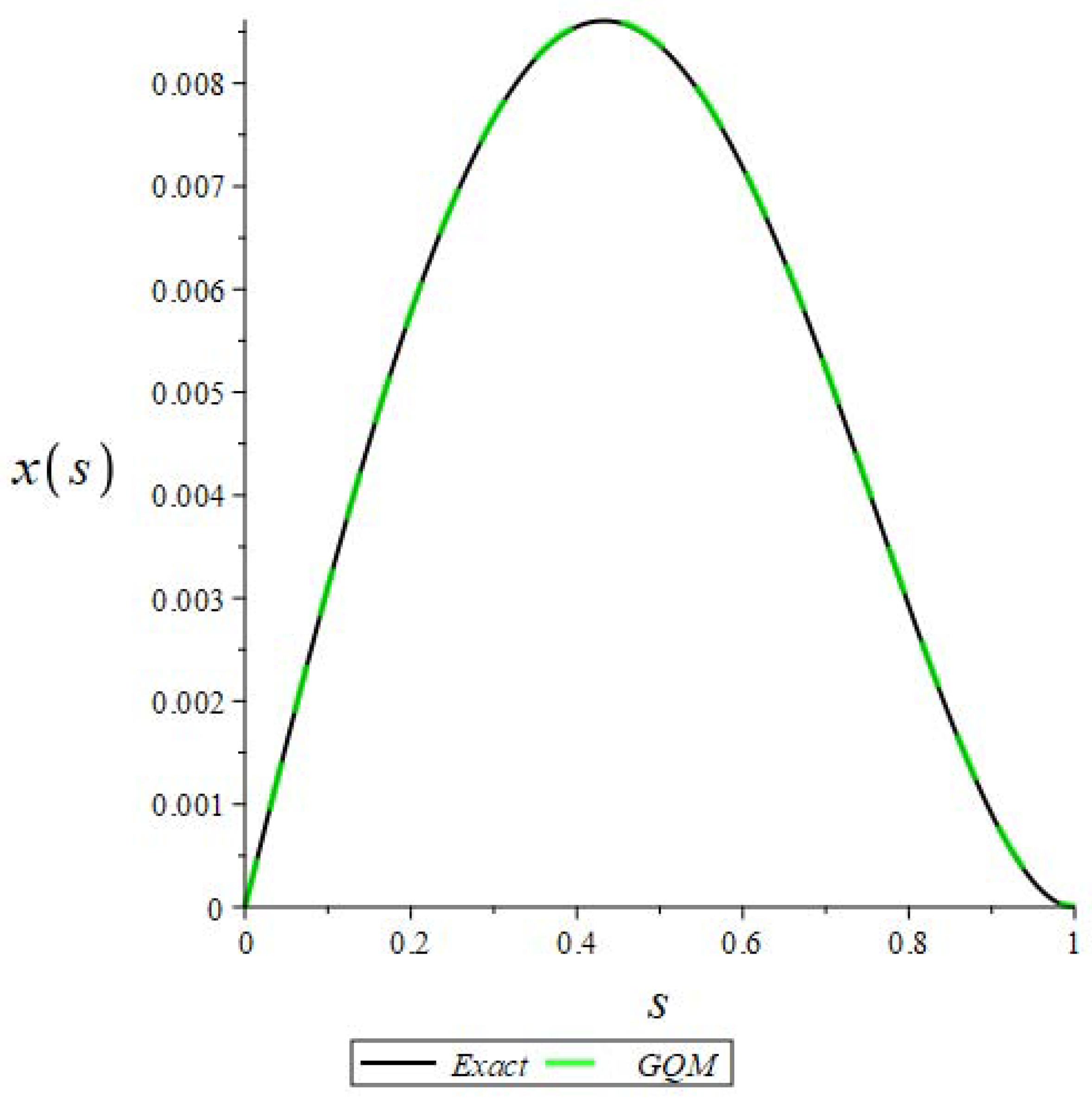

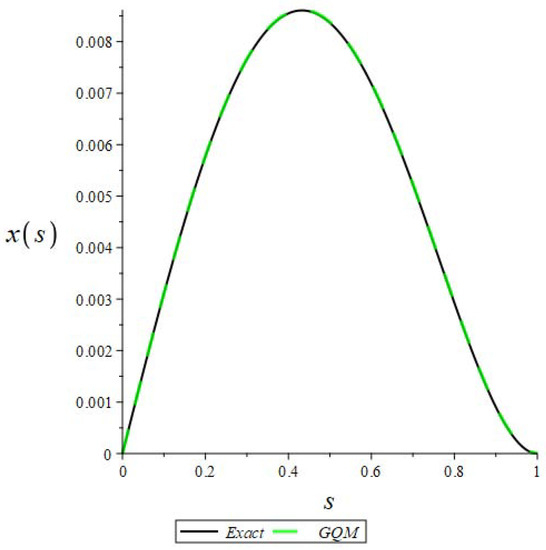

Example 4.

Consider the Euler–Bernoulli equation of the form:

with boundary conditions:

We used the finite difference method to discretize the Euler–Bernoulli equation. The comparison of the GQM with the Maple solution is given in Figure 1.

Figure 1.

Comparison of the GQM with the Maple solution for (step size).

From Figure 1, we see that the two curves overlap, which indicates that the GQM reproduces the Maple solution and is effective for solving (1).

Example 5

([6]). Consider the following AVE with

Choose the constant vector b such that is the actual solution of (1). We took the same initial starting vector as given in [6].
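Choosing b so that a prescribed vector is the exact solution is a standard way to build a test problem with a known answer. The sketch below shows the idea, assuming the AVE has the form $Ax - |x| = b$. The matrix `A`, the vector `x_star`, and the helper name `make_rhs` are hypothetical placeholders; they are not the data of Example 5.

```python
import numpy as np

def make_rhs(A, x_star):
    """Return b such that x_star solves Ax - |x| = b exactly."""
    return A @ x_star - np.abs(x_star)

rng = np.random.default_rng(0)
n = 6

# Hypothetical test matrix: a small perturbation of 5*I, NOT the matrix of Example 5.
A = 5.0 * np.eye(n) + 0.1 * rng.standard_normal((n, n))

# Hypothetical prescribed solution.
x_star = rng.standard_normal(n)

b = make_rhs(A, x_star)

# By construction the residual at x_star vanishes up to rounding.
res = np.linalg.norm(A @ x_star - np.abs(x_star) - b)
```

With b built this way, the error of any computed iterate can be measured directly against `x_star` in addition to the residual norm.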

Table 4.

Comparison for Example 5.

5. Conclusions

In this paper, we considered a two-step method for solving the AVE in which the well-known generalized Newton method is the predictor step and the Gauss quadrature rule is the corrector step. Convergence was proven under suitable conditions. In the numerical examples, the method was effective for solving AVE (1) compared with other similar methods. The idea can be extended to generalized absolute value equations. It would also be interesting to study the three-point Gauss quadrature rule as the corrector step for solving the AVE.

Author Contributions

The idea of the present paper was proposed by J.I.; L.S. and F.R. wrote and completed the calculations; M.A. checked all the results. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Rohn, J. A theorem of the alternatives for the equation Ax + B|x| = b. Linear Multilinear Algebr. 2004, 52, 421–426.

- Khan, A.; Iqbal, J.; Akgul, A.; Ali, R.; Du, Y.; Hussain, A.; Nisar, K.S.; Vijayakumar, V. A Newton-type technique for solving absolute value equations. Alex. Eng. J. 2023, 64, 291–296.

- Feng, J.M.; Liu, S.Y. An improved generalized Newton method for absolute value equations. SpringerPlus 2016, 5, 1–10.

- Feng, J.M.; Liu, S.Y. A new two-step iterative method for solving absolute value equations. J. Inequalities Appl. 2019, 2019, 39.

- Shi, L.; Iqbal, J.; Arif, M.; Khan, A. A two-step Newton-type method for solving system of absolute value equations. Math. Probl. Eng. 2020, 2020, 2798080.

- Noor, M.A.; Iqbal, J.; Khattri, S.; Al-Said, E. A new iterative method for solving absolute value equations. Inter. J. Phys. Sci. 2011, 6, 1793–1797.

- Noor, M.A.; Iqbal, J.; Noor, K.I.; Al-Said, E. On an iterative method for solving absolute value equations. Optim. Lett. 2012, 6, 1027–1033.

- Srivastava, H.M.; Iqbal, J.; Arif, M.; Khan, A.; Gasimov, Y.M.; Chinram, R. A new application of Gauss Quadrature method for solving systems of nonlinear equations. Symmetry 2021, 13, 432.

- Ali, R. Numerical solution of the absolute value equation using modified iteration methods. Comput. Math. Methods 2022, 2022, 2828457.

- Ali, R.; Khan, I.; Ali, A.; Mohamed, A. Two new generalized iteration methods for solving absolute value equations using M-matrix. AIMS Math. 2022, 7, 8176–8187.

- Huang, B.; Li, W. A modified SOR-like method for absolute value equations associated with second order cones. J. Comput. Appl. Math. 2022, 400, 113745.

- Liang, Y.; Li, C. Modified Picard-like method for solving absolute value equations. Mathematics 2023, 11, 848.

- Mangasarian, O.L.; Meyer, R.R. Absolute value equations. Lin. Alg. Appl. 2006, 419, 359–367.

- Mangasarian, O.L. A generalized Newton method for absolute value equations. Optim. Lett. 2009, 3, 101–108.

- Noor, M.A.; Iqbal, J.; Al-Said, E. Residual iterative method for solving absolute value equations. In Abstract and Applied Analysis; Hindawi: London, UK, 2012.

- Ortega, J.M.; Rheinboldt, W.C. Iterative Solution of Nonlinear Equations in Several Variables; Academic Press: Cambridge, MA, USA, 1970.

- Yu, Z.; Li, L.; Yuan, Y. A modified multivariate spectral gradient algorithm for solving absolute value equations. Appl. Math. Lett. 2021, 21, 107461.

- Zhang, Y.; Yu, D.; Yuan, Y. On the Alternative SOR-like Iteration Method for Solving Absolute Value Equations. Symmetry 2023, 15, 589.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).