Finding Patient Zero and Tracking Narrative Changes in the Context of Online Disinformation Using Semantic Similarity Analysis

Abstract

1. Introduction

- We designed a solution to analyze information propagation and narrative changes and to identify the patient zero in the context of online disinformation, based on text similarity techniques.

- We collected and annotated a set of news articles from the websites of Romanian publications, to be used for the evaluation of the proposed solution.

- We implemented and evaluated several text similarity and semantic textual similarity techniques in order to assess their result quality, execution time and scalability.

2. Related Work

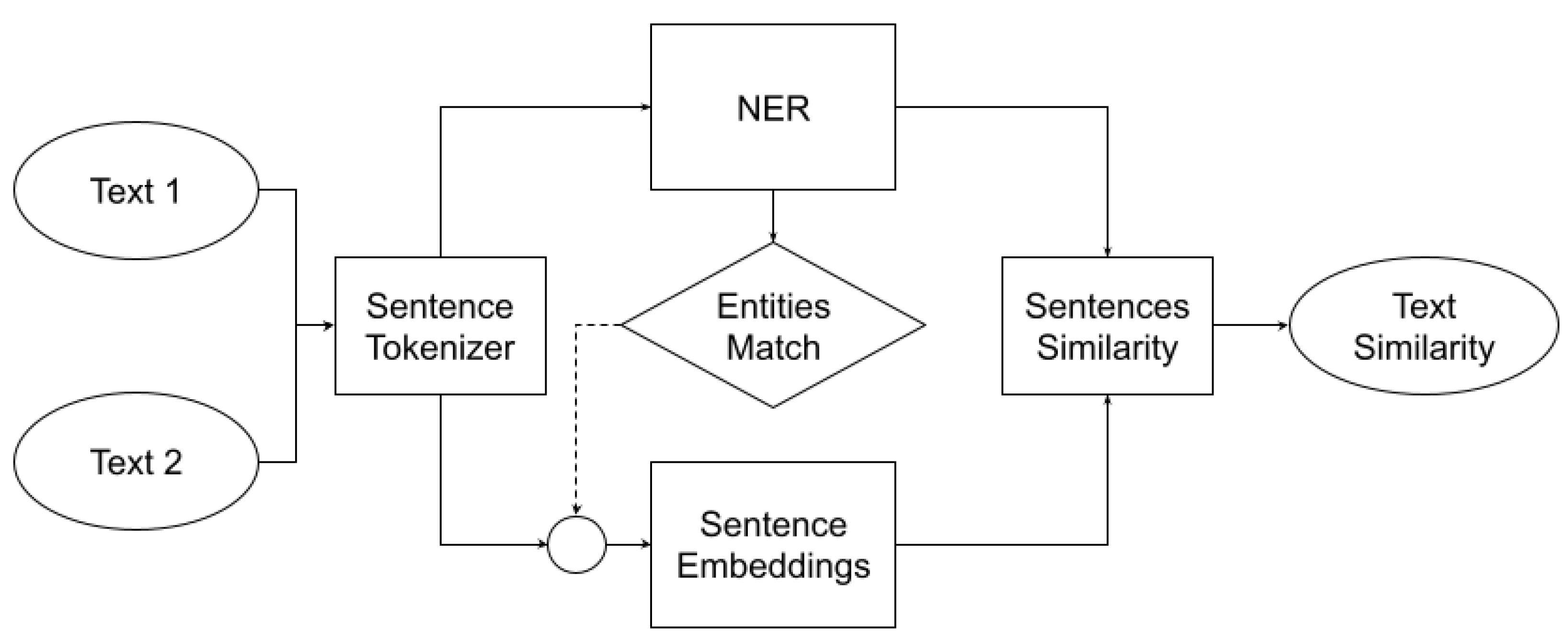

3. Solution Description

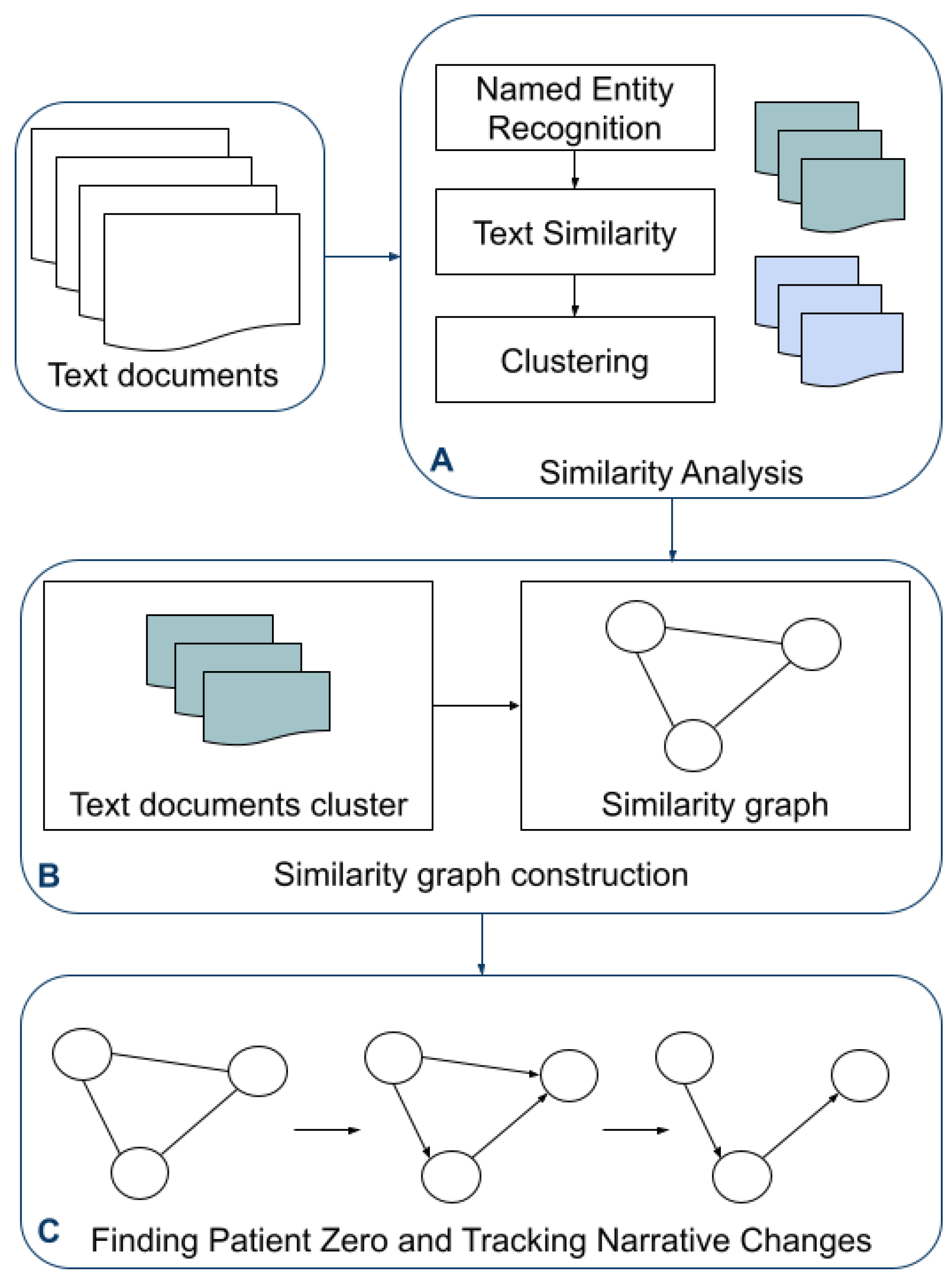

- (A) First, a collection of text documents is processed by a Similarity Analysis module. We apply Named Entity Recognition and text similarity techniques to compute similarity scores between pairs of text documents. Based on the computed similarity scores, we form clusters of documents.

- (B) Each cluster of text documents is then further processed and a similarity graph is constructed based on the similarity scores.

- (C) The similarity graph is further processed together with additional information, such as the date and time when the text documents were fetched from the Internet, allowing us to find the patient zero and to track narrative changes.
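Step (A) can be illustrated with a minimal sketch. It assumes that clusters are the connected components of the graph obtained by keeping only document pairs whose similarity score reaches a threshold (single-link grouping). The function name `cluster_by_threshold`, the scores and the 0.35 threshold are hypothetical choices for illustration, not values taken from the solution itself.

```python
from collections import defaultdict

def cluster_by_threshold(num_docs, scores, threshold):
    """Group documents so that two documents share a cluster when a chain of
    pairs with score >= threshold connects them (single-link grouping)."""
    parent = list(range(num_docs))

    def find(x):
        # Walk to the set representative, compressing the path on the way.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for (a, b), score in scores.items():
        if score >= threshold:
            parent[find(a)] = find(b)  # merge the two sets

    clusters = defaultdict(list)
    for doc in range(num_docs):
        clusters[find(doc)].append(doc)
    return sorted(clusters.values())

# Hypothetical pairwise scores for five documents; unlisted pairs are
# treated as dissimilar.
scores = {(0, 1): 0.82, (1, 2): 0.40, (2, 3): 0.10, (3, 4): 0.77}
clusters = cluster_by_threshold(5, scores, threshold=0.35)
print(clusters)
```

Raising the threshold splits clusters apart: with the same scores and a threshold of 0.5, document 2 ends up in a cluster of its own.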

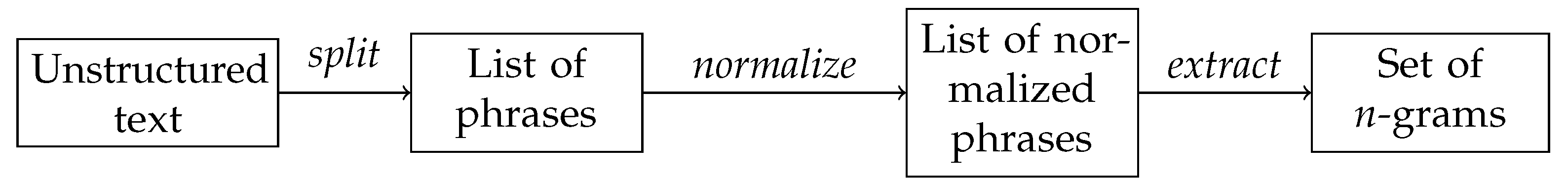

3.1. Textual Similarity

3.1.1. Article Representation and Similarity Score

3.1.2. Enabling Scalability with Locality-Sensitive Hashing

3.2. Semantic Similarity

3.2.1. Sentence Tokenizer

3.2.2. Named Entity Recognition

- We use the lemmatizer from the same Romanian NLP pipeline to extract the base form of each entity.

- Then, a Romanian language stemmer is used to extract the stem of the tokens that make up each entity (the NLTK Romanian Snowball stemmer is available at https://www.nltk.org/api/nltk.stem.snowball.html#nltk.stem.snowball.RomanianStemmer, accessed on 8 March 2023).

3.2.3. Entities Match

3.2.4. Sentence Embeddings

- We take the dense vector representation of the [CLS] token as the semantic representation of the input sentence.

- We take the average of the hidden state of the last layer on the time axis.
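The two pooling strategies above can be compared on a toy example. This is a sketch in plain Python, assuming the last-layer hidden states are available as a list of per-token vectors whose first row corresponds to the [CLS] token. The function names and the numbers are hypothetical.

```python
def cls_pooling(hidden_states):
    """Use the vector of the first token ([CLS]) as the sentence embedding."""
    return list(hidden_states[0])

def mean_pooling(hidden_states):
    """Average the last-layer vectors over the token (time) axis."""
    num_tokens = len(hidden_states)
    dim = len(hidden_states[0])
    return [sum(tok[j] for tok in hidden_states) / num_tokens
            for j in range(dim)]

# Toy last-layer output: 3 tokens ([CLS], one word piece, [SEP]) x 4 dimensions.
last_hidden = [
    [1.0, 0.0, 2.0, 4.0],
    [3.0, 2.0, 0.0, 4.0],
    [2.0, 4.0, 1.0, 4.0],
]

cls_vec = cls_pooling(last_hidden)    # [1.0, 0.0, 2.0, 4.0]
mean_vec = mean_pooling(last_hidden)  # [2.0, 2.0, 1.0, 4.0]
print(cls_vec, mean_vec)
```

In batched settings, padding tokens are usually excluded from the average with an attention mask. The sketch omits this because it handles a single unpadded sentence.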

3.2.5. Sentence Similarity

3.2.6. Text Similarity

3.3. Algorithm Parallelization

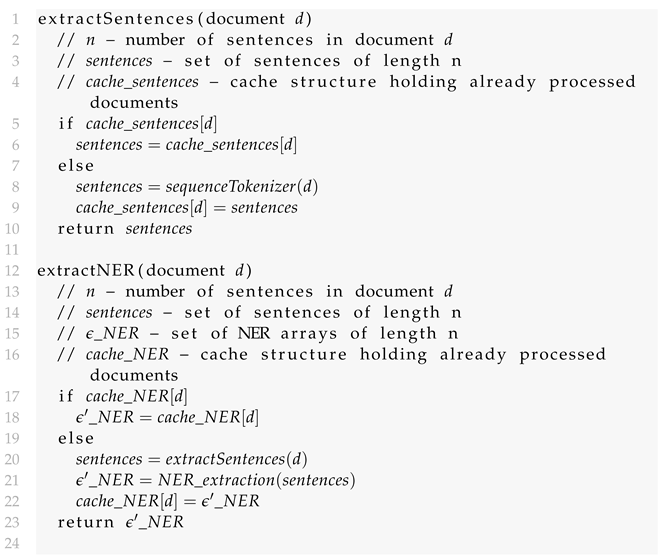

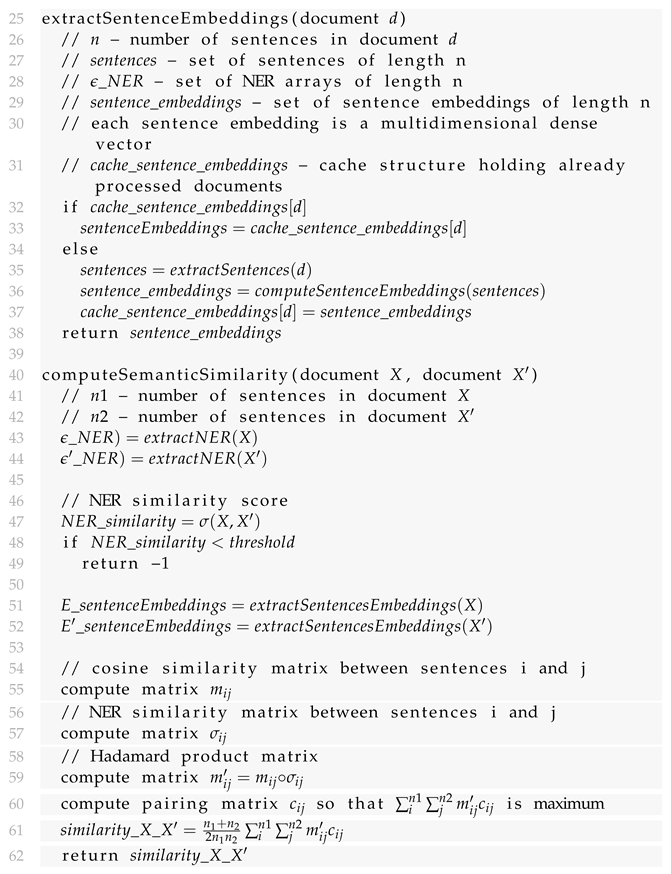

Listing 1. Semantic Similarity Algorithm.

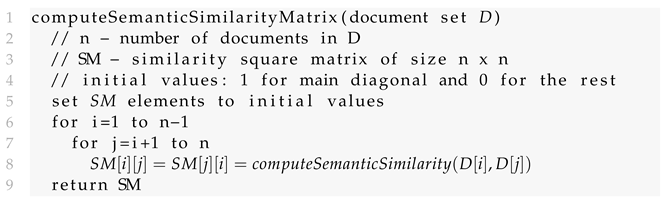

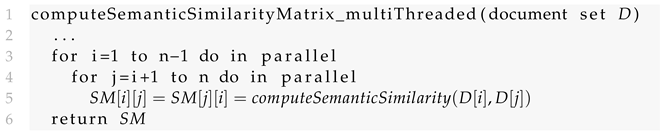

Listing 2. Semantic Similarity Algorithm on document database.

Listing 3. Semantic Similarity Algorithm on document database (multi-threaded).

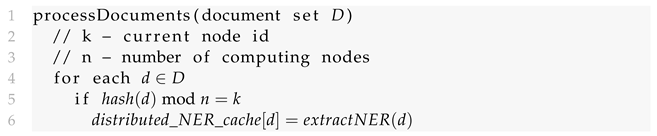

- The sentence tokenization and NER extraction phase. This phase takes all the documents from the document database D and extracts the sentences, sentence embeddings and NER entities. Each node in the computing cluster analyzes a portion of the document database according to a hash function. A document d is processed by a node k if $\mathrm{hash}(d) \bmod n = k$, where n is the number of computing nodes. The document d is processed by the function extractNER (document d) from Listing 1. The processing results of document d are then stored in a distributed cache. The pseudocode for this phase is given in Listing 4.

Listing 4. Distributed Semantic Similarity Algorithm: document processing.

- The NER-based clustering phase. This phase distributes the documents from the document database D into clusters according to their NER similarity. Clustering is justified by the fact that semantically similar documents also exhibit NER similarity. Clustering the documents by NER saves a large amount of computation, since only the documents belonging to the same cluster have to be analyzed for semantic similarity. Moreover, this phase is not computing-intensive, since it only analyzes the NER representation of the documents. At the end of this phase, the document database D is split into different document clusters, $D = dc_1 \cup dc_2 \cup \dots \cup dc_m$, where $dc_i$ are the clusters and $m$ is the total number of clusters.

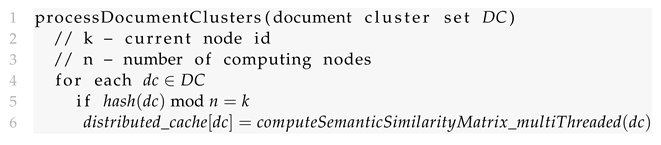

- The semantic similarity analysis phase. Each computing node is assigned a document cluster from the previous phase, according to a hash function. A document cluster dc is processed by a node k if $\mathrm{hash}(dc) \bmod n = k$, where n is the number of computing nodes. The value hash(dc) is defined in terms of the number of documents in the cluster dc. The document cluster dc is processed by the function computeSemanticSimilarityMatrix_multiThreaded (document set D) from Listing 3. The processing results of document cluster dc are then stored in a distributed cache for further analysis. The pseudocode for this phase is given in Listing 5.

Listing 5. Distributed Semantic Similarity Algorithm: document cluster processing.

The result of processing a document cluster is a semantic similarity matrix. In the context of online disinformation, this matrix can be used to find the patient zero news article and to track narrative changes, as described in Section 3.4.
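The hash-based assignment of work to computing nodes can be sketched as follows. The sketch assumes the rule is of the form hash(d) mod n = k and uses CRC32 from Python's standard `zlib` module as the hash. Both the choice of CRC32 here and the names `node_for` and `partition` are assumptions made for illustration, as are the document identifiers.

```python
import zlib

def node_for(key: str, num_nodes: int) -> int:
    """Map a document (or cluster) identifier to a node index in [0, num_nodes)."""
    return zlib.crc32(key.encode("utf-8")) % num_nodes

def partition(doc_ids, num_nodes):
    """Return, for each node k, the documents whose hash modulo n equals k."""
    shards = [[] for _ in range(num_nodes)]
    for doc_id in doc_ids:
        shards[node_for(doc_id, num_nodes)].append(doc_id)
    return shards

# Hypothetical document identifiers distributed over three nodes.
doc_ids = [f"article-{i}" for i in range(10)]
shards = partition(doc_ids, num_nodes=3)
for k, shard in enumerate(shards):
    print(k, shard)
```

Because the assignment depends only on the identifier and the number of nodes, every node can decide locally which documents it owns, without coordination. The trade-off is that changing the number of nodes reassigns most documents.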

3.4. Finding Patient Zero and Tracking Narrative Changes

- Figure 7a illustrates the semantic similarity graph of the document cluster. Each node in the graph represents a document. The graph is undirected and is based on the semantic similarity adjacency matrix computed by the algorithms presented in the previous sections. The minimum document similarity threshold used for this cluster is 35%.

- Figure 7b further filters this undirected graph by applying a stricter document similarity threshold of 75%. At the same time, we remove all the edges between the nodes whose timestamps do not follow a timeline. This time relation transforms the initial undirected graph into a directed graph, where a node points to another node only if it meets the similarity threshold and its timestamp is later.

- Figure 7c illustrates the similarity paths, marked in red, of each document in the graph. These paths are obtained by selecting, for each node, the parent with the highest similarity.

- Figure 7d illustrates the result of removing all edges from the directed graph, except the ones marked in red. The result is a forest of semantic similarity trees.

- Figure 7e illustrates how we can track the common source of propagation given a series of documents. Here, we wanted to find the common source for documents 8 and 10, which is document 4. The problem of finding the common source is known as the Lowest Common Ancestor (LCA) problem, and there are algorithms that solve it in linear time [39].
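The steps illustrated in Figure 7 can be sketched in a few lines. The sketch assumes that each document keeps as parent the earlier document with the highest similarity at or above the threshold. The common source is found by a naive walk up the tree, not by the linear-time algorithms cited in [39]. The function names, the similarity matrix and the timestamps are hypothetical.

```python
def build_forest(sim, timestamps, threshold):
    """For each document, pick as parent the earlier document with the highest
    similarity (at least `threshold`); roots get parent None."""
    n = len(sim)
    parent = [None] * n
    for child in range(n):
        best = None
        for cand in range(n):
            if cand == child or timestamps[cand] >= timestamps[child]:
                continue  # keep only edges that follow the timeline
            if sim[child][cand] < threshold:
                continue  # drop edges below the similarity threshold
            if best is None or sim[child][cand] > sim[child][best]:
                best = cand
        parent[child] = best
    return parent

def path_to_root(parent, node):
    """Follow parent links from `node` up to the root of its tree."""
    path = [node]
    while parent[path[-1]] is not None:
        path.append(parent[path[-1]])
    return path

def common_source(parent, a, b):
    """Lowest common ancestor of a and b, or None if they are in different trees."""
    ancestors = set(path_to_root(parent, a))
    for node in path_to_root(parent, b):
        if node in ancestors:
            return node
    return None

# Hypothetical symmetric similarity matrix and fetch order for five documents.
sim = [
    [1.00, 0.90, 0.80, 0.50, 0.40],
    [0.90, 1.00, 0.76, 0.85, 0.30],
    [0.80, 0.76, 1.00, 0.60, 0.95],
    [0.50, 0.85, 0.60, 1.00, 0.20],
    [0.40, 0.30, 0.95, 0.20, 1.00],
]
timestamps = [1, 2, 3, 4, 5]

parent = build_forest(sim, timestamps, threshold=0.75)
print(parent)                        # [None, 0, 0, 1, 2]
print(common_source(parent, 3, 4))   # 0
print(path_to_root(parent, 4)[-1])   # 0, the root of the tree (patient zero)
```

Since a parent always has a strictly earlier timestamp, the parent links cannot form a cycle, so every walk to the root terminates.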

4. Experimental Results

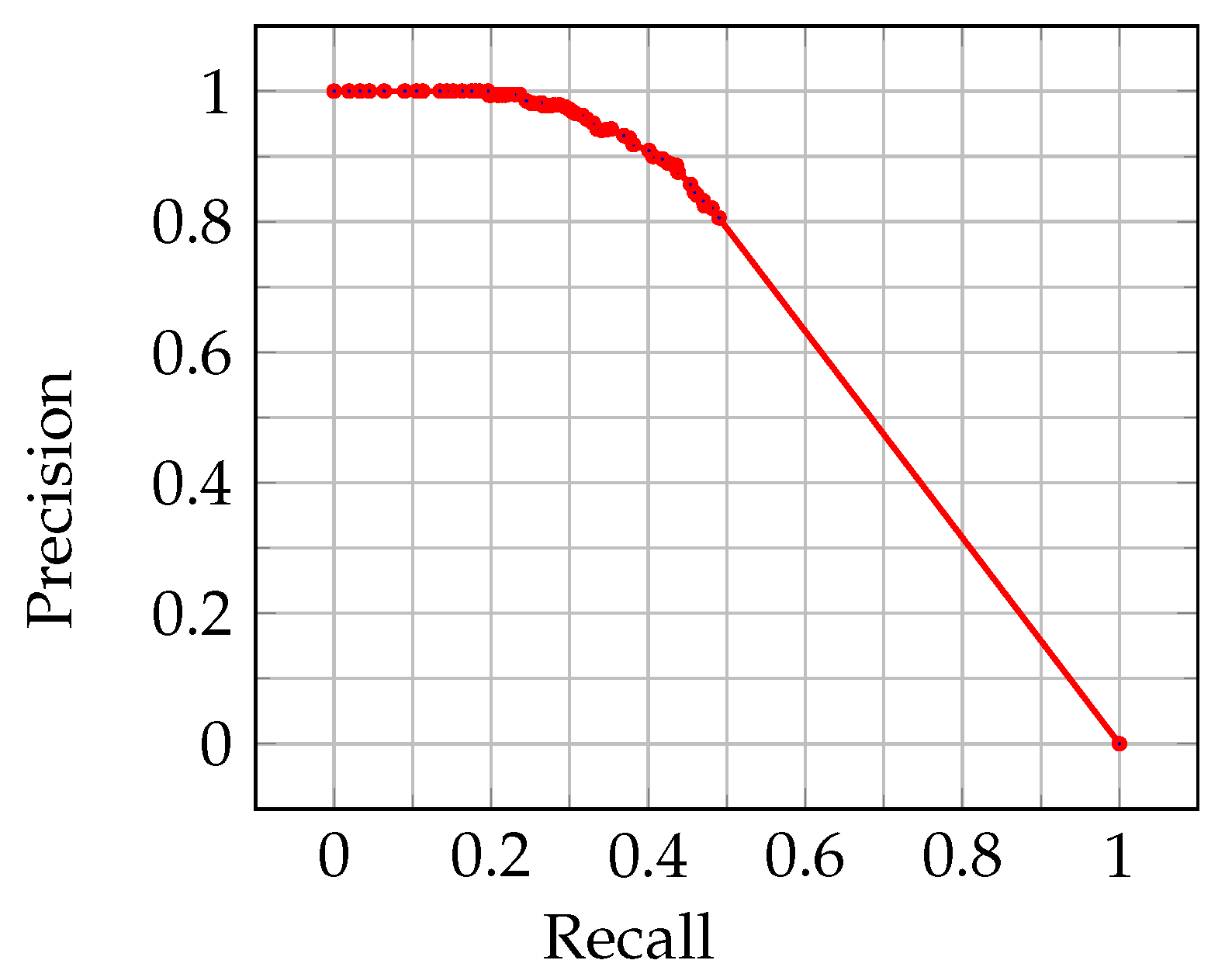

4.1. Quality Analysis

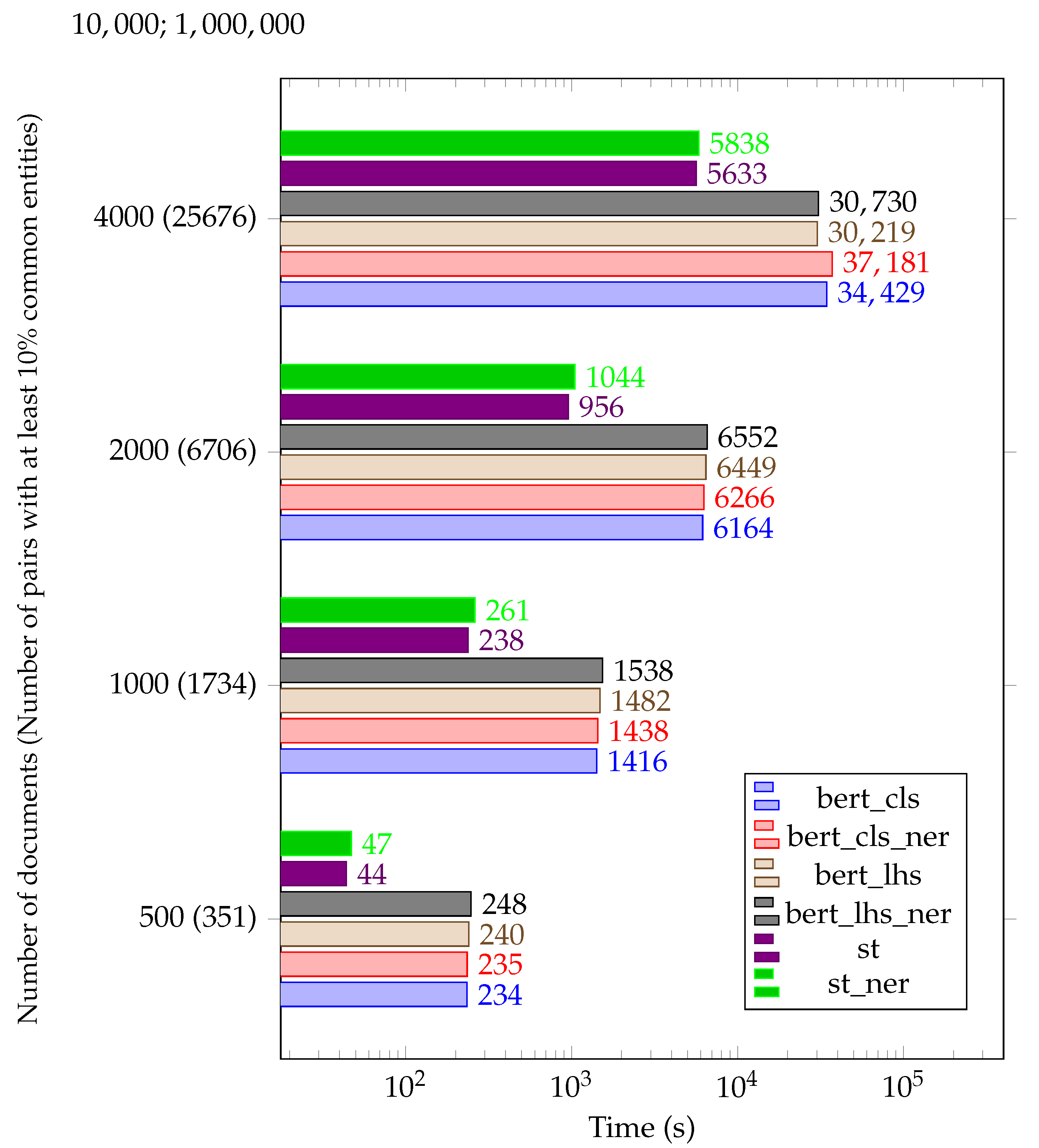

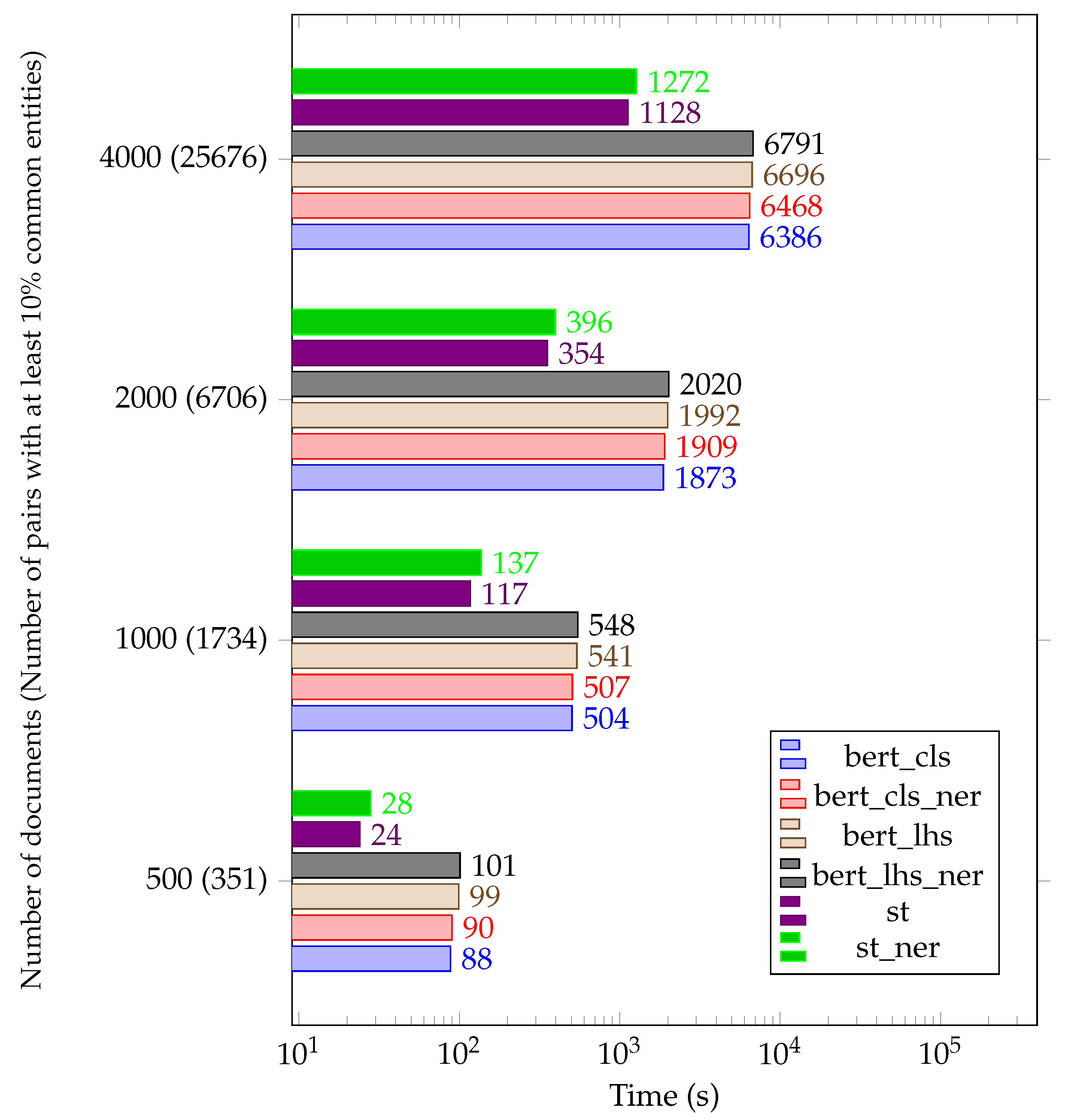

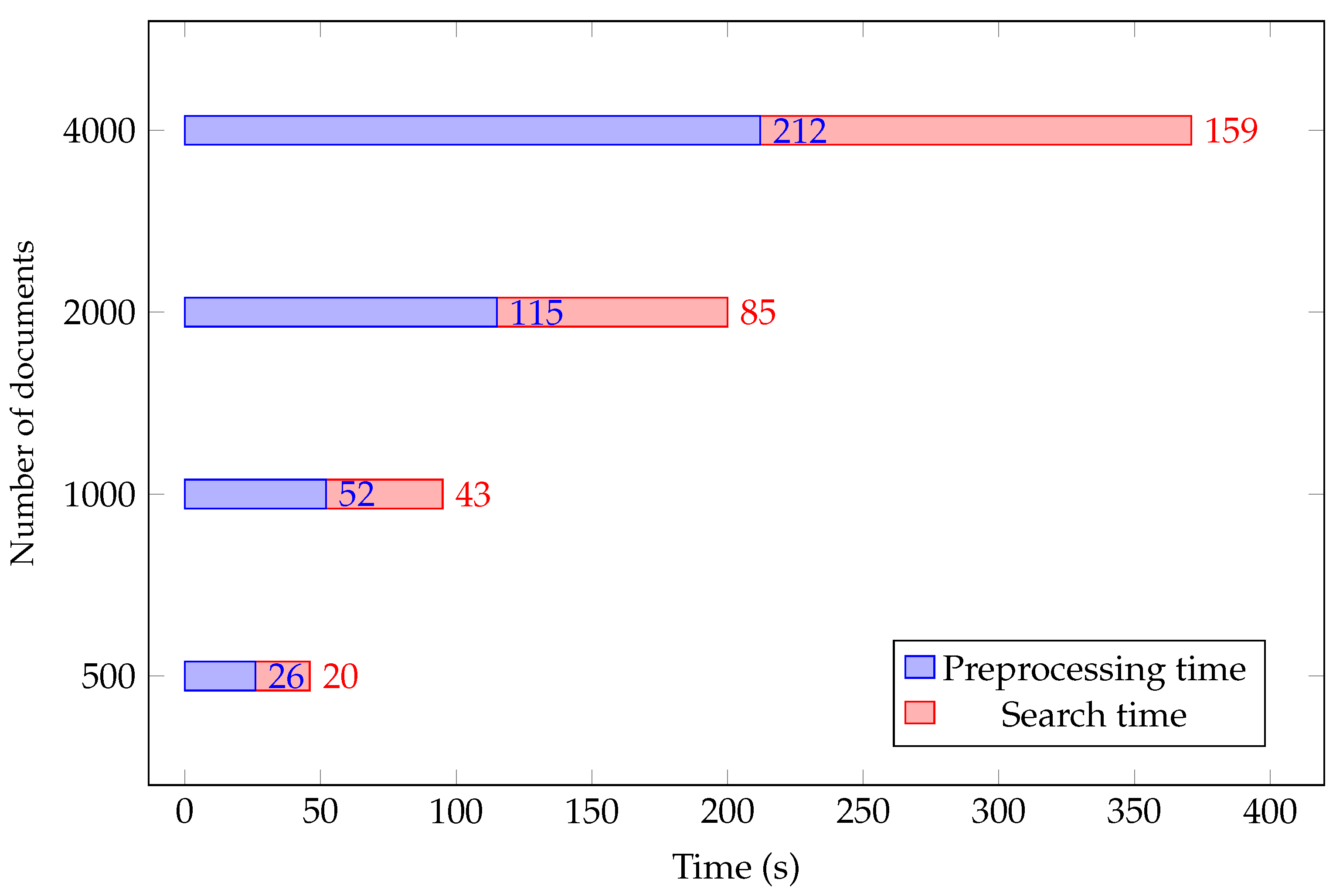

4.2. Time Efficiency Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AI | Artificial Intelligence |
| ASCII | American Standard Code for Information Interchange |
| AUC | Area Under the Curve |
| BERT | Bidirectional Encoder Representations from Transformers |
| CRC32 | 32-bit Cyclic Redundancy Checksum |
| FiDisD | “Fighting Disinformation using Decentralized Actors” project |
| LCA | Lowest Common Ancestor |
| LSH | Locality-Sensitive Hashing |
| MLM | Masked Language Modelling |
| NER | Named Entity Recognition |
| NLP | Natural Language Processing |
| NLTK | Natural Language Toolkit |
| PR | Precision-Recall |
| ROC | Receiver Operating Characteristic |
| TF-IDF | Term Frequency-Inverse Document Frequency |

References

1. Kanoh, H. Why do people believe in fake news over the Internet? An understanding from the perspective of existence of the habit of eating and drinking. Procedia Comput. Sci. 2018, 126, 1704–1709.

2. Kreps, S.; McCain, R.M.; Brundage, M. All the news that’s fit to fabricate: AI-generated text as a tool of media misinformation. J. Exp. Political Sci. 2022, 9, 104–117.

3. Susukailo, V.; Opirskyy, I.; Vasylyshyn, S. Analysis of the attack vectors used by threat actors during the pandemic. In Proceedings of the 2020 IEEE 15th International Conference on Computer Sciences and Information Technologies (CSIT), Zbarazh, Ukraine, 23–26 September 2020; Volume 2, pp. 261–264.

4. Zhou, X.; Wu, J.; Zafarani, R. SAFE: Similarity-Aware Multi-modal Fake News Detection. In Proceedings of the Advances in Knowledge Discovery and Data Mining: 24th Pacific-Asia Conference, PAKDD 2020, Singapore, 11–14 May 2020; pp. 354–367.

5. Singh, R.; Singh, S. Text similarity measures in news articles by vector space model using NLP. J. Inst. Eng. Ser. 2021, 102, 329–338.

6. Bisandu, D.B.; Prasad, R.; Liman, M.M. Clustering news articles using efficient similarity measure and N-grams. Int. J. Knowl. Eng. Data Min. 2018, 5, 333–348.

7. Sarwar, T.B.; Noor, N.M.; Miah, M.S.U. Evaluating keyphrase extraction algorithms for finding similar news articles using lexical similarity calculation and semantic relatedness measurement by word embedding. PeerJ Comput. Sci. 2022, 8, e1024.

8. Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.S.; Dean, J. Distributed representations of words and phrases and their compositionality. In Proceedings of the NeurIPS (NIPS) 2013 Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–10 December 2013; Volume 26.

9. Rupnik, J.; Muhic, A.; Leban, G.; Skraba, P.; Fortuna, B.; Grobelnik, M. News across languages-cross-lingual document similarity and event tracking. J. Artif. Intell. Res. 2016, 55, 283–316.

10. Dumais, S.T.; Letsche, T.A.; Littman, M.L.; Landauer, T.K. Automatic cross-language retrieval using latent semantic indexing. In AAAI Spring Symposium on Cross-Language Text and Speech Retrieval; Stanford University: Stanford, CA, USA, 1997; Volume 15, p. 21.

11. Hotelling, H. The most predictable criterion. J. Educ. Psychol. 1935, 26, 139.

12. Baraniak, K.; Sydow, M. News articles similarity for automatic media bias detection in Polish news portals. In Proceedings of the 2018 Federated Conference on Computer Science and Information Systems (FedCSIS), Poznan, Poland, 9–12 September 2018; pp. 21–24.

13. Neculoiu, P.; Versteegh, M.; Rotaru, M. Learning text similarity with siamese recurrent networks. In Proceedings of the 1st Workshop on Representation Learning for NLP, Berlin, Germany, 11 August 2016; pp. 148–157.

14. Choi, S. Internet News User Analysis Using Deep Learning and Similarity Comparison. Electronics 2022, 11, 569.

15. Wang, J.; Dong, Y. Measurement of text similarity: A survey. Information 2020, 11, 421.

16. Chandrasekaran, D.; Mago, V. Evolution of semantic similarity: A survey. ACM Comput. Surv. (CSUR) 2021, 54, 1–37.

17. Peinelt, N.; Nguyen, D.; Liakata, M. tBERT: Topic models and BERT joining forces for semantic similarity detection. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 7047–7055.

18. Li, Z.; Lin, H.; Shen, C.; Zheng, W.; Yang, Z.; Wang, J. Cross2Self-attentive bidirectional recurrent neural network with BERT for biomedical semantic text similarity. In Proceedings of the 2020 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Seoul, Republic of Korea, 16–19 December 2020; pp. 1051–1054.

19. Feifei, X.; Shuting, Z.; Yu, T. Bert-based Siamese Network for Semantic Similarity. J. Phys. Conf. Ser. 2020, 1684, 012074.

20. Viji, D.; Revathy, S. A hybrid approach of Weighted Fine-Tuned BERT extraction with deep Siamese Bi–LSTM model for semantic text similarity identification. Multimed. Tools Appl. 2022, 81, 6131–6157.

21. Reimers, N.; Gurevych, I. Sentence-BERT: Sentence embeddings using siamese BERT-networks. arXiv 2019, arXiv:1908.10084.

22. Ma, X.; Wang, Z.; Ng, P.; Nallapati, R.; Xiang, B. Universal text representation from BERT: An empirical study. arXiv 2019, arXiv:1910.07973.

23. Li, B.; Zhou, H.; He, J.; Wang, M.; Yang, Y.; Li, L. On the sentence embeddings from pre-trained language models. arXiv 2020, arXiv:2011.05864.

24. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

25. Manning, C.; Schutze, H. Foundations of Statistical Natural Language Processing; MIT Press: Cambridge, MA, USA, 1999.

26. Tufiş, D.; Chiţu, A. Automatic diacritics insertion in Romanian texts. In Proceedings of the International Conference on Computational Lexicography COMPLEX, Pecs, Hungary, 16–19 June 1999; Volume 99, pp. 185–194.

27. Peterson, W.W.; Brown, D.T. Cyclic codes for error detection. Proc. IRE 1961, 49, 228–235.

28. Sobti, R.; Geetha, G. Cryptographic hash functions: A review. Int. J. Comput. Sci. Issues (IJCSI) 2012, 9, 461.

29. Jaccard, P. The distribution of the flora in the alpine zone. 1. New Phytol. 1912, 11, 37–50.

30. Leskovec, J.; Rajaraman, A.; Ullman, J.D. Mining of Massive Data Sets; Cambridge University Press: Cambridge, UK, 2020.

31. Oprisa, C. A MinHash approach for clustering large collections of binary programs. In Proceedings of the 2015 20th International Conference on Control Systems and Computer Science, Bucharest, Romania, 27–29 May 2015; pp. 157–163.

32. Oprişa, C.; Checicheş, M.; Năndrean, A. Locality-sensitive hashing optimizations for fast malware clustering. In Proceedings of the 2014 IEEE 10th International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj, Romania, 4–6 September 2014; pp. 97–104.

33. Marrero, M.; Urbano, J.; Sánchez-Cuadrado, S.; Morato, J.; Gómez-Berbís, J.M. Named entity recognition: Fallacies, challenges and opportunities. Comput. Stand. Interfaces 2013, 35, 482–489.

34. Dumitrescu, S.; Avram, A.M.; Pyysalo, S. The birth of Romanian BERT. In Findings of the Association for Computational Linguistics: EMNLP 2020; Association for Computational Linguistics: Online, 2020; pp. 4324–4328.

35. Artene, C.G.; Tibeică, M.N.; Leon, F. Using BERT for Multi-Label Multi-Language Web Page Classification. In Proceedings of the 2021 IEEE 17th International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj-Napoca, Romania, 28–30 October 2021; pp. 307–312.

36. Reimers, N.; Gurevych, I. Making monolingual sentence embeddings multilingual using knowledge distillation. arXiv 2020, arXiv:2004.09813.

37. Yang, Y.; Cer, D.; Ahmad, A.; Guo, M.; Law, J.; Constant, N.; Abrego, G.H.; Yuan, S.; Tar, C.; Sung, Y.H.; et al. Multilingual universal sentence encoder for semantic retrieval. arXiv 2019, arXiv:1907.04307.

38. Ethayarajh, K. How contextual are contextualized word representations? Comparing the geometry of BERT, ELMo, and GPT-2 embeddings. arXiv 2019, arXiv:1909.00512.

39. Bender, M.A.; Farach-Colton, M.; Pemmasani, G.; Skiena, S.; Sumazin, P. Lowest common ancestors in trees and directed acyclic graphs. J. Algorithms 2005, 57, 75–94.

40. Murtagh, F.; Contreras, P. Algorithms for hierarchical clustering: An overview. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2012, 2, 86–97.

41. Sibson, R. SLINK: An optimally efficient algorithm for the single-link cluster method. Comput. J. 1973, 16, 30–34.

42. Tharwat, A. Classification assessment methods. Appl. Comput. Inform. 2021, 17, 168–192.

43. Van Rijsbergen, C. Information retrieval: Theory and practice. In Proceedings of the Joint IBM/University of Newcastle upon Tyne Seminar on Data Base Systems, Newcastle upon Tyne, UK, 4–7 September 1979; Volume 79.

44. Cook, J.; Ramadas, V. When to consult precision-recall curves. Stata J. 2020, 20, 131–148.

45. Fawcett, T. An introduction to ROC analysis. Pattern Recognit. Lett. 2006, 27, 861–874.

| Method | Best Threshold | Precision | Recall | F1 Score | AUC Score |
|---|---|---|---|---|---|
| lsh | 40% | 0.806 | 0.490 | 0.609 | 0.675 |
| bert_cls | 90% | 0.574 | 0.317 | 0.409 | 0.305 |
| bert_cls_ner | 38% | 0.702 | 0.699 | 0.700 | 0.725 |
| bert_lhs | 90% | 0.688 | 0.401 | 0.507 | 0.386 |
| bert_lhs_ner | 40% | 0.721 | 0.684 | 0.702 | 0.729 |
| st | 90% | 0.656 | 0.701 | 0.678 | 0.716 |
| st_ner | 49% | 0.590 | 0.585 | 0.588 | 0.631 |
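The score listed after precision and recall in the table is consistent with the harmonic mean of the two (the F1 score). As a check, taking the precision $P$ and recall $R$ of the lsh row:

$$
F_1 = \frac{2PR}{P + R} = \frac{2 \times 0.806 \times 0.490}{0.806 + 0.490} = \frac{0.78988}{1.296} \approx 0.609
$$

The same computation for the bert_lhs_ner row gives $2 \times 0.721 \times 0.684 / 1.405 \approx 0.702$, again matching the table.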

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Artene, C.-G.; Oprișa, C.; Buțincu, C.N.; Leon, F. Finding Patient Zero and Tracking Narrative Changes in the Context of Online Disinformation Using Semantic Similarity Analysis. Mathematics 2023, 11, 2053. https://doi.org/10.3390/math11092053
