An Approach Based on Cross-Attention Mechanism and Label-Enhancement Algorithm for Legal Judgment Prediction

Abstract

1. Introduction

1. We propose a new legal judgment prediction (LJP) method that uses keywords extracted from cases as label-enhanced information instead of established law articles. This avoids the gap in information fusion that established articles introduce.

2. We propose a novel cross-attention fusion distillation mechanism for fusing keywords. It not only identifies the distinctive keywords of each case but also optimizes the keyword representations so that they become distinguishable.

3. We use the label-enhancement (LE) algorithm to add a subtask consistency constraint to the one-hot label distribution. This improves the rationality and consistency of the prediction results, and it also alleviates the model's over-confidence caused by confusing labels.

4. We conduct experiments on two real-world datasets (CAIL-Small and CAIL-Big). Our method achieves strong results, outperforming the baseline models on nearly all metrics.
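The second contribution fuses crime keywords into the case representation through cross-attention. The sketch below illustrates that idea with plain scaled dot-product attention [26]: fact tokens act as queries, and keyword embeddings act as both keys and values.

This is a minimal sketch, not the paper's module. It makes several simplifying assumptions:
- The learned query, key, and value projections are omitted.
- The distillation operation is omitted.
- The function names (`matmul`, `softmax`, `cross_attention`) and the toy vectors are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b (rows of a times columns of b)."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def softmax(row: List[float]) -> List[float]:
    """Softmax over one row; subtracting the max keeps exp() from overflowing."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(facts: Matrix, keywords: Matrix) -> Tuple[Matrix, Matrix]:
    """Scaled dot-product cross-attention from fact tokens to crime keywords.

    facts:    n x d, one row per fact token (queries)
    keywords: k x d, one row per crime keyword (keys and values)
    Returns (fused, weights): fused is n x d, weights is n x k.
    Hypothetical simplification: no learned W_Q / W_K / W_V projections.
    """
    d = len(facts[0])
    keys_t = [list(col) for col in zip(*keywords)]      # d x k
    scores = matmul(facts, keys_t)                      # n x k raw similarities
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    fused = matmul(weights, keywords)                   # n x d keyword-aware tokens
    return fused, weights


facts = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # toy fact-token vectors
keywords = [[1.0, 0.0], [0.0, 1.0]]            # toy keyword vectors
fused, weights = cross_attention(facts, keywords)
print([round(w, 3) for w in weights[0]])  # prints [0.67, 0.33]
```

Each fact token ends up as a weighted mix of keyword vectors. The token that resembles both keywords equally (the third one) attends to them equally.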
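The third contribution replaces the one-hot target with an enhanced label distribution. The rough illustration below uses a label-smoothing-style construction: part of the gold label's probability mass is moved to related labels, and the model is trained against the result with a KL-divergence loss.

This sketch is written in the spirit of label enhancement [8], label smoothing [28], and label confusion learning [31]. It is not the paper's LE algorithm, and the following inputs are hypothetical:
- the `similarity` scores, for example charges that are consistent with the gold law article;
- the moved mass `alpha`.

```python
import math
from typing import Dict, List


def enhanced_label(gold: int, num_labels: int,
                   similarity: Dict[int, float], alpha: float = 0.1) -> List[float]:
    """Convert a one-hot target into a soft label distribution.

    gold:       index of the ground-truth label
    similarity: hypothetical non-negative relatedness of other labels to `gold`
    alpha:      probability mass moved from the gold label to related labels
    """
    dist = [0.0] * num_labels
    related = {k: v for k, v in similarity.items() if k != gold and v > 0}
    total = sum(related.values())
    if total == 0:
        dist[gold] = 1.0  # nothing related: keep the plain one-hot target
        return dist
    dist[gold] = 1.0 - alpha
    for k, v in related.items():
        dist[k] = alpha * v / total  # share alpha in proportion to relatedness
    return dist


def kl_divergence(target: List[float], predicted: List[float], eps: float = 1e-12) -> float:
    """KL(target || predicted), used as the loss against the soft target."""
    return sum(t * math.log(t / max(p, eps)) for t, p in zip(target, predicted) if t > 0)


target = enhanced_label(gold=0, num_labels=4, similarity={1: 3.0, 2: 1.0}, alpha=0.2)
overconfident = [0.97, 0.01, 0.01, 0.01]
print(kl_divergence(target, overconfident) > kl_divergence(target, target))  # prints True
```

Under a soft target, a prediction that puts almost all mass on one label is penalized even when that label is correct. This is the over-confidence effect the contribution refers to.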

2. Related Works

2.1. Legal Judgment Prediction

2.2. Attention Mechanism

2.3. Label Enhancement

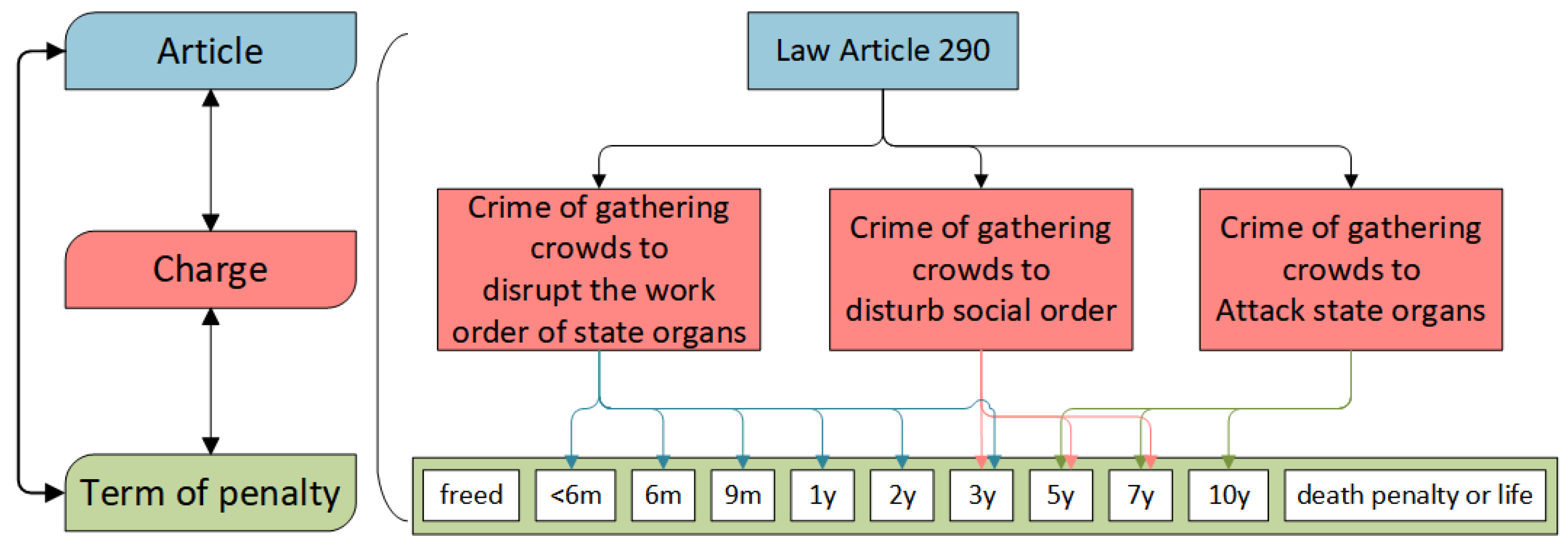

3. Problem Formalization

4. Methodology

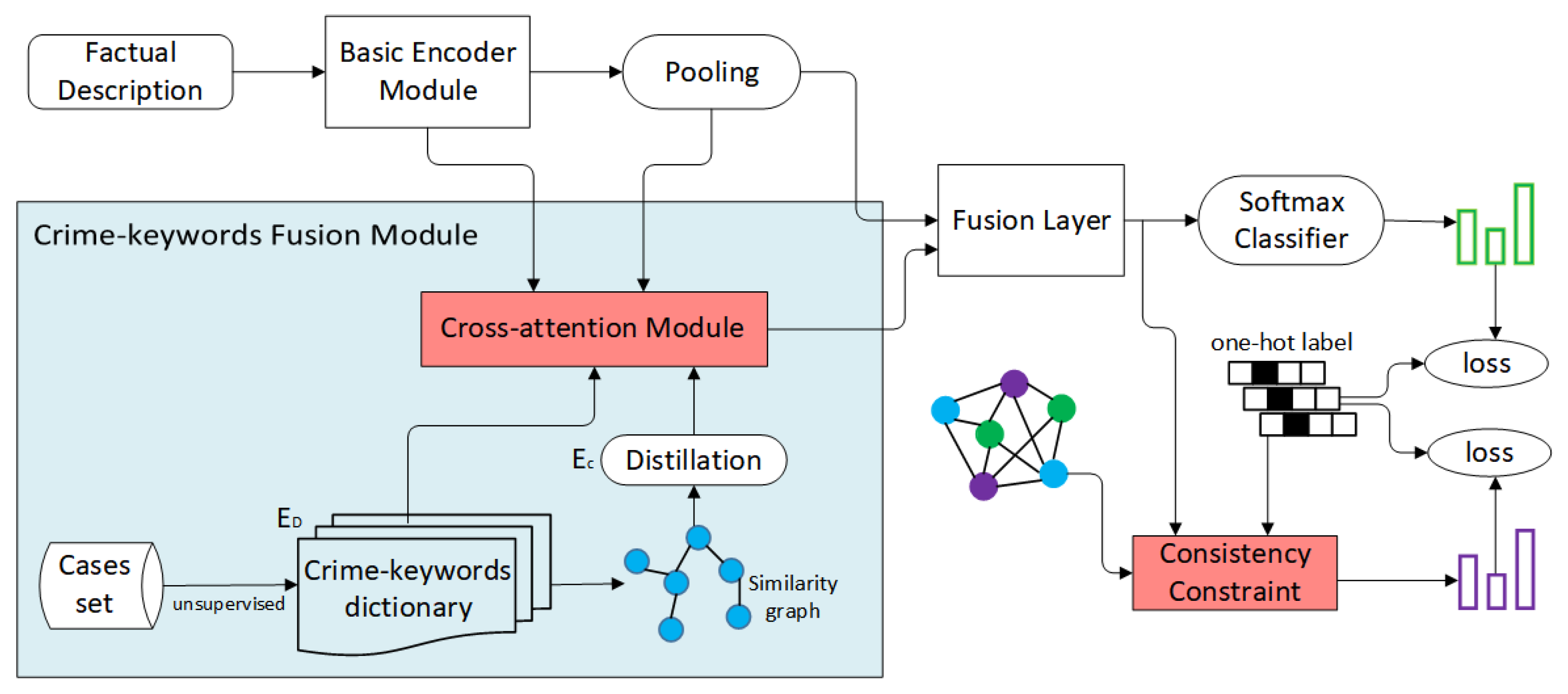

4.1. Overview of the Proposed Framework

4.2. Basic Encoder Module

4.3. Crime-Keywords Fusion Module Based on Cross-Attention Mechanism

4.3.1. Crime-Keywords Dictionary Construction

4.3.2. Charge Similarity Graph Construction

Algorithm 1. Construction of crime-keywords dictionary and charge similarity graph.

4.3.3. Distillation Operation

4.3.4. Cross-Attention Module

4.4. Fusion Layer

4.5. Subtask Consistency Constraint Module

4.6. Prediction and Training

Algorithm 2. Optimization algorithm.

5. Experiments

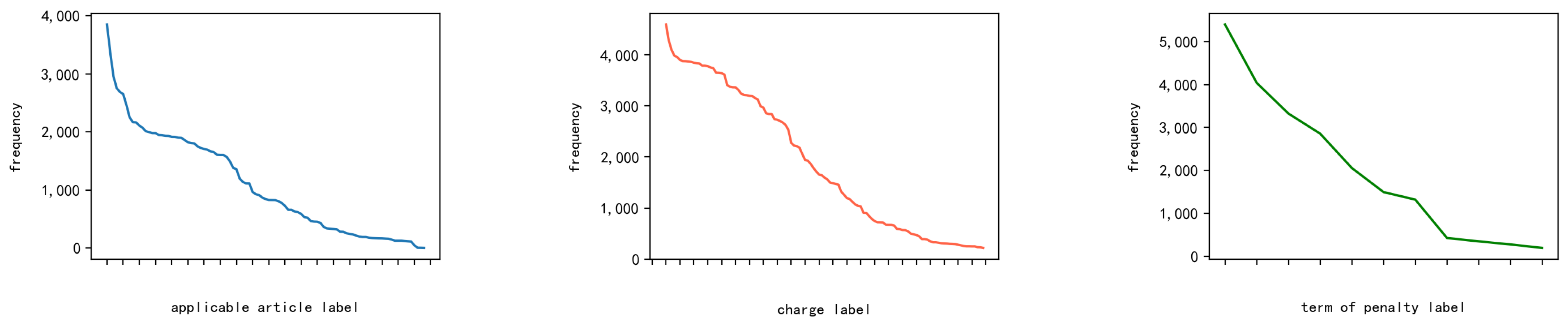

5.1. Datasets

5.2. Baseline Methods

- FLA [18]: a neural network with attention mechanisms for capturing the interaction between the factual description and applicable articles.

- TopJudge [2]: a topological multi-task learning model that incorporates the DAG dependencies among multiple subtasks into LJP.

- Few-Shot [14]: an attribute-attentive charge prediction model that can predict few-shot charges and alleviate confusing charge issues.

- LADAN [21]: an attention-based model that employs a graph neural network to learn the distinction between confusing legal articles and further distinguishes between confusing charges.

- NeurJudge+ [22]: a model that incorporates the semantics of established articles into the facts, which helps divide the factual description into parts relevant to the different LJP subtasks.

- R-former [7]: utilizes a masked transformer network to obtain case-discriminative representations, and achieves local consistency of each node’s label distribution through relational learning.

5.3. Experimental Setup and Evaluation Metrics

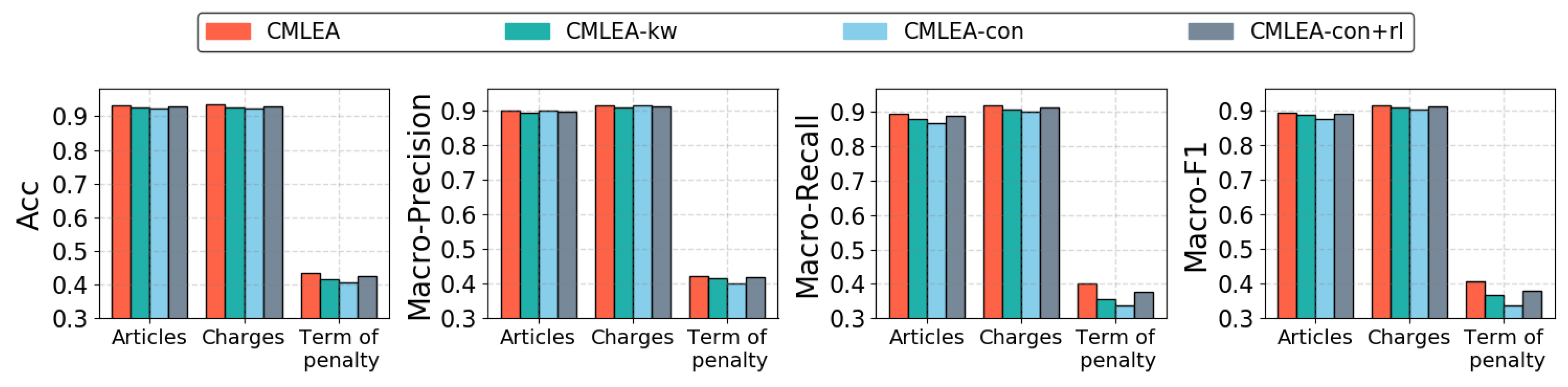

5.4. Experimental Results

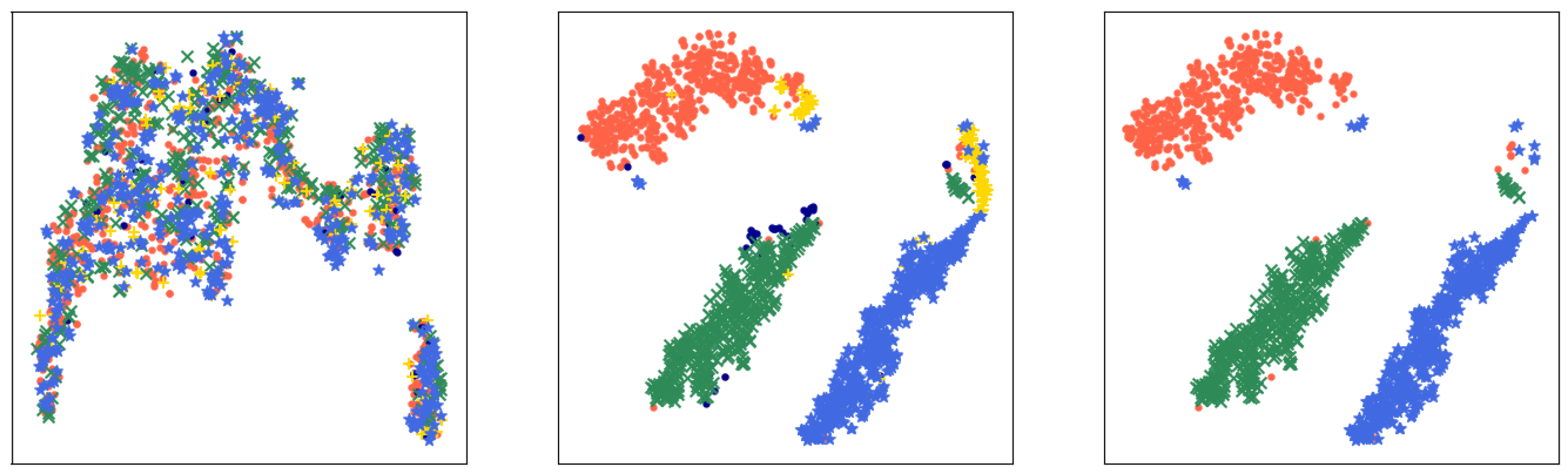

5.5. Case Study

6. Ethical Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. Running Environment

Appendix A.2. Subtask Consistency Constraint and Data Statistics

Appendix A.3. Crime-Keywords Dictionary

| Content of Established Articles | Crime-Keywords |

|---|---|

| Law Article 338—Crime of Polluting the Environment: Any person who, in violation of state regulations, discharges, dumps, or disposes of radioactive waste, waste containing infectious disease pathogens, toxic substances, or other harmful substances, thereby seriously polluting the environment, shall be sentenced to fixed-term imprisonment of not more than three years, criminal detention, or a fine. If the circumstances are serious, the sentence shall be fixed-term imprisonment of not less than three years but not more than seven years and a fine. Under any of the following circumstances, the sentence shall be fixed-term imprisonment of not less than seven years and a fine. | Discharge, Wastewater, Chromium, Monitoring, Zinc, pH, Concentration, Environment, Pollutant, Environmental Protection Agency, Waste, Total Chromium, Department of Environment Protection, Standard, Exceeding Standard, Content, Monitoring Station |

| Law Article 266—Crime of Fraud: Whoever defrauds public or private property shall, if the amount is relatively large, be sentenced to fixed-term imprisonment of not more than three years, criminal detention, or public surveillance, and a fine. If the amount involved is large or there are other serious circumstances, the sentence shall be fixed-term imprisonment of not less than three years but not more than ten years and a fine. If the amount involved is substantially large or there are other extremely serious circumstances, the sentence shall be fixed-term imprisonment of not less than ten years or life imprisonment and a fine or confiscation of property. Where there are other provisions in this law, those provisions shall prevail. | Trust, Fake, Borrow Money, Bank Card, Cheat, Clothing Fee, Stolen Money, Tipping for Desk Fees, Hide, Fabricate, For the Reason, Squander, Take Possession of, Amount of Money, Real Situation, Lie, Mortgage, Bank, Defraud, Property |

| Law Article 193—Crime of Loan Fraud: Whoever, under any of the following circumstances and for the purpose of illegal possession, defrauds banks or other financial institutions of loans shall, if the amount is relatively large, be sentenced to fixed-term imprisonment of not more than five years or criminal detention and a fine of not less than CNY 20,000 but not more than CNY 200,000. If the amount is large or there are other serious circumstances, the sentence shall be fixed-term imprisonment of not less than five years but not more than ten years and a fine of not less than CNY 50,000 but not more than CNY 500,000. If the amount is substantially large or there are other extremely serious circumstances, the sentence shall be fixed-term imprisonment of not less than ten years or life imprisonment and a fine of not less than CNY 50,000 but not more than CNY 500,000 or confiscation of property. | Finance, Credit Union, Principal and Interest, Student, Borrow Money, Repayment, Loan, Credit, Take Possession of, Associated Agency, Fraud, Fake, Sub-branch, Bank, Mortgage, Cheat, Repay, Principal Money, Interest, Installments |

| Law Article 175—Crime of Fraudulently Obtaining Loans, Bill Acceptances, and Financial Documents: Any person who obtains loans, bill acceptances, letters of credit, letters of guarantee, etc., from banks or other financial institutions through fraudulent means, thereby causing heavy losses to the banks or other financial institutions, shall be sentenced to fixed-term imprisonment of not more than three years or criminal detention and shall also be fined. If the losses caused to the banks or other financial institutions are especially heavy or there are other extremely serious circumstances, the sentence shall be fixed-term imprisonment of not less than three years but not more than seven years and a fine. | Finance, Credit Union, Company, Borrow Money, Loan, Acceptance Bill, Credit, Cheat, Fake, Associated Agency, Sub-branch, Bank, Maturity, Guarantee, Fraud, Principal Money, Interest, Exchange Bill, Repay |

| Law Article 192—Crime of Fundraising Fraud: Any person who, for the purpose of illegal possession, illegally raises funds through fraudulent means shall, if the amount is relatively large, be sentenced to fixed-term imprisonment of not less than three years but not more than seven years and shall also be fined. If the amount is substantially large or there are other extremely serious circumstances, the sentence shall be fixed-term imprisonment of not less than seven years or life imprisonment and a fine or confiscation of property. Where a unit commits the crime in the preceding paragraph, the unit shall be fined, and the persons directly in charge and other directly responsible personnel shall be punished in accordance with the preceding paragraph. | Borrow Money, Fundraising, Funds, High Amount of Money, Absorption, High Interest, Take Possession of, Amount, Monthly Interest, Fraud, Illegal, Cheat, Public, Investment, Repay, Principal Money, Interest, Bait, Raise Funds |

| Law Article 176—Crime of Illegally Absorbing Public Deposits: Any person who illegally absorbs public deposits or absorbs public deposits in a disguised form, thereby disturbing the financial order, shall be sentenced to fixed-term imprisonment of not more than three years or criminal detention and shall also be sentenced to a fine. If the amount is large or there are other serious circumstances, the sentence shall be fixed-term imprisonment of not less than three years but not more than ten years and a fine. If the amount is substantially large or there are other extremely serious circumstances, the sentence shall be fixed-term imprisonment of not less than ten years and a fine. | Finance, Member, Borrow Money, Disruption, Fundraising, Investors, Biao Hui, Funds, High Amount of Money, Absorption, Membership, Monthly Interest, Public, Repay, Investment, Bank Savings, Principal Money, Interest, Bait, Meeting Day |
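The keyword lists above show how much confusing charges overlap. For example, loan fraud (Article 193) and fraudulently obtaining loans (Article 175) share many keywords.

One simple way to turn such a dictionary into weighted edges of a charge similarity graph is Jaccard similarity over keyword sets. The paper's Algorithm 1 may use a different measure, so the following are assumptions for illustration only:
- the choice of Jaccard similarity;
- the function names `jaccard` and `similarity_edges`;
- the 0.3 threshold.

The keyword sets themselves are copied from the table.

```python
from itertools import combinations
from typing import Dict, List, Set, Tuple

# Keyword sets copied from the crime-keywords dictionary table.
KEYWORDS: Dict[str, Set[str]] = {
    "Article 193": {"Finance", "Credit Union", "Principal and Interest", "Student",
                    "Borrow Money", "Repayment", "Loan", "Credit", "Take Possession of",
                    "Associated Agency", "Fraud", "Fake", "Sub-branch", "Bank",
                    "Mortgage", "Cheat", "Repay", "Principal Money", "Interest",
                    "Installments"},
    "Article 175": {"Finance", "Credit Union", "Company", "Borrow Money", "Loan",
                    "Acceptance Bill", "Credit", "Cheat", "Fake", "Associated Agency",
                    "Sub-branch", "Bank", "Maturity", "Guarantee", "Fraud",
                    "Principal Money", "Interest", "Exchange Bill", "Repay"},
    "Article 338": {"Discharge", "Wastewater", "Chromium", "Monitoring", "Zinc", "pH",
                    "Concentration", "Environment", "Pollutant",
                    "Environmental Protection Agency", "Waste", "Total Chromium",
                    "Department of Environment Protection", "Standard",
                    "Exceeding Standard", "Content", "Monitoring Station"},
}


def jaccard(a: Set[str], b: Set[str]) -> float:
    """|A ∩ B| / |A ∪ B|, defined as 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def similarity_edges(dictionary: Dict[str, Set[str]],
                     threshold: float) -> List[Tuple[str, str, float]]:
    """Hypothetical graph construction: link two charges whose keyword overlap >= threshold."""
    edges = []
    for u, v in combinations(sorted(dictionary), 2):
        score = jaccard(dictionary[u], dictionary[v])
        if score >= threshold:
            edges.append((u, v, score))
    return edges


print(similarity_edges(KEYWORDS, threshold=0.3))
# prints [('Article 175', 'Article 193', 0.56)]
```

The two loan-related articles share 14 of their 25 distinct keywords and are linked by an edge. The environmental-pollution article shares no keywords with either and stays isolated.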

References

- Medvedeva, M.; Wieling, M.; Vols, M. Rethinking the field of automatic prediction of court decisions. Artif. Intell. Law 2023, 31, 195–212. [Google Scholar] [CrossRef]

- Zhong, H.; Guo, Z.; Tu, C.; Xiao, C.; Liu, Z.; Sun, M. Legal Judgment Prediction via Topological Learning. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 3540–3549. [Google Scholar]

- Zhong, H.; Zhou, J.; Qu, W.; Long, Y.; Gu, Y. An Element-aware Multi-representation Model for Law Article Prediction. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 6663–6668. [Google Scholar]

- Meng, Y.; Zhang, Y.; Huang, J.; Xiong, C.; Ji, H.; Zhang, C.; Han, J. Text Classification Using Label Names Only: A Language Model Self-Training Approach. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 9006–9017. [Google Scholar]

- Chen, J.; Du, L.; Liu, M.; Zhou, X. Mulan: A Multiple Residual Article-Wise Attention Network for Legal Judgment Prediction. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2022, 21, 1–15. [Google Scholar] [CrossRef]

- Xiong, C.; Zhong, V.; Socher, R. Dynamic coattention networks for question answering. In Proceedings of the 5th International Conference on Learning Representations (ICLR ’17), Toulon, France, 24–26 April 2017; pp. 15–28. [Google Scholar]

- Dong, Q.; Niu, S. Legal Judgment Prediction via Relational Learning. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Online, 11–15 July 2021; pp. 983–992. [Google Scholar]

- Xu, N.; Liu, Y.P.; Geng, X. Label enhancement for label distribution learning. IEEE Trans. Knowl. Data Eng. 2019, 33, 1632–1643. [Google Scholar] [CrossRef]

- Kort, F. Predicting Supreme Court decisions mathematically: A quantitative analysis of the “right to counsel” cases. Am. Political Sci. Rev. 1957, 51, 1–12. [Google Scholar] [CrossRef]

- Chen, H.; Cai, D.; Dai, W.; Dai, Z.; Ding, Y. Charge-Based Prison Term Prediction with Deep Gating Network. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 6362–6367. [Google Scholar]

- Pan, S.; Lu, T.; Gu, N.; Zhang, H.; Xu, C. Charge Prediction for Multi-defendant Cases with Multi-scale Attention. In Proceedings of the CCF Conference on Computer Supported Cooperative Work and Social Computing, Kunming, China, 16–18 August 2019; Springer: Singapore, 2019; pp. 766–777. [Google Scholar]

- Wang, P.; Yang, Z.; Niu, S.; Zhang, Y.; Zhang, L.; Niu, S. Modeling Dynamic Pairwise Attention for Crime Classification over Legal Articles. In Proceedings of the 41st international ACM SIGIR Conference on Research & Development in Information Retrieval, Ann Arbor, MI, USA, 8–12 July 2018; pp. 485–494. [Google Scholar]

- Li, S.; Liu, B.; Ye, L.; Zhang, H.; Fang, B. Element-Aware Legal Judgment Prediction for Criminal Cases with Confusing Charges. In Proceedings of the 2019 IEEE 31st International Conference on Tools with Artificial Intelligence (ICTAI), Portland, OR, USA, 4–6 November 2019; pp. 660–667. [Google Scholar]

- Hu, Z.; Li, X.; Tu, C.; Liu, Z.; Sun, M. Few-Shot Charge Prediction with Discriminative Legal Attributes. In Proceedings of the 27th International Conference on Computational Linguistics, Santa Fe, NM, USA, 20–26 August 2018; pp. 487–498. [Google Scholar]

- Guo, J.; Liu, Z.; Yu, Z.; Huang, Y.; Xiang, Y. Few Shot and Confusing Charges Prediction with the Auxiliary Sentences of Case. J. Softw. 2021, 32, 3139–3150. [Google Scholar]

- Zhong, H.; Wang, Y.; Tu, C.; Zhang, T.; Liu, Z.; Sun, M. Iteratively Questioning and Answering for Interpretable Legal Judgment Prediction. In Proceedings of the 34th AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 1250–1257. [Google Scholar]

- Gan, L.; Kuang, K.; Yang, Y.; Wu, F. Judgment Prediction via Injecting Legal Knowledge into Neural Networks. In Proceedings of the 35th AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; pp. 12866–12874. [Google Scholar]

- Luo, B.; Feng, Y.; Xu, J.; Zhang, X.; Zhao, D. Learning to Predict Charges for Criminal Cases with Legal Basis. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 2727–2736. [Google Scholar]

- Bao, Q.; Zan, H.; Gong, P.; Chen, J.; Xiao, Y. Charge prediction with legal attention. In Proceedings of the 8th CCF International Conference on Natural Language Processing and Chinese Computing, Dunhuang, China, 9–14 October 2019; pp. 447–458. [Google Scholar]

- Yang, W.; Jia, W.; Zhou, X.; Luo, Y. Legal Judgment Prediction via Multi-Perspective Bi-Feedback Network. In Proceedings of the 28th International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; pp. 4085–4091. [Google Scholar]

- Xu, N.; Wang, P.; Chen, L.; Pan, L.; Wang, X.; Zhao, J. Distinguish Confusing Law Articles for Legal Judgment Prediction. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 3086–3095. [Google Scholar]

- Yue, L.; Liu, Q.; Jin, B.; Wu, H.; Zhang, K.; An, Y.; Cheng, M.; Yin, B.; Wu, D. NeurJudge: A Circumstance-aware Neural Framework for Legal Judgment Prediction. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Online, 11–15 July 2021; pp. 973–982. [Google Scholar]

- Mnih, V.; Heess, N.; Graves, A.; Kavukcuoglu, K. Recurrent Models of Visual Attention. In Proceedings of the 27th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; MIT Press: Cambridge, MA, USA, 2014; pp. 2204–2212. [Google Scholar]

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473. [Google Scholar]

- Yang, Z.; Yang, D.; Dyer, C.; He, X.; Smola, A.; Hovy, E. Hierarchical attention networks for document classification. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; pp. 1480–1489. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 3–9 December 2017; pp. 5999–6009. [Google Scholar]

- Wu, Y.; Zhu, L.; Yan, Y.; Yang, Y. Dual Attention Matching for Audio-Visual Event Localization. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6291–6299. [Google Scholar] [CrossRef]

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2818–2826. [Google Scholar]

- Geng, X. Label distribution learning. IEEE Trans. Knowl. Data Eng. 2016, 28, 1734–1748. [Google Scholar] [CrossRef]

- Gao, Y.; Zhang, Y.; Geng, X. Label Enhancement for Label Distribution Learning via Prior Knowledge. In Proceedings of the 29th International Joint Conference on Artificial Intelligence, Online, 7–15 January 2021; pp. 3223–3229. [Google Scholar]

- Guo, B.; Han, S.; Han, X.; Huang, H.; Lu, T. Label confusion learning to enhance text classification models. In Proceedings of the 35th AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; pp. 12929–12936. [Google Scholar]

- Cui, Y.; Che, W.; Liu, T.; Qin, B.; Yang, Z. Pre-training with whole word masking for Chinese BERT. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 29, 3504–3514. [Google Scholar] [CrossRef]

- Campos, R.; Mangaravite, V.; Pasquali, A.; Jorge, A.; Nunes, C.; Jatowt, A. YAKE! Keyword extraction from single documents using multiple local features. Inf. Sci. 2020, 509, 257–289. [Google Scholar] [CrossRef]

- Liu, X.; Yin, D.; Feng, Y.; Wu, Y.; Zhao, D. Everything Has a Cause: Leveraging Causal Inference in Legal Text Analysis. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; pp. 1928–1941. [Google Scholar]

- Jones, K.S. A statistical interpretation of term specificity and its application in retrieval. J. Doc. 1972, 28, 11–21. [Google Scholar] [CrossRef]

- Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.S.; Dean, J. Distributed representations of words and phrases and their compositionality. Adv. Neural Inf. Process. Syst. 2013, 26, 3111–3119. [Google Scholar]

- Kipf, T.N.; Welling, M. Semi-Supervised Classification with Graph Convolutional Networks. In Proceedings of the 5th International Conference on Learning Representations (ICLR ’17), Toulon, France, 24–26 April 2017; pp. 1–14. [Google Scholar]

- Xiao, C.; Zhong, H.; Guo, Z.; Tu, C.; Liu, Z.; Sun, M.; Feng, Y.; Han, X.; Hu, Z.; Wang, H.; et al. CAIL2018: A large-scale legal dataset for judgment prediction. arXiv 2018, arXiv:1807.02478. [Google Scholar]

- Suykens, J.A.; Vandewalle, J. Least squares support vector machine classifiers. Neural Process. Lett. 1999, 9, 293–300. [Google Scholar] [CrossRef]

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations (ICLR ’15), San Diego, CA, USA, 7–9 May 2015; pp. 1–15. [Google Scholar]

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An imperative style, high-performance deep learning library. In Proceedings of the 33rd Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 8026–8037. [Google Scholar]

- Zhou, Z.H. Model Selection and Evaluation. In Machine Learning; Springer: Singapore, 2021; pp. 25–55. [Google Scholar] [CrossRef]

- Zhong, H.; Zhang, Z.; Liu, Z.; Sun, M. Open Chinese Language Pre-trained Model Zoo. Technical Report. 2019. Available online: https://github.com/thunlp/openclap (accessed on 2 March 2023).

- Van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605. [Google Scholar]

- Tsarapatsanis, D.; Aletras, N. On the Ethical Limits of Natural Language Processing on Legal Text. In Proceedings of the Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021, Online, 1–6 August 2021; pp. 3590–3599. [Google Scholar]

- Leins, K.; Lau, J.H.; Baldwin, T. Give Me Convenience and Give Her Death: Who Should Decide What Uses of NLP are Appropriate, and on What Basis? In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 2908–2913. [Google Scholar]

| Notation | Description |

|---|---|

| | a word sequence of the factual description of the case |

| | the set of applicable article labels |

| | the set of charge labels |

| | the set of term-of-penalty labels |

| | the dictionary of crime keywords |

| Dataset | CAIL-Small | CAIL-Big |

|---|---|---|

| #Training Set Cases | 108,619 | 1,593,982 |

| #Test Set Cases | 26,120 | 185,721 |

| #Validation Set Cases | 13,738 | - |

| #Law Articles | 99 | 118 |

| #Charges | 115 | 129 |

| #Term of Penalty | 11 | 11 |

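| Methods | Law Articles ACC. (%) | Law Articles MP (%) | Law Articles MR (%) | Law Articles MF1 (%) | Charges ACC. (%) | Charges MP (%) | Charges MR (%) | Charges MF1 (%) | Term of Penalty ACC. (%) | Term of Penalty MP (%) | Term of Penalty MR (%) | Term of Penalty MF1 (%) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|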

| SVM+word2vec | 84.17 | 80.74 | 75.96 | 77.09 | 83.37 | 80.78 | 77.30 | 78.25 | 33.00 | 25.56 | 25.11 | 22.50 |

| FLA | 85.63 | 83.46 | 73.83 | 74.92 | 84.72 | 83.71 | 73.75 | 75.04 | 35.04 | 33.91 | 27.14 | 24.79 |

| TopJudge | 87.28 | 85.81 | 76.25 | 78.24 | 86.48 | 84.23 | 78.39 | 80.15 | 38.43 | 35.67 | 32.15 | 31.31 |

| Few-Shot | 88.44 | 86.76 | 77.93 | 79.51 | 88.15 | 87.51 | 80.57 | 81.98 | 39.62 | 37.13 | 30.93 | 31.61 |

| LADAN | 88.78 | 85.15 | 79.45 | 80.97 | 88.28 | 86.36 | 80.54 | 82.11 | 38.13 | 34.04 | 31.22 | 30.20 |

| NeurJudge+ | 90.37 | 87.22 | 85.82 | 86.13 | 89.92 | 87.76 | 86.75 | 86.96 | 41.65 | 40.44 | 37.20 | 37.27 |

| R-former | 92.55 | 89.99 | 88.18 | 88.62 | 92.87 | 91.07 | 90.92 | 90.88 | 42.94 | 41.15 | 38.97 | 38.82 |

| CMLEA | 93.19 | 89.96 | 89.37 | 89.58 | 93.40 | 91.59 | 91.99 | 91.71 | 43.45 | 42.23 | 40.11 | 40.73 |
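The tables report accuracy (ACC.) together with MP, MR, and MF1, which we read as macro-averaged precision, recall, and F1. Under the usual macro averaging, these scores are computed per class and then averaged, with MF1 taken as the mean of per-class F1 scores.

The sketch below makes that computation concrete. The definition is an assumption, since the evaluation details are not reproduced here, and `macro_scores` is a hypothetical helper. One consequence of this definition is that MF1 is generally not the harmonic mean of MP and MR. This is consistent with the table: for example, CMLEA's law-article MF1 is 89.58, whereas the harmonic mean of its MP and MR is about 89.66.

```python
from typing import Dict, Sequence


def macro_scores(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision, recall, and F1.

    Assumption: the classes are the union of labels in y_true and y_pred,
    and MF1 is the unweighted mean of per-class F1 scores.
    """
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    precisions, recalls, f1s = [], [], []
    for c in labels:
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(2 * prec * rec / (prec + rec) if prec + rec else 0.0)
    n = len(labels)
    return {
        "ACC": sum(1 for t, p in pairs if t == p) / len(pairs),
        "MP": sum(precisions) / n,
        "MR": sum(recalls) / n,
        "MF1": sum(f1s) / n,
    }


scores = macro_scores([0, 0, 0, 1, 1, 2], [0, 0, 1, 1, 2, 2])
print({k: round(v, 4) for k, v in scores.items()})
# prints {'ACC': 0.6667, 'MP': 0.6667, 'MR': 0.7222, 'MF1': 0.6556}
```

In this toy example, the harmonic mean of MP and MR would be about 0.693, noticeably above the macro-F1 of 0.656.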

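| Methods | Law Articles ACC. (%) | Law Articles MP (%) | Law Articles MR (%) | Law Articles MF1 (%) | Charges ACC. (%) | Charges MP (%) | Charges MR (%) | Charges MF1 (%) | Term of Penalty ACC. (%) | Term of Penalty MP (%) | Term of Penalty MR (%) | Term of Penalty MF1 (%) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|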

| SVM+word2vec | 92.62 | 77.92 | 61.03 | 64.29 | 92.09 | 82.26 | 65.28 | 69.06 | 46.73 | 28.98 | 20.92 | 20.91 |

| FLA | 93.51 | 74.94 | 70.40 | 70.70 | 93.01 | 76.56 | 72.75 | 72.94 | 54.29 | 38.39 | 29.34 | 30.85 |

| TopJudge | 93.24 | 74.24 | 71.19 | 70.40 | 93.19 | 79.44 | 75.52 | 75.50 | 53.52 | 44.58 | 30.41 | 30.61 |

| Few-Shot | 93.74 | 78.51 | 73.79 | 74.18 | 93.24 | 80.59 | 76.62 | 76.89 | 54.54 | 39.09 | 33.36 | 33.48 |

| LADAN | 93.27 | 75.10 | 72.04 | 71.26 | 93.26 | 81.21 | 77.65 | 77.60 | 53.62 | 41.52 | 37.53 | 36.06 |

| NeurJudge+ | 95.58 | 82.01 | 77.05 | 78.05 | 95.57 | 85.57 | 78.81 | 80.54 | 57.07 | 47.65 | 40.01 | 41.18 |

| R-former | 97.02 | 86.40 | 81.87 | 82.64 | 97.08 | 90.67 | 86.90 | 87.57 | 59.78 | 48.87 | 45.55 | 45.81 |

| CMLEA | 97.39 | 89.04 | 84.62 | 86.10 | 97.46 | 92.14 | 88.86 | 89.99 | 60.65 | 50.66 | 46.40 | 47.44 |

| Methods | Law Articles ACC. (%) | Law Articles MF1 (%) | Charges ACC. (%) | Charges MF1 (%) | Term of Penalty ACC. (%) | Term of Penalty MF1 (%) |

|---|---|---|---|---|---|---|

| BERT | 90.81 | 86.06 | 90.68 | 87.69 | 40.37 | 34.09 |

| BERT-Crime | 91.30 | 85.70 | 91.26 | 87.81 | 40.90 | 34.65 |

| R-former | 92.55 | 88.62 | 92.87 | 90.88 | 42.94 | 38.82 |

| NeurJudge+ | 92.64 | 88.75 | 92.91 | 90.89 | 43.81 | 39.76 |

| CMLEA | 93.19 | 89.58 | 93.40 | 91.71 | 43.45 | 40.73 |

| Factual Description of the Case | Without Consistency Constraint | With Consistency Constraint |

|---|---|---|

| After the death of her husband, an employee of the Boca Chemical Plant, Defendant Chen invited more than 20 relatives and neighbors to place wreaths at the plant to obstruct production, which seriously affected the normal production and operation of the Boca Chemical Plant. | 290 ✓; Crime of sabotaging production and operation ✗; More than nine months in prison ✓ | 290 ✓; Crime of gathering crowds to disturb social order ✓; More than nine months in prison ✓ |

| Defendant Lin organized Fang to petition the State Letters and Calls Bureau, causing a large number of petitioners to gather for a long time on the sidewalk and bicycle lane opposite the bureau's reception desk, which seriously disrupted social order. | 290 ✓; Crime of picking quarrels and provoking trouble ✗; More than one year in prison ✗ | 290 ✓; Crime of gathering crowds to disturb social order ✓; More than three years in prison ✓ |
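In both case-study examples, the model without the constraint predicts the correct article (290) but pairs it with a charge that the article does not cover.

The sketch below is a simple diagnostic for this kind of mismatch. It measures how often predicted (article, charge) pairs appear together in a compatibility table. This check is an illustration only, not the paper's LE-based constraint, and it rests on two assumptions:
- the table here is built solely from the pair shown in the case study, whereas in practice it would presumably be collected from training labels;
- the helpers `build_compatibility` and `consistency_rate` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def build_compatibility(pairs: Iterable[Tuple[int, str]]) -> Dict[int, Set[str]]:
    """Collect which charges co-occur with each law article in labelled data."""
    table: Dict[int, Set[str]] = defaultdict(set)
    for article, charge in pairs:
        table[article].add(charge)
    return dict(table)


def consistency_rate(predictions: List[Tuple[int, str]],
                     table: Dict[int, Set[str]]) -> float:
    """Fraction of predicted (article, charge) pairs that the table allows."""
    ok = sum(1 for article, charge in predictions if charge in table.get(article, set()))
    return ok / len(predictions)


# Illustrative labelled pair taken from the case study (hypothetical training data).
table = build_compatibility([(290, "Crime of gathering crowds to disturb social order")])

without_constraint = [(290, "Crime of sabotaging production and operation"),
                      (290, "Crime of picking quarrels and provoking trouble")]
with_constraint = [(290, "Crime of gathering crowds to disturb social order"),
                   (290, "Crime of gathering crowds to disturb social order")]
print(consistency_rate(without_constraint, table), consistency_rate(with_constraint, table))
# prints 0.0 1.0
```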

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, J.; Zhang, X.; Zhou, X.; Han, Y.; Zhou, Q. An Approach Based on Cross-Attention Mechanism and Label-Enhancement Algorithm for Legal Judgment Prediction. Mathematics 2023, 11, 2032. https://doi.org/10.3390/math11092032
