Abstract

In this paper, we propose two novel inertial forward–backward splitting methods for solving the constrained convex minimization of the sum of two convex functions in Hilbert spaces, and we analyze their convergence behavior under suitable conditions. The first method (iFBS) is based on the forward–backward operator. Its step size depends on the Lipschitz constant of the gradient of the differentiable part, and a weak convergence result is established under suitable conditions by using a fixed point approach. The second method (iFBS-L) modifies the step size of the first method so that it no longer depends on the Lipschitz constant, by using a line search technique introduced by Cruz and Nghia. As an application, we compare the efficiency of the proposed methods with that of the inertial three-operator splitting (iTOS) method by using them to solve the constrained image inpainting problem with nuclear norm regularization. Moreover, we apply our methods to image restoration problems based on the least absolute shrinkage and selection operator (LASSO) model, and the results are compared with those of the forward–backward splitting method with line search (FBS-L) and the fast iterative shrinkage-thresholding algorithm (FISTA).

Keywords:

constrained convex minimization problems; forward–backward splitting method; fixed points; image inpainting; image restoration

MSC:

47H10; 47J25; 90C30; 65K05

1. Introduction and Preliminaries

In this study, $\mathbb{N}$ and $\mathbb{R}$ denote the set of all positive integers and the set of all real numbers, respectively. Let $H$ be a real Hilbert space and $I$ be the identity operator on $H$. The symbols → and ⇀ denote strong convergence and weak convergence, respectively.

The forward–backward splitting method is a popular method for solving the following convex minimization problem:

$$\min_{x\in H}\ \{f(x) + g(x)\}, \tag{1}$$

where $g: H\to\mathbb{R}\cup\{+\infty\}$ is a convex proper lower semi-continuous function, $f: H\to\mathbb{R}$ is a convex proper lower semi-continuous and differentiable function, and $\nabla f$ is Lipschitz continuous with constant $L$.

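In 2005, Combettes and Wajs [1] proposed a relaxed forward–backward splitting method as follows:

$$x_{n+1} = x_n + \beta_n\big(\operatorname{prox}_{\alpha_n g}(x_n - \alpha_n\nabla f(x_n)) - x_n\big),$$

where $\varepsilon\in(0,\min\{1, 1/L\})$, $\alpha_n\in[\varepsilon, 2/L - \varepsilon]$, and $\beta_n\in[\varepsilon, 1]$.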
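To make the relaxed iteration concrete, here is a minimal Python sketch for the special case $f(x) = \tfrac12\|Ax-b\|_2^2$ and $g = \lambda\|\cdot\|_1$, whose proximal operator is componentwise soft-thresholding. The test problem, the constant step $\alpha_n = 1/L$, the constant relaxation $\beta_n = \beta$, and the function names are illustrative assumptions, not part of [1].

```python
import numpy as np


def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1 (componentwise soft-thresholding)."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def relaxed_fbs(A, b, lam, beta=1.0, n_iter=500):
    """Relaxed forward-backward splitting for min 0.5*||Ax - b||^2 + lam*||x||_1.

    Assumption: constant step alpha = 1/L (inside [eps, 2/L - eps] for small eps)
    and constant relaxation beta in (0, 1].
    """
    L = np.linalg.norm(A, 2) ** 2            # Lipschitz constant of grad f(x) = A^T (Ax - b)
    alpha = 1.0 / L
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ x - b)                            # forward (gradient) step
        p = soft_threshold(x - alpha * grad, alpha * lam)   # backward (proximal) step
        x = x + beta * (p - x)                              # relaxation
    return x
```

With $A = I$, $b = (3, -0.5, 1)$, and $\lambda = 1$, the minimizer is the soft-thresholded vector $(2, 0, 0)$, which the iteration recovers for any $\beta\in(0,1]$.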

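In 2016, Cruz and Nghia [2] presented a technique for selecting the step size independently of the Lipschitz constant of $\nabla f$ by means of a line search process (Algorithm 1). We denote by $\text{Linesearch}(x,\sigma,\theta,\delta)$ the output of Algorithm 1 with respect to the functions $f$ and $g$ at $x$. The forward–backward splitting method with line search, FBS-L, in which the step size is generated by Algorithm 1, is as follows:

$$x_{n+1} = \operatorname{prox}_{\gamma_n g}\big(x_n - \gamma_n\nabla f(x_n)\big),$$

where $x_1\in H$, $\sigma > 0$, $\theta\in(0,1)$, $\delta\in(0,1/2)$, and $\gamma_n := \text{Linesearch}(x_n,\sigma,\theta,\delta)$.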

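Algorithm 1.

Linesearch$(x,\sigma,\theta,\delta)$: let $x\in\operatorname{dom}g$, $\sigma > 0$, $\theta\in(0,1)$, and $\delta\in(0,1/2)$.

- Input: set $\alpha = \sigma$.
- While $\alpha\|\nabla f(\operatorname{prox}_{\alpha g}(x - \alpha\nabla f(x))) - \nabla f(x)\| > \delta\|\operatorname{prox}_{\alpha g}(x - \alpha\nabla f(x)) - x\|$, do $\alpha = \theta\alpha$.
- End.
- Output: $\alpha$.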
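A possible Python rendering of Algorithm 1 and of the FBS-L iteration is sketched below. It assumes the caller supplies $\nabla f$ as `grad_f(x)` and the map $(v,\alpha)\mapsto\operatorname{prox}_{\alpha g}(v)$ as `prox_g(v, alpha)`; these callables and the defaults $\sigma = 1$, $\theta = 0.5$, $\delta = 0.4$ are hypothetical choices for illustration, not values taken from [2].

```python
import numpy as np


def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def linesearch(x, grad_f, prox_g, sigma=1.0, theta=0.5, delta=0.4):
    """Step-size search in the spirit of Algorithm 1: start from alpha = sigma and
    multiply by theta until
        alpha * ||grad_f(p) - grad_f(x)|| <= delta * ||p - x||,
    where p = prox_{alpha g}(x - alpha * grad_f(x)).
    """
    gx = grad_f(x)
    alpha = sigma
    while True:
        p = prox_g(x - alpha * gx, alpha)
        if alpha * np.linalg.norm(grad_f(p) - gx) <= delta * np.linalg.norm(p - x):
            return alpha
        alpha *= theta


def fbs_l(grad_f, prox_g, x1, n_iter=200, **ls_params):
    """FBS-L: x_{n+1} = prox_{gamma_n g}(x_n - gamma_n grad_f(x_n)), gamma_n from linesearch."""
    x = x1
    for _ in range(n_iter):
        gamma = linesearch(x, grad_f, prox_g, **ls_params)
        x = prox_g(x - gamma * grad_f(x), gamma)
    return x
```

Because the loop only shrinks $\alpha$ by the factor $\theta$, when $\nabla f$ is $L$-Lipschitz it stops no later than the first trial value with $\alpha\le\delta/L$, so the value of $L$ never has to be supplied.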

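Inertial techniques are applied in order to speed up the forward–backward splitting method. Various inertial techniques and fixed point methods have been studied; see, for example, [3,4,5,6,7,8]. Moudafi and Oliny [9] introduced an inertial forward–backward splitting method as follows:

$$y_n = x_n + \theta_n(x_n - x_{n-1}),\qquad x_{n+1} = \operatorname{prox}_{c_n g}\big(y_n - c_n\nabla f(x_n)\big),$$

where $c_n\in(0, 2/L)$. The inertial parameter $\theta_n$ controls the momentum $x_n - x_{n-1}$. In 2009, Beck and Teboulle [3] proposed a fast iterative shrinkage-thresholding algorithm (FISTA) for solving the convex minimization problem, Problem (1):

$$y_n = \operatorname{prox}_{\frac1L g}\Big(x_n - \frac1L\nabla f(x_n)\Big),\qquad x_{n+1} = y_n + \theta_n(y_n - y_{n-1}),$$

where $x_1 = y_0\in H$ and the inertial parameters are generated by

$$t_{n+1} = \frac{1 + \sqrt{1 + 4t_n^2}}{2},\qquad \theta_n = \frac{t_n - 1}{t_{n+1}},$$

with $t_1 = 1$.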
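The FISTA recursion can be sketched in Python for the special case $f(x) = \tfrac12\|Ax-b\|_2^2$, $g = \lambda\|\cdot\|_1$ (an illustrative assumption; the names are hypothetical). Unlike the Moudafi–Oliny scheme, the gradient here is evaluated at the extrapolated point $x_n$, and the extrapolation uses $\theta_n = (t_n-1)/t_{n+1}$.

```python
import numpy as np


def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def fista(A, b, lam, n_iter=300):
    """FISTA sketch for min 0.5*||Ax - b||^2 + lam*||x||_1 with constant step 1/L."""
    L = np.linalg.norm(A, 2) ** 2            # Lipschitz constant of grad f
    x = np.zeros(A.shape[1])                 # x_1
    y_prev = x.copy()                        # y_0 = x_1
    t = 1.0                                  # t_1 = 1
    for _ in range(n_iter):
        y = soft_threshold(x - A.T @ (A @ x - b) / L, lam / L)   # y_n = prox_{g/L}(x_n - grad f(x_n)/L)
        t_next = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
        theta = (t - 1.0) / t_next                                # inertial parameter theta_n
        x = y + theta * (y - y_prev)                              # x_{n+1}
        y_prev, t = y, t_next
    return y_prev
```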

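Recently, Cui et al. [10] proposed an inertial three-operator splitting (iTOS) method for solving the following more general convex minimization problem:

$$\min_{x\in H}\ \{f(x) + g(x) + h(x)\},$$

where $g$ and $h$ are convex proper lower semi-continuous functions, $f$ is a convex proper lower semi-continuous and differentiable function, and $\nabla f$ is Lipschitz continuous with constant $L$. The iTOS method is defined as follows:

$$\begin{aligned} z_n &= x_n + \alpha_n(x_n - x_{n-1}),\\ y_n &= \operatorname{prox}_{\gamma g}(z_n),\\ u_n &= \operatorname{prox}_{\gamma h}\big(2y_n - z_n - \gamma\nabla f(y_n)\big),\\ x_{n+1} &= z_n + \lambda_n(u_n - y_n), \end{aligned} \tag{3}$$

where the inertial sequence $\{\alpha_n\}$ is non-decreasing, and the step size $\gamma$ and the relaxation parameters $\lambda_n$ satisfy the conditions given in [10].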
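The following Python sketch shows an iteration of the iTOS type: an inertial extrapolation followed by a Davis–Yin three-operator step. The constant parameters $\alpha_n = \alpha$, $\lambda_n = \lambda$, the fixed step $\gamma$, and the function names are simplifying assumptions; they do not reproduce the parameter conditions of [10].

```python
import numpy as np


def itos_sketch(grad_f, prox_g, prox_h, x0, gamma, lam=1.0, alpha=0.1, n_iter=2000):
    """Inertial Davis-Yin-type iteration for min f + g + h (illustrative sketch).

    Assumptions: constant inertial parameter alpha, constant relaxation lam,
    and a fixed step gamma in (0, 2/L); the parameter rules of [10] are not reproduced.
    """
    x_prev, x = x0.copy(), x0.copy()
    for _ in range(n_iter):
        z = x + alpha * (x - x_prev)                         # inertial extrapolation
        y = prox_g(z, gamma)                                 # backward step on g
        u = prox_h(2 * y - z - gamma * grad_f(y), gamma)     # forward step on f, backward step on h
        x_prev, x = x, z + lam * (u - y)                     # relaxed update
    return prox_g(x, gamma)                                  # approximate minimizer
```

For $f = \tfrac12\|x-b\|^2$ with $b = (3, -0.5, 0.4)$, $g$ the indicator of $[0,1]^3$, and $h = 0.2\|\cdot\|_1$, the problem is separable and its minimizer is $(1, 0, 0.2)$, which this sketch recovers.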

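They proved the convergence of the iTOS method and applied it to the following constrained image inpainting problem:

$$\min_{x\in C}\ \Big\{\mu\|x\|_* + \frac12\|P_\Omega(x) - P_\Omega(u)\|_F^2\Big\}, \tag{4}$$

where $u\in\mathbb{R}^{m\times n}$ is the matrix of known entries, $P_\Omega$ is the linear map that selects a subset of the entries of an $m\times n$ matrix by setting each unknown entry in the matrix to $0$, and $C$ is a nonempty closed convex subset of $\mathbb{R}^{m\times n}$. Here, $\mu > 0$ is the regularization parameter, $\|\cdot\|_*$ is the nuclear matrix norm, and $\|\cdot\|_F$ is the Frobenius matrix norm. The constrained image inpainting problem, Problem (4), is equivalent to the following unconstrained image inpainting problem:

$$\min_{x\in\mathbb{R}^{m\times n}}\ \Big\{\mu\|x\|_* + \frac12\|P_\Omega(x) - P_\Omega(u)\|_F^2 + i_C(x)\Big\}, \tag{5}$$

where $i_C$ is the indicator function of $C$, that is, $i_C(x) = 0$ if $x\in C$ and $i_C(x) = +\infty$ otherwise.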

In this paper, we introduce two novel inertial forward–backward splitting methods for solving the following constrained convex minimization problem:

where and are convex proper lower semi-continuous functions. Note that $x^*$ is a solution to Problem (6) if it is a common minimizer of the following convex minimization problems:

Then, we prove the weak convergence of the proposed methods by using a fixed point method and a line search technique, respectively. Moreover, we apply our methods to solve the constrained image inpainting problem, Problem (4), and compare the performance of our methods with that of the iTOS method (3).

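We now recall the basic definitions and lemmas needed in this paper. Let $g: H\to\mathbb{R}\cup\{+\infty\}$ be a convex proper lower semi-continuous function. The proximal operator [11,12] of $g$, denoted by $\operatorname{prox}_g$, is defined as follows: for each $x\in H$, $\operatorname{prox}_g x$ is the unique solution of the minimization problem

$$\min_{y\in H}\ \Big\{g(y) + \frac12\|x - y\|^2\Big\}.$$

For $c > 0$, the proximity operator of $cg$ from $H$ to $H$ can be written equivalently as

$$\operatorname{prox}_{cg} = (I + c\,\partial g)^{-1},$$

where $\partial g$ is the subdifferential of $g$, defined by

$$\partial g(x) := \{u\in H : g(x) + \langle u, y - x\rangle \le g(y)\ \text{ for all } y\in H\}.$$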
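As a sanity check of the definition of the proximal operator, the Python sketch below compares the closed form of $\operatorname{prox}_{c|\cdot|}$ on $\mathbb{R}$ (soft-thresholding) with a brute-force minimization of $c|y| + \tfrac12(x-y)^2$ over a fine grid. The grid search is only a checking device chosen for this illustration, not a practical way to evaluate proximal operators, and the function names are hypothetical.

```python
import numpy as np


def prox_abs(x, c):
    """Closed form of prox_{c|.|}(x) = argmin_y { c|y| + 0.5 (x - y)^2 } (soft-thresholding)."""
    return np.sign(x) * max(abs(x) - c, 0.0)


def prox_by_grid(g, x, grid):
    """Approximate prox_g(x) by minimizing g(y) + 0.5 (x - y)^2 over a 1-D grid (checking only)."""
    values = np.array([g(y) + 0.5 * (x - y) ** 2 for y in grid])
    return grid[np.argmin(values)]
```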

We note that $\operatorname{prox}_{i_C} = P_C$ when $C$ is a nonempty closed convex subset of $H$, $i_C$ is the indicator function of $C$, and $P_C$ is the orthogonal projection onto $C$. The following useful fact is also needed:

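If $f$ is differentiable on $H$, then the solutions of the convex minimization problem, Problem (1), are exactly the fixed points of the forward–backward operator, i.e.,

$$x^*\in\operatorname{Argmin}(f+g) \iff 0\in\nabla f(x^*) + \partial g(x^*) \iff x^* = \operatorname{prox}_{cg}(I - c\nabla f)(x^*), \tag{10}$$

where $c > 0$. Note that the subdifferential operator $\partial g$ is maximal monotone [13], and the operator $\operatorname{prox}_{cg}(I - c\nabla f)$ is a nonexpansive mapping when $c\in(0, 2/L)$.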
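The nonexpansiveness of the forward–backward operator and the fixed-point characterization of minimizers can be checked numerically. The Python sketch below assumes $f(x) = \tfrac12\|Ax-b\|_2^2$ and $g = \lambda\|\cdot\|_1$ (so $L = \|A\|_2^2$) and uses the step $c = 1.9/L\in(0, 2/L)$; the helper names are hypothetical.

```python
import numpy as np


def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def fb_operator(A, b, lam, c):
    """Forward-backward operator T = prox_{c g}(I - c grad f), f = 0.5||Ax - b||^2, g = lam||.||_1."""
    def T(x):
        return soft_threshold(x - c * (A.T @ (A @ x - b)), c * lam)
    return T


def max_expansion(T, dim, trials=200, seed=0):
    """Largest observed ratio ||Tx - Ty|| / ||x - y|| over random pairs (<= 1 if T is nonexpansive)."""
    rng = np.random.default_rng(seed)
    worst = 0.0
    for _ in range(trials):
        x, y = rng.standard_normal(dim), rng.standard_normal(dim)
        worst = max(worst, np.linalg.norm(T(x) - T(y)) / np.linalg.norm(x - y))
    return worst
```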

We consider the following classes of Lipschitz continuous and nonexpansive mappings. A mapping $S: H\to H$ is said to be Lipschitz continuous if there exists $L > 0$ such that

$$\|Sx - Sy\| \le L\|x - y\|\quad\text{for all } x, y\in H.$$

The mapping $S$ is said to be nonexpansive if $S$ is 1-Lipschitz continuous. If $x\in H$ satisfies $x = Sx$, then $x$ is said to be a fixed point of $S$. The set of all fixed points of $S$ is denoted by $F(S)$.

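The mapping $I - S$ is called demiclosed at zero if, for any sequence $\{x_n\}$ in $H$ such that $x_n\rightharpoonup x$ and $x_n - Sx_n\to 0$ as $n\to\infty$, we have $x\in F(S)$. It is known that if $S$ is a nonexpansive mapping, then $I - S$ is demiclosed at zero [14]. Let $\{T_n\}$ and $S$ be mappings of $H$ into itself such that $\emptyset\neq F(S)\subset\bigcap_{n=1}^{\infty}F(T_n)$. Then $\{T_n\}$ is said to satisfy NST-condition (I) with $S$ [15] if, for every bounded sequence $\{x_n\}$ in $H$,

$$\lim_{n\to\infty}\|x_n - T_nx_n\| = 0 \implies \lim_{n\to\infty}\|x_n - Sx_n\| = 0.$$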

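A sequence $\{T_n\}$ is said to satisfy condition (Z) [16,17] if, whenever $\{x_n\}$ is a bounded sequence in $H$ such that

$$\lim_{n\to\infty}\|x_n - T_nx_n\| = 0,$$

it follows that every weak cluster point of $\{x_n\}$ belongs to $\bigcap_{n=1}^{\infty}F(T_n)$. The following identity in $H$ will be used in this paper (see [11]): for any $x, y\in H$ and $t\in[0,1]$,

$$\|tx + (1-t)y\|^2 = t\|x\|^2 + (1-t)\|y\|^2 - t(1-t)\|x - y\|^2. \tag{11}$$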
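The identity above can be verified numerically on random vectors in $\mathbb{R}^5$; this Python snippet is only an illustration of the identity, not part of any proof, and `identity_gap` is a hypothetical helper name.

```python
import numpy as np


def identity_gap(x, y, t):
    """LHS minus RHS of ||t x + (1-t) y||^2 = t||x||^2 + (1-t)||y||^2 - t(1-t)||x - y||^2."""
    z = t * x + (1 - t) * y
    lhs = np.dot(z, z)
    rhs = t * np.dot(x, x) + (1 - t) * np.dot(y, y) - t * (1 - t) * np.dot(x - y, x - y)
    return lhs - rhs
```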

Lemma 1

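([4]). Let $f: H\to\mathbb{R}$ be a convex and differentiable function such that $\nabla f$ is Lipschitz continuous with constant $L$, and let $g: H\to\mathbb{R}\cup\{+\infty\}$ be a convex proper lower semi-continuous function. Let $T_n := \operatorname{prox}_{c_ng}(I - c_n\nabla f)$ and $S := \operatorname{prox}_{cg}(I - c\nabla f)$, where $c_n\to c$ with $c_n, c\in(0, 2/L)$. Then $\{T_n\}$ satisfies NST-condition (I) with $S$.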

Lemma 2

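([5]). Let $\{a_n\}$ and $\{\theta_n\}$ be sequences of nonnegative real numbers such that

$$a_{n+1} \le (1+\theta_n)a_n + \theta_n a_{n-1}.$$

Then $a_{n+1}\le K\prod_{j=1}^{n}(1+2\theta_j)$, where $K = \max\{a_1, a_2\}$. Moreover, if $\sum_{n=1}^{\infty}\theta_n < \infty$, then $\{a_n\}$ is bounded.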
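To illustrate Lemma 2, the Python sketch below builds the sequence that satisfies the recursion with equality and compares it with the bound $K\prod_{j=1}^{n}(1+2\theta_j)$. The bound reflects the estimate $a_{n+1}\le(1+2\theta_n)\max\{a_n, a_{n-1}\}$, so the running maximum grows by at most the factor $1+2\theta_n$ per step. The choice $\theta_n = 1/n^2$ (a summable sequence), the starting values, and the helper name are illustrative assumptions.

```python
import numpy as np


def lemma2_check(a1, a2, theta):
    """Build a_{n+1} = (1 + theta_n) a_n + theta_n a_{n-1} (equality case of the recursion)
    and test a_{n+1} <= K * prod_{j=1}^{n} (1 + 2 theta_j) with K = max{a_1, a_2}.

    0-based storage: theta[j - 1] holds theta_j and a[k] holds a_{k+1}.
    """
    a = [a1, a2]
    for n in range(2, len(theta) + 1):
        th = theta[n - 1]
        a.append((1 + th) * a[n - 1] + th * a[n - 2])
    K = max(a1, a2)
    bound = K * np.cumprod(1 + 2 * np.asarray(theta))   # bound[n - 1] = K * prod_{j<=n} (1 + 2 theta_j)
    ok = all(a[n] <= bound[n - 1] * (1 + 1e-12) for n in range(1, len(a)))
    return ok, a
```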

Lemma 3

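([18]). If $g: H\to\mathbb{R}\cup\{+\infty\}$ is a convex proper lower semi-continuous function, then the graph of $\partial g$, defined by $\operatorname{Gph}(\partial g) := \{(x, y)\in H\times H : y\in\partial g(x)\}$, is demiclosed, i.e., if a sequence $\{(x_n, y_n)\}\subset\operatorname{Gph}(\partial g)$ satisfies $x_n\rightharpoonup x$ and $y_n\to y$, then $(x, y)\in\operatorname{Gph}(\partial g)$.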

Lemma 4

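([19]). Let $f$ and $g$ be convex proper lower semi-continuous functions from $H$ to $\mathbb{R}\cup\{+\infty\}$, where $f$ is differentiable. Then for all $x\in\operatorname{dom}g$ and $c_2\ge c_1 > 0$, we have

$$\frac{c_2}{c_1}\big\|x - \operatorname{prox}_{c_1g}(x - c_1\nabla f(x))\big\| \ge \big\|x - \operatorname{prox}_{c_2g}(x - c_2\nabla f(x))\big\| \ge \big\|x - \operatorname{prox}_{c_1g}(x - c_1\nabla f(x))\big\|.$$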
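Lemma 4 says that the forward–backward residual $c\mapsto\|x - \operatorname{prox}_{cg}(x - c\nabla f(x))\|$ is nondecreasing in $c$, while the residual divided by $c$ is nonincreasing. The Python sketch below checks both inequalities on a random instance; the choice $f(x) = \tfrac12\|Ax-b\|_2^2$, $g = \lambda\|\cdot\|_1$, the tested step sizes, and the helper names are assumptions made for illustration.

```python
import numpy as np


def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def fb_residual(x, c, A, b, lam):
    """||x - prox_{cg}(x - c grad f(x))|| for f = 0.5||Ax - b||^2 and g = lam||.||_1."""
    p = soft_threshold(x - c * (A.T @ (A @ x - b)), c * lam)
    return np.linalg.norm(x - p)


def check_lemma4(x, A, b, lam, steps):
    """Check r(c1) <= r(c2) <= (c2/c1) r(c1) for all pairs c1 <= c2 taken from `steps`."""
    r = {c: fb_residual(x, c, A, b, lam) for c in steps}
    tol = 1e-12
    return all(r[c1] <= r[c2] + tol and r[c2] <= (c2 / c1) * r[c1] + tol
               for c1 in steps for c2 in steps if c1 <= c2)
```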

Lemma 5

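([20]). Let $\{a_n\}$ and $\{b_n\}$ be sequences of nonnegative real numbers such that $a_{n+1}\le a_n + b_n$ for all $n\in\mathbb{N}$. If $\sum_{n=1}^{\infty}b_n < \infty$, then $\lim_{n\to\infty}a_n$ exists.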

Lemma 6

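([14,21]). Let $\{x_n\}$ be a sequence in $H$ such that there exists a nonempty set $\Omega\subset H$ satisfying:

- (i) For every $x^*\in\Omega$, $\lim_{n\to\infty}\|x_n - x^*\|$ exists;

- (ii) $\omega_w(x_n)\subset\Omega$, where $\omega_w(x_n)$ is the set of all weak cluster points of $\{x_n\}$.

Then $\{x_n\}$ converges weakly to a point in $\Omega$.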

2. An Inertial Forward–Backward Splitting Method Based on Fixed Point Inertial Techniques

In this part, we propose an inertial forward–backward splitting method for finding a common minimizer of the convex minimization problems, Problem (7), by using a fixed-point inertial technique, and we prove the weak convergence of the proposed method.

First, we propose a fixed-point inertial method that approximates a common fixed point of two countable families of nonexpansive mappings. The details and assumptions of the iterative method are as follows:

- (A1)

- and are two countable families of nonexpansive mappings that satisfy condition (Z);

- (A2)

Now, we prove the first convergence theorem for Algorithm 2.

Algorithm 2.

A fixed-point inertial method.

Theorem 1.

Let be the sequence created by Algorithm 2. Suppose that the sequences and satisfy the following conditions:

- (C1)

- for some ;

- (C2)

- and

Then the following hold:

- (i)

- where and

- (ii)

- converges weakly to some element in

Proof.

(i) Let Using (13), we have

Using (14) and the nonexpansiveness of we have

Using (15) and the nonexpansiveness of and we obtain

This implies

Applying Lemma 2, we get where

(ii) We conclude that is bounded by condition (C2) and that and are also bounded. This implies Using (19) and Lemma 5, we obtain that exists. Using (12) and (13), we obtain

Using (11), (14), (15), and the nonexpansiveness of and we obtain

and

From (24) and by condition (C1), and exists, we have

From (25), we obtain

From (13) and , we have

Remark 1.

Assuming that for all the iterative Algorithm 2 becomes the following Algorithm 3:

Algorithm 3.

The inertial Picard–Mann hybrid iterative process.

Remark 2.

If and for all the inertial Picard–Mann hybrid iterative process (Algorithm 3) is reduced to Picard–Mann hybrid iterative process [22].
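For reference, the non-inertial Picard–Mann hybrid process of [22], $y_n = (1-\alpha_n)x_n + \alpha_n Tx_n$, $x_{n+1} = Ty_n$, can be sketched in Python as below, with $T$ taken to be a forward–backward operator. The constant $\alpha_n$, the test operator, and the function names are illustrative assumptions; the inertial term of Algorithm 3 is not reproduced here.

```python
import numpy as np


def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def picard_mann(T, x1, alpha=0.5, n_iter=200):
    """Picard-Mann hybrid iteration with a constant parameter alpha_n = alpha."""
    x = x1
    for _ in range(n_iter):
        y = (1 - alpha) * x + alpha * T(x)   # Mann step
        x = T(y)                             # Picard step
    return x
```

With $T = \operatorname{prox}_{cg}(I - c\nabla f)$ for $f = \tfrac12\|x-b\|^2$ and $g = \|\cdot\|_1$, the fixed point of $T$ is the minimizer $\operatorname{soft}(b, 1)$, in line with the fixed-point characterization of Problem (1).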

We are now in a position to state the inertial forward–backward splitting method and show its convergence properties. The standing assumptions used in this method are as follows:

- (D1)

- and are differentiable and convex functions;

- (D2)

- and are Lipschitz continuous with constants and respectively;

- (D3)

- and are convex proper lower semi-continuous functions;

- (D4)

Remark 3.

Let If then and S are nonexpansive mappings with Moreover, if by Lemma 1, we obtain that satisfies NST-condition (I) with S.

Now, we prove the convergence theorem of Algorithm 4.

Algorithm 4.

An inertial forward–backward splitting (iFBS) method.

Let Choose and For do end for.

Theorem 2.

Let be the sequence created by Algorithm 4. Suppose that the sequences and satisfy the following conditions:

- (C1)

- for some ;

- (C2)

- and ;

- (C3)

- such that and as .

Then the following hold:

- (i)

- where and

- (ii)

- converges weakly to some element in

Proof.

Operators are defined as follows:

By applying Remark 3 and condition (C3), we have and , two families of nonexpansive mappings, which satisfy NST-condition (I) with S and respectively. This implies that and satisfy the condition (Z). Therefore, the result is obtained directly from Theorem 1. □

Remark 4.

If , , and then Algorithm 4 reduces to the following method, Algorithm 5, for solving the minimization problem of the sum of two convex functions, Problem (1):

Algorithm 5.

An inertial Picard–Mann forward–backward splitting (iPM-FBS) method.

3. An Inertial Forward–Backward Splitting Method with Line Search

In this part, we modify the inertial forward–backward splitting method proposed in Section 2 for finding a common minimizer of the convex minimization problems, Problem (7), by using the line search technique (Algorithm 1 [2]), and we prove the weak convergence of the proposed method. The standing assumptions used throughout this part are as follows:

- (B1)

- and are convex proper lower semi-continuous functions and

- (B2)

- and are differentiable on . The gradients of and are uniformly continuous on .

Lemma 7

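([2]). Let $\{x_n\}$ be a sequence generated by

$$x_{n+1} = \operatorname{prox}_{\gamma_n g}\big(x_n - \gamma_n\nabla f(x_n)\big),$$

where $\gamma_n := \text{Linesearch}(x_n,\sigma,\theta,\delta)$. Then, for each $n\ge 1$ and $x\in\operatorname{dom}g$,

$$\|x_n - x\|^2 - \|x_{n+1} - x\|^2 \ge 2\gamma_n\big[(f+g)(x_{n+1}) - (f+g)(x)\big] + (1 - 2\delta)\|x_{n+1} - x_n\|^2.$$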

Theorem 3.

Let be the sequence created using Algorithm 6. Suppose that the sequences and satisfy the following conditions:

- (C1)

- for some ;

- (C2)

- and .

Then the sequence converges weakly to some element in

Algorithm 6.

An inertial forward–backward splitting method with line search (iFBS-L).

Proof.

We denote

Applying Lemma 7, we have for any and

Let and set in (28)–(30), we have

From Algorithm 6 and (31), we have

Using Lemma 2 and condition (C2), we have is bounded. This implies Using (19) and Lemma 5, we obtain that exists. Using (11), (28), (30)–(32), we obtain

and

Since exists, and by condition (C2), we have

Next, we show that Since is bounded, we find that is nonempty. Let i.e., there exists a subsequence of such that Since and (36), we have and

Now, we show that by considering the following two cases.

- Case 1. Suppose that the sequence does not converge to zero. Thus there exists such that Using (B2), we have

From (10), we get

- Case 2. Suppose that the sequence converges to zero. Define and

Using Lemma 4, we have

Since we have Using (B2), we have

From the definition of Algorithm 1, we have

From (10), we get

Remark 5.

If and then Algorithm 6 reduces to the following method, Algorithm 7, for solving the minimization problem of the sum of two convex functions, Problem (1):

Algorithm 7.

An inertial Picard–Mann forward–backward splitting method with line search (iPM-FBS-L).

4. Numerical Examples

The following experiments are executed in MATLAB on a PC with an Intel Core i7 processor, 16.00 GB RAM, and 64-bit Windows 11. For image quality measurements, we utilize the peak signal-to-noise ratio (PSNR) in decibels (dB), which is defined as follows:

$$\mathrm{PSNR}(x_n) = 10\log_{10}\left(\frac{255^2}{\frac{1}{M}\|x_n - u\|_2^2}\right),$$

where $M$ and $u$ are the number of image samples and the original image, respectively, and $x_n$ is the restored image at iteration $n$.
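A direct Python transcription of the PSNR formula is sketched below, assuming 8-bit intensities (peak value 255) and arrays of equal shape; $M$ is taken to be the total number of array entries, and the function name is hypothetical.

```python
import numpy as np


def psnr(restored, original, peak=255.0):
    """PSNR in dB: 10*log10(peak^2 / ((1/M) * ||restored - original||^2)), M = number of samples."""
    diff = np.asarray(restored, dtype=float) - np.asarray(original, dtype=float)
    mse = np.sum(diff ** 2) / diff.size
    if mse == 0:
        return float("inf")          # identical images
    return 10.0 * np.log10(peak ** 2 / mse)
```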

4.1. The Constrained Image Inpainting Problems

In this subsection, we treat the constrained image inpainting problem, Problem (4), as an instance of the constrained convex minimization problem, Problem (6). We analyze and illustrate the convergence behavior of the iFBS method (Algorithm 4) and the iFBS-L method (Algorithm 6) for this problem, and we compare their efficiency with that of the iTOS method [10].

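In this experiment, we consider the following constrained image inpainting problem [10]:

$$\min_{x\in C}\ \Big\{\mu\|x\|_* + \frac12\|P_\Omega(x) - P_\Omega(u)\|_F^2\Big\}, \tag{44}$$

where $u\in\mathbb{R}^{m\times n}$ is a given image. We define $P_\Omega$ by

$$\big(P_\Omega(x)\big)_{ij} = \begin{cases} x_{ij}, & (i,j)\in\Omega,\\ 0, & \text{otherwise},\end{cases}$$

where the entries $u_{ij}$, $(i,j)\in\Omega$, are observed, $\Omega$ is a subset of the index set $\{1,\dots,m\}\times\{1,\dots,n\}$ that indicates where data are available in the image domain, the remaining entries are missing, and $C$ is a nonempty closed convex subset of $\mathbb{R}^{m\times n}$.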

The constrained image inpainting problem, Problem (44), is a special case of the constrained convex minimization problem, Problem (6); that is, we can set

The data-fidelity term $\frac12\|P_\Omega(x) - P_\Omega(u)\|_F^2$ is therefore differentiable and convex, and its gradient $P_\Omega(x) - P_\Omega(u)$ is Lipschitz continuous with constant $1$. The proximity operator of $i_C$ is the orthogonal projection onto the closed convex set $C$, and the proximity operator of $\mu\|\cdot\|_*$ can be computed using the singular value decomposition (SVD); see [23]. Thus, the iFBS method (Algorithm 4) and the iFBS-L method (Algorithm 6) can be employed to solve the constrained image inpainting problem, Problem (44).
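The building blocks named above can be sketched in Python: the sampling operator $P_\Omega$ (with $\Omega$ stored as a boolean mask), the gradient of the data-fidelity term, singular value thresholding for $\operatorname{prox}_{\tau\|\cdot\|_*}$ in the spirit of [23], and a projection onto $C$. The box $C = \{x : 0\le x_{ij}\le 255\}$ is only an assumed example of a nonempty closed convex set, and all function names are hypothetical.

```python
import numpy as np


def P_omega(X, mask):
    """Sampling operator P_Omega: keep entries where mask is True, set the others to 0."""
    return np.where(mask, X, 0.0)


def grad_data(X, U, mask):
    """Gradient of 0.5*||P_Omega(X) - P_Omega(U)||_F^2, namely P_Omega(X) - P_Omega(U)."""
    return P_omega(X - U, mask)


def svt(X, tau):
    """prox of tau*||.||_*: soft-threshold the singular values of X (singular value thresholding)."""
    W, s, Vt = np.linalg.svd(X, full_matrices=False)
    return (W * np.maximum(s - tau, 0.0)) @ Vt   # scale columns of W by the shrunk singular values


def project_box(X, lo=0.0, hi=255.0):
    """Orthogonal projection onto the assumed box C = {X : lo <= X_ij <= hi}."""
    return np.clip(X, lo, hi)
```

Because $P_\Omega$ is an orthogonal projection, `grad_data` is 1-Lipschitz, matching the constant stated above.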

In this demonstration, we identify and restore the damaged area of the Wat Chedi Luang image from the default gallery, and we stop the computation when

The parameter settings suggested in Table 1 are used for the iFBS, iFBS-L, and iTOS methods. Note that the sequence can be defined by

which satisfies condition (C2).

Table 1.

Details of parameters for each method.

The PSNR value for the restored images, CPU time (second), and the number of iterations (Iter.) are listed in Table 2.

Table 2.

Performance evaluation of the restored images.

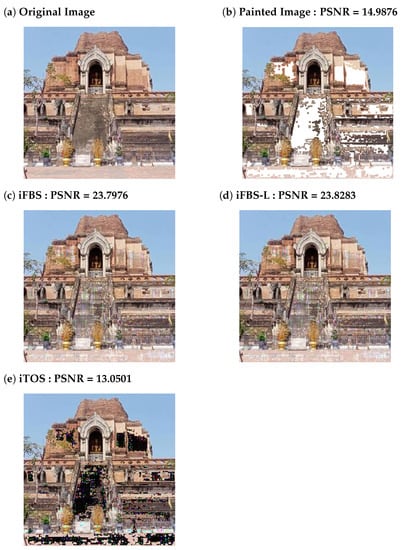

As shown in Table 2, our proposed methods outperform the inertial three-operator splitting (iTOS) method [10] in terms of both PSNR and the number of iterations. The running time of the iFBS method is shorter than those of the iFBS-L and iTOS methods. We display the original image, the painted image, and the restored images in the case of a regularization parameter of in Figure 1 as a visual demonstration.

Figure 1.

The original image, painted image, and restored images in the case of a regularization parameter of .

4.2. The Least Absolute Shrinkage and Selection Operator (LASSO)

In this subsection, we provide numerical results comparing Algorithm 5 (iPM-FBS), Algorithm 7 (iPM-FBS-L), the FISTA method [3], and the FBS-L method [2] on image restoration problems by using the LASSO model

$$\min_{x}\ \big\{\|Ax - b\|_2^2 + \lambda\|x\|_1\big\},$$

where $A = RW$, $R$ is the kernel matrix, $W$ is the 2-D fast Fourier transform, $b$ is the observed image, $\lambda > 0$ is the regularization parameter, $\|\cdot\|_1$ is the $\ell_1$-norm, and $\|\cdot\|_2$ is the Euclidean norm.
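The kernel matrix $R$ is usually applied through the FFT rather than stored explicitly. The Python sketch below assumes periodic boundary conditions (so that the blur is diagonalized by the 2-D FFT), builds the blur operator and its adjoint, and returns its squared operator norm, which determines the Lipschitz constant of the gradient of the data term. The transform $W$, the averaging kernel used in the check, and the name `make_blur` are assumptions of this illustration.

```python
import numpy as np


def make_blur(kernel, shape):
    """Return (A, At, normA2) for circular 2-D convolution with `kernel` on images of `shape`.

    Assumes periodic boundary conditions, so the blur is diagonalized by the 2-D FFT.
    """
    K = np.zeros(shape)
    kh, kw = kernel.shape
    K[:kh, :kw] = kernel
    K = np.roll(K, (-(kh // 2), -(kw // 2)), axis=(0, 1))   # move the kernel center to index (0, 0)
    K_hat = np.fft.fft2(K)                                    # eigenvalues of the blur operator

    def A(x):
        return np.real(np.fft.ifft2(K_hat * np.fft.fft2(x)))

    def At(y):
        return np.real(np.fft.ifft2(np.conj(K_hat) * np.fft.fft2(y)))   # adjoint (transpose)

    normA2 = np.max(np.abs(K_hat)) ** 2                       # ||A||^2 = largest |eigenvalue|^2
    return A, At, normA2
```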

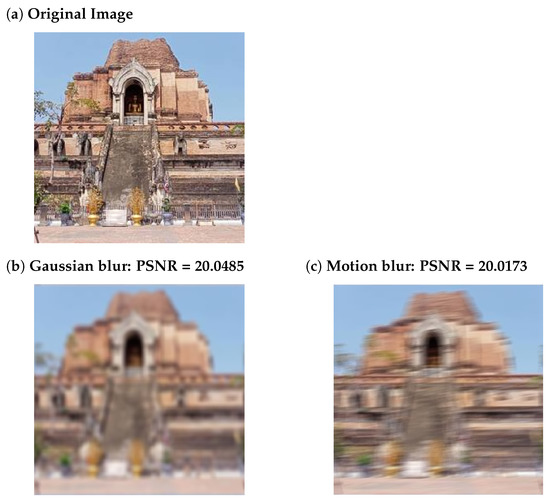

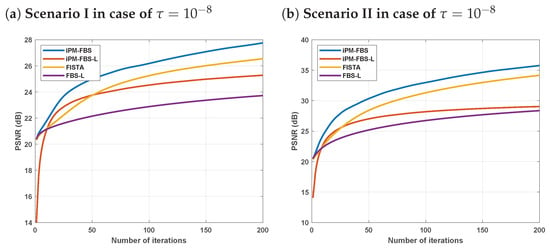

In this experiment, we use an RGB test image of size 256 × 256 pixels as the original image and evaluate the performance of the iPM-FBS, iPM-FBS-L, FISTA, and FBS-L methods in two typical image blurring scenarios, which are summarized in Table 3 (see the test images in Figure 2). The parameters of the methods are set as in Table 4.

Table 3.

Description of image blurring scenarios.

Figure 2.

The test images. (a) original image, (b) Blurred image in scenario I, and (c) Blurred image in scenario II.

Table 4.

Details of parameters for each method.

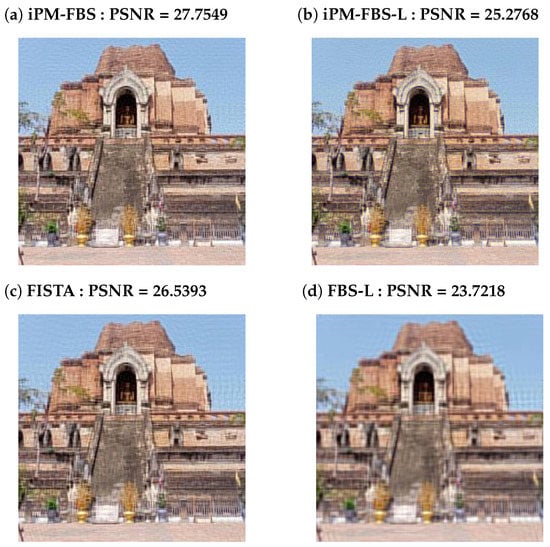

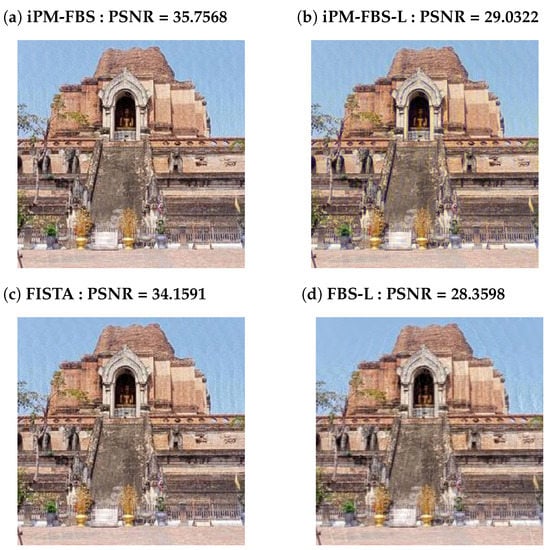

The results in Table 5 show that the iPM-FBS method produces the best PSNR values. We display the restored images in the case of a regularization parameter of in Figure 3 and Figure 4 as a visual demonstration. The PSNR graphs in Figure 5 further show that the iPM-FBS method attains higher PSNR values than the iPM-FBS-L, FISTA, and FBS-L methods.

Table 5.

The comparison of the PSNR performance of four methods at iteration.

Figure 3.

The images recovered in scenario I in the case of . (a–d) Images recovered using iPM-FBS, iPM-FBS-L, FISTA, and FBS-L, respectively.

Figure 4.

The images recovered in scenario II in the case of . (a–d) Images recovered using iPM-FBS, iPM-FBS-L, FISTA, and FBS-L, respectively.

Figure 5.

The PSNR comparisons of iPM-FBS, iPM-FBS-L, FISTA, and FBS-L methods.

5. Conclusions

This paper proposed two novel inertial forward–backward splitting methods, the iFBS method and the iFBS-L method, for finding common minimizers of convex minimization problems involving the sum of two convex functions in real Hilbert spaces. We established the weak convergence of the proposed methods by using fixed point properties of the forward–backward operators and a line search strategy, respectively. The proposed methods were applied to solve image inpainting problems. Numerical simulations showed that our methods (iFBS and iFBS-L) performed better than the inertial three-operator splitting (iTOS) method [10] in terms of both PSNR and the number of iterations. Finally, we specialized our methods to the minimization of the sum of two convex functions, obtaining the iPM-FBS and iPM-FBS-L methods, and applied them to image recovery problems. Numerical simulations showed that the iPM-FBS method performed better than the iPM-FBS-L method, the FISTA method [3], and the FBS-L method [2] in terms of PSNR.

Author Contributions

Formal analysis, writing—original draft preparation, methodology, writing—review and editing, software, A.H.; Conceptualization, Supervision, revised the manuscript, S.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research project was supported by the Science Research and Innovation Fund, Contract No. FF66-P1-011; the NSRF via the Program Management Unit for Human Resources & Institutional Development, Research and Innovation [grant number B05F640183]; and the Fundamental Fund 2023, Chiang Mai University.

Data Availability Statement

Not applicable.

Acknowledgments

This research project was supported by the Science Research and Innovation Fund, Contract No. FF66-P1-011, and the Fundamental Fund 2023, Chiang Mai University. The second author has received funding support from the NSRF via the Program Management Unit for Human Resources & Institutional Development, Research and Innovation [grant number B05F640183]. We would also like to thank Chiang Mai University and the Rajamangala University of Technology Isan for their partial financial support.

Conflicts of Interest

The authors declare that they have no competing interests.

References

- Combettes, P.L.; Wajs, V.R. Signal recovery by proximal forward–backward splitting. Multiscale Model. Simul. 2005, 4, 1168–1200.

- Cruz, J.Y.B.; Nghia, T.T.A. On the convergence of the forward–backward splitting method with linesearches. Optim. Methods Softw. 2016, 31, 1209–1238.

- Beck, A.; Teboulle, M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2009, 2, 183–202.

- Bussaban, L.; Suantai, S.; Kaewkhao, A. A parallel inertial S-iteration forward–backward algorithm for regression and classification problems. Carpathian J. Math. 2020, 36, 35–44.

- Hanjing, A.; Suantai, S. A fast image restoration algorithm based on a fixed point and optimization. Mathematics 2020, 8, 378.

- Saluja, G.S. Some common fixed point theorems on S-metric spaces using simulation function. J. Adv. Math. Stud. 2022, 15, 288–302.

- Suantai, S.; Kankam, K.; Cholamjiak, P. A novel forward–backward algorithm for solving convex minimization problem in Hilbert spaces. Mathematics 2020, 8, 42.

- Zhao, X.P.; Yao, J.C.; Yao, Y. A nonmonotone gradient method for constrained multiobjective optimization problems. J. Nonlinear Var. Anal. 2022, 6, 693–706.

- Moudafi, A.; Oliny, M. Convergence of a splitting inertial proximal method for monotone operators. J. Comput. Appl. Math. 2003, 155, 447–454.

- Cui, F.; Tang, Y.; Yang, Y. An inertial three-operator splitting algorithm with applications to image inpainting. arXiv 2019, arXiv:1904.11684.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces; Springer: New York, NY, USA, 2011.

- Moreau, J.J. Proximité et dualité dans un espace hilbertien. Bull. Société Mathématique Fr. 1965, 93, 273–299.

- Burachik, R.S.; Iusem, A.N. Set-Valued Mappings and Enlargements of Monotone Operators; Springer Science & Business Media: New York, NY, USA, 2007.

- Opial, Z. Weak convergence of the sequence of successive approximations for nonexpansive mappings. Bull. Am. Math. Soc. 1967, 73, 591–597.

- Nakajo, K.; Shimoji, K.; Takahashi, W. On strong convergence by the hybrid method for families of mappings in Hilbert spaces. Nonlinear Anal. Theory Methods Appl. 2009, 71, 112–119.

- Aoyama, K.; Kimura, Y. Strong convergence theorems for strongly nonexpansive sequences. Appl. Math. Comput. 2011, 217, 7537–7545.

- Aoyama, K.; Kohsaka, F.; Takahashi, W. Strong convergence theorems by shrinking and hybrid projection methods for relatively nonexpansive mappings in Banach spaces. In Proceedings of the 5th International Conference on Nonlinear Analysis and Convex Analysis; Yokohama Publishers: Yokohama, Japan, 2009; pp. 7–26.

- Rockafellar, R.T. On the maximal monotonicity of subdifferential mappings. Pac. J. Math. 1970, 33, 209–216.

- Huang, Y.; Dong, Y. New properties of forward–backward splitting and a practical proximal-descent algorithm. Appl. Math. Comput. 2014, 237, 60–68.

- Tan, K.; Xu, H.K. Approximating fixed points of nonexpansive mappings by the Ishikawa iteration process. J. Math. Anal. Appl. 1993, 178, 301–308.

- Moudafi, A.; Al-Shemas, E. Simultaneous iterative methods for split equality problem. Trans. Math. Prog. Appl. 2013, 1, 1–11.

- Khan, S.H. A Picard–Mann hybrid iterative process. Fixed Point Theory Appl. 2013, 2013, 69.

- Cai, J.F.; Candes, E.J.; Shen, Z. A singular value thresholding algorithm for matrix completion. SIAM J. Optim. 2010, 20, 1956–1982.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).