Attention and Pixel Matching in RGB-T Object Tracking

Abstract

1. Introduction

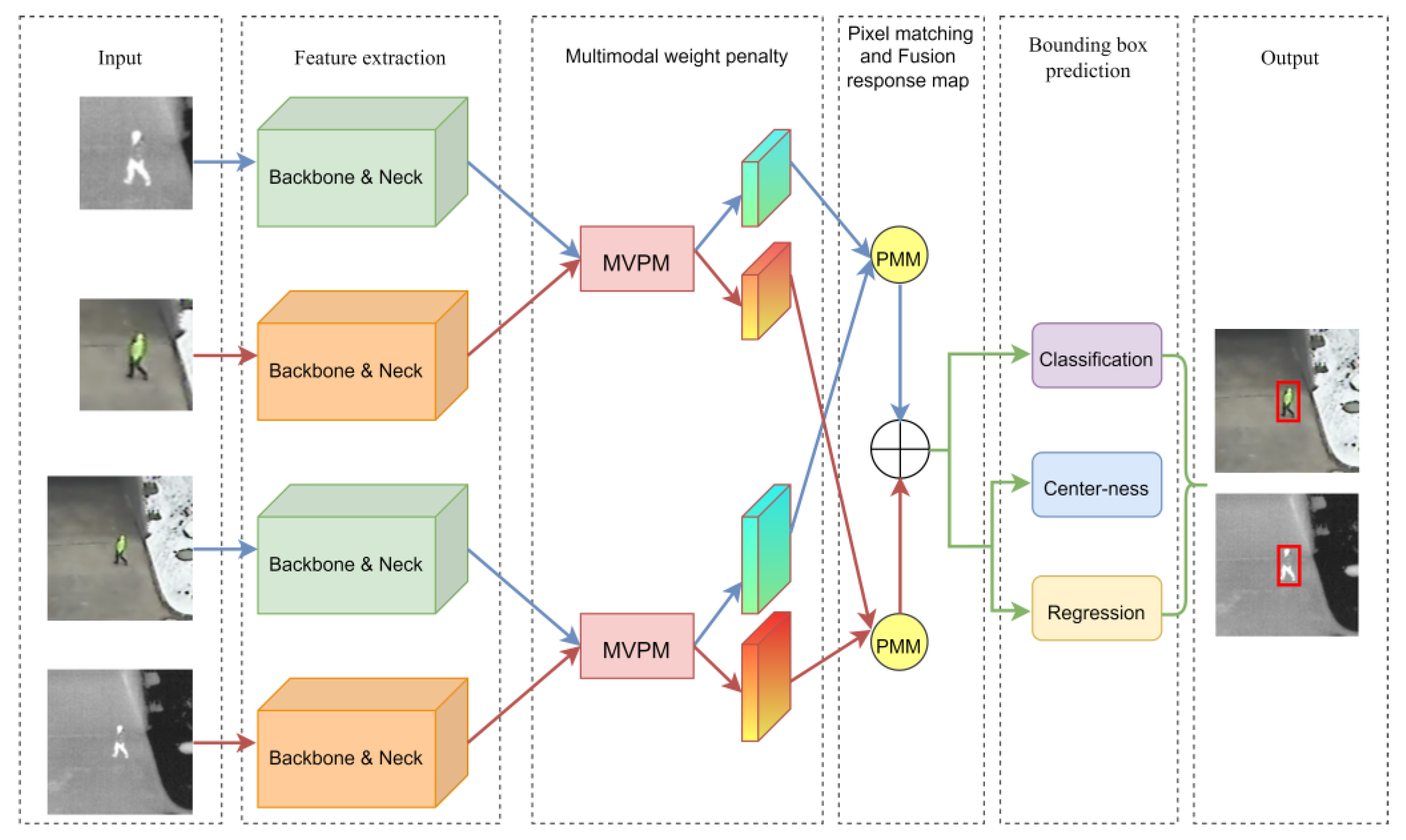

- A multi-modal weight penalty module (MWPM) is proposed to exploit the complementary strengths of the RGB and thermal features and to cope with challenging illumination conditions.

- A pixel-matching module (PMM) and an improved anchor-free position prediction network are proposed to suppress the influence of cluttered backgrounds on localization and to locate the object quickly and accurately during tracking.

- A new end-to-end RGB-T tracker based on a Siamese network is proposed that is both robust and capable of real-time tracking. Experimental results on two standard datasets, GTOT and RGBT234, show that the new tracker is effective.
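
To make the pixel-matching idea in the second contribution more concrete, the following NumPy sketch computes a pixel-wise correlation between template features and search-region features, which is one common way to realize pixel-level matching in Siamese trackers. It is an illustration under stated assumptions, not the module proposed here: the function name `pixel_match`, the cosine normalization, and the feature shapes are hypothetical choices, and random arrays stand in for backbone features.

```python
import numpy as np


def pixel_match(template, search):
    """Pixel-wise correlation (hypothetical helper, not the paper's PMM).

    template: array of shape (C, Hz, Wz), features of the target exemplar
    search:   array of shape (C, Hx, Wx), features of the search region
    returns:  array of shape (Hz*Wz, Hx, Wx); map k holds the cosine
              similarity between template pixel k and every search pixel
    """
    c, hz, wz = template.shape
    c_s, hx, wx = search.shape
    if c != c_s:
        raise ValueError("template and search must share the channel size")

    t = template.reshape(c, hz * wz)  # (C, Nz): one column per template pixel
    s = search.reshape(c, hx * wx)    # (C, Nx): one column per search pixel

    # Assumption: normalize each pixel's channel vector so the dot product
    # becomes a cosine similarity in [-1, 1].
    t = t / (np.linalg.norm(t, axis=0, keepdims=True) + 1e-8)
    s = s / (np.linalg.norm(s, axis=0, keepdims=True) + 1e-8)

    sim = t.T @ s                     # (Nz, Nx) similarity matrix
    return sim.reshape(hz * wz, hx, wx)


# Toy data: random features with the template pasted into the search region.
rng = np.random.default_rng(0)
template = rng.standard_normal((8, 4, 4))
search = rng.standard_normal((8, 10, 10))
search[:, 3:7, 2:6] = template        # target sits at rows 3..6, cols 2..5

sim = pixel_match(template, search)
response = sim.max(axis=0)            # best match per search pixel, (10, 10)
row, col = np.unravel_index(sim[0].argmax(), sim[0].shape)
print("template pixel (0, 0) best matches search pixel", (int(row), int(col)))
```

Because every template pixel acts as its own 1×1 kernel, the similarity maps keep fine spatial detail that a single whole-template cross-correlation would average out, and this is the property that helps against cluttered backgrounds.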

2. Related Works

3. Method

3.1. Siamese Network Architecture

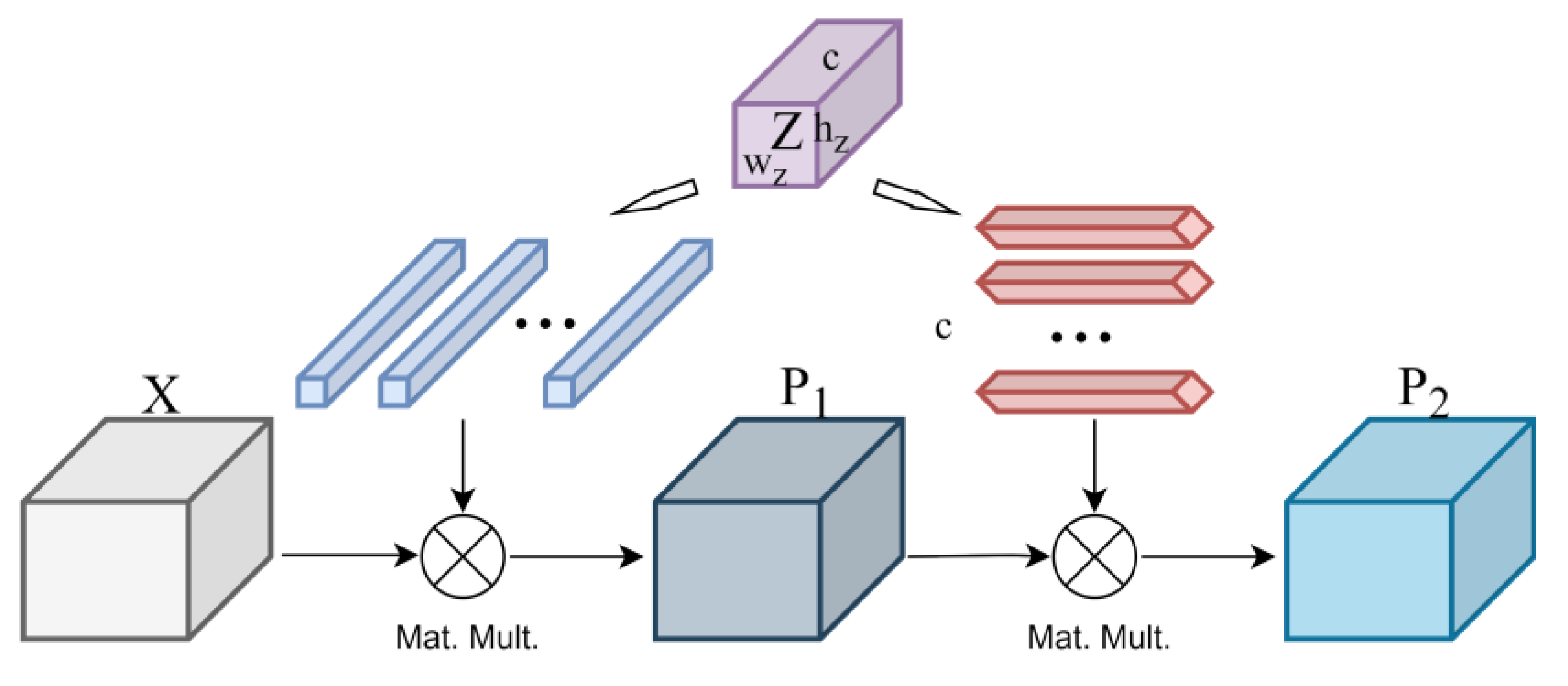

3.2. Multi-Modal Weight Penalty Module (MWPM)

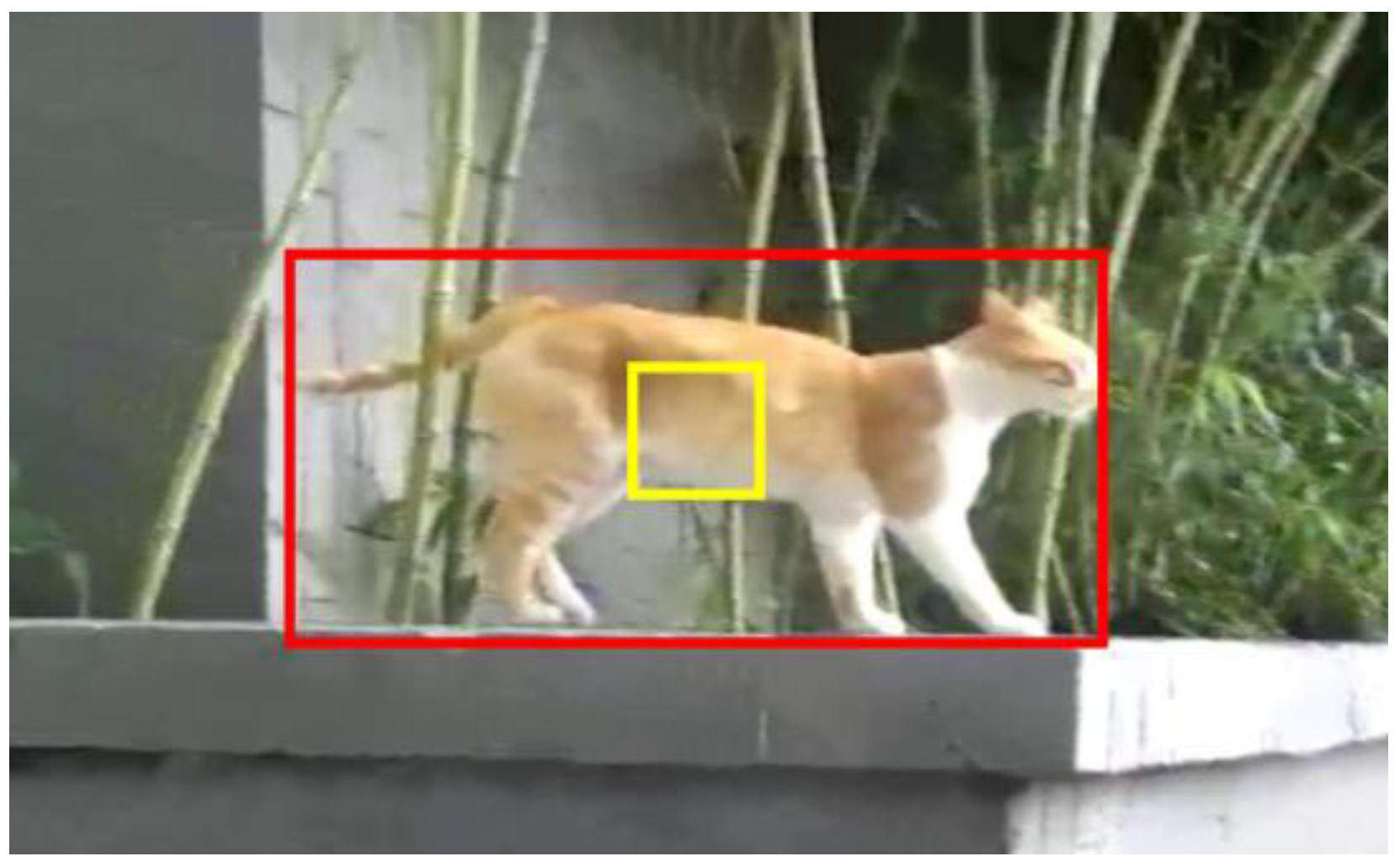

3.3. Pixel Matching Module (PMM)

4. Results

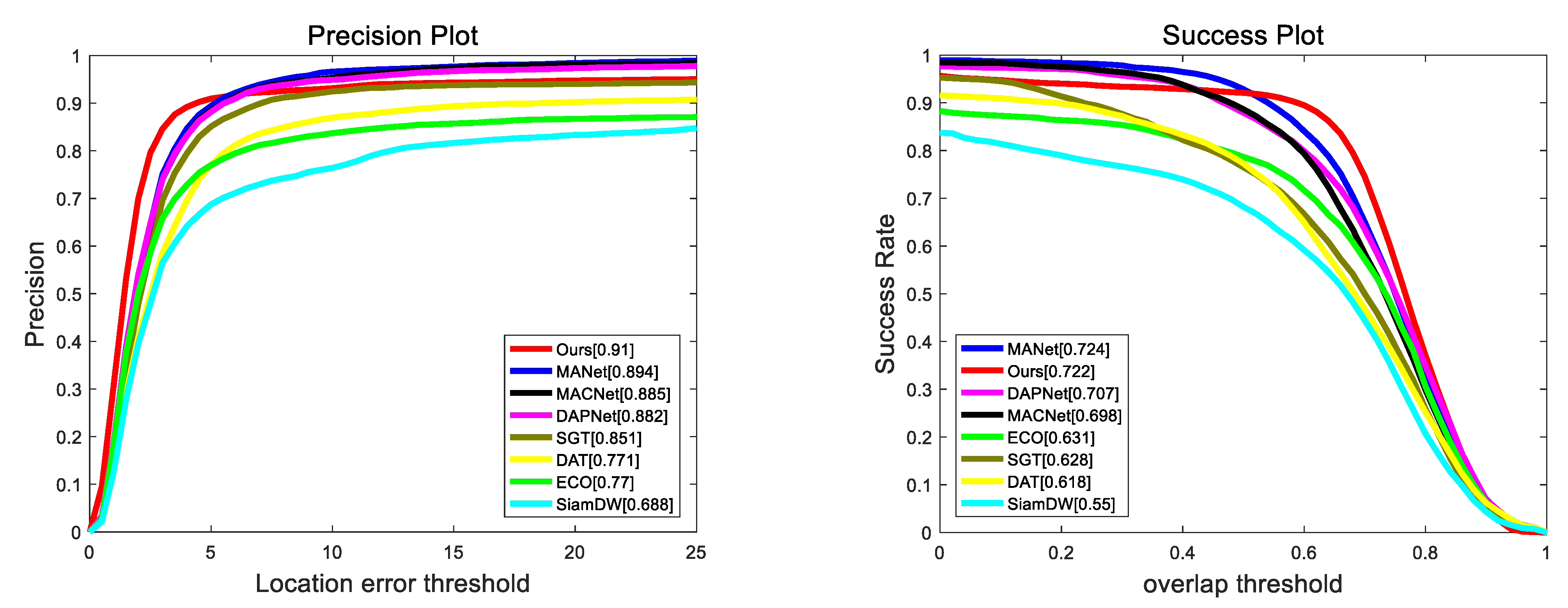

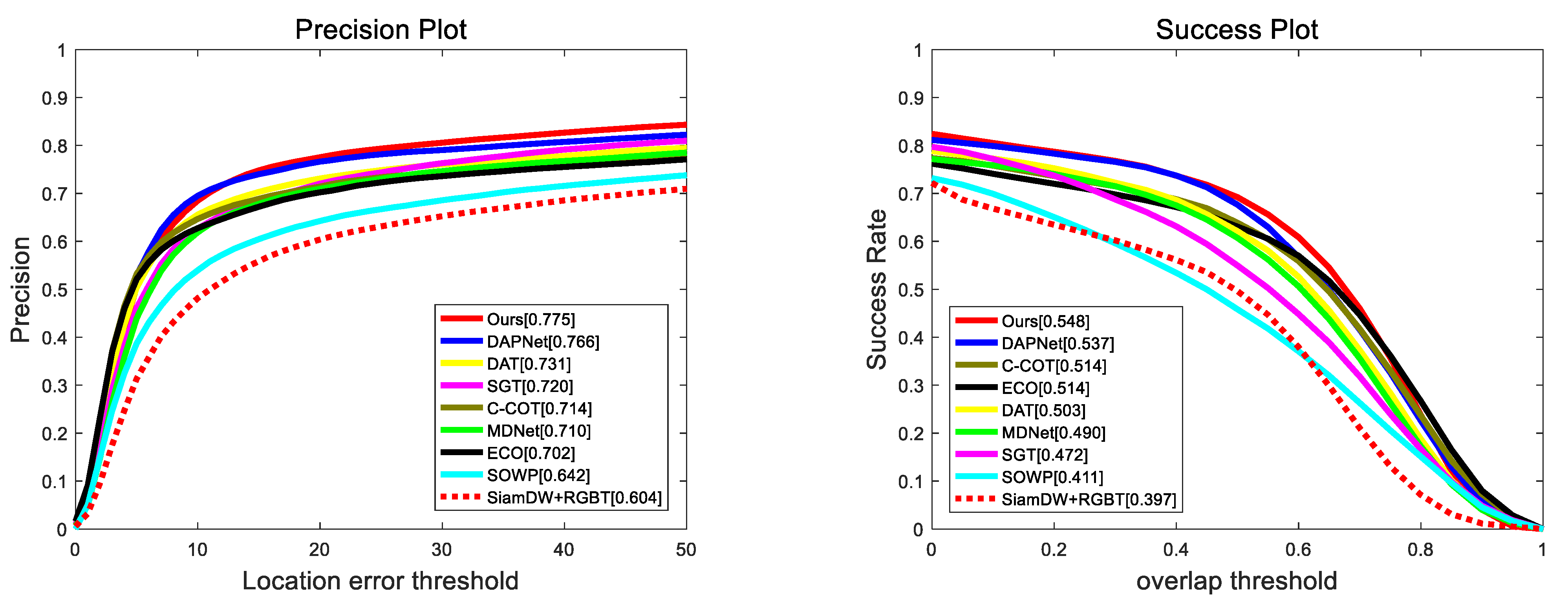

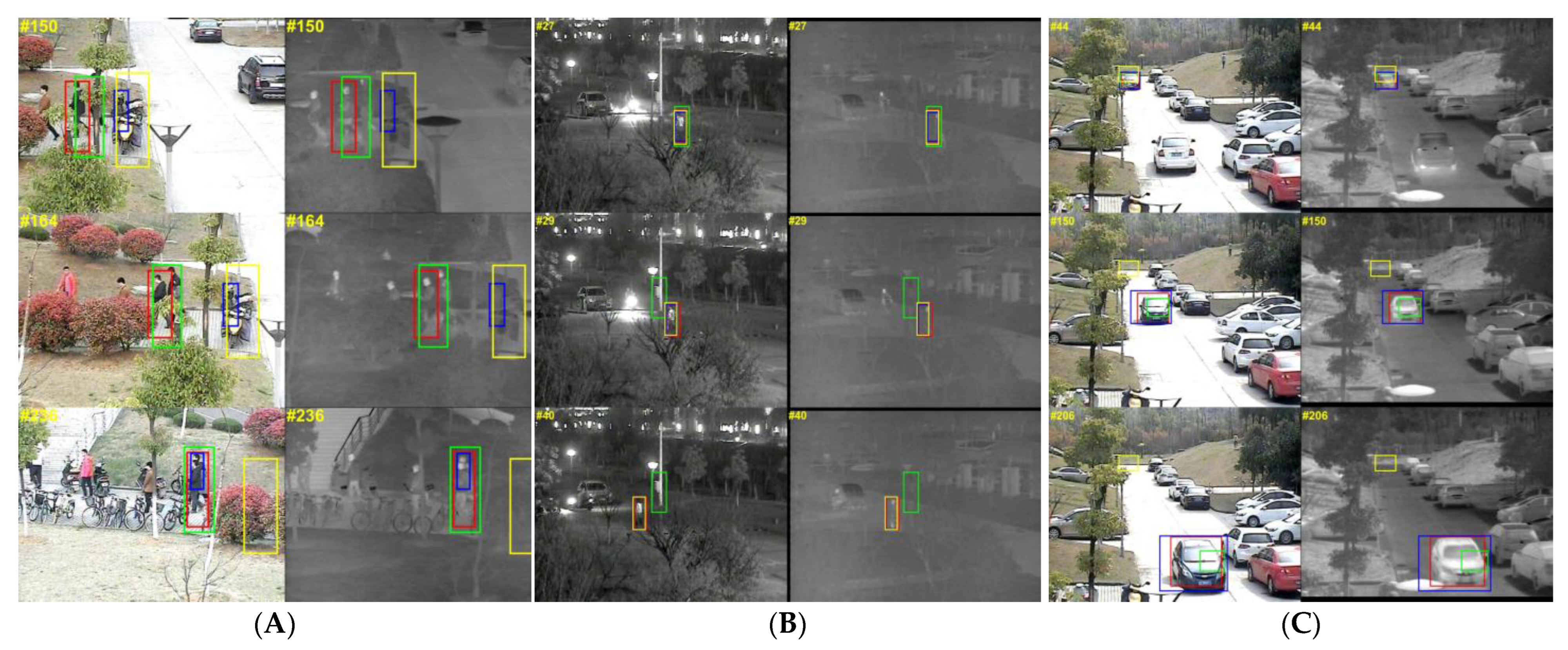

4.1. Results on GTOT

4.2. Results on RGBT234

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Attributes | OCC | LSV | FM | LI | TC | SO | DEF | ALL |
|---|---|---|---|---|---|---|---|---|
| SiamDW | 63.4/49.2 | 72.0/55.7 | 63.2/48.4 | 68.8/55.1 | 68.4/53.6 | 73.2/53.4 | 69.8/55.9 | 68.8/55.0 |
| ECO | 77.5/62.2 | 85.6/70.5 | 77.9/64.5 | 75.2/61.7 | 81.9/65.3 | 90.7/69.1 | 75.2/59.8 | 77.0/63.1 |
| DAT | 77.2/59.2 | 78.6/62.4 | 82.0/61.5 | 76.0/60.9 | 80.9/62.6 | 88.6/64.4 | 76.9/63.3 | 77.1/61.8 |
| SGT | 81.0/56.7 | 84.2/54.7 | 79.9/55.9 | 88.4/65.1 | 84.8/61.5 | 91.7/61.8 | 91.9/73.3 | 85.1/62.8 |
| DAPNet | 87.3/67.4 | 84.7/64.8 | 82.3/61.9 | 90.0/72.2 | 89.3/69.0 | 93.7/69.2 | 91.9/77.1 | 88.2/70.7 |
| MACNet | 86.7/67.6 | 84.2/66.2 | 84.4/63.3 | 90.1/70.7 | 89.3/68.6 | 93.2/67.8 | 92.2/74.4 | 88.5/69.8 |
| MANet | 88.2/69.6 | 86.9/70.6 | 87.9/69.4 | 91.4/73.6 | 88.9/70.2 | 93.2/70.0 | 92.3/75.2 | 89.4/72.4 |
| Ours | 84.8/67.6 | 93.2/74.5 | 87.0/70.0 | 94.8/73.9 | 88.9/70.4 | 93.2/72.0 | 94.6/73.2 | 91.0/72.2 |
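
The per-attribute comparison in the table can be summarized with a short script. The sketch below transcribes the scores and reports which tracker leads each column. It assumes that each cell holds a pair of scores in the order first/second (presumably precision rate and success rate in percent, which the table does not label explicitly); the names `TABLE`, `parse_row`, and `leaders` are hypothetical helpers introduced only for this illustration.

```python
# Column labels of the table; OCC is the first (occlusion) column.
ATTRIBUTES = ["OCC", "LSV", "FM", "LI", "TC", "SO", "DEF", "ALL"]

# Scores transcribed from the table, one "first/second" pair per attribute.
TABLE = {
    "SiamDW": "63.4/49.2 72.0/55.7 63.2/48.4 68.8/55.1 68.4/53.6 73.2/53.4 69.8/55.9 68.8/55.0",
    "ECO": "77.5/62.2 85.6/70.5 77.9/64.5 75.2/61.7 81.9/65.3 90.7/69.1 75.2/59.8 77.0/63.1",
    "DAT": "77.2/59.2 78.6/62.4 82.0/61.5 76.0/60.9 80.9/62.6 88.6/64.4 76.9/63.3 77.1/61.8",
    "SGT": "81.0/56.7 84.2/54.7 79.9/55.9 88.4/65.1 84.8/61.5 91.7/61.8 91.9/73.3 85.1/62.8",
    "DAPNet": "87.3/67.4 84.7/64.8 82.3/61.9 90.0/72.2 89.3/69.0 93.7/69.2 91.9/77.1 88.2/70.7",
    "MACNet": "86.7/67.6 84.2/66.2 84.4/63.3 90.1/70.7 89.3/68.6 93.2/67.8 92.2/74.4 88.5/69.8",
    "MANet": "88.2/69.6 86.9/70.6 87.9/69.4 91.4/73.6 88.9/70.2 93.2/70.0 92.3/75.2 89.4/72.4",
    "Ours": "84.8/67.6 93.2/74.5 87.0/70.0 94.8/73.9 88.9/70.4 93.2/72.0 94.6/73.2 91.0/72.2",
}


def parse_row(row):
    """Split 'a/b a/b ...' into (list of first scores, list of second scores)."""
    pairs = [cell.split("/") for cell in row.split()]
    return [float(a) for a, _ in pairs], [float(b) for _, b in pairs]


def leaders(table, which):
    """Map each attribute to the sorted list of trackers sharing the top score.

    which = 0 selects the first score of each pair, which = 1 the second.
    """
    scores = {name: parse_row(row)[which] for name, row in table.items()}
    result = {}
    for i, attr in enumerate(ATTRIBUTES):
        best = max(values[i] for values in scores.values())
        result[attr] = sorted(n for n, values in scores.items() if values[i] == best)
    return result


first_leaders = leaders(TABLE, 0)
second_leaders = leaders(TABLE, 1)

for attr in ATTRIBUTES:
    print(f"{attr:>3}: first score -> {', '.join(first_leaders[attr])}; "
          f"second score -> {', '.join(second_leaders[attr])}")
```

On these numbers the proposed tracker has the highest first score over all sequences (91.0), while MANet keeps a marginally higher second score (72.4 versus 72.2).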

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, D.; Zhang, Y.; Chen, M.; Chai, H. Attention and Pixel Matching in RGB-T Object Tracking. Mathematics 2023, 11, 1646. https://doi.org/10.3390/math11071646
