An Improved Deep-Learning-Based Financial Market Forecasting Model in the Digital Economy

Abstract

1. Introduction

2. Related Work

2.1. Deep Learning

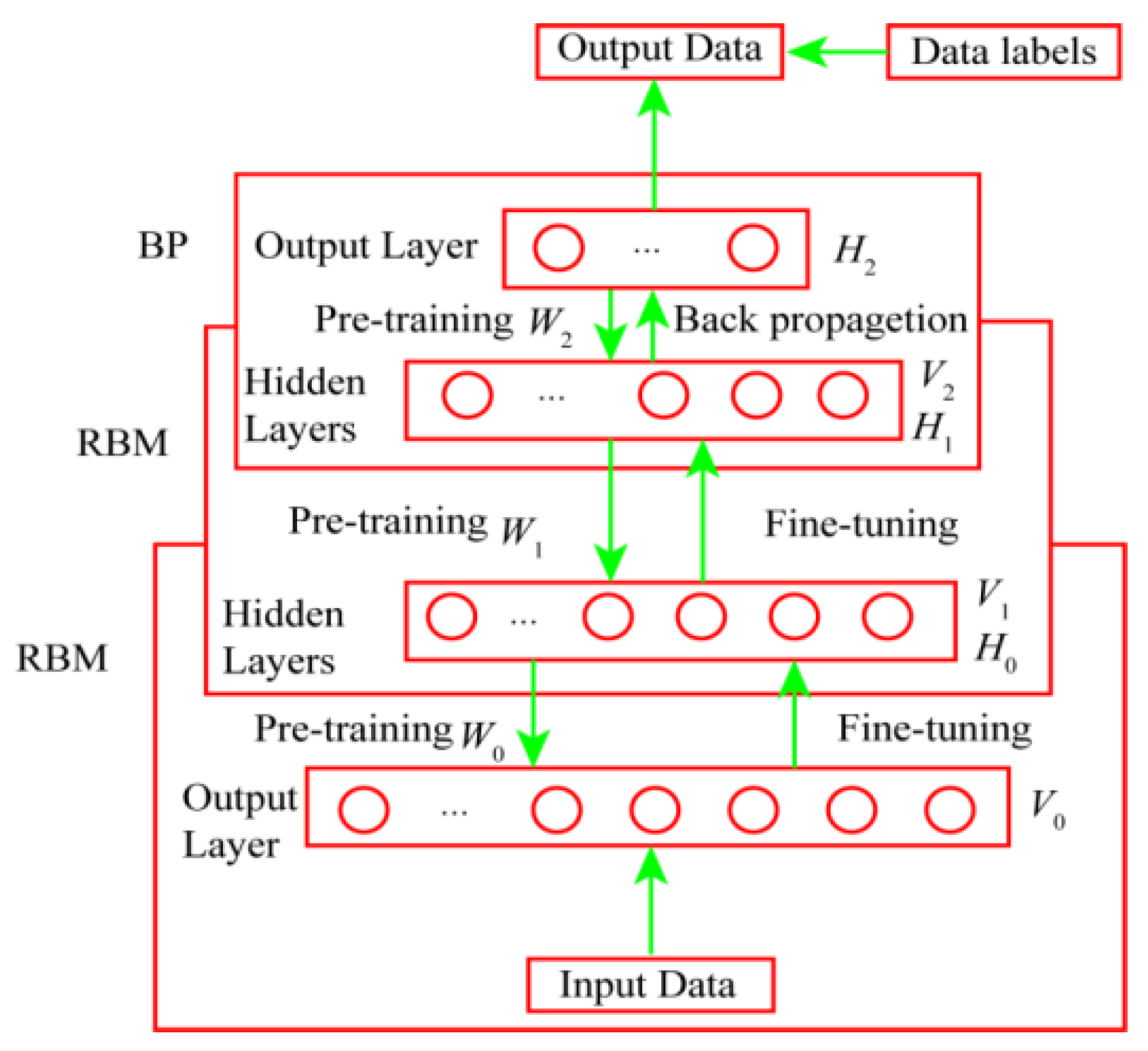

2.1.1. Deep Belief Network

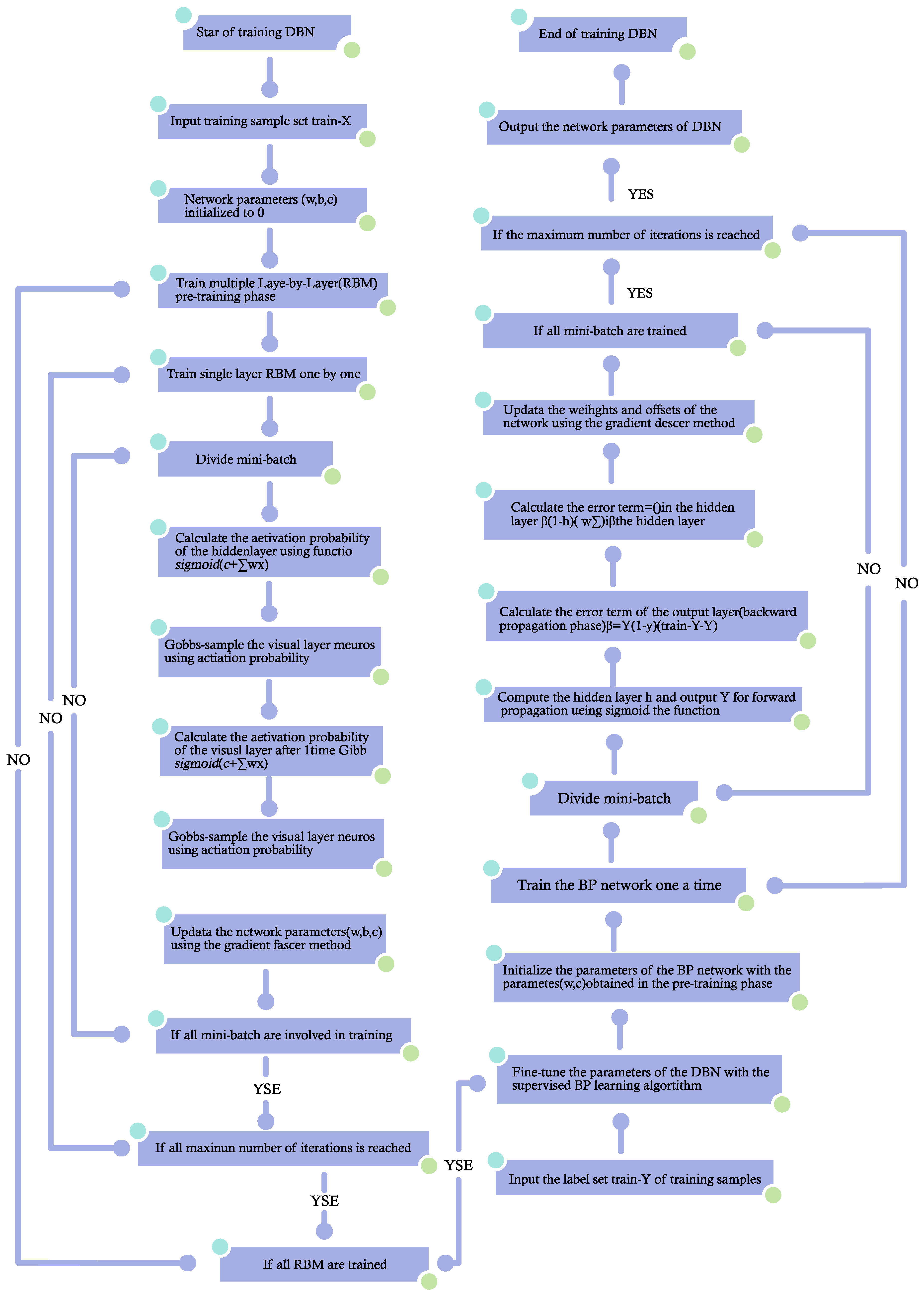

2.1.2. Training of Deep Belief Networks

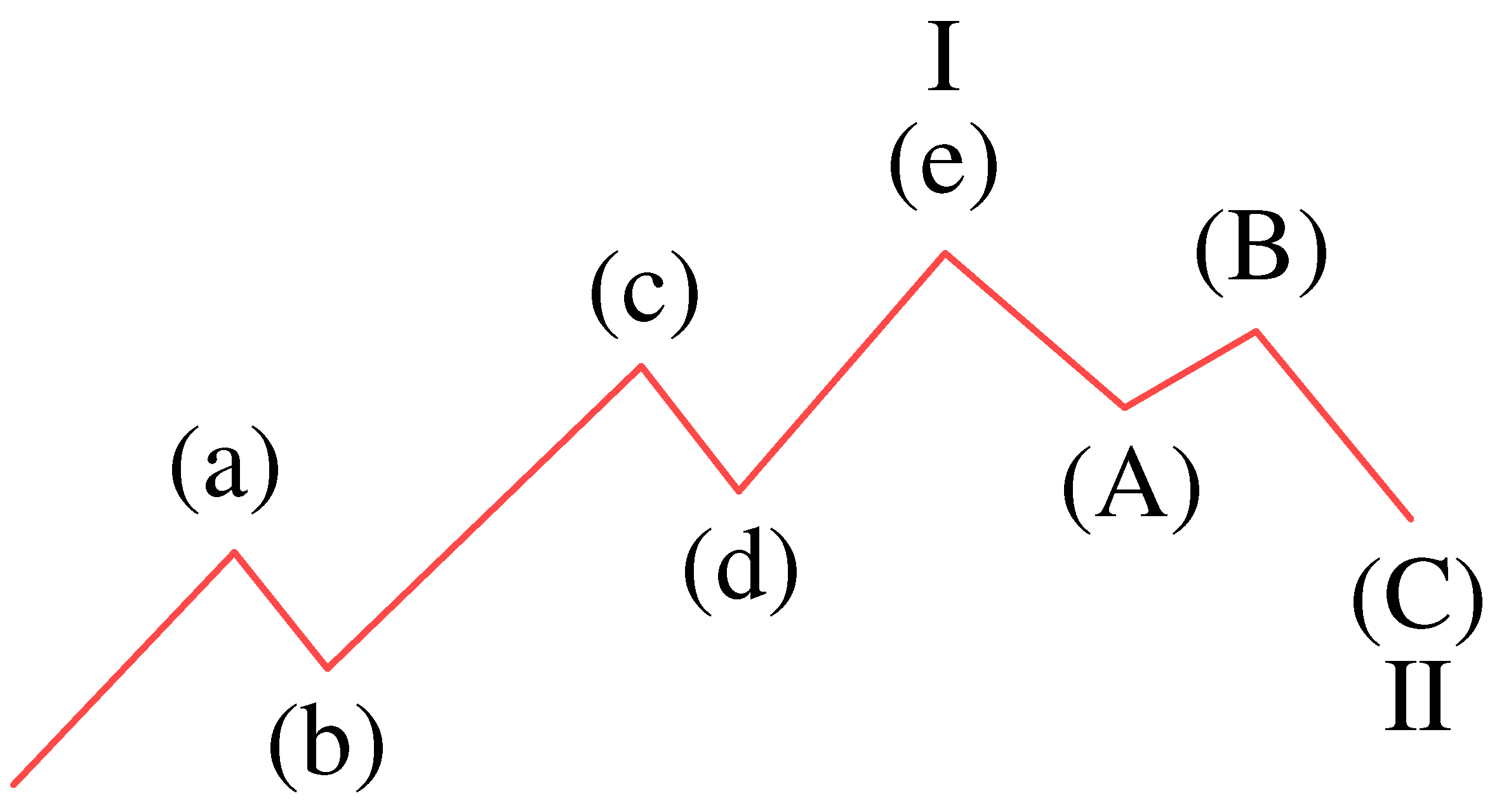

2.2. Elliott Wave Theory

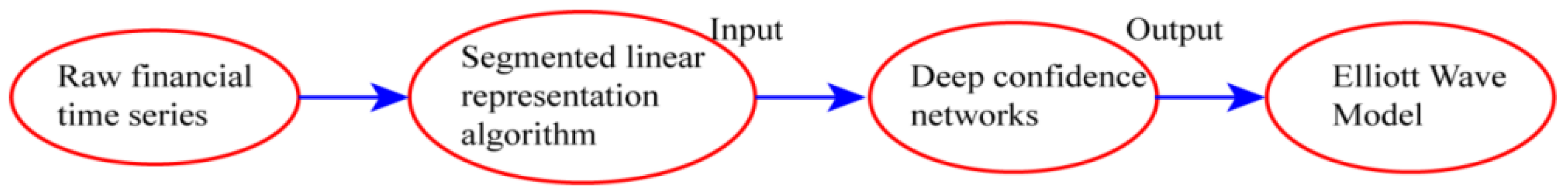

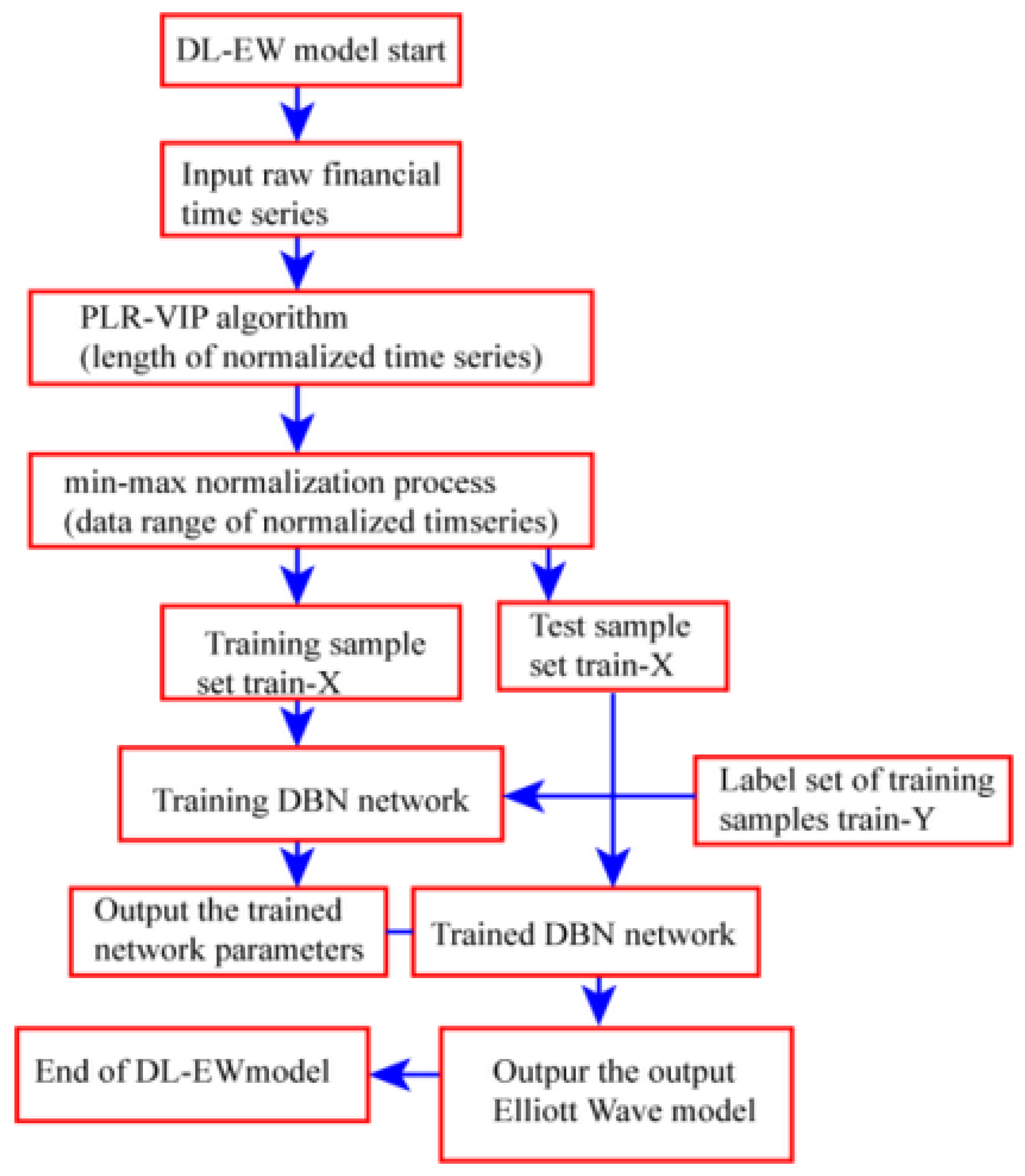

3. Constructing a Model Integrating Deep Learning and Elliott Wave Theory

4. Empirical Validity of the DL-EWP Model

4.1. Data Selection

4.2. Data Preprocessing

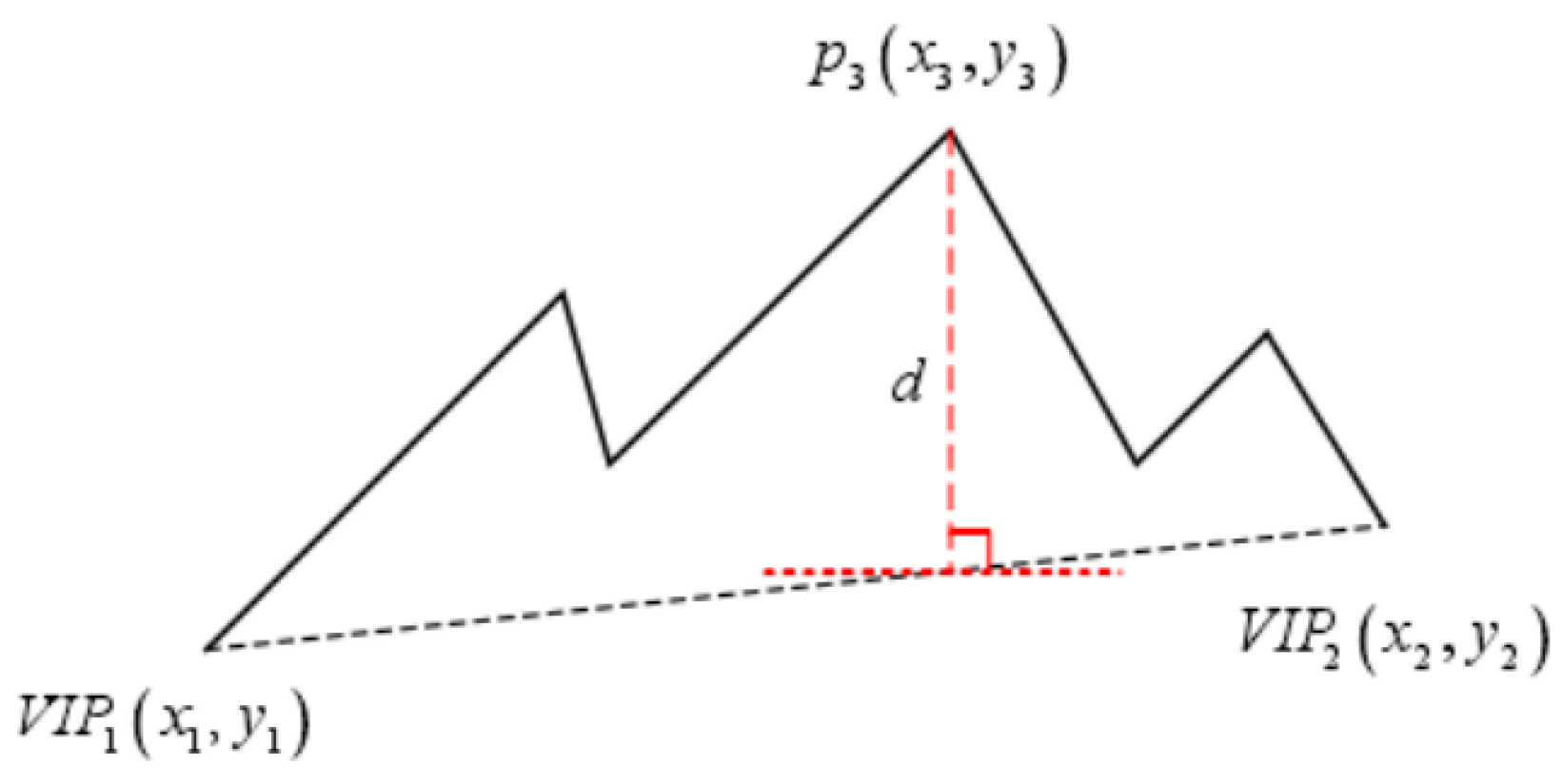

4.2.1. Segmented Linear Representation Algorithm
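A segmented (piecewise) linear representation compresses a price series into a few breakpoints joined by straight lines. The following is a minimal sketch, assuming a generic top-down splitting rule with a hypothetical `max_error` tolerance. It is not necessarily the PLR_VIP variant listed in the abbreviations, and the function name `top_down_plr` is illustrative only.

```python
from typing import List, Sequence


def top_down_plr(prices: Sequence[float], max_error: float) -> List[int]:
    """Return sorted breakpoint indices of a top-down piecewise linear fit.

    Assumption: a segment is split at the point with the largest vertical
    distance from the straight line joining the segment's endpoints, and
    splitting stops once that distance is at most `max_error`.
    """

    def split(lo: int, hi: int) -> List[int]:
        # A segment with no interior points cannot be split further.
        if hi - lo < 2:
            return []
        y0, y1 = prices[lo], prices[hi]
        slope = (y1 - y0) / (hi - lo)
        worst_idx, worst_dev = lo, 0.0
        for i in range(lo + 1, hi):
            dev = abs(prices[i] - (y0 + slope * (i - lo)))
            if dev > worst_dev:
                worst_idx, worst_dev = i, dev
        if worst_dev <= max_error:
            return []
        # Recurse on both halves; the split point itself becomes a breakpoint.
        return split(lo, worst_idx) + [worst_idx] + split(worst_idx, hi)

    if len(prices) < 2:
        return list(range(len(prices)))
    last = len(prices) - 1
    return [0] + split(0, last) + [last]
```

For example, `top_down_plr([1, 2, 3, 2, 1], 0.5)` keeps the two endpoints and the peak in the middle, while a perfectly straight series keeps only its endpoints.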

4.2.2. Min-Max Normalization
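Min-max normalization linearly rescales a series so that its smallest value maps to the lower bound of a target range and its largest value to the upper bound. The following is a minimal sketch, assuming a target range of [0, 1] by default; the function name and the `new_min`/`new_max` parameters are illustrative, not taken from the paper.

```python
from typing import List, Sequence


def min_max_normalize(
    values: Sequence[float], new_min: float = 0.0, new_max: float = 1.0
) -> List[float]:
    """Rescale `values` linearly into the interval [new_min, new_max]."""
    if not values:
        return []
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant series has no spread; map every point to new_min.
        return [new_min for _ in values]
    scale = (new_max - new_min) / (hi - lo)
    return [new_min + (v - lo) * scale for v in values]
```

In a forecasting setting, the minimum and maximum would normally be taken from the training window only and reused on later data, so that no information from the test period leaks into the scaling.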

4.2.3. Example of Data Preprocessing

4.3. Design of the Elliott Wave Model

4.4. Design of DBN Network Parameters

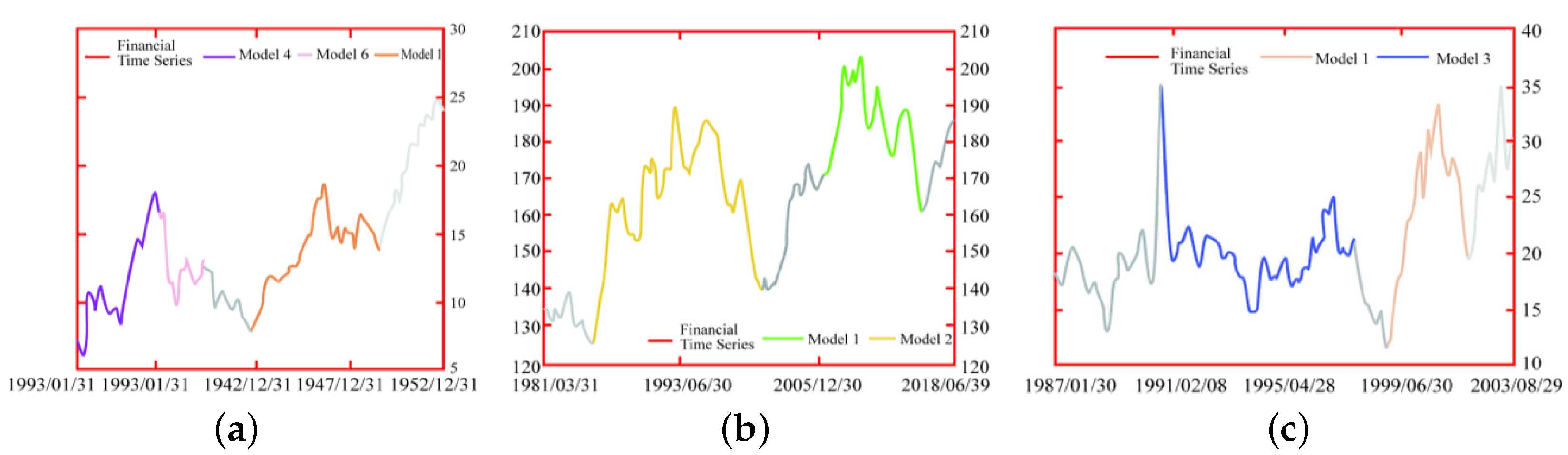

4.5. Empirical Results of the DL-EWP Model

5. Comparison of Models

5.1. Selecting Evaluation Criteria

5.2. Parameter Design of the Reference Model

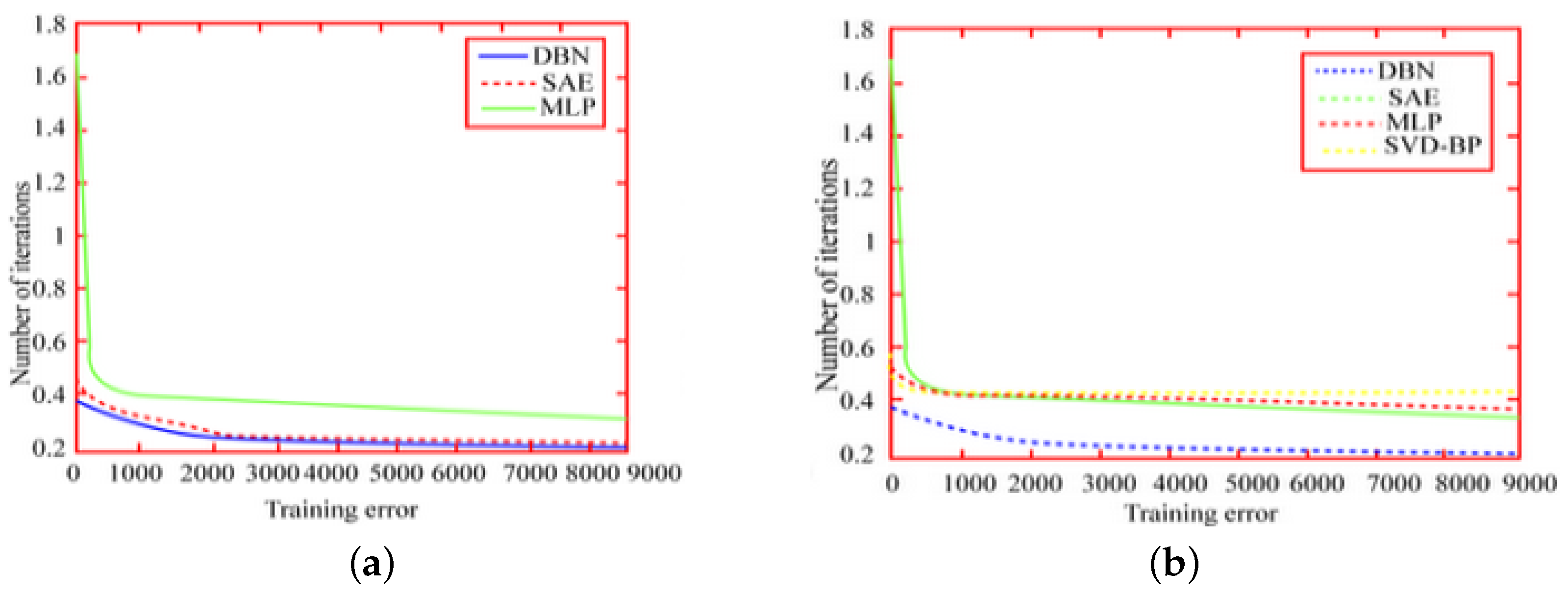

5.3. Comparison of the DL-EWP Model’s Performance

6. Conclusions and Discussion

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SAE | Stacked autoencoder |
| MLP | Multilayer perceptron |
| BP | Backpropagation |
| PCA | Principal component analysis |
| SVD | Singular value decomposition |
| PCA-BP | Principal component analysis backpropagation |
| SVD-BP | Singular value decomposition backpropagation |
| DL | Deep learning |
| DL-EWP | Deep learning + Elliott wave principle |
| DNNs | Deep neural networks |
| LSTM | Long short-term memory |
| WASP | Wave analysis stock prediction |
| RBM | Restricted Boltzmann machine |
| DBN | Deep belief network |
| MSE | Mean square error |
| RMSE | Root-mean-square error |
| MAE | Mean absolute error |
| ER | Error rate |
| PLR_VIP | Piecewise linear representation |

| Foreign Exchange Market | Global Stock Indices | Commodities (Futures) |
|---|---|---|
| US Dollar Index | Dow Jones Industrial Average (U.S.) (“Dow”) | COMEX Copper |
| Euro Index | Standard & Poor’s 500 Index (U.S.) (“S&P”) | COMEX Gold |
| EUR/USD | FTSE 100 (UK) (“FTSE 100”) | WTI Crude Oil |
| EUR/GBP | DAX (Germany) (“DAX”) | CBOT Soybeans |
| GBP/USD | SSE (China) (“SSE”) | CBOT Wheat |
| USD/CNY | Hang Seng Index (Hong Kong, China) (“Hang Seng”) | ICE Cocoa |

| Market Category | Trading Varieties | Sample Size | Time Range of Data | Data Cycle |
|---|---|---|---|---|
| Foreign exchange market | Euro Index | 509 | 4 January 1971–9 November 2018 | Season/month/week/day |
| | EUR/USD | 337 | 4 January 1971–9 November 2018 | Season/month/week/day |
| | EUR/GBP | 373 | 4 January 1971–9 November 2018 | Season/month/week/day |
| | US Dollar Index | 201 | 19 March 1975–7 November 2018 | Season/month/week/day |
| | GBP/USD | 391 | 1 March 1900–9 November 2018 | Season/month/week/day |
| | USD/CNY | 64 | 9 April 1991–9 November 2018 | Week/day |
| | Forex Total | 1875 | | |
| Commodities | COMEX Copper | 421 | 1 July 1959–7 November 2018 | Year/season/month/week/day |
| | CBOT Wheat | 504 | 1 April 1959–9 November 2018 | Year/season/month/week/day |
| | ICE Cocoa | 491 | 1 July 1959–21 November 2018 | Year/season/month/week/day |
| | COMEX Gold | 510 | 2 June 1969–9 November 2018 | Season/month/week/day |
| | WTI Crude Oil | 317 | 1 January 1982–7 November 2018 | Season/month/week/day |
| | CBOT Soybeans | 329 | 1 July 1959–9 November 2018 | Season/month/week/day |
| | Commodity Summaries | 2572 | | |
| Global stock indices | S&P | 356 | 1 November 1928–8 November 2018 | Year/season/month/week/day |
| | FTSE 100 | 578 | 13 November 1935–7 November 2018 | Year/season/month/week/day |
| | Dow | 284 | 1 October 1928–2 November 2018 | Year/season/month/week/day |
| | DAX | 164 | 28 July 1959–8 November 2018 | Year/season/month/week/day |
| | SSE | 149 | 19 December 1990–7 November 2018 | Season/month/week/day |
| | Hang Seng | 114 | 19 December 1990–7 November 2018 | Season/month/week/day |
| | Total Stock Index | 1645 | | |
| All markets | Data Summaries | 6092 | | |

| Number of Hidden Layers | Number of Hidden Layer Units | Learning Rate | Number of Iterations | Momentum Factor |
|---|---|---|---|---|
| 2 | 10/10 | 0.1/0.12 | 1000/9000 | 0.51/0.8 |

| Evaluation Criteria | Formula of Calculation |
|---|---|
| MSE | |
| RMSE | |
| MAE | |
| ER | |
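For reference, MSE, RMSE, and MAE are conventionally defined as the mean of the squared errors, the square root of that mean, and the mean of the absolute errors. The sketch below implements these textbook definitions. That the paper uses exactly these forms is an assumption, the function names are illustrative, and ER is left out because no definition of it is given here.

```python
import math
from typing import Sequence


def _check(actual: Sequence[float], predicted: Sequence[float]) -> int:
    """Validate that both series are non-empty and equally long."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return len(actual)


def mse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean square error: average of squared differences."""
    n = _check(actual, predicted)
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / n


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root-mean-square error: square root of the MSE."""
    return math.sqrt(mse(actual, predicted))


def mae(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute error: average of absolute differences."""
    n = _check(actual, predicted)
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / n
```

Under these definitions RMSE is always the square root of MSE, and MAE can never exceed RMSE, which gives a quick sanity check when comparing reported scores.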

| Number of Hidden Layers | Number of Hidden Layer Units | Learning Rate | Number of Iterations | Momentum Factor |
|---|---|---|---|---|
| 2 | 10/10 | 0.1/0.12 | 950/9000 | 0.51/0.5 |

| Number of Hidden Layers | Number of Hidden Layer Units | Learning Rate | Number of Iterations | Momentum Factor |
|---|---|---|---|---|
| 2 | 10/10 | 0.015 | 9000 | 0.6 |

| Number of Hidden Layers | Learning Rate | Number of Iterations | Momentum Factor |
|---|---|---|---|
| 2 | 0.015 | 9000 | 0.6 |

| Model Category | Prediction Model | MSE | RMSE | MAE | ER |
|---|---|---|---|---|---|
| Deep network model | DL-EWP | 0.4366 | 0.6577 | 0.9128 | 31.06% |
| | SAE | 0.4306 | 0.6538 | 0.9392 | 34.74% |
| | MLP | 0.6349 | 0.8407 | 1.4562 | 43.62% |
| Shallow network model | BP | 0.7721 | 0.8715 | 1.482 | 51.34% |
| | PCA-BP | 0.7001 | 0.8207 | 1.3092 | 55.55% |
| | SVD-BP | 0.8745 | 0.9198 | 1.6135 | 79.97% |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dexiang, Y.; Shengdong, M.; Liu, Y.; Jijian, G.; Chaolung, L. An Improved Deep-Learning-Based Financial Market Forecasting Model in the Digital Economy. Mathematics 2023, 11, 1466. https://doi.org/10.3390/math11061466
